Oxford University Press's Blog, page 68

October 4, 2022

Memorable years, formative years: why do boys stop singing in their teens?

Fifty-five years ago, a fourteen-year-old boy spent a week in the mountains of Snowdonia, staying at a youth hostel called Bryn Dinas. Ever since, that boy has loved the mountains, been a staunch defender of the natural environment, and led later generations of young adolescents on similar expeditions. Many other adults recall fondly a similar experience that set their life course and values orientation during those critically formative years. It is odd, then, that the choral world complains frequently about the relative shortage of tenors and basses yet devotes so little time and resource to the thirteen- and fourteen-year-old boys who are so important to its future. Perhaps this neglect is a legacy of the days when it was believed that the voice should be “rested” after it had “broken”?

There are many adult men who sang as small boys but now either don’t sing at all or who have had long gaps in their lives with no singing. I met one of them yesterday. He told me of his childhood when he enjoyed singing, and of how he had been silenced at the age of 14 when a schoolteacher decided his voice had “broken”. Worse was to come. At the age of 16, he was allowed to sing again when the teacher had decided he was a “bass”. He wasn’t a bass. He couldn’t properly access the bass range, didn’t enjoy his singing as a result, so gave up. Rather belatedly, some decades later he has at last found the tenor voice he now enjoys in a large choral society. Many more never do.

“There really is no excuse for ignorance or carelessness with regard to the management of the adolescent male voice.”

All too often, we hear those tired old tropes, “it’s not cool” or “it’s peer pressure.” These are not the primary reasons adolescent boys won’t sing. It’s only “not cool” if adults make it so through a lack of knowledge, planning, and leadership. I can take you to choirs or into schools where the peer pressure is positive. The boys will sing because their mates do and it’s actually “cool” to be in the choir. I can guarantee that the common feature in every case is a leader who (a) believes in young adolescent boys and thinks it’s important for them to be in a choir and (b) knows how to monitor their voices regularly and allocate them to an appropriate part, which may well be different in June from what it was in November.

The only real complication is that the “appropriate part” may be neither soprano, alto, tenor, nor bass. Most adolescent boys go through phases when their voice is none of those. Quite often, that phase is called “cambiata,” a term devised by the late Irvine Cooper in the United States. Cooper had witnessed the fact that boys would sing lustily at camp when they could choose their own tessitura but refused to sing in school when the teacher gave them pitches and parts that were inappropriate. A boy whose lowest clear note will be the E in the tenor octave but who cannot reach tenor C will be a cambiata. It matters less what the parts are called than that the ranges of those parts are comfortable for the voices that are to sing them. There are plenty of books, chapters, and papers published that explain all this for the musician who thinks it important to add the knowledge to their skill set.

“It matters less what the parts are called than that the ranges of those parts are comfortable for the voices that are to sing them.”

To return to my Snowdonia analogy, two weeks ago I was at the National Youth Boys Choir summer course. I took some boys out of rehearsal and asked them to sing the tune of Happy Birthday to the words “You owe me five pounds”. I gave them no starting note. I wanted to find out how each identified his own tessitura. Had he got it wrong, he’d have come to grief on that octave leap. None did. Most importantly, without exception, each boy chose a pitch range that matched closely the range of the part he had been allocated for the week. The music had been chosen carefully from the limited catalogues available so that the part ranges matched the unique “cambiata” ranges. That is not an easy task. There is so much music out there that is unsuitable. But the leaders had high expectations. They believed in the boys, had searched carefully for suitable repertoire, and checked each boy’s voice range.

Photo by Martin Ashley, used with permission

Two other things stick in my mind from that week. Waiting in the queue to go into the concert I overheard a conversation between parents. It went something like this: “He so loves his singing. He so wishes he could do this all year, but there’s no choir at school and none in the area we can get to.” Later, whilst sitting in the car park arguing with the satnav, I witnessed boys dragging suitcases and chatting excitedly to the families that had come to pick them up. What a wonderful, memorable, and formative week each had had! How sad that such experiences are so rare and hard to come by.

It’s a good job that fourteen-year-old boy who was to remember his week in Snowdonia was also to remember singing Handel’s The King shall Rejoice and Kodály’s Missa Brevis as a twelve-year-old. He attended a school where choral singing and the natural environment were thought to be equally important and necessary experiences for boys. This blog post would not otherwise have been written.

October 1, 2022

Philosophy for Public Health and Public Policy: an interview with James Wilson

James Wilson is Professor of Philosophy at University College London, and co-director of the UCL Health Humanities Centre. He has been a member of advisory groups to the National Health Service on data collection and data access, and he serves on the National Data Guardian’s steering group. James is interviewed here by Peter Momtchiloff, philosophy editor at OUP.

“Public health” is a familiar phrase in our everyday lives now. Do you think philosophy has been a bit slow to get to grips with public health? I note that the journal Public Health Ethics was only founded in 2008.

Philosophy, along with bioethics, has definitely been slow to address public health. Leading political philosophers of the previous generation, such as John Rawls, now often seem simply naive or poorly informed when they address health. Usually they don’t address the literature on the social determinants of health at all. Some topics such as risk imposition, which are foundational for work on the philosophy of public health, have become mainstream within philosophy only in the past 15 years.

How do philosophical issues to do with public health differ from typical issues in medical ethics? Is it particularly a case of going beyond the ethical?

Work in medical ethics and bioethics has often been very individualistic, focusing on questions such as the doctor-patient relationship and informed consent in medical research. Public health focuses attention on what societies as a whole do to protect the health of populations. Public health ethics requires us to think about the design of institutions, but it’s vital we don’t forget that those helped or failed by institutions are individuals. We need to integrate the ethical and the political, rather than going beyond the ethical.

“Trustworthy communication over the long term establishes high levels of public trust and citizens are likely to adhere to government guidance.”

You have worked on government healthcare advisory groups. Have you formed any general thoughts about how government can communicate better with citizens about public health?

Public health practice much more often aims to steer citizens’ behaviour by informing and persuading than via direct coercion. How governments communicate makes a significant difference to outcomes. Trustworthy communication over the long term establishes high levels of public trust, and citizens are then much more likely to adhere to government guidance. This requires honesty, integrity, and consistency from government ministers. It’s much easier to lose trust than to regain it.

Do you think there is a valuable role for “nudging” or other non-transparent methods of managing public health?

Nudging alone is unlikely to shift the dial on structural causes of inequities in health, so it would be a dereliction of duty for public health policy to deploy only nudges. Nudges can nonetheless be useful. Some public health nudges can be cost-effective and obviously beneficial—such as the rumble strips that provide tactile feedback to an inattentive driver who wanders out of their line. What matters most is how publicly defensible the rationale for the nudge is, and whether a nudge is the best solution for the public health problem in question.

How did the impact of COVID-19 affect your writing of the book?

The complete manuscript was already with OUP by the time COVID-19 struck. I argue that philosophers can’t do philosophy well unless they engage with complex real-world cases, and so COVID-19 provided a powerful test for the adequacy of the book’s analysis. In the first wave, I was able to use the book’s ethical framework in providing urgent ethics advice on resource allocation and data ethics to a range of public institutions. After this, I spent a few months trying to work out what needed to change in my arguments. It became clear that the pandemic had, if anything, vindicated the complex systems approach I’d argued for in the book. This focuses on how change and dynamism occur continually at all levels relevant to public health, and why we should expect change to be mediated and shaped in real time by structural injustice.

“Just as the pandemic supercharged public health, so it supercharged contestation of public health.”

Do you think COVID-19 will have made it easier or more difficult for governments to promote public health?

COVID-19 has given us precedents of governments doing extraordinary things to protect public health. Three years ago, nobody would have anticipated that our governments would criminalise everyday activities such as going to see your parents, or that most people would agree that this was a fair way of distributing the burdens of risk reduction.

Once you’ve shown that radical action is politically possible, that opens the door to more muscular action on climate change, or air quality, or health inequalities. But just as the pandemic supercharged public health, so it supercharged contestation of public health. We’ve seen the rise of antivax movements, and the morphing of mask-wearing into a “culture war” issue in many countries. So, while COVID-19 creates a precedent and an anchoring point for proponents of public health, it also provides a rallying point for opponents of public health.

Given the volatility of politics at present, it’s too early to say which framing will win out. One thing philosophers can do to help is to articulate the case for public health in a way that’s accessible even to those suspicious of the state, as my book attempts to do.

Featured image by Anna Shvets via Pexels, public domain

September 30, 2022

Howard Carter and Tutankhamun: a different view

For Egyptologists the year 2022 is a special one—not one but two momentous anniversaries are on tap. Two hundred years ago, on 27 September 1822, French scholar Jean-François Champollion leapfrogged over his peers to crack the “code” of hieroglyphs—scholars could finally read ancient Egyptian inscriptions, and Egyptology was established as a new discipline. On 4 November 1922, exactly 100 years later, Englishman Howard Carter, excavating on behalf of his patron Lord Carnarvon of Highclere Castle (now best known as the setting for “Downton Abbey”) acted on a “hunch” and discovered the tomb of Tutankhamun in a previously neglected part of the Valley of the Kings, setting the world at large on fire, archaeologically speaking. “King Tut’s tomb” and the (much older) Pyramids of Giza: have any other monuments come to symbolize ancient Egyptian civilization—and archaeology—better?

Howard Carter is celebrated as a folk hero in some circles today. Egyptologists commend his meticulous documentation of more than 6,000 objects cleared from the four small chambers of Tutankhamun’s tomb, enriching the Egyptian collection in Cairo today, and, as I write this, they are moving from the Egyptian Museum in central Cairo’s Tahrir Square to just north of the Giza Pyramids where they will be exhibited in the sprawling Grand Egyptian Museum/GEM, set to open soon. One shudders to think how these objects might have fared had their discovery occurred two or even just one century prior to Carter’s 1922 field season.

But Howard Carter was no angel. By most accounts an often abrasive personality, he made his great discovery during an outburst of Egyptian nationalism against the British occupation that had stifled Egyptian independence since 1882. Archaeology does not exist in a vacuum, and both foreigners and Egyptians quickly pounced on the Tutankhamun find to suit their own political agendas. Carter and Carnarvon sold exclusive newspaper coverage to The Times of London, incensing their Egyptian hosts. The Egyptian Antiquities Service (Service des Antiquités), by colonialist tradition in the hands of a Frenchman, tried to rein in Carter while ensuring responsible treatment of the tomb’s treasures. Tensions flared, and, for a time in 1924-25, Carter was locked out of the tomb, with the sarcophagus lid hanging precariously by ropes in mid-air.

At the Giza Pyramids, west of Cairo, a very different approach to archaeology was in evidence during these years. George A. Reisner was Egypt’s premier archaeologist of his era, and he was excavating the pyramid field at Giza while living on-site at “Harvard Camp” just west of the site. Since 1899 he had directed the Hearst Expedition (funded by American philanthropist Phoebe Apperson Hearst) and then the Harvard University-Boston Museum of Fine Arts Expedition. Eventually he explored 23 different sites in Egypt, Nubia (modern Sudan), and Palestine.

“Largely due to Reisner … Egyptian archaeology was at the forefront of world archaeology”

While Carter received no formal education in his field, George Reisner obtained his BA, MA, and PhD degrees from Harvard University (1889, 1891, and 1893 respectively). This training was in the comparative philology of Semitic languages, but postdoctoral work in Berlin introduced him to Egyptology. Carter took an adversarial view of his Egyptian hosts; Reisner, with his fluent Arabic, demonstrated greater affinity for the local population, especially his workforce from the Upper (southern) Egyptian town of Quft. Years earlier, Carter ran afoul of some unruly French tourists at Saqqara, and the ensuing row cost him his inspector job. Reisner by contrast followed all the rules, whether issued by local Egyptian omdas (head men), the French authorities at the Service des Antiquités, or by the British governmental officials. Carter dealt in the antiquities trade on behalf of Western collectors, a practice Reisner studiously avoided. And no one would call Carter a founding father of modern scientific archaeology, while Reisner, augmenting and improving on his British predecessor Flinders Petrie’s methods, was precisely that. His focus on stratigraphy (layers of deposits), strict documentation of every phase of the excavation process, and the creation of typologies for every type of object and monument discovered, set him apart from all other archaeologists of his day. Largely due to Reisner, one could claim that during the first half of the twentieth century, Egyptian archaeology was at the forefront of world archaeology in general.

Reisner viewed Carter with his impolite behavior as the bull destined to ruin the archaeological china shop for all the other expeditions. In 1922, the partage system, providing for a 50-50 division of finds between Cairo and an expedition’s home institution, was already ending; by 1927 foreign scholars feared they would be excluded entirely from the Service des Antiquités. The machinations around the “Tooten-Carter” tomb, as Reisner called it, spelled trouble for all concerned.

Reisner claimed confidentially that he had heard the Egyptian government possessed evidence of Carnarvon and Carter engaging in illicit excavations, instigating thefts from sites, and smuggling antiquities: “The record is one of the most disgraceful things that has happened in our time. They are also accused of having instigated thefts from the Cairo Museum, an act of which I have long suspected them; and the proof of that may be forthcoming at any time.” In correspondence with his superiors back in Boston, Reisner reported that such damning evidence might be published if Carter did not accept the government’s terms for returning to work. Through it all Reisner had steered clear; despite Carter’s earlier visits to his excavations at Giza, he had not spoken to him since 1917 and “never accepted [Carter] or Carnarvon as a scientific colleague nor admitted that either of them came within the categories of persons worthy of receiving excavation permits from the Egypt Government.” Swallowing particularly sour grapes, Reisner even pooh-poohed the Tutankhamun find, lamenting the lack of any historical texts found in the tomb to provide answers for some of the larger questions of Eighteenth Dynasty Egyptian history and chronology. Carter for his part once returned the “favor” by refusing to allow Reisner’s assistant Dows Dunham to visit the tomb, doubtless due to Dunham’s affiliation with the Harvard-MFA Expedition. During Carter’s “exile” from the tomb, he embarked on a lecture tour in America, but mocked the reception he received in Boston, thanks to Reisner’s associates there making “asses of themselves.” No love was lost between the two men.

“Carter’s talented but difficult character, Reisner’s disdain (and envy?), and the political tensions surrounding archaeology, colonialism, and nationalism, made for a heady mixture in 1920s Egypt.”

Later conversations between Carter and some of his confidants revealed that he had known all along where to search for the tomb of Tutankhamun, based on earlier finds of a colleague in the Valley of the Kings back in 1914 which had not been followed up. Carter’s professed “hunch” and archaeological acumen in locating the tomb were in fact fictions.

Carter’s talented but difficult character, Reisner’s disdain (and envy?), and the political tensions surrounding archaeology, colonialism, and nationalism, made for a heady mixture in 1920s Egypt. We might wonder how differently the Tutankhamun clearance would have proceeded under Reisner rather than Carter. For starters, there would have been no acrimony with the Egyptian authorities. Secondly, Reisner would have worked much more slowly, as he proved in 1925 when he spent 321 days on one tiny royal burial chamber—with deteriorated contents—belonging to Queen Hetep-heres at Giza, amassing 1,490 photographs and 1,701 pages of notes. Tutankhamun’s tomb by contrast contained four chambers packed with complete objects.

The boy-king’s own history, at the center of a religious schism perpetrated by his father, the pharaoh Akhenaten, will forever be tied to the career of the gifted but mercurial Howard Carter. But Reisner had even trained Carter’s best assistant, Arthur Mace, and so we should not forget Carter’s archaeological contemporaries on the banks of the Nile. The greatest among these archaeologists, the golden glitz of Tutankhamun’s tomb notwithstanding, was George Reisner.

Featured image by Badawi Ahmed via The Giza Project at Harvard University, public domain

September 28, 2022

Three unwilling partners: “heath,” “heathen,” and “heather”

Did heathens live in a heath, surrounded by heather? You will find thoughts on this burning question of our time at the end of today’s blog post. Apparently, the word heathen in our oldest texts stood in opposition to Christian, and since the new faith came to the “barbarians” from Rome, at least some words for “non-Christians” were coined in Latin or Greek. English speakers still know the word Gentiles, a reflex (continuation) of Latin gentilis, which meant “pertaining to a gēns, that is, tribe or stock.” Quite often, the word for “tribe; one’s own ethnic group” also means “language,” because nothing cements a community more strongly than a shared tongue. A good analog of gentilis ~ Gentiles is Russian iazyk (stress on the second syllable). It means “language; tongue” and its other archaic sense is “a people.” When medieval Slavic clerics needed a term for “non-Christian,” they took the native word for “language; tribe” and added a suffix to it. Hence Russian iazych-nik (stress again on the second syllable).

The earliest Germanic clerics resorted to a similar procedure. Consider the German adjective deutsch “German.” The original noun corresponding to it was thiota “people.” Among the tribes speaking Germanic languages, the Goths were the first to be converted to Christianity, and, as mentioned more than once in this blog, the fourth-century Gothic Bible has miraculously come down to us (part of the New Testament). The Gothic counterpart of German thiota was þiuda (þ as th in English thick), recognizable from names like Theodoric. Þiuda also meant “people.”

Theodoric the great, the ruler of his people. (Photo by James Steakley, via Wikimedia Commons, CC BY-SA 3.0)

Bishop Wulfila, the translator of the Gothic Bible, used þiuda for coining the word for “Gentiles.” But once (only once, as far as this word is concerned!), he found himself in trouble. This is the difficult passage (Mark VII: 26; I am quoting from the Revised Version): “The woman was a Greek, a Syrophenitian by nation.” The word in the original, translated into English as “Greek,” is Hellēnís, and Wulfila wrote haiþno. In the days of Jesus, the Greeks were of course “heathen,” but why did Wulfila suddenly use such an exotic word, why did he associate the heathen Greek woman (and only her!) with haiþi “field”? This question would have bothered only specialists in the history of Gothic, if heathen, an obvious cognate of haiþno, had not become the main word for “Gentile” elsewhere in Germanic. Wulfila, as we can see, knew the word, but used it a single time.

In those days, Christian clerics all over the world consulted one another about the terminology of the new faith, and Armenia was very much part of the network. The Armenian word for “heathen” by chance resembles its counterpart in Germanic. It has been suggested that the Armenian adjective became known to Wulfila. This is not very probable but possible. However, if haiþno was indeed taken over from Armenian, in Germanic, folk etymology produced a tie between the non-Christians and the wild, unenlightened “others” living in the heath, far from civilized people.

But even so, the main question remains unanswered. Why did Wulfila use the word haiþno only once, to translate the adjective for “Greek”? And if haiþno was such a rare adjective, fit only for rendering an ethnic term (here, “Greek”), how could it spread far and wide and become the main word for “pagan” all over the Germanic-speaking world? At one time, the best historical linguists believed in the Armenian source of haiþno ~ heathen. Alf Torp, whom I mentioned in my recent blog on mattock, was among them. Like many other modern researchers, I think they were mistaken, and the hypothesis seems to have been abandoned for good reason.

If such an important religious term had been taken over from Armenian, it would not have cropped up only once in Wulfila’s translation and become the main word for “non-Christian” in West Germanic and Scandinavian. Wilhelm Braune, one of the brightest stars in the area of Germanic antiquities, wrote that Wulfila had coined the word himself, to translate Hellēnis. Even if he did (a most improbable suggestion), let us repeat: we still don’t know how its twin became the main term for “pagan” in the entire Germanic-speaking world.

A jigsaw as a symbol of an imperfect etymology. (Photo by Nathalia Segato on Unsplash, public domain)

The plot thickens when we discover that in Gothic, the noun Kreks “a Greek” (for Greek ‘Hellēn), with the plural Krekos, also existed. This is a puzzling word. It is not clear why the first consonant is k, rather than g, and why Wulfila did not use it when he needed it in Mark VII: 26. Those riddles will, most probably, never be solved. We are left with the suggestion that English heathen (as well as its cognates elsewhere in West and North Germanic) indeed goes back to the idea of “savage” non-Christians inhabiting open country. Thus, the Gothic adjective, used only once, remains in limbo.

In a jigsaw puzzle, all pieces should fit together. In our puzzle, Gothic haiþno has been left out, which means that the sought-for etymology has not been solved to everybody’s satisfaction. The only consolation may be that the adjective pagan goes back to Latin pāgus “district, country,” thus, also to some territory. I am quoting from The Oxford Dictionary of English Etymology: “The sense ‘heathen’ (Tertullian) of pagānus derived from that of ‘civilian’ (Tacitus), the Christians calling themselves enrolled soldiers of Christ (members of his militant church) and regarding non-Christians as not of the army so enrolled.” The tie with heath is not entirely clear.

Frustrated, perhaps even heart-broken, we want to take solace in the fact that at least heather grows in a heath. Well, in nature, sometimes, but in the English language, not really. The word heather surfaced in the fourteenth century in the form hathir; heather was first recorded about 400 years later. Hathir was initially confined to Scotland with the contiguous parts of the English border, that is, to the regions in which heath was unknown (!). The Old English for “heath” was hæþ, with long æ (this letter has the value of a in English at).

In all the old Indo-European languages, vowels were allowed to alternate according to a rigid scheme, known as ablaut. We can see the traces of that scheme in Modern English ride—rode—ridden, bind—bound, get—got, speak—spoke, and so forth. Short a, as in hathir, was not allowed to alternate with long æ! To be sure, one is forced to accept, even if grudgingly, some untraditional cases. The great Swedish historical linguist Adolf Noreen drew up a list of such exceptions, but hathir emerged too late to join that rather suspicious club.

Heather, a casualty of ablaut. (Photo by Aqwis, via Wikimedia Commons, CC BY-SA 3.0)

Many clever hypotheses aim at connecting heath and heather. In my opinion, none of them carries conviction. As a general rule, the more intricate and ingenious an etymology is, the greater the chance that it is wrong. Those interested in minor details will find them in my An Analytic Dictionary of English Etymology. The root of heather (if we deny the word’s connection with heath) also remains unknown.

What a frustrating journey! The origin of heathen remains partly undiscovered, and heather (the word, not the plant) continues to wither in darkness. Even if so, we need not lose heart. The origin of many words will never be discovered, because we know too little about their history. (As regards pagan, we seem to know too much; hence the confusion.) But we are brave, we will not flinch and will meet again next week for new exploits. Shall we not?

Featured image: Osterheide bei Schneverdingen by Willow, via Wikimedia Commons, CC BY-SA 3.0

September 27, 2022

The language of labor

September means back to school for students, but for those of us in unions, it is also the celebration of the American Labor Movement.

In 1894, President Grover Cleveland signed the law making Labor Day, celebrated on the first Monday in September, a federal holiday. That’s also the year that Labour Day was established in Canada, where the word gets that extra “u”.

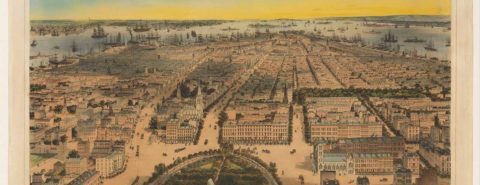

By the time Cleveland established the federal holiday, 27 states had already recognized Labor Day, beginning with Oregon in 1887. But the first US Labor Day celebration was even earlier, on 5 September 1882. The anniversary of that first parade, when ten thousand workers marched from New York’s City Hall to Union Square, is a good opportunity for us to take a look at some of the language of the labor movement.

Let’s start with union. The word was borrowed from French and had a whole host of historical meanings: political, ecclesiastical, matrimonial, and sexual. It came to refer to organizations of workers in the early 1800s: the first labor-related citation in the Oxford English Dictionary is from 1818, referring to the General Union of Trades, founded in Manchester, England. The General Union of Trades was also known as the Philanthropic Society, a name chosen to hide the organization’s real purpose in a time when trade unions were still illegal.

The term strike has an especially curious history. Strike comes not from hitting or from baseball, but from nautical terminology. To strike a ship’s sail or a mast meant to lower it. A citation from 1769 refers to sailors who “read a paper, setting forth their grievances” and afterwards “went on board the several ships in that harbour, and struck their yards, in order to prevent them from proceeding to sea.” In 1769, we also find that “the hatters struck, and refused to work till their wages are raised.” The term strike also referred to taking down tents or encampments, and in 1793 a group of artisans similarly “struck their poles, and proceeded in a mutinous manner to Guildhall, respecting the granting of their licenses.” By the early 1800s, strike was in common use, and over the years we learn of general strikes, outlaw strikes, sit-down strikes, slow-down strikes, sympathy strikes, wildcat strikes, and even extensions like hunger strikes, consumer strikes, rent strikes, and sexual strikes.

The labor movement also has its specialized terminology, and many union contracts—also known as collective bargaining agreements—contain definitions specific to their interpretation. Some terminology is general to labor-management relations, such as good faith bargaining, which refers to the legal requirement that parties in a collective bargaining relationship meet and negotiate at reasonable times and places, and with a willingness to reach an agreement. There is also the term grievance, which is not just any perceived wrong but a formal complaint filed by a union alleging a violation of a bargaining agreement.

For a helpful glossary of terms, historical and contemporary, you can visit the U.S. Department of Labor website, and for more labor history, there is The Oxford Encyclopedia of American Business, Labor, and Economic History.

Featured image: “Union Square, New York” by John Bachmann. Public domain via Wikimedia Commons.

Distrust in institutions: past, present, and future [podcast]

Research shows that American distrust in government, scientists, and media has reached new heights, and this distrust in institutions is reflected in much of the world.

In his play, Orestes, Euripides opines, “When one with honeyed words but evil mind persuades the mob, great woes befall the state.” Might we still overcome this onslaught of misinformation and preserve our trust in the very institutions that have governed and enriched us, in some form or another, for centuries?

On today’s episode of The Oxford Comment, we spoke with Brian Levack, author of Distrust of Institutions in Early Modern Britain and America, Robert Faris, co-author of Network Propaganda: Manipulation, Disinformation, and Radicalization in American Politics, and Tom Nichols, author of Our Own Worst Enemy: The Assault from within on Modern Democracy and The Death of Expertise: The Campaign against Established Knowledge and Why it Matters, to discuss the past, present, and future of institutional distrust, with a particular focus on the contentious 2016 and 2020 US presidential elections.

Check out Episode 76 of The Oxford Comment and subscribe to The Oxford Comment podcast through your favourite podcast app to listen to the latest insights from our expert authors.

Recommended reading

To learn more about the themes raised in this podcast, we’re pleased to share a selection of free-to-read chapters and articles:

Here you can read the Introduction to Distrust of Institutions in Early Modern Britain and America by Brian Levack.

Network Propaganda, by Yochai Benkler, Robert Faris, and Hal Roberts, is fully Open Access, but we wish to highlight “Chapter 1: Epistemic Crisis” and “Chapter 8: Are the Russians Coming?”.

Tom Nichols, author of The Death of Expertise and Our Own Worst Enemy, has written numerous blog posts and quizzes for the OUPblog, including “Reality check: the dangers of confirmation bias” and “The news media: are you an expert?”.

Additional articles and blog posts on distrust, conspiracy theories, election fraud, and public health disinformation can also be found on the OUPblog, such as:

“How conspiracy theories hurt vaccination numbers” by Michael Stein and Sandro Galea

“The fight against fake news and electoral disinformation” by Bente Kalsnes

“The Black Death: how did the world’s deadliest pandemic change society?” by Mark Bailey

And in journals, such as:

“From Bad to Worse? The Media and the 2019 Election Campaign” by Dominic Wring and Stephen Ward, from the September 2020 issue of Parliamentary Affairs

“Russian News Media, Digital Media, Informational Learned Helplessness, and Belief in COVID-19 Misinformation” by Erik C Nisbet and Olga Kamenchuk, from the Autumn 2021 issue of the International Journal of Public Opinion Research.

Lastly, the Open Access articles “State, media and civil society in the information warfare over Ukraine: citizen curators of digital disinformation” by Yevgeniy Golovchenko, Mareike Hartmann, and Rebecca Adler-Nissen, and “You Are Wrong Because I Am Right! The Perceived Causes and Ideological Biases of Misinformation Beliefs” by Michael Hameleers and Anna Brosius, can be found in the journals International Affairs and International Journal of Public Opinion Research, respectively.

Featured image: “United States Capitol outside protesters with US flag” by Tyler Merbler, CC BY 2.0 via Flickr/Wikimedia Commons.

A life map of Equity and Trusts [interactive map]

Throughout our lives, the law of Equity and Trusts is very often working in the background. If a parent or guardian wants to provide for their child, they will need to set up a trust. If we fall in love and move in with a partner, the law of Equity and Trusts might control who owns the family home. Similarly, Equity and Trusts also allows us to contribute to the welfare of others, through providing the foundation for the creation and operation of charitable organisations. When we get older and start to plan for death, Equity and Trusts controls the ways in which we can provide for our loved ones. Equity, as the name suggests, can also regulate bad behaviour of others, and provide remedies for financial mismanagement and fraud.

The map below imagines Equity and Trusts as a life journey. The law, as described in our book, regulates all these major events. Obviously, it is not a life course that everyone will take. Not everyone will have children. Not everyone will split up with a partner. Not everyone will go into business or write a will. Nevertheless, mapping the law in this way shows the deep impact of Equity on all of us.

Featured image by Jesse Bowser on Unsplash (public domain)

September 26, 2022

The Ukraine invasion: wrestling at the edge of the nuclear cliff

The paradoxical combination of loud saber-rattling and cautious military strategy on both sides of the Ukraine war follows the new rules of conflict involving nuclear powers. At the beginning of the invasion, Russia threatened nuclear attack in order to deter NATO and the United States from intervening in Ukraine’s defense. The US and NATO called Russia’s bluff, sending weapons, ammunition, and intelligence information to the Ukrainian defenders. The war then morphed into a “wrestling match at the edge of a cliff,” with both sides accepting limits on their strategy and tactics in order to avoid escalation to nuclear war: the US and NATO by not allowing their troops to engage directly with the Russians, the Russians by not attacking supply chains outside of Ukraine. Both the threats and the restraint fall within the new rules of engagement.

Here’s the wider background. At the beginning of its invasion of Ukraine, Russia followed its counter-intuitive military doctrine of “escalate to de-escalate.” In keeping with this doctrine, it tried to deter any outside power from intervening in the regional conflict by threatening to be the first to explode a nuclear weapon. Now that the invasion has gone badly, it has threatened to explode a nuclear weapon and, in this way, to give NATO and the US the choice either to withdraw or else to retaliate and risk escalation to all-out nuclear war.

“The nuclear arms control and non-proliferation regimes need strengthening and updating to control new technology.”

Experience with numerous wargames has led to the conclusion that it would be difficult to prevent such escalation once the first nuclear weapon has been exploded in anger. This means that the doctrine of escalate to de-escalate leads directly to the teenagers’ game of chicken, in which cars are driven toward each other at high speed and the “loser” is the one who turns aside to avoid a head-on collision. The game of chicken favors the adversary that is more willing to accept the risk of collision in order to achieve its objective of “winning”—the more so if the risk-accepting driver is thought to be crazy, or worse, has thrown away the steering wheel.

Applying the model of the game of chicken to the conflict in Ukraine, either the US would “turn aside” (“lose”) by abandoning its ally, or Russia would “turn aside” (“lose”) by not carrying out its nuclear threat. If neither side “turned aside,” the resulting “head-on collision” would be nuclear war. This high-stakes “game” could also apply to possible future invasions of Estonia, Latvia, Lithuania, or even Taiwan.

So far, the US and NATO have accepted the risk of nuclear war by continuing to send weapons and other support to Ukraine. The Russians have not carried through on their threat. Even so, both sides are treading carefully. We have avoided direct conflict between US and Russian forces. We have not tried to break the Russian blockade of grain export from Odessa by military action, despite the impact of that blockade on the world’s food supply. The Russians, for their part, have also held back, refraining from attacking the cross-border logistical facilities by which weapons are conveyed into Ukraine. For now, instead of a game of chicken, we have a wrestling match at the edge of a nuclear cliff, both sides trying at the same time to win the contest and to avoid falling off the cliff together to mutual destruction. But the more the Russians repeat their threats without acting on them, the more they come to resemble the boy who cried wolf, and the greater the danger that they will be moved to carry them out.

“Many hundreds of missiles in the US and in Russia are still on hair-trigger alert, ready to be launched on warning, whether true or false, of an incoming attack.”

There is yet another danger: that of accidental nuclear war. The risk increases as the hostility and the sense of crisis between Russia and NATO grow. It’s worth remembering here that we owe our lives to two Soviet military officers: first, Colonel Stanislav Petrov, who realized that the signal he had received of a US missile attack in 1983 was a false alarm; and second, submarine chief of staff Vasili Arkhipov, who refused permission to a Soviet submarine commander who wanted to launch a nuclear missile at the US at the height of the Cuban Missile Crisis in 1962. Both Petrov and Arkhipov probably saved the world from all-out nuclear war. The nuclear arms control and non-proliferation regimes of treaties, hotlines, and regular consultations among militaries, painfully built up after World War II, have so far protected us from nuclear war, aided by a good bit of luck. These regimes have been allowed to deteriorate. They need strengthening and updating, in large part because of the need to control new technology. This calls for collaborative research among natural and social scientists from both allies and potential enemies, as well as urgent, frank discussions about nuclear stability among hostile and distrustful governments and militaries.

The good news is that the nuclear taboo still holds. There have been many conventional wars since World War II, but no one has exploded a nuclear weapon in anger. We’re down a long way from the 68,000 nuclear weapons that the US and the USSR maintained at the height of the Cold War. The bad news is that many hundreds of missiles in the US and in Russia are still on hair-trigger alert, ready to be launched on warning, whether true or false, of an incoming attack. This is dangerous and unnecessary, given that both we and the Russians could ride out a strategic nuclear attack and still be able to retaliate, so that we each have sufficient “second-strike capability” to deter the other from attacking.

Meanwhile, China is watching the Ukraine situation carefully. Unlike the US and the Soviets during the Cold War, China does not have the experience with near misses that forced the US and the USSR to come to a shared understanding of the requirements for nuclear stability and avoidance of accidental nuclear war. To make matters still more dangerous, several powers, including the US, Russia, and China, are developing hypersonic missiles that decrease the time available to respond to a warning of possible strategic nuclear attack, and hence greatly increase the risk of accidental nuclear war—a classic case of failure to control the march of technology.

“To defend Ukrainian democracy requires us to do our best to avoid nuclear war and at the same time to accept the risk that it may happen.”

This is the most dangerous situation the world has faced since the Cuban Missile Crisis. To defend Ukrainian democracy requires us to do our best to avoid nuclear war and at the same time to accept the risk that it may happen—a tricky, high-stakes navigation that attracts criticism from all sides. We have every right to debate the importance and the dangers involved in intervening in regional conflicts like Ukraine in order to defend democratic values. Yet considering the stakes involved, there has been relatively little public discussion of those risks. The public is not accustomed to thinking about nuclear war. It may not appreciate the seriousness of the repeated Russian threats to use nuclear weapons, the limits imposed on both Russian and NATO strategy by the need to avoid a nuclear cataclysm, or the speed and ease with which a single use of nuclear weapons can escalate into full-scale nuclear war.

We face an unstable situation where the Ukraine war—or any future confrontation between nuclear powers—can quickly get out of hand. In the longer run, and assuming that the current situation in Ukraine can somehow be resolved, we urgently need strengthened and updated agreements on arms control and the avoidance of accidental nuclear war that take into account newly empowered nuclear powers, new technology and new weapons systems. We don’t have a lot of time for trial and error.

Featured image by Max Kukurudziak on Unsplash, public domain

September 25, 2022

Jean-Luc Godard’s filmic legacy

Jean-Luc Godard died at the age of 91 on 13 September 2022 at his home in Rolle, on Lake Geneva, in Switzerland. The uncompromising French-Swiss cineaste was arguably one of the most influential filmmakers of the last 60 years. With his innovative approach to cinema, he broke with tried-and-tested conventions and taught us instead how to see and hear films differently and thus how to perceive life, and the world, from a more nuanced and critical angle. Throughout his life, Godard remained a film critic who critiqued cinema not with a pen but with film itself.

Godard once stated that he was a child of the Cinémathèque in Paris. Indeed, his formation as a critic and filmmaker began in the early 1950s at this venerated institution, founded and run by Henri Langlois. There, he saw innumerable films from Hollywood, Europe, and other parts of the world, silent and sound films, of all possible genres. There, he also met his fellow film aficionados François Truffaut, Éric Rohmer, Jacques Rivette, Claude Chabrol, and others. Under the wing of film theorist André Bazin, these young enthusiasts worked as critics at the then newly founded and now legendary film journal Cahiers du Cinéma.

With the knowledge accrued during that time, Godard released his first feature film in 1960, the low-budget production Breathless. Already in this first film, Godard showed his aesthetic program. He set out to find his own filmic language by violating the conventions and rules established in Hollywood and European mainstream cinema. As an open sceptic of the traditionally made French films of the 1950s, Godard expressed in his 1960s’ films that after the Second World War different films had to be made.

He sought inspiration in Hollywood B movies, gangster and crime genres, the MGM musical, and also Italian Neorealism. This hodgepodge of contrasting influences is on display in Godard’s copious output of the 1960s. In Contempt (1963), he reflects on the melodrama, in Band of Outsiders (1964) and Made in U.S.A. (1966) on the gangster genre, in Alphaville (1965) on sci-fi films, in A Woman Is a Woman (1961) on the Hollywood musical, in The Little Soldier (1963) on spy thrillers, and in Vivre sa vie (1962) and A Married Woman (1964) on Ingmar Bergman’s cinema.

In these films, Godard experimented with unconventional storytelling techniques, with freeing up the camera in handheld shots, and with unusual techniques of editing the images and sounds, such as jump-cuts and abrupt beginnings and endings of music. This experimentation with the filmic material generated an alternative approach to filmmaking, which only became common currency, primarily in television making, within the last 10 to 20 years, as seen in many HBO, Netflix, Amazon Prime, and Starz productions.

In the late 1960s, Godard’s work became increasingly political, with a decisive and dogmatic Marxist program. Together with Jean-Pierre Gorin and the Dziga Vertov Group collective, Godard engaged in radical agitprop filmmaking from British Sounds (1969) to Tout Va Bien (1972), distancing himself more and more from the established norms of the film industry.

A critical break occurred in his life when he abandoned his left-wing agenda and moved away from Paris to the Alpine city of Grenoble in 1974. There, as a pioneer of early videomaking, Godard independently produced socially critical television programs such as Numéro deux (1975) and Ici et ailleurs (1976). The experimentation with image and sound during these years—as expressed in the name of his production company SonImage (SoundImage)—bore fruit in his films of the second wave, made in the 1980s on Lake Geneva, where he moved in the late 1970s.

With his return to cinema, he created such poetic and lyrical films as Sauve qui peut (la vie) (1980), Passion (1982), and First Name: Carmen (1983). In these films, Godard’s mature style comes to the fore. By now, he had internalized the rebellious experiments of his youth, once aimed at Hollywood; they had become his unique, distinct style and now served his profoundly moving, philosophical, and deeply intellectual films.

One of these productions is Godard’s monumental, experimental television epic Histoire(s) du cinéma, an eight-part series completed between 1988 and 1998. In a highly original fashion, as a virtuoso of the images and sounds, Godard came to terms with film art by presenting his unique, very personal history of cinema in an essayistic style, challenging his audience to the limits of their sensorial and intellectual capabilities. His provocative, encyclopedic work explores the function and influence of cinema in history and society of the twentieth century. With this work, Godard expanded even further his filmic style and presented the material as superimposed layers of images and sounds creating a challenging collage consisting of textual, visual, and musical quotations from the vast vault of Western art, literature, and philosophy. With the collage the viewer-listener is forced to not only reevaluate cinema and Western thinking in general, but also to question the image- and soundtrack’s function in an audio-visual artwork per se.

After Histoire(s) du cinéma, he continued to make films with his expressive, profound, and innovative language, exploring historical, social-critical, and political issues. Of his late films, Nouvelle Vague (1990), his intimate self-portrait JLG/JLG, autoportrait de décembre (1994), In Praise of Love (2001), and his experimentation with 3D film, Adieu au langage (2014), are noteworthy. With his last film, Le Livre d’image (2018), Godard returned to the questions he raised in Histoire(s) du cinéma. This last production in particular, for which he was awarded the Special Palme d’Or at the Cannes Festival, creates the facility of wonderment or judgment in the audience, bringing the viewer-listener closer to Godard’s own, personal position as a director and innovative filmmaker.

In the words of film critic Manohla Dargis, Godard “insisted that we come to him, that we navigate the densities of his thought, decipher his epigrams and learn a new language” (New York Times). Perhaps it will take years, if not decades, until we are able to grasp—perhaps only partly—the profound, complex audio-visual language that “this vexing giant, the cool guy with the dark sunglasses and cigar” (Dargis) left behind for us to decipher.

Featured image by Ian W. Hill via Flickr, CC BY-NC 2.0

September 23, 2022

Can you match the famous opening line to the story? [Quiz]

Do you know your Austen from your Orwell? Consider yourself a literature whiz? Or do you just love a compelling story opening? Try out this quiz and see if you can match the famous opening line to the story and put your knowledge to the test. Good luck!

If you want to find out more about the power of a good opening and other interesting storytelling techniques, check out The Short Story: A Very Short Introduction from our Very Short Introduction series. The “Openings” chapter is currently available to sample for free on the Oxford Academic platform.

Featured image by Florian Klauer via Unsplash, public domain
