Robert H. Edwards's Blog: Great 20th century mysteries, page 6

March 25, 2024

Voynich Reconsidered: the "truncation effect"

In the course of my research for Voynich Reconsidered (Schiffer Publishing, 2024), I made a series of statistical tests on the Voynich manuscript, based on the incidence of hapax legomena: that is, words that occur only once.

As Alexander Boxer demonstrated in his presentation to the Voynich 2022 conference, the prevalence of hapax legomena can be an indicator of the presence or absence of semantic meaning. Specifically, for a document of a given length, a lower incidence of hapax legomena is indicative of meaningful content. A higher incidence is a "fingerprint" of gibberish.

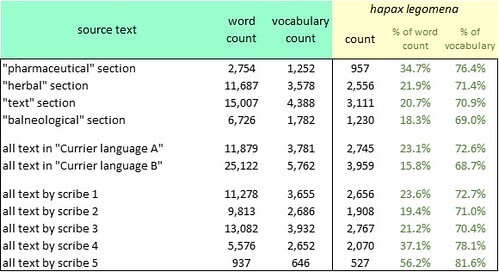

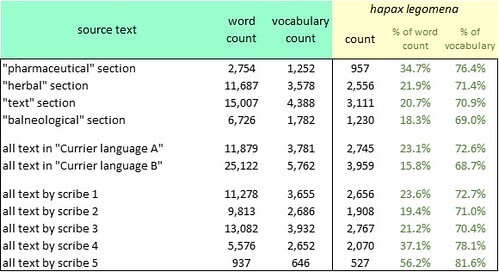

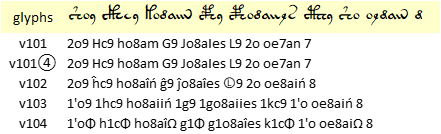

Below is a summary of the incidence of hapax legomena in the major thematic sections of the manuscript; in the two "languages" identified by Prescott Currier; and in the pages written by the five scribes identified by Dr Lisa Fagin Davis.

The incidence of hapax legomena in the Voynich manuscript, by section, "language" and scribe. Author's analysis.

These calculations show that the incidence of hapax legomena, as a percentage of the word count, is generally in the range 15 to 37 percent, depending on which element of the manuscript we measure. The longer the chunk of text, the lower the incidence of hapax legomena. This is as we should expect, since a longer text gives more opportunity for a word to be re-used.

The outlier is the work of Scribe 5, who wrote five pages of the "herbal" section and one page of the "text" section.

As a comparator, I have only one example of presumed gibberish: the compilation of "angelic messages" written by John Dee and Edward Kelley between 1581 and 1583 (as cited by Boxer). This document, of about 4,000 words, has an incidence of hapax legomena of about 57 percent of the word count.

Against this, the Voynich manuscript generally gives the impression of meaningful content, however we slice it: with the possible exception of the contribution of Scribe 5.

The "truncation effect"

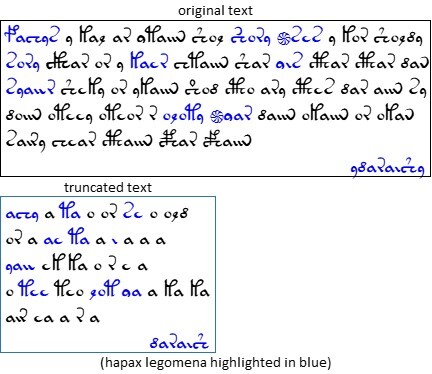

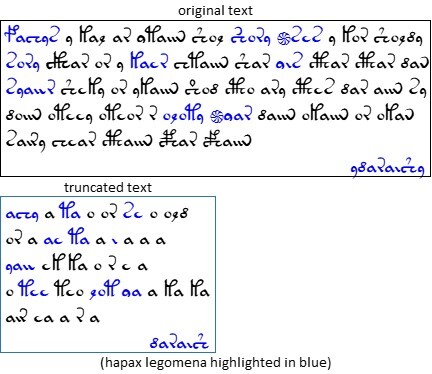

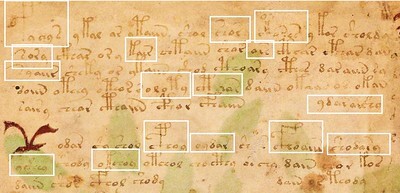

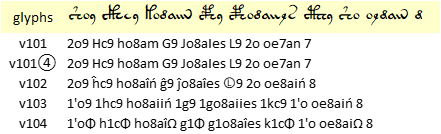

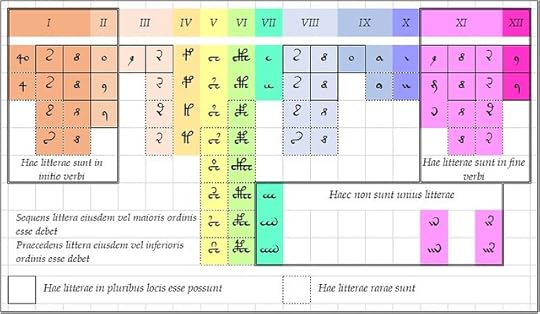

In one set of tests, on the text in Language B, I examined the impact of removing the initial and final glyphs of every "word". This made all the "words" of one or two glyphs disappear; and shortened all the remaining "words" by two glyphs. An example is shown below.

Voynich manuscript, page f1r, lines 1-6: identification of hapax legomena. Author's analysis.

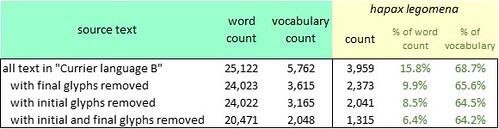

Voynich manuscript: the incidence of hapax legomena after removing initial or final glyphs. Author's analysis.

The removal of initial or final glyphs, or both, had the effect of reducing the incidence of hapax legomena: that is, increasing the probability that there was meaning in the remaining text. I was inclined to call this phenomenon "the truncation effect".

In a natural language, to some extent this effect should be expected. For example if a document in English contained the words "fonder", "wonder" and "yonder", after removal of the initial letter the three would become "onder". If any of these three words had been a hapax legomenon, it would cease to be so; and the incidence of hapax legomena would probably decrease.

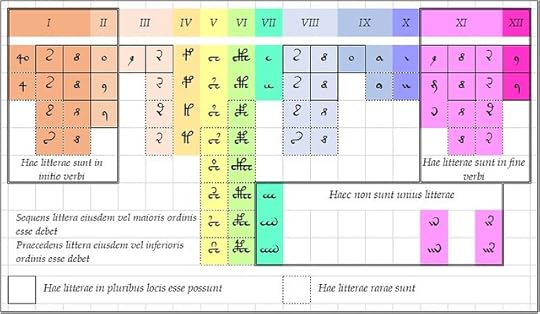

The order of the letters

I thought that perhaps we could use the "truncation effect" for another purpose. Perhaps we could determine whether, when the Voynich scribes mapped from the presumed natural languages to glyphs, they preserved the order of the letters within words.

In natural languages, what we call a meaningful text is made up of words in which the letters are in their original order. In the Voynich manuscript, we have reason to suspect that this is not the case. This will be evident from a reading of two technical papers on the Voynich manuscript:

Them's not the breaks

With regard to the Voynich manuscript, we have seen that we can remove all the initial glyphs, and all the final glyphs, and the text somehow appears to retain whatever meaning it may possess. My first reaction was that the interior glyphs held the meaning of the text, and that the initial and final glyphs were something like punctuation, or maybe junk.

But removing glyphs from Voynich "words" makes those "words" impossibly short, by comparison with natural languages. In the v101 transliteration, the average length of "words" is 3.90 glyphs; if we remove two glyphs from each "word", the average drops to 2.44 glyphs. To my knowledge, there is no natural language in which the words are that short.

If the initial and final glyphs are really punctuation or junk, it is an inescapable inference that the Voynich "words" are not words. Prescott Currier said as much, at Mary D'Imperio's seminar in 1976. Specifically, the spaces are not word breaks.

By analogy, let's imagine that we wished to hide the Gettysburg Address within brackets of junk letters. Firstly we would insert spaces at random points between the letters. Thus, the first two words:

An alphabetic cipher?

If the initial and final glyphs are not punctuation or junk, then another possibility is that they are indicators of an alphabetic sorting, of the kind that D'Imperio's and Zattera’s papers imply. In English, we expect that after an alphabetic sorting, words will often begin with a or e, and will often end in t or u.

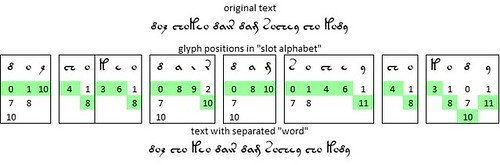

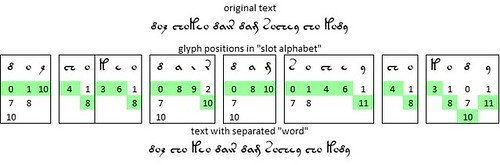

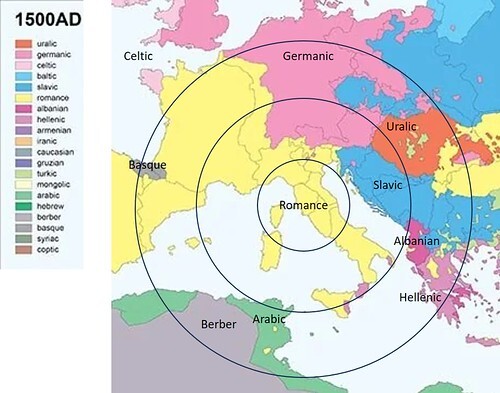

Likewise, in the Voynich manuscript, "words" often begin with the v101 glyphs {4o} or {o} or {9}, and often end with the glyph {9}. This would be the case if {4o} and {o} were among the first letters of the Voynich “alphabet” and {9} was both among the first and among the last; and if the glyphs in every word were in “alphabetic” order. This phenomenon found expression in Zattera's "slot alphabet", in which, for example, {4} is invariably in slot 0, {o} is usually in slot 1, and {9} can be in slot 1 or slot 11.

Further research

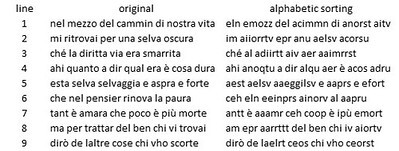

To me it seems that the "truncation effect" needs testing on an appropriate document. We need a document of a comparable length to the Voynich manuscript, say at least 40,000 words. Also, as long as we assume a medieval European provenance for the text (which I think is a reasonable assumption), we might start with a document in medieval Latin or Italian. I am working on Dante’s La Divina Commedia and will report in another post.

As Alexander Boxer demonstrated in his presentation to the Voynich 2022 conference, the prevalence of hapax legomena can be an indicator of the presence or absence of semantic meaning. Specifically, for a document of a given length, a lower incidence of hapax legomena is indicative of meaningful content. A higher incidence is a "fingerprint" of gibberish.

Below is a summary of the incidence of hapax legomena in the major thematic sections of the manuscript; in the two "languages" identified by Prescott Currier; and in the pages written by the five scribes identified by Dr Lisa Fagin Davis.

The incidence of hapax legomena in the Voynich manuscript, by section, "language" and scribe. Author's analysis.

These calculations show that the incidence of hapax legomena, as a percentage of the word count, is generally in the range 15 to 37 percent, depending on which element of the manuscript we measure. The longer the chunk of text, the lower the incidence of hapax legomena. This is as we should expect, since a longer text gives more opportunity for a word to be re-used.

The outlier is the work of Scribe 5, who wrote five pages of the "herbal" section and one page of the "text" section.

As a comparator, I have only one example of presumed gibberish: the compilation of "angelic messages" written by John Dee and Edward Kelley between 1581 and 1583 (as cited by Boxer). This document, of about 4,000 words, has an incidence of hapax legomena of about 57 percent of the word count.

Against this, the Voynich manuscript generally gives the impression of meaningful content, however we slice it: with the possible exception of the contribution of Scribe 5.

The "truncation effect"

In one set of tests, on the text in Language B, I examined the impact of removing the initial and final glyphs of every "word". This made all the "words" of one or two glyphs disappear; and shortened all the remaining "words" by two glyphs. An example is shown below.

Voynich manuscript, page f1r, lines 1-6: identification of hapax legomena. Author's analysis.

Voynich manuscript: the incidence of hapax legomena after removing initial or final glyphs. Author's analysis.

The removal of initial or final glyphs, or both, had the effect of reducing the incidence of hapax legomena: that is, increasing the probability that there was meaning in the remaining text. I was inclined to call this phenomenon "the truncation effect".

In a natural language, to some extent this effect should be expected. For example if a document in English contained the words "fonder", "wonder" and "yonder", after removal of the initial letter the three would become "onder". If any of these three words had been a hapax legomenon, it would cease to be so; and the incidence of hapax legomena would probably decrease.

The order of the letters

I thought that perhaps we could use the "truncation effect" for another purpose. Perhaps we could determine whether, when the Voynich scribes mapped from the presumed natural languages to glyphs, they preserved the order of the letters within words.

In natural languages, what we call a meaningful text is made up of words in which the letters are in their original order. In the Voynich manuscript, we have reason to suspect that this is not the case. This will be evident from a reading of two technical papers on the Voynich manuscript:

• Mary D'Imperio's paper "An Application of PTAH to the Voynich Manuscript", written for the National Security Agency around 1978 but classified until 2009;D'Imperio and Zattera made a compelling case that the "words" in the Voynich manuscript had a specific interior sequence of glyphs: what D'Imperio called "the five states"; what Zattera called a “slot alphabet”; and what, in a natural language, we would call an alphabetical order.

• Massimilano Zattera’s paper “A New Transliteration Alphabet Brings New Evidence of Word Structure and Multiple "Languages" in the Voynich Manuscript”, presented at the Voynich 2022 conference.

Them's not the breaks

With regard to the Voynich manuscript, we have seen that we can remove all the initial glyphs, and all the final glyphs, and the text somehow appears to retain whatever meaning it may possess. My first reaction was that the interior glyphs held the meaning of the text, and that the initial and final glyphs were something like punctuation, or maybe junk.

But removing glyphs from Voynich "words" makes those "words" impossibly short, by comparison with natural languages. In the v101 transliteration, the average length of "words" is 3.90 glyphs; if we remove two glyphs from each "word", the average drops to 2.44 glyphs. To my knowledge, there is no natural language in which the words are that short.

If the initial and final glyphs are really punctuation or junk, it is an inescapable inference that the Voynich "words" are not words. Prescott Currier said as much, at Mary D'Imperio's seminar in 1976. Specifically, the spaces are not word breaks.

By analogy, let's imagine that we wished to hide the Gettysburg Address within brackets of junk letters. Firstly we would insert spaces at random points between the letters. Thus, the first two words:

four scorewould become, for example:

f ou rs c or eWe would then add random letters before and after each block of letters. We would choose the initial letter randomly from the first five letters of the Latin alphabet (a through e) and the final letter randomly from the last five (v through z). The result would be somewhat as follows:

cfy douy crsw acv borx aey.Something like this could be occurring in the Voynich manuscript, where a small set of glyphs predominates as initial glyphs, and another small set predominates as final glyphs.

An alphabetic cipher?

If the initial and final glyphs are not punctuation or junk, then another possibility is that they are indicators of an alphabetic sorting, of the kind that D'Imperio's and Zattera’s papers imply. In English, we expect that after an alphabetic sorting, words will often begin with a or e, and will often end in t or u.

Likewise, in the Voynich manuscript, "words" often begin with the v101 glyphs {4o} or {o} or {9}, and often end with the glyph {9}. This would be the case if {4o} and {o} were among the first letters of the Voynich “alphabet” and {9} was both among the first and among the last; and if the glyphs in every word were in “alphabetic” order. This phenomenon found expression in Zattera's "slot alphabet", in which, for example, {4} is invariably in slot 0, {o} is usually in slot 1, and {9} can be in slot 1 or slot 11.

Further research

To me it seems that the "truncation effect" needs testing on an appropriate document. We need a document of a comparable length to the Voynich manuscript, say at least 40,000 words. Also, as long as we assume a medieval European provenance for the text (which I think is a reasonable assumption), we might start with a document in medieval Latin or Italian. I am working on Dante’s La Divina Commedia and will report in another post.

Published on March 25, 2024 22:32

•

Tags:

alexander-boxer, hapax-legomena, voynich, zattera

March 21, 2024

Voynich Reconsidered: La Divina Commedia

Any mapping of the Voynich text to natural languages is necessarily based on the assumption that the Voynich scribes created the manuscript by some kind of process based on precursor documents in such languages. To my mind, the text gives little clue as to what those languages might have been. (The illustrations possibly yield clues, but I have no expertise in that area.)

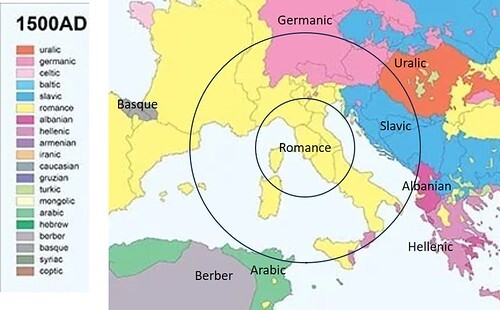

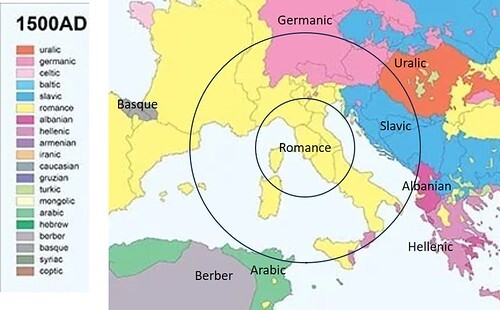

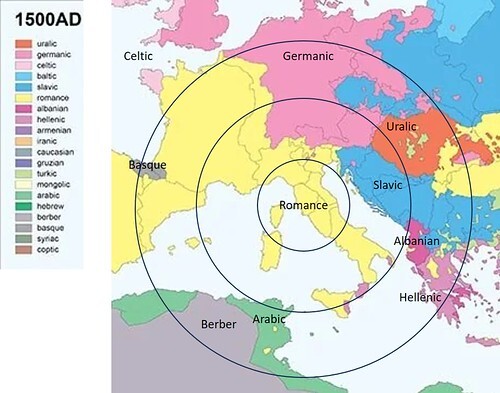

However, the distance-decay hypothesis (to which I have alluded in other posts) gives us some reason to think that a manuscript found in Frascati, Italy, might have some link with the languages spoken or written in Italy.

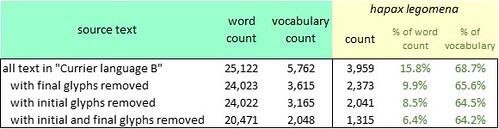

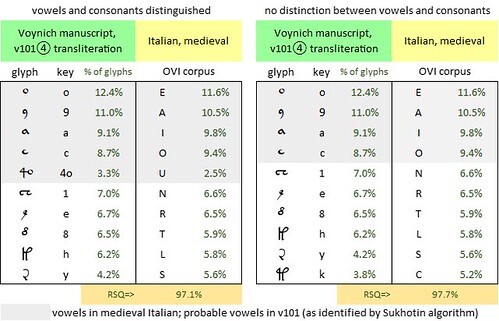

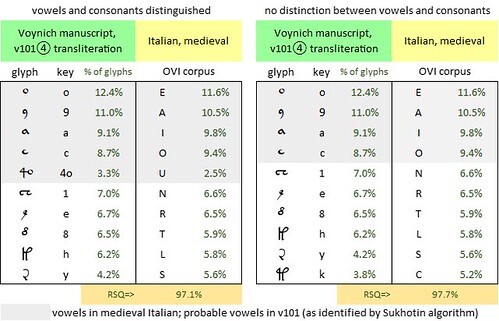

In my early research for my book Voynich Reconsidered (Schiffer Publishing, 2024), I experimented with mapping from the Voynich text to medieval Italian. The mapping was based on the frequencies of the Voynich glyphs (in various transliterations of my own devising) and the frequencies of the letters in medieval Italian (as represented by the OVI corpus). This mapping yielded a sequence of Italian text strings. Nearly all of the strings could be found as real words in the corpus.

The OVI corpus is intended primarily for speakers of Italian (of whom, I am not one), and does not include translations of Italian words into any other language. I was not able to determine the meanings of all of the words that I found; nor to judge whether the words, in the mapped sequence, made any sense.

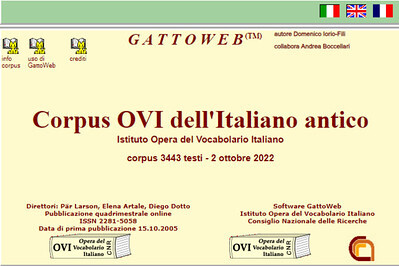

The home page of the OVI corpus of medieval Italian at http://gattoweb.ovi.cnr.it. Image credit: Istituto Opera del Vocabulario Italiano.

In any case, I wondered whether the OVI corpus was an accurate reflection of the presumed source documents that the Voynich scribes had on their walls or tables. My reasons for doubt included the following:

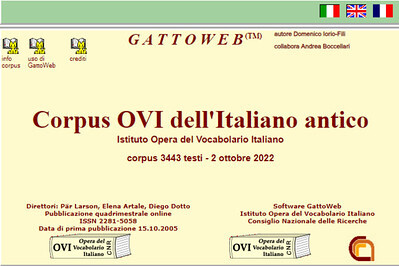

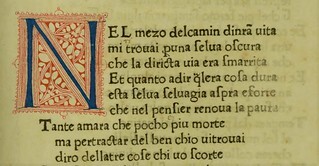

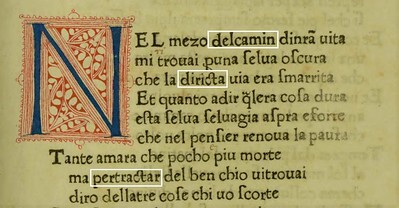

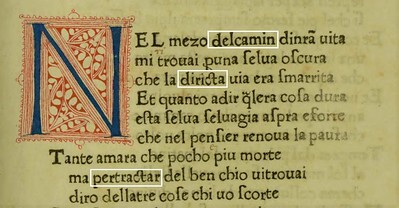

The first nine lines of La Divina Commedia, Foligno edition of 1472. Image credit: Biblioteca Europea di Informazione e Cultura; public domain.

The full text of La Divina Commedia is available online, from Project Gutenberg and elsewhere; but as far as I can tell, all the online versions are written in (what appears to be) a modernised Italian which diverges from that of the 1472 edition. To take just one example, namely the first line:

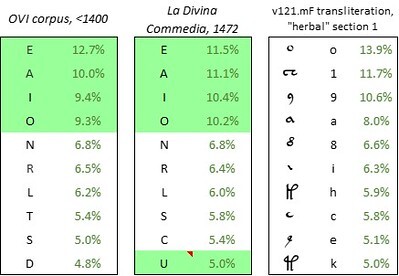

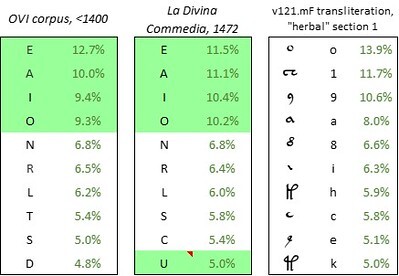

Of the twenty-one letters in the alphabet of the reconstructed Commedia, nine had the same rankings as those in the OVI corpus. For example, the seven most frequent letters in OVI and in the Commedia were E, A, I, O, N, R, and L, in that order. Only from the eighth letter onwards were there some slight divergences in the rankings. In particular the letter U, which in the 1472 edition was also used in place of V, moved into the top ten.

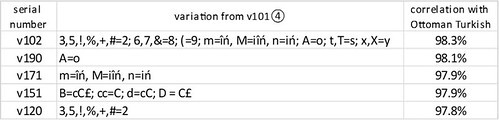

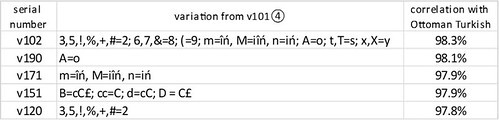

Having generated a frequency table for Italian letters, I then tested a range of alternative transliterations of the Voynich manuscript, which I numbered from v101④ to v202. As I have mentioned in other posts, the ④ reflects my view that the v101 glyph pair {4o} is a single glyph; in all my transliterations, I assigned this glyph the Unicode symbol ④.

In prioritising my transliterations vis-à-vis any presumed precursor language, I used two metrics, as follows:

On this metric, the transliteration which best fitted the Italian language of 1472 (as represented by my reconstruction of the Foligno edition of La Divina Commedia) was the one that I numbered v121.mF. This transliteration yielded both the highest frequency correlation (98.3 percent) and the lowest average frequency difference (0.30 percent).

What I call v121 is in fact a family of transliterations, with some variations. The differences between v121 and v101 are as follows:

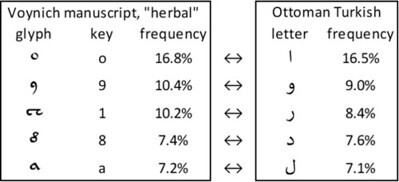

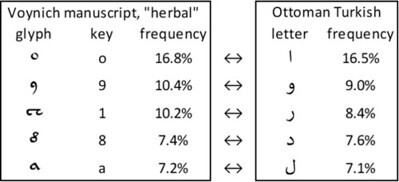

The top ten letters in the OVI corpus and in La Divina Commedia (reconstructed 1472 edition), and the top ten glyphs in the Voynich manuscript, v121.mF transliteration, “herbal” section. Author’s analysis.

The next heroic step was to explore these juxtapositions as correspondences or mappings: in other words, to conjecture that the Voynich scribes mapped the Italian E to the glyph {o}, the Italian A to the glyph {1}, and so on.

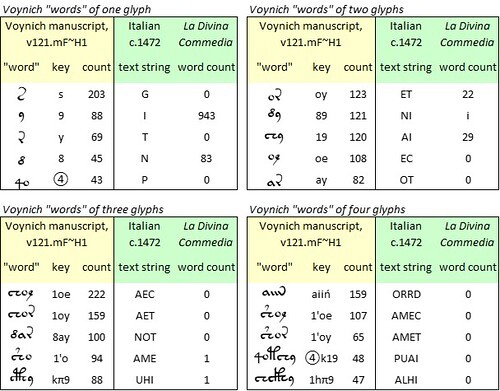

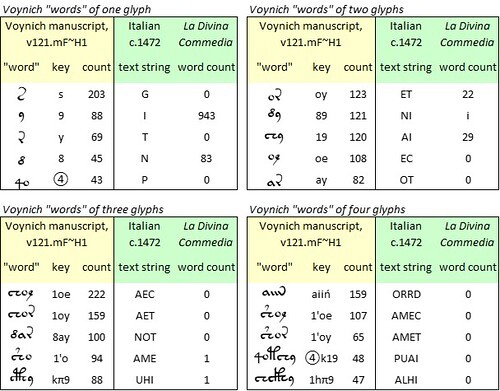

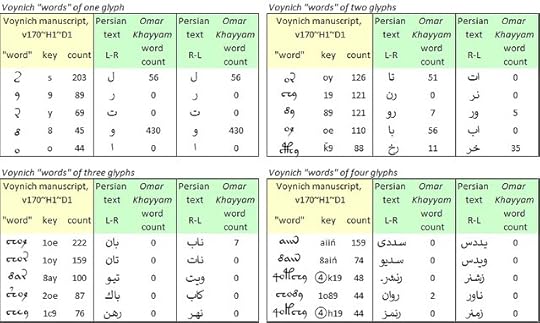

It was simple enough to test this conjecture. We could take, say, the five most common Voynich “words” and see whether they map to real words in Italian. In order to see to what extent such a mapping might hold water, I selected the most common Voynich “words” of one, two, three and four glyphs. The results were as follows.

The five most common "words" of one, two, three and four glyphs in the Voynich manuscript, v121.mF transliteration, "herbal" section; and test mappings of these "words" to Italian as written in 1472. Author's analysis.

Notwithstanding the good statistical fit between the v121.mF transliteration and La Divina Commedia, this test did not produce many real Italian words, apart from a few words of one or two letters.

We may draw a number of possible conclusions: that the precursor languages of the Voynich manuscript do not include Italian; or that they do, but not Italian as it appeared in printed books around 1472; or that the period is about right, but that La Divina Commedia is a not a good representation of the precursor documents. Alternatively: that the v121.mF transliteration is not the best one; or that, as Prescott Currier said in 1976, the Voynich “words” are not really words.

Finally, we could recall Massimiliano’s concept of the “slot” alphabet” and conjecture that the Voynich scribes re-ordered the glyphs in each Voynich “word”. In that case, we could conceive the possibility that at some point, a scribe came across the real Italian word TEMA, which he mapped to the glyph string {yo’1}. Since the “slot alphabet” did not permit this sequence, he re-ordered it to {1’oy}, or {2oy} as it is written in v101: which is a real Voynich “word”.

However, the distance-decay hypothesis (to which I have alluded in other posts) gives us some reason to think that a manuscript found in Frascati, Italy, might have some link with the languages spoken or written in Italy.

In my early research for my book Voynich Reconsidered (Schiffer Publishing, 2024), I experimented with mapping from the Voynich text to medieval Italian. The mapping was based on the frequencies of the Voynich glyphs (in various transliterations of my own devising) and the frequencies of the letters in medieval Italian (as represented by the OVI corpus). This mapping yielded a sequence of Italian text strings. Nearly all of the strings could be found as real words in the corpus.

The OVI corpus is intended primarily for speakers of Italian (of whom, I am not one), and does not include translations of Italian words into any other language. I was not able to determine the meanings of all of the words that I found; nor to judge whether the words, in the mapped sequence, made any sense.

The home page of the OVI corpus of medieval Italian at http://gattoweb.ovi.cnr.it. Image credit: Istituto Opera del Vocabulario Italiano.

In any case, I wondered whether the OVI corpus was an accurate reflection of the presumed source documents that the Voynich scribes had on their walls or tables. My reasons for doubt included the following:

• The OVI corpus consists of texts written before the year 1400.For these reasons, I thought it worthwhile to calculate the Italian letter frequencies on the basis of another corpus, from a slightly later period than the OVI. For this purpose, I again turned to Dante Alighieri’s La Divina Commedia, specifically the first printed edition, launched by Johann Neumeister in the city of Foligno in the year 1472.

• Of five samples from the parchment of the Voynich manuscript, the most recent (from folio 8) was carbon-dated to the period 1394 to 1458 with 92.2 percent probability.

• We might reasonably assume that the scribes wrote the manuscript after the latest date of production of the parchment.

• We might conjecture that the scribes worked from printed source documents (as opposed to manuscripts). Since Johannes Gutenberg introduced commercial printing in Europe around 1455, this assumption would date the Voynich text to about 1455 at the earliest.

• The Italian language (like any language) surely evolved over time, and must have undergone changes in the letter frequencies.

The first nine lines of La Divina Commedia, Foligno edition of 1472. Image credit: Biblioteca Europea di Informazione e Cultura; public domain.

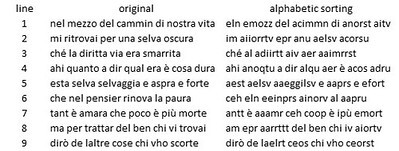

The full text of La Divina Commedia is available online, from Project Gutenberg and elsewhere; but as far as I can tell, all the online versions are written in (what appears to be) a modernised Italian which diverges from that of the 1472 edition. To take just one example, namely the first line:

The 1472 edition reads: Nel mezo delcamin dinrã uitaI wanted to reconstruct the text that the Voynich scribes would have seen if, hypothetically, they had had the 1472 edition of the Commedia on their work table. Accordingly, working from the Gutenberg version, I restored the abbreviations and spelling conventions that I could detect in the 1472 edition. Having done so, I recalculated the letter frequencies.

The Gutenberg version reads: Nel mezzo del cammin di nostra vita.

Of the twenty-one letters in the alphabet of the reconstructed Commedia, nine had the same rankings as those in the OVI corpus. For example, the seven most frequent letters in OVI and in the Commedia were E, A, I, O, N, R, and L, in that order. Only from the eighth letter onwards were there some slight divergences in the rankings. In particular the letter U, which in the 1472 edition was also used in place of V, moved into the top ten.

Having generated a frequency table for Italian letters, I then tested a range of alternative transliterations of the Voynich manuscript, which I numbered from v101④ to v202. As I have mentioned in other posts, the ④ reflects my view that the v101 glyph pair {4o} is a single glyph; in all my transliterations, I assigned this glyph the Unicode symbol ④.

In prioritising my transliterations vis-à-vis any presumed precursor language, I used two metrics, as follows:

• The statistical correlation (R-squared} between the glyph frequencies in the transliteration and the letter frequencies in the precursor language;The frequency correlations do not differ much from one transliteration to another, and are typically well over 90 percent for any pairing of transliteration and precursor language. I am inclined therefore to use the average frequency difference as the more powerful metric.

• The average frequency difference, defined as the average of the absolute differences between glyph frequencies and equally-ranked letter frequencies in the precursor language.

On this metric, the transliteration which best fitted the Italian language of 1472 (as represented by my reconstruction of the Foligno edition of La Divina Commedia) was the one that I numbered v121.mF. This transliteration yielded both the highest frequency correlation (98.3 percent) and the lowest average frequency difference (0.30 percent).

What I call v121 is in fact a family of transliterations, with some variations. The differences between v121 and v101 are as follows:

• As mentioned above, I replaced the v101 {4o} with the single glyph ④.The differences between v121.mF and v121 are as follows:

• I redefined the v101 glyph {2} and all its variants {3}, {5}, {!}, {%}, {+} and {#} as 1', in other words as the glyph {1} plus a catch-all accent {‘}.

• I disaggregated the v101 glyph {m} into the string iiN; but allowed the v101 {M} and {n} to remain as distinct glyphs from {N}.As an illustration, a juxtaposition of the frequencies of the top ten letters in the 1472 La Divina Commedia, and those of the top ten glyphs in v121.mF, looks like this:

• I disaggregated each of the “bench gallows” glyphs into its vertical component and its “bench”. The “bench” resembles an elongated {1}, but I did not wish to assume that it was the same as {1}; so I assigned it a new key, π (the Greek letter pi). Under this process, {F} became fπ, {G} became gπ, and so on.

The top ten letters in the OVI corpus and in La Divina Commedia (reconstructed 1472 edition), and the top ten glyphs in the Voynich manuscript, v121.mF transliteration, “herbal” section. Author’s analysis.

The next heroic step was to explore these juxtapositions as correspondences or mappings: in other words, to conjecture that the Voynich scribes mapped the Italian E to the glyph {o}, the Italian A to the glyph {1}, and so on.

It was simple enough to test this conjecture. We could take, say, the five most common Voynich “words” and see whether they map to real words in Italian. In order to see to what extent such a mapping might hold water, I selected the most common Voynich “words” of one, two, three and four glyphs. The results were as follows.

The five most common "words" of one, two, three and four glyphs in the Voynich manuscript, v121.mF transliteration, "herbal" section; and test mappings of these "words" to Italian as written in 1472. Author's analysis.

Notwithstanding the good statistical fit between the v121.mF transliteration and La Divina Commedia, this test did not produce many real Italian words, apart from a few words of one or two letters.

We may draw a number of possible conclusions: that the precursor languages of the Voynich manuscript do not include Italian; or that they do, but not Italian as it appeared in printed books around 1472; or that the period is about right, but that La Divina Commedia is a not a good representation of the precursor documents. Alternatively: that the v121.mF transliteration is not the best one; or that, as Prescott Currier said in 1976, the Voynich “words” are not really words.

Finally, we could recall Massimiliano’s concept of the “slot” alphabet” and conjecture that the Voynich scribes re-ordered the glyphs in each Voynich “word”. In that case, we could conceive the possibility that at some point, a scribe came across the real Italian word TEMA, which he mapped to the glyph string {yo’1}. Since the “slot alphabet” did not permit this sequence, he re-ordered it to {1’oy}, or {2oy} as it is written in v101: which is a real Voynich “word”.

March 20, 2024

Voynich Reconsidered: Persian as precursor

In the sourse of my ongoing search for meaning in the text of the Voynich manuscript, I have investigated the hypothesis that the underlying or precursor language was Persian.

Persian, like Arabic, Hebrew and Ottoman Turkish, uses an abjad script in which the long vowels are written but the short vowels usually are not. The pronunciation of a written Persian word therefore has to be inferred from the context, or from diacritics which the writer may have placed above or below the consonants.

Pareidolia

In any exercise of mapping from the Voynich manuscript to a language in an abjad script, there is a risk of pareidolia: of seeing words that are not necessarily there. This is because of what I am inclined to call the abjad effect: in the absence of short vowels, any short string of letters is quite likely to be a real word.

A classic example of pareidolia: the “Face on Mars”. Image credit: NASA.

Here is an example.

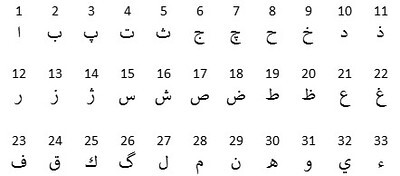

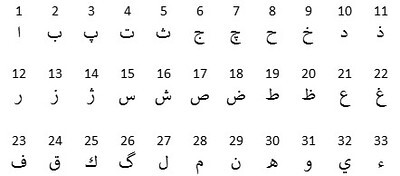

The modern Persian alphabet has thirty-three letters, as follows, with acknowledgement to Dr Mehrzad Mansouri, “Examining the frequency of Persian characters and their suitability on the computer keyboard” (Journal of Linguistics and Khorasan Dialects, No. 7, fall and winter 2013).

We can use the =RAND() function in Microsoft Excel to generate three random numbers between 1 and 33. My first run of this function yielded the following numbers:

To my mind it follows that, if we wish to map the Voynich manuscript to Persian (or to any other language which uses an abjad script), we cannot be satisfied with finding individual words. We must seek to map at least a whole line, or a paragraph, or a whole page, and the result must make sense.

Upsides of abjad

The upside of any abjad script proposed as a precursor to the Voynich text is that the words are typically short.

For example, I have a corpus of Persian, drawn from the works of forty-eight Persian poets. It contains 8,102,157 words with 26,572,744 letters. The average length of words is 3.27 letters.

In Arabic, Dr Jiří Milička of Charles University has studied a large diachronic corpus (Corpus Linguae Arabicae Universalis Diachronicus, or CLAUDia), with about 420 million words. He found that in the fifteenth century, the average length of Arabic words was 4.12 letters.

The Voynich manuscript, in the v101 transliteration, has an average of 3.90 glyphs per "word". Thus in terms of length, Persian and Arabic words match Voynich "words" much better than those in most European languages.

A Voynich-Persian mapping

The researcher on the Voynich Ninja forum proposed a mapping from Voynich glyphs to Persian letters based (in my understanding) on visual similarities. For example, the v101 glyphs {m} and {N} were proposed to map (as I understand, interchangeably) to the Persian letters ﻡ (m) and ﻥ (n).

A consequence of this proposed mapping is that in the resulting Persian text, the letters have a frequency distribution which is greatly different from that of the Persian language as a whole.

With thanks to bi3mw of the Voynich ninja forum, I was able to calculate that in my Persian corpus, the letters ﻡ (m) and ﻥ (n) together accounted for 13.7 percent of the total. In the Voynich manuscript, if we use the v101 transliteration, the glyphs {m} and {N}, and their variants {M} and {n}, together account for 4.5 percent of all the glyphs.

The proposed mapping would therefore create a Persian text in which the letters ﻡ (m) and ﻥ (n) were unusually rare: a text quite unlike the corpus of written Persian.

In any language, individual documents can have letter frequency distributions that diverge from that the language as a whole. In my experience, these divergences are not large, and are typically evident from around the tenth most common letter onwards. For example, in the OVI corpus of medieval Italian, and in Dante’s La Divina Commedia, the nine most common letters are the same, and in the same order. The tenth and eleventh most common letters in OVI are respectively D and C; in La Divina Commedia, they are C and D.

Likewise, we can make a comparison of my corpus of Persian poets with the Ruba’iyat of Omar Khayyam, which in my copy has 19,876 letters. The top ten letters are the same in the Ruba’iyat as in the larger corpus, although in a slightly different order. For the whole Persian alphabet, the correlation of letter frequencies between the Ruba’iyat and the corpus is 98.6 percent. In short, we do not expect an individual document to have a greatly different letter frequency from that of the language as a whole.

There can of course be exceptions, such as lipograms: texts which intentionally omit a selected letter or letters. For example, in the English language we have the novel Gadsby by Ernest Vincent Wright, and in French La Disparition by Georges Perec, neither of which contains the letter e, the most common letter in both English and French. We have to hope that the Voynich manuscript is not a lipogram.

Letter frequencies

My own predilection is to ignore any visual similarities that may exist between Voynich glyphs and letters in natural languages, and to focus on glyph and letter frequencies. This was a device used by Edgar Allen Poe in his short story The Gold Bug, and by Sir Arthur Conan Doyle in his Sherlock Holmes tale The Adventure of the Dancing Men. In both cases the protagonist used frequency comparisons to solve enciphered messages.

In the case of the Voynich manuscript, following Occam’s Razor, we could adopt the simplest assumptions: that the scribes worked from a precursor document or documents in a natural language or languages; and that they chose to use, or the producer instructed them to use, a one-to-one mapping between letters and glyphs. If so, the frequencies of the precursor letters should have been preserved. If the precursor language was Persian, then the frequencies of the Persian letters should match, in some respect, the frequencies of the glyphs that the scribes committed to vellum.

As a start, we could look at the ten most common symbols in Persian and in Voynich.

For Persian, I used the above-mentioned corpus of the works of forty-eight poets: for which my colleague bi3mw kindly calculated the letter frequencies. For the Voynich manuscript, I used a range of alternative transliterations to Glen Claston’s classic v101 transliteration. To select the best-fitting transliteration, I used the average frequency difference (which I defined in another post on this platform). The best fit was the transliteration which I numbered v170. This had the following differences from v101:

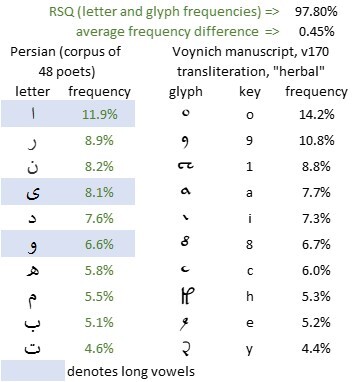

The results are as follows.

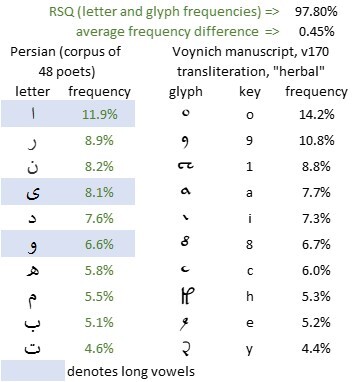

The Voynich manuscript, v170 transliteration, “herbal” section, the ten most frequent glyphs; and the ten most frequent letters in a corpus of the works of forty-eight Persian poets (frequencies by courtesy of bi3mw)

Here it is tempting to see not merely a visual resemblance but a correspondence between the v101 glyph {9} and the Persian letter و. But to minimise the element of subjectivity, I felt that it would be better to stay with the frequencies, and to see where that led.

The correlation between the v170 glyph frequencies and the Persian letter frequencies is 97.8 percent. This correlation is comparable with my results for many modern and medieval European languages. They do not, in themselves, imply that Persian is more or less likely than a European language to be a precursor of the Voynich manuscript.

The common “words”

If the above juxtapositions of frequencies have any merit as correspondences (we might say: mappings), then we should be able to map some of the common “words” in the Voynich manuscript to letter strings in Persian, and see whether this process yields any real Persian words.

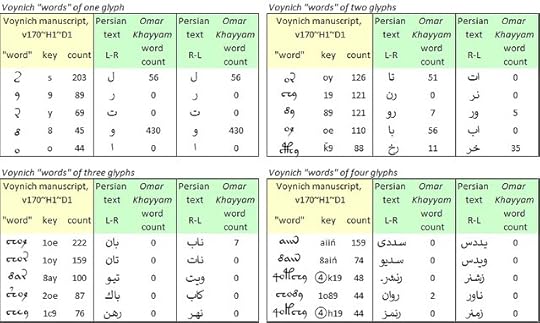

In the v170 transliteration, "herbal" section, I identified the top five "words" of one, two, three and four glyphs, and mapped each of them to Persian, one glyph at a time, according to the rankings in the frequency tables.

The results were as follows:

Voynich manuscript, v170 transliteration, “herbal” section: the five most frequent “words” of one, two, three and four glyphs; and test mappings to Persian. Author's analysis.

In short, the results are weak. Notwithstanding the abjad effect, few of the Voynich “words” map to real words in Persian, whether we read the glyphs from left to right or from right to left.

Postscript: the scribes

Persian is, and always has been, written from right to left; and by (I think) universal agreement, the Voynich manuscript is written from left to right. Therefore, if the manuscript is in Persian, we are compelled to conjecture that the Voynich scribes did not work directly from written documents. I imagine them, rather, taking dictation.

If so (and this would apply to dictation in any language), I could imagine that they would not always be sure where words began and ended. That might help us understand why the "word" breaks are so irregular, and why there are so many instances of what Zattera calls "separable words".

Finally: the quality and consistency of the Voynich script leads us to believe that the scribes were professionals. They made a living by writing what other people wanted written. If they wrote from dictation, is it possible they wrote phonetically from a language that they did not themselves understand?

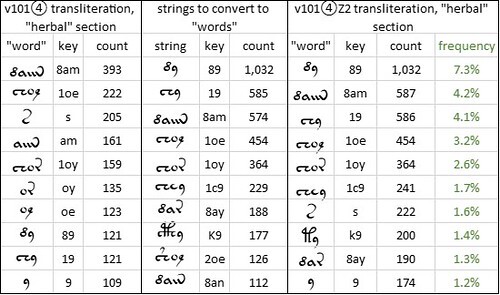

Separable “words”

In any phonetic language, as George Zipf observed when he formulated Zipf’s Law, the most frequent words are short: like "the" and "and" in English. In an abjad language, with short vowels omitted, the most frequent words will be even shorter. The top ten words in medieval Persian have two letters, or one: for example و ("and").

Some of the top ten Voynich "words" are too long to match the top ten Persian words. Examples, in the v170 transliteration, are the "words" {1oe}, {1oy} and {8ay}.

It seems to me possible that Voynich “words” do not map very well to Persian words because the “words” are not words. As Preston Currier remarked at Mary D’Imperio’s Voynich seminar in 1976:

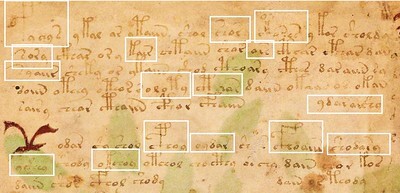

Zattera, in his paper at Voynich 2022, did not specify the "separable words" that he had identified. I have not yet formulated a systematic approach to the identification of "separable words". For any given transliteration, my provisional process has the following steps:

A sequence of steps to identify the “separable words” in the Voynich manuscript, v101 transliteration. Author’s analysis.

If the Voynich manuscript has a Persian precursor, we may need to apply Zattera's concept of "separable words", and break up some of the common Voynich “words”. That is a line of research that seems worth some effort.

Other languages

The approach that I outlined above would apply equally to a mapping from Voynich to any phonetic natural language.

I have already tested mappings from corpora and selected documents in several medieval languages including Albanian, Arabic, Bohemian, English, French, Galician-Portuguese, German, Italian, Latin and Ottoman Turkish. Some of my results are reported in Voynich Reconsidered: others have appeared elsewhere on this platform, or may appear in due course. Readers who would like me to test other languages are invited to send me examples of the respective corpora, preferably as .txt files.

Persian, like Arabic, Hebrew and Ottoman Turkish, uses an abjad script in which the long vowels are written but the short vowels usually are not. The pronunciation of a written Persian word therefore has to be inferred from the context, or from diacritics which the writer may have placed above or below the consonants.

Pareidolia

In any exercise of mapping from the Voynich manuscript to a language in an abjad script, there is a risk of pareidolia: of seeing words that are not necessarily there. This is because of what I am inclined to call the abjad effect: in the absence of short vowels, any short string of letters is quite likely to be a real word.

A classic example of pareidolia: the “Face on Mars”. Image credit: NASA.

Here is an example.

The modern Persian alphabet has thirty-three letters, as follows, with acknowledgement to Dr Mehrzad Mansouri, “Examining the frequency of Persian characters and their suitability on the computer keyboard” (Journal of Linguistics and Khorasan Dialects, No. 7, fall and winter 2013).

We can use the =RAND() function in Microsoft Excel to generate three random numbers between 1 and 33. My first run of this function yielded the following numbers:

5, 12, 23.The fifth, twelfth and twenty-third letters in the Persian alphabet are ف, ر, ث. These three letters, read from right to left, form the string

فرثwhich is a real word in Persian, according to the Dehkhoda dictionary, maintained by the Dehkhoda Lexicon Institute and International Centre for Persian Studies at the University of Tehran. Thus we are able by a random process to create a real word.

To my mind it follows that, if we wish to map the Voynich manuscript to Persian (or to any other language which uses an abjad script), we cannot be satisfied with finding individual words. We must seek to map at least a whole line, or a paragraph, or a whole page, and the result must make sense.

Upsides of abjad

The upside of any abjad script proposed as a precursor to the Voynich text is that the words are typically short.

For example, I have a corpus of Persian, drawn from the works of forty-eight Persian poets. It contains 8,102,157 words with 26,572,744 letters. The average length of words is 3.27 letters.

In Arabic, Dr Jiří Milička of Charles University has studied a large diachronic corpus (Corpus Linguae Arabicae Universalis Diachronicus, or CLAUDia), with about 420 million words. He found that in the fifteenth century, the average length of Arabic words was 4.12 letters.

The Voynich manuscript, in the v101 transliteration, has an average of 3.90 glyphs per "word". Thus in terms of length, Persian and Arabic words match Voynich "words" much better than those in most European languages.

A Voynich-Persian mapping

The researcher on the Voynich Ninja forum proposed a mapping from Voynich glyphs to Persian letters based (in my understanding) on visual similarities. For example, the v101 glyphs {m} and {N} were proposed to map (as I understand, interchangeably) to the Persian letters ﻡ (m) and ﻥ (n).

A consequence of this proposed mapping is that in the resulting Persian text, the letters have a frequency distribution which is greatly different from that of the Persian language as a whole.

With thanks to bi3mw of the Voynich ninja forum, I was able to calculate that in my Persian corpus, the letters ﻡ (m) and ﻥ (n) together accounted for 13.7 percent of the total. In the Voynich manuscript, if we use the v101 transliteration, the glyphs {m} and {N}, and their variants {M} and {n}, together account for 4.5 percent of all the glyphs.

The proposed mapping would therefore create a Persian text in which the letters ﻡ (m) and ﻥ (n) were unusually rare: a text quite unlike the corpus of written Persian.

In any language, individual documents can have letter frequency distributions that diverge from that the language as a whole. In my experience, these divergences are not large, and are typically evident from around the tenth most common letter onwards. For example, in the OVI corpus of medieval Italian, and in Dante’s La Divina Commedia, the nine most common letters are the same, and in the same order. The tenth and eleventh most common letters in OVI are respectively D and C; in La Divina Commedia, they are C and D.

Likewise, we can make a comparison of my corpus of Persian poets with the Ruba’iyat of Omar Khayyam, which in my copy has 19,876 letters. The top ten letters are the same in the Ruba’iyat as in the larger corpus, although in a slightly different order. For the whole Persian alphabet, the correlation of letter frequencies between the Ruba’iyat and the corpus is 98.6 percent. In short, we do not expect an individual document to have a greatly different letter frequency from that of the language as a whole.

There can of course be exceptions, such as lipograms: texts which intentionally omit a selected letter or letters. For example, in the English language we have the novel Gadsby by Ernest Vincent Wright, and in French La Disparition by Georges Perec, neither of which contains the letter e, the most common letter in both English and French. We have to hope that the Voynich manuscript is not a lipogram.

Letter frequencies

My own predilection is to ignore any visual similarities that may exist between Voynich glyphs and letters in natural languages, and to focus on glyph and letter frequencies. This was a device used by Edgar Allen Poe in his short story The Gold Bug, and by Sir Arthur Conan Doyle in his Sherlock Holmes tale The Adventure of the Dancing Men. In both cases the protagonist used frequency comparisons to solve enciphered messages.

In the case of the Voynich manuscript, following Occam’s Razor, we could adopt the simplest assumptions: that the scribes worked from a precursor document or documents in a natural language or languages; and that they chose to use, or the producer instructed them to use, a one-to-one mapping between letters and glyphs. If so, the frequencies of the precursor letters should have been preserved. If the precursor language was Persian, then the frequencies of the Persian letters should match, in some respect, the frequencies of the glyphs that the scribes committed to vellum.

As a start, we could look at the ten most common symbols in Persian and in Voynich.

For Persian, I used the above-mentioned corpus of the works of forty-eight poets: for which my colleague bi3mw kindly calculated the letter frequencies. For the Voynich manuscript, I used a range of alternative transliterations to Glen Claston’s classic v101 transliteration. To select the best-fitting transliteration, I used the average frequency difference (which I defined in another post on this platform). The best fit was the transliteration which I numbered v170. This had the following differences from v101:

• I replaced the v101 glyph pair {4o} by the single Unicode symbol ④Furthermore, since the Voynich manuscript has evidence of multiple languages, I thought it advisable to work with only the “herbal” section, which appeared to use a single homogenous language. I followed Rene Zandbergen’s definition of the “herbal” section: that is, the folios which contain full-page drawings of objects that resemble plants. We do not need to assume that the text of this section has anything to do with plants or herbs.

• I disaggregated three related v101 glyphs as follows: m => iiN, M= iiiN, n => iN.

The results are as follows.

The Voynich manuscript, v170 transliteration, “herbal” section, the ten most frequent glyphs; and the ten most frequent letters in a corpus of the works of forty-eight Persian poets (frequencies by courtesy of bi3mw)

Here it is tempting to see not merely a visual resemblance but a correspondence between the v101 glyph {9} and the Persian letter و. But to minimise the element of subjectivity, I felt that it would be better to stay with the frequencies, and to see where that led.

The correlation between the v170 glyph frequencies and the Persian letter frequencies is 97.8 percent. This correlation is comparable with my results for many modern and medieval European languages. They do not, in themselves, imply that Persian is more or less likely than a European language to be a precursor of the Voynich manuscript.

The common “words”

If the above juxtapositions of frequencies have any merit as correspondences (we might say: mappings), then we should be able to map some of the common “words” in the Voynich manuscript to letter strings in Persian, and see whether this process yields any real Persian words.

In the v170 transliteration, "herbal" section, I identified the top five "words" of one, two, three and four glyphs, and mapped each of them to Persian, one glyph at a time, according to the rankings in the frequency tables.

The results were as follows:

Voynich manuscript, v170 transliteration, “herbal” section: the five most frequent “words” of one, two, three and four glyphs; and test mappings to Persian. Author's analysis.

In short, the results are weak. Notwithstanding the abjad effect, few of the Voynich “words” map to real words in Persian, whether we read the glyphs from left to right or from right to left.

Postscript: the scribes

Persian is, and always has been, written from right to left; and by (I think) universal agreement, the Voynich manuscript is written from left to right. Therefore, if the manuscript is in Persian, we are compelled to conjecture that the Voynich scribes did not work directly from written documents. I imagine them, rather, taking dictation.

If so (and this would apply to dictation in any language), I could imagine that they would not always be sure where words began and ended. That might help us understand why the "word" breaks are so irregular, and why there are so many instances of what Zattera calls "separable words".

Finally: the quality and consistency of the Voynich script leads us to believe that the scribes were professionals. They made a living by writing what other people wanted written. If they wrote from dictation, is it possible they wrote phonetically from a language that they did not themselves understand?

Separable “words”

In any phonetic language, as George Zipf observed when he formulated Zipf’s Law, the most frequent words are short: like "the" and "and" in English. In an abjad language, with short vowels omitted, the most frequent words will be even shorter. The top ten words in medieval Persian have two letters, or one: for example و ("and").

Some of the top ten Voynich "words" are too long to match the top ten Persian words. Examples, in the v170 transliteration, are the "words" {1oe}, {1oy} and {8ay}.

It seems to me possible that Voynich “words” do not map very well to Persian words because the “words” are not words. As Preston Currier remarked at Mary D’Imperio’s Voynich seminar in 1976:

“That’s just the point – they’re not words!”I am mindful that, as Massimiliano Zattera demonstrated at the Voynich 2022 conference, the Voynich manuscript contains thousands of “words” that look like compound “words”. To take just one example: the v101 “word” {2coehcc89} can be disaggregated into {2coe} {hcc89} or {2co} {ehcc89} or {2co} {e} {hcc89}, and in each case the components are Voynich “words”. As I observed in a previous post, Zattera estimated that such “separable words” accounted for 10.4 percent of the text and for 37.1 percent of the vocabulary of the manuscript.

Zattera, in his paper at Voynich 2022, did not specify the "separable words" that he had identified. I have not yet formulated a systematic approach to the identification of "separable words". For any given transliteration, my provisional process has the following steps:

* to identify the twenty most common “words” (delimited by spaces or line breaks), by means of an online word frequency counter such as https://www.browserling.com/tools/wor...

* to exclude single-glyph "words" such as {s}: by analogy with single-letter words such as "e" in Italian, which do not carry the implication that other occurrences of such letters are words

* to exclude "words" which are parts of other frequent "words": for example, to exclude {am} which is part of {8am}

* for each of the remaining "words", to search for other occurrences of those “words” as strings within “words”;

* to select the ten most frequent such strings;

* to convert such strings to “words” by adding a preceding and following space.

A sequence of steps to identify the “separable words” in the Voynich manuscript, v101 transliteration. Author’s analysis.

If the Voynich manuscript has a Persian precursor, we may need to apply Zattera's concept of "separable words", and break up some of the common Voynich “words”. That is a line of research that seems worth some effort.

Other languages

The approach that I outlined above would apply equally to a mapping from Voynich to any phonetic natural language.

I have already tested mappings from corpora and selected documents in several medieval languages including Albanian, Arabic, Bohemian, English, French, Galician-Portuguese, German, Italian, Latin and Ottoman Turkish. Some of my results are reported in Voynich Reconsidered: others have appeared elsewhere on this platform, or may appear in due course. Readers who would like me to test other languages are invited to send me examples of the respective corpora, preferably as .txt files.

Published on March 20, 2024 08:49

•

Tags:

omar-khayyam, persian, voynich

March 18, 2024

Voynich Reconsidered: Arabic as precursor

In my ongoing search for meaning in the text of the Voynich manuscript, I have considered Arabic as a possible precursor language.

In order to assess Arabic as a precursor, we could start by examining whether there are any statistical similarities between the Voynich manuscript and Arabic documents of its era (which we may reasonably assume to be the fifteenth century). One useful metric is the frequency distribution of Arabic letters as written at that time.

An example is the letter frequency distribution in البداية والنهاية (The Beginning and the End), by Abulfida' ibn Kathir (1300-1373).

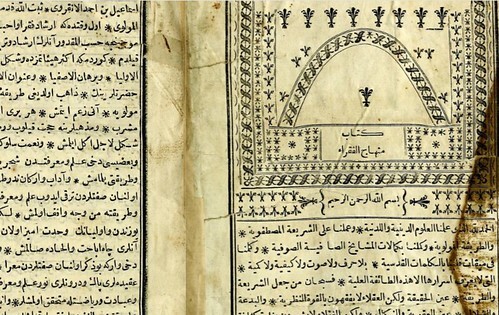

A modern edition of Al bidayah wal nihayah by ibn Kathir, in twenty-one volumes. Image credit: amazon.

For the Voynich manuscript, Glen Claston’s v101 transliteration is a starting point, The frequencies of the glyphs in the v101 transliteration, in descending order, and the frequencies of the letters in the works of ibn Kathir, have a correlation of 94.4 percent. That in itself is not remarkable: with two short sequences in descending order, it's easy to obtain a correlation of over 90 percent. Many European languages, as well as Hebrew, Persian and Ottoman Turkish, yield a similar correlation with the v101 transliteration.

However, it seems to me that the juxtaposition of these frequency tables opens the possibility of a provisional mapping of any chunk of Voynich text to Arabic. Having done so, it would probably be necessary to reverse the order of the letters in each transliterated word (for which there exist online tools such as https://onlinetexttools.com/reverse-text).

If the resulting text contained any recognisable Arabic words, we might be on the right track. If not, it might be necessary to try different approaches.

Here it should be remembered that Arabic uses an abjad script, in which the long vowels are written but the short vowels usually are not.

Alternative transliterations

One necessary consideration is whether the v101 transliteration is the right one to use.

v101 has a basic character set of seventy-one glyphs, which is far more than the number of letters in any alphabet of a phonetic natural language. There are several groups of visually similar glyphs such as {6}, {7}, {8} and {&}; it makes sense to combine each such group into a single glyph. We can disaggregate glyphs that look like strings, e.g. {m} => {iN}, {n} => {iN}. In both cases, that will reduce the size of the character set and make v101 more like a representation of a natural language.

Conversely, we can make distinctions between initial, interior, final and isolated glyphs. That will increase the size of the character set.

In all cases, these variants change the frequency table and consequently change the mapping from glyphs to any natural language.

In exploring Arabic and other natural languages as possible precursors to the Voynich manuscript, I felt it advisable to examine alternatives to v101. Accordingly, I developed a range of alternative transliterations of the Voynich text, all based on v101 but differing from v101 in one or more respects. I numbered these transliterations v101④ through v202. The ④ signifies that in all the transliterations, I treated the v101 glyph pair {4o} as a single glyph, to which I assigned the Unicode symbol ④.

For comparison of the Voynich text with the Arabic language, I used letter frequencies derived from the works of Ibn Kathir.

To prioritize my Voynich transliterations, I started by calculating the statistical correlations between the glyph frequencies and the Arabic letter frequencies, using the R-squared function (RSQ in Microsoft Excel). However, as expected with two short descending sequences, most of the correlations were well in excess of 90 percent. Substantial differences between transliterations, for example combining the {2} group of glyphs, resulted in quite small changes in the frequency correlations.

Frequency differences

I therefore adopted an alternative metric, namely the average frequency difference. Mathematically, this is the average of the absolute differences between the frequency of a precursor letter and the frequency of the equally ranked Voynich glyph. My idea was that the lowest average frequency difference should represent the best fit between a transliteration and the presumed precursor language.

On this metric, I found that the transliteration which I had numbered v171 was the best fit for ibn Kathir's Arabic alphabet. Apart from the treatment of {4o}, the v171 transliteration has the following differences from v101:

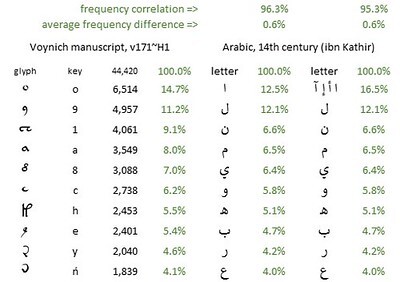

Below is a juxtaposition of the frequencies of the top ten glyphs in the v171 transliteration, and of those of the top ten Arabic letters. The average frequency difference between v171 and Ibn Kathir's Arabic (calculated on all 43 letters) is 0.64 percent.

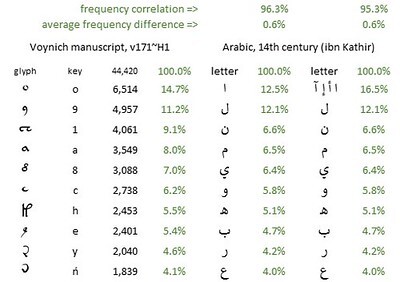

The frequencies of the ten most common glyphs in the Voynich manuscript; and those of the ten most common letters in fourteenth-century Arabic. The glyph frequencies are from author's v171 transliteration, "herbal" section. The Arabic letter frequencies are based on the works of Ibn Kathir, with the variants of alef (ﺍ ﺃ ﺇ ﺁ) shown separately or combined. Author's analysis.

The next step is to explore the potential of these juxtapositions as correspondences or mappings. For example, the Voynich {o} could map to and from the Arabic ا (alef). Thereby, we could map some of the most common Voynich "words", such as {8am}, {oe} and {1oe}, to text strings in Arabic. We could then search appropriate corpora of the Arabic language to determine whether these strings are real words.

Test mappings

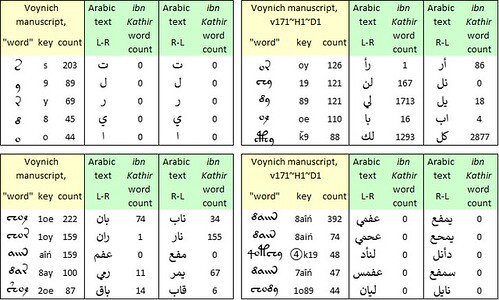

Below is a summary of my test mappings of the top five Voynich "words" of one, two, three and four glyphs.

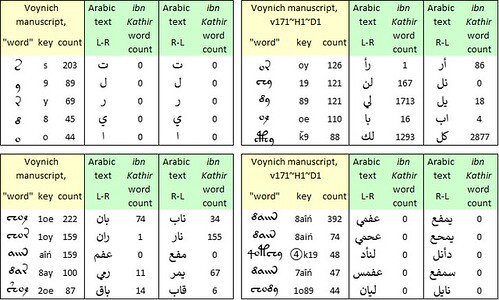

Test mappings of the top five "words" of one, two, three and four glyphs in the Voynich manuscript, v171 transliteration, "herbal" section, to text strings in medieval Arabic. Author's analysis.

We see here what I am inclined to call the abjad effect, which I had already observed with Hebrew, Persian and Ottoman Turkish. Most of the Voynich "words" of two or three glyphs map to real Arabic words. They do so whether the glyphs are read from left to right, or from right to left. But in an abjad script, almost any random string of two or three letters will be a real word. At the levels of one glyph and four glyphs, the mapping breaks down.

An alphabetic cipher?

These test mappings do not entirely exclude Arabic as a precursor language of the Voynich manuscript.

As Massimiliano Zattera demonstrated at the Voynich 2022 conference, in almost every Voynich "word" the glyphs follow a sequence, a kind of alphabetic order. Indeed, Zattera called the sequence a "slot alphabet". We are compelled to imagine that if the Voynich scribes mapped their manuscript from precursor documents, they re-ordered the glyphs in every "word". That would imply that we could take any one of our Arabic text strings, scramble the letters, and reverse-engineer it to the same Voynich "word" from which we started.

For example, in the above tests I mapped the Voynich "word" {1o89} to the Arabic strings ليان and نايل which are not real words. However, both strings have an anagram الين which is rare, occurring just six times in ibn Kathir. According to our mapping, if the Voynich scribes had read it from right to left, they would have mapped it to {o981}. If read from left to right, it would map to {189o}. The slot alphabet does not permit either of these sequences, so the scribes would have re-ordered them to {1o89}.

To take this idea further would require a good knowledge of Arabic (preferably medieval Arabic), a head for anagrams or Scrabble, and plenty of computing power (or patience).

In order to assess Arabic as a precursor, we could start by examining whether there are any statistical similarities between the Voynich manuscript and Arabic documents of its era (which we may reasonably assume to be the fifteenth century). One useful metric is the frequency distribution of Arabic letters as written at that time.

An example is the letter frequency distribution in البداية والنهاية (The Beginning and the End), by Abulfida' ibn Kathir (1300-1373).

A modern edition of Al bidayah wal nihayah by ibn Kathir, in twenty-one volumes. Image credit: amazon.

For the Voynich manuscript, Glen Claston’s v101 transliteration is a starting point, The frequencies of the glyphs in the v101 transliteration, in descending order, and the frequencies of the letters in the works of ibn Kathir, have a correlation of 94.4 percent. That in itself is not remarkable: with two short sequences in descending order, it's easy to obtain a correlation of over 90 percent. Many European languages, as well as Hebrew, Persian and Ottoman Turkish, yield a similar correlation with the v101 transliteration.

However, it seems to me that the juxtaposition of these frequency tables opens the possibility of a provisional mapping of any chunk of Voynich text to Arabic. Having done so, it would probably be necessary to reverse the order of the letters in each transliterated word (for which there exist online tools such as https://onlinetexttools.com/reverse-text).

If the resulting text contained any recognisable Arabic words, we might be on the right track. If not, it might be necessary to try different approaches.

Here it should be remembered that Arabic uses an abjad script, in which the long vowels are written but the short vowels usually are not.

Alternative transliterations

One necessary consideration is whether the v101 transliteration is the right one to use.

v101 has a basic character set of seventy-one glyphs, which is far more than the number of letters in any alphabet of a phonetic natural language. There are several groups of visually similar glyphs such as {6}, {7}, {8} and {&}; it makes sense to combine each such group into a single glyph. We can disaggregate glyphs that look like strings, e.g. {m} => {iN}, {n} => {iN}. In both cases, that will reduce the size of the character set and make v101 more like a representation of a natural language.

Conversely, we can make distinctions between initial, interior, final and isolated glyphs. That will increase the size of the character set.

In all cases, these variants change the frequency table and consequently change the mapping from glyphs to any natural language.

In exploring Arabic and other natural languages as possible precursors to the Voynich manuscript, I felt it advisable to examine alternatives to v101. Accordingly, I developed a range of alternative transliterations of the Voynich text, all based on v101 but differing from v101 in one or more respects. I numbered these transliterations v101④ through v202. The ④ signifies that in all the transliterations, I treated the v101 glyph pair {4o} as a single glyph, to which I assigned the Unicode symbol ④.

For comparison of the Voynich text with the Arabic language, I used letter frequencies derived from the works of Ibn Kathir.

To prioritize my Voynich transliterations, I started by calculating the statistical correlations between the glyph frequencies and the Arabic letter frequencies, using the R-squared function (RSQ in Microsoft Excel). However, as expected with two short descending sequences, most of the correlations were well in excess of 90 percent. Substantial differences between transliterations, for example combining the {2} group of glyphs, resulted in quite small changes in the frequency correlations.

Frequency differences

I therefore adopted an alternative metric, namely the average frequency difference. Mathematically, this is the average of the absolute differences between the frequency of a precursor letter and the frequency of the equally ranked Voynich glyph. My idea was that the lowest average frequency difference should represent the best fit between a transliteration and the presumed precursor language.

On this metric, I found that the transliteration which I had numbered v171 was the best fit for ibn Kathir's Arabic alphabet. Apart from the treatment of {4o}, the v171 transliteration has the following differences from v101:

• m=INTo have some assurance of mapping from a single Voynich “language”, I used the text of the “herbal” section only.

• M=iIN

• n=iN.

Below is a juxtaposition of the frequencies of the top ten glyphs in the v171 transliteration, and of those of the top ten Arabic letters. The average frequency difference between v171 and Ibn Kathir's Arabic (calculated on all 43 letters) is 0.64 percent.

The frequencies of the ten most common glyphs in the Voynich manuscript; and those of the ten most common letters in fourteenth-century Arabic. The glyph frequencies are from author's v171 transliteration, "herbal" section. The Arabic letter frequencies are based on the works of Ibn Kathir, with the variants of alef (ﺍ ﺃ ﺇ ﺁ) shown separately or combined. Author's analysis.

The next step is to explore the potential of these juxtapositions as correspondences or mappings. For example, the Voynich {o} could map to and from the Arabic ا (alef). Thereby, we could map some of the most common Voynich "words", such as {8am}, {oe} and {1oe}, to text strings in Arabic. We could then search appropriate corpora of the Arabic language to determine whether these strings are real words.

Test mappings

Below is a summary of my test mappings of the top five Voynich "words" of one, two, three and four glyphs.

Test mappings of the top five "words" of one, two, three and four glyphs in the Voynich manuscript, v171 transliteration, "herbal" section, to text strings in medieval Arabic. Author's analysis.

We see here what I am inclined to call the abjad effect, which I had already observed with Hebrew, Persian and Ottoman Turkish. Most of the Voynich "words" of two or three glyphs map to real Arabic words. They do so whether the glyphs are read from left to right, or from right to left. But in an abjad script, almost any random string of two or three letters will be a real word. At the levels of one glyph and four glyphs, the mapping breaks down.

An alphabetic cipher?

These test mappings do not entirely exclude Arabic as a precursor language of the Voynich manuscript.

As Massimiliano Zattera demonstrated at the Voynich 2022 conference, in almost every Voynich "word" the glyphs follow a sequence, a kind of alphabetic order. Indeed, Zattera called the sequence a "slot alphabet". We are compelled to imagine that if the Voynich scribes mapped their manuscript from precursor documents, they re-ordered the glyphs in every "word". That would imply that we could take any one of our Arabic text strings, scramble the letters, and reverse-engineer it to the same Voynich "word" from which we started.

For example, in the above tests I mapped the Voynich "word" {1o89} to the Arabic strings ليان and نايل which are not real words. However, both strings have an anagram الين which is rare, occurring just six times in ibn Kathir. According to our mapping, if the Voynich scribes had read it from right to left, they would have mapped it to {o981}. If read from left to right, it would map to {189o}. The slot alphabet does not permit either of these sequences, so the scribes would have re-ordered them to {1o89}.

To take this idea further would require a good knowledge of Arabic (preferably medieval Arabic), a head for anagrams or Scrabble, and plenty of computing power (or patience).

Published on March 18, 2024 11:09

•

Tags:

arabic, ibn-kathir, voynich

March 9, 2024

Voynich Reconsidered: Turkish as precursor

According to Ethel Lilian Voynich, her husband Wilfred Voynich discovered or purchased his eponymous manuscript in Frascati, Italy. In recent posts on this platform, I advanced the idea that wherever the manuscript was produced, it was more likely to have had a shorter journey to Frascati than a longer one. This is simply an example of the well-documented distance-decay hypothesis, whereby human interactions are more probable over short distances than over long ones.

Accordingly, I thought that if the Voynich manuscript had had precursor documents in natural languages, the most probable languages were those written and spoken within a specifiable geographical radius of Italy. Among such languages, those of the Romance group seemed the most promising; followed by those of the Germanic and Slavic groups. If we chose to cast our net wider within Europe, we could include languages of the Hellenic and Uralic groups.

The purpose of this article is to cast the net farther afield, to the periphery of medieval Europe, and to assess the possibility that the precursor language, or one of the precursor languages, of the Voynich manuscript was Turkish.

Radio-carbon analysis, performed on four samples of the vellum, yielded dates between 1400 and 1461, with standard errors of between 35 and 38 years. We might reasonably make the assumption (and it is no more than that) that the manuscript was written sometime in the fifteenth century. If so, and if its precursor documents were in Turkish, those documents would have been written in Ottoman Turkish.

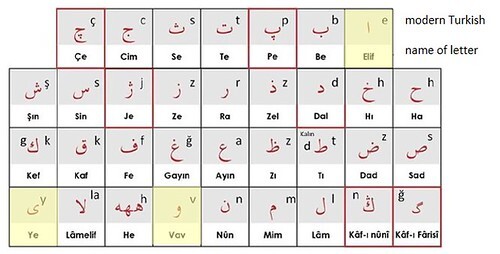

The letters in Ottoman Turkish, like those in Persian, were written in a variant of the Arabic script. This script was an abjad, in which the long vowels were written but the short vowels were not (except sometimes as diacritics above or below the letters).

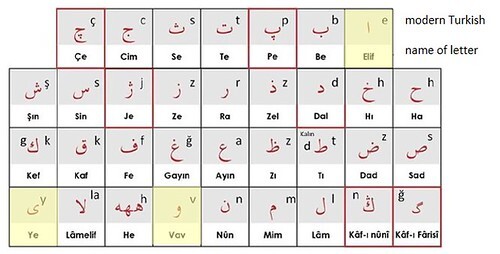

The Ottoman Turkish alphabet. Image by courtesy of Bedrettin Yazan. Highlighted letters are long vowels, which can sometimes also serve as consonants.

In order to assess whether documents in Ottoman Turkish could have been a precursor of the Voynich text, I followed a variant of a strategy which I have outlined in other articles on this platform. The elements of the strategy were as follows:

Corpora of reference

My friend Mustafa Kamel Sen kindly provided a link to an archive of documents in Ottoman Turkish, held by Ataturk University, at https://archive.org/details/dulturk?&.... Among these were a number of documents by authors who lived in the fifteenth or sixteenth centuries.

These documents had been scanned by OCR software and required some cleaning to remove Latin letters and punctuation marks, as well as Arabic numerals, which had been misidentified by the software. A less tractable problem was that some of the original manuscripts had been written in a cramped or compact style, and the software often did not recognise the word breaks. For example, the OCR “word” فرآنوسنت is recognisable as two words by the final ن, and should have been فرآن وسنت. A consequence is that the average length of words, and the incidence of hapax legomena, are overstated.

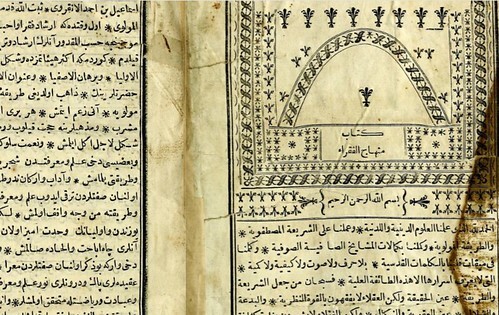

From these archives I selected, as a corpus of reference, Kitab-I Minhac ul-Fukara by Ismail Ankaravi. Although the title is an Arabic phrase, meaning approximately The Book of the Path of the Poor, the text is in Ottoman Turkish. Ankaravi wrote the book around 1624. The digitised edition, after my cleaning, has 71,232 words with 376,150 characters: equivalent to an average word length of 5.28 characters (as noted above, probably an over-estimate).

The first page of Kitab-i Minhac ul-Fukara. Image by courtesy of Duke University Libraries.

Transliterations and correlations

I calculated the Ottoman letter frequencies on the basis of the full text of Kitab-i Minhac ul-Fukara; and compared these with the glyph frequencies in twenty alternative transliterations of the Voynich manuscript. In terms of correlations with the letter frequencies, the most promising transliterations were the following:

Correspondences

As a trial, I took the v102 transliteration as the best fit with Ottoman Turkish (this does not exclude alternative trials with other transliterations). As in my experiments with other languages, in order to have a reasonable assurance of mapping from a single language, I calculated the glyph frequencies on the basis of the “herbal” section of the Voynich manuscript.

The mappings of the five most frequent glyphs and letters, for example, were as follows:

The five most frequent glyphs in the Voynich manuscript, v102 transliteration, “herbal” section; and the five most frequent letters in Kitab-I Minhac ul-Fukara. Author’s analysis.

Trial mappings

The next step was to select a suitable sample of Voynich text for the trial mappings.

One possible approach was to take a randomly selected line from the Voynich manuscript. However, I felt that this process was uncertain, since we do not know whether the whole of the manuscript contains meaning; it could be that some small or large part of the text is meaningless filler or junk. I thought it preferable, therefore, to take the most frequent “words”, map them to text strings in Ottoman Turkish, and see, by reference to the corpus, whether the resulting strings were real words.

In order to assess the abjad effect (of which, more below), I selected the most frequent Voynich “words” of one, two, three and four glyphs.

The results, in summary, are below.

The five most frequent “words” of one, two, three and four glyphs in the Voynich manuscript, v102 transliteration, “herbal” section; and trial mappings to text strings in Ottoman Turkish on the basis of frequency rankings. Author’s analysis.

We can see that the top five “words” of one glyph, the top five “words” of two glyphs, and the top two “words” of three glyphs, map to text strings which are real words in Kitab-I Minhac ul-Fukara. But thereafter the mapping breaks down. None of the less frequent “words” of three glyphs, and none of the “words” of four glyphs, maps to words in Ottoman Turkish.

In these results, I detect the abjad effect. Ottoman Turkish, like Arabic, Hebrew and Persian, is an abjad language, with no written short vowels. As I have demonstrated in my forthcoming book Voynich Reconsidered (Schiffer, 2024), in such a language, almost any random string of up to three letters is quite likely to be a real word.

With regard to the apparent words that I found: not being a speaker of Turkish, I do not know the English equivalents. I established only that these strings existed as words in Kitab-i Minhac ul-Fukara. Google Translate is no help, since it only works on modern Turkish. Readers of Turkish who also know the Ottoman script would be able to confirm whether these words are real.

In this light, I was not motivated to pursue a possible further step: namely, to attempt a mapping of a whole line of Voynich text to Ottoman Turkish. If “words” of four glyphs do not map to words, there is not much chance that lines of text will produce meaningful phrases.

In summary, I do not have an expectation that, through a systematic and objective process, it is possible to extract meaningful content in the Ottoman Turkish language from the Voynich manuscript.

Where next?

It would be possible to refine this process by selecting other corpora of reference than Kitab-i Minhac ul-Fukara; by testing other transliterations than v102, with comparable correlations; and by selecting other samples of Voynich text for mapping. I do not have much confidence that these variations would yield meaningful narrative text in Ottoman Turkish.

An alternative would be to recall Massimiliano Zattera’s discovery of the “slot alphabet”, with its quasi-rigid marching order of the glyphs within the Voynich “words”. We would then consider its near-inescapable corollary, that in nearly every Voynich “word”, the scribes mapped letters in some natural language to glyphs, and then re-ordered the glyphs from their original sequence. If so, the mapped text strings in Ottoman Turkish are not the last step; we would have to look for anagrams, which the Voynich scribes would have mapped to the identical Voynich “words”.

Here again, only speakers or readers of Ottoman Turkish could take this analysis to the next level.

Accordingly, I thought that if the Voynich manuscript had had precursor documents in natural languages, the most probable languages were those written and spoken within a specifiable geographical radius of Italy. Among such languages, those of the Romance group seemed the most promising; followed by those of the Germanic and Slavic groups. If we chose to cast our net wider within Europe, we could include languages of the Hellenic and Uralic groups.

The purpose of this article is to cast the net farther afield, to the periphery of medieval Europe, and to assess the possibility that the precursor language, or one of the precursor languages, of the Voynich manuscript was Turkish.

Radio-carbon analysis, performed on four samples of the vellum, yielded dates between 1400 and 1461, with standard errors of between 35 and 38 years. We might reasonably make the assumption (and it is no more than that) that the manuscript was written sometime in the fifteenth century. If so, and if its precursor documents were in Turkish, those documents would have been written in Ottoman Turkish.

The letters in Ottoman Turkish, like those in Persian, were written in a variant of the Arabic script. This script was an abjad, in which the long vowels were written but the short vowels were not (except sometimes as diacritics above or below the letters).

The Ottoman Turkish alphabet. Image by courtesy of Bedrettin Yazan. Highlighted letters are long vowels, which can sometimes also serve as consonants.

In order to assess whether documents in Ottoman Turkish could have been a precursor of the Voynich text, I followed a variant of a strategy which I have outlined in other articles on this platform. The elements of the strategy were as follows: