Brian Solis's Blog, page 14

May 13, 2024

Brian Solis to Explore the Future of Customer Experience Innovation at CX Midwest May 2024

Brian Solis will keynote CX Midwest on May 14, 2024.

Brian’s keynote is titled, “Experience Innovation: The Rise of Generation Novel and the New CX Imperative.”

Description: Brian Solis is a world-leading digital anthropologist, best-selling author, and innovator in experience design. His research went into overdrive these last few years, combining the evolution of digital, mobile and social with the incredible effects of the pandemic, and now, how it all converges with AI and spatial computing! In this eye-opening presentation, Brian will share his insights into the state and future of experience design and how the emergence of a new generation of consumers needs you to reimagine CX not as customer experience, but as the customer’s experience.

For those who don’t know, Brian is considered one of the most influential pioneers in CX innovation. His book, X: The Experience When Business Meets Design, is considered the book on the subject. In fact, it’s been named to the list of “Best Customer Experience Books of All Time,” according to BookAuthority!

Book Brian to speak! Learn more here.

The post Brian Solis to Explore the Future of Customer Experience Innovation at CX Midwest May 2024 appeared first on Brian Solis.

May 10, 2024

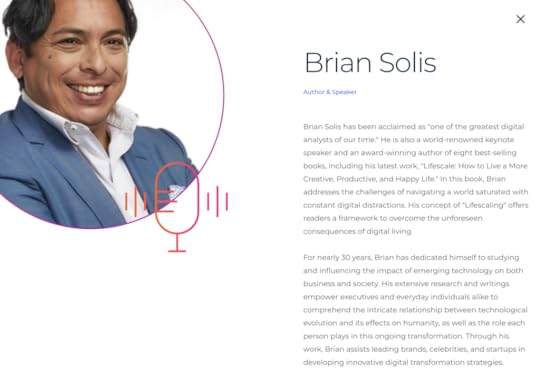

Top 20 LinkedIn Influencers to Follow

Brian Solis was named in Insidea’s “Top 20 LinkedIn Influencers to Follow.” It’s an important list, so please take a look at the others who made the list…you’ll be smarter for it!

As the world’s premier professional networking platform, LinkedIn hosts a myriad of thought leaders, industry pioneers, and mavens who constantly share their wisdom, experiences, and forecasts about the business realm. But with millions of users vying for your attention, whom should you really tune into? To streamline your feed and ensure it’s valuable, we’ve curated a list of the top 20 LinkedIn influencers you should follow today. Dive in and prepare to be inspired, educated, and motivated.

The post Top 20 LinkedIn Influencers to Follow appeared first on Brian Solis.

AInsights: Microsoft’s VASA-1 Model Uses AI to Create Hyper-Realistic Digital Twins Using a Picture and Voice Sample

AInsights: Executive-level insights on the latest in generative AI

Just when you think you’ve seen it all, something new comes along to surprise you, almost to the point where you may lose the magic of surprise. We live in some incredible times, don’t we? As OpenAI co-founder and CEO Sam Altman said recently, “This is the most interesting year in human history, except for all future years.”

Well, I just read a research paper published by Microsoft Research Asia that blew my mind. And as you can imagine, it takes a lot to blow me away!

The paper essentially introduces what it calls the VASA framework for generating lifelike talking faces with “visual affective skills” (VAS).

Its first iteration, VASA-1, is a real-time, audio-driven talking face generation technology. It can create lifelike animated faces that closely match the speaker’s voice and facial movements. With, get this, a single portrait picture, a sample of speech audio, and control signals such as main eye gaze direction, head distance, and emotion offsets, it creates a real-time, hyper-realistic talking head video…all with scarily convincing gestures.

Unless you knew the person, and even then, it would be difficult for the untrained eye to detect that they were watching a machine-produced video (or in some cases, a deepfake).

Certainly, Microsoft Research is exploring the boundaries for what’s possible with the best of intentions. So, in this piece, let’s focus on this technology with that perspective. From that point of view, key benefits and use cases of VASA-1 include:

Highly realistic and natural-looking animated faces: VASA-1 can generate talking faces that are indistinguishable from real people, enabling more immersive and engaging virtual experiences.

Real-time performance: The system can produce the animated faces in real-time, allowing for seamless integration into interactive applications, gaming, and video conferencing.

Broad applicability: VASA-1 has potential use cases in areas such as virtual assistants, video games, online education, and telepresence, where lifelike animated characters can enhance the user experience.

Potentially interesting use cases could include:

Virtual avatars and digital assistants: VASA-1 can be used to create virtual avatars and digital assistants that can engage in natural, human-like conversations. These avatars could be used in video conferencing, customer service, education, and entertainment applications to provide a more immersive and engaging experience.

Dubbing and lip-syncing: The ability to accurately synchronize facial movements with audio can be leveraged for dubbing foreign language content or creating lip-synced animations. This could streamline the localization process and enable more seamless multilingual experiences.

Telepresence and remote collaboration: It can enhance remote communication and collaboration, allowing participants to maintain eye contact and perceive non-verbal cues as if they were physically present.

Synthetic media creation: VASA-1 could generate highly realistic synthetic media, such as virtual news anchors or digital characters in films and games. This could open up new creative possibilities and streamline content production workflows.

Accessibility and inclusion: VASA-1 could improve accessibility for individuals with hearing or speech impairments, providing them with more natural and engaging communication experiences.

Microsoft Research Asia: Sicheng Xu*, Guojun Chen*, Yu-Xiao Guo*, Jiaolong Yang*‡, Chong Li, Zhenyu Zang, Yizhong Zhang, Xin Tong, Baining Guo Microsoft Research Asia *Equal Contributions ‡Corresponding Author: jiaoyan@microsoft.com

Please subscribe to AInsights, here.

If you’d like to join my master mailing list for news and events, please follow, a Quantum of Solis.

The post AInsights: Microsoft’s VASA-1 Model Uses AI to Create Hyper-Realistic Digital Twins Using a Picture and Voice Sample appeared first on Brian Solis.

May 8, 2024

AInsights: Meta’s Llama 3 LLM Redefines the Limits of Artificial Intelligence and Makes Meta One of the Leading AI Players

Created by Meta AI

Your AInsights: Executive-level insights on the latest in generative AI…

Meta released its Llama 3 Large Language Model (LLM), serving as the foundation for Meta AI. Furthermore, Meta is making Llama 3 open source for other third-party developers to use, modify, and distribute for research and commercial purposes, without any licensing fees or restrictions. More on that in a bit.

Meta AI serves as the AI engine for Messenger, Instagram, Facebook, as well as my go-to sunglasses created in partnership with Ray-Ban.

Mark Zuckerberg announced the news on Threads.

“We’re upgrading Meta AI with our new state-of-the-art Llama 3 AI model, which we’re open sourcing,” Zuckerberg posted. “With this new model, we believe Meta AI is now the most intelligent AI assistant that you can freely use.”

Meta also introduced a free website designed to compete with (and look like) OpenAI’s ChatGPT, available at Meta.AI. Note, the service is currently free, but it asks you to log in with your Facebook account. You can bypass this for now, though if you do log in, you are contributing toward training Llama 4 and beyond, based on your data and activity. This isn’t new for Facebook or any social media company, as you and I, as users, have always been the product, the training grounds, and the byproduct of social algorithms.

Like ChatGPT, you can prompt via text for responses and also “imagine” images for Meta.AI to create for you. What’s cool about the image imagination (creation) process is that it offers a real-time preview as you’re prompting. Compare this to, say, ChatGPT or Google Gemini, where you have to wait for the image to be generated in order to fine-tune the prompt. What’s more, users can also animate images to produce short MP4 videos. Interestingly, all content is watermarked. And similarly, Meta.AI will also generate a playback video of your creation process.

All-in-all, the performance and capabilities race between Claude, ChatGPT, Gemini, Perplexity, et al, benefits us the users, as genAI is like the Wild West right now. We get to test the highest performer, at low costs or no costs, until the dust settles a bit more.

AInsights

What sets Llama 3 apart are its performance claims. Llama 3 introduces new models with 8 billion and 70 billion parameters, which demonstrate notable improvements in reasoning and code generation capabilities. This aligns with industry benchmarks for advanced performance.

The number of parameters in a large language model like Llama 3 is a measure of the model’s size and complexity. More parameters generally allow the model to capture more intricate patterns and relationships in the training data, leading to improved performance on various tasks.
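To make parameter counts a little more tangible, here is a minimal back-of-the-envelope sketch in Python. It assumes the nominal sizes mentioned above (8 billion and 70 billion) and estimates only how much memory the model weights alone might occupy at a given numeric precision. The function name and the precision table are illustrative assumptions on my part, not anything published by Meta, and real deployments need extra memory beyond the weights.

```python
# Rough estimate of weight storage: parameters x bytes per parameter.
# Assumption: weights only. Activations, caches, and runtime overhead are ignored.

# Bytes used to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, precision: str = "fp16") -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Nominal sizes from the post; exact counts may differ slightly.
    models = [("Llama 3 8B", 8_000_000_000), ("Llama 3 70B", 70_000_000_000)]
    for name, params in models:
        print(f"{name}: ~{weight_memory_gb(params):.0f} GB of weights at fp16")
```

Under these assumptions, the larger model needs roughly nine times the storage of the smaller one, which is one reason smaller models are attractive for everyday devices.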

Unlike many other prominent LLMs like GPT-4 and Google’s Gemini which are proprietary, Llama 3 is freely available for research and commercial purposes. This open-source accessibility fuels innovation and collaboration within the AI community.

Meta is developing multimodal versions of Llama 3 that can work with various modalities like images, handwritten text, video, and audio clips, expanding its potential applications. For example, with the Meta Ray-Ban glasses, users can activate the camera and prompt, “hey Meta” to recognize an object, translate signage and text, even in different languages, and create text

Multilingual training is integrated into versions of Llama 3, enabling it to handle multiple languages effectively.

It’s reported that Meta is also training a 400 billion parameter version of Llama 3, showcasing its scalability to handle even larger and more complex models.

The “Instruct” versions of Llama 3 (8B-Instruct and 70B-Instruct) have been fine-tuned to better follow human instructions, making them more suitable for conversational AI applications.

Like with any model, accuracy and biases are always a concern. This is true with all AI chatbots. Sometimes, inaccurate results can have significant impacts though.

Nonetheless, Zuckerberg expects Meta AI to be “the most used and best AI assistant in the world.” With integration into some of the world’s most utilized social media and messaging platforms, his install base is certainly there.

Disclaimer: Meta.ai drafted half of this story’s headline. It still needed a human touch.

This is your latest executive-level dose of AInsights. Now, go out there and be the expert everyone around you needs!

Please subscribe to AInsights, here.

If you’d like to join my master mailing list for news and events, please follow, a Quantum of Solis.

The post AInsights: Meta’s Llama 3 LLM Redefines the Limits of Artificial Intelligence and Makes Meta One of the Leading AI Players appeared first on Brian Solis.

May 7, 2024

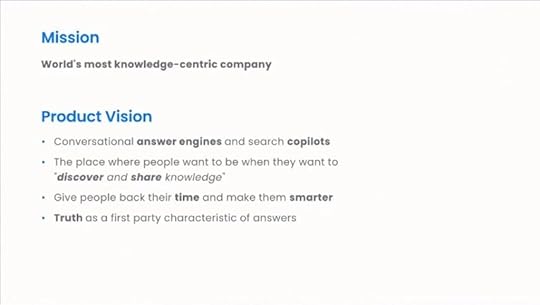

AInsights: Perplexity AI is Now a Unicorn, Counts Jeff Bezos as an Investor, and Releases Enterprise Pro

Source: Perplexity

AInsights: Executive-level insights on the latest in generative AI…

Perplexity AI announced that it raised a whopping $62.7M+ at a valuation of $1.04 billion, led by Daniel Gross, former head of AI at Y Combinator. This officially makes Perplexity a newly minted unicorn. Other investors include a who’s who list of industry leaders: Stan Druckenmiller, NVIDIA, Jeff Bezos, Tobi Lutke, Garry Tan, Andrej Karpathy, Dylan Field, Elad Gil, Nat Friedman, IVP, NEA, Jakob Uszkoreit, Naval Ravikant, Brad Gerstner, and Lip-Bu Tan.

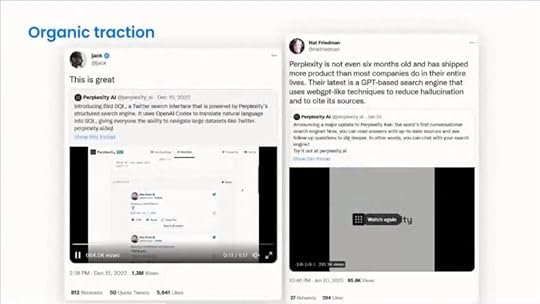

Perplexity isn’t just another AI chatbot. It’s an entirely new answers engine that offers a glimpse of the future of intelligent search. I recently explored how Perplexity and AI are even threatening Google’s search business. This could be one of the reasons that Perplexity’s valuation has doubled in three months.

Excited to announce we've raised 62.7M$ at 1.04B$ valuation, led by Daniel Gross, along with Stan Druckenmiller, NVIDIA, Jeff Bezos, Tobi Lutke, Garry Tan, Andrej Karpathy, Dylan Field, Elad Gil, Nat Friedman, IVP, NEA, Jakob Uszkoreit, Naval Ravikant, Brad Gerstner, and Lip-Bu… pic.twitter.com/h0a986t4Md

— Aravind Srinivas (@AravSrinivas) April 23, 2024

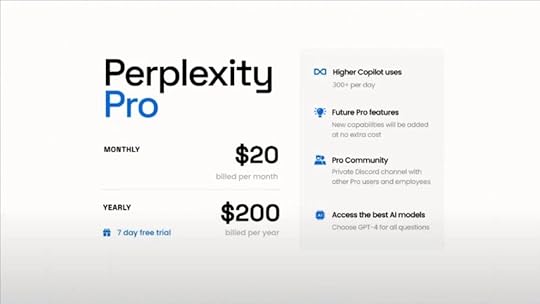

Additionally, Perplexity launched Enterprise Pro, designed to keep work and data secure and private.

How does it work?

Think about your day-to-day search experience today. Typically when you search, you become the “answer filter,” browsing through SEO-manipulated or spammy websites full of affiliate links, all meant to boost their visibility, not to surface the most relevant information. Perplexity streamlines search for everyday users and now, enterprise employees, to help them save time while improving output.

Perplexity’s answer engine browses the internet in real time and provides complete, verifiable answers with citations (I love this part as I drill down into each for further context). Perplexity also provides multimedia-enriched answers that include charts, videos, and images.

In a statement, Databricks CEO Ali Ghodsi shared how Perplexity powers his teams, “Perplexity Enterprise Pro has allowed Databricks to substantially accelerate R&D, making it easier for our engineering, marketing, and sales teams to execute faster. We estimate it helps our team save 5k working hours monthly.”

AInsights

So what is an answers engine exactly? If you think about how you use Google for more sophisticated or specific searches, you may often ask it questions versus inputting keywords. Smart marketers practice “keyword anthropology” to understand the questions people are asking to then optimize their pages against those searches. This leaves users having to become a human filter to search, scroll, click, and most likely, refine the search and repeat. This was just business as usual.

Perplexity takes your prompt and analyzes potential results against trusted or well-regarded sources, providing output that better aligns with your search criteria. The results are also footnoted if you’d like to dive deeper for context. What’s more, prompt-based searches facilitate prompt-based layers. You can dive deeper to further unpack the answers to help you steer your quest.

Enterprise Pro aims to offer a rich search experience for its business users.

Here’s how standout companies use Perplexity…

Product teams at Zoom use Perplexity’s Focus functionality for targeted search.

HP’s salesforce taps into Perplexity for rapid, in-depth prospect research, helping them to craft compelling pitches, which expedites the sales process.

Innovation attorneys at Latham & Watkins are piloting Perplexity to conduct targeted research.

Health editorial teams at Thrive Global are creating validated behavior change microsteps based on the latest peer-reviewed science.

Data teams at the Cleveland Cavaliers research ticket sales trends and do partnership prospecting.

Strategy teams at Paytm draft market landscape insights to inform their roadmaps.

Marketing and product teams at Amplitude use Perplexity to draft market landscape insights.

Below is the pitch deck for Perplexity Pro courtesy of PitchDeckGuy.

And here you are. You’re up to date on the trends I’m paying attention to. Now share this with key players around you to become a trusted resource in your community!

Please subscribe to AInsights, here.

If you’d like to join my master mailing list for news and events, please follow, a Quantum of Solis.

The post AInsights: Perplexity AI is Now a Unicorn, Counts Jeff Bezos as an Investor, and Releases Enterprise Pro appeared first on Brian Solis.

May 6, 2024

AInsights: Reid Hoffman Interviews His AI Digital Twin and It’s Both Creepy and Mind-Blowing

Your AInsights: Executive-level insights on the latest in generative AI…

You may know Reid Hoffman as the co-founder and executive chairman of LinkedIn. He’s also a prolific investor and author who co-wrote Blitzscaling with my friend Chris Yeh, a book I often recommend to founders.

Hoffman recently recorded an interview with Reid AI, his digital twin, and it is wild! It’s innovative while also blurring the line between fantastical and the uncanny valley.

Like ChatGPT itself, Reid AI is built on a large language model (LLM), but one focused on Reid’s writings, speeches, interviews, podcasts, appearances, and books. Reid AI’s digital brain is trained not only on his work, but also on his mannerisms, voice, conversational style, and appearance.

There’s even an awkward moment when Reid AI wipes his nose and then proceeds to wipe his hand on the table. And at times, Reid AI appears to breathe!

Hour One developed the generative AI models for the video and ElevenLabs worked on the audio.

“I think these avatars,” writes Hoffman, “when built thoughtfully, have the potential to act as mirrors to ourselves – ones that reflect back our own ideas and personality to examine and consider.”

More so, digital twins offer the ability to teach or mentor others, or to provide a virtual legacy for personalities to engage future generations. Just a couple of years ago, Soul Machines created a digital twin of golf legend Jack Nicklaus (aka the Golden Bear) to interact with fans or even offer advice to budding golfers.

At the same time, there are causes for concern, as AI deepfakes have defrauded a bank of $25 million and, most recently, a South Korean woman was scammed out of $50k by a fake digital twin of Elon Musk.

While both incredible and controversial, this technology is inevitable, and at some point in the near future, we’ll have the ability to create digital twins of our loved ones in much the same way. For this reason, future generations will learn to capture the work, words, and all creations to thoroughly train models to best represent those they care about.

Also consider creating virtual markets populated with digital twins of customers and aspirational prospects. This creates the opportunity to sample marketing or service concepts, test market PoCs, experiment with customer and employee journeys, or assess competitive products.

AInsights

Reid Hoffman interviewing his AI-generated digital twin demonstrates how far we’ve come in the evolution of generative AI and its ability to recreate someone as an avatar in ways that could be indistinguishable from the real person…or from a real person.

In this case, Hoffman and team can test the capabilities of the AI system and how closely it can mirror his own thinking, behaviors, and responses. By challenging the AI with questions on topics like “blitzscaling” and AI regulation, Hoffman is evaluating how well the AI can formulate answers in a way that aligns with his own perspectives and communication style. It gets close, but it will also only get better.

The interview also provides Hoffman, and the rest of us, the opportunity to explore the ethical considerations around AI and deepfakes. He acknowledges the risks and dangers of this technology, while also recognizing its potential benefits.

By engaging with his AI twin, Hoffman is gaining firsthand experience on how this technology could disrupt and challenge individuals, including public figures like himself. This hands-on experiment likely informs his views on how to responsibly develop and regulate AI systems going forward.

The video also demonstrates the awareness and literacy we all need in being mindful of what’s real and what’s not. Additionally, it’s a call to tech companies to regulate their own tech and also develop tools to help the rest of the world identify deepfakes and potential bad actors.

Enjoy the interview below!

Well, this is your latest AInsights on digital twins, deepfakes, and the crazy new world we’re living in. See you next time!

Please subscribe to AInsights, here.

If you’d like to join my master mailing list for news and events, please follow, a Quantum of Solis.

The post AInsights: Reid Hoffman Interviews His AI Digital Twin and It’s Both Creepy and Mind-Blowing appeared first on Brian Solis.

April 29, 2024

AInsights: AI is a New Digital Species — a Companion in Guiding You Toward Innovative Outcomes

AInsights: Executive-level insights on the latest in generative AI…subscribe here.

Mustafa Suleyman is now CEO of Microsoft AI following Microsoft’s “acquisition” of Inflection AI, a company Suleyman co-founded after leaving Google’s DeepMind, an AI company previously acquired by Google.

Following the news of Inflection’s move to Microsoft, Suleyman spoke at TED in April to share his view on the long-game of AI and it is captivating.

First, I need to observe his incredible gift to make a complex and even scary topic like AI approachable by a mainstream audience while also possessing the technical skills to actually build the future.

Suleyman positions AI in a light that challenges us to think beyond the boundaries of known realms in which we’ve operated our entire careers. Instead, he introduces AI as becoming and also unlocking something entirely new. He likens it to a new digital species, a personal being that exists to help each and every one of us expand our capabilities and horizons, to think of AI as a cognitive exoskeleton, to help perform and grow exponentially and achieve outcomes not possible or even imaginable before.

“AI is to the mind what nuclear fusion is to energy,” he said. “Limitless. Abundant. World-changing.”

I think that’s really the crux of what makes this moment so monumental. We weren’t prepared for how to respond to AI’s sudden disruption, say, in the way we’ve been trained to respond in other scenarios and experiences.

So be it.

This is our moment to rise up, embrace change, and become part of a new genre of leaders who are willing to see the world anew and to humbly embrace curiosity, imagination, and boldness. This is how we take our shot.

“AI really is different,” Suleyman explained. “That means we have to think about it creatively and honestly. We have to push our analogies and metaphors to the very limits to be able to grapple with what’s coming.”

We don’t know what we don’t know. For some, that is finding and succumbing to their boundaries. For others, it is the gateway toward exploration into the unknown. Somewhere out there, on the other side of curiosity, bravery, and hope, are discoveries, experiences, and ultimately, innovation.

“This is not just another invention,” Suleyman exclaimed. “AI itself is an infinite inventor.”

While there are many pearls of wisdom from his 20-minute talk, it is his finale that struck a personal chord.

“AI is us. It’s all of us. As we build out AI, we can and must reflect all that is good, all that we love, all that is special about humanity…our empathy, our kindness, our curiosity, and our creativity. This, I would argue, is the greatest challenge of the 21st century, but also the most wonderful, inspiring and hopeful opportunity for all of us.”

It’s time to grow.

It's World Creativity and Innovation Day. This is a reminder of the importance of human creativity, especially in an era of artificial intelligence. Creativity is the foundation of innovation. https://t.co/bOilgWKMz5 #WCID https://t.co/QqmHFykc3b

— Brian Solis (@briansolis) April 21, 2024

That’s your latest AInsights update, now imagine what you can do differently!

Please subscribe to AInsights.

Please subscribe to my master mailing list, a Quantum of Solis.

The post AInsights: AI is a New Digital Species — a Companion in Guiding You Toward Innovative Outcomes appeared first on Brian Solis.

April 24, 2024

AInsights: Microsoft Diversifies Its AI Investment Portfolio with Commitment to French Startup Mistral

AInsights: Executive-level insights on the latest in generative AI…subscribe here.

Microsoft is on a tear lately. In addition to its investment in OpenAI and its recent “acquisition” of Inflection AI, Microsoft also invested in French startup Mistral.

We're announcing a multi-year partnership with @MistralAI, as we build on our commitment to offer customers the best choice of open and foundation models on Azure. https://t.co/k1L7lfFeES

— Satya Nadella (@satyanadella) February 26, 2024

Microsoft’s Satya Nadella positioned the investment as a commitment to offering enterprise customers the best choice of open and foundation models on Azure.

“We’re paving the way for efficient and cost-effective AI models that accelerate growth and deliver real-world solutions,” Microsoft Azure posted on X.

Mistral AI is a French AI startup that develops advanced large language models (LLMs) and AI technologies.

The key things Mistral AI does are:

Develops open-source and efficient AI models: Mistral AI has released several powerful AI models. These models are designed to be fast, helpful, and trustworthy, with capabilities in areas like language understanding, code generation, and multi-lingual support.

Provides AI services and APIs: Mistral AI offers its AI models through various cloud platforms and APIs, allowing developers and businesses to integrate the technology into their own applications and workflows. This includes services like Mistral Large, Mistral Small, and Mistral Embed.

Partners with major tech companies: Mistral AI has secured partnerships with companies like Microsoft, which has invested $16 million to bring Mistral Large to the Azure cloud platform.

Focuses on responsible AI development: Mistral AI aims to develop AI models that are “efficient, helpful, and trustworthy” through innovative approaches. The company emphasizes the importance of addressing potential misuse or underperformance of AI systems.

Microsoft invested in Mistral AI for several key reasons:

Diversify its AI portfolio: By investing in Mistral, Microsoft is expanding its AI offerings beyond its partnership with OpenAI. This helps Microsoft diversify its AI portfolio and reduce reliance on a single provider.

Access to Mistral’s models: The partnership will allow Microsoft to make Mistral’s premium AI models, including its ChatGPT-style assistant “Le Chat”, available to customers through Microsoft’s Azure cloud platform.

Leverage Mistral’s technology: Microsoft will support Mistral with its Azure AI supercomputing infrastructure, allowing Mistral to train new AI models at scale.

Collaborate on R&D: The companies will explore collaborating on training purpose-specific AI models, including for European public sector workloads.

Expand in Europe: The investment allows Microsoft to strengthen its presence and partnerships in Europe’s growing AI industry, which could help it navigate increasing regulatory scrutiny from the EU.

AInsights

I guess you can’t lose if you bet on every single player! This came as a surprise to some experts as many expected AWS to make this move.

Here’s what this move means to you…

Expanded AI model options: The partnership will allow Microsoft to offer Mistral’s large language models, including its ChatGPT-style assistant “Le Chat”, to its Azure cloud customers. This gives enterprises more choice beyond just Microsoft’s partnership with OpenAI.

Customized AI models: Microsoft and Mistral will collaborate on developing “purpose-specific models for select customers, including European public sector workloads.” This allows enterprises to access AI models tailored to their specific needs.

Improved regulatory positioning: With Mistral being a European AI company, the partnership may help Microsoft navigate increasing regulatory scrutiny from the EU over its AI offerings and partnerships.

Reduced reliance on OpenAI: By diversifying its AI portfolio beyond just OpenAI, Microsoft will be less dependent on the continued success and dominance of OpenAI in the large language model (LLM) market.

Access to Mistral’s technology: Enterprises can leverage Mistral’s AI models and technology through the Azure platform, benefiting from the startup’s research and development capabilities.

That’s your latest AInsights and you and I are now more competitive as a result!

Please subscribe to AInsights to stay up to date on what AI trends mean to you.

Please also subscribe to my master mailing list, a Quantum of Solis.

The post AInsights: Microsoft Diversifies Its AI Investment Portfolio with Commitment to French Startup Mistral appeared first on Brian Solis.

April 22, 2024

Join Brian Solis at Evanta’s CIO Executive Summit in Chicago

Head of Global Innovation at ServiceNow, Brian Solis, will lead a very special conversation at Evanta’s CIO Executive Summit in Chicago on May 15th, 2024.

Topic: CIO Impact on Digital Business Strategy

In today’s rapidly evolving digital landscape, technology is the driving factor of business strategy and organizational success. But for CIOs to deliver digital, the technology must have a meaningful impact. Otherwise, the dollars are not worth spending.

In this session participants will discuss:

Directly correlating technology needs with business objectives.

Gaining enterprise-wide agreement on AI and tech investment goals.

Driving successful technology adoption to maximize ROI.

Brian will be joined by Swati Shah, SVP US Markets Technology, TransUnion, and Fares Ghanimah, CIO | Head of Central Technology, William Blair & Company.

Executive boardrooms are intimate and interactive sessions designed to foster dynamic dialogue around a specific, strategic topic. These private, closed-door discussions encourage attendee participation and are limited to 15 attendees (seating priority is given to CIOs).

To reserve your seat, please contact: Katherine Williams.

The post Join Brian Solis at Evanta’s CIO Executive Summit in Chicago appeared first on Brian Solis.

AInsights: Microsoft’s Acquisition of Inflection AI and What it Means to Business Customers and Investors

AInsights: Executive-level insights on the latest in generative AI…subscribe here.

Microsoft recently acquired Inflection, an AI startup founded by Mustafa Suleyman, former head of applied AI at DeepMind (an AI company acquired by Google), for $650 million.

Acquire might be the wrong way to describe this deal though. Technically, Microsoft is licensing Inflection’s software for $650 million while the company hired nearly all of Inflection’s staff. Inflection, in turn, is using that money to pay a “positive” return to investors. I use quotes because positive here is a technicality. I explain more in the AInsights below.

Mustafa Suleyman now becomes CEO of Microsoft AI, filling the void between Satya Nadella’s hands-on role in leading Microsoft’s ambitious AI strategy.

In this role, he will be leading all of Microsoft’s consumer AI products and research, including the Copilot generative AI service, as well as the Bing search engine and Edge browser. Suleyman will also be overseeing the integration of AI models and technology from his previous company, Inflection AI, which Microsoft has acquired. Additionally, Karén Simonyan, the chief scientist at Inflection AI, will be joining Microsoft as the chief scientist for the new Microsoft AI division.

Inflection AI’s software and AI models are primarily used for two key purposes:

1) Powering its consumer AI assistant called Pi chatbot: Pi is a conversational AI assistant designed to be a “kind and supportive companion” for users (unlike the ironic robotic chatbots largely deployed today). It uses Inflection’s large language models to engage in open-ended dialogue, provide information, and offer emotional support.

Interestingly, Pi has around 1 million daily active users and 6 million monthly active users as of early 2024.

2) Licensing to enterprise customers: After struggling to monetize Pi, Inflection is now pivoting to an enterprise focus by licensing its AI models and technology to businesses. The company developed a “Conversational API” to allow companies to integrate Inflection’s language models into their own applications and services.

This is where Microsoft is going. Inflection’s Pi virtual assistant provides an AI model licensing/integration platform for enterprise customers through its Conversational API.

AInsights #1: This is not the usual way of making an acquisition or even an acquihire. In June 2023, the WSJ reported that Inflection AI raised $1.3 billion in funding from tech veterans Bill Gates, Eric Schmidt, and Reid Hoffman, along with Microsoft and Nvidia. This round contributed to the venture’s total funding of $1.5 billion at a $4 billion valuation. $650 million isn’t close. Investors aren’t in this business. They don’t seek losses or less than exponential returns.

In reality, Inflection struggled to establish a viable business model for its consumer AI offerings like the Pi chatbot. By accepting this licensing deal, Inflection could provide a 1.5x return to investors rather than pursuing a full acquisition at the elevated valuation.

The unconventional arrangement allowed Microsoft to acquire Inflection’s AI talent and models without an outright acquisition, while Inflection pivots to an enterprise focus under new leadership. This might set a new precedent for future tech “acquisitions.”

The Information founder Jessica Lessin reported it this way, “This is the new way the Magnificent Seven [tech stocks] are going to do acquisitions, you get the [intellectual property] and team without FTC scrutiny or approval.”

AInsights #2: The new division will be responsible for all of Microsoft’s consumer-focused AI products and research, with the goal of bringing cutting-edge AI capabilities to Microsoft’s consumer offerings. This includes expanding and improving Microsoft’s existing consumer AI services like Copilot, Bing, and Edge, as well as potentially developing new consumer-facing AI applications and experiences.

Inflection AI is pivoting away from its consumer-facing chatbot “Pi” and instead focusing on licensing its AI models and technology to enterprise customers. This means business customers will have more opportunities to integrate Inflection’s advanced conversational AI capabilities into their own products and services.

That’s your latest AInsights and you and I are now smarter as a result!

Please subscribe to AInsights.

Please subscribe to my master mailing list, a Quantum of Solis.

The post AInsights: Microsoft’s Acquisition of Inflection AI and What it Means to Business Customers and Investors appeared first on Brian Solis.