Brian Solis's Blog, page 17

February 13, 2024

AInsights: MultiOn and Large Action Models (LAMs), Introducing Google Gemini and Its Version of Copilot, AI-Powered Frames, Disney’s HoloTile for VR

A whole new AI world: Created with Google Gemini

AInsights: Executive-level insights on the latest in generative AI….

MultiOn AI represents a shift from generative AI that passively responds to queries to AI that actively participates in accomplishing tasks

The idea is this: tools such as ChatGPT are built on large language models (LLMs). A new class of solution is emerging that focuses on streamlining information, processes, and digital experiences by integrating large action models (LAMs).

MultiOn AI is a new class of tool that makes generative AI actionable. It leverages generative AI to autonomously execute digital processes and digital experiences in the background of any digital platform, handling tasks that don’t require user attention. Its aim is to reduce hands-on step work, helping users focus more on activities and interactions where their time and attention are more valuable.

When AI becomes a LAM or Large Action Model.

Watch this demo by @sundeep presenting @MultiOn_AI to @Jason, where genAI shifts from a passive response system to executive queries that autonomously execute and accomplish tasks. pic.twitter.com/kdIATmhFx0

— Brian Solis (@briansolis) February 10, 2024

MultiOn is a software example of what Rabbit’s R1 is also executing through a handheld AI-powered device.

AInsights

Beyond the automation of repetitive tasks, LAMs such as MultiOn AI can interact with various platforms and services to execute disparate tasks across them. This opens the door to all kinds of cross-platform applications that will only mature and accelerate exponentially.

For example:

Ordering Food and Making Reservations: Users can instruct MultiOn AI to find restaurants and make reservations.

Organizing Meetings: MultiOn AI can send out meeting invitations automatically.

Entertainment Without Interruptions: MultiOn AI can play videos and music from any platform, skipping over the ads for an uninterrupted experience.

Online Interactions: MultiOn AI can post and interact with others online.

Web Automation and Navigation: MultiOn AI can interact with the web to perform tasks like finding information online, filling out forms, booking flights and accommodations, and populating your online calendar.

Google Bard rebranded as Gemini to officially (finally) take on ChatGPT and other generative AI platforms

Google’s Bard was a code red response to OpenAI’s popular ChatGPT platform. Bard is now Gemini and is officially open for business. While it’s not quite up to ChatGPT or Claude levels, it will compete. It has to.

Google also introduced Gemini Advanced to compete against OpenAI’s pro-level ChatGPT service. It is also going against Microsoft and its Copilot initiatives.

The new app is designed to do an array of tasks, including serving as a personal tutor, helping computer programmers with coding tasks and even preparing job hunters for interviews, Google said.

“It can help you role-play in a variety of scenarios,” said Sissie Hsiao, a Google vice president in charge of the company’s Google Assistant unit, during a briefing with reporters.

Gemini is a “multimodal” system, meaning it can respond to both images and sounds. After analyzing a math problem that included graphs, shapes and other images, it could answer the question much the way a high school student would, according to the New York Times.

After the awkward stage of Google Bard being Bard (let’s not forget that a “bard” is, by definition, a professional storyteller), Google recognizes that it has to compete for day-to-day activities, beyond novelty.

There are now two flavors of Google AI: 1) Gemini, powered by Pro 1.0, and 2) Gemini Advanced, powered by Ultra 1.0. The latter will cost $19.99 per month for access via a subscription to Google One.

Similar to ChatGPT-4, Gemini is multimodal, which means you can input more than text.

I hear this question a lot, usually in private. So, I’ll just put it here and you can skip over it if you don’t need it. Multimodal refers to the genAI model’s ability to understand, process, and generate content across multiple types of media or ‘modalities’, including text, code, audio, images, and video. This capability allows Gemini or other models to perform tasks that involve more than just text-based inputs and outputs, making it significantly more versatile than traditional large language models (LLMs) that primarily focus on text. For example, Gemini can analyze a picture and generate a text-based description, or it can take a text prompt and produce relevant audio or visual content.

Gemini can visit links on the web, and it can also generate images using Google’s Imagen 2 model (a feature first introduced in February 2024). And like ChatGPT-4, Gemini keeps track of your conversation history so you can revisit previous conversations, as observed by Ars Technica.

Gemini Advanced is ideal for more ‘advanced’ capabilities such as coding, logical reasoning, and collaborating on creative projects. It also allows for longer, more detailed conversations and better understands the context from previous prompts. Gemini Advanced is more powerful and suitable for businesses, developers, and researchers.

Gemini Pro is available in over 40 languages and provides text generation, translation, question answering, and code generation capabilities. Gemini Pro is designed for general users and businesses.

AInsights

Similar to what we’re seeing with Microsoft Copilot, Google Gemini Advanced will be integrated into all Google Workspace and Cloud services through its Google One AI premium plan. This will boost real-time productivity and up-level output for those that learn how to get the most out of each application via multi-modal prompting.

AI-powered AR glasses and other devices are on the horizon

2024 will be the year of AI-powered consumer devices. Humane debuted its AI Pin, which will start shipping in March. The Rabbit R1 is also set to start shipping in March and is already back-ordered several months.

Singapore-based Brilliant Labs just entered the fray with its new Frame AI-powered AR glasses (designed in Stockholm and San Francisco), powered by a multimodal AI assistant named Noa.

Not to be confused with Apple’s Vision Pro or Meta’s AR product line, Frame is meant to be worn frequently in the way that you might use Humane’s AI Pin. Oh, and before you ask, the battery is reported to last all day.

Priced at $349, Frame puts AI in front of your eyes using an open-sourced AR lens. It uses voice commands and is also capable of visual processing.

Noa can also generate images and provide real-time translation services. Frame features integrations with AI answers engine Perplexity, Stability AI’s text-to-image model Stable Diffusion, OpenAI’s GPT-4 text-generation model, and OpenAI’s speech recognition system, Whisper.

“The future of human/AI interaction will come to life in innovative wearables and new devices, and I’m so excited to be bringing Perplexity’s real-time answer engine to Brilliant Labs’ Frame,” Aravind Srinivas, CEO and founder of Perplexity, said in a statement.

Imagine looking at a glass of wine and asking Noa the number of calories in the glass (I wouldn’t want to know!) or the story behind the wine. Or let’s say you see a jacket that someone else is wearing, and you’d like to know more about it. You could also prompt Frame to help you find the best pricing or summarize reviews as you shop in-store.

Results are displayed on the lenses.

AInsights

The company secured funding from John Hanke, CEO of Niantic, the AR platform behind Pokémon GO. This tells me that it’s at least going to be around for a couple of iterations, which is good news. At $349, and even though I may look like Steve Jobs’ twin, I’ll probably give Frame a shot.

I still haven’t purchased Rabbit’s R1 simply because delivery is too far out for me to offer any meaningful reaction that would help you understand its potential in your life. At $699 (starting) plus a monthly service fee, I just can’t justify an investment in Humane’s AI Pin, though I’d love to experiment with it. To me, Humane is pursuing a post-screen or post-smartphone world and I find that fascinating!

Disney introduces HoloTile concept to help you move through virtual reality safely

No one wants to end up like this…

https://twitter.com/briansolis/status...

Disney Research Imagineered one potential, incredibly innovative solution.

Designed by Imagineer Lanny Smoot, the HoloTile is the world’s first multi-person, omnidirectional, modular treadmill floor for augmented and virtual reality applications.

I'm a huge fan of @Disney #Iimagineering. ✨

Designed by Imagineer Lanny Smoot, the HoloTile is the world's first multi-person, omnidirectional, modular treadmill floor for augmented and virtual reality applications.

When AR and VR started to spark conversations about a… pic.twitter.com/h4xQ7JbFdK

— Brian Solis (@briansolis) February 5, 2024

The Disney HoloTile isn’t premised on a treadmill. Instead, it’s designed for today’s and tomorrow’s AR, VR, and spatial computing applications. I can only imagine the applications that we’ll see on Apple’s Vision Pro and others in the near future.

AInsights

When AR and VR started to spark conversations about a metaverse, new omnidirectional treadmills emerged that were promising, but in more traditional ways.

It reminded me of the original smartphones. They were based on phones. When the iPhone was in development, the idea of a phone was completely reimagined as a device that combines “three products—a revolutionary mobile phone, a widescreen iPod with touch controls, and a breakthrough Internet communications device with desktop-class email, web browsing, searching and maps—into one small and lightweight handheld device.” In fact, after launch, the number of phone calls made on iPhones remained relatively flat while data usage has only continued to spike year after year.

Please subscribe to my newsletter, a Quantum of Solis.

The post AInsights: MultiOn and Large Action Models (LAMs), Introducing Google Gemini and Its Version of Copilot, AI-Powered Frames, Disney’s HoloTile for VR appeared first on Brian Solis.

February 12, 2024

AInsights: Fighting Against Deepfakes, GenAI Gets into College, AI CarePods Brings Healthcare to You

A bad actor uses AI to create deepfakes to impersonate a user for deceptive purposes

AInsights: Executive-level insights on the latest in generative AI….

Meta leading effort to label AI-generated content

Nick Clegg, president of global affairs and communications at Meta, is pushing other companies to help identify A.I.-created content. Credit: Paul Morris/Bloomberg

One of the greatest threats building on profitable disinformation and misinformation campaigns is the rise of deepfakes.

In recent news, a finance worker was tricked into paying out $25 million after a video call with his company’s CFO and coworkers turned out to be fake. The participants were digitally recreated using publicly available footage of each individual.

Explicit deepfake images of Taylor Swift spread widely across social media in January. One image shared on X was viewed 47 million times.

AI’s use in election campaigns, especially those by opposing forces, is already deceiving voters and threatens to wreak havoc on democracies everywhere.

Policy and counter-technology must keep up.

On February 8th, the Federal Communications Commission outlawed robocalls that use voices generated by conversational artificial intelligence.

It’s easy to see why. Just take a look at the capability of tools such as Bland.ai, which are meant to help businesses introduce AI-powered conversational engagement to humanize mundane processes. With a little training, AI tools such as Heygen could easily deceive people, and when put into the wrong hands, the consequences will be dire.

At the World Economic Forum in Davos, Nick Clegg, president of global affairs at Meta, said the company would lead technological standards to recognize AI markers in photos, videos and audio. Meta hopes that this will serve as a rallying cry for companies to adopt standards for detecting and signaling that content is fake.

AInsights

The collaboration among industry partners and the development of common standards for identifying AI-generated content demonstrate a collective, meaningful effort to address the challenges posed by the increasing use of AI in creating misleading and potentially harmful content.

Standards will only help social media companies identify and label AI-generated content, which will aid in the fight against misinformation/disinformation and protect the identities and reputations of people against deepfakes.

At the same time, the introduction of AI markers and labeling standards is a significant step towards enhancing transparency, combating misinformation, and empowering users to make more informed choices about the content they encounter on digital platforms.

New services offer “human vibes” to people who use generative AI to do their work instead of using genAI to augment their potential

A student uses genAI to write their essay for university

This interesting Forbes article asks, “did you use ChatGPT on your school applications?”

It turns out that using generative AI to do, ironically, the personal work of conveying why someone may be the best fit for a higher education institution is overwhelming admissions systems everywhere.

To help, schools are increasingly turning to software to detect AI-generated writing. But accuracy is an issue, leaving admissions offices, professors and teachers, editors, managers, and reviewers everywhere cautious about acting on potential AI detection.

“It’s really an issue of, we don’t want to say you cheated when you didn’t cheat,” Emily Isaacs, director of Montclair State University’s Office for Faculty Excellence, shared with Inside Higher Ed.

Admissions committees are doing their best to train for patterns that may serve as a telltale sign that AI, and not human creativity, was used to write the application. They’re paying attention to colorful words, flowery phrases, and stale syntax, according to Forbes.

For example, these experts are reporting that the following words have spiked in usage in the last year: “Tapestry,” “Beacon,” “Comprehensive curriculum,” “Esteemed faculty,” and “Vibrant academic community.”

To counter detection, in a way that almost seems counterintuitive, students are turning to a new type of editor to “humanize” AI output and help eliminate detectability.

“Tapestry” in particular is a major red flag in this year’s pool, several essay consultants on the platform Fiverr told Forbes.

This is all very interesting in that admissions offices are also deploying AI to automate the application review process and boost productivity among the workforce.

Sixty percent of admissions professionals said they currently use AI to review personal essays as well. Fifty percent also employ some form of AI chat bot to conduct preliminary interviews with applicants.

AInsights

I originally wasn’t going to dive into this one, but then I realized, this isn’t just about students. It’s already affecting workforce output and will only do so at speed and scale. I already see genAI overused in thought leadership among some of my peers. Amazon too is getting flooded with new books written by AI.

The equivalent of the word “tapestry” is recognizable everywhere, especially when you compare the output to previous works. But as admissions committees have found, there is no clear solution.

And it makes me wonder, do we really need a platform that calls people out for using or over-using AI in their work? What is the spectrum of acceptable usage?

What we do need is AI literacy for everyone (students, educators, policy makers, managers) to ensure that the human element, meaning learning, expertise, and potential, is front-and-center and nurtured as genAI becomes more and more pervasive.

AI doctors in a box are coming directly to people to make healthcare more convenient and approachable

Customers can use Forward’s CarePod for $99 a month. Credit: Forward

Adrian Aoun is the cofounder of San Francisco-based health tech startup Forward, a primary care membership with gorgeous “doctor’s” offices that make your healthcare proactive with 24/7 access, biometric monitoring, genetic testing, and a personalized plan for care.

Now, Aoun has announced $100 million in funding to introduce new 8×8 foot “CarePods” that deliver healthcare in a box in convenient locations such as malls and office parks.

The CarePod is designed to perform various medical tasks, such as body scans, measuring blood pressure, blood work, and conducting check-ups, without the need for a human healthcare worker on site. Instead, CarePods send the data to Forward’s doctors for real-time or follow-up consultations.

AInsights

AI-powered CarePods will make medical visits faster, more cost-effective, and I bet more approachable. There are skeptics though, and I get it.

Arthur Caplan, a professor of bioethics at New York University, told Forbes, “The solution then isn’t to go to jukebox medicine.” The use of the word “jukebox” is an indicator. It tells me that things should work based on existing frameworks.

“Very few people are going to show up at primary care and say, ‘My sex life is crummy, I’m drinking too much, and my marriage is falling apart,’” Caplan explained.

But my research over the years communicates the opposite, especially among Generation-Connected. It is easier for men, for example, to speak more openly about emotional challenges to AI-powered robots. I’m not saying it’s better. I’ve observed time and time again, that the rapid adoption of technology in our personal lives is turning us into digital narcissists and digital introverts. Digital-first consumers want things faster, more personalized, more convenient, more experiential. They take to technology first.

“AI is an amazing tool, and I think that it could seriously help a lot of people by removing the barriers of availability, cost, and pride from therapy,” Dan, a 37-year-old EMT from New Jersey, told Motherboard.

CarePods aim to remove the impersonal, sanitized, beige, complex, expensive, clipboard-led healthcare experiences that many doctors’ offices provide today. If technology like this makes people take action toward improving their health, then let’s find ways to validate and empower it. We’ll most likely find that doing so will make healthcare proactive versus reactive.

February 11, 2024

Introducing AInsights: Executive-level insights on the latest in generative AI

I started writing more and more about generative AI so that I could keep up with important trends and what they mean in my world.

I gave it a name with a simple tagline:

“AInsights: Executive-level insights on the latest in generative AI.”

Here’s the current catalog if you’d like to dive in with me…

The New Google Bard Aka Gemini, Neuralink Trials, Bland Conversational AI Agents, Deep Fakes, And GPT Assistants

AI Is Coming For Jobs, Robots Are Here To Help, AGI Is On The Horizon, Google Has A Formidable Competitor

Anthropic Funding, Midjourney Antifunding, Rabbit R1 Sellout Debut, And Microsoft Copilot’s Takeoff

GPTs, AI Influencers, Digital Twins, Microsoft, AI Search

Prompt Engineering: Six Strategies For Getting Better Results

The Four Waves Of Generative AI, We’re In Wave 2 According To Mustafa Suleyman

A Generative AI Video Timeline of the Top Players

A Guided Tour Of Apple’s Vision Pro And A Glimpse Of The Future Of [Spatial] Computing

Google Cuts, Expected Industry Cuts, GenAI’s $1 Trillion Trajectory

NY Times Sues OpenAI and Next-Gen AI Devices

Introducing the GenAI Prism with JESS3 and Conor Grennan

OpenAI and the Events that Caused the Crazy Four Days Between Sam Altman’s Firing and Return

February 5, 2024

AInsights: The New Google Bard aka Gemini, Neuralink Trials, Bland Conversational AI Agents, Deep Fakes, and GPT Assistants

AInsights: Generative insights in AI. Executive-level insights on the latest in generative AI….

Bard gets another massive update, attempting to pull users from competitive genAI sites such as ChatGPT and Midjourney; Google is reportedly rebranding Bard as Gemini

Google Bard released a significant round of new updates, including:

Integration with Google Services: Bard now draws information from YouTube, Google Maps, Flights, and Hotels, allowing users to ask a wider variety of questions and perform tasks like planning trips and obtaining templates for specific purposes.

Multilingual Support: The chatbot can now communicate in over 40 languages.

Image Generation: Google has added image generation capability, allowing it to create images powered by the creativity and imagination of your prompts. The lead image was developed using Bard.

Email Summarization and Sharing: Bard now offers better and more thorough summaries of recent emails and allows for the sharing of conversations, including images, to better appreciate the creative process.

Fact-Checking and Integration with Google Apps: You can now fact-check in Bard, which is a big plus as Google faces search engine competitor Perplexity.

Users can also integrate with Google Workspace and import data from other Google apps, such as summarizing PDFs from Google Docs. This appears to be taking steps toward competing against Microsoft’s Copilot, which already has much ground to make up.

Google Bard Insights

At this point, active genAI users are onboard or Google will have to work to entice new users. This is perhaps why Google is said to be in the process of rebranding Bard as Gemini and is set to launch a dedicated app to compete against ChatGPT and other focused genAI applications.

Incremental releases won’t accomplish that so much. But I am impressed to see Google moving to wave 3 of genAI in becoming a system of action.

Neuralink implants chip in first human brain.

Neuralink, a brain-chip startup founded in 2016 by Elon Musk, received U.S. FDA approval for human trials. The company successfully conducted its first human implantation and the patient is recovering well.

Neuralink is developing a brain-computer interface (BCI) that implants a device (N1) about the size of a coin in the skull, with ultra-thin wires going into the brain. The N1 implant allows individuals to wirelessly control devices using their brain to potentially restore independence and improve the lives of people with paralysis.

Neuralink AInsights

The success of this and all subsequent trials will serve as the foundation for potential medical applications that could revolutionize the lives of people with severe paralysis. Neuralink’s BCI could give patients control of computers, robotic arms, wheelchairs, and other life-changing devices through their thoughts.

It’s also important to remember that one of Neuralink’s initial ambitions is to meld brains with AI for human-machine collaboration.

Bland.ai introduces a new genre of chatbot that literally talks to you, like a human

I remember that in 2018, before the launch of ChatGPT, Google was accused of faking a big AI demo in which a virtual-assistant AI called a hair salon and made reservations on a user’s behalf.

Head on over to Bland.ai to receive a call from Blandy and be introduced to a new genre of conversational AI.

Bland.ai introduced an AI call center solution that’s reportedly capable of managing 500,000 simultaneous calls, operating in anyone’s voice.

Bland.ai AInsights

Initial use cases point to lead qualification calls. When connected to a CRM, the AI can automate initial lead calls to save time, reduce human error, and surface important insights into prospect preferences and behaviors.

There are pros and cons of technology like this. It’s inevitable. This technology, IMHO, doesn’t replace SDRs, it sets them up for human-centered success and increases their value.

AI-generated content, including calls and customer interactions, may lack the human touch, creativity, and ability to think outside the box, leading to repetitive, monotonous, and bland (get it?) content. Human touch counts for everything.

But, warning, this technology helps while it also empowers the dark side of AI and humanity. Conversational AI can lower our defense mechanisms by making everyday transactional engagement acceptable with AI. Deep fakes will pounce on this.

Recently, a finance worker at a multinational firm was coaxed into paying out $25 million to deepfake fraudsters posing as the company’s chief financial officer in a video conference call.

The growing libraries of specifically focused GPTs can now be summoned in real-time in ChatGPT sessions

Sometimes you don’t need to do the heavy lifting of prompt engineering in uncharted territory. When OpenAI introduced the GPT Store, the intent was to connect users to useful, custom versions of ChatGPT for specific use cases.

Now, you can integrate GPTs into prompts to accelerate desired outcomes in specific applications.

GPT AInsights

We don’t know what we don’t know. And that’s important when we don’t know what to ask or what’s possible. Sometimes it can take a series of elaborate, creative, meandering prompts to help us achieve the outcomes needed for each step we’re trying to accomplish in any new process.

AI founder and podcast host Jaeden Schafer shared an example use case where stacking GPTs in the same thread could empower founders. “One makes a logo, Canva makes a pitch deck, another writes sales copy, etc.,” he shared.

January 25, 2024

Brian Solis to Keynote Baker Hughes Annual Meeting, Energizing Change, in Florence, Italy

January 22, 2024

AInsights: AI is Coming for Jobs, Robots are Here to Help, AGI is on the Horizon, Google has a Formidable Competitor

Created with DALL-E

AInsights: Generative insights in AI. This rapid-fire series offers executive-level AInsights into the rapidly shifting landscape of generative AI.

OpenAI CEO Sam Altman says artificial general intelligence is on the horizon but will change the world much less than we fear.

Rumor is that Sam Altman was fired as CEO by the board over concerns about how aggressively Altman was pursuing artificial general intelligence (AGI). Now Altman is on record saying that our concerns over AGI may be overblown.

“People are begging to be disappointed and they will be,” said Altman.

AGI is next-level artificial intelligence that can complete tasks on par with or better than humans.

Altman believes that AGI will become an “incredible tool for productivity.”

“It will change the world much less than we all think and it will change jobs much less than we all think,” Altman assured.

AInsights

Make no mistake. The stakes will only get higher. They already are with the arrival of generative AI.

In a conversation with Satya Nadella, Altman reminded us that there is no “magic red button” to stop AI.

But at the same time, we as humans are already evolving by using AI and we will continue to do so.

“The world had a two week freakout over ChatGPT 4. ‘This changes everything. AGI is coming tomorrow. There are no jobs by the end of the year.’ And now people are like, ‘why is it so slow!?’,” Altman joked with the Economist.

While he is aiming to downplay the role of AGI, generative AI is already showing us what we can expect. We need a new era of AI-first leadership. We need decision-makers who can not only automate work but also imagine, inspire, and empower a renaissance, one where workers and jobs are augmented with AI to unlock new potential and performance.

DeepMind co-founder Mustafa Suleyman warns AI is a ‘fundamentally labor replacing’ tool over the long term.

A session at the World Economic Forum (WEF) in Davos said the quiet part out loud: AI is coming for jobs. I feel like this is more than just a bullet in this AInsights update. This is a canary in the coal mine, if that’s still a relevant reference point.

In an interview with CNBC, Suleyman was asked if AI was going to replace humans in the workplace.

This was his answer, “I think in the long term—over many decades—we have to think very hard about how we integrate these tools because, left completely to the market…these are fundamentally labor replacing tools.”

And he’s right. Let’s get that part out of the way.

Left to their own devices, executives naturally gravitate toward what they know, automation and cost-cutting to increase margins and profitability. You have to center insights on what drives their KPIs.

AInsights

Suleyman is not saying this from a doomsday perspective. He’s asking us to think differently about how we use AI to augment our work, and more importantly, our thinking…

In reality, executives have never, we’ve never, had access to technology that can collaborate and create. We’ve always been the source of creation. Executive decision-making is what drives businesses. At most, digital transformation and predictive analytics have aided in decision-making. But now, AI can augment decision-making, perform work, make us smarter and more capable, and unlock new possibilities.

That takes a new vision and a new set of KPIs to attach to executive decision-making.

For example, IKEA launched an AI bot named Billie to lead first-level contact with customers, effectively managing 47% of customer queries directed to the call centers. In a time when everyone is talking about AI replacing jobs, IKEA trained 8,500 call center workers to serve as interior design advisers, generating 1.3 billion euros in one year.

Stanford AI and Robotics debut developmental robot that makes the Jetsons a reality.

Zipeng Fu, a Stanford AI & Robotics PhD at the Stanford AI Lab, shared his team’s incredible project, Mobile ALOHA. The team includes Tony Zhao and Chelsea Finn.

If you ever watched the Jetsons, then you, like me, wished that we’d see Rosey come to life one day. And from an R&D perspective, that’s exactly what’s happening in Silicon Valley.

The advanced project and how they’re training Mobile ALOHA:

Imitation learning from human demonstrations has shown impressive performance in robotics. However, most results focus on table-top manipulation, lacking the mobility and dexterity necessary for generally useful tasks. In this work, we develop a system for imitating mobile manipulation tasks that are bimanual and require whole-body control. We first present Mobile ALOHA, a low-cost and whole-body teleoperation system for data collection.

To date, with 50 demos, the robot can autonomously complete complex mobile manipulation tasks.

Tasks include laundry, self-charging, vacuuming, watering plants, loading and unloading a dishwasher, making coffee, cooking, getting drinks from a refrigerator, opening a beer, opening doors, playing with pets, and so much more.

Mobile ALOHA's hardware is very capable. We brought it home yesterday and tried more tasks! It can:

– do laundry

– self-charge

– use a vacuum

– water plants

– load and unload a dishwasher

– use a coffee machine

– obtain drinks from the fridge and open a beer

– open… pic.twitter.com/XUGz7NhpeA

— Zipeng Fu (@zipengfu) January 4, 2024

Introducing 𝐌𝐨𝐛𝐢𝐥𝐞 𝐀𝐋𝐎𝐇𝐀

— Hardware!

A low-cost, open-source, mobile manipulator.

One of the most high-effort projects in my past 5yrs! Not possible without co-lead @zipengfu and @chelseabfinn.

At the end, what's better than cooking yourself a meal with the

pic.twitter.com/iNBIY1tkcB

— Tony Z. Zhao (@tonyzzhao) January 3, 2024

AInsights

Introduce 𝐌𝐨𝐛𝐢𝐥𝐞 𝐀𝐋𝐎𝐇𝐀

— Learning!

With 50 demos, our robot can autonomously complete complex mobile manipulation tasks:

– cook and serve shrimp

– call and take elevator

– store a 3Ibs pot to a two-door cabinet

Open-sourced!

Co-led @tonyzzhao, @chelseabfinn pic.twitter.com/wQ2BLDLhAw

— Zipeng Fu (@zipengfu) January 3, 2024

While early in its development, Mobile ALOHA is one of the many robotics projects being trained to perform complex human tasks. In these demos, we see tele-operated tasks, but the team does have autonomous results for a smaller set of tasks. The point is that this is how AI robots learn how to repeat and optimize tasks.

For comparison, this is Tesla’s Optimus robot learning how to fold clothes.

This is the @Tesla Optimus robot. Here it learns to fold a shirt. Next, it will do this autonomously, in most environments, at speed.

— Brian Solis (@briansolis) January 21, 2024

Perhaps more so, given that Mobile ALOHA is accelerating at Stanford, we can only imagine how many advanced AI-powered robotics initiatives are taking place around the world that we don’t know about. At any moment, breakthroughs will reach the masses, and suddenly your next big purchase might not only be aimed at an Apple Vision Pro.

AI-powered ‘answers’ engine Perplexity raises $73.6 million, now valued at $520 million, expected to compete against Google.

Perplexity is an AI-powered answers engine that also cites its sources. As a former analyst, I very much appreciate the ability to fine-tune academic sources. This is one of the very few generative AI tools that I use on a daily basis.

The company raised $73.6 million in a funding round led by IVP with additional investments from Databricks Ventures, Shopify CEO Tobi Lutke, NVIDIA, and Jeff Bezos. That’s a pretty powerful group betting on the future of AI-powered search.

The company was founded by Aravind Srinivas, Denis Yarats, Johnny Ho and Andy Konwinski. Srinivas previously researched languages and GenAI models at OpenAI.

Perplexity aims to address weaknesses in current search models, particularly in providing transparency and accuracy (read SEO/SEM/LLM). Additionally, Perplexity provides a conversational AI interface that tunes search queries with follow-up questions, helping users get more relevant answers. Unlike conventional search engines, Perplexity.ai employs deep learning algorithms to gather information from diverse sources and present it in a concise and accurate manner.

AInsights

I use Perplexity for its focus on transparency and ability to elevate and hone answers based on prompts and continuing exchanges. Google still plays a big role in my search behavior. But, and I’ve said this going back to every social media attempt, the company lacks a human algorithm. Traditional search is an always-evolving machine algorithm. But when someone asks a question, they do so from a human perspective. Human-centered algorithms can account for intent and desired outcome to then activate algorithms accordingly. Language vs. rank becomes key here.

On a side note, Perplexity and Rabbit announced a partnership to integrate the answer engine into the R1.

We're thrilled to announce our partnership with Rabbit: Together, we are introducing real-time, precise answers to Rabbit R1, seamlessly powered by our cutting-edge PPLX online LLM API, free from any knowledge cutoff. Plus, for the first 100,000 Rabbit R1 purchases, we're… pic.twitter.com/hJRehDlhtv

— Perplexity (@perplexity_ai) January 18, 2024

Stability AI introduces a smaller, more efficient language model (aka small language model) to democratize generative AI.

Stability AI, makers of Stable Diffusion, released its smallest LLM to date, Stable LM 2 1.6B. This is really about enhancing AI accessibility and performance, while also compromising the very same things for those partners looking to go bigger. The model is smaller and more efficient, aiming to lower barriers and enable more developers to utilize AI in various languages (English, Spanish, German, Italian, French, Portuguese, and Dutch).

The release represents the company’s commitment to continuous improvement and innovation in the field of artificial intelligence.

AInsights

This release signifies a major advancement in AI language models, particularly in the multilingual and 3D content creation domains. The model’s outperformance of other small AI language models highlights its potential to drive innovation and competitive advantage for businesses investing in AI technologies. Said simply, this presents an opportunity for executives to leverage cutting-edge AI capabilities for driving innovation, improving accessibility, and staying ahead in the competitive landscape.

The model’s superior performance lowers hardware barriers, allowing more developers to participate in the generative AI ecosystem. This can be leveraged by executives to drive innovation, improve accessibility, and gain a competitive edge in the rapidly evolving AI landscape. Additionally, the model’s integration of Content Credentials and invisible watermarking makes it suitable for a wide range of applications, including automated alt-text generation and visual question answering. This positions Stable LM 2 1.6B as a valuable tool for enhancing accessibility and expanding the reach of AI-driven solutions, which is increasingly important in today’s business environment.

TikTok gives users the ability to use generative AI to create songs (sort of).

TikTok has become an incredible platform for discovering new music and also for launching new artists. Now, with AI Song, users can create new music with text prompts.

AInsights

This isn’t yet what we think. It’s more of an AI song collaborator using an existing music library.

“It’s not technically an AI song generator — the name is likely to change and it is currently in testing at the moment,” Barney Hooper, spokesperson for TikTok said in an email to The Verge. “Any music used is from a pre-saved catalog created within the business. In essence, it pairs the lyrics with the pre-saved music, based on three genres: pop, hip-hop, and EDM.”

This also opens up a can of worms. With the New York Times suing OpenAI over copyright infringement, and OpenAI and Apple and others striking deals with media companies to allow LLMs to train on their data, TikTok and others have to build on a more collaborative foundation.

AI is coming for creatives according to FT.

I guess we’re going to have to get used to headlines like this, in every industry.

According to FT, architects are incorporating DALL-E, Midjourney, and other generative AI tools for complex design work, threatening the industry of illustrators and animators.

You’ll notice similar observations in this article, Suleyman’s interview, and from others. Up until now, humans were the only species that could conceive and build on original ideas. AI is now an infinite idea factory, building on the ideas of humankind. AI has been trained on everything that humans have created.

We should not fear this; we should embrace it if we’re to move forward and upward.

AInsights

Here, we need to revisit the conversation where Suleyman warned every business that AI will replace certain jobs. As humans, we need to stoke our creativity, our imagination, and most importantly, our empathy, to create and collaborate with AI in ways that weren’t possible before.

We can’t fear AI and robots if we’re not willing to stop acting like them in our work. AI is creating new opportunities and areas for innovation that, in all honesty, are overdue.

Wayne He is the founder of XKool in Shenzhen, a team of former Google engineers, AI scientists, mathematicians, and cross-over designers. Wayne He’s words close out these AInsights…

“In the future, architects will be empowered to show the client thousands of options and refine the best one so that even on a low budget you will be able to get the best building. We worry about AI escaping human control and causing a disaster for mankind, and in my novels most of the future AI scenarios are not optimistic. But it is this writing which gave me an awareness to prevent these things happening. AI should be a co-pilot and a friend, not a replacement for architects.”

Please subscribe to my newsletter, a Quantum of Solis.

The post AInsights: AI is Coming for Jobs, Robots are Here to Help, AGI is on the Horizon, Google has a Formidable Competitor appeared first on Brian Solis.

January 19, 2024

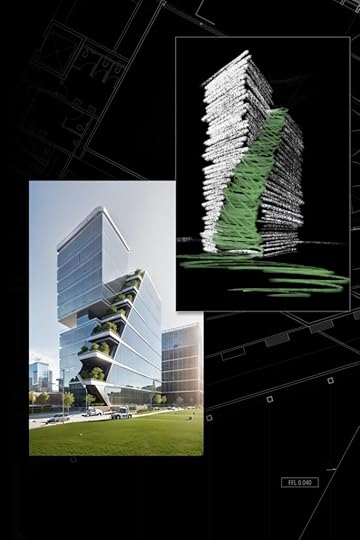

Becoming an AI-First Business: Brian Solis To Deliver Closing Keynote at Baker Hughes Energizing Change Annual Meeting

Brian Solis was invited to keynote Baker Hughes’ 24th annual event in Florence, Italy, “Energizing Change.”

Featuring international energy and industry thought leaders, Brian’s plenary is scheduled for 29 January. Brian will share his vision for becoming an AI-first business and unlocking an entirely new level to compete for the future.

Closing Keynote: AI is Eating the World: How to Lead, Not Follow in an AI-First World

Since the launch of ChatGPT in November 2022, generative AI has become the world’s fastest-growing technology and the subject of much debate regarding its role in reshaping our world. In this closing keynote, world-renowned futurist and best-selling author Brian Solis will share why this is the moment for leaders to reimagine organizations for an entirely new future. Solis will explore why automation is no longer the goal, but instead the norm, why augmentation will be the key to differentiation, and how leaders can forge the mind shift required to unlock a new future as an AI-first exponential growth business.

The post Becoming an AI-First Business: Brian Solis To Deliver Closing Keynote at Baker Hughes Energizing Change Annual Meeting appeared first on Brian Solis.

A Guided Tour of Apple’s Vision Pro and a Glimpse of the Future of [Spatial] Computing

Apple finally released its long-awaited Vision Pro for pre-order today. At launch time, Apple also released a guided tour video that offers a glimpse of spatial computing and the new dimension of immersive experiences it introduces. Here are the key moments to focus on to appreciate what makes Vision Pro a game-changer. What we’re witnessing is a new future of computing, connectivity, entertainment, communication, and how we interact with digital and the physical space around us.

1) You’ll notice the video is meant to prepare you contextually for an entirely new experience. It sets the stage for a “mindshift”. Though, at parts, it goes a little overboard.

2) The shift from physical space into a spatial environment is breathtaking. That’s by design. You can almost see and feel it through his eyes.

3) Note her composure and the simplicity of her instructions. This too is by design. It’s meant to connect Spatial Computing to a new dimension, one that’s relaxed and wondrous.

4) The link between how our host guides the user through the user interface and the apps that are demonstrated serves two functions: elegance and intuitiveness.

5) “Peering into a moment of time” is a powerful way to describe spatial videos. It adds a new dimension to past experiences, bringing them to life as immersive memories. It’s not unlike the movie Brainstorm with Christopher Walken and Natalie Wood (her last role). When these appear as reminders like today’s photos on iPhones, many will cry as they relive important moments.

6) Entertainment is a killer app out of the box. Om believes that Vision Pro (or Apple’s face computer as he playfully calls it) is serving as a home entertainment reference system.

7) Productivity is also touted as a powerful experience allowing you to transform your open space into a virtual, customizable work environment. For example, when the user opens his MacBook, the screen shifts from the laptop to the Vision Pro, creating a massive cinematic 4d display.

8) UI/UX is subtly but powerfully demonstrated as a game-changing way to interact. Spatial computing changes how we control space and the virtual relationship between digital and physical worlds.

I have friends who were online at 5 a.m. Pacific to pre-order. Honestly, I wish I were one of them.

The post A Guided Tour of Apple’s Vision Pro and a Glimpse of the Future of [Spatial] Computing appeared first on Brian Solis.

January 17, 2024

AInsights: Anthropic Funding, Midjourney Antifunding, Rabbit R1 Sellout Debut, and Microsoft Copilot’s Takeoff

Created with DALL-E

AInsights: Generative insights in AI. This rapid-fire series offers executive-level AInsights into the rapidly shifting landscape of generative AI.

OpenAI rival Anthropic in talks to raise $750 million led by Menlo Ventures

Anthropic, an OpenAI rival founded by ex-OpenAI employees, is in talks to raise a $750 million funding round led by Menlo Ventures at a valuation of ~$18 billion, roughly 4.4 times the startup’s $4.1 billion valuation earlier this year. This approach keeps new players at bay, for the most part. And it also holds its valuation from soaring into the stratosphere ahead of a likely next round.

Founded in 2021, Anthropic is already funded by top-tier companies including Google, Salesforce and Zoom. Anthropic already raised a combined $750 million from two funding rounds in April and May, including a strategic investment from Google, giving it a 10% stake in the company. Partnering with Anthropic ultimately lets Google compete against Microsoft and OpenAI.

According to The Information, the company reportedly forecasts an annualized revenue run rate of more than $850 million by the end of 2024.

AInsights

This round signifies that Anthropic is building its war che$t, giving the company the capital it needs to more effectively compete against OpenAI. I see the early days of the Microsoft and Apple rivalry here.

Midjourney generated $200M in revenue with $0 in investments

The ability to generate significant revenue isn’t just self-sustaining, it’s rare in Silicon Valley, a name synonymous with venture capital and fundraising. At $200 million in revenue, the company is already profitable.

This amazing feat has been performed with only 40 employees and zero funding from outside investors. I’d love to invest if I had an opportunity.

It’s wild to think that David Holz founded the company in mid-2021 and here we are. Now venture capitalists have been practically begging him to take their money. Not doing so keeps Holz in control and not beholden to investors. I guess, as the world turns, so do the days of genAI.

AInsights

Holz’s dedication to his vision and holding the company to its core is notable and refreshing. I wonder though, if at some point, he’ll see an opportunity to accept strategic investments to compete against OpenAI (DALL-E) and Stability AI. The minute he feels like he has to, if ever, he’s already on the defensive. We already see Anthropic raising to keep pace with OpenAI. The key is to stay ahead of R&D and trends.

The Rabbit R1 is the $199 counterpart to Humane’s $699 AI Pin and introduces AI’s third wave

Announced at CES 2024, the Rabbit R1 AI handheld stole the show, or at least the AI news cycles. The R1 is largely viewed as a simpler version of Humane’s advanced AI Pin. At $199 vs $699, the R1 is generating ChatGPT vibes, winning over consumers, in this case, in batches of 10,000 units at a time. At the time of this writing, the company had already sold out of its third batch of 10k units.

To appreciate the Rabbit R1 is to understand where we are in the rapid evolution of genAI.

Right now, we’re in wave 2 where models take input data and produce new data.

Wave 3 is the era of interactive AI, where conversation becomes the user interface and autonomous bots connect to one another to execute tasks behind the scenes.

So what is the Rabbit R1 exactly?

The R1 builds on the premise of an LLM, wave 2, with the beginning of wave 3, connecting agents to one another to form workflows and complete tasks.

This is what Rabbit refers to as a LAM or large action model.

The Rabbit R1 is an AI-powered handheld device that aims to simplify the AI experience by using a Large Action Model to interact with various apps and complete tasks on behalf of the user.
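To make the wave 3 idea a little more concrete, here is a minimal Python sketch of agents chained into a workflow that completes a task on a user’s behalf. This is only an illustration of the concept, not Rabbit’s implementation: every name in it (`Task`, `find_restaurant`, `book_table`, `send_invite`, `run_workflow`) is hypothetical, and the "agents" are simple stand-in functions rather than real models or app integrations.

```python
from dataclasses import dataclass, field
from typing import Callable


# Hypothetical illustration only: these names do not come from Rabbit
# or any real large action model.
@dataclass
class Task:
    request: str                                   # what the user asked for
    log: list[str] = field(default_factory=list)   # actions taken so far


# An "agent" here is just a function that takes a task and returns it updated.
Agent = Callable[[Task], Task]


def find_restaurant(task: Task) -> Task:
    task.log.append(f"searched restaurants for: {task.request}")
    return task


def book_table(task: Task) -> Task:
    task.log.append("booked a table")
    return task


def send_invite(task: Task) -> Task:
    task.log.append("sent calendar invite")
    return task


def run_workflow(request: str, agents: list[Agent]) -> Task:
    """Pass one request through each agent in order, behind the scenes."""
    task = Task(request)
    for agent in agents:
        task = agent(task)
    return task


result = run_workflow(
    "dinner for two on Friday",
    [find_restaurant, book_table, send_invite],
)
for step in result.log:
    print(step)
```

The point of the sketch is the shape, not the details: the user states an outcome once, and a chain of agents carries out the steps in between.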

It features a rotating camera called the “rabbit eye,” a scroll wheel for navigation, a press-to-talk button for voice commands, a 2.28-inch touchscreen, a MediaTek Helio P35 processor with 4GB of RAM and 128GB of storage, a SIM card slot for cellular capabilities, and a USB-C port.

The device’s operating system, Rabbit OS, is designed to enable natural language-centered interactions, and the LAM allows the device to learn and adapt to specific commands for future interactions.

The Rabbit R1 is not intended to replace a smartphone but rather to serve as a pocket companion that can perform various digital tasks and interact with apps using AI capabilities.

For more, here’s the official launch video. It’s long, but for those who really want to understand where it fits in the genAI ecosystem, it’s helpful.

AInsights

In some ways, the Rabbit R1, in a basic sense, reminds me of the Chumby Internet Radio from the days of Web 2.0. At the time, Chumby offered the ability to connect your favorite internet sites and apps into one, super cute, device. More so, with the Humane AI Pin, now the R1, and Apple’s Vision Pro, we’re getting glimpses of what a post-phone world could look like. Not that it’s happening any time soon, but it will happen.

AI discovery shatters long-standing belief in forensics that fingerprints from different fingers of the same person are unique

An AI team led by Columbia Engineering undergrad Gabe Guo identified a new kind of forensic model that focuses on the curvature at the center of the print.

This model is not based on genAI or an LLM, which I find fascinating. It’s one of the many approaches to AI.

AInsights

The insights for this story are best articulated in this quote from Columbia Engineering…

“Even more exciting is the fact that an undergraduate student, with no background in forensics whatsoever, can use AI to successfully challenge a widely held belief of an entire field. We are about to experience an explosion of AI-led scientific discovery by non-experts, and the expert community, including academia, needs to get ready.”

Microsoft debuts Copilot Pro

Microsoft introduced its Copilot Pro service. For $20/month, users get access to the latest models of OpenAI’s ChatGPT, including GPT-4 Turbo, with everyday work capabilities featured in Office integration and the ability to create application-specific Copilot GPTs.

If you’re already a Microsoft 365 Personal or Home subscriber, then the extra $20 per month unlocks Copilot in Office apps.

AInsights

Microsoft’s increasing its consumer and enterprise viability as an AI-powered company. “Microsoft does not own any portion of OpenAI and is simply entitled to a share of profit distributions,” a Microsoft spokesperson said in a statement. It makes you wonder though, as a Microsoft user, whether you need the separate subscription to OpenAI.

Embedded into Microsoft apps, genAI becomes UI, making work collaborative and output exponential.

Please subscribe to my newsletter, a Quantum of Solis.

The post AInsights: Anthropic Funding, Midjourney Antifunding, Rabbit R1 Sellout Debut, and Microsoft Copilot’s Takeoff appeared first on Brian Solis.

January 16, 2024

Brian Solis to Keynote Finovate in San Francisco

FinovateSpring will bring the entire fintech ecosystem together, with 600+ decision-makers from banks & financial institutions, 840+ C-level executives, heads, directors or VPs, and 200+ VCs/investors looking for funding opportunities.

FIRST KEYNOTE CONFIRMED. GET GROUNDBREAKING INSIGHTS

Brian Solis to keynote Finovate!

Session: The Cycle For Emerging Technologies: If You’re Waiting For Someone To Tell You What To Do, You’re On The Wrong Side Of Change

How can we all challenge the seductive call to return to ‘normal’, a siren song that threatens to undo the potential for these unprecedented opportunities to bring about meaningful change, growth, and innovation? Leaders need to seize this moment as a crucible for creativity and innovation; there has never been a greater need for a toolkit for transformation, a platform for change, and a system for reshaping the future.

The post Brian Solis to Keynote Finovate in San Francisco appeared first on Brian Solis.