Brian Solis's Blog, page 13

June 10, 2024

AInsights: Forgetting Google? Perplexity Pages Craft Expert Content Search Engines Love

AInsights: Your executive-level insights making sense of the latest in generative AI…

Perplexity, my favorite AI-powered answers engine, just introduced Perplexity Pages. This is a game changer on so many fronts. Let’s start with what it is, and then we’ll get into why this is such a big deal.

As a content creator, I do my research. Then, I assemble the information into an article, blog, video, podcast, website, paper, etc., and publish a link where people can visit to learn more. Perplexity now provides a content creation and publishing platform to conduct AI-powered research, vet the information, document sources, and publish your research as a linkable (and shareable) destination. Said simply, Perplexity Pages uses its artificial intelligence engine to transform research into visually compelling and shareable web pages with just a few clicks.

Here’s an example. Today, I spent time researching how to make authentic bolognese. In my research using Google, YouTube, and Perplexity, I learned that there is one registered recipe and another that’s also widely accepted as authentic. The registered recipe doesn’t use tomatoes or milk. The other does. After all that work, and after consulting countless resources on both sides, I manually assembled the process to correctly reproduce both recipes. Had I done this using Perplexity Pages, I could have produced my own personally researched cookbook at the push of a button, saving a ton of time researching, writing, and assembling pictures and videos. UPDATE: I did indeed channel all research to Perplexity Pages and published my results for all the home chefs out there!

Where was this when I started blogging!?

How does Perplexity Pages work?

1) Define the Topic: Specify the subject matter you want to explore, whether it’s an industry overview, product analysis, or market research.

2) Specify the Audience: Indicate the target audience for your content, such as executives, researchers, or customers.

3) Generate the Page: Perplexity’s AI scours its LLM, curates relevant information, and generates a structured draft with sections, images, and citations. You can prompt Perplexity to generate AI images, upload your own, or use web images.

4) Customize and Publish: Refine the content, add or remove sections, adjust the layout, and publish the Page to Perplexity’s library or share it directly with your audience.

AInsights

Pages reminds me of the original Blogger and WordPress combined with Canvas and also Quora if answers were generated by artificial intelligence. It’s part publishing tool, part thought leadership/branding, part subject matter expert, and part designer.

For example, here’s my Perplexity Page that explores Perplexity Pages.

With many pundits, myself included, positioning Perplexity as an answers engine and an alternative to Google, Pages are likely to be indexed by Google itself. This means that Perplexity is providing the tools to help curious thinkers, creators, writers, academics, experts, and researchers accelerate and elevate their thought leadership, using its competitor’s platform to amplify subject matter expertise and, potentially, online visibility and fundability.

Google will respond. In the meantime, this is an excellent tool for learning how to hone AI-powered research, sharpen your editing skills, validate AI-powered results, and up-level the output in every dimension.

Please subscribe to AInsights, here.

If you’d like to join my master mailing list for news and events, please follow, a Quantum of Solis.

The post AInsights: Forgetting Google? Perplexity Pages Craft Expert Content Search Engines Love appeared first on Brian Solis.

May 29, 2024

Brian Solis Keynote: AI Is Eating the World – Time to Innovate for an AI First World

Brian Solis delivered the keynote at Innov8rs in Los Angeles, “AI Is Eating the World: Time to Innovate for an AI-First World.”

Generative AI is now the fastest growing technology in the world. In this riveting keynote, world-renowned futurist and eight-time best-selling author Brian Solis shares why now is the time to reimagine your business for an entirely new future.

Solis argues that automation is the norm, but not the only end goal. In this session he demonstrates how augmentation becomes the key to differentiation for enterprises that are at the inflection point of thriving or perishing in the age of AI. With the mind shift that this session will initiate, leaders will be able to unlock a new future toward becoming an exponential business.

Please subscribe, like, and comment!

Book Brian to speak at your event!

The post Brian Solis Keynote: AI Is Eating the World – Time to Innovate for an AI First World appeared first on Brian Solis.

May 28, 2024

The Inception of Our New Spatial Reality: Immerse or Die!

In the worlds of AR, VR, gaming, and spatial computing, Cathy Hackl and Irena Cronin are renowned. I’ve known them for the better part of 15+ years and they are still among my favorite subject matter experts to follow.

I’m proud to share that their new book, Spatial Computing: An AI-Driven Business Revolution, is now available.

I’m also proud to share that I had the privilege of writing the foreword and I get to share it with you here!

About the Book

Spatial Computing: An AI-Driven Business Revolution reveals exclusive insider knowledge of what’s happening today in the convergence of AI and spatial computing. Spatial Computing is an evolving 3D-centric form of computing that uses AI, Computer Vision, and extended reality to blend virtual experiences into the physical world, breaking free from screens into everything you can see, experience, and know.

Spatial Computing: An AI-Driven Business Revolution includes coverage of:

The new paradigm of human-to-human and human-computer interaction, enhancing how we visualize, simulate, and interact with data in physical and virtual locations.

Navigating the world alongside robots, drones, cars, virtual assistants, and beyond―without the limitation of just one technology or device.

Insights, tools and illustrative use cases that enable businesses to harness the convergence of AI and spatial computing today and in the decade to come via both hardware and software.

The impact of spatial computing is just starting to be felt. Spatial Computing: An AI-Driven Business Revolution is a must-have resource for business leaders who wish to fully understand this new form of revolutionary, evolutionary technology that is expected to be even more impactful than personal computing and mobile computing.

Foreword – The Inception of Our New Spatial Reality: Immerse or Die!

Apple had its next iPhone moment with the launch of its Vision Pro in 2024. Like in 2007, Apple yet again shifted the trajectory of computing behaviors by developing an intelligent and immersive Spatial Computing platform. And like in 2007, the world will never be the same. An entirely new operating system unlocked entirely new dimensions. These infinite, layered canvases bestowed users with metahuman capabilities. Now, people in any context can interact with information, content, and each other in ways and in spaces that didn’t exist in traditional physical and digital realms. We thus gain access to unprecedented worlds and experiences with unimagined worlds forming in virtual and augmented realms every day.

But wait, there’s more. Eight or so months before the introduction of Apple’s Vision Pro, OpenAI released ChatGPT, introducing generative AI to the masses. In fact, ChatGPT is widely regarded as the fastest-growing consumer internet app of all time, reaching an estimated 100 million monthly users in just two months.1 Generative AI is also, in its own way, giving users superpowers by augmenting their efforts with Artificial Intelligence. In fact, most apps and platforms will automatically integrate generative AI as part of their user interface (UI). It will just seamlessly blend into digital experiences. Added to Spatial Computing, AI-powered experiences supercharge human potential by arming people with a cognitive exoskeleton, augmented and virtual vision, and the ability to interact with computers, robots, data, and one another in three-dimensional other-worldly spaces.

And that’s where this story begins.

Humans are now entering into uncharted portals of hybrid realities that are not only intelligent and immersive, but also transcendent. They augment human capabilities to unlock exponential performance and previously unattainable outcomes in evolving hybrid dimensions that foster the intermundane relationship between physical and digital worlds.

Cathy Hackl and Irena Cronin are about to deliver a transformative inception by planting the idea of spatial innovation to spark your imagination to create new worlds, new ways of working, new ways of learning, new ways of communicating, new ways of exploring, and new ways of dreaming, remembering, inventing, solving, and evolving.

This frontier between the physical and digital world will be shaped by you and fellow readers. You are the architects of these unexplored worlds. You are the astronomers who discover them. You are the pioneers who explore these new territories. And it’s your vision that defines the next chapter of spatial design, experiences, and human potential.

What a virtual world we now live in! Immerse or die!

Brian Solis

Digital Futurist, Anthropologist, Soon-To-Be 9x Best-Selling Author, Metahuman, briansolis.com

The post The Inception of Our New Spatial Reality: Immerse or Die! appeared first on Brian Solis.

May 25, 2024

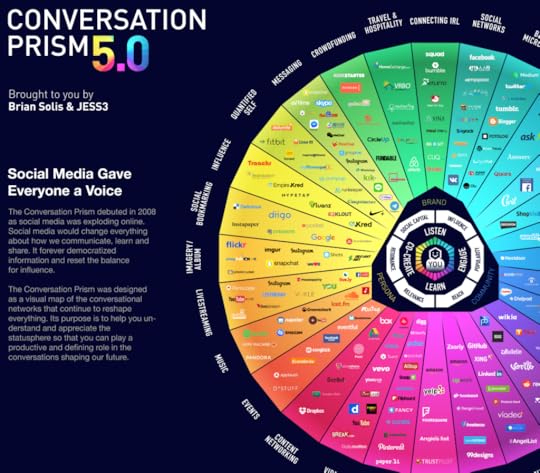

The Story Behind The Conversation Prism, A Viral Social Media Infographic by Brian Solis and JESS3

In 2007, JESS3 and I released v1.0 of The Conversation Prism, a map and mental model for navigating the social media landscape at the time.

Fun fact: this became one of the first ever viral social media infographics.

We would go on to release 4 additional iterations and ship thousands of full-size posters.

Who knows, maybe we’ll release the next version one day soon!

This is the backstory. Please watch!

You can find V5.0 here.

If you’d like to join my master mailing list for news and events, please follow, a Quantum of Solis.

The post The Story Behind The Conversation Prism, A Viral Social Media Infographic by Brian Solis and JESS3 appeared first on Brian Solis.

May 22, 2024

AInsights: At Google I/O, AI Becomes An Extension of All You Do and Create, and It’s Going to Change…Everything

Credit: Marian Villa

AInsights: Your executive-level insights on the latest in generative AI…

Meet Agent 00AI…your new Q to help you navigate your work and personal lives.

Google hosted its I/O event, and everything the company announced is disruptive not only to the realm of genAI, but also to Google itself.

Let’s run through the announcements and then let’s dive deeper to analyze how they rival OpenAI, Meta, Anthropic, and Perplexity.

For starters, Google announced Gemini AI Integration across multiple key products, which is a sign of the future for next-gen hardware and software products. At some point on the horizon, AI will simply become part of the user interface, acting as an assistant to collaborate with you in real-time, or, eventually, as a proactive agent on your behalf.

Automatic movie/TV show description generation in Google TV.

Geospatial augmented reality content in Google Maps.

AI-generated quizzes on educational YouTube videos.

Natural language search in Google Photos (“Ask Photos” feature). This is huge in and of itself. How many pictures do you have on your phone or in the cloud that you’re likely never to see again? You can find pictures simply by describing them to AI!

AI assistance for email drafting, summarization, and e-commerce returns in Gmail. Now also please enhance email search! Why is this still a challenge in 2024!?

Google I/O in Under 10 Minutes

Gemini 2 Model Update

Google also announced a new 27-billion-parameter model for Gemini 2 (latest 1.5 details here), its next-generation AI offering, optimized for efficient performance on GPUs. This larger model can support the largest input of any commercially available AI model.

Veo and Imagen 3 for Creators

Google showcased Veo, its latest high-definition video generation model designed to compete against Sora and Midjourney, and Imagen 3, its highest-quality text-to-image model, promising more lifelike visuals.

These tools will be available for select creators initially.

Audio Overviews and AI Sandbox

Google introduced ‘Audio Overviews,’ a feature that generates audio discussions based on text input, and ‘AI Sandbox,’ a range of generative AI tools for creating music and sounds from user prompts.

AI Overviews in Search

Google Search is launching ‘AI Overviews’ to provide quick summaries of answers to complex search queries, along with assistant-like planning capabilities for multi-step tasks.

Google introduced the ability to ask open-ended questions and receive detailed, coherent responses generated by AI models. This allows users to get more comprehensive information beyond just a list of links.

AI Agents: Google unveiled AI agents that can engage in back-and-forth dialogue to help users accomplish multi-step tasks like research, analysis, and creative projects. These agents leverage the latest language models to provide personalized assistance.

Multimodal Search: Google expanded its search capabilities to understand and generate responses combining text, images, audio, and other modalities. This enables users to search with images or audio clips and receive relevant multimedia results.

Longer Context: Google’s search models now have the ability to understand and incorporate much longer context from a user’s query history and previous interactions. This allows for more contextually relevant and personalized search experiences.

These new AI-powered search features aim to provide more natural, interactive, and comprehensive information access compared to traditional keyword-based search. They leverage Google’s latest advancements in large language models and multimodal AI to deliver a more assistive and intelligent search experience.

What we have yet to see, though, are tools for businesses that need to be on the other side of AI search. It’s clear that search behaviors are changing, but how products and services appear on the other side of discovery is the next Wild West.

AI Teammate for Google Workspace

The ‘AI Teammate’ feature will integrate into Google Workspace, helping to build a searchable collection of work from messages and email threads, providing analyses and summaries.

Project Astra – AI Assistant

Google unveiled Project Astra, a prototype AI assistant built by DeepMind that can help users with tasks like identifying surroundings, finding lost items, reviewing code, and answering questions in real-time.

This is by far the most promising of Google’s AI assistants, and for the record, is not available yet. Project Astra represents Google’s vision for the future of AI assistants…and more.

We could also very well be on the cusp of a next-gen version of Google Glass. And this time, it won’t be so awkward now that Meta and Ray-Ban have helped to consumerize wearable AI.

So what is it?

Project Astra is a multimodal AI agent capable of perceiving and responding to real-time information through text, video, images, and speech. It can simultaneously access information from the web and its surroundings using a smartphone camera or smart glasses. The system encodes video frames and speech into a timeline, caching it for efficient recall and response. For example, in the demo below, you’ll see a live video feed panning a room where the user stops, draws an arrow on the screen, and asks the AI assistant to identify the object. In another example, the video feed continues to pan with the user asking it to recognize objects that produce sound. The AI assistant accurately identifies an audio speaker.
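To make the “timeline” idea a little more concrete, here is a toy Python sketch of a bounded cache of timestamped observations that can be searched from newest to oldest. To be clear, this is not how Google built Project Astra; the `Event` and `TimelineCache` names, the plain-text descriptions standing in for encoded frames and speech, and the keyword lookup are all assumptions made purely for illustration.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Event:
    timestamp: float   # seconds since the session started
    kind: str          # "frame" or "speech" (hypothetical labels)
    description: str   # stand-in for an encoded video frame or a transcript


class TimelineCache:
    """Keeps only the most recent events, in the order they were observed."""

    def __init__(self, max_events: int = 1000) -> None:
        # A deque with maxlen silently drops the oldest event when full.
        self._events = deque(maxlen=max_events)

    def add(self, event: Event) -> None:
        self._events.append(event)

    def recall(self, keyword: str) -> Optional[Event]:
        """Return the most recent event whose description mentions the keyword."""
        needle = keyword.lower()
        for event in reversed(self._events):
            if needle in event.description.lower():
                return event
        return None


if __name__ == "__main__":
    cache = TimelineCache(max_events=3)
    cache.add(Event(1.0, "frame", "desk with glasses next to a red apple"))
    cache.add(Event(2.5, "frame", "whiteboard with a diagram"))
    cache.add(Event(4.0, "speech", "where did I leave my glasses?"))
    found = cache.recall("apple")
    print(found.timestamp if found else "not in cache")
```

The point of the sketch is simply that answering “where did I leave my glasses?” requires remembering what was seen earlier, which is why a cached timeline matters more than any single frame.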

Project Astra Key Capabilities

Identifies objects, sounds, and their specific parts in real-time using computer vision and audio processing.

Understands context and location based on visual cues from the environment.

Provides explanations and information related to objects, code snippets, or scenarios it perceives.

Engages in natural, conversational interactions, adapting to interruptions and speech patterns.

Offers proactive assistance and reminders based on the user’s context and past interactions.

Implications for Businesses

Project Astra represents a significant leap in AI capabilities, offering several potential benefits for businesses:

Enhanced Productivity: An AI assistant that can understand and respond to the complexities of real-world scenarios could streamline various tasks, boosting employee productivity and efficiency.

Improved Customer Experience: Businesses could leverage Project Astra’s multimodal capabilities to provide more intuitive and personalized customer support, enhancing the overall customer experience.

Augmented Decision-Making: By processing and synthesizing information from multiple sources in real-time, Project Astra could assist executives and decision-makers with data-driven insights and recommendations.

Innovation Opportunities: The advanced AI capabilities of Project Astra could pave the way for new products, services, and business models that leverage multimodal interactions and contextual awareness.

While Project Astra is still in development, Google plans to integrate some of its capabilities into products like the Gemini app and web experience later this year. Business executives should closely monitor the progress of Project Astra and explore how its cutting-edge AI capabilities could benefit their organizations and drive innovation.

And that’s your AInsights this time around. Now you and I can think about the future of AI-powered search, work, next-level creations we’ll produce, and how we’ll navigate our world, and our business, with AI by our side.

Please subscribe to AInsights, here.

If you’d like to join my master mailing list for news and events, please follow, a Quantum of Solis.

The post AInsights: At Google I/O, AI Becomes An Extension of All You Do and Create, and It’s Going to Change…Everything appeared first on Brian Solis.

May 20, 2024

AInsights: Exploring OpenAI’s new Flagship Generative AI Model GPT-4o and What It Means to You

OpenAI CTO Mira Murati Credit: OpenAI

AInsights: Your executive-level insights on the latest in generative AI…

OpenAI introduced GPT-4o, its new flagship, real-time generative AI model. The “o” stands for “omni,” which refers to the model’s ability to process multimodal prompts including text, voice, and video.

During its live virtual event, OpenAI CTO Mira Murati explained this version’s significance, “…this is incredibly important, because we’re looking at the future of interaction between ourselves and machines.”

Let’s dive into the announcement to explore the new features and what it means to you and me…

Increased Context Window

GPT-4o has a massive 128,000 token context window, equivalent to around 300 pages of text. This allows it to process and comprehend much larger volumes of information compared to previous models, making it invaluable for tasks like analyzing lengthy documents, reports, or datasets.
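If you’re curious where a number like “300 pages” comes from, here’s a back-of-the-envelope sketch in Python. The two conversion factors are rules of thumb I’m assuming for illustration (about three-quarters of an English word per token, and about 320 words on a dense page); they are not figures published by OpenAI, and real tokenizers vary by language and content.

```python
# Assumed rules of thumb, not official figures.
WORDS_PER_TOKEN = 0.75   # roughly three-quarters of an English word per token
WORDS_PER_PAGE = 320     # a fairly dense page of text


def tokens_to_pages(tokens: int) -> float:
    """Estimate how many pages of text fit in a given token budget."""
    words = tokens * WORDS_PER_TOKEN
    return words / WORDS_PER_PAGE


if __name__ == "__main__":
    # 128,000 tokens * 0.75 words/token = 96,000 words; 96,000 / 320 = 300 pages
    print(round(tokens_to_pages(128_000)))
```

Change either assumption and the page count moves with it, which is why you’ll see slightly different “pages” estimates quoted for the same context window.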

Multimodal Capabilities

One of the most notable additions is GPT-4o’s multimodal capabilities, allowing it to understand and generate content across different modalities:

Vision: GPT-4o can analyze images, videos, and visual data, opening up applications in areas like computer vision, image captioning, and video understanding.

Text-to-Speech: It can generate human-like speech from text inputs, enabling voice interfaces and audio content creation.

Image Generation: Through integration with DALL-E 3, GPT-4o can create, edit, and manipulate images based on text prompts.

These multimodal skills make GPT-4o highly versatile and suitable for a wide range of multimedia applications.

Humanity

Perhaps most importantly, GPT-4o features several advancements that make it a more empathetic and emotionally intelligent chatbot. In emotionally-rich scenarios such as healthcare, mental health, and even HR and customer service applications, sympathy, empathy, communications, and other human skills are vital. To date, chatbots have been at best transactional and at worst irrelevant and robotic.

GPT-4o introduces several key advancements toward that goal:

Emotional Tone Detection: GPT-4o can detect emotional cues and the mood of the user from text, audio, and visual inputs like facial expressions. This allows it to tailor its responses in a more appropriate and empathetic manner.

Simulated Emotional Reactions: The model can output simulated emotional reactions through its text and voice responses. For example, it can convey tones of affection, concern, or enthusiasm to better connect with the user’s emotional state.

Human-like Cadence and Tone: GPT-4o is designed to mimic natural human cadences and conversational styles in its verbal responses. This makes the interactions feel more natural, personal, and emotionally resonant.

Multilingual Support: Enhanced multilingual capabilities enable GPT-4o to understand and respond to users in multiple languages, facilitating more empathetic communication across cultural and linguistic barriers.

By incorporating these emotional intelligence features, GPT-4o can provide more personalized, empathetic, and human-like interactions. Studies show that users are more likely to trust and cooperate with chatbots that exhibit emotional intelligence and human-like behavior. As a result, GPT-4o has the potential to foster stronger emotional connections and more satisfying user experiences in various applications.

Improved Knowledge

GPT-4o has been trained on data up to April 2023, providing it with more up-to-date knowledge compared to previous models. This is important for tasks that require more current information, such as news analysis, market research, industry trends, or monitoring rapidly evolving situations.

Cost Reduction

OpenAI has significantly reduced the pricing for GPT-4o, making it more affordable for developers and enterprises to integrate into their applications and workflows. Input tokens are now one-third the previous price, while output tokens are half the cost. Tokens are the individual units of text that a language model processes: input tokens are what you feed into the model, and output tokens are what it generates in response. In the context of language models like GPT-4, tokens can be words, characters, or subwords, depending on the tokenization method used.
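To see what those ratios could mean for a budget, here’s a small Python sketch. The one-third and one-half ratios come from the paragraph above; the “previous” per-million-token prices are placeholders I made up for the arithmetic, not OpenAI’s actual price list, so swap in real numbers before drawing conclusions.

```python
from fractions import Fraction

# Hypothetical previous prices in dollars per 1 million tokens (placeholders only).
PREV_INPUT_PRICE = Fraction(30)
PREV_OUTPUT_PRICE = Fraction(60)

# Ratios as described in this post.
INPUT_RATIO = Fraction(1, 3)
OUTPUT_RATIO = Fraction(1, 2)


def request_cost(input_tokens, output_tokens, input_price, output_price):
    """Cost in dollars for one request, given per-million-token prices."""
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


def compare(input_tokens, output_tokens):
    """Return (old_cost, new_cost) for the same workload."""
    old = request_cost(input_tokens, output_tokens,
                       PREV_INPUT_PRICE, PREV_OUTPUT_PRICE)
    new = request_cost(input_tokens, output_tokens,
                       PREV_INPUT_PRICE * INPUT_RATIO,
                       PREV_OUTPUT_PRICE * OUTPUT_RATIO)
    return old, new


if __name__ == "__main__":
    old, new = compare(input_tokens=100_000, output_tokens=10_000)
    print(f"old: ${float(old):.2f}  new: ${float(new):.2f}")
```

Because input and output tokens are discounted differently, the overall savings depend on your mix: prompt-heavy workloads (long documents in, short answers out) benefit the most under these assumptions.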

Faster Performance

Optimizations have been made to GPT-4o, resulting in faster, near real-time response times compared to its predecessor. This improved speed can enhance user experiences, enable real-time applications, and accelerate time to output.

AInsights

For executives, GPT-4o’s capabilities open up new possibilities for leveraging AI across various business functions, from content creation and data analysis to customer service and product development. It’s more human than its predecessors and designed to engage in ways that are also more human.

Its multimodal nature allows for more natural and engaging interactions, while its increased context window and knowledge base enable more comprehensive and informed decision-making. Additionally, the cost reductions make it more accessible for enterprises to adopt and scale AI solutions powered by GPT-4o.

Here are some creative ways people are already building on GPT-4o.

https://x.com/hey_madni/status/179072...

That’s your latest AInsights, making sense of GPT-4o to save you time and help spark new ideas at work!

Please subscribe to AInsights, here.

If you’d like to join my master mailing list for news and events, please follow, a Quantum of Solis.

The post AInsights: Exploring OpenAI’s new Flagship Generative AI Model GPT-4o and What It Means to You appeared first on Brian Solis.

May 15, 2024

AInsights: Microsoft Developing Its Own AI Model with MAI-1; Serves as a Competitive Lesson for All Businesses

AInsights: Your executive-level insights on the latest in generative AI

Microsoft is perhaps building one of the most prolific genAI investment portfolios of any company out there. The company is a deep investor ($10 billion) in OpenAI. OpenAI not only powers ChatGPT, but also Microsoft’s Copilot. Microsoft recently invested in French startup Mistral. The company also just pseudo-acquired Inflection AI, appointing co-founder Mustafa Suleyman as its CEO of consumer AI.

Compare this to Apple, one of the most innovative companies in the world, which let the genAI revolution pass it by. In fact, after exploring ChatGPT, Apple executives believe Siri feels antiquated in comparison, warranting an urgent M&A or partnership strategy to compete.

Now, Microsoft is reportedly working on a new large-scale AI language model called MAI-1 according to The Information. The effort is led by Suleyman. Just as a reminder, Microsoft acquired Inflection’s IP rights and hired most of its staff, including Suleyman, for $650 million in March.

MAI-1 is Microsoft’s first large-scale in-house AI model, marking a departure from its previous reliance on OpenAI’s models. With around 500 billion parameters, it is designed to compete with industry leaders like OpenAI’s GPT-4 (over 1 trillion parameters) and Meta’s Llama 2 models (up to 70 billion parameters).

The pressure may be in part due to its position in the market as less than self-reliant. This comes after internal emails revealed concerns about Microsoft’s lack of progress in AI compared to competitors like Google and OpenAI.

For executives, Microsoft’s move highlights the strategic importance of investing in AI and the need to stay competitive in this rapidly evolving space. It also emphasizes the value of acquiring top AI talent and leveraging strategic acquisitions to accelerate in-house AI development. As the AI arms race continues, executives must carefully navigate the ethical and legal challenges while capitalizing on the opportunities presented by these transformative technologies.

These are lessons for all companies leaning on AI to accelerate business transformation and growth. If Apple and Microsoft are pressured to innovate, imagine where your company is on the spectrum of evolution.

The post AInsights: Microsoft Developing Its Own AI Model with MAI-1; Serves as a Competitive Lesson for All Businesses appeared first on Brian Solis.

May 13, 2024

X: The Experience When Business Meets Design Named to Best Customer Experience Books of All Time List

When Brian Solis published X: The Experience When Business Meets Design, it was lauded as one of the best books to capture the zeitgeist of the digital revolution while laying out a practical blueprint to lead the future of modern customer experience design and innovation.

Even today, the book still leads the way forward, especially in an era of generative AI. And as testament to its enduring relevance and value, BookAuthority just announced X as one of the “Best Customer Experience Books of All Time!”

Additionally, X was and still is recognized for its stunning design, which was not common among business books at the time. In fact, X and its predecessor, WTF: What’s the Future of Business – Changing the Way Businesses Create Experiences, sparked a trend for business book design to earn a place on bookshelves, in lobbies, and on coffee tables.

Here are a few other interesting facts you may not know about X…

Brian believed that for X to be taken seriously as a book about experience innovation, the book itself had to be an experience.

Because the book explores how digital and mobile are transforming customer expectations and, as a result, customer journeys, the book was designed as an “analog app.” It doesn’t feature a traditional table of contents. It is organized by contextual themes vs. a linear format. And the balance of written and visual narratives reflects experiences on your favorite apps vs. traditional books. Brian’s inspiration was to create a book that today’s digital-first high school students could read intuitively.

The shape of X is modeled after an iPad because a smartphone was too small.

X was designed in partnership between Brian and the amazing team at Mekanism.

X was supposed to follow Brian’s second solo book with Wiley, The End of Business as Usual. Instead, the publishers felt that the gap between themes was too great. Brian wrote WTF to bridge the gap. Originally intended as an e-book only, Brian used the exercise to experiment with designing a print book as an analog app. This helped set the stage for X.

The “X” on the cover is real. It’s layers of etched glass. To give it the lighting effects, the X was placed on its back on top of a TV screen, which was illuminated to create a spectrum of colors. The video below is the result!

If you read X, please share with your networks on X, LinkedIn, Facebook, Threads, and TikTok! Please use this link so it references the list. Thank you!

Invite Brian as your next keynote speaker!

The post X: The Experience When Business Meets Design Named to Best Customer Experience Books of All Time List appeared first on Brian Solis.

AInsights: Everything You Need to Know About Google Gemini 1.5; Surpasses Anthropic, OpenAI, In Performance, For Now

AInsights: Your executive-level insights on the latest in generative AI

Google is making up for lost time in the AI race by following the age-old Silicon Valley mantra: move fast and break things.

Google recently released Gemini 1.5, and it’s next level! This release dethrones Anthropic’s brief reign as leading foundation model. But by the time you read this, OpenAI will also have made an announcement about ChatGPT improvements. It’s now become a matter of perpetual leapfrogging, which benefits us as users, but makes it difficult to keep up! Note: I’ll follow up with a post about ChatGPT/DALL-E updates.

Here’s why it matters…

1 million tokens: It’s funny. I picture Dr. Evil raising his pinky to his mouth as he says, “1 million tokens.” Gemini 1.5 boasts a dramatically increased context window with the ability to process up to 1 million tokens. Think of tokens as inputs, i.e. words or parts of words, in a single context window. This is a massive increase from previous models like Gemini 1.0 (32k tokens) and GPT-4 (128k tokens). It also surpasses Anthropic’s context record at 200,000 tokens.

A 1 million token context window allows Gemini 1.5 to understand and process huge amounts of data. This unlocks multimodal super-prompting and higher caliber outputs. A 1 million token context window can support extremely long books, documents, scripts, codebases, video/audio files, specifically:

1 hour of video

11 hours of audio

30,000 lines of code

700,000 words of text
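Here’s a quick way to put those numbers to work. The Python sketch below uses only the figures quoted in this post (the context window sizes and the 700,000-words-per-million-tokens ratio) to estimate which models could take a document in a single pass. Treating that ratio as a universal words-to-tokens conversion is my simplifying assumption; real token counts depend on each model’s tokenizer, and the model labels are just dictionary keys for this example.

```python
# Context window sizes in tokens, as quoted in this post.
CONTEXT_WINDOWS = {
    "Gemini 1.0": 32_000,
    "GPT-4": 128_000,
    "Anthropic": 200_000,
    "Gemini 1.5": 1_000_000,
}

# From the post: roughly 700,000 words fit in 1 million tokens.
WORDS_PER_MILLION_TOKENS = 700_000


def estimate_tokens(word_count: int) -> int:
    """Rough token estimate for a text of word_count words, rounded up."""
    # Negating before and after floor division gives integer ceiling division.
    return -(-word_count * 1_000_000 // WORDS_PER_MILLION_TOKENS)


def models_that_fit(word_count: int) -> list:
    """Names of models whose quoted context window can hold the whole text."""
    needed = estimate_tokens(word_count)
    return [name for name, window in CONTEXT_WINDOWS.items() if window >= needed]


if __name__ == "__main__":
    # A 100,000-word book needs about 142,858 tokens under this estimate.
    print(estimate_tokens(100_000), models_that_fit(100_000))
```

Under these assumptions, a 100,000-word book is already too large for a 128k window, which is the practical difference a 1 million token context makes.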

https://twitter.com/briansolis/status...

This long context capability enables entirely new use cases that were not possible before, like analyzing full books, long documents, or videos in their entirety.

Chrome Support: With Perplexity creeping into Google’s long-dominant grasp on internet search (more about the future of search here), Google is at least integrating Gemini prompting directly in the Chrome browser. Here’s how to do it:

https://twitter.com/briansolis/status...

More countries, more languages supported: Gemini 1.5 is available in 100 additional countries and now offers support for 9 additional languages. In addition to English, Japanese, and Korean, supported languages include Arabic, Bengali, Bulgarian, Chinese (simplified and traditional), Croatian, Czech, Danish, Dutch, Estonian, Finnish, French, German, Greek, Hebrew, Hindi, Hungarian, Indonesian, Italian, Latvian, Lithuanian, Norwegian, Polish, Portuguese, Romanian, Russian, Serbian, Slovak, Slovenian, Spanish, Swahili, Swedish, Thai, Turkish, Ukrainian, and Vietnamese.

Google has put Gemini 1.5 through rigorous safety evaluations to analyze potential risks and harms before release. Novel safety testing techniques were developed specifically for 1.5’s long context capabilities.

That’s your AInsights in a snapshot to help you make sense of Google’s latest genAI news.

Please subscribe to AInsights, here.

If you’d like to join my master mailing list for news and events, please follow, a Quantum of Solis.

The post AInsights: Everything You Need to Know About Google Gemini 1.5; Surpasses Anthropic, OpenAI, In Performance, For Now appeared first on Brian Solis.