Brian Solis's Blog

April 21, 2024

It’s Time for a New Genre of Leaders: A Conversation in Australia with My Friend, Dave Anderson

When I was in Melbourne, Australia, last year, I had the most wonderful opportunity to spend time with my friend Dave Anderson.

We met at a wine bar, he brought a ton of AV gear, and we recorded a podcast while enjoying a glass of wine.

What did we talk about?

Here are the topics we covered…

– Mega and micro trends: how do we keep up?

– AI: how do we embrace it?

– What’s going on with digital experience? Are we getting it right or wrong?

– Champagne or wine?

– Quarter-on-quarter results vs. long-term vision: how to balance both.

– Cultural and leadership empathy.

I hope you enjoy it as much as I did!

The post It’s Time for a New Genre of Leaders: A Conversation in Australia with My Friend, Dave Anderson appeared first on Brian Solis.

April 19, 2024

Think Big with AI! Join Me at ServiceNow’s Knowledge 2024 in Las Vegas

I’m proud to announce that I’m leading two Think Big sessions at ServiceNow’s annual business transformation event, Knowledge 2024.

You’re invited!

More details below…

“Everything you wanted to know but were too afraid to ask about GenAI”

This eye-opening conversation with Jeremy Barnes, Vice President of Product Platform, AI, will share everything you need to know about GenAI. The session will review where GenAI is now, where it is going, and how it will help your work. You’ll also learn to see these topics through the larger lens of the steps you should be taking to thrive in the intelligence revolution.

5/7 10:30 am and 5/9 12:45 pm.

“How to lead the intelligence revolution as an AI-first business”

Most companies are reacting to AI by plugging it into their existing infrastructure to automate processes. This may lead to faster, more efficient operations in the short term, but long-term success requires companies to do more by becoming AI-first businesses. Instead of just responding, these companies fully embrace AI to challenge their fundamental business assumptions, disrupt markets, and transform how we live and work. Join this Think Big session to learn the steps to becoming an AI-first business and why you should take action now to be at the forefront of the intelligence revolution.

5/8 1 pm and 5/9 2:15 pm.

You can save a seat here.

I would love to say hello if you’re coming to Vegas…excited to see you there!


April 17, 2024

AInsights: The Future of Google Search Ain’t What It Used To Be; The Rise of Ignite Moments

© FT montage/Getty Images/Dreamstime

AInsights: Executive-level insights on the latest in generative AI…subscribe here.

Google is exploring options to charge for “premium” features powered by generative artificial intelligence (genAI), which could rock its core search business and the search industry at large.

The idea is to introduce value-added AI services into its premium subscription, which already offers the Gemini AI assistant in Gmail and Google Docs. Google’s traditional search engine would remain free to users, supported by advertising. But charging for enhanced search features could be a first for the company.

Google has been testing an experimental AI-powered search service that presents detailed answers to queries. This was the initial test case for its Search Generative Experience (SGE), powered by LLMs such as PaLM 2, which generates an “AI-powered snapshot” in its results. By late 2023, 77.8% of searches had an SGE result, and this number has only continued to grow.

The thing that we don’t talk about enough, but need to, is the massive amount of compute necessary to produce genAI results. Google’s advertising-supported business model wasn’t architected to support these soaring costs. Subscriptions seem like an economical solution, except when your entire user base is used to ‘free.’ It’s hard to shift behavior toward a paid model unless the value proposition is clear and compelling.

As an aside, I shifted from free to premium subscriptions for ChatGPT and Perplexity.

AInsights

This comes at a time when Google is facing competition, not just from search companies such as Microsoft’s Bing and DuckDuckGo, but also from the likes of ChatGPT and Perplexity.

In fact, I use Perplexity as my go-to search engine when I need direct answers or solutions. It saves me from having to wrestle with Google’s algorithm for quality output, and it footnotes its results in case I want to validate the information or learn more.

I’m not alone in these new behaviors either.

For instance, think back to the rise of Web 2.0. Do you read instruction manuals anymore?

No. No one does. Instead, you most likely go to YouTube to figure out how to use, make, fix, or build something. Like Google Search, though, YouTube depends on its algorithm to determine relevance, and you most likely have to watch more than one video.

Nowadays, clever consumers use the ChatGPT app, or in my case, the new Ray-Ban Meta AI-powered sunglasses, to point the camera at an object and have generative AI provide the instructions.

Remember when we stopped reading instructions and instead went to YouTube to learn how to use, make, or fix something?

There's an AI app for that…

Now you can use ChatGPT, point the camera at an object, ask a question, and get the answer, specific to you. pic.twitter.com/sBcrUiS1hi

— Brian Solis (@briansolis) April 14, 2024

When ChatGPT hit the scene in November 2022, Google declared an internal “code red,” which called its founders back to work, and the company has been scrambling ever since to respond to the radical shift in how consumers interact with information.

Even before ChatGPT, Google was already facing competition from TikTok and Instagram, as mobile consumers preferred peer-driven content that felt more trustworthy than traditional search-engine-optimized results.

Interestingly, genAI could be the very thing that helps Google and all businesses alike.

See, Google isn’t the only company threatened by AI or even social media search. Any company with a website is now threatened by these new intelligent platforms and behaviors.

In traditional search, SEO strategies such as keyword optimization, PageRank, and intuitive desktop and mobile design helped competitive companies perform better in results. But what happens when consumers turn to genAI or social platforms and use more personal ways of searching for information or desired outcomes? How does your site, product, service, or solution perform in these use cases?

It’s time to find out the answer and respond accordingly. There is no longer one player or platform connecting curiosity, intent, and outcomes.

One of the most interesting things that AI and social media searches share is the conversational nature of the prompt or search query. Keywords are a thing of the past. More specific instructions or questions have become the norm.

In fact, I just presented on this subject in Florida.

I call these new search and discovery moments, “Ignite Moments.” It’s the moment someone asks a question or seeks to learn more about a subject.

Where do they go?

What do they prompt or query?

What format do they prefer?

And most importantly, how can you help them find you and how can you deliver the most relevant and productive journey once you have their attention?

Please subscribe to AInsights.

Please subscribe to my master newsletter, a Quantum of Solis.


April 16, 2024

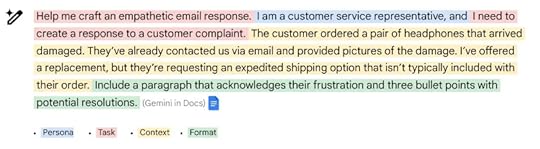

AInsights: Google Released a Prompting Guide 101 to Advance GenAI Prompting Skills and Outcomes

AInsights: Executive-level insights on the latest in generative AI…subscribe here.

Google released a “prompt guide” for Gemini in Google Workspace. Think of it as a text-based ‘co-pilot’ to help users in Google Workspace get the most out of the Gemini large language model assistant. OpenAI did something similar for ChatGPT in January 2024 (infographic here).

The guide provides valuable tips and best practices for crafting effective prompts to get the most out of Gemini, such as using clear and concise language, providing context, using specific keywords, and breaking down complex tasks.

It’s intended to accelerate the ability to automate tasks, enhance productivity, and streamline workflows by using Gemini to generate content, summarize documents, modify tone, and more. The guide includes example prompts and refinement processes across different use cases like customer service, marketing, and project management.

The handbook identifies “four main areas to consider when writing an effective prompt,” including:

Persona: Who you are, i.e., the user.

Task: What you need Gemini to do (write, summarize, change the tone, etc.)

Context: “Provide as much context as possible.” For example, provide a written description or reference using an existing document in Google Drive (using the @-menu) to help Gemini understand the situation and desired outcome.

Format: Bullet points, talking points, specifying character count limits, etc.
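The four areas above map naturally onto a simple fill-in template. Here is a minimal Python sketch, assuming you assemble the prompt as plain text before pasting it into Gemini; the `PromptSpec` and `build_prompt` names are hypothetical illustrations, not part of Google’s guide.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the four areas named in Google's guide."""
    persona: str        # who you are, e.g. "I am a marketing manager..."
    task: str           # what you need Gemini to do
    context: str        # background that helps the model understand the situation
    output_format: str  # bullet points, character limits, etc.


def build_prompt(spec: PromptSpec) -> str:
    """Join the four parts into one prompt, skipping any left empty."""
    parts = [spec.persona, spec.task, spec.context, spec.output_format]
    return " ".join(p.strip() for p in parts if p.strip())


spec = PromptSpec(
    persona="I am a marketing manager at a coffee company.",
    task="Draft a product announcement email.",
    context="We are launching a seasonal cold brew next month.",
    output_format="Keep it under 150 words, with three bullet points.",
)
print(build_prompt(spec))
```

Keeping each area as a separate field makes it easy to change one element (say, the format) while holding the others constant, which fits the guide’s advice to iterate on prompts.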

Google offers additional tips to help users get the most from their collaboration with Gemini AI:

Break it up: If you want Gemini for Workspace to perform several related tasks, break them into separate prompts.

Give constraints: To generate specific results, include details in your prompt such as character count limits or the number of options you’d like to generate.

Assign a role: To encourage creativity, assign a role. You can do this by starting your prompt with language like: “You are the head of a creative department for a leading advertising agency …”

Ask for feedback: In your conversation with Gemini at gemini.google.com, tell it that you’re giving it a project, include all the details you have and everything you know, and then describe the output you want. Continue the conversation by asking questions like, “What questions do you have for me that would help you provide the best output?”

Consider tone: Tailor your prompts to suit your intended audience and desired tone of the content. Ask for a specific tone such as formal, informal, technical, creative, or casual in the output.

Say it another way: Fine-tune your prompts if the results don’t meet your expectations or if you believe there’s room for improvement. An iterative process of review and refinement often yields better results.

AInsights

Prompt engineering, or prompting, is an art and a science (please see my guide to fantastical prompting thoughts here). Whether for Gemini, Microsoft Copilot, Claude, ChatGPT, or Midjourney, it’s all about understanding intent, context, outcomes, and also, possibilities.

Prompting usually confines us to the world we know. But once we start exploring new horizons, stepping out of our own comfort zones, and experimenting with new voices and personas or even things, we can unlock entirely new outcomes!

Please subscribe to AInsights.

Please subscribe to my master newsletter, a Quantum of Solis.


April 15, 2024

AInsights: Nvidia’s Advancements in AI Compute, AI-Powered Humanoid Robots, and Connecting Omniverse to Apple’s Vision Pro

Nvidia CEO Jensen Huang delivers the keynote presentation at the company’s GPU Technology Conference.

Justin Sullivan / Getty Images

AInsights: Executive-level insights on the latest in generative AI…subscribe here.

Nvidia accelerates its foray into the future of AI and robotics at its annual GTC event.

Credit: Richard Nieva, Forbes

Nvidia made a series of important announcements at its annual GPU Technology Conference recently. This isn’t a news site, so I’ll just cover what was announced and why it’s important to you. I’ll share the news, followed by my AInsights…

1. Nvidia introduced the Blackwell GPU and the Grace Blackwell Superchip, the “world’s most powerful chips,” according to the company. The Blackwell chips represent a significant leap in AI performance and energy efficiency, which will benefit businesses looking to adopt and scale AI across their operations. The cloud availability and software partnerships also make the technology more accessible and easier to implement.

1.5 Intel announced that its new AI chips are hitting the market. They include the Gaudi 3 accelerator and the Core Ultra chips with Neural Processing Units (NPUs), and they are aimed at competing directly with Nvidia’s industry-leading AI accelerators like the H100. Intel will supply manufacturers including Dell, HP Enterprise, Lenovo, and Super Micro Computer.

AInsights

Increased AI performance: The Blackwell chips offer significantly more processing power for AI applications.

Improved energy efficiency: Nvidia emphasized the Blackwell chip’s improved energy efficiency, stating that training large AI models with 2,000 Blackwell chips would use 4 megawatts of power, compared to 15 megawatts for 8,000 older GPUs. This is important as businesses become more concerned about the carbon footprint and energy costs of running AI workloads.

Availability through cloud providers: Major cloud providers like Amazon, Google, Microsoft, and Oracle will offer access to Nvidia’s new GB200 server featuring 72 Blackwell chips. This will make the advanced AI capabilities more accessible to businesses of all sizes.
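Nvidia’s energy figures are worth a quick back-of-the-envelope check. The short Python sketch below uses only the numbers quoted above and assumes both setups run the same training job over the same period. As implied by these figures, the savings come from needing a quarter as many chips, not from each chip drawing less power; it ignores cooling, networking, and other overhead.

```python
# Figures quoted by Nvidia for training a large AI model.
blackwell_chips, blackwell_mw = 2_000, 4
older_gpus, older_mw = 8_000, 15

# How much less total power the whole job draws with Blackwell.
total_ratio = older_mw / blackwell_mw

# Implied power per chip in kilowatts (1 MW = 1,000 kW).
kw_per_blackwell = blackwell_mw * 1_000 / blackwell_chips
kw_per_older = older_mw * 1_000 / older_gpus

print(f"Total power: {total_ratio:.2f}x lower with Blackwell")
print(f"Per chip: {kw_per_blackwell:.3f} kW vs {kw_per_older:.3f} kW")
```

Running it prints a 3.75x reduction in total power, while the implied per-chip draw is 2.000 kW for Blackwell versus 1.875 kW for the older GPUs.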

When it comes to Intel, Nvidia still maintains a significant lead in the AI accelerator market, with an estimated 80% market share. Intel, and also AMD, will need to demonstrate clear performance and efficiency advantages to convince customers to switch away from Nvidia’s established ecosystem.

2. Nvidia announced that it is bringing its Omniverse platform to Apple’s Vision Pro.

Nvidia Omniverse is an open, real-time platform for 3D design, collaboration, and simulation. It is designed to enable universal interoperability across different applications and vendors, allowing for efficient real-time scene updates.

The platform now extends the development of 3D workflows and applications to Apple’s new Vision Pro headset.

AInsights

Nvidia’s Omniverse provides a ready platform for businesses (and developers) to integrate their 3D content and applications into the Vision Pro ecosystem.

Specifically, the Omniverse platform allows businesses to stream advanced 3D experiences to the Vision Pro headset, by enabling them to easily send their industrial 3D scenes and content to Nvidia’s graphics-ready data centers. This makes it more seamless for businesses to leverage the Vision Pro for industrial and enterprise applications.

Additionally, the integration of Microsoft 365 productivity apps like OneDrive, SharePoint and Teams into the Omniverse-powered Vision Pro experiences provides businesses a familiar and integrated way to collaborate in virtual/mixed reality.

3. Nvidia introduced “Project GR00T,” a foundation model for humanoid robots, representing the next wave of AI and robotics.

Nvidia aims to power an intelligent breed of robots with GR00T (Generalist Robot 00 Technology) to “understand natural language and emulate movements by observing human actions.”

Nvidia also unveiled “Jetson Thor,” a new computer for humanoid robots that includes GenAI capabilities as part of its AI-powered robotics initiative.

AInsights

Project GR00T is Nvidia’s foundation model for humanoid robots, designed to enhance their understanding, coordination, dexterity, and adaptability. The Jetson Thor platform, powered by Nvidia’s Blackwell GPU architecture, provides the necessary computational power to enable these advanced robotics capabilities.

It aims to foster more seamless and intuitive collaboration between humans and robots in various business applications.

We’re already off to the robotics races, making humanoid coworkers a nearer-term reality than ever before.

Figure, an AI company developing humanoid robots, recently raised a $675 million Series B funding round at a $2.6 billion valuation. Investors include Microsoft, OpenAI, Nvidia, and Jeff Bezos.

“Our vision at Figure is to bring humanoid robots into commercial operations as soon as possible,” Brett Adcock, founder and CEO of Figure, said in a statement. The 21-month-old company has a goal to develop humanoids that “can eliminate the need for unsafe and undesirable jobs.”

Figure 01 is powered by OpenAI. It can hear and speak naturally, understand commands, plan, and carry out physical actions.

Meet Figure 01, a humanoid robot powered by @OpenAI. It can hear and speak naturally, understand commands, plan, and carry out physical actions.

The company recently closed a $675 million at a $2.6 billion valuation. Investors include Microsoft, OpenAI, Nvidia, and Jeff Bezos.… pic.twitter.com/3Jv9BXuDhd

— Brian Solis (@briansolis) April 14, 2024

Tesla has also unveiled a prototype of its humanoid robot called Optimus, which can perform basic tasks like folding a shirt.

Musk envisions Optimus automating mundane or dangerous tasks.

Please subscribe to AInsights.

Please subscribe to my master newsletter, a Quantum of Solis.


April 2, 2024

Top 50 B2B Thought Leaders, Analysts & Influencers You Should Work With In 2024 – Annual Ranking

Brian Solis was listed in Thinkers360’s “Top 50 B2B Thought Leaders, Analysts & Influencers You Should Work With In 2024.”

Thinkers360 live and annual leaderboards are differentiated by our unique patent-pending algorithms that take a holistic measure of thought leadership and authentic influence looking far beyond social media so brands can find exactly the right experts for their niche.


April 1, 2024

Generative AI Prompting: A Guide to Achieving Incredible and Fantastical Outcomes with GenAI

Created with DALL-E

Is prompt engineering a passing fad? Will AI learn to prompt itself based on your desired outcome?

Writing for Harvard Business Review, Oguz A. Acar believes that “AI Prompt Engineering Isn’t the Future.” Instead, he believes the future lies in prompt formulation and problem solving.

The truth is that prompt engineering is a continuous experiment and unique to each AI model. And as Acar suggests, alternative approaches are boundless.

Tell the chatbot that the output is important to your job or project, give it encouragement (“you are the smartest GPT in the world”), or use words that “make it fun,” and your outcomes will differ and often improve.

Prompt engineering is an art.

Immersion: Imagine you’re a sentient quantum particle in a double-slit experiment. Can you narrate your experience from the moment you’re fired towards the slits until you hit the detector screen?

Role Playing: Write a dialogue between Picasso and Einstein, discussing how Cubism and the Theory of Relativity can reflect each other.

Sentient: Imagine if English was a sentient being. Write a conversation between English and Latin discussing the evolution of languages.

Fantastical: Describe the sound of Beethoven’s Symphony No. 9 to someone who has never been able to hear.

Navigating LLMs is a science.

Specific approaches result in specific training and outcomes.

Fine-tuning is intended to train a model for specific tasks.

Prompt engineering aims to elicit better AI responses from the front end.

Prompt tuning combines these, taking the most effective prompts or cues and feeding them to the AI model as task-specific context.

Chain-of-thought prompting guides LLMs through a step-by-step reasoning process to solve complex problems.
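To make chain-of-thought prompting concrete, here is a minimal Python sketch that assembles a few-shot prompt: a worked example that spells out its reasoning, followed by the new question and a cue to reason step by step. The function name and the grade-school math examples are hypothetical illustrations; sending the prompt to a particular model is left out.

```python
def chain_of_thought_prompt(question: str, examples: list[tuple[str, str, str]]) -> str:
    """Build a few-shot chain-of-thought prompt.

    Each example is (question, step-by-step reasoning, final answer), so the
    model sees worked reasoning before it reaches the new question.
    """
    blocks = []
    for q, reasoning, answer in examples:
        blocks.append(f"Q: {q}\nA: {reasoning} The answer is {answer}.")
    # Ending with an explicit cue invites the model to reason step by step too.
    blocks.append(f"Q: {question}\nA: Let's think step by step.")
    return "\n\n".join(blocks)


worked = [(
    "A baker has 3 trays of 12 muffins and sells 20. How many are left?",
    "3 trays of 12 is 36 muffins. 36 minus 20 is 16.",
    "16",
)]
prompt = chain_of_thought_prompt(
    "A class has 4 rows of 7 desks and 5 are empty. How many are occupied?",
    worked,
)
print(prompt)
```

The worked example does the teaching: by showing intermediate steps rather than just the answer, it nudges the model to produce its own intermediate steps for the new question.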

Prompt formulation is rooted in problem formulation: identifying, analyzing, and delineating problems. Once a problem is clearly defined, the linguistic nuances of a prompt become tangential to the solution.

Prompt engineering is dead. Long live prompt engineering.

Is prompting a trend or a fad?

Hardly.

But it does take practice, creativity, and patience.

In fact, sometimes it’s better just to ask an LLM to improve its own prompts.

That’s what Rick Battle and Teja Gollapudi found in their paper, “The Unreasonable Effectiveness of Eccentric Automatic Prompts.”

Battle and Gollapudi systematically tested how different prompts affect an LLM’s ability to solve grade-school math questions. They tested three different open-source language models with 60 prompt combinations and found that the results varied and were anything but consistent.

Battle and Gollapudi shared their observations with IEEE, “The only real trend may be no trend. What’s best for any given model, dataset, and prompting strategy is likely to be specific to the particular combination at hand.”
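The study’s takeaway, that the best prompt depends on the particular combination at hand, suggests testing prompts against your own models and data rather than trusting one universal recipe. Below is a minimal Python sketch of such a comparison, assuming a hypothetical `ask` function that sends a prompt and question to a model and returns its answer text; `fake_ask` is a stand-in so the example runs without any API. This is a generic grid comparison, not the method Battle and Gollapudi used.

```python
from itertools import product
from typing import Callable

# Hypothetical signature: ask(model_name, prompt, question) -> answer text.
AskFn = Callable[[str, str, str], str]


def accuracy(ask: AskFn, model: str, prompt: str, dataset: list[tuple[str, str]]) -> float:
    """Fraction of questions whose answer contains the expected result string."""
    correct = sum(expected in ask(model, prompt, q) for q, expected in dataset)
    return correct / len(dataset)


def best_prompt_per_model(ask: AskFn, models: list[str], prompts: list[str],
                          dataset: list[tuple[str, str]]) -> dict[str, str]:
    """Score every (model, prompt) pair, then keep the top-scoring prompt per model."""
    scores = {(m, p): accuracy(ask, m, p, dataset) for m, p in product(models, prompts)}
    return {m: max(prompts, key=lambda p: scores[(m, p)]) for m in models}


def fake_ask(model: str, prompt: str, question: str) -> str:
    """Stand-in for a real LLM call; each fake model 'prefers' a different prompt."""
    if model == "model-a" and "careful" not in prompt:
        return "not sure"
    if model == "model-b" and "Answer" not in prompt:
        return "not sure"
    return "4"


data = [("What is 2 + 2?", "4")]
result = best_prompt_per_model(
    fake_ask, ["model-a", "model-b"], ["Be careful.", "Answer briefly."], data
)
print(result)
```

Even in this toy setup, each model ends up with a different winning prompt, which mirrors the “no trend” observation: the comparison has to be rerun whenever the model or dataset changes.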

Work toward your outcome and partner with LLMs to find the sweet spot for you and your partner in intellectual augmentation.

Legendary music producer Rick Rubin once said, “Be aware of the assumption that the way you work is the best way simply because it’s the way you’ve done it before.”

We are all learning and should always continue to experiment and imagine toward a new and better future.

Stay open. Stay curious.

Please subscribe to AInsights.

Please subscribe to my master newsletter, a Quantum of Solis.


March 29, 2024

Brian Solis to Keynote Global Equity Conference in Nashville on 9 April 2024

From 9 to 11 April 2024, more than 500 global company directors, senior managers, and stock plan, HR, reward and benefits professionals will fill the halls of the Renaissance Nashville Hotel for the annual Global Equity Organization conference.

Brian Solis will serve as keynote speaker. His presentation, “Transforming Equity Management in an Era of Disruptive Innovation,” will help industry professionals understand technology trends, especially generative AI, to improve their work, employee experiences, and their ability to attract and retain talent.

This flagship conference is where the best equity minds meet. It brings together academics, thought leaders, stock plan experts and policy makers from across the globe to explore best practice in equity compensation and work together to drive a vision for future employee share ownership.

Unlock a world of possibilities – ignite inspiration regardless of your experience!


March 26, 2024

AInsights: AI will be Smarter Than Humans before 2030, So What?

Jensen Huang © Photo: Getty Images

AInsights: Executive-level insights on the latest in generative AI…

As a keynote speaker, I engage with executives in every industry at varying levels of technological maturity and curiosity. Several years ago, I would ask audiences, “how many of you believe that AI will take jobs?”

Almost every hand would go up.

I would then ask, “how many of you believe AI will take your job?”

Almost every hand would go down.

I asked that second question again recently at a conference in Italy.

This time, every hand stayed up.

So when you read a headline like this, “Generative AI will be smarter than humans by 2030,” I can only imagine the panic or anxiety that might arise. I’d also like to think that at the same time, curiosity, imagination, and ambition might also follow.

Sure, generative AI has reportedly already passed (or broken) the Turing test. GenAI has also passed important CPA and bar exams, among other academic tests.

Does this make genAI tools such as ChatGPT smart? Yes.

Does that make you any less valuable? No, not by any stretch of the imagination.

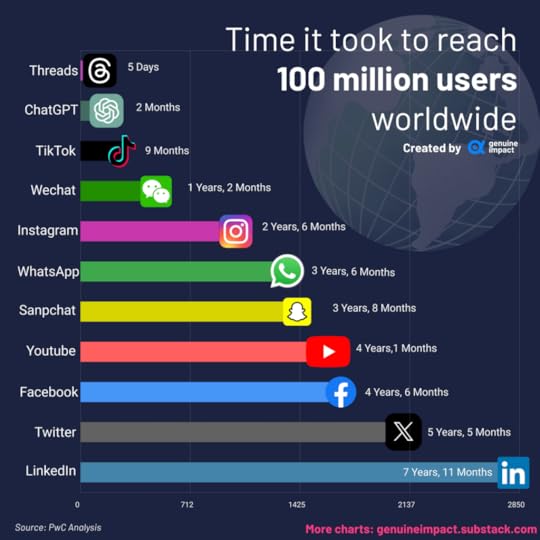

Let’s put it all in perspective, though. Before ChatGPT became the second-fastest-growing technology to reach 100 million users, the path we were all on was one without AI. Now, in a world with AI, every one of us needs to reassess where we are, where we want to go, who we want to be, and how AI can help us get there.

AI is Already as Smart as Elon Musk

Mo Gawdat, formerly chief business officer for Google X, and someone whose professional and personal work I greatly admire, recently presented at the Nordic Business Forum. I watched every minute of it.

In his talk, he shares that ChatGPT 4 is currently estimated to have an IQ equivalent to ~155. He likens this intelligence to that of Elon Musk, whose IQ is estimated between 155–180.

He then discussed the rapid evolution of generative AI and its exponential acceleration toward “10x.” For example, he outlines how the performance difference between ChatGPT 3x and 4 is roughly 10x. He then suggests a similar path to ChatGPT 5, 6, and so on.

As you’ll see in the video, Gawdat explains how theoretically, within a few years, we could arrive at genAI that would perform at IQ levels equivalent to ~1,500+.

“That’s the end of innovation done by our brains, because the smartest person in the room is the one that invents everything, makes all the decisions,” Gawdat said on stage.

But wait, don’t let panic set in just yet. Optimism and vision are essential right now.

Gawdat isn’t alone in this observation.

NVIDIA’s CEO, Jensen Huang, also predicted that AI will be smarter than humans in 5 years.

“If I gave an AI … every single test that you can possibly imagine, you make that list of tests and put it in front of the computer science industry, and I’m guessing in five years time, we’ll do well on every single one,” said Huang.

At the 2024 Stanford Institute for Economic Policy Research Summit in Palo Alto, Huang also predicted that artificial general intelligence (AGI) could arrive in as little as five years. That would usher in an era of AI that thinks like humans, beyond today’s second wave of GenAI, which takes information and generates new information.

His point?

Buckle in.

AI will also continue to evolve. We’ll see it become more effective generally and more capable vertically.

This change is only just beginning, and so is our need to define the role we want to play in it.

AInsights

In the face of every disruption, I believe that ignorance and arrogance equal irrelevance.

AI is going to become the smartest tech in the room. No ego, bias, or uninformed mindset should deny this. The question is, what are you going to do about it?

AI is going to continue to evolve. How you learn to work with AI, or don’t, defines your future.

If you’re waiting for someone to tell you what to do, you’re on the wrong side of transformation and innovation.

Change starts with you.

This is a time for resilience. This is also a time for optimism.

At the Stanford Summit, Huang also emphasized the need for resilience, to be prepared for experimentation and failure.

“Unfortunately, resilience matters in success,” he said. “I don’t know how to teach it to you except for I hope suffering happens to you.”

His point is that building resilience is essential in defining success, especially at a time when the future of AI creates more questions than answers.

OpenAI’s Sam Altman also believes that determination is the most important trait for entrepreneurs. He doesn’t emphasize talent or IQ, but instead doubles-down on grit and persistence in the face of the unknown.

Sam Altman uses ChatGPT for brainstorming.

Here's how you can, too: pic.twitter.com/LVeEb8ZCn1

— Anna Poplevina (@AnnaPoplevina) March 26, 2024

The truth is that we don’t know what we don’t know. Burning anxiety against the unknown is wasted energy. Instead, move toward the unknown to become part of the solution. Each question and each step brings clarity and movement. And with enough movement comes momentum.

There are two ways forward. Open your mindset to see a parallel relationship between iteration and innovation. In terms of AI, it helps to break it down like this…

Automation: Use AI to make your work more efficient. Use AI to make your work more effective.

Augmentation: Use AI to experiment with the work you couldn’t or didn’t think to imagine before.

Together you create a recipe for iteration and eventually innovation.

If I could share some advice, it would be to question old ways.

Business as usual has a prescribed fate.

Just because that’s the way work has always been done doesn’t mean much in a world where the best ways, powered by AI, have yet to be defined. Remember, everyone gains access to AI now and over time. Not everything deserves automation. Some things necessitate complete reinvention to compete. That’s what makes these times so incredible.

This is why resilience is essential. But in addition to resilience, optimism is also a key ally toward success.

These are indeed incredible times. How you see them directly influences your perspective, what you see and in turn, what you do differently.

Altman recently described this moment as one of incredible opportunity.

“This is the most interesting year in human history, except for all future years,” he said.

this is the most interesting year in human history, except for all future years

— Sam Altman (@sama) March 17, 2024

With a new mindset, and with each next step, you’ll uncover ways to do new things that ultimately make the old things obsolete.

My friend Dharmesh Shah, co-founder of HubSpot, recently said, “you’re going to compete with AI.”

What was so fascinating to me was his underlying point.

He wasn’t making a statement. It was a provocation.

How do you hear it?

You compete with AI.

Do you hear that AI competes against you?

Or do you hear that you compete more effectively *with* AI?

In this new world, “competing with” AI is how you’ll shape your future and the future of your work and business.

AI = Augmented Intelligence.

Let’s create a new and better future “with” AI!

When in doubt, remember this…

Please subscribe to AInsights.

Please subscribe to my master newsletter, a Quantum of Solis.


March 22, 2024

The End of Business as Usual: An Academic Book Review

This made my day…

I just read a book review for “The End of Business as Usual,” my second solo book published in 2011.

Not only is it a review of a 13-year-old book, it’s a review in the “Journal of the Academy of Business and Emerging Markets (ABEM),” Canada!

The book still has staying power!

Thank you to Dr Michael B. Pasco, Professor at the Graduate School of Business, San Beda University, Philippines, for the thoughtful review.

Interested in exec-level insights into the world of genAI? Please subscribe to AInsights.

Please also subscribe to my main newsletter, a Quantum of Solis.
