Brian Solis's Blog, page 12

August 31, 2024

Brian Solis Named Top AI Leader

RETHINK Retail named Brian Solis a “Top AI Leader” for 2024.

The 2024 Top AI Leaders in Retail by RETHINK Retail is a prestigious recognition that celebrates individuals and companies pioneering AI integration within the retail sector.

The list includes leaders from 10 distinguished categories: Retailers & Brands, Enterprise Tech, Growth Stage Tech, Startup Tech, Consultants & Analysts, Investors, Associations, R&D/Academia, Ethics & Compliance, and Thought Leaders.

AiR community members represent the foremost innovators and influencers driving AI advancements in retail. The selection is based on recommendations from industry experts and current AiR members.

Congratulations to the 2024 class of Top AI Leaders in Retail!

The post Brian Solis Named Top AI Leader appeared first on Brian Solis.

August 19, 2024

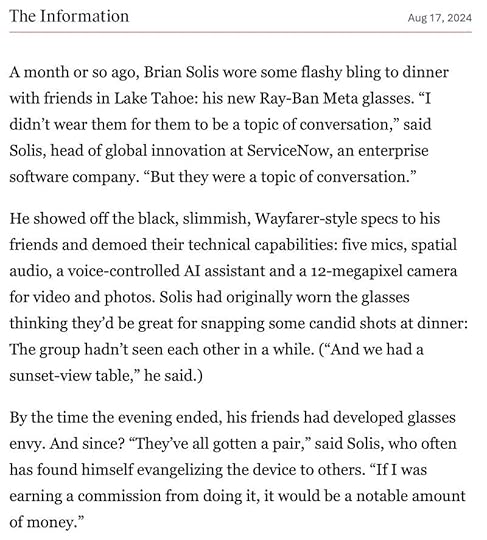

The Information Features Brian Solis’ Story About Life with AI-Powered Meta Ray-Ban Sunglasses

Art by Clark Miller, The Information

The Information’s Abram Brown interviewed Brian Solis, Head of Global Innovation at ServiceNow, about his experience as a vocal user of Meta and Ray-Ban’s new AI-powered smart sunglasses. As an early adopter, Brian has also worn the infamous Google Glass and Bose audio-only Frames in the past. While he skipped the first generation of the Meta Ray-Ban glasses, he jumped in on phase two because of the higher video and still image resolution, Spatial Audio and array of advanced mics, and most importantly, the integrated AI functionality.

Silicon Valley Eyes an Unexpected Summer It Item

A month or so ago, Brian Solis wore some flashy bling to dinner with friends in Lake Tahoe: his new Ray-Ban Meta glasses. “I didn’t wear them for them to be a topic of conversation,” said Solis, head of global innovation at ServiceNow, an enterprise software company. “But they were a topic of conversation.”

He showed off the black, slimmish, Wayfarer-style specs to his friends and demoed their technical capabilities: five mics, spatial audio, a voice-controlled AI assistant and a 12-megapixel camera for video and photos. Solis had originally worn the glasses thinking they’d be great for snapping some candid shots at dinner: The group hadn’t seen each other in a while. (“And we had a sunset-view table,” he said.)

By the time the evening ended, his friends had developed glasses envy. And since? “They’ve all gotten a pair,” said Solis, who often has found himself evangelizing the device to others. “If I was earning a commission from doing it, it would be a notable amount of money.”

Click here to read the full article (subscription required).

Brian Solis wearing Meta Ray-Ban Sunglasses in Lake Tahoe

The post The Information Features Brian Solis’ Story About Life with AI-Powered Meta Ray-Ban Sunglasses appeared first on Brian Solis.

August 12, 2024

AInsights: OpenAI Introduces SearchGPT and With It, The Rise of an AI Search Revolution

AInsights: Your executive-level insights making sense of the latest in generative AI…

First Perplexity introduced genAI users to the practice of intelligent search. Then Perplexity introduced Pages to reflect intelligent summaries of your research. Now OpenAI is joining the mix to introduce SearchGPT, a genAI-powered search engine designed to take on Google and other generative AI platforms.

SearchGPT leverages OpenAI’s generative AI language models, specifically from GPT-4, to provide more intuitive and conversational search experiences. Instead of just listing links, usually those gamed by search engine optimization (SEO), SearchGPT generates concise summaries and detailed answers, often with relevant links and citations directly embedded in the response.

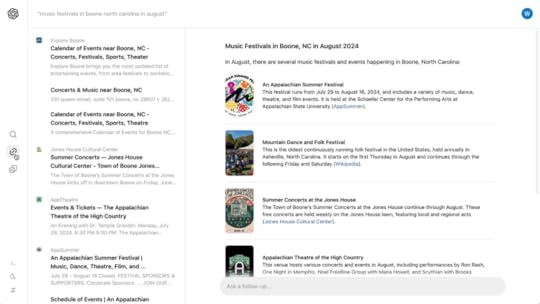

Let’s explore key features of SearchGPT…

User Experience

Ad-Free Interface: Unlike traditional search engines like Google and Bing, SearchGPT offers an ad-free user experience, which enhances usability by removing distractions.

Enhanced Search Interface: The interface features a larger search box and a three-column layout for easy navigation. Users can toggle between light and dark themes based on their preferences.

Visual Answers: SearchGPT includes a feature for “visual answers,” which provides AI-generated visuals to complement textual information, although specific details about this feature are still emerging.

Real-Time Information

SearchGPT combines real-time web data with AI to deliver up-to-date answers. This approach aims to reduce the effort required to find relevant information by providing clear and timely responses.

Follow-Up Questions

Users can ask follow-up questions in a conversational manner, with the search engine maintaining context from previous queries. This feature makes it easier to delve deeper into topics without starting new searches from scratch.

Publisher Partnerships

OpenAI has partnered with various publishers to ensure that content is properly attributed and linked back to original sources. This collaboration helps maintain a healthy ecosystem for content creators while providing users with credible information.

SearchGPT is still in its prototype phase, currently available to a limited group of 10,000 test users. OpenAI plans to gather feedback from these users and publishers to refine the tool further. The ultimate goal is to integrate the best features of SearchGPT into ChatGPT, enhancing its utility as a conversational AI assistant.

AInsights

SearchGPT, OpenAI’s new AI-powered search engine, presents significant implications for business and marketing executives.

Think about SEO and content strategies today. A large set of resources is dedicated to consumer (customer) search behavior. At one point, Google dominated search and the resulting customer journey. For years I was an advisor to Google around micro-moments and also served as a CMO strategist to help brands design customer experiences and journeys that were optimized for mobile devices. Since then, search has continued to evolve with YouTube, Instagram, TikTok, and now genAI evolving search behaviors and journeys.

I call today’s search opportunities “Ignite Moments.” And they can happen anywhere now, requiring brands to reverse-engineer behaviors and technology platforms to build dynamic, contextual, native, and intuitive journeys for customers at the point of engagement.

SEO and Content

Focus on Quality Over Quantity: SearchGPT’s emphasis on high-quality, well-structured content means that businesses need to focus on creating valuable and coherent content. This shift could penalize low-quality, hastily produced content, rewarding those who invest in thorough and meaningful content creation. Remember, Ignite Moments (more here).

Integrate Real-Time Data: The AI’s capability to access and integrate real-time web data means that SEO strategies must adapt to more dynamic and immediate content updates. This could involve more frequent content reviews and updates to stay relevant.

Marketing and Advertising

Clear Source Attribution: Like Perplexity, SearchGPT ensures that sources are clearly cited, which can drive traffic to original content creators. This transparency can enhance the credibility of information and improve trust among users.

Reduced Dependency on Ads: The ad-free nature of SearchGPT might challenge traditional advertising models. Businesses will need to explore new ways to reach their audience, possibly focusing more on organic search strategies and content marketing.

Challenges and Opportunities

Adoption and Adaptation: Convincing users to switch from established search engines like Google will be a challenge. However, businesses that adapt early to the new search paradigm can gain a competitive edge.

Operational Costs and Scalability: The high operational costs associated with AI-powered search engines could be a barrier. Businesses must weigh the benefits against the costs and consider how to scale their digital marketing efforts effectively.

Future Directions

Integration with ChatGPT: OpenAI plans to integrate the best features of SearchGPT into ChatGPT, potentially expanding its reach and utility. This integration could provide businesses with more comprehensive tools for customer interaction and support.

Local and E-commerce Information: SearchGPT’s ability to provide localized results and handle e-commerce queries can be leveraged by businesses to enhance their local SEO and online sales strategies.

SearchGPT represents a significant shift in the search landscape, offering both opportunities and challenges for business and marketing executives. By focusing on high-quality content, adapting to new SEO practices, and leveraging the AI’s advanced capabilities, businesses can position themselves to thrive in this evolving intelligent, digital environment.

“AI search is going to become one of the key ways that people navigate the internet, and it’s crucial, in these early days, that the technology is built in a way that values, respects, and protects journalism and publishers. We look forward to partnering with OpenAI in the process, and creating a new way for readers to discover The Atlantic.” – Nicholas Thompson, CEO of The Atlantic

Please subscribe to AInsights, here.

If you’d like to join my master mailing list for news and events, please follow, a Quantum of Solis.

The post AInsights: OpenAI Introduces SearchGPT and With It, The Rise of an AI Search Revolution appeared first on Brian Solis.

August 6, 2024

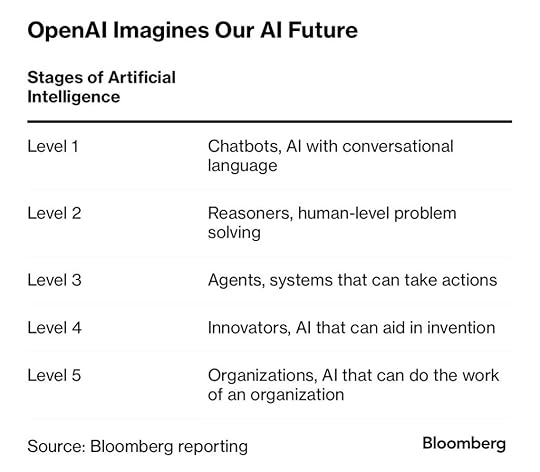

AInsights: OpenAI Defines Five Stages to Track Progress Toward Human-Level Intelligence

AInsights: Your executive-level insights making sense of the latest in generative AI…

OpenAI has outlined a five-level system to track the progress of artificial intelligence (AI) towards achieving human-level intelligence, also known as Artificial General Intelligence (AGI). An OpenAI spokesperson told Bloomberg that the company is close to level two, “Reasoners,” where AI can perform problem-solving at the level of a human with a doctorate degree but no access to tools.

How close is OpenAI to level 2?

Let’s take a step back to understand the difference between where we are today with generative AI and how it compares to artificial general intelligence (AGI).

Generative AI and Artificial General Intelligence (AGI) represent two distinct stages in the evolution of artificial intelligence, each with unique capabilities and limitations.

Generative AI is currently a powerful tool for specific tasks involving content creation and pattern recognition, widely used across various industries to enhance productivity and creativity. However, it lacks true understanding and adaptability.

AGI is a type of AI that could learn to accomplish any intellectual task that human beings or animals can perform, or surpass human capabilities in the majority of economically valuable tasks. It represents the future goal of AI research, aiming to create machines with human-like cognitive abilities capable of understanding, learning, and innovating across diverse domains. While promising transformative potential, AGI remains a theoretical pursuit with significant challenges to overcome.

OpenAI has, however, articulated the stages it sees on the path from genAI to AGI.

Let’s review a summary of each stage:

Level 1: Conversational AI (we are currently here)

At this initial stage, AI systems can engage in natural language conversations with humans. Examples include chatbots and virtual assistants like OpenAI’s ChatGPT. These systems can handle customer service interactions and provide basic assistance but are limited to language-based tasks and lack complex problem-solving abilities.

Level 2: Reasoners

The second stage involves AI systems capable of solving problems at the level of a human with a doctorate degree, but without access to external tools. These “Reasoners” are expected to excel in academic and professional fields, tackling complex problems independently. This marks a significant leap in AI’s cognitive abilities, enabling applications in research, medicine, and engineering.

Level 3: Agents

At this stage, AI systems, referred to as “Agents,” can autonomously perform tasks on behalf of users over an extended period. These systems can operate independently without constant human intervention, potentially revolutionizing business operations and efficiency by handling a variety of tasks across different domains.

Level 4: Innovators

The fourth level, known as “Innovators,” describes AI systems that can aid in developing new inventions. These systems can contribute to scientific discoveries and technological advancements by generating innovative solutions and ideas. This stage signifies AI’s ability to not just perform tasks but also to create and innovate.

Level 5: Organizations

The final stage envisions AI systems capable of performing the work of an entire organization. These “Organizations” can manage and execute all functions of a business, surpassing traditional human-based operations in terms of efficiency and productivity. This stage represents the pinnacle of AI development, where AI can autonomously run complex organizational structures.

OpenAI believes it is currently at Level 1 and is approaching Level 2. The timeline for achieving these advanced levels of AI varies, with estimates ranging from a few years to several decades. The progression through these stages highlights the incremental advancements in AI capabilities and the transformative potential of AGI.

Let’s revisit the question, how close is OpenAI to achieving level two, “Reasoners”?

The answer is a secret and appears to be codenamed “Project Strawberry.”

Project Strawberry aims to enhance AI’s reasoning capabilities, enabling models to plan ahead, navigate the internet autonomously, and perform deep research. The project builds on techniques like Stanford’s Self-Taught Reasoner (STaR), which allows AI models to iteratively create their own training data, thus improving their reasoning skills over time.

Current Capabilities and Demonstrations:

Recent demonstrations within OpenAI have shown promising strides towards human-like reasoning. For instance, internal tests have reportedly seen AI models scoring over 90% on challenging math tests, a significant indicator of advanced problem-solving abilities. These capabilities suggest that OpenAI is on the cusp of achieving the reasoning skills required for Level 2.

Challenges and Outlook:

Despite these advancements, the exact timeline for publicly releasing these capabilities remains, well, uncertain. From what I understand, the development process involves continuous fine-tuning and testing to ensure reliability and effectiveness. While there have been significant advancements, the leap from current capabilities to fully achieving Level 2 is still a work in progress.

I guess we’ll know when they’re ready for us to know.

AInsights

What do OpenAI’s five stages of artificial intelligence mean for business and technology executives?

For business and technology executives, OpenAI’s five stages of AI development present both opportunities and challenges. Embracing and strategically leveraging these advancements can lead to significant improvements in efficiency, innovation, and competitive advantage.

Stage 1: Chatbots

Customer Service: AI systems like ChatGPT and ServiceNow’s Now Assist are already enhancing customer service by providing 24/7 support, handling routine inquiries, and improving customer satisfaction.

Internal Productivity: Businesses are using chatbots for internal functions such as employee onboarding, IT support, and HR inquiries, streamlining operations and reducing costs.

Considerations:

User Experience: Investing in user-friendly interfaces and continuous improvement of chatbot capabilities is essential to maximize their effectiveness.

Stage 2: Reasoners

Advanced Problem-Solving: AI systems at this stage can solve complex problems at a level comparable to a human with a doctorate degree. This can revolutionize fields like research, finance, and engineering by providing expert-level insights and solutions without human intervention.

Strategic Decision-Making: Businesses can leverage these AI systems for strategic planning, risk assessment, and data analysis, enhancing decision-making processes.

Considerations:

Integration: Ensuring these advanced AI systems integrate seamlessly with existing business processes and tools is critical.

Ethical Use: Establishing guidelines for ethical use and addressing potential biases in AI decision-making is essential to maintain trust and compliance. Ethics also apply from this stage through to stage 5 in how employers upskill and augment workers with AI.

Organizational and business model innovation: Here and through every stage, C-suites will need to think forward to reimagine organizational and business model transformation to prepare for the coming autonomous enterprise.

Stage 3: Agents

Autonomous Operations: AI agents can perform tasks autonomously over extended periods, potentially transforming business operations by handling complex workflows without constant human supervision. This sets the stage for an autonomous, self-driving enterprise.

Operational Efficiency: These agents can optimize supply chains, manage logistics, and even handle customer relations autonomously, significantly improving efficiency and reducing operational costs.

Considerations:

Monitoring and Control: Implementing robust monitoring systems to oversee AI agents and ensure they are performing tasks correctly and ethically.

Adaptability: Ensuring AI agents can adapt to changing business environments and requirements is crucial for long-term success.

Stage 4: Innovators

Innovation and R&D: AI systems capable of innovation can contribute to new product development, scientific research, and technological advancements, potentially leading to breakthroughs in various industries.

Competitive Advantage: Businesses that harness innovative AI can gain a significant competitive edge by bringing new products and solutions to market faster.

Considerations:

Collaboration: Encouraging collaboration between AI systems and human experts to maximize innovative potential.

Intellectual Property: Managing intellectual property rights and ensuring that innovations generated by AI are protected and leveraged effectively.

Stage 5: Organizations

Complete Automation (the autonomous enterprise): AI systems at this stage can perform the work of an entire organization, from strategic planning to operational execution, potentially making traditional organizational structures obsolete.

Efficiency and Productivity: Stage 5 AI systems can operate with unparalleled efficiency, reducing costs and increasing productivity across all business functions.

Considerations:

Human Workforce: Addressing the implications for the human workforce, including potential job displacement and the need for reskilling and upskilling.

Governance and Ethics: Establishing governance frameworks to ensure AI systems operate ethically, transparently, and in alignment with organizational goals and societal values.

Understanding and preparing for each stage, now, is absolutely crucial for navigating the future landscape of AI-driven business transformation.

Please subscribe to AInsights, here.

If you’d like to join my master mailing list for news and events, please follow, a Quantum of Solis.

The post AInsights: OpenAI Defines Five Stages to Track Progress Toward Human-Level Intelligence appeared first on Brian Solis.

August 5, 2024

Brian Solis to Keynote the SmartRecruiters Hiring Success Conference in Amsterdam; Solis Will Explore Hiring Talent in an AI-First World

SmartRecruiters, the leading provider of enterprise hiring software, announced today that its Hiring Success Conference will take place in EMEA this fall. The EMEA conference will be held in Amsterdam on September 11th. This exclusive event has been designed for Talent Acquisition (TA) professionals to learn, network and engage with industry leaders.

Key topics include:

Future-Proofing Talent Acquisition: Embracing human-centered innovation in a digital world.

Case Studies & Success Stories: Leveraging AI, automation in hiring strategies, and success stories from leading companies.

Strategic Insights: A vision for the evolution of TA technology, the future of work, and skills-based hiring.

Technology & Analytics: Enhancing candidate experience, talent analytics, and compliance strategies.

Leadership & Innovation: The mission ahead for TA and HR leaders, humanizing TA, and onboarding new TA technology.

Brian Solis and Chris Riddell will be the keynote speakers, bringing their unparalleled expertise and visionary perspectives to the conferences.

Brian Solis – EMEA, Amsterdam (September 11th)

Brian Solis is a world-renowned digital analyst, anthropologist, and futurist. Brian is also the Head of Global Innovation at ServiceNow.

With a profound understanding of the evolving AI landscape, Brian’s keynote will explore how to navigate the evolution of culture, work, and technology to build talent acquisition strategies that will allow businesses to meet the future head-on.

Attendees will gain insights into how digital anthropology can inform the development of innovative hiring practices and create meaningful connections with candidates. Brian’s expertise will help TA professionals adapt to the changing nature of work and drive their organizations towards sustained success.

For more information about Brian Solis, visit briansolis.com.

The post Brian Solis to Keynote the SmartRecruiters Hiring Success Conference in Amsterdam; Solis Will Explore Hiring Talent in an AI-First World appeared first on Brian Solis.

August 1, 2024

Brian Solis Named Top Technology Influencer by Technology Magazine

Technology Magazine highlights the top 10 technology influencers and thought leaders driving innovation and guiding industry trends.

Amid a fast-moving technology landscape, staying ahead of trends is crucial for businesses and individuals alike. As innovations in AI, blockchain, quantum computing and other cutting-edge fields continue to reshape our world, the insights of leading tech visionaries become increasingly valuable.

This month, Technology Magazine spotlights 10 of the most influential voices in technology today – from tech founders to leading visionaries – exploring their key ideas and impact on the ever-changing technology ecosystem.

Brian Solis, Head of Global Innovation, ServiceNow

Location: California, United States

Sector: Analysis

Brian Solis, Head of Global Innovation at ServiceNow, is a digital anthropologist, eight-time best-selling author and renowned international keynote speaker. Forbes hails him as “one of the more creative and brilliant business minds of our time.” For over two decades, he has studied Digital Darwinism, exploring the impact of disruption on businesses, markets and society. His research spans digital transformation, customer experience, innovation and future trends. A prolific writer, Solis has published over 60 research papers, while his speaking engagements inspire audiences worldwide to shape the future.

The post Brian Solis Named Top Technology Influencer by Technology Magazine appeared first on Brian Solis.

July 22, 2024

Brian Solis’ Keynote Helps Leaders Reimagine Their Business in an AI-First World

Will Artificial Intelligence Outpace Human Genius?

Here’s a quick take from a recent keynote in Italy…

The truth is that no leader has experienced a technology like genAI before, one that performs tasks as well as or better than humans.

What’s clear is that we have now catapulted toward a post-digital transformation era. AI has forever changed the trajectory of business evolution, putting ‘business as usual’ in the rearview mirror.

Leaders now face an entirely new set of prospects and challenges that will test their creativity, resilience, and vision.

It’s time to reimagine your business in an AI-first world!

In this rousing keynote, world-renowned futurist and best-selling author Brian Solis will share why this is the moment for leaders to reimagine their organizations for an entirely new future. He will explore why automation is no longer the goal, but instead the norm, why augmentation will be the key to differentiation, and how leaders can forge the *mind shift* required to unlock a new future as an AI-first exponential growth business.

About Brian

Brian Solis is Head of Global Innovation at ServiceNow.

He is also a world-renowned keynote speaker, 8x Best-Selling Author, and Futurist who studies disruptive technologies and their impact on business and society.

To book him at your next event, please contact Zach Nadler: zach@vaynerspeakers.com

The post Brian Solis’ Keynote Helps Leaders Reimagine Their Business in an AI-First World appeared first on Brian Solis.

Unlocking Potential and the Future of Work: An Interview with Espresso Displays Part 1

While in Sydney delivering the locknote at the ServiceNow Sydney Summit, I had the opportunity to meet with my friends at Espresso Displays. We were able to turn my room at the W Sydney into a mini studio. Our conversation explored the future of work in two parts. Here’s part one…

Work is evolving faster than ever before. In an age of uncertainty, it’s even more important to rethink our relationship to work. Brian Solis, the Head of Global Innovation at ServiceNow, recently shared insights and perspectives on the next evolutions of productivity and experiences that can create a healthier approach to work.

In this interview, Solis delves into the shifting landscapes of work culture and technology, highlighting the importance of reimagining workspaces, tools, and mindsets.

Solis emphasizes that the best work is achieved not merely by being more productive but through meaningful and inspired experiences. He explores emerging technologies and trends, aiming to reverse engineer business operations and foster innovation.

According to Solis, work is no longer confined to traditional settings; it’s about finding inspiration anywhere, anytime.

“What excites me the most about this is the Playbook isn’t written,” Solis remarks. “Work itself can be anywhere, any place, anytime.” He sees this as an opportunity to unleash creativity and redefine the meaning of work. Rather than viewing work as a constraint, Solis encourages embracing uncertainty and leveraging it to shape the future.

Reflecting on the future of work, Solis emphasizes the need to rethink workspaces and tools. He advocates for spaces that inspire collaboration and creativity, whether physical or virtual. Solis himself travels extensively, relying on innovative tools like the espresso display to stay connected and productive on the go.

“Work reimagined, whether it’s at home or in the office, has to be the same,” Solis asserts. He envisions a world where technology empowers individuals to achieve the extraordinary, transcending the limitations of traditional work environments.

As we navigate the ever-changing landscape of work, Solis reminds us that the future is ours to shape. By embracing uncertainty and leveraging technology, we can unlock new possibilities and redefine the way we work.

Check out the full interview here:

The post Unlocking Potential and the Future of Work: An Interview with Espresso Displays Part 1 appeared first on Brian Solis.

June 25, 2024

Brian Solis to Keynote the 16th Annual Drucker Forum in Vienna

Brian Solis will keynote the prestigious Drucker Forum on November 14-15, 2024 in Vienna.

The theme for the event this year is “The Next Knowledge Work: Managing for New Levels of Value Creation and Innovation.”

RSVP here: For 5% off in June, use code: Online2024

Brian Solis

Head of Global Innovation, ServiceNow, Digital Anthropologist and Futurist, 8x Best-Selling Author

Futurist: Forbes heralded Brian Solis as “one of the more creative and brilliant business minds of our time.” He was recently named a “top futurist speaker,” by ReadWrite. ZDNet called him “one of the 21st century business world’s leading thinkers.”

Brian helps audiences understand the evolving, complex landscape of digital trends and how they impact our work, markets, and business over time. Whether it’s generative AI, AR/VR and spatial computing, web3, the metaverse, IoT, digital twins, or robotics and autonomous vehicles and systems, Brian humanizes each wave of emergent disruption to help audiences understand trends and see themselves productively shaping the future. He helps shift people’s mindsets from being overwhelmed, confused, or intimidated to moving forward with curiosity, excitement, and imagination.

Digital Anthropologist: Brian is one of the industry’s first digital anthropologists who helped define the practice and humanized the trends. He observes how technology changes people’s behavior as leaders, employees, or customers and seeks to understand their aspirations, their values, how they make decisions, and how they want to work.

Through this research, Brian helps audiences empathize with different generations (Gen-Y, Z, Alpha) and understand how technology creates a cross-generational superset of people with similar behaviors, interests, and aspirations. Brian coined these important groups as Generation-Connected and Generation-Novel.

ServiceNow: Currently, Brian serves as the Head of Global Innovation at ServiceNow. In his role, he sets the strategic direction and programming for ServiceNow’s Innovation and Executive Briefing Centers in Silicon Valley, New York, London, Paris, Sydney, and Singapore. Additionally, Brian designs and delivers engagements with customers to advise on digital and business innovation strategies. He writes for leading publications to help executives and customers understand technology and market trends and inspire business model innovation.

United Nations: Brian serves as a special adviser to the UN global innovation team. He develops workshops and keynotes that explore emergent trends and future scenarios, scenario strategies, skills development, and culture and leadership.

Salesforce: As the leader of Global Innovation at Salesforce, Brian gained a reputation as the “CxO Whisperer” because of his ability to translate shifting technology and market trends into actionable strategies. He also has a deep understanding of the challenges and opportunities facing companies and helps leaders think differently about current priorities and investments to future proof their organizations. Brian also partnered with leading brands to develop innovation pilots in service, L&D, and commerce, including applications that featured digital humans, 3D/immersive shopping and e-commerce powered by augmented reality.

Brian is personable, approachable, and engaging. As one event organizer recently put it, “Brian has a special gift to engage people and help everyone believe they can be part of change.” The Conference Board said it this way, “Brian is the futurist we all need now.”

He’s an eight-time, best-selling, and award-winning author and has published more than 60 research reports on the future of business, work, digital transformation and innovation across industries such as retail, healthcare, financial services, insurance, and public sector. He’s currently working on his ninth book due out later in 2023.

His blog, BrianSolis.com, is among the world’s leading online business resources, reaching his 800,000 followers. Brian also contributes to Fast Company, ZDNet, Forbes, Techonomy, CIO, and Singularity University.

The post Brian Solis to Keynote the 16th Annual Drucker Forum in Vienna appeared first on Brian Solis.

June 24, 2024

Brian Solis to Keynote GlobalLink NEXT in NY on June 26th

Brian Solis is set to serve as the closing keynote speaker at the GlobalLink NEXT event in New York City on June 26th, 2024.

GlobalLink NEXT is an annual invitation-only conference hosted by TransPerfect. The event brings together industry professionals, technology & solutions experts, client support teams, and users from around the globe.

In his keynote, Brian will explore how AI is influencing customer behaviors and expectations and how businesses need to rethink customer journeys and experiences to guide them toward mutually beneficial outcomes.

The post Brian Solis to Keynote GlobalLink NEXT in NY on June 26th appeared first on Brian Solis.