Marina Gorbis's Blog, page 1291

May 28, 2015

How the Navy SEALs Train for Leadership Excellence

Almost every world-class, high-performance organization takes training and education seriously. But Navy SEALs go uncomfortably beyond. They’re obsessive and obsessed. They are arguably the best in the world at what they do. Their dedication to relentless training and intensive preparation, however, is utterly alien to the overwhelming majority of businesses and professional enterprises worldwide. That’s important, not because I think MBAs should be more like SEALs (I don’t), but because real-world excellence requires more than commitment to educational achievement.

As an educator, I fear world-class business schools and high-performance businesses overinvest in “education” and dramatically underinvest in “training.” Human capital champions in higher education and industry typically prize knowledge over skills. Crassly put, leaders and managers get knowledge and education while training and skills go to those who do the work. That business bias is both dangerous and counterproductive. The SEALs can’t afford it. “Under pressure,” according to SEAL lore, “you don’t rise to the occasion, you sink to the level of your training. That’s why we train so hard.” When I see just how difficult and challenging it is for so many smart and talented organizations to innovate and adapt under pressure, I see people who are overeducated and undertrained. That scares me.

So I reached out to Brandon Webb, an innovative SEAL trainer/educator and CEO of Force12 Media, for real-world perspective on what industry could learn from a special operations sensibility. Webb, who served in the Navy from 1993 to 2006 and radically redesigned the SEAL training course curriculum, graciously shared his insight about what works – and what fails – when effecting a training transformation.

A member of SEAL Team 3, Webb became the Naval Special Warfare Command Sniper Course Manager in 2003. This was a precarious time. The SEALs leadership recognized that technical excellence (better shooting and better shots) didn’t go nearly far enough in addressing the complex environments and demands that would be made upon sniper teams in wartime deployments in multiple theaters. The wartime challenge demanded better collaboration, greater situational awareness and more strategic application of cutting-edge technology for the war-fighter. The post-9/11 environment demanded it. In response, Webb designed some radical changes: He broke the class into pairs, assigning mentors to boost support and accountability; he created classes that explore and explain technologies, giving participants greater insight into the physics and underlying mechanics of their equipment; and he adopted the “mental management” techniques of Olympic world-champion marksmen, which were at first reluctantly but then enthusiastically embraced. The results impressed the war-fighting community. SEALs like Marcus Luttrell (Lone Survivor) and Chris Kyle (American Sniper) observed how that course transformed their field capabilities and effectiveness.

“Our instructors were teaching better, and our students were learning better,” Webb noted in The Red Circle, his 2012 SEAL memoir. “The course standards got harder, if anything—but something fascinating happened: Instead of flunking higher numbers of students, we started graduating more. Before we redid the course, SEAL sniper school had an average attrition rate of about 30 percent. By the time we had gone through the bulk of our overhaul, it had plummeted to less than 5 percent.” And as he told me in a recent email exchange, he accomplished this by drawing from many diverse areas outside the military: “We took best practices from teaching, professional sports, and Olympic champions, and we made our course one of the best in the world in a very short period of time…” Webb noted. “We re-wrote the entire curriculum and saw our graduation rate go from 70% to 98% instantly, and hold there…”

Webb explicitly emphasized four transformational training themes. They’re neither obvious nor cliché. Unfortunately, I rarely see them in Fortune 1000 training programs/executive education or elite MBA programs.

1. Produce Excellence, Not “Above Average”

The first describes where Webb simply would not professionally go. “Being very good wasn’t good enough,” Webb declared. “Training programs shouldn’t be designed to deliver competence; they must be dedicated to producing excellence. Serious organizations don’t aspire to be comfortably above average.” “I honestly don’t even want to focus on good or competent,” Webb wrote, “it’s not in my nature and I don’t want to be part of any team or organization that is willing to set this standard. ‘Aim high, miss high,’ and you can quote me on that.”

In other words, training divorced from excellence is mere compliance. It is more “box ticking” than human capital investment. Is “above average” training really worth the time, energy and expense? A kaizen—continuous improvement—ethos is one thing. But customer service and leadership training that only enhances rather than transforms capabilities and skills doesn’t buy very much.

Webb’s hardcore perspective poses an existential challenge to most organizations’ views of human resources. Do they really want training to empower and bring out the best in their people? Or does everyone train with the tacit expectation that excellence matters less than being a bit better? Webb wonders whether most companies are serious about what training can and should mean.

2. Incentivize Excellence, Not Competence

This links directly to his second theme around “getting the incentives right.” Even if the training itself is world-class, organizations need recognition and rewards systems that explicitly acknowledge and promote excellence. And, says Webb, they also need the courage and integrity to reposition and replace those who can’t, or won’t, step up.

“For training to work it has to be effective and incentives have to be in place (financial, personal growth, promotion, etc.) for training to be effective in the work place and in order to get employee ‘buy in,’” Webb notes. “I’m a big fan of economist Milton Friedman… it’s as simple as creating alignment through incentives and that’s what we did by creating an instructor/student mentor program. The instructors had accountability (they would be evaluated on their students’ performance) which created the right incentive for them to pass. This made a huge difference. Plus we switched to a positive style of teaching and we saw our graduation rate rocket up.”

Should training overwhelmingly focus on skills enhancement? Or must it be managed to build better bonds and relationships throughout the enterprise? Webb unambiguously champions both. The training transformation made the SEALs culture more open to innovation and exchange. Incentives aligning and facilitating accountability improved the entire organization, not just the trainees.

3. Incorporate New Ideas from the Ground

This amplifies Webb’s third theme: successful training must be dynamic, open and innovative. Ongoing transformation, not just incremental improvement, is as important for trainers as trainees. “It’s every teacher’s job to be rigorous about constantly being open to new ideas and innovation,” Webb asserts. “It’s a huge edge, sometimes life-saving, to adopt a good idea early and put it into practice…As an instructor I learned that you are never done learning, and your students can be a wealth of information, especially when guys like Chris Kyle would come back from Iraq and make recommendations on how to better train students to the urban sniper environment. We incorporated this type of mission brief back and actively sought out this knowledge from the SEAL snipers who were returning from places like Iraq, Afghanistan, Africa and other not so friendly places. Then we would take this knowledge and incorporate it into our yearly curriculum review; if important enough, we’d make the change within weeks. That’s how fast we could adapt our course curriculum and get approvals.”

4. Lead by Example

Getting better at getting better is a vital organizing principle for learning organizations. Arguably Webb’s most passionate training theme is the one that reflects his battlefield experiences, not just his training triumphs. The most important training behavior a leader can demonstrate, he asserts, is leading by example.

“Leading by example means never asking your team to do something you aren’t willing to do yourself,” Webb writes. “This can’t be faked, do it right and your team will respect you and follow you. Don’t do this, especially in a SEAL team, and you are doomed as a leader. I’ve seen it happen, and careers ended when it did. Lead by example and watch your team elevate you with their own accomplishments.”

If your organization cares about innovation or transforming customer service or being data-driven, how do you lead by example? In Laszlo Bock’s otherwise superb Google-based book on performance analytics (Work Rules!), the phrase “lead by example” is nowhere to be found. That’s both a pity and an opportunity missed because, as Webb stresses, leading by example is what truly empowers small teams and teamwork.

“I’ve seen small teams accomplish incredible things in training (my times at the sniper school) and combat (Afghanistan and Iraq),” Webb recalls. “In Special Operations environments and top business environments, you have the privilege of working with people who just get the job done at all costs. They are self-motivators. Even if they don’t have the know-how, they will figure it out and just make it happen. It’s amazing to have a whole team that thinks this way, and to see what they can accomplish.”

There are no panaceas. The level of motivation, dedication and self-sacrifice the SEALs demand from themselves and each other goes far, far beyond what most businesses and business schools should ever ask, let alone expect, from their people. But that said, for leaders and managers who truly care about their people and their customers, the SEALs training template deserves to be taken seriously. No one rightly doubts the vital role education plays in creating and sustaining economic competitiveness worldwide. But it’s long past time that CEOs, boards, business schools and universities revisit what world-class training should mean, as well.

Get Your Message Across to a Skeptical Audience

Persuading decision makers that your proposals and recommendations are worthy of their time and attention is a tough challenge – even for the most experienced and admired experts. So what should you do if you find yourself having to persuade an audience that doesn’t know about – or is even skeptical of – your expertise and experience?

Persuasion researchers know that decision-makers will often place their faith less in what is being said, and more in who is saying it. For good reason: following a trusted authority often reduces feelings of uncertainty. In today’s constantly changing business environment, it’s increasingly the messenger that carries sway, not the message.

Therefore, it’s crucial that you convince your audience you have the necessary expertise to make a recommendation – which can present problems if you lack credibility.

You need to be seen as competent and knowledgeable, yet recounting a list of your accomplishments, successes and triumphs, however impressive, will do little to endear you to others. No one likes a braggart. But arranging for someone to do it on your behalf can be a remarkably efficient tactic in overcoming the self-promotion dilemma.

Take, for example, a set of studies led by Stanford University’s Jeffrey Pfeffer, who found that arranging for an intermediary to toot your horn can be very effective. Participants in one study were asked to play the role of a book publisher dealing with an experienced and successful author and to read excerpts from a negotiation for a sizeable book advance. Half read excerpts from the agent, touting the author’s accomplishments. The other half read identical comments made by the author himself. The results were clear. Participants rated the author much more favorably on nearly every dimension, especially likeability, when the author’s agent sang his praises instead of the author himself. Remarkably, despite the fact that participants were aware that agents have a financial interest in their authors’ success and were therefore biased, hardly any took this into account.

In another study with real estate agents, my team and I measured the impact of a receptionist introducing a realtor’s credentials before putting through a call from a prospective client. Customers interested in selling a property were truthfully informed of the agent’s qualifications and training before the inquiry was routed to them. The impact of this honest and cost-free introduction was impressive. The agency immediately measured a 19.6 percent rise in the number of appointments they booked compared to when no introductions were made. So arranging for others to tout your expertise before you make your case can increase the likelihood of people paying attention and acting on your advice.

Remember that the same is true if your proposal is being delivered in written form. When submitting a proposal or recommendation, avoid making the mistake of squirreling away your and your team’s credentials towards the end of an already full document. Instead, make sure that they are prominently positioned up front.

Another approach for winning people over when you lack experience? Play up your potential.

In research led by persuasion scientist Zakary Tormala, participants were asked to evaluate applicants for a senior manager position in a large corporation. The applicants’ backgrounds and qualifications differed in only one key aspect: one had gained two years of relevant industry experience and scored highly on a leadership assessment test, while the other had gained little experience but scored highly on a leadership potential test. Despite the experience deficit, the candidate who had scored highly on the leadership potential test was rated as more likely to be a successful hire, even though they were objectively much less qualified.

The persuasive pull of potential doesn’t just hold true in recruitment contexts. Facebook users shown a series of quotes about a comedian registered much greater interest (measured by click-rates) and liking (measured by fan-rates) when informed of the comedian’s promise – “This guy could become the next big thing” – rather than his actual achievements – “Critics say he has become the next big thing.”

If you have an abundance of talent but a lack of on-the-job experience, all is not lost. In addition to introducing your know-how before you make your proposal, also try including a statement that signals the promise of your potential. Doing so might persuade audiences to think about you more positively, which in turn, could tip the balance in your favor – even if you’re not an expert on paper.

What Africa’s Leaders Have Learned About Facing Huge Challenges

There is a sea change going on in African leadership. Over the past decade, six of the fastest growing economies in the world have been African. Since 2000, for example, Rwanda has racked up average annual GDP growth in excess of 8%—exceeding 12% during some quarters of the Great Recession. If this continues, Rwanda will become a middle-income country by 2020.

This is remarkable given that the country was devastated by genocide a decade earlier. Liberia is also a standout—averaging over 8% GDP growth in the past five years, and over 10% in the past year in spite of the crippling influence of Ebola in the region.

A story behind the story of Africa’s growth is the influence of a remarkable organization called the Africa Governance Initiative (AGI), launched by former UK Prime Minister Tony Blair and a small group of organizational experts who believe that influence is a learnable skill. AGI places teams of two or three advisers at the side of elected leaders in Rwanda, Liberia, Sierra Leone, Malawi, and elsewhere, magnifying a president’s ability to get things done.

AGI leaders are the first to admit that nothing they do is rocket science. Great leadership rarely is. In fact, CEO Nick Thompson says, “Our people often tell me their work is not especially intellectually challenging. It is, however, incredibly emotionally challenging.” Leadership usually is.

Here is AGI’s prescription for leaders with huge obstacles in their path.

1. Create a 150-Day Plan

The first job of leadership is to focus attention. When Ellen Johnson-Sirleaf was reelected as president of Liberia, she faced unemployment, health crises, social unrest, and feeble infrastructure on one side, and a historically corrupt bureaucracy, few resources, and a mandate for immediate results on the other. For a head of state, having to choose which of such acute issues to pursue can feel perilous. It’s essential to decide what to do and what not to do.

AGI has found that an effective way to force tough tradeoff decisions is to focus on an urgent and defined timeline—one that is long enough to get something meaningful done but short enough to demand disciplined attention. The AGI team focused Johnson-Sirleaf’s Cabinet on developing a 150-day plan. On the wisdom of this timeline, Thompson says, “When a new leader comes to power she must earn the trust she’ll need to produce long-term reforms by delivering meaningful short-term results.”

2. Set Measurable Goals

Within days of her reelection, President Johnson-Sirleaf formed a task force of her most trusted and competent ministers. The AGI team engaged them in vigorous debate about what was necessary, but also about—equally important—what was feasible. The result was a finite set of very concrete goals. Among other things, they promised to:

Put 6,000 young people to work on road maintenance and beach cleanup projects.

Open 150 km of feeder roads, linking 30 communities in two counties.

Open 150 new sanitation facilities.

Complete 11 reinforced concrete bridges.

Open 75 community wells in three counties.

What’s peculiar about the design of these goals is that the president made commitments that were strikingly measurable. And because they were measurable, they were influential.

In order to get things done, Johnson-Sirleaf needed to influence the behavior of thousands of civil servants in her sprawling government bureaucracy. Many of them may not even have voted for her. Quite a number might have disagreed with her priorities. And others may have become complacent with the low expectations the voting public had of their government services. Understanding this challenge, the president mobilized energy and focus by articulating goals that were easily measurable.

3. Go Public

Having gained consensus from her Cabinet on a realistic set of measurable goals, the president’s next challenge was to influence their fulfillment. The AGI team has found that frequent—and excruciatingly public—review of progress (or the lack thereof) is a potent way to build both public trust and leadership accountability.

It’s one thing to drone on in press conferences about your general commitment to building infrastructure. It’s another to specifically declare that within 150 days you and your Cabinet will open 150 kilometers of feeder roads and identify the location of those roads—then be transparent about results. And that’s what the president did.

This transparency immediately motivated and focused her Cabinet. It created a profound and pervasive sense of a very productive emotion: accountability. Johnson-Sirleaf understood that if half the roads weren’t finished halfway through the 150 days, the lapse would be fodder for political opponents in a way that could cripple her leadership. But she concluded that leaving a real legacy to her country would not come without precisely this kind of risk.

On February 1, 2012, she issued a “Statement of Accountability” inviting all Liberians to hold her and her government’s feet to the fire to deliver. It was an instance of remarkable integrity and transparency that showed Liberians they had a new kind of leader.

4. Gentle Pressure. Relentlessly Applied.

When leaders try to get things done, some do a good job of focusing on meaningful and measurable goals, and a few even declare their commitments publicly. But then they fail to build a culture of accountability that values results over harmony. The most crucial moment of truth for leaders is what they do when—not if—people fail to deliver. Liberia’s troubled history was an almost uninterrupted chain of broken promises. The culture was one where others would ignore your failures if you covered for theirs. AGI helped Johnson-Sirleaf’s staffers to develop rigorous, consistent measures of progress that provoked countless salubriously awkward moments.

The president’s task force met regularly and ruthlessly to compare progress to commitments. Some weeks, things looked good. Other weeks, not so much. But Johnson-Sirleaf was unflinching in holding leaders to account, creating both a new culture of productivity and impressive results. At the same time, she was generous in sharing the spotlight with others for early wins, staging photo opportunities and arranging media profiles for ministers who produced results.

Seventy-four percent of the objectives in the 150-day plan were achieved. And even more importantly, Liberian leaders developed a capacity for achievement that continues to inspire.

Simple principles appropriately applied can restore trust, create hope, and improve results. Even in the most complex political environments one can act with independence and integrity to do great things. And when leaders do the work required to mobilize effective behavior, remarkable things can be accomplished.

The Health Care Industry Needs to Start Taking Women Seriously

What is the greatest impediment preventing Americans from getting good health care? Surprisingly, it’s not the cost of care. Instead, according to new research from the Center for Talent Innovation (CTI), the fundamental issue is the health care industry’s failure to develop a nuanced understanding of, and commitment to, women as consumers and decision makers.

According to our report The Power of the Purse: Engaging Women Decision Makers for Healthy Outcomes, which was based on a multi-market survey of 9,218 respondents in the U.S., UK, Germany, Japan, and Brazil, health care consumers are overwhelmingly female and have huge unmet needs. For women, time is at a premium — 77% don’t do what they know they should do to stay healthy because, according to 62%, they lack the time. Women are swimming in health information but don’t know which sources to believe: 53% think they can get the best health information online while only 31% of these women trust online sources. Not only are women starved for resources, but they don’t trust the professionals who try to serve them. Of the women surveyed, 78% don’t fully trust their insurance provider, 83% don’t fully trust the pharmaceutical company that makes their medicine, and 35% don’t fully trust their physician.

Without the time, information, and trusted relationships that inform good decision making, we found more than half (58%) of women surveyed lack confidence in their ability to make good health care decisions for themselves and their families.

These findings should be a huge cause for concern among all players in the health care industry. Women account for a significant chunk of the market. Fifty-nine percent of women in our multi-market sample are making health care decisions for others. That number shoots up to 94% among working mothers with kids under 18. These women set the health and wellness agenda for themselves and their families, choose treatment regimens, and hire and fire doctors, pharmacists, and insurance providers. Given the influence of these consumers, you would expect that the health care industry would be supporting them with tools and services. Yet, strapped for time themselves, many doctors focus on patients to the exclusion of the decision maker accompanying them to the exam room; drug trials continue to ignore sex differences and the impact they may have on dosage; and much information about insurance policies continues to be confusing and, at times, opaque.

The good news: By engaging this market segment and rebuilding trust, health care companies can have a first-mover advantage. They must first develop gender smarts with customers and exhibit the behaviors women seek, as decision makers, to serve their needs. As part of our study, we uncovered the behaviors that make the biggest difference in building trust and satisfaction with women:

Doctors can foster dialogue and provide clear communication. Reporting test results in an understandable way, openly discussing preventative care, and proactively managing the health of women and those they make decisions for, as well as providing them with information that helps them make those decisions, all go a long way toward creating a trusted partnership.

Insurance companies can provide the coverage women want by making preventative care affordable, making it easy to find doctors in-network, and by providing easy, friendly, informative customer service. These seem like no-brainers, but in our interviews and focus groups, women continually report these provisions absent in their own relationships with insurance providers.

Pharmaceutical companies can win trust by ensuring that clear and comprehensive information accompanies prescriptions and is available both online and by telephone, and by providing gender- and ethnic-specific drug recommendations.

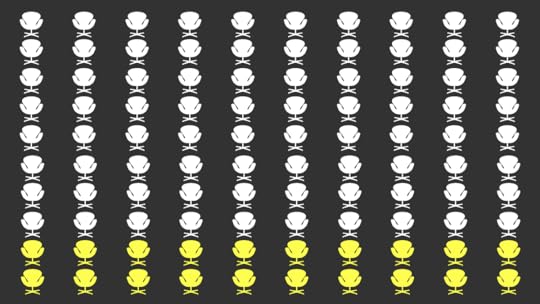

Next, health care companies can see an instant benefit from both putting women in positions of leadership and encouraging them to use their personal experiences and perspectives to shape their approach. After all, women make up 88% of the health care workforce but only 4% of health care CEOs. In our report, we uncovered story after story of female health care professionals who use their personal experiences as family health decision makers to inform their work. Those who have found their way to leadership positions, or who work within forward-thinking organizations, have achieved incredible connections to the female market.

Consider Cleveland Clinic family doctor Lili Lustig, who felt ignored by the physicians treating her mother’s illness and now uses that experience to inform her own approach with patients and female decision makers in the low-income neighborhood where she works. “I am so driven to make a difference, because I understand their frustrations and desires to be taken seriously,” Lustig says. If a patient visits with a family member, she makes sure to treat both with dignity.

Or consider Meredith Ryan-Reid, MetLife senior vice president and mother of two, who draws upon a standout customer service experience she had when she ordered supplies from Diapers.com to inform her contributions at work. After all, it’s directly relevant as MetLife works to ensure its customer service operation is sensitive to the time limits, information needs, and confidence of its accident and critical illness customers — the majority of whom are women.

“It is so complicated for the health care industry to move to a new model,” says Lynn O’Connor Vos, CEO of Grey Healthcare Group. “Understanding the role and importance of [these female decision makers] can really help get us there faster.”

The more health care companies elevate and amplify women inside their organizations — and build trust with them externally — the better positioned they are to become truly consumer-centric. The roles women play in the lives of others lend a multiplier effect. Their role as decision maker is not receiving the notice or respect it deserves, which is why we have named the women in this market segment the “Chief Medical Officers” of their families. Developing a keen understanding of these women’s wants and needs in health care, and using that understanding at every stage of product development and commercial relations, will help companies uncover and leverage huge market opportunities as well as surprise and delight their customers.

Despite What Zappos Says, Middle Managers Still Matter

Middle managers have not fared well. Their ranks have been decimated in many organizations, and those who have survived are often perceived as powerless or, worse, as bureaucratic sticks-in-the-mud. This is not fair and it’s flat-out wrong.

Take what’s happening with Zappos at the moment. Much has been written about their adoption of a self-management system—holacracy—with no job titles and zero managers. That move earlier this month saw 14% of their workforce choose to leave the retailer. While I applaud their effort to break down unnecessary walls, getting rid of managers is not the answer.

Middle managers are increasingly vital to an organization’s success, though for different reasons than in the past. In the conventional hierarchical organization, middle managers used to be instrumental for controlling information flows and ensuring that frontline workers were producing. Roles were clearly defined and orders flowed from the top down. Those in the middle managed the inputs and outputs. But we’re now in an era where information is far more free-flowing and hierarchical lines are blurred.

Middle managers today need different skills and play a different role than their “command and control” era predecessors. According to a Harvard Business Review study, some 67% of companies recognize that they need to revamp their middle manager development programs. At Red Hat, we support a key set of capabilities that, for our organization, make a middle manager great. Many of our stars are in this group, and here’s what makes them so invaluable:

Influence

Most people think that middle managers are becoming less important because they make fewer direct decisions. Nothing could be further from the truth. Middle managers need to be able to bridge the gap in understanding that often lies between an organization’s senior leaders and those who are responsible for its daily operations. And they can have a massive impact on performance by catalyzing direction even within the most self-directed of workforces.

Their new charge is to lead not by fiat, but by influence. “Because I said so” doesn’t work with the current workforce. Instead of pulling rank with a subordinate or deferring to an executive, today’s middle managers must build influence and gain credibility by listening to concerns and offering context that leads to better decisions. Creating context is key.

At the same time, they need to influence their peers throughout the organization to ensure that efforts are aligned and pulling in the same direction. That’s especially true in fast growing and globally distributed organizations like Red Hat. We need our middle managers to keep us all on the same page.

Inspiration

We all know that innovation and passion are critical to a company’s success. But let’s face it, you can’t force someone to be creative or passionate about their work. So the middle manager’s role has become less about making sure people do what they’re told, and more about inspiring people to perform at their best.

The best managers are those who marry their IQ with their EQ. They focus on the “whys” and “hows” rather than the “whats.” They are visionary, big picture thinkers who know how to create a sense of shared ownership and responsibility, as opposed to simply issuing orders.

Being a middle manager often means taking less pride in your own achievements than in what your people accomplish. It’s about putting the right people into the right places, looking for ways to tap into their passion and insight, and unlocking their full potential.

Inclusion

An open organization is a meritocracy, where good ideas can come from anywhere, and the best ideas win. Middle management’s job is to create communication channels that allow ideas to percolate and circulate throughout the organization.

Middle managers play a vital role in ensuring that all voices are heard, not just the loudest ones. They invite people to speak up and contribute their ideas, especially when their views diverge from the majority.

In most organizations, the biggest clue that there is disagreement in the room is when nobody says anything at all. Concerns tend to come out around the water cooler, out of management’s earshot. Middle managers can make it safe to raise objections. They can ask frontline workers questions like, “From your perspective, what are we missing with this plan?”

The new roles that middle managers must play require different skills and capabilities than in the past. Open organizations must invest to develop these leaders. That starts with explicitly recognizing their new role, continues with training in soft skills, and depends on building a culture that recognizes and celebrates the right behaviors.

What Is Management Research Actually Good For?

San Jose, California, is home to one of the most peculiar structures ever built: the Winchester Mystery House, a 160-room Victorian mansion that includes 40 bedrooms, two ballrooms, 47 fireplaces, gold and silver chandeliers, parquet floors, and other high-end appointments. It features a number of architectural details that serve no purpose: doorways that open onto walls, labyrinthine hallways that lead nowhere, and stairways that rise only to a ceiling.

According to legend, Sarah Winchester, the extremely wealthy widow of the founder of the Winchester rifle company, was told by a spiritual medium that she would be haunted by the ghosts of the people killed by her husband’s rifles unless she built a house to appease the spirits. Construction began in 1884 and by some accounts continued around the clock until her death in 1922. There was no blueprint or master plan and no consideration of what it would mean to reach completion. The point was simply to keep building, hence the sprawling and incoherent result.

Is the Winchester Mystery House a good house? It’s certainly beautiful in its own way. Any given room might be well proportioned and full of appealing features. A stairway might be made of fine wood, nicely joined and varnished, and covered in a colorful carpet. Yet it ends in a ceiling and serves no useful purpose other than keeping its builders busy. In assessing whether a house is good, we have to ask, “Good for what? Good for whom?” — the questions we would ask about other kinds of constructions. For the Winchester Mystery House, the act of building was an end in itself. It is a paradigmatic folly, “a costly ornamental building with no practical purpose.”

Is management research a folly? If not, whose interests does it serve? And whose interests should it serve?

The questions of good for what and good for whom are worth revisiting. There is reason to worry that the reward system in our field, particularly in the publication process, is misaligned with the goals of good science.

There can be little doubt that a lot of activity goes into management research: according to the Web of Knowledge, over 8,000 articles are published every year in the 170+ journals in the field of “Management,” adding more and more new rooms. But how do we evaluate this research? How do we know what a contribution is or how individual articles add up? In some sciences, progress can be measured by finding answers to questions, not merely reporting significant effects. In many social sciences, however, including organization studies, progress is harder to judge, and the kinds of questions we ask may not yield firm answers (e.g., do nice guys finish last?). Instead we seek to measure the contribution of research by its impact.

There are many ways to assess the impact of scientific work, from book sales (where Who Moved My Cheese? is the best-selling management book in history) to prizes to Google hits and article downloads. By far the dominant measure of impact is citations: how often a piece is cited in subsequent works. An advantage of this measure is that it is easily accessible: Google Scholar and Web of Knowledge are just a click away. Citation metrics are widely used in faculty evaluations and routinely come up in tenure reviews. By this accounting, good science is widely cited science.

But at a fundamental level, impact in this sense may not measure what we want. Consider what happens when police are evaluated according to their numbers of citations and arrests. We might imagine that as a society we want “safety” or “justice,” but if what we count when we evaluate police officers is “number of arrests and citations issued,” we get something rather different—in the worst case, entire populations weighed down with criminal records for trivial offenses. Similarly, it is unclear why “impact” is an apt measure if the goal of research is to answer questions. If anything, raising questions that do not get answered, or being surprising and counterintuitive, may be better strategies for being widely cited than actually answering questions accurately. Being provocative may be more impactful than being right.

What will the advent of big data mean for management research, given the incentives in our publication process I’ve described above? Ironically, it is possible it will make it even more difficult to evaluate researchers’ contributions. Data used to be a constraining factor for organizational research. Gaining access to sufficient data to yield statistically significant results was often difficult, which encouraged researchers to use widely available sources such as Compustat or archives such as ICPSR. Some of the most influential papers from the 1970s featured simple correlations and occasional regressions on cross-sectional data for modest samples, any of which might be rejected out of hand today. Yet the availability of endless data may paradoxically make things worse. Rewarding statistical significance and surprise in a world of big data could easily lead to a grid search for intriguing and counter-intuitive regularities that do not add up to a coherent understanding.
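The “grid search” worry can be illustrated with a small simulation. The following is a minimal Python sketch, not an analysis from the essay: it assumes 100 observations of a purely random outcome, 200 purely random candidate predictors, and a conventional two-sided p < 0.05 threshold (approximated here by a t value of 1.984 at 98 degrees of freedom). The function names and parameters are hypothetical.

```python
import math
import random


def pearson_r(xs, ys):
    """Plain Pearson correlation coefficient for two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def count_spurious_hits(n_obs=100, n_predictors=200, t_crit=1.984, seed=0):
    """Count random predictors that look 'significant' against a random outcome.

    t_crit is an assumed two-sided 5% critical value for n_obs - 2 = 98
    degrees of freedom; change it if n_obs changes.
    """
    rng = random.Random(seed)
    # Convert the t threshold into the equivalent threshold on |r|.
    r_crit = t_crit / math.sqrt(n_obs - 2 + t_crit ** 2)
    outcome = [rng.gauss(0, 1) for _ in range(n_obs)]
    hits = 0
    for _ in range(n_predictors):
        predictor = [rng.gauss(0, 1) for _ in range(n_obs)]
        if abs(pearson_r(predictor, outcome)) > r_crit:
            hits += 1
    return hits


hits = count_spurious_hits()
print(f"{hits} of 200 unrelated predictors cleared the significance bar")
```

Because every variable here is noise, any predictor that clears the bar is a false positive. With a 5% threshold, roughly 5% of the candidates would be expected to do so by chance alone, and the more variables a data set offers, the more such “findings” a search will turn up.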

At the same time, it is long past time for the field to have a serious conversation about where its data come from, and about the ethics of using big data. Social life and the operation of organizations increasingly leave more or less permanent data traces, like the contrails left by jets. Sometimes these become available to researchers; even more often, they are available to corporations and government agencies such as the National Security Agency. A preview of this is the Enron email archive, containing about one-half million e-mails from 150 senior managers of Enron, which was made public by the Federal Energy Regulatory Commission during its investigations and subsequently cleaned and shared by researchers. Researchers have mapped the networks created by “From” and “To” headers in messages, analyzed the prevalence of sentiments in the text, coded classification schemes, and more. The data provide a fascinating look into a corrupt organization in action. The 2014 hack of Sony Pictures Entertainment’s computers, allegedly by North Korean operatives, yielded scores of embarrassing e-mails and memos, as well as sensitive data on thousands of employees, including “a Microsoft Excel file that includes the name, location, employee ID, network username, base salary and date of birth for more than 6,800 individuals,” according to Time. For some researchers, that spreadsheet could have been an ethically questionable window into contemporary corporate practices. (For instance, journalists have already mined it to explore gender and race pay gap issues.)

Data on the whereabouts, productivity, compensation, demographics, social networks, emotional expression, and perhaps medical records and Fitbit streams of employees can yield horrifyingly intrusive information within individual organizations, and modest versions of these are beginning to appear in the literature. A few companies such as Google and Oracle have some of these data for hundreds of client organizations, potentially allowing unprecedented comparative data on organizational structures and processes. It is inevitable that variants of such data will end up in the hands of researchers, one way or another. Moreover, A/B testing of experimental and control conditions is now standard practice in technology firms such as Google and Facebook, from the mundane (testing which headline yields the most click-throughs) to the less mundane (examining how exposure to positive or negative updates influences one’s own expressed emotions or how knowledge of friends’ voting influences the propensity to show up at the polls). Experiments are nearly costless in this environment, in which informed consent is evidently optional.

This brings us back to the question of whose interests are served by business research. Traditionally, the ultimate constituency for organizational research was managers. Scholars were encouraged to conduct research with “managerial relevance” or possibly “policy relevance.” And as business corporations kept growing bigger during the 1960s and 1970s, the need for managers to staff their internal hierarchies led to a massive expansion in management education. The demand for managerially relevant research was evident. Yet beginning in the 1980s, changes in the economy were reflected in the kinds of jobs taken by MBA students. Instead of seeking management jobs at GM or Eastman Kodak or Westinghouse, MBAs from elite schools went into finance and consulting. Traditional corporations, particularly manufacturers, shrank or even disappeared through multiple rounds of outsourcing and downsizing, while the largest employers came to be in retail, where hierarchies within stores are relatively short. Meanwhile, information technologies increasingly turn the tasks of management (measuring and rewarding performance, scheduling) over to algorithms. There are nearly 7 million Americans classified as “managers,” but the content of their tasks may not involve the actual supervision of other people.

More recently, alternative business models have arisen that dispense with “employees” and “managers” entirely. Uber reported that it had 162,000 “driver-partners” in the U.S. at the end of 2014. These are not employees of Uber — which itself employed perhaps 2,000 people — but independent contractors without need for management. Amazon expands and shrinks by tens of thousands of workers at a time through the use of temporary staffing companies for its warehouses — it added 80,000 temporary workers for the 2014 holiday season. The tasks are straightforward and largely supervised by computer. Retail, fast food, and the “sharing economy” are increasingly moving to a world in which algorithms and platforms replace human management. Meanwhile, GM’s North American workforce has shrunk to under 120,000, Eastman Kodak has 3,200 U.S. employees, and Westinghouse has effectively evaporated.

Management of humans by other humans may be increasingly anachronistic. If managers are not our primary constituency, then who is? Perhaps it is each other. But this might lead us back into the Winchester Mystery House, where novelty rules. Alternatively, if our ultimate constituency is the broader public that is meant to benefit from the activities of business, then this suggests a different set of standards for evaluation.

Businesses and governments are making decisions now that will shape the life chances of workers, consumers, and citizens for decades to come. If we want to shape those decisions for public benefit, on the basis of rigorous research, we need to make sure we know the constituency that research is serving.

This has been adapted from an editorial essay titled “What Is Organizational Research For?” that originally appeared in the Administrative Science Quarterly, June 2015, Vol. 60.

May 27, 2015

An Organization-Wide Approach to Good Decision Making

Behavioral economists and psychologists have uncovered scores of biases that undermine good decision-making. And, along with management experts, they have provided helpful tips that decision-makers can use to try to correct for those biases. But a comprehensive framework for achieving quality decision-making throughout an organization is still rare — almost three-quarters of companies have no formal corporate-wide approach to making major, complex decisions.

Without a proven, organization-wide approach, there may be, at best, isolated pockets of high-quality decision-making where individual leaders have elected to take a rigorous, transparent approach. Otherwise, the organization is at the mercy of the biggest bias of all: the perception that it is good at making decisions.

With an organization-wide approach, you can increase the odds of circumventing that bias. Further, an organization-wide common language speeds up the making and reviewing of decisions. Transparency in how decisions are reached replaces the blind faith that people must place in the judgment of their superiors. Most importantly, more high-quality decisions, instead of merely “good enough” decisions, together can add up to billions of dollars in additional value.

The first step is to define what a good decision looks like. In the early 1990s, Chevron (where until recently one of us worked) began experimenting with Decision Quality (DQ), a process that defines a high-quality decision as the course of action that will capture the most value or get the most of what you are seeking, given the uncertainties and complexities of the real world.

Armed with that definition, Chevron has applied the tools of Decision Analysis (DA) to the choices it faces. DA rejects the all-too-common approach of deciding between the status quo and a single alternative course of action. Instead, DA involves considering a range of possible outcomes, their probability of occurring, and the results (financial or otherwise) of each. Decision-makers can then compare alternatives in terms of both upside opportunity and downside risk, and make decisions in light of their own appetite for risk and tolerance of uncertainty.
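To make that comparison concrete, here is a minimal Python sketch of the kind of calculation DA involves, assuming each alternative can be summarized as a few scenarios, each with a probability and a net value. The alternatives, probabilities, and dollar figures are hypothetical illustrations, not Chevron’s numbers, and the names Scenario and summarize are made up for this example.

```python
from dataclasses import dataclass
import math


@dataclass
class Scenario:
    name: str
    probability: float  # chance that this outcome occurs
    value: float        # net result in $ millions (hypothetical)


def summarize(scenarios):
    """Return expected value, best case, worst case, and chance of a loss."""
    total = sum(s.probability for s in scenarios)
    if not math.isclose(total, 1.0):
        raise ValueError("scenario probabilities must sum to 1")
    return {
        "expected_value": sum(s.probability * s.value for s in scenarios),
        "upside": max(s.value for s in scenarios),
        "downside": min(s.value for s in scenarios),
        "prob_of_loss": sum(s.probability for s in scenarios if s.value < 0),
    }


# Hypothetical alternatives: more than "status quo versus one proposal".
alternatives = {
    "status quo": [
        Scenario("low", 0.25, -40),
        Scenario("base", 0.50, 10),
        Scenario("high", 0.25, 40),
    ],
    "full upgrade": [
        Scenario("low", 0.25, -400),
        Scenario("base", 0.50, 200),
        Scenario("high", 0.25, 600),
    ],
    "partial upgrade": [
        Scenario("low", 0.25, -50),
        Scenario("base", 0.50, 150),
        Scenario("high", 0.25, 300),
    ],
}

for name, scenarios in alternatives.items():
    print(name, summarize(scenarios))
```

In this made-up data, the full upgrade has the highest expected value (150 against 137.5 for the partial upgrade) but a much worse downside (a loss of 400 against a loss of 50). Which alternative is “best” therefore depends on the decision-maker’s appetite for risk, which is the point of laying out upside and downside side by side.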

With these methods in mind, we can describe the six elements of DQ that characterize any high-quality decision:

An appropriate frame, including a clear understanding of the problem and what needs to be achieved.

Creative, doable alternatives from which to choose the one likely to achieve the most of what you want.

Meaningful information that is reliable, unbiased, and reflects all relevant uncertainties and intangibles.

Clarity about desired outcomes, including acceptable tradeoffs.

Solid reasoning and sound logic that includes considerations of uncertainty and insight at the appropriate level of complexity.

Commitment to action by all stakeholders necessary to achieve effective action.

DQ won a foothold in Chevron through some eye-catching early successes in some major capital decisions. For example, one of the company’s refineries needed upgrading to remain competitive in the refining business. A first pass at the problem resulted in a proposal to install a unit called a flexi-coker — a major system capable of refining a range of crude oil types and minimizing the ultra-heavy coke residual.

During the installation, the refinery would have to be shut down, resulting in a significant loss of revenue. Flexi-cokers are expensive, and related improvements proposed for implementation during the shutdown would add to the project’s cost. As details of the engineered design matured, the estimated cost escalated, nearly doubling to $2 billion. At that point, senior management asked for a more rigorous review to better understand the risks and risk/reward balance.

To revisit the issue, the team tasked with the review turned to DQ concepts. In the course of the DQ process, the frame was widened to include all improvements that could be made during shutdown of the facility, including those unrelated to the flexi-coker. Doable alternatives, excluding the flexi-coker, were explored. The team then analyzed what would happen if certain variables like feedstock crude oil price, wholesale gasoline prices, and project duration deviated more than had been expected originally. And they analyzed the probability of these specific deviations occurring. The team was then able to articulate the overall risk profile of the project: the potential costs for each deviation and the likelihood of each occurring. These methods revealed that the risk profile of the project as originally conceived was more than Chevron’s management was willing to accept.

A set of acceptable cost/value trade-offs, and the likelihood of achieving them, was determined. Further analysis revealed that it was the proposed new flexi-coker itself that was contributing the bulk of the downside risk. At this point, Chevron’s management publicly announced the shelving of the flexi-coker portion of the project.

Chevron estimates that revisiting the decision and the subsequent change in project scope captured more than 50% of the original projected value at 25% of the cost, regaining the competitive position of the refinery at a much lower level of deployed capital.

For the first 10 years, the use of these decision techniques by various groups in Chevron was voluntary, though there was a string of early successes. During that time, Chevron proceeded to build deep internal competence in the discipline – introducing thousands of decision-makers to DQ and DA and developing hundreds of internal decision support professionals who applied them to many major decisions.

When David O’Reilly became Chairman and CEO in 2000, he insisted that DQ become mandatory on all capital expenditures over $50 million. Decision executives were also required to become certified in the fundamentals of the approach. Decision professionals were embedded in the organization around the world, and became part of the Chevron capital stewardship process.

The impact of many thousands of people making even marginally better decisions was huge in a company Chevron’s size. And in operations, where decisions are made almost daily, the result was, in effect, continuous improvement.

Achieving that level of decision quality throughout your organization takes some work, but you will quickly find that it has great appeal, apart from the value it captures through consistently higher-value decisions. Decision-makers and teams are energized by its capacity to get people to agree on a decision, though they may have begun with widely divergent views about the best course of action.

However, as anyone who has ever seen groupthink in action knows, any number of otherwise intelligent people can come to agree on nonsense. Conversely, autocratic leaders can simply impose their will. But by first defining what constitutes a high-quality decision and the elements that go into it, DQ/DA erases lines drawn in the sand by intransigent team members.

Your teams are freed to focus on each element of this rational decision-making model and identify gaps in the quality of a decision. Instead of sticking to biases or getting mired in politics, people work to fill those gaps, with analytics providing a clear line of sight to the most value. Further, by satisfying all six elements of DQ, companies can recognize the quality of a decision as they make it, not just in hindsight. The result: far fewer failed strategies, far less wasted capital in investment decisions, and — to everyone’s great relief — fewer blame games and witch hunts.

The hard truth is we all leave a lot of value on the table – value that we could seize with better decisions. Doing so requires an organization-wide framework for making them.

How People Will Use AI to Do Their Jobs Better

We all know that computers can sometimes automate work, taking jobs away from humans. But they can augment human workers as well, making them more effective. In our ongoing research, we’ve found that so far augmentation is far more common, even in the emerging area of “cognitive computing,” in which machines can sense, comprehend, and even act on their own. In this sense, cognitive computing is more about “Person and Machine” than “Person versus Machine.”

But our view grows out of our observations of organizational applications available today. What about in the future?

For well-informed prognostication, it’s helpful to turn to the brain trust at Edge.org. Each year, Edge.org publishes essays from thinkers, scholars, and researchers on a question related to a hot topic in public and academic discourse. This year’s question was, “What do you think about machines that think?” It prompted 186 respondents to write more than 130,000 words total.

While the essays looked at a range of issues, a number of respondents touched on advances in how thinking machines could augment human intelligence. They discussed carefully considered forms of human-machine interaction, many of which have implications for various businesses and industries, including management, health, and creative fields. These answers help us to imagine what the future of computer-augmented work will look like, and what the challenges are to getting there.

Digital assistants you can trust. The first major scenario for human augmentation based on our research is to improve effectiveness in routine types of work. This sort of work typically involves a lot of coordination, information, and communication, like clinical workflow management in healthcare or account management in banking. To perform tasks more effectively, humans can use virtual assistants to help them with enterprise customer service, workflow management, or collaboration.

Today’s artificial assistants provide a good amount of information to us when we ask for it. Smartphones, for instance, serve up mapping functions, calendars, and reminders as well as access to technical knowledge, references, and more. But what will this sort of augmentation look like in the future?

In order for artificial intelligence to be used effectively within tools for collaboration, AI must become more trustworthy, says Gary Klein, author and senior scientist at MacroCognition LLC.

“Accuracy and reliability are important features of collaborators but trust goes deeper,” Klein writes. “We trust people if we believe they are benevolent and want us to succeed. We trust them if we understand how they think … if they have the integrity to admit mistakes and accept blame … For AI to become a collaborator, it will have to consistently try to be seen as trustworthy.”

As a virtual assistant, an AI that helps manage workflow in healthcare settings or manage accounts in banking will need to have the smarts and the social savvy to interact in a trustworthy way, so it can more effectively become part of a human-led team. Designers of such systems will need to consider how to build trust into their products.

Software that makes experts better at what they do. The second scenario for augmentation is to sharpen expertise. Experts are those who mentally maintain specialty information and techniques. While smart systems are making it easier for doctors to make faster and more accurate diagnoses, and robots are assisting surgeons in performing technically tricky procedures, there’s still much room for improvement. IBM’s Watson is an example of a system that’s aiding expert knowledge. But how is an expert to best use this information?

We’re still in uncharted territory here, but according to Daniel C. Dennett, philosopher at Tufts University, it will become increasingly important for experts to have a solid understanding of what these systems can and can’t do. In addition to knowing their area of specialty, experts will have to have knowledge of the computing systems that they rely on to make judgments, in order to know how to balance those systems’ recommendations with their own analysis.

Additionally, there should be a way to make sure that use of these systems doesn’t turn into over-reliance. According to Dennett, it’s important that we don’t endow next-generation AI systems with more confidence in their abilities than is warranted. Just as important, we can’t let our own expertise erode in the process.

In the case of augmenting expertise, designers could take a lesson from exercise technology that aids runners or cyclists in their training, increasing their fitness for competition. In the most effective cases, athletes gain fitness, so that during competitions they can compete at a higher level. AI wouldn’t just be used to augment decision-making; it would also offer training for subject-matter experts to improve their own abilities.

Enabling innovation. The final scenario for augmentation is to enable innovation. Such work requires experimentation, creativity, and inventiveness; in these cases the data is “big,” unstructured, and volatile. This is the sort of thing humans do relatively well, but computers can help us do it even better, and the Edge.org participants had much to say with regard to this type of AI augmentation.

“The world faces a set of increasingly complex, interdependent and urgent challenges that require ever more sophisticated response,” write Demis Hassabis, Shane Legg, and Mustafa Suleyman of Google DeepMind. “We’d like to think that successful work in artificial intelligence can contribute by augmenting our collective capacity to extract meaningful insight from data and by helping us to innovate new technologies and processes to address some of our toughest global challenges.”

Other contributors note the potential of tools to enhance imaginative processes when writing prose, composing music, or developing data visualizations before business presentations. Maybe the most intriguing application will be in better enabling people, with all their innate cognitive biases, to decide which innovation to pursue in the first place. By “imparting a degree of informational transparency and predictive aptitude,” these machines may one day figure out how to “motivate[s] us to…insist upon empiricism in our decisions,” as Spotify’s D.A. Wallach notes.

The brain as an interface for AI. According to some Edge respondents, the kind of interactions we have with AI could drastically change from external systems to implantable neural prosthetics. Sean Carroll, theoretical physicist at Caltech, notes that barriers between the mind and artificial machine are breaking down. By looking at the state-of-the-art of brain-machine interfaces today, he provides a possible outline for the future.

“We have primitive brain/computer interfaces, offering hope that paralyzed patients will be able to speak through computers and operate prosthetic limbs directly,” Carroll writes. He then goes on to discuss DARPA-sponsored research where “researchers have discovered that the human brain is better than any current computer at quickly analyzing certain kinds of visual data, and developed techniques for extracting the relevant subconscious signals directly from the brain, unmediated by pesky human awareness.”

On future possibilities, Carroll writes: “Ultimately, we’ll want to reverse the process, feeding data (and thoughts) directly into the brain. People, properly augmented, will be able to sift through enormous amounts of information, perform mathematical calculations at supercomputer speeds, and visualize virtual directions well beyond our ordinary three dimensions of space.”

Carroll’s prediction is heady stuff and it applies to all three types of augmentation outlined above. Effectiveness, expertise, and innovation can all be improved when the physical and psychological barriers between human and machine are reduced or at the very least smoothed over. It should be a goal of designers, then, to consider the interactions that best foster these powerful, emerging collaborations.

May 25, 2015

Getting More Women into Senior Management

In my first job out of college, in the late 70s, I was the only woman on the manufacturing floor as a production manager at General Motors. It didn’t take long to realize that I should have asked a few more questions during my job interview. I knew I was in for a challenge the first day I walked into the plant, and everything and everyone stopped. It was like one of those hushed movie moments. Everyone stared at me. At first I thought there was something wrong with my appearance, but I soon realized I was the first and only woman to step onto the production floor for GM in a management role. My second day on the job, someone started a fire in my wastebasket. Later that same day, someone sabotaged all the parts that were coming down the assembly line in my area.

I’d taken the job because I knew it would be challenging. But many of the challenges I faced had nothing to do with learning to do my actual job. One of the first things I had to do was prove my worth to people who believed I had no business on a manufacturing floor.

We’ve come a long way since then — or have we? We’ve been talking about how to get more women in leadership roles for, literally, decades. The term “glass ceiling” was coined more than 30 years ago. And yet women still hold less than 20% of the seats on corporate boards at S&P 500 companies. Why?

I met with a CEO recently who said he was trying to promote more women and increase the diversity of his leadership team, but nothing seemed to be happening. In fact, they were actually going backwards — the only woman on his leadership team had just resigned. Add to that, after a decade on top of the market, they were losing market share to a competitor. The desire was there, and the business need was urgent, but it wasn’t happening. And the CEO asked me why.

“I’ve got good news for you,” I said. “I’ve found the problem and a significant part of this issue is you.”

The CEO sat up in his chair in disbelief. “Tell me more.”

I said, “You have made it clear to leaders throughout the organization that diversity is a priority, but this expectation has had no teeth. And within your own team, you simply didn’t walk the talk with the only woman on your executive team – and she just gave you her resignation. So while you speak about the importance of diversity and gender-balanced teams, I don’t see that really happening.”

I’ve worked with dozens of companies like this. Far too many business leaders are, with the best intentions, failing to put the necessary muscle behind efforts to promote more women. Those organizations that understand the business imperative for gender-balanced teams and inclusive cultures realize that it is a three-legged solution: men, women, and the organization all have a part to play. Yet to move beyond conversation to action, there are four things corporate leaders can do to finally, after decades of talk, actually get something done on this issue:

1. Set clear, numerical goals. Call them quotas if you want, but there’s simply no other way to overcome entrenched biases than to set clear goals and hold leaders accountable for meeting them. What would happen if you gave your sales team an expectation that they’d increase sales, but didn’t tell them by how much, or when you were going to evaluate their progress? Corporate boards in Europe have far more women than boards in the U.S., not because Europe is more progressive than we are, but because multiple European countries, including, most recently, Germany, have passed laws requiring 30-40% of board seats to go to women. We could pass a similar law here — or corporate leaders could set enforceable goals themselves. I would rather see corporate leaders step up than have to rely on government action.

2. Show your commitment through actions, not words. The CEO and other top leaders should sit on the committee responsible for developing diverse slates for promotions and for new hires. If the boss isn’t visibly committed, it’s not going to happen. That’s what I told that CEO who asked me for help — and his executive team is now 25% female.

Given the state of corporate leadership today, however, just putting the executive team front and center on this issue won’t do it. You also need to think about who’s on the front lines doing your recruiting, your hiring, and your development of new leaders. Sending three middle-aged white men in suits to a college campus to recruit will probably net you a bunch of resumes from young white men. It matters who makes the ask — make sure the future leaders you want can see themselves in the current leaders who are reaching out to them.

3. Broaden criteria when necessary. When companies look for someone to fill a board seat, they usually look for a CEO. That expectation automatically eliminates 90% of the women in the market. Corporate leaders need to start thinking more broadly about the actual skill sets they need on their boards. I would argue that there’s a real need for people with experience in HR, in IT, in infrastructure, and you can find women who have all of these competencies. There’s no reason your board has to be made up entirely of CEOs, and there’s plenty of evidence to suggest that diversity of experience, as well as other kinds of diversity, brings important benefits to boards.

The same goes for other leadership roles. Research has shown that women tend to take job descriptions at face value, and not apply for positions if they don’t have the skills or experience listed, while men are more likely to reach for jobs they might be under-qualified for. Rewriting job descriptions to emphasize cognitive skills over specific types of experience could go a long way to encouraging more women to apply for positions that would challenge them and put them on the executive track. Changes like this would also force companies to be more specific and thoughtful about what skills they really need to hire for.

4. Build a culture of inclusion and innovation. This is as broad as building and sustaining an inclusive, collaborative culture and rethinking old systems and processes such as recruitment, performance management, succession planning, and talent development to ensure there is a non-biased and consistent process to identify, develop, and advance leaders in the organization. But it’s also as narrow as individual male leaders deciding to serve as champions for change. That CEO I worked with was surprised when the only woman on his executive team left the organization. He shouldn’t have been. At meetings, she consistently found it difficult to get the floor. Often, she’d make a point and nobody would acknowledge it; then, three minutes later, one of the men at the table would make the same point and get all the credit. No wonder she left! It was the CEO’s responsibility to make sure her voice was heard and her contributions were acknowledged.

The obstacles women face in today’s workplaces tend to be a lot subtler than a fire in a wastebasket. If we want to finally make real progress on promoting more women, after decades of talking about it, men, women and organizations all need to step up and take decisive action to make it happen.

When Should You Fire a “Good Enough” Employee?

Craig*, a VP of inventory for a food packaging company, had always been a high performer. He had been with the company three years, had a reputation for taking an innovative approach, and had good relationships with his team. Craig’s boss, Louise*, had come to count on Craig for his expertise and experience. During a factory move, however, Craig began to disappoint. He took many personal days during the move, and Louise found herself stretched thin covering for him. To add fuel to the fire, when Craig was asked to onboard several new employees, he pointed to a lack of HR leadership as an excuse for delaying the process indefinitely.

So was Craig’s performance an anomaly or a canary in the coal mine? After giving Craig direct feedback, Louise watched in dismay as he failed to take the initiative she had hoped for. Now she was really in a bind—how much credit did she give him for past performance? Should she settle for “good enough” going forward, or was it time to let Craig go?

The decision to terminate an employee is never easy. Firing someone you’ve worked with for years, especially someone you know and respect, is often excruciating. Even the most experienced managers lose sleep over it. It’s almost impossible to take the emotion out of what is a very personal decision—even when it’s a decision that makes rational economic sense on paper.

So amid all of these conflicted feelings, how do you know if your employee is still an A-player worthy of another chance? How do you know when enough is enough?

Let’s assume you’ve done everything right up until this point—you’ve delivered honest and constructive feedback about your employee’s performance. You’ve set realistic goals and objectives for him or her to meet, with a concrete timeline to follow. And of course, you’ve asked your employee for his or her input around how to improve performance as well. From a management perspective, at least, you’ve done everything in your power to set expectations, give guidance, and empower your underperforming employee to step up to the plate.

If that still doesn’t work, what then? Ask yourself the following three questions to help shed light on the right course of action:

1. Is your employee meeting the responsibilities listed on his job description? This is the baseline, and yet many of us don’t refer back to a job description after we’ve completed the hiring process. Neither do our employees. But by revisiting a job description well into an employee’s tenure, a manager can assess how well the employee’s work aligns with it. The manager can then have a meaningful discussion about each part of the role, recalibrate the employee’s priorities, and revise the job description as needed. If the employee’s performance doesn’t match the current or revised job description, it is time to terminate.

In Craig’s case, was he good enough to keep things moving along at the factory? Yes. Was he performing all of his responsibilities with excellence? No. Was he maintaining his role as an exemplary leader of the organization? No. Unless there are extenuating circumstances, an employee who isn’t doing his job brings everyone down. It’s not fair to you, it’s not fair to them and it certainly doesn’t do your clients or customers any good either.

2. Can the market offer you a better employee at the same price? Louise was especially trigger-shy because she hadn’t done any succession planning for Craig’s role. How hard would it be to replace him? How much time, energy and resources would she need to invest to find someone with the skill, talent, and dedication she needed?

We all know that replacing talent is an expensive proposition. Data shows it can cost anywhere from 20% of one’s salary to upwards of 200% of one’s salary to replace an executive. So there’s no question that the hiring process is daunting, but with few exceptions, everyone is replaceable (as much as we’d like to think otherwise).

Craig was a valued employee with a solid history of performance, yet his eventual replacement brought a fresh pair of eyes, diverse thinking, and a powerful professional skillset. In the end, markets are efficient and talented employees looking to progress in their careers are abundant. Managers need to keep this reality in mind, even though it can be hard to see in the moment.

3. If the employee resigned today, would you fight to keep him? This is the final litmus test. By reframing the question this way, you will candidly address your internal debate: How would you feel if he left you? Devastated? Then maybe the relationship is salvageable. Relieved? Then it’s time to show your employee the door.

Louise ultimately realized she was hanging onto Craig because he was the devil she knew. That’s not a good enough reason to retain a good-enough employee. Like Louise, you will find another employee who will exceed your expectations and make you question why you waited so long to act in the first place. And Craig? He has been free to move on to another job with a better fit. While it may be incredibly difficult in the moment, it’s often better for everyone in the long run.

*Craig and Louise are based on real people, though their names have been changed.
