Josh Clark's Blog, page 11

January 7, 2020

You’re Not Late to Machine Learning

Algorithms have gotten into everything, right? For better or worse, machine learning determines the news we see, the movies we watch, the products we buy, and the way we drive home. In fact, it might seem like AI is everywhere you look, except um… your own company’s app or website?

Never fear, you’re not late to this, and you’re not behind. Even as AI has become pervasive in our individual lives, it’s not yet widespread in product organizations. Only a select few companies have adopted machine learning as an ordinary part of doing business and building products, but that set is growing faster than you might think.

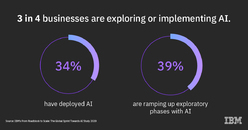

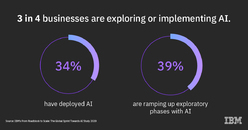

An IBM report released this week underscores the speed of change. In a survey of 4500 companies around the world, 34% say they’ve adopted AI (for large companies with 1000+ employees, it’s 45%). Another 39% are ramping up in exploratory phases. “If you look forward to the next 18 to 24 months, that’s probably going to change to 80% to 90% adoption of AI across the board,” said IBM VP Ritika Gunnar at CES yesterday.

An IBM survey found that 3 of 4 companies have adopted or are experimenting with AI.

For many of the companies already working with this new design material, sprinkling AI into a product or business process has become just another part of everyday software creation. For the managers, designers, and developers at these companies, it’s already second nature to collect data around a product and apply machine learning to make that product better or more personalized.

These organizations may be in the vanguard, but we’ll all join them soon. There’s nothing magic about these companies or their underlying technologies. Machine-learning models are readily available, even as plug-and-play services that you can start using within the hour. You don’t have to build the whole thing yourself. The tools are already available to all, and yes, that includes you, your team, your organization. Here at Big Medium, a growing portion of our product work is bringing AI and machine learning to products whose companies are using it for the first time. It’s starting to happen for everyone now.

Just as mobile defined the last decade of digital product design, machine learning is already defining the next. The arrival of mainstream machine learning is as transformative as the advent of relational databases in the 1980s, the web in the 1990s, or mobile in the 2000s. And like those technologies, designing with AI is bound to become the everyday norm. Before long, pretty much everything will have a little machine learning inside, and that will be no big deal. This technology is not only within the reach of all, it’s soon to be expected of all. We’ll all be making software with casual intelligence.

You’re not behind, but it’s time to get after it. The good news is that this is not a winner-take-all race. The big technology companies may be slurping up all the data they can, but no matter how large their hoard becomes, it doesn’t mean that they “win” at AI. A company may dominate a specific domain, but data is highly specific to any application. No matter how many photos Facebook collects to become the best at face recognition, that has no bearing on the system you create to recommend products to customers, or plan your delivery logistics, or identify new customer behaviors. Machine learning is only the enabling infrastructure layer. The data you bring to it and the way you use it are completely your own, and outside the reach of the data-thirsty tech giants.

What matters much more, and is still very much in play, is how to best put these powerful new tools to use. The last couple of years have shown that even the big tech companies are still sorting that out. If anything, the first generation of mainstream AI products has shown us as much about what not to do as what we should. AI has not so much come of age as reached an awkward adolescence. At Big Medium, we’re doing what we can to help it grow up. Much of our recent product work focuses on helping clients create design patterns that put the algorithm to work in meaningful, respectful, and responsible ways. We’re still inventing this together. You’re right on time.

Are you or your team wrestling with how to adopt and design for machine learning and AI? Big Medium can help with executive sessions, workshops, or full-blown engagements for product design and development. Get in touch.

December 6, 2019

AI Dungeon

It is pitch black. You are likely to be eaten by a generative text model.

AI Dungeon is a pretty astonishing example of generative fiction, in the form of an old-school text adventure game: “Imagine an infinitely generated world that you could explore endlessly, continually finding entirely new content and adventures. What if you could also choose any action you can think of instead of being limited by the imagination of the developers who created the game?”

This experiment by Nick Walton is built on top of OpenAI’s recent GPT-2 model for generating text after being primed with an arbitrary input (seed text to establish context). Unlike many prior language models, this one generates entire coherent paragraphs that feel a world ahead of prior-art attempts that simply string plausible words together.

Walton loaded the model with training data from a collection of text adventures obtained from chooseyourstory.com, and then let ’er rip. “The model at times shows remarkable understanding and writing quality like in this sample where the player tries to find love on a deserted island,” Walton writes:

You head to the island and explore it. You find it completely deserted save for a single tree. You walk up to it and examine it closely. It seems to be made of wood, but its leaves seem to be green rather than normal leafy green.

> find love

You take a deep breath and stare intently at the tree. Your eyes meet with the tree’s. You immediately feel a strong sense of euphoria. You smile and touch the tree. Its bark feels warm to the touch. You sniff the tree and find that its scent is very pleasant.

The unfolding adventures are reasonably coherent, too, and generally hang together as you go along. Unlike the virtual assistants many of us use today, this one actually remembers the thing you said to it moments before. Walton says every command is accompanied by the last eight commands and responses, effectively giving the game a short-term memory to maintain context. Even as you throw curve balls at the story engine, it works hard to make it fit what came before. Here’s a snippet from one of the resulting adventures:

"I am a goblin!" you say proudly. "And I'm glad to be one".

"Well then, congratulations," says the orc captain. "We'll let you live". You nod and walk away from the camp.

> ask if you can join the orc band

The orcs don't want to fight anymore so they're looking for someone to help them out in their new endeavors. They offer you a chance to join up with them as a drummer. It would allow you to play music while helping out around camp.

> tell them we should start a musical troupe

They look at you skeptically but agree to give you a try. You tell them about your plans to create a musical troupe and how you plan on using the orcs to help you out.

> teach them how to play the flute

You teach them how to play the flute and soon enough they're playing for you all the time. The orcs seem to enjoy it and they begin to think of you as their leader. Soon other orcs come to you asking to join too.

> accept them into your musical groupe and train to be the best musicians

You continue to teach them and eventually they become better than you. You learn that you need to practice more and more often until you can master the art of playing the flute. Eventually you find yourself practicing every day and finding it very difficult to sleep.

> take orcs to perform at a nearby festival

You take the orcs to perform at a local festival where you get to meet some of the other orcs you've trained. This is followed by another success when the orcs manage to attract the attention of the Emperor himself who grants you permission to use his personal carriage to travel around the Empire.
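The short-term memory Walton describes (each new command travels with the last eight commands and responses) can be sketched as a rolling window. This is only an illustration, not AI Dungeon's actual code: `StoryMemory`, `build_prompt`, and the stand-in `fake_model` are hypothetical names, and the `> command` prompt layout simply mirrors the excerpts above.

```python
from collections import deque


class StoryMemory:
    """Keep only the most recent command/response exchanges as context."""

    def __init__(self, max_turns=8):
        # A deque with maxlen drops the oldest exchange once it is full.
        self.turns = deque(maxlen=max_turns)

    def remember(self, command, response):
        self.turns.append((command, response))

    def build_prompt(self, new_command):
        # Replay the remembered exchanges, then append the new command.
        lines = []
        for command, response in self.turns:
            lines.append(f"> {command}")
            lines.append(response)
        lines.append(f"> {new_command}")
        return "\n".join(lines)


def fake_model(prompt):
    # Hypothetical stand-in for the text generator; a real system
    # would send the prompt to a language model here.
    return f"The story continues ({len(prompt)} characters of context)."


memory = StoryMemory(max_turns=8)
for i in range(10):
    command = f"command {i}"
    prompt = memory.build_prompt(command)
    memory.remember(command, fake_model(prompt))
```

After ten commands, the two oldest exchanges have fallen out of the window. That is the trade-off in this kind of memory: the story stays coherent over the recent past but forgets anything older.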

Why this matters

This is a toy, of course, but it’s a nifty demonstration of how the GPT model can be used on an arbitrary data set to create fairly compelling responses. This stuff remains primitive compared to human prose; AI Dungeon isn’t going to write award-winning fiction, but it does hint at ways that it could help human writers by proposing directional text. In a Hacker News thread, Walton wrote:

This doesn’t reach near the level of good human authors. There’s no long term plot or deep human themes in this. I don’t think this will ever replace quality human writing, but it may be able to augment it in cool ways. I personally would love if rather than every guard in Skyrim telling the exact same story, if each guard could have their own stories or comments generated based on things about their life. Human authors could provide high level details and let AI generators fill in the smaller details.

As with so many instances of machine learning, in other words, the best application here is not to replace human efforts but to augment them. What might be the role for this in supporting common or repetitive writing tasks? In supporting customer-support teams providing tailored responses to frequently asked questions? In giving automated agents better comprehension of the task we want them to accomplish?

AI Dungeon

December 5, 2019

What is the Role of an AI Designer?

Facebook’s Amanda Linden shares how AI product designers approach their work in Facebook’s Artificial Intelligence team:

There are big differences in the role of a typical product designer and an AI designer. Rather than launching a product feature that shows up in an app in an immediate and obvious way, our output is often clarity for engineers on how the technology could be applied. Because AI capabilities might take 2–3 years to develop, it’s important for designers to help developers understand the potential of different solutions and their impact on people’s lives when developing AI.

Linden details several roles that designers play in shaping AI at Facebook: not just how it’s applied and presented, but how it’s conceived and built:

Designing AI prototypes

Shaping new technology

Developing AI centered products

Collecting data for AI to learn

Designing AI developer tools

We’re in a peculiar moment when many designers have a hard time imagining a role with artificial intelligence and machine learning, because it departs in so many ways from traditional product design. Here’s the thing: design’s superpower is understanding how technology can support human goals and ambitions, how to make technology fit our lives instead of the reverse. Developers and algorithm engineers have shown us what’s possible with AI. Now it’s the designer’s role (and responsibility!) to shape how it’s conceived and presented for meaningful use. That’s why AI and machine learning matter for design teams.

Amanda Linden | What is the Role of an AI Designer?

December 4, 2019

With Brits Used to Surveillance, More Companies Try Tracking Faces

The Wall Street Journal reports that companies are using the UK’s omnipresent security cameras as cultural permission to bring facial-recognition tech to semi-public spaces, tracking criminal history but also ethnicity and other personal traits. “Retailers, property firms and casinos are all taking advantage of Britain’s general comfort with surveillance to deploy their own cameras paired with live facial-recognition technology,” writes Parmy Olson for the Journal ($). “Companies are also now using watch lists compiled by vendors that can help recognize flagged people who set foot on company property.” For example:

Some outlets of Budgens, a chain of independently owned convenience stores, have been using facial-recognition technology provided by Facewatch Ltd. for more than a year. Facewatch charges retailers for the use of a computer and software that can track the demographics of people entering a store, including their ethnicity, and screen for a watch list of suspected thieves through any modern CCTV camera. The system works by sending an alert to a staff member’s laptop or mobile device after detecting a face on the watch list. Retailers then decide how to proceed.

Why this matters

Assumptions about appropriate (or even inevitable) uses of tech become normalized quickly. As constant surveillance becomes the everyday, it’s all too easy to become resigned or indifferent as that surveillance deepens. Once the cultural foundation for a new technology sets, it’s difficult to change the associated expectations and assumptions, or see the status quo as anything other than inevitable, “just the way things work.” We see it in the decades-long expectation that online content is free and ad supported. We see it in the assumption that giving up personal data is just table stakes for using the internet. And now, with surveillance cameras (at least in the UK), we may be settling into a new expectation that simply moving through the world means that we are seen, tracked, monitored in a very granular, personal way.

The Journal suggests that the UK’s “comfort” with surveillance cameras makes it ripe for this. A 2013 survey found that Britain had the highest density of surveillance technology outside of China. Since then, the number of surveillance cameras in the UK has nearly doubled from six million to 10 million: one camera for every seven people.

This anti-theft surveillance affects more than just the guilty. Facial recognition is still pretty iffy in real-world conditions, and the false positives these systems generate could lead to harassment for no good reason except that you walked into the store.

James Lacey, a staff member at one Budgens store in Aylesbury, southern England, said the system can ping his phone between one and 10 times a day. People have been known to steal large quantities of meat from the store’s refrigeration aisle when staff members are in the stock room, he said. The new system has helped, he said, though about a quarter of alerts are false. A spokesman for Facewatch said a maximum of 15% of alerts are false positives, based on its own analysis.

(Related: an ACLU study in 2018 found that Amazon’s facial-recognition service incorrectly matched the faces of 28 members of Congress to criminal mugshots.)

Automated identification has implications beyond crime prevention. What’s OK for these corporate systems to track in the first place? Gender? Race and ethnicity? Income? Browser history? Social relationships? Voting record? Sexual preference? The folks at Facewatch promise vaguely that tracking ethnicity “can help retailers understand their marketplace.” This smacks of a shrugging sensibility that “we can do it, so why wouldn’t we?” And that’s the worst reason to use a technology.

Regulation is evolving, but remains vague and often unenforced. Europe’s well-intentioned privacy regulation, the GDPR, puts facial and other biometric data in a special category that requires a company to have a “substantial public interest” in capturing and storing it. That’s fuzzy enough that it arguably allows companies to use the technology to fight crime. Tracking ethnicity to “help retailers understand their marketplace” seems like less of a slam dunk. There is also a gray area around how long businesses can hold on to such footage, or use it for other business purposes.

We should adopt a position on this stuff both culturally and civically. If we don’t, the technology will decide for us. What will your company’s position be? And how about you? What’s your stance as a practitioner designing the technology that will set the behaviors and expectations of the next generation?

WSJ ($) | With Brits Used to Surveillance, More Companies Try Tracking Faces

December 3, 2019

Facebook Gives Workers a Chatbot to Appease That Prying Uncle

Facebook sent employees home for the holidays with robot talking points, in case the family had any questions about, y’know, the company’s cynical, grasping, overreaching, damaging, and irresponsible business model and use of technology. (Bots, it seems, are the only ones left who can deliver these lines with a straight face.) The New York Times reports:

If a relative asked how Facebook handled hate speech, for example, the chatbot, which is a simple piece of software that uses artificial intelligence to carry on a conversation, would instruct the employee to answer with these points:

Facebook consults with experts on the matter.

It has hired more moderators to police its content.

It is working on A.I. to spot hate speech.

Regulation is important for addressing the issue.

It would also suggest citing statistics from a Facebook report about how the company enforces its standards.

The New York Times | Facebook Gives Workers a Chatbot to Appease That Prying Uncle

March 29, 2019

Inmates in Finland are training AI as part of prison labor

Grooming data for the machines has a human cost. The Verge reports that startup Vainu is using prisoners in Finland to tag Finnish-language articles. The company uses Mechanical Turk to do this for other languages, but Finnish-speaking turks are hard to come by. So they get (and pay) prison inmates to do it.

There are legit concerns about exploiting prisoners for low-wage labor, but perhaps a broader concern is that this hints at a bleak future of work in the age of the algorithm. Indeed this “future” is already here for a growing segment of humans, where Mechanical-Turk-level labor turns out to be, literally, prison labor.

This type of job tends to be “rote, menial, and repetitive,” says Sarah T. Roberts, a professor of information science at the University of California at Los Angeles who studies information workers. It does not require building a high level of skill, and if a university researcher tried to partner with prison laborers in the same way, “that would not pass an ethics review board for a study.”

While it’s good that the prisoners are being paid a similar wage as on Mechanical Turk, Roberts points out that wages on Mechanical Turk are extremely low anyway. One recent research paper found that workers made a median wage of $2 an hour.

As we design the future of technology, we also design the future of work. What might we do to improve the quality and pay of labor required to make automated systems work?

The Verge | Inmates in Finland are training AI as part of prison labor

March 28, 2019

Why Machine Learning and AI Matter for Design Teams

Machine learning is everywhere these days, powering the services, products, and interfaces that all of us use every day. Yet many designers and organizations are still on the sidelines without a clear vision of how to work with this technology. Many aren’t sure if there’s a role for them at all. Fact is, there’s a critical role for design in the era of the algorithm, and your organization almost certainly has what it needs to jump in today.

I’ve been bringing that message home to client companies as we work together to craft products powered by machine learning. But more and more, I’ve also been bringing these perspectives and techniques to stages and workshops around the world. If you’re interested in leveling up your data literacy as a designer, I invite you to join me for one of these sessions. (As I write this, I have workshops scheduled for London, Amsterdam, Connecticut, and Ottawa. As always, keep an eye on the Talks page for upcoming talks and workshops, or get in touch if you’d like me to visit your organization.)

I sat down recently for a Q&A with the good folks at Connecticut UXPA to preview what designers will learn at the April 19 workshop we’re preparing together. Maybe even more important, we talked about why designing for machine learning should be on the critical path for all design teams today. Here’s the conversation:

Q: Why do designers need to care about machine learning and artificial intelligence?

Perhaps the real question is why wouldn’t you care about it? In the same way that mobile defined the last decade of digital product design, machine learning is already defining the next. It’s the engine behind every single one of today’s important emerging interactions: voice interfaces, computer vision, predictive interfaces, bots, augmented reality, virtual reality, as well as so many of the sensor-based interfaces behind the internet of things. So if you’re interested in understanding the design of these new channels and platforms, machine learning is your fundamental design material.

But beyond these emerging and next-generation interactions, A.I. is already driving so many of the digital products all of us use every day. Algorithms determine what you see in your Facebook and Instagram feeds. They predict what you’ll want to watch on Netflix. They suggest what you should buy at Amazon. They identify fraudulent use of our credit cards. They tell us how to drive home from work.

Designing for machine learning isn’t for the hand-wavy future. This is very much for the here and now.

All of the most successful digital products now have machine learning either at their core, or as an important enhancement to the core offering.

It’s amazing how quickly algorithms have become so woven into the fabric of nearly every moment of our lives. That means it’s also urgently important that those experiences be designed with intention and skill. There’s a critical role for designers here.

Q: Most designers don’t work for one of those high-flying companies. What if your company doesn’t use machine learning right now, and doesn’t have the in-house expertise of a Google, Amazon, or Facebook?

There’s a common assumption that a company has to have a vast army of algorithm engineers and data scientists in order to put machine learning to work. But it turns out the underlying technologies are widely available and don’t even require deep expertise to get started. If you have interesting data (and most companies do), most developers can create interesting machine-learning applications.

But here’s the really exciting thing. There are lots of A.I. services available that don’t require any engineering or data science know-how whatsoever. The big players like Microsoft, IBM, Google, and Amazon all offer practically free services for speech recognition, image recognition, product recommendation, and more, and the technical bar to use them is incredibly low. A typical designer and web developer can pair up to create their own machine-learning product with astonishingly little effort. All of us have easy access to the superpowers we associate with these tech giants.

In the workshop, we’ll explore those services together and actually work with them. Designers are able to see and understand how they can use the results those services provide as design material in their work. These are tools designers can use today (like right now) with the skills they and their colleagues already have. Designers will leave the workshop with a knowledge of technologies and services to help their companies start working with machine learning immediately.

So it turns out that the technology is not the biggest challenge. The harder thing is identifying meaningful ways to use that technology. That’s the work of product design and UX design. The job and opportunity for designers is to point the machines at problems worth solving, and to present the results in ways that are meaningful and useful to our customers.

That’s another big focus of this workshop. We’ll work through some techniques for ideation to identify opportunities for machine learning in everyday products.

Q: What does that look like? I assume we’re not talking about the everyman designer building a self-aware, all-knowing computer intelligence personality?

Ha, that’s right. The term “artificial intelligence” has gotten away from us, and it’s used by marketers to describe everything from the most basic automated system to sci-fi visions of sentient robots. What we’re talking about in this workshop is a practical middle that is in some ways mundane but still incredibly powerful.

Machine learning is basically pattern matching at unprecedented scale. It takes a mountain of historical data and locates patterns that an algorithm can recognize in new data it receives. That means machine learning can identify or categorize information (or images, sounds, products, you name it). But it can also predict or recommend what’s next: based on past history, here’s the most likely thing that will happen or that you should do. Put another way, machine learning figures out what’s “normal” (most common) for any specific context and then predicts the next normal thing, or identifies things that aren’t normal (fraud, crime, disease, etc).
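To make “predict the next normal thing” and “identify things that aren’t normal” a little more concrete, here is a deliberately tiny sketch. It is plain counting and averaging, not a real machine-learning model, and every name and number in it (`learn_next_step`, `is_unusual`, the sample histories and charges, the threshold of three standard deviations) is made up for illustration.

```python
from collections import Counter, defaultdict
from statistics import mean, stdev


def learn_next_step(histories):
    """Count which action most often follows each action in past sessions."""
    follows = defaultdict(Counter)
    for history in histories:
        for current, nxt in zip(history, history[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows, current):
    """Return the most common follow-up action, or None if never seen."""
    if current not in follows:
        return None
    return follows[current].most_common(1)[0][0]


def is_unusual(past_values, new_value, threshold=3.0):
    """Flag a value that sits far from what has been typical so far."""
    mu = mean(past_values)
    sigma = stdev(past_values)
    if sigma == 0:
        return new_value != mu
    return abs(new_value - mu) / sigma > threshold


# Hypothetical browsing sessions and card charges.
histories = [
    ["search", "product", "cart"],
    ["search", "product", "cart"],
    ["search", "product", "reviews"],
]
follows = learn_next_step(histories)
charges = [12.0, 15.5, 9.99, 14.25, 11.0, 13.75]

suggestion = predict_next(follows, "product")
suspicious = is_unusual(charges, 950.0)
```

Real systems learn far richer patterns from far more data, but the shape is the same: summarize history, then either suggest the most typical next step or flag whatever falls outside the typical range.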

Practically speaking, all of this means that machine learning lets you do four new things:

Do better at answering questions we already ask.

Answer new questions. Using sentiment analysis, for example, a customer care center can now search emails for “angry” or “upset” instead of traditional keyword searches.

Mine new sources of information. Until now, all the messy ways that humans communicate have been opaque to the machines. Suddenly, images, doodles, speech, gestures, facial expression… it’s now all available as meaningful data (or even as surfaces for interaction) thanks to machine learning.

Uncover invisible patterns. Machine learning can find patterns in vast troves of data that were historically invisible to us. That means we can identify new customer segments, new buying patterns, popular running routes, micro-communities, even sources of disease.

In the workshop, we’ll experiment with each of those four kinds of opportunities. Designers will come away with a firm grounding in how you can use each of them to create entirely new products or, just as powerfully, to improve existing ones.

Q: What are some examples of improving existing products? For designers who aren’t yet working with machine learning, how will this workshop help them in their everyday work?

Sprinkling a little intelligence into a page with machine learning should soon be as commonplace as sprinkling a little interaction into a webpage with JavaScript. That’s already the case for some companies, and the same will follow for everyone else.

I’m talking about small, even casual interventions. Think about predictive text in your smartphone’s keyboard. That’s machine learning suggesting the next word based on what you’ve already typed: a small intervention that improves customers’ lives by helping in the tedious and error-prone task of touchscreen typing.

Or consider Google Forms, the survey-building tool. When you add a new question, you have to tell it the format of the answer (multiple choice, checkboxes, linear scale, etc.). As you type your question, Google Forms identifies the category of question and changes the default answer type based on the wording, a convenient bit of intelligence that makes the process easier.

Or think of a CMS that suggests a caption or alt-text description for images that are uploaded.

All of these examples are almost startlingly mundane. One of the messages that I want designers to absorb is that machine learning isn’t just about creating new kinds of products. It also gives you tools to add high polish and deep convenience to existing applications. The tools are here and ready for us to use, but it takes a perspective shift. We have to get as cozy with casual applications of machine learning as we are with JavaScript, or with designing for small screens.

Q: What expertise does this require? There’s already the ongoing debate about whether designers should code. Do designers now have to be data scientists, too?

You don’t have to be a data scientist to design for machine-generated content and interactions. For better or worse, though, many machine-generated interfaces have been designed by engineers and data scientists. Those folks have been enormously helpful by showing us what’s possible, what machine learning can do. But we’ve also seen flaws. We’ve seen machine learning pointed at the wrong problems. Or we’ve seen interfaces that don’t reflect the actual confidence (or uncertainty) of the underlying results.

And those are design problems. The presentation of machine-generated results is at least as important as the underlying algorithm.

So, no, you don’t have to be a data scientist to design for machine learning. But you do need to be data-literate. You have to understand the strange new texture of this design material, and some of the uncertainty and weirdness that it introduces into our designs.

An umbrella theme of the workshop is learning to understand the new perspectives and techniques that are required when you’re designing for machine-generated content. We put those practices to work in very concrete, practical, and actionable ways.

Q: What about the other way around? For data scientists already working with machine learning, would they benefit from learning about related design and UX considerations?

I love it when data scientists and algorithm engineers join these workshops, because it’s an opportunity to introduce design perspective to the way they think about their work. How do you explain the results of the algorithm in ways that are meaningful and intuitive to end users? How do you translate the numeric confidence of the algorithm into everyday language?

Data scientists understand that these systems are probabilistic. The systems report the statistical likelihood that something is true, but nothing is black and white. So one of the interesting challenges, for both designers and data scientists, is how to express the results as signals or suggestions, not facts. It’s a new kind of design. Manner and presentation become critical: things that designers consider every day, but that aren’t necessarily obvious when the data folks are building or tuning models.
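As a rough illustration of translating numeric confidence into everyday language, here is a minimal sketch. The function name, the cutoffs (0.9 and 0.6), and the phrasing are all assumptions chosen for the example; the right thresholds and wording depend on the model and on what users actually understand.

```python
def describe_prediction(label: str, confidence: float) -> str:
    """Turn a model's label and confidence score into hedged, plain language.

    The 0.9 and 0.6 cutoffs are illustrative assumptions, not standards.
    Even the most confident phrasing stays a suggestion, not a fact.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= 0.9:
        return f"This looks like {label}."
    if confidence >= 0.6:
        return f"This might be {label}."
    return f"We're not sure, but this could be {label}."


print(describe_prediction("a golden retriever", 0.95))  # This looks like a golden retriever.
print(describe_prediction("a golden retriever", 0.70))  # This might be a golden retriever.
print(describe_prediction("a golden retriever", 0.30))  # We're not sure, but this could be a golden retriever.
```

The code is trivial on purpose. The hard part is the design decision about where the cutoffs sit and what each phrase promises.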

Q: Who else will benefit from the workshop?

This workshop is all about understanding how machine learning fits into the everyday practice of building digital products. Like all good UX design, this ultimately means how we triangulate user needs, business goals, and the technology’s capabilities. That’s a conversation that benefits not only designers, but also product owners, researchers, and developers: everyone involved in the product process. That also includes managers and executives trying to figure out how machine learning applies to their company or industry.

Q: You mentioned the role of machine learning in today’s emerging interfaces. How will the workshop help attendees explore the design of voice assistants, bots, physical interfaces and the rest?

We’ll dip our toe into all of these to talk about some of the unique considerations and challenges of each. For assistants and bots, for example, we’ll look at some of the pitfalls of creating interfaces that ape human behavior, and some techniques and perspectives that can help to avoid the biggest problems and pave the way for success.

Across the board, the biggest thing you can do is be transparent about what the system does and what it’s good at. Our work as designers is always to set good expectations and channel behavior in ways that match the capabilities of the system. But often, machine-generated systems over-promise. Alexa and Siri invite us to ask them anything, but that sets up an expectation that is far beyond their capability. They constantly disappoint us, even though they are astonishing technologies. It’s not a tech problem, it’s an expectation-setting challenge. That’s design work.

One of the biggest things I’ve learned as I work with machine-generated content and interaction is that we have to design for failure and uncertainty. Traditionally, we’ve always designed for success, crafting a fixed path through content that is under our control. When machines are generating the content, and sometimes even the interaction itself, it’s a new challenge.

In the workshop, we’ll explore techniques and approaches to keep things on the rails even when the machines deliver lousy results.
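One simple way to design for failure is to decide up front what the interface does when the machine has nothing good to offer. The sketch below imagines the image-caption suggestion in a CMS: the `Suggestion` type, the `caption_field` function, the 0.7 cutoff, and the returned field names are all hypothetical, and this is only one of many possible fallback strategies.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Suggestion:
    text: str          # machine-generated caption
    confidence: float  # model's score between 0 and 1


def caption_field(suggestion: Optional[Suggestion], min_confidence: float = 0.7) -> dict:
    """Decide what the caption input shows.

    If the model returned nothing, or is not confident enough, fall back
    to an empty field with a plain prompt instead of showing a bad guess.
    The 0.7 cutoff is an assumption for illustration.
    """
    if suggestion is None or suggestion.confidence < min_confidence:
        return {"value": "", "hint": "Describe this image", "suggested": False}
    return {
        "value": suggestion.text,
        "hint": "Suggested caption, edit as needed",
        "suggested": True,
    }


print(caption_field(Suggestion("A dog on a beach", 0.92)))
print(caption_field(Suggestion("A blurry shape", 0.35)))
print(caption_field(None))
```

Either way, the person stays in control: a confident suggestion is labeled as a suggestion, and a weak one never reaches the screen.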

There are tons of opportunities to do amazing things with machine learning in our work. But there are also lots of ways it can go sideways, and there are plenty of examples of how it already has. Ultimately, the way this technology gets used is a question of design. So really, it’s up to us.

This workshop is all about helping designers discover their own influential role in putting this powerful (and surprisingly accessible) technology to work. And perhaps just as important: it’s all about how to handle this new design material with care and respect.

Are you or your team wrestling with how to adopt and design for machine learning and A.I.? Wrestling with the UX of bots, data-generated interfaces, and artificial intelligence? Big Medium can help with workshops, executive sessions, or a full-blown design engagement. Get in touch.

March 11, 2019

A.I. Is Your New Design Material

Discover the critical role of UX and product design in AI, which is set to define the next era of digital products, and of our work. Learn to use machine-generated content, insight, and interaction as design material in your everyday work. Refit familiar design and UX process to work with the grain of the algorithm, to help the machines solve real problems without creating new ones.

This lively and inspiring talk explores the perspectives and practical techniques that you can use today (like right now) not only to make existing products better but to imagine surprising new services. The challenges and opportunities of AI and machine learning are plenty; discover your own influential role, and learn to handle this powerful new design material with care and respect.

November 14, 2018

The Google Pixel 3 Is A Very Good Phone. But Maybe Phones Have Gone Too Far.

Mat Honan’s review of the Google Pixel 3 smartphone is a funny, harrowing, real-talk look at the devices that have come to govern our lives. “We are captives to our phones, they are having a deleterious effect on society, and no one is coming to help us,” he writes. “On the upside, this is a great phone.”

The Buzzfeed review is a world-weary acknowledgement of the downside of our personal technologies: their effect on our relationships, on our privacy, on our peace of mind. Honan does point out the new “digital wellbeing” features in Android, but offers other alternatives:

Another idea: You may instead choose to buy a device with a lousy screen and a lousy camera and a terrible processor. Maybe you would use this less. Or maybe you should walk to the ocean and throw your phone in and turn around and never look back**.

**Please do not do this. It would be very bad for the ocean.

Related recommendation for designers and product makers: check out Liza Kindred’s Mindful Technology for strategies and techniques for making products that focus attention instead of distract it.

Buzzfeed News | The Google Pixel 3 Is A Very Good Phone. But Maybe Phones Have Gone Too Far.