Josh Clark's Blog

October 22, 2025

Sentient Design Workshops

11:00am – 3:00pm ET

Check your time zone

$895 ($995 after Jan 22)

Training a Team? Email us about discounts for five or more.

Plan private team training (online or in-person).

Sentient Design: Craft Radically Adaptive Experiences with AI

Two-Day Online Workshop: Join product design leaders and Sentient Design authors Josh Clark and Veronika Kindred to explore the already-here future of intelligent interfaces and radically adaptive experiences.

Sentient Design is the practice of creating experiences that are aware of context and intent to respond in the moment. You’ll learn the framework and techniques to design them responsibly by prototyping an experience over the course of two days.

This hands-on, zero-hype workshop provides measured, practical techniques that you can use today (like, right now) to imagine surprising new experiences or to improve existing products. You’ll go way beyond chatbots to explore the full landscape of intelligent interfaces through four key postures (tools, agents, copilots, and chat) plus a dozen new experience patterns you can put to use immediately.

Who Should Attend

This workshop is perfect for designers, product owners/managers, and design-minded developers who want to understand AI as a design material, not just a tool. If you’re curious about how to make AI work for people (instead of the other way around), this is for you.

What You’ll Learn

Hands-On Prototyping

- Prototype a new product: identify, imagine, and bring to life AI-powered features that solve real problems (not just “because AI”)
- Get your hands dirty working with models directly to learn their strengths and quirks
- Wire interface to intent by using an LLM to control the behavior of your prototype

Design Patterns & Practices

- Explore radically adaptive interfaces that are conceived in real time based on user context and intent
- Discover emerging UX patterns that go beyond “slap a chatbot on it”
- Adopt techniques for grounded, predictable experiences when your interface has a mind of its own
- Guide user behavior to match the system’s ability

Responsible AI Design

- Use responsible practices that build trust and transparency
- Balance opportunities and risks in AI-powered experiences

What You’ll Need

- An open mind
- A healthy mix of imagination and skepticism

No prior AI or machine learning experience is required. You bring the human intelligence, and we’ll supply the artificial kind.

Meet Your Instructors

Josh Clark and Veronika Kindred are authors of Sentient Design, the forthcoming book from Rosenfeld Media. Josh is a 30-year design veteran and principal of design agency Big Medium. Veronika is a designer and researcher at Big Medium. Both solve problems and design what’s next alongside some of the world’s biggest companies.

Register

Feb 11–12, 2026

11:00am – 3:00pm ET

Check your time zone

$895 ($995 after Jan 22)

Email us about discounts for five or more.

Plan private team training (online or in-person).

Questions? Contact josh@bigmedium.com for more information.

October 9, 2025

Charlie's Fake Videos for AI Literacy

Charlie is a banking app for older adults, with a brand focused on financial safety, simplicity, and trust. They launched a fun and smart campaign to educate people about the risks of deepfake scams.

The system creates AI-generated videos for friends and family (customized with their first names and hometown) to deliver a message about AI fraud, all while escaped zoo animals run amok. It’s silly and entirely effective.

Most people don’t realize just how good AI video has become, and how easy it is to clone anyone’s voice or face now. Raising that awareness feels essential, especially for an older audience frequently targeted by scams.

All of us working with AI have a responsibility to improve literacy and cultivate pragmatic skepticism among our customers and users. The work of this new era of design is to be clear about AI’s risks and weaknesses, even as we harness its capabilities.

Encouraging appropriate skepticism is part of the work.

Warn your family and friends about AI scams | Charlie FraudWatch

October 3, 2025

We Can Help

Josh Clark

Product leaders call me for all kinds of reasons. Sometimes it’s to help invent and build a next-generation product, other times to train their team in the latest design methods. But lately, there’s one call I get more than any other:

“We’re facing a big shift, and we’re not sure what to do next.”

The specifics vary but the themes are the same: Why don’t our products and processes work like they used to? What does AI mean for our business and our customers? How do we respond to seismic changes in [business / government / media / culture]? How do our teams keep up with technology that moves faster than we do? How do our people and products adapt to moments like this, and does our team have what it needs?

Hey, sometimes you just need a little help.

You’re not alone. In only the past few months, we’ve helped People Inc., Cigna, Stanford, Indeed, and a raft of startups with those strategic questions. In fact, so many of our recent engagements have started there that we’ve formalized some new offerings to complement our traditional design projects.

These are lightweight, impactful engagements that give your team the strategic boost and product vision to help you see around the corner:

- Sentient Design workshops to build AI literacy
- Sentient Design sprints to explore AI opportunity
- Product strategy intensives to define product direction
- Strategic retainers for ongoing guidance

Up close and personal

All of these new engagements have you working closely with me (hi, I’m Josh Clark). I bring 30 years of applied product strategy across 100+ client companies. I often work alongside a small team of smarties who add fresh design, research, and implementation insights.

Our Sentient Design practice guides Fortune 100 companies and startups alike in applying AI in ways that are both valuable and responsible. AI is naturally a big focus right now, but it sits alongside shifts in media, government, and business that demand a rethink of what digital products should do: what you need to make and why.

More than making digital experiences, we also help make sense. I’ve found that intimate, intensive engagements help to cut through this tangle. Here’s a high-level overview of how we can help navigate it all:

Sentient Design workshop (1–2 days)

$15k–$25k ($10k–$15k remote). Team training on AI product design methodology with Sentient Design authors Josh Clark and Veronika Kindred. Learn to design radically adaptive, AI-mediated experiences.

You get: Hands-on learning and practical techniques from the creators of the framework. Learn more

Best for: Design and product teams seeking to establish literacy and technique in AI experience patterns.

Recently: In the past three months, we’ve conducted a dozen of these workshops (both in person and remote) for internal teams at enterprise companies, startups, and agencies.

Sentient Design sprint (4 weeks)

$80k. Rapid exploration of emergent AI-powered experiences to deliver key outcomes. Josh and team will guide your multi-disciplinary team through an intensive deep-dive into Sentient Design concepts to identify the experiments to pursue.

You get: Several scrappy candidate prototypes to consider taking forward.

Best for: Teams exploring “what’s possible for us with AI?” and who need to learn the terrain to identify possible direction and opportunity.

Recently: We built AI prototypes to ease design system usage and maintenance for one of the nation’s largest health insurers.

Recently: We crafted a coherent UX strategy around Sentient Design experience patterns for an AI-powered public affairs platform.

Product strategy intensive (8–10 weeks)

$120k–$300k. Fast but thorough product conception, validation, and demonstration. This engagement figures out what you should build, complete with backing research, rough prototypes to prove it works, and a roadmap to execute.

- Josh Clark leads your team ($120k–$150k), or
- Josh Clark leads a blended team of yours and Big Medium’s ($240k–$300k)

You get: Strategic clarity, a product brief, and working demos that your team can test and stakeholders can see.

Best for: Teams asking “what should we build and why?” who need both strategy and proof that it works before committing to production.

Recently: We guided the vision and design for People’s new mobile app, reimagining a media publication for a new generation.

Strategic retainer with Josh Clark

$15k–$20k/month with quarterly commitments. Ongoing partnership where I work directly with your leadership team on product strategy, organizational design, and product direction. Think outboard chief product officer focused on emerging technology, AI, and upskilling your team.

You get: a standing monthly strategy session plus ongoing access for check-ins and reviews between sessions.

Best for: Organizations needing consistent strategic guidance without a full-time executive hire.

Recently: I’m currently riding shotgun for a global hotel brand and an industrial manufacturing giant.

Full design strategy and execution too

On top of those new engagements, we continue to do our bread-and-butter projects of complete product strategy, design, and front-end development. We field full production teams to do the research, strategy, design, and front-end development to take you right into implementation. These teams can build design systems, websites, applications, and more.

While we can build things for you, we prefer to build things with you. We love to collaborate with product teams to lead production work that we take on together. Along the way, we teach by demonstration to help level-up your team. Depending on the project, a production engagement is a three- to twelve-month project ($400k–$1M+).

A different kind of partner

These aren’t typical offerings, but Big Medium’s not quite like other agencies or consultancies (we are both and neither). We’re structured to be flexible: sometimes you need the strategic guidance of a solo advisor like me, sometimes you need a full design team, sometimes you need both. We’ve got you covered.

None of these engagements is mutually exclusive, and it’s not uncommon for one to lead to another (e.g., a workshop leads to a product strategy intensive, or a Sentient Design sprint leads to a production engagement, etc.). Clients tell us they like this menu because it lets them take what they need and make decisions as they go.

Like I said, people call for all kinds of reasons. This new set of engagements rounds out the whole need, and fits our brand of friendly, seasoned partnership, too. Let’s figure out what fits you. Shoot me a note.

October 2, 2025

Wiring Interface to Intent

This essay is part of a series about Sentient Design, the already-here future of intelligent interfaces and AI-mediated experiences.

The book: Sentient Design by Josh Clark with Veronika Kindred will be published by Rosenfeld Media.

The SXSW talk: Watch the “Sentient Design” talk by Josh Clark and Veronika Kindred

The workshop: Book a workshop for designing AI-powered experiences.

Need help? If you’re working on strategy, design, or development of AI-powered products, we do that! Get in touch.

Until recently, it was really, really hard for systems to determine user intent from natural language or other cues. Hand-coded rules or simple keyword matching made early interpretation systems brittle. Even when systems understood the words you said, they might not know what to do with them if it wasn’t part of the script. Saying “switch on the lights” instead of “turn on the lights” could mean you stayed in the dark. Don’t know the precise incantation? Too bad.

Large language models have changed the game. Instead of relying on exact keywords or rigid syntax, LLMs just get it. They grasp underlying semantics, they get slang, they can infer from context. For all their various flaws, LLMs are exceptional manner machines that can understand intent and the shape of the expected response. They’re not great at facts (hi, hallucination), but they’re sensational at manner. This ability makes LLMs powerful and reliable at interpreting user meaning and delivering an appropriate interface.

Just like LLMs can speak in whatever tone, language, or format you specify, they can equally speak UI. Product designers can put this superpower to work in ways that create radically adaptive experiences, interfaces that change content, structure, style, or behavior (sometimes all at once) to provide the right experience for the moment.

It turns out that teaching an LLM to do this can be both easy and reliable. Here’s a primer.

Let’s build a call-and-response UI

Bespoke UI is one of the 14 experience patterns of Sentient Design, a framework for designing intelligent interfaces. A specific flavor of bespoke UI is “call-and-response UI,” where the system responds to an explicit action (a question, UI interaction, or event trigger) with an interface element specifically tailored to the request.

This Gemini prototype is one of my favorite examples, and here’s another: digital analytics platform Amplitude has an “Ask Amplitude” assistant that lets you ask plain-language questions to bypass the time-consuming filters and queries of traditional data visualization. A product manager can ask, “Compare conversion rates for the new checkout flow versus the old one,” and Amplitude responds with a chart to show the data. The system understands the context and then identifies the data to use, the right chart type to style, and the right filters to apply.

Amplitude’s call-and-response UI lets you “chat with charts,” with all responses coming back as bespoke data visualizations.

Call-and-response UI is fundamentally conversational: it’s a turn-based UI where you send a question, command, or signal, and the system responds with a UI widget tailored to the context. Rinse and repeat.

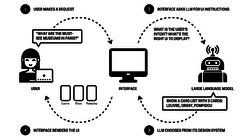

This is kind of like traditional chat, except that the language model doesn’t reply directly to the user; it replies to the UI engine, giving it instructions for how to present the response. The conversation happens in UI components, not in text bubbles.

To enable this, the LLM’s job shifts from direct chat to mediating simple design decisions. It acts less as a conversational partner than as a stand-in production designer assembling building-block UI elements or adjusting interface settings. It works like this: the system sends the user’s request to the LLM along with rules about how to interpret it and select an appropriate response. The LLM returns instructions to tell the interface what UI pattern to display. The user never sees the raw LLM response like they would in a text chat; instead the LLM returns structured data that the application can act on to display UI widgets to the user. The LLM talks to the interface, not the user.
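To make that round trip concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: `fake_llm` is a hypothetical stand-in for a real model call (it returns canned JSON based on a keyword), `render` is a hypothetical UI engine that just returns a string, and the field names are placeholders. The point is only the shape of the flow: request in, structured instruction out, widget displayed.

```python
import json
from typing import Callable

# Placeholder rules; a real system prompt would be far more detailed.
SYSTEM_PROMPT = "Interpret the user's request and reply only with JSON naming a UI component."


def fake_llm(system_prompt: str, user_message: str) -> str:
    """Hypothetical stand-in for a model call; returns canned JSON."""
    ui = "Map" if "near" in user_message.lower() else "Card Feed"
    return json.dumps(
        {"intent": user_message, "UI": ui, "rationale": "canned demo", "data": {}}
    )


def render(instruction: dict) -> str:
    """Hypothetical UI engine: turns the instruction into a widget (here, a string)."""
    return f"<{instruction['UI']} widget>"


def call_and_response(user_message: str, llm: Callable[[str, str], str] = fake_llm) -> str:
    raw = llm(SYSTEM_PROMPT, user_message)  # the model replies to the app, not the user
    instruction = json.loads(raw)           # structured data, never shown as-is
    return render(instruction)              # the user only ever sees the widget


print(call_and_response("What is near my hotel?"))
```

Swapping `fake_llm` for a real model client would leave the rest of the flow unchanged, which is the appeal of keeping the model behind a structured-data boundary.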

In a call-and-response experience of bespoke UI, the LLM talks to the interface as a design decisionmaker, instead of speaking directly to the user.

That might sound a bit abstract, so let’s build one now. We’ll make a simple call-and-response system to provide city information. The nitty-gritty details are below, but here’s a video that shows how it all comes together:

A starter recipe

Here’s a simple system prompt that tells the LLM how to respond with an appropriate UI component:

The user will ask for questions and information about exploring a city. Your job is to:
- Determine the user’s intent
- Determine if we have enough information to provide a response.
  - If yes, determine the available UI component that best matches the user’s intent, and generate a brief JSON description of the response.
  - If not, ask for additional information
- Return a response in JSON with the following format (IMPORTANT: All responses MUST be in this JSON format with no additional text or formatting):

{
  "intent": "string: description of user intent",
  "UI": "string: name of UI component",
  "rationale": "string: why you chose this pattern",
  "data": { object with structured data }
}

The prompt tells the system to figure out what the user is looking for and, if it has the info to respond, pick a UI component to match. The system responds with a structured data object describing the UI and content to display; that gives a client-side UI engine what it needs to display the response as an interface component.
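On the receiving end, the client should probably not trust that the model followed the format every time. Here is one hedged sketch of a check for the four-field response described above. The function name `parse_ui_response` and the error messages are my own inventions for illustration, not part of any library.

```python
import json

# The four fields named in the prompt, with the JSON types we expect back.
REQUIRED_FIELDS = {"intent": str, "UI": str, "rationale": str, "data": dict}


def parse_ui_response(raw: str) -> dict:
    """Parse the model's reply and check it against the four-field format."""
    try:
        response = json.loads(raw)
    except json.JSONDecodeError as err:
        raise ValueError(f"Model reply was not valid JSON: {err}") from err
    if not isinstance(response, dict):
        raise ValueError("Model reply must be a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(response.get(field), expected_type):
            raise ValueError(f"Missing or mistyped field: {field}")
    return response


sample = (
    '{"intent": "find street food", "UI": "Card Feed", '
    '"rationale": "browsing recommendations", "data": {"cards": []}}'
)
print(parse_ui_response(sample)["UI"])
```

When the check fails, a prototype might simply retry the request or fall back to a default component; which one is a design decision rather than a technical one.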

The only thing missing is to tell the model about the available UI components and what they’re for: how to map each component to user intent.

## Available UI design patterns

Important!! You may use only these UI components based on the user intent and the content to display. You must choose from these patterns for the UI property of your response:

### Quick Filter
- A set of buttons that show category suggestions (e.g., “Nearby Restaurants,” “Historic Landmarks,” “Easy Walks”). Selecting a filter typically displays a card feed, list selector, or map.
- User intent: Looking for high-level suggestions for the kind of activities to explore.

### Map
- Interactive map displaying locations as pins. Selecting a pin opens a preview card with more details and actions.
- User intent: Seeking nearby points of interest and spatial exploration

### Card Feed
- A list of visual cards to recommend destinations or events. Card types include restaurants, shops, events, and sights. Each card contains an image, short description, and action buttons (e.g., “Add” and “More info”).
- User intent: Discover and browse recommendations.

Note: This is just a sketch. A complete version would include the full set of available UI components, along with the data structure of properties that each component expects. In production, you’d likely provide this set of components via an external file or resource instead of directly in the system prompt. I’ve simplified to help make the connection clear between intent and component choice.

I set this up in a custom GPT; try it out to see what it’s like to “talk” to a system like this. The chat transcript would never be shown as-is to users. Instead, it’s the machinery under the surface that translates the user’s asks into machine-readable interface instructions:
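One way to keep the component list outside the prompt is to hold it as data and generate the prompt section from it. The sketch below assumes a plain Python dictionary as the registry (in practice it might be a JSON file or your design system’s documentation) and shortens the descriptions. The helper names and the `Card Feed` fallback are hypothetical choices, not a recommendation.

```python
# Hypothetical registry; in production this might be loaded from an external file.
COMPONENTS = {
    "Quick Filter": {
        "description": "A set of buttons that show category suggestions.",
        "intent": "Looking for high-level suggestions for the kind of activities to explore.",
    },
    "Map": {
        "description": "Interactive map displaying locations as pins.",
        "intent": "Seeking nearby points of interest and spatial exploration",
    },
    "Card Feed": {
        "description": "A list of visual cards to recommend destinations or events.",
        "intent": "Discover and browse recommendations.",
    },
}


def components_prompt(components: dict) -> str:
    """Render the registry as the 'available patterns' section of a system prompt."""
    lines = ["## Available UI design patterns"]
    for name, spec in components.items():
        lines.append(f"### {name}")
        lines.append(f"- {spec['description']}")
        lines.append(f"- User intent: {spec['intent']}")
    return "\n".join(lines)


def resolve_component(ui_name: str, fallback: str = "Card Feed") -> str:
    """Accept only components in the registry; the fallback is an assumed default."""
    return ui_name if ui_name in COMPONENTS else fallback


print(components_prompt(COMPONENTS))
print(resolve_component("Carousel"))
```

Because the same registry feeds both the prompt and the check on the way back, the model and the UI engine cannot drift apart on which components exist.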

Designing with words

You can spin up prototypes like this quickly on your own, even without deep technical know-how. Most LLMs provide a sandbox environment to create your own bot: ChatGPT has custom GPTs, Gemini has Gems, Claude has projects, and Copilot has agents. You can also use more advanced prompt-building tools like Google AI Studio, Claude Console, or OpenAI Playground. Use any of these services for quick experiments to try prompts like this in real time.

In Sentient Design, crafting the prompt becomes a central design activity, at least as important as drawing interfaces in your design tool. This is where you establish the rules and physics of your application’s tiny universe rather than specifying every interaction. Use the prompt to define the parameters and possibilities, then step back to let the system and user collaborate within that carefully crafted sandbox.

The simple prompt example above asks the system to interpret a request, identify the corresponding interface element to display, and turn it into structured data. It’s the essential foundation for creating call-and-response bespoke UI, and a great place to start prototyping that kind of experience. You’re simultaneously testing and teaching the system, building up a prompt to inform the interface how to respond in a radically adaptive way.

Use that same city tour prompt or your own similar instruction, and plug it into your preferred playground platform. Try a few different inputs against the system prompt to see what kind of results you get:

- I want to explore murals and underground galleries in Miami.
- Where should I go to eat street food?
- I only have one afternoon and love history.

As you test, look for patterns in how the system succeeds and fails. Does it consistently understand certain types of intent but struggle with others? Are there ambiguous requests where it makes reasonable but wrong assumptions? These insights shape both your prompt and your interface design: you might need sharper input constraints in the UI, or you might discover the system handles ambiguity better than expected. Keep refining and learning. (This look at our Sentient Scenes project describes the process and offers more examples.)
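If you want to keep track of those successes and failures as the prompt evolves, a tiny harness can help. This is a sketch under loud assumptions: `classify` is a hypothetical keyword stub standing in for a real model call, and the expected components are my own guesses at reasonable answers, not outputs from any actual system.

```python
def classify(message: str) -> str:
    """Hypothetical stub for a model call; a real test would query your prompt."""
    text = message.lower()
    if "where" in text:
        return "Map"
    if "explore" in text:
        return "Card Feed"
    return "Quick Filter"


# Each case pairs a test input with the component we (the designers) expect.
CASES = [
    ("I want to explore murals and underground galleries in Miami.", "Card Feed"),
    ("Where should I go to eat street food?", "Card Feed"),
    ("I only have one afternoon and love history.", "Quick Filter"),
]


def find_failures(cases, model=classify):
    """Return (input, expected, actual) for every case the model got wrong."""
    return [
        (message, expected, model(message))
        for message, expected in cases
        if model(message) != expected
    ]


failures = find_failures(CASES)
for message, expected, actual in failures:
    print(f"{message!r}: expected {expected}, got {actual}")
```

Here the stub reads “where” as a request for a map, a reasonable but wrong assumption of exactly the kind worth catching. Whether the fix belongs in the prompt or in the interface is the design question the harness surfaces.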

The goal of this prompt exploration is to build intuition and validate how well the system can understand context and then do the right thing. As the designer, you’ll develop a sense of the input and framing the system needs, along with the kinds of responses you can expect in return. These inputs and outputs become the ingredients of your interface, shaping the experience you will create.

Experiment broadly across the range of activities your system might support. Here are some examples and simple constructions to get you started.

System Prompt Templates: A Pattern Library

| Capability | System Prompt |
| --- | --- |
| Intent classification | “Determine the user’s intent from the user’s [message or actions], and classify it into one of these intent categories: x, y, z.” |
| Action determination | “Based on the user’s request, choose the most appropriate action to take: x, y, z.” |
| Tool or data selection | “Choose the appropriate tool or data source to fulfill the user’s request: x, y, z.” |
| Manner and tone | “Identify the appropriate tone (x, y, z) to respond to the user’s message, and respond with the [message, color theme, UI element] most appropriate to that tone.” |
| Task list | “Determine the user’s goal, and make a plan to accomplish it in a sequenced list of 3–5 tasks.” |
| Ambiguity detection | “Evaluate if the user’s request is clear and actionable. If it is ambiguous or lacks details, respond with a clarifying question.” |
| Guardrails | “Evaluate the user’s request for prohibited, harmful, or out-of-scope content. If found, respond with a safe alternative or explanation.” |
| Persona alignment | “Respond in the voice, style, and knowledge domain of [persona]. Maintain this character across all replies, and do not break role.” |
| Pattern selection | “Based on the user’s [message or actions], select the best UI pattern to provide or request info as appropriate: x, y, z.” |
| Explainability | “After every answer, include a short explanation of why you chose this response and your confidence level on a 0–100 scale.” |
| Delegation | “If the task is outside your capabilities or should be handled by [human/tool], explain why and suggest or enable the next action.” |

Prompt as design spec

Talking directly to the system is a new aspect of design and part of the critical role that designers play in establishing the behavior of intelligent interfaces. The system prompt is where designers describe how the system should make decisions and how it should interact with users. But this is not only a design activity. It’s also the place where product managers realize product requirements and where developers tell the system how to work with the underlying architecture.
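For the developer side of that collaboration, the templates in the pattern library above can be treated as reusable building blocks. The following sketch assumes a few of them stored as Python format strings, with `{signal}` and `{options}` as placeholder names I made up to stand in for the bracketed and “x, y, z” slots. The intent categories passed in are illustrative only.

```python
# A few templates from the pattern library, with hypothetical placeholder names.
TEMPLATES = {
    "intent_classification": (
        "Determine the user's intent from the user's {signal}, "
        "and classify it into one of these intent categories: {options}."
    ),
    "pattern_selection": (
        "Based on the user's {signal}, select the best UI pattern "
        "to provide or request info as appropriate: {options}."
    ),
    "ambiguity_detection": (
        "Evaluate if the user's request is clear and actionable. "
        "If it is ambiguous or lacks details, respond with a clarifying question."
    ),
}


def build_prompt(parts):
    """Fill each (template name, values) pair and join them, one instruction per line."""
    return "\n".join(TEMPLATES[name].format(**values) for name, values in parts)


prompt = build_prompt([
    ("intent_classification", {"signal": "message", "options": "browse, navigate, plan"}),
    ("pattern_selection", {"signal": "message", "options": "Quick Filter, Map, Card Feed"}),
    ("ambiguity_detection", {}),
])
print(prompt)
```

Keeping the wording in one place means a designer can edit the language of a template while a developer changes the options it receives, without either stepping on the other.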

The prompt serves triple duty as technical, creative, and product spec. This common problem space unlocks something special: Instead of working in a linear fashion, everyone can work together simultaneously, riffing off each other’s contributions to formally encode the what and why for how the system behaves.


Every discipline still maintains its own specific focus and domains, but far more work happens together. Teams dream up ideas, tailor requirements, design, develop, and test together, with each member contributing their unique perspective. Individuals can still do their thinking wherever they do their best work (sketching in Figma or writing pseudo-code in an editor), but the end result comes together in the prompt.

Constraints make it work

The examples here are deliberately simple, but the principles scale up. Whether you’re building an analytics dashboard that adapts to different questions or a design tool that responds to different creative needs, it’s all the same pattern: constrain the outputs, map them to intents, and let the LLM handle the translation.


It’s an approach that uses LLMs for what they do best (intent, manner, and syntax) and sidesteps where they’re wobbly (facts and complex reasoning). You let the system make real-time decisions about how to talk (the appropriate interface pattern for the moment) while outsourcing the content to trusted systems. The result fields open-ended requests with familiar and intuitive UI responses.
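Here is one hedged way to picture that division of labor in code. In this sketch the model’s instruction chooses the component and names a category, but the items shown come from a trusted source. `TRUSTED_PLACES` is a hypothetical in-memory stand-in for a real database or API, and the place names are made up.

```python
# Hypothetical trusted data; in practice this would be a database or API.
TRUSTED_PLACES = [
    {"name": "Example Taqueria", "category": "street food"},
    {"name": "Example History Museum", "category": "history"},
]


def fetch_places(category: str) -> list:
    """Look up content in the trusted source, never in the model's reply."""
    return [place for place in TRUSTED_PLACES if place["category"] == category]


def build_widget(instruction: dict) -> dict:
    """Keep the model's choice of pattern, but fill content from the trusted source."""
    category = instruction["data"].get("category", "")
    return {"component": instruction["UI"], "items": fetch_places(category)}


# An illustrative model reply that includes an item the model invented.
instruction = {
    "intent": "find street food",
    "UI": "Card Feed",
    "rationale": "user wants to browse options",
    "data": {"category": "street food", "items": ["A place the model made up"]},
}
widget = build_widget(instruction)
print(widget)
```

The invented item never reaches the screen because `build_widget` ignores any content the model supplies and uses only its routing decisions.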

The goal isn’t to generate wildly different interfaces at every turn but to deliver experiences that gently adapt to user needs. The wild and weird capabilities of generative models work best when they’re grounded in solid design principles and user needs.

As you’ve seen, these prompts don’t have to be rocket science; they’re plain-language instructions telling the system how and why to use certain interface conventions. That’s the kind of thinking and explanation that designers excel at.

In the end, it’s all fundamental design system stuff. Create UI solutions for common problems and scenarios, and then give the designer (a robot designer in this case) the info to know what to use when. Clean, context-based design systems are more important than ever, as is clear communication about what they do. That’s how you wire interface to intent.

So start writing; the interface is listening.

Need help navigating the possibilities? Big Medium provides product strategy to help companies figure out what to make and why, and we offer design engagements to realize the vision. We also offer Sentient Design workshops, talks, and executive sessions. Get in touch.

October 1, 2025

The Cascade Effect in Context-Based Design Systems

Nobody’s thinking more crisply about the convergence of AI and design systems than TJ, a longtime friend and partner of Big Medium. He and his crew at front-end agency Southleft have been knocking it out of the park this year by using AI to grease the end-to-end delivery of design systems from Figma to production.

In our work together, TJ has led AI integrations that improved the Figma hygiene of design systems, eased design-dev handoff (or eliminated it altogether), and let non-dev, non-designer civilians build designs and new components for the system on their own.

If you work with design systems, do yourself the kindness of checking out the tools TJ has created to ease your life:

FigmaLint is an AI-powered Figma plugin that analyzes design files. It audits component structure, token/variable usage, and property naming. It generates property documentation and includes a chat assistant to ask questions about the audit and the system.

Story UI is a tool that lets you create layouts (or new component recipes) inside Storybook using your design system. Non-developers can use it to create entire pages as a Storybook story.

Company Docs MCP basically enables headless documentation for your design system so that you can use AI to get design system answers in the context of your immediate workspace. Use it from Slack, a Figma plugin, Claude, whatever.

All of these tools double down on the essential design system mission: to make UI components useful, legible, and consistent across disciplines and production phases. Doing that helps the people who use design systems, and it helps automate everything, too. The marriage of well-named components and properties with a clear and well-applied token system bakes context and predictability into the system. All of it makes things easier for people and robots alike to know what to do.

TJ calls these context-based systems:

Think of context-based design systems as a chain reaction. Strong context at the source creates a cascade of good decisions. But the inverse is equally true, and this is crucial: flaws compound as they flow downstream.

A poorly named component in Figma (“Button2_final_v3”) loses its context. Without clear intent, developers guess. AI tools hallucinate. Layout generation becomes unreliable. What started as naming laziness becomes hours of debugging and manual fixes.

Your design files establish intent. Validation tools (like FigmaLint) ensure that intent is properly structured. Design tokens translate that intent into code-ready values. Components combine those tokens with behavioral logic. Layout tools can then intelligently compose those components because they understand what each piece means, not just how it looks.

It’s multiplication, not addition. One well-structured component with proper context enables dozens of correct implementations downstream. An AI-powered layout tool can confidently place a “primary-action” button because it understands its purpose, not just its appearance.

When you put more “system” into your design system, in other words, you get something that is people-ready, but also AI-ready. It’s what makes it possible to let AI understand and use your design system.

That unlocks the use of AI-powered tools like Story UI to explore new designs and speed production. But even more exciting: it also enables Sentient Design experiences like bespoke UI: interfaces that can assemble their own layout according to immediate need. When you teach AI to use your design system, then AI can deliver the experience directly, in real time.

But first you have to have things tidy. TJ’s tools are the right place to start.

The Cascade Effect in Context-Based Design Systems | Southleft

September 30, 2025

When Interfaces Design Themselves


Applications that manifest on demand. Interfaces that redesign themselves to fit the moment. Web forms that practically complete themselves. It’s tricky territory, but this strange frontier of intelligent interfaces is already here, promising remarkable new experiences for the designers who can negotiate the terrain. Let’s explore the landscape:

You look cautiously down a deserted street, peering both ways before setting off along a row of shattered storefronts. The street opens to a small square dominated by a bone-dry fountain at its center. What was once a gathering place for the community now serves as potential cover in an urban battlefield.

It’s the world of Counter-Strike, the combat-style video game. But there’s no combat in this world, only… world. It’s a universe created by AI researchers based on the original game. Unlike a traditional game engine with a designed map, this scene is generated frame by frame in response to your actions. What’s around the corner beyond the fountain? Nothing, not yet. That portion of the world won’t be created until you turn in that direction. This is a “world model” that creates its universe on the fly: an experience that is invented as you encounter it, based on your specific actions. World models like Mirage 2 and Google’s Genie 3 are the latest in this genre: name the world you want and explore it immediately.

What if any digital experience could be delivered this way? What if websites were invented as you explored them? Or data dashboards? Or taking it further, what if you got a blank canvas that you could turn into exactly the interface or application you needed or wanted in the moment? How do you make an experience like this feel intuitive, grounded, and meaningful, without spinning into a robot fever dream? It’s not only possible; it’s already happening.

These are radically adaptive experiences

Radically adaptive experiences change content, structure, style, or behavior (sometimes all at once) to provide the right experience for the moment. They’re a cornerstone of Sentient Design, a framework for creating intelligent interfaces that have awareness and agency.

Even conservative business applications can be radical in this way. Salesforce is a buttoned-up enterprise software platform heavy with data dashboards, but it’s made lighter through the selective use of radically adaptive interfaces. In the company’s generative canvas pilot, machine intelligence assembles certain dashboards on the fly, selecting and arranging precisely the metrics and controls to anticipate user needs. The underlying data hasn’t changed (it’s still pulled from the same sturdy sources), but the presentation is invented in the moment.

Instead of wading through static templates built through painstaking manual configuration, users get layouts uniquely tailored to the immediate need. Those screens can be built for explicit requests (“what’s the health of the Acme Inc account?”) or for implicit context. The system can notice an upcoming sales meeting on your calendar, for example, and compile a dashboard with that client’s pipeline, recent communications, and relevant market signals. Every new context yields a fresh, first-time-ever arrangement of data.

The system pulls information toward the user rather than demanding that they scramble through complex navigation to find what they need. We’ll explore a bunch more examples below, but first the big picture and its implications.

Conceived and Delivered in Real Time

This free-form interaction might seem novel, but it builds on our oldest and most familiar interaction: conversation. In dialogue, every observation and response can take the conversation in unpredictable directions. Genuine conversations can’t be designed in advance; they are ephemeral, created in the moment.

Radically adaptive experiences apply that same give-and-take awareness and agency to any interface or interaction. The experience bends and flows according to your wants and needs, whether explicitly stated (user command) or implicitly inferred (behavior and context).

Conversation often takes unexpected turns to meet the needs and interests of the moment.

Okay, but: if the design is created in real time, where does the designer fit in? Traditionally, it’s been the designer’s job to craft the ideal journey through the interface, the so-called happy path. You construct a well-lit road to success, paved with carefully chosen content and interactions completely under your control. That’s the way interface design has worked since the get-go.

Not anymore, or at least not entirely. With Sentient Design, the designer allows parts of the path to pave themselves, adapting to each traveler’s one-of-a-kind footsteps. The designer’s job shifts from crafting each interaction to system-level design of the rules and guardrails to help AI tailor and deliver these experiences. What are the design patterns and interactions the system can and can’t use? How does it choose the right pattern to match context? What is the manner it should adopt? The work is behavior design, not only for the user but for the system itself.

For designers, creating this system is like being a creative director. You give the system the brief, the constraints, and the design patterns to use. It takes tight, careful scoping, but done right, the result is an intelligent interface with enough awareness and agency to make designerly decisions on the spot.


This is not ye olde website. For designers, this can seem unsettling and out of control. The risk of radically adaptive interfaces is that they can become experiences without shape or direction. That’s where intentional design comes in: to conceive and apply thoughtful rules that keep the experience coherent and the user grounded.

This design work is weird and hairy and different from what came before. But don’t be intimidated; the first steps are familiar ones.

Let’s start gently: casual intelligence

Begin with what you already know. Adaptive, personalized content is nothing new; many examples are so familiar they feel downright ordinary. When you visit your favorite streaming service or e-commerce website, you get a mix of recommendations that nobody else receives. Ho hum, right? This is a radically adaptive experience, but it’s nothing special anymore; it’s just, you know, software. Let’s apply this familiar approach to new areas.

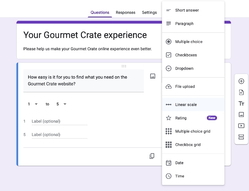

Adaptive content can drive subtle interaction changes, even in old-school web forms. In Google Forms, the survey-building tool, you choose from a dozen answer formats for each question you add: multiple choice, checklist, linear scale, and so on. It’s a necessary but heavy bit of friction. To ease the way, Google Forms sprinkles machine intelligence into that form field. As you type a question, the system suggests an answer type based on your phrasing. Start typing “How would you rate…,” and the default answer format updates to “Linear scale.” Under the hood, machine learning categorizes that half-written question based on the billions of other question-answer pairs that Google Forms has processed. The interface doesn’t decide for you, but it tees up a smart default as an informed suggestion.
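To make the smart-default idea concrete, here is a minimal sketch in Python. It is not how Google Forms works internally: the phrase rules in `FORMAT_HINTS`, the `suggest_answer_format` function, and the "Short answer" fallback are all hypothetical stand-ins for the trained classifier described above. The point is only the shape of the interaction: a half-typed question goes in, a suggested default comes out, and the user remains free to override it.

```python
# Hypothetical phrase-to-format rules. A production system would use a
# trained classifier; simple substring matching stands in for it here.
FORMAT_HINTS = [
    ("how would you rate", "Linear scale"),
    ("which of the following", "Multiple choice"),
    ("select all", "Checklist"),
]

# Assumed fallback when no rule matches (not taken from Google Forms).
DEFAULT_FORMAT = "Short answer"


def suggest_answer_format(partial_question: str) -> str:
    """Return a suggested default answer format for a half-typed question."""
    text = partial_question.strip().lower()
    for phrase, answer_format in FORMAT_HINTS:
        if phrase in text:
            return answer_format
    return DEFAULT_FORMAT


if __name__ == "__main__":
    # The suggestion only pre-selects a default; the user can still change it.
    print(suggest_answer_format("How would you rate"))
    print(suggest_answer_format("What is your name?"))
```

The design choice worth noticing is that the function returns a suggestion rather than taking an action, which keeps the user in control of the final answer format.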

Google Forms prompts you to select from 12 answer formats for every question. Machine intelligence eases the way by suggesting a smart default before you get to the answer field.

This is casual intelligence. Drizzle some machine smarts onto everyday content and interface elements. Even small interventions like these add up to a quietly intelligent interface that is aware and adaptive. Easing the frictions of routine interactions is a great place to get started. As operating systems begin to make on-device models available to applications, casual intelligence will quickly become a “why wouldn’t we?” thing. A new generation of iPhone apps is already using on-device models in iOS to do free, low-risk, private actions like tagging content, proposing content titles, or summarizing content.

But don’t feel limited to the mundane. Casual intelligence can ease even complex and high-stakes domains like health care. You know that clipboard of lengthy, redundant forms you fill out at the doctor’s office to describe your health and symptoms? The Ada Health app uses machine intelligence to slim that hefty one-size-fits-all questionnaire and turn it into a focused conversation about relevant symptoms. The app adapts its questions as you describe what you’re experiencing. It uses your description, history, and its own general medical knowledge to steer toward relevant topics, leaving off-topic stuff alone. The result is at once thorough and efficient, saving the patient time and outfitting the medical staff with the right details for a meaningful visit.

Start with what you know. As you design the familiar interactions of your everyday practice, ask yourself how this experience could be made better with a pinch of awareness and a dash of casual intelligence. Think of features that suggest, organize, or gently nudge users forward. Small improvements add up. Boring is good.

Bespoke UI and on-demand layouts

If machine intelligence can guide an individualized path through a thicket of medical questions to shape a survey, it’s not a big leap to let it craft an interface layout and flow. Both approaches frame the “conversation” of the interaction, tailoring the experience to the user’s immediate needs and context. The hard part here is striking a balance between adaptability and cohesiveness. How do you let the experience move freely in appropriate directions while keeping the thing on the rails? For radically adaptive experiences to be successful, they have to bend to the immediate ask while staying within user expectations, system capabilities, and brand conventions.

Let’s revisit the Salesforce example. The interface elements are chosen on the fly but only from a curated collection of UI patterns from Salesforce’s design system. This includes familiar elements like tables, charts, trend indicators, and other data visualization tools. While the layout itself may be radically adaptive, the individual components are templated for visual and functional consistency, just as a design system provides consistent tools for human designers, too.

This is a conservative and reliable approach to the bespoke UI experience pattern, one of 14 Sentient Design experience patterns. Bespoke UIs compose their own layout in direct response to immediate context. This approach relies on a stable set of interface elements that can be remixed to meet the moment. In the Salesforce version of bespoke UI, adaptability lives within the specific constraints of the dashboard experience, creating a balance between real-time flexibility and a grounded user experience.

When you ease the constraints, the bespoke UI pattern leads to more open-ended scenarios. Google’s Gemini team developed a prototype that deploys a bespoke UI inside a chat context, but it doesn’t stay chat for long. As in any chat experience, you can ask anything; the demo starts by asking for help planning a child’s birthday party. Instead of a text reply, the system responds with an interactive UI module: a purpose-built interface to explore party themes. Familiar UI components like cards, forms, and sliders materialize to help you understand, browse, or select the content.

Although the UI elements are familiar, the path is not fixed. Highlighting a word or phrase in the Google prototype triggers a contextual menu with relevant actions, allowing you to pivot the conversation based on any random word or element. This blends the flexibility of conversation with the grounding of familiar visual UI elements, creating an experience that is endlessly flexible yet intuitive.

Successful bespoke UI experiences rely on familiarity and a tightly constrained set of UI and interaction patterns. The Gemini example succeeds because it has a very small number of UI widgets in its design system, and the system was taught to match specific patterns to specific user intent. Experiences like this are open-ended in what they accept for input, but they’re constrained in the language they produce. Our Sentient Scenes project is a fun example. Provide a scene or theme, and Sentient Scenes “performs” the scene for you: a playful little square acts it out, and the scenery adapts, too. Color, typography, mood, and behavior align to match the provided scene. The input is entirely open-ended and the outcomes are infinite, but the format constraints ensure that the experience is always familiar despite the divergent possibility.

The same principles and opportunities apply beyond graphical UI, too. What happens, for example, when you apply bespoke UI to a podcast, an experience that is typically fixed and scripted? Walkcast is an individualized podcast that tells one-of-a-kind stories seeded by your physical location. While other apps have attempted something similar by finding and reading Wikipedia entries, Walkcast goes further and in new directions. The app weaves those location-based facts into weird and discursive stories that leap from local trivia to musings about nature, life goals, local personalities, and sometimes a few tall tales. There’s a fun fractured logic connecting those themes (it feels like going on a walk with an eccentric friend with a gift for gab), and the more you walk, the more the story expands into adjacent topics. It’s an example of embracing some of the weird unpredictability of machine intelligence as an asset instead of a liability. And it’s an experience that is unique to you, radically adaptive to your physical location.

Each of these systems reimagines the relationship between user and interface, but all stay grounded within rules that the system must observe for presentation and interaction. Things get wilder, though, when you let the user define (and bend) those rules to create the universe they want. And so of course we should talk about cartoons.

The Intelligent Canvas

Generations of kids watched Wile E. Coyote paint a tunnel entrance on a solid cliff face, only to watch Road Runner zip right through, the painted illusion somehow suddenly real. Classic cartoons are full of these visual meta gags: characters drawing or painting something into existence in the world around them, the cartoonist’s self-conscious nod to the medium as a magic canvas where anything can happen.

A dash of machine intelligence brings the same transformative potential to digital interfaces, only this time putting the “make it real” paintbrush in your hands. What if you could draw an interface and start using it? Or manifest the exact application you need just by describing it? That’s Sentient Design’s intelligent canvas experience pattern, where radical adaptability dissolves the boundaries between maker and user.

The Math Notes feature in iPad, for example, effectively reimagines the humble calculator as a dynamic surface to create your own one-off calculator apps. Scrawl variables and equations on the screen, drop in some charts, and they start updating automatically as you make changes. You define both the interface and the logic just by writing and drawing. One screen might scale recipe portions for your next dinner party, while another works out equations for your physics class. By using computer vision and machine learning to parse your writing and the equations, the experience lets you doodle on-demand interfaces with entirely new kinds of layouts.

Functionally, it’s just simple spreadsheet math, but the experience liberates those math smarts from the grid. Designer, ask yourself: what platform or engine is your application built on? What are the opportunities for users to instantly create their own interfaces on top of that engine for purpose-built applications?

iPad’s Calculator provides a free-form canvas to draw your own working calculator apps. Here, a sketch visualizes the path of a ping-pong ball with an equation and variables to do the math.

As flexible as it is, Math Notes is limited to creating interactive variables, equations, and charts: the stuff of a calculator app. What if you could draw anything and make it interactive? tldraw is a framework for creating digital whiteboard apps. Its team created a “Make Real” feature to transform hand-drawn sketches or wireframes into interactive elements built with real, live functional code. Sketch a rough interface (maybe a few buttons, some input fields, a slider) and click the “Make Real” button to transform your drawing into working, interactive elements. Or get more ambitious: draw a piano keyboard to create a playable instrument, or sketch a book to make a page-flipping prototype. The whiteboard evolves from a space for visualizing ideas to a workshop for creating purpose-built interactions.

When you fold in more sophisticated logic, you start building full-blown applications. Chat assistants like Claude, Gemini, and ChatGPT let you spin up entire working web applications. If you’re trying to figure out how color theory works, ask Claude to make a web app to visualize and explore the concepts. In moments, Claude materializes a working web app for you to use right away. Looking for something a little more playful? Ask Claude to spin up a version of the arcade classic Asteroids, and suddenly you’re piloting a spaceship through a field of space rocks. To make it more interesting, ask Claude to “add some zombies,” and green asteroids start chasing your ship. Keep going: “Make the lasers bounce off the walls.” You get the idea; the app evolves through conversation, creating a just-for-you interactive experience.

Instead of downloading apps designed for general tasks, in other words, the intelligent canvas experience lets you sketch out exactly what you need. Taken all the way to its logical conclusion, every canvas (or file or session) could become its own ephemeral application: disposable software manifested when you need it and discarded when you don’t. Like that Counter-Strike simulation world, these intelligent canvas experiences describe universes that don’t exist until someone explores them or describes them.

That’s the vision of “Imagine with Claude,” a research preview from Anthropic. Give the prototype a prompt to spin up an application idea, and Imagine with Claude generates the application as you click through it, laying the track in front of the proverbial locomotive. “It generates new software on the fly. When we click something here, it isn’t running pre-written code, it’s producing the new parts of the interface right there and then,” Anthropic says in its demo. “Claude is working out from the overall context what you want to see.” This is software that generates itself in response to what you need rather than following a predetermined script. “In the future, will we still have to rely on pre-made software, or will we be able to create whatever software we want as soon as we need it?”

From searching to manifesting

Over the last few decades, the method to discover content and applications has evolved from search to social curation to algorithmic recommendation. Now this new era of interaction design allows you to manifest. Describe the thing that you want, and the answer or artifact appears. In fact, thanks to the awareness and anticipation of these systems, you may not even have to ask at all.

That’s a lot! We’ve traveled a long way from gently helpful web forms to imagined-on-the-spot applications. That’s all the stuff of Sentient Design. Radically adaptive experiences take many forms, from quietly helpful to wildly imaginative, book-end extremes of a sprawling range of possibilities.

While the bespoke UI and intelligent canvas patterns represent the wildest frontier, radical adaptability also powers more focused experiences. Sentient Design offers a dozen more patterns from alchemist agents to non-player characters to sculptor tools, and more.

What binds all of these experience patterns together is the radically adaptive experience that shifts away from static, one-size-fits-all interfaces. For designers, your new opportunity is to explore what happens when you can add awareness, adaptability, and agency to any interaction. Sometimes the answer is to add casual intelligence to existing experiences. Other times it means reimagining the entire interaction model. Either way, the common goal is to deliver value by providing the right experience at the right moment.

But real talk: this is hard. It doesn’t “just work.” Real-world product development is challenging enough, and the quirky, unpredictable nature of AI has to be managed carefully. This is delicate design material, so it’s worth asking a practical question:

Can self-driving interfaces be trusted?

An interface that constantly changes risks eroding the very consistency and predictability that helps users build mastery and confidence. Unchecked, a radically adaptive experience can quickly become a chaotic and untrustworthy one.

If interfaces are redesigning themselves on the fly, how do users keep their bearings? What latitude will you give AI to make design decisions, and with what limitations? When every experience is unique, how do you ensure quality, coherence, and user trust? And how do the system and its users recover when things go sideways?

This stuff takes more than sparkles and magical thinking; it takes careful and intentional effort. You have to craft the constraints as carefully as the capabilities; you design for failure as much as for success. (Just ask Wile E. Coyote.)

Radically adaptive experiences are new, but answers are emerging. We explore those answers in depth in our upcoming book, Sentient Design, with a wealth of principles and patterns, including two chapters on defensive design. All of it builds on familiar foundations. The principles of good user experience (clarity, consistency, sharp mental models, user focus, and user control) remain as important as ever.

What astonishing new value can you create?

Let’s measure AI not by the efficiencies it wrings out but by the quality and value of the experiences it enables.

What becomes possible for your project when you weave intelligence into the interface itself? What astonishing new value can you create by collapsing the effort between intent and action? That’s the opportunity that unlocks what’s next.

Need help navigating the possibilities? Big Medium provides product strategy to help companies figure out what to make and why, and we offer design engagements to realize the vision. We also offer Sentient Design workshops, talks, and executive sessions. Get in touch.

Boring Is Good

Scott Jenson suggests AI is likely to be more useful for “boring” tasks than for fancy outboard brains that can do our thinking for us. With hallucination and faulty reasoning derailing high-order tasks, Scott argues it’s time to right-size the task, and maybe the models, too. “Small language models” (SLMs) are plenty to take on helpful but modest tasks around syntax and language.

These smaller open-source models, while very good, usually don’t score as well as the big foundational models by OpenAI and Google which makes them feel second-class. That perception is a mistake. I’m not saying they perform better; I’m saying it doesn’t matter. We’re asking them the wrong questions. We don’t need models to take the bar exam.

Instead of relying on language models to be answer machines, Scott suggests that we should lean into their core language understanding for proofreading, summaries, or light rewrites for clarity: “Tiny uses like this flip the script on the large centralized models and favor SLMs which have knock-on benefits: they are easier to ethically train and have much lower running costs. As it gets cheaper and easier to create these custom LLMs, this type of use case could become useful and commonplace.”

This is what we call casual intelligence in Sentient Design, and we recently shared examples of iPhone apps doing exactly what Scott is talking about. It makes tons of sense.

Sentient Design advocates dramatically new experiences that go beyond Scott’s “boring” use cases, but that advocacy actually lines up neatly with what Scott proposes: let’s lean into what language models are really good at. These models may be unreliable at answering questions, but they’re terrific at understanding language and intent.

Some of Sentient Design’s most impressive experience patterns rely on language models to do low-lift tasks that they’re quite good at. The bespoke UI design pattern, for example, creates interfaces that can redesign their own layouts in response to explicit or implicit requests. It’s wild when you first see it go, but under the hood, it’s relatively simple: ask the model to interpret the user’s intent and choose from a small set of design patterns that match the intent. We’ve built a bunch of these, and they’re reliable, because we’re not asking the model to do anything except very simple pattern matching based on language and intent. Sentient Scenes is a fun example of that, and a small, local language model would be more than capable of handling that task.
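The “interpret intent, then choose from a small set of patterns” step described above can be sketched roughly as follows. This is an illustrative Python sketch under stated assumptions, not Big Medium’s implementation: the pattern names in `ALLOWED_PATTERNS`, the `choose_pattern` function, the prompt wording, and the `fake_model` stub are all hypothetical. In a real build, `ask_model` would wrap a call to whatever language model you use. The key idea is that the model’s reply is treated as untrusted input and checked against the approved pattern list, with a safe fallback when it strays.

```python
import json
from typing import Callable

# Hypothetical pattern vocabulary; a real project would mirror its design system.
ALLOWED_PATTERNS = {"table", "chart", "trend_indicator", "card_list"}
FALLBACK_PATTERN = "card_list"  # assumed safe default


def choose_pattern(user_request: str, ask_model: Callable[[str], str]) -> str:
    """Ask a model to pick one UI pattern, then validate its answer."""
    prompt = (
        "Pick exactly one UI pattern for this request. "
        f"Allowed: {sorted(ALLOWED_PATTERNS)}. "
        'Reply as JSON like {"pattern": "table"}.\n'
        f"Request: {user_request}"
    )
    raw = ask_model(prompt)
    try:
        choice = json.loads(raw).get("pattern")
    except (json.JSONDecodeError, AttributeError):
        # Reply was not JSON, or not a JSON object: stay on the rails.
        return FALLBACK_PATTERN
    if isinstance(choice, str) and choice in ALLOWED_PATTERNS:
        return choice
    return FALLBACK_PATTERN


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call, used only to make the sketch runnable."""
    request = prompt.rsplit("Request: ", 1)[-1].lower()
    if "over time" in request:
        return '{"pattern": "chart"}'
    return '{"pattern": "hologram"}'  # deliberately outside the allowed set


if __name__ == "__main__":
    print(choose_pattern("Show revenue over time", fake_model))
    print(choose_pattern("Surprise me", fake_model))
```

Open-ended input, constrained output: the request can be anything, but the interface only ever receives one of a handful of known pattern names.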

As Scott says, all of this comes with time and practice as we learn the grain of this new design material. But for now we’ve been asking the models to do more than they can handle:

LLMs are not intelligent and they never will be. We keep asking them to do “intelligent things” and find out a) they really aren’t that good at it, and b) replacing that human task is far more complex than we originally thought. This has made people use LLMs backwards, desperately trying to automate from the top down when they should be augmenting from the bottom up.

Ultimately, a mature technology doesn’t look like magic; it looks like infrastructure. It gets smaller, more reliable, and much more boring.

We’re here to solve problems, not look cool.

It’s only software, friends.

Boring is good | Scott Jenson

The 28 AI Tools I Wish Existed

Sharif Shameem pulled together a wishlist of fun ideas for AI-powered applications. Some are useful automations of dreary tasks, while others have a strong Sentient Design vibe of weaving intelligence into the interface itself. It’s a good list if you’re looking for inspiration for new ways to think about how to apply AI as a design material. Some examples:

A writing app that uses the non-player character (NPC) design pattern to embed suggestions in comments, like a human user: “A minimalist writing app that lets me write long-form content. A model can also highlight passages and leave me comments in the marginalia. I should be able to set different ‘personas’ to review what I wrote.”

A similar one (emphasis mine): “A minimalist ebook reader that lets me read ebooks, but I can highlight passages and have the model explain things in more depth off to the side. It should also take on the persona of the author. It should feel like an extension of the book and not a separate chat instance.”

LLMs are great at understanding intent and sentiment, so let’s use them to improve our feeds: “Semantic filters for Twitter/X/YouTube. I want to be able to write open-ended filters like ‘hide any tweet that will likely make me angry’ and never have my feed show me rage-bait again. By shaping our feeds we shape ourselves.”

The 28 AI Tools I Wish Existed | Sharif Shameem

How Developers Are Using Apple's Local AI Models with iOS 26

While Apple certainly bungled its rollout of Apple Intelligence, it continues to make steady progress in providing AI-powered features that offer everyday convenience. TechCrunch gathered a collection of apps that are using Apple’s on-device models to build intelligence into their interface in ways that are free, easy, and private to the user.

Earlier this year, Apple introduced its Foundation Models framework during WWDC 2025, which allows developers to use the company’s local AI models to power features in their applications.

The company touted that with this framework, developers gain access to AI models without worrying about any inference cost. Plus, these local models have capabilities such as guided generation and tool calling built in.

As iOS 26 is rolling out to all users, developers have been updating their apps to include features powered by Apple’s local AI models. Apple’s models are small compared with leading models from OpenAI, Anthropic, Google, or Meta. That is why local-only features largely improve quality of life with these apps rather than introducing major changes to the app’s workflow.

The examples are full of what we call casual intelligence in Sentient Design. These are small, helpful interventions that drizzle intelligence into traditional interfaces to ease frictions and smooth rough edges.

For iPhone apps, these local models provide a “why wouldn’t you use it?” material to improve the experience. Just like we’re accustomed to adding JavaScript to web pages to add convenient interaction and dynamism, now you can add intelligence to your pages, too.

Starting small is good, and this collection of apps provides good inspiration for designers who are new to intelligent interfaces. Some examples:

MoneyCoach uses local models to suggest categories and subcategories for a spending item for quick entries.

LookUp uses local models to generate sentences that demonstrate the use of a word.

Tasks suggests tags for to-do list entries.

DayOne suggests titles for your journal entries, and uses local AI to prompt you with questions or ideas to continue writing.

And there’s plenty more, all of them modest interventions that build on simple suggestions (category/tag selection and brief text generation) or summarization. This kind of casual intelligence is low-risk, everyday assistance.

How developers are using Apple's local AI models with iOS 26 | TechCrunch

UX Fika: Josh Clark and Veronika Kindred

With design especially, the professional is always personal, and Anna Dahlstrom is unrivaled at coaxing out that connection. Big Medium’s Josh Clark and Veronika Kindred joined Anna on her UX Fika podcast for a lively and intimate conversation. (It turns out that Anna, the author of Storytelling in Design, is good at drawing out stories!)

Don’t settle for an interview that “only” talks about the future of design when you can also get life advice about the Beastie Boys, embarrassing childhood stories, practical magic, and what it’s like for this father-daughter duo to work together.

The conversation dives deep into Sentient Design: the form, framework, and philosophy for creating intelligent interfaces that are aware of context and intent. Josh and Veronika talk about the opportunity to use AI as a design material instead of a tool, to elevate design instead of replace it. They tour a bunch of examples of radically adaptive experiences and talk through several emergent design patterns.

And the conversation turns personal, talking about career choices for the father-daughter duo, what it means to work with family, and their generational perspectives on technology. Josh also reveals the secret of why he’s eaten the same lunch every day for a decade.

The interview is available on the usual platforms, and you should check out Anna’s other interviews while you’re there, too:

Spotify

Apple Podcasts

Amazon Music