Josh Clark's Blog, page 4

October 16, 2024

“The Design System Isn’t Working for Me!”

When design system users run into issues designing and developing with the system, they often have a hard time distinguishing between a bug, a missing feature, an intentional design deviation, a new pattern they need to create, or something else. Often, all they know is the design system isn’t working for them. They need help!

“When in doubt, have a conversation.”

Master design system governance with this one weird trick

Turns out there’s one weird trick for helping those people and mastering design system governance: TALK!

Design system teams need to be proactive and obnoxiously clear about how to connect with them. Product teams don’t need to understand the details, lingo, and nuances of a thorough design system governance process; all they need to know is how to get in touch with their friendly neighborhood design system team.

Once connected, the design system team can lean in to better understand what the team is wrestling with. This right here, my friends, is a freaking art form and has everything to do with vibes. No one wants to brace for a thorough scolding by the Pattern Police; people want to be heard, understood, and helped. This is why kindness, empathy, and curiosity are such important qualities of design system teams.

It’s through this conversation that everyone can collaborate and unpack the nature of the issue.

Difference between a bug, visual discrepancy, gap, and pattern

Often design system issues arrive with a label of “bug”, simply because that’s the only button people (especially developers, who are often the unfortunate downstream recipients of design work) know to push. But the issues rarely are actual bugs; that’s why we’ve found it helpful to distinguish between the types of “issues” people encounter when working with the design system. Let’s break ’em down!

- Bugs are defects in existing design system components (e.g. the accordion doesn’t open when the user selects it, the primary button foreground and background colors don’t have sufficient color contrast).
- Visual discrepancies are designs that don’t match the available coded UI components. A visual discrepancy can be a design system visual bug (the DS design library and code library are out of sync), or it could be a product designer doing their own thing (detaching DS components or creating their own custom UIs).
- A design system feature is a new component or variant that isn’t currently in the design system but maybe ought to be.
- A pattern, or recipe, is a composition of design system components that is owned and assembled by the product team.

A more nuanced governance process

We’ve dug into these details with a number of clients, and I’ve updated our design system governance diagram to detail how to handle these different scenarios.

Once the teams are chatting, they can unpack the scenario and figure out what, if anything, needs to be done. One of the best outcomes is that no new work needs to happen and the DS team can guide the product team to existing solutions and save hours, days, or weeks of unnecessary work. Hooray!

But if the teams agree that new work needs to happen, the next question to ask is “What’s the nature of the new work to be done?”

Handling bugs

If it’s truly a bug, the design system team should address it with extreme urgency and release a patch as soon as possible. Addressing true defects in the live system should be the highest priority for the design system team; teams won’t trust or use a busted system.

Handling visual discrepancies

In the case of a visual discrepancy, the first question to ask is: “Is this an issue with the design system?” If it’s a misalignment between the design system’s design and code libraries, it should be treated as a bug and addressed immediately.

But often it’s a product-level discrepancy where a product designer has created a deviation from the system or invented new patterns. It’s important to ask: are these changes deliberate and intentional?

A product designer creating some purple buttons simply because they like the color purple isn’t justifiable, so the course of action should be for them to update the design to better align with the design system. But! Perhaps the purple buttons were created to run an A/B test or to support a specific campaign. In that situation, the teams may agree that’s deliberate and justifiable, so the product team can carry on implementing that custom UI solution. The design system team should make a note to follow up with the team to hear how things turned out.

Handling features

Often product teams have needs that aren’t handled by the current design system library. For these situations, the first question becomes: Does the feature belong in the design system?

Many times, product teams need to create and own their own recipes: compositions of core design system components and/or custom UI elements. Those recipes should be used consistently within a product, but may not be universal enough to include in the core design system. The design system team can help guide the product teams to create and own their own recipes that work with the grain of the design system.

In other situations, a new feature should indeed belong in the core design system. In these cases, the question becomes: can that work happen to meet the product team’s timeline without sacrificing the high standards of the design system? If the answer is yes, then great! The work can be built into the system. However, if there’s not enough time, the product team should own that work in order to meet their deadline, and the DS team should add it to their backlog to address it when they can.
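The questions in this governance flow can be read as a small decision tree. Here is a minimal Python sketch of that reading. The names (`Issue`, `IssueType`, `triage`) and the boolean flags are hypothetical, invented for illustration; in practice each answer comes out of the conversation between the teams, not from a form.

```python
from dataclasses import dataclass
from enum import Enum


class IssueType(Enum):
    BUG = "bug"
    VISUAL_DISCREPANCY = "visual discrepancy"
    FEATURE = "feature"


@dataclass
class Issue:
    kind: IssueType
    ds_libraries_out_of_sync: bool = False  # DS design and code libraries disagree
    deviation_is_deliberate: bool = False   # e.g. an A/B test or a campaign
    belongs_in_core_system: bool = False    # universal enough for the core DS
    fits_product_timeline: bool = False     # can ship without lowering DS standards


def triage(issue: Issue) -> str:
    """Return the suggested course of action for an issue that needs new work."""
    if issue.kind is IssueType.BUG:
        return "DS team patches immediately"
    if issue.kind is IssueType.VISUAL_DISCREPANCY:
        if issue.ds_libraries_out_of_sync:
            # A design/code mismatch inside the system is treated as a bug.
            return "DS team patches immediately"
        if issue.deviation_is_deliberate:
            return "Product team proceeds; DS team follows up later"
        return "Product designer realigns with the design system"
    # Remaining case: a feature request.
    if not issue.belongs_in_core_system:
        return "Product team owns it as a recipe"
    if issue.fits_product_timeline:
        return "DS team builds it into the system"
    return "Product team builds it now; DS team adds it to the backlog"


print(triage(Issue(IssueType.VISUAL_DISCREPANCY, deviation_is_deliberate=True)))
```

The sketch deliberately leaves out the best outcome described earlier, where the conversation reveals that no new work is needed at all.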

Design systems die in the darkness

We’ve trained the design system teams we work with to be extremely skeptical when they don’t hear from consuming teams. When you don’t hear from them, it’s typically not a sign of “everything is going great” and is more likely “something is going very wrong.” So be proactive and reach out. When in doubt, have a conversation.

Design system governance involves coming together and collaborating on the best way to handle fuzzy situations. It’s critical for teams to collaborate, communicate, and dig into the nuance of a situation to determine the best course of action. Hopefully this framing can help your team be effective.

We help organizations of all shapes and sizes establish and evolve their entire design system practice: from assets and architecture to people, process, and culture. If you could use help taking your design system to the next level, feel free to reach out!

October 15, 2024

Design of AI: Sentient Design

This is part of a series about Sentient Design, the already-here future of intelligent interfaces and AI-mediated experiences.

The book: Sentient Design by Josh Clark with Veronika Kindred will be published by Rosenfeld Media.

The talk: Watch Josh Clark's talk “Sentient Design”

The workshop: Book a workshop for designing AI-powered experiences.

Need help? If you’re working on strategy, design, or development of AI-powered products, we do that! Get in touch.

The marvelous Design of AI podcast features a conversation with Big Medium’s Josh Clark and Veronika Kindred about Sentient Design and the already-here future of intelligent interfaces. Along with hosts Brittany Hobbs and Arpy Dragffy, Josh and Veronika discuss AI as a design material: the hows, whys, and implications of using machine intelligence to create radically adaptive, context-aware digital experiences.

And friends, they get into it. The conversation highlights the importance of design in shaping machine-intelligent experiences, focusing on outcomes and human need over technology.

Listen on Spotify | Listen on Apple Podcasts | Watch at Design of AI

Josh and Veronika address the challenges and opportunities for using machine intelligence in various domains, from healthcare to education to consumer products. They also explore ethical considerations, the need for productive skepticism, and how to raise the bar for our expectations and experiences of AI-powered interfaces. And not least, the dad-daughter pair also dig into generational perspectives about this new technology, and why old heads like Josh need young heads like Veronika to escape calcified assumptions.

And no kidding, lots more:

- The types of intelligent experiences that generative AI enables
- Moving beyond the chatbot: Where AI-powered interfaces go next
- Role of designers in shaping the next generation of digital products
- Types of relationships Gen Z and Gen Alpha will have with machine intelligence
- Should this be a period of optimism or skepticism?

What they said

A few highlights to get you started:

Josh: “The flurry around AI has been on the low-hanging fruit of productivity, and I think that there’s something that is bigger, a more meaningful transformation of experience, that is afoot here.”

Josh: “We are already used to this idea of radically adaptive _content_: Netflix, Amazon, these prediction or recommendation features that are so familiar that they seem almost boring. The opportunity now is to say, oh wait, we can do that with UX now.”

Josh: “We are used to designing the happy path as designers. There’s a set of interactions and data over which we have complete control. We set up the levers and the knobs and the dials for people to turn, and we know the path that they’re going to follow. But once you start having machine-intelligent experiences where the system is mediating this, you don’t have that control anymore, which means that the design experience shifts from creating this static experience to something that is much more responsive.”

Veronika: “I’ve been surprised by how many of these products are put to market that just don’t work, or really don’t do what they promise to do. Maybe you can get one idea to be conceptualized out of it, but you can’t take it to the next level at all. It just completely falls apart when you try to initiate any sort of feedback loop. That’s been my biggest surprise: just how fast people are rushing to get these products out of the gate when they are not good enough yet.”

Josh: “I’m a big fan of getting rid of the sparkles like I think that all that that’s doing is saying, ‘this is going to be weird and broken.’ Let’s instead think: we’re making a thing that is going to work, and we should present it to the user as any other technology. It’s just software, after all. It’s not magic. It’s just software.”

Josh: “How do we lean into that weirdness as an asset instead of as a liability? Because we can’t fix it being a liability.”

Veronika: “I think the more you engage, the more skepticism you should bring. If your expectations are low, you can be so happy when you’re wrong.”

Josh: “One of the things that Veronika and I are trying to do with Sentient Design is not only show how you can build new kinds of experiences and products and interactions, but also: how do you lean into the better part of this and not go into the fears around this? How do we create experiences that amplify judgment and agency instead of replace them?”

Veronika: “I kind of got the sense that AI is not so much a huge force for good or bad in the world. It’s more like oil. It helps people heat their home and drive their cars, but it also is a great tool for both equality and inequality.”

Josh: “When you ask, you know, what’s a cutting edge application? I would say it’s not even a technology application. It’s a mindset application. What kind of world do we want to design for with this? And that’s what we talk about when we talk about sentient design. Let’s let the designers be sentient and mindful about what we can do with this technology.”

Veronika: “We should raise our standards and really see that this is the moment for designers to become the ultimate mediator for users’ needs.”

Tune in: Listen on Spotify | Listen on Apple Podcasts | Watch at Design of AI

October 14, 2024

This AI Pioneer Thinks AI Is Dumber Than a Cat

Christopher Mims of the Wall Street Journal profiles Yann LeCun, AI pioneer and senior researcher at Meta. As you’d expect, LeCun is a big believer in machine intelligence, but has no illusions about the limitations of the current crop of generative AI models. Their talent for language distracts us from their shortcomings:

Today’s models are really just predicting the next word in a text, he says. But they’re so good at this that they fool us. And because of their enormous memory capacity, they can seem to be reasoning, when in fact they’re merely regurgitating information they’ve already been trained on.

“We are used to the idea that people or entities that can express themselves, or manipulate language, are smart, but that’s not true,” says LeCun. “You can manipulate language and not be smart, and that’s basically what LLMs are demonstrating.”

As I’m fond of saying, these are not answer machines, they’re dream machines: “When you ask generative AI for an answer, it’s not giving you the answer; it knows only how to give you something that looks like an answer.”

LLMs are fact-challenged and reasoning-incapable. But they are fantastic at language and communication. Instead of relying on them to give answers, the best bet is to rely on them to drive interfaces and interactions. Treat machine-generated results as signals, not facts. Communicate with them as interpreters, not truth-tellers.

This AI Pioneer Thinks AI Is Dumber Than a Cat | WSJ

October 13, 2024

Beware of Botshit

botshit noun: hallucinated chatbot content that is uncritically used by a human for communication and decision-making tasks. “The company withdrew the whitepaper due to excessive botshit, after the authors relied on unverified machine-generated research summaries.”

From this academic paper on managing the risks of using generated content to perform tasks:

Generative chatbots do this work by “predicting” responses rather than “knowing” the meaning of their responses. This means chatbots can produce coherent sounding but inaccurate or fabricated content, referred to as “hallucinations”. When humans use this untruthful content for tasks, it becomes what we call “botshit”.

See also: slop.

Beware of Botshit: How to Manage the Epistemic Risks of Generative Chatbots

A Radically Adaptive World Model

Ethan Mollick posted this nifty little demo of a research project that generates a world based on Counter-Strike, frame by frame in response to your actions. What’s around that corner at the end of the street? Nothing, that portion of the world hasn’t been created yet, until you turn in that direction, and the world is created just for you in that moment.

This is not a post that proposes the future of gaming or that tech will replace well-crafted game worlds and the people who make them. This proof of concept is nowhere near ready or good enough for that, except perhaps as a tool to assist/support game authors.

Instead, it’s interesting as a remarkable example of a radically adaptive interface, a core aspect of Sentient Design experiences. The demo and the research paper behind it show a whole world being conceived, compiled, and delivered in real time. What happens when you apply this thinking to a web experience? To a data dashboard? To a chat interface? To a calculator app that lets you turn a blank canvas into a one-of-a-kind on-demand interface?

The risk of radically adaptive interfaces is that they turn into robot fever dreams without shape or destination. That’s where design comes in: to conceive and apply thoughtful constraints and guardrails. It’s weird and hairy and different from what’s come before.

Far from replacing designers (or game creators), these experiences require designers more than ever. But we have to learn some new skills and point them in new directions.

Ethan Mollick's post on LinkedIn

Exploring the AI Solution Space

Jorge Arango explores what it means for machine intelligence to be “used well” and, in particular, questions the current fascination with general-purpose, open-ended chat interfaces.

There are obvious challenges here. For one, this is the first time we’ve interacted with systems that match our linguistic abilities while lacking other attributes of intelligence: consciousness, theory of mind, pride, shame, common sense, etc. AIs’ eloquence tricks us into accepting their output when we have no competence to do so.

The AI-written contract may be better than a human-written one. But can you trust it? After all, if you’re not a lawyer, you don’t know what you don’t know. And the fact that the AI contract looks so similar to a human one makes it easy for you to take its provenance for granted. That is, the better the outcome looks to your non-specialist eyes, the more likely you are to give up your agency.

Another challenge is that ChatGPT’s success has driven many people to equate AIs with chatbots. As a result, the current default approach to adding AI to products entails awkwardly grafting chat onto existing experiences, either for augmenting search (possibly good) or replacing human service agents (generally bad.)

But these “chatbot” scenarios only cover a portion of the possibility space, and not even the most interesting one.

I’m grateful for the call to action to think beyond chat and general-purpose, open-ended interfaces. Those have their place, but there’s so much more to explore here.

The popular imagination has equated intelligence with convincing conversation since Alan Turing proposed his “imitation game” in 1950. The concept is simple: if a system can fool you into thinking you’re talking to a human, it can be considered intelligent. For the better part of a century, the Turing Test has shaped popular expectations of machine intelligence from science fiction to Silicon Valley. Chat is an interaction cliché for AI that we have to escape (or at least question), but it has a powerful gravitational force. “Speaks well = thinks well” is a hard perception to break. We fall for it with people, too.

The “AI can make mistakes” labels don’t cut it.

Given the outsized trust we have in systems that speak so confidently, designers have a big challenge when crafting intelligent interfaces: how can you engage the user’s agency and judgment when the answer is not actually as confident as the LLM delivers it? Communicating the accuracy/confidence of results is a design job. The “AI can make mistakes” labels don’t cut it.

This isn’t a new challenge. I’ve been writing about systems smart enough to know they’re not smart enough for years. But the problem gets steeper as the systems appear outwardly smarter and lull us into false confidence.

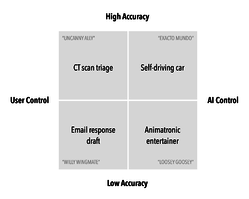

Jorge’s 2x2 matrix of AI control vs AI accuracy is a helpful tool to at least consider the risks as you explore solutions.

Source: Jorge Arango

This is a tricky time. It’s natural to seek grounding in times of change, which can cause us to cling too tightly to assumptions or established patterns. Loyalty to the long-held idea that conflates conversation with intelligence is doing a disservice. Conversation between human and machine doesn’t have to mean literal dialogue. Let’s be far more expansive in what we consider “chat” and unpack the broad forms these interactions can take.

Exploring the AI Solution Space | Jorge Arango

October 8, 2024

Introducing Generative Canvas

On-demand UI! Salesforce announced its pilot of “generative canvas,” a radically adaptive interface for CRM users. It’s a dynamically generated dashboard that uses AI to assemble the right content and UI elements based on your specific context or request. Look out, enterprise, here comes Sentient Design.

I love to see big players doing this. Here at Big Medium, we’re building on similar foundations to help our clients build their own AI-powered interfaces. It’s exciting stuff! Sentient Design is about creating AI-mediated experiences that are aware of context/intent so that they can adapt in real time to specific needs. Veronika Kindred and I call these radically adaptive interfaces, and it shows that machine-intelligent experiences can be so much more than chat. This new Salesforce experience offers a good example.

For Salesforce, generative canvas is an intelligent interface that animates traditional UI in new and effective ways. It’s a perfect example of a first-stage radically adaptive interface, and one that’s well suited to the sturdy reliability of enterprise software. Generative canvas uses all of the same familiar data sources as a traditional Salesforce experience might, but it assembles and presents that data on the fly. Instead of relying on static templates built through a painstaking manual process, generative canvas is conceived and compiled in real time. That presentation is tailored to context: it pulls data from the user’s calendar to give suggested prompts and relevant information tailored to their needs. Every new prompt or new context gives you a new layout. (In Sentient Design’s triangle framework, we call this the Bespoke UI experience posture.)

So the benefits are: 1) highly tailored content and presentation to deliver the most relevant content in the most relevant format (better experience), and 2) elimination or reduction of manual configuration processes (efficiency).

In Sentient Design, we call this the Bespoke UI experience posture.

Never fear: you’re not turning your dashboard into a hallucinating robot fever dream. The UI stays on the rails by selecting from a collection of vetted components from Salesforce’s Lightning design system: tables, charts, trends, etc. AI provides radical adaptivity; the design system provides grounded consistency. The concept promises a stable set of data sources and design patterns, remixed into an experience that matches your needs in the moment.
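To make the “on the rails” idea concrete, here is a minimal Python sketch of one way a generated layout could be constrained to vetted components. It is not Salesforce’s implementation: the component names, the `VETTED_COMPONENTS` set, and the `filter_layout` function are all hypothetical, and the “proposed” layout stands in for whatever a model might return.

```python
# Hypothetical allowlist standing in for a design system's vetted components.
VETTED_COMPONENTS = {"table", "chart", "trend"}


def filter_layout(proposed: list[dict]) -> list[dict]:
    """Keep only the blocks whose component is in the vetted set."""
    return [
        block for block in proposed
        if block.get("component") in VETTED_COMPONENTS
    ]


# A made-up layout proposal; the "hologram" block is not a vetted component.
proposed_layout = [
    {"component": "table", "data": "open_opportunities"},
    {"component": "hologram", "data": "pipeline"},
    {"component": "chart", "data": "quarterly_revenue"},
]

layout = filter_layout(proposed_layout)
print([block["component"] for block in layout])
```

The model gets freedom over which components appear and in what order; the design system decides what a component is allowed to be.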

This is a tidy example of what happens when you sprinkle machine intelligence onto a familiar traditional UI. It starts to dance and move. And this is just the beginning. Adding AI to the UX/UI layer lets you generate experiences, not just artifacts (images, text, etc.). And that can go beyond traditional UI to yield entirely new UX and interaction paradigms. That’s a big focus of Big Medium’s product work with clients these days, and of course of the Sentient Design book. Stay tuned, lots more to come.

Introducing Generative Canvas: Dynamically Generated UX, Grounded in Trusted Data and Workflows

September 28, 2024

Your Sparkles Are Fizzling

This essay is part of a series about Sentient Design, the already-here future of intelligent interfaces and AI-mediated experiences.

Please put the “sparkles” away. They’re sprinkled everywhere these days, drizzled on every AI tool, along with a heaping dollop of purple hues and rainbow gradients. It’s magic, it’s special, it’s sparkly.

It’s really not magic, of course; it’s just software. And because so many companies have heedlessly bolted AI features onto existing products, the shipped features are often half-baked, experimental, frequently shoddy. Instead of “magic” or “new,” sparkles are fast becoming the new badge for “beta.” The message sparkles now convey is, “This feature is weird and probably broken. Good luck!” It would be more honest to use this emoji instead:

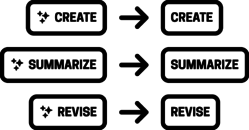

So what symbol should you use to represent machine-intelligent tools? How about no symbol at all. No need to segregate AI features or advertise their AI-ness as anything special. What matters to the user is what it does, not how it’s implemented.

We give no special billing to spell checkers or spam filters or other algorithmic tools; we just expect them to work. That’s the expectation we should set for all features, whether they’re enabled by machine intelligence or not. Features should just do what they say on the tin, without the sparkle-shaped asterisk. You’re revising text, generating images, assembling insights, making music, recommending content, predicting next steps, etc. These are simply product features (like every other powerful feature your experience offers), so present them that way. No special label required, no jazz hands needed.

Drop the sparkles. No need to segregate AI features or advertise their AI-ness as anything special. What matters to the user is what it does, not how it’s implemented.

One of the essential characteristics of Sentient Design is that it is deferential. It’s a posture that suggests humility. Put the disco ball away. Your goal should be to make machine intelligence a helpful and seamless part of the user experience, not a novelty or an afterthought.

If the feature actually is weird, unreliable, or broken, that’s a bigger problem that sparkles won’t solve. Maybe your feature isn’t ready to ship. Or better, perhaps you need to find a way to make the weirdness an asset instead of a liability. Now that’s a fun design challenge to solve, and one that engages with the grain of machine intelligence as a design material. Take a measured pragmatic approach to what machine intelligence is good at and what it isn’t, and be equally measured in how you label it. Be cool. Put away the sparkles.

Is your organization trying to understand the role of AI in your mission and practice? We can help! Big Medium does design, development, and product strategy for AI-mediated experiences; we facilitate Sentient Design sprints; we teach AI workshops; and we offer executive briefings. Get in touch to learn more.

September 27, 2024

Data Whisperers, Pinocchios, and Sentient Design

This essay is part of a series about Sentient Design, the already-here future of intelligent interfaces and AI-mediated experiences.

It took all of three minutes for Google’s NotebookLM to create this lively 12-minute podcast-style conversation about Sentient Design:

Bonkers, right? The script and the voices (along with the very human pauses, ums, and mm-hmms): all of it is machine-generated. It even nails the emotional tenor of the podcast format. I gave NotebookLM several chapters of the Sentient Design book manuscript, pushed a button, and this just popped out.[1]

The speed, quality, and believability of this podcast are remarkable, but they’re not even the most interesting thing about it. Here’s the bit that gets me excited: by transforming data from one format into another, this gives the content new life, enabling a new use case and context. It’s a whole new experience for content that was previously frozen into a different shape. Instead of 100 pages of PDFs that require your eyes and a few hours of time and attention, you’ve got a casual, relatable conversation that you can listen to on the go to get the gist in just a few minutes. It’s a new format, new mindset, new context, new user persona… and a new level of accessibility.

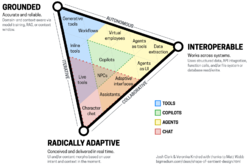

Listen to the data whisperer

In Sentient Design, Veronika and I call this the data whisperer experience pattern. The data whisperer shifts content or data from one format to another. One super-pragmatic example is extracting structured data from a mess of unstructured content: turn blobs of text into JSON or XML so that they can be shared among systems. Machine intelligence is great at doing things like this: translation among formats. But this can go so much farther than file types.

If you listen to the podcast, you’ll hear the robot hosts talk about the Sentient Triangle, a way to describe different postures for machine-intelligent experiences. Data whisperers stake out the interoperable point of the triangle.
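For the text-to-JSON case, the receiving side matters as much as the generation: other systems can only share the result if it has the shape they expect. Here is a minimal Python sketch of that check, assuming a model has already been asked to return an order as JSON. The `raw_reply` string, the field names, and `parse_extraction` are all hypothetical, and the model call itself is out of scope.

```python
import json

# Hypothetical schema: field name -> expected Python type.
REQUIRED_FIELDS = {"name": str, "email": str, "quantity": int}


def parse_extraction(raw_reply: str) -> dict:
    """Parse a model's JSON reply and keep only the expected, well-typed fields."""
    data = json.loads(raw_reply)  # raises ValueError on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            raise ValueError(f"missing or mistyped field: {field}")
    return {field: data[field] for field in REQUIRED_FIELDS}


# Stand-in for what a model might return after reading a messy email.
raw_reply = '{"name": "Ada", "email": "ada@example.com", "quantity": 3, "mood": "cheerful"}'

order = parse_extraction(raw_reply)
print(order)
```

Anything that fails the check is rejected rather than passed along, which keeps the generated output in the role of a signal to verify instead of a fact to trust.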

Data whisperers stake out the interoperable corner of the Sentient Design triangle.

Interoperability is typically associated with portability among systems. But data whisperers become even more powerful when they enable portability among experiences. Instead of focusing only on the artifacts that machine intelligence can generate with these transformations, consider the new interaction paradigms they could enable.

As a designer, ask yourself: what becomes possible when I liberate this content from its current form, and how can machine intelligence help me do that? And even better: what if you think beyond rote translation of modes or formats (text to speech, English to Chinese, PDF to JSON) to translation of manner, interaction, or even meaning? That’s what the podcast example hints at; it’s doing something far more than converting 100 PDF pages into audio. It’s reinterpreting the content for a whole new context.

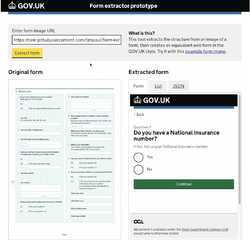

Tim Paul of GOV.UK put together this side project showing another example of using AI to rescue content from PDFs. His experiment translates static government forms into interactive multi-step web forms rendered in the gov.uk design system.

Tim Paul’s AI experiment rescues forms trapped inside PDFs and converts them to web forms using GOV.UK’s design system.

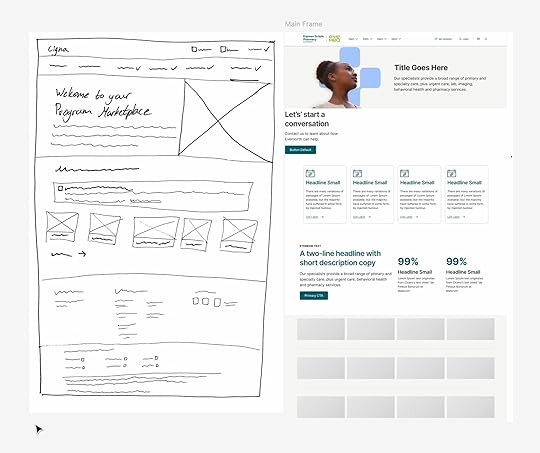

Here at Big Medium, we did something similar when we built a Figma plugin for one of our clients. The plugin takes a sketch (or text prompt or screenshot) and creates a first-pass Figma layout using the company’s design system components. Here it is in action, acting as a sous chef to organize the components so that the designer “chef” can take over and create the final refined result:

The Pinocchio interaction pattern

This plugin is a tight example of the data whisperer pattern: it thaws meaning and agency from a frozen artifact. But it’s also doing something more: it transforms content from low fidelity to high fidelity.

In Sentient Design, we call this the Pinocchio interaction pattern: turning the puppet into a real boy. Use the Pinocchio pattern to flesh an outline into text, zap a sketch into an artwork, or transform a wireframe into working code. Pinocchio is a data whisperer that elevates an idea into something functional.

Here’s another Pinocchio example. tldraw is a framework for creating digital whiteboard apps. The tldraw team created a “Make Real” feature that transforms hand-drawn sketches or wireframes into interactive elements built with functional code. Just draw a sketch, select it, and click the Make Real button to insert a working web view into the canvas. It’s an inline tool to enable quick and easy prototyping, turning a Pinocchio sketch into “real boy” markup.

When you bake the Pinocchio pattern into the fabric of an application, its interface becomes a radically adaptive surface: a free-form canvas that adapts to your behavior and context. You can see Apple working this angle in the iPad applications it previewed earlier this year. In Notes for iPad, Apple’s demo shows how you can circle a sketch, and the app creates a high-fidelity drawing based on the sketch and surrounding content context.

Or the Math Notes feature of iPad’s new calculator app lets you scrawl equations on the screen, and it transforms them into working math: spreadsheet functionality in sketch format. Scribbled numbers become variables. A column of figures becomes a sum. An equation becomes an interactive graph.

Experience over artifact

With generative AI, the generation tends to get all the attention. That’s a missed opportunity. With the data whisperer and Pinocchio patterns, you can create not only new content artifacts but new experience paradigms. You can liberate content and interaction from frozen formats, unlock new use cases, and help people move from rough idea to refined concept. Lean into it, friends, there’s much to explore here.

Is your organization trying to understand the role of AI in your mission and practice? We can help! Big Medium does design, development, and product strategy for AI-mediated experiences; we facilitate Sentient Design sprints; we teach AI workshops; and we offer executive briefings. Get in touch to learn more.

My favorite part of the podcast is when one of the AI-generated hosts says, “Sometimes I even forget I’m talking to a computer.” The other responds, “Tell me about it.”

September 24, 2024

Workshop: Craft AI-Powered Experiences with Sentient Design

Contact Josh Clark to inquire about availability and pricing.

This workshop teaches and explores the Sentient Design methodology for designing intelligent interfaces and AI-mediated experiences. Learn more about Sentient Design:

The book: Sentient Design by Josh Clark with Veronika Kindred will be published by Rosenfeld Media.

The talk: Watch Josh Clark’s talk “Sentient Design”

Need help? If you’re working on strategy, design, or development of AI-powered products, we do that! Get in touch.

Now available! We offer private workshops to teach product and design teams to imagine, design, and deliver AI-powered features and products. It’s all backed by Big Medium’s Sentient Design methodology, a practical framework for designing intelligent interfaces that are radically adaptive to user needs and intent. We’ve been doing these workshops at conferences and with our clients, and now we want to share them with you.

These measured, pragmatic workshops are zero hype. They provide real-world AI literacy and practical techniques that your team can use today (like, right now) to imagine surprising new experiences or to improve existing products. You and your team will discover entirely new interaction paradigms, along with new challenges and responsibilities, too. This demands fresh perspective, technique, and process; Sentient Design provides the framework for delivering this new kind of experience.

What’s in the workshop?

Our Sentient Design workshops are immersive, hands-on experiences that guide participants through the whole life cycle of designing machine-intelligent experiences. Here’s what we’ll do together:

Prototype a new product: identify, imagine, and design AI-powered features that solve real problems (not just “because AI”).
Learn to use machine-generated content and interaction as design material in your everyday work.
Use machine intelligence to deliver entirely new interactions or simply elevate traditional interfaces.
Explore radically adaptive interfaces that are conceived in real-time based on user context and intent.
Get your hands dirty working with models directly to learn their strengths and quirks.
Discover emerging UX patterns and postures that go way beyond “slap a chatbot on it.”
Learn the art of defensive design. AI can be… unpredictable (and weird, wrong, and biased, too). Make its weirdness an asset instead of a liability.
Adopt techniques to set user expectations and guide behavior to match the system’s ability.
Use responsible practices that build trust and transparency.

The workshops mix it up with lecture (the fun kind), discussion, and hands-on exercises. You and your team will put theory into practice through collaborative design sessions, prototyping exercises, and critical analysis of real-world AI applications. By the end of the workshop, your team will have a solid foundation in Sentient Design principles and a toolkit of techniques and design patterns to apply in your work.

Who it’s for

This workshop is perfect for designers, product owners/managers, and design-minded developers who want to stay ahead of the curve in AI-powered experiences. If you’re curious about how to make AI work for people (instead of the other way around), this is for you.

The workshop can flex to accommodate groups from 10 to 100 people.

Who’s teaching?

The workshops are led by Big Medium’s Josh Clark and Veronika Kindred, authors of Sentient Design, the forthcoming book from Rosenfeld Media.

Reserve your workshop

Get in touch with Josh Clark to explore availability and pricing.

We offer flexible formats to suit your team’s needs:

Half-day introduction
Full-day immersion
Two-day comprehensive program

The workshops may be offered online via video conference or in person. No special preparation is required for your team. No prior AI or machine-learning experience is required, only a healthy mix of imagination and skepticism. Bring your human intelligence, and we’ll supply the artificial kind.

Workshops are flat-rate (not priced by number of attendees). Half-day workshops start at $5,000, and pricing varies by length and by format (remote vs. in-person).

Our workshops are also available at our frequent speaking events. Here’s what’s coming up: