Gordon Rugg's Blog, page 5

November 24, 2017

Referencing

By Gordon Rugg

So what is referencing anyway, and why should anyone care about it? What’s the difference between the Harvard system and the Vancouver system and the assorted other systems? How do you choose references that send out the right signal about you?

The answers to these and numerous other questions are in the article below. Short spoiler: If you do your referencing right, it gets you better marks, and you come across as an honest, capable individual who is highly employable and promotable. Why does it do this? Find out below…

What is referencing?

Referencing is that thing where there’s a number or a name and a date in a sentence, looking something like this.

85% of Internet references are fictitious [1].

Or like this:

85% of Internet references are fictitious (Abraham Lincoln, 1866).

It comes in various formats; for instance, the numbered format usually has the numbers in square brackets and the name-and-date format usually has the name and date in rounded parentheses. And yes, people really do pay attention to whether you mix up brackets and parentheses, for reasons that are covered below.

References versus bibliography

With references, every reference that you include in the text that you’re writing will also appear in the reference section at the end of the text; every reference in the reference section will appear somewhere in the text before the reference section.

This is different from a bibliography, which is essentially a section that lists relevant documents, without saying which documents are relevant to which parts of the text. (The full story is longer, but I’ve focused on this feature because it makes more sense of the purpose of references.)

This distinction matters for two main reasons. The first, sordidly practical, reason is that any idiot can copy-and-paste a vaguely relevant bibliography that they found somewhere on the Interwebs, to give the impression that they’ve done a huge amount of relevant work. This is lying, and most markers and employers take a dim view of lies, for understandable reasons.

The second reason is that references are actually practical tools of the trade for researchers. References are there so that the reader can check each of the key assertions that you’re making. This is extremely useful when it’s done right, and extremely irritating when it’s done wrong, or not done at all.

Suppose, for instance, that you’re trying to solve a problem, and you read an article which mentions in passing that there’s a simple, cheap solution to the problem. If that statement is followed by a reference, you can then track down the book or article in the reference, read it, and find out about that simple, cheap solution. If the statement isn’t followed by a reference, you’re going to have strong feelings about people who don’t reference properly.

This is a significant practical issue for bibliographies. They don’t have the detailed, easily traced links between assertions and source documents that are at the heart of references. This is why most universities focus on students learning how to use references, rather than bibliographies. There are some situations where bibliographies are more appropriate than references, but those situations are typically ones such as writing a textbook, which are unlikely to apply to students.
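The two-way rule at the start of this section is mechanical enough to check automatically: every citation in the text needs an entry in the reference section, and every entry needs to be cited somewhere. Here’s a minimal sketch in Python. It assumes name-and-date citations written exactly as “(Surname, 2016)”, and a reference list already reduced to the same “Surname, year” keys. The function name check_references and the key format are illustrative assumptions, not part of any referencing standard or tool.

```python
import re

# Assumed citation shape: "(Katz & Friedman, 2016)" -> key "Katz & Friedman, 2016".
# Numbered citations, narrative citations such as "Adams (1978)", and citations
# with page numbers such as "(Adams, 1978, p. 42)" are not handled.
CITATION = re.compile(r"\(([^()]+?, \d{4})\)")


def check_references(text: str, reference_keys: set[str]) -> tuple[set[str], set[str]]:
    """Return (cited but missing from the list, listed but never cited)."""
    cited = set(CITATION.findall(text))
    missing_from_list = cited - reference_keys
    never_cited = reference_keys - cited
    return missing_from_list, never_cited


if __name__ == "__main__":
    essay = (
        "85% of Internet references are fictitious (Katz & Friedman, 2016). "
        "The problem is not new (Adams, 1978)."
    )
    reference_list = {"Katz & Friedman, 2016", "Smith & Jones, 2003"}
    missing, uncited = check_references(essay, reference_list)
    print("Cited but not in reference list:", sorted(missing))
    print("In reference list but never cited:", sorted(uncited))
```

In this example, Adams is flagged as cited but missing from the list, and Smith & Jones as listed but never cited. A marker might notice either slip.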

Different formats: The big picture

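There are two main formats for references. One uses numbers in brackets, like this [42]; numbered systems such as the Vancouver system work this way. The other uses surnames and dates in parentheses, like this (Adams, 1978); the Harvard system is the best-known example of this approach.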

The numbers and brackets version has the advantage of being short. This is useful for situations where you need to keep documents short, or where you don’t want to interrupt the flow of a chain of reasoning. For most other situations, though, the numbers and brackets version is a pain. It’s a pain for the writer, because if you suddenly realise that you’ve missed a key reference early in the document, you then need to re-number everything that comes after it. Yes, there’s software that does the re-numbering for you, but do you really want to buy that software and spend time learning to use it?

It’s also a pain for the reader, because you need to flip to the end of the document every time you want to check a reference. An experienced reader can tell a huge amount from an author’s choice of references, and the numbers and brackets system makes this reading between the lines much more effort than the name plus date format.

Here’s an example of how that works.

85% of Internet references are fictitious (Katz & Friedman, 2016).

85% of Internet references are fictitious (Young, Gifted & Black, 2016).

85% of Internet references are fictitious (Abraham Lincoln, 1866).

85% of Internet references are fictitious [1].

The Katz & Friedman version looks plausible; the date is recent, and the names don’t ring any alarm bells. The Young, Gifted & Black version looks plausible in terms of date, but the authors’ names look suspicious; some checking online soon finds that there’s a classic Aretha Franklin album with that title, which strongly suggests that someone is having their little joke with that reference, but in a way that could easily be misinterpreted as a genuine reference. The Abraham Lincoln version is clearly a joke. The numbered version, however, doesn’t tell you anything; it’s just a number. If you want to assess its plausibility, you need to flip to the reference section at the end of the article. The novelty of flipping back and forth wears off pretty fast.
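Re-numbering after you add a reference late in the document is a purely mechanical chore. The Python sketch below renumbers bracketed citations such as [9] so that they run 1, 2, 3 in order of first appearance. It also returns the old-to-new mapping, so that the reference section can be reordered to match. It’s a hypothetical helper for illustration only, and it assumes each citation is a single number in square brackets. Grouped citations such as [3, 4] and ranges such as [3-5] are left untouched.

```python
import re


def renumber_citations(text: str) -> tuple[str, dict[int, int]]:
    """Renumber [n] citations in order of first appearance; return new text and old->new map."""
    mapping: dict[int, int] = {}

    def replace(match: re.Match) -> str:
        old = int(match.group(1))
        if old not in mapping:
            mapping[old] = len(mapping) + 1  # next number not yet used
        return f"[{mapping[old]}]"

    # re.sub calls replace() on each match from left to right
    return re.sub(r"\[(\d+)\]", replace, text), mapping


if __name__ == "__main__":
    # A key reference [9] was added late, between references [1] and [2].
    draft = "Claim A [1]. A key early point [9]. Claim B [2]. Back to A [1]."
    new_text, old_to_new = renumber_citations(draft)
    print(new_text)
    print(old_to_new)
```

On the example draft this gives “Claim A [1]. A key early point [2]. Claim B [3]. Back to A [1].” The old reference 2 becomes 3, so its entry has to move down one place in the reference section.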

Different formats: Details

It would be nice if there was a single, universally agreed, sensible format for name and date referencing. Unfortunately, the world isn’t always nice and sensible. Just about every journal and publisher has their own house style for how references should be formatted. Some want the authors’ surnames in uppercase; others don’t. Some want the authors’ full names; others want surname plus initials. It’s a pain, but it’s deeply entrenched in The System, so you just have to deal with it.

Does it matter in practical terms? Yes, it does. Just about every self-respecting organisation pays a lot of attention to the details in anything it publishes, and if you depart from your organisation’s house style, someone will have to change your document into house style, which costs time and money. This applies just as much in industry as it does in academia.

The people who enforce house style pay scrupulous attention to detail, down to the level of whether you have a comma where you should have a semi-colon. Again, this applies just as much in industry as in academia. A major reason for this is that minor differences in punctuation can make major differences to meaning and to legal implications. Nobody wants to lose huge amounts of money or to end up in court because of a punctuation error. If you’re planning to be in a role which involves writing documents that go outside the organisation, you need to be prepared to pay a lot of attention to this sort of detail.

Sending out the right signals via your choice of references

There are various signals that you’ll probably want to send out via your references. Here are some of the main ones.

You know a good range of sources of information. People on the street know about books, magazines, and the Internet. They don’t know about most of the standard academic tools of the trade, such as journals and conference proceedings, or about the grey literature, such as technical reports. Even if you only include one reference to a journal article and one reference to a set of conference proceedings, that’s still enough to show the reader that you know about those sources of information.

You know which sources of information to use for which purposes. Different sources of information are good for different purposes. For example, conference proceedings are useful for recent research findings that have gone through peer review; the Internet can be useful for up-to-date technical information. If you’re using a range of sources of information, and using them appropriately, then that shows that you know how to use the tools of your trade.

You’ve done your background reading properly. Your literature review is where you show that you’ve checked what’s been tried before, and that you’re not re-inventing the wheel out of ignorance. You need to show that you’ve read the key previous publications about the problem that you’re tackling; you do that by referencing them. You also need to show wherever possible that you’ve actually read them, rather than reading someone else’s description of them. Other people’s descriptions are often weirdly, wonderfully different from what the original document actually said. This means that your references will need to range from very recent ones (to show that you know what’s happening now) back to the original ones where a problem was first described (to show that you’ve traced the literature on the problem back to the start).

You’re careful about attention to detail. Attention to detail is viewed as important in most fields. If your references are neatly and accurately laid out, this implies that you’ll also be paying attention to detail in other areas.

You’re honest. Honesty is viewed as extremely important almost everywhere. This is one of the signals that you send out as a side-effect of being attentive to detail. It’s horribly tempting to copy and paste the reference for an article that you’ve read straight into your reference section. The problem with this approach is that the pasted reference will almost certainly be in a different format from the other references, so it will stick out like a sore thumb to a cynical reader (such as the lecturer marking your coursework, if you’re a student). Will they give you the benefit of the doubt? If you believe that, you might want to buy some gold bricks that I’ve acquired completely honestly… When a reader sees different formats within your references, they’ll assume that you’re at least slovenly, and at worst dishonest, and they’ll start looking harder at the other things you’ve written. So, be attentive to detail, and slog through the reformatting. It does make a difference.

You’re discerning. Sometimes you have to cite an article from a low-credibility source because it’s all that you’ve got, or because you want to criticise it. For instance, you might need to discuss technical problems with an AE-35 Unit, which isn’t the sort of thing that you’ll find in journals, but which you might find in an online forum. In such cases, you can use a double-pronged strategy. One prong is to make sure you have some high-credibility references for other points that you make, to show the reader that you know about journal articles, etc. The other prong is to use phrasing which makes it clear to the reader that you’re using the low-credibility source because there’s nothing better available, and that you’re treating it with a healthy dose of scepticism. For instance, in “A post in an online forum claims that…” the word “claims” makes it clear that you’re treating that post with caution.

Nuts and bolts

How do you actually format references? Fortunately, there are lots of resources available, both online and in hard copy documents, that will guide you through referencing systems in as much detail as you could possibly desire. For the Harvard system, which is one of the most widely used, there are numerous guides which show you how to reference everything from books to journal articles to anonymous online forum postings. If you have trouble understanding anything, librarians and lecturers are usually very supportive, because students who want to get their referencing right are rare and much-valued.

On which encouraging note, I’ll end.

October 16, 2017

Ways of stating the obvious

By Gordon Rugg

Stating the obvious is an activity unlikely to win you many friends, or to influence many people in a direction that you would like. However, sometimes you have to do it.

So, why do you sometimes have to state the obvious, and how can you turn this problem to advantage? That’s the topic of this post. I’ll use the worked example of risks, both obvious and less obvious. (Reassuring note: I don’t go into scary details…)

There are assorted bad reasons for stating the obvious, such as not having the brain fully in gear, or not caring. These do not give a good impression.

There are, however, a few situations where you need to state the obvious. A common one is that you’re giving an overview, and that you need to include the obvious for completeness, alongside the less obvious things that show you in a much better light.

Some careful wording can help you to turn this situation to your advantage. I’ll work through key points of the wording in stages.

Obvious

You can start by describing what you’re about to say as “obvious”. Although this may not look like the greatest start, in fact it’s a pretty good way to begin, because it implies that you’ll be mentioning non-obvious items separately.

An

Usually, beginning with “An obvious…” is better than beginning with “The obvious…”.

This is because “The obvious…” implies that you have identified the single most obvious item. That’s asking for trouble. A cynical, critical reader is likely to view this as a challenge to identify something more obvious. Finding something more obvious is usually fairly easy, because human beings tend to overlook the very, very obvious; it’s so familiar that it doesn’t get noticed.

Beginning with “An obvious” or “Some obvious” sidesteps this problem. Another way of sidestepping it is described in the next section.

Include

The word “include” is your friend in many situations, because it implies that you know other examples, but have spared the reader from a full brain-dump. The words “is” and “are” imply that you are about to give the full list, which sets you up for problems if you’ve missed some examples.

So, using the example of risks, this gives us: “Some obvious risks include…” or more briefly, “Obvious risks include…”

There’s a lot of subtext in those words, all of it working in your favour.

In addition to

The phrasing “Obvious risks include…” is strong, but a cynical reader may still wonder whether you’re actually going to mention any non-obvious risks later, or whether you’ll just stop at the end of the obvious ones. (Yes, some people do just stop at the end of the obvious ones…)

You can prevent that suspicion from arising by starting your sentence with phrasing such as: “In addition to obvious risks such as…” which makes it clear that you are going to handle the non-obvious risks as well as the obvious ones.

Where do you go next?

In case you’re wondering, it’s not usually a good idea to show the reader a list neatly divided into two sections labelled as “obvious risks” and “non-obvious risks”. It’s usually a much better strategy to base your list of risks on the literature, if possible.

One way is to use a list from a classic source on the topic (e.g. “Smith & Jones (2003) give the following list of risks, ranked by frequency”).

Sometimes, though, there isn’t a single suitable list, and that’s where having a good opening sentence about obvious and non-obvious risks is helpful. You can then create your own list, with the reassuring knowledge that you won’t get off to a bad start if the first item on your list is an obvious one, because the reader knows from your opening sentence that less obvious items will follow.

Looking further forward, the “include” phrasing can be very useful, in various forms. It shows that you know actual examples, which is a good signal to send out, without limiting you to those examples. This is particularly useful if you’re writing a plan, where you want to show that you know what you’re doing, without committing yourself to one particular approach. You can do this with phrasing such as “Possible methods include…”

One thing to remember when doing this is the difference between “i.e.” and “e.g.” which is a common cause of confusion. “i.e.” comes from the Latin “id est” meaning “that is”. “e.g.” comes from the Latin “exempli gratia” meaning “for the sake of example”. There’s a long tradition of Latin teachers using Dad-joke-style humour to help people remember Latin phrases, so I’ll draw on that by saying that “i.e.” will “ti.e.” you down, whereas “e.g.” will “e.g.spand” your options. (I didn’t say that it was a good tradition, just that it’s a long one…)

On which note, I’ll end. I hope you find this useful.

May 1, 2017

Sending the right signals at interview

By Gordon Rugg

On the surface, a lot of the advice that you’ll see about sending the right signals at job interviews is either pretty obvious (e.g. “dress smartly”) or subjective (e.g. “dress smartly”) or social convention with no relation to what you’ll actually be doing in the job (e.g. “dress smartly”).

Below the surface, however, there are regularities that make a lot more sense of what’s going on. Once you know what those regularities are, you’re in a better position to send out the signals that you want, with the minimum of wasted effort and of misunderstanding on both sides.

So, what are those regularities, and where do they come from? The answer takes us into the reasons for Irish elk having huge antlers, and peacocks having huge tails, and monarchs having huge crowns.

Images from Wikipedia; credits at the end of this article

When you look at the research into signalling, by biologists and anthropologists, one concept is particularly useful. It’s known technically as honest costly signalling. In this context, “honest” means “I can put a lot of trust in the accuracy of this signal” and costly means “requiring a lot of resources”. This is subtly but significantly different from the everyday meanings of “honest” and of “costly”. To reduce the risk of confusion, I’ll deliberately avoid using those words where there’s a chance of ambiguity.

In biology, male land animals tend to have prominent body features that are used in courtship, such as the extravagant tail feathers of peacocks, or the enormous antlers of the extinct Irish elk. The features involved are typically ones that require excellent health and that are hard to fake, which makes them trustworthy signals of biological success (and therefore of good prospective mates).

In anthropology, the same principle appears in human behaviour. An example is the crown used by a king or queen, which is typically made of rare, highly valued materials as a signal of social success. However, finding signals that are hard to fake is difficult in human society, because human beings are very inventive in finding ways to fake signals. For example, Archimedes’ famous eureka moment arose from the challenge of determining whether a ruler’s crown was made of pure gold or of a cheaper mixture of gold and silver.

So how does this apply to the initial stage of job applications?

Before interviewers see you, they’ve read your application. There’s an asymmetry at the written application stage. If your written application is bad, then it’s probably an honest signal of badness. If it’s good, that isn’t a very trustworthy signal, because they have no way of knowing whether you wrote it yourself, or whether you persuaded a friend with brilliant writing skills to do it for you. This is one reason that most organisations like having interviews, even if the statistics for interview success in choosing the best candidate aren’t very inspiring; at least the interview lets the organisation detect some types of cheats and bullshit artists.

A brief side note: Selection panels are usually picky about typos in written applications, because they don’t want to hire someone who will cause a disaster through not being able to get the written text absolutely right. You might think that this is unfair to people with dyslexia, and you’d be right. An organisation which has its act together should have a process which includes having important documents proofread by someone with proper proofreading skills, which will make life fairer to people with dyslexia or poor spelling.

In case you’re wondering whether a simple mis-spelling can actually make a difference to anything, the answer is an emphatic “Yes”. There are plenty of cases where a typo, a mis-spelling or a mis-phrasing has caused a disaster, which is why a lot of employers will reject a job application because of a single typo in the cover letter.

How does this apply to the interview stage of job applications?

One obvious issue is that interviews involve questions and answers in real time, where you can’t ask a friend or do an online search for help in giving the right answer. This greatly reduces the options for sending out dishonest signals. Any halfway competent interviewer can rapidly get past the answers that the applicant has carefully prepared, and into territory that the applicant hasn’t prepared for. This doesn’t guarantee that what they find will be a completely accurate sample of the applicant’s abilities, but it’s likely to be a pretty good start.

Most candidates are well aware of this issue, and are very twitchy about being dragged out of their comfort zone into unprepared territory. If a candidate performs well in response to questions that they couldn’t have prepared for, then that’s likely to be an honest costly signal.

This, however, isn’t the only way of signalling strength. One signal which is often used semi-tacitly or tacitly involves shibboleth terms.

These are words or phrases which are used to show some form of group membership. Originally, they were words or phrases which outsiders found difficult to pronounce. In a broader sense, they are words or phrases with specific, specialist meanings, which outsiders tend to mis-use. Often, learning the specific specialist meanings involves mastering sophisticated concepts, making these terms honest costly signals.

For example, in many disciplines the terms “validity” and “reliability” have very different meanings from each other, and have strict definitions grounded in statistics and experimental design. So, if an applicant uses these terms in the correct sense without having to pause and think about them, it’s a pretty trustworthy signal that the applicant knows about a whole batch of underlying concepts in statistics and experimental design, and that there’s no need to poke around further in that area.

A common example which has significant legal implications is the distinction between “e.g.” and “i.e.”. A lot of people use these terms interchangeably, but there’s a big difference between them. The term “e.g.” means “for example”. This doesn’t tie you down. The term “i.e.” means “that is”. This does tie you down. There’s a big difference between “We offer out of hours customer support, for example on Sundays” and “We offer out of hours customer support, that is, on Sundays”. The first phrasing is saying that the out of hours support includes Sundays, but isn’t limited to Sundays. The second is saying that the out of hours support is only for Sundays (so it doesn’t include evenings, nights, or public holidays). That’s a significant difference, especially if you’re confronted by an angry customer who has been inconvenienced by your mis-phrasing.

At a craft skill level, this distinction is your friend in several ways. You can use the “e.g.” phrasing to give yourself some wiggle room in a plan, by giving an example of a method or material that you might use, but won’t necessarily use. This phrasing also allows you to get some credibility and/or Brownie points by showing that you know a specific relevant method or material. In addition, the fact that you’ve used it correctly implies that you’ll also know about a batch of other things which are usually taught together with the distinction between “e.g.” and “i.e.”

The take-home message from this is to pay attention to the apparently nit-picking distinctions that your lecturers make between terms that may be used interchangeably by non-specialists. Those distinctions are likely to act as shibboleth terms in interviews, and later when new work colleagues are meeting you for the first time and deciding how much use you’ll be. If you’re not sure why the lecturers are making a particular distinction, then it’s a wise idea to find out; if nothing else, it could get you that extra mark or two in an exam that takes you across a key boundary. On a more positive note, the more you understand your topic, the more you’re likely to get out of it, both in terms of skills and in terms of actually enjoying it.

Which leads us to the last topic in this article…

Closing thoughts

You might be starting to wonder bleakly whether job interviews are going to be an exercise in mental torture, where your every word will be measured against impossibly high standards. The reality is that the other candidates will usually be a lot like you, and will usually be feeling a lot like you, because that’s how the selection process works. The candidates who reach interview are usually at about the same level as each other, because significantly weaker candidates wouldn’t reach the interview stage, and significantly stronger candidates would have either been rejected as over-qualified (yes, that really does regularly happen) or been advised to apply for a higher grade job.

So, you don’t need to know every shibboleth term in your field to have any chance of a job; they help, and you should have picked up some of them along the way, but you don’t need to be a walking encyclopaedia of specialist terms. That should act as some reassurance to you.

Another source of reassurance is that there’s a classic form of question which interviewers often use if you’re having trouble with nerves, and feeling tongue-tied and incoherent. It’s some variant on: What did you most like/enjoy/find most interesting in your previous job?

It’s surprisingly effective at getting you to forget about being in an interview, and to start talking about something you really care about and know about. From the interviewer’s point of view, it’s very useful to discover what you really care and know about – for instance, is it about technical challenge, or producing a well finished product, or teamwork? It also shows the interviewer what you’re really like underneath the nerves; in other words, an honest signal that shows your best qualities.

On which encouraging note, I’ll end.

Notes

Credits for original pictures in the banner image:

Irish elk image: By Jatin Sindhu – Own work, CC BY-SA 4.0, https://commons.wikimedia.org/w/index.php?curid=49736189

Peacock image: By Pavel.Riha.CB at the English language Wikipedia, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=2438547

Crown image: By Cyril Davenport (1848 – 1941) – G. Younghusband; C. Davenport (1919). The Crown Jewels of England. London: Cassell & Co. p. 6., Public Domain, https://commons.wikimedia.org/w/index.php?curid=37624150

There’s more about the theory behind this article in my latest book:

Blind Spot, by Gordon Rugg with Joseph D’Agnese

http://www.amazon.co.uk/Blind-Spot-Gordon-Rugg/dp/0062097903

There’s more about job applications and interviews in The Unwritten Rules of PhD Research, by myself and Marian Petre.

Although it’s mainly intended for PhD students, there are chapters on academic writing which are highly relevant to undergraduate and taught postgraduate students. It’s written in the same style as this article, if that’s any encouragement…

The Unwritten Rules of PhD Research, 2nd edition (Marian Petre & Gordon Rugg)

http://www.amazon.co.uk/Unwritten-Rules-Research-Study-Skills/dp/0335237029/ref=dp_ob_image_bk

March 17, 2017

Cognitive load, kayaks, cartography and caricatures

By Gordon Rugg

This article is the first in a short series about things that look complex, but which derive from a few simple underlying principles. Often, those principles involve strategies for reducing cognitive load. These articles are speculative, but they give some interesting new insights.

I’ll start with Inuit tactile maps, because they make the underlying point particularly clearly. I’ll then look at how they share the same deep structure as satirical caricatures, and then consider the implications for other apparently complex and sophisticated human activities that are actually based on very simple processes.

By Gustav Holm, Vilhelm Garde – http://books.google.com/books?id=iDspAAAAYAAJ, Public Domain, https://commons.wikimedia.org/w/index.php?curid=8386260

One of the most striking discoveries from early work in Artificial Intelligence (AI) was that some of the skills long viewed as pinnacles of human intelligence could actually be replicated quite easily with very basic software. A classic example is chess, long viewed as a supreme triumph of pure intellect. Chess actually turned out to be easy to automate.

There were similar discoveries from classic work in the 1970s and 1980s about human judgment and decision-making. For tasks such as predicting the likelihood of a bank’s client defaulting on a loan, simple mathematical models turned out to be better predictors than experienced bank managers.

So, just because human beings think that something is complex and sophisticated doesn’t mean that it necessarily is complex and sophisticated. Often, very simple explanations turn out to be surprisingly accurate and powerful. This is the case with caricature drawing, which can be explained using a couple of very simple principles.

One of those principles is widely used in map making. The Inuit tactile map in the image below shows a stretch of coastline. The map is intended to be kept within a mitten, so that in cold weather you can consult it without having to take your hand out of the mitten.

https://en.wikipedia.org/wiki/Ammassalik_wooden_maps (cropped for clarity)

Each protruding part of the map corresponds to a headland protruding into the sea; each concave part of the map corresponds to a bay. The map can also show prominent mountains, etc, because it’s three-dimensional. This link shows how the tactile map corresponds to a paper map of the same area.

The tactile map doesn’t use the same scale for the distances between headlands as it does for how far the bays go inland, or for the steepness of the terrain. This sort of inconsistency is quite common in map making. One well-known reason is the difficulty of displaying curved sections of a spherical world on a flat surface, which causes severe distortion near the poles in the widely used Mercator projection.

A less well-known issue is that vertical relief is often deliberately exaggerated in raised relief maps, such as the one below. Often, the vertical relief is exaggerated by a factor of 5 or 10.

A raised relief map

Author: Jerzas at Polish Wikipedia, https://commons.wikimedia.org/wiki/File:Tatry_Mapa_Plastyczna.JPG

Because the exaggerated version often falls within the range of steepness that actually occurs within real mountainous landscapes, the exaggeration can easily go unnoticed.
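The arithmetic behind that point is simple. If you exaggerate heights by a factor v and leave horizontal distances alone, every gradient (rise over run) is multiplied by v. A slope of angle θ therefore appears as arctan(v × tan θ). The Python sketch below assumes that simple model of a raised relief map; the function name is illustrative.

```python
import math


def exaggerated_slope(true_slope_deg: float, vertical_exaggeration: float) -> float:
    """Apparent slope angle when heights are scaled but horizontal distances are not."""
    gradient = math.tan(math.radians(true_slope_deg))  # rise over run
    return math.degrees(math.atan(vertical_exaggeration * gradient))


if __name__ == "__main__":
    for factor in (1, 5, 10):
        apparent = exaggerated_slope(5, factor)
        print(f"5 degree slope, exaggeration x{factor}: appears as {apparent:.1f} degrees")
```

A gentle 5 degree slope comes out at roughly 24 degrees with 5x exaggeration, and at roughly 41 degrees with 10x. Both values are well within the range of real mountain terrain.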

Why do mapmakers do this? Because of the way the human sensory system and cognitive system work. The exaggeration makes it easier to process the image or model, and to identify key features in it.

How does this relate to caricatures? Because exactly the same process of systematic exaggeration can be used to generate a caricature from an image via software, without any need for a human artist. The steps involved are as follows. I’ve used profiles because they make it easier to demonstrate the process.

Step 1: Measure some pictures of human faces, as shown below in blue, with red measurement lines.

Step 2: Calculate the average value of each measurement, to produce an average profile (shown in black).

Original black profile image By SimonWaldherr – Own work, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=16531195

Step 3: Compare the face that you want to caricature with the average profile, as shown below, where the target face is in beige.

Step 4: Exaggerate the differences between the target face and the average profile. You can do this in various ways; for instance, if the nose in the target face protrudes 3 millimetres from the average profile, you could double this distance to make it protrude 6 millimetres, or you could square it to 9 millimetres, to make it particularly prominent. I’ve gone for a comparatively small exaggeration, to illustrate the uncanny valley issue, described below.
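Steps 1 to 4 can be sketched in a few lines of Python. This is a minimal illustration, not the software used for the images in this article: each profile is assumed to be a list of measurements in millimetres taken at the same landmarks (for instance nose, chin and brow), and the function names and numbers are hypothetical.

```python
import math
from statistics import mean


def average_profile(profiles):
    """Step 2: average each measurement across all the measured faces."""
    return [mean(values) for values in zip(*profiles)]


def caricature(target, average, mode="double"):
    """Steps 3 and 4: exaggerate each difference from the average profile.

    mode="double" doubles each difference; mode="square" squares its size
    while keeping its direction, so receding features recede further.
    """
    result = []
    for t, a in zip(target, average):
        diff = t - a  # Step 3: compare the target with the average
        if mode == "double":
            diff = 2 * diff
        elif mode == "square":
            diff = math.copysign(diff * diff, diff)
        else:
            raise ValueError(f"unknown mode: {mode}")
        result.append(a + diff)
    return result


# Step 1 (hypothetical data): nose, chin and brow measurements in mm
faces = [[20, 10, 8], [22, 12, 8], [24, 14, 8]]
avg = average_profile(faces)  # 22, 12 and 8 mm
target = [25, 10, 8]          # nose 3 mm further out, chin 2 mm further in
print(caricature(target, avg, "double"))  # nose 28, chin 8, brow 8
print(caricature(target, avg, "square"))  # nose 31, chin 8, brow 8
```

One side effect of squaring in millimetres is that differences smaller than 1 mm shrink rather than grow, so with that option the choice of units matters.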

The images below show the before and after versions of the original target image.

Here’s an ancient Roman example for comparison; caricatures have been around for a long time…

Photo by Vincent Ramos – Vincent Ramos, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=1137967

The example above shows how the principle of caricature works, but it doesn’t discuss how far the exaggeration should be pushed. That’s where the second underlying principle comes in.

A useful guideline for caricatures is to push the exaggeration far enough to take it to the other side of the uncanny valley. The uncanny valley is the uncomfortable space between two categories. Usually, this is used in the context of the border territory between human and not-human, such as lifelike models of human beings, or part-human monsters such as werewolves, vampires and zombies. However, the uncanny valley also occurs in other contexts; I’ve blogged about these contexts in this article.

Caricatures are usually well on the far side of the uncanny valley, which reduces uncertainty and cognitive load for the viewer. “Well on the far side” can be measured; as a rough rule of thumb, it’s about three standard deviations or more from the mean, corresponding to a level that most people would see only rarely, or never, in a lifetime.
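The “three standard deviations” rule of thumb can be turned into a calculation: measure how far a feature is from the mean in standard-deviation units (its z-score), then work out what multiplier on its difference from the mean would push it out to three standard deviations. Here is a minimal Python sketch using made-up measurements for a single feature; the threshold of 3 is the rough guide above, not a precise boundary.

```python
from statistics import mean, pstdev


def z_score(value, population):
    """How many standard deviations `value` lies from the population mean."""
    return (value - mean(population)) / pstdev(population)


def factor_to_reach(value, population, target_sd=3.0):
    """Multiplier on the difference from the mean that puts `value`
    `target_sd` standard deviations away from the mean."""
    z = z_score(value, population)
    if z == 0:
        raise ValueError("feature is exactly average; nothing to exaggerate")
    return target_sd / abs(z)


# Hypothetical nose protrusions (mm): mean 22, standard deviation 2
noses = [20, 20, 24, 24]
print(z_score(25, noses))          # 1.5 standard deviations from the mean
print(factor_to_reach(25, noses))  # 2.0: doubling the 3 mm difference gives 28 mm
```

The sketch uses `pstdev`, treating the measured faces as the whole population; with a small sample drawn from a larger population, `stdev` would be the more usual choice.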

Conclusion and further thoughts

Silhouette caricatures can be produced using just a couple of simple underlying principles. Those same principles can be applied to three-dimensional caricatures, like the heads of puppets. You just need to measure the distance between the centre of the head and each point on the skin, and apply the same principles of exaggerating the differences between an average head and the head that you are caricaturing in the puppet. Yes, that involves a lot of measurements, but it’s just a case of repeating the same basic steps a lot of times, rather than having to invoke any new, complex principles.
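The three-dimensional version can be sketched the same way, using the distance from the centre of the head to each point on the skin. This Python sketch assumes that the target head's surface points are given as (x, y, z) coordinates relative to the head's centre, and that the average head's radius has already been measured along the same direction for each point; the function name and the factor of 2 are illustrative assumptions.

```python
import math


def exaggerate_head(points, average_radii, factor=2.0):
    """Move each surface point along its own direction from the head centre,
    so that its difference from the average head's radius is multiplied
    by `factor`."""
    result = []
    for (x, y, z), r_avg in zip(points, average_radii):
        r = math.sqrt(x * x + y * y + z * z)  # distance from centre to skin
        new_r = r_avg + factor * (r - r_avg)
        scale = new_r / r
        result.append((x * scale, y * scale, z * scale))
    return result


# Hypothetical data: a nose tip 1 unit further out than average,
# and a cheek point exactly matching the average head
target_points = [(0.0, 0.0, 11.0), (10.0, 0.0, 0.0)]
average = [10.0, 10.0]
print(exaggerate_head(target_points, average))
# nose tip moves out to about 12 units; the cheek point stays where it is
```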

So, what might look like a complex triumph of human skill can be modelled using just a couple of simple underlying principles.

With caricatures, a key feature is that the end product is unambiguously on the far side of the uncanny valley. What happens when it isn’t?

When the end product is within the uncanny valley, then by definition it will produce an uncomfortable feeling in most viewers. (The question of why it only produces this feeling in most viewers, rather than all, is one which I’ll revisit in a later article.)

There’s a very different reaction when the end product is about one to two standard deviations from the mean; for example, a man who is around six feet tall. This tends to be a “sweet spot” for favourable perceptions, before reaching the “too much of a good thing” level. I’ve blogged about this topic in this article and this article, and gone into more depth in my book Blind Spot.

This in turn raises broader questions about what people want, and about what regularities there are within those desires, and about the implications for the entertainment industry and for social norms and for politics. Those, though, are questions for later articles…

Notes

You’re welcome to use Hyde & Rugg copyleft images for any non-commercial purpose, including lectures, provided that you state that they’re copyleft Hyde & Rugg.

There’s more about the theory behind this article in Gordon’s latest book:

Blind Spot, by Gordon Rugg with Joseph D’Agnese

http://www.amazon.co.uk/Blind-Spot-Gordon-Rugg/dp/0062097903

January 1, 2017

Life at Uni: Some tips on exam technique

By Gordon Rugg

Standard disclaimer: This article is as usual written in my personal capacity, not in my Keele University capacity.

Sometimes, the acronyms that fit best are not the ones that produce the most encouraging words. That’s what happened when I tried to create an acronym to help with exam technique. It ended up as “FEAR FEAR”. This was not the most encouraging start. So, I’ll move swiftly on from the acronym itself to what it stands for, which is more encouraging, and should be more helpful.

A lot of people find exams mentally overwhelming. This often leads to answers that aren’t as good as they could be. When you’re in that situation, it’s useful to have a short, simple mental checklist that helps you focus on the key points that you want to get across. That’s where the acronym comes in.

F is for Facts, and F is for Frameworks

E is for Examples, and E is for Excellence

A is for Advanced, and A is for Application

R is for Reading, and R is for Relevance

In the rest of this article, I’ll work through each of the items, unpacking what they’re about, and how to handle them efficiently.

There’s another standard disclaimer at this point: Different fields have different ways of doing things, so it’s a good idea to find some guidelines that are specific to your field; wherever they’re different from the ones below, then go with the specific ones.

A couple of points before we get into the mnemonic:

First, general marking principles. Most disciplines have marking guidelines which say what skills and knowledge an answer should demonstrate in order to reach a particular grade, such as a distinction or a medium pass or a bare minimum pass. If you haven’t seen these guidelines for your field, then it’s a good idea to sweet-talk a knowledgeable member of staff into going through the guidelines with you, and explaining exactly what is meant by wording such as “evidence of advanced critical thinking”.

Second, the problem of the Sinful Student. Quite a few of the marking guidelines exist because in the past, sinful or clueless students have done something outrageously bad, such as claiming to have read things that they’ve never even seen. Good and virtuous students are often completely unaware of this, so it never occurs to them that they might need to demonstrate that they have actually read the article that they’re quoting, etc. A common example is good students not bothering to mention technical terms, etc, because they take it for granted that the marker knows that they know those terms, and have never dreamt that anyone would try to bluff their way through the exam without ever studying the topic. The marker can only give marks to what’s written on the page, so if in doubt, err on the side of demonstrating your knowledge on the page. You get marks for demonstrating knowledge, even if the result is boring and heavy writing. In exams, boring and heavy are your friends.

On to the acronym…

F is for Facts

The facts should be specific pieces of information that people on the street don’t know; for instance, technical terms, names of key researchers, technical concepts, etc. These show that you’ve been on the course, and learned things. However, they’re a basic starting point, rather than the whole story.

F is for Frameworks

Facts only make sense in a bigger framework. In most fields, you show more advanced knowledge, which can get higher marks, by demonstrating your knowledge of the relevant frameworks. In computing, for example, there are various frameworks for developing software, such as the waterfall model or the spiral model or non-functional iterative prototyping. It’s a good idea to show that you know what the frameworks are that relate to the question you’re answering.

E is for Examples

Examples show that you understand the concept you’re writing about, and can show how it applies to the world. Examples can come from everyday life, or from the lectures, or from independent reading. Usually, any example is better than no example.

E is for Excellence

It’s a good idea to learn about the indicators of excellence in your field, and to make sure to include them in your answers where possible. I’ve blogged about this previously, in this article. Some signs of excellence are low level, and don’t require much knowledge or brainpower (for instance, sustained attention to detail in formatting, etc). Other signs of excellence are higher level, and require more effort, but don’t necessarily involve advanced knowledge (e.g. laying out your answers in a clear, systematic and thorough way). Others do require advanced knowledge, which is the topic of the next section.

A is for Advanced

The lectures and textbooks typically focus on the expected average level of student understanding. It’s difficult to cover a decent-sized academic topic properly in a single module, so the content that students receive is usually a stripped-down version rather than the full story. If you want to get marks at a distinction level, you’ll need to demonstrate advanced knowledge as well as the standard knowledge. If that sounds depressing, don’t worry; you don’t need to demonstrate astounding knowledge of everything. It’s like adding spice to a meal; it doesn’t take much spice to go from bland to delicious, but that bit of spice makes all the difference. I’ve blogged here about how to learn about advanced concepts swiftly and efficiently.

A is for Application

In most fields, it’s good to show that you can take concepts from your course and apply them to problems. I’ve dealt with students who could memorise vast quantities of material from textbooks and module handbooks, and then regurgitate them practically word for word in exams. This was impressive at one level, but it left me with no idea whether the student understood anything of what they were reciting. I’ve also dealt with students who were very good at using formal notations, but had no idea what the purpose of those formal notations was. Academics in applied disciplines get twitchy about such situations, for very practical reasons; for instance, would you be happy to fly in an aircraft which had been designed by someone who had passed their exams by regurgitating the words from the handbooks, with no evidence of understanding how to use the concepts involved? So, some disciplines and/or modules make a big deal of students demonstrating that they know how to apply what they have learnt.

R is for Reading

This is one of the ways of showing advanced skills and knowledge, and getting into the frame for a distinction. Nobody’s likely to give you a distinction for doing the bare minimum possible. For high marks, you need to show that you’ve done relevant reading on your own initiative. You won’t usually be expected to remember the full bibliographic references for that reading in an exam; that would be a waste of your memory. (Coursework is a different matter; there, you will normally be expected to give the full bibliographic reference for each piece of reading, and you’ll get marks for each one you give.) In an exam, just remembering the concepts, and maybe the authors’ names, will be enough. As with advanced knowledge, this is like spice; you only need a bit to lift what you produce from bland to excellent.

R is for Relevance

In case you’re wondering whether anyone would actually be silly enough to write answers that aren’t relevant: Yes, quite a lot of exam candidates do just that. It’s usually not because of silliness; more often, it’s because of misunderstanding, or desperation. Misunderstandings can happen, and if it’s a reasonable misunderstanding, then markers normally go along with the misunderstanding. If you’re not sure what a question actually means, then one good strategy is to write down why you’re not sure what it means, and to write down which meaning you’re going to run with in your answer. That makes you look more like an honest student grappling with an unclear phrasing than like a clueless, desperate student doing a brain dump. (A gentle tip: It’s wise to be tactful in your explanation of why you’re not sure what the question means…)

Closing thoughts

There are a lot of good guidelines for general exam strategy, available online and in books and via student support services (who are usually very happy to help with exam nerves, exam technique, etc).

I’ve deliberately not gone into issues that are well covered in those sources, such as how to budget your time, or how to handle questions where you don’t know very much. This article is intended to complement those sources, not to replace them.

If you’re struggling to remember anything about exam technique, then one simple guideline that should help is the cabinet making metaphor. Exams and coursework are just like the way that apprentices in the old days learned to be cabinet makers. At the end of their apprenticeship, they had to make a cabinet to demonstrate that they had the skills needed to become a master cabinet maker (which is where the word “masterpiece” comes from; it was the piece of work which showed that you were ready to become a master).

So, if you were an apprentice making that cabinet, you’d make sure that your cabinet demonstrated every skill you had ever learnt, from sawing to polishing, and with every complicated joint that you’d ever encountered, so that there was no doubt whatever in the examiners’ minds about your excellence. It’s just the same with exams and coursework today.

On which inspiring note, I’ll end. If you’re reading this in preparation for an exam, then I hope you find it useful. You might also find other articles in this series useful; there’s an overview of them at the URL below.

http://www.hydeandrugg.com/pages/resources/student-academic-skills

Notes

You’re welcome to use Hyde & Rugg copyleft images for any non-commercial purpose, including lectures, provided that you state that they’re copyleft Hyde & Rugg.

There’s more about the theory behind this article in Gordon’s latest book:

Blind Spot, by Gordon Rugg with Joseph D’Agnese

http://www.amazon.co.uk/Blind-Spot-Gordon-Rugg/dp/0062097903

There’s more about academic writing in The Unwritten Rules of PhD Research, by myself and Marian Petre.

Although it’s mainly intended for PhD students, there are chapters on academic writing which are highly relevant to undergraduate and taught postgraduate students. It’s written in the same style as this article, if that’s any encouragement…

The Unwritten Rules of PhD Research, 2nd edition (Marian Petre & Gordon Rugg)

http://www.amazon.co.uk/Unwritten-Rules-Research-Study-Skills/dp/0335237029/ref=dp_ob_image_bk

November 27, 2016

Visualising complexity

By Gordon Rugg

Note: This article is a slightly edited and updated reblog of one originally posted on the Search Visualizer blog in 2012.

How can you visualise complexity?

It’s a simple-looking question. It invites the response: “That all depends on what you mean by ‘complexity’ and how you measure it”.

This article is about some things that you might mean by “complexity” and about how you can measure them and visualise them. It’s one of those posts that ended up being longer than expected… The core concepts are simple, but unpacking them into their component parts requires a fair number of diagrams. We’ll be exploring the theme of complexity again in later articles, as well as the theme of practical issues affecting visualizations.

This article focuses mainly on board games, to demonstrate the underlying principles. It then looks at real world activities, and some of the issues involved there.

Measuring complexity in board games

There are various well-established ways of defining and measuring complexity, such as information theory and Kolmogorov complexity. In this article, I’m focusing on complexity in the sense of the number of possible outcomes from an initial starting point, and how to represent them visually.

Here’s one way you can measure the complexity of chess, without much calculation involved.

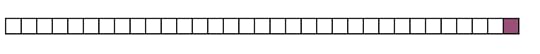

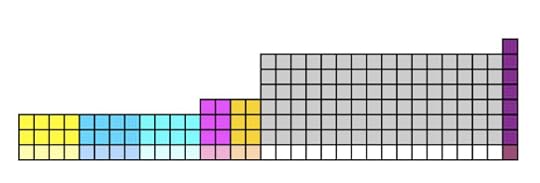

You have 32 pieces, plus one board. Some pieces are only represented once on each side (e.g. the king) while others are represented more than once (e.g. bishops and pawns). I’ll ignore that for the first illustration, but return to it later. Here’s a representation of the 32 pieces and the board, with each white box in the illustration representing one piece, and the final plum coloured square representing the board.

There are also rules in chess, whose complexity needs to be factored in. Some rules apply to particular pieces (for example, how knights move) and others don’t (for example, the rule that the white side gets to move first).

We can represent that by adding a box for each rule that applies to a particular piece, and adding another set of boxes for all the other rules.

We’ll start with the four knights, for simplicity. Each knight has the following rules that apply to it.

It moves in a particular way (two squares horizontally or vertically, and then one square at right angles).

It can take an opposing piece if it lands on the square occupied by that piece.

That’s just two rules. We can represent that as follows.

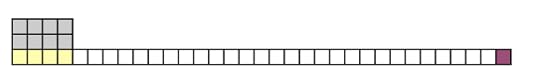

We’ll add two boxes representing these rules above each of the boxes that represents a knight. We’ll use the four boxes on the left to represent the knights; we’ll colour-code them yellow so you can see which ones they are. We’ll colour-code the rules grey.

Next, we can add the bishops. Each bishop also has two rules that apply to it, one about how it moves, and one about how it takes a piece. We’ll colour-code the four bishops light blue.

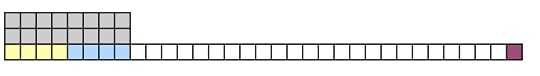

It’s the same principle for the four rooks, which each have one rule about how they move, and one rule about how they take a piece. (Note for chess players: We’ll treat castling separately, later.)

Next, we’ll add the two kings, which have two rules about directions in which they can move, plus one rule about how they capture pieces. (We’ll treat the rules about check and checkmate separately.)

The two queens also have two rules about how they move, and one rule about how they capture.

Now, the pawns. Each pawn has the following rules which apply to it.

It can move two squares directly forward on its first move.

It can move one square forward if the square in front is empty.

It can take an opposing piece which is one square diagonally away from it and in front of it.

It can take an opposing pawn en passant, immediately after that pawn has moved two squares forward on its first move and landed beside it (I’ve omitted the rest of the details for brevity).

If it reaches the far side of the board, it is promoted to become another type of piece (any piece except a king).

That’s five rules, so for each pawn, we’ll add five boxes above it.

We’ll treat the next sixteen white boxes as representing pawns.

The boxes representing rules will be in grey, to distinguish them from the white boxes representing the pawns themselves.

There are also some general rules, about the number of squares on the board, their distribution (alternating between two colours), about castling, about stalemate conditions, about check, etc. Those are shown above the plum coloured square; we’ll assume that there are six of them (the precise number varies depending on whether you treat some of them as being two separate rules or all part of the same rule – for instance, the rules about the colours and distribution of squares).

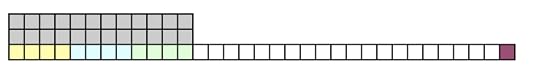

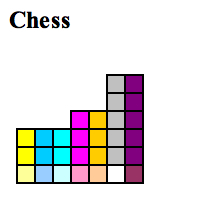

So chess looks like this.

If you want to be more precise, you can colour code the rules as well as the pieces, so that the rules applying to pawns are coloured differently from the rules applying to knights, and so on.

So that’s one way of showing the complexity of chess.
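The box counts for the chess diagram can be tallied directly from the walkthrough above. This Python sketch encodes the rule counts as given in the article (two rules each for knights, bishops and rooks, three for kings and queens, five for pawns, plus six general rules and one box for the board); as noted above, other ways of splitting the rules would give slightly different totals.

```python
# piece type: (number of pieces on the board, rules that apply to each piece)
CHESS_PIECES = {
    "knight": (4, 2),
    "bishop": (4, 2),
    "rook": (4, 2),
    "king": (2, 3),
    "queen": (2, 3),
    "pawn": (16, 5),
}
GENERAL_RULES = 6  # board layout, castling, check, stalemate, etc.
BOARD_BOXES = 1    # the single plum coloured square


def tally(pieces, general_rules, board_boxes=BOARD_BOXES):
    """Count the boxes in the diagram: pieces, board, and rules."""
    piece_boxes = sum(count for count, _ in pieces.values())
    rule_boxes = sum(count * rules for count, rules in pieces.values())
    rule_boxes += general_rules
    return piece_boxes, board_boxes, rule_boxes


pieces, board, rules = tally(CHESS_PIECES, GENERAL_RULES)
print(f"{pieces} piece boxes, {board} board box, {rules} rule boxes")
print(f"{pieces + board + rules} boxes in total")
```

On these counts the pawns dominate: 80 of the 122 rule boxes belong to them, because there are sixteen pawns with five rules each.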

Using visualisations for comparisons

An advantage of systematic measurement and visualisation is that you are able to compare items directly.

That lets us ask how chess compares to other games.

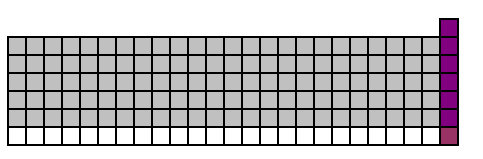

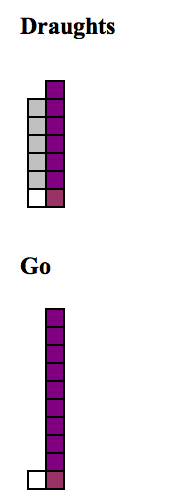

Here’s what the game of Draughts (“Checkers” in the USA) looks like using the same approach.

This has twelve black pieces and twelve white pieces, with five rules applying to each piece (normal movement, normal capture, promotion if they reach the far side, movement if they are promoted and capture if they are promoted).

The board is the same as a chess board, and there are about as many general rules about which side starts first, etc (again, the precise number depends on which rules you treat as separate and which you treat as parts of the same overall rule).

That shows draughts as much simpler than chess. That’s consistent with what you find if you calculate the possible number of moves in a draughts game compared to the possible number of moves in a chess game – chess is far, far more complex on that metric.

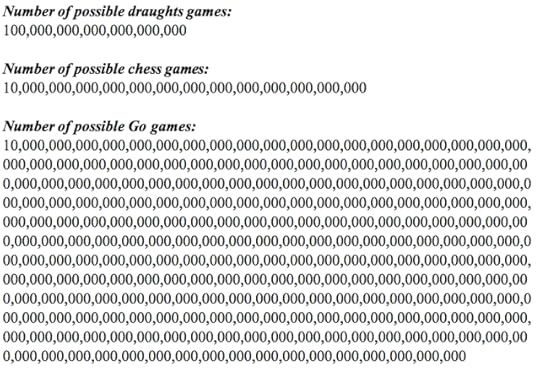

What about the game of Go? Like chess and draughts, it’s a board game, played with black pieces and white pieces. In other respects, though, it’s very different.

There are many more pieces than in chess and draughts: a total of 361 pieces, consisting of 180 white and 181 black.

The nature of the rules is also different from chess and draughts. In Go, the pieces don’t move once they’ve been put on the board, so there aren’t any rules specific to the individual pieces.

There are about ten overall rules (again, depending on how you structure the definitions, and also on whether you’re using the Chinese or the Japanese version, etc).

So how could we represent this in a diagram? One answer is to use a much bigger diagram, but that leads to logistical difficulties. Another is to use a different notation, but that leads to conceptual difficulties. The next section examines the nature and implications of these difficulties.

Notations, logistics and purposes

One conceptually clean solution is to use exactly the same notation for all three games. That would bring out very vividly the differences in size and in structure between them.

The obvious problem with that is logistical. At the scale we’ve been using so far, the diagram would be about a metre wide.

One obvious response to that problem is to use smaller squares, but that would make the diagrams difficult to read because the squares would need to be tiny – even at half a millimetre per square, the Go diagram wouldn’t fit onto a typical document page.

Another obvious response is to use a different form of scaling – for instance, making each square on the horizontal axis in the diagram represent ten pieces, like this. I’ve only represented the number of pieces, with one square per ten pieces, and not represented the rules, because on that scale they’d be too small to show.

This shows fairly clearly how different the three games are in the number of pieces they use.

However, it’s not completely accurate. One major drawback is that it loses the differences between different types of piece, such as rooks and pawns. Another is that this can’t show the rules, because they’d be too small to see at this scale, and the alternative would be to have an inconsistent scale.
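The ten-pieces-per-square scaling can be mimicked with a text bar chart. In this Python sketch, which is an illustration rather than how the original diagrams were drawn, each piece count is rounded to the nearest whole square; the rounding is one more way in which detail gets lost at this scale.

```python
PIECE_COUNTS = {"Draughts": 24, "Chess": 32, "Go": 361}
PIECES_PER_SQUARE = 10


def scaled_bar(pieces, per_square=PIECES_PER_SQUARE):
    """One '#' per `per_square` pieces, rounded to the nearest whole square."""
    return "#" * round(pieces / per_square)


for game, count in PIECE_COUNTS.items():
    print(f"{game:<9}{scaled_bar(count)}")
```

Draughts and chess end up only one square apart (two and three squares), while Go needs thirty-six.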

Internal consistency

A cleaner response is to use a different set of conventions – for instance, to have each type of piece represented by a single square – so one square to represent the type “pawn” and another to represent “rook” and so forth. This has the advantage of being internally consistent, rather than being based on nothing more than short-term convenience.

If we do that, then we get some very different diagrams from what we would have got using the two previous responses. As before, the last column represents the “general” rules, and each previous column represents different types of piece (ignoring the distinction between black and white, since each type of white piece obeys the same rules as its black counterpart in these games).

This brings out vividly the minimalism of Go. It only has one type of piece (in two colours, but only one type of piece) and you can’t get much more minimalist than that. However, that doesn’t mean that Go is a simple game. In some ways, it’s very complex. If we measure its complexity in terms of how many different possible games you could play before you run out of new games, then it’s much more complex than either chess or draughts. To represent that, we need to use a different format, because of logistics.
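The one-square-per-type convention can also be tallied. This Python sketch uses the per-piece rule counts from the chess and draughts sections, treats all draughts pieces as one type (with promotion covered by their five rules), and assumes six general rules for draughts and ten for Go, in line with the article’s rough estimates; different ways of splitting the rules would change the totals slightly.

```python
# game: (rules per piece type, number of general rules)
GAMES = {
    "Chess": ({"king": 3, "queen": 3, "rook": 2,
               "bishop": 2, "knight": 2, "pawn": 5}, 6),
    "Draughts": ({"piece": 5}, 6),
    "Go": ({"stone": 0}, 10),  # stones never move, so no piece-specific rules
}


def per_type_summary(type_rules, general_rules):
    """Return (piece types, piece-specific rule boxes, total rule boxes)."""
    specific = sum(type_rules.values())
    return len(type_rules), specific, specific + general_rules


for game, (type_rules, general) in GAMES.items():
    types, specific, total = per_type_summary(type_rules, general)
    print(f"{game}: {types} piece type(s), {specific} piece-specific rules, "
          f"{total} rules in all")
```

On this convention Go has the fewest rule boxes of the three; the count says nothing about how many distinct games each set of rules allows.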

The number of possible games of chess, draughts or Go is enormous. There are various estimates; I’m focusing on the underlying concepts rather than the precise numbers. Some numbers that I’ve seen quoted are as follows.

Draughts: $10^{20}$ possible games

Chess: $10^{40}$ possible games

Go: $10^{700}$ possible games

This is a standard mathematical representation, and it’s unfortunately one which is easy to misunderstand. To non-mathematicians, it looks as if there are twice as many possible chess games as draughts games, and thirty-five times as many possible Go games as draughts games. That’s a misinterpretation. Here’s what those notations actually mean.
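Python’s arbitrary-precision integers make it easy to unpack the estimates quoted above and see why the “twice as many” reading is wrong: each increase of one in the exponent multiplies the number by ten. The exponents below are the ones quoted in the article; the function names are just for illustration.

```python
ESTIMATE_EXPONENTS = {"Draughts": 20, "Chess": 40, "Go": 700}


def zeroes(exponent):
    """Number of zeroes after the 1 when 10**exponent is written out."""
    return len(str(10 ** exponent)) - 1


def how_many_times_more(exp_a, exp_b):
    """How many times bigger 10**exp_a is than 10**exp_b."""
    return 10 ** exp_a // 10 ** exp_b


for game, exp in ESTIMATE_EXPONENTS.items():
    print(f"{game}: 1 followed by {zeroes(exp)} zeroes")

print(how_many_times_more(40, 20))           # 100000000000000000000 (10**20), not 2
print(zeroes(how_many_times_more(700, 20)))  # 680: Go is 10**680 times draughts, not 35
```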

Unpacking the numbers from the mathematical notation helps to show that the difference is big. However, even this unpacking doesn’t begin to show how big the difference is. Most human beings have trouble grasping just what that number of zeroes means.

Here’s an example.

We’ll use one of the oak leaves in the foreground of this image to represent the number of possible games of draughts.

Let’s say that each oak leaf is 1/10 mm thick and 10 square centimetres in area.

On that scale, with one oak leaf representing the number of possible games of draughts, the number of possible games of chess is enough to fill the first valley in the picture with oak leaves, in a swathe that stretches across the whole area framed by the branches on each side. If you decided that the number of possible games of chess was actually $10^{42}$ instead of $10^{40}$ then, on the same scale, you’d be talking about enough leaves to blanket everything visible up to the horizon a kilometre deep in leaves. On the same scale, the complexity of Go is beyond what most humans can envisage. The usual comparisons are along the lines of filling the entire universe with grains of sand, or leaves, or whatever you’re using to represent the games, and still not having enough space.

How to represent such big numbers is a fascinating question, that needs an article in its own right. For the moment, we’ll return to more tractable numbers, and to visualizing the complexity of activities other than games.

Carpentry and complexity

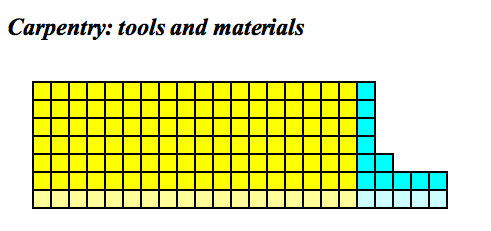

Here’s a demonstration of how the principle works. It involves an old copy of Teach Yourself Carpentry from the local second-hand bookshop. That book’s list of recommended tools for the beginner contains 23 items. It lists half a dozen different types of wood. Some tools are used to work wood; some are used on other tools and materials (for instance, a screwdriver is used on screws, rather than being used directly on the wood).

We can represent this using the same format as with the games, where each of the bottom row of squares represents a different tool, and the rows of squares above them represent what each tool can be applied to. The book doesn’t spell out which types of tool can be applied to which types of wood; I’ve made the simplifying assumption that all six types of wood mentioned in the book can be worked with all of the tools that can be applied to wood. In reality, some tools would only be used on a limited number of types of wood.

Eighteen of the tools in the beginner’s set are for use directly on wood. These are shown in light yellow. There are five other tools in the set, shown in turquoise.

The oilstone is used to sharpen the chisels and the plane-irons (a total of six tools).

The mallet is used on chisels (two types specified in the set).

The pincers are used on nails.

The hammer is used on nails.

The screwdriver is used on screws.
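The carpentry diagram’s box counts can be tallied in the same way as the chess one. This Python sketch follows the simplifying assumption stated above, that all 18 woodworking tools can be used on all six types of wood; the tool names and counts come from the list above.

```python
WOOD_TYPES = 6
WOOD_TOOLS = 18  # tools used directly on wood

# tool: number of things it is applied to, according to the list above
OTHER_TOOLS = {
    "oilstone": 6,     # the chisels and plane-irons
    "mallet": 2,       # two types of chisel
    "pincers": 1,      # nails
    "hammer": 1,       # nails
    "screwdriver": 1,  # screws
}

tool_boxes = WOOD_TOOLS + len(OTHER_TOOLS)
application_boxes = WOOD_TOOLS * WOOD_TYPES + sum(OTHER_TOOLS.values())

print(f"{tool_boxes} tool boxes, {application_boxes} application boxes, "
      f"{tool_boxes + application_boxes} in total")
```

These counts cover only tools and materials; the wood tools account for 108 of the 119 application boxes because of the all-tools-on-all-woods assumption.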

So does this mean that carpentry is less complex than chess?

The answer is no, because we’ve only shown some of the complexity in carpentry. Chess consists of two sets of variables, namely pieces and rules. Carpentry, however, involves three sets of variables – tools, materials and actions. The diagram above only shows tools and materials.

We can use the same representation to show how many separate activities can be carried out using each tool. The exact number will depend on the level of detail that we want to specify. For instance, we could treat the production of the various types of woodworking joint separately, or we could lump them together under “making a woodworking joint”. This approach fits neatly with well-established methods of task analysis, such as using a Therblig notation. We’ll examine this issue in a separate article.

October 31, 2016

Why Hollywood gets it wrong, part 4

By Gordon Rugg

The first article in this short series examined how conflicting conventions and requirements can lead to a movie being unrealistic. The second article explored the pressures driving movie scripts towards unrealistically high signal to noise ratios, with few of the extraneous details that occur in real conversations. The third in the series examined how and why movies depict a world which requires the word “very” to describe it.

All of those themes are arguably about movies either selecting versions of reality, or depicting versions of reality which are simplified and/or unlikely. Those versions are unrealistic, but not actively wrong in the strict sense of the word. The underlying common theme is that they’re simplifying reality and/or exaggerating features of it.

Today’s article looks at a different aspect, where movies and games portray the world in a way that flatters and reassures the audience, regardless of how simplified or exaggerated the accompanying portrayal of the world might be. This takes us into the concepts of vicarious experience, of vicarious affiliations, and of why Wagner’s music isn’t as bad as it sounds. It also takes us into the horribly addictive pleasures of TV tropes…

Horrors of the apocalypse, and Wagner…

A recurrent theme in our previous articles about human perception has been whether or not something is perceived as threatening. Humour, for instance, often involves the switch from an initially scary appearance to a completely non-scary interpretation of the same situation.

At first sight, horror movies may appear to be about deliberately taking people into scary territory. However, as with many other pastimes which look scary, the scariness usually occurs within a well-defined context. The context typically takes one of two forms. Either the participant has no control (e.g. being on a rollercoaster) but is in a setting which makes it clear that the real risk is minimal, or the participant is in a setting which involves real risk (e.g. mountaineering) but where the participant has a perception of control via skill.

In the case of horror movies, it’s probably no accident that there are well-defined categories of horror movies, such as the slasher, or the haunted house. This means that a horror movie fan is likely to be familiar with the standard categories, and will therefore be able to predict fairly well what is likely to happen next at any point in a standard horror movie. This predictability helps to keep the scariness within bounds. We’ll return in a later article to what happens when a movie breaks out of the standard conventions for its genre.

In this article, we’ll focus on one way to reassure audiences, namely portraying scary situations in a way that gives vicarious reinforcement to the viewer’s feeling of control. This can take various forms.

One common form is giving a privileged, insider’s view of a situation; for instance, showing events from the viewpoint of elite special forces units within the military, complete with specialist technical vocabulary, specialist knowledge, etc. This gives the viewer a vicarious feeling of membership of those units, and a vicarious feeling that in a firefight, they would know how to behave like a member of one of those units.

Another common form is closely related, but different in that it involves imagining an alternative way of handling a situation. A classic form is re-imagining some part of history, from a “Why didn’t they do X?” perspective. For example, recent movies about ancient Roman warfare such as Gladiator and The Eagle show the Romans using flaming ditches as a surprise defence. This looks like a feasible approach to most modern viewers, who are familiar with highly flammable fuels such as petrol and paraffin. The outcome is that the viewers are reassured that if they were sent back in time, they would find solutions that the locals had missed, and would soon have high social status.

The same narrative device is common in movies where the protagonists interact with the natives. It’s so common, in fact, that it’s a well-recognised trope, known as “Mighty Whitey”. Tropes are similar to schemata; a trope is a well-established pattern of events within fiction, usually fairly simple by schema standards (e.g. the old trope that if a Western hero kissed a non-Western woman in a movie, then she would die tragically before the end of the movie). The TV Tropes website is a huge, fascinating collection of tropes, and is well worth visiting, although there’s a high risk that you’ll emerge from it several hours later, wondering where the time went.

Returning to the Mighty Whitey trope: The usual form involves a white man rising swiftly to prominence among the natives because of his superior intellect and skills. This trope can be combined with the historical version, as in movies such as The Last Samurai, where the Western hero becomes a high-status member of samurai society. Again, this is likely to provide vicarious flattery to white males watching the movie, and to reassure them that they would be able to surmount yet another form of adversity.

So, giving viewers the feeling of control can reduce scariness to within acceptable bounds, and flatter viewers at the same time. (Usually, in the case of horror movies, white male viewers, but that’s a topic that’s been well addressed in both academic and popular culture.)

How well does that feeling of control correspond to reality? As you might suspect, it usually doesn’t correspond very well.

In the case of the Mighty Whitey trope, there have been documented cases where Europeans achieved significant status within other societies; a well-known example is William Adams, an Elizabethan seaman who became a samurai. However, the full story is far from the simple Mighty Whitey trope. Typically in these cases, the European was already a fairly high-status individual in their original society, and had knowledge, skills or other resources that were in demand in the society that they moved to. Adams, for example, was a master pilot, and had a great deal of knowledge about ships and maritime trade at a time when Japan was particularly interested in these issues. Another famous Westerner in Japan, Thomas Blake Glover, followed a very similar path, for very similar reasons. Another typical feature of the successful cases is that the Europeans assimilated into the culture that they moved into.

For someone who didn’t have so much to offer, and who tried to follow the Mighty Whitey schema without paying attention to local cultural norms, the ending could be very different. An example that attracted much attention at the time was the Namamugi Incident, where a British merchant was killed by a samurai’s retainers for discourtesy.

In between these two cases, there are many less dramatic cases where ordinary Europeans ended up in other societies, and where nothing much changed. These cases don’t usually get much attention in the history books or in fiction. From a narrative viewpoint, this lack of attention is no surprise; it’s not easy to make a box office hit from a story that doesn’t have excitement, drama and high adventure. From a psychological viewpoint, there’s the further problem that these unremarkable cases don’t offer much reassurance or flattery to the audience; they’re saying, in effect, that if your life isn’t anything remarkable now, then it wouldn’t change much if you moved to a new culture.

This issue is summed up neatly in a classic observation about movies set just after an apocalypse, to the effect that every viewer thinks that they’ll be one of the heroic survivors, whereas in reality they’ll probably be one of the bleached skulls by the side of the road. Statistically, this observation is pretty accurate, but as a motivational or reassuring statement, it has room for improvement…

Second order effects

As you might expect, tropes can easily turn into clichés, especially in well-established genres such as apocalypse survival, whose tropes were already central to Mary Shelley’s The Last Man, published in 1826. This often leads to new tropes which are deliberate plays on an older trope. In the case of Mighty Whitey, there are numerous tropes of this kind.

The same underlying dynamic operates in fields beyond fiction. For example, a musician might compose a piece which deliberately departs from a theme that has become clichéd. Richard Wagner deployed this approach on a monumental scale, using a large number of explicit musical devices (leitmotifs) to signal points such as which emotions a character was feeling, and which character was being referred to. This is the dynamic behind the famous quip that Wagner’s music is better than it sounds; the point of Wagner’s music was not to showcase the beauty of instruments or voices, but to convey a deeper message more systematically.

This issue is widely recognised in forms such as calling someone “a musician’s musician” and distinguishing between “high culture” and “low culture”. It’s a concept that leads to interesting insights, but in popular use, it’s usually not very tightly structured, from the viewpoint of knowledge modelling.

A similar issue is well known in other fields, such as safety-critical systems research, where it’s often framed in terms of the mathematical concept of second order effects.

In safety-critical systems, this concept is useful for handling interactions between the outcomes of different factors. A classic example is an office fire, which produces the first-order effect of triggering the sprinklers. The sprinklers then spray water through the office, which can lead to the second-order effect of short-circuiting electrical devices in the office. If you’re really unlucky, or the victim of bad planning, this could in turn lead to the third-order effect of a short circuit disabling the system which is supposed to send an automatic call to the fire brigade in the event of a fire.

Interactions of this type are common features of disasters, and are often their cause. The same dynamic also affects less dramatic situations, such as designing bureaucratic procedures, or planning a trip to the shops. If the second-order effects introduce an unwanted feedback loop, in systems theory terms, the consequence is often the opposite of what was originally intended. There are numerous ways of modelling this rigorously, typically via some form of graph theory and/or argumentation. These approaches can also be applied to media studies, where they often give powerful new insights.
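As a minimal sketch of what a graph-based approach can look like, the office fire example can be encoded as a directed graph in which each event lists the events it can directly trigger. A breadth-first search from the initiating event then assigns each effect its order (first, second, third, and so on), and a depth-first search can check whether the graph contains a cycle, which is the graph-theory counterpart of a feedback loop. The event names and the functions effect_orders and has_feedback_loop are hypothetical illustrations, not a standard safety-engineering formalism.

```python
from collections import deque

# Hypothetical causal graph for the office fire example: each key is an
# event, and each value lists the events it can directly trigger.
CAUSES = {
    "office fire": ["sprinklers activate"],
    "sprinklers activate": ["electrical short circuit"],
    "electrical short circuit": ["automatic fire-brigade call disabled"],
    "automatic fire-brigade call disabled": [],
}


def effect_orders(graph, start):
    """Return {event: order}, where order is the number of causal steps
    from the initiating event (1 = first-order, 2 = second-order, ...).
    Breadth-first search gives each event its shortest causal chain."""
    orders = {}
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        event, depth = queue.popleft()
        for effect in graph.get(event, []):
            if effect not in seen:
                seen.add(effect)
                orders[effect] = depth + 1
                queue.append((effect, depth + 1))
    return orders


def has_feedback_loop(graph):
    """Return True if the graph contains a cycle (a feedback loop),
    using depth-first search with white/grey/black node colouring."""
    WHITE, GREY, BLACK = 0, 1, 2
    colour = {node: WHITE for node in graph}

    def visit(node):
        colour[node] = GREY  # node is on the current search path
        for nxt in graph.get(node, []):
            state = colour.get(nxt, WHITE)
            if state == GREY:  # back edge: we have come round in a loop
                return True
            if state == WHITE and visit(nxt):
                return True
        colour[node] = BLACK  # node fully explored, no loop through it
        return False

    return any(colour[n] == WHITE and visit(n) for n in list(graph))


if __name__ == "__main__":
    for event, order in effect_orders(CAUSES, "office fire").items():
        print(f"order {order}: {event}")
    # prints:
    # order 1: sprinklers activate
    # order 2: electrical short circuit
    # order 3: automatic fire-brigade call disabled
    print(has_feedback_loop(CAUSES))  # prints False
```

Breadth-first search is used for the orders because it reaches each event by the shortest causal chain first. A real hazard analysis would usually involve a much larger graph, and might also record probabilities or severities for each link, which this sketch ignores.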

So, where does that leave us? In brief:

Movies are often statistically unrealistic because they wouldn’t make much box office revenue by telling each viewer that in the event of an apocalypse, they would probably end up as a bleached skull by the side of the road. That’s not the most inspiring note on which to end, so here’s a more encouraging one:

The risk of disasters can be reduced significantly by using systematic formalisms. This concept, though encouraging, may not inspire much pleasure in some readers, so for them, here’s a gentle cat picture. I hope you’ve found this article useful.

“Wikipedians cat” by Remedios44 – Own work. Licensed under Public Domain via Wikimedia Commons – https://commons.wikimedia.org/wiki/File:Wikipedians_cat.jpg#mediaviewer/File:Wikipedians_cat.jpg

Notes and links

By Franz Hanfstaengl – fr:Image:RichardWagner.jpg, where the source was stated as http://www.sr.se/p2/opera/op030419.stm, Public Domain, https://commons.wikimedia.org/w/index.php?curid=55183

By Albert Goodwin – http://www.artrenewal.org/artwork/154/3154/32410/apocalypse-large.jpg, Public Domain, https://commons.wikimedia.org/w/index.php?curid=17090743

By Henry Colburn – http://archive.org/details/lastman01shell, Public Domain, https://commons.wikimedia.org/w/index.php?curid=20230866

You’re welcome to use Hyde & Rugg copyleft images for any non-commercial purpose, including lectures, provided that you state that they’re copyleft Hyde & Rugg.

There’s more about the theory behind this article in my latest book:

Blind Spot, by Gordon Rugg with Joseph D’Agnese

http://www.amazon.co.uk/Blind-Spot-Gordon-Rugg/dp/0062097903

You might also find our website useful:

Overviews of the articles on this blog:

https://hydeandrugg.wordpress.com/2015/01/12/the-knowledge-modelling-book/

https://hydeandrugg.wordpress.com/2015/07/24/200-posts-and-counting/

https://hydeandrugg.wordpress.com/2014/09/19/150-posts-and-counting/

October 6, 2016

Why Hollywood gets it wrong, part 3

By Gordon Rugg

The first article in this short series examined how conflicting conventions and requirements can lead to a movie being unrealistic. The second article explored the pressures driving movie scripts towards unrealistically high signal-to-noise ratios, with few of the extraneous details that occur in real conversations.

Today’s article, the third in the series, addresses another way in which movies are different from reality. Movies depict a world which features the word “very” a lot. Sometimes it’s the characters who are very bad, or very good, or very attractive, or whatever; sometimes it’s the situations they encounter which are very exciting or very frightening or very memorable; sometimes it’s the settings which are very beautiful, or very downbeat, or very strange. Whatever the form that it takes, the “very” will almost always be in there somewhere prominent.

Why does this happen? The phenomenon is well recognised in the media, and is neatly summed up in a quote attributed to Walt Disney, who allegedly said that his animations could be better than reality.

From that perspective, it makes sense for movies to show something different from reality, since we can see reality easily enough every day without needing to watch a movie. This raises other questions, though, such as in which directions movies tend to differ from reality, and how big those differences tend to be.

That’s the main topic of this article.

There’s a classic quote which goes: “Well, that’s the news from Lake Wobegon, where all the women are strong, all the men are good looking, and all the children are above average.”

I’ve blogged previously about the issue of being above average, in this article about when you can have too much of a good thing. The conclusion was that being about one to two standard deviations above the mean was often a “sweet spot”. For instance, being tall tends to bring more perceived prestige, status, etc, up to a height of between about six foot and six foot two; above that, people tend to be viewed more as freaks than as high-status. Protagonists in movies tend to be portrayed as tall; not always, but usually.

Some features of movies don’t follow this principle, and instead go for a strategy of either “bigger than the others” or “as big as possible”. This tends to be the case for explosions, car chases, etc, particularly for special effects. Often, this strategy gets pushed so far that it bears little relation to reality, and instead operates under the principle of schema conformity, where the audience accepts the conventions of e.g. the car chase schema, and then suspends disbelief within those established limits. This is why an audience might happily accept a massively unrealistic car chase scene, but balk at a minor unrealistic detail that isn’t part of the schema in question.

There are various bodies of theory that throw useful light on this area.

One is schema theory; although this overlaps with the concept of genre, it’s not the same, and is more fine-grained, more flexible and more powerful. In brief, a schema is a mental template for something.

Another is game theory, which provides many powerful insights into the outcomes from various types of competition. I’ve blogged about game theory here, and about its application to the mathematics of desire here.

Transactional analysis also provides some useful insights. This approach uses everyday terms to describe identifiable patterns of behaviour. One example is the pattern of “General Motors”. This involves two or more people discussing their preferences between different types of car, or different explosion scenes in movies, or different episodes of a favourite series. So, a memorable scene might be a favourite for games of “General Motors” about that type of scene, often as a result of a twist in the usual schema. A classic example is the knife fight in Butch Cassidy and the Sundance Kid, where Butch distracts his opponent by asking what the rules of the fight will be, and then kicks him hard in the groin while he is off guard. A memorable twist of this kind can provide film makers with a low-cost but very effective way of increasing the audience’s liking for a movie.