Gordon Rugg's Blog, page 4

October 14, 2018

Mental models and metalanguage: Putting it all together

By Gordon Rugg

The previous articles in this series looked at mental models and ways of making sense of problems. A recurrent theme in those articles was that using the wrong model can lead to disastrous outcomes.

This raises the question of how to choose the right model to make sense of a problem. In this article, I’ll look at the issues involved in answering this question, and then look at some practical solutions.

What are the key variables?

At first sight, the issue of choosing the right model looks very similar to the well-understood issue of choosing the right statistical test. Most stats books include a clear, simple flowchart which asks you a few key questions, and then guides you to an appropriate set of tests. For example, it will ask whether your data are normally distributed or not, and whether you’re using repeated measures or not.

The key phrase here is “a few”. The flowchart approach works well if you’re dealing with a small number of variables. However, once you get beyond about half a dozen variables, the number of branches starts to become unmanageable.
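As a rough illustration of why the branches become unmanageable, here is a minimal sketch. It assumes the simplest possible case, which is not stated in the text above: every question is a yes/no question, and every combination of answers leads to its own end point. Real flowcharts prune many branches, so this is an upper bound rather than a description of any actual stats flowchart; the function name is hypothetical.

```python
def flowchart_end_points(num_questions: int, options_per_question: int = 2) -> int:
    """Upper bound on end points in a flowchart.

    Assumption (for illustration only): every question is asked on every
    path, and each question has the same number of possible answers.
    """
    return options_per_question ** num_questions


# Compare "a few" questions with "half a dozen" and beyond.
for n in (3, 6, 10):
    print(f"{n} yes/no questions -> up to {flowchart_end_points(n)} end points")
```

Under these assumptions the count doubles with every extra question, which is why a chart that is comfortable at three questions is already crowded at six.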

So, how many models/variables do you need to consider when you’re trying to choose a suitable way to make sense of a problem?

In principle, the number is infinite, but in practice, it’s tractable. The same “usual suspects” keep cropping up in the vast majority of cases. I’ve blogged here about the issue of potentially infinite numbers of solutions, and here about the distribution of “usual suspect” cases.

A related point is that if you’re just trying to improve a situation, as opposed to finding a perfect solution, then there’s usually a good chance that you’ll be able to improve it by using a “usual suspect” method. The same few issues crop up repeatedly when you look at why bad situations arose.

One way of getting some approximate numbers is to use the knowledge cycle as a framework. This cycle starts with eliciting knowledge from people, then selecting an appropriate representation, then checking it for error, and finally teaching that knowledge to people.

A common source of problems is elicitation; for example, faulty requirements, or misleading results from a survey. To handle elicitation properly, you need a framework that deals with about a dozen types of memory, skill and communication, and with about a dozen elicitation methods.

Another common source of problems is representation. The crisp versus fuzzy issue is one example; the mocha versus Lego issue is another. This is a more open set than elicitation, but again, about a couple of dozen key concepts are enough to make much better sense of the majority of problems occurring in the human world.

The story is similar for error. The heuristics and biases approach pioneered by Kahneman, Slovic and Tversky has identified about a couple of hundred error types; classical logic has identified a similar number. In practice, about a couple of dozen types account for the majority of occurrences in the world; for instance, confirmation bias, and strong but wrong errors.

The last part of the cycle involves education and training. For these, you need to handle about a dozen types of memory, skill and communication, and to map these onto about a couple of dozen delivery methods.

Pulling these numbers together, we get a rough figure of about a hundred core concepts that will let you make significantly better sense of most problems. That’s a non-trivial number, but it’s manageable.
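The tally behind that rough figure can be sketched as follows. This is only one reading of the estimates above: it treats "about a dozen" as 12 and "about a couple of dozen" as 24, and the dictionary labels are my own shorthand rather than established terms.

```python
DOZEN = 12  # assumption: "about a dozen" is read as exactly 12

# Approximate counts taken from the four stages of the knowledge cycle.
estimates = {
    "elicitation: types of memory, skill and communication": DOZEN,
    "elicitation: methods": DOZEN,
    "representation: key concepts": 2 * DOZEN,
    "error: commonly occurring error types": 2 * DOZEN,
    "education: types of memory, skill and communication": DOZEN,
    "education: delivery methods": 2 * DOZEN,
}

total = sum(estimates.values())

# If the same dozen types of memory, skill and communication serve both
# elicitation and education, they should only be counted once.
total_if_shared = total - DOZEN

print(total, total_if_shared)
```

Either way of counting lands close to a hundred, which is all the argument needs.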

Framework versus toolbox

At the start of this article, I mentioned flowcharts for choosing the appropriate statistical test. That’s a different type of problem from choosing an appropriate model for understanding a problem. With the stats test issue, you’re narrowing down the possibilities until you end up with one correct answer, which may involve either just one statistical test, or a small set of tests which are all equally suitable.

With choice of models for handling problems, you’re doing several things which are very different from the stats choice. From a methodological viewpoint, you’re choosing a collection of methods which will each address different aspects of the problem. You’re also deciding which aspects of the problem to tackle in which order. In addition, you’re deciding which models and methods to use in which sequence to tackle those aspects. This level of open-ended complexity doesn’t lend itself well to a flowchart approach.

There are also important issues outside the viewpoint of research methodology. At a sordidly practical level, there’s the issue of barriers to entry for a model or method. This is a concept from business, where a would-be business has to overcome issues of cost, time, etc, to get into a field. For aircraft manufacture, one obvious barrier to entry is the cost of infrastructure; for becoming a veterinarian, a major barrier to entry is the length of time needed to become qualified.

With research models and methods, the barriers to entry usually involve time spent in learning the underpinning concepts. This is apparent when you compare learning to use card sorts with learning to use laddering. At first glance, card sorts look more complex, but in reality, the method is very easy to learn, with few necessary underpinning concepts. Laddering looks simpler, but in reality, if you want to use it properly, you need to learn some graph theory, plus basic facet theory; you also need to be reasonably good at mental visualisation. When you’ve got past those barriers, though, laddering is an elegantly powerful method that is invaluable for a wide range of problems which other methods can’t handle.

At the level of politics within research, there’s a famous quote from Max Planck to the effect that science advances one funeral at a time. There’s a similar quote to the effect that eminent researchers don’t change their minds, but they do die. In most fields, there’s resistance to new methods and models. If you try using a new method or model, particularly one that challenges established orthodoxy, then you need to do it carefully. The best case payoff is that you crack a major problem in your field and become a leading figure within it; however, the worst case is that you fail to crack even a small problem, and that you are marginalised within your field as a result. Even if you do manage to crack a major problem, you can expect resistance from die-hards for years or decades afterwards.

This doesn’t mean that using new methods or models is a recipe for disaster. On the contrary, when you do it right, the new methods and models can establish you as a significant player in your field. There’s a solid body of research into the key factors in successful innovation, such as Rogers’ classic book on diffusion of innovation, and if you’re interested in going down this route, then some time spent reading up on this will be a very useful investment.

Human factors

Following on from barriers to entry, one theme which occurs repeatedly in relation to models is human factors.

With the mocha model or the Lego model, it’s easy to handle the key features of the model in your working memory. When you get into the Meccano model, though, you’re soon dealing with many more variables; far too many to hold in working memory.

Similarly, a simple crisp set model requires less mental load than a combination of crisp and fuzzy sets.

When you’re experienced in methods such as systems theory or using crisp and fuzzy sets, that situation changes, because you learn how to group individual pieces of information into mental chunks, thereby reducing mental load. If you don’t have that experience, though, you can’t use chunking to reduce the load.

When you look at public debate from this perspective, then you see things in a new light. Instead of wondering why people are so lazy in their thought, you start realising that there are limits to what the human brain can handle without specialist expertise. Even if you do have specialist expertise, there are still concepts that can’t be properly handled via the human brain. For instance, chaos theory has transformed weather prediction, but the modeling for it involves enormous numbers of calculations, requiring supercomputers that can process quantities of data far beyond what any human could process.

This isn’t a huge problem in debate between researchers, if there is no time pressure for finding answers. It is, however, a major issue for political debate in the everyday world, where points need to be made fast, using concepts that most of the intended audience can understand. Historically, the overwhelming majority of points in political debate have been made verbally, which limits the available models even further, and tends to produce a dumbing down of debate.

Now, however, it’s much easier to use visual representations and multimedia in online debate. For example, you can use graphics to show that your opponent is treating something as a crisp set distinction, rather than a crisp/fuzzy/crisp distinction, or whatever. This lets you show the key problem visually in a way that has minimal barriers to understanding, and that shifts the debate into a framing that more accurately corresponds with reality.

It will be interesting to see whether this technology will produce a significant improvement in the quality and sophistication of debates, or whether it simply increases the number of insulting Photoshop memes in circulation…

Cognitive load, and span of implications

The issue of cognitive limitations has deep implications for ideologies. In religion and politics, it’s common to accuse ideological opponents of oversimplifying a topic because of stupidity or laziness. Another common accusation is that an opponent’s beliefs are inconsistent.

If we look at such cases from the viewpoint of cognitive load and of span of implications, then they look very different. In some cases, there may be a good-faith issue about the cognitive load involved in using one model, or about the mental barriers to entry for the model. Sometimes, this can be resolved by simply using a visualisation that reduces the cognitive load enough for the other party to grasp what you’re saying. The lightbulb effect when this happens is usually striking.

Similarly, if you look at the number of links that someone is using in a chain of reasoning, then apparent inconsistency or hypocrisy can often be explained as a good-faith issue of the inconsistency occurring beyond the span of implications that the person is considering. Again, a visual representation can help.

Often, though, ideological debates are not in simple good faith, but involve motivated reasoning, and other forms of bias. I won’t go into that issue here, because of space. For the moment, I’m focusing on cases where understanding different mental models can help resolve honest misunderstandings and honest differences of opinion.

Conclusion and ways forward

In summary, the “two cultures” model of arts versus sciences has an element of truth, but it lumps together several different distinctions. When you start looking at a finer level of detail, you find that some of the most important distinctions in cultures and worldviews come down to a handful of issues such as whether you’re dealing with a system or a non-system, and whether you’re dealing with fuzzy sets or crisp sets or a mixture of both.

These issues are fairly easy to understand, but they’re very different from the ones usually mentioned in debates about the two cultures, and they’re nowhere near as widely known as they should be. The implications for research, and for ideological debates in the world, are far reaching.

Although the number of issues involved in choosing the most appropriate model for a problem is in principle infinite, in practice a high proportion of problems can be solved or significantly reduced via a tractably small number of commonly-relevant models. In this article, I’ve focused on issues and models that are particularly common in real world problems.

Often, a problem can be tackled from several different directions, each using a different model. This, however, is not the same as saying that all models are equally valid all the time. If you read the literature on disasters, you’ll find plenty of examples where a disaster was due to someone having the wrong mental model of a piece of technology. You’ll find much the same in the literature on cross-cultural relations.

So, in conclusion: Choice of models makes a difference, and knowing a broader range of models gives you more ways of making sense of the world.

That concludes this short series on mental models. I’ll return to some of the themes from this series in later articles.

Notes and links

You’re welcome to use Hyde & Rugg copyleft images for any non-commercial purpose, including lectures, provided that you state that they’re copyleft Hyde & Rugg.

There’s more about the theory behind this article in my latest book:

Blind Spot, by Gordon Rugg with Joseph D’Agnese

http://www.amazon.co.uk/Blind-Spot-Gordon-Rugg/dp/0062097903

You might also find our website useful:

Overviews of the articles on this blog:

https://hydeandrugg.wordpress.com/2015/01/12/the-knowledge-modelling-book/

https://hydeandrugg.wordpress.com/2015/07/24/200-posts-and-counting/

https://hydeandrugg.wordpress.com/2014/09/19/150-posts-and-counting/

October 9, 2018

Mental models, worldviews, Meccano, and systems theory

By Gordon Rugg

The previous articles in this series looked at how everyday entities such as a cup of coffee or a Lego pack can provide templates for thinking about other subjects, particularly abstract concepts such as justice, and entities that we can’t directly observe with human senses, such as electricity.

The previous articles examined templates for handling entities that stay where they’re put. With Lego blocks or a cup of coffee, once you’ve put them into a configuration, they stay in that configuration unless something else disturbs them. The Lego blocks stay in the shape you assembled them in; the cup of coffee remains a cup of coffee.

However, not all entities behave that way. In this article, I’ll examine systems theory, and its implications for entities that don’t stay where they’re put, but instead behave in ways that are often unexpected and counter-intuitive. I’ll use Meccano as a worked example.

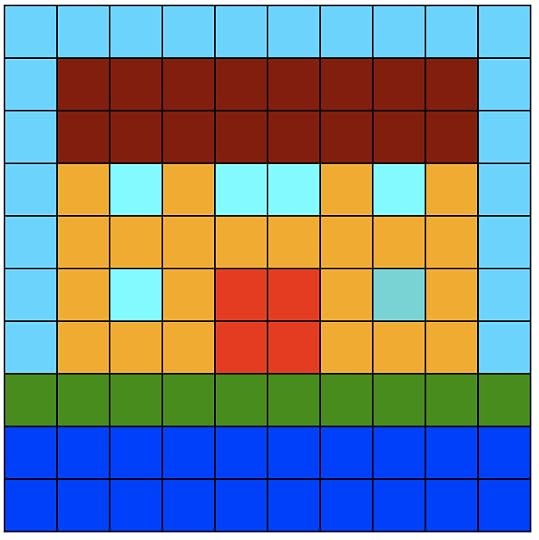

The image on the left of the banner picture shows a schematised representation of one Meccano bolt, two Meccano strips, and a Meccano baseplate. In this configuration, they will just stay where they are put. However, if you assemble these identical pieces into the configuration shown in the middle image, the situation changes. If you press down on one end of the horizontal bar, then it will pivot around the bolt in the middle, and the other end will go up. The Meccano has changed from being a group of unconnected pieces into being a simple system, where a change in one part of the system can cause a change in other parts of the same system.

There’s a whole body of work on systems and their behaviour. Their behaviour is often counter-intuitive, and often difficult or impossible to predict, even for quite simple systems. I’ve blogged about this topic here.

Systems and feedback loops

In the previous article in this series, I mentioned a real world case where the wrong mental model led to repeated tragedy. It was from Barbara Tuchman’s book The Guns of August, about the opening of the First World War. The German high command expected the populations they conquered to acquiesce to overwhelming force, and were astonished when they encountered resistance. Instead of questioning their expectations, they concluded that their force hadn’t been sufficiently overwhelming, and increased the punishments they inflicted for resistance. The results were sadly predictable.

What was going on in this case was a classic example of what’s known in systems theory as a feedback loop. An increase in the German demonstrations of force (A in the diagram below) increased the Belgians’ desire to fight back against the invaders (B below). This in turn increased the German demonstrations of force, and so on.

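[Diagram: a feedback loop in which A (German demonstrations of force) increases B (the Belgians’ desire to fight back), which in turn increases A.]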

This type of loop is known in systems theory as a self-amplifying loop. (It’s more often known as a positive feedback loop, but that name tends to lead to confusion because in many fields positive feedback is used to mean “saying encouraging nice things”). Self-amplifying loops are usually bad news in real life, since they often involve catastrophic runaway effects.
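The runaway effect is easy to see in a toy simulation. The sketch below is purely illustrative: the variables A and B stand for the two quantities in the loop described above, and the starting values, the gain, and the linear update rule are all assumptions chosen for simplicity, not a model of the historical events.

```python
def self_amplifying_loop(a: float, b: float, gain: float, steps: int) -> list:
    """Simulate two variables that each push the other upwards.

    a, b  : starting levels of the two variables (hypothetical units)
    gain  : assumed strength of each influence (must be positive)
    steps : number of times the loop goes round
    """
    history = [(a, b)]
    for _ in range(steps):
        b = b + gain * a  # an increase in A raises B
        a = a + gain * b  # the raised B then raises A
        history.append((a, b))
    return history


for step, (a, b) in enumerate(self_amplifying_loop(1.0, 1.0, gain=0.5, steps=6)):
    print(f"step {step}: A = {a:.2f}, B = {b:.2f}")
```

With any positive gain, both values grow faster on every pass round the loop, which is the runaway behaviour that makes these loops dangerous.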

Another type of loop is a self-deadening loop (again, more often known as a negative feedback loop, with the same potential for misunderstanding). In self-deadening loops, an increase in one variable leads to a decrease in another variable, so that the two counteract each other, usually leading to stability in the system. One classic example is a thermostat; another is an autopilot in aviation.
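A thermostat-style loop can be sketched in the same toy fashion. The temperatures and the correction factor below are made-up values, and the proportional correction rule is an assumption for illustration; real thermostats are usually simple on/off devices.

```python
def thermostat(temp: float, target: float, steps: int, correction: float = 0.5) -> list:
    """Simulate a self-deadening loop that pulls a value back towards a target.

    correction: assumed fraction of the error removed on each step
                (between 0 and 1 for a smooth approach).
    """
    readings = [temp]
    for _ in range(steps):
        error = temp - target             # how far we are from the target
        temp = temp - correction * error  # push in the opposite direction
        readings.append(temp)
    return readings


for step, reading in enumerate(thermostat(30.0, 20.0, steps=6)):
    print(f"step {step}: {reading:.2f} degrees")
```

Here the gap between the reading and the target halves on every step, so the system settles instead of running away.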

It’s possible for systems to contain subsystems; for instance, a car is a system which consists of an engine subsystem, a suspension subsystem, a steering subsystem, and so forth.

A single change within a system can cause changes to many or all of the other parts of the system, and each of these changes can in turn have further effects on many or all parts of the system, rippling on for a duration that is usually difficult or impossible to predict, even for apparently simple systems.

A fascinating example of this is Conway’s Game of Life. This is a system which was deliberately designed to be as simple as possible. If you’re not already familiar with it, you might like to look at the Wikipedia article on it, which includes several animated examples. In brief, although the game is based on deliberately simple rules, it behaves in complex ways that its own creator wasn’t able to predict.
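For readers who prefer code to animations, here is a minimal sketch of the game's standard rules: a live cell survives if it has two or three live neighbours, and a dead cell becomes live if it has exactly three. Representing the board as a set of live (x, y) coordinates is my own implementation choice, not part of the game's definition.

```python
from collections import Counter


def step(live: set) -> set:
    """Advance a Game of Life board by one generation.

    `live` is a set of (x, y) coordinates of live cells on an unbounded grid.
    """
    # Count, for every cell, how many live neighbours it has.
    neighbour_counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return {
        cell
        for cell, n in neighbour_counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


# The well-known "glider" pattern, with y increasing downwards.
glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}

board = glider
for _ in range(4):
    board = step(board)

# After four generations the glider has the same shape, moved one cell diagonally.
print(board == {(x + 1, y + 1) for (x, y) in glider})
```

The whole rule set fits in one function, yet the glider's steady diagonal movement is not stated anywhere in that function; it emerges from the rules.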

Emergent properties

Another common feature of systems is emergent properties. A system will usually behave in ways which couldn’t readily be predicted from a knowledge of its components, and which emerge from the interaction between the components.

The Game of Life is a particularly striking example of this. Another example is that the concept of rpm (revolutions per minute) is completely relevant to a working car engine, but would be meaningless if applied to the components of that same engine before they were assembled. I’ve discussed this in more detail in my article on systems theory, and in my article about range of convenience, i.e. the range of contexts in which a term can be meaningfully used.

A lot of people have trouble getting their heads round the concept of emergent properties. There’s been a lot of resistance in theology, philosophy and popular culture to the idea that something like life or consciousness might be nothing more than an emergent property of a particular system, as opposed to being the result of some unique mystical ingredient such as the “spark of life”.

Emergent properties have a habit of cropping up in unexpected places. One example is the concept of sexism without sexists, where system properties lead to an outcome that might never have been thought of by the people who set up the system. For instance, many university departments have a tradition of seminars with outside speakers being scheduled at the end of the afternoon, followed by discussions in the pub. This combination of timing and location tends to be difficult for parents, for people whose religion disapproves of alcohol and pubs, and for carers, among others. This leads to these groups being under-represented in their profession, which in turn leads to them not being considered sufficiently when seminar slots are being arranged, which leads to a self-amplifying feedback loop.

Implications

Most of the world consists of systems of one sort or another, whether natural systems, or human systems such as legal and political systems.

However, knowledge of systems theory is very patchy. In some fields, it’s taken completely for granted as a core concept. In other fields, some people use it routinely, and others have never heard of it.

One surprisingly common misconception about systems is that because they’re often complex and difficult to predict, there’s no point in trying to model them, particularly for human social systems.

In reality, although systems can be complex and difficult to predict, they often show considerable stability and predictability. Even within chaotic systems, where a tiny change to the initial configuration has huge effects later on, there are often underlying regularities that you can work with once you know about them.
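A standard textbook illustration of both points is the logistic map, which I'm using here as a stand-in; it isn't discussed elsewhere in this article. The sketch below runs the map from two starting values that differ by one part in a billion. The starting value, the size of the nudge, and the number of steps are arbitrary choices.

```python
def logistic_trajectory(x: float, steps: int, r: float = 4.0) -> list:
    """Iterate the logistic map x -> r * x * (1 - x).

    With r = 4 the map is chaotic: tiny differences in the starting
    value grow rapidly from step to step.
    """
    values = [x]
    for _ in range(steps):
        x = r * x * (1.0 - x)
        values.append(x)
    return values


first = logistic_trajectory(0.2, steps=60)
second = logistic_trajectory(0.2 + 1e-9, steps=60)  # a one-in-a-billion nudge

gaps = [abs(p - q) for p, q in zip(first, second)]
print(f"gap at the start: {gaps[0]:.1e}")
print(f"largest gap seen: {max(gaps):.3f}")
print(f"range of values:  {min(first + second):.3f} to {max(first + second):.3f}")
```

The two runs end up bearing no resemblance to each other, so the exact value at a given step can't be predicted in practice. The values nonetheless stay between 0 and 1, which is one simple example of an underlying regularity you can work with.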

Another practical issue is that if you’re familiar with basic systems theory, you can design at least some problems out of a system. For instance, if you spot a self-amplifying loop in a system design, you will probably want to look at it very closely indeed, in case it’s going to cause problems.

Another classic is spotting where a system contains incentives for a subsystem to improve things for itself at the expense of other subsystems and of the system as a whole, as happened with incentive structures in financial systems before the 2008 financial collapse.

You can also use knowledge of systems theory to work backwards from an unwelcome outcome, to identify likely causes for it, and to identify possible solutions.

For more detailed handling of systems theory, though, you need to get into some pretty heavy modeling. This takes you beyond what can be handled by the unaided human cognitive system, which is probably why systems theory isn’t more widely used in everyday non-specialist mental models.

The next article in this series will pull together themes from the articles so far, and will look at the question of how to choose the appropriate mental model for a given problem.

October 2, 2018

Mental models, worldviews, and mocha

By Gordon Rugg

Mental models provide a template for handling things that happen in the world.

At their best, they provide invaluable counter-intuitive insights that let us solve problems which would otherwise be intractable. At their worst, they provide the appearance of solutions, while actually digging us deeper into the real underlying problem.

In this article, I’ll use a cup of mocha as an example of how these two outcomes can happen. I’ll also look at how this relates to the long-running debate about whether there is a real divide between the arts and the sciences as two different cultures.

Hot drinks are an everyday feature of life for most of the world. Precisely because they’re so familiar, it’s easy to take the key attributes of hot drinks for granted, and not to think about their implications.

I’ll start by listing some of their attributes, and comparing these attributes with Lego bricks, as another everyday item that most readers will know. At this stage, the attributes look obvious to the point of banality.

I’ll then look at how the features of hot drinks can map on to types of problem in the world, and onto worldviews.

The attributes I’ll look at are as follows.

Reversibility: Making a hot drink involves combining ingredients with hot water. Once you’ve combined them, you can’t un-combine them; the process is non-reversible. This is very different from the situation with Lego, where you can combine blocks to make a structure, and then take that structure apart and end up with the same blocks that you started with.

Localisability: Before you make a hot drink, you can point to each of the ingredients separately and say where they are. After you make a hot drink, the ingredients are no longer separate; it’s meaningless to ask where the chocolate is in a cup of mocha, because the chocolate is everywhere within the mocha. With Lego, in contrast, you can still point to each individual block within a structure after you have made the structure.

Measurability: You can easily measure the ingredients of a cup of mocha before they go into the drink. However, you can’t easily measure the ingredients of a hot drink after that drink has been made; for instance, finding out how much sugar was added. With Lego, in contrast, it’s easy to find out how many blocks of a particular type are in a structure after that structure has been made (for simplicity, I’m assuming a small number of blocks in a small structure so that they’re all visible).

Interactivity: A hot drink can change things that it comes into contact with. It can stain clothes; it can scald flesh, if the drink is very hot; it can turn paper into a soggy mess. Lego doesn’t do any of these things.

So what?

There are some obvious-looking similarities between the mocha/Lego contrasts and the contrasts between traditional views of the humanities and the sciences. The subject matter of the humanities, like mocha, doesn’t generally consist of clearly separate entities that are easily counted; instead, the humanities deal with intermingled entities that can be measured to some extent. With Lego, as with traditional models of science, it’s easy to disassemble a structure and see what happens if you reconfigure it; with mocha, and the humanities, this isn’t possible. You can’t re-run history, and you can’t raise people in an experimental setting the way that you can do with lab rats.

It’s also fairly obvious that the divide between the traditional humanities and the traditional sciences is an over-simplification. Within fields such as sociology and psychology, there is a long tradition of using sophisticated numerical and statistical methods to study human behaviour; within fields ranging from nuclear physics to hydrodynamics, there’s a long tradition of using models that have much more in common with mocha than with Lego.

I’m taking these issues pretty much as given, and as well known. What I’ll focus on instead in this article is the bigger picture. What happens when you try to systematise the choice of appropriate mental model for a particular problem? What are the issues that you need to include, and what are the implications if you get it wrong, or only partly right?

Stains, sin and mana

I’ll start with the issue of implications.

A lot of the issues that people have to deal with in life involve abstract concepts, such as justice, or involve entities that aren’t observable with normal human senses, such as bacteria that cause diseases. This is where mental models based on familiar parts of the physical world can come in very useful; they provide a ready-made framework for handling abstract concepts, and entities that aren’t easily observable.

A classic example is the concept of cleanliness, which has been much studied within fields such as anthropology and theology. In current Western culture, cleanliness tends to be viewed as a medical issue, separate from religion and morality. This, however, is a recent development. Historically, cleanliness has been treated by most cultures as something much more associated with religion and morality.

In many cultures, for instance, there’s a distinction between the clean and the unclean. Usually, a person or an object can become unclean as a result of physical contact with something unclean (e.g. a dead body, or a grave). The underlying model here is very similar to someone or something being stained by contact with a liquid. The usual solution involves a cleaning process, often involving either some form of bathing, or some form of sacrifice, where the blood of the sacrifice washes away the spiritual contagion.

A key point here is that the uncleanliness doesn’t need to involve sin or immorality. It may happen to involve them, but it doesn’t necessarily have to. For instance, someone may accidentally become contaminated, and can then become clean after they perform the cleaning process.

This underlying principle of contagion is still widespread within Western cultures that have adopted the medical model of cleanliness.

An example is the market for objects once owned by celebrities. A pen owned by Napoleon would sell for much more than an identical pen which had not been owned by him. There’s no objective difference in the inherent properties of the two pens; what’s different is that one has been touched by him, and the other hasn’t. The converse also applies, with most people being reluctant to own or use items associated with notorious criminals. In both cases, the underlying mental model involves a spiritual stain, either good or bad, left behind by contact with the famous or infamous individual.

This model is well recognised in social anthropology, where it’s usually known by the Polynesian name of mana. Within this mental model, mana can be spread by contagion, like a stain from coffee. However, there is a key difference; in most belief systems which use mana, mana can also be spread by similarity. For instance, if someone wears the same type of clothing as a high status individual with a lot of personal mana, then the imitator will acquire mana as a result. There are entire industries making significant amounts of money out of these two principles.

So, in brief, the “stain and cleaning” aspect of the mocha model plays a significant role in worldviews.

What about other aspects of the mocha model and the Lego model? This is where there’s a strong argument for a more systematic study of mental models.

Other aspects

For everyday life, mental models are particularly useful for predicting what will happen next, in a new situation. Mental models are also used on an informal basis within research, usually as a source of useful metaphors and analogies.

However, if we look more formally and systematically at mental models, then we start to see deeper implications. There’s a whole body of research on how this relates to teaching topics such as physics, where people’s everyday mental models work to some extent, but then fail in other situations.

Flawed models can have tragic real world consequences. A striking example occurs in Barbara Tuchman’s book The Guns of August, about the start of World War I. A repeated theme is that the Germans expected defeated nations, such as Belgium, to accept the reality of overwhelming force, and to obediently do as they were told by the German authorities. The German high command appeared to be genuinely astonished when this didn’t happen.

So what was going on with that mental model?

One possible explanation is that it was an “Other as dark reflection” model, where a different group is perceived as being the opposite to the in-group. In this model, the in-group would resist being told what to do by invaders, because the in-group is brave and independent, but other groups would behave differently, because they are the opposite of the in-group.

This, however, did not appear to be the case in August 1914; Tuchman records German comments to the effect that Germans would not behave in such an insubordinate way if the roles were reversed.

A more likely explanation for this particular case involves something deeper in the mental model. A frequent feature of mental models, including the mocha and Lego models, is that their predictions run for one or two steps, and then come to a natural end. You add hot water to the ground coffee, and then you have a cup of coffee. You add blocks to a Lego tower, and it gets higher; if you go too high, it becomes unstable and falls over. Things then stay the same until some external intervention occurs.

This is very different from what happens within a system, in the formal sense of the term. A key feature of systems is that they can include feedback loops, where changes can go on happening for multiple steps, and may continue indefinitely. These changes are often counter-intuitive, in ways that have far-reaching implications.

So, how do you set about choosing the right mental model for a given problem, and how do you know whether your mental toolbox includes all the models that it should include?

I’ll go into this theme in the next article in this series, which will look at Meccano, engineering models, and systems theory.

Notes and links

You’re welcome to use Hyde & Rugg copyleft images for any non-commercial purpose, including lectures, provided that you state that they’re copyleft Hyde & Rugg.

There’s more about the theory behind this article in my latest book:

Blind Spot, by Gordon Rugg with Joseph D’Agnese

http://www.amazon.co.uk/Blind-Spot-Gordon-Rugg/dp/0062097903

You might also find our website useful:

Overviews of the articles on this blog:

https://hydeandrugg.wordpress.com/2015/01/12/the-knowledge-modelling-book/

https://hydeandrugg.wordpress.com/2015/07/24/200-posts-and-counting/

https://hydeandrugg.wordpress.com/2014/09/19/150-posts-and-counting/

September 21, 2018

Mental models, worldviews, and the span of consistency

By Gordon Rugg

In politics and religion, a common accusation is that someone is being hypocritical or inconsistent. The previous article in this series looked at how this can arise from the irregular adjective approach to other groups; for example, “Our soldiers are brave” versus “Their soldiers are fanatical” when describing otherwise identical actions.

Often, though, inconsistency is an almost inevitable consequence of dealing with complexity. Mainstream political movements, like organised religions, spend a lot of time and effort in identifying and resolving inherent contradictions within their worldview. This process takes a lot of time and effort because of the sheer number of possible combinations of beliefs within any mature worldview.

In this article, I’ll work through the implications of this simple but extremely significant issue.

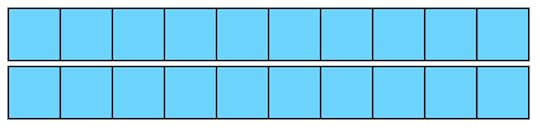

Suppose, for example, that you’re putting together a chain of reasoning that begins with assertion A, and ends in conclusion F, as in the diagram below. (For simplicity, I’m using a linear chain as an example, to keep things manageable.)

You decide that you want to check that all of the points in that chain are consistent with each other. How much effort will it be? It will actually be a lot of effort. Here’s what the set of possible cross-checks looks like for just the first five points (A to E). The internally consistent cross-checks are shown in blue; an inconsistent link, between A and D, is shown in red.

I’ve shown the cross-checks for five points for two reasons.

One is that the number of cross-checks involved (10) is already beyond the limits of normal human working memory (about 7, plus or minus 2, items). To work out this many cross-checks in your head would be unrealistic, unless you had a very well-rehearsed method for doing this. For simplicity, I’ve assumed here that consistency between point A and point B is the same as consistency between point B and point A, which halves the number of cross-checks. This assumption isn’t always true, but I’ll leave that issue to one side for the time being; the key point remains, that the number of combinations between points rapidly becomes enormous.

The second reason I’ve used this number of points is that five points is what you get if you check for just two points before the current point and for two points after it. Even that small number is already more than human working memory can handle.
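As a rough illustration of how quickly the cross-checks pile up, here is a minimal Python sketch. It assumes, as above, that checking A against B is the same as checking B against A, so each pair is counted once; the function name `cross_checks` is just a label for this example.

```python
from itertools import combinations

def cross_checks(points):
    """Return every unordered pair of points that needs a consistency check."""
    return list(combinations(points, 2))

points = ["A", "B", "C", "D", "E"]
pairs = cross_checks(points)
print(len(pairs))  # 10 cross-checks for five points

# The same count from the closed form n * (n - 1) / 2,
# for chains of increasing length.
for n in (5, 6, 10, 20):
    print(n, n * (n - 1) // 2)
```

Adding the sixth point, F, already takes the count from 10 to 15, and a chain of twenty points needs 190 pairwise checks.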

What happens if you instead use a running check of only the point before and the point after the current point? You get the results below for the same set of points.

For the first full step, looking each side of point B, all three points are internally consistent, like this.

For the second step, looking each side of point C, all three points being checked are also internally consistent, like this.

However, this approach has missed the inconsistency between A and D, which was picked up by looking two points forward and two points back.
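The effect of the span can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the chain is A to E, the single inconsistent pair is A and D (as in the diagrams), and `reach` is a made-up name for how many points are checked on each side of the current point.

```python
from itertools import combinations

POINTS = ["A", "B", "C", "D", "E"]
# Assumption for illustration: the only inconsistent pair is A and D.
INCONSISTENT = {frozenset(("A", "D"))}

def inconsistencies_found(points, reach, inconsistent):
    """Slide along the chain; at each point, cross-check every pair inside
    the window that extends `reach` points before and after it."""
    found = set()
    for i in range(len(points)):
        window = points[max(0, i - reach): i + reach + 1]
        for pair in combinations(window, 2):
            if frozenset(pair) in inconsistent:
                found.add(frozenset(pair))
    return found

# One point each side: A and D never share a window, so nothing is found.
print(inconsistencies_found(POINTS, 1, INCONSISTENT))
# Two points each side: A and D do share a window, so the clash is caught.
print(inconsistencies_found(POINTS, 2, INCONSISTENT))
```

The wider span catches more, but each window then contains more pairs to check, which is the trade-off described above.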

Implications

So, whether or not you detect an inconsistency in a chain of reasoning depends on the span of links that you’re looking at. Worldviews and ideologies involve so many points that the number of possible cross-checks is well beyond what a human being could work through in a lifetime. So, inconsistencies are going to occur within any worldview.

How do people handle inconsistencies when these are brought to their attention? In brief, the usual responses involve various forms of dodgy reasoning, rather than any serious attempt to work through the implications of the inconsistency. This is particularly the case when the implications are close to the individual’s core beliefs.

It’s tempting to believe that if you look closely enough at the links you’re examining, you’ll catch the inconsistencies anyway, so you don’t need to worry about this problem. That, however, isn’t true.

A classic example is Zeno’s paradox, which I’ve blogged about here.

I used the version of the paradox which features the archer’s arrow on its way to a target. Before it can reach the target, it has to cover half the distance. Before that, though, it has to cover a quarter of the distance, and before that it has to cover an eighth of the distance. You can repeat this process of halving the distance an infinite number of times. The implication is that because this process can go on for an infinite number of times, it’s never completed, and therefore movement is impossible.

This chain of reasoning is clearly wrong, but the reasons for it being wrong are subtle and complex, involving the nature of infinity, which gets pretty weird.
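One standard way of showing where the chain goes wrong, offered here as a sketch rather than as a full treatment, is to add up the pieces. Treating the whole distance as 1, the successively halved pieces are 1/2, 1/4, 1/8 and so on, and their sum is finite even though there are infinitely many of them:

```latex
\[
\sum_{k=1}^{n} \frac{1}{2^{k}} = 1 - \frac{1}{2^{n}}
\quad\Longrightarrow\quad
\sum_{k=1}^{\infty} \frac{1}{2^{k}}
  = \lim_{n \to \infty} \left( 1 - \frac{1}{2^{n}} \right) = 1 .
\]
% Assumption for illustration: the arrow covers a distance d at constant speed v,
% so the piece of length d / 2^k takes time d / (v 2^k).
\[
\sum_{k=1}^{\infty} \frac{d}{v \, 2^{k}} = \frac{d}{v} .
\]
```

So an infinite number of steps does not require an infinite distance or an infinite time; the hidden assumption that it does is the kind of subtle error that is easy to miss.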

So, if it’s hard to see subtle errors in a chain of reasoning about something as familiar as movement, what are the odds of spotting subtle errors in a chain of reasoning about something less familiar? In brief: Poor to negligible.

What can you do about this problem if you’re making a good-faith attempt to put together a worldview, or just a small chain of reasoning about a problem?

That’s the issue at the heart of the scientific method. A key point about science is that it’s a process, not a set of fixed beliefs. Scientific knowledge is always provisional, not absolute. It keeps being changed as new discoveries come in. Sometimes those changes are tiny; sometimes they’re huge.

This concept is very different from the core concept of political and religious worldviews, which are usually based on allegedly fixed core beliefs. Because of the sheer number of beliefs in any serious worldview, it’s simply not possible to check all the possible combinations of beliefs for consistency within a human lifetime. Even if you have large numbers of believers checking for inconsistencies, there’s still the issue of how to fix those inconsistencies without causing schisms. The history of political and religious disagreements doesn’t inspire much confidence in people’s ability to resolve such schisms in an amicable manner that doesn’t lead to a body count.

In practice, core beliefs often morph over time in grudging response to the realities of the day, usually slowly enough to be imperceptible from day to day, which reduces the risk that the faithful will perceive the changes as inconsistencies.

Which takes us back to the starting point of this article, and is as good a place as any to stop.

In the next article, I’ll look at a mental model derived from everyday experience, and at what happens when you extend that model from the physical world to perceptions of virtue and sin.

Some related concepts and further reading

There has been a lot of work in mathematics and related fields on the problem of combinations. The mathematical issue of increasing numbers of possible combinations is a core part of the travelling salesman problem. This is a well-recognised major problem for a wide range of fields, since the number of possible routes grows factorially, which is even faster than exponential growth; for even a few dozen points, the number of combinations is far too large for any human being to work through within a lifetime.
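For a sense of scale, here is a small Python sketch of how the route count grows. It assumes the simplest version of the travelling salesman problem, where the distance between two points is the same in both directions, so that n points give (n - 1)! / 2 distinct round trips; the function name `round_trips` is just a label for this example.

```python
from math import factorial

def round_trips(n):
    """Number of distinct round trips through n points, counting a route
    and its reverse as the same trip and ignoring the choice of start."""
    return factorial(n - 1) // 2

for n in (5, 10, 20, 30):
    print(n, round_trips(n))
```

Five points give 12 routes and ten points give 181,440; by thirty points the count has more than thirty digits.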

There’s also a lot of work within graph theory on related concepts, such as finding the shortest route between two points, which has big implications for fields as diverse as electronics and route finding in satnav systems.

The topic of dodgy reasoning in this context has been researched from numerous perspectives, such as motivated reasoning, confirmation bias, cognitive dissonance, and loss aversion.

One field whose connection with span of consistency is less obvious, but tantalising, is criminology. A common theme in petty crime is that the individual doesn’t appear to have thought through the consequences of their actions. This has been connected in the literature with development of the frontal lobes of the brain, which are heavily involved in planning. This isn’t exactly the same as span of consistency, but it’s very similar, in terms of scanning ahead for various numbers of steps.

The issue of consistency across links also relates to a topic that I’ve blogged about several times, namely how horror and humour relate to Necker shifts, when you suddenly perceive something in an utterly different way. I’ll return to this theme in a later article.


September 17, 2018

Mental models, and the Other as dark reflection.

By Gordon Rugg

This article is the first in a series about mental models and their implications both for worldviews and for everyday behaviour. Mental models are at the core of how we think and act. They’ve received a lot of attention from various disciplines, which is good in terms of there being plenty of material to draw on, and less good in terms of clear, unified frameworks.

In these articles, I’ll look at how we can use some clean, elegant formalisms to make more sense of what mental models are, and how they can go wrong. Much of the classic work on mental models has focused on mental models of specific small scale problems. I’ll focus mainly on the other end of the scale, where mental models have implications so far-reaching that they’re major components of worldviews.

Mental models are a classic case of the simplicity beyond complexity. Often, something in a mental model that initially looks trivial turns out to be massively important and complex; there’s a new simplicity at the other side, but only after you’ve waded through that intervening complexity. For this reason, I’ll keep the individual articles short, and then look in more detail at the implications in separate articles, rather than trying to do too much in one article.

I’ll start with the Other, to show how mental models can have implications at the level of war versus peace, as well as at the level of interpersonal bigotry and harassment.

The Other is a core concept in sociology and related fields. It’s pretty much what it sounds like. People tend to divide the world into Us and Them. The Other is Them. The implications are far reaching.

The full story is, as you might expect, more complex, but the core concept is that simple. In this article, I’ll look at the surface simplicity, and look at the different implications of two different forms of surface simplicity.

It’s a topic that takes us into questions about status, morality, and what happens when beliefs collide with reality.

A simple model: Using fixed opposites

A simple model of Us versus the Other uses a set of core values associated with Us, and a matching set of opposite values for the Other. Some of those values for Us are generic good things, such as being brave; others involve specific characteristics that are used to define the group, such as believing in democracy. The values are typically treated as crisp sets, with no fuzzy intermediate values between them; they’re either good or bad.

The table below shows some examples of how this works.

So far, so simple.

What happens, though, when the Other behaves in a way that doesn’t match that stereotype, or when reality behaves in a way that doesn’t match that stereotype?

This has a habit of happening in wartime, when a war begins with one group assuming that the Other is weak and cowardly (and therefore needs to be subjugated for the greater good). In World War II, the German troops invading Russia received an unwelcome shock when they encountered stiff resistance from Russian troops armed with T-34 tanks that were more than a match for the German tanks. Similarly, Allied forces encountering Japanese forces equipped with the advanced Zero fighters discovered the flaws in their stereotypes about Asians doing inferior copies of Western innovations.

One option at this point would be to wonder whether the stereotype might be wrong, but people with strong stereotypes aren’t usually keen on that option, because it opens the door to uncomfortable questions such as “Are we the baddies?”

Another option, in the case of an individual Other, is to treat that individual as an honorary one of Us, who isn’t like the rest of Them. This strategy is quite often used as a way of reducing cognitive dissonance, when a prejudiced individual has to work with a member of the Other, and discovers that they are actually different from the stereotype of the Other. This can be rationalised for individual cases with dodgy folk wisdom such as “the exception that proves the rule”. By definition, though, this approach can only be used for a few cases before it starts to challenge the rule.

A third option is to deny reality, for example by claiming that uncomfortable evidence has been faked. This can easily lead into a spiral down into conspiracy theory, as the implications ripple out to a wider network of unwelcome facts which must in turn be denied.

None of these options is brilliant.

A subtler solution is to sidestep the underlying problem by using a set of opposites based on what are humorously known as irregular adjectives to cover a broader range of possibilities.

A more sophisticated model: Using irregular adjectives

An example of irregular adjectives is: “I am resolute, you are stubborn, he/she/it is pig-headed”.

So, for example, if one of Us does something dangerous, they are doing it because they are brave; if one of Them does the identical thing, they are doing it because they are fanatical. Conversely, We are prudent, whereas They are cowardly, as in the table below. When we depart from being democratic, we are being pragmatic; when they depart from being tyrannical, they are being unprincipled.

This approach has the advantage of handling all eventualities. However, it can easily be accused of moral relativism and of hypocritical cynicism. A classic example is the character of Sir Humphrey, the archetypal cynical civil servant, in the comedy series Yes Minister and Yes Prime Minister. His discussion of morality in politics is well worth watching for serious insights into complex ethical and practical questions, as well as for entertainment.

So why do people follow the simple Us/Them model?

An easy and obvious answer is that a lot of people follow the simple Good Us/Bad Other model because they are too lazy and/or stupid to handle anything more complex.

That answer, though, doesn’t stand up well to close examination. If you look at populist politics through history, simplistic Us/Them politics attracts a lot of vigorous, energetic supporters. It also attracts significant numbers of people who are in other respects intelligent and sophisticated. Laziness and stupidity don’t explain all of what’s going on.

A more interesting answer is that the Good Us/Bad Them model is attractive to some people because it’s a crisp set worldview, like the one in the image below, where something is either white or black, with no intermediates or alternatives.

A crisp set worldview offers the appearance of certainty in an uncertain world. This is particularly attractive to people who find that world threatening; for example, threatening because they’re worried about losing their status or privilege. In the real world, binary categorisation is very often combined with fear or threat or the prospect of loss. It’s also very often associated with heavy moral loading for the binary values. The dividing line between Us and Them in this worldview is also the dividing line between good and evil, between high status and low status, and between happiness and misery.

The irregular adjective model is more likely to appeal to people who view the world as being inherently greyscale, with few or no absolute cases of completely right or completely wrong, as in the diagram below.

Implications

When a dividing line between categories is as important to someone as in the binary worldview above, then any breakdown in that division is deeply threatening. For this reason, extreme binary worldviews tend to be very hostile to borderline cases. This hostility can appear completely disproportionate to the apparent threat from the borderline cases themselves, which may involve just a handful of people or situations. What’s probably going on is that the hostility is driven by the perceived threat of the dividing line being broken, and letting in a host of other, more threatening, cases.

This may be why nuanced models such as the crisp/fuzzy/crisp one below are not more popular within worldviews. They explicitly assume a significant proportion of greyscale cases (which would be threatening to True Believers) but also explicitly assume a significant proportion of crisp set members (which would not fit well with a relativistic worldview where everything is on a greyscale). I’ve blogged about crisp and fuzzy sets here and here, and about their use in ideology here.

In systematised worldviews such as organised religions and formal political parties, the dividing line between crisp categories is typically policed by specialist theologians or political operatives who decide how each borderline case should be categorised. This leads to outcomes such as dietary laws or lists of clean and unclean things. From the viewpoint of the ordinary believer, this has the advantage of offloading the heavy decision making onto someone else; the believer can safely hide in the herd if they follow the official categorisation. The diagram below shows the red line between Good and Evil in such worldviews.

Whoever controls the red line has a lot of power. This becomes very apparent in populist politics and populist religion, when demagogues can stake out their claim to leadership of a group of Us by drawing a red line between the Us and the Other. This often involves Othering a group that was previously viewed as part of Us.

In contrast, the irregular adjectives model doesn’t offer strong claims to moral superiority of Us over Them, and doesn’t offer a strong appearance of certainty. It does provide explanations for unwelcome surprises from reality, but this advantage isn’t enough for true believers in the binary Good Us/Bad Them model.

Conclusion

A lot of people subscribe to simple crisp set worldviews involving a good Us versus the Other, where the Other is a dark mirror-opposite of our virtues. The popularity of these worldviews isn’t simply attributable to the believers being lazy or stupid. Something deeper is going on.

In the next article in this series, I’ll look at the issue of apparent inconsistency in simplistic worldviews, via the concept of span of consistency.


August 21, 2018

Crisp and fuzzy categorisation

By Gordon Rugg

Categorisation occurs pretty much everywhere in human life. Most of the time, most of the categorisation appears so obvious that we don’t pay particular attention to it. Every once in a while, though, a case crops up which suddenly calls our assumptions about categorisation into question, and raises uncomfortable questions about whether there’s something fundamentally wrong in how we think about the world.

In this article, I’ll look at one important aspect of categorisation, namely the difference between crisp sets and fuzzy sets. It looks, and is, simple, but it has powerful and far-reaching implications for making sense of the world.

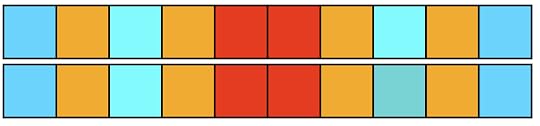

I’ll start with the example of whether or not you own a motorbike. At first glance, this looks like a straightforward question which divides people neatly into two groups, namely those who own motorbikes, and those who don’t. We can represent this visually as two boxes, with a crisp dividing line between them, like this.

However, when you’re dealing with real life, you encounter a surprising number of cases where the answer is unclear. Suppose, for instance, that someone has jointly bought a motorbike with their friend. Does that person count as being the owner of a motorbike, when they’re actually the joint owner? Or what about someone who has bought a motorbike on hire purchase, and has not yet finished the payments?

This was a problem that confronted classical logic right from the start. Classical logic as practised by leading Ancient Greek philosophers typically involved dividing things into the categories of “A” and “not-A” and then proceeding from this starting point. The difficulty with this, as other Ancient Greek philosophers pointed out from the outset, is that a lot of categories don’t co-operate with this approach. A classical example is the paradox of the beard. At what point does someone move from the category of “has a beard” to the category of “doesn’t have a beard”? Just how many hairs need to be present for the category of “has a beard” to kick in?

An obvious response is to use categories that form some sort of scale, such as one ranging from “utterly hairless” via “wispy” to “magnificently bearded”. We can show that visually like this.

How does the motorbike example map onto these two approaches? One way is to combine them into three groups, namely “definitely owns a motorbike” and “sort-of owns a motorbike” and “definitely does not own a motorbike”. We can represent this visually like this. (I’ve included blue lines around the central category to show where it begins and ends.)

As an added refinement, we could make the width of the boxes correspond with how many people fit into each category, like this.

So far, so good. We now have a simple, powerful way of handling categorisation, which can be represented via clear, simple diagrams. As an added bonus, there’s a branch of mathematical logic, namely fuzzy logic, which provides a rigorous way of handling fuzzy categories. Since its invention by Zadeh in the 1960s, fuzzy logic has spread into a huge range of applications; for example, it’s used in engineering applications ranging from washing machines to car engines.
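To make the difference concrete, here is a minimal Python sketch using the beard example. Everything numeric in it is an assumption made up for illustration: the hair counts, the crisp threshold of 1000, and the fuzzy boundaries of 100 and 5000 are not real measurements. The straight-line ramp is one simple choice of fuzzy membership function among many.

```python
def crisp_has_beard(hairs, threshold=1000):
    """Crisp set: every case is forced into 'beard' or 'no beard'."""
    return hairs >= threshold

def fuzzy_has_beard(hairs, low=100, high=5000):
    """Fuzzy set: degree of membership in 'has a beard', from 0.0 to 1.0,
    rising in a straight line between the two (hypothetical) boundaries."""
    if hairs <= low:
        return 0.0
    if hairs >= high:
        return 1.0
    return (hairs - low) / (high - low)

def three_way(hairs, low=100, high=5000):
    """Crisp/fuzzy/crisp: two definite categories with a 'sort-of' band between."""
    degree = fuzzy_has_beard(hairs, low, high)
    if degree == 0.0:
        return "definitely no beard"
    if degree == 1.0:
        return "definitely bearded"
    return "sort-of bearded"

for hairs in (0, 999, 1000, 2550, 6000):
    print(hairs, crisp_has_beard(hairs), fuzzy_has_beard(hairs), three_way(hairs))
```

The crisp version treats 999 hairs and 1000 hairs as belonging to opposite categories, whereas the three-way version places both in the same intermediate band.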

At this point, you may be wondering suspiciously whether there’s a catch. You may well already know the catch, and know that it’s a big one.

The catch

So far, I’ve used gentle, non-threatening examples of categorisation, such as motorbike ownership and beard classification. The catch is that a lot of categorisation involves scary, threatening, morally charged issues that are usually linked with strong emotions, value systems, identity, and vested interests.

Here’s an illustration of how a lot of value systems work.

They divide the world into two crisp sets, where one set consists of goodness and light, and the other set consists of evil and darkness.

So how does this type of value system handle the “sort-of” problem? Typically, it will go through each type of “sort-of” and decide whether to include it in the “goodness and light” category or in the “evil and darkness” category.

This would lead to all sorts of problems if everyone within a particular ideological group made those judgments for themselves, so usually the judgments are made by a chosen individual or set of individuals, on behalf of the group. These judgments are then treated as laws, whether in the purely legal sense, or in the religious sense (e.g. dietary laws) or in a political or some other sense (as in the stated principles of a political group). I’ve blogged about the basic issues here, and about religious categorisations of gender and clean/unclean here.

This arrangement gives a lot of power to whoever decides where to position the ideological red line between light and darkness.

How would that “whoever” respond to a suggestion such as the one below, where there is a greyscale category between “definitely good” and “definitely evil”?

The usual response is to condemn it vehemently as moral relativism which puts everything on a greyscale, like the one below, where everything is tainted by the grey of evil and is therefore impure (and consequently likely to suffer the punishments prescribed by the value system). Yes, that’s a misrepresentation of what the diagram above is actually saying, but when emotions run high, facts tend to be early casualties.

Conclusion

So, where does this leave us?

First conclusion: The concepts of crisp sets and fuzzy sets are extremely useful, and allow us to make clean sense of messy problems. They’re easy to represent visually, which helps clarify what people mean when dealing with a specific problem.

Second conclusion: The combination of crisp, fuzzy and crisp is a particularly useful way of handling problems which initially look like messy greyscales.

Third conclusion: Categorisation is often associated with emotionally charged value judgments, so any plan to change an existing categorisation or to introduce a new one should check for potential value system associations.

Although these concepts look simple, they have far reaching implications, both for communication of what we actually mean within a category system, and for how we structure the categorisation systems at the heart of our world.

Notes and links

There’s a good background article about fuzzy logic here: https://en.wikipedia.org/wiki/Fuzzy_concept


July 22, 2018

Surface structure and deep structure

By Gordon Rugg

The concepts of surface structure and deep structure are taken for granted in some disciplines, such as linguistics and media studies, but little known in others. This article is a brief overview of these concepts, with examples from literature, film, physics and human error.

The core concept

A simple initial example is that the surface structure of Fred kisses Ginger is an instantiation of the deep structure the hero kisses the heroine. That same deep structure can appear as many surface structures, such as Rhett kisses Scarlett or Mr Darcy kisses Elizabeth Bennet.

There are various ways of representing surface and deep structure. One useful representation is putting brackets around each chunk of surface structure, to clarify which bits of surface structure map onto which bits of deep structure; for example, [Mr Darcy] [kisses] [Elizabeth Bennet].
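The bracket notation lends itself to a very small data representation. The Python sketch below is only one possible encoding, with made-up names (`DEEP_STRUCTURE`, `bracketed`, `align`): each surface structure is a tuple of chunks, and aligning it with the deep structure pairs each chunk with the role it fills.

```python
# Deep structure: the roles that each surface chunk fills.
DEEP_STRUCTURE = ("hero", "kisses", "heroine")

# Surface structures from the examples above, one chunk per role.
SURFACE_EXAMPLES = [
    ("Fred", "kisses", "Ginger"),
    ("Rhett", "kisses", "Scarlett"),
    ("Mr Darcy", "kisses", "Elizabeth Bennet"),
]

def bracketed(chunks):
    """Write the chunks in bracket notation."""
    return " ".join(f"[{chunk}]" for chunk in chunks)

def align(surface, deep):
    """Pair each surface chunk with the deep structure role it maps onto."""
    return list(zip(surface, deep))

for surface in SURFACE_EXAMPLES:
    print(bracketed(surface), "->", align(surface, DEEP_STRUCTURE))
```

All three surface structures align with the same three roles, which is the sense in which they share one deep structure.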

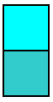

Another useful representation shows the surface structure mapped onto the deep structure visually. One way of doing this is as a table, like the one below.

Showing similarities and showing differences

This approach is useful for showing where the surface differences are masking underlying identical deep structure. It applies to a wide range of fields, and has far-reaching implications for teaching and training, because novices tend to focus on the surface structure and to miss the deep structure. For instance, a novice might focus on the surface structure difference that one accident involves a car whereas another involves a train, and miss the deep structure similarity that both were caused by a strong but wrong error. Similarly, students learning introductory maths and physics are often misled by the surface form of a problem (e.g. calculating the area of a room versus calculating the area of a playing field), and miss the deep structure commonality between the two problems.

The surface structure/deep structure distinction is also useful in the opposite direction, where very similar or identical surface structures are based on very different deep structures. For instance, there’s a scene in the original 1982 Blade Runner where the female replicant Pris kisses the male human character Sebastian. This surface structure description looks much the same as the surface structure descriptions above for heroes kissing heroines, apart from being gender-reversed.

However, the deep structure in the Blade Runner case is very different. Pris is a replicant with superhuman strength, who has already participated in the killings of numerous humans on a spacecraft before she appears on screen; whether she is a heroine or an anti-heroine or something else is one of the many debatable points of the film. When she embraces and kisses Sebastian, she is menacing him via her physical proximity. Sebastian is a minor character, rather than a hero, and has no romantic link to Pris. We can show this with a table like the one below.

The complexity beneath

With the classic romance examples, the mapping between surface structure and deep structure was pretty straightforward.

The Blade Runner example, however, raises a lot of interesting questions about what a deep structure analysis can or should tackle. For instance, one possible deep structure involves the power dynamic of a female character kissing a male character, as opposed to vice versa. Another possible deep structure involves one character kissing another character against their will. Yet another possible deep structure involves Pris being a character in the uncanny valley between human and non-human, who is kissing someone who is definitely human.

This isn’t a simple case of which is the “correct” interpretation; the choice of which deep structure to use is a reflection of assorted social and ideological beliefs, assumptions and values. That’s a fine, rich, topic for analysis, but it leads away from the central theme of this article, which is about how the concepts of surface structure and deep structure operate.

The next example shows how you can map the surface/deep distinction onto a distinction that I’ve written about previously, namely the distinction between leaf level and twig/branch level within graph theory.

A well-known example of deep structure mapping is that the friendship between Han Solo and Chewbacca in Star Wars is based on a well-established trope of [human adventurer] [with] [semi-human companion]. This trope goes back to the Epic of Gilgamesh over four thousand years ago, where the human hero Gilgamesh has a semi-human companion called Enkidu.

This trope can in turn be treated as a subset of the trope [protagonist] [with] [companion who is the opposite of the protagonist in key features]. In the case of the protagonist Sherlock Holmes, for instance, his companion Watson is portrayed as a foil to Holmes in terms of Holmes’ most striking characteristics, such as his intelligence and his unconventionality.

We can show this visually via decision trees such as the one below. I’ve used pairs of examples for each surface structure box, to show how both examples map onto the same deep structure. The Maturin/Aubrey examples are from a set of novels by the late Patrick O’Brian, where Jack Aubrey is a larger-than-life Nelson-era sea captain, and Stephen Maturin is his small, intellectual companion.
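As a rough sketch of the same idea in code, the mapping can be written as a small tree where each surface structure example points to its deep structure, and each deep structure can point to a more general one. The names PARENT, deep_structures and shares_deep_structure are made up for this illustration, and filing the Holmes/Watson and Aubrey/Maturin pairs directly under the general trope is an assumption made for the sketch, not a claim about the original diagram.

```python
# Deep structures (tropes), written in the bracket notation used above.
HUMAN_WITH_SEMI_HUMAN = "[human adventurer] [with] [semi-human companion]"
PROTAGONIST_WITH_OPPOSITE = (
    "[protagonist] [with] "
    "[companion who is the opposite of the protagonist in key features]"
)

# Each key points to the structure one level above it.
# Assumption: Holmes/Watson and Aubrey/Maturin sit directly under the
# general trope; the article does not specify an intermediate level.
PARENT = {
    "Han Solo and Chewbacca": HUMAN_WITH_SEMI_HUMAN,
    "Gilgamesh and Enkidu": HUMAN_WITH_SEMI_HUMAN,
    "Holmes and Watson": PROTAGONIST_WITH_OPPOSITE,
    "Aubrey and Maturin": PROTAGONIST_WITH_OPPOSITE,
    HUMAN_WITH_SEMI_HUMAN: PROTAGONIST_WITH_OPPOSITE,
}


def deep_structures(surface):
    """Walk upwards from a surface example, listing each deep structure."""
    chain = []
    node = surface
    while node in PARENT:
        node = PARENT[node]
        chain.append(node)
    return chain


def shares_deep_structure(a, b):
    """True if two surface examples map onto at least one common trope."""
    return bool(set(deep_structures(a)) & set(deep_structures(b)))


print(deep_structures("Han Solo and Chewbacca"))
print(shares_deep_structure("Gilgamesh and Enkidu", "Holmes and Watson"))
```

Walking upwards from Han Solo and Chewbacca passes through the more specific trope first and then the more general one, which is the leaf, twig and branch arrangement described above.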

One interesting result of mapping deep structure onto some form of graph theory is that this fits well with facet theory. This is a significant advantage because it provides a formal, rigorous representation which gives a solid infrastructure for examining the same topic from different perspectives. For instance, one facet for analysing fiction might be power dynamics, and another might be gender roles, while another might be social class.

That, though, takes us outside the scope of a basic introduction…

So, in summary, the distinction between surface structure and deep structure is simple in its essence, but is powerful and useful, with far-reaching implications.

Notes and links

You’re welcome to use Hyde & Rugg copyleft images for any non-commercial purpose, including lectures, provided that you state that they’re copyleft Hyde & Rugg.

There’s more about the theory behind this article in my latest book:

Blind Spot, by Gordon Rugg with Joseph D’Agnese

http://www.amazon.co.uk/Blind-Spot-Gordon-Rugg/dp/0062097903

You might also find our website useful:

Overviews of the articles on this blog:

https://hydeandrugg.wordpress.com/2015/01/12/the-knowledge-modelling-book/

https://hydeandrugg.wordpress.com/2015/07/24/200-posts-and-counting/

https://hydeandrugg.wordpress.com/2014/09/19/150-posts-and-counting/

July 20, 2018

The simplicity beyond complexity

By Gordon Rugg

The simplicity beyond complexity is a concept attributed to Oliver Wendell Holmes Jr. It appears in at least a couple of forms, as described below.

“I would not give a fig for the simplicity this side of complexity, but I would give my life for the simplicity on the other side of complexity.” This quote, which on the Holmes Sr page has “my right arm” instead of “my life,” is one for which I haven’t found the source so far, and so I will leave this quote as it is on both pages. – InvisibleSun 18:05, 10 October 2006 (UTC)

https://en.wikiquote.org/wiki/Talk:Oliver_Wendell_Holmes_Jr.

It’s interpreted in at least a couple of ways.

One way, which I won’t go into here, is about working out how to solve a problem, and then hiding the complexity of the solution from the user, so that the product is simple to use.

The other way, which I will go into below, is about why apparently sensible simple explanations often don’t work, and about why there’s often a different but better simple explanation that only emerges after a lot of complexity, confusion and investigation.

Adapted from: https://commons.wikimedia.org/wiki/Category:Graphic_labyrinths#/media/File:Triple-Spiral-labyrinth.svg

A common criticism of academic writing is that it’s just dressing up a simple idea in complicated words. At one level, that’s quite often a sort-of fair criticism. However, that criticism is usually followed by a statement of what the simple idea actually is, where the simple idea is simply wrong. This is the point where academics tend to point out why the simple idea is wrong, and the critic responds forcefully, and everything descends into heat and noise, with very little light being shed on what the real answer should be.

So, what’s going on?

What often happens is as follows. For standard everyday situations, there’s a simple explanation that works well enough, or at least appears to work well enough. So far, so good.

However, when a non-standard situation occurs, that simple explanation often falls apart very rapidly. One common response is to keep doing the same thing, only harder. That seldom ends well.

Usually, understanding the non-standard situation turns out to be complex, messy, and unpredictable as regards where that understanding takes you. Eventually, if all goes well, you get an answer, and it’s a raw, unpolished, complicated answer. You can now do two things with that answer.

One is to write it up in all its full, complex, messy glory, so that anyone who wants to properly understand what’s going on can see the complete answer. That’s what academic writing and technical writing are about.

The other thing you can do with the answer is to work out how to simplify it enough for anyone to understand and use. That takes time, effort, and a specialist skill set. All those commodities are rare. Also, at the end of the process, the new answer often looks so simple that people tend to treat it as self-evident, and to wonder why anyone would bother to make a big deal of it in the first place.

An example of this process is finding a client’s requirements. At first sight, this looks simple: You just interview them, and ask them to list their requirements.

However, this simply doesn’t work very well. It doesn’t work very well even when the client’s life literally depends on the requirements being complete and correct, as in the case of aircraft pilots giving requirements for flight control systems.

Understanding and solving this problem led to an entire discipline emerging, namely requirements engineering. This drew on a wide range of fields, from anthropology to neuropsychology and to mathematics. When Neil Maiden and I pulled the answers together into what we called the ACRE framework, the framework had several facets, and included about a dozen types of memory, skill and communication. It wasn’t exactly user-friendly for anyone except academic specialists.

However, it was possible to distil that framework down to four simple concepts that covered enough of the complexity for most purposes. The four concepts are: Do, don’t, can’t, won’t.

In brief, if you’re trying to extract information from a human being, there are four main categories:

Do: What they do tell you

Don’t: What they would be willing and able to tell you, but happen not to tell you, for various reasons

Can’t: When they can’t put their knowledge into words even though they want to (e.g. a skilled driver trying to tell a novice how to change gear)

Won’t: What they are unwilling to tell you, for various reasons

It’s short, it’s simple, and it’s good enough for most purposes. However, it’s a different simple answer from the one that we started with, and it’s not quite as simple as the one we started with. The simplicity beyond complexity is often like that.

So, that’s the simplicity beyond complexity. It’s a really useful concept, and it’s something worth striving for.


July 13, 2018

Explosive leaf level fan out

By Gordon Rugg

Often in life a beautiful idea is brought low by an awkward reality. Explosive leaf level fan out is one of those awkward realities (though it does have a really impressive sounding name, which may be some consolation).

So, what is it, and why is it a problem? Can it be a solution, as well as a problem? These, and other questions, are answered below.

Categorisation is a deeply embedded feature of the world, in areas as diverse as laws and supermarket aisle layout. Usually, we only notice it when it goes wrong, or does something unexpected, as in the example below.

Cropped from https://www.pinterest.co.uk/pin/AZgaUW8MtPVSTXzKvXeq_Yj3zNRCIRzDkAb6_24iKelbkm_FUX9JYxo/ : Used under fair use terms

Many categorisation systems are hierarchical, where you have a few categories at the top, divided into sub-categories, then into further sub-categories, and so on, as in the diagram below.

A common way of constructing hierarchies is to start at the top with the categories, and then work downwards, deciding on the sub-categories and further sub-divisions as you proceed. This is known as the top-down approach.

Media genre is a familiar example of this approach, and of the problems that it can encounter. For instance, at first it makes good sense to treat “film” and “music” as separate categories. However, this hits problems with opera and with musicals; where do they fit? There’s a similar familiar problem with supermarket aisle layout, with products such as dried fruit; should dried fruit be next to fruit, because it’s dried fruit, or next to flour, because it’s widely used in baking, like flour?

The “where do we file it?” problem is different from leaf level fan out, but the two issues often overlap, as in the examples below.

When you go down through the layers of a hierarchy, you eventually emerge from categories into specific instances; for example, from the category of blues to the instance of the song “Hard Time Killing Floor Blues”.

This level of specific instances is known as the leaf level, by analogy with a tree dividing into branches and then twigs and finally ending with leaves. Depending on the details and purposes of the categorisation, leaves may take different forms. For instance, you might choose to treat a particular song as a leaf, or you might treat a particular cover of that song as a leaf, or you might treat each separate CD containing a particular cover of that song as a leaf.

The diagram below shows several layers of categorisation, ending at leaf level with schematic leaves.

At first glance, this looks like a manageable hierarchy. However, if we see the same information in a different layout, then the problems start to become apparent.

The image above shows how the hierarchy is rapidly broadening at leaf level. This is leaf level fan out. In this particular example, the fan out is clearly visible, but with only a few tens of leaves, there isn’t a huge problem.

However, what often happens with real cases is that a hierarchy suddenly goes from a manageable number of twigs into an enormous number of leaves. Music categorisation is a classic example, with an enormous number of leaf-level songs being mapped onto a comparatively tiny set of twig-level categories.

Explosive leaf level fan out is when the fan out at leaf level is much greater than the fan out at higher levels of the hierarchy. It’s an informal term, so you can use your judgment about whether or not to apply it to a particular case.
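One way of making that judgment a bit more concrete is to compare the average fan out at leaf level with the average fan out higher up. The sketch below is only an illustration: the toy hierarchy, the function names and the threshold ratio of 3 are all assumptions, since the term itself is informal and has no agreed cut-off. It represents categories as nested dicts and twigs as lists of leaves, and assumes the hierarchy contains at least one of each.

```python
def collect_fan_outs(node, upper, leaf_level):
    """Record how many children each node has.

    Dicts are categories whose children are sub-categories or twigs;
    lists are twigs whose children are leaves.
    """
    if isinstance(node, dict):
        upper.append(len(node))
        for child in node.values():
            collect_fan_outs(child, upper, leaf_level)
    else:
        leaf_level.append(len(node))


def fan_out_summary(hierarchy):
    """Return (mean fan out above leaf level, mean fan out at leaf level)."""
    upper, leaf_level = [], []
    collect_fan_outs(hierarchy, upper, leaf_level)
    return sum(upper) / len(upper), sum(leaf_level) / len(leaf_level)


def looks_explosive(hierarchy, ratio=3.0):
    """Arbitrary rule of thumb: leaf fan out is `ratio` times the rest."""
    upper_mean, leaf_mean = fan_out_summary(hierarchy)
    return leaf_mean >= ratio * upper_mean


# Hypothetical toy hierarchy: two levels of categories, then twelve
# leaves under each twig.
toy = {
    "music": {
        "blues": [f"blues song {i}" for i in range(12)],
        "opera": [f"opera {i}" for i in range(12)],
    },
    "film": {
        "comedy": [f"comedy {i}" for i in range(12)],
        "drama": [f"drama {i}" for i in range(12)],
    },
}

print(fan_out_summary(toy))   # (2.0, 12.0)
print(looks_explosive(toy))   # True
```

In the toy case each category splits into two, but each twig splits into twelve leaves, so the leaf level fan out is six times the fan out above it.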

A key issue about explosive leaf level fan out is that it can turn a problem from being difficult to being impractical to solve or impossible to solve. This often occurs with top-down solutions proposed by inexperienced managers, who may design solutions that look okay for the first few levels, but that fall apart completely when they encounter explosive leaf level fan out.

An example is attempts to legislate against dangerous dog breeds. At first sight, this looked like a case of using existing categorisations of dogs, and specifying which breeds should be classified as dangerous, with the breeds treated as the leaf level.

However, it soon became apparent that in addition to problems such as how to categorise mixed-breed dogs, there was so much individual variation of dog behaviour within breeds that treating breeds as the leaf level was not sensible. Instead, it looked more sensible to treat each individual dog as a leaf. This led to explosive leaf level fan out, because of the huge number of individual dogs involved, making the entire approach infeasible just in terms of fan out.

So, explosive leaf level fan out can be a major problem. Sometimes, though, it can be the mirror image of a very useful solution.

What happens if, instead of going through a hierarchy from the top downwards, you go through it from the bottom upwards?

What often happens is that you start off with an apparently intractable number of individual cases; far too many to handle on a case-by-case basis.

However, if you have a good categorisation system, that enormous number of leaves will map onto a manageably small number of twigs. This lets you reduce enormous leaf level complexity to a much more tractable set of categories.
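The bottom-up direction can be sketched in the same informal way. In the example below, the song records, the genre labels and the twig_for rule are all hypothetical; the point is only that a large number of leaves can collapse onto a handful of twigs once a categorisation rule is available.

```python
from collections import Counter


def twig_for(song):
    """Hypothetical categorisation rule: use the song's genre as its twig."""
    return song["genre"]


# Made-up leaf level data: 600 songs spread over three genres.
genres = ["blues", "jazz", "folk"] * 200
songs = [
    {"title": f"song {i}", "genre": genre}
    for i, genre in enumerate(genres)
]

# Going bottom-up: map every leaf onto its twig and count the results.
twig_counts = Counter(twig_for(song) for song in songs)

print(len(songs))        # 600 leaves
print(len(twig_counts))  # 3 twigs
print(twig_counts["blues"])  # 200
```

Whether the reduction is useful depends on how good the categorisation rule is; a poor rule would give twigs that are as hard to work with as the original leaves.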