Oxford University Press's Blog, page 798

June 23, 2014

Fixing the world after Iraq

Seldom do our national leaders take time to look meaningfully behind the news. As we now see with considerable clarity, watching the spasms of growing sectarian violence in Iraq, the results can be grievously unfortunate, or even genuinely catastrophic.

Injurious foreign policies ignore certain vital factors. For example, our American national leaders have meticulously examined the presumed facts surrounding Iraq and Afghanistan, Russia and the Ukraine, and Israel and the Palestinians, but, just as assiduously, have avoided any deeper considerations of particular kinds of crisis.

We must now therefore inquire: How shall we effectively improve our chances for surviving and prospering on this endangered planet?

This is not a narrow partisan query. The answers should be determined entirely by intellectual effort, not political party or ideology.

More and more, reason discovers itself blocked by a thick “fog of the irrational,” by something inside of us, heavy and dangerous, that yearns not for truth, but rather for dark mystery and immortality. Presciently, the German historian Heinrich von Treitschke cited his compatriot, the philosopher Johann Gottlieb Fichte, in his posthumously published lectures (Politics, 1896): “Individual man sees in his own country, the realization of his earthly immortality.”

Nothing has really changed.

U.S. Army Spc. Justin Towe scans his area while on a mission with Iraqi army soldiers from 1st Battalion, 2nd Brigade, 4th Iraqi Army Division in Al Muradia village, Iraq, March 13, 2007. Towe is assigned to 4th Platoon, Delta Company, 2nd Battalion, 27th Infantry Regiment, 3rd Brigade Combat Team, 25th Infantry Division, Schofield Barracks, Hawaii. (U.S. Air Force photo by Master Sgt. Andy Dunaway) www.army.mil CC BY 2.0 via The U.S. Army Flickr.

When negotiating the treacherous landscapes of world politics, even in Iraq, generality trumps particulars.

To garner attention, current news organizations choose to focus on tantalizing specifics, e.g., Iraq, Syria, Egypt, Afghanistan, etc. What finally matters most, however, is something more complex: a cultivated capacity for the systematic identification of recurring policy issues and problems.

Naturally, the flesh-and-blood facts concerning war, revolution, riots, despotism, terrorism, and genocide are more captivating to citizens than abstract theories. But the real point of locating specific facts must be a tangible improvement of the human condition. In turn, any civilizational betterment must be contingent on even deeper forms of general human awareness.

Only by exploring the individual cases in world politics (e.g., Iraq) as intersecting parts of a much larger class of cases, can our leaders ever hope to learn something predictive. While seemingly counter-intuitive, it is only by deliberately seeking general explanations that we can ever hope to “fix” the world.

“The blood-dimmed tide is loosed,” lamented the Irish poet, William Butler Yeats, and “everywhere the ceremony of innocence is drowned.” Today’s global harms and instabilities, whether still simmering, or already explosive, are best understood as symptoms of a more generalized worldwide fragility. It is, therefore, unhelpful to our leaders for these symptoms ever to be regarded as merely isolated, discrete, or unique.

One prospective answer concerns the seemingly irremediable incapacity of human beings to find any real meaning and identity within themselves. Typically, in world politics, it is always something other than one’s own Self (the state, the movement, the class, the faith, etc.) that is held sacred. As a result, our species remains stubbornly determined to demarcate preferentially between “us” and “them,” and then, always, to sustain a rigidly segmented universe.

In such a fractionated world, “non-members” (refugees, aliens, “infidels,” “apostates,” etc.) are designated as subordinate and inferior. This fatal designation is the very same lethal inclination that occasioned both world wars and the Holocaust, among other atrocities.

From the beginning, some kind of “tribal” conflict has driven world affairs. Without a clear and persisting sense of an outsider, of an enemy, of a suitably loathsome “other,” whole societies would have felt insufferably lost in the world. Drawing self-worth from membership in the state or the faith or the race (Nietzsche’s “herd”; Freud’s “horde”), people could not hope to satisfy even the most elementary requirements of world peace.

Our progress in technical and scientific realms has no discernible counterpart in cooperative human relations. We can manufacture advanced jet aircraft and send astronauts into space, but before we are allowed to board commercial airline flights, we must first take off our shoes.

How have we managed to blithely scandalize our own creation? Much as we still like to cast ourselves as a “higher” species, the veneer of human society remains razor thin. Terrorism and war are only superficially about politics, diplomacy, or ideology; the most sought after power is always power over death.

The key questions about Iraq have absolutely nothing to do with counter-insurgency or American “boots on the ground.” Until the underlying axes of conflict are understood, all of our current and future war policies will remain utterly beside the point. Indeed, if this had been recognized earlier, few if any American lives would have been wasted in Iraq and Afghanistan.

Although it may seem nonsensical, we must all first learn to pay more attention to our personal feelings of empathy, anxiety, restlessness, and desperation. While these feelings still remain unacknowledged as hidden elements of a wider world politics, they are in fact determinative for international relations.

Oddly enough, we Americans still don’t really understand that national and international life must ultimately be about the individual. In essence, the time for “modernization,” “globalization,” “artificial intelligence,” and even “new information methodologies” is almost over. To survive together, the fragmented residents of this planet must learn to discover an authentic and meaningfully durable human existence, detached from traditional distinctions.

It is only in the vital expressions of a thoroughly re-awakened human spirit that we can learn to recognize what is important for national survival. Beware, “The man who laughs,” warned the poet Bertolt Brecht, “has simply not yet heard the horrible news.”

Following Iraq and Afghanistan, the enduring barbarisms of life on earth can never be undone by improving global economies, building larger missiles, fashioning new international treaties, spreading democracy, or even by supporting “democratic” revolutions. Inevitably, humankind still lacks a tolerable future, not because we have been too slow to truly learn, but because we have failed to learn what is truly important.

To improve our future foreign policies, to avoid our recurring global misfortunes, we must learn to look behind the news. In so doing, we could acknowledge that the vital root explanations for war, riots, revolution, despotism, terrorism, and genocide are never discoverable in visible political institutions or ideologies. Instead, these explanations lie in the timeless personal needs of individuals.

Louis René Beres was educated at Princeton (Ph.D., 1971), and is Professor of International Law at Purdue University. Born in Zürich, Switzerland, at the end of World War II, he is the author of ten major books, and many articles, dealing with world politics, law, literature, and philosophy. His most recent publications are in the Harvard National Security Journal (Harvard Law School); International Journal of Intelligence and Counterintelligence; Parameters: Journal of the U.S. Army War College; The Brown Journal of World Affairs; Israel Journal of Foreign Affairs; The Atlantic; The Jerusalem Post; U.S. News & World Report.

If you are interested in this subject, you may also be interested in Morality and War: Can War be Just in the Twenty-first Century? by David Fisher. Fisher explores how just war thinking can and should be developed to provide such guidance. His in-depth study examines philosophical challenges to just war thinking, including those posed by moral scepticism and relativism.

Subscribe to the OUPblog via email or RSS.

Subscribe to only current affairs articles on the OUPblog via email or RSS.

The post Fixing the world after Iraq appeared first on OUPblog.

Related Stories:
Scotland’s return to the state of nature?
Obama’s predicament in his final years as President
Does learning a second language lead to a new identity?


Hannah Arendt and crimes against humanity

Film is a powerful tool for teaching international criminal law and increasing public awareness and sensitivity about the underlying crimes. Roberta Seret, President and Founder of the NGO at the United Nations, International Cinema Education, has identified four films relevant to the broader purposes and values of international criminal justice and over the coming weeks she will write a short piece explaining the connections as part of a mini-series. This is the second one, Hannah Arendt, following The Act of Killing.

By Roberta Seret

The powerful biographical film, Hannah Arendt, focuses on Arendt’s historical coverage of Adolf Eichmann’s trial in 1961 and the genocide of six million Jews. But sharing center stage is Arendt’s philosophical concept: what is thinking?

The German director Margarethe von Trotta begins her riveting film with a short silent scene: Mossad’s abduction in Buenos Aires of Adolf Eichmann, the former Nazi chief of the Gestapo section for Jewish Affairs. Eichmann was in charge of the deportation of Jews from all European countries to concentration camps.

Margarethe von Trotta and Pam Katz’s brilliant screenplay is written in a literary style that covers a four-year “slice of life” in Hannah Arendt’s world. The director invites us onto this stage by introducing us to Arendt (played by award-winning actress Barbara Sukowa), her friends, her husband, colleagues, and students.

As we listen to their conversations, we realize that we will bear witness not only to Eichmann’s trial, but to Hannah Arendt’s controversial words and thoughts. We get multiple points of view about the international polemic she has caused in her coverage of Eichmann. And we are asked to judge as she formulates her political and philosophical theories.

Director von Trotta continues her literary approach to cinema by using flashbacks that take us to the beginning of Arendt’s university days in Marburg, Germany. She is a Philosophy major, studying with Professor Martin Heidegger. He is the famous Father of Existentialism. Hannah Arendt becomes his ardent student and lover. In the first flashback, we see a young Arendt, at first shy and then assertive, as she approaches the famous philosopher. “Please, teach me to think.” He answers, “Thinking is a lonely business.” His smile asks her if she is strong enough for such a journey.

“Learn not what to think, but how to think,” wrote Plato, and Arendt learns quickly. “Thinking is a conversation between me and myself,” she espouses.

Arendt learned to be an Existentialist. She put herself forward to become Heidegger’s private student, just as she later put herself forward to cover the Eichmann trial for The New Yorker. Every flashback in the film is woven into a precise place, as if the director were Ariadne and at the center of the web were Heidegger and Arendt. From flashback to flashback, we witness the influence Heidegger exerts on his student. As a father figure, Heidegger forms her; he teaches her the passion of thinking, a journey that lasts her entire life.

Throughout the film, in the trial room, in the pressroom, in Arendt’s Riverside Drive apartment, we see her thinking and smoking. The director has taken the intangible process of thinking and made it tangible. The cigarette becomes the reed for Arendt’s thoughts. After several scenes, we, the spectators, begin to think with the protagonist and we want to follow her thought process despite the smoke screen.

When Arendt studies Eichmann in his glass cell in the courtroom, she studies him obsessively as if she were a scientist staring through a microscope at a lethal cancer cell on a glass slide. She is struck by what she sees in front of her – an ordinary man who is not intelligent, who cannot think for himself. He is merely the instrument of a horrific society. She must have been thinking of what Heidegger taught her – we create ourselves. We define ourselves by our actions. Eichmann’s actions as Nazi chief created him; his actions created crimes against humanity.

The director shows us many sides of Arendt’s character: curious, courageous, brilliant, seductive, and wary, but above all, she is a Philosopher. Eichmann’s trial became the inspiration for her philosophical legacy, the Banality of Evil: All men have within them the power to be evil. Man’s absence of common sense, his absence of thinking, can result in barbarous acts. She concludes at the end of the film in a form of summation speech, “This inability to think created the possibility for many ordinary men to commit evil deeds on a gigantic scale, the like of which had never been seen before.”

And Eichmann, his summation defense? It is presented to us by Willem Sassen, Dutch Fascist and former member of the SS, who had a second career in Argentina as a journalist. In 1956 he asked Eichmann if he was sorry for what he had done as part of the Nazis’ Final Solution.

Eichmann responded, “Yes, I am sorry for one thing, and that is I was not hard enough, that I did not fight those damned interventionists enough, and now you see the result: the creation of the state of Israel and the re-emergence of the Jewish people there.”

The horrific acts of the Nazis speak for themselves. Director von Trotta in this masterpiece film has stimulated us to think again about genocide and crimes against humanity, their place in history as well as in today’s world.

Roberta Seret is the President and Founder of International Cinema Education, an NGO based at the United Nations. Roberta is the Director of Professional English at the United Nations with the United Nations Hospitality Committee where she teaches English language, literature and business to diplomats. In the Journal of International Criminal Justice, Roberta has written a longer ‘roadmap’ to Margarethe von Trotta’s film on Hannah Arendt. To learn more about this new subsection for reviews of literature, film, art projects or installations, read her extension at the end of this editorial.

The Journal of International Criminal Justice aims to promote a profound collective reflection on the new problems facing international law. Established by a group of distinguished criminal lawyers and international lawyers, the journal addresses the major problems of justice from the angle of law, jurisprudence, criminology, penal philosophy, and the history of international judicial institutions.

Oxford University Press is a leading publisher in international law, including the Max Planck Encyclopedia of Public International Law, latest titles from thought leaders in the field, and a wide range of law journals and online products. We publish original works across key areas of study, from humanitarian to international economic to environmental law, developing outstanding resources to support students, scholars, and practitioners worldwide. For the latest news, commentary, and insights follow the International Law team on Twitter @OUPIntLaw.


Subscribe to only law articles on the OUPblog via email or RSS.

The post Hannah Arendt and crimes against humanity appeared first on OUPblog.

Related Stories:
Psychodrama, cinema, and Indonesia’s untold genocide
Investing for feline futures
Welcome to the OHR, Stephanie Gilmore


Investing for feline futures

For tigers, visiting your neighbor is just not as easy as it used to be. Centuries ago, tigers roamed freely across the landscape from India to Indonesia and even as far north as Russia. Today, tigers inhabit just 7% of that historical range. And that 7% is distributed in tiny patches across thousands of kilometers.

Habitat destruction and poaching have caused serious declines in tiger populations – only about 3,000 tigers remain from a historical estimate of 100,000 just a century ago. Several organizations are concerned with conserving this endangered species. Currently, however, all conservation plans focus on increasing the number of tigers. Our study shows that, if we are managing for a future with healthy tiger populations, we need to look beyond the numbers. We need to consider genetic diversity.

Genetic diversity is the raw material for evolution. Populations with low genetic diversity can have lower health and reproduction due to ‘inbreeding effects’. This was the case with the Florida panther – in the early 1990s there were just 30 Florida panthers left. Populations with low genetic diversity are also vulnerable when faced with challenges such as disease or changes in the environment.

Photo by Rachael A. Bay

Luckily for tigers, they don’t have low genetic diversity. They have very high genetic diversity, in fact. But the problem for tigers is that losing genetic diversity happens more quickly in many small, disconnected populations than in one large population. The question is: how can we keep that genetic diversity so that tigers never suffer the consequences of inbreeding effects?

We used computer simulations to predict how many tigers we would need in the future to keep the genetic diversity they already have. Our study shows that without connecting the small populations, the number of tigers necessary to maintain that variation is biologically impossible. However, if we can connect some of these populations – between tiger reserves or even between subspecies – the number of tigers needed to harbor all the genetic variation becomes much smaller and more feasible. Case studies have shown that introducing genetic material from distantly related populations can hugely benefit the health of a population in decline. In the case of the Florida panther, when individuals from another subspecies were introduced into the breeding population, the numbers began to rise.
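To make the small-versus-large population point concrete, here is a minimal sketch in Python. It is not the simulation used in the study; it applies a standard textbook approximation for genetic drift in an idealized population, in which expected heterozygosity after t generations is H_t = H_0 (1 - 1/(2N))^t. The function name, the starting heterozygosity, the patch sizes, and the number of generations are all hypothetical values chosen only for illustration.

```python
def expected_heterozygosity(h0, pop_size, generations):
    """Expected heterozygosity after drift in an idealized population.

    Textbook approximation: H_t = H_0 * (1 - 1/(2N))^t,
    where N is the (effective) population size.
    """
    return h0 * (1.0 - 1.0 / (2.0 * pop_size)) ** generations


# Hypothetical inputs for illustration only (not taken from the study).
H0 = 0.7           # assumed starting heterozygosity
GENERATIONS = 50   # assumed time horizon

# One well-connected population of 3,000 animals versus
# a single isolated patch of 50 animals.
connected = expected_heterozygosity(H0, 3000, GENERATIONS)
isolated_patch = expected_heterozygosity(H0, 50, GENERATIONS)

print(f"connected population: {connected:.3f}")
print(f"isolated patch:       {isolated_patch:.3f}")
```

Under these assumptions the isolated patch loses heterozygosity far faster than the connected population, which is the intuition behind linking reserves. The sketch tracks diversity within a single patch only and ignores migration, mutation, and selection, so it should be read as an illustration rather than a forecast.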

We do need to increase the number of tigers in the wild. If we can’t stop poaching and habitat destruction, we will lose all wild tigers before we have a chance to worry about genetic diversity. But in planning to conserve this majestic animal for future generations we should make sure those future populations can thrive – and that means trying to keep genetic variation.

Rachael A. Bay is a PhD candidate at Stanford University, and co-author of the paper ‘A call for tiger management using “reserves” of genetic diversity’, which appears in the Journal of Heredity.

Journal of Heredity covers organismal genetics: conservation genetics of endangered species, population structure and phylogeography, molecular evolution and speciation, molecular genetics of disease resistance in plants and animals, genetic biodiversity and relevant computer programs.


Subscribe to only earth and life sciences articles on the OUPblog via email or RSS.

Image credit: Tigers at San Francisco Zoo. Photo by Rachael A. Bay. Do not reproduce without permission.

The post Investing for feline futures appeared first on OUPblog.

Related Stories:
Gene flow between wolves and dogs
Welcome to the OHR, Stephanie Gilmore
Faith and science in the natural world


June 22, 2014

How much do you know about The Three Musketeers?

The Three Musketeers, by Alexandre Dumas, celebrates its 170th birthday this year. The classic story of friendship and adventure has been read and enjoyed by many generations all over the world, and there have been dozens of adaptations, including the classic silent 1921 film, directed by Fred Niblo, and the recent BBC series. Take our quiz to find out how much you know about the book, its author, and the time at which it was written.

Still from the American film The Three Musketeers (1921) with George Siegmann, Douglas Fairbanks, Eugene Pallette, Charles Belcher, and Léon Bary. Public Domain via Wikimedia Commons.


Alexandre Dumas was a French novelist and dramatist. A pioneer of the romantic theatre in France, he achieved fame with his historical dramas, such as Henri III et sa cour (1829). His reputation now rests on his adventure novels, especially The Three Musketeers (1844–45) and The Count of Monte Cristo (1844–45). The Oxford World’s Classics edition of The Three Musketeers is edited with an Introduction and notes by David Coward.

For over 100 years Oxford World’s Classics has made available the broadest spectrum of literature from around the globe. Each affordable volume reflects Oxford’s commitment to scholarship, providing the most accurate text plus a wealth of other valuable features, including expert introductions by leading authorities, voluminous notes to clarify the text, up-to-date bibliographies for further study, and much more. You can follow Oxford World’s Classics on Twitter and Facebook.


Subscribe to only literature articles on the OUPblog via email or RSS.

The post How much do you know about The Three Musketeers? appeared first on OUPblog.

Related Stories:
How well do you know short stories?
A tale of two fables: Aesop vs. La Fontaine
A Mother’s Day reading list from Oxford World’s Classics


Scotland’s return to the state of nature?

Some observers may immediately recoil at the thought that an entity that is partially independent would have advantages over an entity with a full measure of sovereignty. This indeed seems to be the view of the minority of Scottish voters who intend to vote in favor of Scotland’s secession from the United Kingdom during the September 2014 independence referendum. To them, Scotland’s current condition of partial independence may seem like a cup that is half full. By contrast, full independence may seem like an outcome that is always good. This view is, however, at odds with the condition of nearly 50 partially independent territories throughout the world (like Hong Kong, Bermuda, and Puerto Rico), which tend to be far wealthier and more secure than their demographically similar fully independent counterparts. The per capita GDP of the average partially independent territory (US$32,526) is about three times that of the average sovereign state (US$9,779). The relative wealth of partially independent territories is even more striking when the comparison controls for factors such as population size, geographic region, and regime type. The aspiration for full independence for its own sake also flies in the face of our own common-sense experience as individuals.

When consumers purchase fruits and vegetables at the grocery store rather than growing them themselves, when we buy clothes rather than learning the art of weaving, when customers put money in banks rather than guarding it themselves, when citizens consent to reliable police protection rather than arming for a state of war, in all of these actions people have ceded what would otherwise be their full independence and instead embraced partial independence. We put our confidence in others in some respects while in other ways retaining our own autonomy. This frees us to specialize. It facilitates collaboration, allowing us to build on the work of others. It gives us confidence to take risks. At the citizen level, however, we do not typically refer to this as partial independence; we refer to it as being civilized. Clothing oneself with an animal skin and running out into the wilderness to survive alone in the state of nature may provide someone with a full cup of independence, but it is not the kind of condition that most people want to be in.

But this is precisely what Scotland’s First Minister, Alex Salmond, and his secessionist Scottish National Party (SNP) colleagues seem to want for their homeland. Scotland’s current partial independence with the United Kingdom provides wide-ranging economic, political, and security advantages for both the United Kingdom and Scotland. With the world’s fifth largest economy, the UK provides a larger share of public services per capita to Scotland than to other areas of the country. London is also one of the largest financial centers in the world, and amidst the 2008 financial downturn, while nearby sovereign states like Iceland and Ireland were reeling under financial strain, Britain’s central bank opened its coffers to Scotland, spending £126.6 billion to prevent local bank failure. Britain, which is the world’s fourth largest military power, also furnishes Scotland’s defense. The UK also has one of the highest levels of rule of law and provides Scotland with credible guarantees for its own self-determination.

And with full control over the elections in its own local parliament as well as control over one-tenth of the seats in the British Parliament (with an occasional Scotsman as UK Prime Minister), Scotland is far more influential with its neighbors and throughout the world than it would be if it atrophied into a mini sovereign state. If Scotland’s current arrangement is modified with new powers (such as greater control over taxation, natural resources, and foreign relations), its partially independent status stands to deliver even greater advantages.

Choosing full independence would, however, tragically throw away many ― or all of ― the aforementioned advantages. It would take Scotland into the wilderness of international anarchy in which it would have to fend for itself as a sovereign state. Entry into the European Union may mitigate some of the costs of secession and international anarchy, but there are no guarantees of the terms―or availability―of such membership. Indeed, even if Scotland managed to eventually join the EU, it is widely believed that it would need to drop the British pound and adopt the (locally unpopular) Euro.

Whether as a society or an individual, there certainly is a time in which it makes sense to quit the interdependence advantages of civilized life. If (as with war-torn regions) one’s security and rights are under threat, or if (as in the world’s numerous weak fully independent states) conditions are so poor that society has lost its preexisting capability to deliver services, or if (as with historic colonies) one is subject to continuous exploitation by a higher power, under such conditions one may be justified in grabbing a rifle, bundling up the family, and heading for the woods. To some extent, some of the latter conditions may indeed apply to Catalonia’s association with Spain, but none of them apply to Scotland’s relationship with the United Kingdom. Scotland’s partially independent union with the UK brings substantial advantages that would not be available under full independence.

True nationalists do not seek a political alternative (like full or partial independence) for its own sake or because it is an article of faith. Rather, they seek out alternatives that best fulfill the economic, security, political and other interests of their nationality. They refrain from needlessly throwing advantages away. It may however still be the case that SNP leaders have a winning strategy. If pushing for a self-damaging divorce with the UK is merely a ploy to win even greater powers as a partially independent territory, they may in fact have a strategy that could validate their nominally nationalist credentials.

David A. Rezvani, D.Phil. Oxford University, has taught courses in international and comparative politics at Dartmouth College, Harvard University, MIT, Trinity College, Boston University, and Oxford University. He is a visiting research assistant professor and lecturer at Dartmouth College. He is the author of Surpassing the Sovereign State: The Wealth, Self-Rule, and Security Advantages of Partially Independent Territories.


Subscribe to only politics articles on the OUPblog via email or RSS.

Image credit: The modern architecture of the Scottish Parliament building in Edinburgh. © andy2673 via iStockphoto.

The post Scotland’s return to the state of nature? appeared first on OUPblog.

Related Stories:
Obama’s predicament in his final years as President
Books by design
Political apparatus of rape in India


Does learning a second language lead to a new identity?

Every day I get asked why second language learning is so hard and what can be done to make it easier. One day a student came up to me after class and asked me how his mother could learn to speak English better. She did not seem to be able to break through and start speaking. Perhaps you or someone you know has found learning another language difficult.

So why is it so hard?

There are a lot of explanations. Some have to do with biology and the closing of a sensitive period for language. Others have to do with how hard grammar is. People still take English classes in US high schools up to senior year. If a language were easy, then native speakers of a language would not have to continue studying it to the dawn of adulthood.

But what if we took a different approach? Rather than ask what makes learning a second language so hard, let’s ask what makes it easier.

One group of successful language learners includes those who write in a second language. For example, Joseph Conrad, born Józef Teodor Konrad Korzeniowski, wrote Heart of Darkness in English, a language he spoke with a very strong accent. He was of Polish origin and considered himself Polish his entire life. Despite his heavy accent, he is regarded by many as one of the greatest English writers. Interestingly, English was his third language. Before moving to England, he lived in France and was known to have a very good accent in his second language. Hence, success came to Conrad in a language he spoke less than perfectly.

The use of English as a literary language has gained popularity in recent years. William Grimes, in a New York Times piece, describes a new breed of writers who are embracing a second language in literary spirit. Grimes describes the prototypical story that captures the essence of language learning, The Other Language by Francesca Marciano. It’s the story of a teenager who falls in love with the English language, tugged by her fascination with an English-speaking boy. Interestingly, it turns out there is a whole host of writers who write in their second language.

Grimes also considers the effects that writing in a second language has on the authors themselves. Some writers find that as time passes in the host country they begin to take on a new persona, a new identity. Their native land grows more and more distant in time and they begin to feel less like the person they were when they initially immigrated. Ms. Marciano feels that English allows her to explore parts of her that she did not know existed. Others feel liberated by the voice they discover in another language.

The literary phenomenon that writers describe is one that has been discussed at length by Robert Schrauf of Penn State University as a form of state-dependent learning. In one classic study of state-dependent learning, a group of participants was asked to learn a set of words below or above water and then tested either above or below water. Interestingly, memory was better when the location of the learning matched the testing, even when that was underwater, a particularly uncomfortable situation relative to above water. Similar explanations can be used to describe how emotional states can lead to retrieval of memories that are seemingly unrelated. For example, anger at a driver who cuts you off might lead to memories of the last time you had a fight with a loved one.

Schrauf reviews evidence that is consistent with this hypothesis. For example, choosing the same word in a first or second language will lead people to remember events at different times in their lives. Words in the first language lead to remembering things earlier in life whereas viewing a translation in a second language leads to memories that occurred later in life.

The reports of writers and the research done by Robert Schrauf and his colleagues help point to a key aspect that might help people learn their second language. Every time someone learns a new language they begin to associate this language with a set of new experiences that are partially disconnected from those earlier in life. For many this experience is very disconcerting. They may no longer feel like themselves. Where they were once fluent and all knowing, now they are like novices who are trying desperately to find their bearings. For others like Yoko Tawada, a Japanese native who now lives in Berlin and writes in German, it is the very act of being disconnected that leads to creativity.

Interestingly, the use of two languages has also served as a vehicle for psychotherapists. Patients who undergo traumatic experiences often report being able to discuss them in a second language. Avoidance of the native language helps to create a distance from the emotional content experienced in the first language.

The case of those who write in their second language as well as those in therapy suggests that our identity may play a key role in the ability to learn a second language. As we get older new experiences begin to incorporate themselves into our conscious memory. Learning a second language as an adult may serve to make the differences between distinct periods in our lives much more salient. Thus, the reports of writers and the science of autobiographical memory may hold the key to successful language learning. It may involve a form of personal transformation. For those who are unsuccessful it may involve an inability to let go of their old selves. However, for those who embrace their new identity it can be liberating.

It was precisely this point that I raised with the student in my class who sought advice for his mother. I explained that learning a second language will often involve letting go of our identities in order to embrace something new. But how do you get someone to let go of himself or herself? One way to achieve this is to start keeping a diary in an unfamiliar language. Writing will probably not only lead a person to develop better language skills but also carry other, deeper consequences. Writing in a non-native language may lead someone to develop a new identity.

Arturo Hernandez is currently Professor of Psychology and Director of the Developmental Cognitive Neuroscience graduate program at the University of Houston. He is the author of The Bilingual Brain. His major research interest is in the neural underpinnings of bilingual language processing and second language acquisition in children and adults. He has used a variety of neuroimaging methods as well as behavioral techniques to investigate these phenomena, and his findings have been published in a number of peer-reviewed journal articles. His research is currently funded by a grant from the National Institute of Child Health and Human Development. You can follow him on Twitter @DrAEHernandez. Read his previous blog posts.

Subscribe to the OUPblog via email or RSS.

Subscribe to only brain sciences articles on the OUPblog via email or RSS.

Image credit: Young female student with friends on break at cafe. © LuckyBusiness via iStockphoto.

The post Does learning a second language lead to a new identity? appeared first on OUPblog.

Related Stories: What do Otis Redding and Roberto Carlos have in common? | Why nobody dreams of being a professor | Books by design

Books by design

Despite the old saying, a book’s cover is perhaps the strongest factor in why we pick up a book off the shelf or pause during our online web shopping. Of course, we all like to think that we are above such a judgmental mentality, but the truth is that a cover design can make — or break — a book’s fortunes.

Brady McNamara, Senior Designer at Oxford University Press, admitted that designing book covers isn’t as easy as one might think.

“To create a book jacket,” McNamara explained, “you have to first understand the book’s concept. I have about 75 books at a time to design jackets for. That’s just too much. To help, I have about 10-12 really great freelance designers who really know what OUP is all about.” He continued, “I also always try one or two new freelancers each go around. Sometimes they work, and sometimes they don’t. That’s kind of what happened to Dog Whistle Politics.”

Ian Haney Lopez’s book, examining how politicians use veiled racial appeals to persuade white voters to support policies that favor the rich and threaten the middle class, is a difficult concept to capture. “It was a particularly tough cover to design: the subject just doesn’t lend itself to one concrete image,” McNamara agreed. He and his freelancer toiled over numerous cover drafts.

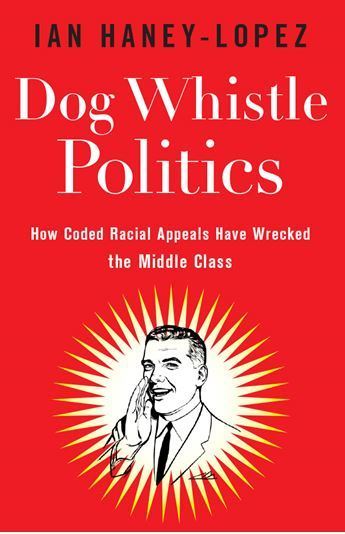

Dog Whistle Politics Design 1:

McNamara: “These are the initial sketches shown by our freelancer. I really liked [the] 1950’s clip art man. It had a kitschiness and style typical to ‘the conservative establishment.’ However, the cover review panel (editor and marketing manager) thought the starburst frame seemed out of place, like vintage advertising.”

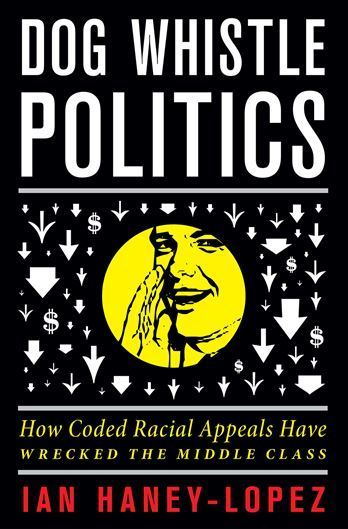

Dog Whistle Politics Design 2:

McNamara: “In another sketch, the designer used a black background and surrounded our clip art guy with symbols of the economy and arrows illustrating its downfall. It was arbitrary and yet too obvious; just didn’t feel right.”

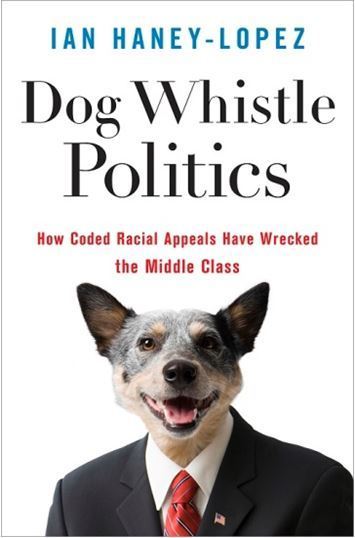

Dog Whistle Politics Design 3:

McNamara: “[The] editor suggested that an actual image of a dog could work. The sketch was submitted but not shown. I made a personal call that it was too gimmicky and distractingly odd. It was weird, and not a funny weird.”

Dog Whistle Politics Design 4:

“I finally took the design on myself,” says McNamara, laughing slightly as he remembers the process. “I had a pretty good idea of what we needed by now. It really wasn’t the freelancer’s fault; again, it’s just one of those books that is tough to design for. I tried an image of a bulldog and after the author mentioned a dog whistle, I incorporated that into the title. Nothing more literal I guess. The bulldog turned out to be too cute.”

Dog Whistle Politics Design 5:

McNamara: “I tried a more menacing Doberman in black and white—ears pricked up as if hearing the whistle. I also brought a serious tone back to the design by using just red, gray, and black type. This was finally approved. Not the most esoteric design, but it stands out on the shelf – if only for those pointy ears.”

While McNamara struggled with the concept for Dog Whistle Politics, design is a collaborative process.

James Cook, an Oxford University Press editor, discussed the pressing dilemmas of the design process: “I know the book best and worked with it the longest, so I understand the themes and perhaps have some ideas about how they can be illustrated.” He serves as a translator to the designer from the book itself and the author. “I talk with the author and try to relay his or her wishes to the designer, while also making sure the book and title are being represented. A cover needs to align, interpret, and reflect the book’s themes accurately, while also being attractive to a buyer.”

One of his titles, Coming Up Short, a book that sheds light on what it really means to be a working class young adult with all its economic insecurity and deepening inequality, also went through a number of cover jackets before finding the right fit.

Coming Up Short Design 1:

“One of the first designs was a girl in a skirt, sitting on a swing in the park,” remembers Cook. “It certainly portrayed what we wanted, but also had a sexual predator vibe as well.”

“I didn’t have a good feeling about the first cover I saw,” the author Jennifer Silva confessed. “I thought it had a kind of Lolita vibe when mixed with the title of the book. I expressed my concern, and it turned out that others at Oxford agreed.”

“Jen was great to work with,” Cook acknowledged. “Sometimes it becomes difficult going back and forth trying to satisfy everyone’s wishes while also finding a good portrayal of the book. Sometimes authors just don’t want certain colors or schemes in the cover, and it’s my job to make sure they are heard.”

Coming Up Short Design 2:

After several other drafts, everyone agreed on a jacket: saturated yellow with multiple ladders.

“I love it,” raved Silva. “It feels young, modern, and hip. It’s not too literal, and also looks great on a bookshelf.” When asked whether the experience of designing the jacket was frustrating or stressful, Jen waved the issue away, saying, “No, it was fun to go back and forth!”

Not all books are as design-intensive as Dog Whistle Politics and Coming Up Short. Cook says, “Some academic books are easy because you can follow a certain style that is well known and easily recognized as being a textbook.”

However, there are always a few books along the way that keep designers and editors nimble.

Maggie Belnap is a Social Media Intern at Oxford University Press. She attends Amherst College.

The post Books by design appeared first on OUPblog.

Related Stories: Obama’s predicament in his final years as President | Political apparatus of rape in India | A Q&A with Peipei Qiu on Chinese comfort women

June 21, 2014

How social media is changing language

From unfriend to selfie, social media is clearly having an impact on language. As someone who writes about social media I’m aware of not only how fast these online platforms change, but also of how they influence the language in which I write.

The words that surround us every day influence the words we use. Since so much of the written language we see is now on the screens of our computers, tablets, and smartphones, language now evolves partly through our interaction with technology. And because the language we use to communicate with each other tends to be more malleable than formal writing, the combination of informal, personal communication and the mass audience afforded by social media is a recipe for rapid change.

From the introduction of new words to new meanings for old words to changes in the way we communicate, social media is making its presence felt.

New ways of communicating

An alphabet soup of acronyms, abbreviations, and neologisms has grown up around technologically mediated communication to help us be understood. I’m old enough to have learned the acronyms we now think of as textspeak on the online forums and ‘Internet relay chat’ (IRC) that pre-dated text messaging. On IRC, acronyms help speed up a real-time typed conversation. On mobile phones they minimize the inconvenience of typing with tiny keys. And on Twitter they help you make the most of your 140 characters.

Emoticons and acronyms such as LOL (‘laughing out loud’, which has just celebrated its 25th birthday) add useful elements of non-verbal communication, or annoy people with their overuse. This extends to playful asterisk-enclosed stage directions describing supposed physical actions or facial expressions (though use with caution: it turns out that *innocent face* is no defence in court).

An important element of Twitter syntax is the hashtag — a clickable keyword used to categorize tweets. Hashtags have also spread to other social media platforms — and they’ve even reached everyday speech, but hopefully spoofs such as Jimmy Fallon and Justin Timberlake’s sketch on The Tonight Show will dissuade us from using them too frequently. But you will find hashtags all over popular culture, from greetings cards and t-shirts to the dialogue of sitcom characters.

Syntax aside, social media has also prompted a more subtle revolution in the way we communicate. We share more personal information, but also communicate with larger audiences. Our communication styles consequently become more informal and more open, and this seeps into other areas of life and culture. When writing on social media, we are also more succinct, get to the point quicker, operate within the creative constraints of 140 characters on Twitter, or aspire to brevity with blogs.

New words and meanings

Facebook has also done more than most platforms to offer up new meanings for common words such as friend, like, status, wall, page, and profile. Other new meanings which crop up on social media channels also reflect the dark side of social media: a troll is no longer just a character from Norse folklore, but someone who makes offensive or provocative comments online; a sock puppet is no longer solely a puppet made from an old sock, but a self-serving fake online persona; and astroturfing is no longer simply laying a plastic lawn but also a fake online grass-roots movement.

Social media is making it easier than ever to contribute to the evolution of language. You no longer have to be published through traditional avenues to bring word trends to the attention of the masses. While journalists have long provided the earliest known uses of topical terms — everything from 1794’s pew-rent in The Times to beatboxing in The Guardian (1987) — the net has been widened by the “net.” A case in point is Oxford Dictionaries 2013 Word of the Year, selfie: the earliest use of the word has been traced to an Australian Internet forum. With forums, Twitter, Facebook, and other social media channels offering instant interaction with wide audiences, it’s never been easier to help a word gain traction from your armchair.

Keeping current

Some people may feel left behind by all this. If you’re a lawyer grappling with the new geek speak, you may need to use up court time to have terms such as Rickrolling explained to you. And yes, some of us despair at how use of this informal medium can lead to an equally casual attitude to grammar. But the truth is that social media is great for word nerds. It provides a rich playground for experimenting with, developing, and subverting language.

It can also be a great way to keep up with these changes. Pay attention to discussions in your social networks and you can spot emerging new words, new uses of words, and maybe even coin one yourself.

A version of this post first appeared on OxfordWords blog.

Jon Reed is the author of Get Up to Speed with Online Marketing and runs the website Publishing Talk. He is also on Twitter at @jonreed.

Image: via Shutterstock.

The post How social media is changing language appeared first on OUPblog.

Related Stories: The in-depth selfie: discussing selfies through an academic lens | Kotodama: the multi-faced Japanese myth of the spirit of language | Henry James, or, on the business of being a thing

Obama’s predicament in his final years as President

The arc of a presidency is long, but it bends towards failure. So, to paraphrase Barack Obama, seems to be the implication of recent events. Set aside our domestic travails, for which Congress bears primary responsibility, and focus on foreign policy, where the president plays a freer hand. In East Asia, China is rising and truculent, scrapping with its neighbors over territory and maritime resources. From Hanoi to Canberra, the neighbors are buttressing their military forces and clinging to Washington’s security blanket. Across Eurasia, Vladimir Putin is pushing with sly restraint to reverse the strategic setbacks of 1989-91. America’s European allies are troubled but not to the point of resolution. At just 1.65 percent of GDP, the EU’s military spending lags far behind Russia’s, at 4.5 percent of GDP. The United States, the Europeans presume, will continue to provide, much as it has done since the late 1940s.

Washington’s last and longest wars are, meanwhile, descending towards torrid denouements. Afghanistan’s fate is tenuous. Free elections are heartening, but whether the Kabul government can govern, much less survive the withdrawal of US forces scheduled for 2016, is uncertain. Iraq, from which Obama in 2011 declared us liberated, is catastrophic. The country is imploding, caught in the firestorm of Sunni insurgency that has overwhelmed the Levant. We may yet witness genocide. We may yet witness American personnel scrambling into helicopters as they evacuate Baghdad’s International Zone, a scene that will recall the evacuation of Saigon in April 1975. The situations are not analogous: should ISIS militia penetrate Baghdad, the outcome will be less decisive than was North Vietnam’s 1975 conquest of South Vietnam, but for the United States the results will be no less devastating. America’s failure in Iraq would be undeniable, and all that would remain would be the allocation of blame.

We have traveled far from Grant Park, where an inspirational campaign culminated in promises of an Aquarian future. We are no longer in Oslo, where a president newly laden with a Nobel Prize spoke of rejuvenating an international order “buckling under the weight of new threats.” Obama’s charisma, intellect, and personality, all considerable, have not remade the world; instead, a president who conjured visions of a “just and lasting peace” now talks about hitting singles and doubles. There have in fairness been few errors, although Obama’s declaration that US troops will leave Afghanistan in 2016 may count as such. The problem is rather the conjuncture of setbacks, many deep-rooted, that now envelops US foreign policy. These setbacks are not Barack Obama’s fault, but deal with them he must. The irony is this: dire circumstances, above all Iraq, made Obama’s presidential campaign credible and secured his election. Dire circumstances, including Iraq, may now be overwhelming Obama’s presidency.

Cerebral and introspective, Obama may rue that the last years of presidencies are often difficult. Truman left the White House with his ratings in the gutter, while Eisenhower in his last years seemed to critics doddery and obsolete. But for Mikhail Gorbachev, Reagan’s presidency might have ended in ignominy. Yet a president’s last years can be years of reinvention, even years of renewal. With the mid-terms not yet upon us, the fourth part of Obama’s day has not passed; its shape is still being defined.

Barack Obama speaks in Cairo, Egypt, 4 June 2009. Public domain via Wikimedia Commons.

Notorious as a time of setbacks, the 1970s offer examples of late-term reinventions. Henry Kissinger, serving President Ford in 1974-76, and Jimmy Carter in 1979-80 snatched opportunity from adversity; their examples may be salutary. The mid-1970s found Kissinger’s foreign policy at a nadir: political headwinds were blowing against the East-West détente that he and Richard Nixon had built, even as Congress voted to deny the administration the tools to confront Soviet adventurism in the Third World. Transatlantic relations were at low ebb, as the industrialized countries competed to secure supplies of oil and to overcome a tightening world recession. Alarmed by the deterioration of core alliances, Kissinger in 1974-76 pivoted away from his prior fixation with the Cold War’s grand chessboard and set to work rebuilding the Western Alliance. As he did so, he pioneered international economic governance through the G-7 summits and restored the comity of the West. Kissinger even engaged with the Third World, proposing an international food bank to feed the world’s poorest and aligning the United States with black majority rule in sub-Saharan Africa. Amidst transient adversity, Kissinger laid the foundations for a post-Cold War foreign policy, and the benefits abounded in the decades that followed.

Jimmy Carter in the late 1970s inherited serious challenges, some of which he exacerbated. Soviet-American détente was already on the ropes, but Carter’s outspoken defenses of Soviet human rights added to the strain. The Shah of Iran, a longtime client, was already in trouble, but the strains on his regime intensified under Carter, who sent mixed messages. Pahlavi’s tumbling in the winter of 1978-79 and the ensuing hostage crisis threw Carter’s foreign policy off the rails. Months later, the Soviet Union invaded Afghanistan. Carter responded by reorienting US foreign policy towards an invigorated Cold War posture. He embargoed the USSR, escalated defense spending, rallied the West, and expanded cooperation with China. This Cold War turn was counterintuitive, being a departure from Carter’s initial bid to transcend Cold War axioms, but it confirmed his willingness to adapt to new circumstances. Carter’s anti-Soviet turn increased the fiscal strain on the Soviet Union, helping to precipitate not only the Cold War’s re-escalation but also the Cold War’s resolution. Carter also defined for the United States a new military role in the Persian Gulf, where Washington assumed direct responsibility for the security of oil supplies. This was not what Carter intended to achieve, but he adapted, like Kissinger, to fast-shifting circumstances with creative and far-reaching initiatives.

Late-term adaptations such as Kissinger’s and Carter’s may offer cues for President Obama. One lesson of presidencies past is that the frustration of grand designs can be liberating. The Nixon administration in the early 1970s sought to build a “new international structure of peace” atop a Cold War balance of power. Despite initial breakthroughs, Nixon’s design faltered, leaving Ford and Kissinger to pick up the pieces. Carter at the outset envisaged making a new “framework of international cooperation,” but his efforts at architectural renovation also came unstuck. The failures of architecture and the frustration of grand designs nonetheless opened opportunities for practical innovation, which Kissinger, Carter, and others pursued. Even George W. Bush achieved a late reinvention focused on practical multilateralism after his grand strategic bid to democratize the Middle East failed in ignominy. Whether Obama can do the same may hinge upon his willingness to forsake big ideas of the kind that he articulated when he spoke in Cairo of a “new beginning” in US relations with the Muslim world and to refocus on the tangible problems of a complex and unruly world that will submit to grand designs no more readily today than it has done in the past.

Moving forward may also require revisiting favored concepts. Embracing a concept of Cold War politics, Nixon and Kissinger prioritized détente with the Soviet Union and neglected US allies. Kissinger’s efforts to rehabilitate core alliances nonetheless proved more durable than his initial efforts to stabilize the Cold War. Substituting a concept of “world order politics” for Cold War fixations, Carter set out to promote human rights and economic cooperation. He nonetheless ended up implementing the sharpest escalation in Cold War preparedness since Truman. Making effective foreign policy sometimes depends upon rethinking the concepts that guide it. Such concepts are, after all, derived from the past; they do not predict the future.

President Obama at West Point recently declared that “terrorism” is still “the most direct threat to America.” This has been the pattern of recent years; whether it is the pattern of years to come, only time will tell. New threats will also appear, as will new opportunities, but engaging them will depend upon perceiving them. Here, strategic concepts that prioritize particular kinds of challenges, such as terrorism, over other kinds of challenge, such as climate change, may be unhelpful. So too are the blanket prohibitions that axiomatic concepts often produce. Advancing US interests may very well depend upon mustering the flexibility to engage with terroristic groups, like the Taliban or Hamas, or with regimes, like Iran’s, that sponsor terrorism. Axiomatic approaches to foreign policy that reject all dialogue with terrorist organizations may narrow the field of vision. Americans, after all, did not go to China until Nixon did so.

If intellectual flexibility is a prerequisite for successful late presidential reinventions, political courage is another. While he believed that effective foreign policy depended upon domestic consensus, Kissinger strived throughout his career to insulate policy choice from the pressures of domestic politics. He persevered in defending Soviet-American détente not because it was popular but because he believed in it. Carter also put strategic purposes ahead of political expedience. Convinced that America’s dependence on foreign oil was a strategic liability, Carter decontrolled oil prices, allowing gasoline prices to rise sharply. The decision was unpopular, even mocked, but it paid strategic dividends in the mid-1980s, when falling world oil prices helped tip the Soviet Union into fiscal collapse. Obama, in contrast, appears readier to let opinion polls guide foreign policy. Withdrawing US forces from Iraq in 2011 and committing to withdraw US forces from Afghanistan during 2016 were both popular moves; their prudence remains less obvious. Still, the 22nd Amendment gives the President a real flexibility in foreign policy. Whether Obama bequeaths a strong foundation to his successor may depend on his willingness to embrace the political opportunity that he now inhabits for bold and decisive action.

Setbacks of the kind that the United States is experiencing in the present moment are not unprecedented. Americans in the 1970s fretted about the rise of Soviet power, and they recoiled as radical students stormed their embassy in Tehran. Yet policymakers devised ways out of the impasse, and they left the United States in a deceptively strong position at the decade’s end. Reinvention depended upon flexibility. Kissinger, Carter, and others understood that the United States, while the world’s leading superpower, was more the captive of its circumstances than the master of them. They were undogmatic, insofar as they turned to opportunities that had not been their priorities at the outset. They operated in the world as they found it and did not fixate upon the world as it might be. They scored singles and doubles, but they also hit the occasional home run. This is a standard that Obama has evoked; with luck, it will be a standard that he can fulfill, if he can muster the political courage to defy adverse politics and embrace the opportunities that the last 30 months of his presidency will present.

Daniel J. Sargent is an Assistant Professor of History at the University of California, Berkeley. He is the author of A Superpower Transformed: The Remaking of American Foreign Relations in the 1970s and the co-editor of The Shock of the Global: The International History of the 1970s.

Subscribe to only American history articles on the OUPblog via email or RSS.

The post Obama’s predicament in his final years as President appeared first on OUPblog.

Related Stories: Putting an end to war | Political apparatus of rape in India | The long, hard slog out of military occupation

A Q&A with Peipei Qiu on Chinese comfort women

Issues concerning Imperial Japan’s wartime “comfort women” have ignited international debates in the past two decades, and a number of personal accounts of “comfort women” have been published in English since the 1990s. Until recently, however, there has been a notable lack of information about the women drafted from Mainland China. Chinese Comfort Women is the first book in English to record the first-hand experiences of twelve Chinese women who were forced into sexual slavery by Japanese Imperial forces during the Asia-Pacific War (1931-1945). Here, author and translator Peipei Qiu (who wrote the book in collaboration with Su Zhiliang and Chen Lifei) answers some questions about her new book.

What is one of the most surprising facts about “comfort women” that you found during your research?

One of the shocking facts revealed in this book is that the scope of military sexual slavery in the war was much larger than previously known. Korean and Japanese researchers, based on the information available to them, had estimated that the Japanese military had detained between 30,000 and 200,000 women to be sex slaves during the war. These early estimations, however, don’t accurately reflect the large number of Chinese women enslaved. Investigations conducted by Chinese researchers suggest a much higher number. My collaborating researcher Su Zhiliang, for example, estimates that between 1931 and 1945 approximately 400,000 women were forced to become military “comfort women,” and that at least half of them were drafted from Mainland China.

Although it is not possible to obtain accurate statistics on the total number of kidnappings, documented cases suggest shockingly large numbers. For instance, around the time of the Nanjing Massacre, the Japanese army abducted tens of thousands of women from Nanjing and the surrounding areas, including over 2,000 women from Suzhou, 3,000 from Wuxi, and 20,000 from Hangzhou. These blatant kidnappings continued throughout the entire war, the youngest abductee being only nine years old.

Chinese and Malayan girls forcibly taken from Penang by the Japanese to work as ‘comfort girls’ for the troops. The Allied Reoccupation of the Andaman Islands, 1945. Lemon A E (Sergeant), No 9 Army Film & Photographic Unit. War Office, Central Office of Information and American Second World War Official Collection. IWM Non Commercial Licence. Imperial War Museums.

What impressed you most deeply when you heard the stories of these women?

What struck me most deeply were the “comfort women’s” horrendous sufferings. I felt strongly that their stories must be told to the world. Many of the women were teenagers when the Japanese Imperial Forces kidnapped them. They were given the minimum amount of food necessary to keep them alive and were subjected to multiple rapes each day. Those who resisted were beaten or killed, and those who attempted to escape would be punished with anything from torture to decapitation, and the punishment often included not only the woman but also her family members.

The brutal torture was not only physical. These women confined in the so-called “comfort stations” lived in constant fear and agony, not knowing how long they would have to endure or what would happen to them the following day, worrying about what their families were going through in trying to save them, and witnessing other women being tortured and killed. During research and writing I often could not hold back tears, and their stories remain heavy and vivid in my mind.

I was also deeply impressed by their resilience and faith in humanity. These women were brutally tortured and exploited by the Japanese imperial forces during the war, and when the war ended, members of their own patriarchal society discarded them as defiled and useless. Many of them were ignored, treated as collaborators with the enemy, or even persecuted. Yet what the survivors remember and recount is not only suffering and anger but also acts of humanity – no matter how little they themselves have witnessed. Wan Aihua, though gang-raped multiple times and nearly beaten to death by Japanese troops, never forgot an army interpreter who saved her from a Japanese officer’s sword. She told us, “I didn’t know if the interpreter was Japanese, but I believe there were kind people in the Japanese troops, just as there are today, when many Japanese people support our fight for justice.”

What motivated you to write this book?

In part, I was shocked by the lack of information available. In this day and age, it feels like every fact or story to be known is easily at our fingertips. However, in this instance, it was not the case. Even after the rise of the “comfort women” redress movement, most of the focus was on the “comfort women” of Japan and its colonies, and little was known about Chinese “comfort women” outside of China. This created a serious obstacle to understanding the history of the Asia-Pacific war and to the study of the entire “comfort women” issue. Without a thorough understanding of Chinese “comfort women’s” experiences, an accurate explication of the scope and nature of that system cannot be achieved.

In the past two decades, how to understand what happened to “comfort women” has become an international controversy. Some Japanese politicians and activists insist that “comfort women” were prostitutes making money at the frontlines and that there is no evidence of direct involvement by the Japanese military and government. They also say that telling the stories of “comfort women” disgraces the Japanese people.

One of my main purposes in writing about Chinese “comfort women” is to help achieve a transnational understanding of their sufferings. To understand what happened to the “comfort women,” we must transcend the boundaries of the nation-state. I hope to demonstrate that fundamentally confronting the tragedy of “comfort women” is not about politics, nor is it about national interests; it is about human life. Dismissing individual sufferings in the name of national honor is not only wrong but also dangerous: it is a ploy that nation-states have used, and continue to use, to drag people into war, to deprive them of their basic rights, and to abuse them.

Peipei Qiu is the author and translator of Chinese Comfort Women: Testimonies from Imperial Japan’s Sex Slaves, which she wrote in collaboration with Su Zhiliang and Chen Lifei. She is currently professor of Chinese and Japanese on the Louise Boyd Dale and Alfred Lichtenstein Chair and director of the Asian Studies Program at Vassar College.


The post A Q&A with Peipei Qiu on Chinese comfort women appeared first on OUPblog.

