Josh Clark's Blog, page 16

June 28, 2017

Google Will No Longer Scan Gmail for Ad Targeting

In a bit of unexpected good news, Google announced last week that it will stop reading Gmail messages for the purpose of showing ads tailored to the content.

The New York Times reminds us, however, that Google will continue to read your messages for other purposes:

It will continue to scan Gmail to screen for potential spam or phishing attacks as well as offering suggestions for automated replies to email.

So the bots will continue to comb your email missives, but at least they’re seemingly doing this in the service of features that serve the user (instead of advertisers).

As always, the tricky trade-off of machine-learning services is that they only know what we feed them. But what are they eating? It’s us. How much are we willing to reveal, and in exchange for what services?

NYT | Google Will No Longer Scan Gmail for Ad Targeting

A Working Pattern Library

The charming and masterful Ethan Marcotte emphasizes the importance of crafting design systems to fit the way an organization works and behaves:

To put a finer point on it, a pattern library’s value to an organization is tied directly to how much – and how easily – it’s used. That’s why I usually start by talking with clients about the intended audiences for their pattern library. Doing so helps us better understand who’ll access the pattern library, why they’ll do so, and how we can design those points of contact.

While a basic pattern library might consist simply of a collection of components, an essential pattern library is presented in a way that makes it not just the “right” thing to use but the easiest. For what it’s worth, this is really hard to do well; as we’ve found in our own projects, there’s some seriously demanding UX/research work behind tailoring a design system to the people who use it.

Ethan Marcotte | A Working Pattern Library

On Design Systems: Dan Mall of Superfriendly

UXPin interviewed my friend and collaborator Dan Mall to tap his giant brain for insights about building design systems. The 47:15 interview is full of gems, and you should watch or read the whole thing. Meanwhile, here are a few highlights:

I don’t think that a design system should remove design from your organization. A lot of clients think like, “Oh, if we had a design system, we don’t need to design anymore, right? ’Cause all the decisions would be made.” And I think that I’ve never seen that actually happen. Design systems should just help you design better. So you’re still gonna have to go through the process of design, but a design system should be a good tool in your arsenal for you to be able to design better. And eliminate some of the useless decisions that you might have to make otherwise.

I love this. Design systems are at their best when they simply gather the best practices of the organization: the settled solutions. The most effective design systems are simply containers of institutional knowledge: “this is what good design looks like in our company.” Instead of building (and rebuilding and rebuilding) the same pattern over and over again, designers and developers can rely on the settled solution. Instead of designing a card pattern for the 15th time, it’s already done. Product teams can put their time and smarts into solving new problems. Put another way: the most exciting design systems are boring.

Dan also talks about the importance of making the system fit the workflow of the people who use it. In our projects together, we’ve found that deep user research is required to get it right:

I’ve worked with organizations that the design systems are for purely developers. That’s it. It’s not for designers. And then in other organizations, a design system has to work equally well for designers and developers. And so just those two examples, those two design systems have to be drastically different from each other ’cause they need to support different use cases and different people.

If that kind of user-centered research sounds like product work, that’s because it is:

It is a product and you have to treat it like a product. It grows like a product, it gets used like a product. And for people to think like, “Yeah we’ll just create it once and then it’ll sit there.” They’re not realizing the value of it and they may not actually get value from it if they’re not treating it like a thing that also needs to grow in time and adapt over time. … The reason that the design system is so hard to get off the ground is because it requires organizational change. It requires people to have different mindsets about how they’re going to work.

UXPin | On Design Systems: Dan Mall of Superfriendly

The Future of AI Needs To Have More People in It

If smart machines and AI agents are meant to support human goals, how might we help them better understand human needs and behaviors? UC Berkeley professor and AI scientist Anca Dragan suggests we need a more human-centered approach in our algorithms:

Though we’re building these agents to help and support humans, we haven’t been very good at telling these agents how humans actually factor in. We make them treat people like any other part of the world. For instance, autonomous cars treat pedestrians, human-driven vehicles, rolling balls, and plastic bags blowing down the street as moving obstacles to be avoided. But people are not just a regular part of the world. They are people! And as people (unlike balls or plastic bags), they act according to decisions that they make. AI agents need to explicitly understand and account for these decisions in order for them to actually do well. […]

How do we tell a robot what it should strive to achieve? As researchers, we assume we’ll just be able to write a suitable reward function for a given problem. This leads to unexpected side effects, though, as the agent gets better at optimizing for the reward function, especially if the reward function doesn’t fully account for the needs of the people the robot is helping. What we really want is for these agents to optimize for whatever is best for people. To do this, we can’t have a single AI researcher designate a reward function ahead of time and take that for granted. Instead, the agent needs to work interactively with people to figure out what the right reward function is. […]

Supporting people is not an after-fix for AI, it’s the goal.

To do this well, I believe this next generation should be more diverse than the current one. I actually wonder to what extent it was the lack of diversity in mindsets and backgrounds that got us on a non-human-centered track for AI in the first place.

AI4ALL | The Future of AI Needs To Have More People in It

Melinda Gates and Fei-Fei Li Want to Liberate AI from “Guys With Hoodies”

In a wonderful interview with Backchannel, Google Cloud’s chief AI scientist Fei-Fei Li and Microsoft alum and philanthropist Melinda Gates press for more diversity in the artificial intelligence field. Among other projects, Li and Gates have launched AI4ALL, an educational nonprofit working to increase diversity and inclusion in artificial intelligence.

Fei-Fei Li: As an educator, as a woman, as a woman of color, as a mother, I’m increasingly worried. AI is about to make the biggest changes to humanity, and we’re missing a whole generation of diverse technologists and leaders.

Melinda Gates: If we don’t get women and people of color at the table – real technologists doing the real work – we will bias systems. Trying to reverse that a decade or two from now will be so much more difficult, if not close to impossible. This is the time to get women and diverse voices in so that we build it properly, right?

This reminds me, too, of the call of anthropologist Genevieve Bell to look beyond even technologists to craft this emerging world of machine learning. “If we’re talking about a technology that is as potentially pervasive as this one and as potentially close to us as human beings,” Bell said, “I want more philosophers and psychologists and poets and artists and politicians and anthropologists and social scientists and critics of art.”

We need every perspective we can get. A hurdle: fancy new technologies often come with the damaging misperception that they’re accessible only to an elite few. I love how Gates simply dismisses that impression:

You can learn AI. And you can learn how to be part of the industry. Go find somebody who can explain things to you. If you’re at all interested, lean in and find somebody who can teach you. I think sometimes when you hear a big technologist talking about AI, you think, “Oh, only he could do it.” No. Everybody can be part of it.

It’s true. Get after it. (If you’re a designer wondering how you fit in, I have some ideas about that: Design in the Era of the Algorithm.)

Backchannel | Melinda Gates and Fei-Fei Li Want to Liberate AI from “Guys With Hoodies”

June 20, 2017

Social Cooling

Social Cooling is Tijmen Schep’s term to describe the chilling effect of the reputation/surveillance economy on expression and exploration of ideas:

Social Cooling describes the long-term negative side effects of living in a reputation economy:

A culture of conformity. Have you ever hesitated to click on a link because you thought your visit might be logged, and it could look bad?

A culture of risk-aversion. Rating systems can create unwanted incentives, and increase pressure to conform to a bureaucratic average.

Increased social rigidity. Digital reputation systems are limiting our ability and our will to protest injustice.

Schep suggests that all of this raises three big questions – philosophical, economic, and cultural:

Are we becoming more well behaved, but less human?

Are we undermining our creative economy?

Will this impact our ability to evolve as a society?

Social Cooling

June 19, 2017

The Rise of Artificial Intelligence: How AI Will Affect UX Design

Adobe profiled and interviewed Big Medium’s Josh Clark about the opportunities and challenges for UX designers in a world of machine learning and artificial intelligence (AI). The design of data-driven interfaces is just as important as the underlying algorithms, Josh told Sheena Lyonnais, who wrote:

With an unprecedented amount of data being collected and algorithms driving many of our interactions, Clark says the challenge of what to do with the data, how to present it, and how to use it to shape user behavior is now inherently a design question for today’s user experience designers.

“Typically digital designers have been creating interfaces for flows and for content for which we have complete control, and now we are gradually and slightly uncomfortably ceding some of that control to algorithms and digital models that we don’t quite understand – and that don’t quite understand us,” he said.

“I think the really critical question we’re dealing with now in this early stage of artificial intelligence is how do we design the interfaces in ways that set appropriate expectations and channel user behaviors in ways that match the capabilities of the system?” Clark said. “When expectations are wrong or we ask the machines to do something they’re just not capable of, that’s frustrating, and sometimes damaging.”

Josh shared several insights in the interview:

When machines generate the data and the interaction, the UX designer’s role is to anticipate and cushion the occasional weirdness, ambiguity and error that will emerge.

Gathering good training data is a matter of good research. UX research techniques have to ramp up to match the unprecedented scale machine learning requires.

Adding personality to data systems is the third rail of UX design. Too much may diminish the efficiency and utility that people expect of those systems.

As data systems begin to understand visual symbols, AI could start to help designers rough out best-practice interfaces more quickly.

AI is unlikely to replace the most creative parts of design and will instead be a useful “companion species” for more tactical production work.

Designing great AI experiences requires both optimistic enthusiasm and skeptical critique as we integrate machine learning into the most fundamental parts of our society and culture.

Read the full interview at Adobe. For more, check out Josh’s long-form essay on the emerging role of design and AI: Design in the Era of the Algorithm.

Is your company wrestling with the UX of bots, data-generated interfaces, and artificial intelligence? Big Medium can help, with workshops, executive sessions, or a full-blown design engagement. Get in touch.

June 17, 2017

How Germany’s Otto Uses Artificial Intelligence

Machine learning is trying to one-up just-in-time inventory with what can only be called before-it’s-time inventory. The Economist reports that German online merchant Otto is using algorithms to predict what you’ll order a week before you order it, reducing surplus stock and speeding deliveries:

A deep-learning algorithm, which was originally designed for particle-physics experiments at the CERN laboratory in Geneva, does the heavy lifting. It analyses around 3bn past transactions and 200 variables (such as past sales, searches on Otto’s site and weather information) to predict what customers will buy a week before they order.

The AI system has proved so reliable – it predicts with 90% accuracy what will be sold within 30 days – that Otto allows it automatically to purchase around 200,000 items a month from third-party brands with no human intervention. It would be impossible for a person to scrutinise the variety of products, colours and sizes that the machine orders. Online retailing is a natural place for machine-learning technology, notes Nathan Benaich, an investor in AI.

Overall, the surplus stock that Otto must hold has declined by a fifth. The new AI system has reduced product returns by more than 2m items a year. Customers get their items sooner, which improves retention over time, and the technology also benefits the environment, because fewer packages get dispatched to begin with, or sent back.

The Economist | Automatic for the People: How Germany’s Otto Uses Artificial Intelligence

The Surprising Repercussions of Making AI Assistants Sound Human

There’s much effort afoot to make the bots sound less… robotic. Amazon recently enhanced its Speech Synthesis Markup Language to give Alexa a more human range of expression. SSML now lets Alexa whisper, pause, bleep expletives, and vary the speed, volume, emphasis, and pitch of its speech.

This all comes on the heels of Amazon’s February release of so-called speechcons (like emoticons, get it?) meant to add some color to Alexa’s speech. These are phrases like “zoinks,” “yowza,” “read ’em and weep,” “oh brother,” and even “neener neener,” all pre-rendered with maximum inflection. (Still waiting on “whaboom” here.)
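For a sense of what that markup looks like in practice, here is a minimal Python sketch that assembles an SSML response by hand. The helper names (`whisper`, `pause`, `bleep`, `speechcon`, `prosody`, `speak`) are invented for illustration and are not part of any Amazon SDK; the tags they emit follow Amazon's published SSML reference as I understand it, so check the current documentation before relying on them.

```python
from xml.sax.saxutils import escape


def whisper(text: str) -> str:
    """Wrap text in the Amazon effect tag that makes Alexa whisper."""
    return f'<amazon:effect name="whispered">{escape(text)}</amazon:effect>'


def pause(milliseconds: int) -> str:
    """Insert a silent break of the given length."""
    return f'<break time="{milliseconds}ms"/>'


def bleep(text: str) -> str:
    """Mark text as an expletive so it is bleeped out."""
    return f'<say-as interpret-as="expletive">{escape(text)}</say-as>'


def speechcon(phrase: str) -> str:
    """Mark a phrase as an interjection (a "speechcon")."""
    return f'<say-as interpret-as="interjection">{escape(phrase)}</say-as>'


def prosody(text: str, rate: str = "medium", pitch: str = "medium",
            volume: str = "medium") -> str:
    """Vary the speed, pitch, and volume of a passage."""
    return (f'<prosody rate="{rate}" pitch="{pitch}" volume="{volume}">'
            f'{escape(text)}</prosody>')


def speak(*parts: str) -> str:
    """Join already-marked-up fragments inside the root <speak> element."""
    return "<speak>" + "".join(parts) + "</speak>"


if __name__ == "__main__":
    print(speak(
        speechcon("zoinks"),
        pause(300),
        whisper("I found your keys."),
        prosody("They were in the fridge.", rate="slow", pitch="low"),
    ))
```

The point of the sketch is how little stands between a plain reply and a "personality": each flourish is one more tag wrapped around the same words.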

The effort is intended to make Alexa feel less transactional and, well, more human. Writing for Wired, however, Elizabeth Stinson considers whether human personality is really what we want from our bots, or whether it’s just unhelpful misdirection.

“If Alexa starts saying things like hmm and well, you’re going to say things like that back to her,” says Alan Black, a computer scientist at Carnegie Mellon who helped pioneer the use of speech synthesis markup tags in the 1990s. Humans tend to mimic conversational styles; make a digital assistant too casual, and people will reciprocate. “The cost of that is the assistant might not recognize what the user’s saying,” Black says.

A voice assistant’s personality improving at the expense of its function is a tradeoff that user interface designers increasingly will wrestle with. “Do we want a personality to talk to or do we want a utility to give us information? I think in a lot of cases we want a utility to give us information,” says John Jones, who designs chatbots at the global design consultancy Fjord. Just because Alexa can drop colloquialisms and pop culture references doesn’t mean it should. Sometimes you simply want efficiency. A digital assistant should meet a direct command with a short reply, or perhaps silence – not booyah! (Another speechcon Amazon added.)

Personality and utility aren’t mutually exclusive, though. You’ve probably heard the design maxim form should follow function. Alexa has no physical form to speak of, but its purpose should inform its persona. But the comprehension skills of digital assistants remain too rudimentary to bridge these two ideals. “If the speech is very humanlike, it might lead users to think that all of the other aspects of the technology are very good as well,” says Michael McTear, coauthor of The Conversational Interface. The wider the gap between how an assistant sounds and what it can do, the greater the distance between its abilities and what users expect from it.

When designing within the constraints of any system, the goal should be to channel user expectations and behavior to match the actual capabilities of the system. The risk of adding too much personality is that it will create an expectation/behavior mismatch. Zoinks!

Wired | The Surprising Repercussions of Making AI Assistants Sound Human

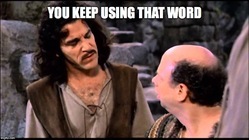

MVP: I Do Not Think That Word Means What You Think It Means

Somewhere along the way, the phrase “minimum viable product” (MVP) got corrupted to mean “what’s the crummiest thing we can get away with taking to market,” usually in order to be there first or beat some other arbitrary deadline. And wow, that’s too bad, because the MVP concept is actually super-useful in refining designs (and reducing risk) as part of an overall product roadmap.

Design is all hypothesis. You have ideas and assumptions about how a product can meet a goal, and you execute against those assumptions. In good hands, those assumptions are backed by research and experience, but even then, the design hypothesis won’t be proven out until it’s actually put to the test with real users. The best thing you can do is to find ways to prove/disprove that hypothesis as early as possible, and hopefully with a minimum of expense or effort.

As my pal Josh Seiden likes to ask: “‘What’s the smallest thing I can do or make to test this hypothesis?’ The answer to this question is your minimum viable product, or MVP.” The MVP process, in other words, is not about the take-to-market product but rather low-fidelity prototypes that let you test assumptions and make adjustments. Depending on your challenge, a minimum viable product could consist of prototypes as basic as:

A placeholder landing page describing the product (will people buy this?)

An SMS text interaction (would a bot be useful for this?)

Price experiments (what will people pay?)

Manual/analog service, before building a fully automated system (will the service work to meet a real demand?)

All to say: the MVP is not the minimum thing you need for sales, but rather the minimum thing you need for learning. Iteration and revision are implicit in the whole concept. The MVP should be only a stepping stone to the final product, yet development too often stops at the MVP as an end in itself.

Lars Damgaard recently shared his thoughts about why so many organizations embrace the term MVP without embracing its iterative process:

This is not how most large corporate organisations work. Like it or not, a lot of corporate organisations work in waterfalls with upfront feature specs, fully-fledged design and harsh deadlines. Also known as building a spaceship.

What often happens in these situations is that the spaceship is heavily reduced when the deadline approaches and a flawed product goes live with no budget for further iterations, or for establishing a learning cycle. Or alternatively it’s implemented in phases where the first launch happens to be whatever happened to be finished by the deadline. Both of which are also known as pissing the team off. If this is the case, sprinkling some half-baked MVP on top of that process won’t get you anywhere. On the contrary, it might give a false sense of control when essentially the entire organisational structure and product development processes are what need to be adjusted.

And:

What needs to be in place though are clear measurable objectives and KPIs and a clear definition of viability, which of course depends on the business plan.

It’s nuts to me how many design projects don’t have clearly stated goals and design principles at the outset. In our projects, our first step is to establish clear consensus about what the main goals for the business are, the user needs it must address, the assumptions we have about how to achieve them, and how we’ll test and correct for those assumptions. That’s the foundational work that makes the rest of the project move quickly and reliably.

Admitting that you have only a hypothesis and not (yet) a solution is a scary and vulnerable thing to do. But it’s not nearly as scary as baking that “solution” into a product before testing the hypothesis behind it. That’s the real and legitimate value of the MVP.