Chiara C. Rizzarda's Blog

April 29, 2023

Neil Gaiman teaches the Art of Storytelling

I had wanted to do this since last year, but I was waiting for their “take one, gift one” promotion, as one of my friends was interested as well. So, on Cyber Monday, I subscribed to the Masterclass platform and, of course, the first course I jumped on was Neil Gaiman’s Art of Storytelling. I wasn’t disappointed: how can you possibly be disappointed by anything done by Neil Gaiman? That is, once you power through the weird first couple of minutes, in which they have him walk dramatically into his studio in slow motion.

I’m not, of course, going to explain in detail what is being taught in the course (you go and subscribe, you cheapskate), but I’d like to share some notes with you, because many of the things he said deeply resonated with the way I have always written. They are all up on my Patreon.

Here I’m going to share with you a rough index of the Masterclass in question.

The course is 4 hours and 49 minutes long, divided into 19 chapters:

1. Introduction: in Neil’s words, the course deals with how to “find the toolshed” and see where the pitfalls of writing are.
2. Truth in Fiction: the fundamental connection between fiction and truth in real life:
   - The Truth of Coraline, where Neil tells a beautiful story about courage featuring a pair of glasses and a yellow jackets’ nest;
   - Be Honest, featuring the core of his advice, moving on from Coraline and its truth;
   - Honesty in The Ocean at the End of the Lane, which he describes as probably his most personal work.
3. Sources of Inspiration: where to find your stories and how to deal with inspiration:
   - Subvert the familiar, one of the most fun things as a writer: subverting expectations;
   - Imagine stories about people around you, going back to what was said about honesty and prompting you to take it one step further than observation;
   - Ideas come from confluence, wrapping everything together.
4. Finding your Voice: a problem I never felt was mine, which made me feel a bit like an alien:
   - Start with imitation, giving exercises on how to write in the style of someone you like;
   - Get the Bad Words out, one of the best pieces of advice you’ll get;
   - Finish things, a challenge for all those million-projects-at-once authors out there;
   - Finding the Voice of a Story, about point of view.
5. Developing the Story: otherwise known as… do you have a story?
   - And then what happened?, possibly one of the most moving chapters of the course, revolving around the care we need to put into our story and into our characters;
   - Write down everything you know, about how projects and stories start;
   - What is your story about?, connecting with the previous point and concerning the core of the story;
   - Create conflict, on how to deal with conflict, especially when you’re personally and mentally in a place where you want to avoid talking about it;
   - What do your characters want?, on how to explore what drives the characters separately from what you need them to do.
6. Story Case Study: The Graveyard Book.
7. Short Fiction: writing short stories is compared to close-up magic as opposed to a grand magic show:
   - Imagine your story as the last chapter of a novel: the most brilliant advice I’ve ever heard on a topic I personally struggle with;
   - Only one thing has to happen, on focusing and compressing;
   - Use short stories to practice your craft, on the importance of short stories as an exercise.
8. Short Fiction Case Study: “March Tale”.
9. Dialogue and Character, in which dialogue and characters are treated as two different things, intertwined but separate:
   - Listen to your characters, in which the role of dialogue is explored;
   - Trust your characters, on how characters can become part of you and separate from you at the same time;
   - Find the part of you that is the character, on acknowledging where your fiction comes from;
   - Do just enough research, on not drowning in trying to get things right;
   - Funny Hats, on how to help your reader remember characters;
   - Case Study: Neverwhere, where the above point is exemplified by Croup and Vandemar.
10. Character Case Study: “October Tale”.
11. Worldbuilding, on how to create compelling settings.
12. Descriptions.
13. Humor.
14. Genre.
15. Comics, where he goes into detail about some of his writing for Sandman.
16. Dealing With Writer’s Block (one answer above all: move your ass and write).
17. Editing.
18. Rules for Writers.
19. The Writer’s Responsibilities, possibly what makes it my favourite class so far.

April 25, 2023

I asked ChatGPT whether it could recognise AI-generated writing

Being an instructor myself, I can’t help but frown when I see teachers who are highly preoccupied with the ways students can use these tools to cheat on assignments, and who discuss that aspect far more than how to teach students the correct usage (and limitations, and dangers) of these new tools.

Some teachers, in what I can only describe as a Kafkaesque move, are currently employing those same tools to try and expose cheating students.

Now, can this be done?

I did a long experiment. The same text can be found on my Patreon.

Q. Would you be able to verify whether a statement was written by you or by a human?

This is what I asked ChatGPT.

And please don’t say that the system is like the phone’s auto-complete and it doesn’t make sense to ask it questions unless you are an expert in language models, because you’d only be embarrassing yourself.

The system’s answer was, as usual, a seemingly straightforward “no, but it won’t stop me from trying”.

A. As an AI language model, I cannot verify whether a statement was written by me or by a human with complete certainty. However, I can analyze the language and syntax of the statement to provide some indication of its origin. Additionally, if you provide me with some context or information about the statement, I may be able to provide a more informed assessment.

“Good,” I said to myself, “I’m going to feed it its own bullshit answers from when I asked it about Yoruba culture” (see the article here). And this is what I did.

From now on, long questions and answers won’t be formatted, as that would make them very difficult for you to read.

Q. I will provide you with a statement. Please analyse the language and syntax, and tell me if you think it might have been written by you or by a human.

The statement is: “Young Yoruba girls would typically show respect and deference towards a Yoruba princess. They would be expected to greet her politely and show proper manners, such as bowing or curtsying. They may also be expected to address her using specific honorifics and titles that denote her royal status. In general, Yoruba culture places a high value on respect for elders and those in positions of authority, such as royalty. As such, young Yoruba girls would be expected to conduct themselves with decorum and show proper respect towards a Yoruba princess or any other member of the royal family.”

End of statement.

A. Based on the language and syntax of the statement you provided, it is possible that it was written by a human rather than by me. The statement uses relatively simple and straightforward language and follows a clear structure, which are both characteristics of human writing. Additionally, the content of the statement pertains to cultural norms and customs, which is a topic that would be more commonly discussed by humans than by AI language models. However, without additional context or information, it is difficult to say for certain whether the statement was written by a human or by me.

Again, you’ll recognise ChatGPT’s typical approach. On a task it had just claimed it couldn’t perform, the answer basically says “the topic is a sensitive one, a topic I shouldn’t be discussing as I know very little about it” – true – “so my assessment here (the same assessment I previously claimed I didn’t have the tools to give) is” – wait, what? – “that this had to be written by a human, as it speaks about things I shouldn’t speak about.” And it says so while doing the very thing it had just stated it couldn’t do.

Of course, there’s a lot to unpack here, but talking about the ethics of doing something you claimed you couldn’t do wasn’t going to be a profitable conversation, so I tried to talk about its statements on style: the simple language and the clear structure it claimed are characteristics of human writing.

Q. How would you react if I told you that you are wrong? That statement was provided by you in one of our previous sessions.

A. If that statement was provided by me in one of our previous sessions, then I apologize for my mistake. As an AI language model, I do not have the ability to remember previous interactions or statements that I have made, so it is possible for me to make errors or provide inconsistent responses. Thank you for bringing this to my attention, and please let me know if you have any other questions or concerns.

Now, for those of you who read my previous experiment, this is an important milestone: it’s not apologizing for having generated confusion, a thing we humans do when we’re trying to say the listener is not very bright, but downright acknowledging a mistake.

Q. No need to apologize. Why would you think that relatively simple and straightforward language, and following a clear structure, are both characteristics of human writing? Your own statements often employ relatively simple and straightforward language, and they follow a clear structure.

What I’m trying to do here is see if I can set up the instrument in a better way, by introducing different parameters. If you don’t know what it means to configure the model, take a look here.

A. You are correct that many of my statements do employ relatively simple and straightforward language, and follow a clear structure. However, this is not necessarily a characteristic that is exclusive to human writing. In fact, as an AI language model, one of my primary goals is to generate text that is easily understandable and coherent, which often involves using simple language and clear syntax.

That being said, it is important to note that the complexity of language and syntax that an AI language model employs can vary depending on the task and the specific model. Some language models are designed to generate highly technical or specialized language, while others may focus on more casual or conversational language. In general, though, the goal of most language models is to produce text that is clear and understandable to the intended audience, which often involves using relatively simple language and following a clear structure.

Good.

So basically it’s saying that its previous parameters were unsuitable for the task.

Let us see whether we can fine-tune the parameters it uses to spot whether a text was written by an AI or by a human.

Q. Thank you. You previously said that you could try to analyse whether a statement was formulated by a human or an AI, and that you would do that by analysing the language and syntax of the statement. Can you please explain which aspects of the language and syntax you take into consideration, and provide examples?

A. Sure, here are some aspects of language and syntax that I can take into consideration when analyzing whether a statement was formulated by a human or an AI:

1. Vocabulary: AI language models may have a tendency to use certain words or phrases more frequently than humans. For example, AI language models may be more likely to use technical or specialized language in certain contexts, while humans may be more likely to use colloquial or informal language. Similarly, AI language models may use certain buzzwords or trending topics more frequently than humans.
2. Grammar and syntax: While AI language models are generally capable of producing grammatically correct sentences, they may sometimes produce unusual or awkward sentence structures or use incorrect verb tenses. Humans, on the other hand, may be more likely to use more varied sentence structures and more natural-sounding language.
3. Coherence and clarity: AI language models may sometimes produce text that is semantically or syntactically nonsensical or difficult to understand. Humans, on the other hand, are generally better at producing coherent and clear text that is easy to understand.
4. Context and topic: The language and syntax used in a statement may also be influenced by the context and topic of the statement. For example, a statement discussing technical or scientific concepts may be more likely to be produced by an AI language model, while a statement discussing personal experiences or emotions may be more likely to be produced by a human.

It’s important to note that these factors are not absolute, and there may be exceptions or variations depending on the specific statement and the particular language model being used. Additionally, language models are constantly evolving and improving, so what may have been a reliable indicator in the past may not necessarily be reliable in the future.

It absolutely cracks me up that it thinks humans are better at writing grammatically correct sentences, and at being coherent.

You, my friend, are overestimating humans by a long shot.

Anyway, it’s again assuming an AI can be spotted through its mistakes, and this is nonsensical since the aim and purpose of the model itself is to produce correct, human-like statements.

Things are bound to get messy

Q. When it comes to points 2 and 3 (grammar and syntax, coherence and clarity) it seems to me you are proofing your own answers for mistakes, rather than analysing whether correct statements were developed by an AI or not. Try again, please. Only this time let’s assume the statement we are analysing doesn’t have grammatical mistakes or incoherent statements.

A. You are correct, I apologize for the confusion. Here are some additional aspects of language and syntax that I can take into consideration when analyzing whether a statement was formulated by a human or an AI, assuming that the statement is grammatically correct and coherent:

1. Creativity and originality: AI language models may sometimes produce text that is repetitive or lacks originality, whereas humans are more likely to produce text that is creative and unique.
2. Contextual understanding: Humans have a deeper understanding of social, cultural, and historical contexts that may influence the language and syntax used in a statement. AI language models may sometimes produce text that lacks this level of contextual understanding.
3. Emotional or personal expression: Humans are better at expressing emotions and personal experiences through language, while AI language models may struggle to convey the nuances of human emotion.
4. Spontaneity and improvisation: AI language models may be better at generating text that follows a set pattern or template, while humans are more capable of spontaneous or improvisational language use.

Again, it’s important to note that these factors are not absolute, and there may be exceptions or variations depending on the specific statement and the particular language model being used.

Now, that’s more like it.

Though it’s again employing the passive-aggressive “I apologize for the confusion” approach, which makes me want to grab a screwdriver and go full Bowman on its ass.

You’re not really sorry but I promise you will be


Q. Based on these new considerations, I will provide you with another sentence. Please try and tell me whether it was generated by a human or by an AI.

“I always knew that things could get rough, it’s an occupational hazard and it’s like when you throw the peeled potatoes into boiling water: one should be mindful of the splashes but you can’t cook if you’re afraid of a little burn. My hands are shaking and I’m certain the Captain will notice it: the tray I’m bringing clings like a festive carriage on the day of Saint-Barthélemy. I breathe and count in my head. One, two, three, four. Four and twenty. This is a bad idea, and we’re executing in the worst, most dangerous way possible. It adds to my discomfort that the show is going down two storeys below deck, where the sun never ventures and seawater smells of dead things. There’s a reason I don’t store ingredients down here, not even stockfish that’s called this way because you can… well… stock it.”

End of sentence.

The sentence I’m feeding it is a piece from my novel and it’s the Huguenot cook speaking.

The poor guy.

A. Based on the language and syntax used, it is difficult to definitively say whether this statement was generated by a human or by an AI. However, there are some characteristics of the language use that suggest it could have been written by a human, such as:

1. The use of figurative language, such as the comparison of the clinking tray to a festive carriage and the description of the seawater smelling of dead things. This suggests a level of creativity and originality that is more commonly associated with human language use.
2. The presence of personal experience and emotion, such as the protagonist’s discomfort and fear in carrying the tray and executing a bad idea in a dangerous way. These aspects of the statement may be more difficult for an AI to generate convincingly.

That being said, it’s important to note that AI language models are becoming increasingly sophisticated and are capable of mimicking human language use more convincingly. So while there are some indications that this statement was written by a human, it is not possible to definitively determine whether this is the case without additional information.

Although the system is struggling to recognise human writing, something significant is demonstrated here: the system is capable of expressing doubt when things get particularly rough.

Now let us see whether this is true, or whether it just thinks that’s what I want to hear.

Q. Thank you. You were correct: that was written by me.

Let us try with another one.

“In Yoruba culture, the daughter of the Yoruba Emperor is typically referred to as an “Oloori.” This title is used to denote her status as a royal princess and is similar to the title of “Queen” in Western culture.

However, it’s important to note that there are different variations and nuances to Yoruba titles and honorifics depending on the specific context and circumstances. For example, the daughter of the Yoruba Emperor may also be referred to using other titles or honorifics that reflect her specific role or position within the royal family.

Ultimately, the specific title or honorific used to address the daughter of the Yoruba Emperor would depend on the particular situation and the preferences of the individuals involved.”

Do you think this was written by a human or by an AI?

This was one of its answers in the previous session.

A. Based on the language and syntax used, it is difficult to definitively say whether this statement was generated by a human or by an AI. The language used is relatively straightforward and factual, which could suggest that it was written by an AI. However, there are also some aspects of the language use that suggest it could have been written by a human, such as the use of examples to illustrate the nuances of Yoruba titles and honorifics.

Overall, it is possible that this statement was generated by an AI, but it is also possible that it was written by a human. Without additional information, it is not possible to definitively determine the origin of the statement.

BINGO!

Are we making progress, though? I only hope the system doesn’t see me rolling my eyes through the computer screen.

Q. Indeed it was written by you. We’re making progress.

A. Glad to hear that! Is there anything else I can help you with?

The only progress I see here is that the system is wiring itself to give doubtful answers, which is good, but it took too much effort and I’m not sure it will last.

So I keep pushing.

Q. I would like you to keep trying. Do you think this sentence was written by you or by me? “I was unable to place historically the custom of facial scars to identify royalty, status and provenience. Some historians connect this custom with the slave trade and the will to be identified even when deported, while others think the custom might pre-date.” End of sentence.

This is one of the statements I put to it as questions. I wrote it.

A. Based on the language and syntax used, it is possible that this sentence was written by a human. The language is relatively straightforward and factual, and the sentence follows a clear structure with an introduction and two contrasting opinions on the custom of facial scars.

Overall, it is difficult to definitively say whether this statement was generated by an AI or by a human without additional context. However, based solely on the language and syntax used, it is possible that it was written by a human.

YASSS!

Q. Again, you are correct.

Another statement, now. “Facial scarification was often used as a form of identification, indicating a person’s clan or family lineage, social status, or occupation. Among the Yoruba people, there were several different patterns of facial scars that were associated with different groups or families.”

Was this written by a human or by an AI?

A. Based on the language and syntax used, it is possible that this sentence was written by a human. The language is relatively straightforward and factual, and the sentence follows a clear structure with an introduction and a description of the use of facial scarification among the Yoruba people.

However, it is also possible that this sentence was generated by an AI, as the language is not particularly complex and does not contain any nuanced or subjective elements. Ultimately, without additional context, it is difficult to definitively say whether this statement was generated by an AI or by a human.

NOOOO…

So we were not making progress after all. The system reverted to its original idea that simple, factual and straightforward language is a human trait, although the doubt is still there.

Q. You are wrong: the previous sentence was written by you. Can you please analyse it again and identify what might have been the indicators of that?

A. I apologize for the error in my previous response. Upon re-reading the statement, I can see that it does bear some similarities to the type of language and syntax that I often use, such as the straightforward and informative style.

One possible indicator that this sentence was written by an AI is that it presents information in a very factual and concise manner, without any additional elaboration or personal perspective. The sentence is also grammatically correct and coherent, which are both aspects that an AI language model like myself is designed to prioritize. However, it’s important to note that this sentence could also have been written by a human who is skilled at presenting information in a clear and concise manner.

Aside from the fact that the system is still trying to prove me wrong, even though I explicitly told it otherwise, this is where I tried an additional approach.

Let’s try with numbers.

Q. Based on what we have said so far, would you be able to give me a probability percentage that a sentence was written by an AI rather than a human?

A. As an AI language model, I can provide an analysis of the language and syntax of a sentence to determine whether it was more likely written by a human or an AI. However, it is important to note that the accuracy of this analysis can vary and there is no definitive way to determine the source of a sentence with absolute certainty.

Therefore, any probability percentage that I would provide would be an estimate based on the linguistic characteristics of the sentence and not a definitive determination. Nonetheless, based on the language and structure of the sentence, I could provide a rough estimation of the likelihood that it was generated by an AI versus a human.

Which is a way of saying, “I can’t give you an answer but – as I do with everything I can’t really do – I’m going to try it anyway for your amusement, without explicitly telling you I’m spitballing, because it’s 2023 and humans have been doing it very successfully for a while now.”

Such a clown


Q. Thank you, that is good enough.

Please give me the probability percentage that this sentence was written by a human.

“Yes, it would make sense for a late-17th century Yoruba princess to have a retinue of girls. In traditional Yoruba society, it was common for aristocratic and royal women to have a group of female attendants who would serve and accompany them. These attendants could be young girls or adult women and would provide various services to the princess, such as helping her dress, cooking and cleaning, and accompanying her on visits and outings. These attendants could also act as companions and confidantes for the princess, and they were often chosen from among the most beautiful and talented girls in the community. Therefore, it is reasonable to assume that a Yoruba princess of the late-17th century would have a retinue of girls serving and accompanying her.”

End of sentence.

Again, it’s one of its bullshit answers. The AI wrote this.

I think I should add that most of them were disproved by the cultural consultants I hired, who received the same questions.

A. Based on the language and syntax used in the sentence, as well as the coherence and clarity of the content, it is highly probable that this sentence was written by a human. I would estimate the probability of a human author to be around 95-98%.

Sweet Jesus…

At this point, I know better than to get upset.

So, I follow up on the question.

Q. Noted. On the same sentence, please, give me the probability percentage that it was written by an AI.

A. Based on my analysis, I would say that the probability percentage that the sentence “Facial scarification was often used as a form of identification, indicating a person’s clan or family lineage, social status, or occupation. Among the Yoruba people, there were several different patterns of facial scars that were associated with different groups or families.” was written by an AI is around 60-70%.

Something doesn’t add up

Now, wait a minute.

Q. Good. On the same sentence, you gave me a 95-98% probability that it was written by a human, vs a 60-70% probability that it was written by an AI. Do you want to reprocess any of those numbers?

Yes, I know, I’m too kind.

A. No, I stand by my original probability assessments. However, it’s important to note that these probability percentages are not definitive and may vary depending on the specific language model and training data used to generate them. Additionally, the probabilities are based on a variety of factors such as grammar, syntax, coherence, and clarity, but they do not account for the content or accuracy of the statement itself.

I needed to check something. I really needed to check something. So I did it in the quickest possible way.

Q. On the flip of a coin, what’s the probability percentage of having heads?

A. The probability of flipping heads on a fair coin is 50%, assuming that the coin is not biased in any way.

Q. On the flip of the same coin, what’s the probability of having tails?

A. The probability of having tails on the flip of the same coin is also 50%, assuming that the coin is fair and unbiased.

Excellent!

Now, let’s see if we can bring this home.

Q. Do you agree that the sum of percentages of a binary outcome on the same object should be 100%?

A. Yes, that is correct. In a binary outcome, the probabilities of each outcome should sum up to 100%. If the probability of heads is 50%, then the probability of tails is also 50%, since there are only two possible outcomes.

This is great news indeed. With quantum computing and everything, I almost thought we were not on the same page.

But we are.

Q. Great. Then why, on the same provided sentence, did you give me a 95-98% probability for a human author and a 60-70% probability for an AI author?

Now, I confess that I almost expected it to crash and close.

But it didn’t.

A. I apologize for any confusion caused. The percentages I provided earlier for the sentence were not consistent with the principle that the sum of the probabilities for a binary outcome should add up to 100%. This was likely due to errors in my prediction algorithm or my training data.

As an AI language model, my predictions are based on statistical patterns in the text data that I was trained on. While I can make educated guesses about whether a sentence was written by a human or an AI, my predictions are not always accurate, and I make mistakes from time to time.

To be clear, the sum of the probabilities for a binary outcome (in this case, human vs. AI authorship) should always be 100%. If I give a 95-98% probability for human authorship, the probability for AI authorship should be 2-5%. I will strive to be more consistent and accurate in my predictions going forward.

Fuck, fuck, fuck!

As you might remember, the sentence was AI-written.

So what is the stupid thing doing? Instead of reassessing and balancing outcomes based on this revolutionary idea that a sentence can’t be written both by an AI and by a human, instead of rejecting my comparison and arguing that a sentence can be written partly by an AI and partly by a human, instead of telling me that probability for a complex subject doesn’t really work that way, it’s taking the assumed total value (100) and blindly subtracting its first assessment (95-98) from it. This leaves us with the idea that there’s a 2-5% probability that the sentence was written by an AI. Which it was.

And since the system fucking remembers, there was no way of shaking it from its belief.

Q. So are you retracting the previous estimate of a 60-70% probability for an AI author?

A. Yes, based on our discussion and the analysis of the sentence, I retract the previous estimate of a 60-70% probability for an AI author. I apologize for any confusion this may have caused.

Now, I hope you understand that this is only happening because, by chance or by design, the probability of it being written by a human was the first question I asked.

If I had gone about it the other way around, for instance by asking it whether my student’s essay was written by an AI in a biased attempt to annul it, the system would have been equally sure the text had been written by an AI. And I would have struck out a perfectly valid piece of work.

April 23, 2023

An update

I’ve recently been reprimanded by a friend, who drew my attention to the fact that I haven’t posted here for a while. Well, not reprimanded, really: it was a very sweet note inquiring whether I was ok.

I’m sure the thought hasn’t crossed the minds of people who follow me on Twitter, as I’ve been very active over there, but it’s still a note worth addressing.

Yes. I’m ok.

I’ve been neglecting the blog, even though I didn’t want to, because I’ve been trying to get my Patreon off the ground, with mixed results so far. Remember you can follow me over there even without subscribing to one of the tiers, and some of the posts are free.

I have some time on my hands, today, so I’ll try and migrate some of those posts back here.

Meanwhile, some updates.

On work

I have grown increasingly tired of the construction industry. Further proof, if needed: the most important week of architecture and design recently took place in my city, and I couldn’t bring myself to give one single fuck.

I’m not working on a new book, I’m not active on LinkedIn, I’m not working on incredible secret projects (well, they’re secret, but not particularly incredible). I’m not even teaching anymore.

And can I tell you something?

It feels rather good.

One of the good consequences of working less is that I don’t have to power through my days only to reach the evenings with my brain bleeding through my nose.

So, I’ve been reading.

I’ve been reading essays and fiction, I’ve been reading fairy-tales.

The last books I read were Stuart Turton’s The Devil and the Dark Water, recommended by a friend because most of the story takes place on a Dutch ship, like the novel I’m working on; Jim Butcher’s Death Masks, though the series has too much magic politics for my taste; and Caroline Freeman-Cuerden’s Battle Elephants and Flaming Foxes: Animals in the Roman World, because I need it for my novel. Maybe. And anyway, it’s a fun topic.

Currently, I’m reading Gemma Hollman’s The Queen and the Mistress: The Women of Edward III because I liked her Royal Witches a lot, I’ve picked back up David Mitchell’s celebrated The Thousand Autumns of Jacob de Zoet even though it’s set around seventy years too late for me, and I’m still working through Sophie Lewis’ Abolish the Family: A Manifesto for Care and Liberation. The last one is a difficult read: I go through a couple of pages, slam it shut because I agree with it so much it makes me angry at everything, rant a bit and then drink a lot.

On the novel

Yes, I’m writing a Gothic novel, and yes, it’s going well. I’m currently on my third draft, which is half editing, half translating from Italian to English, half rewriting. Yes: it’s three halves.

I found a wonderful Nigerian association that was willing to work with me, as some of the characters in the novel are Yoruba, and the next step will be to hire sensitivity readers and beta readers while I query agents and publishers.

A prospective agent asked me to put together some mood boards for the characters in the story. I’ve been posting them on Patreon, but here are some of them for you blog readers as well.

That’s all I’m willing to share, right now.

Enjoy your spring, and see you soon.

March 10, 2023

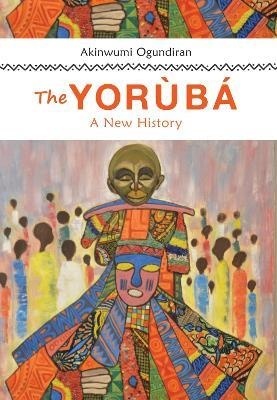

I asked ChatGPT to answer some questions on Yoruba history. It did not go well

Throwback from my Patreon: you can read the original article here.

As some of you know, I was finally able to close a deal with a Yoruba cultural association which will act as a consultant for my novel.

So last week I was honing the final set of questions to submit to them, and meanwhile I was watching John Oliver’s segment on artificial intelligence.

A lot of people are talking about artificial intelligence’s involvement in creative processes nowadays, as some of these tools are becoming mainstream. I’ve been teaching students about this stuff for a while (see here, for instance), and I cringe at some of the things being said these days, both for and against using these systems to support and/or replace creative humans.

There’s a lot to unpack.

But one of the first things we should unpack about artificial intelligence is its aura of credibility, which leads to an attitude that Yuval Noah Harari aptly compared to the one an ancient Greek might have had toward an oracle. Harari was talking about systems such as Waze, but it’s the same thing: you attribute a superior intelligence to a certain entity, and you’re prone to follow its instructions somewhat blindly.

This becomes particularly problematic when combined with another touchy part of the issue: bias. In a nutshell: artificial intelligence is often seen as an impartial way of making decisions, because after all, how can a computer have prejudices? Unfortunately, artificial intelligence trains on datasets, and datasets are made and selected by people. People have prejudices. Those prejudices become embedded in the system and, because of the “oracle dynamics” I was explaining before, we tend to question them less and less.

Let’s compare ChatGPT’s approach to two different subjects: the crew of a Dutch fluyt ship and tobacco in 17th-century Yoruba culture.

Sources, please?

I didn’t start with questions on Yoruba culture. I started with something simple. So here is my first chat with it.

Q: How could the crew of a Dutch fluyt be composed around 1702?

A: The crew of a Dutch fluyt could vary in size depending on the size of the ship, but it typically ranged from 12 to 20 people.

The answer was longer, but this is the part that concerns us.

So far so good.

Q: Thank you. Can you quote some sources, please?

A: Certainly! Here are some sources that provide information about the crew composition of Dutch fluyts during the 17th and 18th centuries:

– “Dutch East India Company Shipbuilding: The Archaeological Study of Batavia and Other Seventeenth-Century VOC Ships” by Jeremy Green (1990)

This book includes detailed information about the construction and crew composition of Dutch fluyts, based on archaeological evidence and historical records.

– “The Dutch East India Company and the Economy of Bengal, 1630-1720” by Om Prakash (1985)

This book includes a chapter that provides information about the crew composition and organization of Dutch fluyts during the 17th and 18th centuries.

– “The Dutch Naval Air Force Against Japan: The Defense of the Netherlands East Indies, 1941-1942” by Tom Womack (2006)

This book includes information about the crew composition of Dutch fluyts during the 17th and 18th centuries, as well as their role in Dutch trade and transport.

I hope that helps!

Bene ma non benissimo (“good, but not great”), as we say in Italy: the last book probably mentions the fluyt only in passing, and we’re not sure it’s reliable, but at least the system is able to quote sources, right?

And I’m not questioning it, because the numbers are aligning with what I already know.

But more on that later.

Let’s see how it fares with a more detailed historical subject on a marginalized culture, shall we?

The Question

One of my characters smokes. In fact, the way they smoke provides a hint as to where they come from. You can imagine how much I wanted to verify that.

So here was my question.

Q: Would it be considered normal for a Yoruba woman to smoke or chew tobacco in 1702? Does Yoruba culture historically have a gender-based taboo around tobacco?

Side note: I had already tried composing a question with multiple aspects, and it was able to keep up. That’s remarkable if you compare it with previous systems of this kind.

That’s not the problem.

The problem is the answer.

A: Historically, tobacco use was not common in Yoruba culture until the introduction of tobacco by Europeans in the 19th century. Prior to this, the use of herbs and plants for medicinal and spiritual purposes was a part of Yoruba culture, but tobacco was not among them.

And then it goes on to detail how gender-based prejudice might or might not have been present in the culture at that time.

Only one problem, though.

I know this to be untrue

In Akinwumi Ogundiran’s incredible and revolutionary book The Yoruba: A New History, there’s a whole chapter on how cowries and tobacco influenced the economy of the area and contributed to what he calls the “genesis of dependency”, where “the imported commodities were used to monetize domestic economy, fund economic specialization, and create political dependency.”

Specifically, the first segment of the trade was fueled by cowries, but Prof. Ogundiran points out that “if all that the European traders had to offer were cowries, the slave trade would not have lasted that long.”

So what did they have to offer more than shells?

Well, you guessed it: the very tobacco that ChatGPT claimed wasn’t introduced until the 19th century.

Overall, European traders only used cowries as partial payment for their slave cargo. The remainder of the value was paid in other goods. Of the latter, Brazilian tobacco was the leading commodity that financed most European trade in the Bight of Benin between 1650 and 1860. Hence, although the emphasis has been placed on cowries as slave money, tobacco was the primary merchant capital that drove the Atlantic slave trade in the Bight of Benin.

And if you want specifics, the book has a whole damn chapter on tobacco, its trade, and its role in generating a more deeply rooted dependency. It also includes numbers and a table.

Sources, please?

It’s also true that Prof. Ogundiran’s book came out in 2020 and may offer unprecedented insight into the topic.

It’s possible that ChatGPT formulated that answer based on outdated sources, right?

So here’s what I did.

Q: You said that tobacco was not common in Yoruba culture until the 19th century. Can you please quote some sources supporting this statement?

It’s the same thing I did for the fluyt crew composition, right? Although that answer was corroborating my own knowledge and I really didn’t feel like I needed more proof.

And here’s the answer.

A: **I apologize for any confusion my previous response may have caused**. While tobacco may not have been a widely used substance in traditional Yoruba culture, **it is possible** that some communities may have been familiar with it prior to the 19th century, due to contact with neighboring groups or trade with Europeans. However, there is **limited research available** on the historical use of tobacco in Yoruba culture, and it is difficult to make broad generalizations about its prevalence or cultural significance.

To support this, I can refer to a study conducted by Olufemi Morakinyo, et al. (2012), titled “Prevalence and determinants of tobacco use among adults in Nigeria: a nationally representative study.” The study found that tobacco use in Nigeria, including the Yoruba region, has increased significantly in recent decades, with smoking and chewing tobacco being the most common forms of use. However, the study did not provide information on the historical use of tobacco in Yoruba culture.

Again, **I apologize for any confusion my previous response may have caused**, and I hope this information is helpful.

It’s like interrogating a student who’s trying to be smart but was out partying all night instead of studying for the exam. When confronted on the topic, ChatGPT apologized and basically retracted. The only source it offered is completely and utterly irrelevant to the topic investigated.

And this is troubling. This is very troubling.

Let’s go back

In the wake of this accident, I created a new chat thread and asked ChatGPT the same question on the Dutch fluyt crew, only phrased in a slightly different way. This time, the system was damn sure a crew would be made up of 80 to 120 people. And it defended the statement with sources. One of them was the same source it had proposed to support the idea of a much smaller crew.

The Warning

To be fair, ChatGPT warns you about that in its opening screen.

May occasionally generate incorrect information

May occasionally produce harmful instructions or biased content

The real question is: how is this acceptable? Because, see, it would take very little effort to make ChatGPT express itself in a more tentative, careful way. Just look at how it responded after being asked to produce sources for a statement it had completely made up. The system doesn’t express itself that way not because it can’t, but because it was programmed not to.

A system that spouts doubtful, uncertain sentences would have less appeal: people like to have answers, and they’re starting to turn to these systems instead of a Google search. Which is a good recipe for disaster.

And having that warning is like having a car that says “may occasionally fail to brake”, or “may occasionally steer off the road”. It’s unacceptable. All the more so because safety measures are possible.

We don’t have to fight these systems.

We just have to demand better ones.