Allen B. Downey's Blog: Probably Overthinking It, page 6

November 20, 2023

Life in a Lognormal World

At PyData Global 2023 I will present a talk, “Extremes, outliers, and GOATs: On life in a lognormal world”. The schedule has not been posted yet, but when it’s available, I’ll add the info here.

Here is the abstract:

The fastest runners are much faster than we expect from a Gaussian distribution, and the best chess players are much better. In almost every field of human endeavor, there are outliers who stand out even among the most talented people in the world. Where do they come from?

In this talk, I present as possible explanations two data-generating processes that yield lognormal distributions, and show that these models describe many real-world scenarios in natural and social sciences, engineering, and business. And I suggest methods — using SciPy tools — for identifying these distributions, estimating their parameters, and generating predictions.

You can buy tickets for the conference here. If your budget for conferences is limited, PyData tickets are sold under a pay-what-you-can pricing model, with suggested donations based on your role and location.

I am still working on my slides for the talk. It is based partly on Chapter 4 of Probably Overthinking It, and partly on an additional exploration that didn’t make it into the book.

The exploration is motivated by this paper by Philip Gingerich, which takes the heterodox view that measurements in many biological systems follow a lognormal model rather than a Gaussian. Looking at anthropometric data, he reports that the two models are equally good for 21 of 28 measurements, “but whenever alternatives are distinguishable, [the lognormal model] is consistently and strongly favored.”

I replicated his analysis with two other datasets:

- The Anthropometric Survey of US Army Personnel (ANSUR II), available from the Open Design Lab at Penn State.
- Results of medical blood tests from supplemental material from “Quantitative laboratory results: normal or lognormal distribution?” by Frank Klawonn, Georg Hoffmann, and Matthias Orth.

Also, I used different methods to fit the models to the data and compare them. The details are in this Jupyter notebook.

The ANSUR dataset contains 93 measurements from 4082 male and 1986 female members of the U.S. armed forces. For each measurement, I found the Gaussian and lognormal models that best fit the data and computed the mean absolute error (MAE) of the models.
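The comparison can be sketched in a few lines. Several choices here are assumptions, not necessarily what the notebook does: the data are synthetic draws (not ANSUR values), the Gaussian model matches the sample mean and standard deviation, the lognormal model does the same on the logarithms, and "error" means the mean absolute difference between the empirical CDF and each model's CDF at the data points.

```python
import math

import numpy as np


def normal_cdf(x, mu, sigma):
    """Gaussian CDF computed elementwise with math.erf."""
    z = (np.asarray(x, dtype=float) - mu) / (sigma * math.sqrt(2))
    return 0.5 * (1 + np.vectorize(math.erf)(z))


def compare_models(values):
    """Return (MAE of Gaussian model, MAE of lognormal model) against the ECDF."""
    xs = np.sort(np.asarray(values, dtype=float))
    n = len(xs)
    ecdf = (np.arange(1, n + 1) - 0.5) / n  # midpoint plotting positions

    # Gaussian model: match the sample mean and standard deviation.
    gauss = normal_cdf(xs, xs.mean(), xs.std())

    # Lognormal model: a Gaussian fit to log(x), evaluated at log(x).
    logs = np.log(xs)
    lognorm = normal_cdf(logs, logs.mean(), logs.std())

    return np.mean(np.abs(ecdf - gauss)), np.mean(np.abs(ecdf - lognorm))


# Hypothetical right-skewed sample standing in for a measurement like weight.
rng = np.random.default_rng(seed=1)
sample = rng.lognormal(mean=np.log(80), sigma=0.4, size=10_000)
mae_gauss, mae_lognorm = compare_models(sample)
print(f"Gaussian MAE: {mae_gauss:.4f}, lognormal MAE: {mae_lognorm:.4f}")
```

Running `compare_models` once per measurement gives one (Gaussian error, lognormal error) pair per point in a scatter plot.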

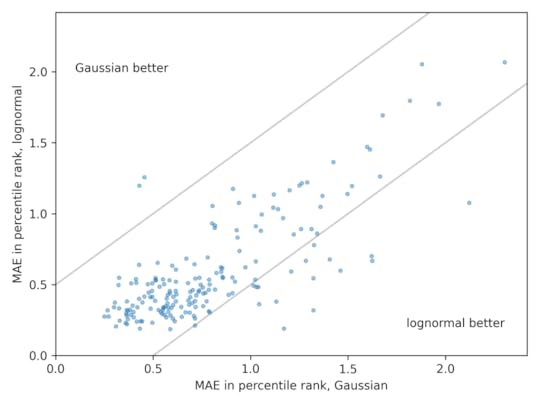

The following scatter plot shows one point for each measurement, with the average error of the Gaussian model on the x-axis and the average error of the lognormal model on the y-axis.

- Points in the lower left are measurements where both models are good.
- Points in the upper right are measurements where both models are bad.
- In the upper left, the Gaussian model is better.
- In the lower right, the lognormal model is better.

These results are consistent with Gingerich’s. For many measurements, the Gaussian and lognormal models are equally good, and for a few they are equally bad. But when one model is better than the other, it is almost always the lognormal.

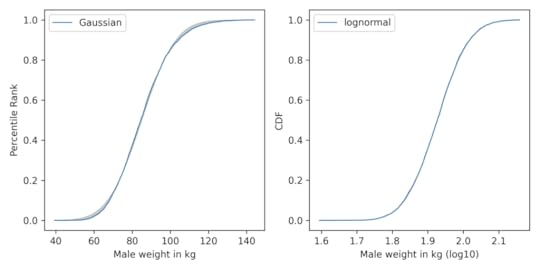

The most notable example is weight:

In these figures, the gray area shows the difference between the data and the best-fitting model. On the left, the Gaussian model does not fit the data very well; on the right, the lognormal model fits so well that the gray area is barely visible.

So why should these measurements follow a lognormal distribution? For that you’ll have to come to my PyData Global talk.

In the meantime, Probably Overthinking It is available to preorder now. You can get a 30% discount if you order from the publisher and use the code UCPNEW. You can also order from Amazon or, if you want to support independent bookstores, from Bookshop.org.

November 7, 2023

We Have a Book!

My copy of Probably Overthinking It has arrived!

If you want a copy for yourself, you can get a 30% discount if you order from the publisher and use the code UCPNEW. You can also order from Amazon or, if you want to support independent bookstores, from Bookshop.org.

The official release date is December 6, but since the book is in warehouses now, it might arrive a little early. While you wait, please enjoy this excerpt from the introduction…

Introduction

Let me start with a premise: we are better off when our decisions are guided by evidence and reason. By “evidence,” I mean data that is relevant to a question. By “reason,” I mean the thought processes we use to interpret evidence and make decisions. And by “better off,” I mean we are more likely to accomplish what we set out to do, and more likely to avoid undesired outcomes.

Sometimes interpreting data is easy. For example, one of the reasons we know that smoking causes lung cancer is that when only 20% of the population smoked, 80% of people with lung cancer were smokers. If you are a doctor who treats patients with lung cancer, it does not take long to notice numbers like that.
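As a back-of-the-envelope check on why those numbers stand out, Bayes's theorem turns the two percentages into an implied risk ratio for smokers versus nonsmokers. This sketch uses only the two figures in the text and ignores everything else (age, amount smoked, and so on).

```python
def implied_risk_ratio(share_exposed_population, share_exposed_cases):
    """Risk ratio P(disease | exposed) / P(disease | unexposed) via Bayes's theorem.

    P(disease | exposed) is proportional to P(exposed | disease) / P(exposed);
    the common factor P(disease) cancels in the ratio.
    """
    exposed = share_exposed_cases / share_exposed_population
    unexposed = (1 - share_exposed_cases) / (1 - share_exposed_population)
    return exposed / unexposed


# 20% of the population smoked; 80% of lung cancer patients were smokers.
print(implied_risk_ratio(0.2, 0.8))  # about 16
```

Under these simplifying assumptions, smokers would have roughly 16 times the risk of nonsmokers, which is hard to miss.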

But interpreting data is not always that easy. For example, in 1971 a researcher at the University of California, Berkeley, published a paper about the relationship between smoking during pregnancy, the weight of babies at birth, and mortality in the first month of life. He found that babies of mothers who smoke are lighter at birth and more likely to be classified as “low birthweight.” Also, low-birthweight babies are more likely to die within a month of birth, by a factor of 22. These results were not surprising.

However, when he looked specifically at the low-birthweight babies, he found that the mortality rate for children of smokers is lower, by a factor of two. That was surprising. He also found that among low-birthweight babies, children of smokers are less likely to have birth defects, also by a factor of 2. These results make maternal smoking seem beneficial for low-birthweight babies, somehow protecting them from birth defects and mortality.

The paper was influential. In a 2014 retrospective in the International Journal of Epidemiology, one commentator suggests it was responsible for “holding up anti-smoking measures among pregnant women for perhaps a decade” in the United States. Another suggests it “postponed by several years any campaign to change mothers’ smoking habits” in the United Kingdom. But it was a mistake. In fact, maternal smoking is bad for babies, low birthweight or not. The reason for the apparent benefit is a statistical error I will explain in chapter 7.

Among epidemiologists, this example is known as the low-birthweight paradox. A related phenomenon is called the obesity paradox. Other examples in this book include Berkson’s paradox and Simpson’s paradox. As you might infer from the prevalence of “paradoxes,” using data to answer questions can be tricky. But it is not hopeless. Once you have seen a few examples, you will start to recognize them, and you will be less likely to be fooled. And I have collected a lot of examples.

So we can use data to answer questions and resolve debates. We can also use it to make better decisions, but it is not always easy. One of the challenges is that our intuition for probability is sometimes dangerously misleading. For example, in October 2021, a guest on a well-known podcast reported with alarm that “in the U.K. 70-plus percent of the people who die now from COVID are fully vaccinated.” He was correct; that number was from a report published by Public Health England, based on reliable national statistics. But his implication—that the vaccine is useless or actually harmful—is wrong.

As I’ll show in chapter 9, we can use data from the same report to compute the effectiveness of the vaccine and estimate the number of lives it saved. It turns out that the vaccine was more than 80% effective at preventing death and probably saved more than 7000 lives, in a four-week period, out of a population of 48 million. If you ever find yourself with the opportunity to save 7000 people in a month, you should take it.

The error committed by this podcast guest is known as the base rate fallacy, and it is an easy mistake to make. In this book, we will see examples from medicine, criminal justice, and other domains where decisions based on probability can be a matter of health, freedom, and life.
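A small calculation shows how an effective vaccine can coexist with most deaths occurring among vaccinated people. The numbers below are hypothetical; they are not the Public Health England figures, and the sketch ignores age, which matters a great deal in a real analysis. When nearly everyone is vaccinated, even a much lower per-person risk can leave the vaccinated as the majority of deaths.

```python
def share_of_deaths_vaccinated(vaccinated_share, effectiveness):
    """Fraction of deaths that occur among vaccinated people.

    Assumes vaccination cuts each person's risk of death by `effectiveness`
    and that vaccinated and unvaccinated people are otherwise identical.
    """
    vaccinated = vaccinated_share * (1 - effectiveness)
    unvaccinated = 1 - vaccinated_share
    return vaccinated / (vaccinated + unvaccinated)


def implied_effectiveness(vaccinated_share, deaths_vaccinated_share):
    """Invert the calculation: 1 - (risk if vaccinated) / (risk if not)."""
    odds_deaths = deaths_vaccinated_share / (1 - deaths_vaccinated_share)
    odds_population = vaccinated_share / (1 - vaccinated_share)
    return 1 - odds_deaths / odds_population


# Hypothetical: 90% of the group vaccinated, vaccine 80% effective.
share = share_of_deaths_vaccinated(0.9, 0.8)
print(f"{share:.0%} of deaths are among the vaccinated")                  # 64%
print(f"implied effectiveness: {implied_effectiveness(0.9, share):.0%}")  # 80%
```

The base rate (the share of people vaccinated) drives the headline percentage; the effectiveness only comes out when it is taken into account.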

The Ground Rules

Not long ago, the only statistics in newspapers were in the sports section. Now, newspapers publish articles with original research, based on data collected and analyzed by journalists, presented with well-designed, effective visualization. And data visualization has come a long way. When USA Today started publishing in 1982, the infographics on their front page were a novelty. But many of them presented a single statistic, or a few percentages in the form of a pie chart.

Since then, data journalists have turned up the heat. In 2015, “The Upshot,” an online feature of the New York Times, published an interactive, three-dimensional representation of the yield curve — a notoriously difficult concept in economics. I am not sure I fully understand this figure, but I admire the effort, and I appreciate the willingness of the authors to challenge the audience. I will also challenge my audience, but I won’t assume that you have prior knowledge of statistics beyond a few basics. Everything else, I’ll explain as we go.

Some of the examples in this book are based on published research; others are based on my own observations and exploration of data. Rather than report results from a prior work or copy a figure, I get the data, replicate the analysis, and make the figures myself. In some cases, I was able to repeat the analysis with more recent data. These updates are enlightening. For example, the low-birthweight paradox, which was first observed in the 1970s, persisted into the 1990s, but it has disappeared in the most recent data.

All of the work for this book is based on tools and practices of reproducible science. I wrote each chapter in a Jupyter notebook, which combines the text, computer code, and results in a single document. These documents are organized in a version-control system that helps to ensure they are consistent and correct. In total, I wrote about 6000 lines of Python code using reliable, open-source libraries like NumPy, SciPy, and pandas. Of course, it is possible that there are bugs in my code, but I have tested it to minimize the chance of errors that substantially affect the results.

My Jupyter notebooks are available online so that anyone can replicate the analysis I’ve done with the push of a button.

October 28, 2023

Why are you so slow?

Running Speeds

Recently a shoe store in France ran a promotion called “Rob It to Get It”, which invited customers to try to steal something by grabbing it and running out of the store. But there was a catch — the “security guard” was a professional sprinter, Méba Mickael Zeze. As you would expect, he is fast, but you might not appreciate how much faster he is than an average runner, or even a good runner.

Why? That’s the topic of Chapter 4 of Probably Overthinking It, which is available for preorder now. Here’s an excerpt.

If you are a fan of the Atlanta Braves, a Major League Baseball team, or if you watch enough videos on the internet, you have probably seen one of the most popular forms of between-inning entertainment: a foot race between one of the fans and a spandex-suit-wearing mascot called the Freeze.

The route of the race is the dirt track that runs across the outfield, a distance of about 160 meters, which the Freeze runs in less than 20 seconds. To keep things interesting, the fan gets a head start of about 5 seconds. That might not seem like a lot, but if you watch one of these races, this lead seems insurmountable. However, when the Freeze starts running, you immediately see the difference between a pretty good runner and a very good runner. With few exceptions, the Freeze runs down the fan, overtakes them, and coasts to the finish line with seconds to spare.


But as fast as he is, the Freeze is not even a professional runner; he is a member of the Braves’ ground crew named Nigel Talton. In college, he ran 200 meters in 21.66 seconds, which is very good. But the 200 meter collegiate record is 20.1 seconds, set by Wallace Spearmon in 2005, and the current world record is 19.19 seconds, set by Usain Bolt in 2009.

To put all that in perspective, let’s start with me. For a middle-aged man, I am a decent runner. When I was 42 years old, I ran my best-ever 10 kilometer race in 42:44, which was faster than 94% of the other runners who showed up for a local 10K. Around that time, I could run 200 meters in about 30 seconds (with wind assistance).

But a good high school runner is faster than me. At a recent meet, the fastest girl at a nearby high school ran 200 meters in about 27 seconds, and the fastest boy ran under 24 seconds.

So, in terms of speed, a fast high school girl is 11% faster than me, a fast high school boy is 12% faster than her, Nigel Talton in his prime was 11% faster than him, Wallace Spearmon was about 8% faster than Talton, and Usain Bolt is about 5% faster than Spearmon.
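Because everyone runs the same 200 meters, the ratio of two runners' speeds is the inverse ratio of their times. This sketch reproduces the percentages from the times quoted above (the high school times are the approximate values from the text).

```python
# 200-meter times in seconds, from the text, ordered from slowest to fastest.
times = {
    "me": 30.0,
    "high school girl": 27.0,
    "high school boy": 24.0,
    "Nigel Talton": 21.66,
    "Wallace Spearmon": 20.1,
    "Usain Bolt": 19.19,
}

names = list(times)
gains = []
for slower, faster in zip(names, names[1:]):
    # Same distance, so speed ratio = slower time / faster time.
    pct = (times[slower] / times[faster] - 1) * 100
    gains.append(pct)
    print(f"{faster} is {pct:.0f}% faster than {slower}")
```

The ratios compound by multiplication: 30 / 19.19 is about 1.56, so Bolt is about 56% faster than me.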

Unless you are Usain Bolt, there is always someone faster than you, and not just a little bit faster; they are much faster. The reason is that the distribution of running speed is not Gaussian; it is more like lognormal.

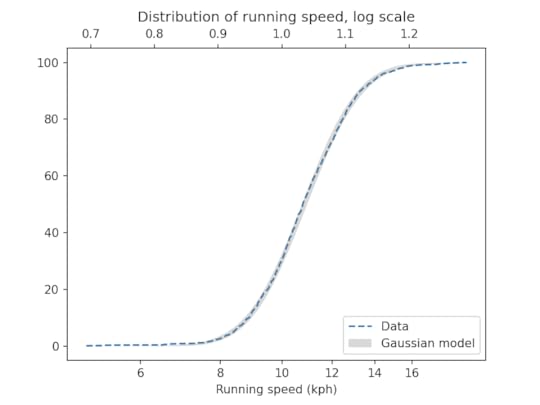

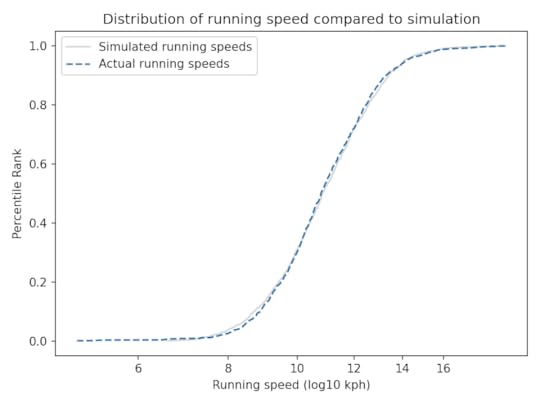

To demonstrate, I’ll use data from the James Joyce Ramble, which is the 10-kilometer race where I ran my previously mentioned personal record time. I downloaded the times for the 1,592 finishers and converted them to speeds in kilometers per hour. The following figure shows the distribution of these speeds on a logarithmic scale, along with a Gaussian model I fit to the data.
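Converting finish times to speeds and fitting a Gaussian on a log scale takes only a few lines. In this sketch the finish times are hypothetical except 42:44 (the personal record mentioned above), and the Gaussian is fit to base-10 logarithms by matching their mean and standard deviation; that is one simple choice, not necessarily the method behind the figure.

```python
import numpy as np

RACE_KM = 10.0


def speed_kph(minutes, seconds):
    """Average speed in km/h for a 10K finish time."""
    hours = (minutes + seconds / 60) / 60
    return RACE_KM / hours


# Hypothetical finish times (minutes, seconds); only 42:44 comes from the text.
finish_times = [(42, 44), (48, 10), (55, 30), (61, 5), (70, 45), (85, 0)]
speeds = np.array([speed_kph(m, s) for m, s in finish_times])

# If speeds are lognormal, their logarithms should look Gaussian.
log_speeds = np.log10(speeds)
mu, sigma = log_speeds.mean(), log_speeds.std()
print(f"42:44 is {speed_kph(42, 44):.2f} km/h")  # 14.04 km/h
print(f"log10 speed: mean {mu:.3f}, std {sigma:.3f}")
```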

The logarithms follow a Gaussian distribution, which means the speeds themselves are lognormal. You might wonder why. Well, I have a theory, based on the following assumptions:

- First, everyone has a maximum speed they are capable of running, assuming that they train effectively.
- Second, these speed limits can depend on many factors, including height and weight, fast- and slow-twitch muscle mass, cardiovascular conditioning, flexibility and elasticity, and probably more.
- Finally, the way these factors interact tends to be multiplicative; that is, each person’s speed limit depends on the product of multiple factors.

Here’s why I think speed depends on a product rather than a sum of factors. If all of your factors are good, you are fast; if any of them are bad, you are slow. Mathematically, the operation that has this property is multiplication.

For example, suppose there are only two factors, measured on a scale from 0 to 1, and each person’s speed limit is determined by their product. Let’s consider three hypothetical people:

- The first person scores high on both factors, let’s say 0.9. The product of these factors is 0.81, so they would be fast.
- The second person scores relatively low on both factors, let’s say 0.3. The product is 0.09, so they would be quite slow.

So far, this is not surprising: if you are good in every way, you are fast; if you are bad in every way, you are slow. But what if you are good in some ways and bad in others?

- The third person scores 0.9 on one factor and 0.3 on the other. The product is 0.27, so they are a little bit faster than someone who scores low on both factors, but much slower than someone who scores high on both.

That’s a property of multiplication: the product depends most strongly on the smallest factor. And as the number of factors increases, the effect becomes more dramatic.

To simulate this mechanism, I generated five random factors from a Gaussian distribution and multiplied them together. I adjusted the mean and standard deviation of the Gaussians so that the resulting distribution fit the data; the following figure shows the results.

The simulation results fit the data well. So this example demonstrates a second mechanism [the first is described earlier in the chapter] that can produce lognormal distributions: the limiting power of the weakest link. If there are at least five factors that affect running speed, and each person’s limit depends on their worst factor, that would explain why the distribution of running speed is lognormal.
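Here is a minimal version of that kind of simulation. The factor parameters (mean 1.0, standard deviation 0.1) are placeholder assumptions, not the values fit to the race data, so the output only illustrates the shape: the product of five factors is skewed to the right, while its logarithm is much closer to symmetric, as expected for an approximately lognormal distribution.

```python
import numpy as np

rng = np.random.default_rng(seed=17)

# Hypothetical parameters: five Gaussian factors per person, mean 1, std 0.1.
n_people, n_factors = 100_000, 5
factors = rng.normal(loc=1.0, scale=0.1, size=(n_people, n_factors))

# Each person's speed limit is the product of their factors.
limits = factors.prod(axis=1)
log_limits = np.log(limits)


def skewness(x):
    """Sample skewness: the mean of the cubed standardized values."""
    z = (x - x.mean()) / x.std()
    return np.mean(z**3)


print(f"skewness of product:      {skewness(limits):.2f}")
print(f"skewness of log(product): {skewness(log_limits):.2f}")
```

Products of Gaussian factors are only approximately lognormal, so the log is not perfectly symmetric, but it is far closer to symmetric than the product itself.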

And that’s why you can’t beat the Freeze.

You can read about the “Rob It to Get It” promotion in this article and watch people get run down in this video.

October 22, 2023

The World Population Singularity

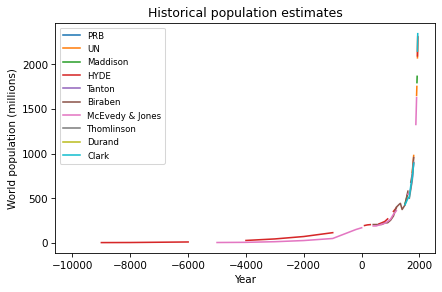

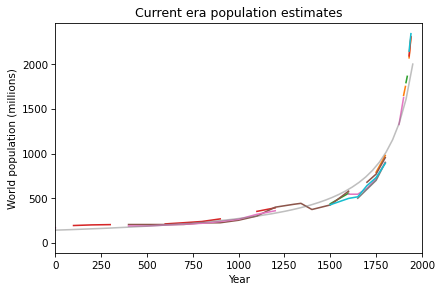

One of the exercises in Modeling and Simulation in Python invites readers to download estimates of world population from 10,000 BCE to the present, and see if they are well modeled by any simple mathematical function. Here’s what the estimates look like (aggregated on Wikipedia from several researchers and organizations):

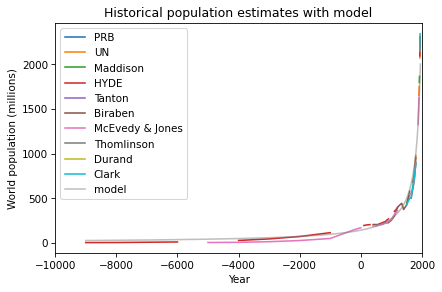

After some trial and error, I found a simple model that fits the data well: a / (b-x), where a is 300,000 and b is 2100. Here’s what the model looks like compared to the data:

So that’s a pretty good fit, but it’s a very strange model. The first problem is that there is no theoretical reason to expect world population to follow this model, and I can’t find any prior work where researchers in this area have proposed a model like this.

The second problem is that this model is headed for a singularity: it goes to infinity in 2100. Now, there’s no cause for concern — this data only goes up to 1950, and as we saw in the previous article, the nature of population growth since then has changed entirely. Since 1950, world population has grown only linearly, and it is now expected to slow down and stop growing before 2100. So the singularity has been averted.

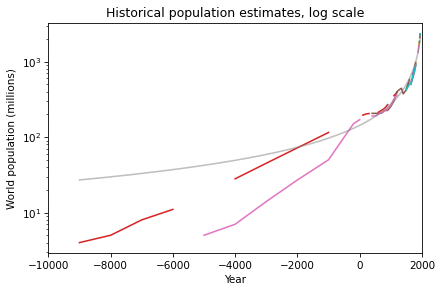

But what should we make of this strange model? We can get a clearer view by plotting the y-axis on a log scale:

On this scale, we can see that the model does not fit the data well prior to 4000 BCE. I’m not sure how much of a problem that is, considering that the estimates during that period are not precise. The retrodictions of the model might actually fall within the uncertainty of the estimates.

Regardless, even if the model only fits the data after 4000 BCE, it is still worth asking why it fits as well as it does. One step toward an answer is to express the model in terms of doubling time. With a little math, we can show that a function with the form a / (b-x) has a doubling time that decreases linearly.
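One way to fill in that math, assuming doubling time is defined from the instantaneous relative growth rate f′/f (a common convention; the post does not say which definition it uses):

```latex
f(x) = \frac{a}{b - x}, \qquad
f'(x) = \frac{a}{(b - x)^2}, \qquad
\frac{f'(x)}{f(x)} = \frac{1}{b - x}

T_{\text{double}}(x) = \frac{\ln 2}{f'(x) / f(x)} = (b - x) \ln 2
```

This is linear in x with slope -ln 2, so it shrinks by about 0.69 years per year and reaches zero at x = b. Asking instead how long until f actually doubles, solving f(x + T) = 2 f(x) gives T = (b - x) / 2, which is also linear in x.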

Under this model, the doubling time in 10,000 BCE was about 8000 years; in 5000 BCE, it was about 5000 years; and in Year 0, it was 1455 years.
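These figures can be reproduced from the fitted model. The sketch assumes doubling time means ln 2 divided by the relative growth rate f′/f (an assumption about the definition, but it matches the quoted numbers), which for a / (b - x) works out to (b - x) ln 2. It also checks that formula against a numerical derivative.

```python
import math

A, B = 300_000, 2100  # fitted parameters from the text; units of A are not stated


def population(year):
    """The model a / (b - x); it diverges as year approaches B."""
    return A / (B - year)


def doubling_time(year):
    """Instantaneous doubling time ln(2) / (f'/f), which equals (B - year) * ln(2)."""
    return (B - year) * math.log(2)


def doubling_time_numeric(year, h=1e-3):
    """The same quantity from a central-difference estimate of the growth rate."""
    growth = (population(year + h) - population(year - h)) / (2 * h)
    return math.log(2) / (growth / population(year))


for year in (-10_000, -5_000, 0):
    print(year, round(doubling_time(year)), round(doubling_time_numeric(year)))
```

The results are about 8387, 4921, and 1456 years (1455.6 before rounding), consistent with "about 8000", "about 5000", and 1455.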

It is plausible that doubling time should decrease during this period because of the Neolithic Revolution, which was the transition of human population from hunting and gathering to agriculture and settlement, starting about 10,000 years ago.

During this period, the domestication of plants and animals vastly increased the calories people could obtain, and the organization of large, permanent settlements accelerated the conversion of those calories into population growth.

If we zoom in on the last 2000 years, we see that the most recent data points are higher and steeper than the model’s predictions, which suggests that the Industrial Revolution accelerated growth even more.

So, if the Neolithic Revolution started world population on the path to a singularity, and the Industrial Revolution sped up the process, what stopped it? Why has population growth since 1950 slowed so dramatically?

The ironic answer is the Green Revolution, which increased our ability to produce calories so quickly that it contributed to rapid improvements in public health, education, and economic opportunity, all of which led to drastic decreases in child mortality. And, it turns out, when children are likely to survive, people choose to have fewer of them.

As a result, population growth left the regime where doubling time decreases linearly, and entered a regime where doubling time increases linearly. And soon, if not already, it will enter a regime of deceleration and decline. At this point it seems unlikely that world population will ever double again.

So, to summarize the last 10,000 years of population growth, the Neolithic and Industrial Revolutions made it possible for humans to breed like crazy, and the Green Revolution made it so we don’t want to.

This article is based on an exercise in Modeling and Simulation in Python, now available from No Starch Press and Amazon.com. You can download the data and run the code in this Jupyter notebook.

October 8, 2023

Another step toward a two-hour marathon

This is an update to an analysis I run each time the marathon world record is broken. If you like this sort of thing, you will like my forthcoming book, Probably Overthinking It, which is available for preorder now.

On October 8, 2023, Kelvin Kiptum ran the Chicago Marathon in 2:00:35, breaking by 34 seconds the record set last year by Eliud Kipchoge — and taking another big step in the progression toward a two-hour marathon.
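To put the remaining gap in perspective, finish times can be converted to average speeds. This sketch uses only times from the text (Kipchoge's previous record is 34 seconds slower than 2:00:35) plus the standard marathon distance of 42.195 km.

```python
MARATHON_KM = 42.195


def speed_kph(hours, minutes, seconds):
    """Average speed in km/h for a marathon finish time."""
    elapsed_hours = hours + minutes / 60 + seconds / 3600
    return MARATHON_KM / elapsed_hours


kiptum = speed_kph(2, 0, 35)    # Chicago, October 8, 2023
kipchoge = speed_kph(2, 1, 9)   # previous record, 34 seconds slower
two_hours = speed_kph(2, 0, 0)  # the target

print(f"Kiptum:    {kiptum:.3f} km/h")
print(f"Kipchoge:  {kipchoge:.3f} km/h")
print(f"Two hours: {two_hours:.3f} km/h "
      f"({(two_hours / kiptum - 1) * 100:.2f}% faster than Kiptum)")
```

Less than half a percent of average speed separates the new record from two hours.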

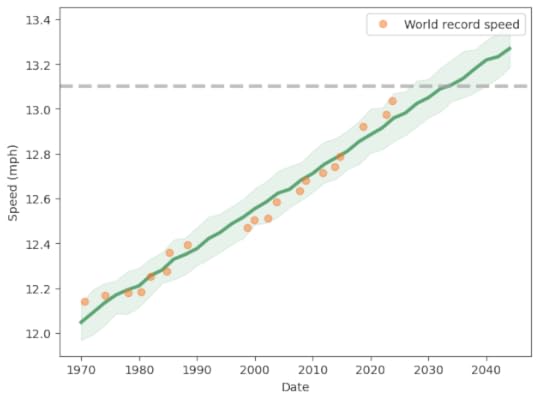

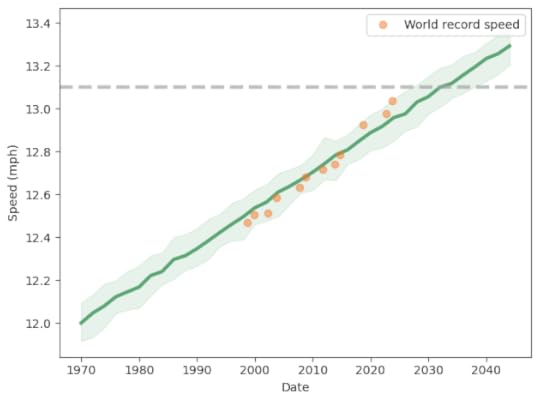

In a previous article, I noted that the marathon record speed since 1970 has been progressing linearly over time, and I proposed a model that explains why we might expect it to continue. Based on a linear extrapolation of the data so far, I predicted that someone would break the two-hour barrier in 2036, plus or minus five years.

Now it is time to update my predictions in light of the new record. The following figure shows the progression of world record speed since 1970 (orange dots), a linear fit to the data (green line) and a 90% predictive confidence interval (shaded area).

This model predicts that we will see a two-hour marathon in 2033 plus or minus 6 years.

However, it looks more and more like the slope of the line has changed since 1998. If we consider only data since then, we get the following prediction:

This model predicts a two-hour marathon in 2032 plus or minus 5 years. But with the last three points above the long-term trend, and with two active runners knocking on the door, I would bet on the early end of that range.

This analysis is one of the examples in Chapter 17 of Think Bayes; you can read it here, or you can click here to run the code in a Colab notebook.

October 6, 2023

How Does World Population Grow?

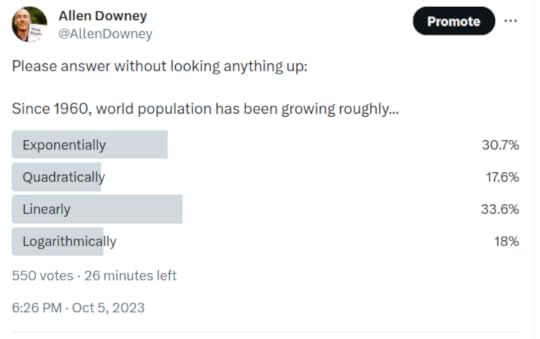

Recently I posed this question on Twitter: “Since 1960, has world population grown exponentially, quadratically, linearly, or logarithmically?”

Here are the responses:

By a narrow margin, the most popular answer is correct — since 1960 world population growth has been roughly linear. I know this because it’s the topic of Chapter 5 of Modeling and Simulation in Python, now available from No Starch Press and Amazon.com.

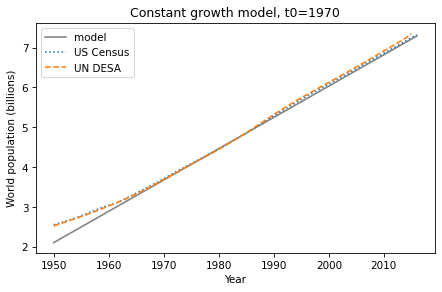

This figure — from one of the exercises — shows estimates of world population from the U.S. Census Bureau and the United Nations Department of Economic and Social Affairs, compared to a linear model.

That’s pretty close to linear.

Looking again at the poll, the distribution of responses suggests that this pattern is not well known. And it is a surprising pattern, because there is no obvious mechanism to explain why growth should be linear.

If the global average fertility rate is constant, population grows exponentially. But over the last 60 years fertility rates have declined at precisely the rate that cancels exponential growth.
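One way to see what "cancels exponential growth" means: if population grows by a fixed amount each year, the growth rate as a fraction of the population must fall as the population gets bigger. This sketch assumes rounded figures (about 3 billion people in 1960 and about 8 billion in 2022, joined by a straight line), not fitted estimates.

```python
# Rounded figures (assumptions, not fitted estimates), in billions of people.
P_1960, P_2022 = 3.0, 8.0
slope = (P_2022 - P_1960) / (2022 - 1960)  # billions of people added per year


def linear_population(year):
    """Straight-line population between the two rounded endpoints."""
    return P_1960 + slope * (year - 1960)


# A fixed annual increment means a shrinking percentage growth rate.
years = (1960, 1980, 2000, 2022)
rates = [slope / linear_population(year) for year in years]
for year, rate in zip(years, rates):
    print(f"{year}: {linear_population(year):.2f} billion, growth {rate:.2%} per year")
```

For growth to stay linear, the percentage rate has to keep falling, which is what declining fertility does.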

I don’t think that can be anything other than a coincidence, and it looks like it won’t last. World population is now growing less than linearly, and demographers predict that it will peak around 2100 and then decline; that is, population growth after 2100 will be negative.

If you did not know that fertility rates are declining, you might wonder why — and why it really got going in the 1960s. Of course the answer is complicated, but there is one potential explanation with the right timing and the necessary global scale: the Green Revolution, which greatly increased agricultural yields in one region after another.

It might seem like more food would yield more people, but that’s not how it turned out. More food frees people from subsistence farming and facilitates urbanization, which creates wealth and improves public health, especially child survival. And when children are more likely to survive, people generally choose to have fewer children.

Urbanization and wealth also improve education and economic opportunity, especially for women. And that, along with expanded human rights, tends to lower fertility rates even more. This set of interconnected causes and effects is called the demographic transition.

These changes in fertility, and their effect on population growth, will be the most important global trends of the 21st century. If you want to know more about them, you might like:

- Chapters 5-9 of Modeling and Simulation in Python, now available from No Starch Press and Amazon.com.
- Chapter 3 of Probably Overthinking It, which is available for preorder now.
- This Kurzgesagt video, which was coincidentally published while I was running my poll.

October 2, 2023

What size is that correlation?

This article is related to Chapter 6 of Probably Overthinking It, which is available for preorder now. It is also related to a new course at Brilliant.org, Explaining Variation.

Suppose you find a correlation of 0.36. How would you characterize it? I posed this question to the stalwart few still floating on the wreckage of Twitter, and here are the responses.

It seems like there is no agreement about whether 0.36 is small, medium, or large. In the replies, nearly everyone said it depends on the context, and of course that’s true. But there are two things they might mean, and I only agree with one of them:

1. In different areas of research, you typically find correlations in different ranges, so what’s “small” in one field might be “large” in another.
2. It depends on the goal of the project: that is, what you are trying to predict, explain, or decide.

The first interpretation is widely accepted in the social sciences. For example, this highly cited paper proposes as a guideline that, “an effect-size r of .30 indicates an effect that is large and potentially powerful in both the short and the long run.” This guideline is offered in light of “the average sizes of effects in the published literature of social and personality psychology.”

I don’t think that’s a good argument. If you study mice, and you find a large mouse, that doesn’t mean you found an elephant.

But the same paper offers what I think is better advice: “Report effect sizes in terms that are meaningful in context”. So let’s do that.

I asked about r = 0.36 because that’s the correlation between general mental ability (g) and the general factor of personality (GFP) reported in this paper, which reports meta-analyses of correlations between a large number of cognitive abilities and personality traits.

Now, for purposes of this discussion, you don’t have to believe that g and GFP are valid measures of stable characteristics. Let’s assume that they are — if you are willing to play along — just long enough to ask: if the correlation between them is 0.36, what does that mean?

I propose that the answer depends on whether we are trying to make a prediction, explain a phenomenon, or make decisions that have consequences. Let’s take those in turn.

Prediction

Thinking about correlation in terms of predictive value, let’s assume that we can measure both g and GFP precisely, and that both are expressed as standardized scores with mean 0 and standard deviation 1. If the correlation between them is 0.36, and we know that someone has g=1 (one standard deviation above the mean), we expect them to have GFP=0.36 (0.36 standard deviations above the mean), on average.

In terms of percentiles, someone with g=1 is in the 84th percentile, and we would expect their GFP to be in the 64th percentile. So in that sense, g conveys some information about GFP, but not much.

To quantify predictive accuracy, we have several metrics to choose from. I’ll use mean absolute error (MAE) because I think it is the most interpretable metric of accuracy for a continuous variable. In this scenario, if we know g exactly and use it to predict GFP, the MAE is 0.75, which means that we expect to be off by 0.75 standard deviations, on average.

For comparison, if we don’t know g, and we are asked to guess GFP, we expect to be off by 0.8 standard deviations, on average. Compared to this baseline, knowing g reduces MAE by about 6%. So a correlation of 0.36 doesn’t improve predictive accuracy by much, as I discussed in this previous blog post.
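If we assume g and GFP follow a standard bivariate normal distribution (an assumption for illustration), these numbers can be computed directly: the best prediction of GFP is r times g, the prediction error has standard deviation sqrt(1 - r²), and the MAE of a Gaussian error with standard deviation s is s times sqrt(2/π). The results (about 0.80, 0.74, and a 6.7% improvement) are close to the rounded values quoted above.

```python
import math

r = 0.36  # correlation between g and GFP


def normal_cdf(z):
    """Standard normal CDF, used here to turn z-scores into percentiles."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


# Percentile of g = 1, and of the expected GFP = r * 1.
print(f"g = 1: {normal_cdf(1.0):.0%}, expected GFP: {normal_cdf(r):.0%}")  # 84%, 64%

# MAE of a Gaussian error with standard deviation s is s * sqrt(2 / pi).
mae_baseline = math.sqrt(2 / math.pi)                      # predict 0 when g is unknown
mae_with_g = math.sqrt(1 - r**2) * math.sqrt(2 / math.pi)  # predict r * g
improvement = 1 - mae_with_g / mae_baseline

print(f"MAE without g: {mae_baseline:.3f}")  # 0.798
print(f"MAE with g:    {mae_with_g:.3f}")    # 0.744
print(f"improvement:   {improvement:.1%}")   # 6.7%
```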

Another metric we might consider is classification accuracy. For example, suppose we know that someone has g>0 — so they are smarter than average. We can compute the probability that they also have GFP>0 — informally, they are nicer than average. This probability is about 0.62.

Again, we can compare this result to a baseline where g is unknown. In that case the probability that someone is nicer than average is 0.5. Knowing that someone is smart moves the needle from 0.5 to 0.62, which means that it contributes some information, but not much.

Going in the other direction, if we think of low g as a risk factor for low GFP, the risk ratio would be 1.2. Expressed as an odds ratio it would be 1.6. In medicine, a risk factor with RR=1.2 or OR=1.6 would be considered a small increase in risk. But again, it depends on context — for a common condition with large health effects, identifying a preventable factor with RR=1.2 could be a very important result!
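Under the same bivariate normal assumption, these figures have closed forms. For a standard bivariate normal with correlation r, the probability that both variables are positive is 1/4 + arcsin(r)/(2π). The risk and odds ratios below compare the low-g group to the population as a whole; that is an interpretation of the text, but it reproduces the 1.2 and 1.6 quoted above (comparing low-g to high-g people would give larger ratios).

```python
import math

r = 0.36

# P(g > 0 and GFP > 0) for a standard bivariate normal with correlation r.
p_both_positive = 0.25 + math.asin(r) / (2 * math.pi)

# Given g > 0, the chance that GFP > 0.
p_nice_given_smart = p_both_positive / 0.5

# By symmetry, P(GFP < 0 | g < 0) is the same number.
p_low_given_low = p_nice_given_smart
baseline = 0.5  # P(GFP < 0) when g is unknown


def odds(p):
    return p / (1 - p)


risk_ratio = p_low_given_low / baseline
odds_ratio = odds(p_low_given_low) / odds(baseline)

print(f"P(GFP > 0 | g > 0) = {p_nice_given_smart:.2f}")  # 0.62
print(f"risk ratio = {risk_ratio:.1f}")                   # 1.2
print(f"odds ratio = {odds_ratio:.1f}")                   # 1.6
```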

Explanation

Instead of prediction, suppose you are trying to explain a particular phenomenon and you find a correlation of 0.36 between two relevant variables, A and B. On the face of it, such a correlation is evidence that there is some kind of causal relationship between the variables. But by itself, the correlation gives no information about whether A causes B, B causes A, or any number of other factors cause both A and B.

Nevertheless, it provides a starting place for a hypothetical question like, “If A causes B, and the strength of that causal relationship yields a correlation of 0.36, would that be big enough to explain the phenomenon?” or “What part of the phenomenon could it explain?”

As an example, let’s consider the article that got me thinking about this, which proposes in the title the phenomenon it promises to explain: “Smart, Funny, & Hot: Why some people have it all…”

Supposing that “smart” is quantified by g and that “funny” and other positive personality traits are quantified by GFP, and that the correlation between them is 0.36, does that explain why “some people have it all”?

Let’s say that “having it all” means g>1 and GFP>1. If the factors were uncorrelated, only 2.5% of the population would exceed both thresholds. With correlation 0.36, it would be 5%. So the correlation could explain why people who have it all are about twice as common as they would be otherwise.
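The 2.5% and 5% figures can be checked without simulation, again assuming a standard bivariate normal. The sketch uses the identity that the derivative of the bivariate normal CDF with respect to the correlation equals the bivariate normal density, and integrates that density over the correlation with Simpson's rule, so it needs only the standard library.

```python
import math


def normal_cdf(z):
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


def density_on_diagonal(h, rho):
    """Standard bivariate normal density at the point (h, h) with correlation rho."""
    return math.exp(-h * h / (1 + rho)) / (2 * math.pi * math.sqrt(1 - rho * rho))


def prob_both_above(threshold, rho, steps=100):
    """P(X > t and Y > t) for a standard bivariate normal with correlation rho.

    By symmetry this equals P(X < -t and Y < -t). Start from the independent
    case, Phi(-t) ** 2, then add the integral of the density over correlations
    from 0 to rho (Simpson's rule; steps must be even).
    """
    h = -threshold
    width = rho / steps
    total = 0.0
    for i in range(steps + 1):
        weight = 1 if i in (0, steps) else (4 if i % 2 else 2)
        total += weight * density_on_diagonal(h, i * width)
    return normal_cdf(h) ** 2 + total * width / 3


print(f"uncorrelated: {prob_both_above(1, 0.0):.3f}")   # 0.025
print(f"r = 0.36:     {prob_both_above(1, 0.36):.3f}")  # 0.050
```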

Again, you don’t have to buy any part of this argument, but it is an example of how an observed correlation could explain a phenomenon, and how we could report the effect size in terms that are meaningful in context.

Decision-making

After prediction and explanation, a third use of an observed correlation is to guide decision-making.

For example, in a 2016 article, ProPublica evaluated COMPAS, an algorithm used to inform decisions about bail and parole. They found that its classification accuracy was 0.61, which they characterized as “somewhat better than a coin toss”. For decisions that affect people’s lives in such profound ways, that accuracy is disturbingly low.

But in another context, “somewhat better than a coin toss” can be a big deal. In response to my poll about a correlation of 0.36, one stalwart replied, “In asset pricing? Say as a signal of alpha? So implausibly large as to be dismissed outright without consideration.”

If I understand correctly, this means that if you find a quantity known in the present that correlates with future prices with r = 0.36, you can use that information to make decisions that are substantially better than chance and outperform the market. But it is extremely unlikely that such a quantity exists.

However, if you make a large number of decisions, and the results of those decisions accumulate, even a very small correlation can yield a large effect. The paper I quoted earlier makes a similar observation in the context of individual differences:

“If a psychological process is experimentally demonstrated, and this process is found to appear reliably, then its influence could in many cases be expected to accumulate into important implications over time or across people even if its effect size is seemingly small in any particular instance.”

I think this point is correct, but incomplete. If a small effect accumulates, it can yield big differences, but if that’s the argument you want to make, you have to support it with a model of the aggregation process that estimates the cumulative effect that could result from the observed correlation.
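As one example of the kind of aggregation model this calls for, here is a minimal sketch under strong, hypothetical assumptions: each decision is a +1/-1 bet on the sign of an outcome, the signal and the outcome are standard bivariate normal with correlation r, and the bets are independent. Under those assumptions, the probability of calling the sign correctly is 1/2 + arcsin(r)/π (Sheppard's formula), and a normal approximation gives the chance of finishing ahead after n bets. The function names are illustrative, not from any library.

```python
import math
from statistics import NormalDist


def hit_rate(r):
    """Chance that signal and outcome share a sign (Sheppard's formula),
    assuming they are standard bivariate normal with correlation r."""
    return 0.5 + math.asin(r) / math.pi


def prob_ahead(r, n):
    """Normal approximation to P(net winnings > 0) after n independent
    +1/-1 bets, each placed on the sign of the signal."""
    p = hit_rate(r)
    mean = n * (2 * p - 1)                # expected net gain per bet is 2p - 1
    sd = math.sqrt(n * 4 * p * (1 - p))   # variance of one +1/-1 bet is 4p(1 - p)
    return 1 - NormalDist(mean, sd).cdf(0)


print(f"hit rate with r = 0.36: {hit_rate(0.36):.3f}")
for n in [10, 1_000, 100_000]:
    print(f"r = 0.05, {n:>7} bets: P(ahead) = {prob_ahead(0.05, n):.3f}")
```

Under these assumptions, a correlation of 0.36 yields a hit rate of about 62%, while a correlation of 0.05 yields only about 51.6%; even so, over enough independent bets the chance of finishing ahead approaches 1. A real argument would need a model of how the results actually accumulate, which is the point of the paragraph above.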

Predict, Explain, Decide

Whether a correlation is big or small, important or not, and useful or not, depends on the context, of course. But to be more specific, it depends on whether you are trying to predict, explain, or decide. And what you report should follow:

- If you are making predictions, report a metric of predictive accuracy. For continuous quantities, I think MAE (mean absolute error) is most interpretable. For discrete values, report classification accuracy, or recall and precision, or AUC.
- If you are explaining a phenomenon, use a model to show whether the effect you found is plausibly big enough to explain the phenomenon, or what fraction of it the effect could explain.
- If you are making decisions, use a model to quantify the expected benefit (or, even better, the distribution of benefits). If your argument is that small correlations accumulate into big effects, use a model to show how, and to quantify how much.

As an aside, thinking of modeling in terms of prediction, explanation, and decision-making is the foundation of Modeling and Simulation in Python, now available from No Starch Press and Amazon.com.

September 19, 2023

The Overton Paradox in Three Graphs

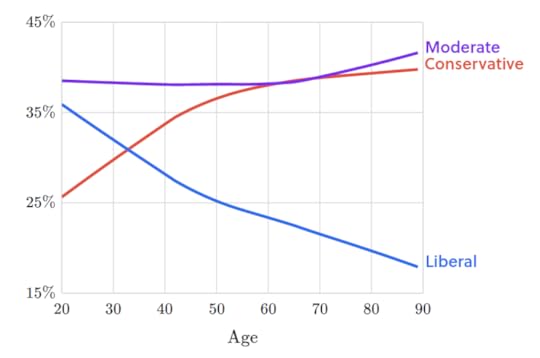

Older people are more likely to say they are conservative.

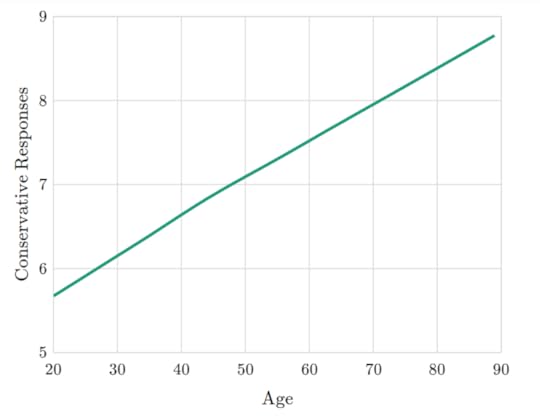

And older people believe more conservative things.

But if you group people by decade of birth, most groups get more liberal as they get older.

So if people get more liberal, on average, why are they more likely to say they are conservative?

Now there are three ways to find out!

- Today Brilliant launched an interactive article explaining the Overton Paradox.
- Also today, the SuperDataScience Podcast published a conversation about Probably Overthinking It, including a discussion of the Overton Paradox. You can also watch the video on YouTube.
- And I gave a talk about it at PyData NYC 2022, which you can watch here.

Since some people have asked, I should say that “Overton Paradox” is the name I am giving this phenomenon. It’s named after the Overton window, for reasons that will be clear if you read my explanation.

September 4, 2023

How Principal Are Your Components?

In a previous post I explored the correlations between measurements in the ANSUR-II dataset, which includes 93 measurements from a sample of U.S. military personnel. I found that measurements of the head were weakly correlated with measurements from other parts of the body – and in particular the protrusion of the ears is almost entirely uncorrelated with anything else.

A friend of mine, and co-developer of the Modeling and Simulation class I taught at Olin, asked whether I had tried running principal component analysis (PCA). I had not, but now I have. Let’s look at the results.

Click here to run this notebook on Colab.

The ANSUR data is available from The OPEN Design Lab.

Explained Variance

Here’s a visualization of explained variance versus number of components.

With one component, we can capture 44% of the variation in the measurements. With two components, we’re up to 62%. After that, the gains are smaller (as we expect), but with 10 components, we get up to 78%.
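For readers who want to reproduce this kind of curve, here is a minimal sketch of how explained variance can be computed with NumPy's SVD. It assumes the measurements are standardized first (PCA on the correlation matrix), which may not match the exact preprocessing in the notebook, and it uses made-up data rather than ANSUR; the function name is hypothetical.

```python
import numpy as np


def explained_variance_ratio(data):
    """Fraction of the total variance captured by each principal component.

    Each column is standardized first, so measurements in different units
    contribute equally (an assumption about preprocessing).
    """
    X = np.asarray(data, dtype=float)
    Z = (X - X.mean(axis=0)) / X.std(axis=0)
    # Squared singular values of the standardized data are proportional
    # to the variances along the principal components (sorted descending).
    s = np.linalg.svd(Z, compute_uv=False)
    variance = s**2
    return variance / variance.sum()


# Hypothetical data: five measurements driven mostly by one shared factor
rng = np.random.default_rng(0)
size = rng.normal(size=1000)
data = np.column_stack([size + 0.5 * rng.normal(size=1000) for _ in range(5)])

cumulative = np.cumsum(explained_variance_ratio(data))
print(np.round(cumulative, 2))
```

Plotting `cumulative` against the number of components gives a curve of the kind described above: a big jump for the first component, then smaller gains.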

Loadings

Looking at the loadings, we can see which measurements contribute the most to each of the components, so we can get a sense of which characteristics each component captures.

I won’t explain all of the measurements, but if there are any you are curious about, you can look them up in The Measurer’s Handbook, which includes details on “sampling strategy and measuring techniques” as well as descriptions and diagrams of the landmarks and measurements between them.
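A list like the one that follows can be produced by sorting each component's coefficients by absolute value. This is a minimal sketch, assuming the loadings are the entries of the unit-length principal axes (the rows of `vt` from NumPy's SVD, analogous to scikit-learn's `PCA.components_`) computed from standardized data; the function name and the toy data are hypothetical. The sign of each component is arbitrary, so a whole component can come out with all of its signs flipped.

```python
import numpy as np


def top_loadings(data, names, n_components=3, top=5):
    """For each of the first n_components, return the `top` (loading, name)
    pairs with the largest absolute loadings."""
    X = np.asarray(data, dtype=float)
    Z = (X - X.mean(axis=0)) / X.std(axis=0)
    # Rows of vt are unit-length principal axes, in decreasing order of variance
    _, _, vt = np.linalg.svd(Z, full_matrices=False)
    result = []
    for axis in vt[:n_components]:
        order = np.argsort(-np.abs(axis))[:top]
        result.append([(round(float(axis[i]), 3), names[i]) for i in order])
    return result


# Hypothetical data: a, b, c share one factor; d and e share another
rng = np.random.default_rng(1)
n = 2000
f1, f2 = rng.normal(size=n), rng.normal(size=n)
columns = [f1 + 0.3 * rng.normal(size=n) for _ in range(3)]
columns += [f2 + 0.3 * rng.normal(size=n) for _ in range(2)]
data = np.column_stack(columns)

for k, pairs in enumerate(top_loadings(data, list("abcde"), n_components=2, top=3), 1):
    print(f"Principal Component {k}:", pairs)
```

In the toy data, the first component picks out the three measurements that share the stronger factor, and the second picks out the other two, which is the same kind of reading applied to the ANSUR components.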

- Principal Component 1: 0.135 suprasternaleheight, 0.134 cervicaleheight, 0.134 buttockkneelength, 0.134 acromialheight, 0.133 kneeheightsitting
- Principal Component 2: 0.166 waistcircumference, -0.163 poplitealheight, 0.163 abdominalextensiondepthsitting, 0.161 waistdepth, 0.159 buttockdepth
- Principal Component 3: 0.338 elbowrestheight, 0.31 eyeheightsitting, 0.307 sittingheight, 0.228 waistfrontlengthsitting, -0.225 heelbreadth
- Principal Component 4: 0.247 balloffootcircumference, 0.232 bimalleolarbreadth, 0.22 footbreadthhorizontal, 0.218 handbreadth, 0.212 sittingheight
- Principal Component 5: 0.319 interscyeii, 0.292 biacromialbreadth, 0.275 shoulderlength, 0.273 interscyei, 0.184 shouldercircumference
- Principal Component 6: -0.34 headcircumference, -0.321 headbreadth, 0.316 shoulderlength, -0.277 tragiontopofhead, -0.262 interpupillarybreadth
- Principal Component 7: 0.374 crotchlengthposterioromphalion, -0.321 earbreadth, -0.298 earlength, -0.284 waistbacklength, 0.253 crotchlengthomphalion
- Principal Component 8: 0.472 earprotrusion, 0.346 earlength, 0.215 crotchlengthposterioromphalion, -0.202 wristheight, 0.195 overheadfingertipreachsitting
- Principal Component 9: -0.299 tragiontopofhead, 0.294 crotchlengthposterioromphalion, -0.253 bicristalbreadth, -0.228 shoulderlength, 0.189 neckcircumferencebase
- Principal Component 10: 0.406 earbreadth, 0.356 earprotrusion, -0.269 waistfrontlengthsitting, 0.239 earlength, -0.228 waistbacklength

Here’s my interpretation of the first few components.

- Not surprisingly, the first component is loaded with measurements of height. If you want to predict someone’s measurements, and can only use one number, choose height.
- The second component is loaded with measurements of girth. No surprises so far.
- The third component seems to capture torso length. That makes sense: once you know how tall someone is, it helps to know how that height is split between torso and legs.
- The fourth component seems to capture hand and foot size (with sitting height thrown in just to remind us that PCA is not obligated to find components that align perfectly with the axes we expect).
- Component 5 is all about the shoulders.
- Component 6 is mostly about the head.

After that, things are not so neat. But two things are worth noting:

- Component 7 is mostly related to the dimensions of the pelvis, but…
- Components 7, 8, and 10 are surprisingly loaded up with ear measurements.

As we saw in the previous article, there seems to be something special about ears. Once you have exhausted the information carried by the most obvious measurements, the dimensions of the ear seem to be strangely salient.

August 27, 2023

Taming Black Swans

At SciPy 2023 I presented a talk called “Taming Black Swans: Long-tailed distributions in the natural and engineered world”. Here’s the abstract:

Long-tailed distributions are common in natural and engineered systems; as a result, we encounter extreme values more often than we would expect from a short-tailed distribution. If we are not prepared for these “black swans”, they can be disastrous.

But we have statistical tools for identifying long-tailed distributions, estimating their parameters, and making better predictions about rare events.

In this talk, I present evidence of long-tailed distributions in a variety of datasets — including earthquakes, asteroids, and stock market crashes — discuss statistical methods for dealing with them, and show implementations using scientific Python libraries.
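To make “more often than we would expect” concrete, here is a small sketch comparing the chance of an extreme value under a Gaussian and under a lognormal distribution with the same mean and standard deviation. The lognormal is used here only as a convenient example of a longer-tailed distribution whose tail probabilities can be computed with the standard library; the parameters and function names are hypothetical.

```python
import math
from statistics import NormalDist


def lognormal_moments(mu, sigma):
    """Mean and standard deviation of a lognormal with log-scale parameters mu, sigma."""
    mean = math.exp(mu + sigma**2 / 2)
    var = (math.exp(sigma**2) - 1) * math.exp(2 * mu + sigma**2)
    return mean, math.sqrt(var)


def tail_probabilities(mu, sigma, k):
    """P(X > mean + k*sd) under a lognormal and under a Gaussian with matching moments."""
    mean, sd = lognormal_moments(mu, sigma)
    threshold = mean + k * sd
    # If X is lognormal, log(X) is normal with parameters mu and sigma
    p_lognormal = 1 - NormalDist(mu, sigma).cdf(math.log(threshold))
    p_gaussian = 1 - NormalDist(mean, sd).cdf(threshold)
    return p_lognormal, p_gaussian


p_long, p_short = tail_probabilities(mu=0.0, sigma=1.0, k=5)
print(f"lognormal: {p_long:.2e}")
print(f"Gaussian:  {p_short:.2e}")
print(f"ratio:     {p_long / p_short:,.0f}")
```

With these parameters, a value more than five standard deviations above the mean is a roughly one-in-3.5-million event under the Gaussian model, but happens a few times in a thousand under the lognormal model.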

The video from the talk is on YouTube now:

I didn’t choose the thumbnail, but I like it.

Here are the slides, which have links to the resources I mentioned.

Don’t tell anyone, but this talk is part of my stealth book tour!

- It started in 2019, when I presented a talk at PyData NYC based on Chapter 2: Relay Races and Revolving Doors.
- In 2022, I presented another talk at PyData NYC, based on Chapter 12: Chasing the Overton Window.
- In May I presented a talk at ODSC East based on Chapter 7: Causation, Collision, and Confusion.
- And this talk is based on Chapter 8: The Long Tail of Disaster.
- If things go according to plan, I’ll present Chapter 1 at a book event at the Needham Public Library on December 7.

More chapters coming soon!
