Nicholas C. Zakas's Blog, page 12

October 9, 2012

ECMAScript 6 collections, Part 2: Maps

Maps[1], like sets, are a familiar topic for those coming from other languages. The basic idea is to map a value to a unique key in such a way that you can retrieve that value at any point in time by using the key. In JavaScript, developers have traditionally used regular objects as maps. In fact, JSON is based on the premise that objects represent key-value pairs. However, the same limitation that affects objects used as sets also affects objects used as maps: the inability to have non-string keys.

Prior to ECMAScript 6, you might have seen code that looked like this:

var map = {};

// later
if (!map[key]) {
    map[key] = value;
}

This code uses a regular object to act like a map, checking to see if a given key exists. The biggest limitation here is that key is always converted into a string. That’s not a big deal until you want to use a non-string value as a key. For example, maybe you want to store some data that relates to a particular DOM element. You could try to do this:

// element gets converted to a string
var data = {},
    element = document.getElementById("my-div");

data[element] = metadata;

Unfortunately, element will be converted into the string "[object HTMLDivElement]" or something similar (the exact value may differ depending on the browser). That’s problematic because every div element gets converted into the same string, meaning you will constantly be overwriting the same key even though you’re technically using different elements. For this reason, the Map type is a welcome addition to JavaScript.
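The collision is easy to reproduce outside a browser. In this minimal sketch, two plain objects are hypothetical stand-ins for DOM elements (an assumption so the code runs in any JavaScript environment); both convert to the same string key, so the second assignment silently overwrites the first:

```javascript
// Two distinct objects, used as hypothetical stand-ins for DOM elements.
var first = { id: "first" },
    second = { id: "second" };

var data = {};

// Both objects are converted to the string "[object Object]" when used as property names.
data[first] = "metadata for first";
data[second] = "metadata for second";

var keys = Object.keys(data);   // ["[object Object]"]: only one key exists
var lookup = data[first];       // "metadata for second": the first value was overwritten
```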

The ECMAScript 6 Map type is an ordered list of key-value pairs where both the key and the value can be of any type. A key of 5 is different from a key of "5", and keys are determined to be the same using the same rules as values for a set: NaN is considered the same as NaN, -0 is different from 0, and otherwise === applies. You can store and retrieve data from a map using the set() and get() methods, respectively:

var map = new Map();

map.set("name", "Nicholas");
map.set(document.getElementById("my-div"), { flagged: false });

// later
var name = map.get("name"),
    meta = map.get(document.getElementById("my-div"));

In this example, two key-value pairs are stored. The key "name" stores a string, while the key document.getElementById("my-div") is used to associate metadata with a DOM element. If the key doesn’t exist in the map, then the special value undefined is returned when calling get().
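A runnable sketch of these rules, assuming an engine that implements the finalized ECMAScript 2015 specification (a current browser or Node.js). A plain object is a hypothetical stand-in for the DOM element. One detail differs from the draft described above: the final standard compares keys with the SameValueZero algorithm, so -0 and 0 end up as the same key.

```javascript
var map = new Map();

// Hypothetical stand-in for a DOM element, so this runs outside a browser.
var element = { tagName: "DIV" };

map.set("name", "Nicholas");
map.set(element, { flagged: false });

var storedName = map.get("name");            // "Nicholas"
var meta = map.get(element);                 // { flagged: false }

// Object keys match by identity, so a look-alike object is a different key.
var lookalike = map.get({ tagName: "DIV" }); // undefined
var missing = map.get("title");              // undefined

// Keys are not coerced: 5 and "5" are separate entries, and NaN matches NaN.
map.set(5, "number five");
map.set("5", "string five");
map.set(NaN, "not a number");

// Finalized ES2015 behavior (SameValueZero): -0 and 0 are the same key.
map.set(-0, "zero");
var zero = map.get(0);                       // "zero"
```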

Maps share a couple of methods with sets, such as has() for determining if a key exists in the map and delete() for removing a key-value pair from the map. You can also use size() to determine how many items are in the map:

var map = new Map();

map.set("name", "Nicholas");
console.log(map.has("name"));   // true
console.log(map.get("name"));   // "Nicholas"
console.log(map.size());        // 1

map.delete("name");
console.log(map.has("name"));   // false
console.log(map.get("name"));   // undefined
console.log(map.size());        // 0
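The finalized ECMAScript 2015 specification changed one detail shown here: size became a read-only property rather than a method. The final standard also has set() return the map itself (so calls chain) and delete() return a boolean. A sketch assuming an engine that implements the final standard:

```javascript
var map = new Map();

// set() returns the map, so calls can be chained.
map.set("name", "Nicholas").set("title", "Author");

var sizeBefore = map.size;              // 2 (a property in ES2015, not a method)

var removed = map.delete("name");       // true: a pair was removed
var removedAgain = map.delete("name");  // false: the key was already gone

var sizeAfter = map.size;               // 1
```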

In order to make it easier to add large amounts of data to a map, you can pass an array of arrays to the Map constructor. Conceptually, each key-value pair is represented as an array with two items, the first being the key and the second being the value. The entire map, therefore, can be thought of as an array of these two-item arrays, and so maps can be initialized using that format:

var map = new Map([["name", "Nicholas"], ["title", "Author"]]);

console.log(map.has("name"));   // true
console.log(map.get("name"));   // "Nicholas"
console.log(map.has("title"));  // true
console.log(map.get("title"));  // "Author"
console.log(map.size());        // 2
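Because the constructor accepts the same pair format that iterating a map produces (in an ES2015 engine), a map can be round-tripped through an array. A sketch using Array.from(), which is part of the finalized standard:

```javascript
var map = new Map([["name", "Nicholas"], ["title", "Author"]]);

// Array.from() walks the map's default iterator and collects [key, value] pairs.
var pairs = Array.from(map);        // [["name", "Nicholas"], ["title", "Author"]]

// The same format can be passed straight back to the constructor to copy a map.
var copy = new Map(pairs);
copy.set("name", "Someone else");

var original = map.get("name");     // "Nicholas": the copy is independent
```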

When you want to work with all of the data in the map, you have several options. There are actually three generator methods to choose from: keys(), which iterates over the keys in the map; values(), which iterates over the values in the map; and items(), which iterates over key-value pairs by returning an array containing the key and the value (items() is the default iterator for maps). The easiest way to make use of these is to use a for-of loop:

for (let key of map.keys()) {
    console.log("Key: %s", key);
}

for (let value of map.values()) {
    console.log("Value: %s", value);
}

for (let item of map.items()) {
    console.log("Key: %s, Value: %s", item[0], item[1]);
}

// same as using map.items()
for (let item of map) {
    console.log("Key: %s, Value: %s", item[0], item[1]);
}

When iterating over keys or values, you receive a single value each time through the loop. When iterating over items, you receive an array whose first item is the key and the second item is the value.
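In the finalized ECMAScript 2015 specification, the pair iterator shipped as entries() rather than items(), and destructuring removes the need for item[0] and item[1]. A sketch assuming an ES2015 engine:

```javascript
var map = new Map([["name", "Nicholas"], ["title", "Author"]]);
var lines = [];

// Destructuring unpacks each [key, value] pair; "for (let [key, value] of map)" is equivalent.
for (let [key, value] of map.entries()) {
    lines.push(key + ": " + value);
}

// lines is ["name: Nicholas", "title: Author"] (insertion order is preserved)
```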

Another way to iterate over items is to use the forEach() method. This method works in a similar manner to forEach() on arrays. You pass in a function that gets called with three arguments: the value, the key, and the map itself. For example:

map.forEach(function(value, key, map) {
    console.log("Key: %s, Value: %s", key, value);
});
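Note that the value comes before the key, mirroring the array version where the element comes before its index. This sketch records the arguments so the order is visible (runnable in an ES2015 engine; the ownerMap parameter name is only illustrative):

```javascript
var map = new Map([["name", "Nicholas"], ["title", "Author"]]);
var seen = [];

map.forEach(function(value, key, ownerMap) {
    // Arguments arrive as value, then key, then the map being iterated.
    seen.push([key, value, ownerMap === map]);
});

// seen is [["name", "Nicholas", true], ["title", "Author", true]]
```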

Also similar to the array version of forEach(), you can pass in an optional second argument to specify the this value to use inside the callback:

var reporter = {
    report: function(key, value) {
        console.log("Key: %s, Value: %s", key, value);
    }
};

map.forEach(function(value, key, map) {
    this.report(key, value);
}, reporter);

Here, the this value inside of the callback function is equal to reporter. That allows this.report() to work correctly.

Compare this to the clunky way of iterating over values in a regular object:

for (let key in object) {
    // make sure it's not from the prototype!
    if (object.hasOwnProperty(key)) {
        console.log("Key: %s, Value: %s", key, object[key]);
    }
}

When using objects as maps, there was always a concern that properties from the prototype might leak through in a for-in loop. You always need to use hasOwnProperty() to be certain that you are getting only the properties that you want. Of course, if there are methods on the object, you also have to filter those out:

for (let key in object) {
    // make sure it's not from the prototype or a function!
    if (object.hasOwnProperty(key) && typeof object[key] !== "function") {
        console.log("Key: %s, Value: %s", key, object[key]);
    }
}

The iteration features of maps allow you to focus on just the data without worrying about extra pieces of information slipping into your code. This is another big benefit of maps over regular objects for storing key-value pairs.
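The difference is easy to demonstrate. In this sketch, an object inherits a property from a hypothetical prototype; for-in reports that inherited key, while iterating a map's keys reports only what was explicitly stored (assumes an ES2015 engine for Map and Array.from()):

```javascript
// A hypothetical prototype whose property leaks into for-in loops.
var proto = { inherited: "from the prototype" };
var object = Object.create(proto);
object.name = "Nicholas";

var objectKeys = [];
for (var key in object) {
    objectKeys.push(key);               // visits "name" and also "inherited"
}

var map = new Map([["name", "Nicholas"]]);
var mapKeys = Array.from(map.keys());   // ["name"] only
```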

Browser Support

Both Firefox and Chrome have implemented Map; however, in Chrome you need to manually enable ECMAScript 6 features: go to chrome://flags and enable "Experimental JavaScript Features". Both implementations are incomplete. Neither browser implements any of the generator methods for use with for-of, and Chrome’s implementation is missing the size() method (which is part of the ECMAScript 6 draft specification[2]) and its constructor doesn’t do initialization when passed an array of arrays.

Summary

ECMAScript 6 maps bring a very important, and often used, feature to the language. Developers have long wanted a reliable way to store key-value pairs and have relied on regular objects for far too long. Maps provide the abilities that regular objects lack, including easy ways to iterate over keys and values and freedom from concerns about the prototype.

As with sets, maps are part of the ECMAScript 6 draft that is not yet complete. Because of that, maps are still considered an experimental API and may change before the specification is finalized. All posts about ECMAScript 6 should be considered previews of what’s coming, and not definitive references. The experimental APIs, although implemented in some browsers, are not yet ready to be used in production.

References

Simple Maps and Sets (ES6 Wiki)

ECMAScript 6 Draft Specification (ECMA)

October 4, 2012

Thoughts on TypeScript

Earlier this week, Microsoft released TypeScript[1], a new compile-to-JavaScript language for “application scale JavaScript.” My initial reaction was confusion:

Um, why? blogs.msdn.com/b/somasegar/ar… (via @izs)

— Nicholas C. Zakas (@slicknet) October 1, 2012

It seems like almost every week there’s a new language that’s trying to replace JavaScript on the web. Google received a lukewarm reception when it introduced Dart[2], its own idea for fixing all of JavaScript’s perceived flaws. CoffeeScript[3] continues to be the most prominent of these options, frequently inciting holy wars online. And now Microsoft is throwing its hat into the ring and I couldn’t help but wonder why.

My bias

Before talking about TypeScript specifically, I want to explain my personal bias so that you can take the rest of my comments in their proper context. There is a very real problem in the web development industry and that problem is a significant lack of good JavaScript developers. I can’t tell you the number of companies that contact me trying to find above-average JavaScript talent to work on their applications. Yes, there are many more competent JavaScript developers now than there were 10 years ago, but the demand has increased in a way that far outpaces the supply increase. There are simply not enough people to fill all of the JavaScript jobs that are available. That’s a problem.

Some would argue that the high demand and low supply puts good JavaScript developers in an awesome position and we should never want to change that. After all, that’s why we can demand the salaries that we do. From a personal economic standpoint, I agree. From the standpoint of wanting to improve the web, I disagree. Yes, I want to be able to make a good living doing what I do, but I also want the web as a whole to continue to grow and get better, and that only happens when we have more competent developers entering the workforce.

I see compile-to-JavaScript languages as a barrier to that goal. We should be convincing more people to learn JavaScript rather than giving them more options to not write JavaScript. I often wonder what would happen if all of the teams and companies who spent time, energy, personnel, and money to develop these alternatives instead used those resources on improving JavaScript and teaching it.

To be clear, I’m not saying that JavaScript is a perfect language and doesn’t have its warts. Every language I’ve ever used has parts that suck and parts that are awesome, and JavaScript is no different. I do believe that JavaScript has to evolve and that necessarily introduces more parts that will suck as well as more parts that are awesome. I just wish we were all spending our efforts in the same area rather than splintering them across different projects.

What is TypeScript?

I spent a lot of time this week looking at TypeScript, reading through the documentation, and watching the video on the site. I was then invited by Rey Bango to meet with a couple of members of the TypeScript team to have my own questions answered. With all of that background, I feel like I have a very good idea about what TypeScript is and what it is not.

TypeScript is first and foremost a superset of JavaScript. That means you can write regular JavaScript inside of TypeScript and it is completely valid. TypeScript adds additional features on top of JavaScript that then get converted into ECMAScript 5 compatible code by the TypeScript compiler. This is an interesting approach and one that’s quite different from the other compile-to-JavaScript languages out there. Instead of creating a completely new language with new syntax rules, TypeScript starts with JavaScript and adds additional features that fit in with the syntax quite nicely.

At its most basic, TypeScript allows you to annotate variables, function arguments, and function return values with type information. This additional information allows tools to provide better autocomplete and error checking than you could get using normal JavaScript. The syntax is borrowed from the original JavaScript 2/ECMAScript 4 proposal[4] that was also implemented as ActionScript 3:

var myName: string = "Nicholas";

function add(num1: number, num2: number): number {
    return num1 + num2;
}

// uppercase only the first character
function capitalize(name: string): string {
    return name.charAt(0).toUpperCase() + name.slice(1);
}

The colon syntax may look familiar if you ever used Pascal or Delphi, both of which use the same syntax for indicating the type. The strings, numbers, and booleans in JavaScript are represented in TypeScript as string, number, and bool (note: all lowercase). These annotations help the TypeScript compiler figure out if you are using correct values. For example, the following would cause a warning:

// warning: add() was defined to accept numbers
var result = add("a", "b");

Since add() was defined to accept numbers, this code causes a warning from the TypeScript compiler.

TypeScript is also smart enough to infer types when there is an assignment. For example, each of these declarations is automatically assigned a type:

var count = 10;          // assume ": number"
var name = "Nicholas";   // assume ": string"
var found = false;       // assume ": bool"

That means to get some benefit out of TypeScript, you don’t necessarily have to add type annotations everywhere. You can choose not to add type annotations and let the compiler try to figure things out, or you can add a few type annotations to help out.

Perhaps the coolest part of these annotations is the ability to properly annotate callback functions. Suppose you want to run a function on every item in an array, similar to Array.prototype.forEach(). Using JavaScript, you would define something like this:

function doStuffOnItems(array, callback) {
    var i = 0,
        len = array.length;

    while (i < len) {
        callback(array[i], i, array);
        i++;
    }
}

The callback function accepts three arguments: a value, an index, and the array itself. There’s no way to know that aside from reading the code. In TypeScript, you can annotate the function arguments to be more specific:

function doStuffOnItems(array: string[],
        callback: (value: string, i: number, array: string[]) => void) {
    var i = 0,
        len = array.length;

    while (i < len) {
        callback(array[i], i, array);
        i++;
    }
}

This code adds annotations to both arguments of doStuffOnItems(). The first argument is defined as an array of strings, and the second argument is defined as a function accepting three arguments. Note that the format for defining a function type is the ECMAScript 6 fat arrow function syntax.[5] With that in place, the compiler can check to see that a function matches the signature before the code is ever executed.
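Since the annotations are removed at compile time, the compiled function should behave like the plain JavaScript version; the annotation only documents the contract a callback must honor. A usage sketch of the JavaScript version with a callback that follows the (value, index, array) order:

```javascript
function doStuffOnItems(array, callback) {
    var i = 0,
        len = array.length;

    while (i < len) {
        callback(array[i], i, array);
        i++;
    }
}

var seen = [];

// The callback receives the item, its index, and the whole array, in that order.
doStuffOnItems(["a", "b", "c"], function(value, i, array) {
    seen.push(i + ":" + value + "/" + array.length);
});

// seen is ["0:a/3", "1:b/3", "2:c/3"]
```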

The type annotations really are the core of TypeScript and what it was designed to do. By having this additional information, editors can be made that not only do type checking of code before it’s executed, but also provide better autocomplete support as you’re coding. TypeScript already has plug-ins for Visual Studio, Vim, Sublime Text 2, and Emacs,[6] so there are lots of options to try it out.

Additional features

While the main point of TypeScript is to provide some semblance of static typing to JavaScript, it doesn’t stop there. TypeScript also has support for ECMAScript 6 classes[7] and modules[8] (as they are currently defined). That means you can write something like this:

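class Rectangle {
    // properties must be declared before the constructor can assign them
    length: number;
    width: number;

    constructor(length: number, width: number) {
        this.length = length;
        this.width = width;
    }

    area() {
        return this.length * this.width;
    }
}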

And TypeScript converts it into this:

var Rectangle = (function () {
    function Rectangle(length, width) {
        this.length = length;
        this.width = width;
    }

    Rectangle.prototype.area = function () {
        return this.length * this.width;
    };

    return Rectangle;
})();

Note that the constructor function is created appropriately and the one method is properly placed onto the prototype.
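Because the output is ordinary JavaScript, it can be used and inspected directly. This sketch repeats the compiled output so it runs on its own and checks that area() lives on the prototype, shared by every instance, rather than being copied onto each object:

```javascript
// The compiled output, used from plain JavaScript.
var Rectangle = (function () {
    function Rectangle(length, width) {
        this.length = length;
        this.width = width;
    }
    Rectangle.prototype.area = function () {
        return this.length * this.width;
    };
    return Rectangle;
})();

var rect = new Rectangle(5, 10);

var area = rect.area();                               // 50
var ownArea = rect.hasOwnProperty("area");            // false: area() is on the prototype
var shared = rect.area === new Rectangle(1, 1).area;  // true: one function for all instances
```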

Aside from modules and classes, TypeScript also introduces the ability to define interfaces. Interfaces are not defined in ECMAScript 6 at all but are helpful to TypeScript when it comes to type checking. Since JavaScript code tends to define a large number of object literals, interfaces provide an easy way to validate that the right type of object is being used. For example:

interface Point {
    x: number;
    y: number;
}

function getDistance(pointA: Point, pointB: Point) {
    return Math.sqrt(
        Math.pow(pointB.x - pointA.x, 2) +
        Math.pow(pointB.y - pointA.y, 2)
    );
}

var result = getDistance({ x: -2, y: -3 }, { x: -4, y: 4 });

In this code, there’s an interface called Point with two properties, x and y. The getDistance() function accepts two points and calculates the distance between them. The two arguments can be any objects with numeric x and y properties, meaning I can pass in object literals and TypeScript will check to ensure that they contain the correct properties.
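Because the interface is erased during compilation, the emitted JavaScript for getDistance() should be essentially the same function without annotations (a sketch; actual compiler output may differ in formatting). Working through the example call: the x difference is -4 - (-2) = -2 and the y difference is 4 - (-3) = 7, so the distance is the square root of 4 + 49 = 53.

```javascript
function getDistance(pointA, pointB) {
    return Math.sqrt(
        Math.pow(pointB.x - pointA.x, 2) +
        Math.pow(pointB.y - pointA.y, 2)
    );
}

// dx = -2 and dy = 7, so the result is Math.sqrt(4 + 49), about 7.28
var result = getDistance({ x: -2, y: -3 }, { x: -4, y: 4 });
```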

Both interfaces and classes feed into the type system to provide better error checking. Modules are just ways to group related functionality together.

What I like

The more I played with TypeScript, the more I found parts of it that I really like. First and foremost, I like that you can write regular JavaScript inside of TypeScript. Microsoft isn’t trying to create a completely new language; they are trying to augment JavaScript in a useful way. I can appreciate that. I also like that the code compiles down into regular JavaScript that actually makes sense. Debugging TypeScript-generated code isn’t all that difficult because it uses familiar patterns.

What impressed me the most is what TypeScript doesn’t do. It doesn’t output type checking into your JavaScript code. All of those type annotations and error checking are designed to be used only while you’re developing. The final code doesn’t do any type checking unless you are doing it manually using JavaScript code. Classes and modules get converted into regular JavaScript while interfaces completely disappear. No code for interfaces ever appears in the final JavaScript because they are used purely during development time for type checking and autocomplete purposes.
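To make that concrete, here is a sketch of roughly what add() looks like once the annotations are stripped, plus a hypothetical hand-written guard (addChecked() is an illustrative name, not something the compiler generates) for cases where a run-time check is genuinely wanted:

```javascript
// Roughly what the compiler emits for add(): the annotations are simply removed.
function add(num1, num2) {
    return num1 + num2;
}

var sum = add(1, 2);          // 3
var joined = add("a", "b");   // "ab": nothing stops the call at run time

// Hypothetical manual guard, written in plain JavaScript.
function addChecked(num1, num2) {
    if (typeof num1 !== "number" || typeof num2 !== "number") {
        throw new TypeError("addChecked() expects two numbers");
    }
    return num1 + num2;
}
```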

The editor integration for TypeScript is quite good. All you have to do is add a few annotations and all of a sudden the editor starts to light up with potential errors and suggestions. The ability to explicitly define expectations for callback functions is especially impressive, since that’s the one area where I tend to see a lot of issues related to passing incorrect values into functions.

I also like that Microsoft open-sourced TypeScript. They seem to be committed to developing this in the open and to developing a community around TypeScript. Whether or not they follow through and actually operate as an open source project remains to be seen, but they’ve at least taken steps to allow for that possibility.

What I don’t like

While I applaud Microsoft’s decision to use ECMAScript 6 classes, I fear it puts the language in a difficult position. According to the TypeScript team members I spoke with, they’re absolutely planning on staying in sync with ECMAScript 6 syntax for modules and classes. That’s a great approach in theory because it encourages people to learn skills that will be useful in the future. In reality, that’s a difficult proposition because ECMAScript 6 is not yet complete and there is no guarantee that the syntax won’t change again before the specification is finished. That puts the TypeScript team in a very difficult position: continue to update the syntax to reflect the current reality of ECMAScript 6 or lag behind (possibly fork?) in order to keep their development environment stable.

The same goes for the type annotations. While there is significant prior work indicating that the colon syntax will work in JavaScript, there’s no guarantee that it will ever be added to the language. That means what TypeScript is currently doing may end up at odds with what ECMAScript eventually does. That will also lead to a decision as to which way to go.

The TypeScript team is hoping that a community will evolve around the language and tools in order to help inform them of which direction to go when these sorts of decisions appear. That’s also a double-edged sword. If they succeed in creating a large community around TypeScript, it’s quite possible that the community will decide to move away from the ECMAScript standard rather than stick with it, due to the high maintenance cost of upgrading existing code.

And I really don’t like having a primitive type named bool. I already told them I’d like to see that changed to boolean so that it maps back to the values returned from typeof, along with string and number.
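The mismatch is easy to see from plain JavaScript: typeof reports "boolean", not "bool", alongside "string" and "number". A tiny sketch:

```javascript
// The names typeof reports for the three primitive types discussed above.
var names = [typeof "Nicholas", typeof 10, typeof false];
// names is ["string", "number", "boolean"]
```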

Should you use it?

I think TypeScript has a lot of promise but keep one thing in mind: the current offering is an early alpha release. It may not look like that from the website, which is quite polished, or the editor plug-ins, or the fact that the version number is listed as 0.8.0, but I did confirm with the TypeScript team that they consider this a very early experimental release to give developers a preview of what’s coming. That means things may change significantly over the next year before TypeScript stabilizes (probably as ECMAScript 6 stabilizes).

So is it worth using now? I would say only experimentally and to provide feedback to the TypeScript team. If you choose to use TypeScript for your regular work, you do so at your own risk and I highly recommend that you stick to using type annotations and interfaces exclusively because these are removed from compiled code and less likely to change since they are not directly related to ECMAScript 6. I would avoid classes, modules, and anything else that isn’t currently supported in ECMAScript 5.

Conclusion

TypeScript offers something very different from the other compile-to-JavaScript languages in that it starts with JavaScript and adds additional features on top of it. I’m happy that regular JavaScript can be written in TypeScript and still benefit from some of the type checking provided by the TypeScript compiler. That means writing TypeScript can actually help people learn JavaScript, which makes me happy. There’s no doubt that these type annotations can create a better development experience when integrated with editors. Once ECMAScript 6 is finalized, I can see a big use for TypeScript, allowing developers to write ECMAScript 6 code that will still work in browsers that don’t support it natively. We are still a long way from that time, but in the meantime, TypeScript is worth keeping an eye on.

References

TypeScript (typescriptlang.org)

Dart (dartlang.org)

CoffeeScript (coffeescript.org)

Proposed ECMAScript 4th Edition – Language Overview (ECMA)

ECMAScript 6 Arrow Function Syntax (ECMA)

Sublime Text, Vi, Emacs: TypeScript enabled! (MSDN)

ECMAScript 6 Maximally Minimal Classes (ECMA)

ECMAScript 6 Modules (ECMA)

October 2, 2012

Computer science in JavaScript: Merge sort

Merge sort is arguably the first useful sorting algorithm you learn in computer science. Merge sort has a complexity of O(n log n), making it one of the more efficient sorting algorithms available. Additionally, merge sort is a stable sort (just like insertion sort) so that the relative order of equivalent items remains the same before and after the sort. These advantages are why Firefox and Safari use merge sort for their implementation of Array.prototype.sort().

The algorithm for merge sort is based on the idea that it’s easier to merge two already sorted lists than it is to deal with a single unsorted list. To that end, merge sort starts by creating n one-item lists, where n is the total number of items in the original list to sort. Then, the algorithm proceeds to combine these one-item lists back into a single sorted list.

The merging of two lists that are already sorted is a pretty straightforward algorithm. Assume you have two lists, list A and list B. You start from the front of each list and compare the two values. Whichever value is smaller is inserted into the results array. So suppose the smaller value is from list A; that value is placed into the results array. Next, the second value from list A is compared to the first value in list B. Once again, the smaller of the two values is placed into the results list. So if the smaller value is now from list B, then the next step is to compare the second item from list A to the second item in list B. The code for this is:

function merge(left, right){
    var result = [],
        il = 0,
        ir = 0;

    // take from left on ties (<=) so equal items keep their original order
    while (il < left.length && ir < right.length){
        if (left[il] <= right[ir]){
            result.push(left[il++]);
        } else {
            result.push(right[ir++]);
        }
    }

    return result.concat(left.slice(il)).concat(right.slice(ir));
}

This function merges two arrays, left and right. The il variable keeps track of the index to compare for left while ir does the same for right. Each time a value from one array is added, its corresponding index variable is incremented. As soon as one of the arrays has been exhausted, then the remaining values are added to the end of the result array using concat().
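A small worked example: when merging [1, 5] with [2, 3, 4, 8, 9], the loop stops as soon as 5 is taken and left is exhausted, and concat() then appends the leftover 8 and 9. This sketch repeats merge() so it runs on its own, using <= so that ties favor the left array:

```javascript
function merge(left, right) {
    var result = [],
        il = 0,
        ir = 0;

    // Take the smaller front item each time; on ties, take from left to keep the sort stable.
    while (il < left.length && ir < right.length) {
        if (left[il] <= right[ir]) {
            result.push(left[il++]);
        } else {
            result.push(right[ir++]);
        }
    }

    // One side is exhausted; append whatever remains (one of these slices is empty).
    return result.concat(left.slice(il)).concat(right.slice(ir));
}

// The loop produces [1, 2, 3, 4, 5]; concat() then appends the leftover [8, 9].
var merged = merge([1, 5], [2, 3, 4, 8, 9]);   // [1, 2, 3, 4, 5, 8, 9]
```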

The merge() function is pretty simple but now you need two sorted lists to combine. As mentioned before, this is done by splitting an array into numerous one-item lists and then combining those lists systematically. This is easily done using a recursive algorithm such as this:

function mergeSort(items){

    // Terminal case: 0 or 1 item arrays don't need sorting
    if (items.length < 2) {
        return items;
    }

    var middle = Math.floor(items.length / 2),
        left = items.slice(0, middle),
        right = items.slice(middle);

    return merge(mergeSort(left), mergeSort(right));
}

The first thing to note is the terminal case of an array that contains zero or one items. These arrays don’t need to be sorted and can be returned as is. For arrays with two or more values, the array is first split in half creating left and right arrays. Each of these arrays is then passed back into mergeSort() with the results passed into merge(). So the algorithm is first sorting the left half of the array, then sorting the right half of the array, then merging the results. Through this recursion, eventually you’ll get to a point where two single-value arrays are merged.
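The order of the recursion is easier to see with a trace. This sketch uses a hypothetical mergeSortTraced() (not part of the original code) that follows the same split-and-merge steps but records every merge it performs; for [5, 2, 4, 1], the left half is fully sorted before the right half, and the final merge happens last:

```javascript
function merge(left, right) {
    var result = [],
        il = 0,
        ir = 0;

    while (il < left.length && ir < right.length) {
        if (left[il] <= right[ir]) {
            result.push(left[il++]);
        } else {
            result.push(right[ir++]);
        }
    }
    return result.concat(left.slice(il)).concat(right.slice(ir));
}

// Hypothetical traced variant: same algorithm, but appends a line to log per merge.
function mergeSortTraced(items, log) {
    if (items.length < 2) {
        return items;
    }

    var middle = Math.floor(items.length / 2),
        left = mergeSortTraced(items.slice(0, middle), log),
        right = mergeSortTraced(items.slice(middle), log),
        merged = merge(left, right);

    log.push(JSON.stringify(left) + " + " + JSON.stringify(right) +
        " -> " + JSON.stringify(merged));
    return merged;
}

var log = [];
var sorted = mergeSortTraced([5, 2, 4, 1], log);
// log is:
//   "[5] + [2] -> [2,5]"
//   "[4] + [1] -> [1,4]"
//   "[2,5] + [1,4] -> [1,2,4,5]"
```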

This implementation of merge sort returns a different array than the one that was passed in (this is not an “in-place” sort). If you would like to create an in-place sort, then you can always empty the original array and refill it with the sorted items:

function mergeSort(items){

    if (items.length < 2) {
        return items;
    }

    var middle = Math.floor(items.length / 2),
        left = items.slice(0, middle),
        right = items.slice(middle),
        params = merge(mergeSort(left), mergeSort(right));

    // Add the arguments to replace everything between 0 and last item in the array
    params.unshift(0, items.length);
    items.splice.apply(items, params);
    return items;
}

This version of the mergeSort() function stores the results of the sort in a variable called params. A convenient way to replace items in an array is the splice() method, which accepts two or more arguments. The first argument is the index of the first value to replace and the second argument is the number of values to replace. Each subsequent argument is a value to be inserted in that position. Since splice() expects the new values as separate arguments rather than as a single array, you need to use apply() and pass in the first two arguments combined with the sorted array. So, 0 and items.length are added to the front of the array using unshift() so that apply() can be used with splice(). Then, the original array is returned.
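One caveat with splice.apply(): JavaScript engines cap how many arguments a single call can receive, so with very large arrays apply() may throw a RangeError. A sketch of an alternative, assuming the non-in-place mergeSort() from earlier (repeated here so the block runs on its own): sort first, then write the results back into the original array with a plain loop. The name mergeSortInPlace() is hypothetical.

```javascript
function merge(left, right) {
    var result = [],
        il = 0,
        ir = 0;

    while (il < left.length && ir < right.length) {
        if (left[il] <= right[ir]) {
            result.push(left[il++]);
        } else {
            result.push(right[ir++]);
        }
    }
    return result.concat(left.slice(il)).concat(right.slice(ir));
}

// The non-in-place version: returns a new sorted array for inputs of 2+ items.
function mergeSort(items) {
    if (items.length < 2) {
        return items;
    }
    var middle = Math.floor(items.length / 2);
    return merge(mergeSort(items.slice(0, middle)), mergeSort(items.slice(middle)));
}

// Hypothetical helper: copies the sorted values back one index at a time,
// so no single call ever receives the whole array as arguments.
function mergeSortInPlace(items) {
    var sorted = mergeSort(items);

    for (var i = 0; i < sorted.length; i++) {
        items[i] = sorted[i];
    }
    return items;
}

var numbers = [3, 1, 2];
var returned = mergeSortInPlace(numbers);
// numbers is now [1, 2, 3], and returned is the same array object
```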

Merge sort may be the most useful sorting algorithm you will learn because of its good performance and easy implementation. As with the other sorting algorithms I’ve covered, it’s still best to start with the native Array.prototype.sort() before attempting to implement an additional algorithm yourself. In most cases, the native method will do the right thing and provide the fastest possible implementation. Note, however, that not all implementations use a stable sorting algorithm. If using a stable sorting algorithm is important to you then you will need to implement one yourself.
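If stability matters, the comparison in the merge step is where it is won or lost: on ties, the item from the left half must be taken first. A minimal sketch of a comparator-based variant (mergeBy() and mergeSortBy() are hypothetical names, not part of the original project) that sorts records by age while keeping records of equal age in their original order:

```javascript
// compare(a, b) returns a negative number, zero, or a positive number,
// like the function passed to Array.prototype.sort().
function mergeBy(left, right, compare) {
    var result = [],
        il = 0,
        ir = 0;

    while (il < left.length && ir < right.length) {
        // "<= 0" takes the left item on ties, which is what keeps the sort stable.
        if (compare(left[il], right[ir]) <= 0) {
            result.push(left[il++]);
        } else {
            result.push(right[ir++]);
        }
    }
    return result.concat(left.slice(il)).concat(right.slice(ir));
}

function mergeSortBy(items, compare) {
    if (items.length < 2) {
        return items;
    }
    var middle = Math.floor(items.length / 2);
    return mergeBy(mergeSortBy(items.slice(0, middle), compare),
                   mergeSortBy(items.slice(middle), compare), compare);
}

var people = [
    { name: "Ann", age: 30 },
    { name: "Bob", age: 25 },
    { name: "Cal", age: 30 },
    { name: "Dee", age: 25 }
];

var byAge = mergeSortBy(people, function(a, b) { return a.age - b.age; });
// Names in order: Bob, Dee, Ann, Cal (equal ages keep their original relative order)
```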

You can get both versions of mergeSort() from my GitHub project, Computer Science in JavaScript.

Computer science and JavaScript: Merge sort

Merge sort is arguably the first useful sorting algorithm you learn in computer science. Merge sort has a complexity of O(n log n), making it one of the more efficient sorting algorithms available. Additionally, merge sort is a stable sort (just like insertion sort) so that the relative order of equivalent items remains the same before and after the sort. These advantages are why Firefox and Safari use merge sort for their implementation of Array.prototype.sort().

The algorithm for merge sort is based on the idea that it’s easier to merge two already sorted lists than it is to deal with a single unsorted list. To that end, merge sort starts by creating n number of one item lists where n is the total number of items in the original list to sort. Then, the algorithm proceeds to combine these one item lists back into a single sorted list.

The merging of two lists that are already sorted is a pretty straightforward algorithm. Assume you have two lists, list A and list B. You start from the front of each list and compare the two values. Whichever value is smaller is inserted into the results array. So suppose the smaller value is from list A; that value is placed into the results array. Next, the second value from list A is compared to the first value in list B. Once again, the smaller of the two values is placed into the results list. So if the smaller value is now from list B, then the next step is to compare the second item from list A to the second item in list B. The code for this is:

function merge(left, right){

var result = [],

il = 0,

ir = 0;

while (il < left.length && ir < right.length){

if (left[il] < right[ir]){

result.push(left[il++]);

} else {

result.push(right[ir++]);

}

}

return result.concat(left.slice(il)).concat(right.slice(ir));

}

This function merges two arrays, left and right. The il variable keeps track of the index to compare for left while ir does the same for right. Each time a value from one array is added, its corresponding index variable is incremented. As soon as one of the arrays has been exhausted, then the remaining values are added to the end of the result array using concat().

The merge() function is pretty simple but now you need two sorted lists to combine. As mentioned before, this is done by splitting an array into numerous one-item lists and then combining those lists systematically. This is easily done using a recursive algorithm such as this:

function mergeSort(items){

// Terminal case: 0 or 1 item arrays don't need sorting

if (items.length < 2) {

return items;

}

var middle = Math.floor(items.length / 2),

left = items.slice(0, middle),

right = items.slice(middle);

return merge(mergeSort(left), mergeSort(right));

}

The first thing to note is the terminal case of an array that contains zero or one items. These arrays don’t need to be sorted and can be returned as is. For arrays with two or more values, the array is first split in half creating left and right arrays. Each of these arrays is then passed back into mergeSort() with the results passed into merge(). So the algorithm is first sorting the left half of the array, then sorting the right half of the array, then merging the results. Through this recursion, eventually you’ll get to a point where two single-value arrays are merged.

This implementation of merge sort returns a different array than the one that was passed in (this is not an “in-place” sort). If you would like to create an in-place sort, then you can always empty the original array and refill it with the sorted items:

function mergeSort(items){

if (items.length < 2) {

return items;

}

var middle = Math.floor(items.length / 2),

left = items.slice(0, middle),

right = items.slice(middle),

params = merge(mergeSort(left), mergeSort(right));

// Add the arguments to replace everything between 0 and last item in the array

params.unshift(0, items.length);

items.splice.apply(items, params);

return items;

}

This version of the mergeSort() function stores the results of the sort in a variable called params. The best way to replace items in an array is using the splice() method, which accepts two or more arguments. The first argument is the index of the first value to replace and the second argument is the number of values to replace. Each subsequent argument is the value to be inserted in that position. Since there is no way to pass an array of values into splice(), you need to use apply() and pass in the first two arguments combined with the sorted array. So, 0 and items.length are added to the front of the array using unshift() so that apply() can be used with splice(). Then, the original array is returned.
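On engines that support ES2015 spread syntax, the unshift() and apply() combination is no longer needed, because spread expands the sorted array into individual arguments to splice(). The sketch below is a modern variation, not the version from the GitHub project; mergeSortInPlace() is a hypothetical name, and the block includes its own merge() (using <= so that equal values are taken from the left array first) so it runs on its own.

```javascript
// Merge two sorted arrays into a new sorted array.
// Using <= takes equal values from the left array first, which keeps the sort stable.
function merge(left, right) {
  const result = [];
  let il = 0;
  let ir = 0;
  while (il < left.length && ir < right.length) {
    if (left[il] <= right[ir]) {
      result.push(left[il++]);
    } else {
      result.push(right[ir++]);
    }
  }
  // At most one of these slices is non-empty.
  return result.concat(left.slice(il), right.slice(ir));
}

// Hypothetical name: sorts `items` and writes the result back into the same array.
function mergeSortInPlace(items) {
  if (items.length < 2) {
    return items;
  }
  const middle = Math.floor(items.length / 2);
  const sorted = merge(
    mergeSortInPlace(items.slice(0, middle)),
    mergeSortInPlace(items.slice(middle))
  );
  // Spread replaces the unshift() + apply() combination.
  items.splice(0, items.length, ...sorted);
  return items;
}

const data = [5, 2, 6, 1, 3, 9];
const returned = mergeSortInPlace(data);
console.log(data.join(", ")); // 1, 2, 3, 5, 6, 9
console.log(returned === data); // true
```

One caveat is shared with apply(): both pass every element as a separate argument, so very large arrays can run into an engine’s limit on argument count.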

Merge sort may be the most useful sorting algorithm you will learn because of its good performance and easy implementation. As with the other sorting algorithms I’ve covered, it’s still best to start with the native Array.prototype.sort() before attempting to implement an additional algorithm yourself. In most cases, the native method will do the right thing and provide the fastest possible implementation. Note, however, that not all implementations use a stable sorting algorithm. If using a stable sorting algorithm is important to you then you will need to implement one yourself.
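Starting with ECMAScript 2019, the specification requires Array.prototype.sort() to be stable, so on current engines the native method preserves the original order of equivalent items. The sketch below uses hypothetical person objects to show what that means: people with the same age keep their original relative order. On older engines the result may differ.

```javascript
// Hypothetical sample data: Ann and Cal share an age, as do Bob and Dee.
const people = [
  { name: "Ann", age: 30 },
  { name: "Bob", age: 25 },
  { name: "Cal", age: 30 },
  { name: "Dee", age: 25 }
];

// Sort a copy by age only; a stable sort keeps equal-age people in input order.
const byAge = people.slice().sort((a, b) => a.age - b.age);

console.log(byAge.map(p => p.name).join(", ")); // Bob, Dee, Ann, Cal
```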

You can get both versions of mergeSort() from my GitHub project, Computer Science in JavaScript.

September 25, 2012

ECMAScript 6 collections, Part 1: Sets

For most of JavaScript’s history, there has been only one type of collection, represented by the Array type. Arrays are used in JavaScript just like arrays in other languages but pull double and triple duty mimicking queues and stacks as well. Since arrays only use numeric indices, developers had to use objects whenever a non-numeric index was necessary. ECMAScript 6 introduces several new types of collections to allow better and more efficient storing of ordered data.

Sets

Sets are nothing new if you come from languages such as Java, Ruby, or Python, but they have been missing from JavaScript. A set is an ordered list of values that cannot contain duplicates. You typically don’t access items in a set like you would items in an array; instead, it’s much more common to check the set to see if a value is present.

ECMAScript 6 introduces the Set type[1] as a set implementation for JavaScript. You can add values to a set by using the add() method and see how many items are in the set using size():

var items = new Set();

items.add(5);

items.add("5");

console.log(items.size()); // 2

ECMAScript 6 sets do not coerce values in determining whether or not two values are the same. So, a set can contain both the number 5 and the string "5" (internally, the comparison is done using ===). If the add() method is called more than once with the same value, all calls after the first one are effectively ignored:

var items = new Set();

items.add(5);

items.add("5");

items.add(5); // oops, duplicate - this is ignored

console.log(items.size()); // 2
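The examples in this post follow the 2012 draft. In the ECMAScript 2015 standard as finalized, size is a getter property rather than a method (so it’s items.size, not items.size()), and duplicates are detected with the SameValueZero comparison, which behaves like === except that NaN is considered equal to itself. A quick check of the same behavior on a current engine:

```javascript
const items = new Set();

items.add(5);
items.add("5"); // no coercion: the string "5" is a different value from the number 5
items.add(5);   // duplicate, ignored

// In the finalized standard, size is a property, not a method.
console.log(items.size); // 2

// SameValueZero treats NaN as equal to itself, unlike ===.
items.add(NaN);
items.add(NaN);
console.log(items.size);     // 3
console.log(NaN === NaN);    // false
console.log(items.has(NaN)); // true
```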

You can initialize the set using an array, and the Set constructor will ensure that only unique values are used:

var items = new Set([1, 2, 3, 4, 5, 5, 5, 5]);

console.log(items.size()); // 5

In this example, an array of eight items is used to initialize the set. The number 5 only appears once in the set even though it appears four times in the array. This functionality makes it easy to convert existing code or JSON structures to use sets.
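Going the other way, from a set back to an array, is a common way to strip duplicates from an array. This sketch assumes an engine with ES2015 spread syntax and Array.from(); both produce a new array with each value in the order it was first seen.

```javascript
const withDuplicates = [1, 2, 3, 4, 5, 5, 5, 5];

// The Set constructor keeps only the first occurrence of each value.
const unique = [...new Set(withDuplicates)];
const alsoUnique = Array.from(new Set(withDuplicates));

console.log(unique.length);        // 5
console.log(unique.join(","));     // 1,2,3,4,5
console.log(alsoUnique.join(",")); // 1,2,3,4,5
```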

You can test to see which items are in the set using the has() method:

var items = new Set();

items.add(5);

items.add("5");

console.log(items.has(5)); // true

console.log(items.has(6)); // false

Last, you can remove an item from the set by using the delete() method:

var items = new Set();

items.add(5);

items.add("5");

console.log(items.has(5)); // true

items.delete(5);

console.log(items.has(5)); // false

All of this amounts to a very easy mechanism for tracking unique values.

Iteration

Even though there is no random access to items in a set, it’s still possible to iterate over all of the set’s values by using the new ECMAScript 6 for-of statement[2]. The for-of statement is a loop that iterates over values of a collection, including arrays and array-like structures. You can output values in a set like this:

var items = new Set([1, 2, 3, 4, 5]);

for (let num of items) {

console.log(num);

}

This code outputs each item in the set to the console in the order in which they were added to the set.
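Adding a value that is already present doesn’t change its position, while deleting a value and adding it back moves it to the end. The sketch below (assuming an engine with for-of and let, which the 2012 browsers lacked) collects the values into arrays to make the order visible; forEach() and spread syntax walk the set in the same order.

```javascript
const items = new Set(["b", "a", "c"]);
items.add("a"); // already present, so it keeps its original position

// for-of visits values in insertion order.
const seen = [];
for (let value of items) {
  seen.push(value);
}
console.log(seen.join(" ")); // b a c

// forEach() and spread syntax use the same order.
const viaForEach = [];
items.forEach(value => viaForEach.push(value));
console.log(viaForEach.join(" ")); // b a c
console.log([...items].join(" ")); // b a c

// Deleting a value and adding it back moves it to the end.
items.delete("b");
items.add("b");
console.log([...items].join(" ")); // a c b
```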

Example

Currently, if you want to keep track of unique values, the most common approach is to use an object and assign the unique values as properties with some truthy value. For example, there is a CSS Lint[3] rule that looks for duplicate properties. Right now, an object is used to keep track of CSS properties such as this:

var properties = {

"width": 1,

"height": 1

};

if (properties[someName]) {

// do something

}

Using an object for this purpose means always assigning a truthy value to a property so that the if statement works correctly (the other option is to use the in operator, but developers rarely do). This whole process can be made easier by using a set:

var properties = new Set();

properties.add("width");

properties.add("height");

if (properties.has(someName)) {

// do something

}

Since it only matters if the property was used before and not how many times it was used (there is no extra metadata associated), it actually makes more sense to use a set.
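To make the CSS Lint scenario concrete, here’s a sketch of a duplicate-property check built on sets. The function name findDuplicateProperties() and its input format (a plain array of property names from one rule) are hypothetical; this is not CSS Lint’s actual rule code.

```javascript
// Hypothetical helper: returns each property name that appears more than once.
function findDuplicateProperties(propertyNames) {
  const seen = new Set();
  const duplicates = new Set();

  for (const name of propertyNames) {
    if (seen.has(name)) {
      duplicates.add(name); // a set here avoids reporting the same name twice
    } else {
      seen.add(name);
    }
  }

  return [...duplicates];
}

const found = findDuplicateProperties(["width", "height", "width", "color", "width"]);
console.log(found.join(", ")); // width
```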

Another downside of using object properties for this type of operation is that property names are always converted to strings. So you can’t have an object with the number 5 as a property name; you can only have one with the string "5". That also means you can’t easily keep track of objects in the same manner because the objects get converted to strings when assigned as a property name. Sets, on the other hand, can contain any type of data without fear of conversion into another type.
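The coercion problem is easy to demonstrate. In this sketch, the two plain objects stand in for any non-string values (such as DOM elements): as property names they collapse into the single key "[object Object]", while a set keeps them apart and also keeps 5 and "5" distinct.

```javascript
// Property names are always strings, so the number 5 becomes the key "5".
const lookup = {};
lookup[5] = true;
console.log(typeof Object.keys(lookup)[0]); // string
console.log(lookup["5"]);                   // true

// Two different objects both convert to "[object Object]", so the second overwrites the first.
const first = { id: 1 };
const second = { id: 2 };
lookup[first] = "first";
lookup[second] = "second";
console.log(lookup["[object Object]"]); // second

// A set stores values as-is: 5 and "5" are distinct, and so are the two objects.
const tracked = new Set([5, "5", first, second]);
console.log(tracked.size);           // 4
console.log(tracked.has(first));     // true
console.log(tracked.has({ id: 1 })); // false: same shape, different object
```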

Browser Support

Both Firefox and Chrome have implemented Set; however, in Chrome you need to manually enable ECMAScript 6 features: go to chrome://flags and enable “Experimental JavaScript Features”. Both implementations are incomplete. Neither browser implements set iteration using for-of, and Chrome’s implementation is missing the size() method.

Summary

ECMAScript 6 sets are a welcome addition to the language. They allow you to easily create a collection of unique values without worrying about type coercion. You can add and remove items very easily from a set even though there is no direct access to items in the set. It’s still possible, if necessary, to iterate over items in the set by using the ECMAScript 6 for-of statement.

Since ECMAScript 6 is not yet complete, it’s also possible that the implementation and specification might change before other browsers start to include Set. At this point in time, it is still considered an experimental API and shouldn’t be used in production code. This post, and other posts about ECMAScript 6, are only intended to be a preview of functionality that is to come.

References

Simple Maps and Sets (ES6 Wiki)

for…of (MDN)

CSS Lint

Set (MDN)

September 17, 2012

Computer science in JavaScript: Insertion sort

Insertion sort is typically the third sorting algorithm taught in computer science programs, after bubble sort[1] and selection sort[2]. Insertion sort has a best-case complexity of O(n), which is less complex than bubble and selection sort at O(n²). Insertion sort is also a stable sort algorithm.

Stable sort algorithms are sorts that don’t change the order of equivalent items in the list. In selection sort, it’s possible that equivalent items may end up in a different order than they are in the original list. You may be wondering why this matters if the items are equivalent. When sorting simple values, like numbers or strings, it is of no consequence. If you are sorting objects by a particular property, for example sorting person objects on an age property, there may be other associated data that should be in a particular order.

Sorting algorithms that swap items across long distances, such as selection sort, are typically unstable. Items jump past one another, so you can’t guarantee any previous ordering will be maintained. Insertion sort doesn’t perform swaps. Instead, it picks out individual items and inserts them into the correct spot in an array.

An insertion sort works by separating an array into two sections, a sorted section and an unsorted section. Initially, of course, the entire array is unsorted, and the sorted section is considered to be empty. The first step is to add a value to the sorted section, so the first item in the array is used (a list of one item is always sorted). Then, for each item in the unsorted section:

If the item value goes after the last item in the sorted section, then do nothing.

If the item value goes before the last item in the sorted section, remove the item value from the array and shift the last sorted item into the now-vacant spot.

Compare the item value to the previous value (second to last) in the sorted section.

If the item value goes after the previous value and before the last value, then place the item into the open spot between them; otherwise, shift the previous value into the open spot as well and continue this process until the start of the array is reached.

Insertion sort is a little bit difficult to explain in words. It’s a bit easier to explain using an example. Suppose you have the following array:

var items = [5, 2, 6, 1, 3, 9];

To start, the 5 is placed into the sorted section. The 2 then becomes the value to place. Since 5 is greater than 2, the 5 shifts over to the right one spot, overwriting the 2. This frees up a new spot at the beginning of the sorted section into which the 2 can be placed. See the figure below for a visualization of this process (boxes in yellow are part of the sorted section, boxes in white are unsorted).

The process then continues with 6. Each subsequent value in the unsorted section goes through the same process until the entire array is in the correct order. This process can be represented fairly succinctly in JavaScript as follows:

function insertionSort(items) {

var len = items.length, // number of items in the array

value, // the value currently being compared

i, // index into unsorted section

j; // index into sorted section

for (i=0; i < len; i++) {

// store the current value because it may shift later

value = items[i];

/*

* Whenever the value in the sorted section is greater than the value

* in the unsorted section, shift all items in the sorted section over

* by one. This creates space in which to insert the value.

*/

for (j=i-1; j > -1 && items[j] > value; j--) {

items[j+1] = items[j];

}

items[j+1] = value;

}

return items;

}

The outer for loop moves from the front of the array towards the back while the inner loop moves from the back of the sorted section towards the front. The inner loop is also responsible for shifting items as comparisons happen. You can download the source code from my GitHub project, Computer Science in JavaScript.
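To follow the worked example from earlier step by step, here’s a variant that records a snapshot of the array after each pass of the outer loop. The traceInsertionSort() name is hypothetical; the sorting logic is the same as insertionSort() above, except that the outer loop starts at 1 because a single item is already sorted.

```javascript
// Hypothetical helper: same algorithm as insertionSort(), plus a snapshot per pass.
function traceInsertionSort(items) {
  const snapshots = [items.slice()];

  for (let i = 1; i < items.length; i++) {
    const value = items[i];
    let j;

    // Shift larger sorted values one spot to the right.
    for (j = i - 1; j > -1 && items[j] > value; j--) {
      items[j + 1] = items[j];
    }
    items[j + 1] = value;

    snapshots.push(items.slice());
  }

  return snapshots;
}

traceInsertionSort([5, 2, 6, 1, 3, 9]).forEach(step => console.log(step.join(" ")));
// 5 2 6 1 3 9
// 2 5 6 1 3 9
// 2 5 6 1 3 9
// 1 2 5 6 3 9
// 1 2 3 5 6 9
// 1 2 3 5 6 9
```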

Insertion sort isn’t terribly efficient, with an average complexity of O(n²). That puts it on par with selection sort and bubble sort in terms of performance. These three sorting algorithms typically begin a discussion about sorting algorithms even though you would never use them in real life. If you need to sort items in JavaScript, you are best off starting with the built-in Array.prototype.sort() method before trying other algorithms. V8, the JavaScript engine in Chrome, actually uses insertion sort for sorting arrays with 10 or fewer items using Array.prototype.sort().
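Back to stability: because the inner loop only shifts values that must come after the current one, an equal value never moves past another equal value. The sketch below generalizes insertionSort() with a comparator (the compare parameter and the insertionSortBy() name are additions, modeled on the comparator that Array.prototype.sort() accepts) and sorts hypothetical person objects by age.

```javascript
// Insertion sort with a comparator: compare(a, b) > 0 means a belongs after b.
function insertionSortBy(items, compare) {
  for (let i = 1; i < items.length; i++) {
    const value = items[i];
    let j;

    // Shift only items that must come after `value`; ties stay put, keeping the sort stable.
    for (j = i - 1; j > -1 && compare(items[j], value) > 0; j--) {
      items[j + 1] = items[j];
    }
    items[j + 1] = value;
  }
  return items;
}

// Hypothetical data: Ann and Cal are both 30, Bob and Dee are both 25.
const people = [
  { name: "Ann", age: 30 },
  { name: "Bob", age: 25 },
  { name: "Cal", age: 30 },
  { name: "Dee", age: 25 }
];

insertionSortBy(people, (a, b) => a.age - b.age);
console.log(people.map(p => p.name).join(", ")); // Bob, Dee, Ann, Cal
```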

References

Computer science in JavaScript: Bubble sort

Computer science in JavaScript: Selection sort

September 14, 2012

Replacing Apache with nginx on Elastic Beanstalk

WellFurnished has been using Amazon’s Elastic Beanstalk[1] service for some time now with one of the default configurations. For those who are unaware, Elastic Beanstalk is Amazon’s answer to services like Heroku and Google App Engine. You set up an application and one or more environments made up of a load balancer and any number of EC2 instances. There are several default instance types you can select from such as Apache with Tomcat 6 or 7, Apache with PHP, and Apache with Python (all are available in either 32-bit or 64-bit configurations).

Once you have created an application, you can update any environment using Git. We’ve been using this for our deploys since the start. WellFurnished is written in Java using the Play framework[2], so we have been using the 32-bit configuration with Apache and Tomcat 7. Play can export an exploded WAR file of the application, which we then check into the Elastic Beanstalk Git repository for the application. Then, we sit back and watch as the update rolls out to the environments we specified.

It’s a really nice service that costs nothing over and above the AWS resources that you’re using. That being said, the number of configuration options is limited. Amazon says that you are always free to make modifications but there are no good tutorials or examples of how to do that. Over the past couple of days, I’ve gone through the process of replacing Apache with nginx in our Elastic Beanstalk instances. I did so by piecing together information I found spread across the Internet. This is my attempt to explain how I did it in the hopes that this will help others with the process.

Understanding how it works

Before modifying a configuration, it helps to understand exactly how Elastic Beanstalk works. Each of the default AMIs for Elastic Beanstalk comes configured with a Ruby on Rails web application called hostmanager. This application is responsible for interacting with Elastic Beanstalk including health checks and deployment of changes, among other things. As long as hostmanager is functioning properly, an EC2 instance will work properly within an Elastic Beanstalk application. It sounds simple, but in reality, this is usually where the problem is. Ensuring that hostmanager continues to work in the way that it used to is the key to creating custom EC2 configurations that still work with Elastic Beanstalk.

hostmanager runs at http://localhost:8999 on the machine and is publicly accessible at /_hostmanager. No matter what you do, you must ensure that /_hostmanager continues to work properly. The Rails app for hostmanager starts automatically, so all you need to do is make sure that it’s publicly accessible.

One of Apache’s jobs on an Elastic Beanstalk instance is to make sure that hostmanager is accessible. It’s set up as a reverse proxy where /_hostmanager points to http://localhost:8999. Since my goal was to replace Apache with nginx, I had to make sure that this setting was preserved.

Modifying an AMI

To get started, it’s easiest to have an Elastic Beanstalk environment already running. As mentioned before, we were using the 32-bit Apache/Tomcat 7 configuration on a small instance. This configuration is stored in an Amazon-produced AMI. In order to create a custom AMI based on that configuration, you need to create a standalone EC2 instance using that same AMI.

With Elastic Beanstalk already running, locate the EC2 instance in the EC2 section of the AWS console. Even though you’re using Elastic Beanstalk, it still just creates regular EC2 instances. If you’re unsure which EC2 instances are being used, then take a look at the elastic load balancer associated with that particular environment. That will give you the EC2 instance ID. In the EC2 section of the AWS console, right-click on the instance and select “Launch More Like This”. You’ll be taken through the EC2 instance creation process, and in about a minute you’ll have an instance based off of the Elastic Beanstalk AMI.

Then you can SSH into the new instance and configure it however you want. By default, neither Apache nor Tomcat is running. You will need to manually start them in order to have a working environment:

sudo service httpd start

sudo service tomcat7 start

Now everything is working just as it is in an instance used by Elastic Beanstalk. You’ll have to manually add in your application code if you want to test that, but otherwise the instance is completely functional.

Installing and configuring nginx

Swapping nginx for Apache is a simple procedure in theory. Since both are being used as reverse proxies, it’s a matter of ensuring that the nginx configuration is equivalent to the Apache configuration. Start by installing nginx:

sudo yum -y install nginx

The next step is to modify the number of worker processes that nginx will use. By default it’s set to 1 and it’s a good idea to change it to 4. So find this line in /etc/nginx/nginx.conf:

worker_processes 1;

And change it to this:

worker_processes 4;

Next, set up the proxy configuration for nginx (exact configuration taken from Hacking Elastic Beanstalk (O’Reilly)[3]). Create a file called /etc/nginx/conf.d/proxy.conf and place this inside:

proxy_redirect off;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

client_max_body_size 10m;

client_body_buffer_size 128k;

client_header_buffer_size 64k;

proxy_connect_timeout 90;

proxy_send_timeout 90;

proxy_read_timeout 90;

proxy_buffer_size 16k;

proxy_buffers 32 16k;

proxy_busy_buffers_size 64k;

Note that you need to manually set some headers using nginx whereas Apache sets those for you when using the ProxyPassReverse directive.

There’s a default server set up with nginx when it’s installed, so remove that:

sudo rm /etc/nginx/conf.d/default.conf

Create a file called /etc/nginx/conf.d/beanstalk.conf and fill it with this (also from Hacking Elastic Beanstalk[3]):

server {

listen 80;

server_name _;

access_log /var/log/httpd/elasticbeanstalk-access_log;

error_log /var/log/httpd/elasticbeanstalk-error_log;

#set the default location

location / {

proxy_pass http://127.0.0.1:8080/;

}

# make sure the hostmanager works

location /_hostmanager/ {

proxy_pass http://127.0.0.1:8999/;

}

}

These settings mimic the settings from Apache, right down to the location of the logs. This ensures that nginx is working almost exactly like Apache (for our purposes on WellFurnished, we put in a few more modifications, but this is what you need to get started).

Now that nginx is set up, you can safely remove Apache and start nginx:

sudo yum remove httpd

sudo service nginx start

As one final measure, make sure that nginx will start automatically when the server starts:

sudo /sbin/chkconfig nginx on

With that, nginx is ready to go as an Apache replacement.

Modifying hostmanager

The tricky part of the configuration is that hostmanager actually interacts with Apache. Since you just removed Apache, that’s going to make hostmanager quite confused and angry. Thankfully, Hacking Elastic Beanstalk[3] has a bash script that modifies hostmanager to deal with nginx instead of Apache (this must be run as sudo):

cd /opt/elasticbeanstalk/srv/hostmanager/lib/elasticbeanstalk/hostmanager

cp utils/apacheutil.rb utils/nginxutil.rb

sed -i 's/Apache/Nginx/g' utils/nginxutil.rb

sed -i 's/apache/nginx/g' utils/nginxutil.rb

sed -i 's/httpd/nginx/g' utils/nginxutil.rb

cp init-tomcat.rb init-tomcat.rb.orig

sed -i 's/Apache/Nginx/g' init-tomcat.rb

sed -i 's/apache/nginx/g' init-tomcat.rb

The script creates a utility to deal with nginx and then modifies init-tomcat.rb, the code that starts up Tomcat, so that it also keeps track of nginx.

Creating a custom AMI

Now that the instance is set up with the appropriate modifications, it’s time to create a custom AMI. Go back to the EC2 instances section of the AWS console and find your modified instance. Right-click on it and select “Create Image (EBS AMI)”. It will ask you for an image name and an optional description; just make sure you enter something that makes sense to you. You can make any other modifications that you want (or just use the defaults) and then click “Yes, Create”. When your AMI is ready, it will be listed in the AMIs list.

Important: You might be wondering why you can’t just go into an instance that Elastic Beanstalk started, modify that, and create a custom AMI directly from there. That would seem to be the logical thing to do because it requires fewer steps. However, Elastic Beanstalk writes some configuration information to the instance when it starts up. If you create an AMI from that instance, then it will always have that configuration, which actually prevents hostmanager from starting properly (trust me, I tried).

Using the custom AMI

Go to the AMI list in the EC2 section of the AWS console and find your new AMI. Copy the AMI ID (not the name). In your Elastic Beanstalk environment, click on the Actions menu and select “Edit/Load Configuration”. Paste your custom AMI ID into the “Custom AMI” field and click “Apply Changes”. Elastic Beanstalk will then start deploying instances using the new AMI. After a couple of minutes, you should have a fully functioning environment with your new AMI.

Conclusion

Creating a custom AMI for Elastic Beanstalk is a little bit tricky and takes a lot of patience. I hope that someday Amazon will add nginx default configurations so that custom AMIs will no longer be needed. One thing to be aware of when using a custom AMI is that the instances won’t receive automatic updates, so you’ll need to keep on top of security fixes and other critical updates. Other than that, as long as hostmanager is running and accessible, you should be able to make any changes that you want.

I created a bash script with all of the steps mentioned in this post as a gist that you can copy from. Note that the script must be run as sudo.

References

Elastic Beanstalk (Amazon)

Play Framework (Play)

Hacking Elastic Beanstalk (Safari Online)

September 12, 2012

CSS Lint v0.9.9 now available

CSS Lint v0.9.9 is now available both on the command line and at the web site. This release is mostly a maintenance release with a few small features added in. This lays the groundwork for an eventual 1.0.0 release, but that doesn’t preclude the possibility of a 0.9.10 release before then. There’s still a lot of work to do on the parser to make it fully CSS3 compliant, and if you’re interested in helping out, please take a look at the separate GitHub repository.

The biggest changes to CSS Lint in this release are for the command line. The first change is the ability to specify some rules to ignore. This was requested by Zach Leatherman via Twitter, and fits in nicely with the options to set rules as warnings or errors. The intent of --ignore is to let you use all of the default settings except for a few rules you want to omit. The syntax is as follows:

csslint --ignore=important,ids file.css

The --ignore option follows the same format as --warnings and --errors, using a comma-delimited list of rules to ignore. Read more in the documentation.

The second change to the CLI is the ability to specify a configuration file with default options for CSS Lint. CSS Lint will look for the file named .csslintrc in the current working directory and use those options. The file is in the same format as the command line arguments so that you can do something like this:

--errors=important,ids

--format=checkstyle-xml

As long as this is in the current working directory when CSS Lint is run, those options will be picked up and used by default. Any options that are passed in on the command line will override those in the file.
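The precedence rule amounts to a merge in which command-line values win. The sketch below illustrates that rule only; parseOptions() and mergeOptions() are hypothetical helpers, not CSS Lint’s actual implementation, and the sketch assumes every option uses the --name=value form shown above.

```javascript
// Hypothetical: turn entries like "--errors=important,ids" into { errors: "important,ids" }.
function parseOptions(args) {
  const options = {};
  for (const arg of args) {
    const match = /^--([^=]+)=(.*)$/.exec(arg.trim());
    if (match) {
      options[match[1]] = match[2];
    }
  }
  return options;
}

// Options from the .csslintrc text act as defaults; command-line options override them.
function mergeOptions(rcText, cliArgs) {
  const fromFile = parseOptions(rcText.split(/\r?\n/));
  const fromCli = parseOptions(cliArgs);
  return Object.assign({}, fromFile, fromCli);
}

const rc = "--errors=important,ids\n--format=checkstyle-xml";
const options = mergeOptions(rc, ["--format=text"]);
console.log(options.errors); // important,ids
console.log(options.format); // text
```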

For more information about the CLI, see the documentation.

In addition to that, we had several other small changes:

Jos Hirth fixed several bugs, including some bugs in tests.

Jonathan Barnett contributed a JUnit XML output format to allow easier integration with CI environments.

Zach Leatherman removed references to Microsoft-specific vendor prefixes that will not be supported in Internet Explorer 10.

The last piece of news for this release is that CSS Lint is now on Travis CI, so you can always keep up to date as to the latest status of the build. Of course, we welcome all contributions from the community on GitHub, which is why we have an extensive Developer Guide to help you get set up and ready to submit your changes. Enjoy!

August 22, 2012

The innovations of Internet Explorer

Long before Internet Explorer became the browser everyone loves to hate, it was the driving force of innovation on the Internet. Sometimes it’s hard to remember all of the good that Internet Explorer did before Internet Explorer 6 became the scourge of web developers everywhere. Believe it or not, Internet Explorer versions 4 through 6 are heavily responsible for web development as we know it today. A number of proprietary features became de facto standards and then official standards, with some ending up in the HTML5 specification. It may be hard to believe that Internet Explorer is actually to thank for a lot of the features that we take for granted today, but a quick walk through history shows that it’s true.

DOM

If Internet Explorer is a browser that everyone loves to hate, the Document Object Model (DOM) is the API that everyone loves to hate. You can call the DOM overly verbose, ill-suited for JavaScript, and somewhat nonsensical, and you would be correct on all counts. However, the DOM gives developers access to every part of a webpage through JavaScript. There was a time when you could only access certain elements on the page through JavaScript. Internet Explorer 3 and Netscape 3 only allowed programmatic access to form elements, images, and links. Netscape 4 improved the situation by expanding programmatic access to the proprietary <layer> element via document.layers. Internet Explorer 4 improved the situation even further by allowing programmatic access to every element on the page via document.all.

In many regards, document.all was the very first version of document.getElementById(). You still used an element’s ID to access it through document.all, such as document.all.myDiv or document.all["myDiv"]. The primary difference was that Internet Explorer used a collection instead of a function, which matched all other access methods at the time, such as document.images and document.forms.

Internet Explorer 4 was also the first browser to introduce the ability to get a list of elements by tag name via document.all.tags(). For all intents and purposes, this was the first version of document.getElementsByTagName() and worked the exact same way. If you wanted to get all div elements, you would use document.all.tags("div"). Even in Internet Explorer 9, this method still exists and is just an alias for document.getElementsByTagName().

Internet Explorer 4 also introduced us to perhaps the most popular proprietary DOM extension of all time: innerHTML. It seems that the folks at Microsoft realized what a pain it would be to build up a DOM programmatically and afforded us this shortcut, along with outerHTML. Both proved so useful that they were standardized in HTML5[1]. The companion APIs dealing with plain text, innerText and outerText, also proved influential enough that DOM Level 3 introduced textContent[2], which acts in a similar manner to innerText.

Along the same lines, Internet Explorer 4 introduced insertAdjacentHTML(), yet another way of inserting HTML text into a document. This one took a little longer, but it was also codified in HTML5[3] and is now widely supported by browsers.

Events

In the beginning, there was no event system for JavaScript. Both Netscape and Microsoft took a stab at it and each came up with different models. Netscape brought us event capturing, the idea that an event is first delivered to the window, then the document, and so on until finally reaching the intended target. Netscape browsers prior to version 6 supported only event capturing.

Microsoft took the opposite approach and came up with event bubbling. They believed that the event should begin at the actual target and then fire on the parents and so on up to the document. Internet Explorer prior to version 9 only supported event bubbling. Although the official DOM events specification evolved to include both event capturing and event bubbling, most web developers use event bubbling exclusively, with event capturing saved for a few workarounds and tricks buried deep inside of JavaScript libraries.
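The standard event flow combines the two models: capturing from the top down to the target, then bubbling back up. The sketch below models that order with plain strings instead of real DOM nodes (propagationOrder() is a hypothetical helper, and the path is simplified), so it runs outside a browser.

```javascript
// Hypothetical model: `path` lists nodes from the top (window) down to the event target.
function propagationOrder(path) {
  const target = path[path.length - 1];
  const ancestors = path.slice(0, -1);

  return [
    // Netscape-style capturing: top down, stopping short of the target.
    ...ancestors.map(node => `${node} (capture)`),
    `${target} (target)`,
    // Microsoft-style bubbling: from the target's parent back up to the top.
    ...ancestors.slice().reverse().map(node => `${node} (bubble)`)
  ];
}

propagationOrder(["window", "document", "body", "div"]).forEach(step => console.log(step));
// window (capture)
// document (capture)
// body (capture)
// div (target)
// body (bubble)
// document (bubble)
// window (bubble)
```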

In addition to creating event bubbling, Microsoft also created a bunch of additional events that eventually became standardized:

contextmenu – fires when you use the secondary mouse button on an element. First appeared in Internet Explorer 5 and later codified as part of HTML5[4]. Now supported in all major desktop browsers.

beforeunload – fires before the unload event and allows you to block unloading of the page. Originally introduced in Internet Explorer 4 and now part of HTML5[4]. Also supported in all major desktop browsers.

mousewheel – fires when the mouse wheel (or similar device) is used. The first browser to support this event was Internet Explorer 6. Just like the others, it’s now part of HTML5[4]. The only major desktop browser to not support this event is Firefox (which does support an alternative DOMMouseScroll event).

mouseenter – a non-bubbling version of mouseover, introduced by Microsoft in Internet Explorer 5 to help combat the troubles with using mouseover. This event became formalized in DOM Level 3 Events[5]. Also supported in Firefox and Opera, but not in Safari or Chrome (yet?).

mouseleave – a non-bubbling version of mouseout to match mouseenter. Introduced in Internet Explorer 5 and also now standardized in DOM Level 3 Events[6]. Same support level as mouseenter.

focusin – a bubbling version of focus to help more easily manage focus on a page. Originally introduced in Internet Explorer 6 and now part of DOM Level 3 Events[7]. Not currently well supported, though Firefox has a bug opened for its implementation.

focusout – a bubbling version of blur to help more easily manage focus on a page. Originally introduced in Internet Explorer 6 and now part of DOM Level 3 Events[8]. As with focusin, not well supported yet but Firefox is close.

XML and Ajax

Although XML isn’t used nearly as much on the web today as many thought it would be, Internet Explorer also led the way with XML support. It was the first browser to support client-side XML parsing and XSLT transformation in JavaScript. Unfortunately, it did so through ActiveX objects representing XML documents and XSLT processors. The folks at Mozilla clearly thought there was something there because they invented similar functionality in the form of DOMParser, XMLSerializer, and XSLTProcessor. The first two are now part of HTML5[9]. Although the standards-based JavaScript XML handling is quite different than Internet Explorer’s version, it was undoubtedly influenced by IE.

The client-side XML handling was all part of Internet Explorer’s implementation of XMLHttpRequest, first introduced as an ActiveX object in Internet Explorer 5. The idea was to enable retrieval of XML documents from the server in a webpage and allow JavaScript to manipulate that XML as a DOM. Internet Explorer’s version required you to use new ActiveXObject("MSXML2.XMLHttp"), which also made it reliant upon version strings and made developers jump through hoops to test for and use the most recent version. Once again, Firefox came along and cleaned up the mess by creating a then-proprietary XMLHttpRequest object that duplicated the interface of Internet Explorer’s version exactly. Other browsers then copied Firefox’s implementation, ultimately leading to Internet Explorer 7 creating an ActiveX-free version as well. Of course, XMLHttpRequest was the driving force behind the Ajax revolution that got everybody excited about JavaScript.
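The hoops looked something like the following sketch of a common pattern from that era: prefer the native XMLHttpRequest when it exists, otherwise try a list of MSXML version strings until one works. It is written with modern syntax for brevity; the env parameter (standing in for window so the sketch can run outside a browser), the createXHR() name, and the exact list of version strings are assumptions for illustration.

```javascript
// Illustrative ProgIDs, tried newest first.
const MSXML_VERSIONS = ["MSXML2.XMLHttp.6.0", "MSXML2.XMLHttp.3.0", "MSXML2.XMLHttp"];

// `env` stands in for the browser's global object (window).
function createXHR(env) {
  if (env.XMLHttpRequest) {
    return new env.XMLHttpRequest(); // native object, when the browser provides one
  }
  if (env.ActiveXObject) {
    for (const version of MSXML_VERSIONS) {
      try {
        return new env.ActiveXObject(version);
      } catch (e) {
        // That version isn't installed; fall through to the next one.
      }
    }
  }
  throw new Error("No XHR object available.");
}

// Usage with a fake IE6-style environment where only the 3.0 ProgID is installed.
function FakeActiveXObject(progId) {
  if (progId !== "MSXML2.XMLHttp.3.0") {
    throw new Error("Not installed: " + progId);
  }
  this.progId = progId;
}

const xhr = createXHR({ ActiveXObject: FakeActiveXObject });
console.log(xhr.progId); // MSXML2.XMLHttp.3.0
```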

CSS

When you think of CSS, you probably don’t think much about Internet Explorer. After all, it’s the one that tends to lag behind in CSS support (at least up to Internet Explorer 10). However, Internet Explorer 3 was the first browser to implement CSS. At the time, Netscape was pursuing an alternate proposal, JavaScript Style Sheets (JSSS)[10]. As the name suggested, this proposal used JavaScript to define stylistic information about the page. Netscape 4 introduced JSSS and CSS, a full version behind Internet Explorer. The CSS implementation was less than stellar, often translating styles into JSSS in order to apply them properly[11]. That also meant that if JavaScript was disabled, CSS didn’t work in Netscape 4.

While Internet Explorer’s implementation of CSS was limited to font family, font size, colors, and backgrounds, the implementation was solid and usable. Meanwhile, Netscape 4’s implementation was buggy and hard to work with. Yes, in some small way, Internet Explorer led to the success of CSS.

Internet Explorer also brought us other CSS innovations that ended up being standardized:

text-overflow – used to show an ellipsis when text overflows its container. First appeared in Internet Explorer 6 and standardized in CSS3[12]. Now supported in all major browsers.

overflow-x and overflow-y – allow you to control overflow separately in the horizontal and vertical directions of a container. These properties first appeared in Internet Explorer 5 and were later formalized in CSS3[13]. Now supported in all major browsers.

word-break – used to specify line breaking rules between words. Originally in Internet Explorer 5.5 and now standardized in CSS3[14]. Supported in all major browsers except Opera.

word-wrap – specifies whether or not the browser should break lines in the middle of words. First created for Internet Explorer 5.5 and now standardized in CSS3 as overflow-wrap[15], although all major browsers support it as word-wrap.

Additionally, many of the new CSS3 visual effects have Internet Explorer to thank for laying the groundwork. Internet Explorer 4 introduced the proprietary filter property making it the first browser capable of:

Generating gradients from CSS instructions (CSS3: gradients)

Creating semitransparent elements with an alpha filter (CSS3: opacity and RGBA)

Rotating an element an arbitrary number of degrees (CSS3: transform with rotate())

Applying a drop shadow to an element (CSS3: box-shadow)

Applying a matrix transform to an element (CSS3: transform with matrix())

Internet Explorer 4 also had a feature called transitions, which allowed you to create some basic animation on the page using filters. The transitions were mostly based on the transitions commonly available in PowerPoint at the time, such as fading in or out, checkerboard, and so on[16].

All of these capabilities are featured in CSS3 in one way or another. It’s pretty amazing that Internet Explorer 4, released in 1997, had all of these capabilities and we are now just starting to get the same capabilities in other browsers.

Other HTML5 contributions

There is a lot of HTML5 that comes directly out of Internet Explorer and the APIs introduced. Here are some that have not yet been mentioned in this post:

Drag and Drop – one of the coolest parts of HTML5 is the definition of native drag-and-drop[17]. This API originated in Internet Explorer 5 and has been described, with very few changes, in HTML5. The main difference is the addition of the draggable attribute to mark arbitrary elements as draggable (Internet Explorer used a JavaScript call, element.dragDrop(), to do this). Other than that, the API closely mirrors the original and is now supported in all major desktop browsers.

Clipboard Access – now split out from HTML5 into its own spec[18], grants the browser access to the clipboard in certain situations. This API originally appeared in Internet Explorer 6 and was then copied by Safari, which moved clipboardData off of the window object and onto the event object for clipboard events. Safari’s change was kept as part of the HTML5 version, and clipboard access is now available in all major desktop browsers except for Opera.

Rich Text Editing – rich text editing using designMode was introduced in Internet Explorer 4 because Microsoft wanted a better text editing experience for Hotmail users. Later, Internet Explorer 5.5 introduced contentEditable as a lighter-weight way of doing rich text editing. Along with both of these came the dreaded execCommand() method and its associated methods. For better or worse, this API for rich text editing was standardized in HTML5[19] and is currently supported in all major desktop browsers as well as Mobile Safari and the Android browser.

Conclusion

While it’s easy and popular to poke at Internet Explorer, in reality, we wouldn’t have the web as we know it today if not for its contributions. Where would the web be without XMLHttpRequest and innerHTML? Those were the very catalysts for the Ajax revolution of web applications, upon which a lot of the new capabilities have been built. It seems funny to look back at the browser that has become a “bad guy” of the Internet and see that we wouldn’t be where we are today without it.

Yes, Internet Explorer had its flaws, but for most of the history of the Internet it was the browser that was pushing technology forward. Now that we’re in a period of massive browser competition and innovation, it’s easy to forget where we all came from. So the next time you run into people who work on Internet Explorer, instead of hurling insults and tomatoes, say thanks for helping to make the Internet what it is today and for making web development one of the most important jobs in the world.

References

[1] innerHTML in HTML5

[2] textContent in DOM Level 3

[3] insertAdjacentHTML() in HTML5

[4] Event Handlers on Elements (HTML5)

[5] mouseenter (DOM Level 3 Events)

[6] mouseleave (DOM Level 3 Events)

[7] focusin (DOM Level 3 Events)

[8] focusout (DOM Level 3 Events)

[9] DOMParser interface (HTML5)

[10] JavaScript Style Sheets (Wikipedia)

[11] The CSS Saga by Håkon Wium Lie and Bert Bos

[12] text-overflow property (CSS3 UI)

[13] overflow-x and overflow-y (CSS3 Box)

[14] word-break (CSS3 Text)

[15] overflow-wrap/word-wrap (CSS3 Text)

[16] Introduction to Filters and Transitions (MSDN)

[17] Drag and Drop (HTML5)

August 15, 2012

Setting up SSL on an Amazon Elastic Load Balancer

In my last post, I talked about setting up Apache as an SSL front end to Play, with the goal of having SSL to the end-user while using normal HTTP internally. That approach works well when you have just one server. When you have multiple servers behind a load balancer, the approach is a little bit different.

We’re using Amazon Web Services for WellFurnished and so are using an Elastic Load Balancer (ELB) to handle traffic. It’s possible to have SSL terminated at the ELB and HTTP the rest of the way, creating a setup similar to the one with Apache. You basically upload your SSL certificates to the ELB, open up port 443, and you’re in business. In theory, it’s a very simple setup, but in practice I found there were a few hiccups.

Setting up SSL

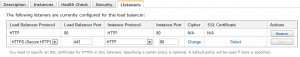

The Amazon web services console lets you go right to an ELB (in the EC2 section under “Load Balancers”). When you click on an ELB, you get its properties in the bottom pane. Click on the Listeners tab and you see all the ports that are enabled currently. The last row is reserve so that you can add new ports. If you change the first drop-down to HTTPS, then the entire row changes so you can enter the appropriate information.

In this dialog, the load balancer protocol and port are set to HTTPS and 443, respectively. The instance protocol and port are still set at HTTP and 80, meaning that the ELB will talk HTTP to all of its instances.

Of course, HTTPS is useless without a valid certificate so that web browsers can verify the site.

Uploading certificates to an ELB

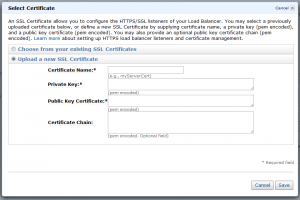

When you click on the Select link to specify an SSL certificate you get the following dialog:

The dialog asks you to enter four pieces of information:

Certificate Name – The name you want to use to keep track of the certificate within the AWS console.

Private Key – The key file you generated as part of your certificate request.

Public Key Certificate – The public facing certificate provided by your certificate authority.

Certificate Chain – An optional group of certificates to validate your certificate.

Providing the certificate name is straightforward; it can be anything you want. The name exists only so you can keep track of the certificate and has no other purpose.

The other three fields are a little bit trickier. Depending on the source of your SSL certificates, you may have to do a few more steps in order to get things working. We started out by getting a Comodo PositiveSSL certificate. When we received our certificate, we actually received three files in a single zip:

www_wellfurnished_com.crt

PositiveSSLCA2.crt

AddTrustExternalCARoot.crt

The actual names of the files may vary depending on the type of SSL certificate you purchase and the certificate authority. The first file is the file that is unique to your domain while the other two are used to form a certificate chain[1] for your domain. You will always have a file with the word “root” in it, which is the root certificate[2] for your domain while the other is an intermediate certificate.
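If you aren’t sure which file is the intermediate and which is the root, OpenSSL can print each certificate’s subject (who it belongs to) and issuer (who signed it). The following is a minimal sketch, assuming the files are PEM-encoded; describe_cert and is_self_signed are hypothetical helper names, not OpenSSL commands. Matching subject and issuer is only a quick heuristic for spotting the self-signed root, not a signature check.

```shell
# Sketch: identify which certificate is which.
# describe_cert and is_self_signed are hypothetical helpers; both assume PEM input.
describe_cert() {
  # subject = who the certificate belongs to, issuer = who signed it
  openssl x509 -noout -subject -issuer -in "$1"
}

is_self_signed() {
  # Heuristic: a root certificate names itself as its own issuer.
  # This compares names only; it does not verify the signature.
  subject=$(openssl x509 -noout -subject -in "$1" | sed 's/^subject=//')
  issuer=$(openssl x509 -noout -issuer -in "$1" | sed 's/^issuer=//')
  [ "$subject" = "$issuer" ]
}

# Usage:
#   for f in *.crt; do describe_cert "$f"; done
#   is_self_signed AddTrustExternalCARoot.crt && echo "root"
```

The domain certificate’s issuer should match the intermediate’s subject, and the intermediate’s issuer should match the root’s subject.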

All Amazon Web Services work with PEM files for certificates, and you’ll note none of the files we received were in that format. So before using the files, they have to be translated into a format that Amazon will understand.

Private key

The private key is something that you generated along with your certificate request. Hopefully, you kept it safe knowing that you would need it again one day. To get the Amazon supported format for your key, you need to use OpenSSL[3] in this way:

openssl rsa -in host.key -text

The result of this command is a lot of text, the final piece of which is what Amazon is looking for. You’ll see something that looks like this:

-----BEGIN RSA PRIVATE KEY-----

(tons of text)

-----END RSA PRIVATE KEY-----

Copy this whole block, including the delimiters that begin and end the private key text, and paste it into the Private Key box in the AWS dialog.
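The -text option prints the key’s numeric components in addition to the PEM block. If you only want the block itself, one option is to drop -text and write straight to a file with -out. This is a minimal sketch; key_to_pem and the file names are hypothetical. Depending on your OpenSSL version, the delimiters may read BEGIN PRIVATE KEY (PKCS#8) instead of BEGIN RSA PRIVATE KEY; OpenSSL 3.x has a -traditional option for the older RSA form.

```shell
# Sketch: write the private key as a bare PEM file, without the -text dump.
# key_to_pem is a hypothetical helper; the file names are placeholders.
key_to_pem() {
  in_key="$1"   # the key generated with your certificate request
  out_pem="$2"  # file whose contents go into the Private Key box
  # Without -text, openssl rsa emits only the PEM block. No cipher option is
  # given, so the output is unencrypted (OpenSSL prompts if the input has a passphrase).
  openssl rsa -in "$in_key" -out "$out_pem"
}

# Usage: key_to_pem host.key host-key.pem
```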

Public certificate

The public certificate is the domain-specific file that you receive, in our case, www_wellfurnished_com.crt. This certificate file must be changed into PEM format for Amazon to use (your certificate might already be in PEM format, in which case you can just open it up in a text editor, copy the text, and paste it into the dialog). Once again, OpenSSL saves the day by transforming the certificate file into PEM format:

openssl x509 -inform PEM -in www_example_com.crt

The output will look something like this:

-----BEGIN CERTIFICATE-----

(tons of text)

-----END CERTIFICATE-----

Copy this entire text block, including the begin and end delimiters, and paste it into the Public Certificate field in the AWS dialog.
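Note that -inform PEM tells OpenSSL the input is already PEM; if your certificate arrived as binary DER instead, the same command needs -inform DER. The following is a minimal sketch that picks the right option by checking for the PEM header. cert_to_pem is a hypothetical helper, not part of OpenSSL.

```shell
# Sketch: print a certificate in PEM form whether the file is PEM or DER.
# cert_to_pem is a hypothetical helper; the file names below are placeholders.
cert_to_pem() {
  # PEM files are text and contain this header; DER files are binary.
  if grep -q -- "-----BEGIN CERTIFICATE-----" "$1"; then
    openssl x509 -inform PEM -in "$1"
  else
    openssl x509 -inform DER -in "$1"
  fi
}

# Usage: cert_to_pem www_example_com.crt > www_example_com.pem
```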

Certificate chain

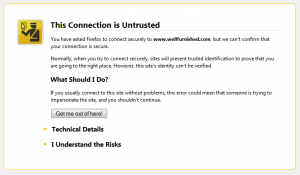

Don’t be fooled by the AWS dialog, the certificate chain isn’t really optional when your ELB is talking directly to a browser. The certificate chain is the part that verifies that fully verifies which certificate authority issued the certificate and therefore whether or not the browser can trust that the domain certificate is valid. Different browsers handle things in different ways, but if you are missing the certificate chain and Firefox, you get a pretty scary warning page:

So if your ELB is going to be talking to browsers directly, you definitely need to provide the certificate chain.

The certificate chain is exactly what it sounds like: a series of certificates. For the AWS dialog, you need to include the intermediate certificate and the root certificate one after the other without any blank lines. Both certificates need to be in PEM format, so you need to go through the same steps as with the domain certificate.

(openssl x509 -inform PEM -in PositiveSSLCA2.crt; openssl x509 -inform PEM -in AddTrustExternalCARoot.crt)

The output of this command is the concatenation of the two certificates in PEM format. Copy the entire output into the Certificate Chain box in the dialog.
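Before pasting, it can help to save the chain to a file and check locally that it really links your domain certificate to the root. The following is a minimal sketch using openssl verify; build_chain and verify_chain are hypothetical helpers, and the file names are the ones from this example. Keep in mind that openssl verify also checks validity dates, so an expired intermediate or root fails here as well.

```shell
# Sketch: assemble the Certificate Chain contents and check them locally.
# build_chain and verify_chain are hypothetical helpers.
build_chain() {
  # $1 = intermediate, $2 = root. openssl x509 prints each PEM block
  # with no blank lines between them.
  openssl x509 -inform PEM -in "$1"
  openssl x509 -inform PEM -in "$2"
}

verify_chain() {
  # $1 = domain certificate, $2 = intermediate, $3 = root.
  # -CAfile names the root to trust; -untrusted supplies the intermediate
  # so OpenSSL can link the domain certificate to that root.
  openssl verify -CAfile "$3" -untrusted "$2" "$1"
}

# Usage:
#   build_chain PositiveSSLCA2.crt AddTrustExternalCARoot.crt > chain.pem
#   verify_chain www_wellfurnished_com.crt PositiveSSLCA2.crt AddTrustExternalCARoot.crt
```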

Note on errors