Calum Chace's Blog

November 14, 2014

Comment on Transcendence, the movie by Stephen Oberauer

“And surely to goodness Hollywood should by now have found less hackneyed ways to kill off powerful aliens and super-intelligences than infecting them with a virus” – ha ha, excellent point! I quite enjoyed the movie… I guess that means I’ll enjoy your book as well :)

August 21, 2014

Comment on Virtual reality – for real? by Calum Chace

Hi, I’m sorry you’re having that problem. The blog is on a standard WordPress platform, and no-one else has reported the problem. Perhaps it is your browser.

July 9, 2014

Book review: Superintelligence

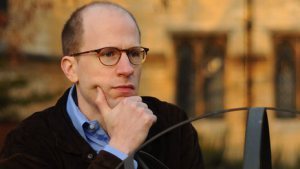

Nick Bostrom is one of the cleverest people in the world. He is a professor of philosophy at Oxford University, and was recently voted the 15th most influential thinker in the world by the readers of Prospect magazine. He has laboured mightily and brought forth a very important book, Superintelligence: Paths, Dangers, Strategies. I hesitate to tangle with this leviathan, but its publication is a landmark event in the debate which this blog is all about, so I must.

I hope this book finds a huge audience. It deserves to. The subject is vitally important for our species, and no-one has thought more deeply or more clearly than Bostrom about whether superintelligence is coming, what it will be like, and whether we can arrange for a good outcome – and indeed what “a good outcome” actually means.

It’s not an easy read. Bostrom has a nice line in wry self-deprecating humour, so I’ll let him explain:

“This has not been an easy book to write. I have tried to make it an easy book to read, but I don’t think I have quite succeeded. … the target audience [is] an earlier time-slice of myself, and I tried to produce a book that I would have enjoyed reading. This could prove a narrow demographic.”

This passage demonstrates that Bostrom can write very well indeed. Unfortunately the search for precision often lures him into an overly academic style. For this book at least, he might have thought twice about using words like modulo, percept and irenic without explanation – or at all.

Superintelligence covers a lot of territory, and here I can only point out a few of the high points. Bostrom has compiled a meta-survey of 160 leading AI researchers: 50% of them think that an artificial general intelligence (AGI) – an AI which is at least our equal across all our cognitive functions – will be created by 2050. 90% of the researchers think it will arrive by 2100. Bostrom thinks these dates may prove too soon, but not by a huge margin.

He also thinks that an AGI will become a superintelligence very soon after its creation, and will quickly dominate other life forms (including us), and go on to exploit the full resources of the universe (“our cosmic endowment”) to achieve its goals. What obsesses Bostrom is what those goals will be, and whether we can determine them. If the goals are human-unfriendly, we are toast.

He does not think that intelligence augmentation or brain-computer interfaces can save us by enabling us to reach superintelligence ourselves. Superintelligence is a two-horse race between whole brain emulation (copying a human brain into a computer) and what he calls Good Old Fashioned AI (machine learning, neural networks and so on).

The book’s middle chapter is titled “Is the default outcome doom?” Uncharacteristically, Bostrom is coy about answering his own question, but the implication is yes, unless we can control the AGI (constrain its capabilities), or determine its motivation set. The second half of the book addresses these challenges in great depth. His conclusion on the control issue is that we probably cannot constrain an AGI for long, and anyway there wouldn’t be much point having one if you never opened up the throttle. His conclusion on the motivation issue is that we may be able to determine the goals of an AGI, but that it requires a lot more work, despite the years of intensive labour that he and his colleagues have already put in. There are huge difficulties in specifying what goals we would like the AGI to have, and if we manage that bit then there are massive further difficulties ensuring that the instructions we write remain effective. Forever.

Now perhaps I am being dense, but I cannot understand why anyone would think that a superintelligence would abide forever by rules that we installed at its creation. A successful superintelligence will live for aeons, operating at thousands or millions of times the speed that we do. It will discover facts about the laws of physics, and the parameters of intelligence and consciousness that we cannot even guess at. Surely our instructions will quickly become redundant. But Bostrom is a good deal smarter than me, and I hope that he is right and I am wrong.

In any case, Bostrom’s main argument – that we should take the prospect of superintelligence very seriously – is surely right. Towards the end of the book he issues a powerful rallying cry:

“Before the prospect of an intelligence explosion, we humans are like small children playing with a bomb. … [The] sensible thing to do would be to put it down gently, back out of the room, and contact the nearest adult. [But] the chances that we will all find the sense to put down the dangerous stuff seems almost negligible. … Nor is there a grown-up in sight. [So] in the teeth of this most unnatural and inhuman problem [we] need to bring all our human resourcefulness to bear on its solution.”

May 6, 2014

Volvo speeds up driverless car project

Volvo’s test of self-driving cars seems to be progressing significantly ahead of schedule. In a press release last December, the company said that “the project would commence in 2014 with customer research and technology development, [with the first cars] expected to be on the roads in Gothenburg by 2017.” An update just released says that “the first test cars are already rolling around the Swedish city of Gothenburg and the sophisticated Autopilot technology is performing well.”

Why the rush into self-driving cars, and what will be the consequences – intended and otherwise?

One big reason for introducing self-driving cars is safety. Human beings cause around a million road deaths every year, and computers are less prone to error – partly because they don’t get drunk, tired or angry. Driverless cars can also move together in synchronised “herds”, which could dramatically speed up journey times.

Some observers expect that commuting distances may increase, and commuting may become much less of a bore, as drivers read, watch movies, and catch up with emails (and blog posts) while their automatic pilots take care of the driving duties.

A lot has been made of the potential for job losses as driving jobs are automated, but most of this is overdone. Until and unless computers become conscious and pass the Turing Test, humans will be needed on board taxis, vans and trucks – partly to load and unload their cargoes, and partly to deal with the unexpected developments that the real world throws up on many journeys. Evidence for this is provided by the aviation industry. Planes have been flown “by wire” for decades, but we still don’t have pilot-less passenger or cargo planes.

May 5, 2014

Terminator or Transcendence?

Yesterday I participated in an online discussion of the movie Transcendence, along with Nikola Danaylov, the “Larry King” of transhumanism, and Stuart Armstrong of the Future of Humanity Institute at Oxford University. The debate was moderated by David Wood, chair of the London Futurist Group.

The video is here: