Calum Chace's Blog, page 21

March 15, 2015

Singularity University Summit, Seville, March 2015

Hyatt Hotels has revenues of $4bn and a market value of $8.4bn. Airbnb has revenues of $250m, 13 staff, pretty much no assets, and a market value of $14bn. It will soon be the world’s largest hotel company.

Uber was founded in 2009 and is valued at $40bn, despite – again – having pretty much no physical assets. It has taxi drivers up in arms all over the world.

Magic Leap, a virtual reality company, raised $50m in February 2014 and then $550m in October. It persuaded the second set of investors to contribute by showing them a virtual cup of coffee alongside a real one and asking them to pick up the real one.

These are examples of the new kind of company which is disrupting industries all round the world. Disruption of industries is not new, but the digital revolution means it is happening more often, and faster.

Singularity University (SU) exists to alert the rest of us to this development, and to help people discover ways to harness the digital revolution to solve the grand challenges facing humanity – challenges like hunger, poverty, poor healthcare and lack of education.

Peter Diamandis, one of the founders of SU, says the disruptive organisations exhibit the “six Ds”: as well as being Digitised and Disruptive, they are De-materialized, De-monetized, Deceptive, and Democratized. He argues that digitisation is a hugely positive force, leading to abundance where before there was scarcity. When you de-materialise a product or service, you can usually make it free at the margin.

In the three days of the SU Summit in Seville, ten SU faculty members (supported by a handful of alumni) took 1,000 delegates through the drivers and effects of the digital revolution, providing a raft of examples and advice on how to start your very own disruptive organisation.

The drivers are threefold. At the root is Moore’s Law, the observation that computer processing power doubles every eighteen months – an exponential increase. Moore’s Law helps bring the other two drivers into being. The first is the enormous proliferation of sensors, whose price has tumbled from $1,000 each to $2 in recent years. The second is the dramatic improvement in algorithms, which is making the artificial intelligence that adds value to all kinds of products and services more effective.
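To get a feel for what doubling every eighteen months implies, here is a minimal Python sketch. It assumes a clean, constant doubling period, which is an idealisation of Moore’s Law as described above, and the function name `growth_factor` is purely illustrative.

```python
def growth_factor(years: float, doubling_period_years: float = 1.5) -> float:
    """Return how many times larger a quantity becomes after `years`,
    assuming it doubles once every `doubling_period_years`.

    This is an idealised model (an assumption for illustration),
    not a forecast of real hardware performance.
    """
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Print the multiplier for a few illustrative time spans.
    for years in (1.5, 3, 15, 30):
        print(f"After {years} years: about {growth_factor(years):,.0f}x")
```

On this idealised assumption, fifteen years gives roughly a thousandfold increase and thirty years roughly a millionfold increase, which is why the word "exponential" carries so much weight in the argument.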

The impact of the new technologies enabled by these factors is hard to overstate: driverless cars, 3D printing, augmented reality, virtual reality, genetic manipulation, and the ability to convert healthcare from cure to prevention. Each of these changes is a revolution in itself. Together they will make the world virtually unrecognisable in a few years – and then do it all over again, only faster.

The people at SU are not blind to the potential downsides. In particular, the rapid improvement of artificial intelligence makes some people feel profoundly uncomfortable, initially because of the threat that AI will automate many human jobs out of existence, and in the longer term because of the difficulty in controlling an AI which is smarter than humans and has its own goals. The SU faculty have thought deeply about these problems, and continue to do so. They don’t have all the answers, but nobody does.

As someone who has been reading and thinking about these issues for many years, I was very impressed by the sober, thorough, and intelligent approach that SU takes. The summit definitely made me want to attend one of their courses.

The most interesting thing about the summit for me was the way SU seems to “aim off” the more radical ideas associated with its name. The word Exponential cropped up in every presentation, and usually several times. But the notion of the Singularity itself was hardly ever mentioned, and there was no coverage, for instance, of radical life extension. It almost made me think that SU should re-brand itself as the Exponential University.

March 14, 2015

Comment on Pandora’s Brain is published! by Calum Chace

Thanks Clive. I recommend attending a Singularity University event – check out their website, http://singularityu.org/.

Comment on Pandora’s Brain is published! by clivepinder

Make it happen – I’d like to attend a ‘not for early adopters’ conference that gives those of us interested yet not immersed an opportunity to learn more, and perhaps contribute a fresh perspective on how to bring these ideas into the mainstream. Good luck with the book sales.

March 13, 2015

Pandora’s Brain is published!

Pandora’s Brain is available today on Amazon sites around the world in both ebook and paperback formats.

I’m celebrating by attending the Singularity University Summit in Seville. The content of this conference has been inspiring and uplifting but also very grounded. As you would expect, the word “exponential” has been used a great deal, but the presenters – mostly SU faculty – have focused on changes expected in the near term, and have provided solid evidence and examples to support their claims about the future they envisage.

I’ve met some great SU people – including AI expert Neil Jacobstein, medical expert Daniel Kraft, and fellow novelist Ramez Naam (pictured above).

The conference has been superbly organised by Luis Rey, Director of the Colegio de San Francisco de Paula, and his colleagues. Over 1,000 people are attending, including entrepreneurs and representatives of governments and companies from over 20 countries. The whole event has been very professional and impressive. We ought to do one in London!

January 20, 2015

It’s that man again!

OK, I know some people have had enough of Mr Musk lately, but he does keep saying and doing interesting things.

In a wide-ranging and intriguing 8-minute interview with Max Tegmark (leading physicist and a founder of the Future of Life Institute), Musk lists the five technologies which will impact society the most. He doesn’t specify the timeframe.

His list of five is (not verbatim – it appears at 4 minutes in):

Making life multi-planetary

Efficient energy sources

Growing the footprint of the internet

Re-programming human genetics

Artificial Intelligence

A pretty good list, IMHO.

What is very cool is that he goes on to say (in his customary under-stated way) that he is “working on” the first three, and looking for ways to get involved in the other two. “Working on” is extraordinarily modest when you consider that his contributions to the first two are SpaceX, Tesla, and Solar City.

December 8, 2014

Comment on Attitudes towards Artificial General Intelligence by Anonymous

Good question, to which I don’t really know the answer. A cynic might say that academics are very conservative, and they are probably also very wary of falling into the same hype trap which has led to previous AI winters.

Also they are aware of the huge gap between current AI and AGI.

And they may be resentful of the intrusion into their space by the computer scientists who make up a lot of the AGI research community.

Probably a few other reasons too.

Comment on Attitudes towards Artificial General Intelligence by procellaria

Why are the neuroscientists so skeptical about the future development of artificial intelligence?

December 7, 2014

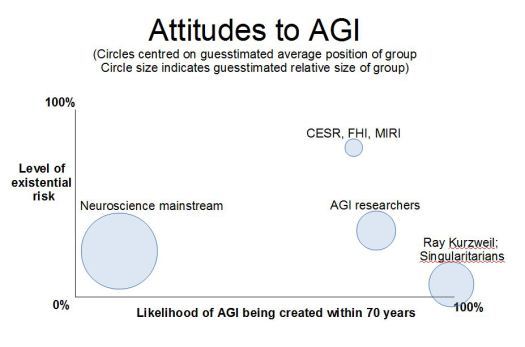

Attitudes towards Artificial General Intelligence

Following the recent comments by Elon Musk and Stephen Hawking, more people are thinking and talking about the possibility of an AGI being created, and what it might mean.

That’s a good thing.

The chart below is a sketch of how I suspect the opinions are forming within the various groups participating in the debate. (The general public is not yet participating to any significant degree.)

It’s conjecture based on news reporting and personal discussions, and not intended to offend, so please don’t sue me. Otherwise, comments welcome.

CSER = Centre for the Study of Existential Risk (Cambridge University)

FHI = Future of Humanity Institute (Oxford University)

MIRI = Machine Intelligence Research Institute (formerly the Singularity Institute)

November 16, 2014

Comment on Transcendence, the movie by Stephen Oberauer

Thanks :)

November 15, 2014

Comment on Transcendence, the movie by Calum Chace

Hi Stephen. Yup, no viruses in Pandora! And congratulations on your recent prize!