Sam Harris's Blog, page 21

March 25, 2014

The Path Between Pseudo-Spirituality and Pseudo-Science

(Photo via Bala Sivakumar)

I am often asked what will replace organized religion. The answer, I believe, is nothing and everything. Nothing need replace its ludicrous and divisive doctrines—such as the idea that Jesus will return to earth and hurl unbelievers into a lake of fire, or that death in defense of Islam is the highest good. These are terrifying and debasing fictions. But what about love, compassion, moral goodness, and self-transcendence? Many people still imagine that religion is the true repository of these virtues. To change this, we must begin to think about the full range of human experience in a way that is as free of dogma, cultural prejudice, and wishful thinking as the best science already is. That is the subject of my next book, Waking Up: A Guide to Spirituality Without Religion.

Authors who attempt to build a bridge between science and spirituality tend to make one of two mistakes: Scientists generally start with an impoverished view of spiritual experience, assuming that it must be a grandiose way of describing ordinary states of mind—parental love, artistic inspiration, awe at the beauty of the night sky. In this vein, one finds Einstein’s amazement at the intelligibility of Nature’s laws described as though it were a kind of mystical insight.

New Age thinkers usually enter the ditch on the other side of the road: They idealize altered states of consciousness and draw specious connections between subjective experience and the spookier theories at the frontiers of physics. Here we are told that the Buddha and other contemplatives anticipated modern cosmology or quantum mechanics and that by transcending the sense of self, a person can realize his identity with the One Mind that gave birth to the cosmos.

In the end, we are left to choose between pseudo-spirituality and pseudo-science.

Few scientists and philosophers have developed strong skills of introspection—in fact, many doubt that such abilities even exist. Conversely, many of the greatest contemplatives know nothing about science. I know brilliant scientists and philosophers who seem unable to make the most basic discriminations about their own moment-to-moment experience; and I have known contemplatives who spent decades meditating in silence who probably thought the earth was flat. And yet there is a connection between scientific fact and spiritual wisdom, and it is more direct than most people suppose.

I have been waiting for more than a decade to write Waking Up. Long before I saw any reason to criticize religion (The End of Faith, Letter to a Christian Nation), or to connect moral and scientific truths (The Moral Landscape, Free Will), I was interested in the nature of human consciousness and the possibility of spiritual experience. In Waking Up, I do my best to show that a certain form of spirituality is integral to understanding the nature of our minds. (For those of you who recoil at every use of the term “spirituality,” I recommend that you read a previous post.)

My goal in Waking Up is to help readers see the nature of their own minds in a new light. The book is by turns a seeker’s memoir, an introduction to the brain, a manual of contemplative instruction, and a philosophical unraveling of what most people consider to be the center of their inner lives: the feeling of self we call “I.” It is also my most personal book to date.

If you live in the U.S. or Canada, you can order a special hardcover edition of Waking Up through this website. This edition of the book will have the same text as the trade version, but it will be printed on nicer paper and have several other aesthetic enhancements. Simon and Schuster will be doing only one printing, and all orders must be placed by April 15th. Proceeds from the sale of the special edition of Waking Up will be used to develop an online course on the same topic.

March 17, 2014

Sam Harris vs Rev. Giles Fraser

March 10, 2014

The Significance of Our Insignificance

Peter Watson is an intellectual historian, journalist, and the author of thirteen books, including The German Genius, The Medici Conspiracy, and The Great Divide. He has written for The Sunday Times, The New York Times, the Observer, and the Spectator. He lives in London.

He was kind enough to answer a few questions about his new book The Age of Atheists: How We Have Sought to Live Since the Death of God.

* * *

1. You begin your account of atheism with the 19th-century German philosopher, Friedrich Nietzsche. Why is he a good starting point?

In 1882 Nietzsche declared, roundly, in strikingly clear language, that “God is dead”, adding that we had killed him. And this was a mere twenty years after Darwin’s Origin of Species, which is rightly understood as the greatest blow to Christianity. But Nietzsche’s work deserves recognition as a near-second. Darwinism was assimilated more quickly in Germany than in Britain, because the idea of evolution was especially prevalent there. Darwin remarks in one of his letters that his ideas had gone down better in Germany than anywhere else. And the history of Kulturkampf in Germany – the battle between Protestantism and Catholicism – meant that religion was under attack anyway, by its own adherents. Other people responded to Nietzsche more than to anyone else – Ibsen, for example, W. B. Yeats, Robert Graves, James Joyce. In Germany there was the phenomenon of the Nietzschean generations – young people who lived his philosophy in specially-created communities. And people responded to Nietzsche because his writing style was so pithy, to the point, memorable, and crystal clear. It is Nietzsche who tells us plainly, eloquently, that there is nothing external to, or higher than, life itself, no “beyond” or “above”, no transcendence and nothing metaphysical. This was dangerous thinking at the time, and has remained threatening for many people.

2. You say at one point in your book that psychology, or perhaps therapy, has taken over from religion as a way to understand our predicament, and – to an extent – deal with it. Do you follow Freud in viewing religion as, essentially, a product of neurosis?

I do think there is sound anthropological evidence that the first “priests”, the shamans of Siberia, were probably psychological misfits or malcontents, and that throughout history we have gone on from there, because many well known religious figures – some of the Hebrew prophets, John the Baptist, St. Paul, St. Augustine, Joan of Arc, Luther – were psychologically odd. Religion is not so much neurosis as psychological adjustment to our predicament – that’s the key, religion is to be understood psychologically, not theologically. It was George Carey, when he was archbishop of Canterbury, not me, who said “Jesus the Saviour is becoming Jesus the Counselor”. (This was in the 1990s.) And it was a well known Boston rabbi, Joshua Loth Liebman, who, soon after the end of World War Two, wrote a best-selling book that admitted that traditional religion had been too harsh on ordinary believers and that the churches and the synagogues and the mosques had a great deal to learn from what he called the new depth psychology – he meant Freudianism. So the church invited the psychologists to put their tanks on its lawn, so to speak. And psychotherapy hasn’t looked back. More people go into therapy now as a search for meaning than for treatment for mental illness.

3. What do you conclude from this?

That worship, the religious impulse, is best understood as a sociological phenomenon, rather than a theological one. In your own books you point up some of the absurdities of religion, but the two I regard as most revealing are the worship of a Royal Enfield motor-bicycle in a region of India, a bike involved in a crash in which its driver was killed but now is reckoned to have supernatural powers. And second, the Internet site, godchecker.com, which lists – apparently without irony – more than 3,000 “supreme beings.” I wonder how many fact-checkers they have. (That last sentence is written in a new type-face I have invented, called Ironics.)

In the recent world-wide survey of religion and economics by Pippa Norris and Ronald Inglehart, they show convincingly that religion is expanding in those areas of the world where ‘existential insecurity’ – poverty, natural disasters, disease, inadequate water supplies, HIV/AIDS, the lack of decent health care – is endemic and growing, whereas in the more prosperous and secure West, including now the USA, atheism is inexorably on the rise. Religion is prevalent among the poor and in decline in the more prosperous parts of the world. It is less that religion is on the rise than that poverty is.

4. In your book you survey the views of a great number of people. How would you describe your own atheism?

We are gifted with language and Nietzsche had a gift for language. I follow people like the German poet Rilke and the American philosopher Richard Rorty who say that our way to find meaning in life is to use language to “name” the world, to describe new aspects of it that haven’t been described before, and in so doing enlarge the world we inhabit, enlarge it for everyone. This links science and the arts, in particular poetry. When new sciences are invented they bring with them new language, and scientific discoveries – continental drift, say, dendrochronology, the Higgs boson – that enlarge our understanding precisely through incorporating new language. But so does the best art, the best poetry, the best theatre. This is therefore an exercise for the informed – increasingly the very well informed, as the more mature sciences are now more or less inaccessible to the layman. Language enables us to be both precise about the world, and to generalize. As a result we know that life is made up of lots and lots of beautiful little phenomena, and that large abstractions, however beautiful in their own way, are not enough. There is no one secret to life, other than that there is no one secret to life. If you must have a transcendent idea then make it a search for “the good” or “the beautiful” or “the useful”, always realizing that your answers will be personal, finite and never final. The Anglo-American philosopher Alasdair MacIntyre said “The good life is the life spent seeking the good life.” That implies effort. We can have no satisfaction, no meaning, without effort.

February 23, 2014

The War on Reason

February 19, 2014

The Pleasure of Changing My Mind

(Photo via Simon X)

My recent collision with Daniel Dennett on the topic of free will has caused me to reflect on how best to publicly resolve differences of opinion. In fact, this has been a recurring theme of late. In August, I launched the Moral Landscape Challenge, an essay contest in which I invited readers to attack my conception of moral truth. I received more than 400 entries, and I look forward to publishing the winning essay later this year. Not everyone gets the opportunity to put his views on the line like this, and it is an experience that I greatly value. I spend a lot of time trying to change people’s beliefs, but I’m also in the business of changing my own. And I don’t want to be wrong for a moment longer than I have to be.

In response to the Moral Landscape Challenge, the psychologist Jonathan Haidt issued a challenge of his own: He bet $10,000 that the winning essay will fail to persuade me. This wager seems in good fun, and I welcome it. But Haidt then justified his confidence by offering a pseudo-scientific appraisal of the limits of my intellectual honesty. He did this by conducting a keyword search of my books: The frequency of “certainty” terms, Haidt says, reveals that I (along with the other “New Atheists”) am even more blinkered by dogmatism and bias than Sean Hannity, Glenn Beck, and Ann Coulter. This charge might have been insulting if it weren’t so silly. It is almost impossible to believe that Haidt expects his “research” on this topic to be taken seriously. But apparently he does. In fact, he claims to be continuing it.

Consider the following two passages (keywords expressing “certainty” are in boldface):

It is **obvious** and **undeniable**—and **must** **always** be remembered—that the Bible was the product of merely human minds. As such, it cannot provide **true** answers to **every** **factual** question that will arise for us in the 21st century.

It is **obvious** and **undeniable**—and **must** **always** be remembered—that the Bible was the product of Divine Omniscience. As such, it provides **true** answers to **every** **factual** question that will arise for us in the 21st century.

According to Haidt’s methodology, these passages exhibit the same degree of dogmatism. I hope it won’t appear too expressive of certainty on my part to observe how terrifically stupid that conclusion is. If, as Haidt alleges, verbal reasoning is just a way for people to “guard their reputations,” one wonders why he doesn’t use it for that purpose.

Haidt is right to observe that anyone can be misled by his own biases. (He just has the unfortunate habit of writing as though no one else understands this.) I will also concede that I don’t tend to lack confidence in my published views (that is one of the reasons I publish them). After my announcement of the Moral Landscape Challenge, a few readers asked whether I’ve ever changed my mind about anything. I trust my wrangling with Dennett has only deepened this sense of my incorrigibility. Perhaps it is worth recalling more of Haidt’s adamantine wisdom on this point:

[T]he benefits of disconfirmation depend on social relationships. We engage with friends and colleagues, but we reject any critique from our enemies.

Well, then I must be a very hard case. I received a long and detailed criticism of my work from a friend, Dan Dennett, and found it totally unpersuasive. How closed must I be to the views of my enemies?

Enter Jeremy Scahill: I’ve never met Scahill, and I’m not aware of his having attacked me in print, so it might seem a little paranoid to categorize him as an “enemy.” But he recently partnered with Glenn Greenwald and Murtaza Hussain to launch The Intercept, a new website dedicated to “fearless, adversarial journalism.” Greenwald has worked very hard to make himself my enemy, and Hussain has worked harder still. Both men have shown themselves to be unprofessional and unscrupulous whenever their misrepresentations of my views have been pointed out. This is just to say that, while I don’t usually think of myself as having enemies, if I were going to pick someone to prove me wrong on an important topic, it probably wouldn’t be Jeremy Scahill. I am, in Haidt’s terms, highly motivated to reason in a “lawyerly” way so as not to give him the pleasure of changing my mind. But change it he has.

Generally, I have supported President Obama’s approach to waging our war against global jihadism, and I’ve always assumed that I would approve of his targets and methods were I privy to the same information he is. I’ve also said publicly, on more than one occasion, that I thought our actions should be mostly covert. So the president’s campaign of targeted assassination has had my full support, and I lost no sleep over the killing of Anwar al-Awlaki. To me, the fact that he was an American citizen was immaterial.

I have also been very slow to worry about NSA eavesdropping. My ugly encounters with Greenwald may have colored my perception of this important story—but I just don’t know what I think about Edward Snowden. Is he a traitor or a hero? It still seems too soon to say. I don’t know enough about the secrets he has leaked or the consequences of his leaking them to have an opinion on that question.

However, last night I watched Scahill’s Oscar-nominated documentary Dirty Wars—twice. The film isn’t perfect. Despite the gravity of its subject matter, there is something slight about it, and its narrow focus on Scahill seems strangely self-regarding. At moments, I was left wondering whether important facts were being left out. But my primary experience in watching this film was of having my settled views about U.S. foreign policy suddenly and uncomfortably shifted. As a result, I no longer think about the prospects of our fighting an ongoing war on terror in quite the same way. In particular, I no longer believe that a mostly covert war makes strategic or moral sense. Among the costs of our current approach are a total lack of accountability, abuse of the press, collusion with tyrants and warlords, a failure to enlist allies, and an ongoing commitment to secrecy and deception that is corrosive to our politics and to our standing abroad.

Any response to terrorism seems likely to kill and injure innocent people, and such collateral damage will always produce some number of future enemies. But Dirty Wars made me think that the consequences of producing such casualties covertly are probably far worse. This may not sound like a Road to Damascus conversion, but it is actually quite significant. My view of specific questions has changed—for instance, I now believe that the assassination of al-Awlaki set a very dangerous precedent—and my general sense of our actions abroad has grown conflicted. I do not doubt that we need to spy, maintain state secrets, and sometimes engage in covert operations, but I now believe that the world is paying an unacceptable price for the degree to which we are doing these things. The details of how we have been waging our war on terror are appalling, and Scahill’s film paints a picture of callousness and ineptitude that shocked me. Having seen it, I am embarrassed to have been so trusting and complacent with respect to my government’s use of force.

Clearly, this won’t be the last time I’ll be obliged to change my mind. In fact, I’m sure of it. Some things one just knows because they are altogether obvious—and, well, undeniable. At least, one always denies them at one’s peril. So I remain committed to discovering my own biases. And whether they are blatant, or merely implicit, I will work extremely hard to correct them. I’m also confident that if I don’t do this, my readers will inevitably notice. It’s necessary that I proceed under an assurance of my own fallibility—never infallibility!—because it has proven itself to be entirely accurate, again and again. I’m certain this would remain true were I to live forever. Some things are just guaranteed. I think that self-doubt is wholly appropriate—essential, frankly—whenever one attempts to think precisely and factually about anything—or, indeed, about everything. Being a renowned scientist, Jonathan Haidt must fundamentally agree. I urge him to complete his research on my dogmatism and cognitive closure at the soonest opportunity. The man has a gift—it is pure and distinct and positively beguiling. He mustn’t waste it.

NOTES

Haidt used the following keywords to conduct a searching, scientific analysis of “New Atheist” books:

absolute, absolutely, accura*, all, altogether, always, apparent, assur*, blatant*, certain*, clear, clearly, commit, commitment*, commits, committ*, complete, completed, completely, completes, confidence, confident, confidently, correct*, defined, definite, definitely, definitive*, directly, distinct*, entire*, essential, ever, every, everybod*, everything*, evident*, exact*, explicit*, extremely, fact, facts, factual*, forever, frankly, fundamental, fundamentalis*, fundamentally, fundamentals, guarant*, implicit*, indeed, inevitab*, infallib*, invariab*, irrefu*, must, mustnt, must’nt, mustn’t, mustve, must’ve, necessar*, never, obvious*, perfect*, positiv*, precis*, proof, prove*, pure*, sure*, total, totally, true, truest, truly, truth*, unambigu*, undeniab*, undoubt*, unquestion*, wholly.

February 12, 2014

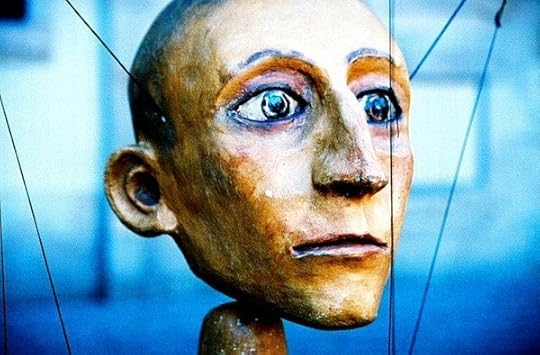

The Marionette’s Lament

(Photo via Max Boschini)

Dear Dan—

I’d like to begin by thanking you for taking the time to review Free Will at such length. Publicly engaging me on this topic is certainly preferable to grumbling in private. Your writing is admirably clear, as always, which worries me in this case, because we appear to disagree about a great many things, including the very nature of our disagreement.

I want to begin by reminding our readers—and myself—that exchanges like this aren’t necessarily pointless. Perhaps you need no encouragement on that front, but I’m afraid I do. In recent years, I have spent so much time debating scientists, philosophers, and other scholars that I’ve begun to doubt whether any smart person retains the ability to change his mind. This is one of the great scandals of intellectual life: The virtues of rational discourse are everywhere espoused, and yet witnessing someone relinquish a cherished opinion in real time is about as common as seeing a supernova explode overhead. The perpetual stalemate one encounters in public debates is annoying because it is so clearly the product of motivated reasoning, self-deception, and other failures of rationality—and yet we’ve grown to expect it on every topic, no matter how intelligent and well-intentioned the participants. I hope you and I don’t give our readers further cause for cynicism on this front.

Unfortunately, your review of my book doesn’t offer many reasons for optimism. It is a strange document—avuncular in places, but more generally sneering. I think it fair to say that one could watch an entire season of Downton Abbey on Ritalin and not detect a finer note of condescension than you manage for twenty pages running.

I am not being disingenuous when I say this museum of mistakes is valuable; I am grateful to Harris for saying, so boldly and clearly, what less outgoing scientists are thinking but keeping to themselves. I have always suspected that many who hold this hard determinist view are making these mistakes, but we mustn’t put words in people’s mouths, and now Harris has done us a great service by articulating the points explicitly, and the chorus of approval he has received from scientists goes a long way to confirming that they have been making these mistakes all along. Wolfgang Pauli’s famous dismissal of another physicist’s work as “not even wrong” reminds us of the value of crystallizing an ambient cloud of hunches into something that can be shown to be wrong. Correcting widespread misunderstanding is usually the work of many hands, and Harris has made a significant contribution.

I hope you will recognize that your beloved Rapoport’s rules have failed you here. If you have decided, according to the rule, to first mention something positive about the target of your criticism, it will not do to say that you admire him for the enormity of his errors and the folly with which he clings to them despite the sterling example you’ve set in your own work. Yes, you may assert, “I am not being disingenuous when I say this museum of mistakes is valuable,” but you are, in truth, being disingenuous. If that isn’t clear, permit me to spell it out just this once: You are asking the word “valuable” to pass as a token of praise, however faint. But according to you, my book is “valuable” for reasons that I should find embarrassing. If I valued it as you do, I should rue the day I wrote it (as you would, had you brought such “value” into the world). And it would be disingenuous of me not to notice how your prickliness and preening appear: You write as one protecting his academic turf. Behind and between almost every word of your essay—like some toxic background radiation—one detects an explosion of professorial vanity.

And yet many readers, along with several of our friends and colleagues, have praised us for airing our differences in so civil a fashion—the implication being that religious demagogues would have declared mutual fatwas and shed each other’s blood. Well, that is a pretty low bar, and I don’t think we should be congratulated for having cleared it. The truth is that you and I could have done a much better job—and produced something well worth reading—had we explored the topic of free will in a proper conversation. Whether we called it a “conversation” or a “debate” would have been immaterial. And, as you know, I urged you to engage me that way on multiple occasions and up to the eleventh hour. But you insisted upon writing your review. Perhaps you thought that I was hoping to spare myself a proper defenestration. Not so. I was hoping to spare our readers a feeling of boredom that surpasseth all understanding.

As I expected, our exchange will now be far less interesting or useful than a conversation/debate would have been. Trading 10,000-word essays is simply not the best way to get to the bottom of things. If I attempt to correct every faulty inference and misrepresentation in your review, the result will be deadly to read. Nor will you be able to correct my missteps, as you could have if we were exchanging 500-word volleys. I could heap misconception upon irrelevancy for pages—as you have done—and there would be no way to stop me. In the end, our readers will be left to reconcile a book-length catalogue of discrepancies.

Let me give you an example, just to illustrate how tedious it is to untie these knots. You quote me as saying:

If determinism is true, the future is set—and this includes all our future states of mind and our subsequent behavior. And to the extent that the law of cause and effect is subject to indeterminism—quantum or otherwise—we can take no credit for what happens. There is no combination of these truths that seems compatible with the popular notion of free will.

You then announce that “the sentence about indeterminism is false”—a point you seek to prove by recourse to an old thought experiment involving a “space pirate” and a machine that amplifies quantum indeterminacy. After which, you lovingly inscribe the following epitaph onto my gravestone:

These are not new ideas. For instance I have defended them explicitly in 1978, 1984, and 2003. I wish Harris had noticed that he contradicts them here, and I’m curious to learn how he proposes to counter my arguments.

You see, dear reader, Harris hasn’t done his homework. What a pity…. But you have simply misread me, Dan—and that entire page in your review was a useless digression. I am not saying that the mere addition of indeterminism to the clockwork makes responsibility impossible. I am saying, as you have always conceded, that seeking to ground free will in indeterminism is hopeless, because truly random processes are precisely those for which we can take no responsibility. Yes, we might still express our beliefs and opinions while being gently buffeted by random events (as you show in your thought experiment), but if our beliefs and opinions were themselves randomly generated, this would offer no basis for human responsibility (much less free will). Bored yet?

You do this again and again in your review. And when you are not misreading me, you construct bad analogies—to sunsets, color vision, automobiles—none of which accomplish their intended purpose. Some are simply faulty (that is, they don’t run through); others make my point for me, demonstrating that you have missed my point (or, somehow, your own). Consider what you say about sunsets to show that free will should not be considered an illusion:

After all, most people used to believe the sun went around the earth. They were wrong, and it took some heavy lifting to convince them of this. Maybe this factoid is a reflection on how much work science and philosophy still have to do to give everyday laypeople a sound concept of free will…. When we found out that the sun does not revolve around the earth, we didn’t then insist that there is no such thing as the sun (because what the folk mean by “sun” is “that bright thing that goes around the earth”). Now that we understand what sunsets are, we don’t call them illusions. They are real phenomena that can mislead the naive.

Of course, the sun isn’t an illusion, but geocentrism is. Our native sense that the sun revolves around a stationary Earth is simply mistaken. And any “project of sympathetic reconstruction” (your compatibilism) with regard to this illusion would be just a failure to speak plainly about the facts. I have never disputed that mental phenomena such as thoughts, efforts, volition, reasoning, and so forth exist. These are the many “suns” of the mind that any scientific theory must conserve (modulo some clarifying surprises, as has happened for the concept of “memory”). But free will is like the geocentric illusion: It is the very thing that gets obliterated once we begin speaking in detail about the origins of our thoughts and actions. You’re not just begging the question here; you’re begging it with a sloppy analogy. The same holds for your reference to color vision (which I discussed in a previous essay).

And when you are not causing problems with your own analogies, you are distorting mine. For instance, you write that you were especially dismayed by the cover of my book, which depicts a puppet theater. This cover image is justified because I argue that each of us is moved by chance and necessity, just as a marionette is set dancing on its strings. But I never suggest that this is the same as being manipulated by a human puppeteer who overrides our actual beliefs and desires and obliges us to behave in ways we do not intend. You seem eager to draw this implication, however, and so you press on with an irrelevant discussion of game theory (another area in which you allege I haven’t done my homework). Again, I am left wishing we had had a conversation that would have prevented so many pedantic digressions.

In any case, I cannot bear to write a long essay that consists in my repeatedly taking your foot out of my mouth. Instead, I will do my best to drive to the core of our disagreement.

Let’s begin by noticing a few things we actually agree about: We agree that human thought and behavior are determined by prior states of the universe and its laws—and that any contributions of indeterminism are completely irrelevant to the question of free will. We also agree that our thoughts and actions in the present influence how we think and act in the future. We both acknowledge that people can change, acquire skills, and become better equipped to get what they want out of life. We know that there is a difference between a morally healthy person and a psychopath, as well as between one who is motivated and disciplined, and thus able to accomplish his aims, and one who suffers a terminal case of apathy or weakness of will. We both understand that planning and reasoning guide human behavior in innumerable ways and that an ability to follow plans and to be responsive to reasons is part of what makes us human. We agree about so many things, in fact, that at one point you brand me “a compatibilist in everything but name.” Of course, you can’t really mean this, because you go on to write as though I were oblivious to most of what human beings manage to accomplish. At some points you say that I’ve thrown the baby out with the bath; at others you merely complain that I won’t call this baby by the right name (“free will”). Which is it?

However, it seems to me that we do diverge at two points:

1. You think that compatibilists like yourself have purified the concept of free will by “deliberately using cleaned-up, demystified substitutes for the folk concepts.” I believe that you have changed the subject and are now ignoring the very phenomenon we should be talking about—the common, felt sense that I/he/she/you could have done otherwise (generally known as “libertarian” or “contra-causal” free will), with all its moral implications. The legitimacy of your attempting to make free will “presentable” by performing conceptual surgery on it is our main point of contention. Whether or not I can convince you of the speciousness of the compatibilist project, I hope we can agree in the abstract that there is a difference between thinking more clearly about a phenomenon and (wittingly or unwittingly) thinking about something else. I intend to show that you are doing the latter.

2. You believe that determinism at the microscopic level (as in the case of Austin’s missing his putt) is irrelevant to the question of human freedom and responsibility. I agree that it is irrelevant for many things we care about (it doesn’t obviate the distinction between voluntary and involuntary behavior, for instance), but it isn’t irrelevant in the way you suggest. And accepting incompatibilism has important intellectual and moral consequences that you ignore—the most important being, in my view, that it renders hatred patently irrational (while leaving love unscathed). If one is concerned about the consequences of maintaining a philosophical position, as I know you are, helping to close the door on human hatred seems far more beneficial than merely tinkering with a popular illusion.

We both know that the libertarian notion of free will makes no scientific or logical sense; you just doubt whether it is widespread among the folk—or you hope that it isn’t, or don’t much care whether it is (in truth, you are not very clear on this point). In defense of your insouciance, you cite a paper by Nahmias et al.[1] It probably won’t surprise you that I wasn’t as impressed by this research as you were. Nahmias and his coauthors repeatedly worry that their experimental subjects didn’t really understand the implications of determinism—and on my reading, they had good reason to be concerned. In fact, this is one of those rare papers in which the perfunctory doubts the authors raise, simply to show that they have thought of everything, turn out to be far more compelling than their own interpretations of their data. More than anything, this research suggests that people find the idea of libertarian free will so intuitively compelling that it is very difficult to get them to think clearly about determinism. Of course, I agree that what people think and feel is an empirical question. But it seems to me that we know much more about the popular view of free will than you and Nahmias let on.

It is worth noting that the most common objection I’ve heard to my position on free will is some version of the following:

If there is no free will, why write books or try to convince anyone of anything? People will believe whatever they believe. They have no choice! Your position on free will is, therefore, self-refuting. The fact that you are trying to convince people of the truth of your argument proves that you think they have the very freedom that you deny them.

Granted, some confusion between determinism and fatalism (which you and I have both warned against) is probably operating here, but comments of this kind also suggest that people think they have control over what they believe, as if the experience of being convinced by evidence and argument were voluntary. Perhaps such people also believe that they have decided to obey the law of gravity rather than fly around at their pleasure—but I doubt it. An illusion about mental freedom seems to be very widespread. My argument is that such freedom is incompatible with any form of causation (deterministic or otherwise)—which, as you know, is not a novel view. But I also argue that it is incompatible with the actual character of our subjective experience. That is why I say that the illusion of free will is itself an illusion—which is another way of saying that if one really pays attention (and this is difficult), the illusion of free will disappears.

The popular, folk psychological sense of free will is a moment-to-moment experience, not a theory about the mind. It is primarily a first-person fact, not a third-person account of how human beings function. This distinction between first-person and third-person views was what I was trying to get at in the passage that seems to have mystified you (“I have thought long and hard about this passage, and I am still not sure I understand it…”). Everyone has a third-person picture of the human mind—some of us speak of neural circuits, some speak of souls—but the philosophical problem of free will arises from the fact that most people feel that they author their own thoughts and actions. It is very difficult to say what this feeling consists of or to untangle it from working memory, volition, motor planning, and the rest of what our minds are up to—but there can be little doubt that most people feel that they are the conscious source of their own thoughts and actions. Of course, you may wish to deny this very assertion, or believe it more parsimonious to say that we just don’t know how most people feel—and that might be a point worth discussing. But rather than deny the claim, you simply lose sight of it—shifting from first-person experiences to third-person accounts of phenomena that lie outside consciousness.

It is true, of course, that most educated people believe the whole brain is involved in making them who they are (indeed, you and I both believe this). But they experience only some of what goes on inside their brains. Contrary to what you suggest, I was not advancing a Cartesian theory of consciousness (a third-person view), or any “daft doctrine of [my] own devising.” I was simply drawing a line between what people experience and what they don’t (first-person). The moment you show that a person’s thoughts and actions were determined by events that he did not and could not see, feel, or anticipate, his third-person account of himself may remain unchanged (“Of course, I know that much of what goes on in my brain is unconscious, determined by genes, and so forth. So what?”), but his first-person sense of autonomy comes under immediate pressure—provided he is paying attention. As a matter of experience (first-person), there is a difference between being conscious of something and not being conscious of it. And if what a person is unconscious of are the antecedent causes of everything he thinks and does, this fact makes a mockery of the subjective freedom he feels he has. It is not enough at that point for him to simply declare theoretically (third-person) that these antecedent causes are “also me.”

Average Joe feels that he has free will (first-person) and doesn’t like to be told that it is an illusion. I say it is: Consider all the roots of your behavior that you cannot see or feel (first-person), cannot control (first-person), and did not summon into existence (first-person). You say: Nonsense! Average Joe contains all these causes. He is his genes and neurons too (third-person). This is where you put the rabbit in the hat.

Imagine that we live in a world where more or less everyone believes in the lost kingdom of Atlantis. You and your fellow compatibilists come along and offer comfort: Atlantis is real, you say. It is, in fact, the island of Sicily. You then go on to argue that Sicily answers to most of the claims people through the ages have made about Atlantis. Of course, not every popular notion survives this translation, because some beliefs about Atlantis are quite crazy, but those that really matter—or should matter, on your account—are easily mapped onto what is, in fact, the largest island in the Mediterranean. Your work is done, and now you insist that we spend the rest of our time and energy investigating the wonders of Sicily.

The truth, however, is that much of what causes people to be so enamored of Atlantis—in particular, the idea that an advanced civilization disappeared underwater—can’t be squared with our understanding of Sicily or any other spot on earth. So people are confused, and I believe that their confusion has very real consequences. But you rarely acknowledge the ways in which Sicily isn’t like Atlantis, and you don’t appear interested when those differences become morally salient. This is what strikes me as wrongheaded about your approach to free will.

For instance, ordinary people want to feel philosophically justified in hating evildoers and viewing them as the ultimate cause of their evil. This moral attitude is always vulnerable to our getting more information about causation—and in situations where the underlying causes of a person’s behavior become too clear, our feelings about their responsibility begin to shift. This is why I wrote that fully understanding the brain of a normal person would be analogous to finding an exculpatory tumor in it. I am not claiming that there is no difference between a normal person and one with impaired self-control. The former will be responsive to certain incentives and punishments, and the latter won’t. (And that is all the justification we need to resort to carrots and sticks or to lock incorrigibly dangerous people away forever.) But something in our moral attitude does change when we catch sight of these antecedent causes—and it should change. We should admit that a person is unlucky to be given the genes and life experience that doom him to psychopathy. Again, that doesn’t mean we can’t lock him up. But hating him is not rational, given a complete understanding of how he came to be who he is. Natural, yes; rational, no. Feeling compassion for him could be rational, however—in the same way that we could feel compassion for the six-year-old boy who was destined to become Jeffrey Dahmer. And while you scoff at “medicalizing” human evil, a complete understanding of the brain would do just that. Punishment is an extraordinarily blunt instrument. We need it because we understand so little about the brain, and our ability to influence it is limited. However, imagine that two hundred years in the future we really know what makes a person tick; Procrustean punishments won’t make practical sense, and they won’t make moral sense either. 

But you seem committed to the idea that certain people might actually deserve to be punished—if not precisely for the reasons that common folk imagine, nevertheless for reasons that have little or nothing to do with the good consequences that such punishments might have, all things considered. In other words, your compatibilism seems an attempt to justify the conventional notion of blame, which my view denies. This is a difference worth focusing on.

Let’s examine Austin’s example of his missed putt:

Consider the case where I miss a very short putt and kick myself because I could have holed it. It is not that I should have holed it if I had tried: I did try, and missed. It is not that I should have holed it if conditions had been different: that might of course be so, but I am talking about conditions as they precisely were, and asserting that I could have holed it. There is the rub. Nor does ‘I can hole it this time’ mean that I shall hole it this time if I try or if anything else; for I may try and miss, and yet not be convinced that I could not have done it; indeed, further experiments may confirm my belief that I could have done it that time, although I did not. (J.L. Austin. 1961. “Ifs and Cans,” in Austin, Philosophical Papers, edited by J. Urmson and G. Warnock. Oxford, Clarendon Press.)

This is a good place to start, because you say the following in your review:

I consider Austin’s mistake to be the central core of the ongoing confusion about free will; if you look at the large and intricate philosophical literature about incompatibilism, you will see that just about everyone assumes, without argument, that it is not a mistake.

I am happy to take the bait. I see no problem with using Austin’s example to support incompatibilism. I should emphasize, however, that I am discussing only the implications of Austin’s point for an account of free will, not how it functions in his original essay (which, as you know, was an analysis of the relationship between the terms “if” and “can,” not a sustained argument against free will).[2]

Let’s make sure you and I are standing on the same green: We agree that a human being, whatever his talents, training, and aspirations, will think, intend, and behave exactly as he does given the totality of conditions in the moment. That is, whatever his ability as a golfer, Austin would miss that same putt a trillion times in a row—provided that every atom and charge in the universe was exactly as it had been the first time he missed it. You think this fact (we can call it determinism, as you do, but it includes the contributions of indeterminism as well, provided they remain the same[3]) says nothing about free will. You think the fact that Austin could make nearly identical putts in other moments—with his brain in a slightly different state, the green a touch slower, and so forth—is all that matters. I agree that it is what matters when assessing his abilities as a golfer: Here, we don’t care about the single NMDA receptor that screwed up his swing on one particular putt; we care about the statistics of his play, round after round. But to speak clearly and honestly (that is, scientifically) about the actual causes of what happens in the world in each moment, we must focus on the particular.

What are we really saying when we claim that Austin could have made that putt (the one he missed)? As you point out, we aren’t actually referencing that putt at all. We are saying that Austin has made similar putts in the past and we can count on him to do so in the future—provided that he tries, doesn’t suffer some neurological injury, and so forth. However, we are also saying that Austin would have made this putt had something not gotten in his way. He had the general ability, after all, so something went wrong.

Then why did Austin miss his putt? Because some condition necessary for his making it was absent. What if that condition was sufficient effort, of the sort that he was generally capable of making? Why didn’t he make that effort? The answer is the same: Because some condition necessary for his making it was absent. From a scientific perspective, his failure to try is just another missed putt. Austin tried precisely as hard as he did. Next time he might try harder. But this time—with the universe and his brain exactly as they were—he couldn’t have tried in any other way.

To say that Austin really should have made that putt or tried harder is just a way of admonishing him to put forth greater effort in the future. We are not offering an account of what actually happened (his failure to sink his putt or his failure to try). You and I agree that such admonishments have effects and that these effects are perfectly in harmony with the truth of determinism. There is, in fact, nothing about incompatibilism that prevents us from urging one another (and ourselves) to do things differently in the future, or from recognizing that such exhortations often work. The things we say to one another (and to ourselves) are simply part of the chain of causes that determine how we think and behave.

But can we blame Austin for missing his putt? No. Can we blame him for not trying hard enough? Again, the answer is no—unless blaming him were just a way of admonishing him to try harder in the future. For us to consider him truly responsible for missing the putt or for failing to try, we would need to know that he could have acted other than he did. Yes, there are two readings of “could”—and you find only one of them interesting. But they are both interesting, and the one you ignore is morally consequential. One reading refers to a person’s (or a car’s, in your example) general capacities. Could Austin have sunk his putt, as a general matter, in similar situations? Yes. Could my car go 80 miles per hour, though I happen to be driving at 40? Yes. The other reading is one you consider to be a red herring. Could Austin have sunk that very putt, the one he missed? No. Could he have tried harder? No. His failure on both counts was determined by the state of the universe (especially his nervous system). Of course, it isn’t irrational to treat him as someone who has the general ability to make putts of that sort, and to urge him to try harder in the future—and it would be irrational to admonish a person who lacked such ability. You are right to believe that this distinction has important moral implications: Do we demand that mosquitoes and sharks behave better than they do? No. We simply take steps to protect ourselves from them. The same futility prevails with certain people—psychopaths and others whom we might deem morally insane. It makes sense to treat people who have the general capacity to behave well but occasionally lapse differently from those who have no such capacity and on whom any admonishment would be wasted. 

You are right to think that these distinctions do not depend on “absolute free will.” But this doesn’t mean nothing changes once we realize that a person could never have made the putt he just missed, or tried harder than he did, or refrained from killing his neighbor with a hammer.

Holding people responsible for their past actions makes no sense apart from the effects that doing so will have on them and the rest of society in the future (e.g. deterrence, rehabilitation, keeping dangerous people off our streets). The notion of moral responsibility, therefore, is forward-looking. But it is also paradoxical. People who have the most ability (self-control, opportunity, knowledge, etc.) would seem to be the most blameworthy when they fail or misbehave. For instance, when Tiger Woods misses a three-foot putt, there is a much greater temptation to say that he really should have made it than there is in the case of an average golfer. But Woods’s failure is actually more anomalous. Something must have gone wrong if a person of his ability missed so easy a putt. And he wouldn’t stand to benefit (much) from being admonished to try harder in the future. So in some ways, holding a person responsible for his failures seems to make even less sense the more worthy of responsibility he becomes in the conventional sense.

We agree that given past states of the universe and its laws, we can only do what we in fact do, and not do otherwise. You don’t think this truth has many psychological or moral consequences. In fact, you describe the lawful propagation of certain types of events as a form of “freedom.” But consider the emergence of this freedom in any specific human being: It is fully determined by genes and the environment (add as much randomness as you like). Imagine the first moment it comes online—in, say, the brain of a four-year-old child. Consider this first, “free” increment of executive control to emerge from the clockwork. It will emerge precisely to the degree that it does, and when, according to causes that have nothing to do with this person’s freedom. And it will perpetuate its effects on future states of his nervous system in total conformity to natural laws. In each instant, Austin will make his putt or miss it; and he will try his best or not. Yes, he is “free” to do whatever it is he does based on past states of the universe. But the same could be said of a chimp or a computer—or, indeed, a line of dominoes. Perhaps such mechanical equivalences don’t bother you, but they might come as a shock to those who think that you have rescued their felt sense of autonomy from the gears of determinism.

In your review, you called my book a “political tract.” The irony is that your argument against incompatibilism seems almost entirely political. At times you write as though nothing is at stake apart from the future of the terms free will and compatibilism. More generally, however, you seem to think that the consequences of taking incompatibilism seriously will be pernicious:

If nobody is responsible, not really, then not only should the prisons be emptied, but no contract is valid, mortgages should be abolished, and we can never hold anybody to account for anything they do. Preserving “law and order” without a concept of real responsibility is a daunting task.

These concerns, while not irrational, have nothing to do with the philosophical or scientific merits of the case. They also arise out of a failure to understand the practical consequences of my view. I am no more inclined to release dangerous criminals back onto our streets than you are.

In my book, I argue that an honest look at the causal underpinnings of human behavior, as well as at one’s own moment-to-moment experience, reveals free will to be an illusion. (I would say the same about the conventional sense of “self,” but that requires more discussion, and it is the topic of my next book.) I also claim that this fact has consequences—good ones, for the most part—and that is another reason it is worth exploring. But I have not argued for my position primarily out of concern for the consequences of accepting it. And I believe you have.

Of course, I can’t quite blame you for missing that putt, Dan. But I can admonish you to be more careful in the future.

NOTES

[1] E. Nahmias, S. Morris, T. Nadelhoffer & J. Turner. 2005. “Surveying Freedom: Folk Intuitions about Free Will and Moral Responsibility,” Philosophical Psychology, 18, pp. 561–584.

[2] Reading the rest of Austin’s “notorious” footnote, I’m not sure he made the mistake you attribute to him. The very next sentence following your partial quotation reads, “But if I tried my hardest, say, and missed, surely there must have been something that caused me to fail, that made me unable to succeed? So that I could not have holed it.” To my eye, this closes the door on his alleged confusion about what subsequent experiments would have shown.

[3] You consistently label me a “hard determinist,” which is a little misleading. The truth is that I am agnostic as to whether determinism is strictly true (though it must be approximately true, as far as human beings are concerned). Insofar as it is, free will is impossible. But indeterminism offers no relief. My actual view is that free will is conceptually incoherent and both subjectively and objectively nonexistent. Causation, whether deterministic or random, offers no basis for free will.

January 29, 2014

Coming in September

My next book, Waking Up: A Guide to Spirituality Without Religion, will be published by Simon & Schuster in September. This is the third cover that David Drummond has created for me (along with those for Lying and Free Will). Great job, David!

January 14, 2014

Our Narrow Definition of “Science”

(Photo via Katinka Matson)

From Edge.org:

Science advances by discovering new things and developing new ideas. Few truly new ideas are developed without abandoning old ones first. As theoretical physicist Max Planck (1858-1947) noted, “A new scientific truth does not triumph by convincing its opponents and making them see the light, but rather because its opponents eventually die, and a new generation grows up that is familiar with it.” In other words, science advances by a series of funerals. Why wait that long?

Ideas change, and the times we live in change. Perhaps the biggest change today is the rate of change. What established scientific idea is ready to be moved aside so that science can advance?

Our Narrow Definition of “Science”

Search your mind, or pay attention to the conversations you have with other people, and you will discover that there are no real boundaries between science and philosophy—or between those disciplines and any other that attempts to make valid claims about the world on the basis of evidence and logic. When such claims and their methods of verification admit of experiment and/or mathematical description, we tend to say that our concerns are “scientific”; when they relate to matters more abstract, or to the consistency of our thinking itself, we often say that we are being “philosophical”; when we merely want to know how people behaved in the past, we dub our interests “historical” or “journalistic”; and when a person’s commitment to evidence and logic grows dangerously thin or simply snaps under the burden of fear, wishful thinking, tribalism, or ecstasy, we recognize that he is being “religious.”

The boundaries between true intellectual disciplines are currently enforced by little more than university budgets and architecture. Is the Shroud of Turin a medieval forgery? This is a question of history, of course, and of archaeology, but the techniques of radiocarbon dating make it a question of chemistry and physics as well. The real distinction we should care about—the observation of which is the sine qua non of the scientific attitude—is between demanding good reasons for what one believes and being satisfied with bad ones.

The scientific attitude can handle whatever happens to be the case. Indeed, if the evidence for the inerrancy of the Bible and the resurrection of Jesus Christ were good, one could embrace the doctrine of fundamentalist Christianity scientifically. The problem, of course, is that the evidence is either terrible or nonexistent—hence the partition we have erected (in practice, never in principle) between science and religion.

Confusion on this point has spawned many strange ideas about the nature of human knowledge and the limits of “science.” People who fear the encroachment of the scientific attitude—especially those who insist upon the dignity of believing in one or another Iron Age god—will often make derogatory use of words such as materialism, neo-Darwinism, and reductionism, as if those doctrines had some necessary connection to science itself.

There are, of course, good reasons for scientists to be materialist, neo-Darwinian, and reductionist. However, science entails none of those commitments, nor do they entail one another. If there were evidence for dualism (immaterial souls, reincarnation), one could be a scientist without being a materialist. As it happens, the evidence here is extraordinarily thin, so virtually all scientists are materialists of some sort. If there were evidence against evolution by natural selection, one could be a scientific materialist without being a neo-Darwinist. But as it happens, the general framework put forward by Darwin is as well established as any other in science. If there were evidence that complex systems produced phenomena that cannot be understood in terms of their constituent parts, it would be possible to be a neo-Darwinist without being a reductionist. For all practical purposes, that is where most scientists find themselves, because every branch of science beyond physics must resort to concepts that cannot be understood merely in terms of particles and fields. Many of us have had “philosophical” debates about what to make of this explanatory impasse. Does the fact that we cannot predict the behavior of chickens or fledgling democracies on the basis of quantum mechanics mean that those higher-level phenomena are something other than their underlying physics? I would vote “no” here, but that doesn’t mean I envision a time when we will use only the nouns and verbs of physics to describe the world.

But even if one thinks that the human mind is entirely the product of physics, the reality of consciousness becomes no less wondrous, and the difference between happiness and suffering no less important. Nor does such a view suggest that we will ever find the emergence of mind from matter fully intelligible; consciousness may always seem like a miracle. In philosophical circles, this is known as “the hard problem of consciousness”—some of us agree that this problem exists, some of us don’t. Should consciousness prove conceptually irreducible, remaining the mysterious ground for all we can conceivably experience or value, the rest of the scientific worldview would remain perfectly intact.

The remedy for all this confusion is simple: We must abandon the idea that science is distinct from the rest of human rationality. When you are adhering to the highest standards of logic and evidence, you are thinking scientifically. And when you’re not, you’re not.

Read 170 other responses on Edge.org.

December 14, 2013

Thinking about Good and Evil

In 2010, John Brockman and the Edge Foundation held a conference entitled “The New Science of Morality.” I attended along with Roy Baumeister, Paul Bloom, Joshua D. Greene, Jonathan Haidt, Marc Hauser, Joshua Knobe, Elizabeth Phelps, and David Pizarro. Some of our conversations have now been published in a book (along with many interesting essays) entitled Thinking: The New Science of Decision-Making, Problem-Solving, and Prediction.

John Brockman and HarperCollins have given me permission to reprint my edited remarks here.

* * *

What I intended to say today has been pushed around a little bit by what has already been said and by a couple of sidebar conversations. That is as it should be, no doubt. But if my remarks are less linear than you would hope, blame that—and the jet lag.

I think we should differentiate three projects that seem to me to be easily conflated, but which are distinct and independently worthy endeavors:

The first project is to understand what people do in the name of “morality.” We can look at the world, witnessing all of the diverse behaviors, rules, cultural artifacts, and morally salient emotions like empathy and disgust, and we can study how these things play out in human communities, both in our time and throughout history. We can examine all these phenomena in as nonjudgmental a way as possible and seek to understand them. We can understand them in evolutionary terms, and we can understand them in psychological and neurobiological terms, as they arise in the present. And we can call the resulting data and the entire effort a “science of morality.” This would be a purely descriptive science of the sort that I hear Jonathan Haidt advocating.

For most scientists, this project seems to exhaust all the legitimate points of contact between science and morality—that is, between science and judgments of good and evil and right and wrong. But I think there are two other projects that we could concern ourselves with, which are arguably more important.

The second project would be to actually get clearer about what we mean, and should mean, by the term “morality,” understanding how it relates to human well-being altogether, and to use this new discipline to think more intelligently about how to maximize human well-being. Of course, philosophers may think that this begs some of the important questions, and I’ll get back to that. But I think this is a distinct project, and it’s not purely descriptive. It’s a normative project. The question is, how can we think about moral truth in the context of science?

The third project is a project of persuasion: How can we persuade all of the people who are committed to silly and harmful things in the name of “morality” to change their commitments and to lead better lives? I think that this third project is actually the most important project facing humanity at this point in time. It subsumes everything else we could care about—from arresting climate change, to stopping nuclear proliferation, to curing cancer, to saving the whales. Any effort that requires that we collectively get our priorities straight and marshal our time and resources would fall within the scope of this project. To build a viable global civilization we must begin to converge on the same economic, political, and environmental goals.

Obviously the project of moral persuasion is very difficult—but it strikes me as especially difficult if you can’t figure out in what sense anyone could ever be right and wrong about questions of morality or about questions of human values. Understanding right and wrong in universal terms is Project Two, and that’s what I’m focused on.

There are impediments to thinking about Project Two, the main one being that most right-thinking, well-educated, and well-intentioned people—certainly most scientists and public intellectuals, and I would guess, most journalists—have been convinced that something in the last 200 years of intellectual progress has made it impossible to actually speak about “moral truth.” Not because human experience is so difficult to study or the brain too complex, but because there is thought to be no intellectual basis from which to say that anyone is ever right or wrong about questions of good and evil.

My aim is to undermine this assumption, which is now the received opinion in science and philosophy. I think it is based on several fallacies and double standards and, frankly, on some bad philosophy. The first thing I should point out is that, apart from being untrue, this view has consequences.

In 1947, when the United Nations was attempting to formulate a universal declaration of human rights, the American Anthropological Association stepped forward and said that it couldn’t be done—for this would be to merely foist one provincial notion of human rights on the rest of humanity. Any notion of human rights is the product of culture, and declaring a universal conception of human rights is an intellectually illegitimate thing to do. This was the best our social sciences could do with the crematory of Auschwitz still smoking.

But, of course, it has long been obvious that we need to converge, as a global civilization, in our beliefs about how we should treat one another. For this, we need some universal conception of right and wrong. So in addition to just not being true, I think skepticism about moral truth actually has consequences that we really should worry about.

Definitions matter. And in science we are always in the business of framing conversations and making definitions. There is nothing about this process that condemns us to epistemological relativism or that nullifies truth claims. We define “physics” as, loosely speaking, our best effort to understand the behavior of matter and energy in the universe. The discipline is defined with respect to the goal of understanding how matter behaves.

Of course, anyone is free to define “physics” in some other way. A Creationist physicist could come into this room and say, “Well, that’s not my definition of physics. My physics is designed to match the Book of Genesis.” But we are free to respond to such a person by saying, “You know, you really don’t belong at this conference. That’s not ‘physics’ as we are interested in it. You’re using the word differently. You’re not playing our language game.” Such a gesture of exclusion is both legitimate and necessary. The fact that the discourse of physics is not sufficient to silence such a person, the fact that he cannot be brought into our conversation and subdued on our terms, does not undermine physics as a domain of objective truth.

And yet, on the subject of morality, we seem to think that the possibility of differing opinions is a deal breaker. The fact that someone can come forward and say that his morality has nothing to do with human flourishing—that it depends upon following shariah law, for instance—the very fact that such a position can be articulated proves that there’s no such thing as moral truth. Morality, therefore, must be a human invention. But this is a fallacy.

We have an intuitive physics, but much of our intuitive physics is wrong with respect to the goal of understanding how matter and energy behave in this universe. I am saying that we also have an intuitive morality, and much of our intuitive morality may be wrong with respect to the goal of maximizing human flourishing—and with reference to the facts that govern the well-being of conscious creatures, generally.

So I will argue, briefly, that the only sphere of legitimate moral concern is the well-being of conscious creatures. I’ll say a few words in defense of this assertion, but I think the idea that it has to be defended is the product of several fallacies and double standards that we’re not noticing. I don’t know that I will have time to expose all of them, but I’ll mention a few.

Thus far, I’ve introduced two things: the concept of consciousness and the concept of well-being. I am claiming that consciousness is the only context in which we can talk about morality and human values. Why is consciousness not an arbitrary starting point? Well, what’s the alternative? Just imagine someone coming forward claiming to have some other source of value that has nothing to do with the actual or potential experience of conscious beings. Whatever this is, it must be something that cannot affect the experience of anything in the universe, in this life or in any other.

If you put this imagined source of value in a box, I think what you would have in that box would be—by definition—the least interesting thing in the universe. It would be—again, by definition—something that cannot be cared about. Any other source of value will have some relationship to the experience of conscious beings. So I don’t think consciousness is an arbitrary starting point. When we’re talking about right and wrong, and good and evil, and about outcomes that matter, we are necessarily talking about actual or potential changes in conscious experience.

I would further add that the concept of “well-being” captures everything we can care about in the moral sphere. The challenge is to have a definition of well-being that is truly open-ended and can absorb everything we care about. This is why I tend not to call myself a “consequentialist” or a “utilitarian,” because traditionally, these positions have bounded the notion of consequences in such a way as to make them seem very brittle and exclusive of other concerns—producing a kind of body count calculus that only someone with Asperger’s could adopt.

Consider the Trolley Problem: If there just is, in fact, a difference between pushing a person onto the tracks and flipping a switch—perhaps in terms of the emotional consequences of performing these actions—well, then this difference has to be taken into account. Or consider Peter Singer’s Shallow Pond problem: We all know that it would take a very different kind of person to walk past a child drowning in a shallow pond, out of concern for getting his suit wet, than it takes to ignore an appeal from UNICEF. It says much more about you if you can walk past that pond. If we were all this sort of person, there would be terrible ramifications as far as the eye can see. It seems to me, therefore, that the challenge is to get clear about what the actual consequences of an action are, about what changes in human experience are possible, and about which changes matter.

In thinking about a universal framework for morality, I now think in terms of what I call a “moral landscape.” Perhaps there is a place in hell for anyone who would repurpose a cliché in this way, but the phrase “the moral landscape” actually captures what I’m after: I’m envisioning a space of peaks and valleys, where the peaks correspond to the heights of flourishing possible for any conscious system, and the valleys correspond to the deepest depths of misery.

To speak specifically of human beings for the moment: any change that can effect a change in human consciousness would lead to a translation across the moral landscape. So changes to our genome, and changes to our economic systems—and changes occurring on any level in between that can affect human well-being for good or for ill—would translate into movements within this space of possible human experience.

A few interesting things drop out of this model: Clearly, it is possible, or even likely, that there are many peaks on the moral landscape. To speak specifically of human communities: perhaps there is a way to maximize human flourishing in which we follow Peter Singer as far as we can go, and somehow train ourselves to be truly dispassionate to friends and family, without weighting our children’s welfare more than the welfare of other children, and perhaps there’s another peak where we remain biased toward our own children, within certain limits, while correcting for this bias by creating a social system which is, in fact, fair. Perhaps there are a thousand different ways to tune the variable of selfishness versus altruism, to land us on a peak on the moral landscape.

However, there will be many more ways to not be on a peak. And it is clearly possible to be wrong about how to move from our present position to the nearest available peak. This follows directly from the observation that whatever conscious experiences are possible for us are a product of the way the universe is. Our conscious experience arises out of the laws of nature, the states of our brain, and our entanglement with the world. Therefore, there are right and wrong answers to the question of how to maximize human flourishing in any moment.

This becomes incredibly easy to see when we imagine there being only two people on earth: we can call them Adam and Eve. Ask yourself, are there right and wrong answers to the question of how Adam and Eve might maximize their well-being? Clearly there are. Wrong answer number one: they can smash each other in the face with a large rock. This will not be the best strategy to maximize their well-being.

Of course, there are zero-sum games they could play. And yes, they could be psychopaths who might utterly fail to collaborate. But, clearly, the best responses to their circumstance will not be zero-sum. The prospects of their flourishing and finding deeper and more durable sources of satisfaction will only be exposed by some form of cooperation. And all the worries that people normally bring to these discussions—like deontological principles or a Rawlsian concern about fairness—can be considered in the context of our asking how Adam and Eve can navigate the space of possible experiences so as to find a genuine peak of human flourishing, regardless of whether it is the only peak. Once again, multiple, equivalent but incompatible peaks still allow for a realistic space in which there are right and wrong answers to moral questions.

One thing we must not get confused about is the difference between answers in practice and answers in principle. Needless to say, fully understanding the possible range of experiences available to Adam and Eve represents a fantastically complicated problem. And it gets more complicated when we add another 7 billion people to the experiment. But I would argue that it’s not a different problem; it just gets more complicated.

By analogy, consider economics: Is economics a science yet? Apparently not, judging from the last few years. Maybe economics will never get better than it is now. Perhaps we’ll be surprised every decade or so by something terrible, and we’ll be forced to concede that we’re blinded by the complexity of our situation. But to say that it is difficult or impossible to answer certain problems in practice does not even slightly suggest that there are no right and wrong answers to these problems in principle.

The complexity of economics would never tempt us to say that there are no right and wrong ways to design economic systems, or to respond to financial crises. Nobody will ever say that it’s a form of bigotry to criticize another country’s response to a banking failure. Just imagine how terrifying it would be if the smartest people around all more or less agreed that we had to be nonjudgmental about everyone’s view of economics and about every possible response to a global economic crisis.

And yet that is exactly where we stand as an intellectual community on the most important questions in human life. I don’t think you have enjoyed the life of the mind until you have witnessed a philosopher or scientist talking about the “contextual legitimacy” of the burka, or of female genital excision, or any of these other barbaric practices that we know cause needless human misery. We have convinced ourselves that somehow science is by definition a value-free space and that we can’t make value judgments about beliefs and practices that needlessly derail our attempts to build happy and sane societies.

The truth is, science is not value-free. Good science is the product of our valuing evidence, logical consistency, parsimony, and other intellectual virtues. And if you don’t value those things, you can’t participate in the scientific conversation. I’m saying we need not worry about the people who don’t value human flourishing, or who say they don’t. We need not listen to people who come to the table saying, “You know, we want to cut the heads off adulterers at half-time at our soccer games because we have a book dictated by the Creator of the universe which says we should.” In response, we are free to say, “Well, you appear to be confused about everything. Your ‘physics’ isn’t physics, and your ‘morality’ isn’t morality.” These are equivalent moves, intellectually speaking. They are born of the same entanglement with real facts about the way the universe is. In terms of morality, our conversation can proceed with reference to facts about the changing experiences of conscious creatures. It seems to me to be just as legitimate, scientifically, to define “morality” in this way as it is to define “physics” in terms of the behavior of matter and energy. But most people engaged in the scientific study of morality don’t seem to realize this.

From the publisher: Daniel Kahneman on the power (and pitfalls) of human intuition and “unconscious” thinking • Daniel Gilbert on desire, prediction, and why getting what we want doesn’t always make us happy • Nassim Nicholas Taleb on the limitations of statistics in guiding decision-making • Vilayanur Ramachandran on the scientific underpinnings of human nature • Simon Baron-Cohen on the startling effects of testosterone on the brain • Daniel C. Dennett on decoding the architecture of the “normal” human mind • Sarah-Jayne Blakemore on mental disorders and the crucial developmental phase of adolescence • Jonathan Haidt, Sam Harris, and Roy Baumeister on the science of morality, ethics, and the emerging synthesis of evolutionary and biological thinking • Gerd Gigerenzer on rationality and what informs our choices

December 6, 2013
