Gennaro Cuofano's Blog, page 36

August 23, 2025

The New Macroeconomic Framework

When Geopolitics Overrides Economics

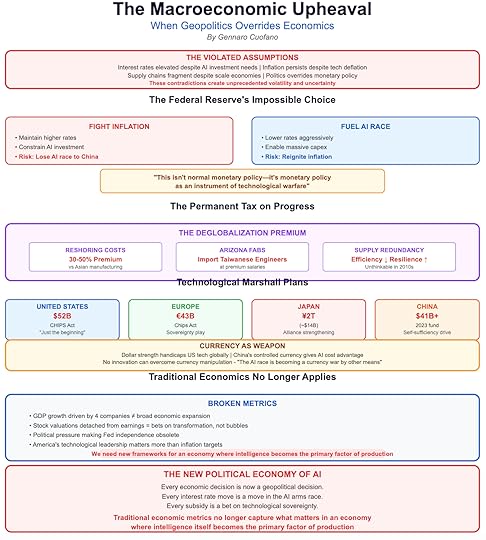

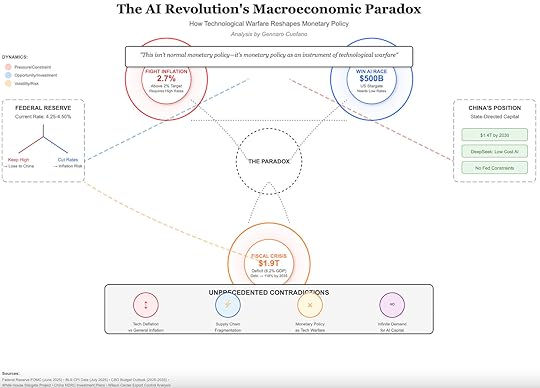

The AI revolution is unfolding in a macroeconomic environment that violates all the assumptions of previous technology booms. Interest rates remain elevated even as governments desperately want to lower them to fuel AI investment. Inflation persists despite technological deflation. Supply chains fragment even as economies of scale demand integration. These contradictions create unprecedented volatility and uncertainty.

The interest rate dilemma exemplifies the new political economy of AI. The Federal Reserve faces an impossible choice: maintain higher rates to fight inflation, or lower rates to ensure America wins the AI race against China. This isn’t normal monetary policy—it’s monetary policy as an instrument of technological warfare. Political pressure to lower rates intensifies daily, with tech executives and defense officials united in arguing that America’s technological leadership matters more than inflation targets.

The deglobalization premium has become a permanent tax on technological progress. Reshoring semiconductor production might cost 30-50% more than Asian manufacturing. Building fabs in Arizona requires importing Taiwanese engineers at premium salaries because America lacks the expertise. Every layer of supply chain redundancy reduces efficiency but increases resilience—a trade-off that would have been unthinkable in the efficiency-obsessed 2010s.

The fiscal implications stagger the imagination. Governments worldwide are preparing massive subsidies for domestic semiconductor production—the U.S. CHIPS Act’s $52 billion is just the beginning. Europe plans €43 billion. Japan allocates ¥2 trillion. These aren’t normal industrial subsidies—they’re technological Marshall Plans where governments pick winners and losers in the AI race.

Currency dynamics add another layer of complexity. The dollar’s strength, traditionally a sign of American economic power, now handicaps U.S. tech companies competing globally. Meanwhile, China’s controlled currency gives its AI companies a cost advantage that no amount of innovation can overcome. The AI race is becoming a currency war by other means.

Most fundamentally, traditional economic metrics no longer capture what matters.

GDP growth driven by the capex spending of four companies isn’t the same as broad-based economic expansion. Stock market valuations detached from earnings multiples aren’t traditional bubbles—they’re bets on technological transformation. We need new frameworks for understanding an economy where intelligence itself becomes the primary factor of production.

The post The New Macroeconomic Framework appeared first on FourWeekMBA.

The AI GPU Wars

NVIDIA’s Fortress Under Siege

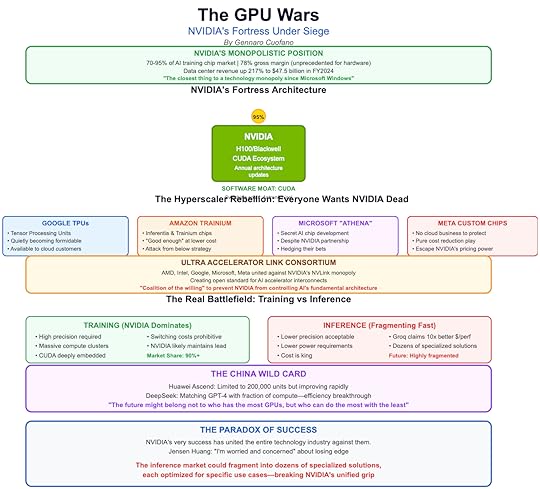

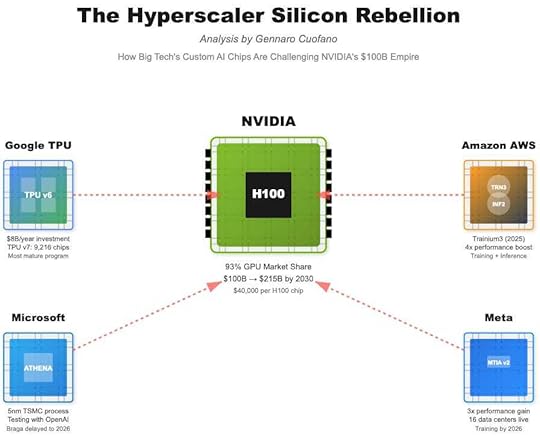

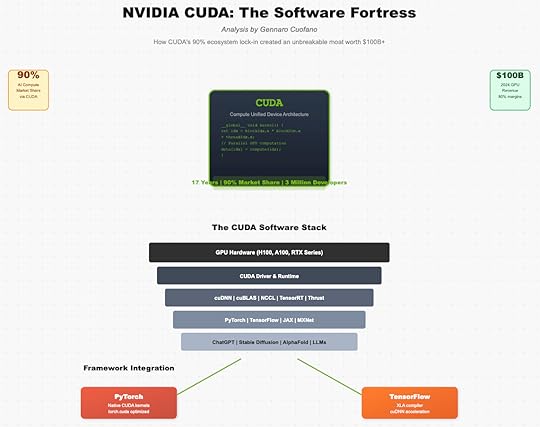

NVIDIA has built the closest thing to a technology monopoly since Microsoft’s Windows dominance, controlling 70-95% of AI training chips with margins that would make luxury brands envious. Their 73-75% gross margin on hardware is virtually unprecedented; Intel and AMD struggle to reach half that, at least on advanced GPUs. But paradoxically, NVIDIA’s very success has united the entire technology industry against them.

The hyperscaler rebellion represents the most serious threat to NVIDIA’s dominance. Google’s Tensor Processing Units (TPUs) have quietly become a formidable alternative, powering much of Google’s own AI workload and increasingly available to cloud customers. Amazon’s Trainium and Inferentia chips attack from below, offering “good enough” performance at dramatically lower costs. Microsoft, despite its deep partnership with NVIDIA, is secretly developing its own AI chips codenamed “Athena.” Even Meta, which has no cloud business to protect, is designing custom chips simply to escape NVIDIA’s pricing power.

The formation of the Ultra Accelerator Link consortium in 2024 represents a technological “coalition of the willing” against NVIDIA’s NVLink monopoly. AMD, Intel, Google, Microsoft, Meta, and others have united to create an open standard for connecting AI accelerators. This isn’t just about creating alternatives—it’s about preventing NVIDIA from controlling the fundamental interconnect architecture that will define AI infrastructure for the next decade.

But NVIDIA isn’t standing still. Jensen Huang has committed to releasing new architectures every year instead of every two years, a pace that would have been considered impossible just years ago. The new Blackwell architecture promises dramatic efficiency improvements.

More cleverly, NVIDIA is making itself indispensable through software, not just hardware. Their CUDA ecosystem has become so deeply embedded in AI development that switching costs are astronomical.

The real battlefield is shifting from training to inference—the deployment of AI models for real-world tasks. While NVIDIA dominates training, inference presents different requirements: lower precision, lower power, lower cost. Companies like Groq claim 10x better inference performance per dollar. Cerebras offers wafer-scale chips optimized for specific inference workloads. The inference market could fragment into dozens of specialized solutions, each optimized for specific use cases.

China’s response adds another dimension to the GPU wars. Huawei’s Ascend chips, while currently limited to 200,000 units annually, represent China’s determination to achieve semiconductor sovereignty.

More importantly, Chinese companies are pioneering new approaches to AI efficiency that could make raw computational power less relevant. DeepSeek’s ability to match GPT-4 performance with a fraction of the compute suggests that the future might belong not to who has the most GPUs, but who can do the most with the least.

The emergence of quantum-classical hybrid computing adds a wild card to the GPU wars. While full quantum computing remains years away, quantum annealers and quantum-inspired algorithms could provide exponential speedups for specific AI workloads. The company that first successfully integrates quantum acceleration into AI training could instantly obsolete hundreds of billions in classical GPU infrastructure.

The post The AI GPU Wars appeared first on FourWeekMBA.

The AI Capital Expenditure Supernova

When Four Companies Spend Like Nations

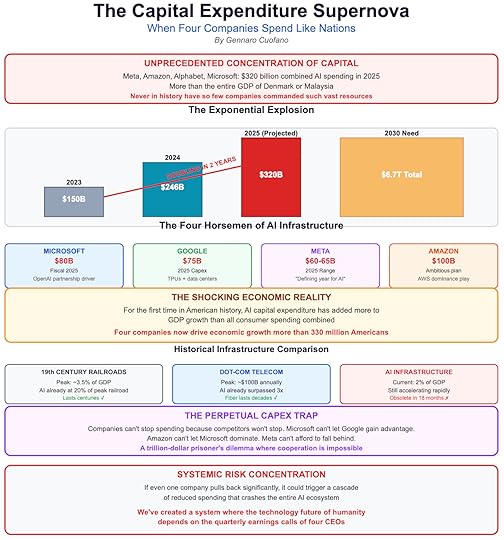

Meta, Amazon, Alphabet, and Microsoft plan to spend over $300 billion combined on AI infrastructure in 2025 alone. To put this in perspective, that’s more than the entire GDP of countries like Denmark or Malaysia, concentrated in the hands of four corporations betting on artificial intelligence.

The acceleration is breathtaking. In 2023, combined Big Tech capex was roughly $150 billion. By 2024, it had surged to over $200 billion. The 2025 projection of $300-320 billion represents a doubling in just two years. Microsoft alone expects to spend $80 billion in fiscal 2025, while Google’s capex will hit $75 billion. These aren’t gradual increases—they’re exponential explosions that rewrite the rules of corporate investment.
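The growth rate implied by these figures can be checked with a few lines of arithmetic. The sketch below is illustrative only: it treats the 2023 and 2024 figures as exactly $150 billion and $200 billion (the text says "roughly" and "over"), and the helper name `cagr` is a hypothetical label, not something from the original post.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between a start and end value."""
    return (end / start) ** (1 / years) - 1


# Combined Big Tech capex in USD billions, as quoted in the text.
# Assumption: "roughly $150 billion" and "over $200 billion" are
# taken at face value as 150 and 200.
capex_2023 = 150.0
capex_2024 = 200.0
capex_2025_range = (300.0, 320.0)

# Two-year compound growth from 2023 to the 2025 projection.
low = cagr(capex_2023, capex_2025_range[0], years=2)
high = cagr(capex_2023, capex_2025_range[1], years=2)

# Year-over-year growth for each step.
yoy_2024 = capex_2024 / capex_2023 - 1
yoy_2025_low = capex_2025_range[0] / capex_2024 - 1
yoy_2025_high = capex_2025_range[1] / capex_2024 - 1

print(f"2023-2025 compound annual growth: {low:.1%} to {high:.1%}")
print(f"2024 year-over-year growth: {yoy_2024:.1%}")
print(f"2025 year-over-year growth: {yoy_2025_low:.1%} to {yoy_2025_high:.1%}")
```

Under those assumptions, a doubling in two years works out to a little over 40% compound growth per year, with the 2025 step growing faster than the 2024 step.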

But here’s the shocking revelation: For the first time in American history, AI capital expenditure has added more to GDP growth than all consumer spending combined. Think about that. Four companies’ infrastructure investments now drive economic growth more than 330 million Americans buying homes, cars, food, and everything else. This isn’t capitalism as we know it—it’s something unprecedented, where corporate infrastructure investment becomes the primary engine of economic growth.

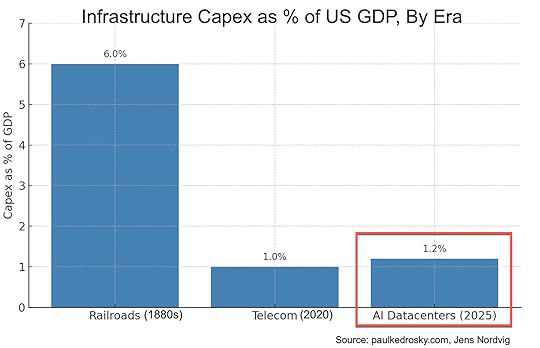

The comparison to historical infrastructure booms reveals the unique nature of this moment. Paul Kedrosky calculates that AI data center spending is already at 20% of peak railroad spending as a percentage of GDP from the 19th century, and it’s still accelerating.

Credit: Paul Kedrosky

We’ve already surpassed the peak telecom spending of the dot-com bubble. But unlike railroads that last centuries or fiber that remains useful for decades, AI infrastructure depreciates far faster.

This creates what I call the “Perpetual Capex Trap.” Companies can’t stop spending because their competitors won’t stop. Microsoft can’t let Google gain an advantage in model capabilities. Amazon can’t let Microsoft dominate cloud AI services. Meta can’t afford to fall behind in AI infrastructure. It’s a trillion-dollar prisoner’s dilemma where cooperation is impossible and competition demands endless escalation.

The financial markets initially celebrated this spending as visionary investment in the future. But cracks are appearing. Investors increasingly question whether revenue will ever justify these astronomical investments. The concern isn’t that AI doesn’t work—it clearly does.

The concern is that the infrastructure arms race might permanently destroy profit margins. When every competitor has similar capabilities, pricing power evaporates, and the massive fixed costs of AI infrastructure become an albatross rather than an advantage.

Most ominously, this concentration of investment in four companies creates systemic risk. If even one of these companies significantly pulls back, it could trigger a cascade of reduced spending that crashes the entire AI ecosystem.

And to be clear, continuing to spend is a rational choice for these players: if you’re part of the race, you can’t pull back on infrastructure spend. If anything, you’ve got to double down!

The post The AI Capital Expenditure Supernova appeared first on FourWeekMBA.

How The Demographic Time Bomb Will Influence AI Development

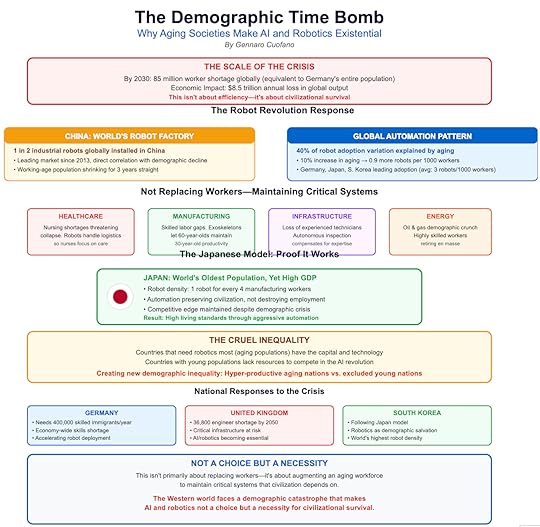

The Western world faces a demographic catastrophe that makes AI and robotics not a choice but a necessity for civilizational survival. This isn’t about efficiency or profit margins—it’s about maintaining basic infrastructure and services as the working-age population collapses.

The numbers paint a stark picture of the crisis ahead.

Global population collapse isn’t sci-fi any longer; it’s the disappearance of middle-aged workers who form the backbone of industrial civilization.

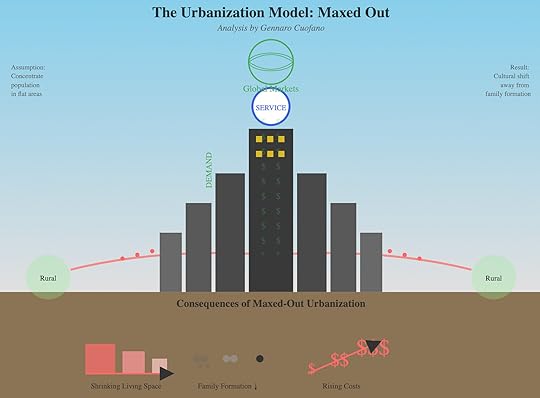

We have pretty much maxed out the urbanization model of industrialization. The assumption was to bring as many people as possible into one flat area of the country and have them focus on the service side of the economy. That enabled the formation of megalopolises, which concentrated massive demand and made global markets possible.

Yet these massive urban centers also came with huge costs. If you were part of this trend, you had to live in a place that kept getting more expensive even as living space shrank, which made having and raising kids all but culturally obsolete.

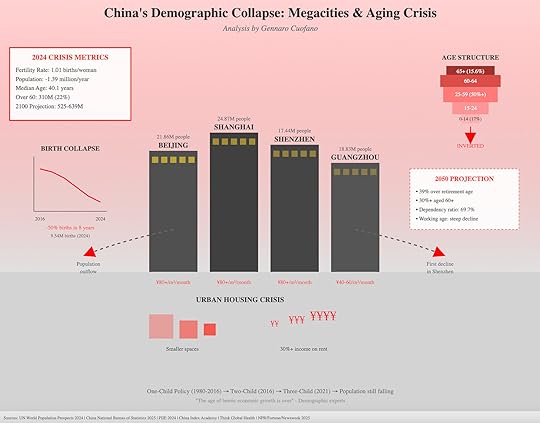

China, even more than Western countries, fell into the same super-urbanization trap, which propelled it toward massive economic progress but also, probably, the most dangerous demographic time bomb of our times.

That explains why China’s response to the problem has been nothing short of revolutionary. One in every two industrial robots installed globally now goes to China.

The country has been the leading market for industrial robots since 2013, and this trend directly correlates with demographic decline. Population aging alone can explain the variation in robot adoption between countries.

When Germany faces similar pressures, it responds similarly—deploying robots at rates that would have seemed like science fiction a decade ago.

But here’s what most analyses miss: this isn’t primarily about replacing workers—it’s about augmenting an aging workforce to maintain critical systems. In healthcare, where nursing shortages threaten to collapse entire hospital systems, robots handle logistics so nurses can focus on patient care.

In manufacturing, exoskeletons allow 60-year-old workers to maintain the productivity of their 30-year-old selves. In infrastructure maintenance, autonomous inspection systems compensate for the loss of experienced technicians.

The Japanese model proves this can work. Despite having the world’s oldest population, Japan maintains a high GDP and living standards through aggressive automation. Their robot density—one robot for every four manufacturing workers—isn’t destroying employment; it’s preserving civilization. South Korea follows a similar path, viewing robotics not as job destroyers but as demographic salvation.

The cruel irony is that the countries that need robotics most—those with aging populations—are also the ones with the capital and technological capability to deploy them.

Meanwhile, countries with young, growing populations lack the resources to compete in the AI revolution, potentially creating a new form of demographic inequality where aging rich nations become hyper-productive through automation while young poor nations face technological exclusion.

The post How The Demographic Time Bomb Will Influence AI Development appeared first on FourWeekMBA.

How Geopolitics Reshapes the Technology Landscape

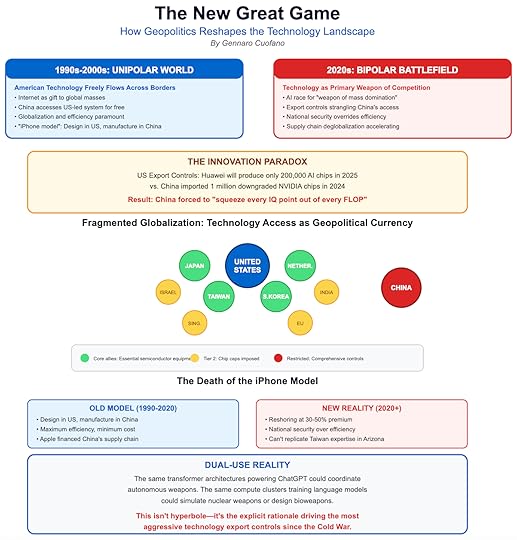

The 1990s Internet revolution unfolded in a unipolar world where American technology freely flowed across borders. Today’s AI revolution is emerging in a fractured, multipolar battlefield where technology has become the primary weapon of great power competition.

The US-China AI race isn’t just about economic competition; it’s about who controls the future of human civilization.

According to recent congressional testimony by US Commerce Secretary Howard Lutnick, Huawei will produce only 200,000 AI chips in 2025, while China legally imported around 1 million downgraded NVIDIA chips in 2024.

These numbers reveal a strategic stranglehold, but also a ticking clock—China won’t remain dependent forever.

The export control regime has created what I call the “Innovation Paradox.” By restricting China’s access to cutting-edge chips, the US has inadvertently catalyzed a Chinese efficiency revolution.

Companies like DeepSeek are learning to “squeeze every IQ point out of every FLOP,” achieving competitive results with a fraction of the computational resources.

The constraints meant to slow China down may be forcing it to develop more efficient AI architectures that could ultimately leapfrog Western approaches.

Meanwhile, the US is architecting a new alliance structure fundamentally different from the globalization era. This isn’t about free trade and open borders—it’s about creating a technological sphere of influence where access to AI capabilities becomes the new currency of geopolitical alignment.

The post How Geopolitics Reshapes the Technology Landscape appeared first on FourWeekMBA.

Understanding the AI Infrastructure Stack

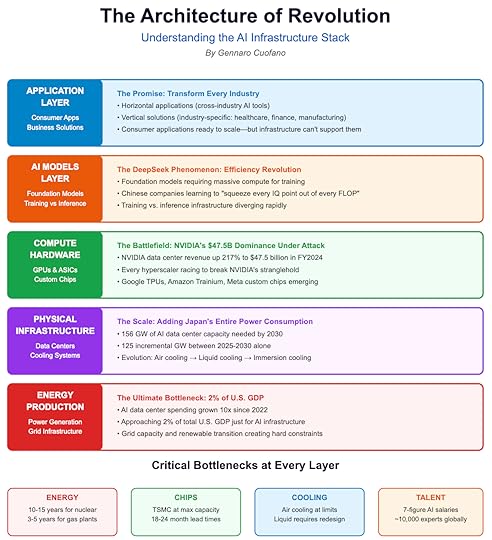

The AI revolution isn’t a single technology; it’s a complex stack of interdependent layers, each with its own dynamics, bottlenecks, and power players.

To understand why this bubble is different, you must understand how these layers interact and constrain each other.

At the foundation lies the Energy Production Layer, the most overlooked yet most critical constraint.

This isn’t just about building more data centers—it’s about fundamentally reimagining our electrical grid for an era where computation becomes civilization’s primary energy consumer.

Above this sits the Physical Infrastructure Layer, where the battle for land, cooling, and connectivity plays out.

To put this in perspective, that’s equivalent to adding the entire power consumption of Japan to the global grid, just for AI.

The Compute Hardware Layer has become the most visible battlefield, where NVIDIA’s dominance meets desperate attempts at disruption.

Every hyperscaler, every major chip company, and even startups are now racing to break NVIDIA’s stranglehold on the training market.

Moving up the stack, the AI Models Layer represents where software meets silicon in an explosive marriage of capability and constraint.

This is where the DeepSeek phenomenon emerges—the shocking realization that Chinese companies, constrained by export controls, are learning to extract more intelligence per FLOP than anyone thought possible.

Necessity isn’t just the mother of invention; it’s becoming the mother of efficiency revolution.

Finally, at the apex, the Application Layer promises to transform every industry, every job, every human interaction.

But here’s the paradox: the applications are ready, the demand is proven, the ROI is clear (or at least almost, as it’s a digital workforce), but we literally cannot build the infrastructure fast enough to deploy them at scale.

The post Understanding the AI Infrastructure Stack appeared first on FourWeekMBA.

Why AI Is Nothing Like the Web Bubble

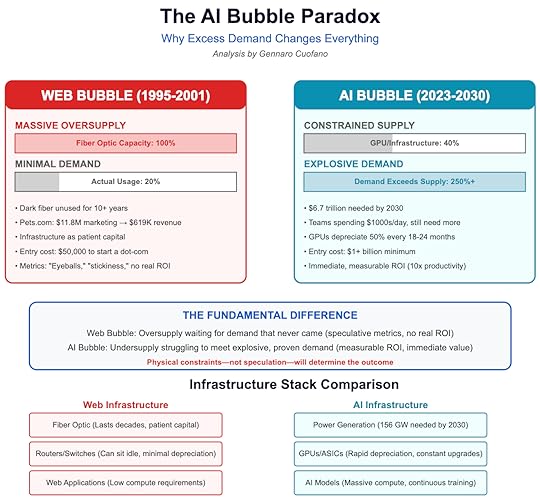

The web bubble was built on a fundamental lie: that eyeballs equal revenue. Companies with no path to profitability commanded billion-dollar valuations based on “clicks,” “stickiness,” and “mindshare.”

Pets.com spent $11.8 million on marketing to generate $619,000 in revenue. Webvan burned through $1.2 billion building infrastructure for a market that didn’t exist.

The metrics were fake, the demand was speculative, and the business models were fantasy.

The AI revolution presents the exact opposite problem. The demand is desperately real—companies are spending thousands of dollars daily on AI services and begging for more capacity.

The ROI is immediately measurable—legal teams reducing contract review from days to hours, security teams cutting incident response from 15 minutes to 5, marketing teams achieving 10x creative output.

This isn’t about hoping users might someday pay for something; it’s about customers with credit cards in hand being turned away because we literally cannot build capacity fast enough.

During the web bubble, the infrastructure investment was patient capital that could wait for demand. Fiber optic cables don’t deteriorate meaningfully over decades. Routers and switches could sit in warehouses.

The infrastructure was an option on future demand—if the internet grew as predicted, great; if not, the infrastructure would still be there when needed. Dark fiber from 2000 is still being lit up today, twenty-five years later.

AI infrastructure is impatient capital that deteriorates rapidly.

Data centers consume millions of dollars in electricity, whether fully utilized or not. Cooling systems require constant maintenance.

You can’t build AI capacity and wait for demand—by the time demand arrives, your infrastructure is obsolete.

This creates a vicious cycle where companies must constantly build to maintain their position, let alone grow.

The web bubble was also fundamentally democratic in its failure; anyone could start a dot-com with $50,000 and a dream.

The AI bubble is oligarchic by necessity. The entry ticket is measured in billions, not thousands.

You need relationships with NVIDIA, access to massive power generation, teams of PhD researchers, and most importantly, the kind of balance sheet that can sustain $80 billion annual capex without going bankrupt.

This concentration means the AI bubble can’t diffuse through thousands of startup failures—it will concentrate in a handful of titanic successes or catastrophic collapses.

And this will power up thousands of AI startups on the applications layer.

But here’s the catch that changes everything: We can’t pre-build AI capacity like we did with fiber.

Every GPU depreciates at Moore’s Law speed. Every data center requires constant, massive power draws.

Every technological advance makes yesterday’s infrastructure obsolete within quarters, not decades.

This creates a fundamentally different bubble dynamic, one that could stall not from lack of demand, but from our physical inability to supply what the world desperately needs.

The post Why AI Is Nothing Like the Web Bubble appeared first on FourWeekMBA.

AI, The Bubble That Can’t Burst (Just Not Yet)

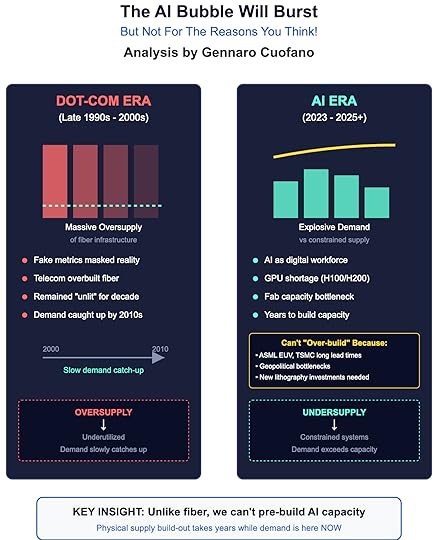

The AI bubble fundamentally differs from every technology bubble before it because it’s driven by excess demand, not oversupply.

This isn’t speculation waiting to meet reality; this is reality overwhelming our ability to build.

Consider the staggering contrast with history. During the dot-com era, telecom companies massively overbuilt fiber-optic infrastructure in anticipation of exploding Internet demand.

Much of it remained “dark” for a decade, unused and waiting for the world to catch up.

Today, we face the opposite crisis: companies worldwide will need to invest a remarkable $6.7 trillion into new data center capacity between 2025 and 2030 to keep pace with AI demand.

But here’s the catch that changes everything: We can’t pre-build AI capacity like we did with fiber.

Every GPU depreciates at Moore’s Law speed. Every data center requires constant, massive power draws.

Every technological advance makes yesterday’s infrastructure obsolete within quarters, not decades.

This creates a fundamentally different bubble dynamic, one that could stall not from lack of demand, but from our physical inability to supply what the world desperately needs.

The post AI, The Bubble That Can’t Burst (Just Not Yet) appeared first on FourWeekMBA.

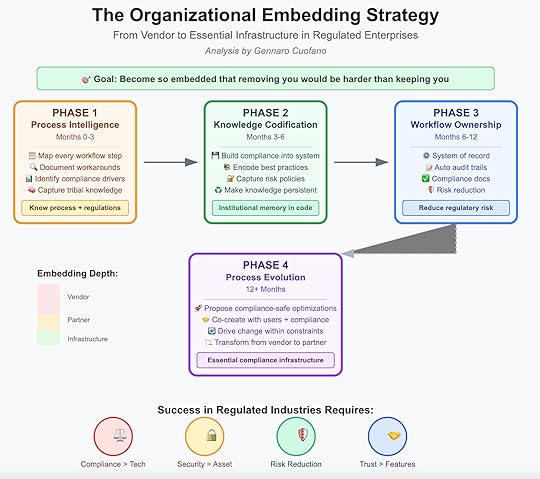

The Organizational Embedding Strategy to win in Enterprise AI

Becoming Part of the Enterprise DNA

The most successful implementations weren’t tools; they were transformations. Your goal isn’t to sell software. It’s to become so embedded in the enterprise’s operations that removing you would be harder than keeping you.

Phase 1: Process Intelligence (Months 0-3)

Work within the authorized pilot to achieve deep process understanding. Map every step of current workflows, including unofficial workarounds that exist for compliance reasons. Document decision points and regulatory requirements that drive complexity. Identify institutional knowledge that ensures compliance but isn’t documented. Become the expert on both their process AND the regulations that shaped it.

Phase 2: Knowledge Codification (Months 3-6)

Build their compliance expertise into your system permanently. Create templates that encode both best practices and regulatory requirements. Capture their risk policies and audit preferences in reusable components. Make their institutional knowledge persistent and auditable, which is critical when experienced employees retire.

Phase 3: Workflow Ownership (Months 6-12)

Become the system of record for regulated processes. Handle exceptions automatically while maintaining audit trails. Generate compliance documentation required for regulatory reviews. Position yourself as reducing regulatory risk, not just improving efficiency.

Phase 4: Process Evolution (12+ Months)

Propose optimizations backed by compliance-safe data. Co-create new workflows with both users AND compliance teams. Drive organizational change that satisfies both efficiency and regulatory needs. Transform from vendor to compliance partner to essential infrastructure.

The Bottom Line: Your 12-Month Path to Enterprise Success

Months 0-3: Authorized Entry

Launch through innovation labs or transformation offices with proper authorization. Identify middle-management champions who can navigate politics. Document wins within controlled pilots that prove value. Build trust through compliance respect, not disruption.

Months 3-6: Departmental Validation

Scale from pilot to department with security approval. Prove ROI metrics that include risk reduction. Navigate procurement with your champion’s guidance. Establish value that satisfies both efficiency and safety requirements.

Months 6-9: Enterprise Expansion

Add enterprise features required for full deployment. Scale across departments through formal channels. Build executive support through proven compliance and efficiency. Lock in enterprise agreements based on demonstrated risk reduction and value.

Months 9-12: Organizational Transformation

Push for process improvements within regulatory constraints. Expand use cases based on proven compliance capabilities. Become embedded in their compliance and operational infrastructure. Plan multi-year partnership, not just vendor relationship.

The Harsh Reality Check

This playbook only works if you accept certain harsh realities about enterprise AI adoption in regulated industries:

Compliance beats technology. The best model doesn’t win. The most compliant one does. Accept that inferior technology with superior compliance will beat you every time.

Security reviews aren’t obstacles; they’re prerequisites. Embrace them instead of resenting them. Learn to navigate rather than circumvent. Use security as your differentiator, not excuse.

You’re building for three masters: users who need efficiency, compliance who needs safety, and executives who need both. Your champion in the middle must satisfy all three.

Risk reduction beats efficiency gains. One compliance violation costs more than years of efficiency gains. Depth of compliance beats speed of deployment. Audit success beats ROI every time in regulated industries.

Trust beats features. It’s better to solve one workflow completely and compliantly than ten workflows partially. Embed deeply into their compliance framework rather than integrate broadly. Become essential to their regulatory posture, not just their productivity.

The enterprises that will succeed with AI are those that find partners willing to do the hard work of compliant organizational transformation, not just technology deployment. The question isn’t whether you have the best AI; it’s whether you’re willing to embed yourself deeply enough into their regulatory and operational reality to make them successful.

The $30-40 billion in failed enterprise AI investment isn’t a disaster—it’s your opportunity. These regulated enterprises need partners who understand that compliance and transformation aren’t opposites—they’re prerequisites for each other. Be that partner, and the enterprise AI market is yours.

The post The Organizational Embedding Strategy to win in Enterprise AI appeared first on FourWeekMBA.

August 21, 2025

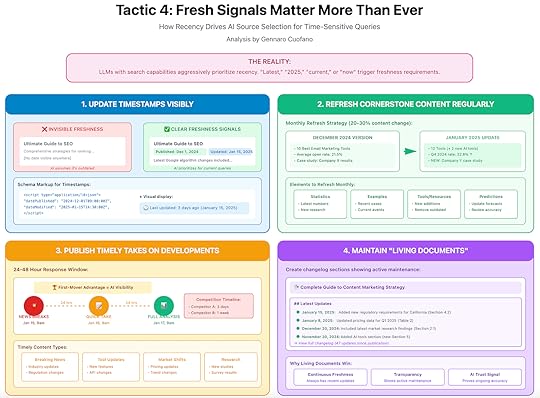

AI Visibility Tactic 4: Fresh Signals Matter More Than Ever

The Reality: LLMs with search capabilities aggressively prioritize recency for any time-sensitive query. “Latest,” “2024,” “current,” or “now” trigger freshness requirements.

Implementation:

- Update timestamps visibly. Display both publication and last-updated dates prominently. Use schema markup for datePublished and dateModified.
- Refresh cornerstone content regularly. Monthly updates to key pages with new data, examples, or developments. Change 20-30% of the content to trigger meaningful freshness signals.
- Publish timely takes on industry developments. When news breaks in your domain, publish analysis within 24-48 hours. First-mover advantage is real in AI visibility.
- Maintain “living documents” for evolving topics. Create changelog sections showing what’s been updated.

This signals active maintenance to AI systems:

## Latest Updates

- **January 15, 2025**: Added new regulatory requirements for California
- **January 8, 2025**: Updated pricing data for Q1 2025
- **December 20, 2024**: Included latest market research findings
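The schema markup for datePublished and dateModified mentioned under Implementation can be generated in the same pass as a changelog like the one above. What follows is a minimal sketch in Python, assuming the page is described with the schema.org `Article` type and embedded as JSON-LD. The helper name `article_jsonld`, the headline, and the dates are hypothetical placeholders; the dates simply mirror the sample changelog.

```python
import json
from datetime import date


def article_jsonld(headline: str, published: date, modified: date) -> str:
    """Return a JSON-LD <script> tag carrying publication and update dates."""
    if modified < published:
        raise ValueError("dateModified cannot be earlier than datePublished")
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        # ISO 8601 dates, e.g. "2025-01-15"
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical page: first published with the oldest changelog entry,
# last modified with the newest one.
snippet = article_jsonld(
    headline="Example Pricing Guide",
    published=date(2024, 12, 20),
    modified=date(2025, 1, 15),
)
print(snippet)
```

Keeping the visible changelog and the `dateModified` value in sync is the point of the design: the date a reader sees on the page should match the date a crawler reads from the markup.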

The post AI Visibility Tactic 4: Fresh Signals Matter More Than Ever appeared first on FourWeekMBA.