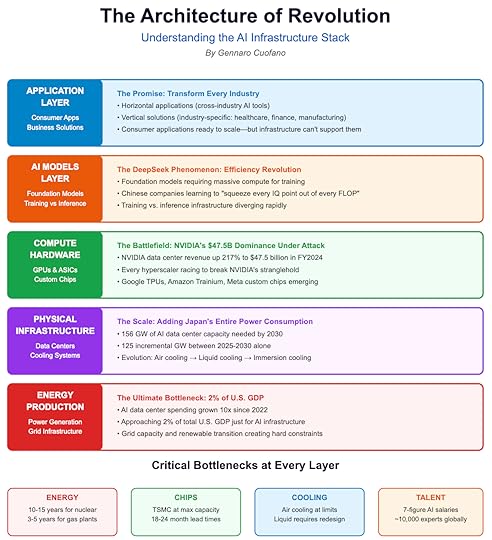

Understanding the AI Infrastructure Stack

The AI revolution isn’t a single technology. It is a complex stack of interdependent layers, each with its own dynamics, bottlenecks, and power players.

To understand why this bubble is different, you must understand how these layers interact and constrain each other.

At the foundation lies the Energy Production Layer, the most overlooked yet most critical constraint.

This isn’t just about building more data centers—it’s about fundamentally reimagining our electrical grid for an era where computation becomes civilization’s primary energy consumer.

Above this sits the Physical Infrastructure Layer, where the battle for land, cooling, and connectivity plays out.

To put the projected power demand of AI data centers in perspective, it is equivalent to adding the entire power consumption of Japan to the global grid, just for AI.

The Compute Hardware Layer has become the most visible battlefield, where NVIDIA’s dominance meets desperate attempts at disruption.

Every hyperscaler and every major chip company, along with a host of startups, is now racing to break NVIDIA’s stranglehold on the training market.

Moving up the stack, the AI Models Layer is where software meets silicon, in an explosive marriage of capability and constraint.

This is where the DeepSeek phenomenon emerges—the shocking realization that Chinese companies, constrained by export controls, are learning to extract more intelligence per FLOP than anyone thought possible.

Necessity isn’t just the mother of invention; it’s becoming the mother of an efficiency revolution.

Finally, at the apex, the Application Layer promises to transform every industry, every job, every human interaction.

But here’s the paradox: the applications are ready, the demand is proven, and the ROI is clear (or nearly so, since these applications amount to a digital workforce), yet we literally cannot build the infrastructure fast enough to deploy them at scale.

The post Understanding the AI Infrastructure Stack appeared first on FourWeekMBA.