Rod Collins's Blog, page 13

April 28, 2015

Choosing The Right DAM Vendor: Get The DAM Facts!

by Mindy Carner and Laura Kost

Is choosing the right DAM (Digital Asset Management) system akin to choosing the right wine for dinner? Reds with meats, whites with fish? In a word, no. Who says you must adhere to such conventions? Choosing the right vendor is about aligning your goals, vision and requirements so the vendor can become a partner that will provide the technology, vision and support to meet your business needs. While selecting a vendor can often be a complex, intricate process, keeping the key elements below in mind will help ensure you find the right DAM system for your business.

The Approach

When your business decides it’s time for a new DAM, it can be overwhelming to know where to start. But the first step is to define the goals of the DAM program, and use these goals to determine every requirement, criterion and decision throughout vendor selection activities. Once the program’s goals are set, you can proceed to gather business and technical requirements, write an RFI and RFP, and finally, decide a solution’s fit based on the vendor’s system demonstration.

Getting Started – Business Requirements

Requirements gathering is critical to a successful DAM Request for Proposal (RFP), because the requirements are the tool that helps identify whether a system can truly meet the goals of the project. Without clearly understood requirements, it is easy to get distracted by a sleek user interface or flashy functionality described during vendor demonstrations. The requirements gathering process should involve interviewing stakeholders across the organization, observing end users conducting their day-to-day tasks, and of course performing general discovery about the assets the system is expected to manage. The requirements gathered during interviews might include:

Workflows

Metadata

User role definitions

Business needs

IT/security requirements

Search and browse

End user adaptability

Use case scenarios

Integration goals

SaaS vs On-Prem vs Private Cloud

Total Cost of Ownership

RFP Evaluation

Once you finalize requirements, it is time to write and distribute an RFP and prepare the business for the selection process. Respondent vendor proposals should be evaluated with a quantitative Scorecard that includes weighted scoring – giving more weight to must-have requirements. About four finalist vendors should be sent functional work packages outlining the Use Cases, Metadata examples, User Roles, and Workflows gathered during requirements, so they can prepare system demonstrations that speak to your business needs.
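As a rough illustration of weighted scoring, here is a minimal Python sketch. The requirement names, the weights (3 for must-haves, 1 for nice-to-haves), the 0-5 scoring scale, and both vendors' scores are hypothetical values chosen for the example, not figures from an actual evaluation.

```python
from typing import Dict

# Hypothetical weights: must-have requirements count three times as much
# as nice-to-have requirements. Adjust to your own priorities.
WEIGHTS: Dict[str, int] = {
    "metadata": 3,            # must-have
    "workflows": 3,           # must-have
    "search_and_browse": 1,   # nice-to-have
    "integration": 1,         # nice-to-have
}


def weighted_score(scores: Dict[str, int], weights: Dict[str, int] = WEIGHTS) -> float:
    """Return the weighted average of a vendor's 0-5 scores.

    A requirement missing from `scores` is treated as a score of 0.
    """
    total_weight = sum(weights.values())
    weighted_sum = sum(weights[req] * scores.get(req, 0) for req in weights)
    return weighted_sum / total_weight


# Illustrative scores only.
vendor_a = {"metadata": 5, "workflows": 4, "search_and_browse": 2, "integration": 3}
vendor_b = {"metadata": 3, "workflows": 3, "search_and_browse": 5, "integration": 5}

for name, scores in [("Vendor A", vendor_a), ("Vendor B", vendor_b)]:
    print(name, weighted_score(scores))
```

With these made-up numbers, Vendor B has the higher plain average (4.0 versus 3.5), but Vendor A comes out ahead once the must-have requirements are weighted (4.0 versus 3.5), which is the effect the weighting is meant to produce.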

The Big Day – Vendor Demos

Finally, you will be ready to hold the vendor demonstrations. Here are a few key points to keep in mind for The Big Day:

Evaluating the Vendor Demos

Make sure you see everything you need to see during the demo. Highlight the most important requirements so you can ensure that these have been covered.

Don’t let a meandering, unprepared vendor derail your timeline. If it’s 90 minutes, then it’s 90 minutes … no extra time allowed.

Reformat Use Cases into a list with check boxes to easily track anything that gets skipped. Add space for making notes in case tasks are accomplished through work-arounds, or you need to go back to something.

Avoid evaluation team fatigue by dividing the scorecard into sections for different parts of the team to be responsible for. Evaluators will be overwhelmed if asked to follow along with all use case scenarios.

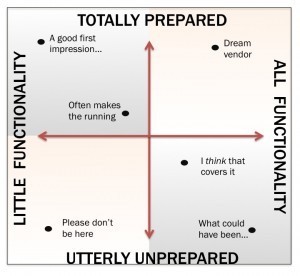

There is a quadrant that evokes the vendor preparedness/functionality fit universe to keep in mind while participating in system demos:

It’s More Than Functionality

Don’t be surprised if capabilities aren’t the only factors to influence the final vendor selection. We have seen clients select candidates based on cultural fit, or a vendor’s stellar customer service, over system functionality.

Another element that plays a key role in decision-making is Price! An alluring price tag can mean that you end up with a system that doesn’t meet every functionality requirement, so be prepared to compromise, and know which requirements you cannot bend on.

Final DAM Thoughts …

We hope this high-level review of an often very complicated and time-consuming process helps you as you visit conferences like the upcoming Henry Stewart DAM NY Conference, May 7-8. As you consider vendors and systems for your business, keep these concepts in mind as you roam the exposition hall. There will be many vendors around demonstrating beautiful user interfaces, optimal metadata capabilities, and even some doling out fun treats and swag – remember that each vendor has a different ability to meet business needs, and yours are unique, so make sure to ask the right questions and get at the needs that matter most to your users.

Mindy Carner is a Senior Associate at Optimity Advisors.

Laura Kost is a Senior Associate at Optimity Advisors.

April 21, 2015

Four Key Lessons On How Innovation Works

by Rod Collins

There are few business leaders who would deny that we are living in a time of unprecedented innovation. Google and Wikipedia, Facebook and Twitter, iPhones and iPads, Spotify and Netflix—none of which existed a mere two decades ago—have radically changed the way markets work. Toward the end of his life as he contemplated the rapid developments of the emerging digital age, Peter Drucker astutely observed, “If you don’t understand innovation, you don’t understand business.” The need to innovate—and to innovate quickly—has become a business imperative for thriving in a post-digital hyper-connected world, and yet most businesses today struggle in the face of this challenge.

According to Steve Denning, the principal reason that many U.S. firms are struggling or dying is a failure to innovate that stems from a lack of commitment and resources to cultivate new ideas and pursue innovations capable of keeping pace with a more competitive global marketplace. If Drucker and Denning are right in their assessment of the importance of innovation, filling this resource gap may very well be job one for today’s business leaders.

Walter Isaacson’s latest book, The Innovators: How a Group of Hackers, Geniuses, and Geeks Created the Digital Revolution provides important insights into what business leaders need to do to install the processes that naturally enable innovative workplaces. Isaacson’s comprehensive overview of the emergence of the digital age is as much a story about management evolution as it is about technological revolution. While Isaacson chronicles the back-stories behind the development of physical devices, such as the transistor, the computer, and the Internet, he also sheds light on the creation of new organizational structures that serve as the incubators and the accelerators of innovation. The “invention of a corporate culture and management style that was the antithesis of the hierarchical organization” is the context, without which, the content of innovation may have never happened. Perhaps the reason so many companies struggle with innovation is because they are operating from the wrong context.

Among the many lessons that Isaacson draws from understanding the dynamics of how innovation works, there are four key lessons that may help business leaders close their innovation gap.

1. Collaborative Teamwork

Innovation is a collaborative process and teamwork is its essential skill.

“Innovation comes from teams,” according to Isaacson, “more often than the lightbulb moments of lone geniuses.” This is perhaps the one most important observation about how innovation works. The context for innovation has more to do with leveraging the collective intelligence of the many than with relying on the individual intelligence of the few. Teams that excel at innovation tend to be collegial and typically bring together people with a wide diversity of skills. Whereas traditional organizations organize by departments of similarly skilled workers under the direction of single supervisors, innovative organizations tend to organize by project in more self-managed structures that are better suited for enabling the serendipity that is often the fuel of innovation.

Teamwork is the essential skill because innovation is, at its core, a collaborative process. Traditional leaders recognize the need for collaboration: a recent IBM CEO study found that 75 percent of CEOs identified collaboration as the most important organizational attribute. Yet the same study found that most of them are not quite sure what to do to transform their organizations into collaborative enterprises. As long as they remain unsure, their organizations are likely to be disadvantaged in a rapidly changing world.

2. Distributed Power

Innovation is more likely to thrive in organizations where power is distributed rather than centralized.

Isaacson notes, “The Internet was born of an ethos of creative collaboration and distributed decision making.” This ethos runs contrary to the fundamental norms of traditional hierarchical structures where power is centralized at the top. It is not surprising that leaders are challenged when it comes to building collaborative organizations because embracing collaboration inevitably means enabling distributed decision-making, which doesn’t come naturally to leaders schooled in the dynamics of command and control.

The problem with maintaining centralized power in fast-changing times is that business leaders are prone to miss innovative breakthrough opportunities because they are far more comfortable with attempting to preserve the status quo than they are with adapting to new emergent realities. Isaacson points to the example of Xerox, whose leaders were not equipped to handle a radically new innovation—a rudimentary personal computer—created by their own research and development team. If the Xerox organization had been more of a network of distributed authority, such as Google is today, the history of the personal computer could have been very different. According to Isaacson, “Xerox could have owned the entire computer industry.” Instead, they ceded this opportunity to Steve Jobs, who on a chance visit to the Xerox R&D facility, recognized the possibilities of an amazing new technology.

3. Complementary Styles

The best leadership of innovation comes from teams that bring together people with complementary styles.

A key finding that Isaacson observed in his study of innovative organizations is that, in building leadership teams, they were highly effective in pairing visionaries with people who could execute innovative ideas. Isaacson cites the example of Intel, founded by two visionaries—Robert Noyce and Gordon Moore—whose first hire was Andy Grove, a disciplined manager who knew how to install operating procedures and get things done.

The leadership at successful innovative enterprises rarely emanates from one strong leader. Instead, it “comes from having the right combination of different talents at the top.” In addition to the threesome at Intel, another example of effective tripartite leadership is at Google, where Larry Page, Sergey Brin, and Eric Schmidt have combined their skills to build one of today’s most innovative businesses.

Another way that leaders can bring together complementary styles is by building crowdsourced organizations, such as Wikipedia and Linux, that provide the framework for a diversity of people to combine their talents in self-organized structures where the leadership team emerges from the interactions of the participants. While Jimmy Wales and Linus Torvalds are the recognized leaders of their respective ventures, neither of these two visionaries maintains tight control over their innovative enterprises.

4. Learning Systems

The most important development of digital age innovation is the human-machine symbiosis that has transformed the essential orientation of all systems from programming to learning.

This fourth lesson is significant because it undercuts the fundamental dynamics that have defined the way large groups of people work together. Human-machine symbiosis began in the Agrarian Age when humans first built tools to ease the burden of physical work. This symbiosis catapulted to a new dimension with the advent of mass production. The human-machine symbiosis of the Industrial Age was almost Borg-like where large numbers of people interacted with their machines and each other in rigidly prescribed ways. This form of symbiosis led to the creation of the command-and-control structures that have defined the practice of management for over a century. The basic orientation of these management systems is prescribed programming where workers are presented with fixed plans and incentives are put in place to make sure they don’t deviate from the program.

With the advent of the Digital Age, the human-machine symbiosis has once again catapulted to a new dimension that can be best described as mass collaboration. Isaacson observes that today’s computer technology “augments human intelligence by being tools both for personal creativity and for collaborating.” As a result, the symbiotic relationship is an iterative learning partnership that combines the strengths of both humans and machines. While machines can collate information incredibly fast, humans are better at the intuitive skills of sense-making and pattern recognition. Isaacson points to the example of the Google search engine, which rapidly collates the individual judgments of billions of people to provide sensible search results. He notes, “the collaborative creativity that marked the digital age included collaboration between humans and machines.”

An Emerging New Management Model

Filling the innovation resource gap requires a rethinking of a century-old management model that has been the foundation for how things get done in large organizations. Fixed plans and tight controls are of little value in a world that is rapidly changing. Instead, business leaders need to heed the four key lessons of the innovators to guide them in transforming their management structures into highly effective learning systems where collaborative teams blend complementary styles to exercise distributed power in serving the needs of their customers. To do so, business leaders will need to operate from the right context, embrace the emerging new management model of the innovators, and organize themselves as collaborative networks rather than top-down hierarchies.

Rod Collins (@collinsrod) is Director of Innovation at Optimity Advisors and author of Wiki Management: A Revolutionary New Model for a Rapidly Changing and Collaborative World (AMACOM Books, 2014).

April 14, 2015

Leveraging Social Media to Cultivate Transparency Within An Organization

by Carol Huggins

According to Starbucks’ Howard Schultz, “The currency of leadership is transparency.” From publishing pay information to inviting all employees to every meeting, many organizations strive to become more and more transparent to both employees and the public. But transparency does not have to be as radical as sharing how salaries are calculated. A more sustainable yet progressive demonstration is the endorsement and inclusion of social media into a company’s human capital management strategy.

Just Say No…to Policy

Mandating how employees use their personal social media accounts does not translate into sound human resources management. Just as an employer should not dictate how employees spend their time off, it should not tell someone what he may or may not share online. In fact, the National Labor Relations Board has been steadily cracking down on strict workplace social media policies. Instead, a company should make clear its formal stance and provide guidelines on how employees may best represent the company on the web. In its corporate (and public) blog, Adidas encourages open communication and informs employees to “tell the world about your work and share your passion.”

Encourage Best Practices

Lead by example. CEOs, directors, and managers who actively use social media influence their employees to do the same. Their affirmation also promotes transparency. Though many executives have not yet embraced social media, they should, at minimum, have a professional online profile that is accurate, up-to-date, and sets the standard for others. For example, LinkedIn provides a modern-day business card and resume wrapped into one convenient package and serves as an effective networking tool that can lead to new business opportunities.

Be a Coach

Teach employees how to use social media effectively. Include “Social Media 101” as a topic in new employee training programs. Gloria Burke, Chief Knowledge Officer and Global Practice Portfolio Leader of Unified Social Business at Unisys, says, “Offering such training creates a team of advocates who are equipped to represent their employer online . . . you’re empowering them to be more confident and effective in what they’re sharing.” Additionally, designate official company social media ambassadors to mentor associates on how to establish or enhance their personal online brand.

Promote Teamwork

Whether or not an organization formally endorses social media, tools to facilitate communication among staff members should be implemented to encourage teamwork and increase productivity. Both Salesforce and Microsoft offer enterprise social networks as features within their products. In 2011, Nationwide launched Spot, a social intranet built on Yammer and SharePoint. Today, nearly all of its 36,000 employees are more engaged, better connected, and have access to the expertise they need to get their work done, resulting in an annual savings of $1.5 million.

Boost Participation

As a result of the growing influence of social media, employees have become a much more valuable marketing resource. Each time a press release is circulated, a new blog post is published, or a key event is publicized, everyone should be informed, and suggested tweets should be shared. The aforementioned ambassadors may also serve as key brand promoters within the firm and with customers. If employees are too busy to keep up with Twitter, then offer support to post and retweet on their behalf. Applications like Hootsuite make it easy by allowing users to schedule activity for multiple accounts.

An obvious motivation for formalizing an organization’s social media program is to avoid public relations disasters. But, more importantly, it inspires transparency. If a company embraces employee participation in social networks, then it need not worry about what employees discuss on the web. Instead, workers will feel empowered to contribute to the organization’s success via the online community.

Carol Huggins is a Manager at Optimity Advisors.

April 7, 2015

Give ‘Em The Business

by Robert Moss

Increasingly, I shudder every time I hear someone in a technology role talk about “The Business.”

You know, like this:

We need The Business to give us the requirements.

Or:

We need to get someone from The Business to weigh in on this.

Or:

If The Business would just tell us what they want and stop changing their minds we could get this project delivered.

Embedded in the very term is a fundamentally outdated way of thinking. It implies that technology is somehow separate from the main line of work that a company does.

That idea used to make sense. Suppose we work for an office supply company. We have IT systems to help us order products from suppliers, manage inventory, and track sales, but that’s not “our business.” Our business is selling and delivering office supplies to our customers.

If such a stance was ever really tenable, it ceased to be so once customers started ordering all their office supplies online instead of going into brick-and-mortar stores and suddenly the online commerce system became the very foundation of the business.

In 21st century companies, technology is no longer a back-office support role, yet the IT/Business divide remains one of the biggest sources of friction within organizations. It prevents them from being able to innovate and to adapt to rapidly-changing markets. For innovative companies today there can be no firm distinction between IT and “The Business.” Technology is the business, and vice-versa.

This isn’t to say that there should be no distinctions in roles within teams. Being able to write software code or diagnose a network failure takes a different set of skills and personality traits than, say, understanding why customers want to buy one product and not another or how best to outmaneuver the competition in the market. But these days the people with those differing skills and abilities cannot be isolated in different parts of the building (or perhaps even in entirely different cities) but instead need to be sitting side by side, collaborating to constantly redefine and drive forward the business (with a lower case ‘b’).

I can think of no better way to get started than to forbid anyone on a technology team from referring to other groups as “The Business.” This is more than a symbolic thing. Just the mental gymnastics of having to come up with a different term to describe non-technical counterparts should go a long way toward bridging the pernicious IT/Business gap.

So what do we call “The Business” instead? Referring to individual roles would be a start: we need a marketing analyst to review this text. Even better would be just to say Bob or Sally should help us revise this text. Ideally, we would just lean across the work table and say, “Hey, Sally, how does this look?”

More than anything, though, companies need people in roles who can cut across traditional boundaries—that is, individuals who can both understand the company’s market and customers and their needs as well as see the potential for enabling how products are delivered to those customers through technology.

I won’t even bother to see if “The Business” will approve this idea.

Robert Moss (@RobertFMoss) is a Partner and the leader of the Technology Platform practice at Optimity Advisors.

March 31, 2015

Perfecting The Member Experience: The Uberization Of Healthcare

by Elise Smith

In the recent blog titled “Creating Memorable Member Experiences,” we explored the idea that a customer’s experience with a company is oftentimes a make-or-break interaction. This is especially true in a consumerist society. Reviews about customer experience on websites such as Amazon, Yelp, Glassdoor, and Google are increasingly driving decision-making. In fact, 88 percent of consumers say they trust such online reviews as much as personal recommendations, and 81 percent of customers are willing to pay for a superior customer experience. Industry experts have estimated that failure to provide a satisfactory customer experience can amount to as much as a 20 percent annual revenue loss for poor performing businesses.

Until recently, the healthcare industry was immune to poor member experience and feedback. The combination of healthcare being an inelastic good and the lack of competition in the health insurance marketplace created an environment in which the members’ experiences, reviews, and voices often had little to no impact on the way insurers continued to conduct business—until today. In this blog, we explore the impact of the Affordable Care Act (ACA) on the rapidly evolving healthcare industry and outline what Optimity Advisors has identified as the Four Guiding Principles for a successful member experience, highlighting industry examples.

With the second annual open enrollment for State and Federal Exchanges, the healthcare industry is starting to see a tremendous change in the way providers, payers, and members interact. While the ACA’s first year was met with tactical shortcomings across the industry, newly released data has shown a decrease in both average premiums and the percentage of the uninsured, along with a historically low increase in healthcare spending. The ACA has helped align competing health plans by introducing the individual mandate and essential health benefits, capping an insurance company’s non-medical expenses, and scrutinizing annual premium rate increases. This level of regulatory oversight has pushed health insurers to compete beyond the traditional factors of affordability and benefit design. Today, the market has shifted to a new paradigm with new influencers to consumer healthcare purchasing such as access, engagement, personalization, and ease of doing business. The same characteristics that have driven consumers towards Amazon for bulk goods, Instacart for groceries, and Uber for cabs, have made their way to healthcare. By giving people the ability to easily shop for and change plans annually, the ACA has created an imperative for insurers to compete in a new way.

Many have referred to this movement as the “uberization of healthcare”. This movement is emerging from a shift in the relationship between consumers and companies, facilitated by technology and data. Just as the Uber application transformed the outdated taxicab model by shifting the focus towards the rider and away from the driver through increased competition and technological innovation, the ACA is driving similar dynamics in the healthcare industry. According to the J.D. Power 2015 Member Health Plan Study, health plan administrators are responding by taking a consumer-centric approach to build greater member trust and loyalty. As a result, the Voice of the Member is becoming more important than ever, both to improve the member experience and lower costs.

In our work with and research on successful member experiences in the healthcare industry, Optimity Advisors has identified the Four Guiding Principles that successful health plans emphasize in their member experience strategy. These include:

Create a Member-Centric Culture – An organization’s culture is centered on shared goals that empower employees to create the best member experience at every touch point.

Value the “Voice of the Member” – Key to developing the Voice of the Member is using member data, qualitative and quantitative research, and claims data to fully create member personas that lead to a personalized experience. Organizations that develop their strategies based on feedback from their members will create an experience that benefits both the members and the organization.

Be a Trusted Advisor – A health plan should deliver relevant, easy-to-access, accurate, and timely information to members across all channels to build a strong and dependable relationship with its members.

Measure What Matters – Use relevant and consistent measures, or Key Performance Indicators (KPIs), to drive behaviors across the operation.

Create a Member-Centric Culture

While it is important for health plans to emphasize the importance of the member experience in all member-facing capacities, true success in creating a positive experience starts with the internal organization. Blue Cross Blue Shield (BCBS) Michigan recognized this imperative and developed Total Health Engagement—a philosophy that focuses on innovative health insurance plan design, better ways of providing care, and dedicated health support. In 2013, Forrester Research awarded BCBS Michigan for innovation and customer understanding for creating the Customer Experience Room. The Customer Experience Room provided a learning environment that helped nearly 7,000 employees understand and empathize with customer pain points about health insurance. By highlighting the importance of a member-centric environment within the organization, BCBS Michigan’s employees are able to better serve their members, which has enabled higher retention, with 65 percent of members reporting improved health.

Value the “Voice of the Member”

The ACA also emphasized the evolving member-centric culture by creating provisions around a new kind of health insurance company: a Consumer Operated and Oriented Plan, or CO-OP. CO-OPs are member-led with at least 51 percent of their Board of Directors controlled by members who play an active role in representing the “Voice of the Member.” In Arizona, Meritus is the state’s only CO-OP, with a unique mission to design its operations and strategies around the member. By emphasizing a member voice at the board level, all strategic decisions are influenced by people who would be directly impacted by those decisions. By introducing a member-led board to healthcare, members can drive innovation, comprehensive plan designs, widespread provider access, and a financially sustainable yet affordable price point. As a result, Meritus drastically decreased its rates this year and offers some of the most affordable plans in the market, to which the market responded positively. By mid-January, the plan saw enrollment numbers reach 36,0000 new members. As Meritus continues to grow, its member voice and experience continue to be at the forefront of its strategy, and the market continues to respond positively.

Be a Trusted Advisor

One of the key findings from the J.D. Power 2015 Member Health Plan Study was that members are more likely to remain loyal to their health plans if they view the health plan as a trusted advisor. Accordingly, in the most direct representation of the “Uberization,” health plans are launching iPhone and Android applications that allow members to access all of their health plan information at the touch of a screen. In 2011, Humana’s MyHumana application won the Appy’s Award for “Best Medical App”. At the time of its award, the application became the single source of truth for Humana’s members in a revolutionary way, providing members with fast, reliable, and up-to-date information. In 2014 Humana released updates to the application based on member feedback, making it an even more seamless, integrated and personalized experience. Jody Bilney, the Chief Consumer Officer of Humana, speaks to the application’s benefits: “With the launch of our new mobile app, we look forward to helping members better understand and leverage their health plans to enable more positive, lasting health outcomes.”

Measure What Matters

When Humana saw its costs increasing drastically (about 20 percent in one year) due to ACA regulations and high-priced drugs related to chronic conditions, the health plan responded by developing more member-focused programs such as cooking classes and exercise programs, and by launching HumanaVitality, a wellness program with monetary rewards. Because most of its costs come from claims payouts, Humana addressed the rising costs by engaging members in their own health, which saves money not only for the member, but also for the insurance company. The insurer’s approach was centered on the idea of not just treating the symptom, but treating the individual as a whole. This approach was aimed at not only decreasing claims costs, but also improving the overall health of its membership population.

While the ACA hasn’t yet solved all the problems it was created to resolve, the healthcare industry has seen significant changes in only a year since its enactment. It has caused a tremendous shift in the healthcare industry by introducing and facilitating a new way for health plans, providers, and members to interact. Uberization has arrived in the healthcare industry, as insurers are finally forced to compete on their ability to engage their members. As a result, successful plans are using the Four Guiding Principles to help shape their strategy to align with the evolving expectations and needs of members.

Elise Smith is an Associate with Optimity Advisors, focusing on member experience strategy and innovation in the healthcare industry.

March 24, 2015

Getting Cozy with Your Content

by Reid Rousseau

Knowing and understanding your content is an ever evolving and essential component of ensuring the longevity and success of corporate information management initiatives—be they archiving, digital asset management (DAM), content management (CMS) or records management (RIM). Whether you are approaching this task from a “first date” or “decades-long marriage” perspective, it is important to realize that no matter how well you may think you know your content, it is worth taking a step back to formally understand what you have, and how it fits into the big picture of your business strategy and goals.

Performing an Asset Audit is a process that will allow you to take inventory of the full library of asset types owned and managed in an enterprise, to discover how they are produced, interrelated, stored, accessed, and distributed. It is the crucial first step towards intimately understanding your assets from a 360-degree perspective before embarking on any of the intrinsic next phases of an information management initiative.

Only when you have comprehensive documentation of your assets can you begin to design a holistic metadata strategy that reflects your asset inventory and supports asset categorization and discovery for end users. The asset audit is also a critical reference document for scoping, designing, selecting, and deploying technology systems that will appropriately manage your assets and evolve in alignment with your ever-changing asset and business needs.

Optimity’s Approach

At Optimity Advisors we have found that conducting an Asset Audit often uncovers insights that may be overlooked by more common high-level reviews, as on-the-ground teams may be unfamiliar with the steps necessary to fully capture the content requirements imperative for successfully pursuing information management initiatives. In reality, it is not plug and play … it’s so much more.

We recommend organizing your approach to an Asset Audit by identifying a high-level category framework, and fleshing out specific attributes per category that describe your organization’s content needs.

High-level categories may include but are not limited to:

Asset Descriptive Characteristics

Asset Technical Characteristics

Asset Workflow & Technology Touch Points

Asset Ownership

Asset Accessibility

Technology Applications & Systems Managing Assets

Asset Rights Information
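One way to picture such a framework is as a simple record with one group of attributes per category. The sketch below is illustrative only: the class, its field names, and the sample values are hypothetical and do not represent Optimity’s actual template.

```python
from dataclasses import dataclass, field


@dataclass
class AssetAuditEntry:
    """One row of a hypothetical Asset Audit template (field names are illustrative)."""
    asset_type: str
    descriptive: dict = field(default_factory=dict)        # e.g. title, subject, brand
    technical: dict = field(default_factory=dict)          # e.g. file format, resolution
    workflow_touch_points: list = field(default_factory=list)
    owner: str = ""
    accessibility: str = ""                                # who can reach the asset, and how
    managing_systems: list = field(default_factory=list)   # applications currently holding it
    rights: str = ""

    def gaps(self):
        """Return the names of categories that are still empty for this asset type."""
        return [name for name, value in vars(self).items() if not value]


# Sample values are made up for illustration.
entry = AssetAuditEntry(
    asset_type="Product photography",
    technical={"format": "TIFF"},
    managing_systems=["shared drive"],
)
print(entry.gaps())
```

Listing the empty categories per asset type is one simple way to surface the kinds of gaps (for example, missing ownership or rights information) that an audit is meant to expose.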

Once populated, a properly conducted Asset Audit template should identify valuable insights about your organization’s physical and digital assets, their workflows, and the people, process, and technology ecosystem that support them.

Typical information identified in a thorough Asset Audit includes:

Interrelationships among assets, people, processes, and technology

Workflow inefficiencies

File relationships that could be leveraged to facilitate asset discovery

Gaps in asset ownership and stewardship

Assets not managed by current technology but having the potential to be

Any non-compliance within the initiative’s policies and procedures

Optimity’s asset-first approach provides the backbone to any corporate information management initiative by guaranteeing a 360-degree snapshot of an organization’s content. The resulting Asset Audit should become a core document that guides all key elements of a holistic information management strategy, ensuring that it is scalable to support ongoing and future content and business needs.

Reid Rousseau is a Senior Associate at Optimity Advisors.

March 17, 2015

Creating Memorable Customer Experiences

by Rod Collins

When customers have a problem or need answers to an important question, there are few things that frustrate them more than the customer service gauntlet. We’ve all had the experience of calling a customer service number only to be greeted by an automated system taking us through a series of prompts (none of which really describes our issue) before the recorded voice tells us to hold for an operator. But instead of a real person, we are greeted by another recorded voice informing us that there is an unusual volume of calls, apologizing for the delay, and telling us how important we are. After several minutes, we finally get to talk to a live human being who greets us with a pitch about the company’s customer guarantee before asking us to describe our issue. However, instead of the resolution that we are hoping for, the customer service representative explains that he does not have the authority to solve our issue and passes our call to another representative who, in turn, passes us to another person until we eventually are passed back to our first customer representative, who empathizes with our plight and tells us that he wishes there was something he could do. When we remind him of his opening pitch about the company’s customer guarantee, we receive a firm lecture about how our particular problem is not covered by the guarantee. Despite a significant investment of our time, we end the call no better off than when we started.

Despite all the rhetoric about how “customers are our most important people,” few companies have the processes in place to back up the sentiment and far too many “customer guarantees” are nothing more than empty promises. What matters most to companies who put their customers through the customer service gauntlet is the bottom line, not the customer experience.

One company where you will never have to endure the gauntlet is Zappos. The innovative online retailer believes that the fundamental purpose of a business is to create value for their customers. What matters most to the business leaders at Zappos is the delivery of a delightful customer experience. That’s why every new hire at Zappos, whether the Chief Financial Officer or a customer service representative, is required to begin employment by participating in the company’s four-week customer training program. There are no exceptions. While most companies profess that listening to the voice of the customer is very important, Zappos actually backs up the commitment with an uncommon action—they actually answer the phone.

The call center is a special place at Zappos because it is where the employees get to meet the company’s most important VIPs, their customers. That’s why there are no time limits on the calls and why the performance of customer service representatives is never measured by the number of calls per hour. What counts at Zappos is making sure that the telephone call is a positive experience for the customer, and, if that takes several hours, that’s alright as long as the customer’s needs are being met.

Customers are not abstractions at Zappos. They are real people with real needs, and the customer service representatives take the time to understand the needs of each of their customers, as Zaz Lamarr discovered when she wanted to do something special for her mother.

Zaz’s mother was stricken with cancer and was going through a rough time. Zaz thought that a new pair of shoes might brighten her mother’s spirits. However, given the weight loss that can happen with cancer patients, Zaz wasn’t sure what size would fit her mom. So, she placed an online order with Zappos for several pairs of shoes in a variety of sizes, hoping at least one of them would work. Fortunately, two of the pairs fit, and Zaz made arrangements to return the remaining pairs of shoes. However, before Zaz was able to get to the UPS store, her mother took a sudden turn for the worse and passed away. When Zappos contacted Zaz after the shoes were not returned as expected, she explained that with all the arrangements she had to handle following her mother’s death, she was unable to comply with Zappos’ return policy.

At Zappos, policies are guidelines, not rigid rules. When the customer service representatives became aware of Zaz’s circumstances, rather than insisting that Zaz needed to make time to go to the UPS store, they sent UPS to her. While this gesture was very touching, what happened next is what caused this story to go viral on social media. Zappos customer service representatives got together and sent Zaz a beautiful bouquet of flowers to let her know that they were thinking of her in her time of need. At Zappos, policies are not restricted to protecting the company’s bottom line. The popular online retailer also has policies for making sure that their customer service representatives are creating memorable customer experiences. That’s because at Zappos everyone knows that they work for the customers and that their primary focus is to listen attentively to the voice of the customer. Do you think Zaz Lamarr will ever forget the random act of kindness she received from an empathetic customer service representative the day Zaz told Zappos she couldn’t return her recently deceased mother’s shoes on time?

Rod Collins (@collinsrod) is Director of Innovation at Optimity Advisors and author of Wiki Management: A Revolutionary New Model for a Rapidly Changing and Collaborative World (AMACOM Books, 2014).

March 10, 2015

Down With OTT: One Family’s Experience Cutting The Cable Cord

by Scott Moran

No Mas

The Super Bowl kicked off at 6:30 PM leaving me only two hours to get to Best Buy, purchase an outdoor antenna, get home, roof-mount the antenna, break out the drill and run cable from the attic down to my living room television.

I should back up.

As a husband and father of two future college tuitions, er, small children, there’s very little I enjoy more than saving money. Unfortunately for my family, I also enjoy running unnecessarily elaborate household cost / benefit experiments. Ironically, even though I spent the majority of my career in the broadcast / cable industry, I had thus far resisted the temptation to apply these inclinations to our cable service.

Then HBO announced their over the top offering.

Then Dish Network dropped their SlingTV news and enhanced channel line-up.

Then my $175 cable bill arrived.

It was time for some, in no way annoying, analysis of my family’s television viewing behaviors.

Confessions

“Cutting the cord”, canceling cable and consuming television over the Internet, is a concept that has existed for some time. In fact, an unscientific survey of neighborhood babysitters confirms that most young adults who attempt to feed my children dinner haven’t ever had a cable subscription. It’s likely that my sons won’t consider the subject once they stop spending my money and start spending their own.

Still, to 30-somethings like my wife and me, it was a daunting option and one I hadn’t seriously considered until now. That said, with my interest piqued I was confident that I had a solid plan in place to reach an informed decision.

Phase I of said plan was to take an inventory and answer three basic questions:

What did we own?

What did we watch?

What did we pay?

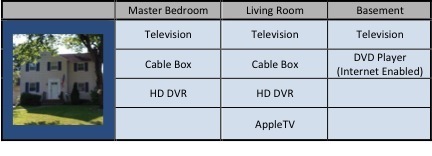

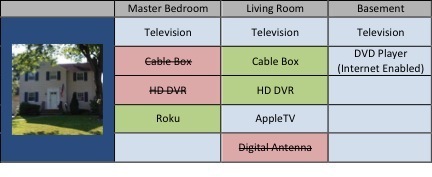

As I suspected, we own three TVs. Here’s a quick look at how they and their associated hardware are dispersed throughout the house:

Well, that was easy.

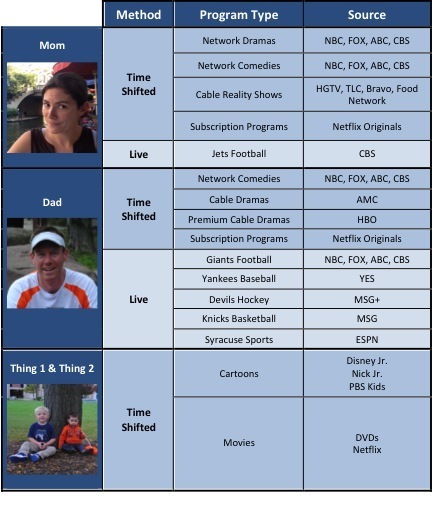

Defining my family’s viewing habits was slightly more difficult.

Initially through stealth but ultimately via constant badgering, I was able to construct the following viewing summary:

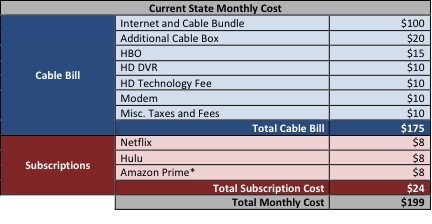

The final inventory step was to get an accurate look at our monthly expenses.

A review of our most recent bank statements revealed the following breakdown:

* Amazon Prime subscription projected to increase slightly

Half Measures

Inventory complete, it was time for Phase II.

The question was, knowing what I now did about our viewing habits, could I construct a cheaper, cable-free model without creating any major content or experience gaps?

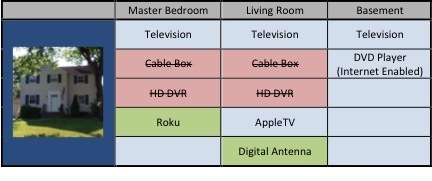

After a fair amount of research I landed on the household configuration below:

There are a number of streaming hardware options available and they each have their own idiosyncrasies in terms of the platforms they support but the decision to swap our bedroom cable box for a Roku was quite simple.

The one requirement of the bedroom television is that we need to be able to play episodes of Paw Patrol, preferably without waking up, when the children sneak into our bed at 5:30 AM.

Paw Patrol is a Nick Jr. program.

Amazon Prime has exclusive rights to stream Nick Jr. programs.

Roku and Amazon Fire TV are the only streaming boxes with built-in Amazon Prime functionality.

They both support Netflix. They both support Hulu. They both cost $99. Roku supports HBO GO, Amazon doesn’t.

So, that was that.

With the bedroom covered, I turned my attention to the living room.

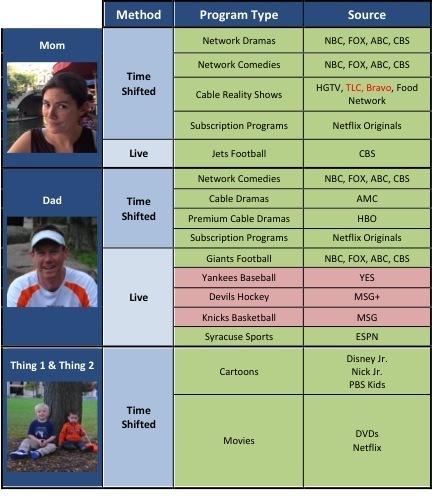

Assuming that the AppleTV, Netflix, Hulu Plus and Amazon Prime subscriptions remained and that our cable box would be replaced with a combination of SlingTV and HBO GO subscriptions, we’d be left with the following content gaps:

That’s a lot of red in my viewing boxes.

Many streaming packages exist for the NHL, NBA and MLB but all of them black out games airing on local cable networks. There are legally murky methods of circumventing these restraints but without resorting to shenanigans, the Yankees, Devils and Knicks appeared out.

There was, however, a solution for the NFL. Research on broadcast signal coverage confirmed that, given our proximity to New York City, we should be able to receive broadcast networks in HD for free over the air with a relatively cheap indoor antenna.

It seemed that cutting the cord would have no impact on my children, minimal impact on my wife and leave me with limited access to local sports.

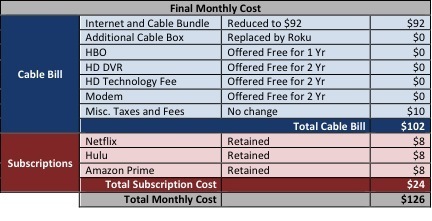

The $125 start-up costs for the Roku and antenna would be recouped quickly as our monthly bill would ultimately look like this:

* HBO GO OTT price estimated based on industry expectations

** Sling TV price based on CES announcement

That’s a savings of $68 per month. It wouldn’t pay for college but it was enough for me to move on to Phase III.

Full Measure

It was time to reveal my plan to the family and ask them to slowly embrace the possibility of cutting the cord. But that didn’t sound like any fun so I waited for everyone to leave the house, disconnected the cable and locked the boxes in a drawer in my office.

The $99 Roku easily replaced the bedroom cable box. Configuration of our Netflix, Hulu and Amazon accounts took 15-20 minutes and after one shaky test run my wife and I quickly learned the three-button combination that would play Paw Patrol and allow us to recover precious minutes of sleep.

The living room, however, didn’t go down without a fight.

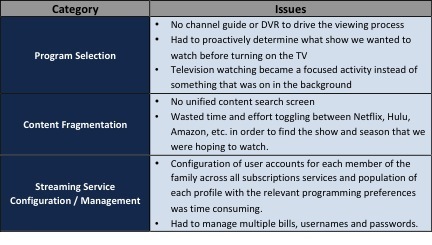

Other than the sporting events I could no longer force my wife to sit through, most of our shows were available on one of our subscription services. We did, however, encounter the following viewing challenges:

We explored some streaming aggregation sites but ultimately, we just got used to the change. Within a few days we were navigating the AppleTV and our subscription services as efficiently as we had navigated our cable box.

The final step was to watch the Super Bowl via the small indoor antenna I had purchased. If we could pull that off, we were officially cutting the cord.

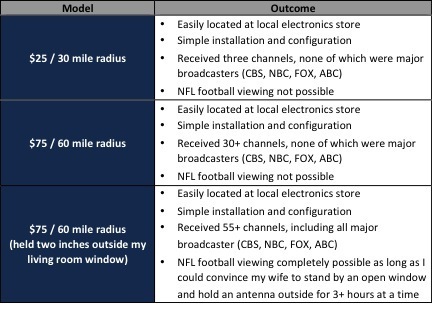

Unfortunately, the indoor digital antenna(s) performed as follows:

Which brings me back to my roof and the antenna I would have to mount there within the next two hours.

At this point it bears mentioning that I have a crippling fear of heights and am not what anyone would consider “handy”. So, I was fairly relieved when I heard my wife suggest that I should, “maybe just plug the cable back in.”

Better Call Cable

I couldn’t just plug the cable box back in and scuttle the entire experiment though. So, I decided to pretend the antenna adventure had panned out, threaten to cancel my cable subscription and see what happened.

Armed with my latest cable bill, the cable company’s best “internet only offer” and my estimated monthly cord cutting expenses, I dialed the phone.

After a fair amount of menu navigation and waiting on hold, I was connected to an accounts department representative responsible for fielding calls from prospective cord cutters.

I politely explained that I was planning to spend:

$72 for their internet only service (50 Mbps download plus taxes and fees)

$35 on additional subscriptions (SlingTV & HBO GO)

$24 on current subscriptions (Netflix, Hulu, Amazon)

The $24 on current subscriptions was a wash but if the cable company could come in at under $107 for internet, cable and HBO then there wouldn’t be any financial incentive to leave. I explained that they had $35 to play with if they wanted to keep my business and dug in for a fight.

Surprisingly, they offered no resistance and immediately began waiving fees.

Roku in the bedroom? One cable box fee eliminated.

HD DVR Fee? Removed.

HD Technology Fee? Free.

Modem Rental Fee? Gone.

Free HBO? Done.

Cost reduction with no change to your cable package? Sure.

All in, the call lasted 15 minutes.

Our final household configuration looked like this:

Our final monthly bill ended up looking like this:

That’s a savings of $73 per month. It still won’t pay for college but it’s actually $5 per month cheaper than if we ditched cable.
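For anyone who wants to rerun the comparison with their own numbers, the arithmetic can be sketched in a few lines of Python. This is a rough reconstruction from the dollar amounts quoted in the text; it assumes the $175 cable bill covered internet, cable and HBO, and that the $24 in existing subscriptions sat on top of it. The variable names are mine.

```python
# Monthly figures quoted in the post (USD).
CABLE_BILL = 175             # assumption: covers internet, cable TV and HBO
CURRENT_SUBSCRIPTIONS = 24   # Netflix, Hulu, Amazon
INTERNET_ONLY = 72           # cable company's internet-only offer
NEW_SUBSCRIPTIONS = 35       # SlingTV and HBO GO (estimated prices)
STARTUP_COSTS = 125          # one-time cost of the Roku and antenna
NEGOTIATED_SAVINGS = 73      # monthly savings after the phone call

# Total monthly spend before the experiment and under the cord-cutting plan.
with_cable = CABLE_BILL + CURRENT_SUBSCRIPTIONS
cord_cutting = INTERNET_ONLY + NEW_SUBSCRIPTIONS + CURRENT_SUBSCRIPTIONS

# Savings from cutting the cord, and how long the hardware takes to pay off.
cord_cutting_savings = with_cable - cord_cutting
payback_months = STARTUP_COSTS / cord_cutting_savings

# The price the cable company had to beat for internet, cable and HBO.
break_even_target = INTERNET_ONLY + NEW_SUBSCRIPTIONS

# Where the bill landed after negotiating, versus cutting the cord.
negotiated_total = with_cable - NEGOTIATED_SAVINGS
advantage_of_staying = cord_cutting - negotiated_total

print(f"Cord-cutting savings: ${cord_cutting_savings}/month")
print(f"Hardware payback: {payback_months:.1f} months")
print(f"Price to beat: ${break_even_target}/month")
print(f"Staying with cable is ${advantage_of_staying}/month cheaper")
```

Under those assumptions the script reproduces the $68, $107 and $5 figures quoted above.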

Buyout

Looking at the finances, the conclusion seems simple – skip the experiment and pick up the phone. The cable companies know that the quality and availability of over the top options are increasing, as are the number of people willing to make the switch. Consequently, they’re desperate to retain subscribers even if it means undercutting their own advertised prices.

Running the numbers and experimenting had its virtues though, even if we did end up back with cable. We now know exactly what we’d miss by ditching cable (local sports, AMC) and what it would take to fill any intolerable content gaps (roof-top antenna). We’re better prepared to become a cord-cutting household than we were before, which could come in handy given the one drawback to all of the cable company’s concessions.

Each of the price cuts made to our bill came with an expiration of either one or two years, meaning the cable company is betting we’ll either forget or simply not notice when they quietly increase our fees. Even if our bill reaches its previous highs, two years is a long time.

Netflix, Hulu and Amazon Prime are already in place.

Sling TV and HBO GO are on the way and there are sure to be even more direct to consumer options in 2017.

Who knows, by then I may have even summoned the courage to scale my roof.

Scott Moran is a Manager in the Media practice at Optimity Advisors.

March 3, 2015

What’s So Special About Specialty?

by Don Anderson, Pharm.D.

Specialty drug product evolution has become the hottest topic in health care in two decades, easily dominating the conversations of most involved. With the exception of interventional radiology and a few other “boutique” concepts in medicine we have not encountered booming growth in an area of healthcare like we are currently witnessing in the large molecule biopharm space. With that boom comes innovation, pharmacologic treatment options abound, and the financial impact of innovation begins to take hold. While we strive to make treatment breakthroughs via pharmacologic interventions, pharmaceutical companies are capitalizing like never before.

Defining a specialty drug isn’t easy; different entities draw boundaries around specialty products in different ways to suit their intent. For the most part we can apply the following qualifiers to a drug to determine whether it is specialty or not. Not all qualifiers must be present, but most will agree that four or more of these qualifiers will constitute a specialty drug:

Treatment of complex, chronic, and/or rare conditions

High cost, often exceeding $5,000 per month

Availability through exclusive, restricted, or limited distribution

Special storage, handling, and/or administration requirements

Ongoing monitoring for safety and/or efficacy

Risk evaluation and mitigation strategy (REMS)

Also key to setting the specialty stage is to apply a layer of financial perspective and bring specialty drug expenditures into context. According to the Pharmaceutical Care Management Association (PCMA), specialty pharmaceutical spending will rise from $55 billion in 2005 to $1.7 trillion by 2030. This will reflect an increase from 24 percent of total drug spend in 2005 to 44 percent of a health plan’s total drug expenditure by 2030.
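To put that projection in more familiar terms, the two endpoints can be converted into an implied compound annual growth rate. The sketch below uses only the two PCMA figures quoted above; the function name is mine, and the calculation assumes smooth year-over-year growth, which real spending will not follow exactly.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1


spend_2005 = 55e9      # $55 billion, as quoted
spend_2030 = 1.7e12    # $1.7 trillion, as quoted
rate = cagr(spend_2005, spend_2030, 2030 - 2005)

print(f"Implied annual growth in specialty spend: {rate:.1%}")
```

Taken at face value, the quoted figures imply specialty spending growing by a little under 15 percent every year for 25 years.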

The Foundation

In the 1970s, when the first set of biopharm-engineered products was delivered to patients, no one could have foreseen the explosion that would take place nearly forty years later. The first set of these medication therapies was targeted at hemophiliacs, who otherwise had limited treatment options and poor outcomes. The new “specialty” products had immediate success and were quickly adopted into national treatment standards to be used in emergency rooms nationwide. Hemophilia was almost immediately downgraded in the minds of medical practitioners because they now had good treatment options that worked in rapid fashion. Today these same drugs (BeneFIX, etc.) run about $60,000 for a single adult treatment, and we have seen health care organizations absorb single patient annual costs exceeding $350,000.

As the 1970s came to a close there were fewer than a dozen drug therapies available that we would consider specialty today. Twelve was an absorbable number, even with the massive financial impact those drugs carried at that time. So few patients were afflicted with diseases that were treatable in this space that it was considered somewhat of an anomaly. Insurance providers created “laser” plans to pinpoint these exceedingly expensive patients, and the concept wasn’t a priority for most. Even throughout most of the 1980s this landscape was relatively static and underwhelming.

The Boom

At the close of Q3 2014, there were nearly 300 products on the market and ready to ship to patients and doctors nationwide. Over half of the 700+ products in the FDA pipeline currently sit in the biopharm specialty drug space. The specialty drug explosion has almost put the FDA at a standstill, unable to keep pace with the review, scrutiny and oversight previously within its wheelhouse. How did we go from eight to 300 drugs in a relatively short amount of time? What spurred the surge in biopharm output?

Throughout the 1980s and 1990s pharma was reaping rewards from the flood of “me too” (Me2) products that were hurled at the healthcare consumer. Drugs were launched that literally did nothing different than four or five other drugs just like them, thus creating the Me2 landscape. Pharma was stamping out drugs that weren’t innovative and, in some cases, succeeded in creating market confusion. Antihistamines and reflux meds come to mind; the likes of Claritin and Prilosec should sound pretty familiar to most of us by now. During this time pharma posted record-breaking profits from relatively little R&D expense. Some would refer to this period as a time of plenty, for obvious reasons.

By about 2004, the wave of piggyback drug launches died off. Few Me2 scenarios remained and pharma profits stalled. Looking for creative revenue streams, big pharma became more aggressive in acquisition. This worked in a number of cases, but not nearly enough. Pharma had not reinvested in itself, in disease research or treatments, and was limited in revenue options for the first time in many years. Enjoying the spoils of Me2 victories, pharma had exposed itself in an uncharacteristic way. As the last round of acquisitions swept up stragglers, pharma strategists modeled out the profitability of complex molecule bioengineered therapies that focused on the most serious of diseases. Using whatever information they could get their hands on, they modeled revenue forecasts that offered salvation from their financial funk. That salvation was in the form of specialty drugs whose proposed rate of return was potentially double, or triple, what had previously been experienced.

Reflection

A decade ago, when I started working in pharmacy and managed care, I didn’t have a single client with a specialty drug product in their top 25 utilized, and some had none even in the top 40. In 2014 more than half of my clients had at least three specialty drugs in the top 10, and an unfortunate handful had seven or eight specialty medicines among their top 10 utilized. The associated costs were, and still are, astounding. Not only were the new biopharm drugs all high cost medicines to begin with, we also saw a price inflation of 14 percent for the existing products already available in 2014. Pharma is clearly back in profit mode in the form of complex, engineered biopharm products.

David or Goliath?

Most experts agree that the small biotech firms will dominate moving forward, not the juggernaut companies most of us can name. In July of last year a small Dutch biotech firm, uniQure, brought to market its only product, Glybera, which provides curative therapy for a very small subset of patients afflicted with a rare genetic disease called Lipoprotein Lipase Deficiency, or LPLD. Glybera exploded the cost ceiling of any previously known drug therapy. Before July the most expensive single drug, non-acute therapy ran at most $180,000 per patient year. At product launch Glybera was priced at €1.1 million, or about $1.4 million. This was not the only record set by uniQure in this product launch. Glybera also happens to be the first ever gene therapy drug available in Western medicine. Glybera uses a modified virus to enter targeted cells and insert a replacement strand of DNA, correcting the course of action of those cells. In short, it reprograms faulty cells and in that action provides a cure to a fatal genetic disease. And make no mistake, uniQure has more genetic therapies in the works. They have announced progress in genetic therapy for hemophilia and initial stages of heart failure thus far. While the price of Glybera is still being hotly debated, what we do know is that an LPLD patient will most likely die in their late 30s from complications of advanced heart disease at an average cost of $3.8 million per occurrence. UniQure’s pricing position is that for roughly a third of that cost they offer a curative outcome, not to mention the improved quality of life. While technically true, this price point is a hard pill for regulators and health plan executives to swallow (no pun intended).

The Orange Position

Don’t let the overall feel of this piece in any way lead you to think that biopharm output isn’t a tremendous success. We should all be proponents of innovative medicine to offer treatments and cures to those in need. Last year Gilead launched curative therapy for some types of Hepatitis C, which was groundbreaking. While the cost of that curative treatment exceeded $100,000, it is a fraction of the cost of providing ongoing pharmaceutical and medical care for a Hep C patient. But this is still a $100,000 expenditure that didn’t exist before; these scenarios need to be properly constructed and controlled. Many of these Hep C patients are in managed plans funded by our national healthcare dollars, plans that are already feeling financial pains. My concern is focused more on management of the concept than on the concept itself. Good stewardship of our healthcare dollars should be at the forefront of everyone’s thinking while we watch the biopharm landscape continue to hit home runs. Those of us who provide cutting edge guidance on this topic to those in need should be geared up for engagement. This space is only going to grow and become more complicated.

Don Anderson, Pharm.D. is a Senior Manager in the healthcare practice at Optimity Advisors.

February 27, 2015

Data Quality: An On-going Challenge For The Financial Industry And Regulators

By Vipul Parekh

Every financial, insurance, and asset management services firm has significantly changed its operations due to wide-ranging global regulations such as Basel III, Dodd-Frank, MiFID, EU Solvency II, and the Volcker Rule in the US and Europe. More regulatory reforms are in the works for Asia. At the same time, all sectors in the financial markets have seen exponential increases in data volume through a variety of sources. The majority of CEOs want to leverage data in the quest for leaner organizations, better operational efficiency, and increased revenues. Sound data quality has always been critical and continues to play the central role in the future success of multi-billion dollar investments in this area. The quality of data not only impacts the success of these initiatives, but is also imperative to the firm’s business agility, productivity and likelihood of survival. For regulated firms in the financial, insurance and health care sectors, poor data quality can also result in breaches, potential fines, and reputation loss.

With the current regulatory focus, most firms have implemented data governance and IT infrastructure to process large amounts of data and produce regulatory reports in a timely manner. However, the next big challenge for regulators and firms is to start making sense of this data and assess whether the generated data can be used for meaningful market analysis at the macro level (behavior of a group of banks) and the micro level (behavior of a specific bank). The ultimate goal of these initiatives is to produce better quality data so that market trends and risks can be identified early enough to avoid a crisis. Both firms and regulators have acknowledged data quality as one of the key bottlenecks in obtaining full transparency of the market. For example, Dodd-Frank regulation has established harmonization rules to closely monitor and improve the data quality of key attributes for a number of derivative asset classes. Similarly, the Basel Committee has recognized the critical importance of data quality and issued a consultative paper (BCBS 239) with principles of a sound data governance framework (to be implemented by 2016). Firms looking to commercialize their data obviously need to produce near-pristine data quality for their enterprise data analytics initiatives.

Essential Characteristics of Good Quality Data

With so much reliance on good quality data, let’s look at some essential characteristics of good data, what leads to bad data, and a few key points to ensure better data quality. The following are essential characteristics to assess if quality of data is good:

Completeness – Data attributes should contain values other than blank or default values.

Validity – Data attributes need to contain valid values as specified by the business to ensure consistency and integrity.

Accuracy – Data attributes should contain accurate values by enforcing conditional or field dependency rules.

Ease of use – The data values need to be easily interpreted without the need of complex parsing.

Availability – Data should be available in a timely manner for analytical use.

Firms should spend time performing current state assessment of data quality relative to the above characteristics and establish performance metrics for quality measurements.
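As a rough illustration of what such a current state assessment could look like, the sketch below scores a handful of records against the first three characteristics. Everything in it is an assumption made for the example: the trade records, the field names, the allowed currency list, and the specific rules are hypothetical and would be replaced by the firm’s own business definitions.

```python
from typing import Callable, Dict, List

# Hypothetical trade records; field names and values are illustrative only.
records = [
    {"trade_id": "T1", "asset_class": "IRS", "notional": 1_000_000, "currency": "USD"},
    {"trade_id": "T2", "asset_class": "", "notional": 500_000, "currency": "EUR"},
    {"trade_id": "T3", "asset_class": "FX", "notional": -250_000, "currency": "usd"},
    {"trade_id": "T4", "asset_class": "CDS", "notional": None, "currency": "GBP"},
]

REQUIRED_FIELDS = ["trade_id", "asset_class", "notional", "currency"]
ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}  # assumed business-approved values


def is_complete(rec: dict) -> bool:
    """Completeness: every required attribute holds a non-blank value."""
    return all(rec.get(field) not in (None, "") for field in REQUIRED_FIELDS)


def is_valid(rec: dict) -> bool:
    """Validity: the currency is one of the values specified by the business."""
    return rec.get("currency") in ALLOWED_CURRENCIES


def is_accurate(rec: dict) -> bool:
    """Accuracy (assumed conditional rule): a reported notional must be positive."""
    notional = rec.get("notional")
    return notional is None or notional > 0


CHECKS: Dict[str, Callable[[dict], bool]] = {
    "completeness": is_complete,
    "validity": is_valid,
    "accuracy": is_accurate,
}


def quality_metrics(recs: List[dict], checks: Dict[str, Callable[[dict], bool]]) -> Dict[str, float]:
    """Return the share of records passing each check (0.0 to 1.0)."""
    if not recs:
        return {name: 0.0 for name in checks}
    return {
        name: sum(1 for rec in recs if check(rec)) / len(recs)
        for name, check in checks.items()
    }


metrics = quality_metrics(records, CHECKS)
for name, score in metrics.items():
    print(f"{name}: {score:.0%}")
```

Tracking these pass rates over time is one simple way to turn the characteristics into performance metrics. Ease of use and availability are harder to express as record-level rules and would likely need separate measures.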

Factors Leading to Bad Data Quality

Historically, most data quality issues have resulted from poor data governance processes, tactical solutions, and weak rules governing firms’ systems and data stores. Some key root causes of poor data quality are:

Lack of standardized approach to data definitions, metadata taxonomy, and validation rules

Weak change management policy for data dictionary updates

Duplicate copies of reference data with no clear identification of golden data source

Data ownership not clearly defined between the business and IT

The same data is interpreted differently by businesses and systems

Data is not fit for purpose for analytical or reporting use

Poor change management around data governance processes

How to Ensure Good Data Quality

At a high level, strong data governance processes with sound data validations at the time of ingestion, a well-designed data model, and a metadata taxonomy are the key ingredients for producing good quality data. However, maintaining good data quality is an on-going challenge and may not receive enough attention from business and IT teams. So, establishing a formal data quality team to lead the initiative, with a focus on building key performance metrics, tools, and controls to monitor data quality, will truly pay off in the long run. The team can collaborate with data stewards and data governance teams to reinforce data quality standards and proactively provide feedback on changes. Additionally, the following investments will add further value to firms:

Build a solid understanding within business and technology teams of where to find data, what it means, and how to use it.

Establish sound change management policies for the firm’s data dictionaries, quality rules, and data models.

Identify the golden source of data and avoid keeping duplicate copies of data.

Establish data standards (e.g., standard formats for dates, allowed values, alphabets, codes, etc.) and leverage reference metadata to reduce variations in values.

Keep data validation rules and processes close to data ingestion processes as much as possible.
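The last two points can be sketched in a few lines of code. The example below is only a minimal illustration under stated assumptions: the function name `validate_at_ingestion`, the record fields, the reference list of currency codes, and the choice of a year-month-day date standard are all hypothetical, not a prescribed implementation.

```python
from datetime import date, datetime

# Assumed reference data: a single "golden" list of allowed currency codes.
CURRENCY_CODES = {"USD", "EUR", "GBP"}
# Assumed firm-wide date standard (year-month-day).
DATE_FORMAT = "%Y-%m-%d"


def validate_at_ingestion(raw: dict) -> tuple:
    """Return (clean_record, errors) for one incoming record.

    Values are normalized toward the standard where that is safe
    (trimming whitespace, upper-casing codes); anything that still
    breaks a rule is reported so the record can be rejected or
    quarantined before it reaches downstream stores.
    """
    errors = []
    clean = {}

    trade_id = str(raw.get("trade_id") or "").strip()
    if not trade_id:
        errors.append("trade_id is missing")
    clean["trade_id"] = trade_id

    # Normalize case, then check against the reference data.
    currency = str(raw.get("currency") or "").strip().upper()
    if currency not in CURRENCY_CODES:
        errors.append(f"currency {currency!r} is not in reference data")
    clean["currency"] = currency

    # Parse the date against the standard format; other layouts are rejected.
    try:
        clean["trade_date"] = datetime.strptime(
            str(raw.get("trade_date", "")), DATE_FORMAT
        ).date()
    except ValueError:
        errors.append(f"trade_date {raw.get('trade_date')!r} does not match {DATE_FORMAT}")
        clean["trade_date"] = None

    return clean, errors


good, good_errors = validate_at_ingestion(
    {"trade_id": " T1 ", "currency": "usd", "trade_date": "2015-02-27"}
)
bad, bad_errors = validate_at_ingestion(
    {"trade_id": "", "currency": "XXX", "trade_date": "27/02/2015"}
)
print(good, good_errors)
print(bad, bad_errors)
```

Running the checks at the point of ingestion means a bad value is caught once, at the source, rather than being corrected separately in every downstream copy.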

As firms and regulators rely more and more on data for analysis and decisions, the focus on data quality will continue to increase. Establishing a culture of data sensitivity and awareness is imperative for firms to maintain good data quality standards. Initiatives such as formal data quality programs, training of data SMEs and IT teams to find value in data, and the right tools to monitor data quality are key necessities for the success of data analytics and regulatory reforms, and will be essential for firms to thrive in the long run.

Vipul Parekh is a leader of Financial Services practices at Optimity Advisors.
