Sarah Maddox's Blog, page 18

February 25, 2016

Accelerating Tech Comm Career Paths – tcworld India 2016

I’m attending tcworld India 2016 in Bangalore. Alyssa Fox gave a presentation entitled “From Byways to Highways: Accelerating Tech Comm Career Paths”. These are my notes from the session. All credit goes to Alyssa, and any mistakes are my own.

Alyssa started by describing how job ladders can help technical writers in an organisation plan their career paths. A job ladder helps people understand the expectations for their job level, and how they can aim for promotion to the next level. It also helps managers clear up the confusion people feel when they compare their own performance to that of other people.

These are the areas that Alyssa’s company focuses on in their job ladder:

Technical expertise and product usability. This includes things like product knowledge and how the person applies that knowledge, tools, and user experience. Alyssa described how a junior employee’s skill may differ from that of a more senior employee, for example in the field of product knowledge. The team encourages writers to do more than write PDF documentation. She explained that this is good for the writers’ careers too. She encourages writers to take part in and conduct UX (user experience) studies on the product itself. This helps prevent the team from having to document around a bad UI, which is not a good use of writers’ time or customers’ time.

Quality. This covers content improvement (for example, no typos, good structure), product improvement, understanding customer needs, and quality of writing. Again, the writer’s contribution differs depending on their level. A junior writer may just produce good writing, while a more senior writer may offer suggestions on how to improve quality, and even drive processes for quality improvement. To move up the ladder, think about how things could be done better!

Functional expertise. These are the nuts and bolts of tech writing: putting a schedule together, process, defining a project, results. A junior person won’t be setting schedules – that’s the task of the lead writer, who’ll also coach the junior team members on how to estimate timings and create a schedule. Similarly, Alyssa has very different expectations of what a junior-level person produces compared with a senior-level person. The important thing is that they’re learning.

Communication and teamwork. Alyssa said that Bangalore traffic is one of the best examples of communication that she’s seen. :) Thinking about how we communicate (a soft skill) is a huge part of our job. It’s vital to our success as technical communicators. Things that the job ladder takes into account: communication style, communication scope (how often you communicate with people outside the tech writer team, and what type of value you provide), teamwork, interpersonal skills. Junior writers will probably talk only to members of their immediate team, whereas more senior writers speak to people in other disciplines (such as marketing). Some writers even reach beyond the company, for example by attending and speaking at conferences, or by making presentations to the team or to a wider audience.

Leadership. Alyssa emphasised that you don’t need to be a manager to be a leader. Leadership has to do with the way you carry yourself, the ideas you bring forward, and your self-motivation. Alyssa looks for initiative. Items on the ladder include planning, interviewing, team leadership, and change management.

Alyssa mentioned that getting promoted is great, but not everyone wants to be promoted. You can grow horizontally by expanding your skills, without moving up the ladder. UX is an obvious transition point for technical communicators. Another option is to become the team’s tools expert. Technical communicators can also move over to product management. It’s a different skillset, but the foundational knowledge that we gain as writers gives us a good start.

Another option for horizontal growth is to focus on editing skills, or on information architecture. Similarly, a technical writer’s project management skills can be transferred to a full project management role. Marketing is another opportunity. Technical writers can sync up with the marketing team to ensure a clear path for the user from the marketing content to the technical content.

In Alyssa’s job ladder, there are 7 levels of technical writer. From junior information developer (level 1), through lead information developer 1 (level 4), to senior information architect (level 7).

Thanks Alyssa for an energetic, information-packed presentation.

Collocations and Phrases in Technical Writing and Translation – tcworld India 2016

I’m attending tcworld India 2016 in Bangalore. Ashok Bagri gave a presentation entitled “Use of Collocations and Phrases in Technical Writing and Translation”. These are my notes from the session. All credit goes to Ashok, and any mistakes are my own.

A collocation is two or more words that go together and sound right. Collocations are governed by idiom rather than by grammar: an alternative combination may be grammatically correct yet sound wrong. Examples: “gross negligence” is good, but “bad negligence” sounds odd. “Safe driver” sounds good, but “secure driver” does not. “Hermetically sealed” is good. Ashok ran through various types of collocation, based on parts of speech.

Ashok described the idea of a collocation tool. It helps you find the collocation you need – that perfect combination of words that’s on the tip of your tongue but that you can’t quite find. Ashok demonstrated the eWrite Right tool, developed by his company.

Several researchers have worked for 24 months to build the database of collocations. The database has over 50,000 headword records, containing over 500,000 collocations. It includes terms from a number of domains (medical, legal, business, etc.). The tool also allows company-specific customisation of the collocations, and includes synonym phrases.

We also saw examples of phrases, synonyms, and similes that are useful for standardising documents and making them more easily comprehensible.

The demo showed the eWrite Right tool, first using the medical domain. For example, we searched for the word “administration”, saw a number of phrases using that word, and selected the phrase “oral administration”. We then copied it and pasted it into our document. We then searched for the word “access” in the IT context, and so on. The tool also shows you the correct use of phrases, and allows you to find synonym phrases for a particular phrase. For example, “to be a raving beauty” yields a number of phrases including “to be physically attractive”. The phrases are grouped into formal and informal.

You can add entries (collocations) that will be seen only within your organisation. You can even define terms as forbidden within your organisation, and give the preferred term.
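To make the lookup and customisation features concrete, here’s a minimal Python sketch of a collocation store. It is not the eWrite Right tool or its data model: the class and method names are hypothetical, and apart from “oral administration” and “gross negligence”, the sample entries are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class CollocationDictionary:
    """Hypothetical collocation store: (domain, headword) -> collocations."""

    # Entries available to everyone using the tool.
    shared: dict[tuple[str, str], list[str]] = field(default_factory=dict)
    # Entries visible only within one organisation.
    custom: dict[tuple[str, str], list[str]] = field(default_factory=dict)
    # Forbidden term -> preferred term, defined by the organisation.
    forbidden: dict[str, str] = field(default_factory=dict)

    def lookup(self, domain: str, headword: str) -> list[str]:
        """Return shared collocations followed by organisation-specific ones."""
        key = (domain, headword.lower())
        return self.shared.get(key, []) + self.custom.get(key, [])

    def check_term(self, term: str) -> str:
        """Return the preferred term if `term` is forbidden, else `term` itself."""
        return self.forbidden.get(term.lower(), term)


dictionary = CollocationDictionary(
    shared={("medical", "administration"): ["oral administration", "intravenous administration"]},
    custom={("medical", "administration"): ["topical administration"]},
    forbidden={"bad negligence": "gross negligence"},
)

print(dictionary.lookup("medical", "Administration"))
print(dictionary.check_term("bad negligence"))
```

Keeping organisation-specific entries in a separate mapping means they can be shown only to that organisation, while the shared database stays unchanged.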

Ashok closed by showing the prices of various versions of the tool (basic, standard, premium) and the estimated ROI (return on investment) gained from using the tool.

The Future is Intelligent Information – tcworld India 2016

I’m attending tcworld India 2016 in Bangalore. The keynote on day 2 of the conference was “The Future is Intelligent Information”, presented by Michael Fritz. These are my notes from the session. All credit goes to Michael, and any mistakes are my own.

Michael’s presentation was partly a recap of things said at the conference yesterday. It also discussed digitalisation and its consequences for tech comm in the future. He also introduced a program started last year by tekom.

There are many buzzwords around. “Smart” is one of those. Many things are smart these days: smart cars, smart homes, smart watches, smart fridges. These smart things have the capability to store and process data. They become parts of smart services. For example, instead of talking about a car, we talk about mobility. A mobility app may send you a car, or tell you to walk to your destination as you need more exercise. eHealth and smart shopping services are other examples.

What else is digitised? Production, for one. Smart components can join up and talk to each other through machine-to-machine communication. Products configure themselves automatically, forming intelligent products. Such systems are sometimes called cyber-physical systems. This type of production system may not be focused on mass production, but rather on producing just enough for the people in the neighbourhood.

Another buzzword is “ubiquitous data”. All things are on the Web. The way in which we use data has changed. We used to use relational databases, and queried them, producing reports showing aggregated data, at specific time frames. Today we just search for data when we need it. Data is everywhere and can be used everywhere.

The economy is changing as a result of digitisation. Michael described Uber as an example. Users can rate drivers, and if a driver is consistently down-rated he or she won’t get any more fares. Taxis are suffering as a result of ride-sharing services like Uber.

Michael mentioned a few words of caution, such as cyber security (data is valuable), and the dangers of large companies dominating services by their digital platforms.

What are the consequences for technical communication?

Smart products: Usage information should be embedded in the product so users can get it easily, or the information should be easily accessible on the Internet. Products (here Michael looked at his bottle of water) should have connectors, such as codes that you can scan to get the information you want.

Smart services: Usage information won’t be stand-alone. Instead it’ll be part of an information chain. For example, when using Uber, the information is part of the whole process of using the service. When your car has a problem, the in-car screen will tell you what the problem is and where to find the nearest service station. It will even communicate the problem and your arrival to the service station.

Smart production: Usage information must be standardised so that it can be easily merged and delivered to the user. Remember, this is the scenario where components have automatically assembled themselves. There’s probably no printer around, so the information must be available in some other way.

Ubiquitous data: Usage information must be accessible from everywhere and from all the different device types that people are using.

Technical communicators should be ready for change. Michael mentioned the enticing prospect of an Uber for technical communicators. :) And we must be aware of cyber security, and make sure we take care of the security of the usage information itself. Be careful not to send malware to our customers!

Michael discussed some challenges we face. We need to know the reality of today: we’re not yet in the world of smart things and smart data. Paper and PDF still prevail.

Michael described a tekom initiative promoting a paradigm change from classical publishing to intelligent information delivery. The focus is on the (electronic) delivery of information. We shouldn’t focus so much on the creation of information, but focus first on delivery. Don’t think of documentation or even of topics any more. Think of usage situations, use cases, the customer journey. This will lead to intelligent information, which is the right information at the right time for the right person.

There’s a lot more to this concept of intelligent information, and content creation based on intelligent information: adding metadata, analysing use cases, the types of information products we produce (paper, mobile, augmented reality, embedded display, online, and so on).
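To illustrate the shift from documents to usage situations, here’s a minimal Python sketch of metadata-driven delivery. It is not tekom’s model or any standard’s schema; the `Topic` fields (`use_case`, `channels`) and the sample topics are hypothetical. The idea is simply that each piece of content carries metadata, and delivery selects content by matching that metadata against the user’s situation and device.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Topic:
    """A piece of content plus hypothetical delivery metadata."""

    title: str
    use_case: str              # the usage situation the topic supports
    channels: frozenset[str]   # delivery channels the topic is suited to


def deliver(topics: list[Topic], use_case: str, channel: str) -> list[str]:
    """Return titles of topics matching the user's situation and device."""
    return [
        topic.title
        for topic in topics
        if topic.use_case == use_case and channel in topic.channels
    ]


# Hypothetical content repository.
TOPICS = [
    Topic("Replacing the filter", "maintenance", frozenset({"mobile", "embedded display"})),
    Topic("Filter specifications", "maintenance", frozenset({"online", "paper"})),
    Topic("First-time setup", "installation", frozenset({"mobile", "online"})),
]

# A user doing maintenance, looking at a phone.
print(deliver(TOPICS, "maintenance", "mobile"))
```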

Tekom has kicked off the intelligent information initiative, and is running various working groups to move it forward.

Thanks Michael, this was an informative and entertaining session. Tekom’s intelligent information is an exciting initiative indeed.

The future *is* technical communication – tcworld India 2016

This week I’m attending tcworld India 2016 in Bangalore. I was honoured to be invited to present the keynote address on day one. People seem to have enjoyed it. This post is a short summary of the session.

The presentation is called The future *is* technical communication. It’s a look at the fast-moving world of technology, the ways people interact with technology, and in particular how technology affects the way we communicate. I’m proposing that communication via technology is core to our experience of the world. We, as technical communicators, are in a very good position to grab the opportunities offered by this technology-rich world.

The slides are available on SlideShare: The future *is* technical communication.

An overview of the topics covered in the presentation

Technology is fast-moving and confusing.

It’s hard to keep up – blink, and you’ve missed something.

People suffer from cognitive overhead.

Sometimes they’re even bamboozled by technology.

But

Technology is not a lost cause.

People love technology.

People have a relationship with their tech.

People use technology for communication in weird and wonderful ways.

The way people absorb information has changed. It’s now fluid and asynchronous.

Immersive technology offers enhanced, full-on experiences.

In our weird and wonderful world, even inanimate things communicate with each other. We call this the Internet of Things, or IoT.

Some people are doing things that seem way out there. Until they become the norm.

I proposed a technical communicator’s mission statement, based on the new ways people are communicating and experiencing information.

And we looked at some things we can do right now to grab the opportunities this technology-rich world is making for us as tech communicators.

One of the slides from the presentation:

Predicting User Questions – tcworld India 2016

I’m attending tcworld India 2016 in Bangalore. Mayur Bhandarkar gave a presentation entitled “Predicting User Questions to Build an Information Repository”. These are my notes from the session. All credit goes to Mayur, and any mistakes are my own.

I’m especially interested in this session because it advocates the use of FAQs, a document type that is often criticised in the technical writing community. One of the items in the presentation overview was “What the FAQ”. Funny and clever!

Mayur started by saying that presenting information in a structured way is a failure. Rather, queries are the path to success.

What are the advantages that FAQs offer? FAQs give the reader the impression that the document is going to answer a problem that the reader has. The content makes you feel that you’re having an interaction with the system. And an FAQ provides a complete solution for a particular problem. Another advantage is that FAQs seem more informal.

Look at LinkedIn for example: they provide documentation in the form of FAQs.

How can you predict users’ questions? Mayur gave an example of predicting questions for users of a dishwasher, and then for a user interface.

How to go about it:

List the features in the user interface.

Decide the types of questions: why, what, and how.

Build the question repository.

Examples:

Why should I use the x feature? (concept)

What actions can be performed using the x feature? (reference)

How do I use the x feature? (task)

Mayur related the above questions to the DITA types of concept, reference and task. Using the above examples, you can generate questions for each feature.
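The why/what/how approach lends itself to a simple generator. Here’s a minimal Python sketch, assuming a plain list of feature names as input; the question wording comes from the examples above, while the function name `build_question_repository` and the sample features are hypothetical.

```python
# Question templates keyed by DITA topic type, following the
# why / what / how pattern described above.
QUESTION_TEMPLATES = {
    "concept": "Why should I use the {feature} feature?",
    "reference": "What actions can be performed using the {feature} feature?",
    "task": "How do I use the {feature} feature?",
}


def build_question_repository(features: list[str]) -> list[dict[str, str]]:
    """Generate one question per DITA topic type for every UI feature."""
    repository = []
    for feature in features:
        for topic_type, template in QUESTION_TEMPLATES.items():
            repository.append(
                {
                    "feature": feature,
                    "topic_type": topic_type,
                    "question": template.format(feature=feature),
                }
            )
    return repository


# Step 1: list the features in the user interface (hypothetical examples).
features = ["export", "search"]
for entry in build_question_repository(features):
    print(f"[{entry['topic_type']}] {entry['question']}")
```

The generated list is only a starting point: each question still needs a written answer, and some generated questions may not make sense for every feature.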

The next step is to organise the information so that it is useful to the user. Mayur showed us a system that prompted the user for information, including the language in which they want the information and the type of information they want, and then showed a list of relevant questions. The user can select a question to see the answer. Mayur also discussed online sites that organise FAQs by popularity, by most recently added, or by modified features.
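Organising FAQs by popularity or recency needs only a little metadata per question. Here’s a minimal Python sketch, assuming each FAQ records a view count and a publication date; both fields and the sample data are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Faq:
    question: str
    views: int    # hypothetical popularity measure, such as page views
    added: date   # date the FAQ was first published


def most_popular(faqs: list[Faq], limit: int = 5) -> list[Faq]:
    """Return up to `limit` FAQs, highest view count first."""
    return sorted(faqs, key=lambda faq: faq.views, reverse=True)[:limit]


def most_recent(faqs: list[Faq], limit: int = 5) -> list[Faq]:
    """Return up to `limit` FAQs, newest first."""
    return sorted(faqs, key=lambda faq: faq.added, reverse=True)[:limit]


faqs = [
    Faq("How do I install the product?", 120, date(2015, 11, 2)),
    Faq("Why should I use the export feature?", 45, date(2016, 1, 18)),
    Faq("What actions can be performed using the search feature?", 80, date(2015, 12, 7)),
]
print([faq.question for faq in most_popular(faqs, limit=2)])
print(most_recent(faqs, limit=1)[0].question)
```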

Users don’t like reading long procedures. When creating the FAQs, if a procedure ends up being very long, make the call whether to change the procedure into a video, simulation, or other easily consumable media. You could split the topic into smaller topics, but Mayur says that the users prefer media. An example is installation guides.

A trend that the team is following is the use of long-tail keywords for SEO (search engine optimisation). A “keyword” can actually represent the whole task that the user is trying to perform. In the FAQ format, each FAQ is specifically related to such a keyword.

Someone from the audience asked about the problem of maintenance of videos. Mayur confirmed that these are maintenance heavy, and that the team produces videos based primarily on user demand, as represented by the support sites.

Another audience question was about basing your documentation on the UI, which is something technical writers don’t normally recommend. Mayur replied that this approach doesn’t work for troubleshooting or command-line applications, but only for simple UI-based documentation. It’s a solution for showing how to use the product.

An interesting point that Mayur mentioned: his team found that their PDF documents were being crawled more thoroughly by search engines than their online HTML docs with the same content.

Thanks Mayur, this was an interesting perspective on FAQs.

February 24, 2016

Human Auditory Processing and Speech Recognition – tcworld India 2016

I’m attending tcworld India 2016 in Bangalore. Pavithra Garre gave a presentation entitled “Human Auditory Processing and Speech Recognition—Potential Latencies and Benefits for Documentation”. These are my notes from the session. All credit goes to Pavithra, and any mistakes are my own.

Pavithra Garre is an engineer in design technology at Samsung Electronics in South Korea. She started by showing us a video clip about communication as an innate human ability, and about the vision of interacting with computers via speech recognition, and the evolution of speech recognition technology.

Pavithra’s presentation was very interactive. She asked questions and chatted to the audience throughout. The presentation covered the layers of speech recognition architecture, the modes of speech recognition, speech identifiers and tagging, CMS interpretation and custom delivery.

Pavithra described a three-layer architecture:

Speech recognition: There are different modes of speech recognition: converting digital audio to simpler acoustic forms; matching units of speech; a complex lexical decoding system based on pattern matching; applying grammar, such as in predictive typing; and phoneme identification. There are challenges in speech recognition technology, such as background noise reduction, the size of the data gathered and data compression to reduce this size, and the problem of energy consumption.

Tagging the different elements of speech to present to the CMS: The software needs to identify what the person is talking about, and tag each element appropriately. Once the speech is tagged, it becomes data. Examples of tags may be a form of XML, or VTML, or a more complex tagging format like ID3 or ID3V2Easy.

Documentation in a database: Content is information plus data. The indexed tag and associated content are combined to form or retrieve a document, in what Pavithra calls “CMS interpretation”.
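The tag-then-retrieve flow can be sketched in a few lines of Python. This is a toy illustration, not the architecture Pavithra presented: recognition is assumed to have already produced a text transcript, a fixed lexicon stands in for real tagging, and the tag names and content store are hypothetical. It uses the standard library’s `xml.etree.ElementTree` to represent the tagged speech as XML.

```python
import xml.etree.ElementTree as ET

# Hypothetical mapping from recognised words to tags. A real system would
# derive tags from language models, not from a fixed lookup table.
TAG_LEXICON = {
    "reset": "action",
    "printer": "product",
}

# Hypothetical content store: (tag, value) -> documentation topic.
CONTENT_STORE = {
    ("action", "reset"): "Topic: How to reset the device",
    ("product", "printer"): "Topic: Printer overview",
}


def tag_utterance(transcript: str) -> ET.Element:
    """Wrap each recognised word in an XML element named after its tag."""
    root = ET.Element("utterance")
    for word in transcript.lower().split():
        tag = TAG_LEXICON.get(word, "word")  # untagged words get a generic tag
        ET.SubElement(root, tag).text = word
    return root


def retrieve_content(tagged: ET.Element) -> list[str]:
    """Combine the indexed tags with stored content to assemble a result."""
    results = []
    for element in tagged:
        topic = CONTENT_STORE.get((element.tag, element.text))
        if topic:
            results.append(topic)
    return results


tagged = tag_utterance("reset my printer")
print(ET.tostring(tagged, encoding="unicode"))
print(retrieve_content(tagged))
```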

Some well known examples of speech recognition software:

Siri by Apple

Genie by Microsoft

Google Speech

Dragon

and more

Where can we use this technology and the voice bank containing the derived content?

Marketing agility

Big data and analytics

Resolving disputes about customer interactions in a help desk (this suggestion came from the audience)

Better performance

Pavithra also described things you need to take into account, such as data volume and data migration.

There was a lively and engaged discussion at the close of the presentation. Thanks Pavithra for an interesting presentation!

Technical writing for big data applications – tcworld India 2016

I’m attending tcworld India 2016 in Bangalore. Surag Ramachandran presented a session called “Technical Writing for Big Data Applications”. These are my notes from the session. All credit goes to Surag, and any mistakes are my own.

Surag Ramachandran works in a financial services business unit, working with data analytics in enterprise big data applications. He presented a couple of use cases from the financial industry. Then he described how the applications evolved, and how that led to a shift in the technical writing processes.

Surag said that the financial industry has many use cases, including risk reduction, financial crime detection, etc. When it comes to big data, most of the use cases are in the marketing area, analysing customer behaviour.

The new enterprise IT model is a convergence of SMAC – social media, mobile, analytics and cloud. These all contribute to big data. Big data consists of the structured and unstructured data coming from these sources.

The financial services industry is highly data driven. Analytics is an evolving field. Predictive modelling tools analyse incoming data and give the bank actionable intelligence. Realtime crime analysis can pick up things like insider trading.

Looking at the core banking systems, you’ll find common platforms: frameworks that contain common modules to support big data. For example, the IT team develops an application for the banking sector, then adapts it for the insurance sector. In other words, the strategy is software reuse.

Thinking about technical documentation, there is a clear case for content reuse. Write installation guides and user guides that describe the common modules. Create the content as DITA files. Store the content in a content management system, and reuse it in the documentation for each individual platform. Within the applications themselves, there’s opportunity for content reuse too.
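Here’s a minimal Python sketch of that reuse pattern, assuming shared topics are written once and each platform’s guide is an ordered list of topic IDs, loosely analogous to a DITA map. The topic IDs, titles, and the `build_guide` function are hypothetical; a real DITA toolchain would use maps and content references rather than Python dictionaries.

```python
# Shared topics describing the common modules (written once).
COMMON_MODULES = {
    "install-core": "Installing the core platform",
    "configure-reports": "Configuring reports",
}

# Platform-specific topics (hypothetical examples).
PLATFORM_TOPICS = {
    "banking": {"configure-accounts": "Configuring account types"},
    "insurance": {"configure-policies": "Configuring policy types"},
}

# Each guide is an ordered list of topic IDs, similar in spirit to a map.
GUIDE_MAPS = {
    "banking": ["install-core", "configure-accounts", "configure-reports"],
    "insurance": ["install-core", "configure-policies", "configure-reports"],
}


def build_guide(platform: str) -> list[str]:
    """Resolve each topic ID in the platform's map to its shared or local topic."""
    available = {**COMMON_MODULES, **PLATFORM_TOPICS[platform]}
    return [available[topic_id] for topic_id in GUIDE_MAPS[platform]]


for platform in GUIDE_MAPS:
    print(platform, build_guide(platform))
```

A fix to a shared topic, such as the installation steps for the core platform, then flows into every platform’s guide on the next build.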

A lively question-and-answer session followed Surag’s talk, with many questions from the audience about cross-industry platform reuse, training for people interested in getting into the industry, challenges in documenting features in the big data industry, and other aspects of the talk.

February 8, 2016

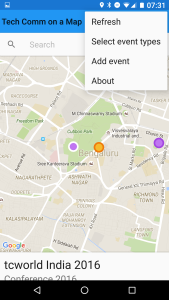

New Android app for the technical writing community

I’m super excited. I’ve just launched my first app on the Google Play Store: Tech Comm on a Map. It’s for technical communicators, to help us see what’s happening in the tech comm world.

See what’s happening in tech comm around you. If you know of something that’s missing from the map, you can add it yourself using the “Add event” option: a conference, meetup, business, or other item of interest to technical communicators.

As well as the Android app, Tech Comm on a Map is available on the Web too. The two apps use the same data source. The Web-based version has been around a while. The Android app is new and shiny: I published it to the Google Play Store this weekend.

I’d love it if you’d try it out. It’s even better if you have some events or other items of interest to share with the tech writing community, by adding them to the map. All additions are moderated before they appear in the app.

Contributors to the project

The Android app is an open source project on GitHub. To kick off the project, a group of us got together during a hackathon and built the basics of the app.

Disclaimer

Although I work at Google, this app is not a Google product.

January 23, 2016

Git-based technical writing workflow – notes from a TC Camp session

This week I attended TC Camp 2016 in Santa Clara. In the morning there were a few workshops, one of which was titled “Git-based Technical Communication Workflows”. A team from GitHub walked us through a workflow using Git and GitHub for technical documentation. These are my notes from the session. Any inaccuracy is my mistake, not that of the presenters.

The session covered primarily workflow on GitHub.com, and also touched on using Git on the command line. There was a good variety of Git skill levels amongst the attendees, from people who had never used Git or GitHub, to people who were comfortable using Git on the command line.

The presenters were Jamie Strusz and Jenn Leaver, both from GitHub. Stefan Stölzle was there as technical advisor, and answered plenty of questions from attendees.

Jamie started with an overview of the traditional GitHub workflow. Then Jenn, a technical writer at GitHub, explained her workflow for technical writing in particular. Here’s the repository that they created during the session, to illustrate the workflow: TCCamp demo repo on GitHub.

Some things I gleaned about a technical writing workflow using Git and GitHub:

When a fix or update is required to the documentation, the technical writers start by raising an issue in the help docs repo.

Next, the technical writer creates a branch.

Jenn’s team occasionally uses “mega branches”, which several technical writers share while working on a feature. But usually, Jenn just works in her own branch.

The term “mega branch” isn’t generally known. I suggested that it’d be great to have some information in the GitHub docs about best practices for managing such a branch. Jenn liked that idea.

Jenn’s team uses the Atom text editor.

The source format for the documentation is Markdown.

A useful tool for converting documentation from HTML to Markdown: pandoc.

A question came from the floor about AsciiDoc. A few people had heard of it, and Jenn’s team is talking about it too.

They make all the changes in the branch, and make commits often.

They commit changes locally, then push to the web (that is, sync to the repo on GitHub). Then they make their pull request on the web.

Jenn likes to make pull requests (PRs) often, so that she can get feedback quickly. Sometimes she’ll have a PR with 200 changes in it.

More about mega branches:

Often the mega branch is for development of a doc change over a longer period of time.

Create a mega branch, then create branches off that mega branch.

Then you create the pull requests off your branch, and do the reviews there.

Then eventually push to the mega branch.

Jenn does not ever work off the master branch. The team of presenters recommend against working off master, because it’s more difficult to back out changes. GitHub views the master branch as the deployable state – so, it’s production. Therefore, always make changes and do collaboration on a branch. Then merge back into the master branch when ready for pushing to production.

A tip: sync with the main repo on GitHub often. In particular, before starting a new branch. Otherwise you’ll have problems later when merging your changes back.

Branches can be very small (just fixing a typo, for example) or very large (for a new feature).

It’s a good idea to be very descriptive with your commit messages, so that you can figure out which commit to roll back if necessary.

For version control within the files, Jenn’s team uses Liquid syntax. They also use Liquid for other conditional publishing, such as selecting enterprise docs only.

Git LFS (Large File Storage) is available for uploading large files such as videos, Adobe files, etc. For some of those files, depending on file type, LFS can also help you see the difference between versions. LFS also helps ensure that the large files don’t clutter up your repo.

What about conflicts, with multiple people working on the same doc? There are several ways of handling such merge conflicts. Some people use diff tools. Others rely on the conflict markers that Git adds to the files: open the file in an editor, assess the conflicts, and decide which change to accept.
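For those who prefer to inspect conflicts programmatically, here’s a minimal Python sketch that finds Git’s conflict markers in a file’s text and lists each conflicting hunk. It assumes Git’s default marker style (`<<<<<<<`, `=======`, `>>>>>>>`) and does not handle the `diff3` style, which adds a `|||||||` section. The function names are hypothetical. In practice you’d still review each hunk and decide which change to accept.

```python
import re

# Matches one conflict hunk in Git's default marker style:
#   <<<<<<< HEAD
#   our version
#   =======
#   their version
#   >>>>>>> branch-name
CONFLICT = re.compile(
    r"^<<<<<<< [^\n]*\n(.*?)^=======\n(.*?)^>>>>>>> [^\n]*(?:\n|\Z)",
    re.DOTALL | re.MULTILINE,
)


def find_conflicts(text: str) -> list[tuple[str, str]]:
    """Return (ours, theirs) pairs for every conflict hunk in the text."""
    return [(m.group(1), m.group(2)) for m in CONFLICT.finditer(text)]


def resolve_conflicts(text: str, keep: str) -> str:
    """Replace every hunk with one side: keep='ours' or keep='theirs'."""
    if keep not in ("ours", "theirs"):
        raise ValueError("keep must be 'ours' or 'theirs'")
    group = 1 if keep == "ours" else 2
    return CONFLICT.sub(lambda m: m.group(group), text)


doc = (
    "Intro\n"
    "<<<<<<< HEAD\n"
    "Click **Save**.\n"
    "=======\n"
    "Select **Save**.\n"
    ">>>>>>> jenn-edits\n"
    "Outro\n"
)
print(find_conflicts(doc))
print(resolve_conflicts(doc, keep="theirs"))
```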

Merge conflicts are reasonably rare. They usually happen if someone forgets to sync (“pull”) before starting a branch. Another time when it may happen is if you start working on something, and then the work gets put on hold for a while. When you start up again on that project, you may have conflicts.

What to put in a README file on GitHub: A general overview of what’s in the repo, and any vital information such as contributor guidelines.

Labels are useful for things like indicating status, such as “ready for review” or “in progress”, or something to indicate the feature under development.

The team uses the GitHub issue tracker as a primary communication tool. Even for things like noting when a team member is out of office, or for ordering office items. These issues go into the relevant repo. For example, at GitHub the team orders office items by creating issues in a “Gear” repo (which isn’t available for public viewing).

The commands that Jenn uses most often in her technical writing workflow are: git branch, git checkout, git status, and git push.

All reviews take place in a pull request. The team opens the conversation with a comment. They @mention people, such as the subject matter experts, to bring them into the review. Before pushing the change to master, someone has to approve it by adding a squirrel emoji. :)

The team uses emojis all over the place in reviews! They use them in place of words.

Jamie created a repository which Jenn used to walk through each stage of the workflow:

TCCamp demo repo on GitHub.

An issue within the repo’s issue tracker: WIP – Jenn’s doc.

A commit.

A diff shows the changes to a file or files.

A pull request. People who are watching the repository will get a notification of the pull request. To request a review by specific colleagues, use an @mention in the comments.

If you want someone to focus on a specific area, give them the URL of the relevant commit within the pull request.

You can leave a comment on a specific line within the code, as well as comments on the pull request as a whole.

Titbits:

GitHub uses an icon of a squirrel (an emoji, :squirrel:) to indicate when reviewers are happy for a change to be shipped. In some forms the squirrel has a name: Heidi. The presenters didn’t know why GitHub uses a squirrel. Does anyone know?

We were given a limited-edition GitHub Technical Writer Octocat sticker!

January 22, 2016

Working with an engineering team

Earlier this week I spoke at a Write the Docs meetup in San Francisco. The topic was “Working with Engineers”. Kael Oisinson, a support engineer at Atlassian, gave a talk too. It was great meeting him and all the Write the Docs SF folks.

This was my first ever Write the Docs meetup. What a warm, enthusiastic group of people! There were 50 to 60 attendees (see the meetup description and attendee list). Most were technical writers, with a scattering of software engineers and support engineers.

Kael gave an engaging speech about the life of a support engineer who discovers the value of creating documentation as a way of scaling access to information. It was really good to hear a point of view that’s related to, but not exactly the same as, a technical writer’s. We should do that more often.

My presentation is available on SlideShare: Working with an Engineering Team. Here’s an outline of what we discussed:

Sit with the team

Grok teamwork and audience

Play with the team

Adopt the team’s methodologies

Get to know the tools

Gather and share information

The group was lively and fun, with plenty of interaction and questions. Thanks to Tom Johnson, Laura Stewart, and the Write the Docs SF organisers, for a great evening.