Jeremy Keith's Blog, page 129

March 7, 2013

Told you so

One of the recurring themes at the Responsive Day Out was how much of a sea change responsive design is. More than once, it was compared to the change we went through going from table layouts to CSS …but on a much bigger scale.

Mark made the point that designing in a liquid way, rather than using media queries, is the real challenge for most people. I think he’s right. I think there’s an over-emphasis on media queries and breakpoints when we talk about responsive design. Frankly, media queries are, for me, the least interesting aspect. And yet, I often hear “media queries” and “responsive design” used interchangeably, as if they were synonyms.

Embracing the fluidity of the medium: that’s the really important bit. I agree with Mark’s assessment that the reason why designers and developers are latching on to media queries and breakpoints is a desire to return to designing for fixed canvases:

What started out as a method to optimise your designs for various screen widths has turned, ever so slowly, into multiple canvas design.

If you’re used to designing fixed-width layouts, it’s going to be really, really hard to get your head around designing and building in a fluid way …at first. In his talk, Elliot made the point that it will get easier once you get the hang of it:

Once you overcome that initial struggle of adapting to a new process, designing and building responsive sites needn’t take any longer, or cost any more money. The real obstacle is designers and developers being set in their ways. I know this because I was one of those people, and to those of you who’ve now fully embraced RWD, you may well be nodding in agreement: we all struggled with it to begin with, just like we did when we moved from table-based layout to CSS.

This is something I’ve been repeating again and again: we’re the ones who imposed the fixed-width constraint onto the medium. If we had listened to John Allsopp and embraced the web for the inherently fluid medium it is, we wouldn’t be having such a hard time getting our heads around responsive design.

But I feel I should clarify something. I’ve been saying “we” have been building fixed-width sites. That isn’t strictly true. I’ve never built a fixed-width website in my life.

Some people find this literally unbelievable. On the most recent Happy Mondays podcast, Sarah said:

I doubt anyone can hold their hands up and say they’ve exclusively worked in fluid layouts since we moved from tables.

Well, my hand is up. And actually, I was working with fluid layouts even when we were still using tables for layout: you can apply percentages to tables too.

Throughout my career, even if the final site was going to be fixed width, I’d still build it in a fluid way, using percentages for widths. At the very end, I’d slap on one CSS declaration on the body to fix the width to whatever size was fashionable at the time: 760px, 960px, whatever …that declaration could always be commented out later if the client saw the light.

Actually, I remember losing work back when I was a freelancer because I was so adamant that a site should be fluid rather than fixed. I was quite opinionated and stubborn on that point.

A search through the archives of my journal attests to that:

2003: The long debate

2004: Regurgitating the chestnut

2004: Fixed vs. Liquid… yet again

2005: Fixed Fashion

2005: Transparent liquid

2005: A List Too Far Apart

2006: Fixtorati

2007: New Year’s Resolution

2009: Zoomfusion

Way back in 2003, I wrote:

It seems to me that, all too often, designers make the decision to go with a fixed width design because it is the easier path to tread. I don’t deny that liquid design can be hard. To make a site that scales equally well to very wide as well as very narrow resolutions is quite a challenge.

In 2004, I wrote:

Cast off your fixed-width layouts; you have nothing to lose but your WYSIWYG mentality!

I just wouldn’t let it go. I said:

So maybe I should be making more noise. I could become the web standards equivalent of those loonies with the sandwich boards, declaiming loudly that the end is nigh.

At my very first South by Southwest in 2005, in a hotel room at 5am, when I should’ve been partying, I was explaining to Keith why liquid layouts were the way to go.

That’s just sad.

So you’ll forgive me if I feel a certain sense of vindication now that everyone is finally doing what I’ve been banging on about for years.

I know that it’s very unbecoming of me to gloat. But if you would indulge me for a moment…

I TOLD YOU! YOU DIDN’T LISTEN BUT I TOLD YOU! LIQUID LAYOUTS, I SAID. BUT, OH NO, YOU INSISTED ON CLINGING TO YOUR FIXED WIDTH WAYS LIKE A BUNCH OF BLOODY SHEEP. WELL, YOU’RE LISTENING NOW, AREN’T YOU? HAH!

Ahem.

I’m sorry. That was very undignified. It’s just that, after TEN BLOODY YEARS, I just had to let it out. It’s not often I get to do that.

Now, does anyone want to revisit the discussion about having comments on blogs?

Tagged with

liquid

fluid

responsive

design

development

March 5, 2013

Tools of the trade

I remember when Rebecca wrote about A Baseline for Front-End Developers:

I think we’re seeing the emphasis shift from valuing trivia to valuing tools.

I know that Paul places a similar emphasis on the value of front-end development tools. Personally, I’ve always been lax with keeping up to date with start-of-the-art tools. I’ve been working on the web long enough to see yesterday’s cutting-edge tools stagnate or fall out of favour.

Still, I should really do more to keep up. There are a few design tools cropping up that I should really investigate.

LayerVault and Pixelapse both offer git-style version control for Photoshop, Fireworks, and Illustrator files. Sounds useful.

Then there are the tools that I think could be really useful for making HTML prototypes: Easel is browser-based, while Hammer and Mixture are OS X apps. They’ve all got enough time-saving shortcuts to make them worth investigating further. I wouldn’t use them for production code, but like I said, handy for prototyping.

Tagged with

tools

programmes

osx

design

apps

layervault

pixelapse

hammer

mixture

easel

March 4, 2013

Responsive audio out

Thanks to Drew’s tireless work over the weekend, all the audio from the Responsive Day Out is available for your aural pleasure.

I’ve added them on Huffduffer. Subscribe to the RSS feed in the podcast-listening application of your choice. Or feel free to cherry-pick from the day’s snappy, quick-fire talks:

Sarah Parmenter: The Responsive Workflow

David Bushell: Responsive Navigation

Tom Maslen: Cutting the Mustard

Jeremy chatting with Sarah, David, and Tom

Richard Rutter: Responsive Web Fonts

Josh Emerson: Asset Fonts

Laura Kalbag: Design Systems

Elliot Jay Stocks: RWD — The War Has Not Yet Been Won

Jeremy chatting with Richard, Josh, Laura, and Elliot

Anna Debenham: Playing with Game Console Browsers

Andy Hume: The Anatomy of a Responsive Page Load

Bruce Lawson: What’��s Next in StandardsLand

Jeremy chatting with Anna, Andy, and Bruce

Owen Gregory: Antiphonal Geometry

Paul Lloyd: The Edge of the Web

Mark Boulton: In Between

Jeremy chatting with Owen, Paul, and Mark

Share and enjoy!

Tagged with

responsiveconf

responsive

conference

audio

huffduffer

podcast

March 2, 2013

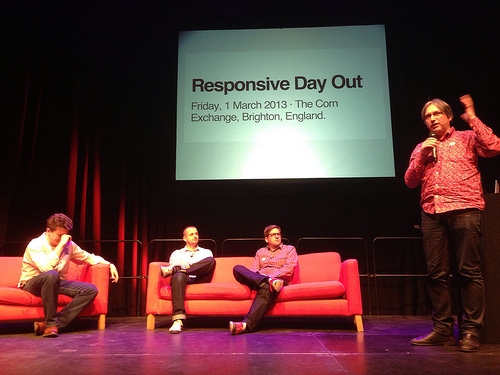

Oh, what a Responsive Day Out that was!

When I announced the Responsive Day Out just three months ago, I was at pains to point out that it wouldn’t be a typical Clearleft event:

It’s a kind of conference, I guess, but I think of it as more like a gathering of like-minded people getting together to share what they’ve learned, show some examples, swap techniques, and discuss problems. And all of it will be related to responsive web design.

Well, it all went according to plan. In fact, it surpassed all expectations. It was really, really, really good, thanks to the unstoppable quick-fire knowledge bombs being dropped by each and every amazing speaker.

In the run-up to the event, I kept telling the speakers not to prepare too much; after all, these would just be quick 20 minute talks, and they were all donating their time and expertise …but clearly they didn’t listen to me. Everyone brought their A-game.

At the excellent after-party, courtesy of the kind people of Gridset, lots of people said how much they liked the format. It’s one I shamelessly ripped off from Honor’s Improving Reality event—a batch of three quick, focused talks back-to-back, followed by a joint discussion with all three speakers, and some questions from the audience. I was playing host for the day, and I tried my best to keep things lighthearted and irreverent. From the comments I heard, I think I succeeded in bringing the tone down.

Despite the barebones, stripped-down nature of the event, there was good coffee on hand throughout, thanks to A Book Apart and Shopify.

There will be audio, thanks to Drew. There will be video, thanks to Besquare and Mailchimp.

In the meantime, Marc has posted a bunch of pictures on Flickr and Orde Saunders liveblogged the whole day.

If you came along, thank you. I hope you had a good time and enjoyed a day out at the seaside. Special thanks to the people who came from afar afield as Italy, Spain, and Belgium. I really appreciate it.

I really enjoyed myself, and I was relieved that the somewhat unorthodox gathering worked so well. But really, yesterday was a smash hit because of the speakers. Sarah, David, Tom, Richard, Josh, Laura, Elliot, Anna, Bruce, Andy, Owen, Paul, and Mark …you were all magnificent. Thank you, thank you, thank you!

February 26, 2013

Responsive day soon

This is shaping up to be a fun week. I’m off to Altitude down the coast in Portsmouth tomorrow. If you’re going to be there, come and say hello. Then just two days after that it’ll be the Responsive Day Out here in Brighton. Squee!

If you’re coming to Brighton the day before the conference, I recommend heading along to Thursday’s Async event on building HTML5 games.

I won’t be able to make it, alas—I’ll be whisking the speakers off to an undisclosed location for a nice evening out.

I have a favour to ask of you if you’re coming along to the Responsive Day Out on Friday. If you have any web-related books that you no longer need, please bring them along with you. I want to support Scrunchup’s initiative to distribute materials to schools.

I’ll also be bringing some books along, thanks to sponsors A Book Apart. If you ask a question during the discussion portions of the day, you can claim a book: either Ethan’s, or Luke’s, or Karen’s.

If you’re coming along to the Responsive Day Out, please get there nice and early for registration. Doors open at 9am and everything kicks off at 10am. There will be coffee. Good coffee.

Here’s the plan. It may change…

9:00 – 10:00Registration

10:00 – 10:20Sarah Parmenter

10:20 – 10:40David Bushell

10:40 – 11:00Tom Maslen

11:00 – 11:15Chat with Sarah, David, and Tom

11:15 – 11:45Break

11:45 – 12:05Richard Rutter and Josh Emerson

12:05 – 12:25Laura Kalbag

12:25 – 12:45Elliot Jay Stocks

12:45 – 13:00Chat with Richard, Josh, Laura, and Elliot

13:00 – 14:30Lunch break

14:30 – 14:50Anna Debenham

14:50 – 15:10Andy Hume

15:10 – 15:30Bruce Lawson

15:30 – 15:45Chat with Anna, Andy, and Bruce

15:45 – 16:15Break

16:15 – 16:35Owen Gregory

16:35 – 16:55Paul Lloyd

16:55 – 17:15Mark Boulton

17:15 – 17:30Chat with Owen, Paul, and Mark

February 22, 2013

Jets dream

I’m back home after a bit of a whirlwind visit to the US for An Event Apart in Atlanta (which was, as always, superb). I’m currently battling east-west jet lag. My usual technique is exactly what Charles Stross describes:

Simply put: go to bed immediately but set an alarm to wake you after no more than 3 hours. Then get up, and stay up, until 11pm. That’s around 3-5 hours. During this time, do nothing more intellectually challenging than running a hot bath. You haven’t caught up with your sleep deficit, you’ve just pushed it back a bit: you are as cognitively impaired as if you are medium-drunk. Now is a good time — if you have the energy — to load your dirty clothes into the washing machine, have a bath, watch something mindless on TV, and catch up on web comics. Don’t worry: you won’t remember anything tomorrow. Just refrain from answering urgent business email, driving, assembling delicate instruments, or discussing important matters — if you do any of these things, odds are high that you’ll get them horribly wrong due to the impairment caused by cumulative sleep deprivation.

He goes on to wish for the invention of teleportation (and to describe a jet-lag inspired RPG).

There’s another situation where we have to deal with sitting in one place through a long uncomfortable experience: dental surgery. In that situation, we rely on medication to get us through. A little bit of nitrous oxide and the whole thing is literally over before you know it.

Why don’t we do the same thing for transatlantic air travel? The equipment is already in place—those oxygen masks above every chair could easily be repurposed to pump out laughing gas.

If this is a stupid idea, you’ll have to forgive me: I blame the jet lag.

February 18, 2013

Designing for Touch by Josh Clark

Josh the Touchmaster is here at An Event Apart Atlanta to tell us about Designing for Touch.

Science! Science and web design. As Scott said, a lot of what we’re doing now is checking the nuances of things we’ve been doing all along. We’re testing our assumptions.

We had web standards. Then we had responsive design. Now there’s a new revelation: there is no one true input for the web.

There are lots of new input mechanisms coming down the pipe, but right now the biggest new one is touch. This talk is about designing for touch.

As of last month, 31% of US adults have tablets. A few years ago, it was zero. The iPad is the fastest-growing consumer product in the history of consumer products. But touch isn’t just for mobile phones and tablets. Touch is on the desktop now too. All desktop web designs have to be touch-friendly now.

The ugly truth is that we’ve thought of web design as primarily a visual design medium. But when you add touch into the mix, it gets physical. It’s no longer just about how your pixels look; it’s about how they feel too. You are not “just” a visual designer now. There are portions of industrial design in what you do: honest-to-goodness ergonomics. In a sense, you’re designing a physical device, because it will be explored by hands. Phones and tablets are blank slates. We provide the interface. How will feel in the user’s hands? More specifically, how will it feel in one hand?

Phones

Thumbs are fantastic. The thumb, along with celebrity gossip, is what separates us from the beasts. There’s a natural thumb-resting area on the iPhone (coming from the bottom left to the centre). That’s why positioning conventions have evolved they way have on iOS—very differently to the desktop: navigation at the bottom instead of the top.

There’s an age-old principle in industrial design: content at the top; controls at the bottom. Now we see that in iOS. But in Android there are assistive buttons at the bottom (just as the industrial design maxim suggests). But now if you put your controls at the bottom too, you’ve got too much going on. So on Android you should be putting your controls at the top. But the drawback is that this is no longer in the thumb-sweeping area.

That’s iOS and Android. What about the web?

There are problems with pinning navigation to either the top or bottom. First of all, position: fixed is really screwy on mobile browsers. Secondly, in landscape (or other limited-height environments), the controls take up far too much room compared to the content. The third problem is also related to space: browser chrome.

Instead, a better pattern is to have a menu control that reveals navigation. The simplest version is when this is simply an internal link to navigation at the bottom of the page. As Luke says, forget HTML5: this is HTML1. Best of all, this pattern leads with the content and follows it with the navigation.

So that’s where things stand with touch navigation on phones:

iOS: Controls at screen bottom.

Android: Controls at screen top.

Web: Controls at page bottom.

Tablets

What about tablets? This is more likely to be a two-handed grip. Now having controls at the bottom would be really hostile to touch. The phone thumb-zone no longer applies, but thumbs still matter because they could be obscuring controls. Your thumbs are more likely to be on the sides, with easy reach to the top. So put controls in those regions where thumbs can come to rest: the side.

There are some cases where bottom navigation is okay: in an ebook where you’re showing a complicated control …or a map with a draggable interface below it. When you need a control to do browsing or preview for the content above it, the bottom is okay.

Hybrid

The unholy alliance: a laptop with a keyboard combined with a touch-enabled screen. There are lots of them coming down the line.

Mouse and trackpad usage drops off a lot on hybrid devices. There was always the fear of “gorilla arms” with hybrid devices but it turns out that people are gripping the sides of the screen (like a tablet) but when people are jabbing the screen, it’s more like a phone. If overlay the thumb comfort zone of a hybrid laptop with the thumb comfort zone of a tablet, there’s one area that’s left out: the top …exactly where we put our navigation on laptop/desktop screens.

This is a headache for responsive design. We’ve been correlating small screens with touch. It turns out that screen size is a lousy way to detect a touchscreen. And it’s hard to detect support for touch. So, for now, we’re really just getting.

But we have top men working on the problem. Top. Men. There’s a proposal in CSS4 for a pointer property. But even then, what will a hybrid device report if it supports trackpad, keyboard, mouse and touch?

Desktop

All desktop designs have to be touch-friendly. This is going to require a big change in our thinking. For a start, it’s time to bid farewell to hover events, certainly for crucial content …maybe it can be used for enhancements.

Given the thumb zones on tablets and hybrids, we can start putting frequent controls down the side—controls that stay in view even when the content is scrolled. Just to be clear: don’t put your main navigation there—put the controls that people actually use. Sorry, but people don’t actually use your main navigation. People use main navigation only as a last resort.

Quartz uses a very thumb-friendly layout. But how big should the touch targets be? It turns out …seven millimeters; the tip of a finger. Use nine millimeters if you really need to be safe.

I don’t know about you, but I’m not using millimeter as a unit in my CSS. But standards can help here. A pixel is defined in CSS2.1 to have a set millimeter size. But that doesn’t factor in the reality of dynamic viewports: zooming, pinching, scaling. Devices still report they’re actual physical size; the hardware pixels, that have nothing to do with the calculated web pixels.

On the iPhone we arrive at this magical 44 pixel number, which is repeated over and over throughout the UI. As long as one dimension is 44 pixels, you can squeeze the other dimension down to 29 pixels: 44x29 or 29x44. On iOS, that unit is repeated for a rhythm that just feels right: 44, 88, etc. The interface is designed not just for the hand, but of the hand. Use that rhythm, even on desktop screens.

That’s lovely and elegant. Digital watches are not. Touch targets need to be a certain size.

Now these optimisations mean there’s inevitably some constraint. But that can be a good thing: you might have to reduce what’s on your screen, and that means that your interface will be more focused. Clarity trumps density.

But simplicity isn’t always a good thing. Complexity has become a dirty word, but sometimes it’s needed. People don’t want a dumbed-down interface that won’t let them do everything.

And when you don’t have space constraints, that doesn’t mean you should fill up the space with crap. Aim for clarity, no matter what the size of the screen. On a smaller screen, that can be a more conversational, back-and-forth interaction, requesting and revealing information; question, answer; ask, receive. This progressive disclosure requires more taps, but that’s okay. Extra taps and clicks aren’t evil. When done right, they can actually be better because they provide clarity and invite conversation. As long as every tap is a quality tap that provides information, and helps complete a task, they are not evil.

But the long scroll …that is evil. That’s how kittens get killed.

Luke has documented the off-canvas pattern as a way of pushing some information off-screen. It’s kind of like a carousel. So instead of everything being stacked vertically, there can be sections that are navigated horizontally. That’s what Josh and Ethan did on the site for People magazine on small screens.

So for desktop interfaces, these are the points to remember:

Hover is an enhancement

Bottom left for controls.

Big touch targets.

44px rhythm.

Progressive disclosure.

But even though Josh has been talking all about the touch interface, it’s worth remembering that sometimes the best interface is no interface at all. And we need to stop thinking about input mechanisms as singular things: they can be combined. Think about speech + gesture: it’s literally like magic (think: Harry Potter casting a spell). Aral’s hackday project—where he throws content from the phone to the Kinect—gets a round of applause. Now we’ve got Leap Motion on its way. These things are getting more affordable and available. So we could be bypassing touch completely. We can move the interface off the screen entirely. How can we start replacing clumsy touch with the combination of all these sensors?

Digital is growing more digital. Physical is growing more digital. Our job is becoming less about pixels on screens and more about interacting with the world. We have to be willing to challenge established patterns. We have to think. We have to use our brains.

Tagged with

aeaatl

aea

aneventapart

atlanta

liveblogging

conference

event

touch

interface

design

mobile

tablet

desktop

Responsive and Responsible by Scott Jehl

Scott is here at An Event Apart in Atlanta to talk to us about being responsibly responsive. Scott works with Filament Group on large-scale responsive designs like the Boston Globe. They’ve always focus on progressive enhancement and responsive design feels like a natural evolution of that.

But responsive design is just a small part of what goes into responsible design. Responsible design isn’t just about layout, it’s about making sure that adding advanced features doesn’t inconvenience anyone. Mostly, we need to care; we need to care about people in situations other than our own.

This became very clear to Scott when he was travelling in southeast Asia, working remotely with his colleagues back in Boston. Most of the time he was accessing the web through a USB dongle over a cellular network. That’s how must people get online there. So don’t make assumptions about screen size and bandwidth.

Browsing via this dongle was frustrating. It was frustrating for Scott because, as a developer, he knew that there was no reason for the web to be so difficult to use on this connection.

It’s our fault. The average web page is over a megabyte in size. A megabyte! That breaks down to a lot of images, plenty of JavaScript, some HTML, and other …which means cat pictures. Sending 800K of images is a lot for any kind of device. Same with JavaScript: 200K is a lot. The benefits that we as developers get from that JavaScript is burdening our users.

When you prototype interactions, are you thinking about your own clock …or the user’s?

The average load time for the top 200 websites is between 6 and 10 seconds on a good connection.

Good performance is good design. Scott shows a graph of “webpage loading time” on one axis against “swear words” on the other. The graph trends upwards.

Now those 1MB webpages were probably desktop-specific sites, not responsive sites. But 86% of responsive sites send the same assets to all devices.

We have to design for new sizes and new input methods, but at the same time, the old contexts aren’t going away.

We’re moving from normalisation to customisation. We used to try to make things look and work the same in every browser. It was always a silly goal, but now it seems totally ridiculous. But content parity does not require experience parity. In fact, if things look and act the same across all devices, that might mean that we’ve missed a lot of opportunities.

We should avoid presumptions. We might be able to get the dimensions of a screen, but that could be different to the dimensions of the viewport. Instead of using pixels for breakpoints, we can use ems so that the user’s font size determines the layout changes.

Before we look at some responsible techniques, let’s look at the anatomy of a page load.

HTTP

We begin with typing a URL. That request goes to a DNS server. Then the request is sent to the right host. Then the host sends a response. But on a mobile device, there’s an extra step. You have to go through a tower first, before reaching the DNS server. That connection to the tower takes two seconds (for radio). That two-second delay only happens once, thankfully.

Once the connection is made, the HTML response sends more requests: CSS, JavaScript, pictures of cats. During this time, the browser holds off on rendering. This is the critical path.

After about eight seconds, the carrier drops the connection. That two-second connection needs to be made again. So we should try to get everything during that initial eight second period.

HTML

Network conditions can change. Every HTTP request is a gamble. Say you’ve embedded a third-party widget, like Twitter’s. If you’re in a country like China that blocks Twitter, the page will never load. Chrome will hang for thirty seconds.

We need to ensure that we’re delivering assets responsibly. Consider using conditional loading. On the Boston Globe, the home page has a lot of content. The headlines certainly belong there. But content from individual sections (that you can get to from the top navigation) is also being pulled into the homepage. We definitely want to provide the link to, for example, the sports section, but the latest content from the sports section could be conditionally loaded.

Scott mentions my 24 Ways article on conditional loading for responsive designs. At Filament Group, they abstracted this “link/conditional load” into a pattern. It uses HTML5 data attributes to indicate what should be pulled in via Ajax and where it should go.

CSS

Let’s look at how we deliver CSS. The way that we load CSS today is going to catch up with us. According to the HTTP Archive, the transfer size of CSS has the highest correlation to render time.

As a start, we should be using mobile-first media queries. That means starting with the styles for absolutely every device. Then we start adding our media queries for wider and wider viewport widths. This gives you a broadly-usable default. Those initial styles should go to everyone, but Scott likes to qualify them with an only all media query for some enhanced small-screen layout declarations. That makes sure that really old browsers won’t mess it up.

Generally mobile-first media queries work pretty well. But there’s a problem. We’re sending all the CSS to all the devices, even if they’ll never use half of it.

Would it be better to use multiple link elements with different media types? Alas, no. Browsers will download all stylesheets (even if the media type is set to “nonsense”) just in case they’ll need ‘em later. And unfortunately those requests are blocking. Modern WebKit browsers do a bit better. It still downloads all the stylesheets but it will render once it has the small-screen CSS.

The best approach is, unfortunately, to send just one CSS file but minify, compress, gzip and cache the shit out of it. CSS compresses really well. Gzip works on spotting redundant data—as soon as it notices a repeating segment, you get a gain. And CSS is full of repeated declarations.

Images

Images are an interesting problem. Remember they were the worst offenders in page bloat. Fortunately they don’t block, but still—this problem will only get worse.

There are background images. They’re easy. Browsers have gotten very smart about only downloading the background images they need.

Foreground images aren’t so easy. There’s the compressive image technique that Luke mentioned. Make the JPEG bigger in size, but lower in quality. When it’s scaled down in the browser, it looks perfectly fine. A 1x image saved at 90% quality, it’s 95K. The same image at 2x with 0% quality is 44K.

But there’s a concern about the memory footprint of doing this on some devices. Filament Group are looking into this but it’s very hard to test.

With compressive images, you just have to point to them in one img element using the src attribute.

Responsive images are much trickier. There are two proposals.

The first is the picture element, which uses multiple source elements to specify different images for different breakpoints. There’s also a fallback image as a last resort for older browsers.

The second proposal is the srcset attribute. It’s particularly well-suited to different pixel densities. The advantage of this one is that the browser, rather than the author, gets the last say about which image should be displayed. There’s also talk about merging both proposals.

Neither proposal works today so Scott created Picturefill, a polyfill for the picture proposal, although it uses divs. The fallback image goes in a noscript element to prevent browsers from pre-fetching it.

Since picture and Picturefill works with media queries, perhaps you can default to standard definition but allow users to opt in to high definition versions.

Managing different images for different resolutions and pixel densities gets very tedious. Maybe we should abandon the pixel. Certainly for icons, SVG can be really useful. It’s well-supported today. It also compresses well, because it is text: it’s a markup language, like HTML. That means you can also edit the source of an SVG image in a text editor.

You can reference the SVG file directly in the src attribute of an img element. For older browsers, you could use onerror to replace it with a different image format.

You can also put SVG as a background image. And you use them as data-URLs and just write out the SVG in your stylesheet.

Building on that, there’s a tool called Grunticon that generates and regenerates CSS whenever you make changes to an image. It also generates a preview document for you.

JavaScript

Lastly, there’s JavaScript. The trick is to stay off the critical path. Load just as much as you need up front, so you can load more later on.

Scott uses a handful of variables to determine what needs to be loaded or not:

Broad qualification. Does the browser support “only all”? Scott uses YepNope to test and if the test comes back positive, he loads in his global JavaScript.

Browser features. Are touch events supported? Again, Scott uses YepNope to test before loading JavaScript that uses touch.

Environmental conditions. Media queries, basically.

Template type. Define in your markup what kind of page this is (using a meta element e.g. “shoppingcart”. Then test whether we need shoppingcart.js.

You can even load CSS with YepNope but be careful. You could use it for fonts, for example, after you’ve tested that @font-face is supported.

Dealing with third-party content is tricky—it’s blocking. The Twitter embed code for tweets now includes an async attribute. It’s pretty well supported in modern browsers.

On the Boston Globe, they had to deal with ads. Nightmare! They used iframes.

But the main idea here is: defend the critical path. Develop responsively and responsibly. Today we have the tools to build rich experiences without excluding anyone.

Tagged with

aeaatl

aea

atlanta

aneventapart

conference

event

liveblogging

responsive

development

javascript

It’s a Write/Read Mobile Web by Luke Wroblewski

Luke is at An Event Apart in Atlanta to give his presentation: It’s a Write/Read Mobile Web. He begins by showing us where he lives in Los Gatos in Silicon Valley. Facebook is up the road in Palo Alto. Yahoo is down the road in Sunnyvale, next to a landfill. In between, there’s a little company called AOL. Then there’s Google and YouTube just off Highway One.

So you have a bunch of internet companies in close physical proximity. They are also the top sites in the US when it comes to time spent on the web, by a very wide margin. You would think that the similarities would end there, because they all provide very different services: social networking, email, messaging, search, and video. But they have something in common. They are all write/read experience. They don’t work unless people contribute content to them. You post updates, you send emails, you type in searches, you upload videos.

Tim Berners-Lee said that his original vision for the web was a place where we could all meet and read and write.

This isn’t just a US-centric thing. Looking at the worldwide list of most popular sites, you’ve got the ones mentioned already but also Twitter and Wikipedia, where all the content is contributed by their users. Even Amazon is powered by reviews. This is what makes the web so awesome. It’s not a broadcast medium. It’s a two-way street. It’s interactive.

So what’s the biggest factor that’s changing for all of these sites? Mobile. 67% of Facebook users and 60% of Twitter users are on mobile. If you like at the stats for Facebook, you can see that their growth is coming from mobile. Desktop usage is pretty flat: mobile usage is soaring. Facebook also has a growing segment of mobile-only users. Zuck has defined Facebook now as a mobile company.

When people hear about the growth in mobile, they assume it’s all about content consumption: reading status updates, watching videos, and so on. There’s a myth that small devices are not good for content creation. But if that were true, then wouldn’t that be a huge problem, given the statistics of growth? Facebook and Twitter would have almost no content. But it’s simply not true. Three hours worth of video are uploaded to YouTube every second from mobile devices.

People think that mobile devices are for games, social networking, and entertainment. And it’s true that those are popular activities. But that kind of usage is actually going down. Where is that time going? The fastest growing category is utilities: finding and buying things, financial management, health services, travel planning, etc. Basically, mobile is anything. So if mobile hasn’t effected you yet, it will.

How do we think about this write/read experience? We imagine that 100% of people are consumers: reading, browsing, etc. Then 10% are curators: liking, faving, etc. Finally there’s the 1% that actually create stuff. 1.8% of users provide almost all of Wikipedia’s content. So we naturally focus on the content consumption in our designs, because that’s the biggest number. But Luke thinks it makes sense to flip that on its head. That 1% are your most important users.

Facebook redesigned their content creation flow multiple times, trying to make that “write” experience as good as possible. Same with YouTube’s uploading interface. They both focused relentlessly on the content creation.

So as we shift our attention to mobile, we should be asking: how do we design for mobile creation?

1. One-handed use

Like Luke’s old adage about “one thumb, one eyeball”, this is literally about testing content creation with one thumb. For his startup, Polar, Luke tested the interface with one thumb and timed how long it took. It was tested and designed for a thumb.

“But Luke”, you cry, “Not everyone just uses their thumb on their mobile device screens!” Well, observing 1,333 people using mobiles on the street showed that they pretty much do.

Dan Formosa says we should design for extremes. In Objectified he talked about designing garden shears for someone with arthritis. If it works for that user, it will work well for everyone.

“But Luke”, you cry, “Aren’t you falling prey to the myth of the ‘mobile context’ where the user is in a hurry, using just one hand?” Well, it is true that lots of people use their devices in the home. But even when you’re not out on the street, you’re still using your thumb: using your phone while watching TV.

Focusing on this use case means you can rethink a lot of interactions. As a general principle, Luke advises “don’t let the keyboard come up.” That is, only provide a virtual keyboard as a last resort. Use smart defaults. Let people tap on links or checkboxes instead of typing. If the user needs to enter a location, they could do so by tapping on a map instead of typing in a place name. Provide a date-picker instead of making people type out dates. Let people use sliders for setting values.

When you design for one-handed use, you’re designing for an extreme case. It also forces you to challenge your assumptions.

2. Visually engaging

When you aim to avoid the keyboard, you often come up with more visual solutions, which can be a great opportunity.

Take Snapchat. People express themselves through photos. The Line app is chat, but with a huge amount of emoticons. Chat becomes visual.

The lesson here is not society is collapsing into sexting, but that images are very powerful on mobile.

Hotel Tonight’s mobile experience is based around big beautiful images.

“But Luke”, you cry “Why are you advocating big images? Isn’t performance the most important thing on mobile.” Well, yes. But instead of just abandoning images, let’s get really creative about how we serve up images. Take, for example, the experimentation around increasing image dimensions while reducing quality, which results in much smaller files with no noticable loss of fidelity.

3. Focused flows

Dwelling on one-handed use and visually engaging imagery are techniques you can use, but you should really focus on simplifying the processes you put people through. Foursquare have drastically simplified their check-in process.

Creativity is all about focusing on something until you find the simplest way to do it.

The Boingo wireless service used to require 23 inputs. They got read of 11 of them and increased conversion by 34%. That’s great, but they didn’t go far enough. You can always simplify even more.

Hotel Tonight got their flow down to three taps and a swipe. The CEO says that’s a competitive advantage. Just compare how long it takes to book a hotel with their competitors.

It takes big changes to go small.

When taking about forms and input, this might seem like standard design advice: focus and simplify. But keep focusing and simplifying even more.

4. Just-in-time actions

So we’ve seen three different ways to make things simpler, faster, and more focused. But isn’t that really hard on a small screen, with so little real estate?

Instead of providing intro tours (which are more like intro tests), why not provide introductory information only when it’s needed? Apply the same approach to actions. On Polar, there’s an option to hide the keyboard, but that action is only visible when the keyboard appears.

On long pages on Polar, people wanted a way to get back to the top. If you start scrolling upwards, the navbar from the top is overlaid. It detects that you might be trying to get back to the top, and provides the actions you want.

5. Cross-device usage

Everything Luke has talked about so far has been specifically focused on one kind of device: mobile. But we need to keep our on the multi-device write/read web that is emerging. Creation is happening across devices …sequentially and simultaneously.

The simplest example is access. If you’re on a desktop browsing Luke’s site on Chrome, and then you move to Chrome on the iPhone, there’s Luke’s site.

The next cross-device pattern is flow. We want our processes to work across devices. So if I’m on my laptop and I do a search, then I pick up my phone, I want that last input to carry over. On Ebay, you can save a draft listing on the desktop and pick it up later on mobile. On Google Drive, editing is synced simultaneously between all your devices.

Control is another pattern: using one device to tell another device what to do.

Luke hates log-in forms; that’s no secret. Five years ago, he worked on Yahoo Projector which used a scanned barcode to take control of a TV screen with a phone. He wanted to use this to replace log in, but that never happened. But there’s a service called OneID which is tackling the same use case: you can control log-in across devices.

The last example of cross-device usage is push. Going back to that draft listing on Ebay: take a picture on your phone and have that picture show up on the desktop browser view.

People talk about mobile versus desktop, but these are all examples of these different devices working together.

This was just a taster. We’re just getting started with this stuff.

Tagged with

aeaatl

aea

aneventapart

atlanta

conference

event

mobile

web

lukew

liveblogging

Billboards and Novels by Jon Tan

Jon is at An Event Apart in Atlanta to talk about Billboards and Novels. That means: impact vs. immersion.

Who in the audience has ever had to explain layout and design decisions? And who has struggled to do that? Jon has. That’s why he wants to talk about the differences between designing for impact—to grab attention—and immersion—to get out of the way and allow for absorbing involvement.

Jon examines the difference between interruption and disruption. You want to grab attention, but the tone has to be right. This is how good advertising works. So sometimes impact is a good thing, but not if you’re trying to read.

The web is reading.

Understanding how people read is a core skill for anyone designing and developing for the web. First, you must understand language. There’s a great book by Robert Bringhurst called What Is Reading For?, the summation of a symposium. Paraphrasing Eric Gill, he says that words are neither things, nor pictures of things; they are gestures.

Words as gestures …there are #vss (very short stories) on Twitter that manage to create entire backstories in your mind using the gestures of words.

A study has shown that aesthetics does not affect perceived usability, but it does have an effect on post-use perceived aesthetics. Even though a “designed” and “undesigned” thing might work equally well, our memory the the designed thing is more positive.

Good typography and poor typography appear to have no affect on reading comprehension. This was tested with a New Yorker article that was typeset well, and the same article typeset badly. The people who had the nicely typeset article underestimated how long it had taken them to read it. Objectively it had taken just as long as reading the poorly-typeset version, but because it was more pleasing, it put them in a good mood.

Good typography induces a good mood. And if you are in a good mood, you perform tasks better …and you will think that the tasks took less time. Time flies when you’re having fun.

What about type on screens?

David Berlow describes the web as “crude media.”

Jonathan Hoefler describes how he produces fonts differently for different media: the idea (behind the typeface) gives rise to a variety of forms.

Matthew Carter designed Bell Centennial to work at one size in one environment: the crappy paper of the telephone book. He left gaps in the letterforms for the ink to spread into.

The Siri typeface was redrawn anew as SiriCore specifically for the screen.

When Jon is evaluating typefaces, he is aware that some fonts are more optimised for the screen than others. He tests the smallest text first, in the most adverse environment: a really old HP machine running Windows XP. He also looks at language support, and features and variants like lining numerals: what are the mechanics of the font?

We take quiet delight in the smallest details of a typeface.

Legibility is so important. Kevin Larson analysed how we read. We take a snapshot of a bunch of letters, and our brains rearrange them into a word. We read by skipping along lines in “saccades” with pauses or “fixations” that allow us to understand a group of letters before reading on.

Jon tells the story of how Seb was fooled by a spoof Twitter account for the London Olympics. The account name was London20l2 (with a letter L), not London2012 (with the letter one). Depending on the typeface, that difference can be very hard to spot. Here’s a handy string:

agh! iIl1 o0

Stick that into Fontdeck and you’ll get a good idea of the mechanics of the font you’re looking at. You’re looking out for ambiguities that would interrupt the reader.

The same goes for typesetting: use the right quotes and apostrophes; not primes. Use ligatures when they help. But some ligatures are just showing off and they interrupt your reading. Typesetting should help reading, not interrupt or disrupt.

You can use text-rendering: optimizeLegibility but test it. You can use hyphens: auto but test it. You can add a non-breaking space before the last two words in a paragraph to prevent orphans. It will improve the mood.

A good example of interruption is the Ampersand 2012 website. There’s a span on the letter that should receive a flourish. But you can also use expert subsets. You can use Opentype features. There are common and discretionary ligatures. Implement them wisely. Use discretionary ligatures when you want to draw attention, like in a headline.

Scantastic readability. We wander around the page or screen in the same way as we read with saccades: our eyes jump around the place. Our scan path is a roughly Z-shaped pattern. You can design for this scan path: deliberately interrupt …but not disrupt. Jon uses the squint test when he is designing, to see what jumps out and interrupts.

Measure (line-length) is really important. Long lines tire us out. Bringhurst mentioned 45-75 character measures. But the measure is also bound to the prose: the content might be very short and snappy.

Contrast can give you careful, deliberate interruptions. Position, density, size …these are all tools we can use to interrupt without disrupting. The I Love Typography article on The Origins of ABC is a beautiful example of this. Compare it to the disruption of faddish parallax sites.

But there are no rules, just good decisions.

It’s all so emotional. Sometimes there are no words. Think of the masterful storytelling of the first twenty minutes of Wall-E.

We react incredibly quickly to faces. We can see and recognise a human face in 40 milliseconds, before we even consciously process that we’ve seen a face.

When we try to write about music, the result can be some really purple prose.

We have an emotional reaction to faces, colour, music …and type.

Jon demonstrates the effect on us that a friendly typeface has compared to a harsh typeface …even though the friendly typeface is used for the Malay word for “hate” and the harsh typeface is used for the Malay word for “love.” Our amygdala is reacting directly. It’s a physiological, visceral reaction we have before we even understand what we’re looking at.

Fonts are wayfinding apps for emotions. There’s a difference between designing places and designing postcards of places.

The Milwaukee Police News website is very impactful …but there’s no immersion. It doesn’t communicate beyond the initial reaction.

Places are defined by type and form: New York, London, Paris. A website for Barcelona or Brooklyn should reflect the flavour of those places.

All these things combine: impact, immersion, contrast, colour, type. We can affect people’s experiences. We can put them in a better mood.

Type shapes our experience. It paints pictures that echo in our memory long after we’ve left.

Eric Spiekermann said:

Details in typefaces are not to be seen, but felt.

Those details have to work in the greater context (of colour, contrast, layout).

Bruce Lee said:

Don’t think; feel.

Tagged with

aeaatl

aea

aneventapart

atlanta

conference

event

design

typography

type

reading

fonts

liveblogging

Jeremy Keith's Blog

- Jeremy Keith's profile

- 56 followers