Martin Fowler's Blog, page 37

November 19, 2014

Testing Strategies in a Microservice Architecture

Microservices have been quite the topic of conversation this year, with a rapid rise of interest. But although this architectural style is often a useful one, it has its challenges, which can easily lead a less experienced team into trouble. Testing is a central part of this challenge, which matters particularly to those of us who consider testing essential to effective software development.

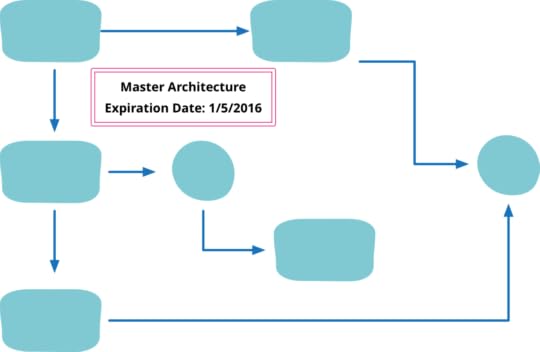

My colleague Toby Clemson has responded to this challenge by distilling his experiences into an infodeck that explains the various testing techniques to use with microservices and when to use them. The first installment outlines the anatomy of a microservice architecture and explains the role of the first testing technique: unit testing.

Retreaded: TechnicalDebtQuadrant

Retread of post originally made on 14 Oct 2009

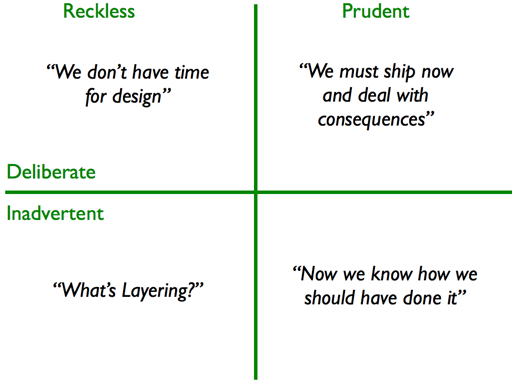

There have been a few posts over the last couple of months about TechnicalDebt that have raised the question of what kinds of design flaws should or shouldn't be classified as Technical Debt.

A good example of this is Uncle Bob's post saying a mess is not a debt. His argument is that messy code, produced by people who are ignorant of good design practices, shouldn't be a debt. Technical Debt should be reserved for cases when people have made a considered decision to adopt a design strategy that isn't sustainable in the longer term, but yields a short-term benefit, such as making a release. The point is that the debt yields value sooner, but needs to be paid off as soon as possible.

To my mind, the question of whether a design flaw is or isn't debt is the wrong question. Technical Debt is a metaphor, so the real question is whether or not the debt metaphor is helpful for thinking about how to deal with design problems, and how to communicate that thinking. A particular benefit of the debt metaphor is that it's very handy for communicating to non-technical people.

I think that the debt metaphor works well in both cases - the difference is in the nature of the debt. A mess is a reckless debt which results in crippling interest payments or a long period of paying down the principal. We have a few projects where we've taken over a code base with a high debt and found the metaphor very useful in discussing with client management how to deal with it.

The debt metaphor reminds us about the choices we can make with design flaws. The prudent debt to reach a release may not be worth paying down if the interest payments are sufficiently small - such as if it were in a rarely touched part of the code-base.
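
To make the interest-versus-principal choice concrete, here is a minimal Python sketch under a deliberately simple, hypothetical cost model: paying down the principal costs a fixed number of hours, and every future change to the affected code costs some extra hours of "interest". The function `should_pay_down`, its parameters, and the numbers below are illustrative assumptions, not a method from this post.

```python
def should_pay_down(principal_hours: float,
                    interest_hours_per_change: float,
                    expected_changes: int) -> bool:
    """Hypothetical model: pay down the debt only if the interest we expect
    to pay over the code's future changes exceeds the cost of fixing it now."""
    expected_interest = interest_hours_per_change * expected_changes
    return expected_interest > principal_hours


# A rarely touched part of the code base: the interest never adds up.
rarely_touched = should_pay_down(principal_hours=40, interest_hours_per_change=2, expected_changes=5)

# A hot spot that changes constantly: paying down the principal wins.
hot_spot = should_pay_down(principal_hours=40, interest_hours_per_change=2, expected_changes=50)
```

With a 40-hour fix and 2 hours of interest per change, five expected changes cost 10 hours of interest, so the debt is left alone; fifty changes cost 100 hours, so paying it down is the better deal.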

So the useful distinction isn't between debt or non-debt, but between prudent and reckless debt.

There's another interesting distinction in the example I just outlined. Not only is there a difference between prudent and reckless debt, there's also a difference between deliberate and inadvertent debt. The prudent debt example is deliberate because the team knows they are taking on a debt, and thus puts some thought into whether the payoff for an earlier release is greater than the costs of paying it off. A team ignorant of design practices is taking on its reckless debt without even realizing how deep in hock it's getting.

Reckless debt may not be inadvertent. A team may know about good design practices, even be capable of practicing them, but decide to go "quick and dirty" because they think they can't afford the time required to write clean code. I agree with Uncle Bob that this is usually a reckless debt, because people underestimate where the DesignPayoffLine is. The whole point of good design and clean code is to make you go faster - if it didn't, people like Uncle Bob, Kent Beck, and Ward Cunningham wouldn't be spending time talking about it.

Dividing debt into reckless/prudent and deliberate/inadvertent implies a quadrant, and I've only discussed three cells. So is there such a thing as prudent-inadvertent debt? Although such a thing sounds odd, I believe that there is - and it's not just common but inevitable for teams that are excellent designers.
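
The two axes can be written down as a tiny lookup table. This is only an illustrative Python sketch: the enum names and the one-line summary in each cell are paraphrases of the examples in this post, not canonical labels.

```python
from enum import Enum


class Judgement(Enum):
    PRUDENT = "prudent"
    RECKLESS = "reckless"


class Intent(Enum):
    DELIBERATE = "deliberate"
    INADVERTENT = "inadvertent"


# One paraphrased example per cell of the quadrant.
QUADRANT = {
    (Judgement.RECKLESS, Intent.DELIBERATE):
        "We know good design, but go quick and dirty anyway",
    (Judgement.RECKLESS, Intent.INADVERTENT):
        "A team ignorant of design practices makes a mess",
    (Judgement.PRUDENT, Intent.DELIBERATE):
        "We take a known shortcut to make a release and weigh when to pay it off",
    (Judgement.PRUDENT, Intent.INADVERTENT):
        "Now we realize what the design should have been",
}


def describe(judgement: Judgement, intent: Intent) -> str:
    """Return the paraphrased example for one cell of the quadrant."""
    return QUADRANT[(judgement, intent)]
```

Keeping both axes as separate enums makes it explicit that every combination, including the odd-sounding prudent-inadvertent cell, has an entry.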

I was chatting with a colleague recently about a project he'd just rolled off from. The project had delivered valuable software, the client was happy, and the code was clean. But he wasn't happy with the code. He felt the team had done a good job, but now they realized what the design ought to have been.

I hear this all the time from the best developers. The point is that while you're programming, you are learning. It's often the case that it can take a year of programming on a project before you understand what the best design approach should have been. Perhaps one should plan projects to spend a year building a system that you throw away and rebuild, as Fred Brooks suggested, but that's a tricky plan to sell. Instead what you find is that the moment you realize what the design should have been, you also realize that you have an inadvertent debt. This is the kind of debt that Ward talked about in his video.

The decision between paying the interest and paying down the principal still applies, so the metaphor is still helpful for this case. However, a problem with using the debt metaphor for this is that I can't conceive of a parallel with taking on a prudent-inadvertent financial debt. As a result I would think it would be difficult to explain to managers why this debt appeared. My view is this kind of debt is inevitable and thus should be expected. Even the best teams will have debt to deal with as a project goes on - even more reason not to recklessly overload it with crummy code.

reposted on 19 Nov 2014

November 17, 2014

photostream 77

November 4, 2014

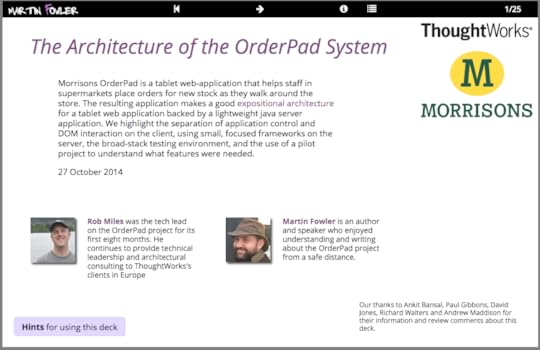

Architecture of Morrisons OrderPad

Morrisons OrderPad is a tablet web application that helps staff in supermarkets place orders for new stock as they walk around the store. My colleague Rob Miles and I felt that the resulting application makes a good expositional architecture for a tablet web application backed by a lightweight Java server. We highlight the separation of application control and DOM interaction on the client, the use of small, focused frameworks on the server, the broad-stack testing environment, and the use of a pilot project to understand what features were needed.

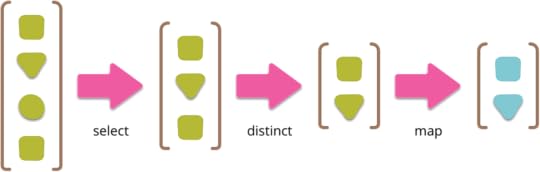

Updates to Collection Pipelines

Over the last few weeks I’ve been quietly making a bunch of small updates to my article on collection pipelines. To the main text I’ve added a subsection contrasting them with Nested Operator Expressions. I’ve also added several operators to the operation catalog, including slice and various set operations.

October 26, 2014

photostream 76

October 22, 2014

Bliki: SacrificialArchitecture

You're sitting in a meeting, contemplating the code that your team has been working on for the last couple of years. You've come to the decision that the best thing you can do now is to throw away all that code, and rebuild on a totally new architecture. How does that make you feel about that doomed code, about the time you spent working on it, about the decisions you made all that time ago?

For many people throwing away a code base is a sign of failure, perhaps understandable given the inherent exploratory nature of software development, but still failure.

But often the best code you can write now is code you'll discard in a couple of years' time.

Often we think of great code as long-lived software. I'm writing this article in an editor which dates back to the 1980s. Much thinking on software architecture is about how to facilitate that kind of longevity. Yet success can also be built on top of code long since sent to /dev/null.

Consider the story of eBay, one of the web's most successful large businesses. It started as a set of Perl scripts built over a weekend in 1995. In 1997 it was all torn down and replaced with a system written in C++ on top of the Windows tools of the time. Then in 2002 the application was rewritten again in Java. Were these early versions an error because they were replaced? Hardly. eBay is one of the great successes of the web so far, but much of that success was built on the discarded software of the 90s. Like many successful websites, eBay has seen exponential growth - and exponential growth isn't kind to architectural decisions. The right architecture to support 1996-eBay isn't going to be the right architecture for 2006-eBay. The 1996 one won't handle 2006's load, but the 2006 version is too complex to build, maintain, and evolve for the needs of 1996.

Indeed this thinking can be baked into an organization's way of working. At Google, the explicit rule is to design a system for ten times its current needs, with the implication that if the needs exceed an order of magnitude then it's often better to throw away and replace from scratch [1]. It's common for subsystems to be redesigned and thrown away every few years.
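
Jeff Dean's heuristic in note 1 (design for ~10X growth, plan to rewrite before ~100X) turns into a timeline once you assume a growth rate. This Python sketch assumes steady compound growth, which real systems rarely show; `years_until` and the doubling-every-year figure are hypothetical.

```python
import math


def years_until(multiple: float, annual_growth: float) -> float:
    """Years of steady compound growth needed for load to reach `multiple`
    times today's level. annual_growth=1.0 means load doubles every year."""
    if annual_growth <= 0:
        raise ValueError("annual_growth must be positive")
    return math.log(multiple) / math.log(1 + annual_growth)


# Assume load doubles every year (a hypothetical growth rate).
design_margin_years = years_until(10, 1.0)      # ~10X: what the design should absorb
rewrite_deadline_years = years_until(100, 1.0)  # ~100X: replacement should be done by now
```

At a doubling per year, a 10X design margin lasts about 3.3 years and 100X arrives after about 6.6 years, which fits the observation that subsystems get redesigned every few years.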

It's a common pattern to see people coming into a maturing code base denigrating its lack of performance or scalability. But often in the early period of a software system you're less sure of what it really needs to do, so it's important to put more focus on flexibility for changing features rather than performance or availability. Later on you need to switch priorities as you get more users, but getting too many users on an unperformant code base is usually a better problem than its inverse. Jeff Atwood coined the phrase "performance is a feature", which some people read as saying that performance is always priority number 1. But any feature is something you have to choose versus other features. That's not saying you should ignore things like performance - software can get sufficiently slow and unreliable to kill a business - but the team has to make the difficult trade-offs with other needs. Often these are more business decisions than technology ones.

So what does it mean to deliberately choose a sacrificial architecture? Essentially it means accepting now that in a few years' time you'll (hopefully) need to throw away what you're currently building. This can mean accepting limits to the cross-functional needs of what you're putting together. It can mean thinking now about things that can make it easier to replace when the time comes - software designers rarely think about how to design their creation to support its graceful replacement. It also means recognizing that software that's thrown away in a relatively short time can still deliver plenty of value.

Knowing your architecture is sacrificial doesn't mean abandoning the internal quality of the software. Usually sacrificing internal quality will bite you more rapidly than the replacement time, unless you're already working on retiring the code base. Good modularity is a vital part of a healthy code base, and modularity is usually a big help when replacing a system. Indeed one of the best things to do with an early version of a system is to explore what the best modular structure should be so that you can build on that knowledge for the replacement. While it can be reasonable to sacrifice an entire system in its early days, as a system grows it's more effective to sacrifice individual modules - which you can only do if you have good module boundaries.
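
Sacrificing one module at a time depends on callers seeing only the module's boundary. As an illustrative Python sketch, assuming a hypothetical pricing module, a `typing.Protocol` defines the boundary, a quick first implementation sits behind it, and a later replacement can be swapped in without touching the caller. All class and function names here are invented for the example.

```python
from typing import Protocol


class PricingModule(Protocol):
    """The module boundary: callers depend only on this method."""

    def price(self, sku: str, quantity: int) -> float: ...


class QuickPricing:
    """Sacrificial first version: a hard-coded price table, good enough for a pilot."""

    def __init__(self) -> None:
        self._table = {"apple": 0.5, "bread": 1.2}

    def price(self, sku: str, quantity: int) -> float:
        return self._table[sku] * quantity


class TieredPricing:
    """Hypothetical replacement, written once the real pricing rules are understood."""

    def __init__(self, base: dict[str, float], bulk_threshold: int = 10,
                 bulk_discount: float = 0.1) -> None:
        self._base = base
        self._bulk_threshold = bulk_threshold
        self._bulk_discount = bulk_discount

    def price(self, sku: str, quantity: int) -> float:
        unit = self._base[sku]
        if quantity >= self._bulk_threshold:
            unit *= 1 - self._bulk_discount  # discount for large orders
        return unit * quantity


def order_total(pricing: PricingModule, lines: list[tuple[str, int]]) -> float:
    """Caller code: unchanged whichever implementation sits behind the boundary."""
    return sum(pricing.price(sku, qty) for sku, qty in lines)
```

Both `order_total(QuickPricing(), [("apple", 4), ("bread", 1)])` and `order_total(TieredPricing({"apple": 0.5, "bread": 1.2}), [("apple", 20)])` go through the same caller, so replacing the pricing module never forces a change outside it.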

One thing that's easily missed when it comes to handling this problem is accounting. Yes, really - we've run into situations where people have been reluctant to replace a clearly unviable system because of the way they were amortizing the codebase. This is more likely to be an issue for big enterprises, but don't forget to check it if you live in that world.

You can also apply this principle to features within an existing system. If you're building a new feature it's often wise to make it available to only a subset of your users, so you can get feedback on whether it's a good idea. To do that you may initially build it in a sacrificial way, so that you don't invest the full effort in a feature that you find isn't worth full deployment.
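
One common way to make a feature available to only a subset of users is to bucket users deterministically, so each user consistently sees the same thing. The post doesn't prescribe a mechanism; this Python sketch hashes a hypothetical feature name and user id with SHA-256 and admits users whose bucket falls below a rollout percentage. `in_rollout` and its parameters are illustrative assumptions.

```python
import hashlib


def in_rollout(user_id: str, feature: str, percent: int) -> bool:
    """Deterministically decide whether a user sees a feature.

    The same user always lands in the same bucket for the same feature,
    so their experience stays stable between requests."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return bucket < percent
```

Raising `percent` from 10 to 50 keeps every user who was already in the rollout, because their bucket doesn't change. If the feature turns out not to be worth full deployment, the sacrificial implementation and the check can both be deleted.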

Modular replaceability is a principal argument in favor of a microservices architecture, but I'm wary of recommending that for a sacrificial architecture. Microservices imply distribution and asynchrony, which are both complexity boosters. I've already run into a couple of projects that took the microservice path without really needing to - seriously slowing down their feature pipeline as a result. So a monolith is often a good sacrificial architecture, with microservices introduced later to gradually pull it apart.

The team that writes the sacrificial architecture is the team that decides it's time to sacrifice it. This is a different case from a new team coming in, hating the existing code, and wanting to rewrite it. It's easy to hate code you didn't write, without an understanding of the context in which it was written. Knowingly sacrificing your own code is a very different dynamic, and knowing you're going to be sacrificing the code you're about to write is a useful variant on that.

Acknowledgements

Conversations with Randy Shoup encouraged and helped me formulate this post, in particular describing the history of eBay (and some similar stories from Google). Jonny Leroy pointed out the accounting issue. Kief Morris, Jason Yip, Mahendra Kariya, Jessica Kerr, Rahul Jain, Andrew Kiellor, Fabio Pereira, Pramod Sadalage, Jen Smith, Charles Haynes, Scott Robinson and Paul Hammant provided useful comments.

Notes

1: As Jeff Dean puts it, "design for ~10X growth, but plan to rewrite before ~100X"

Talking about Refactoring on the Ruby Rogues Podcast

Last week I sat down with the Ruby Rogues (Avdi Grimm, Jessica Kerr, and host Charles Max Wood), a podcast about development in the Ruby and Rails world. They have a regular book club, and their book this time was the Ruby edition of Refactoring. We talked about the definition of refactoring, why we find we don’t use debuggers much, what might be done to modernize the book, the role of refactoring tools, whether comments can be used for good, the trade-off between refactoring and rewriting, modularity and microservices, and how the software industry has changed over the last twenty years.

October 11, 2014

photostream 75

October 9, 2014

Keynote from goto: Our Responsibility to Defeat Mass Surveillance

In our keynote for goto 2014, Erik and I consider our responsibilities as software professionals towards combating the growing tide of mass surveillance. We talk about how software professionals should take a greater role in deciding what software to build, which requires us to have a greater knowledge of the domain and a greater sense of responsibility towards our users and wider society. We say why privacy is important, both as a human need and for the maintenance of a democratic society. We use the example of email to explore the importance of an open, collaborative development approach for key infrastructure, and argue that our freedoms require a greater level of encryption for all of us, together with a move to decentralize. We finish with a brief mention of “Pixelated”, a project ThoughtWorks is doing to increase the use of encrypted email, and why its challenges are much more about UX than the details of cryptography.
