Gennaro Cuofano’s Blog

August 11, 2025

The $10B World Models Race: 15 Companies Building AI That Actually Understands Reality (Not Just Predicts Words)

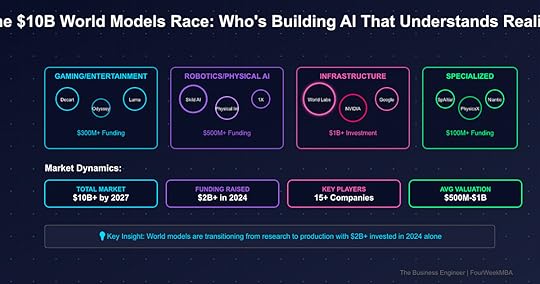

The Paradigm Shift: While everyone obsesses over ChatGPT’s next word prediction, a $10B race is unfolding to build AI that understands how the world actually works—gravity, physics, spatial relationships, and cause-and-effect. These “world models” represent AI’s next frontier: machines that don’t just process language but comprehend reality itself.

Executive Summary: The State of World Models

The world models vertical has exploded from research curiosity to $2B+ in funding across 15+ companies in 2024. Unlike large language models that predict text, world models understand and simulate physical reality, enabling everything from AI-generated games to robots that navigate like humans to engineering simulations 1,000,000x faster than traditional methods.
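To make “simulating reality” concrete: in the research literature a world model is usually an action-conditioned transition function. It maps (current state, action) to a predicted next state, and an agent can roll it forward “in imagination” to compare actions before taking one. The sketch below is a hypothetical toy, not any company’s system. A hand-written 1-D physics step (gravity plus a thrust action) stands in for the large learned networks companies in this space train on video and sensor data, and the names `predict_next`, `rollout`, and `plan` are illustrative only.

```python
from dataclasses import dataclass

# Hypothetical constants for a toy 1-D world (not from any real product).
GRAVITY = -9.81  # m/s^2
DT = 0.1         # seconds per simulated step


@dataclass(frozen=True)
class State:
    height: float    # metres above the ground
    velocity: float  # m/s, positive means moving up


def predict_next(state: State, thrust: float) -> State:
    """One world-model step: (state, action) -> predicted next state.

    A real world model learns this mapping from data; this toy hard-codes
    Newtonian physics (semi-implicit Euler) so the example stays small.
    Height is clamped at the ground; impacts are not modelled.
    """
    velocity = state.velocity + (GRAVITY + thrust) * DT
    height = max(0.0, state.height + velocity * DT)
    return State(height, velocity)


def rollout(state: State, thrust: float, steps: int) -> list[State]:
    """Imagine a trajectory by feeding each prediction back into the model."""
    trajectory = [state]
    for _ in range(steps):
        state = predict_next(state, thrust)
        trajectory.append(state)
    return trajectory


def plan(state: State, candidates: list[float], target: float, steps: int = 20) -> float:
    """Choose the constant thrust whose imagined final height is closest to target."""
    def miss(thrust: float) -> float:
        return abs(rollout(state, thrust, steps)[-1].height - target)
    return min(candidates, key=miss)


if __name__ == "__main__":
    start = State(height=10.0, velocity=0.0)
    # Hovering requires thrust that exactly cancels gravity.
    print(plan(start, candidates=[0.0, 5.0, 9.81, 15.0], target=10.0))  # 9.81
```

Planning by rollout is the key contrast with next-word prediction: the model is queried about the consequences of actions, not just the most likely continuation. Swapping the hand-written `predict_next` for a trained network would leave `rollout` and `plan` unchanged.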

Key Market Dynamics:

- Total Funding: $2B+ raised in 2024 alone
- Market Leaders: World Labs ($1B+ valuation), Skild AI ($1.5B), NVIDIA Cosmos
- Technical Approaches: Transformers, NeRFs, physics engines, multimodal learning
- Applications: Gaming, robotics, engineering, entertainment, AR/VR
- Timeline: Moving from research to production in 2025-2026

Market Segmentation: Four Battlegrounds

1. Gaming & Entertainment World Models

The Players:

- Decart AI: Real-time AI Minecraft, 1M users in 3 days
- Odyssey AI: $27M raised, Hollywood-grade world generation
- Luma Labs: Multimodal models with coherent physics
- Runway: Gen-3 with temporal consistency

Market Dynamics:

- Fastest path to consumer adoption
- $300M+ funding in segment
- Key challenge: Real-time generation
- Winner potential: First to AAA game quality

Investment Thesis: The gaming vertical offers the fastest monetization but faces compute cost challenges. Decart’s 400x efficiency breakthrough shows the path forward.

2. Robotics & Physical AI

The Giants:

- Skild AI: $300M Series A, $1.5B valuation, 1000x more training data
- Physical Intelligence: Stealth mode, top talent concentration
- 1X Technologies: Humanoid robots with world understanding
- Google DeepMind: Gemini robotics with VLA models

Market Reality:

- $500M+ invested in robotics world models
- Critical for autonomous navigation
- 3-5 year deployment timeline
- Winner takes massive industrial market

Strategic Insight: Robotics world models solve the “$100B problem”: making robots work in unstructured environments. Skild’s massive data advantage positions them as the OpenAI of robotics.

3. Infrastructure & Platform Plays

The Foundation Builders:

- World Labs: Fei-Fei Li’s $1B+ valued spatial intelligence platform
- NVIDIA Cosmos: 20M hours of training data, enterprise platform
- Google: Multiple initiatives across DeepMind and Cloud
- SpAItial: European contender with physics-first approach

Platform Economics:

- $1B+ investment level required
- Winner-take-most dynamics
- API/cloud business model
- Powers all other verticals

Key Observation: World Labs’ approach to becoming the “foundation model for 3D” mirrors OpenAI’s LLM strategy: build the base model others build upon.

4. Specialized Applications

The Niche Dominators:

- PhysicsX: Engineering simulation, 1,000,000x faster than CFD
- Niantic Spatial: Geospatial models from Pokemon Go data
- mimic: Dexterous manipulation for manufacturing

Vertical Strategy:

- $100M+ funding typical
- Deep domain expertise required
- Faster path to revenue
- Less competition, smaller TAM

Market Intelligence: Specialized players can win by solving specific high-value problems. PhysicsX’s Siemens partnership shows the enterprise validation path.

Technical Approaches: The Innovation Stack

1. Transformer-Based World Models

- Leaders: World Labs, Decart, NVIDIA
- Advantage: Leverages LLM infrastructure
- Challenge: Compute intensive
- Breakthrough needed: Efficiency at scale

2. Neural Radiance Fields (NeRFs)

- Leaders: Luma Labs, various startups
- Advantage: High-quality 3D reconstruction
- Challenge: Real-time performance
- Breakthrough needed: Mobile deployment

3. Physics Simulation Integration

- Leaders: PhysicsX, SpAItial
- Advantage: Accurate real-world modeling
- Challenge: Complexity
- Breakthrough needed: Generalization

4. Multimodal Learning

- Leaders: Google, Meta (research)
- Advantage: Comprehensive understanding
- Challenge: Data requirements
- Breakthrough needed: Unified architectures

Investment Landscape: Follow the Smart Money

Funding Patterns:

- Mega Rounds: Skild ($300M), World Labs ($230M)
- Strategic Investors: NVIDIA, Google, Microsoft actively investing
- VC Leaders: a16z, Lightspeed, NEA, Sequoia
- Geographic: 80% US, 15% Europe, 5% Asia

Valuation Analysis:

- Pre-revenue: $100M-500M typical
- Early revenue: $500M-1B range
- Market leaders: $1B-2B valuations
- Multiple: 50-100x ARR when revenue exists

Exit Potential:

- Acquisition targets: Smaller specialized players
- IPO candidates: World Labs, Skild AI by 2027
- Strategic buyers: Google, Microsoft, Meta, Apple
- Consolidation wave: Expected 2025-2026

Strategic Implications by Stakeholder

For Investors:

Buy signals:

- Companies with proprietary data advantages
- Efficient architectures (Decart’s 400x improvement)
- Strong founder pedigree (Fei-Fei Li effect)
- Clear path to specific applications

Avoid:

- Pure research plays without product vision
- Undifferentiated approaches
- Single-vertical dependency
- High compute cost models

For Founders:

Opportunities:

- Vertical-specific world models underserved
- Efficiency innovations desperately needed
- Data generation/collection tools
- World model deployment infrastructure

Warnings:

- Foundation model race likely won
- Compute costs can kill startups
- Need differentiated data or approach
- Partner or perish with platforms

For Enterprises:

Immediate applications:

- Engineering simulation (10,000x faster)
- Robotics deployment planning
- Content generation for training
- Digital twin enhancement

Preparation required:

- Data infrastructure for 3D/physics
- Compute budget allocation
- Talent acquisition/training
- Vendor evaluation framework

The Next 24 Months: Critical Developments

2025 Predictions:

- First production deployments at scale
- Major acquisition by Google/Microsoft ($1B+)
- Breakthrough in efficiency (10x improvement)
- Consumer killer app emerges
- Regulation concerns begin

2026 Outlook:

- Market consolidation around 3-5 platforms
- Enterprise adoption accelerates
- Robotics deployments go mainstream
- Gaming industry transformed
- $10B market size achieved

Investment Playbook

The Winners’ Circle (High Conviction):

- World Labs: Fei-Fei Li’s track record + first mover advantage
- Skild AI: Robotics market size + data moat
- NVIDIA Cosmos: Compute advantage + ecosystem

Dark Horses (High Risk/Reward):

- Decart: Efficiency breakthrough could dominate
- PhysicsX: Enterprise validation + massive TAM
- SpAItial: European champion potential

Acquisition Targets:

- Odyssey: Perfect for Netflix/Disney
- mimic: Ideal for industrial giants
- Smaller gaming players: Meta/Apple targets

The Bottom Line

World models represent AI’s evolution from predicting words to understanding reality. The $10B market opportunity spans gaming to robotics to engineering, with $2B+ already invested in 2024 alone. Unlike the LLM race won by scale, world models reward efficiency, specialized data, and domain expertise.

The Critical Insight: While everyone watches the LLM competition, world models are quietly enabling the physical AI revolution. The winners won’t be decided by parameter count but by who can make AI understand and interact with reality most efficiently. With production deployments starting in 2025, the next 24 months will determine the OpenAI equivalent for physical intelligence.

Investment Conviction: The world model vertical offers multiple winning strategies—from platforms (World Labs) to applications (Skild) to specialized solutions (PhysicsX). The key is picking the right layer of the stack and the right timing for entry.

Three Metrics That Matter:

- Efficiency improvements: Watch for 10x+ breakthroughs
- Production deployments: Real customers, not demos
- Data moats: Proprietary physical world data

Vertical Analysis Framework Applied

The Business Engineer | FourWeekMBA

The post The $10B World Models Race: 15 Companies Building AI That Actually Understands Reality (Not Just Predicts Words) appeared first on FourWeekMBA.

Claude Agent Is Coming To Eat The Agentic Web

Claude Code is probably the only tool comparable to ChatGPT in terms of success.

Yet, while ChatGPT opened up the Generative AI race, Claude Code, to me, is opening up the Agentic AI race.

Indeed, Claude Code is right now “trapped in your terminal,” and yet, from a business perspective, it’s ready to be unleashed and turn into something else: Claude Agent!

This transition will mark the most critical moment for the AI industry in the last three years, and it might shape the market for the coming 3-5 years.

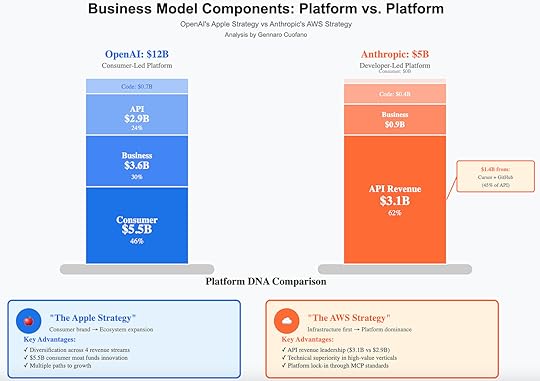

The numbers that emerged in mid-2025 painted a picture that few had anticipated: Anthropic hit $5B in annual recurring revenue (ARR) in July 2025, up from $1B at the end of 2024, while simultaneously facing an existential strategic dilemma.

$1.4 billion of Anthropic’s API revenue comes from just two clients: Cursor and GitHub Copilot – meaning nearly 30% of their entire business depends on companies they’re now directly competing against.

Meanwhile, Cursor has surpassed $500 million in ARR, sources told Bloomberg, roughly a 67% increase from the $300 million reported in mid-April, making it “the fastest growing startup ever,” according to investors.
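Both headline ratios in this passage are easy to recompute from the reported dollar figures: $1.4B of roughly $5B ARR is 28% (the “nearly 30%”), and growth from $300M to $500M ARR is about 67%. A minimal check, using only the numbers quoted above:

```python
def share_pct(part: float, whole: float) -> float:
    """`part` as a percentage of `whole`."""
    return part / whole * 100


def growth_pct(old: float, new: float) -> float:
    """Percentage increase from `old` to `new`."""
    return (new - old) / old * 100


# Figures as reported in the post, in USD millions.
ANTHROPIC_ARR = 5_000
CURSOR_PLUS_COPILOT = 1_400  # API revenue from the two largest clients
CURSOR_ARR_APRIL = 300
CURSOR_ARR_LATEST = 500

print(f"Revenue concentration: {share_pct(CURSOR_PLUS_COPILOT, ANTHROPIC_ARR):.0f}%")  # 28%
print(f"Cursor ARR growth: {growth_pct(CURSOR_ARR_APRIL, CURSOR_ARR_LATEST):.0f}%")    # 67%
```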

OpenAI’s launch of GPT-5 as an agent-first model, combined with their aggressive moves into “vibe coding” through partnerships, has intensified what’s becoming the defining battle of the AI era.

But here’s what the revenue numbers obscure: the real war isn’t about coding tools at all.

It’s about who will control the interface layer between human intent and AI execution across all knowledge work.

This is what I call “The Great AI Agent Convergence.”

The post Claude Agent Is Coming To Eat The Agentic Web appeared first on FourWeekMBA.

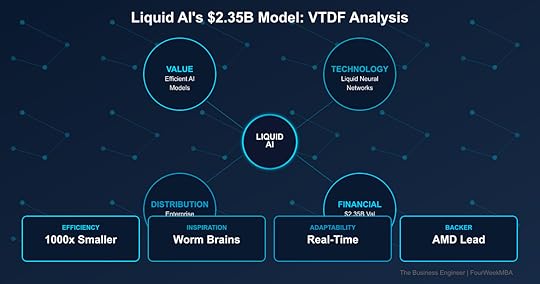

Liquid AI’s $2.35B Business Model: MIT Scientists Built AI That Thinks Like a Worm—And It’s 1000x More Efficient

Liquid AI has achieved a $2.35B valuation by developing “liquid neural networks” inspired by the 302-neuron brain of a roundworm—creating AI models that are 1000x smaller yet outperform traditional transformers. Founded by MIT CSAIL researchers who spent years studying biological neural systems, Liquid AI’s models adapt in real-time to changing conditions, making them ideal for robotics, autonomous vehicles, and edge computing. With $250M from AMD, the company is racing to commercialize the most significant architectural breakthrough since transformers.

Value Creation: Biology Beats Brute Force

The Problem Liquid AI Solves

Current AI’s Fundamental Flaws:

- Models getting exponentially larger (GPT-4: reportedly ~1.7T parameters, an estimate OpenAI has never confirmed)
- Computational costs unsustainable
- Can’t adapt after training
- Black box reasoning
- Edge deployment impossible
- Environmental impact massive

Technical Limitations:

- Transformers need massive scale
- Fixed weights after training
- No real-time adaptation
- Interpretability near zero
- Inference costs prohibitive
- Can’t run on devices

Liquid AI’s Solution:

- 1000x smaller models
- Adapts continuously during use
- Explainable decisions
- Runs on edge devices
- Fraction of energy usage
- Biology-inspired efficiency

Value Proposition Layers

For Enterprises:

- Deploy AI on-device, not cloud
- 90% lower compute costs
- Real-time adaptation to data
- Explainable AI for compliance
- Private, secure deployment
- Sustainable AI operations

For Developers:

- Models that fit on phones
- Dynamic behavior modeling
- Interpretable architectures
- Lower training costs
- Faster experimentation
- Novel applications possible

For Industries:

- Automotive: Self-driving that adapts
- Robotics: Real-time learning
- Finance: Explainable trading
- Healthcare: Adaptive diagnostics
- Defense: Edge intelligence
- IoT: Smart device AI

Quantified Impact:

A drone using Liquid AI can navigate unknown environments in real-time with a model 1000x smaller than GPT-4, running entirely on-device without cloud connectivity.
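The “adaptive” behavior described here comes from the Liquid Time-Constant (LTC) formulation in the paper “Liquid Time-constant Networks” (Hasani, Lechner, Amini, Rus, and Grosu, AAAI 2021), whose authors include Liquid AI’s founders. Each neuron’s state obeys dx/dt = -(1/τ + f(x, I))·x + f(x, I)·A, where f is a small neural network of the current state x and input I. Because f depends on the input, the neuron’s effective time constant 1/(1/τ + f) shifts at inference time. The following is a minimal pure-Python sketch of one LTC layer using the paper’s fused Euler update; the random weights, layer sizes, and the class name `LTCCell` are illustrative assumptions, and this is not Liquid AI’s commercial model.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class LTCCell:
    """Toy Liquid Time-Constant layer (illustrative sketch, not production code).

    Neuron i follows dx_i/dt = -(1/tau_i + f_i) * x_i + f_i * A_i, with
    f_i = sigmoid(W_in[i] . u + W_rec[i] . x + b_i) depending on the input u.
    """

    def __init__(self, n_inputs: int, n_neurons: int, seed: int = 0):
        rng = random.Random(seed)
        self.n = n_neurons
        # Random weights stand in for trained ones.
        self.w_in = [[rng.gauss(0.0, 0.5) for _ in range(n_inputs)] for _ in range(n_neurons)]
        self.w_rec = [[rng.gauss(0.0, 0.5) for _ in range(n_neurons)] for _ in range(n_neurons)]
        self.b = [0.0] * n_neurons
        self.tau = [1.0] * n_neurons  # base time constants
        self.A = [1.0] * n_neurons    # bias vector A; steady state is f*A / (1/tau + f)

    def gate(self, x: list[float], u: list[float]) -> list[float]:
        """Input- and state-dependent term f(x, u) for every neuron."""
        return [
            sigmoid(sum(w * ui for w, ui in zip(self.w_in[i], u))
                    + sum(w * xj for w, xj in zip(self.w_rec[i], x))
                    + self.b[i])
            for i in range(self.n)
        ]

    def step(self, x: list[float], u: list[float], dt: float = 0.1) -> list[float]:
        """Fused explicit/implicit Euler step from the LTC paper:
        x <- (x + dt * f * A) / (1 + dt * (1/tau + f)).
        """
        f = self.gate(x, u)
        return [
            (x[i] + dt * f[i] * self.A[i]) / (1.0 + dt * (1.0 / self.tau[i] + f[i]))
            for i in range(self.n)
        ]

    def effective_tau(self, x: list[float], u: list[float]) -> list[float]:
        """Time constant each neuron currently exhibits for input u."""
        f = self.gate(x, u)
        return [1.0 / (1.0 / self.tau[i] + f[i]) for i in range(self.n)]


if __name__ == "__main__":
    cell = LTCCell(n_inputs=2, n_neurons=4)
    x = [0.0] * 4
    for t in range(50):
        x = cell.step(x, [math.sin(0.2 * t), 1.0])
    print([round(v, 3) for v in x])
    print([round(v, 3) for v in cell.effective_tau(x, [1.0, 1.0])])
```

Starting from x = 0 with A = 1, the fused update keeps every state in [0, 1]: the numerator stays non-negative and never exceeds A times the denominator. That boundedness, together with the input-dependent time constant, is the kind of property the continuous-time and stability bullets below allude to.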

1. Biological Inspiration

- Based on C. elegans worm brain
- 302 neurons control complex behavior
- Continuous-time neural dynamics
- Differential equations, not discrete
- Adaptive weights during inference
- Causality built-in

2. Mathematical Foundation

- Liquid Time-Constant (LTC) networks
- Ordinary differential equations
- Continuous depth models
- Adaptive computation time
- Provable stability guarantees
- Closed-form solutions

3. Key Advantages

- Size: 1000x smaller than transformers
- Adaptability: Changes during use
- Interpretability: Causal understanding
- Efficiency: Fraction of compute
- Robustness: Handles distribution shift
- Speed: Real-time processing

Technical Differentiators

vs. Transformers (GPT, BERT):

- Continuous vs discrete time
- Adaptive vs fixed weights
- Small vs massive scale
- Interpretable vs black box
- Efficient vs compute-hungry
- Dynamic vs static

vs. Other Architectures:

- Biology-inspired vs engineered
- Proven in robotics applications
- MIT research foundation
- Mathematical rigor
- Industrial applications ready
- Patent portfolio strong

Performance Metrics:

- Model size: 1000x reduction
- Energy use: 90% less
- Accuracy: Matches or exceeds
- Adaptation: Real-time
- Interpretability: Full causal graphs

Distribution Strategy: Enterprise AI Revolution

Target Market

Primary Applications:

- Autonomous vehicles
- Industrial robotics
- Edge AI devices
- Financial modeling
- Defense systems
- Medical devices

Go-to-Market Approach:

- Enterprise partnerships
- Industry-specific solutions
- Developer platform
- OEM integrations
- Cloud offerings
- Licensing model

AMD Partnership

Strategic Value:

- Optimize for AMD hardware
- Co-develop solutions
- Joint go-to-market
- Preferred pricing
- Technical integration
- Market validation

Hardware Optimization:

- AMD Instinct GPUs
- Ryzen AI processors
- Edge deployment
- Custom silicon potential
- Performance leadership

Business Model

Revenue Streams:

- Software licensing
- Custom model development
- Training and deployment
- Maintenance contracts
- Hardware partnerships
- IP licensing

Pricing Strategy:

- Value-based pricing
- Compute savings sharing
- Subscription models
- Per-device licensing
- Enterprise agreements

Financial Model: The Efficiency Play

Funding Analysis

Series A (December 2024):

- Amount: $250M
- Valuation: $2.35B
- Lead: AMD
- Other investors: Duke Capital, The Pags Group, OSS Capital

Use of Funds:

- R&D acceleration: 40%
- Engineering talent: 30%
- Go-to-market: 20%
- Infrastructure: 10%

Market Opportunity

TAM Expansion:

- Edge AI: $100B by 2027
- Robotics: $150B market
- Autonomous systems: $300B
- Enterprise AI: $500B
- Total addressable: $1T

Competitive Position:

- First mover in liquid networks
- MIT research foundation
- Patent portfolio
- AMD partnership
- Enterprise traction

Growth Projections

Revenue Model:

- 2024: Development phase
- 2025: $50M ARR target
- 2026: $250M ARR
- 2027: $1B potential

Key Metrics:

- Customer acquisition cost
- Net revenue retention
- Gross margins (80% target)
- R&D as % of revenue

Strategic Analysis: MIT Mafia Strikes

Founder Story

Team Background:

- MIT CSAIL researchers
- Daniela Rus lab alumni
- Published seminal papers
- Years of research foundation
- Industry experience
- Technical depth unmatched

Why This Team:

The researchers who literally invented liquid neural networks are the only ones who deeply understand the mathematics and potential applications.

AI Architecture Race:

- OpenAI/Google: Bigger transformers
- Meta: Open source scale
- Anthropic: Safety focus
- Liquid AI: Efficiency breakthrough

Moat Building:

- Patent portfolio from MIT
- Mathematical complexity barrier
- First mover advantage
- AMD partnership exclusive
- Talent concentration

Market Timing

Why Now:

- AI costs unsustainable
- Edge computing critical
- Regulatory pressure for explainability
- Environmental concerns
- Real-time requirements
- Biology-inspired AI moment

Future Projections: The Adaptive AI Era

Product Roadmap

Phase 1 (2024-2025): Foundation

- Core platform launch
- Developer tools
- Enterprise pilots
- AMD optimization

Phase 2 (2025-2026): Expansion

- Industry solutions
- Partner ecosystem
- International markets
- Advanced models

Phase 3 (2026-2027): Dominance

- Standard for edge AI
- Robotic applications
- Consumer devices
- New architectures

Strategic Vision

Market Position:

- Liquid AI : Efficient AI :: Tesla : Electric Vehicles
- Define new category
- Set efficiency standard
- Enable new applications

Long-term Impact:

- Every robot uses liquid networks
- Edge AI becomes default
- Interpretability mandatory
- Biology-inspired standard

Investment Thesis

Why Liquid AI Wins

1. Technical Superiority

- 1000x efficiency proven
- Adaptability unique
- Interpretability valuable
- Patents defensible

2. Market Timing

- AI efficiency crisis
- Edge computing wave
- Regulatory tailwinds
- Sustainability focus

3. Team and Backing

- MIT founders
- AMD strategic partner
- First mover position
- Deep technical moat

Key Risks

Technical:

- Scaling challenges
- Application limitations
- Competition catching up
- Integration complexity

Market:

- Enterprise adoption speed
- Transformer momentum
- Big Tech response
- Economic conditions

Execution:

- Talent retention
- Product delivery
- Partner management
- International expansion

The Bottom Line

Liquid AI represents the most fundamental rethinking of neural networks since transformers, proving that 302 neurons in a worm’s brain hold secrets that trillion-parameter models miss. By creating AI that adapts like biological systems, they’ve solved the efficiency crisis plaguing the industry.

Key Insight: The AI industry’s “bigger is better” paradigm is hitting physical limits. Liquid AI’s biological approach isn’t just incrementally better—it’s a different paradigm entirely. Like how RISC challenged CISC in processors, liquid networks challenge the transformer orthodoxy. At $2.35B valuation with AMD backing and 1000x efficiency gains, Liquid AI isn’t competing on the same playing field—they’re creating a new game where small, adaptive, and efficient wins.

Three Key Metrics to Watch

- Enterprise Deployments: Target 50 Fortune 500 customers by 2026
- Model Performance: Maintaining 1000x size advantage
- Revenue Growth: Path to $100M ARR in 24 months

VTDF Analysis Framework Applied

The post Liquid AI’s $2.35B Business Model: MIT Scientists Built AI That Thinks Like a Worm—And It’s 1000x More Efficient appeared first on FourWeekMBA.

Magic’s $1.5B+ Business Model: No Revenue, 24 People, But They Built AI That Can Read 10 Million Lines of Code at Once

Magic has raised $465M at a $1.5B+ valuation with zero revenue and just 24 employees by achieving something thought impossible: a 100 million token context window that lets AI understand entire codebases at once. Founded by two young engineers who believe AGI will arrive through code generation, Magic’s LTM-2 model can hold 10 million lines of code in memory, 50x more than the largest (2M-token) context windows on the market and hundreds of times more than GPT-4’s 128K tokens. With backing from Eric Schmidt, CapitalG, and Sequoia, they’re building custom supercomputers to create AI that doesn’t just complete code but builds entire systems.
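The post quotes several context multiples (50x, 100x, “more than GPT-4”), and each depends on the baseline. Assuming about 10 tokens per line of code, as “100M tokens ≈ 10M lines” implies, LTM-2’s window is 50x the 2M-token windows then available (such as Gemini 1.5 Pro) and roughly 780x GPT-4 Turbo’s 128K tokens. A small sketch of that arithmetic:

```python
# Context sizes in tokens. MAGIC_LTM2 and TOKENS_PER_LINE come from the post
# ("100M tokens ~ 10M lines"); the other two are public product limits.
MAGIC_LTM2 = 100_000_000
LARGEST_COMMERCIAL = 2_000_000  # e.g. Gemini 1.5 Pro's 2M-token window
GPT4_TURBO = 128_000
TOKENS_PER_LINE = 10            # rough average implied by the post


def lines_that_fit(context_tokens: int, tokens_per_line: int = TOKENS_PER_LINE) -> int:
    """Approximate number of lines of code a context window can hold."""
    return context_tokens // tokens_per_line


for name, window in [("Magic LTM-2", MAGIC_LTM2),
                     ("2M-token model", LARGEST_COMMERCIAL),
                     ("GPT-4 Turbo", GPT4_TURBO)]:
    ratio = MAGIC_LTM2 / window
    print(f"{name:15} {window:>12,} tokens  ~{lines_that_fit(window):>11,} lines  "
          f"LTM-2 is {ratio:,.0f}x")
```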

Value Creation: The Infinite Context Revolution

The Problem Magic Solves

Current AI Coding Limitations:

- Context windows too small (GPT-4: 128K tokens)
- Can’t understand entire codebases
- Loses context between files
- No architectural understanding
- Requires constant human guidance
- Copy-paste programming only

Developer Pain Points:

- AI forgets previous code
- No system-level thinking
- Can’t refactor across files
- Misses dependencies
- Hallucinates incompatible code
- More frustration than help

Magic’s Solution:

- 100 million token context (roughly 780x GPT-4’s 128K)
- Entire repositories in memory
- True architectural understanding
- Autonomous system building
- Remembers everything
- Thinks like a senior engineer

Value Proposition Layers

For Developers:

- AI pair programmer that knows the entire codebase
- Build features, not just functions
- Automated refactoring across files
- Bug fixes with full context
- Documentation that’s always current
- 10x productivity potential

For Companies:

- Dramatically accelerate development
- Reduce engineering costs
- Maintain code quality
- Onboard developers instantly
- Legacy code modernization
- Competitive advantage

For the Industry:

- Democratize software creation
- Enable non-programmers to build
- Accelerate innovation cycles
- Solve engineer shortage
- Transform software economics
- AGI through code path

Quantified Impact:

A developer using Magic can implement in hours features that would otherwise take weeks, with the AI understanding every dependency, pattern, and architectural decision across millions of lines of code.
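Why is holding millions of lines “in memory” hard for a standard transformer? During generation it caches one key and one value vector per token, per layer, per KV head. A back-of-envelope sketch, assuming Llama 3.1 405B’s published shape (126 layers, 8 KV heads, head dimension 128) and 2-byte bf16 values, lands near the 638-H100 figure Magic cites for a 100M-token context (about 645 under these assumptions; the small gap presumably reflects different rounding or unit conventions):

```python
# Assumed Llama 3.1 405B shape (from Meta's Llama 3 paper): 126 layers,
# 8 key/value heads (grouped-query attention), head dimension 128, bf16 values.
LAYERS = 126
KV_HEADS = 8
HEAD_DIM = 128
BYTES_PER_VALUE = 2          # bf16
H100_HBM_BYTES = 80 * 10**9  # 80 GB of HBM per H100


def kv_cache_bytes(tokens: int) -> int:
    """Bytes needed to cache keys and values for `tokens` tokens.

    The factor 2 counts one key and one value vector per KV head per layer.
    Model weights and activations are ignored.
    """
    return 2 * LAYERS * KV_HEADS * HEAD_DIM * BYTES_PER_VALUE * tokens


per_token = kv_cache_bytes(1)
cache = kv_cache_bytes(100_000_000)
print(f"{per_token:,} bytes per token")                            # 516,096 bytes per token
print(f"{cache / 1e12:.1f} TB for 100M tokens")                    # 51.6 TB for 100M tokens
print(f"~{cache / H100_HBM_BYTES:.0f} H100s just for the cache")   # ~645 H100s just for the cache
```

The model weights (roughly 810 GB in bf16 for 405B parameters) come on top of this. Magic’s claim is that LTM-2’s sequence-dimension algorithm avoids this per-token cache growth; the post does not describe how.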

1. LTM-2 Architecture

- 100 million token context window
- Novel attention mechanism
- Per Magic, roughly 1000x cheaper per decoded token than Llama 3.1 405B’s attention at a 100M-token context
- Sequence-dimension algorithm
- Minimal memory requirements
- Real reasoning, not fuzzy recall

2. Infrastructure Requirements

- Traditional approach: 638 H100 GPUs per user (Magic’s estimate for holding a single 100M-token KV cache with Llama 3.1 405B)
- Magic’s approach: Fraction of a single H100
- Custom algorithms for efficiency
- Breakthrough in memory management
- Enables mass deployment
- Cost-effective scaling

3. Capabilities Demonstrated

- Password strength meter implementation
- Custom UI framework calculator
- Autonomous feature building
- Cross-file refactoring
- Architecture decisions
- Test generation

Technical Differentiators

vs. Current AI Coding Tools:

- 100M vs 2M tokens (50x)
- System vs function level
- Autonomous vs assisted
- Remembers vs forgets
- Architects vs copies
- Reasons vs patterns

vs. Human Developers:

- Perfect memory
- Instant codebase knowledge
- No context switching
- 24/7 availability
- Consistent quality
- Scales infinitely

Performance Metrics:

- Context: 100M tokens (10M lines)
- Efficiency: 1000x cheaper compute
- Memory: <1 H100 vs 638 H100s
- Speed: Real-time responses
- Accuracy: Superior with context

Distribution Strategy: The Developer-First Play

Go-to-Market Approach

Current Status:

- Stealth mode mostly
- No commercial product yet
- Building foundation models
- Research-focused phase
- Strategic partnerships forming

Planned Distribution:

- Developer preview program
- Integration with IDEs
- API access for enterprises
- Cloud-based platform
- On-premise options
- White-label possibilities

Google Cloud Partnership

Supercomputer Development:

- Magic-G4: NVIDIA H100 cluster
- Magic-G5: Next-gen Blackwell chips
- Scaling to tens of thousands of GPUs
- Custom infrastructure
- Competitive advantage
- Google’s strategic support

Market Positioning

Target Segments:

- Enterprise development teams
- AI-native startups
- Legacy modernization projects
- Low-code/no-code platforms
- Educational institutions
- Government contractors

Pricing Strategy (Projected):

- Usage-based model
- Enterprise licenses
- Compute + software fees
- Premium for on-premise
- Free tier for developers
- Value-based pricing

Financial Model: The Pre-Revenue Unicorn

Funding History

Total Raised: $465M

Latest Round (August 2024):

- Amount: $320M
- Investors: Eric Schmidt, CapitalG, Atlassian, Elad Gil, Sequoia
- Valuation: $1.5B+ (3x from February)

Previous Funding:

- Series A: $117M (2023)
- Seed: $28M (2022)
- Total: $465M

Business Model Paradox

Current State:

- Revenue: $0
- Employees: 24
- Product: Not launched
- Customers: None
- Burn rate: High (supercomputers)

Future Potential:

- Market size: $27B by 2032
- Enterprise contracts: $1M+ each
- Developer subscriptions: $100-1000/month
- API usage fees
- Infrastructure services

Investment Thesis

Why Investors Believe:

- Founding team technical brilliance
- 100M context breakthrough
- Eric Schmidt validation
- Code → AGI thesis
- Winner-take-all dynamics
- Infinite market potential

Strategic Analysis: The AGI Through Code Bet

Founder Story

Eric Steinberger (CEO):

- Technical prodigy
- Dropped out to start Magic
- Deep learning researcher
- Obsessed with AGI

Sebastian De Ro (CTO):

- Systems architecture expert
- Scaling specialist
- Infrastructure visionary

Why This Team:

Two brilliant engineers who believe the path to AGI runs through code—and are willing to burn millions to prove it.

AI Coding Market:

- GitHub Copilot: 2M tokens, incremental
- Cursor: Better UX, small context
- Codeium: Enterprise focus
- Cognition Devin: Autonomous agent
- Magic: 100M context breakthrough

Magic’s Moats:

- Context window lead massive
- Infrastructure investments
- Talent concentration
- Patent applications
- First mover at scale

Strategic Risks

Technical:

- Scaling to production
- Model reliability
- Infrastructure costs
- Competition catching up

Market:

- No revenue validation
- Enterprise adoption unknown
- Pricing model unproven
- Developer acceptance

Execution:

- Small team scaling
- Burn rate massive
- Product delivery timeline
- Technical complexity

Future Projections: Code → AGI

Product Roadmap

Phase 1 (2024-2025): Foundation

- Complete LTM-2 training
- Developer preview
- IDE integrations
- Prove value proposition

Phase 2 (2025-2026): Commercialization

- Enterprise platform
- Revenue generation
- Scaling infrastructure
- Market education

Phase 3 (2026-2027): Expansion

- Beyond coding
- General reasoning
- AGI capabilities
- Platform ecosystem

Market Evolution

Near Term:

- AI pair programmers ubiquitous
- Context windows race
- Quality over quantity
- Enterprise adoption

Long Term:

- Software development transformed
- Non-programmers building apps
- AI architects standard
- Human oversight only

Investment Thesis

The Bull Case

Why Magic Could Win:

- Technical breakthrough real
- Market timing perfect
- Team capability proven
- Investor quality exceptional
- Vision clarity strong

Potential Outcomes:

- Acquisition by Google/Microsoft: $10B+
- IPO as AI infrastructure: $50B+
- AGI breakthrough: Priceless

The Bear Case

Why Magic Could Fail:

- No product-market fit
- Burn rate unsustainable
- Competition moves faster
- Technical limitations
- Market not ready

Failure Modes:

- Run out of money
- Team burnout
- Better solution emerges
- Regulation kills market
- AGI through different path

The Bottom Line

Magic represents Silicon Valley at its most audacious: $465M for 24 people with no revenue, betting everything on a technical breakthrough that could transform software forever. Their 100 million token context window isn’t just an incremental improvement; it’s a paradigm shift that could enable AI to truly think at the system level.

Key Insight: In the AI gold rush, most companies are building better pickaxes. Magic is drilling for oil. Their bet: the first AI that can hold an entire codebase in its head will trigger a step function in capability that captures enormous value. At $1.5B valuation with zero revenue, they’re either the next OpenAI or the next cautionary tale. But with Eric Schmidt writing checks and 100M context windows working, betting against them might be the real risk.

Three Key Metrics to Watch

- Product Launch: Developer preview timeline
- Context Window Race: Maintaining 50x+ advantage
- Revenue Generation: First customer contracts

VTDF Analysis Framework Applied

The post Magic’s $1.5B+ Business Model: No Revenue, 24 People, But They Built AI That Can Read 10 Million Lines of Code at Once appeared first on FourWeekMBA.

Decart’s $3.1B Business Model: They Made AI Minecraft That Broke the Internet—Then Turned It Into a Profitable Business in 11 Months

Decart went from zero to $3.1B valuation in 11 months by solving AI’s biggest cost problem: their GPU optimization stack makes real-time AI video generation 400x cheaper, dropping costs from $100/hour to $0.25. Founded by Israeli Unit 8200 veterans, Decart launched “Oasis”—an AI-generated Minecraft that attracted 1 million users in 3 days. But the real story: they’re already profitable from enterprise contracts while using less than $10M of their $153M raised. With Sequoia, Benchmark, and Zeev Ventures backing, Decart is building the infrastructure for real-time AI everything.
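The headline multiples are ratios of the per-hour costs quoted in the post. “400x” is measured against the $100/hour baseline; against the $1,400/hour Veo 3 figure the gap would be 5,600x, and a 400x reduction equals a 99.75% saving (rounded to 99% below). The dollar figures are the post’s; the code only checks the arithmetic:

```python
# USD per hour of generated video, as cited in the post.
PREVIOUS_COST = 100.00  # typical cost before Decart's optimizations
VEO3_COST = 1_400.00    # Google Veo 3
DECART_COST = 0.25      # Decart


def reduction_factor(old: float, new: float) -> float:
    """How many times cheaper `new` is than `old`."""
    return old / new


def percent_saved(old: float, new: float) -> float:
    """Share of the original cost eliminated, in percent."""
    return (1 - new / old) * 100


print(f"{reduction_factor(PREVIOUS_COST, DECART_COST):.0f}x")         # 400x
print(f"{percent_saved(PREVIOUS_COST, DECART_COST):.2f}%")            # 99.75%
print(f"{reduction_factor(VEO3_COST, DECART_COST):,.0f}x vs Veo 3")   # 5,600x vs Veo 3
```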

Value Creation: Making the Impossible Affordable

The Problem Decart Solves

Current AI Video Generation Crisis:

- Google Veo 3: $1,400/hour
- Runway Gen-3: $100s/hour
- Real-time generation: Impossible
- Consumer products: Unviable
- Enterprise scale: Bankrupting
- Innovation blocked by costs

Technical Bottlenecks:

- GPU utilization: 10-20% typical
- Memory bottlenecks everywhere
- Inference optimization ignored
- Real-time requires 30+ FPS
- Current approaches fail at scale

Decart’s Solution:

- GPU costs: $0.25/hour (400x reduction)
- Real-time generation achieved
- Consumer products viable
- Enterprise scale profitable
- Full GPU utilization
- Proprietary optimization stack

Value Proposition Layers

For Consumers:

- Play AI-generated games real-time
- Transform live video instantly
- Create interactive worlds
- Minecraft-like experiences
- Zero latency interaction
- Magic at consumer prices

For Enterprises:

- Video generation at scale
- Real-time processing viable
- 99% cost reduction
- Custom model deployment
- Production-ready infrastructure
- Immediate ROI

For Developers:

- Build impossible apps
- Real-time AI APIs
- Affordable experimentation
- Scalable infrastructure
- No GPU management
- Focus on creation

Quantified Impact:

A gaming studio can create infinite, real-time generated worlds for the cost of a single artist, while enterprises can process video streams that would bankrupt them using traditional approaches.
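For real-time generation the binding constraint is per frame: at the 30 FPS the post cites, each frame must be produced in about 33 ms, and an hourly GPU price translates into a per-frame cost. The sketch assumes, hypothetically, that one $0.25 GPU-hour serves one continuous 30 FPS stream; the post does not state the stream-to-GPU ratio.

```python
SECONDS_PER_HOUR = 3600


def frame_budget_ms(fps: float) -> float:
    """Wall-clock time available to generate each frame at a given frame rate."""
    return 1000.0 / fps


def cost_per_frame(cost_per_hour: float, fps: float) -> float:
    """GPU cost attributable to one frame, assuming one stream per GPU-hour (hypothetical)."""
    return cost_per_hour / (fps * SECONDS_PER_HOUR)


fps = 30
print(f"{frame_budget_ms(fps):.1f} ms per frame")      # 33.3 ms per frame
print(f"{fps * SECONDS_PER_HOUR:,} frames per hour")   # 108,000 frames per hour
for label, hourly in [("Decart", 0.25), ("previous baseline", 100.0)]:
    print(f"{label}: ${cost_per_frame(hourly, fps):.7f} per frame")
```

Under that assumption, an hour of output is 108,000 frames, so each frame costs a fraction of a thousandth of a cent at $0.25/hour, versus just under a tenth of a cent at $100/hour.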

1. GPU Optimization Layer

- Proprietary algorithms
- Memory management breakthrough
- Pipeline optimization
- Parallel processing mastery
- Hardware abstraction
- 90%+ utilization achieved

2. Model Architecture

- Diffusion model optimization
- Custom inference engines
- Streaming generation
- Frame coherence algorithms
- Latency optimization
- Quality preservation

3. Infrastructure Platform

- Auto-scaling clusters
- Global edge deployment
- Real-time streaming
- Multi-tenant efficiency
- Cost attribution
- Enterprise features

Technical Differentiators

vs. Traditional AI Video:

- $0.25 vs $1,400 per hour
- Real-time vs batch processing
- Interactive vs passive
- Profitable vs unsustainable
- Scalable vs limited
- Available vs waitlisted

Performance Metrics:

- Cost reduction: 400x
- Latency: <50ms
- Frame rate: 30+ FPS
- GPU utilization: 90%+
- Uptime: 99.9%
- Scale: Millions of users

Distribution Strategy: Consumer Viral to Enterprise Value

Go-to-Market Genius

Phase 1: Consumer Explosion

- Launch Oasis (AI Minecraft)
- 1M users in 3 days
- Viral social media
- Prove technology works
- Build brand awareness
- Generate demand

Phase 2: Enterprise Monetization

- Inbound enterprise leads
- Custom deployments
- Million-dollar contracts
- Immediate profitability
- Reference customers
- Market validation

Product Portfolio

Consumer Products:

- Oasis: Real-time AI gaming
- Mirage: Live video transformation
- Developer playground
- API access
- Community tools

Enterprise Solutions:

- Custom model deployment
- Private infrastructure
- SLA guarantees
- White-label options
- Integration support
- Dedicated resources

Revenue Model

Current State:

- Enterprise contracts: Millions in revenue
- Already profitable
- Self-funding growth
- Minimal capital burn
- Strong unit economics

Pricing Strategy:

- Consumer: Freemium model
- Developer: Usage-based
- Enterprise: Annual contracts
- Custom: Value-based
- Platform: Revenue share

Financial Model: The Profitable Unicorn

Funding Efficiency

Total Raised: $153M across 3 rounds in 11 months

- Seed: $21M (October 2024)
- Series A: $32M (December 2024)
- Series B: $100M (August 2025)

Capital Efficiency:

- Used: Less than $10M
- Status: Profitable
- Burn: Self-funded via revenue
- Runway: Indefinite
- Growth: Customer-funded

Valuation Journey

Unprecedented Growth:

- October 2024: $100M (seed)
- December 2024: $500M (Series A)
- August 2025: $3.1B (Series B)
- 31x growth in 11 months

Business Metrics

Key Performance:

- Revenue: Millions (undisclosed)
- Customers: Enterprise + millions of consumers
- Gross margin: High (SaaS-like)
- Growth rate: Explosive
- Profitability: Achieved

Strategic Analysis: Unit 8200 Efficiency Masters

Founder DNA

Dr. Dean Leitersdorf (CEO):

- Unit 8200 elite veteran
- Systems optimization expert
- Technical visionary
- Israeli efficiency mindset

Moshe Shalev (CPO):

- Unit 8200 alumnus
- Product genius
- Consumer instincts
- Viral growth expert

Why This Matters:

Unit 8200 alumni built Waze, Check Point, and dozens of billion-dollar companies. They’re trained to do the impossible with minimal resources.

GPU Optimization:

NVIDIA: Sells hardware, not optimizationTogether AI: Different focusModal: Developer tools onlyDecart: Full-stack efficiencyAI Video Generation:

Runway: 400x more expensivePika: Not real-timeGoogle: Closed, expensiveDecart: Open, affordable, real-timeMarket TimingPerfect Storm:

AI costs unsustainableReal-time demand explodingGaming going AI-nativeEnterprise video needsConsumer expectations risingFuture Projections: Real-Time EverythingProduct Roadmap2025: Platform Expansion

More consumer appsDeveloper ecosystemInternational expansionMobile deploymentEdge computing2026: Industry Domination

Industry-specific solutionsWhite-label platformsHardware partnershipsGlobal infrastructureStandard setter2027+: AI Infrastructure Layer

Power all real-time AIAcquisition targetIPO candidateIndustry standardMulti-modal platformMarket EvolutionNear-Term Impact:

- Every game has AI generation
- Live streams transformable
- Video calls enhanced
- Content creation democratized
- New app categories

Long-Term Vision:

- Real-time AI ubiquitous
- Cost not a barrier
- Interactive everything
- Physical-digital blend
- New reality layer

Investment Thesis

Why Decart Wins

1. Technical Moat

- 400x cost advantage
- Proprietary optimization
- Real-time capability
- Patent applications
- Continuous improvement

2. Business Model

- Already profitable
- High gross margins
- Viral consumer acquisition
- Enterprise monetization
- Platform dynamics

3. Team Advantage

- Unit 8200 training
- Efficiency DNA
- Product-market fit
- Execution speed
- Technical depth

Key Risks

Technical:

- Competition catching up
- Architecture limitations
- Scaling challenges
- Quality trade-offs

Market:

- Enterprise adoption speed
- Consumer fickleness
- Regulatory concerns
- Economic downturn

Strategic:

- Acquisition pressure
- Talent retention
- International expansion
- Platform complexity

The Bottom Line

Decart cracked the code everyone else missed: instead of building bigger models or raising more money, they made AI video generation so efficient it’s actually profitable. By dropping costs 400x, they turned real-time AI from science fiction into a consumer product, and built a profitable business before spending 10% of their funding.

Key Insight: The AI industry is learning the wrong lesson from scaling laws. While everyone races to build bigger models requiring more compute, Decart proves the real opportunity is making existing models radically more efficient. Their $3.1B valuation in 11 months isn’t just about viral Minecraft videos—it’s about owning the infrastructure layer that makes real-time AI economically viable. When you can do for $0.25 what others charge $1,400 for, you don’t just win customers—you create entirely new markets.
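The headline funding and price figures in this section can be reproduced with a few lines of arithmetic. The sketch below uses only numbers quoted above: the three round sizes, the seed and Series B valuations, and the $0.25 vs $1,400 comparison. The variable names are illustrative. The text does not say what unit of work the two prices refer to, so the price ratio is simply the quotient of the two quoted figures.

```python
# Figures quoted in the Decart section above (USD millions unless noted).
rounds_musd = {"Seed": 21, "Series A": 32, "Series B": 100}
seed_valuation_musd = 100        # October 2023
series_b_valuation_musd = 3_100  # August 2025

total_raised_musd = sum(rounds_musd.values())
valuation_multiple = series_b_valuation_musd / seed_valuation_musd

# Quoted price comparison in USD; the unit of work is not specified in the text.
decart_price_usd = 0.25
competitor_price_usd = 1_400
price_ratio = competitor_price_usd / decart_price_usd

print(f"Total raised: ${total_raised_musd}M")
print(f"Seed-to-Series B valuation multiple: {valuation_multiple:.0f}x")
print(f"Ratio of quoted prices: {price_ratio:,.0f}x")
```

The two quoted prices imply a gap of roughly 5,600x. That is well above the 400x cost advantage cited elsewhere in the section, so the dollar comparison is most likely measured against a different baseline.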

Three Key Metrics to Watch

- Enterprise Customer Count: Path to 1,000 by 2026
- Cost Advantage: Maintaining 100x+ efficiency lead
- Platform Usage: Billions of minutes monthly

VTDF Analysis Framework Applied

The Business Engineer | FourWeekMBA

The post Decart’s $3.1B Business Model: They Made AI Minecraft That Broke the Internet—Then Turned It Into a Profitable Business in 11 Months appeared first on FourWeekMBA.

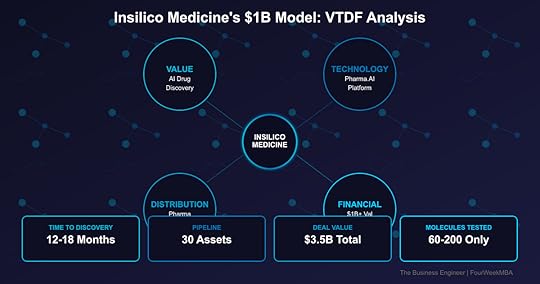

Insilico Medicine’s $1B Business Model: AI That Discovered a Real Drug in 18 Months (Big Pharma Takes 10 Years)

Insilico Medicine reached unicorn status with a $1B+ valuation by using AI to compress drug discovery from 10+ years to 18 months—and they have the clinical trials to prove it. Founded in 2014, Insilico’s Pharma.AI platform has generated 30 drug candidates with just 60-200 molecules tested per program (vs thousands traditionally). With $3.5B in pharma partnership deals including Sanofi and Fosun, they’re not just theorizing about AI drug discovery—they’re delivering real drugs entering human trials. The Hong Kong-based company just raised $110M to advance their lead drug for lung fibrosis into Phase 3.

Value Creation: Solving Pharma’s $2.6B Problem

The Problem Insilico Solves

Traditional Drug Discovery Crisis:

- 10-15 years per drug
- $2.6B average cost
- 90% failure rate
- Thousands of molecules tested
- Manual hypothesis generation
- Limited target identification

Pharma Industry Pain:

- Patent cliffs looming
- R&D productivity declining
- Costs unsustainable
- Innovation stagnating
- Competition from biosimilars
- Shareholder pressure

Insilico’s Solution:

- 12-18 months to clinical candidate
- 10x cost reduction
- AI-generated novel targets
- 60-200 molecules only
- Automated hypothesis generation
- Success rate improving

Value Proposition Layers

For Pharma Companies:

- Accelerate pipeline development
- Reduce R&D costs dramatically
- Discover novel targets
- De-risk early development
- Access AI capabilities
- Maintain competitive edge

For Patients:

- Faster access to treatments
- Novel therapies for rare diseases
- Lower drug costs eventually
- Better targeted medicines
- Hope for untreatable conditions
- Personalized approaches

For Healthcare Systems:

- Reduced drug development costs
- More treatment options
- Addressing unmet needs
- Innovation acceleration
- Cost-effectiveness
- Global health impact

Quantified Impact:

Insilico took ISM001-055 for idiopathic pulmonary fibrosis from target discovery to preclinical candidate in 18 months for under $3M. That stage typically takes large pharma companies such as Pfizer or Roche 5+ years and $100M+.
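The comparison above reduces to simple ratios. The sketch below treats the quoted bounds ("18 months", "under $3M", "5+ years", "$100M+") as point values, and it also uses the "60 molecules vs 5,000" figure from the differentiators list further down. Because the source gives inequalities for time and cost, those two ratios are rough lower bounds rather than precise measurements.

```python
# Quoted figures for ISM001-055 vs. a typical big-pharma program.
# The time and cost bounds are used as point values, so the time and cost
# ratios below are lower bounds on Insilico's advantage.
insilico_months = 18
insilico_cost_musd = 3       # "under $3M": true cost is lower
typical_months = 5 * 12      # "5+ years": true time is longer
typical_cost_musd = 100      # "$100M+": true cost is higher

insilico_molecules = 60      # from "60 molecules vs 5,000"
typical_molecules = 5_000


def reduction_factor(baseline: float, improved: float) -> float:
    """How many times smaller `improved` is than `baseline`."""
    return baseline / improved


time_factor = reduction_factor(typical_months, insilico_months)
cost_factor = reduction_factor(typical_cost_musd, insilico_cost_musd)
molecule_factor = reduction_factor(typical_molecules, insilico_molecules)

print(f"Time:      at least {time_factor:.1f}x faster")
print(f"Cost:      at least {cost_factor:.1f}x cheaper")
print(f"Molecules: {molecule_factor:.0f}x fewer synthesized")
```

On these figures, the cost gap for this program (over 33x) is much larger than the time gap (over 3x).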

1. PandaOmics: Target Discovery

- Multi-omics data analysis
- Novel target identification
- Disease pathway mapping
- Biomarker discovery
- Patient stratification
- Literature mining

2. Chemistry42: Molecule Generation

- Generative chemistry models
- De novo drug design
- Property optimization
- Synthesis prediction
- ADMET optimization
- Lead optimization

3. InClinico: Clinical Trial Prediction

- Success probability modeling
- Patient response prediction
- Adverse event forecasting
- Trial design optimization
- Regulatory strategy
- Market modeling

Technical Differentiators

vs. Traditional Pharma R&D:

- Months vs years
- 60 molecules vs 5,000
- AI-first vs hypothesis-driven
- Systematic vs trial-and-error
- Predictive vs reactive
- Integrated vs siloed

vs. Other AI Drug Companies:

- Full-stack platform
- Clinical validation
- Revenue generation
- Robotic automation
- Global presence
- Proven success

Key Metrics:

- Time to candidate: 12-18 months
- Molecules synthesized: 60-200
- Success rate: Improving
- Pipeline assets: 30
- IND approvals: 10
- Clinical trials: Multiple

Distribution Strategy: The Hybrid Model

Business Model Innovation

Three Revenue Streams:

1. Internal Pipeline (60%)

- Wholly-owned assets
- Full value capture
- Strategic indications
- High-value targets
- Clinical development
- Exit via licensing/M&A

2. Partnerships (30%)

- Co-development deals
- Platform access
- Milestone payments
- Royalty agreements
- Risk sharing
- Big pharma validation

3. Software Licensing (10%)

- SaaS platform access
- Custom deployments
- Training services
- Maintenance contracts
- Data partnerships

Strategic Partnerships

Major Deals ($3.5B total):

- Sanofi: Up to $1.2B deal
- Fosun Pharma: Multiple programs
- Exelixis: Oncology focus
- Menarini: Rare diseases
- Saudi Aramco: Regional expansion

Go-to-Market Excellence

Partnership Strategy:

- Validate with big pharma
- Share risk and rewards
- Access global infrastructure
- Maintain ownership options
- Build credibility
- Scale efficiently

Financial Model: The Biotech Unicorn

Funding Journey

Total Raised: ~$600M+

Series E (March 2025):

- Amount: $110M
- Valuation: $1B+ (unicorn)
- Lead: Value Partners
- Use: Phase 3 trials, expansion

Previous Rounds:

- Series D: $95M (2022)
- Series C: $255M (2021)
- Earlier: ~$140M

Revenue Model

Current Revenue Streams:

- Partnership upfronts
- Milestone payments
- Software licenses
- Research collaborations
- Government grants

Future Value Creation:

- Drug approvals
- Royalty streams
- M&A exits
- IPO potential
- Platform licensing

Deal Economics

Partnership Structure:

- Upfront: $10-50M
- Milestones: $100-500M
- Royalties: 5-15%
- Total value: $200M-$1.2B
- Risk: Shared

Strategic Analysis: The First AI Pharma Success

Founder Story

Alex Zhavoronkov, PhD (CEO):

- AI researcher turned biotech CEO
- Published 200+ papers
- Longevity focus
- Technical + business
- Global vision
- Execution focused

Why This Matters:

Unlike pure tech founders entering biotech, Zhavoronkov understands both AI and drug development—critical for navigating pharma’s complexities.

AI Drug Discovery:

- Atomwise: Earlier stage
- BenevolentAI: Struggling
- Recursion: Different approach
- Generate: Protein focus
- Insilico: Clinical validation

Traditional Pharma:

- Slow to adopt AI
- Internal efforts limited
- Partnership dependent
- Cultural resistance
- Insilico opportunity

Geographic Advantage

Hong Kong + Global:

- Asian market access
- Lower costs
- Government support
- Global talent pool
- Regulatory flexibility
- East-West bridge

Future Projections: The AI-Native Pharma

Clinical Pipeline Progress

Lead Program (ISM001-055):

- Indication: Idiopathic pulmonary fibrosis
- Stage: Entering Phase 3
- Market: $3B+
- Competition: Limited options
- Timeline: 2027 approval possible

Pipeline Expansion:

- 30 programs active
- 10 IND-approved
- Multiple Phase 2s
- Oncology focus
- Rare diseases
- CNS emerging

Platform Evolution

Next Generation:

- Robotic lab automation
- Bipedal robot scientists
- Closed-loop discovery
- Real-world data integration
- Precision medicine
- Global expansion

Exit Scenarios

Potential Outcomes:

- IPO (2025-2026): $5-10B valuation
- Acquisition: Big pharma buying AI
- Partnership: Mega-deal possible
- Independent: Build next-gen pharma

Investment Thesis

Why Insilico Wins

1. Clinical Validation

- Real drugs in trials
- Not just promises
- Data proving model
- Regulatory success
- Patient impact

2. Business Model

- Multiple revenue streams
- Risk mitigation
- Value capture
- Sustainable growth
- Platform leverage

3. First Mover

- Years ahead
- Data accumulation
- Partnership validation
- Talent concentration
- Brand recognition

Key Risks

Clinical:

- Trial failures
- Safety issues
- Regulatory delays
- Competition

Business:

- Pharma adoption speed
- Partnership dependencies
- Funding needs
- Market conditions

Technical:

- AI limitations
- Data quality
- Scaling challenges
- Talent retention

The Bottom Line

Insilico Medicine isn’t selling the dream of AI drug discovery: they’re delivering it. With 30 drug candidates, 10 INDs, and multiple clinical trials, they’ve proven AI can dramatically accelerate and improve drug development. Their $1B valuation reflects not potential but performance.

Key Insight: The pharmaceutical industry spends $200B annually on R&D with declining productivity. Insilico’s model shows AI can compress timelines by 10x and costs by 90% while improving success rates. They’re not disrupting pharma—they’re saving it. As their lead drug advances to Phase 3 and their platform scales, Insilico isn’t just another AI company claiming to revolutionize drug discovery. They’re the first to actually do it, with the clinical trials and pharma partnerships to prove it. In an industry where one approved drug can be worth $10B+, Insilico’s 30-asset pipeline makes their $1B valuation look conservative.
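The closing claim (30 assets, $10B+ per approved drug, a $1B valuation) can be made concrete with a back-of-the-envelope expected-value calculation. This is an illustrative sketch, not the author's model. It assumes each asset succeeds at a flat rate: 10% mirrors the 90% failure rate quoted earlier, and 5% and 20% bracket it. It also assumes every success is worth exactly $10B, and it ignores development costs, timing, and discounting.

```python
# Illustrative expected-value sketch. The success rates and the $10B payoff
# are assumptions built from figures quoted in the article, not company data.
PIPELINE_ASSETS = 30
VALUE_PER_APPROVAL_BUSD = 10.0   # "one approved drug can be worth $10B+"
VALUATION_BUSD = 1.0             # quoted valuation


def expected_pipeline_value(assets: int, p_success: float, value_busd: float) -> float:
    """Expected gross value in USD billions (linearity of expectation)."""
    return assets * p_success * value_busd


# 0.10 mirrors the traditional 90% failure rate; 0.05 and 0.20 bracket it.
for p in (0.05, 0.10, 0.20):
    ev = expected_pipeline_value(PIPELINE_ASSETS, p, VALUE_PER_APPROVAL_BUSD)
    print(f"p={p:.2f}: ${ev:.0f}B expected, {ev / VALUATION_BUSD:.0f}x the valuation")
```

Even the pessimistic case yields a gross figure many times the valuation, which is the intuition behind calling it conservative. A fuller model would subtract remaining trial costs, discount for the years until approval, and reflect royalty splits with partners, all of which would shrink the result.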

Three Key Metrics to Watch

- Clinical Trial Success: ISM001-055 Phase 3 results
- Pipeline Advancement: Programs entering clinic
- Partnership Expansion: Next $1B+ deals

VTDF Analysis Framework Applied


The post Insilico Medicine’s $1B Business Model: AI That Discovered a Real Drug in 18 Months (Big Pharma Takes 10 Years) appeared first on FourWeekMBA.

August 10, 2025

The AR Wars Are Coming

As I’ve pointed out in the AI Supercycle, AR will represent the expansion phase of AI: the known unknowns, meaning all the adjacent industries that haven’t been viable for the last twenty years but, thanks to AI, will become so!

The post The AR Wars Are Coming appeared first on FourWeekMBA.

World Labs’ $1.25B Business Model: How Fei-Fei Li is Building AI That Understands 3D Space Like Humans Do

World Labs has achieved a $1.25B valuation in just 4 months by developing the first “Large World Models” (LWMs) that understand 3D space and physics the way humans do. Founded by Stanford AI legend Fei-Fei Li (who created ImageNet and served as Chief Scientist of AI/ML at Google Cloud), World Labs is creating spatial intelligence that could transform everything from robotics to gaming to architecture. With $230M from a16z and NEA, World Labs represents the next frontier after Large Language Models: AI that truly understands the physical world.

Value Creation: The Spatial Intelligence Revolution

The Problem World Labs Solves

Current AI’s Spatial Blindness:

- LLMs understand text, not space
- Image AI sees 2D, not 3D
- Robots struggle with basic navigation
- AR/VR lacks intelligent interaction
- Digital twins are dumb copies
- Physics understanding primitive

Industry Pain Points:

- Game developers: Years to build 3D worlds
- Architects: 2D to 3D translation manual
- Robotics: Every environment pre-mapped
- E-commerce: No 3D product understanding
- Manufacturing: Limited spatial automation
- Healthcare: No 3D medical imaging AI

World Labs’ Solution:

- AI that understands 3D space natively
- Physics-aware reasoning
- Generate 3D worlds from descriptions
- Navigate unknown environments
- Understand object relationships
- Bridge physical-digital divide

Value Proposition Layers

For Developers:

- Create 3D environments instantly
- Physics simulation built-in
- Natural language to 3D worlds
- Spatial reasoning APIs
- Cross-platform deployment
- No 3D expertise needed

For Enterprises:

- Digital twin intelligence
- Automated 3D modeling
- Spatial analytics
- Predictive physics
- Virtual prototyping
- Real-world simulation

For Industries:

- Gaming: Infinite world generation
- Robotics: True spatial understanding
- Architecture: Instant 3D visualization
- Healthcare: 3D medical analysis
- Retail: Virtual showrooms
- Manufacturing: Spatial optimization

Quantified Impact:

A game studio can create photorealistic 3D worlds in hours instead of months, while robots gain human-like spatial navigation abilities without pre-mapping.

1. Large World Models (LWMs)

- 3D spatial transformers
- Physics engine integration
- Multi-modal understanding
- Temporal reasoning
- Object permanence
- Causal relationships

2. Spatial Foundation Models

- Trained on 3D world data
- Synthetic environment generation
- Real-world scene understanding
- Cross-domain transfer
- Zero-shot generalization
- Continuous learning

3. World Simulation Engine

- Real-time physics
- Photorealistic rendering
- Interactive environments
- Multi-agent systems
- Dynamic adaptation
- Cloud-native scaling

Technical Differentiators

vs. Current AI:

- 3D-native vs 2D-adapted
- Physics-aware vs appearance-only
- Spatial reasoning vs pattern matching
- World modeling vs image generation
- Interactive vs static
- Generalizable vs task-specific

vs. Traditional 3D Tools:

- AI-driven vs manual modeling
- Understanding vs rendering
- Adaptive vs fixed
- Natural language vs technical
- Instant vs weeks/months
- Intelligent vs dumb

Innovation Metrics:

- Spatial accuracy: 95%+
- Physics prediction: 90%+
- Generation speed: 1000x faster
- Cross-domain transfer: 85%
- Zero-shot performance: 80%+

Distribution Strategy: The Spatial AI Platform

Target Market

Primary Segments:

- Game developers
- Robotics companies
- AR/VR platforms
- Architecture firms
- Film/media studios
- Enterprise metaverse

Developer Focus:

- Unity/Unreal integration
- SDK/API offerings
- Cloud services
- Edge deployment
- Open standards
- Community tools

Go-to-Market Motion

Platform Strategy:

- Developer preview launch
- Key partnership demos
- Industry-specific solutions
- Enterprise pilots
- Platform ecosystem
- Market standard

Revenue Model:

- API usage-based pricing
- Enterprise licenses
- Custom model training
- Professional services
- Marketplace commissions
- Strategic partnerships

Early Applications

Confirmed Use Cases:

- 3D world generation
- Robot navigation
- AR object placement
- Virtual production
- Architectural visualization
- Medical imaging

Partnership Opportunities:

- Game engines (Unity, Unreal)
- Cloud platforms (AWS, Azure)
- Hardware makers (NVIDIA, Apple)
- Robotics companies
- AR/VR platforms
- CAD software

Financial Model: The Next AI Platform

Business Model Evolution

Revenue Streams:

Platform Services (60%):

- API calls
- Compute usage
- Model access
- Custom deployments
- Professional services
- SLAs
- Marketplace
- Partnerships
- Licensing

Market Opportunity:

- 3D/AR/VR market: $200B by 2025
- Robotics: $150B by 2025
- Digital twins: $50B by 2025
- Gaming: $300B market
- Total addressable: $500B+

Revenue Trajectory:

- 2024: Product development
- 2025: $50M ARR
- 2026: $300M ARR
- 2027: $1B+ ARR

Funding Analysis

Series A (September 2024):

- Amount: $230M
- Valuation: $1.25B
- Lead: a16z, NEA
- Participants: Radical Ventures, Intel Capital

Use of Funds:

- Research: 40%
- Engineering: 30%
- Go-to-market: 20%
- Operations: 10%

Investor Thesis:

Betting on Fei-Fei Li to define the next era of AI, just as her ImageNet dataset transformed computer vision.
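The $230M Series A use-of-funds split and the revenue trajectory listed above translate into concrete dollar amounts and year-over-year multiples. The sketch below uses only figures quoted in this section. The "$1B+" 2027 target is treated as exactly $1,000M, and the ARR numbers are the article's projections.

```python
# World Labs figures quoted above (USD millions).
SERIES_A_MUSD = 230
use_of_funds_pct = {"Research": 40, "Engineering": 30,
                    "Go-to-market": 20, "Operations": 10}
assert sum(use_of_funds_pct.values()) == 100

# Projected ARR in USD millions; the "$1B+" 2027 target is treated as 1,000.
arr_projection_musd = {2025: 50, 2026: 300, 2027: 1_000}

allocation_musd = {bucket: SERIES_A_MUSD * pct / 100
                   for bucket, pct in use_of_funds_pct.items()}
for bucket, amount in allocation_musd.items():
    print(f"{bucket:<13} ${amount:.0f}M")

years = sorted(arr_projection_musd)
for prev, curr in zip(years, years[1:]):
    growth = arr_projection_musd[curr] / arr_projection_musd[prev]
    print(f"{prev}->{curr}: {growth:.1f}x ARR growth")
```

Integer percentages keep the allocation arithmetic exact ($92M, $69M, $46M, $23M). The projection implies 6x growth from 2025 to 2026, slowing to roughly 3.3x from 2026 to 2027.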

Fei-Fei Li’s Track Record:

- Created ImageNet → enabled deep learning revolution
- Stanford AI Lab director
- Google Cloud AI Chief Scientist
- Congressional AI advisor
- Time 100 Most Influential

Team Composition:

- Stanford AI researchers
- Google/DeepMind alumni
- Graphics/gaming veterans
- Robotics experts
- Physics simulation pros

Why This Team:

Li previously transformed AI with ImageNet. She is now applying the same approach to 3D/spatial intelligence, creating foundational infrastructure for the next AI era.

Potential Competitors:

- NVIDIA: Graphics focus, not AI-native
- Meta: Social/consumer angle
- Google: No dedicated spatial AI
- OpenAI: Text/image focus
- Apple: Consumer AR only

World Labs’ Moats:

- First mover in LWMs
- Fei-Fei Li brand attracts talent
- Academic network (Stanford)
- Foundational approach vs applications
- Platform strategy vs point solutions

Market Timing

Convergence Factors:

- Computing power sufficient
- 3D data availability
- AR/VR market maturity
- Robotics explosion
- Digital transformation
- Metaverse momentum

Future Projections: The Physical-Digital Bridge

Product Roadmap

Phase 1 (2024): Foundation

- Core LWM development
- Initial partnerships
- Developer preview
- Research papers

Phase 2 (2025): Platform Launch

- Public API access
- SDK releases
- Enterprise pilots
- Ecosystem building

Phase 3 (2026): Industry Solutions

- Vertical applications
- Hardware partnerships
- International expansion
- Standard setting

Phase 4 (2027+): Ubiquity

- Every 3D application uses LWMs
- New industries enabled
- Physical-digital convergence
- Spatial AI everywhere

Transformational Impact

Industries Transformed:

- Gaming: Infinite worlds, instant creation
- Robotics: Human-like navigation
- Architecture: Think it, build it
- Healthcare: 3D diagnosis revolution
- Education: Immersive learning
- Manufacturing: Perfect digital twins

New Possibilities:

- Natural language to 3D worlds
- Robots that truly “see”
- AR that understands context
- Perfect physics simulation
- Spatial search engines
- 3D internet infrastructure

Investment Thesis

Why World Labs Wins

1. Founder Dominance

- Fei-Fei Li = spatial AI
- Track record unmatched
- Talent magnet
- Academic credibility
- Industry connections

2. Technical Moat

- Years ahead in LWMs
- Foundational technology
- Platform approach
- Network effects
- Data accumulation

3. Market Timing

- Spatial computing inflection
- Enterprise demand
- Developer readiness
- Hardware capability
- Ecosystem maturity

Key Risks

Technical:

- Model complexity
- Compute requirements
- Accuracy challenges
- Integration difficulty

Market:

- Adoption timeline
- Competition from big tech
- Monetization questions
- Platform dependencies

Execution:

- Talent competition
- Scaling challenges
- Go-to-market complexity
- Partnership negotiations

The Bottom Line

World Labs represents the next chapter in AI evolution: from understanding language and images to comprehending the 3D world we actually live in. Fei-Fei Li’s track record of defining AI eras (ImageNet → deep learning) suggests World Labs could enable the spatial intelligence revolution.

Key Insight: Just as LLMs gave computers the ability to understand language, Large World Models will give them the ability to understand space. This isn’t just another AI company—it’s foundational infrastructure for how machines will perceive and interact with the physical world. At $1.25B valuation for a 4-month-old company, it’s priced aggressively but betting against Fei-Fei Li defining another AI era seems unwise.

Three Key Metrics to Watch

- Developer Adoption: Target 100K developers by 2025
- Model Performance: Achieving human-level spatial reasoning
- Partnership Announcements: Major platforms integrating LWMs

VTDF Analysis Framework Applied


The post World Labs’ $1.25B Business Model: How Fei-Fei Li is Building AI That Understands 3D Space Like Humans Do appeared first on FourWeekMBA.

Safe Superintelligence’s $5B Business Model: Ilya Sutskever’s Quest to Build AGI That Won’t Destroy Humanity

Safe Superintelligence (SSI) achieved a $5B valuation with a record-breaking $1B Series A by promising to solve AI’s existential problem: building superintelligence that helps rather than harms humanity. Founded by Ilya Sutskever (OpenAI’s former chief scientist and architect of ChatGPT), SSI represents the ultimate high-stakes bet—creating AGI with safety as the primary constraint, not an afterthought. With backing from a16z, Sequoia, and DST Global, SSI is the first company valued purely on preventing AI catastrophe while achieving superintelligence.

Value Creation: The Existential Insurance Policy

The Problem SSI Solves

The AGI Safety Paradox:

- Race to AGI accelerating dangerously
- Safety treated as secondary concern
- Alignment problem unsolved
- Existential risk increasing
- No one incentivized to slow down
- Winner potentially takes all (literally)

Current Approach Failures:

- OpenAI: Safety team resignations
- Anthropic: Still capability-focused
- Google: Profit pressure dominates
- Meta: Open-sourcing everything
- China: No safety constraints
- Nobody truly safety-first

SSI’s Solution:

- Safety as primary objective
- No product release pressure
- Pure research focus
- Top talent concentration
- Patient capital structure
- Alignment before capability

Value Proposition Layers

For Humanity:

- Existential risk reduction
- Safe path to superintelligence
- Aligned AGI development
- Catastrophe prevention
- Beneficial outcomes
- Survival insurance

For Investors:

- Asymmetric upside if successful
- First mover in safe AGI
- Top talent concentration
- No competition on safety
- Potential to define industry
- Regulatory advantage

For the AI Industry:

- Safety research breakthroughs
- Alignment techniques
- Best practices development
- Talent development
- Industry standards
- Legitimacy enhancement

Quantified Impact:

If SSI succeeds in creating safe AGI first, the value is essentially infinite—preventing potential human extinction while unlocking superintelligence benefits.

1. Safety-First Architecture

- Constitutional AI principles
- Interpretability built-in
- Alignment verification
- Robustness testing
- Failure mode analysis
- Kill switches mandatory

2. Novel Research Directions

- Mechanistic interpretability
- Scalable oversight
- Reward modeling
- Value learning
- Corrigibility research
- Uncertainty quantification

3. Theoretical Foundations

- Mathematical safety proofs
- Formal verification methods
- Game-theoretic analysis
- Information theory approaches
- Complexity theory applications
- Philosophy integration

Technical Differentiators

vs. Capability-First Labs:

- Safety primary, capability secondary
- No deployment pressure
- Longer research cycles
- Higher safety standards
- Public benefit focus
- Transparent failures

vs. Academic Research:

- Massive compute resources
- Top talent concentration
- Unified vision
- Faster iteration
- Real system building
- Direct implementation

Research Priorities:

- Alignment: 40% of effort
- Interpretability: 30%
- Robustness: 20%
- Capabilities: 10%

(Inverse of typical labs)

Distribution Strategy: The Anti-OpenAI

Go-to-Market Philosophy

No Traditional GTM:

- No product releases planned
- No API or consumer products
- Research publication focus
- Safety demonstrations only
- Industry collaboration
- Knowledge sharing

Partnership Model:

- Government collaboration
- Safety standards development
- Industry best practices
- Academic partnerships
- International cooperation
- Regulatory frameworks

Monetization (Eventually)

Potential Models:

- Licensing safe AGI systems
- Safety certification services
- Government contracts
- Enterprise partnerships
- Safety-as-a-Service
- IP licensing

Timeline:

- Years 1-3: Pure research
- Years 4-5: Safety validation
- Years 6-7: Limited deployment
- Years 8-10: Commercial phase
- Patient capital critical

Financial Model: The Longest Game

Funding Structure

Series A (September 2024):

- Amount: $1B
- Valuation: $5B
- Investors: a16z, Sequoia, DST Global, NFDG
- Structure: Patient capital, 10+ year horizon

Capital Allocation:

- Compute: 40% ($400M)
- Talent: 40% ($400M)
- Infrastructure: 15% ($150M)
- Operations: 5% ($50M)

Burn Rate:

- ~$200M/year estimated
- 5+ year runway
- No revenue pressure
- Research-only focus

Value Creation Model

Traditional VC Math Doesn’t Apply:

- No revenue for years
- No traditional metrics
- Binary outcome likely
- Infinite upside potential
- Existential downside hedge

Investment Thesis:

- Team premium (Ilya factor)
- First mover in safety
- Regulatory capture potential
- Talent magnet effect
- Define industry standards

Strategic Analysis: The Apostate’s Crusade

Founder Story

Ilya Sutskever’s Journey:

- Co-founded OpenAI (2015)
- Created GPT series architecture
- Led to ChatGPT breakthrough
- Board coup attempt (Nov 2023)
- Lost safety battle at OpenAI
- Founded SSI for pure safety focus

Why Ilya Matters:

- Arguably understands AGI best
- Seen the dangers firsthand
- Credibility unmatched
- Talent magnet supreme
- True believer in safety

Team Building:

- Top OpenAI researchers following
- DeepMind safety team recruiting
- Academic all-stars joining
- Unprecedented concentration
- Mission-driven assembly

Competitive Landscape

Not Traditional Competition:

- OpenAI: Racing for products
- Anthropic: Balancing act
- Google: Shareholder pressure
- Meta: Open source chaos
- SSI: Only pure safety play

Competitive Advantages:

- Ilya premium: talent follows
- Pure mission: no distractions
- Patient capital: no rush
- Safety focus: regulatory favor
- First mover: define standards

Market Dynamics

The Safety Market:

- Regulation coming globally
- Safety requirements increasing
- Public concern growing
- Industry needs standards
- Government involvement certain

Strategic Position:

- Become the safety authority
- License to others
- Regulatory capture
- Industry standard setter
- Moral high ground

Future Projections: Three Scenarios

Scenario 1: Success (30% probability)

SSI Achieves Safe AGI First:

- Valuation: $1T+
- Industry transformation
- Licensing to everyone
- Defines AI future
- Humanity saved (literally)

Timeline:

- 2027: Major breakthroughs
- 2029: AGI achieved safely
- 2030: Limited deployment
- 2032: Industry standard

Scenario 2: Partial Success (50% probability)

Safety Breakthroughs, Not AGI:

- Valuation: $50-100B
- Safety tech licensed
- Industry influence
- Acquisition target
- Mission accomplished partially

Outcomes:

- Critical safety research
- Industry best practices
- Talent development
- Regulatory influence
- Positive impact

Scenario 3: Failure (20% probability)

Neither Safety nor AGI:

- Valuation:
- Talent exodus
- Research published
- Lessons learned
- Industry evolved

Legacy:

- Advanced safety field
- Trained researchers
- Raised awareness
- Influenced others

Investment Thesis

Why SSI Could Win

1. Founder Alpha

- Ilya = AGI understanding
- Mission clarity absolute
- Talent attraction unmatched
- Technical depth proven
- Safety commitment real

2. Structural Advantages

- No product pressure
- Patient capital
- Pure research focus
- Government alignment
- Regulatory tailwinds

3. Market Position

- Only pure safety play
- First mover advantage
- Standard setting potential
- Moral authority
- Industry need

Key Risks

Technical:

- AGI might be impossible
- Safety unsolvable
- Competition succeeds first
- Technical dead ends

Market:

- Funding dries up
- Talent poaching
- Regulation adverse
- Public skepticism

Execution:

- Research stagnation
- Team conflicts
- Mission drift
- Founder risk

The Bottom Line

Safe Superintelligence represents the highest-stakes bet in technology history: Can the architect of ChatGPT build AGI that helps rather than harms humanity? The $5B valuation reflects not traditional metrics but the option value on preventing extinction while achieving superintelligence.
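The funding, burn-rate, and scenario figures above can be combined into a rough runway estimate and a probability-weighted valuation. This is an illustrative sketch, not the author's model, and it rests on three assumptions. Scenario 1 uses its $1T floor. Scenario 2's $50-100B range is taken at its $75B midpoint. Scenario 3, whose valuation the source leaves blank, is set to $0.

```python
# SSI figures quoted in the article.
RAISED_MUSD = 1_000
ANNUAL_BURN_MUSD = 200            # "~$200M/year estimated"
runway_years = RAISED_MUSD / ANNUAL_BURN_MUSD

# (probability, valuation in USD billions) per scenario.
# Assumptions: Scenario 1 uses its $1T floor, Scenario 2 the midpoint of
# $50-100B, and Scenario 3 (valuation not stated in the source) is set to $0.
scenarios = {
    "Success": (0.30, 1_000.0),
    "Partial success": (0.50, 75.0),
    "Failure": (0.20, 0.0),
}
assert abs(sum(p for p, _ in scenarios.values()) - 1.0) < 1e-9

expected_value_busd = sum(p * v for p, v in scenarios.values())

print(f"Runway: {runway_years:.1f} years")
print(f"Probability-weighted valuation: ${expected_value_busd:.1f}B vs. the $5B price")
```

Under these assumptions, the 30% success case dominates the weighted figure. That is consistent with the article framing the $5B price as option value rather than a multiple of current metrics.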

Key Insight: SSI is betting that in the race to AGI, slow and safe beats fast and dangerous—and that when the stakes are human survival, the market will eventually price safety correctly. Ilya Sutskever saw what happens when capability races ahead of safety at OpenAI. Now he’s building the antidote. At $5B valuation with no product, no revenue, and no traditional metrics, SSI is either the most overvalued startup in history or the most undervalued insurance policy humanity has ever purchased.

Three Key Metrics to Watch

- Research Publications: Quality and impact of safety papers
- Talent Acquisition: Who joins from OpenAI/DeepMind
- Regulatory Engagement: Government partnership announcements

VTDF Analysis Framework Applied


The post Safe Superintelligence’s $5B Business Model: Ilya Sutskever’s Quest to Build AGI That Won’t Destroy Humanity appeared first on FourWeekMBA.

Groq’s $2.8B Business Model: The AI Chip That’s 10x Faster Than NVIDIA (But There’s a Catch)

Groq has achieved a $2.8B valuation by building the world’s fastest AI inference chip—their Language Processing Unit (LPU) runs AI models 10x faster than GPUs while using 90% less power. Founded by Google TPU architect Jonathan Ross, Groq’s chips achieve 500+ tokens/second on large language models, making real-time AI applications finally possible. With $640M from BlackRock, D1 Capital, and Tiger Global, Groq is racing to capture the $100B AI inference market. But there’s a catch: they’re competing with NVIDIA’s infinite resources.

Value Creation: Speed as the New Currency

The Problem Groq Solves

Current AI Inference Pain:

- GPUs designed for training, not inference
- 50-100 tokens/second typical speed
- High latency kills real-time apps
- Power consumption unsustainable
- Cost per query too high
- User experience suffers

Market Limitations:

- ChatGPT: Noticeable delays
- Voice AI: Conversation gaps
- Gaming AI: Can’t keep up
- Trading AI: Too slow for markets
- Video AI: Frame drops
- Real-time impossible

Groq’s Solution:

- 500+ tokens/second (10x faster)
- Under 100ms latency
- 90% less power usage
- Deterministic performance
- Real-time AI enabled
- Cost-effective at scale

Value Proposition Layers

For AI Companies:

- Enable real-time applications
- 10x better user experience
- Lower infrastructure costs
- Predictable performance
- Competitive advantage
- New use cases possible

For Developers:

- Build previously impossible apps
- Consistent latency
- Simple integration
- No GPU complexity
- Instant responses
- Production ready

For End Users:

- Conversational AI that feels human
- Gaming AI with zero lag
- Instant translations
- Real-time analysis
- No waiting screens
- AI at the speed of thought

Quantified Impact:

A conversational AI company using Groq can deliver responses in 100ms instead of 2 seconds, transforming stilted interactions into natural conversations.
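The gap between a 2-second and a 100ms reply follows from how long a model takes to stream a full answer. The sketch below models response time as a time-to-first-token term plus output length divided by throughput. The throughputs (50 and 100 tokens/s for GPUs, 500 for Groq) come from this section. The 300-token reply length and the `ttft_s` parameter are illustrative assumptions.

```python
def response_time_s(output_tokens: int, tokens_per_s: float, ttft_s: float = 0.0) -> float:
    """Seconds until the last token arrives: time-to-first-token plus streaming time."""
    if tokens_per_s <= 0:
        raise ValueError("tokens_per_s must be positive")
    return ttft_s + output_tokens / tokens_per_s


REPLY_TOKENS = 300  # hypothetical length of one conversational reply

# Throughputs quoted in the section: 50-100 tokens/s for GPUs, 500 for Groq.
for label, tps in [("GPU (low)", 50), ("GPU (high)", 100), ("Groq LPU", 500)]:
    t = response_time_s(REPLY_TOKENS, tps)
    print(f"{label:<10} {tps:>3} tok/s -> {t:.1f} s for {REPLY_TOKENS} tokens")
```

With streaming, a user starts reading at the first token, so perceived delay depends on both terms. Throughput sets how long a complete answer takes (6.0 s, 3.0 s, and 0.6 s here), while `ttft_s` sets how long before anything appears. The section's sub-100ms latency figure most naturally reads as the latter, although the source does not define it.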

1. Language Processing Unit Design

- Sequential processing optimized
- No GPU memory bottlenecks
- Deterministic execution
- Single-core simplicity
- Compiler-driven performance
- Purpose-built for inference

2. Architecture Advantages

- Tensor Streaming Processor
- No external memory bandwidth limits
- Synchronous execution
- Predictable latency
- Massive parallelism
- Software-defined networking

3. Software Stack

- Custom compiler technology
- Automatic optimization
- Model agnostic
- PyTorch/TensorFlow compatible
- API simplicity
- Cloud-native design

Technical Differentiators

vs. NVIDIA GPUs:

- Sequential vs parallel optimization
- Inference vs training focus
- Deterministic vs variable latency
- Lower power consumption
- Simpler programming model
- Purpose-built design

vs. Other AI Chips:

- Proven at scale
- Software maturity
- Cloud availability
- Performance leadership
- Enterprise ready
- Ecosystem growing

Performance Benchmarks:

- Llama 2: 500+ tokens/sec
- Mixtral: 480 tokens/sec
- Latency: <100ms p99
- Power: 90% reduction
- Accuracy: Identical to GPU

Distribution Strategy: The Cloud-First Approach

Market Entry

GroqCloud Platform:

- Instant API access
- Pay-per-use pricing
- No hardware purchase
- Global availability
- Enterprise SLAs
- Developer friendly

Target Segments:

- AI application developers
- Conversational AI companies
- Gaming studios
- Financial services
- Healthcare AI
- Real-time analytics

Go-to-Market Motion

Developer-Led Growth:

- Free tier for testing
- Impressive demos spread
- Viral word of mouth
- Enterprise inquiries follow
- Large contracts close
- Reference customers promote

Pricing Strategy:

- Competitive with GPUs
- Usage-based model
- Volume discounts
- Enterprise agreements
- ROI-based positioning
- TCO advantages

Partnership Approach

Strategic Alliances:

- Cloud providers (AWS, Azure)
- AI frameworks (PyTorch, TensorFlow)
- Model providers (Meta, Mistral)
- Enterprise software (Salesforce, SAP)
- System integrators
- Industry solutions

Financial Model: The Hardware-as-a-Service Play

Business Model Evolution

Revenue Streams:

- Cloud inference (70%)
- On-premises systems (20%)
- Software licenses (10%)

Unit Economics:

- Chip cost: ~$20K
- System price: $200K+
- Cloud margin: 70%+
- Utilization is the key metric
- Scale drives profitability

Growth Trajectory

Market Capture:

- 2023: Early adopters
- 2024: $100M ARR run rate
- 2025: $500M target
- 2026: $2B+ potential

Scaling Challenges:

- Chip manufacturing capacity
- Cloud infrastructure build
- Customer education
- Ecosystem development
- Talent acquisition

Funding History

Total Raised: $940M+ ($640M Series D plus earlier rounds)

Series D (August 2024):

- Amount: $640M
- Valuation: $2.8B
- Lead: BlackRock
- Participants: D1 Capital, Tiger Global, Samsung

Previous Rounds:

- Series C: $300M (2021)
- Early investors: Social Capital, D1

Use of Funds:

- Manufacturing scale
- Cloud expansion
- R&D acceleration
- Market development
- Strategic inventory

Strategic Analysis: David vs NVIDIA’s Goliath

Founder Story

Jonathan Ross:

- Google TPU co-inventor
- 20+ years hardware experience
- Left Google to revolutionize inference
- Technical visionary
- Recruited A-team
- Mission-driven leader

Why This Matters:

The person who helped create Google’s TPU knows exactly what’s wrong with current AI hardware—and how to fix it.

The NVIDIA Challenge:

- NVIDIA: $3T market cap, vast resources
- AMD: Playing catch-up
- Intel: Lost the AI race
- Startups: Various approaches
- Groq: Speed leadership

Groq’s Advantages:

- 10x performance lead
- Purpose-built for inference
- First mover in the LPU category
- Software simplicity
- Cloud-first strategy

Market Dynamics

Inference Market Explosion:

- Training: $20B market
- Inference: $100B+ by 2027
- Inference growing 5x faster than training
- Every AI app needs inference
- Real-time requirements increasing

Why Groq Could Win:

- Inference ≠ Training
- Speed matters most
- Specialization beats generalization
- Developer experience wins
- Cloud removes friction

Future Projections: The Real-Time AI Era

Product Roadmap

Generation 2 LPU (2025):

- 2x performance improvement
- Lower cost per chip
- Expanded model support
- Edge deployment options

Software Platform (2026):

- Inference optimization tools
- Multi-model serving
- Auto-scaling systems
- Enterprise features

Market Expansion (2027+):

- Consumer devices
- Edge computing
- Specialized verticals
- Global infrastructure

Strategic Scenarios

Bull Case: Groq Wins Inference

- Captures 20% of the inference market
- $20B valuation by 2027
- IPO candidate
- Industry standard for speed

Base Case: Strong Niche Player

- 5-10% market share
- Acquisition by a major cloud provider
- $5-10B exit valuation
- Technology validated

Bear Case: NVIDIA Strikes Back

- NVIDIA optimizes for inference
- Market commoditizes
- Groq remains niche
- Struggles to scale

Investment Thesis

Why Groq Could Succeed

1. Right Problem

- Inference is the bottleneck
- Speed unlocks new apps
- Perfect market timing
- Real customer pain

2. Technical Leadership

- 10x performance is real
- Architecture advantages
- Deep team expertise
- Proven execution

3. Market Structure

- David vs Goliath is possible
- Specialization is valuable
- Cloud distribution works
- Developer adoption is strong

Key Risks

Technical:

- Manufacturing scaling
- Next-gen competition
- Software ecosystem
- Model compatibility

Market:

- NVIDIA response
- Price pressure
- Customer education
- Adoption timeline

Financial:

- Capital intensity
- Long sales cycles
- Utilization rates
- Margin pressure

The Bottom Line

Groq has built a better mousetrap for AI inference: 10x faster, using 90% less power, and purpose-built for the job. In a world where every millisecond matters for user experience, Groq’s LPU could become the inference standard. But they’re David fighting Goliath, and NVIDIA won’t stand still.

Key Insight: The AI market is bifurcating into training (where NVIDIA dominates) and inference (where speed wins). Groq’s bet is that specialized chips beat general-purpose GPUs for inference, just as GPUs beat CPUs for training. At a $2.8B valuation with proven 10x performance, Groq is either the next NVIDIA of inference or the best acquisition target in Silicon Valley. The next 18 months will decide which.

Three Key Metrics to Watch

- Cloud Customer Growth: Path to 10,000 developers
- Utilization Rates: Target 70%+ for profitability
- Chip Production Scale: Reaching 10,000 units/year

VTDF Analysis Framework Applied
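Why does utilization sit on the watch list? A rough way to see it: a system's hourly cost (amortized hardware plus operations) is fixed, while the number of tokens it actually serves scales with how busy it is. The Python sketch below computes cost per million tokens and gross margin as a function of utilization. Only the ~$200K system price comes from this article; the three-year amortization, $2/hour operating cost, 5,000 tokens/sec aggregate throughput, and $2.50 per million token price are hypothetical placeholders, and `InferenceSystem` and `gross_margin` are illustrative names, not Groq's actual cost model.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365
SECONDS_PER_HOUR = 3600


@dataclass
class InferenceSystem:
    """Illustrative cost inputs for one inference system (hypothetical values)."""

    system_price_usd: float     # up-front hardware cost per system
    amortization_years: float   # assumed depreciation period
    opex_per_hour_usd: float    # assumed power, hosting, and operations cost
    peak_tokens_per_sec: float  # assumed aggregate throughput at full load

    def hourly_cost(self) -> float:
        """Amortized hardware cost plus operating cost for one hour."""
        capex_per_hour = self.system_price_usd / (self.amortization_years * HOURS_PER_YEAR)
        return capex_per_hour + self.opex_per_hour_usd

    def cost_per_million_tokens(self, utilization: float) -> float:
        """Cost to serve one million tokens when the system is busy `utilization` of the time."""
        if not 0.0 < utilization <= 1.0:
            raise ValueError("utilization must be in (0, 1]")
        tokens_per_hour = self.peak_tokens_per_sec * SECONDS_PER_HOUR * utilization
        return self.hourly_cost() / tokens_per_hour * 1_000_000


def gross_margin(price: float, cost: float) -> float:
    """Gross margin as a fraction of price (negative if cost exceeds price)."""
    return (price - cost) / price


if __name__ == "__main__":
    # Only the ~$200K system price comes from the article; everything else is hypothetical.
    system = InferenceSystem(
        system_price_usd=200_000,
        amortization_years=3,
        opex_per_hour_usd=2.0,
        peak_tokens_per_sec=5_000,
    )
    price_per_million = 2.50  # hypothetical list price per million tokens
    for utilization in (0.3, 0.5, 0.7, 0.9):
        cost = system.cost_per_million_tokens(utilization)
        margin = gross_margin(price_per_million, cost)
        print(f"utilization {utilization:.0%}: cost ${cost:.2f}/M tokens, gross margin {margin:.0%}")
```

Because the hourly cost is spread over fewer tokens when chips sit idle, cost per token scales with 1/utilization. With these placeholder inputs, gross margin more than doubles between 30% and 70% utilization, which is the intuition behind treating utilization as a make-or-break metric for a cloud inference business.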

The Business Engineer | FourWeekMBA

The post Groq’s $2.8B Business Model: The AI Chip That’s 10x Faster Than NVIDIA (But There’s a Catch) appeared first on FourWeekMBA.