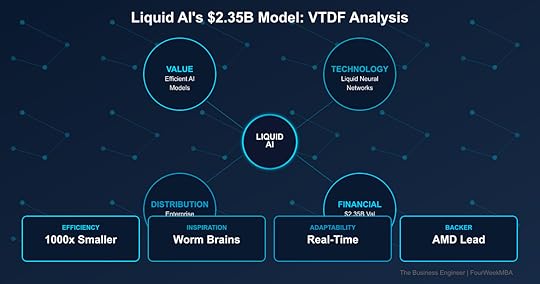

Liquid AI’s $2.35B Business Model: MIT Scientists Built AI That Thinks Like a Worm—And It’s 1000x More Efficient

Liquid AI has achieved a $2.35B valuation by developing “liquid neural networks” inspired by the 302-neuron brain of a roundworm, creating AI models that are 1000x smaller yet outperform traditional transformers. Founded by MIT CSAIL researchers who spent years studying biological neural systems, Liquid AI builds models that adapt in real time to changing conditions, making them ideal for robotics, autonomous vehicles, and edge computing. With a $250M round led by AMD, the company is racing to commercialize what it positions as the most significant architectural breakthrough since transformers.

Value Creation: Biology Beats Brute Force

The Problem Liquid AI Solves

Current AI’s Fundamental Flaws:

- Models getting exponentially larger (GPT-4: reportedly ~1.7T parameters, an unofficial estimate OpenAI has not confirmed)
- Computational costs unsustainable
- Can’t adapt after training
- Black-box reasoning
- Edge deployment impossible
- Massive environmental impact

Technical Limitations:

- Transformers need massive scale
- Fixed weights after training
- No real-time adaptation
- Interpretability near zero
- Inference costs prohibitive
- Can’t run on devices

Liquid AI’s Solution:

- 1000x smaller models
- Adapts continuously during use
- Explainable decisions
- Runs on edge devices
- Fraction of the energy usage
- Biology-inspired efficiency

Value Proposition Layers

For Enterprises:

- Deploy AI on-device, not in the cloud
- 90% lower compute costs
- Real-time adaptation to data
- Explainable AI for compliance
- Private, secure deployment
- Sustainable AI operations

For Developers:

- Models that fit on phones
- Dynamic behavior modeling
- Interpretable architectures
- Lower training costs
- Faster experimentation
- Novel applications possible

For Industries:

- Automotive: Self-driving that adapts
- Robotics: Real-time learning
- Finance: Explainable trading
- Healthcare: Adaptive diagnostics
- Defense: Edge intelligence
- IoT: Smart device AI

Quantified Impact:

A drone using Liquid AI can navigate unknown environments in real time with a model 1000x smaller than GPT-4, running entirely on-device without cloud connectivity.
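To put the on-device claim in rough numbers, the sketch below converts parameter counts into weight-storage size. It assumes the unofficial ~1.7T-parameter estimate for GPT-4 (OpenAI has not published a figure), takes the “1000x smaller” claim literally, and counts weights only; activations, caches, and runtime overhead are ignored.

```python
def weights_gb(num_params: float, bits_per_param: int) -> float:
    """Weight storage in gigabytes (1 GB = 1e9 bytes); weights only."""
    return num_params * bits_per_param / 8 / 1e9


GPT4_PARAMS_ESTIMATE = 1.7e12               # unofficial estimate, not an OpenAI figure
small_params = GPT4_PARAMS_ESTIMATE / 1000  # the article's "1000x smaller" claim

for bits in (16, 8, 4):
    big = weights_gb(GPT4_PARAMS_ESTIMATE, bits)
    small = weights_gb(small_params, bits)
    print(f"{bits}-bit: {big:,.0f} GB vs {small:.2f} GB")
```

On those assumptions, a 1000x-smaller model needs a few gigabytes or less for its weights, within reach of phone-class memory, while the full-size model needs thousands of gigabytes spread across many accelerators.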

1. Biological Inspiration

- Based on the brain of the C. elegans roundworm
- 302 neurons control complex behavior
- Continuous-time neural dynamics
- Differential equations rather than discrete layers
- Input-dependent time constants during inference
- Built-in causality

2. Mathematical Foundation
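To make the “liquid time-constant” idea in the list that follows concrete, here is a minimal Python sketch of one LTC layer, assuming the fused Euler solver described in the published LTC paper by Hasani et al. Each neuron’s state x obeys dx/dt = -(1/τ + f)·x + f·A, where f is a positive, input-dependent gate; because f changes with the input, so does the neuron’s effective time constant τ/(1 + τ·f). The parameter names (`W_in`, `W_rec`, `b`, `tau`, `A`), the sigmoid gate, and the toy values are illustrative assumptions, not Liquid AI’s production code.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def ltc_step(x, inputs, params, dt):
    """Advance a toy LTC layer by one fused Euler step.

    Per neuron i:
        x_i <- (x_i + dt * f_i * A_i) / (1 + dt * (1 / tau_i + f_i))
    which discretizes dx/dt = -(1/tau + f) * x + f * A.
    Parameter names are hypothetical, chosen for this sketch.
    """
    new_x = []
    for i in range(len(x)):
        # Gate pre-activation: external input plus (old) recurrent state.
        z = params["b"][i]
        z += sum(w * u for w, u in zip(params["W_in"][i], inputs))
        z += sum(w * s for w, s in zip(params["W_rec"][i], x))
        f = sigmoid(z)  # positive, so the denominator stays above 1
        num = x[i] + dt * f * params["A"][i]
        den = 1.0 + dt * (1.0 / params["tau"][i] + f)
        new_x.append(num / den)
    return new_x


def effective_tau(tau: float, f: float) -> float:
    """Input-dependent ("liquid") time constant: tau / (1 + tau * f)."""
    return tau / (1.0 + tau * f)


# Example: two neurons driven by one input that switches on at step 10.
params = {
    "W_in": [[1.5], [-2.0]],
    "W_rec": [[0.0, 0.5], [0.3, 0.0]],
    "b": [0.0, 0.1],
    "tau": [1.0, 2.0],
    "A": [1.0, -1.0],
}
x = [0.0, 0.0]
for step in range(50):
    u = [1.0 if step >= 10 else 0.0]
    x = ltc_step(x, u, params, dt=0.1)

print(x)
print(effective_tau(1.0, 0.0), effective_tau(1.0, 1.0))  # 1.0 0.5
```

Because f is positive and the update is a ratio, a state that starts between 0 and its A value stays in that range at every step, which is one way to read the “provable stability guarantees” item.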

- Liquid Time-Constant (LTC) networks
- Ordinary differential equations
- Continuous-depth models
- Adaptive computation time
- Provable stability guarantees
- Closed-form solutions

3. Key Advantages
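Part of the speed advantage claimed here rests on the “closed-form solutions” item from the mathematical foundation: closed-form continuous-time (CfC) networks replace the step-by-step ODE solver with an explicit formula, x(t) = σ(−f·t)·g + (1 − σ(−f·t))·h, where f, g, and h are learned heads. The sketch below evaluates that formula for a single neuron, assuming tiny hypothetical one-input heads (`wf`, `wg`, `wh`) instead of the full networks used in the published CfC work, which also feed the hidden state into each head.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def cfc_state(t, u, wf, wg, wh):
    """Closed-form CfC-style state of one neuron after elapsed time t.

    x(t) = sigmoid(-f * t) * g + (1 - sigmoid(-f * t)) * h
    f, g, h are toy heads of the scalar input u (hypothetical weights).
    """
    f = wf[0] * u + wf[1]             # time-decay rate of the gate
    g = math.tanh(wg[0] * u + wg[1])  # branch weighted by the decaying gate
    h = math.tanh(wh[0] * u + wh[1])  # branch the state approaches when f > 0
    gate = sigmoid(-f * t)
    return gate * g + (1.0 - gate) * h


# No solver loop: any time point is a single function evaluation.
wf, wg, wh = (0.0, 2.0), (1.0, 0.0), (-1.0, 0.0)
for t in (0.0, 0.5, 5.0, 1000.0):
    print(t, cfc_state(t, 0.5, wf, wg, wh))
```

The trade-off is that the formula approximates the LTC dynamics rather than integrating them exactly, exchanging some fidelity for a fixed, small cost per time point.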

- Size: 1000x smaller than transformers
- Adaptability: Changes during use
- Interpretability: Causal understanding
- Efficiency: Fraction of the compute
- Robustness: Handles distribution shift
- Speed: Real-time processing

Technical Differentiators

vs. Transformers (GPT, BERT):

- Continuous vs. discrete time
- Adaptive vs. fixed weights
- Small vs. massive scale
- Interpretable vs. black box
- Efficient vs. compute-hungry
- Dynamic vs. static

vs. Other Architectures:

- Biology-inspired vs. engineered
- Proven in robotics applications
- MIT research foundation
- Mathematical rigor
- Ready for industrial applications
- Strong patent portfolio

Performance Metrics:
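The metrics that follow mix multiples and percentages, which describe very different magnitudes: a 1000x size reduction means 99.9% fewer parameters, while 90% less energy is only a 10x reduction. The two helper functions below convert between the forms; they assume nothing beyond the definitions.

```python
def pct_reduction_from_factor(factor: float) -> float:
    """A 'factor x smaller' claim expressed as a percentage reduction."""
    return (1 - 1 / factor) * 100


def factor_from_pct_reduction(pct: float) -> float:
    """A 'pct % less' claim expressed as an 'x times smaller' factor."""
    return 1 / (1 - pct / 100)


print(f"1000x smaller -> {pct_reduction_from_factor(1000):.1f}% fewer")
print(f"90% less -> {factor_from_pct_reduction(90):.0f}x smaller")
```

Read this way, the size and energy figures are not interchangeable: energy falls by a factor of about 10 even though model size falls by a factor of 1000.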

- Model size: 1000x reduction
- Energy use: 90% less
- Accuracy: Matches or exceeds transformers
- Adaptation: Real-time
- Interpretability: Full causal graphs

Distribution Strategy: Enterprise AI Revolution

Target Market

Primary Applications:

- Autonomous vehicles
- Industrial robotics
- Edge AI devices
- Financial modeling
- Defense systems
- Medical devices

Go-to-Market Approach:

- Enterprise partnerships
- Industry-specific solutions
- Developer platform
- OEM integrations
- Cloud offerings
- Licensing model

AMD Partnership

Strategic Value:

- Optimize for AMD hardware
- Co-develop solutions
- Joint go-to-market
- Preferred pricing
- Technical integration
- Market validation

Hardware Optimization:

- AMD Instinct GPUs
- Ryzen AI processors
- Edge deployment
- Custom silicon potential
- Performance leadership

Business Model

Revenue Streams:

- Software licensing
- Custom model development
- Training and deployment
- Maintenance contracts
- Hardware partnerships
- IP licensing

Pricing Strategy:
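“Compute savings sharing” in the list that follows can be illustrated with a hypothetical contract in which the vendor charges a fixed share of the compute costs it eliminates. The baseline cost and the 30% vendor share are made-up inputs, and `savings_share_deal` is a hypothetical helper; only the 90% savings rate comes from the article’s own claim.

```python
def savings_share_deal(baseline_usd: int, savings_pct: int, vendor_share_pct: int):
    """Split compute savings between customer and vendor (hypothetical model).

    Returns (vendor_fee, customer_total) in whole dollars.
    """
    remaining_compute = baseline_usd * (100 - savings_pct) // 100
    savings = baseline_usd - remaining_compute
    vendor_fee = savings * vendor_share_pct // 100
    customer_total = remaining_compute + vendor_fee
    return vendor_fee, customer_total


fee, total = savings_share_deal(1_000_000, savings_pct=90, vendor_share_pct=30)
print(f"Vendor fee: ${fee:,}; customer pays ${total:,} instead of $1,000,000")
```

Under these inputs the customer still cuts its bill by 63%, which is what makes a savings-linked price easier to justify than a flat license.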

- Value-based pricing
- Compute savings sharing
- Subscription models
- Per-device licensing
- Enterprise agreements

Financial Model: The Efficiency Play

Funding Analysis

Series A (December 2024):

- Amount: $250M
- Valuation: $2.35B
- Lead: AMD
- Other investors: Duke Capital, The Pags Group, OSS Capital

Use of Funds:
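Applied to the $250M round, the allocation percentages that follow translate into dollar amounts as computed here; the split is as reported, and the arithmetic is the only addition.

```python
ROUND_USD_M = 250  # Series A amount in $M

allocation_pct = {
    "R&D acceleration": 40,
    "Engineering talent": 30,
    "Go-to-market": 20,
    "Infrastructure": 10,
}
assert sum(allocation_pct.values()) == 100  # shares must cover the whole round

# Integer math is exact here because every share divides evenly.
allocation_usd_m = {k: ROUND_USD_M * p // 100 for k, p in allocation_pct.items()}
for name, usd in allocation_usd_m.items():
    print(f"{name}: ${usd}M")
```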

- R&D acceleration: 40%
- Engineering talent: 30%
- Go-to-market: 20%
- Infrastructure: 10%

Market Opportunity

TAM Expansion:
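A quick check on the segment figures that follow: they sum to $1.05T, which the article rounds to $1T. Because the segments plausibly overlap (edge AI devices, robotics, and autonomous systems can also count as enterprise AI spend), a simple sum likely overstates the truly addressable total; the sketch only reproduces the arithmetic.

```python
segments_usd_b = {
    "Edge AI (by 2027)": 100,
    "Robotics": 150,
    "Autonomous systems": 300,
    "Enterprise AI": 500,
}
total_usd_b = sum(segments_usd_b.values())  # naive sum, ignores overlap between segments
print(f"Naive total: ${total_usd_b}B (~${total_usd_b / 1000:.2f}T)")
```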

- Edge AI: $100B by 2027
- Robotics: $150B market
- Autonomous systems: $300B
- Enterprise AI: $500B
- Total addressable: $1T

Competitive Position:

- First mover in liquid networks
- MIT research foundation
- Patent portfolio
- AMD partnership
- Enterprise traction

Growth Projections

Revenue Model:
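The ARR targets that follow imply steep growth; this sketch computes the year-over-year multiples and the compound annual growth rate from 2025 to 2027, using only the article’s figures.

```python
arr_usd_m = {2025: 50, 2026: 250, 2027: 1000}  # article's targets; 2024 has no ARR figure

years = sorted(arr_usd_m)
yoy = [arr_usd_m[b] / arr_usd_m[a] for a, b in zip(years, years[1:])]
span = years[-1] - years[0]
cagr = (arr_usd_m[years[-1]] / arr_usd_m[years[0]]) ** (1 / span) - 1

print("YoY multiples:", yoy)
print(f"CAGR 2025-2027: {cagr:.0%}")
```

Sustaining 4-5x annual growth from a $50M base would be aggressive for enterprise software, and these targets are also steeper than the “$100M ARR in 24 months” path cited among the metrics to watch.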

- 2024: Development phase
- 2025: $50M ARR target
- 2026: $250M ARR
- 2027: $1B potential

Key Metrics:
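Net revenue retention, one of the metrics that follows, is conventionally computed as a cohort’s starting recurring revenue plus expansion, minus contraction and churn, divided by the starting revenue. The sketch uses invented figures purely to show the formula; the article reports no NRR value for Liquid AI.

```python
def net_revenue_retention(start_arr: int, expansion: int, contraction: int, churn: int) -> float:
    """NRR as a percentage for one customer cohort over a period."""
    return 100 * (start_arr + expansion - contraction - churn) / start_arr


# Hypothetical cohort, in $K: 10,000 starting ARR, 3,000 upsell, 500 downgrades, 1,000 churned.
nrr = net_revenue_retention(10_000, 3_000, 500, 1_000)
print(f"NRR: {nrr:.0f}%")
```

A value above 100% means existing customers grow revenue even before any new customers are added.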

- Customer acquisition cost
- Net revenue retention
- Gross margins (80% target)
- R&D as % of revenue

Strategic Analysis: MIT Mafia Strikes

Founder Story

Team Background:

- MIT CSAIL researchers
- Daniela Rus lab alumni
- Published seminal papers
- Years of research foundation
- Industry experience
- Technical depth unmatched

Why This Team:

The researchers who literally invented liquid neural networks are the only ones who deeply understand the mathematics and potential applications.

AI Architecture Race:

- OpenAI/Google: Bigger transformers
- Meta: Open-source scale
- Anthropic: Safety focus
- Liquid AI: Efficiency breakthrough

Moat Building:

- Patent portfolio from MIT
- Mathematical complexity barrier
- First-mover advantage
- AMD partnership exclusive
- Talent concentration

Market Timing

Why Now:

- AI costs unsustainable
- Edge computing critical
- Regulatory pressure for explainability
- Environmental concerns
- Real-time requirements
- Biology-inspired AI moment

Future Projections: The Adaptive AI Era

Product Roadmap

Phase 1 (2024-2025): Foundation

- Core platform launch
- Developer tools
- Enterprise pilots
- AMD optimization

Phase 2 (2025-2026): Expansion

- Industry solutions
- Partner ecosystem
- International markets
- Advanced models

Phase 3 (2026-2027): Dominance

- Standard for edge AI
- Robotic applications
- Consumer devices
- New architectures

Strategic Vision

Market Position:

Liquid AI : Efficient AI :: Tesla : Electric Vehicles

- Define a new category
- Set the efficiency standard
- Enable new applications

Long-term Impact:

- Every robot uses liquid networks
- Edge AI becomes default
- Interpretability mandatory
- Biology-inspired standard

Investment Thesis

Why Liquid AI Wins

1. Technical Superiority

- 1000x efficiency proven
- Adaptability unique
- Interpretability valuable
- Patents defensible

2. Market Timing

- AI efficiency crisis
- Edge computing wave
- Regulatory tailwinds
- Sustainability focus

3. Team and Backing

- MIT founders
- AMD strategic partner
- First-mover position
- Deep technical moat

Key Risks

Technical:

- Scaling challenges
- Application limitations
- Competition catching up
- Integration complexity

Market:

- Enterprise adoption speed
- Transformer momentum
- Big Tech response
- Economic conditions

Execution:

- Talent retention
- Product delivery
- Partner management
- International expansion

The Bottom Line

Liquid AI represents the most fundamental rethinking of neural networks since transformers, proving that 302 neurons in a worm’s brain hold secrets that trillion-parameter models miss. By creating AI that adapts like biological systems, the company has tackled the efficiency crisis plaguing the industry.

Key Insight: The AI industry’s “bigger is better” paradigm is hitting physical limits. Liquid AI’s biological approach isn’t just incrementally better; it’s a different paradigm entirely. Just as RISC challenged CISC in processors, liquid networks challenge the transformer orthodoxy. At a $2.35B valuation, with AMD backing and 1000x efficiency gains, Liquid AI isn’t competing on the same playing field: it is creating a new game where small, adaptive, and efficient wins.

Three Key Metrics to Watch

- Enterprise Deployments: Target 50 Fortune 500 customers by 2026
- Model Performance: Maintaining 1000x size advantage
- Revenue Growth: Path to $100M ARR in 24 months

VTDF Analysis Framework Applied

The Business Engineer | FourWeekMBA

The post Liquid AI’s $2.35B Business Model: MIT Scientists Built AI That Thinks Like a Worm—And It’s 1000x More Efficient appeared first on FourWeekMBA.