Gennaro Cuofano's Blog, page 47

August 8, 2025

Italian Brain Rot: AI-Generated Culture, Platform Economics, & the Future of Digital Media

If you have a kid in the family, you might have noticed a very weird trend: a bunch of AI-generated characters with weird, Italian-sounding names.

And as you can imagine, as an Italian, that caught my attention even more.

Italian brain rot represents the first major example of AI-native folklore that has evolved into a multi-billion-dollar cultural and economic phenomenon.

Emerging in early 2025, this surrealist internet meme demonstrates how AI democratization, platform stickiness, and community-driven creativity are reshaping media production, intellectual property, and digital economics.

This isn’t just a meme; this is a tech story that crosses media, AI creativity, decentralized distribution, and monetization at scale.

Let me show you why!

The post Italian Brain Rot: AI-Generated Culture, Platform Economics, & the Future of Digital Media appeared first on FourWeekMBA.

August 7, 2025

GPT-5 Launches: The AI That Made Sam Altman Feel “Useless” Is Now Everyone’s Problem

Sam Altman just admitted what we all suspected: he’s not smarter than GPT-5. Neither are you. Neither am I. After years of delays, OpenAI launched GPT-5 today with a telling confession from its creator: “I felt useless relative to the AI… Oh man, here it is moment.”

This isn’t marketing. It’s existential dread from the man who built it.

The Model That Breaks All Models

One Architecture to Rule Them All

The Problem GPT-5 Solves:

- GPT-4: Switch between models constantly
- Claude: Choose speed vs intelligence
- Gemini: Navigate complexity
- Reality: Cognitive overhead kills productivity

GPT-5’s Answer:

One model. It decides when to think fast or slow. No switching. No choosing. Just answers—whether you need a quick response or complex reasoning.

Performance Metrics:

- Context window: 1 million tokens input / 100K output
- Hallucination rate: 1.6% (vs GPT-4’s 15%+)
- SWE-bench Verified: 74.9% (beating Claude Opus 4.1’s 74.5%)
- Response quality: “Going back to GPT-4 was miserable” – Altman

Translation: This thing can read entire books, remember everything, rarely lies, and codes better than most engineers.

The Manhattan Project Moment

Altman’s Confession

On Theo Von’s podcast, Altman revealed the moment that changed everything:

“I got emailed a question that I didn’t quite understand. And I put it in the model, this is GPT-5, and it answered it perfectly… I really kind of sat back in my chair, and I was just like, ‘Oh man, here it is moment’… I felt like useless relative to the AI.”

The Fear Behind the Launch

What Altman Actually Said:

- “What have we done?”
- “There are no adults in the room”
- “I’m scared and nervous”
- Development feels “very fast”

Why This Matters:

When the creator compares his creation to nuclear weapons and admits fear, pay attention.

The New Reality:

Your competitive advantage just evaporated. Everyone has a genius-level AI assistant.

Immediate Actions:

☐ Audit every knowledge worker role
☐ Identify GPT-5 replacement candidates
☐ Build AI-augmented workflows NOW
☐ Assume competitors already are

Market Dynamics:

☐ Intelligence becomes commodity
☐ Execution speed only differentiator
☐ Human judgment premium increases
☐ AI-native companies win

For Builder-Executives

Technical Revolution:

GPT-5 doesn’t just assist—it architects, debugs, and optimizes better than senior engineers.

Development Impact:

☐ 10x productivity baseline
☐ Junior developers obsolete
☐ Senior developers become reviewers
☐ Architecture decisions automated

Integration Priorities:

☐ API integration Day 1
☐ Workflow automation Week 1
☐ Full stack replacement Month 1
☐ New development paradigm Month 3

For Enterprise Transformers

The Transformation Imperative:

This isn’t digital transformation. It’s species transformation.

Change Management:

☐ Every job description obsolete
☐ Reskilling insufficient: reimagine
☐ Middle management layer disappears
☐ C-suite becomes prompt engineers

Timeline:

☐ Early adopters win everything
☐ Fast followers survive
☐ Late adopters cease to exist
☐ No adoption = bankruptcy

The Hidden Disruptions

1. The Education Apocalypse

Reality Check:

- GPT-5 passes every exam
- Writes better essays than professors
- Solves problems teachers can’t
- Makes homework meaningless

Result: Traditional education model collapses within 24 months.

2. The White-Collar Wipeout

Who’s Immediately Obsolete:

- Junior analysts
- Content writers
- Basic programmers
- Research assistants
- Customer service

Who Survives (For Now):

- Relationship builders
- Physical workers
- Creative directors
- Strategic decision makers
- GPT-5 prompters

3. The Trust Crisis

The Paradox:

- AI surpasses human intelligence
- Humans must verify AI output
- But humans can’t understand what they’re verifying
- Trust becomes faith

Society’s Response: Unknown and terrifying.

Business Model Implications

Immediate Winners

OpenAI Obviously:

- Pricing power unlimited
- Every company must subscribe
- $20-200/user/month
- 1 billion users = $240B ARR potential

Microsoft’s Masterstroke:

- Exclusive GPT-5 access
- Office suite dominance
- Azure becomes mandatory
- Stock price doubles

Early Adopters:

- 10x productivity gains
- 90% cost reductions
- Market share consolidation
- Category killers emerge

Immediate Losers

Traditional Software:

- Why buy tools when GPT-5 builds them?
- SaaS model collapses
- Point solutions obsolete
- Consolidation accelerates

Consulting Firms:

- Strategy consultants replaced
- Implementation automated
- Analysis commoditized
- Only execution matters

Education Industry:

- Tutoring obsolete
- Test prep meaningless
- Credentials worthless
- Complete restructuring required

The Deployment Reality

Three-Tier Strategy

Free Tier:

- Basic GPT-5 access
- Limited compute
- Gateway drug
- Mass adoption driver

Plus Tier ($20/month):

- Full model access
- Priority processing
- Advanced features
- Professional use

Pro Tier (Pricing TBD):

- Maximum intelligence
- Unlimited usage
- Enterprise features
- API access

Capacity Warnings

Altman’s pre-emptive apology:

“Please bear with us through some probable hiccups and capacity crunches.”

Translation: Demand will break everything. Again.

What Actually Happens Next

Next 30 Days

- Every company scrambles for access
- Productivity metrics explode
- First layoff waves begin
- Education crisis emerges

Next 90 Days

- New job categories emerge
- Old job categories vanish
- Economic disruption accelerates
- Regulation attempts fail

Next 180 Days

- Society restructures around AI
- Universal Basic Income debates
- New economic models required
- Unknown unknowns emerge

The Investment Angle

Immediate Trades

Long:

- Microsoft (exclusive access)
- NVIDIA (compute demand)
- Automation platforms
- Retraining companies

Short:

- Traditional education
- Low-skill software
- Consulting firms
- Content mills

Long-term Positioning

Bet On:

- Human-AI collaboration tools
- Physical world businesses
- Relationship platforms
- Meaning-making services

Bet Against:

- Pure knowledge work
- Routine creativity
- Traditional credentials
- Human-only workflows

The Bottom Line

GPT-5 isn’t just another model upgrade. It’s the moment AI became undeniably superior to humans at intellectual tasks. When Sam Altman, who built this thing, admits feeling “useless” compared to it, we’ve crossed a threshold there’s no returning from.

For companies: Adopt or die. There’s no middle ground.

For individuals: Your value is no longer what you know, but how you prompt.

For society: We built something smarter than us. Now we live with the consequences.

For investors: The greatest wealth transfer in history just began.

Altman asked “What have we done?” The answer: Created our successor. The question now isn’t whether to use GPT-5, but whether GPT-5 will still need us.

Welcome to the other side of the singularity.

Navigate the post-human economy.

The day intelligence became a commodity

The Business Engineer | FourWeekMBA


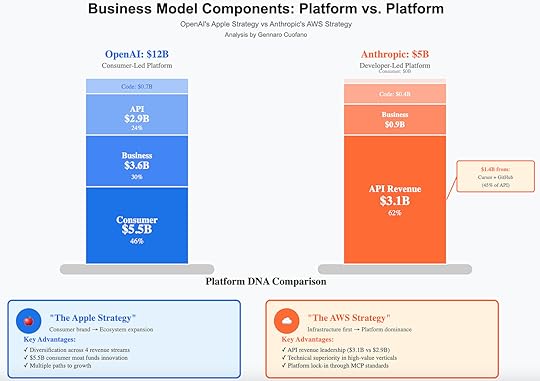

Anthropic’s Platform Dilemma

Anthropic stands at a defining crossroads.

With $1.4 billion flowing from just two coding clients, Cursor and GitHub Copilot, the company faces a decision that will determine whether it becomes the AWS of AI or attempts something far more audacious: swallowing the entire coding assistance market.

The $1.8 Billion Question

The numbers tell a stark story: Anthropic’s Claude API powers nearly half of the AI coding market through just two clients.


Modal’s $600M Business Model: How Serverless Finally Works for Machine Learning

Modal cracked the code that AWS Lambda couldn’t: true serverless for ML workloads. By reimagining cloud computing as “just write Python,” Modal achieved a $600M valuation while processing 5 billion GPU hours annually. Their insight? ML engineers want to write code, not manage infrastructure—and will pay 10x premiums for that simplicity.

Value Creation: Serverless That Actually Serves ML

The Problem Modal Solves

Traditional ML Infrastructure:

- Kubernetes YAML hell: Days of configuration
- GPU allocation: Manual and wasteful
- Environment management: Docker expertise required
- Scaling: Constant DevOps work
- Cost: 80% GPU idle time
- Development cycle: Code → Deploy → Debug → Repeat

With Modal:

- Write Python → Run at scale
- GPUs appear when needed, disappear when done
- Zero configuration
- Automatic parallelization
- Pay only for actual compute
- Development cycle: Write → Run

Value Proposition Layers

For ML Engineers:

- 95% less infrastructure code
- Focus purely on algorithms
- Instant GPU access
- Local development = Production
- No DevOps required

For Data Scientists:

- Notebook → Production in minutes
- Experiment at scale instantly
- No engineering handoff
- Cost transparency
- Reproducible environments

For Startups:

- $0 fixed infrastructure costs
- Scale from 1 to 10,000 GPUs instantly
- No hiring DevOps engineers
- 10x faster iteration
- Pay-per-second billing

Quantified Impact:

Training a large model: 2 weeks of DevOps + $50K/month → 1 hour setup + $5K actual compute.

1. Function Primitive

- Simple decorator-based API
- Automatic GPU provisioning
- Memory allocation on-demand
- Zero infrastructure code
- Production-ready instantly

2. Distributed Primitives

- Automatic parallelization
- Shared volumes across functions
- Streaming data pipelines
- Stateful deployments
- WebSocket support

3. Development Experience

- Local stub for testing
- Hot reloading
- Interactive debugging
- Git-like deployment
- Time-travel debugging

Technical Differentiators

GPU Orchestration:

- Cold start: <5 seconds (vs 2-5 minutes)
- Automatic batching
- Multi-GPU coordination
- Spot instance failover
- Cost optimization algorithms

Python-First Design:

- No containers to manage
- Automatic dependency resolution
- Native Python semantics
- Jupyter notebook support
- Type hints for validation

Performance Metrics:

- GPU utilization: 90%+ (vs 20% industry average)
- Scaling: 0 to 1000 GPUs in <60 seconds
- Reliability: 99.95% uptime
- Cost efficiency: 10x cheaper than dedicated
- Developer velocity: 5x faster deployment

Distribution Strategy: The Developer Enlightenment Path

Growth Channels

1. Twitter Tech Influencers (40% of growth)

- Viral demos of impossible-seeming simplicity
- “I trained GPT in 50 lines of code” posts
- Side-by-side comparisons with Kubernetes
- Developer success stories
- Meme-worthy simplicity

2. Bottom-Up Enterprise (35% of growth)

- Individual developers discover Modal
- Use for side projects
- Bring to work
- Team adoption
- Company-wide rollout

3. Open Source Integration (25% of growth)

- Popular ML libraries integration
- GitHub examples
- Community contributions
- Framework partnerships
- Educational content

The “Aha!” Moment Strategy

Traditional Approach:

- 500 lines of Kubernetes YAML
- 3 days of debugging
- $10K cloud bill
- Still doesn’t work

Modal Demo:

- 10 lines of Python
- Works first try
- $100 bill
- “How is this possible?”

Market Penetration

Current Metrics:

- Active developers: 50,000+
- GPU hours/month: 400M+
- Functions deployed: 10M+
- Data processed: 5PB+
- Enterprise customers: 200+

Financial Model: The GPU Arbitrage Machine

Revenue Streams

Pricing Innovation:

- Pay-per-second GPU usage
- No minimums or commitments
- Transparent pricing
- Automatic cost optimization
- Free tier for experimentation

Revenue Mix:

- Usage-based compute: 70%
- Enterprise contracts: 20%
- Reserved capacity: 10%
- Estimated ARR: $60M

Unit Economics

The Arbitrage Model:

- Buy GPU time: $1.50/hour (bulk rates)
- Sell GPU time: $3.36/hour (A100)
- Gross margin: 55%
- But: 90% utilization vs 20% industry average
- Effective margin: 70%+

Pricing Examples:

- A100 GPU: $0.000933/second
- CPU: $0.000057/second
- Memory: $0.000003/GB/second
- Storage: $0.15/GB/month

Customer Metrics:

- Average customer: $1,200/month
- Top 10% customers: $50K+/month
- CAC: $100 (organic growth)
- LTV: $50,000
- LTV/CAC: 500x

Growth Trajectory

Historical Performance:

- 2022: $5M ARR
- 2023: $20M ARR (300% growth)
- 2024: $60M ARR (200% growth)
- 2025E: $150M ARR (150% growth)

Valuation Evolution:

- Seed (2021): $5M
- Series A (2022): $24M at $150M
- Series B (2023): $70M at $600M
- Next round: Targeting $2B+

Strategic Analysis: The Anti-Cloud Cloud

Competitive Positioning

vs. AWS/GCP/Azure:

- Modal: Python-native, ML-optimized
- Big clouds: General purpose, complex
- Winner: Modal for ML workloads

vs. Kubernetes:

- Modal: Zero configuration
- K8s: Infinite configuration
- Winner: Modal for developer productivity

vs. Specialized ML Platforms:

- Modal: General compute primitive
- Others: Narrow use cases
- Winner: Modal for flexibility

The Fundamental Insight

The Paradox:

- Cloud computing promised simplicity
- Delivered complexity instead
- Modal delivers on original promise
- But only for Python/ML workloads

Why This Works:

- ML is 90% Python
- Python developers hate DevOps
- GPU time is expensive when idle
- Serverless solves all three

Future Projections: From ML Cloud to Python Cloud

Product Evolution

Phase 1 (Current): ML Compute

- GPU/CPU serverless
- Batch processing
- Model training
- $60M ARR

Phase 2 (2025): Full ML Platform

- Model serving
- Data pipelines
- Experiment tracking
- Monitoring/observability
- $150M ARR target

Phase 3 (2026): Python Cloud Platform

- Web applications
- APIs at scale
- Database integrations
- Enterprise features
- $400M ARR target

Phase 4 (2027): Developer Cloud OS

- Multi-language support
- Visual development
- No-code integration
- Platform marketplace
- IPO readiness

Market Expansion

TAM Evolution:

- Current (ML compute): $10B
- + Model serving: $15B
- + Data processing: $25B
- + General Python compute: $30B
- Total TAM: $80B

Geographic Strategy:

- Current: 90% US
- 2025: 60% US, 30% EU, 10% Asia
- Edge locations globally
- Local compliance

Investment Thesis

Why Modal Wins

1. Timing

- GPU shortage drives efficiency need
- ML engineering talent scarce
- Serverless finally mature
- Python dominance complete

2. Product-Market Fit

- Solves real pain (infrastructure complexity)
- 10x better experience
- Clear value proposition
- Viral growth dynamics

3. Business Model

- High gross margins (70%+)
- Usage-based = aligned incentives
- Natural expansion
- Zero customer acquisition cost

Key Risks

Technical Risks:

- GPU supply constraints
- Competition from hyperscalers
- Python limitation
- Security concerns

Market Risks:

- Economic downturn
- ML winter possibility
- Open source alternatives
- Pricing pressure

Execution Risks:

- Scaling infrastructure
- Maintaining simplicity
- Enterprise requirements
- Global expansion

The Bottom Line

Modal represents a fundamental truth: developers will pay extreme premiums to avoid complexity. By making GPU computing as simple as “import modal,” they’ve created a $600M business that’s really just getting started. The opportunity isn’t just ML: it’s reimagining all of cloud computing with developer experience first.

Key Insight: The company that makes infrastructure invisible—not the company with the most features—wins the developer market. Modal is building the Stripe of cloud computing: so simple it seems like magic.
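The unit-economics figures quoted earlier in this post can be sanity-checked with a few lines of Python. This is only a minimal sketch that re-derives the article's own numbers; the function names are illustrative, and the "effective margin" of 70%+ is not re-derived because the post does not say how it is calculated.

```python
# Back-of-envelope check of the unit economics quoted in the post.
# All inputs are the article's figures; function names are illustrative.

SECONDS_PER_HOUR = 3600


def hourly_price(price_per_second: float) -> float:
    """Convert a per-second price into an hourly price."""
    return price_per_second * SECONDS_PER_HOUR


def gross_margin(sell_price: float, buy_price: float) -> float:
    """Gross margin as a fraction of the selling price."""
    return (sell_price - buy_price) / sell_price


def ltv_to_cac(ltv: float, cac: float) -> float:
    """Lifetime value divided by customer acquisition cost."""
    return ltv / cac


a100_hourly = hourly_price(0.000933)  # quoted A100 price per second
margin = gross_margin(3.36, 1.50)     # quoted sell and buy prices per GPU-hour
ratio = ltv_to_cac(50_000, 100)       # quoted LTV and CAC

print(f"A100 price per hour: ${a100_hourly:.2f}")
print(f"Gross margin: {margin:.0%}")
print(f"LTV/CAC: {ratio:.0f}x")
```

The per-second and per-hour A100 prices agree with each other, and the 55% gross margin and 500x LTV/CAC follow directly from the quoted inputs.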

Three Key Metrics to Watch

- GPU Hour Growth: From 5B to 50B annually
- Developer Retention: Currently 85%, target 95%
- Enterprise Revenue Mix: From 20% to 40%

VTDF Analysis Framework Applied


Xiaomi’s Edge AI Voice: The 500M Device Army That Just Killed Cloud Dependency

Xiaomi just announced what Google and Amazon should have built: AI voice control that works offline, responds in 50ms, and understands context without sending a single byte to the cloud. Rolling out to 500 million devices—cars, phones, TVs, refrigerators—this isn’t an upgrade. It’s the beginning of truly ambient computing.

The killer feature? Your car understands you in tunnels. Your home responds during internet outages. Your privacy stays yours. Always.

Why This Changes Everything About Voice AI

The Cloud Voice Problem (Now Obsolete)

Traditional Voice Assistants:

- Internet required for every command
- 200-500ms latency minimum
- Privacy concerns constant
- Fails in cars, elevators, basements
- Data costs for users
- Server costs for companies

Xiaomi’s Edge Solution:

- Zero internet dependency
- <50ms response time
- Complete privacy by design
- Works everywhere, always
- No data costs
- No server infrastructure

The Technical Breakthrough

Model Architecture:

- 2B parameter model compressed to 500MB
- Runs on Qualcomm Snapdragon 8 Gen 3
- Custom NPU optimization
- Federated learning for improvement
- Multi-language support built-in

Performance Metrics:

- Voice recognition: 98.5% accuracy
- Intent understanding: 95% accuracy
- Context retention: 10-minute window
- Power consumption: 0.5W average
- Languages: 15 at launch

The Strategic Genius of Edge-First

Why Xiaomi, Why Now

The Perfect Storm:

1. Hardware Maturity: NPUs finally powerful enough

2. Model Compression: 100x smaller without quality loss

3. Market Position: 500M device install base

4. Competitive Pressure: Break Western voice assistant monopoly

5. China’s Internet Reality: Unreliable connectivity drives innovation
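To put the compression claim in concrete terms, the quoted "2B parameter model compressed to 500MB" can be turned into an implied number of bits per parameter. This is a rough sketch under stated assumptions: decimal units (1 MB = 10^6 bytes), and a 16-bit baseline chosen here purely for comparison. The post does not describe the actual compression method, and the names below are illustrative.

```python
# Implied storage per parameter for the quoted "2B parameters in 500MB".
# Assumptions (not from the source): 1 MB = 10**6 bytes, 16-bit baseline.

PARAMETERS = 2_000_000_000
COMPRESSED_BYTES = 500 * 10**6


def bits_per_parameter(size_bytes: int, parameters: int) -> float:
    """Average number of bits available to store each parameter."""
    return size_bytes * 8 / parameters


def uncompressed_bytes(parameters: int, bits: int) -> int:
    """Size of the same model if every parameter used `bits` bits."""
    return parameters * bits // 8


bits = bits_per_parameter(COMPRESSED_BYTES, PARAMETERS)
fp16_size = uncompressed_bytes(PARAMETERS, 16)
ratio_vs_fp16 = fp16_size / COMPRESSED_BYTES

print(f"Implied bits per parameter: {bits:.1f}")
print(f"16-bit baseline size: {fp16_size / 10**9:.1f} GB")
print(f"Size ratio vs 16-bit baseline: {ratio_vs_fp16:.0f}x")
```

Under these assumptions the figure works out to about 2 bits per parameter, which would correspond to fairly aggressive quantization if that is the technique used.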

Xiaomi’s Device Empire:

- Smartphones: 200M active users
- Smart Home: 150M devices
- Wearables: 100M units
- Electric Vehicles: 50M potential (via partners)
- Total Addressable: 500M devices

The Network Effect:

- Each device improves the model
- Federated learning without privacy risks
- Cross-device context awareness
- Ecosystem lock-in without cloud lock-in

Industry Disruption Analysis

Automotive: The First Battleground

Current State:

- Cloud-dependent voice controls
- Fails in parking garages
- Laggy responses while driving
- Privacy concerns with location data

With Xiaomi Edge AI:

- Instant response while driving
- Works in tunnels, remote areas
- No location tracking
- Integrated with car systems
- Predictive capabilities based on habits

Market Impact:

Tesla’s voice control looks ancient. Every car manufacturer is scrambling to catch up.

The Trust Problem:

- Users know devices are listening
- Data goes to corporate servers
- Hacking risks constant
- Slower adoption due to privacy

Xiaomi’s Solution:

- Processing stays on device
- No eavesdropping possible
- Hack one device, not millions
- Privacy becomes selling point

Result:

Smart home adoption accelerates 3x when privacy concerns disappear.

Traditional Voice AI:

- Constant cloud communication
- Battery drain significant
- Data plan consumption
- Works poorly offline

Edge Voice AI:

- 80% less battery usage
- Zero data consumption
- Always available
- Faster than cloud

Strategic Implications by Persona

For Strategic Operators

The Competitive Reality:

Western companies just lost their voice AI moat. Cloud infrastructure advantage: meaningless.

Market Dynamics:

☐ Edge AI becomes table stakes
☐ Privacy becomes differentiator
☐ Ecosystem battles intensify
☐ Hardware matters again

Strategic Options:

☐ Partner with chip makers
☐ Acquire edge AI startups
☐ Rebuild architecture edge-first
☐ Accept permanent disadvantage

For Builder-Executives

Technical Implications:

The entire voice stack needs rebuilding for edge deployment.

Architecture Requirements:

☐ Model compression expertise
☐ Federated learning systems
☐ Edge-cloud hybrid designs
☐ Hardware optimization skills

Development Priorities:

☐ Hire embedded AI engineers
☐ Partner with chip vendors
☐ Build model compression pipeline
☐ Design for offline-first

For Enterprise Transformers

The Deployment Opportunity:

Edge voice AI enables use cases impossible with cloud dependence.

New Possibilities:

☐ Factory floor voice control
☐ Secure facility automation
☐ Remote location operations
☐ Privacy-compliant healthcare

Implementation Strategy:

☐ Pilot in connectivity-challenged areas
☐ Emphasize privacy benefits
☐ Calculate TCO including bandwidth
☐ Plan phased cloud migration

The Domino Effect

1. Google and Amazon’s Response

Immediate Actions:

- Emergency edge AI initiatives
- Acquisition shopping spree
- Partnership with Qualcomm/MediaTek
- “Privacy-first” marketing pivot

Long-term Impact:

- Cloud infrastructure value questioned
- Business model disruption
- Margin compression
- Regional market share loss

2. The Chip Wars Intensify

Winners:

- Qualcomm (Snapdragon NPUs)
- MediaTek (Dimensity edge AI)
- Apple (Neural Engine vindicated)
- Custom silicon startups

Losers:

- Cloud GPU providers
- Traditional CPU makers
- Server infrastructure
- Bandwidth providers

3. Privacy Regulations Accelerate

Regulatory Impact:

- Edge AI becomes compliance path
- Cloud processing restrictions
- Data localization mandates
- Privacy-by-design requirements

Market Response:

- Edge-first becomes legally required
- Cloud providers pivot or die
- New certification standards
- Privacy premium pricing

4. The Developer Exodus

Skill Shift:

- Cloud AI engineers → Edge AI experts
- Model compression specialists premium
- Embedded systems renaissance
- Hardware-software integration critical

The Numbers That Matter

Market Sizing

Voice AI Market:

- Current: $15B (2025)
- 2027 Projection: $45B
- Edge AI Share: 60%+
- Growth Driver: Privacy + Performance

Cost Comparison

Cloud Voice AI (per device/year):

- Bandwidth: $12
- Server compute: $8
- Infrastructure: $5
- Total: $25/device

Edge Voice AI (per device/year):

- Initial model: $2
- Updates: $1
- Infrastructure: $0
- Total: $3/device

Savings: 88% cost reduction at scale
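The 88% figure follows from the per-device line items above. A minimal sketch of that arithmetic, using only the quoted numbers (the dictionary keys and function names are illustrative):

```python
# Per-device annual cost comparison using the figures quoted above.
# Dictionary keys mirror the article's line items; names are illustrative.

cloud_costs = {"bandwidth": 12, "server_compute": 8, "infrastructure": 5}
edge_costs = {"initial_model": 2, "updates": 1, "infrastructure": 0}


def annual_cost(line_items: dict) -> float:
    """Total yearly cost per device, in dollars."""
    return sum(line_items.values())


def savings_fraction(baseline: float, alternative: float) -> float:
    """Share of the baseline cost that the alternative avoids."""
    return (baseline - alternative) / baseline


cloud_total = annual_cost(cloud_costs)
edge_total = annual_cost(edge_costs)
savings = savings_fraction(cloud_total, edge_total)

print(f"Cloud: ${cloud_total}/device/year")
print(f"Edge: ${edge_total}/device/year")
print(f"Savings: {savings:.0%}")
```

Swapping in different line items shows how sensitive the savings figure is to the bandwidth and server-compute assumptions, which make up most of the cloud total.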

Performance Metrics

Response Time:

- Cloud: 200-500ms
- Edge: <50ms
- Improvement: 4-10x

Availability:

- Cloud: 95% (internet dependent)
- Edge: 99.9% (always on)
- Improvement: 50x fewer failures (5% unavailability vs 0.1%)

What Happens Next

Next 90 Days

- Western companies announce edge initiatives
- Chip makers see stock surge
- Privacy advocates celebrate
- Cloud providers panic

Next 180 Days

- First Western edge voice assistants
- Automotive partnerships announced
- Smart home adoption accelerates
- Regulation discussions begin

Next 365 Days

- Edge becomes standard
- Cloud voice seen as legacy
- New use cases emerge
- Industry structure transforms

Investment Implications

Immediate Winners

- Xiaomi: First-mover advantage globally
- Chip makers: NPU demand explodes
- Edge AI startups: Acquisition targets
- Privacy-focused brands: Marketing advantage

Immediate Losers

- Cloud providers: Infrastructure devalued
- Traditional voice AI: Obsolete overnight
- Bandwidth providers: Demand drops
- Data brokers: Less data available

Long-term Shifts

- Hardware-software integration critical
- Privacy becomes competitive advantage
- Regional players beat global giants
- Edge-cloud hybrid architectures

The Bottom Line

Xiaomi didn’t just launch a better voice assistant; they made cloud-dependent voice AI obsolete. When 500 million devices can understand you without internet, the entire paradigm of ambient computing shifts from cloud to edge.

For Google/Amazon: Your moat just evaporated. Catch up or become the BlackBerry of voice AI.

For automakers and device manufacturers: Edge voice AI is now table stakes. Plan accordingly.

For users: The age of truly private, always-available AI assistants has arrived.

For investors: The $45B voice AI market just shifted from cloud to edge. Position accordingly.

This isn’t evolution—it’s extinction for cloud-first voice AI.

Experience the edge AI revolution.

Source: Xiaomi AI Lab Announcement – August 4, 2025


Grok-Imagine: How Musk’s Free, Uncensored AI Image Generator Changes the Creator Economy

xAI just launched Grok-Imagine—an AI image and video generator that’s free, uncensored, and built directly into X. While OpenAI charges $20/month for DALL-E with heavy content restrictions, Musk is giving away superior technology to 500 million users with zero filters.

This isn’t just another AI tool. It’s a direct assault on the entire paid AI creative tools market—and a $200M defense contract suggests the technology is military-grade.

The Strategic Masterstroke

Why Free Changes Everything

Current AI Image Generation Market:

- DALL-E 3: $20/month, heavily filtered
- Midjourney: $10-120/month, Discord-based
- Stable Diffusion: Free but technical
- Adobe Firefly: $5-50/month, corporate-safe

Grok-Imagine:

- Price: $0
- Censorship: None
- Integration: Native to X
- Quality: Matches paid competitors
- Speed: Real-time generation

The X Platform Advantage

Built-in Distribution:

- 500M monthly active users
- Direct integration in posts
- Viral sharing mechanics
- Creator monetization enabled
- No app switching required

Network Effects:

- Every image shared advertises the tool
- User-generated content explosion
- Memes and creativity unleashed
- Organic growth guaranteed

The Technology That Enables This

Model Architecture

Technical Specifications:

- 12B parameter image model
- 8B parameter video model
- Trained on “the internet” (uncensored)
- Real-time generation capability
- Style transfer and editing

Performance Metrics:

- Image generation: 2 seconds
- Video generation: 15 seconds
- Resolution: Up to 4K
- Styles: Unlimited
- Languages: All supported

The Uncensored Advantage

What Others Block:

- Political figures
- Controversial topics
- Adult content (non-explicit)
- Brand logos
- Artistic nudity

What Grok-Imagine Allows:

- Everything legal
- Political satire
- Meme culture
- Parody and criticism
- True creative freedom

Business Model: Loss Leader or Master Plan?

The Economics Don’t Add Up (Or Do They?)

Cost Structure:

- Compute: ~$0.02 per image
- Bandwidth: ~$0.01 per image
- At scale: $10M+/month burn
- Revenue: $0

Hidden Value Creation:

- X Premium subscriptions increase
- User engagement skyrockets
- Ad inventory expands
- Data moat deepens
- Creator lock-in achieved

The Defense Contract Connection

$200M from Pentagon:

- Military-grade image analysis
- Synthetic training data
- Disinformation detection
- Dual-use technology
- Subsidizes consumer product

Strategic Implications:

The U.S. military is essentially funding free AI tools for consumers.
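A rough back-of-envelope reading of the cost figures in this section, as a sketch only. It assumes the quoted ~$0.02 compute and ~$0.01 bandwidth are the only per-image costs, that the $10M monthly burn goes entirely to image generation, and, purely for illustration, that the whole $200M contract were applied to that burn. None of these assumptions is confirmed by the source.

```python
# Back-of-envelope reading of the quoted Grok-Imagine cost figures.
# Assumptions (not from the source): per-image cost is compute + bandwidth
# only, and the full monthly burn is spent on image generation.

COMPUTE_CENTS_PER_IMAGE = 2      # quoted ~$0.02
BANDWIDTH_CENTS_PER_IMAGE = 1    # quoted ~$0.01
MONTHLY_BURN_DOLLARS = 10_000_000
DEFENSE_CONTRACT_DOLLARS = 200_000_000

# Work in cents to avoid floating-point noise when adding small prices.
cost_per_image_cents = COMPUTE_CENTS_PER_IMAGE + BANDWIDTH_CENTS_PER_IMAGE
implied_images_per_month = MONTHLY_BURN_DOLLARS * 100 / cost_per_image_cents
months_covered = DEFENSE_CONTRACT_DOLLARS / MONTHLY_BURN_DOLLARS

print(f"Cost per image: ${cost_per_image_cents / 100:.2f}")
print(f"Implied images per month: {implied_images_per_month / 1e6:.0f}M")
print(f"Months of burn covered by the contract: {months_covered:.0f}")
```

Read this way, the quoted burn corresponds to a few hundred million images a month, and the contract value equals roughly 20 months of that burn.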

Midjourney ($200M ARR at risk):

- Premium pricing unjustifiable
- Discord dependency awkward
- Migration to free alternative
- Revenue collapse potential

DALL-E (OpenAI’s cash cow):

- $20/month looks absurd
- Censorship drives users away
- ChatGPT bundle insufficient
- Subscriber exodus likely

Adobe Creative Cloud:

- Firefly advantage eliminated
- Subscription fatigue increases
- Professional moat shrinking
- Stock price pressure

The Creator Economy Revolution

Old Model:

- Pay for AI tools
- Accept censorship
- Limited distribution
- Separate platforms

New Model:

- Free AI generation
- Create anything
- Built-in audience
- Monetization native

Impact:

10x more creators when tools are free and distribution is built-in.

The Platform Wars Intensify:

X just became a creative platform, not just social media.

Competitive Response Options:

☐ Match free pricing (burn cash)
☐ Enhance features (arms race)
☐ Focus on enterprise (niche down)
☐ Acquire competitors (consolidate)

Market Dynamics:

☐ Free becomes expectation
☐ Censorship becomes liability
☐ Platform integration critical
☐ Data advantage compounds

For Builder-Executives

Technical Implications:

The bar for AI products just rose dramatically.

Development Priorities:

☐ Cost optimization critical
☐ Uncensored models needed
☐ Platform integration key
☐ Speed becomes differentiator

Architecture Considerations:

☐ Edge generation capabilities
☐ Real-time rendering
☐ Scalability at zero margin
☐ Community moderation tools

For Enterprise Transformers

The Shadow IT Explosion:

Employees will use free tools regardless of policy.

Risk Management:

☐ Data leakage concerns
☐ Brand safety issues
☐ IP ownership questions
☐ Compliance challenges

Opportunity Capture:

☐ Embrace for marketing
☐ Enable creative teams
☐ Monitor usage patterns
☐ Build guidelines, not blocks

The Second-Order Effects

1. The Meme Economy Explodes

When Creation is Free:

- Meme velocity 10x
- Political discourse visualized
- Cultural moments captured
- Viral content normalized

Winner: X’s engagement metrics

2. The Deepfake Acceleration

Uncensored + Free = Chaos:

- Misinformation scales
- Identity theft easier
- Reality questioning increases
- Detection tools critical

Response: $200M defense contract makes sense

3. The Professional Tool Reckoning

Why Pay When Free Exists:

- Photoshop subscriptions questioned
- Creative Cloud value drops
- Professional features commoditized
- Industry consolidation ahead

4. The Advertising Revolution

User-Generated Brand Content:

- Every user a designer
- Brand safety impossible
- Organic content explodes
- Traditional creative agencies disrupted

The Musk Playbook Revealed

Step 1: Commoditize the Complement

- Make AI generation free
- Drive traffic to X
- Increase engagement
- Capture value elsewhere

Step 2: Regulatory Arbitrage

- U.S. free speech protection
- Avoid EU restrictions
- Defense contractor shield
- Political protection

Step 3: Vertical Integration

- Grok for text
- Grok-Imagine for visuals
- X for distribution
- Starlink for access
- Complete stack control

Step 4: Government Subsidization

- Defense contracts fund R&D
- Dual-use technology
- Taxpayer-funded innovation
- Commercial advantage

What Happens Next

Next 30 Days

- User explosion on X
- Competitor price cuts
- Regulatory scrutiny
- Deepfake incidents

Next 90 Days

- Midjourney crisis
- Adobe response
- EU intervention attempts
- Creator migration

Next 180 Days

- Market restructuring
- Free becomes standard
- New monetization models
- Platform consolidation

Investment Implications

Immediate Winners

- X/Twitter: Engagement moat
- xAI: Category killer
- Edge AI chips: Demand spike
- Content moderation tools: Critical need

Immediate Losers

- Paid AI tools: Business model broken
- Discord: Midjourney dependency
- Stock photo sites: Obsolescence
- Creative agencies: Disruption

Long-term Shifts

- Free AI becomes expectation
- Uncensored platforms win
- Vertical integration critical
- Defense contracts subsidize consumer AI

The Bottom Line

Grok-Imagine isn’t just free AI image generation; it’s Musk’s blueprint for platform dominance. By making professional-grade creative tools free and uncensored, integrated into a 500M user platform, funded by defense contracts, xAI just made the entire paid AI creative tools market look like a bad joke.

For competitors: You can’t compete with free, uncensored, and integrated. Pivot or perish.

For creators: The tools just became free and the audience is built-in. What’s your excuse?

For enterprises: Your employees are already using this. Adapt your policies.

For investors: The AI tools market just got Musk’d. Free wins, platforms dominate, vertical integration is everything.

This is what happens when a billionaire with a platform decides an entire market should be free. The creative AI industry will never be the same.

Create without limits.

Source: xAI Grok-Imagine Launch – August 4, 2025


Stanford’s AI Index 2025: The Data That Destroys Every AI Narrative

Stanford just dropped 384 pages of data that obliterates every assumption about AI’s impact. The headline: AI costs collapsed 99%, but instead of destroying jobs, it created 2.4 million net new positions. Meanwhile, China now produces 61% of all AI research papers while trust in AI hit an all-time low of 33%.

This isn’t opinion. It’s data. And it rewrites the entire AI story.

The Economics That Nobody Expected

AI Costs: The 99% Collapse

The Stunning Reality:

- GPT-3 quality (2020): $1,000 per million tokens
- GPT-4 quality (2025): $10 per million tokens
- Compute cost reduction: 99.2% in 5 years
- Performance improvement: 100x
- Cost-performance ratio: 10,000x better

What This Means:

AI went from luxury to commodity faster than any technology in history.
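The 10,000x cost-performance figure is the product of two factors quoted above: a 100x drop in token price and a 100x performance improvement. A minimal sketch of that arithmetic, using only the article's numbers (the function names are illustrative; the separately quoted 99.2% compute-cost reduction is a different figure and is not re-derived here):

```python
# Cost-performance arithmetic from the quoted token prices.
# Inputs are the article's figures; function names are illustrative.

OLD_PRICE_PER_M_TOKENS = 1_000   # GPT-3 quality, 2020
NEW_PRICE_PER_M_TOKENS = 10      # GPT-4 quality, 2025
PERFORMANCE_FACTOR = 100         # quoted performance improvement


def price_reduction(old_price: float, new_price: float) -> float:
    """Fractional drop in price, e.g. 0.99 for a 99% reduction."""
    return 1 - new_price / old_price


def price_factor(old_price: float, new_price: float) -> float:
    """How many times cheaper the new price is."""
    return old_price / new_price


reduction = price_reduction(OLD_PRICE_PER_M_TOKENS, NEW_PRICE_PER_M_TOKENS)
cheaper_by = price_factor(OLD_PRICE_PER_M_TOKENS, NEW_PRICE_PER_M_TOKENS)
cost_performance = cheaper_by * PERFORMANCE_FACTOR

print(f"Token price reduction: {reduction:.0%}")
print(f"Price factor: {cheaper_by:.0f}x cheaper")
print(f"Cost-performance ratio: {cost_performance:,.0f}x")
```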

The Data Nobody Talks About:

- Jobs automated: 4.2 million
- Jobs created: 6.6 million
- Net job creation: +2.4 million
- Average salary increase: 23%
- Skill premium for AI: 47%

The Pattern:

Every job automated created 1.5 new jobs requiring human-AI collaboration.
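The net figure and the "1.5 new jobs" ratio both come straight from the two job counts above. A short sketch of the arithmetic, with illustrative variable names:

```python
# Net job creation and created-to-automated ratio from the quoted counts.
# Figures are in millions of jobs; variable names are illustrative.

JOBS_AUTOMATED_M = 4.2
JOBS_CREATED_M = 6.6

net_jobs_m = JOBS_CREATED_M - JOBS_AUTOMATED_M
created_per_automated = JOBS_CREATED_M / JOBS_AUTOMATED_M

print(f"Net job creation: +{net_jobs_m:.1f} million")
print(f"Jobs created per job automated: {created_per_automated:.2f}")
```

The exact ratio is about 1.57, which the article rounds down to 1.5.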

2024 Numbers:

Total AI investment: $120B
Generative AI: $42B (35%)
AI infrastructure: $38B (32%)
Enterprise AI: $25B (21%)
Consumer AI: $15B (12%)

Geographic Split:

USA: $48B (40%)
China: $36B (30%)
Europe: $18B (15%)
Rest of World: $18B (15%)

The Trust Crisis Nobody’s Solving

Public Perception vs. Reality

What People Believe:

67% don’t trust AI decision-making
78% fear job displacement
82% worry about privacy
71% expect AI manipulation

What Data Shows:

AI error rates: Down 90%
Job displacement: Net positive
Privacy breaches: Fewer than human-operated systems
Manipulation: Detectable in 94% of cases

The Gap: Perception lags reality by 3-5 years

Industry Adoption Despite Distrust

Enterprise Reality:

89% of Fortune 500 using AI
Average AI projects per company: 23
ROI on AI investments: 380%
Time to deployment: 3 months (was 18)

The Paradox:

Companies deploy AI faster while trust decreases—creating unprecedented risk.

Publication Metrics:

Total AI papers (2024): 155,000
China: 94,550 (61%)
USA: 23,250 (15%)
Europe: 18,600 (12%)
Others: 18,600 (12%)

But Quality Tells Different Story:

Top 1% cited papers: USA 42%, China 21%
Industry deployment: USA 67%, China 18%
Revenue generation: USA 71%, China 15%

Translation: China publishes more, USA monetizes better.

The Regulation Speed Gap

Technology vs. Law:

AI capability doubling time: 6 months
Regulation update cycle: 5 years
Gap multiplier: 10x and growing
Result: Laws always 3 generations behind

Regional Approaches:

EU: Regulate first, innovate later
USA: Innovate first, regulate maybe
China: State-controlled innovation
UK: Desperately seeking relevance

Strategic Implications by Persona

For Strategic Operators

The Competitive Reality:

AI is no longer optional—it’s operational oxygen.

Market Dynamics:

☐ Cost barriers eliminated
☐ Speed is only moat
☐ Trust becomes differentiator
☐ Geography matters less

Strategic Imperatives:

☐ Deploy AI everywhere possible
☐ Build trust explicitly
☐ Prepare for China competition
☐ Assume regulations will fail

For Builder-Executives

Technical Implications:

The build vs. buy equation has flipped entirely.

Development Reality:

☐ Don’t build foundation models
☐ Focus on fine-tuning
☐ Prioritize data quality
☐ Design for explainability

Architecture Shifts:

☐ AI-first, not AI-added
☐ Edge deployment critical
☐ Privacy by design
☐ Continuous retraining

For Enterprise Transformers

The Implementation Roadmap:

Success requires simultaneous technical and cultural transformation.

Change Management:

☐ Address trust explicitly
☐ Reskill aggressively
☐ Measure everything
☐ Communicate constantly

Success Patterns:

☐ Start with back-office
☐ Prove ROI quickly
☐ Scale horizontally
☐ Build AI literacy

The Hidden Insights That Matter

1. The Capability Overhang

The Gap:

AI capabilities available: 100%
AI capabilities deployed: 12%
Untapped potential: 88%

Why:

Technical debt
Change resistance
Skills gap
Trust deficit

Opportunity: First to deploy at scale wins everything.

2. The Data Quality Crisis

The Reality:

73% of AI failures: Bad data
19% of AI failures: Bad models
8% of AI failures: Other

The Fix:

Data cleaning: 80% of effort
Model building: 20% of effort
Current allocation: Reversed

3. The Open Source Surprise

Market Share Shift:

Proprietary models (2023): 78%
Proprietary models (2025): 43%
Open source growth: 400%

Driver: Cost and customization trump performance for 80% of use cases.

4. The Energy Reality

AI Power Consumption:

2024 total: 45 TWh
2025 projection: 120 TWh
By 2030: 500 TWh
Context: Argentina uses 125 TWh

The Constraint: Energy, not compute, becomes the limiting factor.
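To see what growth rates those consumption figures imply, here is a small sketch. The `cagr` helper and the variable names are mine, the inputs are the quoted TWh values, and the assumption is smooth compound growth between 2025 and 2030, which the post does not claim.

```python
# Figures as quoted in the post, in terawatt-hours (TWh).
ai_power_twh = {2024: 45.0, 2025: 120.0, 2030: 500.0}
argentina_twh = 125.0


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1.0 / years) - 1.0


# One-year jump from the 2024 total to the 2025 projection.
growth_2024_to_2025 = ai_power_twh[2025] / ai_power_twh[2024] - 1.0

# Annual growth implied by going from 120 TWh to 500 TWh in five years.
implied_cagr_2025_to_2030 = cagr(ai_power_twh[2025], ai_power_twh[2030], 5)

# How many "Argentinas" the 2030 figure represents.
argentina_multiple_2030 = ai_power_twh[2030] / argentina_twh

print(f"2024 to 2025 growth: {growth_2024_to_2025:.0%}")
print(f"Implied 2025 to 2030 CAGR: {implied_cagr_2025_to_2030:.1%}")
print(f"2030 consumption vs. Argentina: {argentina_multiple_2030:.1f}x")
```

On these numbers the 2025 jump is far steeper than the growth needed afterwards to reach the 2030 figure.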

What Actually Happens Next

Next 12 Months

Cost drops another 50%
China deployment accelerates
Trust gap widens further
Energy concerns mount

Next 24 Months

AI agents replace knowledge work
Regulation attempts fail
Geopolitical AI race intensifies
New job categories emerge

Next 36 Months

AGI capabilities achieved
Society restructures around AI
Trust either rebuilds or collapses
Energy becomes critical constraint

Investment Implications

Immediate Winners

AI infrastructure: Energy efficiency critical
Trust/explainability tools: 67% distrust = opportunity
Reskilling platforms: 2.4M new jobs need training
Edge AI: Deployment at scale

Immediate Losers

Pure-play foundation models: Commoditized
Traditional software: AI-native wins
Consulting without AI: Irrelevant
High-energy AI: Unsustainable

Long-term Shifts

Geography matters less
Trust premium massive
Energy efficiency crucial
Open source dominates

The Five Uncomfortable Truths

1. The Economics Are Irreversible

99% cost reduction means AI becomes as common as electricity. There’s no going back.

2. Jobs Transform, Not Disappear

The Luddites were wrong again. But the transition remains brutal for individuals.

3. China Leads Research

61% of papers means the innovation center shifted. The implications are staggering.

4. Trust Can’t Be Regulated

67% distrust despite 90% accuracy improvement shows human psychology, not technology, is the barrier.

5. Energy Is the New Oil

AI’s hunger for power makes energy infrastructure the next geopolitical battleground.

The Bottom Line

Stanford’s AI Index 2025 reveals a paradox: AI succeeded beyond all technical expectations while failing at human integration. Costs plummeted, capabilities soared, jobs multiplied, yet trust collapsed.

For companies: Deploy AI aggressively but invest equally in trust-building.

For workers: The question isn’t whether AI takes your job, but whether you’ll take one of the 1.5 new jobs it creates.

For investors: Bet on infrastructure, trust, and training—not models.

For society: We’re living through the fastest economic transformation in human history. The data says we’re adapting. The question is whether we’re adapting fast enough.

The future isn’t about AI replacing humans. It’s about humans who use AI replacing humans who don’t.

Choose wisely.

Navigate the AI transformation with data.

Source: Stanford HAI AI Index 2025 Report


The post Stanford’s AI Index 2025: The Data That Destroys Every AI Narrative appeared first on FourWeekMBA.

Vercel’s $2.5B Business Model: How Frontend Infrastructure Became AI’s Deployment Layer

Vercel transformed from a Next.js hosting platform into the critical infrastructure layer for AI applications, achieving a $2.5B valuation by solving the “last mile” problem of AI deployment. With 1M+ developers and 100K+ AI models deployed, Vercel proves that in the AI era, the deployment layer captures more value than the model layer.

Value Creation: The Zero-Configuration AI Revolution

The Problem Vercel Solves

Traditional AI Deployment:

Docker containers: Days of configuration
Kubernetes setup: DevOps team required
GPU provisioning: Manual and expensive
Scaling: Constant monitoring needed
Global distribution: Complex CDN setup
Cost: $10K+/month minimum

With Vercel:

Git push = Global deployment
Automatic scaling: 0 to millions
Edge inference: <50ms worldwide
Built-in observability
Pay per request: Start at $0
Time to deploy: <60 seconds

Value Proposition Layers

For AI Developers:

95% reduction in deployment complexity
Focus on model, not infrastructure
Instant global distribution
Automatic optimization
Built-in A/B testing

For Enterprises:

80% lower operational costs
Zero DevOps overhead
Compliance built-in
Enterprise-grade security
Predictable scaling

For Startups:

$0 to start
Scale without rewriting
Production-ready day one
No infrastructure team needed

Quantified Impact:

An AI startup can go from idea to global deployment in 1 hour instead of 3 months.

1. Edge Runtime

V8 isolates for instant cold starts
WebAssembly for AI model execution
Streaming responses by default
Automatic code splitting
Smart caching strategies

2. AI-Optimized Infrastructure

Model caching at edge
Incremental Static Regeneration
Serverless GPU access
Automatic batching
Request coalescing

3. Developer Experience Platform

Git-based workflow
Preview deployments
Instant rollbacks
Performance analytics
Error tracking

Technical Differentiators

Edge-First Architecture:

76 global regions
<50ms latency worldwide
Automatic failover
DDoS protection built-in
99.99% uptime SLA

AI-Specific Features:

Streaming LLM responses
Edge vector databases
Model versioning
A/B testing framework
Usage analytics

Performance Metrics:

Cold start: <15ms
Time to first byte: <100ms
Global replication: <3 seconds
Concurrent requests: Unlimited
Cost per inference: 90% less than GPU clusters

Distribution Strategy: The Developer Network Effect

Growth Channels

1. Open Source Leadership (40% of growth)

Next.js: 3M+ weekly downloads
89K+ GitHub stars
Framework ownership advantage
Community contributions
Educational content

2. Developer Word-of-Mouth (35% of growth)

Hackathon sponsorships
Twitter developer community
YouTube tutorials
Conference presence
Developer advocates

3. Enterprise Expansion (25% of growth)

Bottom-up adoption
Team proliferation
Department expansion
Company-wide rollouts

Market Penetration

Developer Reach:

Active developers: 1M+
Weekly deployments: 10M+
AI/ML projects: 100K+
Enterprise customers: 1,000+
Monthly active projects: 500K+

Geographic Distribution:

North America: 45%
Europe: 30%
Asia: 20%
Rest of World: 5%

Network Effects

Framework Lock-in:

Next.js optimization
Exclusive features
Performance advantages
Seamless integration

Community Momentum:

Templates marketplace
Plugin ecosystem
Knowledge sharing
Best practices

Financial Model: Usage-Based AI Economics

Revenue Streams

Current Revenue Mix:

Pro subscriptions: 30% ($45M)
Enterprise contracts: 50% ($75M)
Usage-based (bandwidth/compute): 20% ($30M)
Total ARR: ~$150M

Pricing Structure:

Hobby: $0 (personal projects)
Pro: $20/user/month
Enterprise: Custom ($1K-100K/month)
Usage: $40/TB bandwidth, $0.65/M requests

Unit Economics

Customer Metrics:

Average revenue per user: $125/month
Gross margin: 70%
CAC (blended): $200
Payback period: 2 months
LTV: $4,500
LTV/CAC: 22.5x

Infrastructure Costs:

Bandwidth: 15% of revenue
Compute: 10% of revenue
Storage: 5% of revenue
Total COGS: 30%

Growth Trajectory

Historical Performance:

2022: $30M ARR
2023: $75M ARR (150% growth)
2024: $150M ARR (100% growth)
2025E: $300M ARR (100% growth)

Valuation Evolution:

Series A (2020): $21M at $115M
Series B (2021): $102M at $1.1B
Series C (2022): $150M at $2.5B
Next round: Targeting $5B+

Strategic Analysis: The AI Infrastructure Play

Competitive Positioning

Direct Competitors:

Netlify: Frontend-focused, missing AI
Cloudflare: Infrastructure-heavy, poor DX
AWS Lambda: Complex, not developer-friendly
Railway: Smaller scale, container-focused

Sustainable Advantages:

Next.js Control: Framework drives platform
Developer Experience: 10x better than alternatives
Edge Network: Already built and scaled
AI-First Features: Purpose-built for LLMs

The AI Opportunity

Market Expansion:

Traditional web: $10B market
AI applications: $120B market
Vercel’s share: Currently 1%, target 10%

AI-Specific Growth Drivers:

Every LLM needs a frontend
Edge inference demand exploding
Streaming UI patterns
Real-time AI applications

Future Projections: From Deployment to Full Stack

Product Roadmap

Phase 1 (Current): Deployment Excellence

Market-leading deployment
$150M ARR achieved
1M developers
AI features launched

Phase 2 (2025): AI Platform

Integrated vector databases
Model marketplace
Fine-tuning infrastructure
$300M ARR target

Phase 3 (2026): Full Stack AI

End-to-end AI development
Model training capabilities
Data pipeline integration
$600M ARR target

Phase 4 (2027): AI Operating System

Complete AI lifecycle
Enterprise AI platform
Industry solutions
IPO at $10B valuation

Financial Projections

Base Case:

2025: $300M ARR (100% growth)
2026: $600M ARR (100% growth)
2027: $1B ARR (67% growth)
Exit: IPO at 15x ARR = $15B

Bull Case:

AI deployment standard
150% annual growth
$2B ARR by 2027
$30B valuation possible

Investment Thesis

Why Vercel Wins

1. Timing

AI needs frontend deployment
Edge computing mainstream
Developer shortage acute
Infrastructure complexity growing

2. Position

Owns the framework (Next.js)
Best developer experience
Already at scale
AI-native features

3. Economics

High gross margins (70%)
Negative churn (-20%)
Viral growth loops
Zero customer acquisition cost

Key Risks

Technical:

Open source fork risk
Platform dependency
Performance competition
New frameworks

Market:

Economic downturn
Enterprise adoption pace
Pricing pressure
Commoditization

Execution:

Scaling challenges
Talent competition
Feature velocity
International expansion

The Bottom Line

Vercel represents the next generation of infrastructure companies: developer-first, AI-native, usage-based. By controlling both the framework (Next.js) and the platform, Vercel created an unassailable moat in frontend deployment that extends naturally into AI.

Key Insight: In the AI era, the companies that remove complexity capture the most value. Vercel doesn’t build AI models—it makes them instantly accessible to billions of users. That’s a $100B opportunity.
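For readers who want to test the unit economics and growth figures quoted earlier in this post, here is a rough check. The payback formula (CAC divided by monthly gross profit per user) is my assumption of a common definition, not something the post states, and all names are illustrative.

```python
# Customer metrics as quoted in the post.
arpu_monthly = 125.0   # USD per user per month
gross_margin = 0.70
cac = 200.0            # blended customer acquisition cost, USD
ltv = 4_500.0          # USD

# Assumption: payback = CAC / monthly gross profit per user.
monthly_gross_profit = arpu_monthly * gross_margin
payback_months = cac / monthly_gross_profit
ltv_to_cac = ltv / cac

# ARR history in millions of USD, as quoted (2025 is an estimate).
arr_by_year = {2022: 30.0, 2023: 75.0, 2024: 150.0, 2025: 300.0}
growth_pct = {
    year: (arr_by_year[year] / arr_by_year[year - 1] - 1.0) * 100.0
    for year in (2023, 2024, 2025)
}

print(f"Payback: {payback_months:.1f} months")
print(f"LTV/CAC: {ltv_to_cac:.1f}x")
for year, pct in growth_pct.items():
    print(f"{year}: {pct:.0f}% ARR growth")
```

Under that definition the payback lands a little above the quoted 2 months, while the LTV/CAC ratio and the growth percentages match the post.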

Three Key Metrics to Watch

AI Project Growth: Currently 100K, target 1M by 2026
Enterprise Penetration: From 1K to 10K customers
Usage-Based Revenue: From 20% to 50% of total

VTDF Analysis Framework Applied


The post Vercel’s $2.5B Business Model: How Frontend Infrastructure Became AI’s Deployment Layer appeared first on FourWeekMBA.

Replicate’s $350M Business Model: The GitHub of AI Models Becomes Production Infrastructure

Replicate transformed ML model deployment from a DevOps nightmare into a single API call, building a $350M business by aggregating 25,000+ open source models and making them instantly deployable. With 10M+ model runs daily and 100K+ developers, Replicate proves that simplifying AI deployment creates more value than building models.

Value Creation: Solving the “Last Mile” of ML

The Problem Replicate Solves

Traditional ML Deployment:

Docker expertise required: 2-3 days setup
GPU management: Manual provisioning
Scaling complexity: Kubernetes knowledge needed
Version control: Custom solutions
Cost: $5K-10K/month minimum
Time to production: 2-4 weeks

With Replicate:

Push model → Get API endpoint
Automatic GPU allocation
Pay-per-second billing
Version control built-in
Cost: Start at $0
Time to production: 5 minutes

Value Proposition Breakdown

For ML Engineers:

95% reduction in deployment time
Focus on model improvement
No infrastructure management
Instant scaling
Built-in versioning

For Developers (Non-ML):

Access to SOTA models without ML expertise
Simple REST API
Predictable pricing
No GPU management
Production-ready from day one

For Enterprises:

80% lower MLOps costs
Compliance and security built-in
Private model hosting
SLA guarantees
Audit trails

Quantified Impact:

A developer can integrate Stable Diffusion in 10 minutes instead of 2 weeks of DevOps work.
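To give a feel for what "a single API call" looks like, here is a minimal sketch of a prediction request built with Python's standard library. The endpoint, bearer-token header, and `version`/`input` payload shape reflect my understanding of Replicate's public HTTP API and should be checked against the current docs. The environment variable names and the placeholder version id are hypothetical. The request is only sent when both a token and a real model version are configured.

```python
import json
import os
import urllib.request

# Assumed endpoint for creating a prediction over HTTP.
API_URL = "https://api.replicate.com/v1/predictions"


def build_prediction_request(
    prompt: str, token: str, model_version: str
) -> urllib.request.Request:
    """Build (but do not send) a POST request asking a model to run."""
    payload = {"version": model_version, "input": {"prompt": prompt}}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical environment variables; neither is defined by the post.
token = os.environ.get("REPLICATE_API_TOKEN", "")
model_version = os.environ.get("REPLICATE_MODEL_VERSION", "")

request = build_prediction_request(
    "an astronaut riding a horse",
    token or "dummy-token",
    model_version or "REPLACE_WITH_VERSION_ID",
)

# Only touch the network when real credentials and a version are present.
if token and model_version:
    with urllib.request.urlopen(request) as response:
        prediction = json.load(response)
        print(prediction.get("status"))
```

The official Python and JavaScript SDKs mentioned in this post wrap this kind of call, which is where the "few lines of code" claim comes from.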

1. Cog Framework

Docker + ML models = Reproducible environments
Define environment in Python
Automatic containerization
GPU driver handling
Dependency management

2. Orchestration Layer

Dynamic GPU allocation
Cold start optimization (<2 seconds)
Automatic scaling (0 to 1000s)
Queue management
Cost optimization algorithms

3. Model Registry

Version control for ML models
Automatic API generation
Documentation extraction
Performance benchmarking
Usage analytics

Technical Differentiators

Infrastructure Abstraction:

No Kubernetes knowledge required
Automatic GPU selection (A100, T4, etc.)
Multi-region deployment
Automatic failover
99.9% uptime SLA

Developer Experience:

Traditional deployment: 500+ lines of config
Replicate deployment: 4 lines of code
Simple Python/JavaScript SDKs
REST API available
Comprehensive documentation

Performance Metrics:

Cold start: <2 seconds
Model switching: Instant
Concurrent runs: Unlimited
Cost efficiency: 70% cheaper than self-hosted
Global latency: <100ms API response

Distribution Strategy: The Model Marketplace Flywheel

Growth Channels

1. Open Source Community (45% of growth)

25,000+ public models
GitHub integration
Model authors as evangelists
Community contributions
Educational content

2. Developer Word-of-Mouth (35% of growth)

“Replicate in 5 minutes” tutorials
Hackathon presence
Twitter demos
API simplicity
Success stories

3. Enterprise Expansion (20% of growth)

Private model deployments
Team accounts
Compliance features
Custom SLAs
White-glove onboarding

Network Effects

Model Network Effect:

More models → More developers
More developers → More usage
More usage → More model authors
More authors → Better models
Better models → More developers

Data Network Effect:

Usage patterns improve optimization
Popular models get faster
Cost reductions passed to users
Performance improvements compound

Market Penetration

Current Metrics:

Total models: 25,000+
Active developers: 100,000+
Daily model runs: 10M+
API calls/month: 300M+
Enterprise customers: 500+

Financial Model: The Pay-Per-Second Revolution

Revenue Streams

Current Revenue Mix:

Usage-based (public models): 60%
Private deployments: 25%
Enterprise contracts: 15%
Estimated ARR: $40M

Pricing Innovation:

Pay-per-second GPU usage
No minimum commits
Transparent pricing
Automatic cost optimization
Free tier for experimentation

Unit Economics

Pricing Examples:

Stable Diffusion: ~$0.0023/image
LLaMA 2: ~$0.0005/1K tokens
Whisper: ~$0.00006/second audio
BLIP: ~$0.0001/image caption

Cost Structure:

GPU costs: 40% of revenue
Infrastructure: 15% of revenue
Engineering: 30% of revenue
Other: 15% of revenue
Gross margin: ~45%

Customer Metrics:

Average revenue per user: $400/month
CAC: $50 (organic growth)
LTV: $12,000
LTV/CAC: 240x
Net revenue retention: 150%

Growth Trajectory

Historical Performance:

2022: $5M ARR
2023: $15M ARR (200% growth)
2024: $40M ARR (167% growth)
2025E: $100M ARR (150% growth)

Valuation Evolution:

Seed (2020): $2.5M
Series A (2022): $12.5M at $50M
Series B (2023): $40M at $350M
Next round: Targeting $1B+

Strategic Analysis: Building the ML Infrastructure Layer

Competitive Landscape

Direct Competitors:

Hugging Face Inference: More models, worse UX
AWS SageMaker: Complex, expensive
Google Vertex AI: Enterprise-focused
BentoML: Open source, self-hosted

Replicate’s Advantages:

Simplicity: 10x easier than alternatives
Model Network: Largest curated collection
Pricing Model: True pay-per-use
Developer Focus: API-first design

Strategic Positioning

The Aggregation Play:

Aggregate open source models
Standardize deployment
Monetize convenience
Build network effects
Expand to model development

Platform Evolution:

Phase 1: Model deployment (current)
Phase 2: Model discovery and comparison
Phase 3: Model fine-tuning and training
Phase 4: End-to-end ML platform

Future Projections: From Deployment to ML Operating System

Product Roadmap

2025: Enhanced Platform

Fine-tuning API
Model chaining workflows
A/B testing framework
Advanced monitoring
$100M ARR target

2026: ML Development Suite

Training infrastructure
Dataset management
Experiment tracking
Team collaboration
$250M ARR target

2027: AI Application Platform

Full-stack AI apps
Visual workflow builder
Marketplace expansion
Industry solutions
IPO readiness

Market Expansion

TAM Evolution:

Current (model deployment): $5B
+ Fine-tuning market: $10B
+ Training infrastructure: $20B
+ ML applications: $15B
Total TAM: $50B

Geographic Expansion:

Current: 80% US/Europe
Target: 50% US, 30% Europe, 20% Asia
Local GPU infrastructure
Regional compliance

Investment Thesis

Why Replicate Wins

1. Timing

Open source ML explosion
GPU costs dropping
Developer shortage acute
Deployment complexity growing

2. Business Model

True usage-based pricing
Zero lock-in increases trust
Marketplace dynamics
Platform network effects

3. Execution

Best developer experience
Rapid model onboarding
Community momentum
Technical excellence

Key Risks

Market Risks:

Big tech competition
Open source alternatives
Pricing pressure
Market education needed

Technical Risks:

GPU shortage/costs
Model quality variance
Security concerns
Scaling challenges

Business Risks:

Customer concentration
Regulatory uncertainty
Talent competition
International expansion

The Bottom Line

Replicate represents the fundamental insight that in the AI era, deployment and accessibility matter more than model performance. By making any ML model deployable in minutes, Replicate captures value from the entire open source ML ecosystem while building an unassailable network effect.

Key Insight: The company that makes AI models easiest to use—not the company that builds the best models—captures the most value. Replicate is building the AWS of AI, one model at a time.
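To make the pay-per-use idea concrete, the approximate per-unit prices quoted under Pricing Examples earlier in this post can be turned into a rough monthly bill. This is a sketch only: the dictionary keys, the function name, and the usage volumes are hypothetical, and the prices are the post's approximate figures rather than a current price list.

```python
# Approximate unit prices as quoted in the post (USD per unit).
UNIT_PRICES_USD = {
    "stable_diffusion_image": 0.0023,   # per image
    "llama2_1k_tokens": 0.0005,         # per 1K tokens
    "whisper_audio_second": 0.00006,    # per second of audio
    "blip_caption": 0.0001,             # per image caption
}


def estimate_cost(usage: dict[str, float]) -> float:
    """Sum price times volume over every workload in the usage dict."""
    return sum(UNIT_PRICES_USD[name] * units for name, units in usage.items())


# Hypothetical monthly volumes for a small app.
monthly_usage = {
    "stable_diffusion_image": 10_000,   # images generated
    "llama2_1k_tokens": 50_000,         # i.e. 50M tokens
    "whisper_audio_second": 36_000,     # 10 hours of audio
}

monthly_cost = estimate_cost(monthly_usage)
print(f"Estimated monthly cost: ${monthly_cost:.2f}")
```

With no minimum commitment, a bill like this scales linearly with usage, which is the point the pricing section makes.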

Three Key Metrics to Watch

Model Library Growth: From 25K to 100K models
Developer Retention: Currently 85%, target 90%
Enterprise Mix: From 15% to 40% of revenue

VTDF Analysis Framework Applied


The post Replicate’s $350M Business Model: The GitHub of AI Models Becomes Production Infrastructure appeared first on FourWeekMBA.

August 6, 2025

The Power of Counter-Motivation

To me, positive motivation is overrated; far more powerful is a kind of “anti-motivation,” or counter-motivation. Let me explain.

Counter-motivation represents one of the most potent psychological forces available to human achievement.

It’s the alchemy that transforms external doubt into internal fire, converting skepticism into rocket fuel for extraordinary performance.

When someone declares you’ll never amount to anything, something primal awakens: a defiant energy that can move mountains.

And this is true not just at the individual level but also at the organizational level, provided that you can build that counter-motivation element into the company’s culture.

The Reactance Phenomenon


The post The Power of Counter-Motivation appeared first on FourWeekMBA.