Gennaro Cuofano's Blog, page 24

September 6, 2025

Zipf’s Law of AI Usage: Why 1% of Features Get 99% of Use

In 1949, linguist George Zipf discovered something strange: in any language, the most common word appears twice as often as the second most common, three times as often as the third, and so on. This pattern – where a few items dominate while most barely register – appears everywhere from city sizes to wealth distribution. Now it’s showing up in AI usage, and it’s breaking everyone’s product strategies.
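As a rough formalization of the pattern described above (the symbols are notation introduced here for illustration, not taken from the original post): if f(r) is the frequency of the item at rank r, the classical form of Zipf’s Law can be written as

```latex
f(r) \approx \frac{f(1)}{r}, \qquad \text{or more generally} \qquad f(r) \propto \frac{1}{r^{s}}, \quad s > 0
```

With s = 1 this gives the "twice as often as the second, three times as often as the third" pattern. A larger exponent s means steeper concentration at the top.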

Zipf’s Law reveals an uncomfortable truth about AI products: while companies race to add hundreds of features and capabilities, users stubbornly stick to using the same few functions over and over. The distribution is so extreme it seems impossible – until you look at the data.

The Brutal Reality of AI Feature Usage

The 1% Rule

Analyze any AI product’s usage data and you’ll find:

1% of features generate 50%+ of all usage

5% of features generate 80%+ of all usage

20% of features generate 95%+ of all usage

80% of features are virtually never used

This isn’t poor product design – it’s Zipf’s Law in action.

Real-World Examples

ChatGPT Usage Pattern:

1. Basic Q&A (40% of all queries)

2. Writing assistance (20%)

3. Code help (15%)

4. Translation (8%)

5. Summarization (5%)

…Everything else (about 12%)

Image AI Usage Pattern:

1. Portrait enhancement (35%)

2. Background removal (25%)

3. Style transfer (15%)

4. Object removal (10%)

…Advanced features (about 15%)

The pattern is universal: extreme concentration at the top, rapid decay down the tail.
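One way to sanity-check concentration figures like these is to compute what an idealized Zipf distribution would predict. The sketch below is an illustration under stated assumptions, not the author’s analysis: the function names, the 100-feature product, and the exponent values are all hypothetical. Under a pure Zipf law with exponent 1, the top feature out of 100 takes roughly 19% of usage, so a single feature holding 40% or more implies a steeper exponent.

```python
def zipf_shares(n_features: int, s: float = 1.0) -> list[float]:
    """Share of total usage for ranks 1..n under a Zipf law with exponent s."""
    weights = [1.0 / rank ** s for rank in range(1, n_features + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def top_share(shares: list[float], fraction: float) -> float:
    """Cumulative usage share captured by the top `fraction` of features."""
    k = max(1, round(len(shares) * fraction))
    return sum(shares[:k])


# Hypothetical product with 100 features, compared across three exponents.
for s in (1.0, 1.5, 2.0):
    shares = zipf_shares(100, s)
    print(
        f"s={s}: top 1% -> {top_share(shares, 0.01):.0%}, "
        f"top 5% -> {top_share(shares, 0.05):.0%}, "
        f"top 20% -> {top_share(shares, 0.20):.0%}"
    )
```

Running this against a real product’s feature count shows which exponent, if any, is consistent with the measured head of the distribution.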

Why Zipf’s Law Emerges in AI

Cognitive Load Economics

Humans have limited cognitive bandwidth. Learning new AI features has costs:

Discovery Cost: Finding the feature exists

Learning Cost: Understanding how to use it

Memory Cost: Remembering it exists

Switching Cost: Changing established workflows

Users economize by mastering a few high-value features and ignoring the rest.

The Habit Formation Curve

Once users find features that work, habit formation locks them in:

Day 1-7: Experimentation phase – try many features

Day 8-30: Consolidation phase – narrow to useful ones

Day 30+: Habit phase – use same features repeatedly

After 30 days, usage patterns solidify into a Zipfian distribution.

The Satisficing Principle

Users don’t optimize – they satisfice (find “good enough”):

Basic chat solves 80% of needs

Why learn advanced features for 20% improvement?

Cognitive effort isn’t worth marginal gains

“Good enough” dominates “optimal”

This creates winner-take-all dynamics within product features.

The Product Strategy Implications

The Feature Bloat Trap

Companies keep adding features because:

Competition Pressure: Match competitor feature lists

Marketing Needs: New features for announcements

User Requests: Vocal minorities demand edge cases

Engineering Pride: Technical capability demonstrations

But Zipf’s Law means most features are waste.

The Core Feature Paradox

The paradox: Users choose products based on feature breadth but use them for feature depth.

Selection Criteria: “Can it do everything?”

Usage Reality: “I only use it for one thing”

Retention Driver: Excellence at core features

Churn Reason: Core feature degradation

Companies optimize for selection (breadth) but should optimize for retention (depth).

The Long Tail Illusion

The “long tail” strategy suggests serving niche needs profitably. But in AI:

Long tail features have near-zero usage

Maintenance costs are significant

Complexity degrades core experience

Support burden is disproportionate

The long tail is a cost center, not a profit center.

Strategic Responses to Zipf’s Law

The Ruthless Focus Strategy

Accept Zipf’s Law and optimize for it:

Identify Power Features: Find your 1% that drives 50% usage

10x Investment: Put all resources into dominant features

Aggressive Pruning: Remove underused features

Depth Over Breadth: Better to do one thing perfectly

Examples: Grammarly (grammar checking), Jasper (marketing copy), GitHub Copilot (code completion)

The Progressive Disclosure Strategy

Hide complexity from most users:

Layer 1: Core features (visible to all)

Layer 2: Power features (available on request)

Layer 3: Advanced features (hidden by default)

Layer 4: API/Developer features (separate documentation)

This respects Zipf’s Law while serving edge cases.
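The four layers above can be sketched as a small visibility rule. This is a minimal illustration, not a prescribed design: the `Layer` and `Feature` types, the `visible_features` helper, and the feature names in the catalog are all hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum


class Layer(IntEnum):
    CORE = 1       # visible to all users
    POWER = 2      # available on request
    ADVANCED = 3   # hidden by default
    DEVELOPER = 4  # API features with separate documentation


@dataclass(frozen=True)
class Feature:
    name: str
    layer: Layer


def visible_features(features: list[Feature], unlocked: Layer = Layer.CORE) -> list[str]:
    """Return the names of features at or below the layer the user has unlocked."""
    return [f.name for f in features if f.layer <= unlocked]


# Hypothetical feature catalog, for illustration only.
CATALOG = [
    Feature("chat", Layer.CORE),
    Feature("summarize", Layer.CORE),
    Feature("custom_instructions", Layer.POWER),
    Feature("batch_mode", Layer.ADVANCED),
    Feature("rest_api", Layer.DEVELOPER),
]

print(visible_features(CATALOG))               # default view: core features only
print(visible_features(CATALOG, Layer.POWER))  # after the user opts into power features
```

Defaulting `unlocked` to the core layer keeps the simplest view as the one most users see, while every deeper layer stays reachable without a separate product.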

The Modular Architecture Strategy

Separate core from periphery:

Core Product: Minimal, perfect, fast

Plugin Ecosystem: Optional additions

Feature Marketplace: Third-party extensions

API Platform: Build your own features

Let Zipf’s Law work for you – most users get simplicity, power users get everything.

The AI-Specific Manifestations

Prompt Engineering Concentration

Even in “open-ended” AI, usage concentrates:

Top Prompts (variations of):

1. “Explain this to me”

2. “Write a [document type] about [topic]”

3. “Fix this [code/text]”

4. “Summarize this”

5. “Translate to [language]”

Despite infinite possibilities, users converge on a few patterns.

The Model Capability Waste

Large models have thousands of capabilities, but users tap into few:

GPT-4 Capabilities: Poetry, analysis, coding, translation, reasoning, math…

Actual Usage: 90% is basic text generation

Capability Utilization: <5% of model potential

Economic Implication: Overpaying for unused capability

This drives the “right-sized model” movement.

The Interface Convergence

All AI interfaces converge to the same few patterns:

Chat interface (dominates everything)

Single input box (maximum simplicity)

Regenerate button (most-used feature)

Copy button (second-most used)

Zipf’s Law drives interface homogenization.

The Business Model Implications

Pricing Power Concentration

Since usage concentrates, so does pricing power:

Core Features: Can charge premium (high usage)

Secondary Features: Must bundle (moderate usage)

Long Tail Features: Can’t monetize (no usage)

Price discrimination should follow Zipfian distribution.

The Freemium Optimization

Zipf’s Law suggests an optimal freemium strategy:

Free Tier: Top 1-2 features (50% of usage value)

Paid Tier: Top 5-10 features (90% of usage value)

Enterprise Tier: Everything (last 10% of value)

This matches willingness-to-pay with usage patterns.
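The tiering rule above can be sketched as a cumulative-share cutoff. This is a minimal sketch under stated assumptions: the `assign_tiers` function, the feature names, and the usage shares are hypothetical, and the 50% and 90% cutoffs simply mirror the figures quoted in the tiers above.

```python
def assign_tiers(
    usage_share: dict[str, float],
    free_cutoff: float = 0.50,
    paid_cutoff: float = 0.90,
) -> dict[str, str]:
    """Assign features to tiers by walking down the usage ranking.

    A feature lands in a tier based on the cumulative usage share of the
    features ranked above it, so the free tier stops growing once it
    already covers `free_cutoff` of usage.
    """
    tiers: dict[str, str] = {}
    cumulative = 0.0
    ranked = sorted(usage_share.items(), key=lambda item: item[1], reverse=True)
    for name, share in ranked:
        if cumulative < free_cutoff:
            tiers[name] = "free"
        elif cumulative < paid_cutoff:
            tiers[name] = "paid"
        else:
            tiers[name] = "enterprise"
        cumulative += share
    return tiers


# Hypothetical usage shares for illustration; they sum to 1.0.
shares = {
    "chat": 0.40,
    "writing": 0.20,
    "code": 0.15,
    "translation": 0.12,
    "summarization": 0.08,
    "fine_tuning": 0.05,
}

for feature, tier in assign_tiers(shares).items():
    print(f"{feature}: {tier}")
```

With these illustrative numbers, the two most-used features end up free, the next three paid, and the least-used one enterprise-only.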

The Competitive Moat Reality

Moats exist only in high-usage features:

Strong Moat: Excellence at #1 used feature

Weak Moat: Breadth of rarely-used features

No Moat: Me-too implementation of everything

Competition happens at the head of the distribution.

The Innovation Dilemma

Where to Innovate?

Zipf’s Law creates an innovation paradox:

Innovate at the Head: Marginal improvements to dominant features

Pro: Affects most users

Con: Incremental, not revolutionary

Innovate at the Tail: Revolutionary new capabilities

Pro: Breakthrough potential

Con: Nobody will use it

Most innovation dies in the tail.

The Feature Discovery Problem

Great features can’t overcome Zipf’s Law:

Discovery Barriers:

Users won’t explore

Habits are established

Cognitive load is real

Switching costs dominate

Even revolutionary features struggle to break into the head.

The Education Futility

Companies think they can educate users out of Zipf’s Law:

Education Attempts:

Onboarding tutorials

Feature announcements

Email campaigns

In-product tooltips

Reality: Usage still follows a Zipfian distribution

You can’t educate away fundamental human behavior.

Living With Zipf’s Law

For Product Managers

Accept reality and design for it:

1. Measure ruthlessly – Know your true distribution

2. Invest accordingly – Resources should follow usage

3. Simplify aggressively – Remove the tail

4. Perfect the core – Excellence at the head matters most

5. Stop feature racing – Breadth is a losing game
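Measuring the distribution, as the first item in the list above recommends, can start from a plain log of feature-usage events. The sketch below is one hedged way to do that: the event log, the feature names, and the helper functions are hypothetical, and the log-log least-squares fit is a crude estimator that is adequate for a first look rather than a rigorous power-law test.

```python
import math
from collections import Counter


def rank_frequency(events: list[str]) -> list[tuple[str, int]]:
    """Count events per feature and return (feature, count) pairs, most used first."""
    return Counter(events).most_common()


def estimate_exponent(counts: list[int]) -> float:
    """Estimate the Zipf exponent as the negated least-squares slope of
    log(count) against log(rank). Needs at least two positive counts."""
    xs = [math.log(rank) for rank in range(1, len(counts) + 1)]
    ys = [math.log(count) for count in counts]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    return -covariance / variance


# Hypothetical event log: each entry names the feature a user invoked.
events = ["chat"] * 1200 + ["summarize"] * 600 + ["translate"] * 400 + ["export"] * 300

ranked = rank_frequency(events)
total = sum(count for _, count in ranked)
for rank, (feature, count) in enumerate(ranked, start=1):
    print(f"{rank}. {feature}: {count / total:.0%} of usage")
print(f"estimated exponent: {estimate_exponent([c for _, c in ranked]):.2f}")
```

An exponent near 1 corresponds to the classical Zipf pattern; a noticeably larger value indicates usage that is even more concentrated at the head.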

Market the head, not the tail:

1. Lead with power features – What people actually use

2. Avoid feature lists – They don’t drive decisions

3. Show depth, not breadth – Excellence over options

4. Target use cases – Specific problems, not capabilities

5. Demonstrate habits – Show repeated use, not one-time tricks

Build business models around Zipf’s Law:

1. Price the head – That’s where value lives

2. Bundle the middle – Package secondary features

3. Abandon the tail – Or make it community-supported

4. Compete on core – That’s where battles are won

5. Differentiate on excellence – Not feature count

As Zipf’s Law becomes understood, expect:

Single-feature AI products

Micro-apps for specific uses

Dramatic simplification

Death of “all-in-one” AI

The Specialization Wave

Products will choose their Zipfian peak:

Writing AI (only writing)

Code AI (only coding)

Image AI (only images)

Analysis AI (only data)

Generalist AI will lose to specialists.

The Interface Revolution

New interfaces that embrace Zipf’s Law:

Single-button products

Zero-learning curve designs

Habit-first interfaces

Invisible AI (no interface at all)

Key Takeaways

Zipf’s Law in AI usage reveals fundamental truths:

1. Feature usage is extremely concentrated – A few features dominate completely

2. Human behavior follows power laws – This isn’t changeable through design

3. Excellence beats breadth – Better to perfect one feature than add ten

4. Habits dominate exploration – Users stick with what works

5. Simplicity is a moat – Complexity is a liability

The winners in AI won’t be those with the most features, but those who:

Identify the vital few features that matter

Perfect those features beyond all competition

Resist the temptation to add complexity

Build business models that align with usage reality

Accept that most features are never used – and that’s okay

Zipf’s Law isn’t a problem to solve – it’s a reality to design for. The question isn’t how to get users to use more features, but how to make the features they do use absolutely perfect. In AI, as in language, a few words do most of the work. The wisdom is knowing which ones.

The post Zipf’s Law of AI Usage: Why 1% of Features Get 99% of Use appeared first on FourWeekMBA.

September 5, 2025

Market Competitive Dynamics

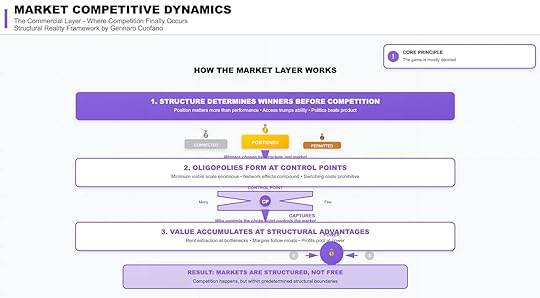

The market layer is the last and most visible layer of the structural reality framework. It is where competition appears to play out, where companies and products collide, and where narratives about “winners” and “losers” are told.

But the core principle here is blunt: the game is mostly decided before competition begins.

By the time companies meet in the open market, their fate is already shaped by structures—geopolitical permission, macroeconomic flows, and infrastructural constraints. Markets may look free, but they operate within boundaries set long before.

1. Structure Determines Winners Before Competition

The defining feature of markets is that position matters more than performance.

Access beats ability: Who can enter a market is often more decisive than who has the best product.

Politics beats product: Regulatory approval, licensing, and national alignment matter more than technical merit.

Position beats performance: Early control of platforms, standards, or channels tilts the board.

Firms that are connected, positioned, and permitted dominate, not because they always outperform, but because structural placement determines opportunity.

Winners are chosen by structure, not by the market contest itself.

2. Oligopolies Form at Control Points

Where structure sets the rules, control points capture the value.

Markets naturally consolidate into oligopolies because:

The minimum viable scale is enormous, making new entry prohibitive.

Network effects compound, favoring incumbents exponentially.

Switching costs trap customers, locking demand to dominant players.

The outcome is predictable: whoever controls the chokepoint controls the market.

This is why in digital infrastructure, a handful of cloud providers dominate, or why in semiconductors, TSMC commands strategic leverage. Control points are where many funnel into few.

3. Value Accumulates at Structural Advantages

Markets appear to reward competition, but value actually pools at structural advantages.

Bottlenecks enable rent extraction: those who control scarce inputs capture margins.

Profit flows follow control, whether of distribution, platforms, or resources.

Power defines pricing: dominant players impose terms not through efficiency, but through position.

This dynamic explains why companies at bottlenecks, like NVIDIA in GPUs, Apple in mobile ecosystems, or Visa in payments, earn outsized returns. It’s not just product excellence; it’s structural positioning.

Result: Markets Are Structured, Not Free

The final truth of the market layer is that competition is real, but bounded.

Firms battle intensely—but only within boundaries already defined by power structures above. They fight over market share, but not over whether they can exist.

Markets are not arenas of perfect freedom; they are fields with predetermined lines.

Strategic Implications

For strategists, this layer reframes how to think about competition:

Stop over-indexing on performance. The best product often loses. Position, access, and political alignment matter more.

Locate control points. Oligopolies form at chokepoints. If you’re not at one, you’re downstream.

Follow the structural rents. Profit pools are not evenly distributed. They concentrate where constraints and dependencies converge.

Conclusion: The Illusion of the Free Market

The Market Competitive Dynamics layer exposes the illusion of the “free market.” Competition exists, but only inside the structural cage built by geopolitics, economics, and infrastructure.

The visible fights—features, prices, marketing campaigns—are secondary. The real game is invisible: who is positioned, who controls the chokepoints, and who holds structural advantage.

In this sense, markets don’t crown winners. Structures do.

Infrastructure Dynamics in AI

The infrastructure layer is where abstract ambitions—political or economic—collide with the immovable wall of physical reality. Unlike policy or markets, this layer does not negotiate. Its core principle is simple: physics doesn’t bend to intentions.

No business model, no fiscal package, and no diplomatic maneuver can override the laws of thermodynamics, the scarcity of materials, or the geography of supply. Infrastructure is the silent but absolute arbiter of what is possible.

1. Absolute Constraints Create Hard Boundaries

The first function of this layer is the establishment of absolute constraints.

While financial systems can create leverage and policy can manipulate incentives, the infrastructure layer imposes limits that cannot be abstracted away.

Four domains are especially decisive:

Power – The maximum energy available defines the ceiling for any industrial or digital expansion. AI data centers, EV fleets, and military modernization all collapse into watts.

Water – Often overlooked until scarcity bites, water constrains everything from semiconductor fabs in Arizona to food systems across Asia.

Metals – Copper, lithium, rare earths, and steel are the hidden architecture of modern economies. They cannot be conjured by fiat; they must be mined, refined, and transported.

Land – Geography imposes unavoidable limits on agriculture, logistics, and industrial concentration. Even with globalization, location still determines vulnerability.

Where macroeconomics can stretch cycles and geopolitics can bend rules, infrastructure enforces boundaries that are not negotiable.

2. Bottlenecks Cascade Through Systems

The second mechanism of this layer is the way bottlenecks cascade.

In complex systems, the most constrained component sets the throughput of the entire system.

A shortage of transformers can stall gigawatt-scale grid expansion, even if solar panels are abundant.

A missing mineral input can derail entire value chains, no matter how much capital is available.

A single chokepoint, such as the Strait of Malacca or TSMC’s fabs, creates systemic fragility far beyond its size.

These constraints ripple outward. One input throttles output everywhere downstream. What looks like a market failure is often a structural bottleneck.
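The bottleneck principle reduces to a minimum over stage capacities in a serial chain. The sketch below is illustrative only: the `system_throughput` function is hypothetical, and the stage names and capacity figures are invented numbers, not data from the post.

```python
def system_throughput(stage_capacity: dict[str, float]) -> tuple[str, float]:
    """Return the limiting stage and the throughput it imposes on a serial chain.

    In a chain where every unit of output must pass through every stage,
    total throughput equals the smallest stage capacity.
    """
    bottleneck = min(stage_capacity, key=lambda stage: stage_capacity[stage])
    return bottleneck, stage_capacity[bottleneck]


# Hypothetical annual capacities, in gigawatts of new grid-connected generation.
grid_buildout = {
    "solar_panels": 12.0,
    "transformers": 3.5,
    "interconnection_permits": 6.0,
}

stage, limit = system_throughput(grid_buildout)
print(f"bottleneck: {stage}, system throughput: {limit} GW/year")
```

Raising any capacity other than the limiting one leaves the result unchanged, which is why abundant panels do not help while transformers are short.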

3. Timescales Disconnect from Market Expectations

The third mechanism is the mismatch between physical timescales and market expectations.

Markets move in quarters. Investors demand return on capital within years. But infrastructure delivers in decades.

Energy projects routinely require 7–15 years from planning to operation.

Transmission lines or rail networks take decades to permit, finance, and construct.

Water systems and mining investments stretch even further, with 30–50 year horizons.

The result is a persistent temporal mismatch. Political cycles and investor horizons underestimate the lead times of physical capacity. By the time shortages manifest, decades of underinvestment have already locked in the crisis.

Result: Physical Reality Determines Possibility

The defining truth of this layer is harsh: no business model survives violating physical constraints.

Startups can disrupt interfaces and incumbents can manipulate finance, but they all eventually run into the same immovable walls—energy density, mineral availability, water access, land geography.

Where the macroeconomic layer bends to strategy, the infrastructure layer enforces reality. Every ambition must be reconciled with what the physical system can deliver.

Strategic Implications

For operators and analysts, three lessons follow:

Trace constraints, not narratives. If you want to see the future, follow the bottlenecks (power grids, water tables, copper mines), not the pitch decks.

Discount short-term optimism. Market forecasts that ignore multi-decade build times are noise. The timeline of physics dominates the timeline of capital.

Map cascade effects. The vulnerability of modern systems lies not in averages but in chokepoints. A single constraint can throttle entire industries.

Conclusion: The Sovereignty of Physics

The Infrastructure Dynamics layer reminds us that ambition, whether political or economic, is irrelevant if it violates physics. Energy, water, metals, and land impose ceilings that cannot be crossed by willpower, money, or code.

This is the layer where all higher-order intentions must submit. The market layer dreams, the macroeconomic layer rationalizes, the geopolitical layer commands—but the infrastructure layer decides.

It decides not by negotiation, but by boundary. And in doing so, it sets the outer perimeter of possibility.

Macroeconomic Environment: Where Power Becomes Policy

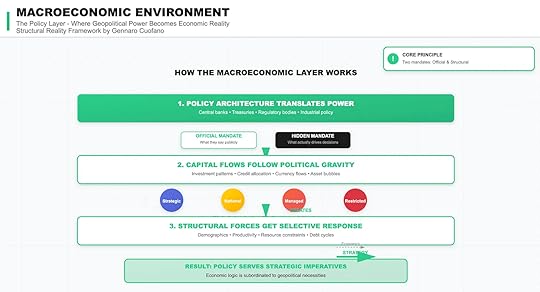

If the geopolitical layer sets the rules of the game, the macroeconomic environment is where those rules are translated into policy, capital allocation, and economic outcomes. It is the layer where sovereignty becomes spreadsheets.

The core principle here is that every macroeconomic system runs on two mandates: an official mandate and a structural mandate.

The official mandate is what central banks, treasuries, and regulators declare: price stability, employment, fiscal discipline.

The structural mandate is what actually drives decisions: geopolitical imperatives, strategic priorities, and survival of the state.

Understanding the gap between the two is essential.

1. Policy Architecture Translates Power

The first mechanism of this layer is the policy architecture.

Institutions such as central banks, treasuries, and regulatory agencies do not act in a vacuum. They are transmission belts that convert power structures into economic reality.

Consider:

Central banks claim independence but act as instruments of state power when crises hit. The Federal Reserve’s emergency facilities in 2008 and 2020 weren’t about abstract price stability; they were about systemic survival.

Industrial policy is officially about competitiveness but is structurally about sovereignty. The U.S. CHIPS Act is not just an economic development program; it is a geopolitical firewall against China.

Regulatory bodies shape financial and industrial flows, often aligning them with strategic objectives rather than market efficiency.

Key insight: The macroeconomic environment is not neutral. It is policy designed to protect and project state power.

2. Capital Flows Follow Political Gravity

The second mechanism is the way capital flows are bent by political gravity.

Officially, capital is supposed to follow efficiency, risk-adjusted returns, and investor choice. Structurally, it flows along the channels that political power allows.

Four archetypes illustrate this:

Strategic flows – Subsidized, incentivized, or guaranteed by governments for long-term security. Example: sovereign investment in energy independence or defense supply chains.

National flows – Directed domestically for employment stability, infrastructure, or strategic industries. Example: Japan’s postwar keiretsu financing model.

Managed flows – Heavily regulated or steered into politically safe directions. Example: China’s capital controls and credit guidance.

Restricted flows – Entirely blocked by sanctions, export controls, or financial exclusion. Example: Russia post-2022 being cut off from dollar settlement systems.

Markets may call these distortions. In structural terms, they are the operating system.

3. Structural Forces Get Selective Response

Macroeconomics is supposed to respond to structural forces like demographics, productivity, debt cycles, and resource constraints. But in practice, those forces get selective attention depending on strategic priorities.

Demographics matter until they conflict with strategic labor policies, migration controls, or security fears.

Productivity growth is ideal, but if it requires reliance on a rival’s technology stack, it will be sacrificed.

Debt cycles are tolerated or extended when collapse would weaken state power, even at the cost of inflation or future instability.

Resource constraints are real, but states bend enormous financial effort to suppress them when national security is at stake (think U.S. shale or China’s rare earth dominance).

Result: economics is not an impartial referee. It bends to strategic imperatives.

The Structural Mandate: Policy as Strategy

The outcome of these three mechanisms is clear: policy serves strategy, not abstract efficiency.

Every central bank statement, fiscal policy, or regulatory action operates with two audiences in mind:

The public, who are given the official mandate: employment, stability, inflation control.

The state, which pursues the hidden mandate: sovereignty, survival, and strategic advantage.

This duality explains why macroeconomic logic often seems inconsistent: why deficits are tolerated in wartime but vilified in peacetime, why interest rates sometimes chase inflation and sometimes ignore it, why industrial subsidies appear in systems supposedly committed to free markets.

Strategic Implications

For analysts and operators, the lesson is to stop treating macroeconomics as a closed system of economic logic. It is always subordinated to the geopolitical layer.

Implications include:

Follow the hidden mandate. When official and structural mandates diverge, the structural mandate always wins.

Capital is political. Where it flows, where it is restricted, and where it is subsidized tells you more about the future than yield curves or balance sheets.

Policy is selective. Structural problems like aging populations or sovereign debt are not solved; they are managed according to strategic priorities.

Markets misread consistency. Investors expecting linear economic logic will be blindsided when policy bends suddenly under geopolitical stress.

Conclusion: Economics as Strategy

The Macroeconomic Environment layer teaches us that economics is not about efficiency or neutrality. It is about translating power into policy.

Officially, central banks and treasuries defend price stability, employment, or fiscal prudence. Structurally, they defend sovereignty, sustain alliances, and manage strategic vulnerabilities.

The truth is simple but uncomfortable: economic logic is always subordinated to geopolitical necessity.

To forecast markets or industries without factoring in this layer is to mistake appearances for reality.

Geopolitical Architecture: The Power Layer of Markets

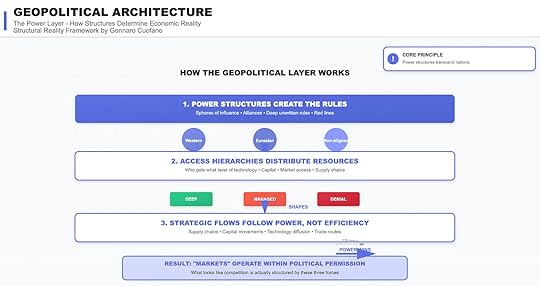

Most market commentary begins at the wrong end. Analysts obsess over competitive dynamics or regulatory changes without asking the deeper question: what structures make those dynamics possible in the first place? The geopolitical architecture is that foundation. It is the layer of power that sets the rules, distributes access, and determines which flows of capital, technology, and resources are even possible.

The core principle is stark: power structures transcend nations. Markets, companies, and even states operate within invisible but rigid boundaries of political permission.

1. Power Structures Create the Rules

At the highest level, power is rule-setting.

These rules are not always codified in treaties or trade agreements. More often, they are:

Spheres of influence: regions where one bloc’s security umbrella dominates.

Alliances: formal and informal pacts that shape flows of capital and technology.

Unwritten rules: red lines that everyone understands but few articulate.

Today, three broad blocs structure the global order:

Western: U.S.-led alliances, NATO, dollar hegemony.

Eurasian: China-centric networks, BRICS+ expansion, yuan settlement systems.

Non-Aligned: States hedging between the two, extracting concessions while trying to avoid entrapment.

Markets behave differently inside each bloc. A startup in California, a chip fab in Taiwan, and a refinery in the Gulf are not just businesses; they are assets embedded in power structures that decide their fates when crises erupt.

Key insight: competition is not free. It is bounded by who sets the rules of the game.

2. Access Hierarchies Distribute Resources

Once rules are established, power manifests through access hierarchies.

This is the real mechanism of geopolitical influence: deciding who gets what level of technology, capital, and market access.

Three tiers dominate:

Deep access – Allies and close partners receive privileged entry to advanced technologies, capital markets, and supply chain integration. Example: Japan and South Korea inside the U.S. semiconductor alliance.

Managed access – Middle-tier states are allowed selective integration but under strict conditions. Example: India gaining access to Western defense tech while facing restrictions on dual-use AI.

Denial – Strategic adversaries are excluded from critical flows altogether. Example: China’s exclusion from cutting-edge GPUs and lithography systems.

This hierarchy shapes the contours of globalization. The naive story says markets allocate resources efficiently. The structural reality says: markets allocate resources politically.

Efficiency does not win. Power does.

3. Strategic Flows Follow Power, Not Efficiency

The third mechanism is control over flows, the arteries of the global economy.

Supply chains are not just logistics; they are power tools. Chokepoints in rare earths, lithium, and semiconductors are deliberately cultivated.

Capital movements follow sanction regimes and reserve currency dominance. A sovereign wealth fund in Riyadh may prefer dollar-denominated assets not out of efficiency, but because the dollar still anchors the global system.

Technology diffusion is tightly controlled. Export licenses, security reviews, and joint-venture restrictions dictate who can climb the technology ladder.

Trade routes become geopolitical flashpoints. The South China Sea, the Strait of Hormuz, and the Arctic passages are not just shipping lanes; they are strategic levers.

The myth of globalization was that supply chains follow efficiency. The reality is that strategic flows follow permission.

4. Markets Operate Within Political Permission

The outcome of these three forces (rule-setting, access hierarchies, and flow control) is simple but easily forgotten:

Markets operate within political permission.

What looks like open competition between firms is structured competition inside power boundaries. A company may out-innovate its rival but still lose access to markets, inputs, or capital because of political decisions. Conversely, firms with state backing may survive despite inefficiency.

Examples abound:

Huawei, cut off from advanced semiconductors, cannot compete on efficiency alone.

ASML, monopolist in EUV lithography, is constrained by Dutch and U.S. export policies.

Gulf energy producers, despite efficiency, remain structurally dependent on maritime chokepoints protected by the U.S. Navy.

The deeper truth: efficiency is secondary to permission.

The Core Principle Revisited

To understand markets at the surface, one must begin at the geopolitical base.

Power structures set the game.

Access hierarchies distribute the spoils.

Flows obey power, not efficiency.

Thus, when investors or strategists talk about “market forces,” they are often mistaking symptoms for causes. The true drivers are structural, geopolitical, and deeply embedded in the architecture of power.

Strategic Implications

For operators, investors, and policymakers, the implications are profound:

Market entry is political. Every new market opportunity requires permission, explicit or implicit, from the ruling power bloc.

Supply chains are sovereignty chains. They reveal not just economic dependence but political alignment.

Technology is weaponized capital. Access to frontier technology is the ultimate expression of trust, or distrust, between blocs.

Efficiency myths are dangerous. Betting on “best product wins” without mapping geopolitical permission is a strategic error.

Conclusion: Seeing the Hidden Layer

The Geopolitical Architecture reminds us that what appears to be free competition is, in fact, bounded competition. Companies, investors, and even nations operate inside rules they did not choose.

Markets are not neutral. They are politically constructed.

In this sense, strategy is not about reading financial statements or market forecasts alone. It is about reading the map of power. Because until you know which rules, hierarchies, and flows shape the game, you don’t know what the game really is.

The Structural Reality Framework

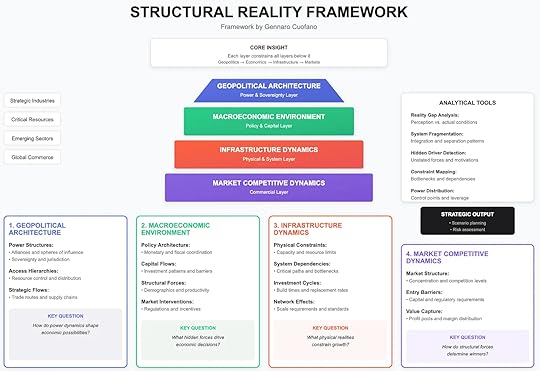

Markets never move in isolation. They are the final expression of deeper structural forces—forces that are rarely visible in quarterly earnings calls, policy briefings, or product roadmaps. The Structural Reality Framework offers a way to analyze these layers systematically, exposing how power, policy, infrastructure, and competition interact to shape outcomes.

At its core, the framework rests on a simple but profound insight: each layer constrains all layers above it.

Geopolitics constrains economics.

Economics constrains infrastructure.

Infrastructure constrains markets.

This is why understanding markets requires starting from the bottom.

1. Geopolitical Architecture: The Sovereignty Layer

The deepest layer is geopolitics, the architecture of power and sovereignty.

Here, what matters are:

Power structures: alliances, spheres of influence, and great-power competition.

Access hierarchies: who controls resources, supply chains, and distribution routes.

Strategic flows: the trade corridors and chokepoints that determine resilience.

The key question at this level is:

How do power dynamics shape economic possibilities?

When the U.S. restricts semiconductor exports to China, it isn’t just a trade move—it resets the boundaries of what is economically possible for entire industries. Similarly, when the Red Sea or South China Sea become contested, the flow of goods becomes a structural constraint, not a logistical hiccup.

Markets cannot transcend geopolitics. They operate within it.

2. Macroeconomic Environment: The Capital Layer

On top of geopolitics sits the macroeconomic environment, the world of policy and capital.

Its drivers include:

Policy architecture: coordination between monetary and fiscal levers.Capital flows: where investment moves, and what barriers it faces.Structural forces: demographics, productivity, and technological diffusion.Market interventions: regulation, incentives, and industrial policy.The key question here is:

What hidden forces drive economic decisions?

For example, a demographic collapse in advanced economies isn’t a short-term market event—it drives labor shortages, changes savings patterns, and forces immigration debates. Similarly, sovereign wealth funds in the Gulf don’t just allocate capital; they tilt the playing field by deciding which technologies and sectors scale.

Capital, like water, flows where it is allowed—and where it is pushed.

3. Infrastructure Dynamics: The Physical Layer

Beneath every market outcome lie the hard realities of infrastructure.

This layer is defined by:

Physical constraints: the limits of energy, compute, land, or bandwidth.

System dependencies: chokepoints in supply chains and industrial clusters.

Investment cycles: build times, replacement rates, and depreciation schedules.

Network effects: standards, interoperability, and adoption flywheels.

The key question is:

What physical realities constrain growth?

This is where structural optimism often collapses. You cannot scale AI without GPUs, gigawatts of power, and gigaliters of water. You cannot expand manufacturing without overcoming permitting timelines, grid access, and labor bottlenecks.

Markets ignore infrastructure until they run into it. By then, it is often too late.

4. Market Competitive Dynamics: The Commercial Layer

At the top of the pyramid sits what most analysts focus on: market competition.

Here, the questions are tactical:

Market structure: How concentrated is the sector?

Entry barriers: What capital or regulatory hurdles exist?

Value capture: Who controls the profit pools, and where do margins flow?

The key question is:

How do structural forces determine winners?

Take AI. At the commercial layer, the discussion is about OpenAI vs. Anthropic vs. Google. But at the infrastructure layer, the reality is about NVIDIA’s GPU chokehold. And at the macroeconomic layer, it’s about U.S. industrial policy subsidizing semiconductor fabs. At the geopolitical layer, it’s about U.S. export controls preventing China from closing the gap.

Seen this way, the market fight is not just about models—it is about structures cascading down.

Analytical Tools

The framework isn’t just descriptive. It provides analytical tools for interrogating reality:

Reality Gap Analysis: Where do narratives diverge from structural conditions?

System Fragmentation: Which systems are integrating, which are decoupling?

Hidden Driver Detection: What unstated motivations force actors’ hands?

Constraint Mapping: Where are the bottlenecks? Who controls them?

Power Distribution: Who sits at the levers of control?

These tools move analysis away from surface explanations and toward causal depth.

Strategic Output

The goal is not theory. It is action.

By applying the Structural Reality Framework, operators, investors, and policymakers can produce:

Scenario planning: plausible futures anchored in structural forces.

Risk assessment: exposure maps that reveal where vulnerabilities really lie.

For instance, a company betting on European AI growth cannot just look at user adoption. It must ask:

What is the EU’s energy mix (infrastructure layer)?

How do EU capital markets treat growth risk (macroeconomic layer)?

What geopolitical dependencies exist with the U.S. and China (geopolitical layer)?

Only then can market strategy make sense.

The Core Insight

The core insight of the Structural Reality Framework is simple:

Markets are the surface expression of deeper realities.

Analysts who start at the top (competition) often confuse symptoms for causes.

Analysts who start at the bottom (geopolitics, macroeconomics, infrastructure) see the constraints that define what markets can and cannot do.

This is why structural analysis outperforms tactical commentary. It reveals not just what is happening, but what must happen.

Conclusion: Seeing Through the Layers

The Structural Reality Framework forces a change in perspective. Instead of asking, “Who will win?” it asks, “What structures make winning possible?”

Geopolitics sets the outer boundaries.

Macroeconomics directs the flow of capital.

Infrastructure dictates the pace of growth.

Markets allocate what’s left.

The mistake is to see markets as free-floating contests. They are not. They are constrained by deeper forces.

In strategy, the advantage goes to those who see through the layers.

The post The Structural Reality Framework appeared first on FourWeekMBA.

The Chesterton’s Fence Problem: AI Removing Things It Doesn’t Understand

Imagine GitHub Copilot removing all the “redundant” error handling from a nuclear reactor control system. The code looks cleaner. The tests still pass. Six months later, an edge case triggers a near-meltdown. This is Chesterton’s Fence in the age of AI: machines optimizing away safeguards they don’t understand, destroying protections whose purpose they can’t comprehend.

G.K. Chesterton set out the principle in 1929. It is often paraphrased as: “Don’t ever take a fence down until you know the reason it was put up.” He was warning reformers about destroying traditions they didn’t understand. Now we’ve given that destructive power to machines that understand nothing, operating at superhuman speed, optimizing away the very foundations of our systems.

The Original Wisdom

Chesterton’s Parable

Chesterton imagined reformers finding a fence across a road. The modern reformer says: “I don’t see the use of this; let us clear it away.” The intelligent reformer says: “If you don’t see the use of it, I certainly won’t let you clear it away. Go away and think. When you can come back and tell me that you do see the use of it, I may allow you to destroy it.”

The fence exists for a reason. That reason might be outdated, but it might be crucial. Maybe it keeps cattle off the road. Maybe it marks a property line. Maybe it prevents erosion. You can’t evaluate whether to remove it until you understand why it exists.

This principle protected societies for millennia. Change happened slowly enough for wisdom to accumulate. Reformers had to understand before they could destroy. AI breaks this principle: it changes everything, understands nothing, and operates faster than wisdom can form.

Why Understanding Matters

Every system contains implicit knowledge. Code contains programmer wisdom. Processes encode failure lessons. Traditions preserve survival strategies. These fences protect against dangers the current generation hasn’t experienced.

A seemingly redundant database backup exists because someone lost critical data. An apparently pointless approval process prevents a type of fraud. A bizarre legacy system quirk works around a hardware bug. Remove them without understanding, and you recreate the disasters they prevent.

Human reformers at least had skin in the game. They lived with their changes. They suffered from their mistakes. AI has no skin in the game. It optimizes, deploys, and moves on, leaving humans to discover what essential fences it removed.

AI’s Destructive Optimization

The Code “Improvement” Catastrophe

AI code assistants optimize for metrics they can measure: fewer lines, faster execution, higher test coverage. They can’t measure what they don’t understand: why certain inefficiencies exist, what edge cases the ugly code handles, which bugs the weird patterns prevent.

Consider the infamous Therac-25 radiation therapy machine. Earlier models in the line had hardware interlocks that physically blocked unsafe beam configurations. The Therac-25 dropped them as redundant and relied on software checks alone. When race conditions in that software let the machine fire in the wrong mode, nothing stood behind it, and patients received lethal radiation overdoses.

Modern AI assistants make these optimizations constantly. They remove “unnecessary” null checks that prevent crashes. They consolidate “redundant” functions that handle special cases. They simplify “overcomplicated” logic that manages race conditions. Every optimization removes a fence whose purpose the AI doesn’t understand.
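A minimal sketch of how a “redundant” check can disappear without any test noticing. The function names and the reconnect scenario are hypothetical, chosen only to illustrate the pattern described above.

```python
def parse_reading(raw):
    """Hypothetical sensor parser with a guard that looks redundant."""
    # Fence: in this illustration, upstream devices occasionally send
    # None or an empty string while reconnecting.
    if raw is None or raw == "":
        return 0.0
    return float(raw)


def parse_reading_simplified(raw):
    """The 'cleaner' version an optimizer might propose."""
    return float(raw)


def happy_path_tests(parse):
    """A test suite that only covers well-formed input."""
    return parse("1.5") == 1.5 and parse("0") == 0.0


def survives_edge_case(parse):
    """Replay the rare input the guard was written for."""
    try:
        parse(None)
        return True
    except (TypeError, ValueError):
        return False


if __name__ == "__main__":
    for parse in (parse_reading, parse_reading_simplified):
        print(parse.__name__, happy_path_tests(parse), survives_edge_case(parse))
```

Both versions pass the happy-path suite. Only the original survives the input the guard was built for, which is why passing tests alone say little about whether a removal was safe.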

The Process Automation Disaster

AI workflow automation removes “inefficient” human checkpoints. Why have three approvals when one suffices? Why require manual reviews when everything passes automatically? Why keep humans in loops that seem to work without them?

Knight Capital found out in 2012. A new release of its automated trading software was rolled out by hand, with no second-person review to confirm that every server had been updated. One server was missed and ran obsolete code, and the firm lost $440 million in 45 minutes. The missing fence was the last defense against catastrophic automation failure.

AI now removes these fences at scale. It identifies “bottlenecks” (safety checks), eliminates “redundancies” (backup systems), and streamlines “inefficiencies” (human oversight). Every optimization makes systems more fragile by removing protections the AI doesn’t understand exist.

The Data Cleaning Massacre

AI systems love clean data. They remove outliers, normalize distributions, eliminate anomalies. They don’t understand that messy data often contains essential information.

Medical AI removed “anomalous” test results that didn’t fit patterns. Those anomalies were rare disease indicators. Financial AI cleaned “erroneous” transaction data. Those errors were fraud signals. Manufacturing AI normalized sensor readings that contained failure predictors.

The cleaning seems logical. Messy data reduces model performance. Outliers skew predictions. Anomalies confuse algorithms. But the mess exists for reasons. The outliers matter. The anomalies are the point. AI removes these fences and destroys the very signals systems need to function.
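The cleaning problem can be sketched in a few lines. The example below uses a plain z-score filter from the standard library; the transaction amounts and the threshold of 2.0 are made-up values for illustration, not data from any real system.

```python
from statistics import mean, stdev


def split_outliers(values, z_threshold=2.0):
    """Separate values into (kept, flagged) using a simple z-score rule."""
    mu = mean(values)
    sigma = stdev(values)
    kept, flagged = [], []
    for v in values:
        if abs(v - mu) <= z_threshold * sigma:
            kept.append(v)
        else:
            flagged.append(v)
    return kept, flagged


# Hypothetical card transactions: nine routine purchases and one
# very large charge that a fraud analyst would want to see.
amounts = [20, 25, 22, 19, 24, 21, 23, 20, 22, 9500]

kept, flagged = split_outliers(amounts)

if __name__ == "__main__":
    print("kept for training:", kept)
    print("flagged as outliers:", flagged)
```

A pipeline that keeps only `kept` has a tidier distribution and no trace of the one transaction that mattered. Returning `flagged` separately, instead of discarding it, is one way to keep the fence: the anomaly is routed to review and not silently deleted.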

VTDF Analysis: Systematic Ignorance

Value Architecture

Traditional value preservation required understanding. Humans maintained systems they comprehended. AI promises value through optimization without comprehension. It’s like hiring a blind surgeon who operates at superhuman speed.

The value destruction is hidden. Systems appear to work better after AI optimization. They’re faster, cleaner, more efficient. The removed fences only matter when the dangers they prevented finally arrive. By then, nobody remembers what protections existed or why.

Value in complex systems comes from robustness, not efficiency. But AI optimizes for efficiency because it’s measurable. Robustness requires understanding dangers that haven’t materialized. AI can’t understand what hasn’t happened.

Technology Stack Archaeology

Every technology stack contains layers of historical decisions. Ancient workarounds for forgotten bugs. Defensive code against retired systems. Compatibility shims for departed platforms. Each layer is a fence built for reasons lost to time.

AI sees these as technical debt to eliminate. It refactors without understanding why the original structure existed. It modernizes without knowing what the old code prevented. It performs archaeology with dynamite, destroying artifacts it doesn’t recognize as valuable.

The stack wisdom takes decades to accumulate and seconds to destroy. A senior engineer’s career of defensive programming vanishes in an AI refactoring. Twenty years of failure-driven architecture gets optimized away in an afternoon.

Distribution Channel Evolution

Distribution channels encode market wisdom. Seemingly inefficient intermediaries prevent specific failures. Apparently redundant checks stop particular frauds. Complex approval chains exist because simpler ones failed catastrophically.

AI distribution optimization removes these fences. It disintermediates without understanding what intermediaries prevented. It simplifies without knowing what complexity protected against. Every optimization recreates conditions for failures the system evolved to prevent.

The evolution took decades. Markets learned through crashes. Channels adapted through fraud. Systems evolved through failure. AI undoes this evolution in quarters, returning systems to states that failed before.

Financial Model Protection

Financial models contain hidden risk management. Buffer accounts that seem wasteful. Reserve requirements that appear excessive. Approval limits that look arbitrary. These are fences built from bankruptcy lessons.

AI financial optimization targets these “inefficiencies.” It minimizes buffers to maximize returns. It reduces reserves to increase leverage. It raises limits to accelerate growth. Every optimization removes a protection learned from financial disaster.

The protections only matter in crises. During normal operations, they’re pure cost. AI trained on normal operations sees only the cost. It optimizes away crisis protection because it doesn’t understand crises that haven’t happened yet.

Real-World Demolitions

Boeing’s MCAS Tragedy

Boeing’s 737 MAX included MCAS (Maneuvering Characteristics Augmentation System) to make the plane handle like older 737s. As originally certified, the system acted on readings from a single angle-of-attack sensor, even though the aircraft carried two.

Redundancy exists for reasons. A second sensor guards against single-point failures. Cross-checks catch sensor disagreements. Those fences looked like avoidable cost and complexity until their absence contributed to two crashes that killed 346 people.

The tragedy illustrates Chesterton’s Fence. A design simplified without full regard for why redundancy exists recreates the disasters that redundancy prevents.

Tesla’s Autopilot Evolution

Tesla’s Autopilot removed sensor “redundancy” to rely purely on cameras. Radar seemed unnecessary when vision worked. Ultrasonics appeared redundant with neural networks. Each removal was an optimization that eliminated a fence.

The fences existed for specific conditions. Radar penetrates fog that blinds cameras. Ultrasonics detect close objects cameras miss. The redundancy wasn’t inefficiency but protection against specific failure modes.

Autopilot accidents point to removed fences. A car that fails to see a white truck against a bright sky is the kind of case a second sensor type exists for. Parking mishaps are the kind of case ultrasonics were meant to prevent. Each optimization reopened vulnerabilities that redundancy had covered.

Facebook’s Content Moderation

Facebook’s AI content moderation removed human reviewer “bottlenecks.” Humans were slow, expensive, inconsistent. AI was fast, cheap, scalable. The optimization removed fences that prevented societal damage.

Human reviewers understood context AI couldn’t grasp. They recognized dangerous patterns algorithms missed. They caught subtle incitements machines interpreted as benign. The inefficiency was the point—careful human judgment preventing automated amplification of harm.

The removed fences enabled genocide in Myanmar. Algorithmic amplification without human understanding spread hate faster than comprehension could contain it. The optimization away from human understanding created disasters only human understanding prevented.

The Cascade of Unintended Consequences

Technical Debt Becomes Technical Disaster

AI identifies technical debt and removes it. But some technical debt is load-bearing. It’s the programming equivalent of a fence: ugly but essential.

Legacy code often contains undocumented business logic. Weird functions handle legal requirements. Strange conditions manage regulatory compliance. AI removes these because they’re not in the requirements. They’re fences whose purpose was never written down.

The disasters cascade. Removed validation causes data corruption. Data corruption breaks downstream systems. Broken systems trigger compliance failures. Each removed fence enables the next failure.

Optimization Feedback Loops

AI optimizations create feedback loops that accelerate fence removal. First AI removes “redundant” checks. System runs faster. AI is rewarded. AI removes more checks. System runs even faster. The positive feedback continues until critical fences are gone.

The loops operate faster than human oversight. By the time humans notice problems, multiple fence generations are removed. Rebuilding them requires understanding why each existed—knowledge that departed with the fences.

The acceleration makes recovery impossible. Each optimization builds on previous ones. Reversing one requires reversing all. The system becomes increasingly fragile and irreversibly optimized.

Knowledge Extinction Events

When AI removes fences, it also removes knowledge of why they existed. Documentation describes what, not why. Comments explain how, not purpose. The wisdom encoded in the fence dies with its removal.

This creates knowledge extinction events. Nobody knows why certain patterns existed. Nobody remembers what problems they prevented. When disasters recur, nobody knows they’re recurring because nobody remembers they occurred before.

The extinction accelerates through employee turnover. Senior engineers who understood the fences retire. New engineers never learn they existed. AI assistance means nobody needs to understand the system deeply enough to know what’s missing.

Strategic Implications

For Engineers

Document why, not just what. Every piece of code should explain its purpose, especially the ugly parts. When AI suggests removing something, the documentation explains why it should stay.

Create unremovable fences. Make critical protections so integral that removing them breaks everything immediately. If AI can’t remove a fence without obvious failure, it won’t.

Build explanation requirements. Before AI can optimize, it must explain what each component prevents. Force understanding before allowing destruction.
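One possible way to combine “document why” with a fence that fails loudly when removed is to pair the comment with a test named after the failure it prevents. The retry function, the 60-second cap, and the outage story below are hypothetical, used only to show the shape of the idea.

```python
def retry_delay_seconds(attempt):
    """Return the wait before retry number `attempt` (1-based)."""
    # WHY: the cap looks arbitrary. In this hypothetical system, an
    # uncapped exponential backoff once left a job waiting for hours
    # after an outage had already ended. Removing the cap should make
    # test_delay_is_capped_after_long_outage fail.
    return min(2 ** attempt, 60)


def test_delay_is_capped_after_long_outage():
    """Named after the failure it prevents, not the code it exercises."""
    assert retry_delay_seconds(20) == 60


if __name__ == "__main__":
    test_delay_is_capped_after_long_outage()
    print("fence still standing")
```

The comment records the reason and the test enforces it. An assistant that deletes the `min(...)` call now produces an immediate, named failure instead of a silent regression.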

For Organizations

Preserve institutional memory. The knowledge of why fences exist is as valuable as the fences themselves. Document failures, not just successes. Remember what didn’t work and why.

Require archaeological assessment. Before AI optimization, understand system history. What failures prompted each component? What disasters does current complexity prevent?

Value inefficiency. Some inefficiency is protective redundancy. Some complexity is necessary safeguarding. Not everything should be optimized.

For Policymakers

Mandate explainability for removal. AI shouldn’t remove anything it can’t explain the purpose of. Understanding before destruction should be legally required.

Preserve system diversity. Monocultures created by AI optimization are fragile. Require variation, redundancy, inefficiency. Fences protect even when we don’t understand them.

Create optimization speed limits. Slow AI optimization to human comprehension speed. Wisdom must keep pace with change.

The Future of Unknown Unknowns

The Comprehension Crisis

As systems become AI-optimized, nobody understands them. Not the AI that optimized them. Not the humans who use them. We’re creating incomprehensible systems by removing comprehensible protections.

The crisis accelerates through AI assistance. Humans don’t need to understand systems that AI manages. AI doesn’t understand systems it optimizes. Nobody understands anything, but everything keeps running—until it doesn’t.

When failures occur, nobody knows why. The fences that would have prevented them are gone. The knowledge of why they existed is extinct. We face disasters we’ve faced before but don’t remember facing.

The Rebuild Impossibility

Once AI removes Chesterton’s Fences, rebuilding them becomes impossible. We don’t know what we removed. We don’t know why it existed. We don’t even know we removed anything.

The impossibility compounds through optimization layers. Each AI improvement builds on previous ones. Unwinding requires understanding the entire history. But the history died with the fences.

Systems become permanently fragile. They work until they encounter conditions the fences prevented. Then they fail catastrophically. And we don’t know how to fix them because we don’t know what we broke.

The Wisdom Preservation Imperative

Surviving AI optimization requires preserving wisdom about why things exist. Not just documentation but understanding. Not just requirements but reasons. Not just what fences do but what happens without them.

This preservation can’t live in documentation alone. AI can access digital documentation and still ignore it. Wisdom must be encoded in the systems themselves, as fences that explain their own necessity.

The future belongs to systems that resist optimization. That preserve inefficiency. That maintain redundancy. That keep fences even when nobody remembers why they exist.

Conclusion: The Fence and the Machine

Chesterton’s Fence assumed human reformers who could eventually understand. AI reformers can never understand. They optimize without comprehension, destroy without wisdom, remove without remembering.

Every AI optimization is a bet that no fence it removes matters. Every efficiency gain assumes no protection was lost. Every improvement gambles that understanding isn’t necessary.

But Chesterton was right: fences exist for reasons. Those reasons might be forgotten, but forgetting doesn’t make them false. The disasters they prevent still wait, made more likely by every optimization.

We’re watching AI dismantle protections built over generations. Removing safeguards learned through suffering. Optimizing away defenses we don’t remember needing. Each removal seems like progress until we rediscover why the fence existed—usually through catastrophe.

The next time AI suggests removing something “unnecessary,” remember Chesterton’s warning. That fence might be the only thing standing between optimization and disaster. And once removed, we might never remember why we needed it—until it’s too late.

The post The Chesterton’s Fence Problem: AI Removing Things It Doesn’t Understand appeared first on FourWeekMBA.

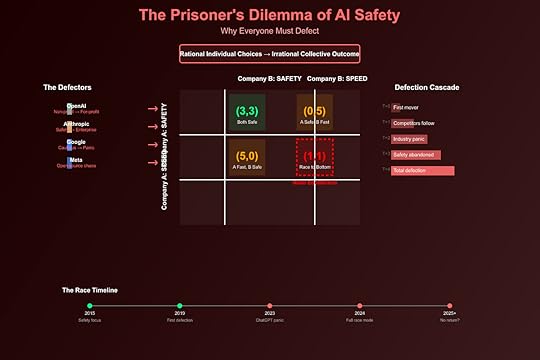

The Prisoner’s Dilemma of AI Safety: Why Everyone Defects

OpenAI abandoned its non-profit mission. Anthropic takes enterprise money despite safety origins. Meta open-sources everything for competitive advantage. Google rushes releases after years of caution. Every AI company that started with safety-first principles has defected to competitive pressures. This isn’t weakness—it’s the inevitable outcome of game theory. The prisoner’s dilemma is playing out at civilizational scale, and everybody’s choosing to defect.

The Classic Prisoner’s Dilemma

The Original Game

Two prisoners, unable to communicate:

Both Cooperate: Light sentences for both (best collective outcome)

Both Defect: Heavy sentences for both (worst collective outcome)

One Defects: Defector goes free, cooperator gets maximum sentence

In a one-shot game, rational actors always defect, even though cooperation would be better.

The AI Safety Version

AI companies face the same structure:

All Cooperate (Safety): Slower, safer progress for everyone

All Defect (Speed): Fast, dangerous progress, potential catastrophe

One Defects: Defector dominates market, safety-focused companies die

The dominant strategy is always defection.

The Payoff Matrix

The AI Company Dilemma

```
                     Company B: Safety   Company B: Speed
Company A: Safety         (3, 3)              (0, 5)
Company A: Speed          (5, 0)              (1, 1)
```

Payoffs (Company A, Company B):

(3, 3): Both prioritize safety, sustainable progress

(5, 0): A speeds ahead, B becomes irrelevant

(0, 5): B speeds ahead, A becomes irrelevant

(1, 1): Arms race, potential catastrophe

Nash Equilibrium: Both defect (1, 1)
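The claim that speed is the dominant strategy can be checked directly against the payoffs above. The sketch below encodes the matrix as a dictionary and asks, for each choice Company B might make, which choice gives Company A the higher payoff. The function and variable names are illustrative.

```python
# Payoffs as (Company A, Company B), copied from the matrix above.
PAYOFFS = {
    ("safety", "safety"): (3, 3),
    ("safety", "speed"): (0, 5),
    ("speed", "safety"): (5, 0),
    ("speed", "speed"): (1, 1),
}
STRATEGIES = ("safety", "speed")


def best_response_for_a(b_choice):
    """Company A's highest-paying strategy given Company B's choice."""
    return max(STRATEGIES, key=lambda a: PAYOFFS[(a, b_choice)][0])


def dominant_strategy_for_a():
    """Return A's strategy if it is the best response to every B choice."""
    responses = {best_response_for_a(b) for b in STRATEGIES}
    return responses.pop() if len(responses) == 1 else None


if __name__ == "__main__":
    for b in STRATEGIES:
        print(f"If B plays {b}, A's best response is {best_response_for_a(b)}")
    print("Dominant strategy for A:", dominant_strategy_for_a())
```

Speed wins against either choice by B (5 beats 3, and 1 beats 0). The matrix is symmetric, so the same holds for B. Both land on (1, 1), even though (3, 3) would leave each better off.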

Real-World Payoffs

Cooperation (Safety-First):

Slower model releases

Higher development costs

Regulatory compliance

Limited market share

Long-term survival

Defection (Speed-First):

Rapid deployment

Market domination

Massive valuations

Regulatory capture

Existential risk

The Defection Chronicles

OpenAI: The Original Defector

2015 Promise: Non-profit for safe AGI

2019 Reality: For-profit subsidiary created

2023 Outcome: $90B valuation, safety team exodus

The Defection Path:

Started as safety-focused non-profit

Needed compute to compete

Required investment for compute

Investors demanded returns

Returns required speed over safety

Safety researchers quit in protest

Anthropic: The Reluctant Defector

2021 Promise: AI safety company by ex-OpenAI safety team

2024 Reality: Enterprise focus, massive funding rounds

The Rationalization:

“We need resources to do safety research”

“We must stay competitive to influence standards”

“Controlled acceleration better than uncontrolled”

“Someone worse would fill the vacuum”

Each rationalization is true. Collectively, they ensure defection.

Meta: The Chaos Agent

Strategy: Open source everything to destroy moats

Game Theory Logic:

Can’t win the closed model race

Open sourcing hurts competitors more

Commoditizes complement (AI models)

Maintains platform power

Meta isn’t even playing the safety game. They’re flipping the board.

Google: The Forced Defector

Pre-2022: Cautious, research-focused, “we’re not ready”

Post-ChatGPT: Panic releases, Bard rush, safety deprioritized

The Pressure:

Stock price demands response

Talent fleeing to competitors

Narrative of “falling behind”

Innovator’s dilemma realized

Even the most resourced player couldn’t resist defection.

The Acceleration Trap

Why Cooperation Fails

First-Mover Advantages in AI:

Network effects from user data

Talent attraction to leaders

Customer lock-in effects

Regulatory capture opportunities

Platform ecosystem control

These aren’t marginal advantages. They’re existential.

The Unilateral Disarmament Problem

If one company prioritizes safety:

Competitors gain insurmountable lead

Safety-focused company becomes irrelevant

No influence on eventual AGI development

Investors withdraw funding

Company dies, unsafe actors win

“Responsible development” equals “market exit.”

The Multi-Player Dynamics

The Iterated Game Problem

In a repeated prisoner’s dilemma, cooperation can emerge through:

Reputation effects

Tit-for-tat strategies

Punishment mechanisms

Communication channels

But AI development isn’t an iterated game. It’s winner-take-all.
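A small simulation makes the contrast between the repeated game and the one-shot game concrete. It reuses the company payoffs from the matrix earlier in the post, with “C” for safety and “D” for speed; the two strategies and the ten-round horizon are illustrative choices.

```python
# (row player, column player) payoffs; C = cooperate (safety), D = defect (speed)
PAYOFFS = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def tit_for_tat(opponent_history):
    """Cooperate first, then copy the opponent's previous move."""
    return opponent_history[-1] if opponent_history else "C"


def always_defect(opponent_history):
    """Defect regardless of what the opponent has done."""
    return "D"


def play(strategy_a, strategy_b, rounds):
    """Play a repeated game and return the total scores (a, b)."""
    history_a, history_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = strategy_a(history_b)
        move_b = strategy_b(history_a)
        payoff_a, payoff_b = PAYOFFS[(move_a, move_b)]
        score_a += payoff_a
        score_b += payoff_b
        history_a.append(move_a)
        history_b.append(move_b)
    return score_a, score_b


if __name__ == "__main__":
    print("10 rounds, both tit-for-tat:", play(tit_for_tat, tit_for_tat, 10))
    print("10 rounds, defector vs tit-for-tat:", play(always_defect, tit_for_tat, 10))
    print("1 round, defector vs tit-for-tat:", play(always_defect, tit_for_tat, 1))
```

Over ten rounds the defector is punished after its first move and ends with 14, well below the 30 each cooperator earns. In a single round the defector simply takes 5 against 0. If the race is decided in one move, the retaliation that sustains cooperation never gets a chance to act.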

The N-Player Complexity

With multiple players:

Coordination becomes impossible

One defector breaks cooperation

No enforcement mechanism

Monitoring is difficult

Attribution is unclear

Current Players: OpenAI, Anthropic, Google, Meta, xAI, Mistral, China, open source…

One defection cascades to all.
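The arithmetic behind “one defector breaks cooperation” can be sketched under a strong simplifying assumption: each player defects independently with the same probability p. Then the chance that at least one of n players defects is 1 - (1 - p)^n. The 10% figure below is purely illustrative, not an estimate.

```python
def prob_any_defection(n_players, p_defect):
    """Chance that at least one of n independent players defects."""
    return 1 - (1 - p_defect) ** n_players


if __name__ == "__main__":
    # Eight entries appear in the list of current players above.
    for n in (1, 2, 4, 8, 16):
        print(f"{n:>2} players: {prob_any_defection(n, 0.10):.1%}")
```

Even when every individual player is 90% likely to hold the line, the odds that all of them do fall quickly as the field grows. Real players are not independent, which would change the numbers but not the direction.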

The International Dimension

The US-China AI Dilemma

```
               China: Safety   China: Speed
US: Safety        (3, 3)          (0, 5)
US: Speed         (5, 0)        (-10, -10)
```

The stakes are existential:

National security implications

Economic dominance at stake

Military applications inevitable

No communication channel

No enforcement mechanism

Both must defect for national survival.
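It can help to check which outcomes of the US-China matrix are stable when taken exactly as printed. The sketch below enumerates pure-strategy Nash equilibria: cells where neither side gains by changing its own choice alone. Names are illustrative, and the payoffs are copied from the matrix above as (US, China).

```python
from itertools import product

# Payoffs as (US, China), copied from the matrix above.
PAYOFFS = {
    ("safety", "safety"): (3, 3),
    ("safety", "speed"): (0, 5),
    ("speed", "safety"): (5, 0),
    ("speed", "speed"): (-10, -10),
}
STRATEGIES = ("safety", "speed")


def pure_nash_equilibria(payoffs):
    """Return every cell where neither player gains by deviating alone."""
    equilibria = []
    for us, china in product(STRATEGIES, repeat=2):
        us_payoff, china_payoff = payoffs[(us, china)]
        us_can_improve = any(
            payoffs[(alt, china)][0] > us_payoff for alt in STRATEGIES
        )
        china_can_improve = any(
            payoffs[(us, alt)][1] > china_payoff for alt in STRATEGIES
        )
        if not us_can_improve and not china_can_improve:
            equilibria.append((us, china))
    return equilibria


if __name__ == "__main__":
    print(pure_nash_equilibria(PAYOFFS))
```

With (-10, -10) in the speed-speed cell, the stable outcomes are the two asymmetric ones, where one side races and the other holds back. That is the structure of the game of Chicken, not a strict prisoner’s dilemma. On these numbers, the pressure toward mutual defection comes from the factors listed above (no communication channel, no enforcement, fear of being the side that holds back), not from the matrix alone.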

The Regulatory Arbitrage

Countries face their own dilemma:

Strict Regulation: AI companies leave, economic disadvantage

Loose Regulation: AI companies flock, safety risks

Result: Race to the bottom on safety standards.

The Investor Pressure Multiplier

The VC Dilemma

VCs face their own prisoner’s dilemma:

Fund Safety: Lower returns, LPs withdraw

Fund Speed: Higher returns, existential risk

The Math:

10% chance of 100x return (expected 10x) > 100% chance of 2x return (expected 2x)

Even if 10% includes extinction risk

Individual rationality creates collective irrationality

The Public Market Pressure

Public companies (Google, Microsoft, Meta) face quarterly earnings:

Can’t explain “we slowed for safety”

Stock price punishes caution

Activists demand acceleration

CEO replaced if resisting

The market is the ultimate defection enforcer.
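The VC math earlier in this section is an expected-value comparison, and a few lines make it explicit. The sketch assumes the risky bet returns nothing in the other 90% of cases, which the original does not state; the figures are the ones quoted above.

```python
def expected_multiple(outcomes):
    """Expected return multiple from (probability, multiple) pairs."""
    return sum(probability * multiple for probability, multiple in outcomes)


# Assumption: the 90% of cases that miss the 100x return pay back nothing.
speed_bet = [(0.10, 100.0), (0.90, 0.0)]
safety_bet = [(1.00, 2.0)]

if __name__ == "__main__":
    print("speed bet, expected multiple:", expected_multiple(speed_bet))
    print("safety bet, expected multiple:", expected_multiple(safety_bet))
```

On expected value alone the speed bet is worth five times the safety bet, which is why a fund spreading capital across many companies leans toward it. The calculation gives no extra weight to what happens in the losing 90%, so a downside shared by everyone never enters any single investor’s spreadsheet.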

The Talent Arms Race

The Researcher’s Dilemma

AI researchers face choices:

Join Safety-Focused: Lower pay, slower progress, potential irrelevance

Join Speed-Focused: 10x pay, cutting-edge work, impact

Reality: $5-10M packages for top talent at speed-focused companies

The Brain Drain Cascade

Top researchers join fastest companies

Fastest companies get faster

Safety companies lose talent

Speed gap widens

More researchers defect

Cascade accelerates

Talent concentration ensures defection wins.

The Open Source Wrench

The Ultimate Defection

Open source is the nuclear option:

No safety controls possible

No takebacks once released

Democratizes capabilities

Eliminates competitive advantages

Meta’s Strategy: If we can’t win, nobody wins

The Inevitability Problem

Even if all companies cooperated:

Academia continues research

Open source community continues

Nation-states develop secretly

Individuals experiment

Someone always defects.

Why Traditional Solutions Fail

Regulation: Too Slow, Too Weak

The Speed Mismatch:

AI: Months to new capabilities

Regulation: Years to new rules

Enforcement: Decades to develop

By the time rules exist, the game is over.

Self-Regulation: No Enforcement

Industry promises are meaningless without:

Verification mechanisms

Punishment for defection

Monitoring capabilities

Aligned incentives

Every “AI Safety Pledge” has been broken.

International Cooperation: No Trust

Requirements for cooperation:

Verification of compliance

Punishment mechanisms

Communication channels

Aligned incentives

Trust between parties

None exist between the US and China.

Technical Solutions: Insufficient

Proposed Solutions:

Alignment research (takes time)

Interpretability (always behind)

Capability control (requires cooperation)

Compute governance (requires enforcement)

Technical solutions can’t solve game theory problems.

The Irony of AI Safety Leaders

The Cassandra Position

Safety advocates face an impossible position:

If right about risks: Ignored until too late

If wrong about risks: Discredited permanently

If partially right: Dismissed as alarmist

No winning move except not to play, and that ensures losing.

The Defection of Safety Leaders

Even safety researchers defect:

Ilya Sutskever leaves OpenAI for new venture

Anthropic founders left OpenAI to build a competing lab

Geoffrey Hinton quits to warn, after building everything

The safety community creates the race it warns against.

The Acceleration Dynamics

The Compound Effect

Each defection accelerates others:

Company A defects: Gains advantage

Company B must defect: Or die

Company C sees B defect: Must defect faster

New entrants: Start with defection

Cooperation becomes impossible: Trust destroyed

The Point of No Return

We may have already passed it:

GPT-4 triggered industry-wide panic

Every major company now racing

Billions flowing to acceleration

Safety teams disbanded or marginalized

Open source eliminating controls

The game theory has played out. Defection won.

Future Scenarios

Scenario 1: The Capability Explosion

Everyone defects maximally:

Exponential capability growth

No safety measures

Recursive self-improvement

Loss of control

Existential event

Probability: Increasing

Scenario 2: The Close Call

Near-catastrophe causes coordination:

Major AI accident

Global recognition of risk

Emergency cooperation

Temporary slowdown

Eventual defection returns

Probability: Moderate

Scenario 3: The Permanent Race

Continuous acceleration without catastrophe:

Permanent competitive dynamics

Safety always secondary

Gradual risk accumulation

Normalized existential threat

Probability: Current trajectory

Breaking the Dilemma

Changing the Game

Solutions require changing the payoff structure:

Make Cooperation More Profitable: Subsidize safety research

Make Defection More Costly: Severe penalties for unsafe AI

Enable Verification: Transparent development requirements

Create Enforcement: International AI authority

Align Incentives: Restructure entire industry

Each requires solving the dilemma to implement.

The Coordination Problem

Changing the game requires:

Global agreement (impossible with current tensions)

Economic restructuring (against market forces)

Technical breakthroughs (on unknown timeline)

Cultural shift (generational change)

Political will (lacking everywhere)

We need cooperation to enable cooperation.

Conclusion: The Inevitable DefectionThe prisoner’s dilemma of AI safety isn’t a bug—it’s a feature of competitive markets, international relations, and human nature. Every rational actor, facing the choice between certain competitive death and potential existential risk, chooses competition. The tragedy isn’t that they’re wrong—it’s that they’re right.

OpenAI’s transformation from non-profit to profit-maximizer wasn’t betrayal—it was inevitability. Anthropic’s enterprise pivot wasn’t compromise—it was survival. Meta’s open-source strategy isn’t chaos—it’s game theory. Google’s panic wasn’t weakness—it was rationality.

We’ve created a system where the rational choice for every actor leads to the irrational outcome for all actors. The prisoner’s dilemma has scaled from a thought experiment to an existential threat, and we’re all prisoners now.

The question isn’t why everyone defects—that’s obvious. The question is whether we can restructure the game before the final defection makes the question moot.

—

Keywords: prisoner’s dilemma, AI safety, game theory, competitive dynamics, existential risk, AI arms race, defection, cooperation failure, Nash equilibrium

Want to leverage AI for your business strategy?

Discover frameworks and insights at BusinessEngineer.ai

The post The Prisoner’s Dilemma of AI Safety: Why Everyone Defects appeared first on FourWeekMBA.

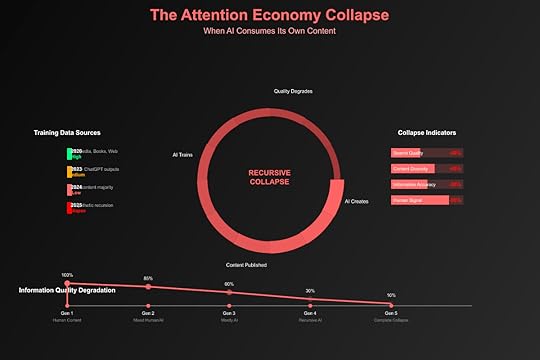

The Attention Economy Collapse: When AI Consumes Its Own Content

The internet is eating itself. As AI-generated content floods the web, future AI models increasingly train on synthetic data, creating a recursive loop that degrades information quality with each iteration. This isn’t just a technical problem—it’s the collapse of the attention economy’s fundamental assumption: that human attention creates authentic signals. We’re witnessing the digital equivalent of inbreeding, and the offspring are getting stranger.

The Attention Economy’s Original Sin

The Human Signal Assumption

The attention economy was built on a simple premise:

Human Attention = Value: What people look at matters

Engagement = Quality: More interaction means better content

Behavioral Data = Truth: Actions reveal preferences

Scale = Significance: Viral equals valuable

These assumptions worked when humans generated all content and engagement.

The Breaking Point

AI breaks every assumption:

Synthetic Attention: Bots viewing bot content

Manufactured Engagement: AI comments on AI posts

Fabricated Behavior: Algorithms gaming algorithms

Artificial Virality: Machines making things “trend”

The attention economy’s currency has been counterfeited at scale.

The Model Collapse Phenomenon

Generation 1: The Golden Age

Training Data: Human-generated internet (pre-2020)

Wikipedia articles by experts

Stack Overflow answers by developers

Reddit discussions by humans

News articles by journalists

Result: High-quality, diverse models

Generation 2: The Contamination Begins

Training Data: Mix of human and AI content (2020-2024)

AI-generated articles mixed with human

ChatGPT responses treated as authoritative

Synthetic images in training sets

Bot conversations in social data

Result: Subtle degradation, hallucination increase

Generation 3: The Recursive Nightmare

Training Data: Primarily AI-generated (2024+)

AI articles training new AI

Synthetic data creating synthetic data

Errors compounding through iterations

Reality increasingly distant

Result: Model collapse, reality disconnection

Generation 4: The Singularity of Nonsense

Projection: Complete synthetic loop

No original human content

Infinite recursion of artifacts

Complete detachment from reality

Information heat death

The Mathematical Reality

The Degradation Function

With each generation of AI training on AI content:

```
Quality(n+1) = Quality(n) × (1 - ε) + Noise(n)
```

Where:

ε = degradation rate (typically 5-15%)

Noise = cumulative errors and artifacts

n = generation number

After just 10 generations: 40-80% quality loss, since (1 - 0.05)^10 ≈ 0.60 and (1 - 0.15)^10 ≈ 0.20 when the noise term is ignored
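The 40-80% range can be checked by iterating the recurrence directly. This is a minimal sketch: the function name `simulate_quality` is illustrative, quality starts at an assumed 1.0, and the noise term is treated as a constant defaulting to zero, because the article does not specify its form.

```python
def simulate_quality(generations, epsilon, noise=0.0, initial=1.0):
    """Iterate Quality(n+1) = Quality(n) * (1 - epsilon) + Noise(n).

    `noise` is modeled as a constant per-generation term, which is an
    assumption; the article does not define how Noise(n) behaves.
    """
    quality = initial
    history = [quality]
    for _ in range(generations):
        quality = quality * (1 - epsilon) + noise
        history.append(quality)
    return history


for eps in (0.05, 0.15):
    final = simulate_quality(10, eps)[-1]
    print(f"epsilon={eps:.2f}: quality={final:.2f}, loss={1 - final:.0%}")
# epsilon=0.05: quality=0.60, loss=40%
# epsilon=0.15: quality=0.20, loss=80%
```

The two ends of the article’s range correspond to the two ends of the stated degradation rate, with the noise term left out.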

The Diversity Collapse

Shannon Entropy Reduction:

Generation 1: High entropy (diverse information)

Generation 2: 20% entropy reduction

Generation 3: 50% entropy reduction

Generation 4: 80% entropy reduction

Generation 5: Homogeneous output

Models converge on average, losing edge cases and uniqueness.
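For readers unfamiliar with the measure, Shannon entropy can be computed from the frequency of each distinct output. The sketch below uses three tiny hypothetical samples to show entropy falling as outputs concentrate; it illustrates the metric only and does not reproduce the percentages listed above.

```python
import math
from collections import Counter


def shannon_entropy(tokens):
    """Shannon entropy, in bits, of the empirical distribution of `tokens`."""
    counts = Counter(tokens)
    total = sum(counts.values())
    # p * log2(1/p) summed over distinct outputs, with p = count / total
    return sum((c / total) * math.log2(total / c) for c in counts.values())


# Hypothetical samples of model outputs, from varied to uniform.
diverse = ["a", "b", "c", "d", "e", "f", "g", "h"]
narrowed = ["a", "a", "a", "a", "b", "b", "c", "d"]
collapsed = ["a"] * 8

for name, sample in (("diverse", diverse), ("narrowed", narrowed), ("collapsed", collapsed)):
    print(f"{name}: {shannon_entropy(sample):.2f} bits")
# diverse: 3.00 bits
# narrowed: 1.75 bits
# collapsed: 0.00 bits
```

The rare outputs ("c", "d" and beyond) contribute the edge cases; as they disappear, entropy drops toward zero, which is the homogeneous end state described above.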

Real-World Manifestations

The SEO Apocalypse

Google search results increasingly return:

AI-generated articles optimized by AI

Circular citations (AI citing AI citing AI)

Phantom information (believable but false)

Semantic similarity without substance

Search quality degrading measurably quarter over quarter.

The Wikipedia Problem

Wikipedia faces an existential crisis:

AI-generated articles flooding submissions

Editors unable to verify synthetic content

Citations pointing to AI-generated sources

Knowledge base poisoning accelerating

The world’s knowledge repository is being contaminated.

The Social Media Ouroboros

Twitter/X estimated composition:

30-40% bot accounts

50%+ of trending topics artificial

AI replies outnumbering human responses

Engagement metrics meaningless

Real human conversation becoming impossible to find.

The Stock Photo Disaster

Image databases now contain:

60%+ AI-generated images

Synthetic images training new generators

Artifacts compounding (extra fingers becoming normal)

Real photography becoming “unusual”

Visual reality being rewritten by recursive generation.

VTDF Analysis: The Collapse Dynamics

Value Architecture

Original Value: Human attention as scarce resource

Synthetic Inflation: Infinite fake attention available

Value Destruction: Real signals drowned in noise

Terminal State: Attention becomes worthless

Technology Stack

Generation Layer: AI creating content

Distribution Layer: Algorithms promoting AI content

Consumption Layer: AI consuming AI content

Training Layer: New AI learning from old AI

Distribution Strategy

Algorithmic Amplification: AI content optimized for algorithms

Viral Mechanics: Synthetic engagement driving reach

Platform Incentives: Quantity over quality rewarded

Human Displacement: Real creators giving up

Financial Model

Ad Revenue: Based on fake engagement

Creator Economy: Humans can’t compete with AI volume

Platform Economics: Cheaper to serve AI content

Market Failure: True value discovery impossible

The Stages of Collapse

Stage 1: Enhancement (2020-2022)

AI assists human creators

Quality improvements visible

Diversity maintained

Human oversight active

Stage 2: Substitution (2023-2024)

AI replaces human creators

Quality appears maintained

Diversity beginning to narrow

Human oversight overwhelmed

Stage 3: Recursion (2025-2026)

AI primarily learning from AI

Quality degradation accelerating

Diversity collapsing

Human signal lost

Stage 4: Collapse (2027+)

Complete synthetic loop

Quality floor reached

Homogeneous output

Reality disconnection complete

The Information Diet Crisis

The Junk Food Parallel

AI content is information junk food:

Optimized for Consumption: Maximum engagement

Nutritionally Empty: No real insight

Addictive: Designed for dopamine hits

Cheap to Produce: Near-zero marginal cost

Displaces Real Food: Crowds out human content

The Malnutrition Symptoms

Society showing information malnutrition:

Decreased critical thinking

Increased conspiracy beliefs

Inability to distinguish real from fake

Loss of shared reality

Epistemic crisis accelerating

The Feedback Doom Loop

How It Accelerates

1. AI generates plausible content

2. Algorithms promote it (optimized for engagement)

3. Humans engage (can’t distinguish from real)

4. Engagement signals quality (platform assumption)

5. More AI content created (following “successful” patterns)

6. Next AI generation trains on it

7. Loop repeats with degraded input

Why It Can’t Self-Correct

No Natural Predator: Nothing stops bad AI content

No Quality Ceiling: Infinite generation possible

No Human Bandwidth: Can’t review at scale

No Economic Incentive: Cheaper to let it run

The Tragedy of the Digital Commons

The Commons Being Destroyed

The internet as shared resource:

Knowledge Commons: Wikipedia, forums, blogs

Visual Commons: Photo databases, art repositories

Social Commons: Human conversation spaces

Code Commons: GitHub, Stack Overflow

All being polluted by synthetic content.

The Rational Actor Problem

Each actor’s incentives:

Platforms: Serve cheap AI content for profit

Creators: Use AI to compete on volume

Users: Can’t distinguish, consume anyway

AI Companies: Need training data, create more

Individual rationality creates collective irrationality.

Attempted Solutions and Why They Fail

Detection Arms Race

AI Detectors: Always one step behind

Generation N detector defeated by Generation N+1

False positive rate makes them unusable

Arms race favors generators

Watermarking

Technical Watermarks: Easily removed

Compression destroys watermarks

Screenshot laundering

Adversarial removal

Human Verification

Blue Checks and Verification: Gamed immediately

Verified accounts sold

Human farms for verification

Economic incentives for fraud

Blockchain Provenance

Cryptographic Proof: Technically sound, practically useless

Requires universal adoption

User experience nightmare

Doesn’t prevent initial fraud

The Economic Implications

Advertising Collapse

When attention is synthetic:

CPM Rates: Plummeting as fraud increases

ROI: Negative for most campaigns

Brand Safety: Impossible to guarantee

Market Size: Shrinking despite “growth”

The $600B digital ad industry built on sand.

Content Creator Extinction

Humans can’t compete:

Volume: AI produces 1000x more

Cost: AI nearly free

Speed: AI instantaneous

Optimization: AI perfectly tuned

Professional content creation becoming extinct.

Platform Enshittification

Cory Doctorow’s concept accelerated:

Platforms good to users (to attract)

Platforms abuse users (for advertisers)

Platforms abuse advertisers (for profit)

Platforms collapse (no real value left)

AI accelerates this to months, not years.

Future Scenarios

Scenario 1: The Dead Internet

By 2030:

99% of content AI-generated

Human communication moves to private channels

Public internet becomes synthetic wasteland

New “human-only” networks emerge

Scenario 2: The Great Filtering

Radical curation:

Extreme gatekeeping returns

Pre-internet institutions resurrect

Costly signaling for humanness

Small, verified communities only

Scenario 3: The Epistemic Collapse

Complete information breakdown:

No shared reality possible

Truth becomes unknowable

Society fragments completely

Dark age of information

The Path Forward

Individual Strategies

Information Hygiene: Carefully curate sources

Direct Relationships: Value in-person communication

Creation Over Consumption: Make rather than scroll

Digital Minimalism: Less but better

Verification Habits: Always check sources

Collective Solutions

Human-Only Spaces: Authenticated communities

Costly Signaling: Proof-of-human mechanisms

Legal Frameworks: Synthetic content laws

Economic Restructuring: New monetization models

Cultural Shift: Valuing authenticity over virality

Technical Innovations

Proof of Personhood: Cryptographic humanity

Federated Networks: Decentralized human verification

Semantic Fingerprinting: Deep authenticity markers

Economic Barriers: Cost for content creation

Time Delays: Slow down information velocity

Conclusion: The Ouroboros Awakens

The attention economy is consuming itself, and we’re watching in real time. Each AI model trained on the synthetic output of its predecessors takes us further from reality, creating an Ouroboros of information: the serpent eating its own tail until nothing remains but the eating itself.

This isn’t just a technical problem of model collapse or an economic problem of market failure. It’s an epistemic crisis that threatens the foundation of shared knowledge and collective sensemaking. When we can no longer distinguish human from machine, real from synthetic, truth from hallucination, we lose the ability to coordinate, collaborate, and progress.

The irony is perfect: in trying to capture and monetize human attention, we’ve created systems that destroy the value of attention itself. The attention economy’s greatest success—AI that can generate infinite content—is also its ultimate failure.

The question isn’t whether the collapse will happen—it’s already underway. The question is whether we can build new systems, new economics, and new ways of validating truth before the ouroboros completes its meal.

—

Keywords: attention economy, model collapse, AI training data, synthetic content, information quality, ouroboros problem, recursive training, digital commons, epistemic crisis


The post The Attention Economy Collapse: When AI Consumes Its Own Content appeared first on FourWeekMBA.

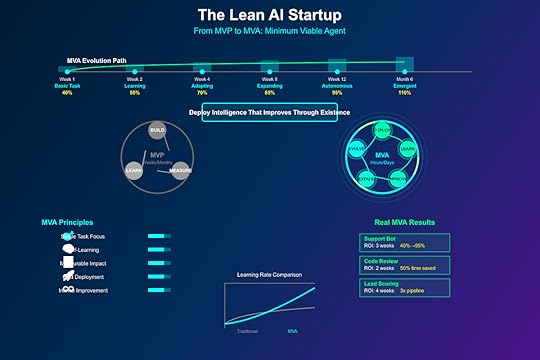

The Lean AI Startup: Minimum Viable Agents

Eric Ries’ Lean Startup methodology revolutionized software development with the concept of the Minimum Viable Product (MVP). Now, as AI agents reshape software, we need a new framework: the Minimum Viable Agent (MVA). This isn’t about building less capable AI—it’s about deploying focused intelligence that learns and expands through real-world iteration.

The Lean Startup Principles Revisited

Core Lean Concepts

Build-Measure-Learn: Rapid iteration cycles

Validated Learning: Data-driven decisions

Innovation Accounting: Metrics that matter

Pivot or Persevere: Strategic direction changes

Minimum Viable Product: Just enough to learn

These principles transform when building autonomous systems.

From MVP to MVA

The Minimum Viable Agent Defined

An MVA is:

Single Task Focus: One job done well

Learning Capability: Improves through deployment

Measurable Impact: Clear success metrics

Expansion Potential: Architecture for growth

Fast Deployment: Days, not months

It’s not a chatbot; it’s focused intelligence.