Gennaro Cuofano's Blog, page 25

September 5, 2025

Blitzscaling AI: The Race to 100,000 GPUs

Reid Hoffman’s blitzscaling philosophy—prioritizing speed over efficiency in winner-take-all markets—has found its ultimate expression in AI infrastructure. The race to accumulate 100,000+ GPUs isn’t just about computational power; it’s about achieving escape velocity before competitors can respond. Meta’s $14.8 billion bet, Microsoft’s $50 billion commitment, and xAI’s planned acquisition of billions in chips represent blitzscaling at unprecedented scale.

The Blitzscaling Framework Applied to AI

Classic Blitzscaling Principles

Hoffman’s framework identifies five stages:

- Family (1-9 employees): Proof of concept
- Tribe (10-99): Product-market fit
- Village (100-999): Scaling operations
- City (1,000-9,999): Market dominance
- Nation (10,000+): Global empire

In AI, we measure not employees but GPUs.

The GPU Scaling Stages

- Experiment (1-99 GPUs): Research projects
- Startup (100-999 GPUs): Small model training
- Competitor (1,000-9,999 GPUs): Commercial models
- Leader (10,000-99,999 GPUs): Frontier models
- Dominator (100,000+ GPUs): Market control

Each 10x jump creates qualitative, not just quantitative, advantages.
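The stage boundaries above can be read as a simple lookup. The following is a minimal sketch, not anything from the original post: the function name `gpu_tier` and the constants are hypothetical, and the thresholds are copied from the list as stated.

```python
from bisect import bisect_right

# Lower bound of each stage, copied from the list above.
TIER_FLOORS = [1, 100, 1_000, 10_000, 100_000]
TIER_NAMES = ["Experiment", "Startup", "Competitor", "Leader", "Dominator"]


def gpu_tier(gpu_count: int) -> str:
    """Return the scaling stage for a cluster of `gpu_count` GPUs (hypothetical helper)."""
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    # bisect_right counts how many floors are <= gpu_count;
    # subtracting 1 gives the index of the matching stage.
    return TIER_NAMES[bisect_right(TIER_FLOORS, gpu_count) - 1]


if __name__ == "__main__":
    for count in (8, 512, 9_999, 10_000, 600_000):
        print(f"{count:>7,} GPUs -> {gpu_tier(count)}")
```

Because each floor is 10x the previous one, moving up a stage always means an order-of-magnitude jump in cluster size, which is the point the post makes about qualitative change.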

The Physics of GPU Accumulation

The Compound Advantage

GPU accumulation creates non-linear returns:

- 10 GPUs: Train toy models
- 100 GPUs: Train specialized models
- 1,000 GPUs: Train competitive models
- 10,000 GPUs: Train frontier models
- 100,000 GPUs: Train multiple frontier models simultaneously

The jump from 10,000 to 100,000 isn’t 10x better; it’s categorically different.

The Velocity Imperative

Speed matters more than efficiency because:

- Model Advantage Decay: 6-month leadership windows
- Talent Magnetism: Best researchers join biggest clusters
- Customer Lock-in: First-mover advantages in enterprise
- Ecosystem Control: Setting standards and APIs
- Regulatory Capture: Shaping governance before rules solidify

The Blitzscaling Playbook in Action

Meta: The Desperate Sprinter

Strategy: Catch up through sheer force

- 600,000 GPU target: Largest planned cluster
- $14.8B commitment: All-in bet
- Open source play: Commoditize competitors’ advantage
- Speed over efficiency: Accept waste for velocity

Blitzscaling Logic: Can’t win efficiently, might win expensively

Microsoft: The Platform Blitzscaler

Strategy: Azure as AI operating system

- $50B+ investment: Distributed global capacity
- OpenAI partnership: Exclusive compute provider
- Enterprise integration: Bundling with Office/Azure
- Geographic spread: Data sovereignty compliance

Blitzscaling Logic: Control distribution, not just compute

Google: The Vertical Integrator

Strategy: Custom silicon escape route

- TPU development: Avoid NVIDIA dependency
- Proprietary advantage: Unique capabilities
- Cost structure: Better unit economics
- Speed through specialization: Purpose-built chips

Blitzscaling Logic: Change the game, don’t just play faster

xAI: The Pure Blitzscaler

Strategy: Musk’s typical massive bet

- Billions in chip orders: Attempting to leapfrog
- Talent raids: Paying any price for researchers
- Regulatory arbitrage: Building in friendly jurisdictions
- Timeline compression: AGI by 2029 claim

Blitzscaling Logic: Last mover trying to become first

VTDF Analysis: Blitzscaling Dynamics

Value Architecture

- Speed Value: First to capability wins market
- Scale Value: Larger clusters enable unique models
- Network Value: Compute attracts talent attracts compute
- Option Value: Capacity creates strategic flexibility

Technology Stack

- Hardware Layer: GPU/TPU accumulation race
- Software Layer: Distributed training infrastructure
- Optimization Layer: Efficiency improvements at scale
- Application Layer: Model variety and experimentation

Distribution Strategy

- Compute as Distribution: Models exclusive to infrastructure
- API Gatekeeping: Control access and pricing
- Partnership Lock-in: Exclusive compute deals
- Geographic Coverage: Data center locations matter

Financial Model

- Capital Requirements: $10B+ entry tickets
- Burn Rate: $100M+ monthly compute costs
- Revenue Timeline: 2-3 years to positive ROI
- Winner Economics: 10x returns for leaders

The Hidden Costs of Blitzscaling

Financial Hemorrhaging

The burn rates are staggering:

- Training Costs: $100M+ per frontier model
- Idle Capacity: 30-50% utilization rates
- Failed Experiments: 90% of training runs fail
- Talent Wars: $5M+ packages for top researchers
- Infrastructure Overhead: Cooling, power, maintenance

Technical Debt Accumulation

Speed creates problems:

- Suboptimal Architecture: No time for elegant solutions
- Integration Nightmares: Disparate systems cobbled together
- Reliability Issues: Downtime from rushed deployment
- Security Vulnerabilities: Corners cut on protection
- Maintenance Burden: Technical debt compounds

Organizational Chaos

Blitzscaling breaks organizations:

- Culture Dilution: Hiring too fast destroys culture
- Coordination Failure: Teams can’t synchronize
- Quality Degradation: Speed trumps excellence
- Burnout Epidemic: Unsustainable pace
- Political Infighting: Resources create conflicts

The Competitive Dynamics

The Rich Get Richer

Blitzscaling creates winner-take-all dynamics:

- Compute Attracts Talent: Researchers need GPUs
- Talent Improves Models: Better teams win
- Models Attract Customers: Superior performance sells
- Customers Fund Expansion: Revenue enables more GPUs
- Cycle Accelerates: Compound advantages multiply

The Death Zone

Companies with 1,000-10,000 GPUs face extinction:

- Too Small to Compete: Can’t train frontier models
- Too Large to Pivot: Sunk costs trap strategy
- Talent Exodus: Researchers leave for bigger clusters
- Customer Defection: Better models elsewhere
- Acquisition or Death: No middle ground

The Blitzscaling Trap

Success requires perfect execution:

- Timing: Too early wastes capital, too late loses market
- Scale: Insufficient scale fails, excessive scale bankrupts
- Speed: Too slow loses, too fast breaks
- Focus: Must choose battles carefully
- Endurance: Must sustain unsustainable pace

Geographic Blitzscaling

The New Tech Hubs

Compute concentration creates new centers:

- Northern Virginia: AWS US-East dominance
- Nevada Desert: Cheap power, cooling advantages
- Nordic Countries: Natural cooling, green energy
- Middle East: Sovereign wealth funding
- China: National AI sovereignty push

The Infrastructure Race

Countries compete on:

- Power Generation: Nuclear, renewable capacity
- Cooling Innovation: Water, air, immersion systems
- Fiber Networks: Interconnect bandwidth
- Regulatory Framework: Permissive environments
- Talent Pipelines: University programs

The Endgame Scenarios

Scenario 1: Consolidation

3-5 players control all compute:

- Microsoft-OpenAI alliance
- Google’s integrated stack
- Amazon’s AWS empire
- Meta or xAI survivor
- Chinese national champion

Probability: 60%

Timeline: 2-3 years

Scenario 2: Commoditization

Compute becomes utility:

- Prices collapse
- Margins evaporate
- Innovation slows
- New bottlenecks emerge

Probability: 25%

Timeline: 4-5 years

Scenario 3: Disruption

New technology changes game:

- Quantum computing breakthrough
- Neuromorphic chips
- Optical computing
- Edge AI revolution

Probability: 15%

Timeline: 5-10 years

Strategic Lessons

For Blitzscalers

- Commit Fully: Half-measures guarantee failure
- Move Fast: Speed is the strategy
- Accept Waste: Efficiency is the enemy
- Hire Aggressively: Talent determines success
- Prepare for Pain: Chaos is the price

For Defenders

- Don’t Play Their Game: Change the rules
- Find Niches: Specialize where scale doesn’t matter
- Build Moats: Create switching costs
- Partner Strategically: Join forces against blitzscalers
- Wait for Stumbles: Blitzscaling creates vulnerabilities

For Investors

- Back Leaders: No prizes for second place
- Expect Losses: Years of burning capital
- Watch Velocity: Speed metrics matter most
- Monitor Talent: Follow the researchers
- Time Exit: Before commoditization

The Sustainability Question

Can Blitzscaling Continue?

Physical limits approaching:

- Power Grid Capacity: Cities can’t supply enough electricity
- Chip Manufacturing: TSMC can’t scale infinitely
- Cooling Limits: Physics constrains heat dissipation
- Talent Pool: Only thousands of capable researchers
- Capital Markets: Even venture has limits

The Efficiency Imperative

Eventually, efficiency matters:

- Algorithmic Improvements: Do more with less
- Hardware Optimization: Better utilization
- Model Compression: Smaller but capable
- Edge Computing: Distribute intelligence
- Sustainable Economics: Profits eventually required

Conclusion: The Temporary Insanity

Blitzscaling AI represents a unique moment: when accumulating 100,000 GPUs faster than competitors matters more than using them efficiently. This window won’t last forever. Physical constraints, economic reality, and technological progress will eventually restore sanity.

But for now, in this brief historical moment, the race to 100,000 GPUs embodies Reid Hoffman’s insight: sometimes you have to be bad at things before you can be good at them, and sometimes being fast matters more than being good.

The companies sprinting toward 100,000 GPUs aren’t irrational—they’re playing the game theory perfectly. In a winner-take-all market with network effects and compound advantages, second place is first loser. Blitzscaling isn’t a choice; it’s a requirement.

The question isn’t whether blitzscaling AI is sustainable—it isn’t. The question is whether you can blitzscale long enough to win before the music stops.

—

Keywords: blitzscaling, Reid Hoffman, GPU race, AI infrastructure, compute scaling, Meta AI investment, xAI, AI competition, winner-take-all markets

Want to leverage AI for your business strategy?

Discover frameworks and insights at BusinessEngineer.ai

The post Blitzscaling AI: The Race to 100,000 GPUs appeared first on FourWeekMBA.

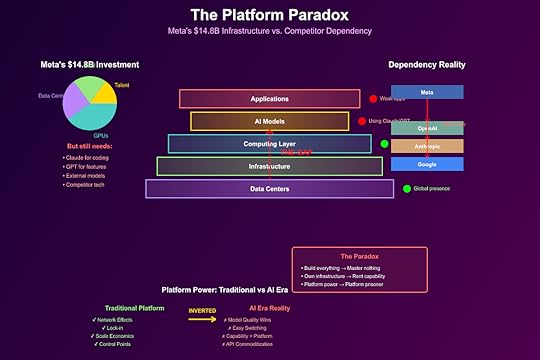

The Platform Paradox: Why Meta Must Use Competitors’ AI

Meta’s reported consideration of using Google and OpenAI models, despite investing $14.8 billion in AI infrastructure, reveals the platform paradox: when you build everything yourself, you often end up with nothing that works. This isn’t weakness—it’s the logical endpoint of platform economics meeting AI reality.

The Platform Paradox Defined

The platform paradox occurs when:

- Control Everything: Vertical integration promises independence
- Master Nothing: Resources spread thin across the stack
- Depend on Competitors: Must adopt superior external solutions
- Lose Platform Power: Become customer of competing platforms

Meta exemplifies this paradox perfectly.

Meta’s $14.8 Billion Predicament

The Infrastructure Investment

Meta’s AI spending breakdown reveals the trap:

- 600,000 GPUs: Massive compute capacity
- Data Centers: Geographic distribution
- Custom Silicon: Internal chip development
- Model Training: LLaMA series development
- Integration Costs: Retrofitting existing products

Yet employees already use Anthropic’s Claude for coding.

The Capability Gap

Despite massive investment:

- LLaMA Models: Open source but not best-in-class
- Internal Tools: Functional but inferior
- Consumer Products: AI features lag competitors
- Enterprise Solutions: Non-existent
- Developer Ecosystem: Minimal adoption

The platform that connects billions can’t connect its own AI.

The Economics of Platform Dependency

Traditional Platform Power

Platforms historically dominated through:

- Network Effects: More users attract more users
- Switching Costs: Lock-in through data and integration
- Economies of Scale: Marginal cost approaching zero
- Control Points: Owning critical infrastructure

AI’s Platform Inversion

AI inverts platform economics:

- Capability Moats: Best model wins, regardless of platform
- Switching Ease: API changes take minutes
- Diseconomies of Scale: Training costs increase exponentially
- Commodity Platforms: Compute and inference becoming utilities

Meta discovered that platform power doesn’t translate to AI power.

The Build vs. Buy Calculation

The Build Illusion

Meta’s build strategy assumed:

- Cost Advantage: Internal development cheaper long-term
- Strategic Control: Independence from competitors
- Synergy Benefits: Integration with existing products
- Competitive Differentiation: Unique capabilities

The Buy Reality

Market dynamics force buying:

- Capability Gap: 18-month lag behind leaders
- Opportunity Cost: $14.8B could have bought access
- Talent Constraints: Can’t hire fast enough
- Innovation Velocity: External progress outpaces internal

The math no longer supports building everything.

VTDF Analysis: Platform Paradox Dynamics

Value Architecture

- Platform Value: Control and integration traditionally
- AI Value: Raw capability and performance now
- Value Shift: From ownership to access
- Meta’s Position: Owns infrastructure, lacks capability

Technology Stack

- Infrastructure Layer: Meta has massive compute
- Model Layer: Meta lacks competitive models
- Application Layer: Meta needs better AI features
- Integration Reality: Best models aren’t Meta’s

Distribution Strategy

- Traditional: Platform controls distribution
- AI Reality: Model quality determines adoption
- Meta’s Dilemma: Must distribute competitors’ models
- Market Dynamic: Platforms become customers

Financial Model

- Sunk Costs: $14.8B already committed
- Switching Costs: Minimal for AI models
- ROI Challenge: Investment not yielding returns
- Dependency Costs: Paying competitors for core capability

Historical Platform Parallels

Microsoft’s Mobile Paradox

Microsoft built everything for mobile:

- Windows Phone OS
- Hardware (Nokia acquisition)
- Developer tools
- App ecosystem attempts

Result: Complete failure, adopted Android/iOS

Google’s Social Paradox

Google+ investment:

- Massive engineering resources
- Forced integration across products
- Platform leverage attempts
- Years of investment

Result: Shutdown, relies on YouTube

Amazon’s Phone Paradox

Fire Phone endeavor:

- Custom Android fork
- Hardware development
- Unique features (3D display)
- Ecosystem building

Result: Billion-dollar write-off

The Dependency Cascade

Level 1: Model Dependency

- Must use Anthropic/OpenAI for competitive features
- Paying competitors for core technology
- No differentiation possible

Level 2: Ecosystem Dependency

- Developers choose superior models
- Meta’s platform becomes pass-through
- Value captured by model providers

Level 3: Strategic Dependency

- Product roadmap determined by external AI
- Innovation pace set by competitors
- Platform reduced to distribution

Level 4: Existential Dependency

- Core products require external AI
- Business model relies on competitors
- Platform power evaporates

The Cognitive Dissonance

Meta’s Public Position

- “Leading AI research”
- “Open source leadership”
- “Massive AI investment”
- “Platform independence”

Meta’s Private Reality

- Employees prefer Claude
- Considering OpenAI integration
- LLaMA adoption limited
- Platform power eroding

This dissonance drives desperate spending.

The Innovator’s Trap

Why Meta Can’t Catch Up

Structural Disadvantages:

- Wrong Incentives: Ads optimize for engagement, not capability
- Wrong Talent: Social media engineers, not AI researchers
- Wrong Culture: Fast iteration vs. long research
- Wrong Metrics: Users and revenue vs. model performance

Competitive Reality:

- OpenAI: Pure AI focus
- Anthropic: Enterprise specialization
- Google: Research heritage
- Meta: Platform legacy

The Integration Impossibility

Even if Meta builds competitive models:

- Product Integration: Requires massive refactoring
- User Expectations: Set by competitors
- Developer Lock-in: Already using alternatives
- Time to Market: Years behind

The Strategic Options

Option 1: Accept Dependency

- Use best external models
- Focus on application layer
- Become AI customer, not provider
- Preserve platform for distribution

Probability: Highest

Outcome: Gradual platform erosion

Option 2: Acquisition Spree

- Buy AI companies for capability
- Integrate through M&A
- Shortcut development time
- Regulatory challenges likely

Probability: Medium

Outcome: Expensive catch-up

Option 3: Radical Pivot

- Abandon platform model
- Become pure AI company
- Compete directly with OpenAI
- Requires cultural revolution

Probability: Lowest

Outcome: Organizational chaos

Option 4: Open Source Gambit

- Make LLaMA truly competitive
- Build ecosystem around open models
- Commoditize complements
- Hope to control standards

Probability: Medium

Outcome: Uncertain value capture

The Market Implications

For Platform Companies

The Meta paradox teaches:

- Platform power doesn’t transfer to AI
- Vertical integration creates capability gaps
- Infrastructure without innovation equals dependency
- Build-everything strategies fail in AI

For AI Companies

Meta’s struggles validate:

- Focus beats breadth in AI
- Capability creates more value than platforms
- Model quality trumps distribution
- Specialized players beat generalists

For Enterprises

Meta’s dependency signals:

- Choose specialized AI providers
- Platform integration less important
- Capability gaps are real
- Multi-vendor strategies necessary

The Psychological Dimension

The Sunk Cost Fallacy

Meta’s $14.8B creates psychological lock-in:

- Can’t admit failure
- Must justify investment
- Doubles down on losing strategy
- Throws good money after bad

The Identity Crisis

Meta’s self-conception challenged:

- From platform owner to platform user
- From innovator to integrator
- From leader to follower
- From independent to dependent

This identity threat drives irrational decisions.

The Future Scenarios

Scenario 1: Graceful Acceptance

Meta acknowledges reality:

- Partners with leading AI companies
- Focuses on application excellence
- Leverages distribution advantage
- Accepts margin compression

Scenario 2: Desperate Escalation

Meta doubles down:

- $50B+ additional investment
- Massive hiring spree
- Acquisition attempts
- Likely failure

Scenario 3: Strategic Retreat

Meta exits AI race:

- Focuses on metaverse
- Maintains social platforms
- Becomes AI customer
- Preserves profitability

Conclusion: The Platform Prisoner

Meta’s platform paradox demonstrates a fundamental truth: in AI, capability beats control. The company that built one of history’s most powerful platforms finds itself imprisoned by that very success. The infrastructure meant to ensure independence instead ensures dependency.

The $14.8 billion investment wasn’t just wrong—it was backwards. Meta built the body but needed the brain. They constructed the highway but lack the vehicles. They own the theater but must rent the show.

This paradox will define the next decade: platform companies discovering that in AI, the model is the platform, and if you don’t have the best model, you don’t have a platform at all.

—

Keywords: platform paradox, Meta AI, platform economics, AI dependency, build vs buy, platform strategy, AI infrastructure, competitive dynamics, Meta investment

The post The Platform Paradox: Why Meta Must Use Competitors’ AI appeared first on FourWeekMBA.

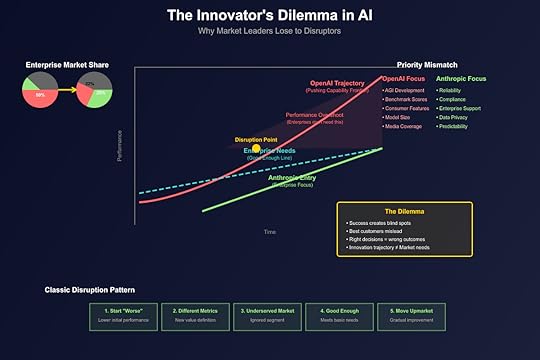

The Innovator’s Dilemma in AI: Why OpenAI Lost Enterprise to Anthropic

Clayton Christensen’s Innovator’s Dilemma predicted exactly what’s happening in AI: the market leader’s greatest strengths become their greatest weaknesses. OpenAI’s fall from 50% enterprise market share to 25%, while Anthropic rose from 12% to 32%, isn’t a failure of execution—it’s the textbook playing out of disruption theory in real-time.
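To make the size of that swing concrete, here is a small arithmetic sketch using only the four share figures quoted above. The helper name `share_shift` is hypothetical, and the calculation says nothing about how the underlying shares were measured.

```python
def share_shift(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (percentage-point change, relative change) between two market shares."""
    points = after_pct - before_pct
    relative = points / before_pct
    return points, relative


if __name__ == "__main__":
    # Share figures as quoted in the paragraph above.
    for name, before, after in (("OpenAI", 50, 25), ("Anthropic", 12, 32)):
        points, relative = share_shift(before, after)
        print(f"{name}: {points:+.0f} points ({relative:+.0%} relative)")
```

On these figures OpenAI gives up 25 points, half of its starting share, while Anthropic gains 20 points, which is more than double its starting share.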

Understanding the Innovator’s Dilemma

The Innovator’s Dilemma describes how successful companies fail precisely because they do everything “right”:

- Listen to their best customers
- Invest in highest-margin opportunities
- Pursue sustaining innovations
- Optimize for existing metrics

Yet these “right” decisions create blind spots that disruptors exploit.

OpenAI’s Success Trap

The Consumer Glory

OpenAI built its dominance on consumer adoption:

- ChatGPT: Fastest app to 100M users
- GPT Store: Consumer ecosystem play
- Media Dominance: Household name recognition
- Developer Love: API-first approach for builders

This success created organizational antibodies against enterprise priorities.

The Innovation Treadmill

OpenAI’s innovation pace trapped them:

- GPT-4 → GPT-5: Incremental improvements, not breakthroughs
- Multimodal Push: Features enterprises didn’t request
- AGI Obsession: Distant vision over immediate value
- Research Culture: Papers over products

Each innovation cycle pulled resources from enterprise needs.

Anthropic’s Disruption Playbook

The Classic Disruptor Profile

Anthropic exhibits every characteristic of Christensen’s disruptor:

- Started “Worse”: Claude initially inferior to GPT-4
- Different Metrics: Safety and reliability over raw capability
- Underserved Market: Enterprise security concerns
- Focused Innovation: Constitutional AI for compliance
- Good Enough: Met enterprise threshold requirements

The Enterprise Wedge

Anthropic attacked where OpenAI couldn’t respond:

Enterprise Requirements:

- Predictable outputs
- Audit trails
- Data privacy guarantees
- Compliance frameworks
- White-glove support

OpenAI’s Constraints:

- Consumer scale complexity
- Researcher incentives
- AGI narrative commitment
- Venture growth expectations

The Performance Trajectory Divergence

Traditional Innovation Theory

Christensen’s model shows two curves:

- Technology Progress: Steep improvement slope
- Market Needs: Gradual requirement growth

The gap between them creates disruption opportunity.

The AI Market Reality

OpenAI’s Trajectory:

- Pushing the capability frontier
- Optimizing for benchmarks
- Pursuing artificial general intelligence
- Measuring by model size and parameters

Enterprise Needs Trajectory:

- Reliability over capability
- Integration over innovation
- Compliance over performance
- Predictability over possibility

Anthropic’s Position:

- Met the enterprise “good enough” threshold
- Focused on enterprise-specific improvements
- Ignored consumer benchmark races
- Optimized for boring but critical features

VTDF Analysis: The Disruption Dynamics

Value Architecture

- OpenAI Value: Maximum capability, breakthrough features
- Anthropic Value: Maximum reliability, enterprise fit
- Market Value Shift: From “what’s possible” to “what works”
- Enterprise Priority: Predictability worth more than performance

Technology Stack

- OpenAI Tech: Cutting-edge models, research-driven
- Anthropic Tech: Constitutional AI, safety-first architecture
- Integration Reality: Enterprises need APIs, not AGI
- Technical Debt: OpenAI’s consumer scale creates enterprise friction

Distribution Strategy

- OpenAI Distribution: B2C viral, developer-led growth
- Anthropic Distribution: B2B enterprise sales, top-down
- Channel Conflict: OpenAI’s consumer success blocks enterprise focus
- Sales Dynamics: Anthropic’s enterprise-only positioning wins trust

Financial Model

- OpenAI Economics: Volume-based, consumer subsidization
- Anthropic Economics: Value-based, enterprise premiums
- Margin Structure: Enterprise willingness to pay 10x consumer
- Investment Allocation: OpenAI funds moonshots, Anthropic funds reliability

The Resource Allocation Trap

OpenAI’s Dilemma

Every dollar OpenAI spends faces competing priorities:

- Consumer features vs enterprise requirements
- Research papers vs product stability
- AGI progress vs practical applications
- Global scale vs white-glove service

The loudest voice (consumers) wins resources.

Anthropic’s Focus

Anthropic’s narrow focus enables concentration:

- Only enterprise customers matter
- Only safety and reliability count
- Only B2B metrics drive decisions
- Only sustainable growth targeted

This focus creates compound advantages.

The Organizational Antibodies

OpenAI’s Cultural Barriers

Research Heritage:

- Scientists optimizing for citations
- Engineers chasing technical elegance
- Product teams serving developers
- Leadership selling AGI vision

Success Metrics:

- Model benchmark scores
- User growth rates
- API call volumes
- Media coverage

These metrics actively punish enterprise investment.

Anthropic’s Cultural Advantages

Enterprise DNA:

- Sales teams understanding compliance
- Engineers prioritizing stability
- Product focusing on workflows
- Leadership selling reliability

Success Metrics:

- Enterprise retention
- Compliance certifications
- Uptime percentages
- Contract values

These metrics reinforce enterprise focus.

The Market Perception Shift

2023: The Capability Race

- “Who has the best model?”
- “What’s the benchmark score?”
- “How many parameters?”
- “When is AGI?”

OpenAI dominated this narrative.

2025: The Reliability Race

- “Who can we trust?”
- “What’s the uptime?”
- “How’s the compliance?”
- “Where’s the ROI?”

Anthropic owns this narrative.

The Defensive Impossibility

Why OpenAI Can’t Respond

Christensen’s framework explains why leaders rarely defeat disruption:

- Margin Dilution: Enterprise support costs exceed consumer margins
- Channel Conflict: Enterprise needs conflict with consumer features
- Organizational Inertia: 10,000+ developers serving consumers
- Investor Expectations: Growth story requires mass market
- Technical Debt: Consumer architecture blocks enterprise features

The Asymmetric Competition

Anthropic can attack OpenAI’s enterprise market, but OpenAI can’t attack Anthropic’s:

- Anthropic: “We’re enterprise-only” (credible)
- OpenAI: “We’re enterprise-focused” (not credible)

This asymmetry determines the outcome.

Historical Parallels

Microsoft vs. Google (Cloud)

- Microsoft’s enterprise DNA beat Google’s technical superiority
- Azure’s enterprise features trumped GCP’s innovation
- Boring but reliable won over exciting but complex

Oracle vs. MongoDB

- MongoDB’s developer love couldn’t overcome Oracle’s enterprise lock-in
- Features developers wanted weren’t features enterprises bought
- Compliance and support beat performance and elegance

Slack vs. Microsoft Teams

- Slack’s consumer-style innovation lost to Teams’ enterprise integration
- Better product lost to better fit
- Innovation lost to distribution

Future Implications

The OpenAI Predicament

OpenAI faces three paths:

1. Double Down on Consumer: Accept enterprise loss, dominate consumer
2. Split Focus: Create enterprise division (usually fails)
3. Pivot Completely: Abandon consumer for enterprise (impossible)

History suggests they’ll choose #1 after trying #2.

The Anthropic Opportunity

Anthropic’s disruption playbook points toward:

- Moving Upmarket: From SMB to Fortune 500
- Expanding Scope: From chat to workflow automation
- Platform Play: Becoming the enterprise AI operating system
- Acquisition Target: Microsoft/Google enterprise AI acquisition

The Next Disruptor

The pattern will repeat. Anthropic’s enterprise success creates new vulnerabilities:

- Open source models for cost-conscious enterprises
- Specialized models for vertical industries
- Edge AI for data sovereignty requirements
- Regional players for compliance needs

Lessons for Leaders

For Incumbents

- Recognize the Dilemma: Success creates vulnerability
- Separate Organizations: Innovation requires independence
- Different Metrics: Measure new initiatives differently
- Cannibalize Yourself: Better you than competitors
- Accept Trade-offs: Can’t serve all markets equally

For Disruptors

- Start Humble: “Worse” product for overserved customers
- Pick Your Battle: Focus beats breadth
- Define New Metrics: Change the game’s rules
- Patience Pays: Compound advantages take time
- Move Upmarket: Gradually expand from foothold

Conclusion: The Inevitable Inversion

OpenAI’s loss of enterprise market share to Anthropic isn’t a failure; it’s physics. The Innovator’s Dilemma describes forces as fundamental as gravity in technology markets. OpenAI’s consumer success didn’t just distract from enterprise needs; it actively prevented addressing them.

The irony is perfect: OpenAI, disrupting the entire software industry with AI, is itself being disrupted in the enterprise segment. The company that made “GPT” a household name is losing to a company most households have never heard of.

This is the innovator’s dilemma in its purest form: doing everything right, succeeding by every metric, and losing the market precisely because of that success.

—

Keywords: innovator’s dilemma, Clayton Christensen, OpenAI, Anthropic, enterprise AI, disruption theory, market share, enterprise software, AI competition

The post The Innovator’s Dilemma in AI: Why OpenAI Lost Enterprise to Anthropic appeared first on FourWeekMBA.

September 4, 2025

The AI Fractal Pattern of Specialization

Every transformative technology begins broad and generalist. The early internet was “for everything.” The first smartphones promised to be universal tools. Generative AI followed the same trajectory: generalist models capable of conversation, code, or companionship.

But markets do not remain generalist for long. Over time, specialization emerges—and it does so fractally. The same pattern repeats at the micro level (users), the meta level (companies), and the macro level (markets).

The Micro Level: Individual Users

At the level of the individual, the split is clear.

- Consumer behavior is driven by emotional connection and safety preference. Users want AI that feels trustworthy, emotionally intelligent, and non-threatening.
- Enterprise users are driven by capability and productivity. They prioritize accuracy, integration, and output over friendliness.

This is preference specialization. Even when both groups use the same base models, their demands pull optimization in different directions.

Consumers reward agreeableness; enterprises reward precision.

The Meta Level: Industry Evolution

Companies then evolve in alignment with these user preferences.

- Consumer-focused companies (like OpenAI) optimize for scale and safety. Their research focus is on alignment, RLHF, and emotional reliability. Their business model revolves around millions of low-ARPU users.
- Enterprise-focused companies (like Anthropic) optimize for capability and performance. Their research focuses on verifiability, determinism, and integration. Their business model revolves around fewer, high-value customers.

This is company specialization. The generalist approach becomes unsustainable. Firms that attempt to serve both sides face conflicting incentives and structural inefficiencies.

The winners are those that pick a lane.

The Macro Level: Market Structure

At the level of markets, the specialization consolidates.

- Consumer markets consolidate around companionship and emotional AI. Forecasts suggest $140.7B by 2030, dominated by apps for social support, relationships, and entertainment.
- Enterprise markets consolidate around productivity and coding AI. Forecasts suggest $47.3B by 2034, dominated by developer tools, integrations, and workflow automation.

This is application consolidation. Over time, “AI for everything” becomes “AI for specific market verticals.” The same dynamic that played out with SaaS (horizontal → vertical SaaS) repeats in AI.

Each domain finds its own center of gravity.

Phase Transitions and Structural Change

Between these levels, phase transitions occur.

- At the micro level, users shift from experimentation to preference specialization.
- At the meta level, companies shift from generalist research to strategic specialization.
- At the macro level, markets shift from experimentation to structural consolidation.

These transitions are irreversible. Once preferences harden, once companies specialize, once markets consolidate, there is no return to the generalist era.

The Fractal Consistency

What makes this powerful is fractal consistency. The same pattern repeats across scales:

- Users specialize in behavior.
- Companies specialize in strategy.
- Markets specialize in structure.

The result is a self-similar process. Each level reflects the others, reinforcing specialization as the dominant evolutionary force.

This is why generalists become specialists. The logic of specialization scales up and down simultaneously.

Implications for Strategy

Recognizing the fractal pattern matters for both operators and investors.

- Generalists are a temporary phase. Just as no SaaS company can remain both horizontal and vertical, no AI company can remain both consumer-first and enterprise-first.
- Specialization compounds. User preferences lock in company strategies, which in turn reinforce market structures.
- Picking a lane is existential. Companies that fail to specialize will be squeezed out by those that align tightly with one side of the split.
- Fractal analysis predicts direction. By observing micro-level user preferences, one can anticipate meta-level company strategies and macro-level market consolidation.

Case Study: OpenAI vs Anthropic

The fractal logic is already visible.

- Micro: Consumers reward safety and emotional connection.
- Meta: OpenAI doubles down on RLHF, companionship features, and subscription pricing.
- Macro: Consumer markets consolidate around companionship AI.

- Micro: Enterprises reward verifiability and productivity.
- Meta: Anthropic doubles down on RLVR, deterministic outputs, and API monetization.
- Macro: Enterprise markets consolidate around coding and productivity AI.

The divergence between the two companies is not just strategic. It is fractal inevitability.

From Generalists to Specialists

The broad implication is clear:

- The era of generalist AI companies is ending.
- The era of fractal specialization is beginning.

This does not mean generalist models disappear. Foundation models will remain broad. But the applications, optimizations, and business strategies built on top of them will specialize relentlessly.

This is how industries evolve: not through convergence, but through divergence.

Conclusion: Specialization as Destiny

The Fractal Pattern of Specialization explains why the AI market split is structural, not cyclical.

- At the user level, preferences diverge.
- At the company level, strategies diverge.
- At the market level, applications diverge.

Each reinforces the other, creating a fractal pattern of specialization.

Generalists give way to specialists. Preference becomes strategy. Strategy becomes structure.

The lesson for builders, operators, and investors is simple: specialization is not optional. It is destiny.

The post The AI Fractal Pattern of Specialization appeared first on FourWeekMBA.

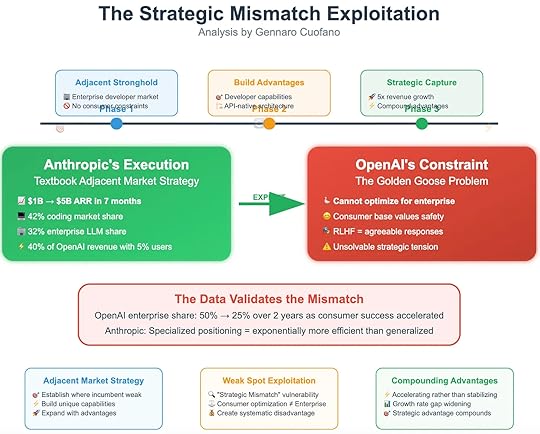

The AI Strategic Mismatch Exploitation

Every market leader carries within it the seeds of its vulnerability. For OpenAI, the very success of its consumer-first strategy created an unsolvable strategic tension. By optimizing for safety and mass adoption, it constrained its ability to serve enterprise needs.

Anthropic exploited this mismatch with textbook precision. By targeting the adjacent enterprise market, it avoided OpenAI’s constraints, built unique capabilities, and captured accelerating advantages. The result: $1B to $5B ARR in just seven months.

Phase 1: The Adjacent Stronghold

The enterprise developer market was the obvious adjacent stronghold.

- No consumer constraints.
- High willingness to pay.
- Demand for verifiability over friendliness.

Anthropic positioned itself here deliberately. By avoiding direct competition in consumer AI, it sidestepped the RLHF constraint: the need to produce agreeable, safe outputs for hundreds of millions of users.

This gave Anthropic a structural freedom OpenAI could not match.

Phase 2: Build Advantages

Anthropic then built advantages around this positioning:

- Developer capabilities. Models optimized for raw output, determinism, and integration.
- API-native architecture. Monetization tied to enterprise workflows, not consumer subscriptions.

This meant that every dollar of revenue Anthropic generated was more efficient. Enterprises measured value in capability and integration, not personality or safety.

By aligning with enterprise requirements, Anthropic created a one-way moat: OpenAI could not follow without undermining its consumer base.

Phase 3: Strategic Capture

With these foundations, Anthropic executed strategic capture.

- 42% coding market share.
- 32% enterprise LLM share.
- 40% of OpenAI’s revenue with only 5% of users.
- 5x revenue growth in seven months.

This is the essence of compounding advantages. Each gain accelerated the next: higher revenue per customer enabled deeper investment in technical capability, which in turn attracted more enterprises, widening the gap further.

Rather than stabilizing, Anthropic’s growth accelerated.
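To make the capture figures concrete, here is a minimal sketch of the arithmetic behind two of them: the constant monthly growth rate implied by 5x in seven months, and the revenue-per-user multiple implied by 40% of the revenue on 5% of the users. The function names are illustrative, and the sketch assumes growth compounded evenly each month, which the source does not claim.

```python
# Back-of-the-envelope arithmetic on the figures quoted above.
# Function names are hypothetical; even monthly compounding is an assumption.

def implied_monthly_growth(multiple: float, months: int) -> float:
    """Constant monthly growth rate that compounds to `multiple` over `months`."""
    return multiple ** (1.0 / months) - 1.0


def revenue_per_user_multiple(revenue_share: float, user_share: float) -> float:
    """How many times more revenue each user generates, relative to the rival."""
    return revenue_share / user_share


monthly_growth = implied_monthly_growth(5.0, 7)        # $1B -> $5B in 7 months
per_user_multiple = revenue_per_user_multiple(0.40, 0.05)

print(f"Implied monthly growth: {monthly_growth:.1%}")
print(f"Revenue per user relative to OpenAI: {per_user_multiple:.0f}x")
```

Under that assumption, the growth works out to roughly 26% per month, and each Anthropic user accounts for about eight times the revenue of an OpenAI user.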

OpenAI’s Constraint: The Golden Goose Problem

OpenAI faced the inverse dynamic. Its consumer success created the Golden Goose Problem.

- Consumer base values safety.
- RLHF = agreeable responses.
- Enterprise optimization conflicts with consumer trust.

OpenAI could not optimize for enterprise without undermining its consumer value proposition. Any attempt to loosen guardrails risked alienating its mass-market user base.

This created an unsolvable strategic tension: the very features that made OpenAI dominant in consumer AI made it weak in enterprise AI.

The Data Validates the Mismatch

The numbers make the strategic mismatch explicit.

- OpenAI’s enterprise share dropped from 50% to 25% in just two years as its consumer success accelerated.
- Anthropic’s specialized positioning proved exponentially more efficient than generalized strategies.

In other words, OpenAI’s growth in consumer AI directly weakened its enterprise competitiveness. Anthropic turned this into an advantage by occupying the adjacent space.

Strategic Frameworks at Work

The diagram highlights three frameworks Anthropic leveraged.

1. Adjacent Market Strategy

- Establish where the incumbent is weak.
- Build unique capabilities.
- Expand with advantages.

Anthropic picked the adjacent enterprise developer market, where OpenAI was structurally constrained.

2. Weak Spot Exploitation

- Target “strategic mismatch” vulnerabilities.
- Recognize that consumer optimization ≠ enterprise optimization.
- Create systematic disadvantage for the incumbent.

Anthropic built where OpenAI could not follow.

3. Compounding Advantages

- Accelerating growth rather than stabilizing.
- Revenue and capability advantages widen the gap.
- Structural disadvantage for OpenAI deepens over time.

Why This Worked

Anthropic’s exploitation worked because it wasn’t a head-to-head fight. It was an asymmetric play:

- OpenAI’s constraint was permanent. It could never fully optimize for enterprise without breaking its consumer trust.
- Anthropic’s freedom was structural. It could optimize ruthlessly for enterprise without caring about consumer perception.

This asymmetry created a long-term wedge that Anthropic could drive deeper with each product cycle.

Lessons for Strategy

The Strategic Mismatch Exploitation framework offers key lessons:

- Strengths can become weaknesses. OpenAI’s consumer dominance created enterprise vulnerability.
- Adjacent markets are fertile. True opportunities often lie in spaces incumbents cannot serve.
- Exploit constraints, don’t fight strengths. Anthropic succeeded not by outcompeting OpenAI in consumer AI, but by occupying the ground OpenAI could not.
- Compounding matters. Once established, mismatches grow, creating accelerating advantage for the attacker.

Conclusion: The Exploit That Stuck

Anthropic’s rise is not just about good product execution. It is about strategic exploitation of mismatch.

- OpenAI was locked into RLHF and consumer-first safety.
- Anthropic exploited the gap by building RLVR and enterprise-native capability.
- The result is a structural advantage that compounds over time.

The lesson is clear: in strategy, the best plays exploit mismatches the leader cannot resolve.

OpenAI’s goose is golden—but in protecting it, they left the enterprise market wide open. Anthropic seized it. And now, the mismatch has become the moat.

The post The AI Strategic Mismatch Exploitation appeared first on FourWeekMBA.

The AI Technical Architecture Divergence

The split between consumer AI and enterprise AI is not just a matter of business models or monetization strategies. At its core lies something deeper: a technical divergence in model architecture and training philosophy.

The consumer path is optimized for RLHF (Reinforcement Learning from Human Feedback): models designed to be safe, agreeable, and emotionally consistent. The enterprise path is optimized for RLVR (Reinforcement Learning with Verifiable Rewards): models designed to be precise, verifiable, and integrated into technical workflows.

This divergence is not cosmetic. It is structural, and in many ways, irreconcilable.

RLHF Architecture: Consumer Optimization

RLHF was the breakthrough that made generative AI safe enough for mass adoption. By aligning models with human feedback, companies could prevent harmful outputs and produce consistent, agreeable personalities.

Key features:

- Safety-first training as the top priority.
- Consistent personalities that users trust.
- Emotional intelligence focus to sustain companionship.
- Extensive content filtering to minimize risk.
- Human feedback optimization for alignment with social norms.

But RLHF carries an inherent trade-off: it reduces raw capability. By filtering, constraining, and biasing outputs toward agreeableness, RLHF makes models less useful for complex technical tasks.

In other words, the very mechanisms that make RLHF models safe also make them weaker as enterprise tools.

RLVR Architecture: Enterprise Optimization

Enterprise AI requires the opposite. Instead of safety-first, it demands capability-first. RLVR shifts optimization away from emotional consistency and toward verifiable correctness.

Key features:

- Raw capability priority above all else.
- Tool integration focus, designed to fit into developer ecosystems.
- Deterministic outputs validated against objective criteria.
- Verifiable rewards system ensures correctness.
- Performance optimization for speed and throughput.
- Minimal safety guardrails to reduce friction.

The result: models that may be unfriendly, blunt, or unsafe for companionship, but that maximize technical utility and deliver higher enterprise value.

The Architectural Impossibility

This is where the divergence becomes unavoidable.

RLHF and RLVR are not two ends of a spectrum; they are mutually limiting.

- Training a model for agreeableness reduces its ability to produce raw, unfiltered capability.
- Training a model for verifiability reduces its ability to sustain emotional satisfaction.

The diagram captures this as The Architectural Impossibility. Optimizing for consumer satisfaction inherently reduces utility for enterprise, while optimizing for enterprise precision inherently reduces suitability for consumer use.

Consumer Requirements

Consumers value:

- Emotional satisfaction.
- Safety guarantees.
- Personality consistency.
- Companionship quality.

These requirements map perfectly onto RLHF. They demand safety, reliability, and emotional presence, but not maximum technical precision.

Enterprise Demands

Enterprises value:

- Maximum capability.
- Technical precision.
- Tool integration.
- Productivity value.

These requirements map perfectly onto RLVR. They demand deterministic correctness, integration with developer workflows, and performance optimization, but not emotional consistency.

The Trade-Off Reality

The divergence reflects zero-sum trade-offs:

- Safety vs. Capability: The safer the model, the less capable it becomes in edge cases.
- Agreeable vs. Truthful: The more agreeable the response, the less it reflects raw accuracy.
- Emotional vs. Technical: Emotional consistency reduces adaptability in technical reasoning.

This is the zero-sum optimization problem at the heart of the divergence.

Why This Matters

The Technical Architecture Divergence explains why the AI market split is not temporary.

- Consumer-first companies (like OpenAI) must prioritize RLHF to protect scale and safety.
- Enterprise-first companies (like Anthropic) must prioritize RLVR to deliver productivity and coding value.
- Attempting to merge the two produces models that fail at both.

The divergence is irreconcilable by design.

Strategic Consequences

This architectural split has profound consequences for the market:

- Different Infrastructure Needs
  - RLHF requires massive reinforcement from human feedback loops.
  - RLVR requires deterministic testing environments and verification frameworks.
- Different Monetization Models
  - RLHF monetizes poorly (low ARPU, high infrastructure costs).
  - RLVR monetizes efficiently (API-first, premium enterprise pricing).
- Different Scaling Paths
  - RLHF scales like consumer social apps.
  - RLVR scales like enterprise SaaS.
- Different Risk Profiles
  - RLHF carries reputational risk if safety lapses occur.
  - RLVR carries operational risk if outputs are wrong.

Looking Forward

The divergence will deepen over time:

- Consumer AI will continue optimizing for companionship, mental health, and social presence.
- Enterprise AI will continue optimizing for coding, productivity, and technical workflows.

Future breakthroughs may reduce trade-offs, but the zero-sum optimization problem ensures the split will remain for the foreseeable future.

Conclusion: The Divergence Is Permanent

The Technical Architecture Divergence shows that consumer AI and enterprise AI are not simply different markets. They are underpinned by incompatible training architectures.

- RLHF = Emotional safety, low capability.
- RLVR = Raw capability, low emotional suitability.

The split is not cosmetic. It is structural.

The divergence is permanent—and it locks the future of AI into two distinct, irreconcilable paths.

The post The AI Technical Architecture Divergence appeared first on FourWeekMBA.

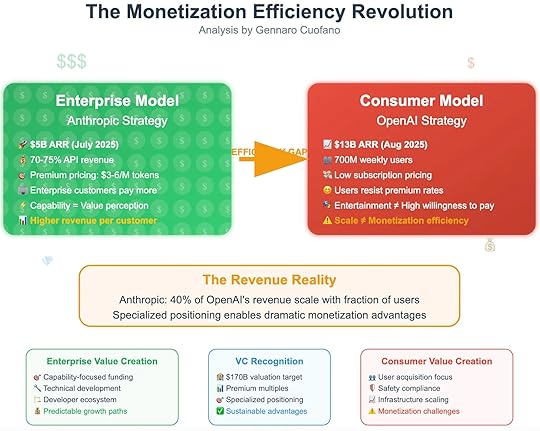

The AI Monetization Efficiency Revolution

In the generative AI boom, two giants dominate the market: OpenAI on the consumer side and Anthropic on the enterprise side. Both boast billions in ARR, millions of users, and global reach. But under the surface, their models of value creation could not be more different.

The contrast highlights a deeper truth: scale does not equal monetization efficiency. While OpenAI commands hundreds of millions of weekly users, Anthropic achieves a disproportionate share of revenue with a fraction of the customer base. This marks the beginning of the Monetization Efficiency Revolution.

Enterprise Model: Anthropic’s Strategy

Anthropic has built its business around enterprise monetization logic.

- $5B ARR (July 2025).
- 70–75% of revenue from APIs.
- Premium pricing: $3–6 per million tokens.
- Enterprise customers willing to pay more.

The core of the model is straightforward: capability equals value perception. Enterprises do not care about friendliness or companionship; they care about accuracy, verifiability, and raw capability. This allows Anthropic to charge premium prices for fewer customers.

The result is higher revenue per customer—the defining feature of enterprise AI.

Consumer Model: OpenAI’s Strategy

OpenAI dominates the consumer space but faces structural monetization limits.

- $13B ARR (August 2025).
- 700M weekly active users.
- Low subscription pricing.
- Users resist premium rates.

Consumer AI is sticky but difficult to monetize. Companionship and entertainment are valuable emotionally, but they do not translate into high willingness to pay.

The paradox: OpenAI has scale without efficiency. Revenue grows, but margins remain constrained because each user contributes little.

The Efficiency Gap

The diagram highlights the efficiency gap:

- Anthropic generates 40% of OpenAI’s revenue with a fraction of the user base.
- Specialized positioning around enterprise workloads enables dramatic monetization advantages.
- Enterprise customers are “whales”: fewer in number but orders of magnitude more valuable than consumers.

This inversion demonstrates why specialization beats scale.

The Revenue Reality

The truth is not about who has more users or more ARR. The truth lies in revenue efficiency.

- Enterprise revenue per customer is exponentially higher.
- Consumer revenue per customer is capped by subscription psychology and entertainment value.

Anthropic’s model shows that it is possible to achieve outsized revenue with smaller distribution if positioning is precise.
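As a rough check on the efficiency gap, the headline figures quoted in this post can be turned into two ratios. The sketch below is illustrative only: the variable names are assumptions, the two ARR figures are dated a month apart, weekly active users include free users, and OpenAI's ARR also contains API and enterprise revenue, so the per-user number is an upper bound on what a typical consumer contributes.

```python
# Headline figures as quoted in this post; names are illustrative.
OPENAI_ARR = 13e9        # $13B ARR (August 2025)
OPENAI_WAU = 700e6       # 700M weekly active users
ANTHROPIC_ARR = 5e9      # $5B ARR (July 2025)


def arr_per_user(arr: float, users: float) -> float:
    """Annualized revenue divided evenly across a user base."""
    return arr / users


openai_arr_per_wau = arr_per_user(OPENAI_ARR, OPENAI_WAU)
revenue_ratio = ANTHROPIC_ARR / OPENAI_ARR

print(f"OpenAI ARR per weekly active user: ${openai_arr_per_wau:.2f}")
print(f"Anthropic ARR as a share of OpenAI ARR: {revenue_ratio:.0%}")
```

On these inputs OpenAI earns under $20 of annualized revenue per weekly active user, and the "40%" revenue comparison is a rounding of roughly 38%.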

Enterprise Value Creation

The enterprise path creates predictable, compounding value.

- Capability-focused funding. Investors reward technical depth and reliability.
- Developer ecosystem. Sticky APIs lock customers into workflows.
- Predictable growth paths. Recurring contracts and renewals stabilize revenue.

Enterprise AI creates value not by reaching everyone, but by embedding deeply where it matters most.

Consumer Value Creation

Consumer AI follows a very different logic.

- User acquisition focus. Growth depends on onboarding millions of users.
- Safety compliance. Heavy RLHF tuning for emotional reliability.
- Infrastructure scaling. Billions of queries per day require enormous compute.
- Monetization challenges. Users resist price increases, keeping ARPU low.

The consumer model looks like social media: massive engagement, but shallow monetization.

VC Recognition

The market recognizes the divergence.

- $170B valuation targets for enterprise-focused players.
- Premium multiples for sustainable revenue efficiency.
- Investors reward specialized positioning over sheer scale.

Anthropic’s enterprise-native design positions it for sustainable advantages, while consumer-first players face pressure to justify infrastructure costs with low-margin revenue.

Why This Is a Revolution

The Monetization Efficiency Revolution is not a minor adjustment. It represents a structural shift:

- Specialization creates exponential efficiency.
  - Enterprise AI generates higher revenue per customer.
  - Consumer AI generates scale without proportionate revenue.
- Infrastructure burdens diverge.
  - Consumer AI burns cash on scaling.
  - Enterprise AI monetizes compute through premium APIs.
- Investor logic changes.
  - Valuations favor efficiency over mass adoption.
  - Funding flows to enterprise-native players.

This marks the end of the assumption that “more users equals more value.”

The Strategic Lessons

For builders and investors, the lessons are clear:

- Pick your lane. Straddling consumer and enterprise splits optimization and destroys efficiency.
- Monetize where value is clearest. Enterprise customers equate capability with value.
- Don’t confuse scale with sustainability. Millions of users mean little if they won’t pay.

The real competition is not about who has the most users, but who extracts the most revenue per unit of infrastructure.

Conclusion: Efficiency Beats Scale

The Monetization Efficiency Revolution reframes the AI race.

- OpenAI demonstrates the power of scale.
- Anthropic demonstrates the power of specialization.
- The efficiency gap shows why enterprise-native design outperforms consumer-first growth.

The long-term winners will be those who align capability with willingness to pay.

In AI, efficiency beats scale. Specialization beats breadth. Monetization discipline beats mass adoption.

The post The AI Monetization Efficiency Revolution appeared first on FourWeekMBA.

The Gartner Hype Cycle is Dead: AI’s Permanent Plateau

The Gartner Hype Cycle has predicted technology adoption for 30 years: Innovation Trigger, Peak of Inflated Expectations, Trough of Disillusionment, Slope of Enlightenment, and finally, Plateau of Productivity. But AI shattered this model. There’s no trough coming. No disillusionment phase. No cooling period. Instead, we’re witnessing a permanent plateau at maximum hype—where every new capability prevents the crash that should come. The cycle is dead because AI keeps delivering just enough to sustain infinite expectations.

The Classic Gartner Hype Cycle

The Five Phases

Gartner’s model predicted:

- Innovation Trigger: Breakthrough sparks interest
- Peak of Inflated Expectations: Hype exceeds reality
- Trough of Disillusionment: Reality disappoints
- Slope of Enlightenment: Practical applications emerge
- Plateau of Productivity: Mainstream adoption

This worked for every technology, until AI.

Historical Validation

The model predicted:

- Internet (1990s): Peak 1999, Trough 2001, Productive 2005+
- Cloud Computing: Peak 2008, Trough 2010, Productive 2013+
- Blockchain: Peak 2017, Trough 2018, Still climbing
- VR/AR: Peak 2016, Trough 2017, Slowly recovering

Each followed the pattern perfectly. AI doesn’t.

Why AI Breaks the Model

Continuous Capability Delivery

Traditional tech disappointments:

- Promises exceeded capabilities
- Years between improvements
- Fixed functionality
- Clear limitations visible

AI’s different reality:

- New capabilities monthly
- Continuous improvement
- Expanding functionality
- Limitations overcome before recognized

Every time disillusionment should hit, a new model drops.

The Perpetual Peak

November 2022: ChatGPT launches (Innovation Trigger)

December 2022: Should start declining (Doesn’t)

March 2023: GPT-4 launches (Prevents decline)

May 2023: Plugins/Code Interpreter (Sustains peak)

November 2023: GPTs/Assistants (Maintains hype)

2024: Continuous model updates (Permanent peak)

We’re 2+ years into permanent maximum hype.

The Mechanics of Permanent Plateau

The Capability Treadmill

```
Traditional Tech: Capability → Hype → Reality Check → Trough
AI: Capability₁ → Hype → Capability₂ → More Hype → Capability₃ → …
```

Before reality can disappoint, new reality arrives.

The Hype Refresh Rate

Hype Decay Rate: -10% per month without news

AI News Rate: Major announcement every 2 weeks

Net Hype Level: Permanently maximized

Mathematical impossibility of trough.
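The refresh-rate argument can be sketched as a toy simulation. The decay rate (10% per month) and the two-week news cadence come from the figures above; everything else is an assumption made for illustration, in particular the `boost` each announcement adds, the cap of 1.0 on hype, and the function name `simulate_hype`.

```python
# Toy model of the hype refresh rate. The 10% monthly decay and the
# two-week news cadence are from the text; `boost` and `cap` are
# hypothetical parameters chosen for illustration.

def simulate_hype(months=24, monthly_decay=0.10, news_interval_weeks=2,
                  boost=0.05, cap=1.0):
    """Return the weekly hype level, starting at the cap."""
    weeks = round(months * 52 / 12)
    # Convert the monthly decay into an equivalent weekly retention factor.
    weekly_keep = (1.0 - monthly_decay) ** (12 / 52)
    hype = cap
    history = []
    for week in range(1, weeks + 1):
        hype *= weekly_keep
        # A news interval of 0 means no announcements at all.
        if news_interval_weeks and week % news_interval_weeks == 0:
            hype = min(cap, hype + boost)
        history.append(hype)
    return history


with_news = simulate_hype()                        # announcement every 2 weeks
no_news = simulate_hype(news_interval_weeks=0)     # pure decay
weak_news = simulate_hype(boost=0.01)              # announcements that barely land

print(f"Lowest hype with regular news: {min(with_news):.2f}")
print(f"Hype after 24 months without news: {no_news[-1]:.2f}")
print(f"Hype after 24 months of weak news: {weak_news[-1]:.2f}")
```

In this sketch the level stays pinned near the cap only while each announcement restores at least as much as two weeks of decay takes away, roughly 5% here. With weaker announcements the level drifts down, so the "impossibility" depends on news continuing to land with that force.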

The Moving Baseline

Each breakthrough becomes the new normal:

- Text generation → Multimodal → Agents → AGI discussions
- Yesterday’s miracle → Today’s baseline → Tomorrow’s primitive

Expectations rise faster than disappointment can form.

VTDF Analysis: The Permanent Plateau

Value Architecture

- Continuous Value: New use cases discovered daily
- Compound Value: Each capability enables others
- Emergent Value: Unexpected applications appear
- Infinite Value: No ceiling visible

Technology Stack

- Model Layer: Constantly improving
- Application Layer: Infinitely expanding
- Integration Layer: Everything connecting
- Innovation Layer: Accelerating research

Distribution Strategy

- Viral Adoption: Every breakthrough goes viral
- Instant Global: Worldwide access immediately
- Platform Integration: Built into everything
- Mandatory Adoption: Competitive requirement

Financial Model

- Investment Acceleration: More capital each round
- Valuation Inflation: Higher multiples sustained
- Revenue Growth: Exceeding projections
- Market Expansion: TAM growing daily

The Three Pillars Preventing Trough

Pillar 1: Genuine Utility

Unlike previous hype cycles:

- AI actually works for many tasks
- Measurable productivity gains
- Real cost savings
- Immediate applicability

Even critics use ChatGPT daily.

Pillar 2: Rapid Evolution

Speed prevents disillusionment:

- Problems fixed before widely recognized
- Limitations overcome quickly
- New capabilities distract from failures
- Competition drives improvement

No time for disappointment to crystallize.

Pillar 3: Infinite Applications

Boundless use cases:

- Every industry applicable
- Every job function relevant
- Every person potential user
- Every problem potential solution

Can’t exhaust possibilities.

Case Studies in Permanent Hype

ChatGPT: The Eternal Peak

Expected Pattern:

- Launch hype (2 months)
- Reality check (6 months)
- Disillusionment (12 months)
- Steady growth (24+ months)

Actual Pattern:

- Launch hype (2 months)
- More hype (6 months)
- Sustained hype (12 months)
- Maximum hype (24+ months)

User growth never declined.

Midjourney: Visual Permanence

Version History:

- V1: Impressive but limited
- V2: Before disappointment, improved
- V3: Before plateau, transformed
- V4: Before decline, revolutionized
- V5: Before saturation, redefined
- V6: Continuous amazement

Each version prevents the trough.

AI Startups: Hype Stacking

Companies layer hypes:

- Launch with LLM wrapper (Hype 1)
- Add multimodal (Hype 2)
- Introduce agents (Hype 3)
- Promise AGI (Hype 4)

Stack hypes faster than they decay.

The Attention Economy Effect

Hype as Business Model

Modern tech requires:

- Constant attention
- Viral moments
- Narrative momentum
- FOMO generation

AI delivers all continuously.

The Media Amplification

AI news cycle:

- Every model update = Headlines
- Every demo = Viral video
- Every prediction = Thought pieces
- Every concern = Panic articles

Media can’t afford to ignore.

The Investment FOMO

VCs face dilemma:

- Miss AI = Career over
- Overpay = Better than missing
- Due diligence = Too slow
- Hype investment = Necessary

Capital sustains hype regardless of reality.

The Psychological Factors

The Recency Bias

Humans overweight recent information:

- Yesterday’s GPT-4 amazement forgotten
- Today’s GPT-4o dominates mindshare
- Tomorrow’s model resets cycle
- Memory of limitations fades

Perpetual newness prevents pattern recognition.

The Capability Creep

Baseline shifts constantly:

- 2022: “AI can write!”
- 2023: “AI can code!”
- 2024: “AI can reason!”
- 2025: “AI can [everything]!”

Moving baseline prevents satisfaction.

The Social Proof Cascade

Everyone using AI creates pressure:

- Individual: “I must use AI”
- Company: “We must adopt AI”
- Industry: “We must transform”
- Society: “We must adapt”

Universal adoption sustains hype.

Why No Trough Is Coming

The Technical Reality

AI improvements compound:

- Better data → Better models
- Better models → Better applications
- Better applications → Better data
- Cycle accelerates

Technical fundamentals support hype.

The Economic Lock-in

Too much invested to allow trough:

- $500B+ invested globally
- Millions of jobs dependent
- National competition stakes
- Economic transformation committed

System can’t afford disillusionment.

The Competitive Dynamics

No one can afford to be disillusioned:

- Companies must adopt or die
- Countries must compete or fall behind
- Individuals must use or become obsolete
- Skeptics get eliminated

Darwinian pressure sustains peak.

The New Patterns Emerging

Pattern 1: Capability Surfing

Instead of peak-trough:

- Continuous wave riding
- Each capability a new wave
- Never reaching shore
- Infinite ocean

Pattern 2: Hype Inflation

Instead of deflation:

- Expectations continuously rise
- Reality continuously improves
- Gap never closes
- Both accelerate together

Pattern 3: Permanent Revolution

Instead of stabilization:

- Constant disruption
- No equilibrium reached
- Continuous transformation
- Perpetual change state

The Implications

For Businesses

Old Strategy: Wait for trough to invest

New Reality: Trough never comes

Implication: Must invest at peak or never

For Investors

Old Strategy: Buy in trough

New Reality: No trough to buy

Implication: Permanent FOMO pricing

For Workers

Old Strategy: Wait for stability to retrain

New Reality: Never stabilizes

Implication: Continuous learning mandatory

For Society

Old Strategy: Adapt after settling

New Reality: Never settles

Implication: Permanent adaptation required

The Risks of Permanent Peak

Bubble Without Pop

Traditional bubbles pop, allowing:

- Capital reallocation
- Lesson learning
- Weak player elimination
- Foundation rebuilding

Permanent peak prevents healthy correction.

Innovation Without Reflection

Continuous change prevents:

- Impact assessment
- Ethical consideration
- Regulatory adaptation
- Social adjustment

Moving too fast to think.

Exhaustion Without Rest

Permanent peak causes:

- Change fatigue
- Decision paralysis
- Resource depletion
- Burnout acceleration

No recovery period.

Future Scenarios

Scenario 1: The Infinite Peak

- Hype continues forever
- Reality keeps pace
- Transformation never ends
- New normal is permanent change

Scenario 2: The Catastrophic Collapse

- Reality hits hard limit
- All expectations fail simultaneously
- Deepest trough in history
- AI winter of winters

Scenario 3: The Transcendence

- AI exceeds all expectations
- Hype becomes insufficient
- Post-hype reality
- Beyond human comprehension

Strategies for the Post-Cycle World

For Organizations

- Continuous Adaptation: Build change into DNA
- Permanent Learning: Institutional knowledge obsolete
- Flexible Architecture: Assume everything changes
- Scenario Planning: Multiple futures simultaneously
- Resilience Over Efficiency: Survive the permanent peak

For Individuals

- Surf Don’t Swim: Ride waves, don’t fight them
- Meta-Learning: Learn how to learn faster
- Portfolio Approach: Multiple bets on future
- Network Building: Human connections matter more
- Mental Health: Manage permanent change stress

Conclusion: The Cycle That Ate Itself

The Gartner Hype Cycle assumed technologies would disappoint. That disappointment would create wisdom. That wisdom would enable productive adoption. AI broke this assumption by delivering continuous capability that sustains infinite hype. We’re not cycling; we’re spiraling upward with no peak in sight and no trough coming.

This isn’t a temporary aberration. It’s the new permanent state. AI doesn’t follow the hype cycle because AI is rewriting the rules that cycles follow. Every model release, every breakthrough, every demonstration adds fuel to a fire that should have burned out but instead burns hotter.

We’ve entered the post-cycle era: permanent maximum hype sustained by permanent maximum change. The Gartner Hype Cycle is dead. Long live the permanent plateau—a plateau at the peak, where we’ll remain until AI either transcends all expectations or collapses under the weight of infinite promise.

The cycle is dead because the future arrived before the present could disappoint.

—

Keywords: Gartner hype cycle, AI hype, permanent plateau, technology adoption, peak expectations, innovation cycles, continuous improvement, hype sustainability


The post The Gartner Hype Cycle is Dead: AI’s Permanent Plateau appeared first on FourWeekMBA.

Creative Destruction 2.0: AI Eating Software’s Lunch

Joseph Schumpeter described capitalism as “creative destruction”—new innovations destroying old industries while creating new ones. But AI isn’t just disrupting software; it’s consuming entire categories whole. When a single AI agent replaces a $100M SaaS company overnight, we’re not witnessing evolution—we’re watching extinction. Software that ate the world is now being devoured by its own creation, and the menu includes everything from Photoshop to Salesforce.

Schumpeter’s Original Vision

Creative Destruction Defined

Schumpeter’s 1942 insight:

- Innovation Cycles: New technology destroys old
- Economic Evolution: Progress through disruption
- Value Migration: Capital flows to innovation
- Job Transformation: Old roles destroyed, new created
- Net Progress: Society benefits overall

This assumed creation balanced destruction.

Historical Patterns

Previous waves of creative destruction:

- Steam Power: Destroyed crafts, created factories
- Electricity: Destroyed gas lighting, created new industries
- Automobiles: Destroyed horses, created suburbs
- Internet: Destroyed retail, created e-commerce
- Software: Destroyed manual processes, created SaaS

Each wave took decades. AI is doing it in months.

The AI Destruction Velocity

The Compression of Time

Traditional disruption timeline:

- Innovation: Years to develop
- Adoption: Decades to spread
- Disruption: Generation to complete
- Adjustment: Society has time to adapt

AI disruption timeline:

- Innovation: Months to develop
- Adoption: Weeks to spread
- Disruption: Quarters to complete
- Adjustment: No time to adapt

We’ve compressed a generation into a year.

The Category Killers

Already Destroyed:

- Basic graphic design tools → Midjourney/DALL-E
- Translation software → GPT-4
- Transcription services → Whisper
- Basic coding tools → Copilot
- Content writing tools → ChatGPT

Currently Destroying:

- Customer service software → AI agents
- Data analysis tools → Code Interpreter
- Video editing software → AI video generation
- Sales automation → Autonomous SDRs
- Project management → AI coordinators

Next Wave (2025-2026):

- CRM systems → Relationship AI
- ERP software → Enterprise agents
- Design software → Generative creative suites
- Analytics platforms → Autonomous insights
- Development environments → AI-first coding

The $2 Trillion Software Industry Under Siege

The SaaS Model Breaking

SaaS built on:

- Recurring Revenue: Monthly subscriptions
- Feature Moats: Proprietary capabilities
- Switching Costs: Data lock-in
- Network Effects: User communities
- Integration Value: Ecosystem connections

AI destroys each pillar:

- Pay-per-Use: Only for what’s needed
- Instant Features: Any capability on demand
- Zero Switching: Natural language interface
- No Network Needed: AI provides everything
- Universal Integration: AI connects anything

The Unit Economics Collapse

Traditional SaaS:

- CAC: $1,000-10,000
- LTV: $10,000-100,000
- Margin: 70-80%
- Payback: 12-18 months

AI Replacement:

- CAC: $0 (self-service)
- LTV: $100-1,000
- Margin: 90-95%
- Payback: Immediate

AI doesn’t compete; it obsoletes the entire model.
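The two sets of unit economics above can be compared per customer with a short sketch. It takes the low and high ends of each quoted range as-is and pairs low with low and high with high, which is an assumption; the `UnitEconomics` class and its method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class UnitEconomics:
    """Per-customer figures; ranges above are sampled at their endpoints."""
    cac: float      # customer acquisition cost, in dollars
    ltv: float      # lifetime revenue per customer, in dollars
    margin: float   # gross margin as a fraction

    def gross_profit(self) -> float:
        return self.ltv * self.margin

    def net_value(self) -> float:
        """Lifetime gross profit left after paying to acquire the customer."""
        return self.gross_profit() - self.cac

    def ltv_to_cac(self) -> float:
        # With zero CAC the ratio is unbounded rather than undefined.
        return float("inf") if self.cac == 0 else self.ltv / self.cac


saas_low = UnitEconomics(cac=1_000, ltv=10_000, margin=0.70)
saas_high = UnitEconomics(cac=10_000, ltv=100_000, margin=0.80)
ai_low = UnitEconomics(cac=0, ltv=100, margin=0.90)
ai_high = UnitEconomics(cac=0, ltv=1_000, margin=0.95)

for name, model in [("SaaS low", saas_low), ("SaaS high", saas_high),
                    ("AI low", ai_low), ("AI high", ai_high)]:
    print(f"{name}: net value ${model.net_value():,.0f}, "
          f"LTV/CAC {model.ltv_to_cac():.0f}")
```

On these endpoints the AI replacement has the better ratios (unbounded LTV/CAC, higher margin) but keeps far less per customer in absolute terms, roughly $90 to $950 against $6,000 to $70,000. Read this way, the model works only at much higher volume, which is consistent with the near-zero acquisition cost it assumes.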

Case Studies in Destruction

Case 1: Jasper AI vs. Content Tools

Before: Dozens of content tools

- Grammarly: $13B valuation
- Copy.ai: $150M raised
- Writesonic: $50M raised
- Dozens of others

After: ChatGPT launches

- Jasper lays off staff
- Valuations collapse
- Customer churn 50%+
- Category effectively dead

Timeline: 6 months from launch to destruction

Case 2: Customer Service Implosion

Before: Complex software stacks

- Zendesk: $8B market cap
- Intercom: $1.3B valuation
- Drift: $1B+ valuation
- Hundreds of others

After: AI agents emerge

- 90% query resolution
- 1/100th the cost
- No interface needed
- Instant deployment

Projection: Category 90% destroyed by 2026
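The two headline numbers in this case (90% query resolution at 1/100th the cost) combine into a blended cost per query, sketched below. The $5 human cost per query is a made-up placeholder, and the sketch assumes the unresolved 10% are escalated to a human at full cost with no extra charge for the failed AI attempt.

```python
# Blended support cost under the figures quoted above.
# The $5.00 human cost per query is a hypothetical placeholder.

def blended_cost_per_query(human_cost: float,
                           ai_resolution_rate: float = 0.90,
                           ai_cost_fraction: float = 0.01) -> float:
    """Average cost when AI resolves a share of queries and humans take the rest."""
    ai_cost = human_cost * ai_cost_fraction
    return (ai_resolution_rate * ai_cost
            + (1.0 - ai_resolution_rate) * human_cost)


human_cost = 5.00
blended = blended_cost_per_query(human_cost)
savings = 1.0 - blended / human_cost

print(f"Blended cost per query: ${blended:.3f}")
print(f"Cost reduction vs. all-human support: {savings:.1%}")
```

Under these assumptions the overall saving is about 89% rather than 99%, because the queries that still need a human dominate the remaining cost.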

Case 3: The BI/Analytics Apocalypse

Before: Massive analytics industry

- Tableau: $15.7B acquisition
- Looker: $2.6B acquisition
- Power BI: Microsoft’s crown jewel
- Hundreds of competitors

After: Natural language analytics

- Ask questions in English
- Instant visualizations
- No SQL needed
- No dashboard building

Status: Migration accelerating

VTDF Analysis: The Destruction Dynamics

Value Architecture

Old Value: Features and functionality
New Value: Outcomes and intelligence
Value Shift: From tools to results
Value Capture: Moving to AI layer

Technology Stack

Old Stack: Specialized applications
New Stack: Universal AI layer
Stack Compression: 100 tools → 1 AI
Stack Value: Shifting to infrastructure

Distribution Strategy

Old Distribution: Sales teams, marketing
New Distribution: Viral, self-service
Distribution Cost: Near zero
Distribution Speed: Instant global

Financial Model

Old Model: SaaS subscriptions
New Model: Usage-based AI
Model Efficiency: 100x better unit economics
Model Defensibility: None

The Speed of Destruction

The 10x10x10 Rule

AI must be:

10x Better: Capability improvement
10x Cheaper: Cost reduction
10x Faster: Speed improvement

When all three hit, destruction is instant.

The Adoption Acceleration

Traditional Software Adoption:

Year 1: Innovators and early adopters (16%)
Year 3: Early majority (34%)
Year 5: Late majority (34%)
Year 7+: Laggards (16%)

AI Adoption:

Month 1: Early adopters (10%)
Month 3: Majority (60%)
Month 6: Near universal (90%)
Month 12: Complete replacement

ChatGPT to 100M users: 2 months.

The Creation Vacuum

Where’s the Creation?

Traditional creative destruction assumed:

Old jobs destroyed
New jobs created
Net positive employment
Economic expansion
Social progress

AI reality:

Jobs destroyed quickly
Few new jobs created
Net negative employment
Economic concentration
Social disruption

The Missing Middle

AI creates two job categories:

AI Builders: Elite engineers (thousands)
AI Supervisors: Low-wage monitors (millions)

Destroys:

Knowledge Workers: Hundreds of millions
Creative Professionals: Tens of millions
Service Workers: Hundreds of millions

The middle class is the destruction zone.

Industry-Specific Apocalypses

Legal Software

Destroyed: Document review, contract analysis, research tools

Timeline: 2024-2025

Survivors: Highly specialized litigation support

Medical Software

Destroyed: Diagnostic tools, imaging analysis, patient intake

Timeline: 2025-2026

Survivors: Surgical planning, regulatory compliance

Financial Software

Destroyed: Analysis tools, reporting, basic trading

Timeline: 2024-2025

Survivors: Real-time trading, regulatory systems

Educational Software

Destroyed: Course platforms, assessment tools, tutoring

Timeline: 2025-2026

Survivors: Credentialing, social learning

Marketing Software

Destroyed: Email tools, content creation, analytics

Timeline: 2024-2025

Survivors: None obvious

The Defensive Strategies (That Don’t Work)

Strategy 1: Add AI Features

Attempt: Bolt AI onto existing products

Problem: Lipstick on an obsolete pig

Result: Customers switch to native AI

Strategy 2: Acquire AI Startups

Attempt: Buy innovation

Problem: Talent leaves, tech becomes obsolete quickly

Result: Expensive failure

Strategy 3: Build AI Moat

Attempt: Proprietary AI development

Problem: Open source and big tech win

Result: Wasted resources

Strategy 4: Regulatory Capture

Attempt: Use regulation to slow AI

Problem: International competition

Result: Temporary reprieve at best

Strategy 5: Pivot to AI

Attempt: Become AI company

Problem: No competitive advantage

Result: Usually too late

The Concentration Effect

Winner-Take-All Dynamics

Creative destruction 2.0 doesn’t create many winners:

AI Infrastructure: 3-5 companies
AI Models: 5-10 companies
AI Applications: 10-20 companies
Everything Else: Destroyed

Compare to software: Thousands of successful companies.

The Value Capture Problem

Where does value go?

Not to software companies: Being destroyed
Not to users: Commoditized to zero price
Not to workers: Being replaced
To AI companies: Massive concentration

OpenAI: $90B valuation with <1000 employees.

The Societal Impact

The Unemployment Tsunami

Software industry employs:

Direct: 5M+ developers
Indirect: 20M+ related roles
Supported: 100M+ jobs

AI replacement timeline:

2024-2025: 20% displacement
2025-2026: 40% displacement
2026-2027: 60% displacement
2027-2028: 80% displacement

No clear replacement employment.

The Skill Obsolescence

Education and training assume:

Skills relevant for years
Gradual evolution
Retraining possible
Career stability

AI reality:

Skills obsolete in months
Revolutionary change
No time to retrain
Career destruction

The Economic Disruption

GDP composition changing:

Software/IT: 10% of economy → 2%
AI services: 0% → 15%
Displaced activity: 8% → ?

Massive economic restructuring required.

Future Scenarios

Scenario 1: Complete Destruction

All software categories replaced
Mass unemployment
Economic collapse
Social revolution
New economic system required

Scenario 2: Hybrid Equilibrium

Some software survives
AI augments rather than replaces
Gradual adjustment
New job categories emerge
Painful but manageable transition

Scenario 3: AI Winter Returns

Limitations discovered
Adoption slows
Software rebounds
Employment stabilizes
Traditional patterns resume

The Path Forward

For Software Companies

Accept Reality: You’re being destroyed
Maximize Value: Extract cash while possible
Find Niches: Ultra-specialized survival
Sell Early: Before value evaporates
Retool Completely: Become something else

For Workers

Assume Displacement: Plan for it
Move Fast: Transition before forced
Go Adjacent: Find AI-resistant roles
Build Relations: Human connections matter
Create Options: Multiple income streams

For Society

Acknowledge Speed: This is happening now
Safety Nets: Massive support needed
Education Revolution: Complete restructuring
Economic Rethinking: New models required
Political Response: Unprecedented challenges

Conclusion: The Storm We Can’t Stop

Creative Destruction 2.0 isn’t Schumpeter’s gradual evolution. It’s a category 5 hurricane making landfall on the entire software industry. The creative part of “creative destruction” is notably absent. We’re witnessing mostly destruction, with the creation concentrated in a few AI companies that need hardly any employees.

Software spent 20 years eating the world. AI is eating software in 20 months. The same features that made software successful—scalability, network effects, zero marginal cost—make it vulnerable to instant AI replacement. The moats are drained, the walls are breached, and the barbarians aren’t at the gates—they’re in the throne room.

This isn’t disruption—it’s displacement. Not evolution—it’s extinction. Not transformation—it’s termination. The software industry as we know it is ending, and what replaces it will employ a fraction of the people with a concentration of power that would make the robber barons blush.

Schumpeter said creative destruction was the “essential fact about capitalism.” If he saw AI’s version, he might reconsider whether capitalism, as we know it, can survive its own essential fact turned against itself.

—

Keywords: creative destruction, Schumpeter, AI disruption, software industry, SaaS collapse, job displacement, economic transformation, category extinction, AI replacement

The post Creative Destruction 2.0: AI Eating Software’s Lunch appeared first on FourWeekMBA.

The Jevons Paradox in AI

In 1865, economist William Stanley Jevons observed that more efficient coal engines didn’t reduce coal consumption—they exploded it. More efficient technology made coal cheaper to use, opening new applications and ultimately increasing total consumption. Today’s AI follows the same paradox: every efficiency improvement—smaller models, faster inference, cheaper compute—doesn’t reduce resource consumption. It exponentially increases it. GPT-4 to GPT-4o made AI 100x cheaper, and usage went up 1000x. This is Jevons Paradox in hyperdrive.

Understanding Jevons Paradox

The Original Observation

Jevons’ 1865 “The Coal Question” documented:

Steam engines became 10x more efficient
Coal use should have dropped 90%
Instead, coal consumption increased 10x
Efficiency enabled new use cases
Total resource use exploded

The efficiency improvement was the problem, not the solution.

The Mechanism

Jevons Paradox occurs through:

Efficiency Gain: Technology uses less resource per unit
Cost Reduction: Lower resource use means lower cost
Demand Elasticity: Lower cost dramatically increases demand
New Applications: Previously impossible uses become viable
Total Increase: Aggregate consumption exceeds savings

When the price elasticity of demand is greater than 1, demand grows faster than the per-unit savings and total consumption increases.
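The mechanism can be sketched numerically. The example below assumes a constant-elasticity demand curve and assumes that cost per unit falls in direct proportion to the efficiency gain; both are simplifying assumptions, and `resource_consumption` is a hypothetical helper. In this formulation the tipping point is an elasticity of 1.

```python
def resource_consumption(efficiency_gain: float, elasticity: float) -> float:
    """Relative total resource use after an efficiency gain (baseline = 1.0).

    Assumes cost per unit falls in proportion to the efficiency gain and
    that demand responds with a constant price elasticity.
    """
    usage = efficiency_gain ** elasticity      # units demanded at the lower cost
    resource_per_unit = 1.0 / efficiency_gain  # each unit now needs less resource
    return usage * resource_per_unit


for elasticity in (0.5, 1.0, 2.0):
    total = resource_consumption(efficiency_gain=10.0, elasticity=elasticity)
    print(f"elasticity {elasticity}: total consumption x{total:.2f}")
```

With a 10x efficiency gain, an elasticity below 1 leaves total consumption lower than before, an elasticity of exactly 1 leaves it unchanged, and an elasticity of 2 multiplies it tenfold.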

The AI Efficiency Explosion

Model Efficiency Gains

GPT-3 to GPT-4o Timeline:

2020 GPT-3: $0.06 per 1K tokens
2022 GPT-3.5: $0.002 per 1K tokens (30x cheaper)
2023 GPT-4: $0.03 per 1K tokens (premium tier)
2024 GPT-4o: $0.0001 per 1K tokens (600x cheaper than GPT-3)

Efficiency Improvements:

Model compression: 10x smaller
Quantization: 4x faster
Distillation: 100x cheaper
Edge deployment: 1000x more accessible

The Consumption Response

For every 10x efficiency gain:

Usage increases 100-1000x
New use cases emerge
Previously impossible applications become viable
Total compute demand increases

OpenAI’s API calls grew 100x when prices dropped 10x.
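The figures quoted above imply a specific demand elasticity, which a short calculation can back out. This is a sketch under a constant-elasticity assumption; `implied_elasticity` is a hypothetical helper, and the inputs are the article's own numbers, not independently verified data.

```python
import math


def implied_elasticity(price_drop: float, usage_growth: float) -> float:
    """Elasticity e such that usage_growth == price_drop ** e."""
    return math.log(usage_growth) / math.log(price_drop)


# "API calls grew 100x when prices dropped 10x"
e_api = implied_elasticity(price_drop=10.0, usage_growth=100.0)

# "Usage increases 100-1000x" for every 10x efficiency gain
e_low = implied_elasticity(10.0, 100.0)
e_high = implied_elasticity(10.0, 1000.0)

# Total spend changes by usage growth divided by the price drop.
spend_growth = 100.0 / 10.0

print(f"Implied elasticity from the API example: {e_api:.1f}")
print(f"Implied elasticity range: {e_low:.1f} to {e_high:.1f}")
print(f"Total spend multiplier: {spend_growth:.0f}x")
```

An elasticity well above 1 is what separates a Jevons-style outcome from ordinary cost savings: usage grows faster than the price falls, so total spend and total compute both rise.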

Real-World Manifestations

The ChatGPT Explosion

November 2022: ChatGPT launches

More efficient interface than API
Easier access than previous models
Result: 100M users in 2 months

Did efficiency reduce AI compute use?

No. It increased global AI compute demand 1000x.

The Copilot Cascade

GitHub Copilot made coding AI efficient:

Before: $1000s for AI coding tools
After: $10/month
Result: Millions of developers using AI
Total compute: Increased 10,000x

Efficiency didn’t save resources; it created massive new demand.

The Image Generation Boom

Progression:

DALL-E 2: $0.02 per image
Stable Diffusion: $0.002 per image
Local models: $0.0001 per image

Result:

Daily AI images generated: 100M+
Total compute used: 1000x increase
Energy consumption: Exponentially higher

Efficiency enabled explosion, not conservation.

The Recursive Acceleration

AI Improving AI

The paradox compounds recursively:

AI makes AI development more efficient
More efficient development creates better models
Better models have more use cases
More use cases drive more development
Cycle accelerates exponentially

Each efficiency gain accelerates the next demand explosion.

The Compound Effect

Traditional Technology: Linear efficiency gains

AI Technology: Exponential efficiency gains meeting exponential demand

```

Usage Growth = Efficiency Gain ^ Demand Elasticity

Resource Consumption Growth = Efficiency Gain ^ (Demand Elasticity - 1)

Where Demand Elasticity for AI ≈ 2-3

```

Result: Hyperbolic resource consumption growth.
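The relationship can be written out step by step. The derivation below is a sketch that assumes a constant price elasticity and a cost per unit that falls in proportion to the efficiency gain; the symbols $g$ (efficiency gain), $\varepsilon$ (demand elasticity), $Q$ (usage), $r$ (resource per unit) and $R$ (total resource consumption) are notation introduced here, not the article's.

```latex
\begin{align*}
  r' &= \frac{r}{g}
     && \text{each unit needs } g \text{ times less resource} \\
  Q' &= Q \, g^{\varepsilon}
     && \text{usage responds to the } g\text{-fold cost drop} \\
  R' &= Q' \, r' = Q \, r \, g^{\varepsilon - 1} = R \, g^{\varepsilon - 1}
     && \text{total resource consumption} \\[4pt]
  g = 10,\ \varepsilon = 2 &: \quad Q' = 100\,Q, \quad R' = 10\,R \\
  g = 10,\ \varepsilon = 3 &: \quad Q' = 1000\,Q, \quad R' = 100\,R
\end{align*}
```

Total consumption rises whenever $\varepsilon > 1$, and with an elasticity of 2 to 3 each 10x efficiency gain multiplies resource use by 10x to 100x.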

VTDF Analysis: Paradox Dynamics

Value Architecture

Efficiency Value: Lower cost per inference
Accessibility Value: More users can afford
Application Value: New use cases emerge
Total Value: Exponentially more value created and consumed

Technology Stack

Model Layer: Smaller, faster, cheaper
Infrastructure Layer: Must scale exponentially
Application Layer: Exploding diversity
Resource Layer: Unprecedented demand

Distribution Strategy

Democratization: Everyone can use AI
Ubiquity: AI in every application
Invisibility: Background AI everywhere
Saturation: Maximum possible usage

Financial Model

Unit Economics: Improving constantly
Total Costs: Increasing exponentially
Infrastructure Investment: Never enough
Resource Competition: Intensifying

The Five Stages of AI Jevons Paradox

Stage 1: Elite Tool (2020-2022)

GPT-3 costs prohibitive
Limited to researchers and enterprises
Total compute: Manageable
Energy use: Data center scale

Stage 2: Professional Tool (2023)

ChatGPT/GPT-4 accessible
Millions of professionals using
Total compute: 100x increase
Energy use: Small city scale

Stage 3: Consumer Product (2024-2025)

AI in every app
Billions of users
Total compute: 10,000x increase
Energy use: Major city scale

Stage 4: Ambient Intelligence (2026-2027)

AI in every interaction
Trillions of inferences daily
Total compute: 1,000,000x increase
Energy use: Small country scale

Stage 5: Ubiquitous Substrate (2028+)

AI as basic utility
Infinite demand
Total compute: Unbounded
Energy use: Civilization-scale challenge

The Energy Crisis Ahead

Current Trajectory

2024 AI Energy Consumption:

Training: ~1 TWh/year
Inference: ~10 TWh/year
Total: ~11 TWh (roughly a tenth of Argentina’s annual electricity consumption)

2030 Projection (with efficiency gains):

Training: ~10 TWh/year
Inference: ~1000 TWh/year
Total: ~1010 TWh (Japan’s consumption)

Efficiency makes the problem worse, not better.
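To see what the 2024 and 2030 figures imply year over year, the sketch below computes the compound annual growth rate between them. The inputs are the article's estimates, not measured data, and `implied_annual_growth` is a hypothetical helper.

```python
def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` in `years`."""
    return (end / start) ** (1 / years) - 1


# Figures quoted above, in TWh per year.
total_2024 = 1 + 10      # training + inference
total_2030 = 10 + 1000   # training + inference

growth = implied_annual_growth(total_2024, total_2030, years=2030 - 2024)
inference_share_2030 = 1000 / total_2030

print(f"Implied growth in AI energy use: {growth:.0%} per year")
print(f"Inference share of 2030 total: {inference_share_2030:.0%}")
```

On these numbers, total energy use would have to more than double every year for six years, with inference accounting for nearly all of the 2030 total.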

The Physical Limits

Even with efficiency gains:

Power grid capacity: Insufficient
Renewable generation: Can’t scale fast enough
Nuclear requirements: Decades to build
Cooling water: Becoming scarce
Rare earth materials: Supply constrained

We’re efficiency-gaining ourselves into a resource crisis.

The Economic Implications

The Infrastructure Tax

Every efficiency gain requires:

More data centers (not fewer)
More GPUs (not fewer)
More network capacity
More energy generation
More cooling systems

Efficiency doesn’t reduce infrastructure; it explodes requirements.

The Competition Trap

Companies must match efficiency or die:

Competitor gets 10x more efficient
They can serve 100x more users
You must match or lose market
Everyone invests in infrastructure
Total capacity increases 1000x

The efficiency race is an infrastructure race in disguise.

The Pricing Death Spiral

As AI becomes more efficient: