Kindle Notes & Highlights

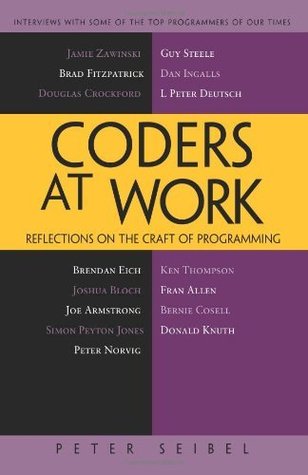

by Peter Seibel

Read between April 2 and May 14, 2020

You've got the second-system problem where people who've had some success are given a blank slate and allowed to do whatever they want. Generally, they will fail because they'll be too ambitious, they won't understand the limits. And you get nothing out of that. You have to have extreme discipline to say, “It's not a blank slate; it's reimplementing what we had here; it's doing what we knew.”

Because we were so smart and we had so much experience. We had it wired. Couldn't miss. Programmers are optimistic. And we have to be because if we weren't optimists we couldn't do this work. Which is why we fall prey to things like second systems, why we can't schedule our projects, why this stuff is so hard.

Seibel: To read Knuth, it seems to me, you have to be able to read the math and understand it. To what extent do you think having that kind of mathematical training is necessary to be a programmer? Crockford: Obviously it's not, because most of them don't have it. In the sorts of applications that I'm working on, we don't see that much application of the particular tools that Knuth gives us. If we were writing operating systems or writing runtimes, it'd be much more critical. But we're doing form validations and UIs. Generally performance is not that important in the things that we do. We…

Seibel: When you're hiring programmers, how do you recognize the good ones? Crockford: The approach I've taken now is to do a code reading. I invite the candidate to bring in a piece of code he's really proud of and walk us through it.

I have this big allergy to ivory-tower design and design patterns. Peter Norvig, when he was at Harlequin, he did this paper about how design patterns are really just flaws in your programming language. Get a better programming language. He's absolutely right. Worshipping patterns and thinking about, “Oh, I'll use the X pattern.”
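
One common illustration of that argument (not necessarily an example taken from Norvig's paper): in a language with first-class functions, the Strategy pattern shrinks to passing a function as an argument. A minimal Python sketch; `checkout`, `no_discount`, and `ten_percent_off` are hypothetical names.

```python
from typing import Callable

# Each "strategy" is just a function: no Strategy interface, no strategy classes.
def no_discount(total: float) -> float:
    return total

def ten_percent_off(total: float) -> float:
    return total * 0.9

def checkout(total: float, discount: Callable[[float], float] = no_discount) -> float:
    # The caller chooses the behavior by handing in a function value.
    return round(discount(total), 2)

print(checkout(50.0))                   # 50.0
print(checkout(50.0, ten_percent_off))  # 45.0
print(checkout(50.0, lambda t: t - 5))  # 45.0
```

The last call shows an ad hoc strategy written inline as a lambda, something that would need a new class in a language without first-class functions.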

As they say, it's easier to optimize correct code than to correct optimized code.

But there's a discrete and a continuous way to get your mind around infinity. I think that for a programmer it's more important to have mastered the discrete way. For example, I just mentioned induction proofs. You can prove something true for all integers. It's kind of magical. You prove it for one integer and you prove that one implies the next and then you've proved it for all of them. And I think that is more important for a programmer than, let's say, understanding the notion of limits.
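
The discrete principle described above can be written as a single inference rule. The worked instance below (the sum of the first n integers) is a standard textbook example added for illustration. For programmers, the same shape is what justifies a loop invariant: show it holds before the first iteration, and show that each iteration preserves it.

```latex
\frac{P(0) \qquad \forall n \in \mathbb{N}.\; \bigl(P(n) \Rightarrow P(n+1)\bigr)}{\forall n \in \mathbb{N}.\; P(n)}

\text{Example: } P(n) \equiv \sum_{i=0}^{n} i = \frac{n(n+1)}{2}

\text{Base: } \sum_{i=0}^{0} i = 0 = \frac{0 \cdot 1}{2}
\qquad
\text{Step: } \sum_{i=0}^{n+1} i = \frac{n(n+1)}{2} + (n+1) = \frac{(n+1)(n+2)}{2}
```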

As your confidence in the API increases, then you flesh it out. But the fundamental rule is, write the code that uses the API before you write the code that implements it.

In a sense, what I'm talking about is test-first programming and refactoring applied to APIs. How do you test an API? You write use cases to it before you've implemented it. Although I can't run them, I am doing test-first programming: I'm testing the quality of the API, when I code up the use cases to see whether the API is up to the task.
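
A minimal Python sketch of "write the code that uses the API before the code that implements it," assuming a hypothetical key-value store API (`KeyValueStore`, `put`, and `get` are invented for illustration). The interface is declared as a `typing.Protocol`, the use cases are written against it, and only then is a trivial implementation added so the use cases can actually run.

```python
from typing import Optional, Protocol

# Step 1: sketch the API as an interface only (hypothetical example API).
class KeyValueStore(Protocol):
    def put(self, key: str, value: str) -> None: ...
    def get(self, key: str) -> Optional[str]: ...

# Step 2: write use cases against the interface before any implementation.
# Awkward names or missing operations tend to show up right here.
def remember_last_user(store: KeyValueStore, user: str) -> None:
    store.put("last_user", user)

def greeting(store: KeyValueStore) -> str:
    user = store.get("last_user")
    return f"Welcome back, {user}!" if user is not None else "Hello, stranger!"

# Step 3: only once the use cases read well, write an implementation.
class InMemoryStore:
    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        self._data[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

store = InMemoryStore()
print(greeting(store))  # Hello, stranger!
remember_last_user(store, "ada")
print(greeting(store))  # Welcome back, ada!
```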

Here's one I learned when I worked in transaction systems. When you try to do automatic locking or optimistic concurrency control based merely on reading and writing at the byte level, you end up with “false contention” between threads: you have physical conflicts that don't correspond to logical conflicts. If you're forced to think about what locks to acquire, you can do your best to ensure that you don't acquire any locks beyond what is required to enforce logical conflicts.
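
A minimal Python sketch of acquiring only the locks that correspond to logical conflicts, assuming a hypothetical bank-transfer workload (`Bank` and `transfer` are invented names). Each transfer locks just the two accounts it touches, so transfers between unrelated accounts never contend. This uses explicit, pessimistic locking; the fixed lock order is a standard deadlock-avoidance convention added here, not something the passage prescribes.

```python
import threading

class Bank:
    """Hypothetical example: one lock per account, the unit of logical conflict."""

    def __init__(self, balances: dict[str, int]) -> None:
        self.balances = dict(balances)
        self._locks = {name: threading.Lock() for name in balances}

    def transfer(self, src: str, dst: str, amount: int) -> None:
        if src == dst:
            raise ValueError("source and destination must differ")
        # Lock exactly the two accounts this transfer logically touches,
        # always in sorted order so that two transfers cannot deadlock.
        first, second = sorted((src, dst))
        with self._locks[first], self._locks[second]:
            if self.balances[src] < amount:
                raise ValueError("insufficient funds")
            self.balances[src] -= amount
            self.balances[dst] += amount

bank = Bank({"a": 100, "b": 0, "c": 100, "d": 0})
# Transfers a->b and c->d share no locks, so they never wait on each other.
threads = [threading.Thread(target=bank.transfer, args=("a", "b", 1)) for _ in range(50)]
threads += [threading.Thread(target=bank.transfer, args=("c", "d", 1)) for _ in range(50)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(bank.balances)  # {'a': 50, 'b': 50, 'c': 50, 'd': 50}
```

A single table-wide lock would also be correct, but it would make the a->b and c->d transfers wait on each other even though they never conflict logically.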

I don't like working in total isolation. When I'm writing a program and I come to a tricky design decision, I just have to bounce it off someone else. At every place I've worked, I've had one or more colleagues I could bounce ideas off of. That's critically important for me; I need that feedback.

The funny thing is, thinking back, I don't think all these modern gizmos actually make you any more productive. Hierarchical file systems—how do they make you more productive? Most of software development goes on in your head anyway. I think having worked with that simpler system imposes a kind of disciplined way of thinking. If you haven't got a directory system and you have to put all the files in one directory, you have to be fairly disciplined. If you haven't got a revision control system, you have to be fairly disciplined. Given that you apply that discipline to what you're doing it…

Armstrong: I think the lack of reusability comes in object-oriented languages, not in functional languages. Because the problem with object-oriented languages is they've got all this implicit environment that they carry around with them. You wanted a banana but what you got was a gorilla holding the banana and the entire jungle.

And another thing, very important for problem solving, is asking my colleagues, “How would you solve this?” It happens so many times that you go to them and you say, “I've been wondering about whether I should do it this way or that way. I've got to choose between A and B,” and you describe A and B to them and then halfway through that you go, “Yeah, B. Thank you, thank you very much.” You need this intelligent white board—if you just did it yourself on a white board there's no feedback. But a human being, you're explaining to them on the white board the alternative solutions and they join in…

Armstrong: Yeah, then all the bits fit together. But maybe I can't explain it to anybody. I just get a very strong feeling that if I start writing the program now it'll work. I don't really know what the solution is. It's like an egg. The chicken's ready to lay the egg. Now I'm ready to lay the egg.

One that's tricky is slight spelling errors in variable names. So I choose variable names that are very dissimilar, deliberately, so that error won't occur. If you've got a long variable like personName and you've got personNames with an “s” on the end, that's a list of person names, that will be something that my eye will tend to read what I thought it should have been. And so I'd have personName and then listOfPeople. And I do that deliberately because I know that my eye will see what I thought I'd written.

Armstrong: I'd write them in C or assembler or something. Or I might actually write them in a dialect of Erlang and then cross-compile the Erlang to C. Make a dialect—this kind of domain-specific language kind of idea. Or I might write Erlang programs which generate C programs rather than writing the C programs by hand. But the target language would be C or assembler or something. Whether I wrote them by hand or generated them would be the interesting question. I'm tending toward automatically generating C rather than writing it by hand because it's just easier.

It's a motivating force to implement something; I really recommend it. If you want to understand C, write a C compiler. If you want to understand Lisp, write a Lisp compiler or a Lisp interpreter. I've had people say, “Oh, wow, it's really difficult writing a compiler.” It's not. It's quite easy. There are a lot of little things you have to learn about, none of which is difficult. You have to know about data structures. You need to know about hash tables, you need to know about parsing. You need to know about code generation. You need to know about interpretation techniques. Each one of these…
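
To give a sense of scale for that claim, here is a tree-walking interpreter for integer arithmetic in Python: a tokenizer, a recursive-descent parser, and an evaluator. It is a toy sketch (no variables, so no hash tables, and no code generation), and `/` is assumed to mean floor division to keep the language integer-only.

```python
import re
from typing import Optional

def tokenize(src: str) -> list[str]:
    """Split source text into integer literals and the symbols + - * / ( )."""
    tokens = re.findall(r"\d+|\S", src)
    for tok in tokens:
        if not (tok.isdecimal() or tok in ("+", "-", "*", "/", "(", ")")):
            raise SyntaxError(f"unexpected character {tok!r}")
    return tokens

class Parser:
    """Recursive-descent parser for the grammar:
    expr   := term (("+" | "-") term)*
    term   := factor (("*" | "/") factor)*
    factor := NUMBER | "-" factor | "(" expr ")"
    Trees are tuples: ("num", n), ("neg", t), or (op, left, right)."""

    def __init__(self, tokens: list[str]) -> None:
        self.tokens = tokens
        self.pos = 0

    def peek(self) -> Optional[str]:
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def take(self) -> Optional[str]:
        tok = self.peek()
        self.pos += 1
        return tok

    def expr(self) -> tuple:
        node = self.term()
        while self.peek() in ("+", "-"):
            node = (self.take(), node, self.term())  # left-associative
        return node

    def term(self) -> tuple:
        node = self.factor()
        while self.peek() in ("*", "/"):
            node = (self.take(), node, self.factor())
        return node

    def factor(self) -> tuple:
        tok = self.take()
        if tok is None:
            raise SyntaxError("unexpected end of input")
        if tok.isdecimal():
            return ("num", int(tok))
        if tok == "-":
            return ("neg", self.factor())
        if tok == "(":
            node = self.expr()
            if self.take() != ")":
                raise SyntaxError("expected ')'")
            return node
        raise SyntaxError(f"unexpected token {tok!r}")

def evaluate(node: tuple) -> int:
    """Walk the tree and compute its value."""
    if node[0] == "num":
        return node[1]
    if node[0] == "neg":
        return -evaluate(node[1])
    op, left, right = node
    a, b = evaluate(left), evaluate(right)
    if op == "+":
        return a + b
    if op == "-":
        return a - b
    if op == "*":
        return a * b
    return a // b  # assumption: "/" is floor division (integers only)

def run(src: str) -> int:
    parser = Parser(tokenize(src))
    tree = parser.expr()
    if parser.peek() is not None:
        raise SyntaxError(f"unexpected token {parser.peek()!r}")
    return evaluate(tree)

print(run("2 * (3 + 4) - 5"))  # 9
```

Each stage is small and separately testable, which is roughly the point of the passage: none of the pieces is hard on its own.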

Seibel: What are the techniques that you use there? Print statements? Armstrong: Print statements. The great gods of programming said, “Thou shalt put printf statements in your program at the point where you think it's gone wrong, recompile, and run it.”

The code shows me what it does. It doesn't show me what it's supposed to do.

Peyton Jones: Well, nobody ever taught me to program. I'm not sure I ever really missed that. Today I feel as if my main programming blank spot is that I don't have a deep, visceral feel for object-oriented programming. Of course I know how to write object-oriented programs and all that. But something different happens when you do something at scale. When you build big programs that last for a long time and you use class hierarchies in a complex way and you build frameworks—that's what I mean by a deep, visceral understanding. Not the kind of stuff that you'd learn immediately from a book. I…

This was my main insight about small companies: to be an entrepreneur you need to get energy from stressful situations involving money, whereas my energy is sapped by stressful situations involving money. My boss was the managing director of this company. The worse things got, the more energetic he would be.

Was it just that it was a neat trick? In some ways I think neat tricks are very significant. Lazy evaluation was just so neat and you could do such remarkable different things that you wouldn't think were possible.
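
Python evaluates strictly, so it cannot show Haskell-style lazy evaluation directly, but generators give a similar demand-driven flavor: an infinite stream whose elements are computed only when someone asks for them. A minimal sketch (the `primes` function is a hypothetical example, not from the interview):

```python
from itertools import count, islice, takewhile
from typing import Iterator

def primes() -> Iterator[int]:
    """Conceptually infinite stream of primes; each is computed only on demand."""
    found: list[int] = []
    for n in count(2):
        # n is prime if no smaller prime p with p * p <= n divides it.
        if all(n % p for p in takewhile(lambda p: p * p <= n, found)):
            found.append(n)
            yield n

# Only the first ten primes are ever computed.
print(list(islice(primes(), 10)))  # [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
```

The consumer (`islice`) decides how much of the infinite definition gets evaluated, which is the kind of separation laziness makes routine in Haskell.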

Peyton Jones: I think probably the big changes in how I think about programming have been to do with monads and type systems. Compared to the early 80s, thinking about purely functional programming with relatively simple type systems, now I think about a mixture of purely functional, imperative, and concurrent programming mediated by monads.

Peyton Jones: I think my default is not to write something very general to begin with. So I try to make my programs as beautiful as I can but not necessarily as general as I can. There's a difference. I try to write code that will do the task at hand in a way that's as clear and perspicuous as I can make it. Only when I've found myself writing essentially the same code more than once, then I'll think, “Oh, let's just do it once, passing some extra arguments to parameterize it over the bits that are different between the two.”
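
A small Python sketch of that habit, with hypothetical names: write the specific code first, and only when nearly the same code appears a second time, factor it into one function parameterized over the part that differs.

```python
# First, code for the task at hand (hypothetical example):
def total_price(items: list[dict[str, float]]) -> float:
    return sum(item["price"] for item in items)

# Later, essentially the same code is needed a second time:
def total_weight(items: list[dict[str, float]]) -> float:
    return sum(item["weight"] for item in items)

# Only now factor it out, parameterized over the bit that differs.
def total(items: list[dict[str, float]], field: str) -> float:
    return sum(item[field] for item in items)

cart = [{"price": 3.0, "weight": 2.0}, {"price": 4.0, "weight": 1.0}]
print(total(cart, "price"), total(cart, "weight"))  # 7.0 3.0
```

After the refactoring, `total_price` and `total_weight` can become one-line calls to `total` or disappear entirely; the generality arrives only once there is evidence it is needed.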

But if you ask me, is STM better than locks and condition variables? Now you're comparing like with like. Yes. I think it completely dominates locks and condition variables. So just forget locks and condition variables. For multiple program counters, multiple threads, diddling on shared memory on a shared-memory multicore: STM. But is that the only way to write concurrent programs? Absolutely not.

A sequential implementation of a double-ended queue is a first-year undergraduate programming problem. For a concurrent implementation with a lock per node, it's a research paper problem. That is too big a step. It's absurd for something to be so hard. With transactional memory it's an undergraduate problem again.
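
For reference, the "first-year" version: a sequential doubly linked deque in Python. The standard `collections.deque` already provides this; the hand-written class is only for comparison, and it is deliberately not thread-safe. The hard part of a lock-per-node concurrent version is already visible here: when the deque holds one or two elements, `pop_left` and `pop_right` touch the same nodes, so threads working at opposite ends can collide.

```python
from typing import Generic, Optional, TypeVar

T = TypeVar("T")

class _Node(Generic[T]):
    def __init__(self, value: T) -> None:
        self.value = value
        self.prev: Optional["_Node[T]"] = None
        self.next: Optional["_Node[T]"] = None

class Deque(Generic[T]):
    """Sequential doubly linked deque. NOT safe for concurrent use."""

    def __init__(self) -> None:
        self.head: Optional[_Node[T]] = None
        self.tail: Optional[_Node[T]] = None

    def push_left(self, value: T) -> None:
        node = _Node(value)
        node.next = self.head
        if self.head is None:
            self.tail = node  # empty deque: new node is both ends
        else:
            self.head.prev = node
        self.head = node

    def push_right(self, value: T) -> None:
        node = _Node(value)
        node.prev = self.tail
        if self.tail is None:
            self.head = node
        else:
            self.tail.next = node
        self.tail = node

    def pop_left(self) -> T:
        if self.head is None:
            raise IndexError("pop from empty deque")
        node = self.head
        self.head = node.next
        if self.head is None:
            self.tail = None  # removed the last element
        else:
            self.head.prev = None
        return node.value

    def pop_right(self) -> T:
        if self.tail is None:
            raise IndexError("pop from empty deque")
        node = self.tail
        self.tail = node.prev
        if self.tail is None:
            self.head = None
        else:
            self.tail.next = None
        return node.value

d: Deque[int] = Deque()
d.push_left(1)
d.push_right(2)
d.push_left(0)  # deque is now 0, 1, 2
print(d.pop_left(), d.pop_right(), d.pop_left())  # 0 2 1
```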

Seibel: What's your desert-island list of books for programmers? Peyton Jones: Well, you should definitely read Jon Bentley's Programming Pearls. Speaking of pearls, Brian Hayes has a lovely chapter in this book Beautiful Code entitled, “Writing Programs for ‘The Book’” where I think by “The Book” he means a program that will have eternal beauty. You've got two points and a third point and you have to find which side of the line between the two points this third point is on. And several solutions don't work very well. But then there's a very simple solution that just does it right. Of course, …
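
The standard simple answer to the problem described above, and plausibly the one meant, is the sign of a 2×2 determinant: the cross product of the vectors p→q and p→r. A minimal Python sketch (`orientation` is a hypothetical name). With integer coordinates the test is exact and needs no special cases for vertical lines or division by zero.

```python
Point = tuple[int, int]

def orientation(p: Point, q: Point, r: Point) -> int:
    """Side of the directed line p -> q on which r lies:
    1 = left (counterclockwise turn), -1 = right (clockwise), 0 = on the line."""
    # z-component of the cross product (q - p) x (r - p), which is
    # twice the signed area of triangle pqr.
    cross = (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])
    return (cross > 0) - (cross < 0)

print(orientation((0, 0), (1, 0), (0, 1)))   # 1
print(orientation((0, 0), (1, 0), (0, -1)))  # -1
print(orientation((0, 0), (1, 0), (2, 0)))   # 0
```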


I think the primary limitation on software is not the speed of computers but our ability to get our heads around what it's supposed to do.

Peyton Jones: For me, part of what makes programming fun is trying to write programs that have an intellectual integrity to them. You can go on slapping mud on the side of a program and it just kind of makes it work for a long time but it's not very satisfying. So I think a good attribute of a good programmer is they try to find a beautiful solution. Not everybody has the luxury of being able to not get the job done today because they can't think of a beautiful way to do it.

I sometimes feel a bit afraid about the commercial world with, on the one hand, the imperatives of getting it done because the customer needs it next week and, on the other hand, the sheer breadth rather than depth of the systems that we build.

Peyton Jones: Sometimes to say that it's obviously right doesn't mean that you can see that it's right without any mental scaffolding. It may be that you need to be told an insight to figure out why it's right. If you look at the code for an AVL tree, if you didn't know what it was trying to achieve, you really wouldn't have a clue why those rotations were taking place. But once you know the invariant that it's maintaining, you can see, ah, if we maintain that invariant then we'll get log lookup time. And then you look at each line of code and you say, “Ah, yes, it maintains the invariant.” So…
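
The point about invariants can be made concrete by writing the AVL invariant itself as code and checking a rotation against it. A minimal Python sketch with hypothetical helper names (`check_avl`, `rotate_right`); a real AVL tree caches heights in its nodes, whereas this checker recomputes them.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Node:
    key: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None

def check_avl(node: Optional[Node], lo: Optional[int] = None, hi: Optional[int] = None) -> int:
    """Return the subtree's height; raise ValueError if an AVL invariant fails:
    keys in search-tree order, and child heights differing by at most 1."""
    if node is None:
        return 0
    if (lo is not None and node.key <= lo) or (hi is not None and node.key >= hi):
        raise ValueError(f"search-tree order violated at key {node.key}")
    left_h = check_avl(node.left, lo, node.key)
    right_h = check_avl(node.right, node.key, hi)
    if abs(left_h - right_h) > 1:
        raise ValueError(f"unbalanced at key {node.key}")
    return 1 + max(left_h, right_h)

def rotate_right(y: Node) -> Node:
    """Single right rotation: y's left child x becomes the subtree root.
    The in-order key sequence is unchanged, so search-tree order is preserved."""
    x = y.left
    if x is None:
        raise ValueError("rotate_right needs a left child")
    y.left, x.right = x.right, y
    return x

# A left-leaning chain 3 -> 2 -> 1 breaks the balance invariant...
chain = Node(3, left=Node(2, left=Node(1)))
# ...and one right rotation restores it.
balanced = rotate_right(chain)
print(check_avl(balanced))  # 2
```

Read against `check_avl`, each line of `rotate_right` has an obvious job: keep the keys in order while moving height from the left side to the right.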

Seibel: And do you remember any particular aha! moments where you noticed the difference between working on something by yourself and working on a team? Norvig: I don't know if it was so much moments, but just this realization that you can't do everything yourself. I think a lot of programming is being able to keep as much as you can inside your head, but that only goes so far, at least in my head. Then you have to rely on other people to have the right abstractions so that you can use what they have. I started thinking about it in terms of, “How is this likely done?” rather than, “I know how…

Seibel: Are there any books that you think all programmers should read? Norvig: I think there are a lot of choices. I don't think there's only one path. You've got to read some algorithm book. You can't just pick these things out and paste them together. It could be Knuth, or it could be the Cormen, Leiserson, and Rivest. And there are others. Sally Goldman's here now. She has a new book out that's a more practical take on algorithms. I think that's pretty interesting. So you need one of those. You need something on the ideas of abstraction. I like Abelson and Sussman. There are others.

Norvig: I think one of the most important things is being able to keep everything in your head at once. If you can do that you have a much better chance of being successful. That makes a small program easier. For a bigger program, you need extra tools to be able to handle that.

Norvig: I see tests more as a way of correcting errors rather than as a way of design. This extreme approach of saying, “Well, the first thing you do is write a test that says I get the right answer at the end,” and then you run it and see that it fails, and then you say, “What do I need next?”—that doesn't seem like the right way to design something to me. It seems like only if it was so simple that the solution was preordained would that make sense. I think you have to think about it first. You have to say, “What are the pieces? How can I write tests for pieces until I know what some of them…

We were doing this shopping search and saying, “We want a test where on this query we want to get 80 percent right answers.” And so they're saying, “Right! So if it's a wrong answer it's a bug, right?” And I said, “No, it's OK to have one wrong answer as long as it's not 80 percent.” So they say, “So a wrong answer's not a bug?” It was like those were the only two possibilities. There wasn't an idea that it's more of a trade-off.
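
A minimal Python sketch of a test written as a quality threshold rather than a zero-defect rule. The evaluation data and the names `fraction_correct` and `test_shopping_search_quality` are hypothetical; only the 80 percent bar comes from the anecdote.

```python
def fraction_correct(results: list[tuple[str, str]]) -> float:
    """Share of (returned, expected) pairs where the system got it right."""
    return sum(got == want for got, want in results) / len(results)

def test_shopping_search_quality() -> None:
    # Hypothetical evaluation data: (result returned, result judged correct).
    results = [
        ("tv-123", "tv-123"),
        ("tv-456", "tv-456"),
        ("cam-9", "cam-7"),  # one wrong answer: not a bug by itself
        ("lens-1", "lens-1"),
        ("bag-2", "bag-2"),
    ]
    # The requirement is a quality bar over the whole set, not zero errors.
    assert fraction_correct(results) >= 0.8

test_shopping_search_quality()  # passes: 4 of 5 correct
```

The test fails only when overall quality drops below the bar, which encodes the trade-off instead of treating every wrong answer as a bug.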

On the fourth hand, one reason I don't like IDEs quite so much is that they can make it hard to know when you've actually seen everything. Walking around in a graph, it's hard to know you've touched all the parts. Whereas if you've got some linear order, it's guaranteed to take you through everything.

But I think if a program is well written, there will be something about its structure that will guide me to various parts of it in an order that will make some kind of sense. You know, it's not just what the program does—there's a story. There's a story about how the program is organized, there's a story about the context in which the program is expected to operate. And one would hope that there will be something about the program, whether it's block comments at the start of each routine or an overview document that comes separately or just choices of variable names that will somehow convey…

Seibel: Other than the possibility of implementing it at all, how do you decide whether your interfaces are good? Steele: I usually think about generality and orthogonality. Conformance to accepted ways of doing things. For example, you don't put the divisor before the dividend unless there's a really good reason for doing so because in mathematics we're used to doing it the other way around. So you think about conventional ways of doing things. I've done enough designs that I think about ways I've done it before and whether they were good or bad. I'm also designing relative to some related…

On the other hand, it's really hard to make a language that's great at everything, in part just because there are only so many concise notations to go around. There's this Huffman encoding problem. If you make something concise, something is going to have to be more verbose as a consequence. So in designing a language, one of the things you think about is, “What are the things I want to make very easy to say and very easy to get right?” But with the understanding that, having used up characters or symbols for that purpose, you're going to have made something else a little bit harder to say.
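
Huffman coding makes the analogy concrete. In a prefix-free code, the codeword lengths must satisfy Kraft's inequality (2^-length summed over all symbols is at most 1), so giving one symbol a shorter code forces others to get longer. A minimal Python sketch using the standard `heapq` module; `huffman_code_lengths` is a hypothetical name, and the frequencies are a common textbook example used only for illustration.

```python
import heapq

def huffman_code_lengths(freqs: dict[str, int]) -> dict[str, int]:
    """Codeword length (in bits) that Huffman coding assigns to each symbol."""
    # Heap entries: (total weight, unique tie-breaker, {symbol: depth so far}).
    # The tie-breaker keeps heapq from ever comparing the dicts.
    heap = [(w, i, {s: 0}) for i, (s, w) in enumerate(freqs.items())]
    heapq.heapify(heap)
    if len(heap) == 1:
        return {s: 1 for s in freqs}
    tie = len(heap)
    while len(heap) > 1:
        # Merge the two lightest subtrees; every symbol in them gets one bit longer.
        w1, _, a = heapq.heappop(heap)
        w2, _, b = heapq.heappop(heap)
        merged = {s: d + 1 for s, d in {**a, **b}.items()}
        heapq.heappush(heap, (w1 + w2, tie, merged))
        tie += 1
    return heap[0][2]

lengths = huffman_code_lengths({"a": 45, "b": 13, "c": 12, "d": 16, "e": 9, "f": 5})
print(lengths["a"], lengths["f"])  # 1 4
```

The most frequent symbol gets a 1-bit code, and the cost is paid by the rare symbols, which need 4 bits: the same "budget of concise notations" a language designer spends.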

Seibel: One way to resolve that is the way Lisp does—make everything uniformly semiconcise. Where the uniformity has the advantage of allowing users of the language to easily add their own equally uniform, semiconcise, first-class syntactic extensions.

Something I worry about a lot when I write, that I'm less worried about with a computer, is about the ways in which English is ambiguous. I'm constantly worrying about ways in which the reader might misinterpret what I've written. So I've actually spent a lot of time consciously crafting the mechanics of my prose style to use constructions that are less likely to be misinterpreted.

Seibel: Yet lots of people have tried to come up with languages or programming systems that will allow “nonprogrammers” to program. I take it you think that might be a doomed enterprise—the problem about programming is not that we haven't found the right syntax for it but that people have to learn this unnatural act. Steele: Yeah. And I think that the other problem is that people like to focus on the main thing they have in mind and not worry about the edge cases or the screw cases or things that are unlikely to happen. And yet it is precisely in those cases where people are most likely to…

Steele: So I guess there's lessons there—the lesson I should have drawn is there may be more than one bug here and I should have looked harder the first time. But another lesson is that if a bug is thought to be rare, then looking at rarely executed paths may be fruitful. And a third thing is, having good documentation about what the algorithm is trying to do, namely a reference back to Knuth, was just great.

Deutsch: Absolutely. Yes. Programs built in Python and Java—once you get past a certain fairly small scale—have all the same problems except for storage corruption that you have in C or C++. The essence of the problem is that there is no linguistic mechanism for understanding or stating or controlling or reasoning about patterns of information sharing and information access in the system. Passing a pointer and storing a pointer are localized operations, but their consequences are to implicitly create this graph. I'm not even going to talk about multithreaded applications—even in…
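
A small Python illustration of that point, using hypothetical `SharingOrder` and `CopyingOrder` classes. Storing a reference is a one-line, local-looking operation, but it adds an edge to the sharing graph that nothing in the language records. The defensive copy avoids this particular edge, but it is only a convention the programmer has to remember, not a mechanism for reasoning about sharing.

```python
class SharingOrder:
    def __init__(self, items: list[str]) -> None:
        self.items = items  # stores the caller's list: now two owners

class CopyingOrder:
    def __init__(self, items: list[str]) -> None:
        self.items = list(items)  # defensive copy: this object owns its data

cart = ["book"]
shared = SharingOrder(cart)
copied = CopyingOrder(cart)
cart.append("lamp")   # a local-looking edit by the caller...
print(shared.items)   # ['book', 'lamp']  ...silently changes the shared order
print(copied.items)   # ['book']
```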