Lorin Hochstein's Blog, page 12

February 26, 2023

You can’t see what you don’t have a frame of reference for

In my recent talk at the LFI conference, I referenced this quote from Karl Weick:

I was reminded of that quote when reading a piece by the journalist Yair Rosenberg:

Last week, two Jews were shot as they left morning prayers in Los Angeles. And yet, the twin attacks received little national attention, which is why you're probably hearing about them for the first time. I wrote about the antisemitism we don't discuss: https://t.co/OMzMEKfPvC

— Yair Rosenberg (@Yair_Rosenberg) February 23, 2023

Rosenberg notes that this particular story hasn’t gotten much widespread press, and his theory is that the attacks, as well as other uncovered antisemitic events, don’t fit neatly into the established narrative frames of reference.

"When Americans do not have a convenient partisan frame through which to process an anti-Semitic act, it is often met with silence or soon dropped from the agenda." https://t.co/OMzMEKfPvC

— Yair Rosenberg (@Yair_Rosenberg) February 23, 2023

Such is the problem with events that don’t fit neatly into a bucket: we can’t discuss them effectively because they don’t fit into one of our pre-existing categories.

February 23, 2023

My talk from the LFI conference

My talk from the recent Learning from Incidents in Software conference is now up.

Unfortunately, the first few minutes were lost due to technical issues. You’ll just have to take my word for it that the missing part of my talk was truly astounding, a veritable tour de force.

My slides are also available for download.

February 19, 2023

Good category, bad category (or: tag, don’t bucket)

The baby, assailed by eyes, ears, nose, skin, and entrails at once, feels it all as one great blooming, buzzing confusion; and to the very end of life, our location of all things in one space is due to the fact that the original extents or bignesses of all the sensations which came to our notice at once, coalesced together into one and the same space.

William James, The Principles of Psychology (1890)

I recently gave a talk at the Learning from Incidents in Software conference. On the one hand, I mentioned the importance of finding patterns in incidents:

But I also had some… unkind words about categorizing incidents.

We humans need categories to make sense of the world, to slice it up into chunks that we can handle cognitively. Otherwise, the world would just be, as James put it in the quote above, one great blooming, buzzing confusion. So, categorization is essential to humans functioning in the world. In particular, if we want to identify meaningful patterns in incidents, we need to do categorization work.

But there are different techniques we can use to categorize incidents, and some techniques are more productive than others.

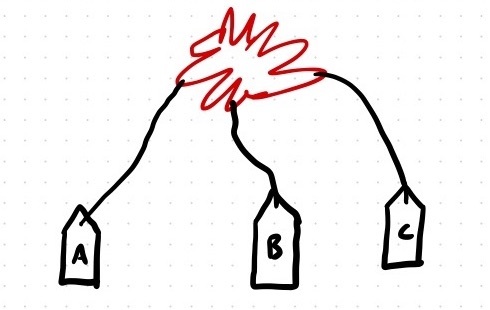

The buckets approach

An incident must be placed in exactly one bucket

One technique is what I’ll call the buckets approach of categorization. That’s when you define a set of categories up front, and then you assign each incident that happens to exactly one bucket. For example, you might have categories such as:

- bug (new)
- bug (latent)
- deployment problem
- third party

I have seen two issues with the bucketing approach. The first issue is that I’ve never actually seen it yield any additional insight. It can’t provide insights into new patterns because the patterns have already been predefined as the buckets. The best it can do is give you one type of filter to drill down and look at some more issues in more detail. There’s some genuine value in giving you a subset of related incidents to look more closely at, but in practice, I’ve rarely seen anybody actually do the harder work of looking at these subsets.

The second issue is that incidents, being messy things, often don’t fall cleanly into exactly one bucket. Sometimes they fall into multiple, and sometimes they don’t fall into any, and sometimes it’s just really unclear. For example, an issue may involve both a new bug and a deployment problem (as anyone who has accidentally deployed a bug to production and then gotten into even more trouble when trying to roll things back can tell you). The bucket approach forces you to discard information that is potentially useful in identifying patterns by requiring you to put the incident into exactly one bucket. This inevitably leads to arguments about whether an incident should be classified into bucket A or bucket B. This sort of argument is a symptom that this approach is throwing away useful information, and that it really shouldn’t go into a single bucket at all.

The tags approach

You may hang multiple tags on an incident

Another technique is what I’ll call the tags method of categorization. With the tags method, you annotate an incident with zero, one, or multiple tags. The idea behind tagging is that you want to let the details of the incident help you come up with meaningful categories. As incidents happen, you may come up with entirely new categories, or coalesce existing ones into one, or split them apart. Tags also let you examine incidents across multiple dimensions. Perhaps you’re interested in attributes of the people that are responding (maybe there’s a “hero” tag if there’s a frequent hero who comes in to many incidents), or maybe there’s production pressure related to some new feature being developed (in which case, you may want to label with both production-pressure and feature-name), or maybe it’s related to migration work (migration). Well, there are many different dimensions. Here are some examples of potential tags:

- query-scope-accidentally-too-broad
- people-with-relevant-context-out-of-office
- unforeseen-performance-impact

Those example tags may seem weirdly specific, but that’s OK! The tags might be very high level (e.g., production-pressure) or very low level (e.g., pipeline-stopped-in-the-middle), or anywhere in between.

Top-down vs circular

The bucket approach is strictly top-down: you enforce a categorization on incidents from the top. The tags approach is a mix of top-down and bottom-up. When you start tagging, you’ll always start with some prior model of the types of tags that you think are useful. As you go through the details of incidents, new ideas for tags will emerge, and you’ll end up revising your set of tags over time. Someone might revisit the writeup for an incident that happened years ago, and add a new tag to it. This process of tagging incidents and identifying potential new tag categories will help you identify interesting patterns.

The tag-based approach is messier than the bucket-based one, because your collection of tags may be very heterogeneous, and you’ll still encounter situations where it’s not clear whether a tag applies or not. But it will yield a lot more insight.
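To make the contrast concrete, here is a minimal Python sketch of the two data models. Everything in it is hypothetical and made up for illustration: the `Incident` class, the three example incidents, and the helper functions are assumptions, not a description of any real incident tracker. The point is only that a bucket is a single value drawn from a fixed set, while tags are an open-ended set that can be queried along several dimensions.

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import Enum


class Bucket(Enum):
    # Buckets: a fixed list of categories, defined up front.
    BUG_NEW = "bug (new)"
    BUG_LATENT = "bug (latent)"
    DEPLOYMENT_PROBLEM = "deployment problem"
    THIRD_PARTY = "third party"


@dataclass
class Incident:
    title: str
    bucket: Bucket  # exactly one bucket per incident
    tags: set[str] = field(default_factory=set)  # zero, one, or many free-form tags


# Hypothetical incidents, invented for this example.
incidents = [
    Incident(
        "Bad rollback after buggy deploy",
        Bucket.DEPLOYMENT_PROBLEM,  # forced choice: the new bug is lost here
        {"bug-new", "deployment-problem", "production-pressure"},
    ),
    Incident(
        "Cleanup job deleted too much",
        Bucket.BUG_LATENT,
        {"query-scope-accidentally-too-broad", "migration"},
    ),
    Incident(
        "Slow recovery over the holidays",
        Bucket.THIRD_PARTY,
        {"people-with-relevant-context-out-of-office", "production-pressure"},
    ),
]


def bucket_counts():
    """Count incidents per bucket: the only view the bucket model offers."""
    return Counter(i.bucket for i in incidents)


def tag_counts():
    """Count incidents per tag; an incident contributes to every tag it carries."""
    return Counter(tag for i in incidents for tag in i.tags)


def with_tag(tag):
    """Titles of incidents carrying a given tag, in insertion order."""
    return [i.title for i in incidents if tag in i.tags]


print(with_tag("production-pressure"))
```

Note that the first incident involves both a new bug and a deployment problem. The bucket field can record only one of those, while the tag set keeps both, plus the production pressure that cuts across otherwise unrelated incidents. Adding a new tag to an old incident later is just `incident.tags.add(...)`; adding a new bucket means revisiting the whole scheme.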

February 18, 2023

The value of social science research

One of the benefits of basic scientific research is the potential for bringing about future breakthroughs. Fundamental research in the physical and biological sciences might one day lead to things like new sources of power, radically better construction materials, remarkable new medical treatments. Social scientific research holds no such promise. Work done in the social sciences is never going to yield, say, a new vaccine, or something akin to a transistor.

The statistician (and social science researcher) Andrew Gelman goes so far as to say, literally, that the social sciences are useless. But I’m being unfair to Gelman by quoting him selectively. He actually advocates for social science, not against it. Gelman argues that good social science is important, because we can’t actually avoid social science, and if we don’t have good social science research then we will be governed by bad social “science”. The full title of his blog post is The social sciences are useless. So why do we study them? Here’s a good reason.

We study the social sciences because they help us understand the social world and because, whatever we do, people will engage in social-science reasoning.

Andrew Gelman

I was reminded of this at the recent Learning from Incidents in Software conference when listening to a talk by Dr. Ivan Pupulidy titled Moving Gracefully from Compliance to Learning, the Beginning of Forest Service’s Learning Journey. Pupulidy is a safety researcher who worked at the U.S. Forest Service and is now a professor at University of Alabama, Birmingham.

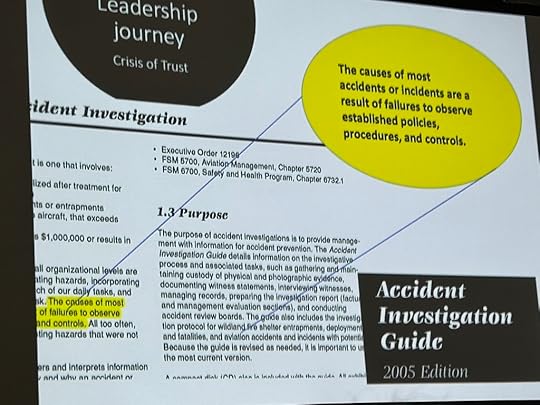

In particular, it was this slide from Pupulidy’s talk that struck me right between the eyes.

Thanks to work done by Ivan Pupulidy, the Forest Service doesn’t look at incidents this way anymore

The text in yellow captures what you might call the “old view” of safety, where accidents are caused by people not following the rules properly. It is a great example of what I would call bad social science, which invariably leads to bad outcomes.

The dangers of bad social science have been known for a while. Here’s the economist John Maynard Keynes:

The ideas of economists and political philosophers, both when they are right and when they are wrong, are more powerful than is commonly understood. Indeed the world is ruled by little else. Practical men, who believe themselves to be quite exempt from any intellectual influence, are usually the slaves of some defunct economist.

John Maynard Keynes, The General Theory of Employment, Interest and Money (February 1936)

Earlier and more succinctly, here’s the journalist H.L. Mencken:

Explanations exist; they have existed for all time; there is always a well-known solution to every human problem — neat, plausible, and wrong.

H.L. Mencken, “The Divine Afflatus” in New York Evening Mail (16 November 1917)

As Mencken notes, we can’t get away from explanations. But if we do good social science research, we can get better explanations. These explanations often won’t be neat, and may not even be plausible on first glance. But they at least give us a fighting chance at not being (completely) wrong about the social world.

February 12, 2023

The high cost of low ambiguity

Natural language is often frustratingly ambiguous.

Homonyms are ambiguous at the individual word level

There are scenarios where ambiguity is useful. For example, in diplomacy, the ambiguity of a statement can provide wiggle room so that the parties can both claim that they agree on a statement, while simultaneously disagreeing on what the statement actually means. (Mind you, this difference in interpretation might lead to problems later on).

Surprisingly, ambiguity can also be useful in engineering work, specifically around design artifacts. The sociologist Kathryn Henderson has done some really interesting research on how these ambiguous boundary objects are able to bridge participants from different engineering domains to work together, where each participant has a different, domain-specific interpretation of the artifact. For more on this, check out her paper Flexible Sketches and Inflexible Data Bases: Visual Communication, Conscription Devices, and Boundary Objects in Design Engineering and her book On Line and On Paper.

Humorists and poets have also made good use of the ambiguity of natural language. Here’s a classic example from Groucho Marx:

Groucho Marx was a virtuoso in using ambiguous wordplay to humorous effect

Despite these advantages, ambiguity in communication hurts more often than it helps. Anyone who has coordinated over Slack during an incident has felt the pain of the ambiguity of Slack messages. There’s the famous $5 million missing comma. As John Gall, the author of Systemantics, reminds us, ambiguity is often the enemy of effective communication.

This type of ambiguity is rarely helpful

THE MESSAGE SENT IS NOT NECESSARILY THE MESSAGE RECEIVED.

— Systemantics Quotes (@SysQuotes) February 11, 2022

Given the harmful nature of ambiguity in communication, the question arises: why is human communication ambiguous?

One theory, which I find convincing, is that communication is ambiguous because it reduces overall communication costs. In particular, it’s much cheaper for the speaker to make utterances that are ambiguous than to speak in a fully unambiguous way. For more details on this theory, check out the paper The communicative function of ambiguity in language by Piantadosi, Tily, and Gibson.

Note that there’s a tradeoff: easier for the speaker is more difficult for the listener, because the listener has to do the work of resolving the ambiguity based on the context. I think people pine for fully unambiguous forms of communication because they have experienced firsthand the costs of ambiguity as listeners but haven’t experienced what the cost to the speaker would be of fully unambiguous communication.

Even in mathematics, a field which we think of as being the most formal and hence unambiguous, mathematical proofs themselves are, in some sense, not completely formal. This has led to amusing responses from mathematically inclined computer scientists who think that mathematicians are doing proofs in an insufficiently formal way, like Leslie Lamport’s How to Write a Proof and Guy Steele’s It’s Time for a New Old Language.

My claim is that the reason you don’t see mathematical proofs written in a formal-in-the-machine-checkable sense is that mathematicians have collectively come to the conclusion that it isn’t worth the effort.

The role of cost-to-the-speaker vs cost-to-the-listener in language ambiguity is a great example of the nature of negotiated boundaries. We are constantly negotiating boundaries with other people, and those negotiations are generally of the form “we both agree that this joint activity would benefit both of us, but we need to resolve how we are going to distribute the costs amongst us.” Human evolution has worked out how to distribute the costs of verbal communication between speaker and listener, and the solution that evolution hit upon involves ambiguity in natural language.

January 21, 2023

Why you can’t buy an observability solution off the shelf

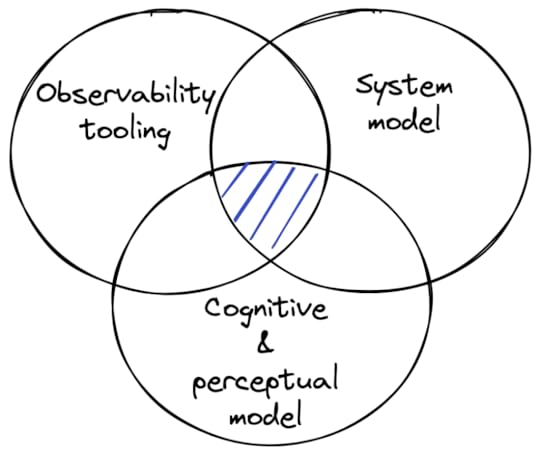

An effective observability solution is one that helps an operator quickly answer the question “Why is my system behaving this way?” This is a difficult problem to solve in the general case because it requires the successful combination of three very different things.

Most talk about observability is around vendor tooling. I’m using observability tooling here to refer to the software that you use for collecting, querying, and visualizing signals. There are a lot of observability vendors these days (for example, check out Gartner’s APM and Observability category). But the tooling is only one part of the story: at best, it can provide you with a platform for building an observability solution. The quality of that solution is going to depend on how good the system model and the cognitive & perceptual model encoded in it are.

A good observability solution will have embedded within it a hierarchical model of your system. This means it needs to provide information about what the system is supposed to do (functional purpose), the subsystems that make up the system, all of the way down to the low-level details of the components (specific lines of code, CPU utilization on the boxes, etc).

As an example, imagine your org has built a custom CI/CD system that you want to observe. Your observability solution should give you feedback about whether the system is actually performing its intended functions: Is it building artifacts and publishing them to the artifact repository? Is it promoting them across environments? Is it running verifications against them?

Now, imagine that there’s a problem with the verifications: it’s starting them but isn’t detecting that they’re complete. The observability solution should enable you to move from that function (verifications) to the subsystem that implements it. That subsystem is likely to include both application logic and external components (e.g., Temporal, MySQL). Your observability solution should have encoded within it the relationships between the functional aspects and the relevant implementations to support the work of moving up and down the abstraction hierarchy while doing the diagnostic work. Building this model can’t be outsourced to a vendor: ultimately, only the engineers who are familiar with the implementation details of the system can create this sort of hierarchical model.
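As a rough illustration of what “encoded relationships” could look like, here is a small Python sketch of an abstraction hierarchy for the CI/CD example. The `Node` class, the level names, the "verification subsystem" label, and the helper functions are all assumptions invented for this sketch; a real model would be far richer and would be wired up to actual signals. It only shows the shape of the idea: functions at the top, implementations at the bottom, and a way to move between them.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    level: str  # hypothetical levels: "purpose", "function", "subsystem", "component"
    children: list["Node"] = field(default_factory=list)

    def add(self, child):
        """Attach a child node and return it, so the tree can be built step by step."""
        self.children.append(child)
        return child


def find(node, name):
    """Depth-first search for a node by name; returns None if it is absent."""
    if node.name == name:
        return node
    for child in node.children:
        hit = find(child, name)
        if hit is not None:
            return hit
    return None


def components_under(node):
    """Move down the hierarchy: which low-level components implement this node?"""
    if node.level == "component":
        return [node.name]
    names = []
    for child in node.children:
        names.extend(components_under(child))
    return names


def path_to(root, target, trail=()):
    """Move up the hierarchy: which functions does a given component serve?"""
    trail = trail + (root.name,)
    if root.name == target:
        return list(trail)
    for child in root.children:
        found = path_to(child, target, trail)
        if found:
            return found
    return None


# Build the (hypothetical) model of the custom CI/CD system.
cicd = Node("custom CI/CD system", "purpose")
cicd.add(Node("build and publish artifacts", "function"))
cicd.add(Node("promote across environments", "function"))
verify = cicd.add(Node("run verifications", "function"))
subsystem = verify.add(Node("verification subsystem", "subsystem"))
subsystem.add(Node("application logic", "component"))
subsystem.add(Node("Temporal", "component"))
subsystem.add(Node("MySQL", "component"))

print(components_under(find(cicd, "run verifications")))
print(path_to(cicd, "MySQL"))
```

The vendor can supply the storage and the query engine, but the tree itself, meaning which components sit under which function, is exactly the part that only the engineers who know the system can fill in.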

Good observability tooling and a good system model alone aren’t enough. You need to understand how operators do this sort of diagnostic work in order to build an interface that supports this sort of work well. That means you need a cognitive model of the operator to understand how the work gets done. You also need a perceptual model of how it is that humans effectively navigate through the world so you can leverage this model to build an interface that will enable operators to move fluidly across data sources from different systems. You need to build an operational solution with high visual momentum to avoid an operator needing to navigate through too many displays.

Every observability solution has an implicit system model and an implicit cognitive & perceptual model. However, while work on the abstraction hierarchy dates back to the 1980s, and visual momentum goes back to the 1990s, I’ve never seen this work, or the field of cognitive systems engineering in general, explicitly referenced. This work remains largely unknown in the tech world.

January 17, 2023

Does any of this sound familiar?

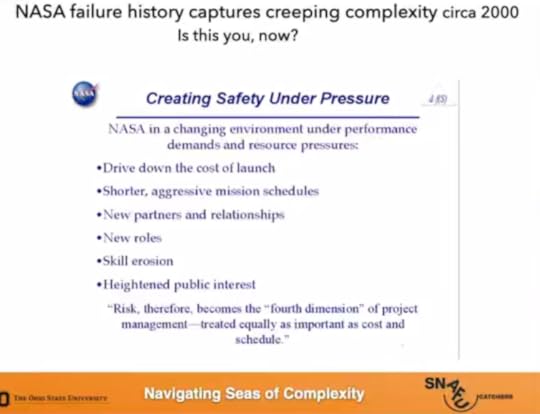

The other day, David Woods responded to one of my tweets with a link to a talk:

claim of root cause is ex. of component substitution fallacy. All incidents that threaten failure reveal component weaknesses due to finite resources & tradeoffs -> easy to miss the critical systemic/emergent factors see min 25 https://t.co/OsYy2U8fsA

— David Woods (@ddwoods2) January 16, 2023

I had planned to write a post on the component substitution fallacy that he referenced, but I didn’t even make it to minute 25 of that video before I heard something else that I had to post first, at the 7:54 mark. The context of it is describing the state of NASA as an organization at the time of the Space Shuttle Columbia accident.

And here’s what Woods says in the talk:

Now, does this describe your organization?

Are you in a changing environment under resource pressures and new performance demands?

Are you being pressured to drive down costs, work with shorter, more aggressive schedules?

Are you working with new partners and new relationships where there are new roles coming into play, often as new capabilities come to bear?

Do we see changing skillsets, skill erosion in some areas, new forms of expertise that are needed?

Is there heightened stakeholder interest?

Finally, he asks:

How are you navigating these seas of complexity that NASA confronted?

How are you doing with that?

January 15, 2023

A small mistake does not a complex systems failure make

I’ve been trying to take a break from Twitter lately, but today I popped back in, only to be trolled by a colleague of mine:

@norootcause, it looks like “they found the root cause” of that FAA outage https://t.co/dBQzaVnGPk

— Bertrand MT (@bertrandmt) January 15, 2023

Here’s a quote from the story:

The source of the problem was reportedly a single engineer who made a small mistake with a file transfer.

Here’s what I’d like you to ponder, dear reader. Think about all of the small mistakes that happen every single day, at every workplace, on the face of the planet. If a small mistake was sufficient to take down a complex system, then our systems would be crashing all of the time. And, yet, that clearly isn’t the case. For example, before this event, when was the last time the FAA suffered a catastrophic outage?

Now, it might be the case that no FAA employees have ever made a small mistake until now. Or, more likely, the FAA system works in such a way that small mistakes are not typically sufficient for taking down the entire system.

To understand this failure mode, you need to understand how it is that the FAA system is able to stay up and running on a day-to-day basis, despite the system being populated by fallible human beings who are capable of making small mistakes. You need to understand how the system actually works in order to make sense of how a large-scale failure can happen.

Now, I’ve never worked in the aviation industry, and consequently I don’t have domain knowledge about the FAA system. But I can tell you one thing: a small mistake with a file transfer is a hopelessly incomplete explanation for how the FAA system actually failed.

January 1, 2023

The Allspaw-Collins effect

While reading Laura Maguire’s PhD dissertation, Controlling the Costs of Coordination in Large-scale Distributed Software Systems, I came across a term I hadn’t heard before: the Allspaw-Collins effect:

An example of how practitioners circumvent the extensive costs inherent in the Incident Command model is the Allspaw-Collins effect (named for the engineers who first noticed the pattern). This is commonly seen in the creation of side channels away from the group response effort, which is “necessary to accomplish cognitively demanding work but leaves the other participants disconnected from the progress going on in the side channel (p.81)”

Here’s my understanding:

A group of people responding to an incident have to process a large number of signals that are coming in. One example of such signals is the large number of Slack messages appearing in the incident channel as incident responders and others provide updates and ask questions. Another example would be additional alerts firing.

If there’s a designated incident commander (IC) who is responsible for coordination, the IC can become a bottleneck if they can’t keep up with the work of processing all of these incoming signals.

The effect captures how incident responders will sometimes work around this bottleneck by forming alternate communication channels so they can coordinate directly with each other, without having to mediate through the IC. For example, instead of sending messages in the main incident channel, they might DM each other or communicate in a separate (possibly private) channel.

I can imagine how this sort of side-channel communication would be officially prohibited (“all incident-related communication should happen in the incident response channel!”), and also how it can be adaptive.

Maguire doesn’t give the first names of the people the effect is named for, but I strongly suspect they are John Allspaw and Morgan Collins.

December 28, 2022

Southwest airlines: a case study in brittleness

What happens to a complex system when it gets pushed just past its limits?

In some cases, the system in question is able to actually change its limits, so it can handle the new stressors that get thrown at it. When a system is pushed beyond its design limit, it has to change the way that it works. The system needs to adapt its own processes to work in a different way.

We use the term resilience to describe the ability of a system to adapt how it does its work, and this is what resilience engineering researchers study. These researchers have identified multiple factors that foster resilience. For example, people on the front lines of the system need autonomy to be able to change the way they work, and they also need to coordinate effectively with others in the system. A system under stress inevitably needs access to additional resources, which means that there needs to be extra capacity that was held in reserve. People need to be able to anticipate trouble ahead, so that they can prepare to change how they work and deploy the extra capacity.

However, there are cases when systems fail to adapt effectively when pushed just beyond their limits. These systems face what Woods and Branlat call decompensation: they exhaust their ability to keep up with the demands placed on them, and their performance falls sharply off of a cliff. This behavior is the opposite of resilience, and researchers call it brittleness.

The ongoing problems facing Southwest Airlines provide us with a clear example of brittleness. External factors such as the large winter storm pushed the system past its limit, and it was not able to compensate effectively in the face of these stressors.

There are many reports coming out of the media now about different factors that contributed to Southwest’s brittleness. I think it’s too early to treat these as definitive. A proper investigation will likely take weeks if not months. When the investigation finally gets completed, I’m sure there will be additional factors identified that haven’t been reported on yet.

But one thing we can be sure of at this point is that Southwest Airlines fell over when pushed beyond its limits. It was brittle.