Lorin Hochstein's Blog, page 11

August 20, 2023

Oddly influenced podcast

Brian Marick recently interviewed me about resilience engineering on his Oddly Influenced podcast. I’m pretty happy with how it turned out.

June 26, 2023

Active knowledge

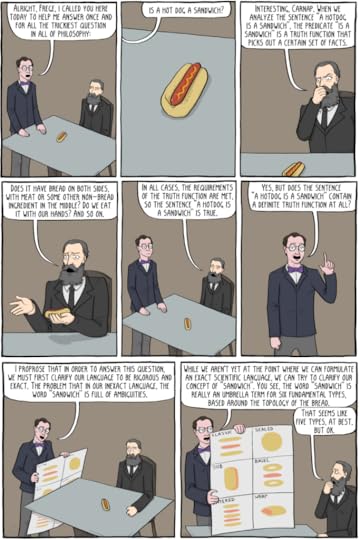

Existential Comics is an extremely nerdy webcomic about philosophers, written and drawn by Corey Mohler, a software engineer(!). My favorite Existential Comics strip is titled Is a Hotdog a Sandwich? A Definitive Study. The topic is… exactly what you would expect:

At the risk of explaining a joke: the punchline is that we can conclude that a hotdog isn’t a sandwich because people don’t generally refer to hotdogs as sandwiches. In Wittgenstein’s view, the meaning of a phrase isn’t determined by a set of formal criteria. Instead, language is use.

In a similar spirit, in his book Designing Engineers, Louis Bucciarelli proposed that we should understand “knowing how something works” to mean knowing how to work it. He begins with an anecdote about telephones:

A few years ago, I attended a national conference on technological literacy… One of the main speakers, a sociologist, presented data he had gathered in the form of responses to a questionnaire. After a detailed statistical analysis, he had concluded that we are a nation of technological illiterates. As an example, he noted how few of us (less than 20 percent) know how our telephone works.

This statement brought me up short. I found my mind drifting and filling with anxiety. Did I know how my telephone works?

Bucciarelli tries to get at what the speaker actually intended by “knowing how a telephone works”.

I squirmed in my seat, doodled some, then asked myself, What does it mean to know how a telephone works? Does it mean knowing how to dial a local or long-distance number? Certainly I knew that much, but this does not seem to be the issue here.

He dives down a level of abstraction into physical implementation details.

No, I suspected the question to be understood at another level, as probing the respondent’s knowledge of what we might call the “physics of the device.”

I called to mind an image of a diaphragm, excited by the pressure variations of speaking, vibrating and driving a coil back and forth within a magnetic field… If this was what the speaker meant, then he was right: Most of us don’t know how our telephone works.

But then Bucciarelli continues to elaborate this scenario:

Indeed, I wondered, does [the speaker] know how his telephone works? Does he know about the heuristics used to achieve optimum routing for long distance calls? Does he know about the intricacies of the algorithms used for echo and noise suppression? Does he know how a signal is transmitted to and retrieved from a satellite in orbit? Does he know how AT&T, MCI, and the local phone companies are able to use the same network simultaneously? Does he know how many operators are needed to keep this system working, or what those repair people actually do when they climb a telephone pole? Does he know about corporate financing, capital investment strategies, or the role of regulation in the functioning of this expansive and sophisticated communication system?

Does anyone know how their telephone works?

At this point, I couldn’t help thinking of that classic tech interview question, “What happens when you type a URL into the address bar of your web browser and hit enter?” It’s a fun question to ask precisely because there are so many different aspects to the overall system that you could potentially dig in on (Do you know how your operating system services keyboard interrupts? How your local Wi-Fi protocol works?). Can anyone really say that they understand everything that happens after hitting enter?

Because no individual possesses this type of comprehensive knowledge of engineered systems, Bucciarelli settles on a definition that relies on active knowledge: knowing-how-it-works as knowing-how-to-use-it.

No, the “knowing how it works” that has meaning and significance is knowing how to do something with the telephone—how to act on it and react to it, how to engage and appropriate the technology according to one’s needs and responsibilities.

I thought of Bucciarelli’s definition while reading Andy Clark’s book Surfing Uncertainty. In Chapter 6, Clark claims that our brain does not need to account for all of its sensory input to build a model of what’s happening in the world. Instead, it relies on simpler models that are sufficient for determining how to act (emphasis mine).

This may well result … in the use of simple models whose power resides precisely in their failing to encode every detail and nuance present in the sensory array. For knowing the world, in the only sense that can matter to an evolved organism, means being able to act in that world: being able to respond quickly and efficiently to salient environmental opportunities.

The through line that connects Wittgenstein, Bucciarelli, and Clark is the idea of knowledge as an active thing. Knowing implies using and acting. To paraphrase David Woods, knowledge is a verb.

June 17, 2023

Resilience requires helping each other out

A common failure mode in complex systems is that some part of the system hits a limit and falls over. In the software world, we call this phenomenon resource exhaustion, and a classic example of this is running out of memory.

The simplest solution to this problem is to “provision for peak”: to build out the system so that it always has enough resources to handle the theoretical maximum load. Alas, this solution isn’t practical: it’s too expensive. Even if you manage to overprovision the system, over time, it will get stretched to its limits. We need another way to mitigate the risk of overload.

Fortunately, it’s rare for every component of a system to reach its limit simultaneously: while one component might get overloaded, there are likely other components that have capacity to spare. That means that if one component is in trouble, it can borrow resources from another one.
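As a rough illustration of the idea, here is a minimal Python sketch. Everything in it is hypothetical: the `Component` class, the `borrow` and `capacity_needed` functions, and the load numbers are invented for this example and don't describe any real system. It shows two things: an overloaded component covering its deficit with loans from neighbors that have spare capacity, and how much less total capacity is needed when components can share than when each one provisions for its own peak.

```python
from dataclasses import dataclass


@dataclass
class Component:
    """A hypothetical system component with a fixed resource budget."""
    name: str
    capacity: int  # units of resource this component currently holds
    load: int = 0  # units of resource currently demanded

    @property
    def spare(self) -> int:
        return max(self.capacity - self.load, 0)

    @property
    def deficit(self) -> int:
        return max(self.load - self.capacity, 0)


def borrow(overloaded: Component, neighbors: list[Component]) -> int:
    """Move spare capacity from neighbors to the overloaded component.

    Returns the deficit that is still unmet after borrowing.
    """
    remaining = overloaded.deficit
    for neighbor in neighbors:
        if remaining == 0:
            break
        loan = min(neighbor.spare, remaining)
        neighbor.capacity -= loan
        overloaded.capacity += loan
        remaining -= loan
    return remaining


def capacity_needed(loads: dict[str, list[int]]) -> tuple[int, int]:
    """Compare total capacity under two provisioning strategies.

    loads maps a component name to its load at each time step.
    Returns (independent, pooled): the sum of each component's own peak,
    and the peak of the combined load when resources can be shared.
    """
    independent = sum(max(series) for series in loads.values())
    pooled = max(sum(step) for step in zip(*loads.values()))
    return independent, pooled


if __name__ == "__main__":
    api = Component("api", capacity=10, load=14)
    cache = Component("cache", capacity=10, load=7)
    db = Component("db", capacity=10, load=9)
    print(borrow(api, [cache, db]))  # unmet deficit after borrowing

    loads = {"api": [4, 14, 5], "cache": [7, 7, 12], "db": [9, 9, 3]}
    print(capacity_needed(loads))  # (independent, pooled)
```

With these made-up numbers, the peaks don't coincide, so provisioning each component for its own peak takes 35 units while a shared pool needs only 30. The sketch assumes that neighbors always agree to lend.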

Indeed, we see this sort of behavior in biological systems. In the paper Allostasis: A Model of Predictive Regulation, the neuroscientist Peter Sterling explains why allostasis is a better theory than homeostasis. Readers are probably familiar with the term homeostasis: it refers to how your body maintains factors in a narrow range, like keeping your body temperature around 98.6°F. Allostasis, on the other hand, is about how your body predicts where these sorts of levels should be, based on anticipated need. Your body then takes action to modify the current state of these levels. Here’s Sterling explaining why he thinks allostasis is superior, referencing the idea of borrowing resources across organs (emphasis mine):

A second reason why homeostatic control would be inefficient is that if each organ self-regulated independently, opportunities would be missed for efficient trade-offs. Thus each organ would require its own reserve capacity; this would require additional fuel and blood, and thus more digestive capacity, a larger heart, and so on – to support an expensive infrastructure rarely used. Efficiency requires organs to trade-off resources, that is, to grant each other short-term loans.

The systems we deal with are not individual organisms, but organizations that are made up of groups of people. In organization-style systems, this sort of resource borrowing becomes more complex. Incentives in the system might make me less inclined to lend you resources, even if doing so would lead to better outcomes for the overall system. In his paper The Theory of Graceful Extensibility: Basic rules that govern adaptive systems, David Woods borrows the term reciprocity from Elinor Ostrom to describe this property in a system of one agent being willing to lend resources to another as a necessary ingredient for resilience (emphasis mine):

Will the neighboring units adapt in ways that extend the [capacity for maneuver] of the adaptive unit at risk? Or will the neighboring units behave in ways that further constrict the [capacity for maneuver] of the adaptive unit at risk? Ostrom (2003) has shown that reciprocity is an essential property of networks of adaptive units that produce sustained adaptability.

I couldn’t help thinking of the Sterling and Woods papers when reading the latest issue of Nat Bennett’s Simpler Machines newsletter, titled What was special about Pivotal? Nat’s answer is reciprocity:

This isn’t always how it went at Pivotal. But things happened this way enough that it really did change people’s expectations about what would happen if they co-operated – in the game theory, Prisoner’s Dilemma sense. Pivotal was an environment where you could safely lead with co-operation. Folks very rarely “defected” and screwed you over if you led by trusting them.

People helped each other a lot. They asked for help a lot. We solved a lot of problems much faster than we would have otherwise, because we helped each other so much. We learned much faster because we helped each other so much.

And it was generally worth it to do a lot of things that only really work if everyone’s consistent about them. It was worth it to write tests, because everyone did. It was worth it to spend time fixing and removing flakes from tests, because everyone did. It was worth it to give feedback, because people changed their behavior. It was worth it to suggest improvements, because things actually got better.

There was a lot of reciprocity.

Nat’s piece is a good illustration of the role that culture plays in enabling a resilient organization. I suspect it’s not possible to impose this sort of culture; it has to be fostered. I wish this were more widely appreciated.

June 15, 2023

If you can’t tell a story about it, it isn’t real

We use stories to make sense of the world. What that means is that when events occur that don’t fit neatly into a narrative, we can’t make sense of them. As a consequence, these sorts of events are less salient, which means they’re less real.

In The Invisible Victims of American Anti-Semitism, Yair Rosenberg wrote in the Atlantic about the kinds of attacks that target Jews which don’t get much attention in the larger media. His claim is that this happens when these attacks don’t fit into existing narratives about anti-Semitism (emphasis mine):

What you’ll also notice is that all of the very real instances of anti-Semitism discussed above don’t fall into either of these baskets. Well-off neighborhoods passing bespoke ordinances to keep out Jews is neither white supremacy nor anti-Israel advocacy gone awry. Nor can Jews being shot and beaten up in the streets of their Brooklyn or Los Angeles neighborhoods by largely nonwhite assailants be blamed on the usual partisan bogeymen.

That’s why you might not have heard about these anti-Semitic acts. It’s not that politicians or journalists haven’t addressed them; in some cases, they have. It’s that these anti-Jewish incidents don’t fit into the usual stories we tell about anti-Semitism, so they don’t register, and are quickly forgotten if they are acknowledged at all.

In The 1918 Flu Faded in Our Collective Memory: We Might ‘Forget’ the Coronavirus, Too, Scott Hershberger speculated in Scientific American along similar lines about why historians paid little attention to the Spanish Flu epidemic, even though it killed more people than World War I (emphasis mine):

For the countries engaged in World War I, the global conflict provided a clear narrative arc, replete with heroes and villains, victories and defeats. From this standpoint, an invisible enemy such as the 1918 flu made little narrative sense. It had no clear origin, killed otherwise healthy people in multiple waves and slinked away without being understood. Scientists at the time did not even know that a virus, not a bacterium, caused the flu. “The doctors had shame,” Beiner says. “It was a huge failure of modern medicine.” Without a narrative schema to anchor it, the pandemic all but vanished from public discourse soon after it ended.

I’m a big believer in the role of interactions, partial information, uncertainty, workarounds, tradeoffs, and goal conflicts as contributors to systems failures. I think the way to convince other people to treat these entities as first-class is to weave them into the stories we tell about how incidents happen. If we want people to see these things as real, we have to integrate them into narrative descriptions of incidents.

Because if we can’t tell a story about something, it’s as if it didn’t happen.

June 11, 2023

When there’s no plan for this scenario, you’ve got to improvise

An incident is happening. Your distributed system has somehow managed to get itself stuck in a weird state. There’s a runbook, but because the authors didn’t foresee this failure mode ever happening, the runbook isn’t actually helpful here. To get the system back into a healthy state, you’re going to have to invent a solution on the spot.

In other words, you’re going to have to improvise.

“We gotta find a way to make this fit into the hole for this using nothing but that.” – scene from Apollo 13

Like uncertainty, improvisation is an aspect of incident response that we typically treat as a one-off, rather than as a first-class skill that we should recognize and cultivate. Not every incident requires improvisation to resolve, but the hairiest ones will. And it’s these most complex of incidents that are the ones we need to worry about the most, because they’re the ones that are costliest to the business.

One of the criticisms of resilience engineering as a field is that it isn’t prescriptive. Often, when I talk about resilience engineering research, the response I hear is “OK, Lorin, that’s interesting, but what should I actually do?” I think resilience engineering is genuinely helpful, and in this case it teaches us that improvisation requires local expertise, autonomy, and effective coordination.

To improvise a solution, you have to be able to effectively use the tools and technologies that are directly available to you in the situation, what Claude Lévi-Strauss referred to as bricolage. That means you have to know what those tools are and you have to be skilled in their use. That’s the local expertise part. You’ll often need to leverage what David Woods calls a generic capability in order to solve the problem at hand. That’s some element of technology that wasn’t explicitly designed to do what you need, but is generic enough that you can use it.

Improvisation also requires that the people with the expertise have the authority to take required actions. They’re going to need the ability to do risky things, which could potentially end up making things worse. That’s the autonomy part.

Finally, because of the complex nature of incidents, you will typically need to work with multiple people to resolve things. It may be that you don’t have the requisite expertise or autonomy, but somebody else does. Or it may be that the improvised strategy requires coordination across a group of people. I remember one incident where I was the incident commander and a problem was affecting a large number of services. The only remediation strategy was to restart or re-deploy the affected services: we had to effectively “reboot the fleet”. The deployment tooling at the time didn’t support that sort of bulk activity, so we had to do it manually. A group of us, sitting in the war room (this was in pre-COVID days), divvied up the work of reaching out to all of the relevant service owners. We coordinated using Google Sheets. (In general, I’m opposed to writing automation scripts during an incident if doing the task manually is just as quick, because the blast radius of that sort of script is huge, and those scripts generally don’t get tested well before use because of the urgency.)

While we don’t know exactly what we’ll be called on to do during an incident, we can prepare to improvise. For more on this topic, check out Matt Davis’s piece on Site Reliability Engineering and the Art of Improvisation.

June 10, 2023

Treating uncertainty as a first-class concern

One of the things that complex incidents have in common is the uncertainty that the responders experience while the incident is happening. Something is clearly wrong: that’s why an incident is happening. But it’s hard to make sense of the failure mode, to pin down precisely what the problem is, based on the signals that we can observe directly.

Eventually, we figure out what’s going on, and how to fix it. By the time the incident review rolls around, while we might not have a perfect explanation for every symptom that we witnessed during the incident, we understand what happened well enough that the in-the-moment uncertainty is long gone.

Cooperative Advocacy: An Approach for Integrating Diverse Perspectives in Anomaly Response is a paper by Jennifer Watts-Englert and David Woods that compares two incidents involving NASA space shuttle missions: one successful and the other tragic. The paper discusses strategies for dealing with what the authors call anomaly response, when something unexpected has happened. The authors describe a process they observed, which they call Cooperative Advocacy, for effectively dealing with uncertainty during an incident. They document how cooperative advocacy was applied in the successful NASA mission, and how it was not applied in the failed case.

It’s a good paper (it’s on my list!), and SREs and anyone else who deals with incidents will find it highly relevant to their work. For example, here’s a quote from the paper that I immediately connected with:

For anomaly response to be robust given all of the difficulties and complexities that can arise, all discrepancies must be treated as if they are anomalies to be wrestled with until their implications are understood (including the implications of being uncertain or placed in a difficult trade-off position). This stance is a kind of readiness to re-frame and is a basic building block for other aspects of good process in anomaly response. Maintaining this as a group norm is very difficult because following up on discrepancies consumes resources of time, workload, and expertise. Inevitably, following up on a discrepancy will be seen as a low priority for these resources when a group or organization operates under severe workload constraints and under increasing pressure to be “faster, better, cheaper”.

(See one of my earlier blog posts, chasing down the blipperdoodles).

But the point of this blog post isn’t to summarize this specific paper. Rather, it’s to call attention to the fact that anomaly response is a problem that we will face over and over again. Too often, we dismiss the anomaly we just faced in an incident as a weird, one-off occurrence. And while that specific failure mode likely will be a one-off, we’ll be faced with new anomalies in the future.

This paper treats anomaly response as a first-class entity, as a thing we need to worry about on an ongoing basis, as something we need to be able to get better at. We should do the same.

April 25, 2023

My SREcon 23 talk is up

The talk I gave at SREcon 23 Americas is now available for your viewing pleasure:

See also: an earlier post about my presentation style, which used this talk as an example.

April 15, 2023

Missing the forest for the trees: the component substitution fallacy

Here’s a brief excerpt from a talk by David Woods on what he calls the component substitution fallacy (emphasis mine):

claim of root cause is ex. of component substitution fallacy. All incidents that threaten failure reveal component weaknesses due to finite resources & tradeoffs -> easy to miss the critical systemic/emergent factors see min 25 https://t.co/OsYy2U8fsA

– David Woods (@ddwoods2), January 16, 2023

Everybody is continuing to commit the component substitution fallacy.

Now, remember, everything has finite resources, and you have to make trade-offs. You’re under resource pressure, you’re under profitability pressure, you’re under schedule pressure. Those are real, they never go to zero.

So, as you develop things, you make trade offs, you prioritize some things over other things. What that means is that when a problem happens, it will reveal component or subsystem weaknesses. The trade offs and assumptions and resource decisions you made guarantee there are component weaknesses. We can’t afford to perfect all components.

Yes, improving them is great and that can be a lesson afterwards, but if you substitute component weaknesses for the systems-level understanding of what was driving the event … at a more fundamental level of understanding, you’re missing the real lessons.

Seeing component weaknesses is a nice way to block seeing the system properties, especially because this justifies a minimal response and avoids any struggle that systemic changes require.

Woods on Shock and Resilience (25:04 mark)

Whenever an incident happens, we’re always able to point to different components in our system and say “there was the problem!” There was a microservice that didn’t handle a certain type of error gracefully, or there was bad data that had somehow gotten past our validation checks, or a particular cluster was under-resourced because it hadn’t been configured properly, and so on.

These are real issues that manifested as an outage, and they are worth spending the time to identify and follow up on. But these problems in isolation never tell the whole story of how the incident actually happened. As Woods explains in the excerpt of his talk above, because of the constraints we work under, we simply don’t have the time to harden the software we work on to the point where these problems don’t happen anymore. It’s just too expensive. And so, we make tradeoffs, we make judgments about where to best spend our time as we build, test, and roll out our stuff. The riskier we perceive a change, the more effort we’ll spend on validation and rollout of the change.

And so, if we focus only on issues with individual components, there’s so much we miss about the nature of failure in our systems. We miss looking at the unexpected interactions between the components that enabled the failure to happen. We miss how the organization’s prioritization decisions enabled the incident in the first place. We also don’t ask questions like “if we are going to do follow-up work to fix the component problems revealed by this incident, what are the things that we won’t be doing because we’re prioritizing this instead?” or “what new types of unexpected interactions might we be creating by making these changes?” Not to mention incident-handling questions like “how did we figure out something was wrong here?”

In the wake of an incident, if we focus only on the weaknesses of individual components then we won’t see the systemic issues. And it’s the systemic issues that will continue to bite us long after we’ve implemented all of those follow-up action items. We’ll never see the forest for the trees.

March 25, 2023

Some thoughts on my presentation style

I recently gave a talk at SREcon Americas. The talk isn’t posted yet, but you can find my slides here.

At this point in my career, I’ve developed a presentation style that I’m pretty happy with. This post captures some of my reflections on how I put together a slide deck.

One slide, one concept

My goal is to have every slide convey exactly one concept. (I think this aspect of my style was influenced by watching a Lawrence Lessig talk online.)

As a consequence, my slides are never a list of bullets. My ideal slide is a single sentence:

Because there’s one sentence, the audience members don’t need to scan the slide to figure out which part is relevant to what I’m currently saying. It makes it less work for them.

Sometimes I break up a sentence across slides:

I’ll often use a sentence fragment rather than a full sentence.

If I really want to emphasize a concept, I’ll use an image as a backdrop.

Note that this style means that my slides are effectively incomprehensible as standalone documents: they only make sense in the context of my talks.

Giving hints about talk structure

I lose interest in a talk if I can’t tell where the speaker is going with it, and so I try to frame my talk at the beginning: I give people an overview of how my talk is structured. This is the “tell them what you’re going to tell them” part of the dictum: “tell them what you’re going to tell them, tell them, tell them what you told them.”

Note that this framing slide is the one place where I break my “no bullets” rule. When I summarize at the end of my talk, I dedicate a separate slide to each chunk. I also reword slightly.

Lots of little chunks

When I listen to a talk, my attention invariably wanders in and out, and I assume that’s happening to everyone in the audience as well. To deal with this, I try to structure my talk into many small, self-contained “chunks” of content, so that if somebody loses the thread during one chunk because of a distraction, then they can re-orient themselves when the next chunk comes along.

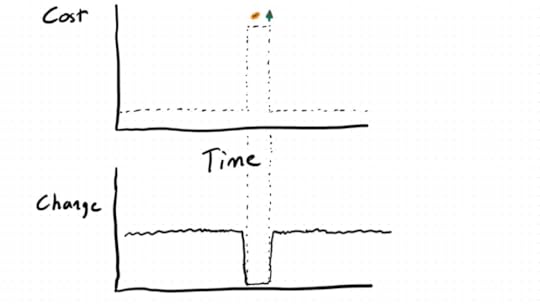

As an example, in the part of my talk that deals with change, I have one chunk about code freezes:

And then, a few slides later, I have another chunk which is a vignette about an SRE who identified the source of a breaking change on a hunch.

This effectively reduces the “blast radius” of losing the thread of the talk.

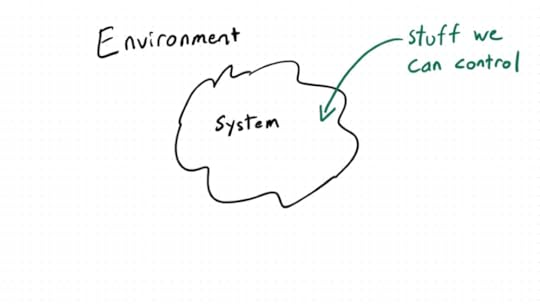

Hand-drawn diagrams

I draw all of my own diagrams with the GoodNotes app on an iPad with an Apple Pencil. I use a Frunsi Drawing Tablet Stand to make it easier to draw on the iPad.

I was inspired to draw my diagrams after reading Dan Roam (especially his book The Back of the Napkin).

I’ve always thought of myself as being terrible at drawing, and so it took me a long time to get to a point where I would freehand draw diagrams instead of using software.

(Yes, tools like Excalidraw can do sketch-y style diagrams, but I still prefer doing them by hand).

It turns out that very simple doodles are surprisingly effective at conveying concepts. And the rawness of the diagrams works to their advantage.

The hardest part for me was to learn how to sketch faces. I found the book Pencil Me In by Christina Wodtke very helpful for learning different ways to do this.

Sketchy text style

I use a tool called Deckset for generating my slides from Markdown, and it has a style called sketchnote which gives the text that sketch-y vibe.

The sketchy-ness is most apparent in the cover slide

I really like this unpolished style. It makes the slides feel more intimate, and it meshes well with the diagrams that I draw.

Evoke a reaction

Back when I was an academic, my conference talks were on research papers that I had co-authored, and the advice I was given was “the talk is an ad for the paper”: don’t aim for completeness in the talk, instead, get people interested enough in the material that they’ll read the paper.

Nowadays, my talks aren’t based on papers, but the corresponding advice I’d give is “evoke a reaction”. There’s a limit to the quantity of information that you can convey in a talk, so instead go for conveying a reaction. Get people to connect emotionally in some way, so that they’ll care enough about the material that they’ll follow up to learn more some time in the future. I’d rather my audience leave angry with what I presented than leave bored and retain nothing.

In practice, that means that I’m willing to risk over-generalizing for effect.

One-sentence slides aren’t going to be effective at conveying nuance, but they can be very effective at evoking reactions.

March 13, 2023

Making peace with the imperfect nature of mental models

We all carry with us in our heads models about how the world works, which we colloquially refer to as mental models. These models are always incomplete, often stale, and sometimes they’re just plain wrong.

For those of us doing operations work, our mental models include our understanding of how the different parts of the system work. Incorrect mental models are always a factor in incidents: incidents are always surprises, and surprises are always discrepancies between our mental models and reality.

There are two things that are important to remember. First, our mental models are usually good enough for us to do our operations work effectively. Our human brains are actually surprisingly good at enabling us to do this stuff. Second, while a stale mental model is a serious risk, none of us have the time to constantly verify that all of our mental models are up to date. Doing so would be the equivalent of popping up an “are you sure?” modal dialog box before taking any action. (“Are you sure that pipeline that always deploys to the test environment still deploys to test first?”)

Instead, because our time and attention are limited, we have to get good at identifying cues that indicate our models have gotten stale or are incorrect. Since we won’t always get these cues, our mental models will inevitably go out of date. That’s just part of the job when you work in a dynamic environment. And we all work in dynamic environments.