Lorin Hochstein's Blog, page 16

January 8, 2022

Henry Yin on what the cyberneticists got wrong

I’ve been on a bit of a control systems kick lately, and, serendipitously, I happened to see this tweet, which referenced a paper by Henry Yin at Duke University titled The crisis in neuroscience.

Henry @HenryYin19 and I discuss his view the brain is a big hierarchical set of control loops, how we should use control theory to study how brains underly behaviors, & the error engineers (& neuroscientists) make using control theory to study organisms https://t.co/Ih0SWvmFBV

— Paul Middlebrooks (@pgmid) November 11, 2021

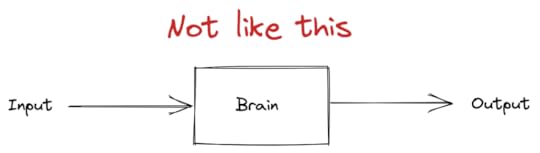

In the paper, Yin argues that neuroscience has failed to make progress in modeling human behavior because it tries to model the brain as a linear system, where you can study it by generating inputs and observing outputs.

Input/output model of brain

Yin proposes an alternative model: to understand behavior from a neurological perspective, you need to view the brain as a collection of hierarchical, closed-loop control systems.

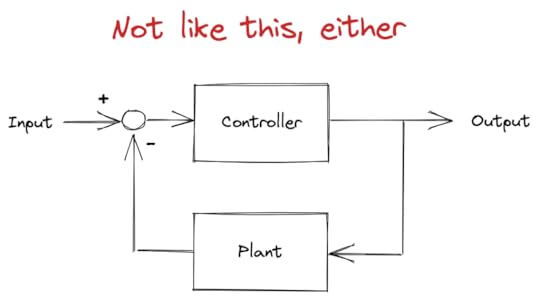

Now, the cybernetics folks have long argued that you should model human brains as control systems. But Yin argues that the cyberneticists got an important thing wrong in their control models: their models were too close to engineering applications to be directly applicable to organisms.

Classical engineering model of a feedback control system

In an engineered control system, a human operator specifies the set point. For example, for a cruise control system, you’d set the desired speed. In the block diagram above, this set point is provided as the input to the system.

The output of the “Plant” block in the diagram is the current state of the variable you’re trying to control (e.g., current speed). The controller takes as input the difference between the set point and the current state, and uses that to determine how to drive the plant (e.g., input to the motor).

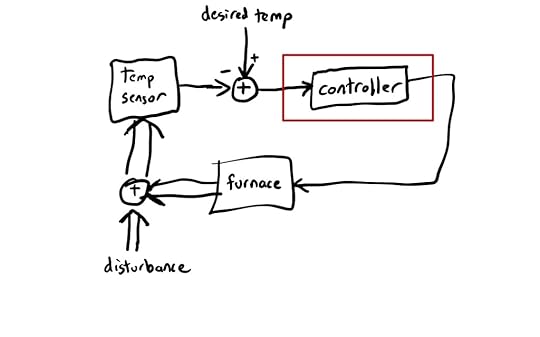

Here’s a block diagram of everyone’s favorite control systems example, the thermostat:

A thermostat that controls temperature

I’ve used double arrows to indicate signals that propagate through the environment, and single arrows to indicate signals that propagate through wires. I’ve put a red box around what Yin claims the cyberneticists hold as their model for control in animals.

The variable under control is the temperature. A human sets the desired temperature, and a temperature sensor reads the current temperature. The controller takes as input the difference between the desired temperature and the current temperature, and uses that to determine whether or not to turn on the furnace.

The actual temperature in the house is determined both by the output of the furnace, and by other factors (e.g., temperature outside, how good the insulation is, whether someone has opened a door), which I’ve labeled disturbance.
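As a rough illustration of the thermostat loop described above, here is a minimal Python sketch. The `Thermostat` class, the dead band (`hysteresis`), and the toy house model with its `furnace_heat` and `leak_rate` numbers are all assumptions made up for this example; they are not taken from Yin's paper.

```python
from dataclasses import dataclass


@dataclass
class Thermostat:
    """On/off controller whose set point is supplied from outside, by a human."""
    set_point: float          # desired temperature, chosen by the operator
    hysteresis: float = 0.5   # assumed dead band so the furnace doesn't flap
    furnace_on: bool = False

    def update(self, measured_temp: float) -> bool:
        """Decide whether to run the furnace, based on the error signal."""
        error = self.set_point - measured_temp
        if error > self.hysteresis:
            self.furnace_on = True
        elif error < -self.hysteresis:
            self.furnace_on = False
        return self.furnace_on


def simulate(thermostat, start_temp, outside_temp, steps,
             furnace_heat=1.0, leak_rate=0.05):
    """Toy house model (the plant); heat leaking outdoors is the disturbance."""
    temp = start_temp
    history = []
    for _ in range(steps):
        heating = furnace_heat if thermostat.update(temp) else 0.0
        disturbance = leak_rate * (outside_temp - temp)
        temp += heating + disturbance
        history.append(temp)
    return history


# A cold day: the house starts at 15 degrees and it is 5 degrees outside.
temps = simulate(Thermostat(set_point=20.0), start_temp=15.0,
                 outside_temp=5.0, steps=200)
```

Note that `set_point` is a constructor argument: someone outside the loop chooses it. That is exactly the feature of the engineering model that Yin objects to when it is applied to organisms.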

The problem with this, Yin argues, is that the red box is not a good model for the control that happens in the brain. As an alternative, he proposes the following model:

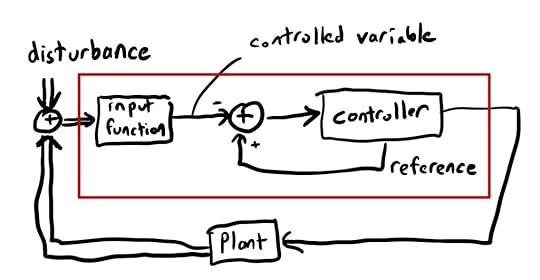

You can think of the red box as being the stuff inside some aspect of the brain, the “plant” as being the things that this aspect controls (e.g., other parts of the brain, muscles).

The difference in Yin’s model is that the controller determines the set point. There’s no external agent specifying the desired value as an input. Instead, the controller generates its own set point, which Yin calls the reference value.

Also note that Yin’s model includes the input function inside the red box. This takes sensory input and calculates the variable that’s under control. The difference between this model and the thermostat is that, in the thermostat model, you know from the outside that temperature is the variable being controlled. In Yin’s model, you can’t see from the outside what the variable is that’s being controlled for: the variable is internal to the control system.
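To make the contrast with the thermostat concrete, here is a small Python sketch of a controller in the spirit of Yin’s model. It is my own simplification, not Yin’s formalism: the `ControlUnit` class, the `mean` input function, the gain, and the one-line "world" are all hypothetical. The point is only that the reference value and the input function live inside the unit, so an outside observer sees sensory signals and outputs but not the controlled variable.

```python
from typing import Callable, Sequence


class ControlUnit:
    """A controller that holds its own reference; nothing outside sets it."""

    def __init__(self, input_function: Callable[[Sequence[float]], float],
                 reference: float, gain: float = 0.5):
        self._input_function = input_function  # sensory input -> controlled variable
        self._reference = reference            # internal, not an input to the system
        self._gain = gain

    def step(self, sensory_input: Sequence[float]) -> float:
        """Return an output that pushes the perceived variable toward the reference."""
        perceived = self._input_function(sensory_input)
        error = self._reference - perceived
        return self._gain * error


def mean(signals: Sequence[float]) -> float:
    """Hypothetical input function: the controlled variable is the average signal."""
    return sum(signals) / len(signals)


unit = ControlUnit(input_function=mean, reference=3.0)

# Toy environment: one hidden state, observed through two offset sensors.
state = 0.0
for _ in range(50):
    sensors = [state - 1.0, state + 1.0]
    state += unit.step(sensors)  # the unit's output acts on the world
```

From the outside you would see the two sensor values drift and settle, but nothing in those signals tells you that the unit is controlling their mean rather than, say, their maximum.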

Despite knowing nothing about neuroscience, and only knowing a bit about control systems, I still found this paper surprisingly accessible. I recommend it. There’s a lot more here than what I’ve touched on in this post.

December 28, 2021

The ambiguity of real work

All ambiguity is resolved by actions of practitioners at the sharp end of the system.

Dr. Richard I. Cook, How Complex Systems Fail

There’s a wonderful book by the late urban planning professor Donald Schön called The Reflective Practitioner: How Professionals Think in Action. In the first chapter, he discusses the “rigor or relevance” dilemma that faces educators in professional degree programs. In the case of a university program aimed at preparing students for a career in software development, this is the “should we teach topological sort or React?” question.

Schön argues that the dilemma itself is a fundamental misunderstanding of the nature of professional work. What it misses is the ambiguity and uncertainty inherent in the work of professional life. The “rigor vs relevance” debate is an argument over the best way to get from the problem to the solution: do you teach the students first principles, or do you teach them how to use the current set of tools? Schön observes that a more significant challenge for professionals is defining the problems to solve in the first place, since an ill-defined problem admits no technical solution at all.

In the varied topography of professional practice, there is a high, hard ground where practitioners can make effective use of research-based theory and technique, and there is a swampy lowland where situations are confusing “messes” incapable of technical solution. The difficulty is that the problems of the high ground, however great their technical interest, are often relatively unimportant to clients or to the larger society, while in the swamp are the problems of greatest human concern.

His use of the term “messes” evokes Russell Ackoff’s use of the term in his paper The Future of Operational Research is Past:

Managers are not confronted with problems that are independent of each other, but with dynamic situations that consist of complex systems of changing problems that interact with each other. I call such situations messes. Problems are abstractions extracted from messes by analysis; they are to messes as atoms are to tables and chairs. We experience messes, tables, and chairs; not problems and atoms.

To take another example from the software domain, imagine that you’re doing quarterly planning: there’s a collection of reliability work that you’d like to do, and you’re trying to figure out how to prioritize it. You could apply a rigorous approach, where you quantify some values in order to do the prioritization, and so you try to estimate information like:

- the probability of hitting a problem if the work isn’t done
- the cost to the organization if the problem is encountered
- the amount of effort involved in doing the reliability work

But you’re soon going to discover the enormous uncertainty involved in trying to put a number on any of those things. And, in fact, doing any reliability work can actually introduce new failure modes.
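To see how quickly that uncertainty swamps the arithmetic, here is a small Python sketch of the "rigorous" calculation using ranges instead of point estimates. Everything in it is hypothetical: the `Estimate` and `ReliabilityWork` types, the scoring formula (expected cost avoided per unit of effort), and the numbers for the two work items are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    """A low/high range rather than a single number."""
    low: float
    high: float


@dataclass
class ReliabilityWork:
    name: str
    probability: Estimate   # chance of hitting the problem if the work isn't done
    cost: Estimate          # cost to the organization if the problem occurs
    effort: Estimate        # effort to do the work, in the same units as cost

    def value_range(self) -> Estimate:
        """Expected cost avoided per unit of effort, worst case to best case."""
        worst = self.probability.low * self.cost.low / self.effort.high
        best = self.probability.high * self.cost.high / self.effort.low
        return Estimate(worst, best)


def clearly_better(a: ReliabilityWork, b: ReliabilityWork) -> bool:
    """True only if a beats b even in a's worst case and b's best case."""
    return a.value_range().low > b.value_range().high


# Made-up numbers for two candidate projects.
failover = ReliabilityWork("regional failover",
                           probability=Estimate(0.05, 0.5),
                           cost=Estimate(10_000, 200_000),
                           effort=Estimate(5_000, 20_000))
retries = ReliabilityWork("retry budget",
                          probability=Estimate(0.1, 0.3),
                          cost=Estimate(50_000, 100_000),
                          effort=Estimate(10_000, 15_000))

ranking_is_decidable = (clearly_better(failover, retries)
                        or clearly_better(retries, failover))
```

With ranges this wide, neither item is clearly better than the other, so the numbers alone can't settle the prioritization. And the sketch doesn't even attempt to model the new failure modes that the reliability work itself might introduce.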

Over and over, I’ve seen the theme of ambiguity and uncertainty appear in ethnographic research that looks at professional work in action. In Designing Engineers, the aerospace engineering professor Louis Bucciarelli did an ethnographic study of engineers in a design firm, and discovered that the engineers all had partial understanding of the problem and solution space, and that their understandings also overlapped only partially. As a consequence, a lot of the engineering work that was done actually involved engineers resolving their incomplete understanding through various forms of communication, often informal. Remarkably, the engineers were not themselves aware of this process of negotiating understandings of the problems and solutions.

The famous Common Ground and Coordination in Joint Activity paper by Gary Klein, Paul Feltovich, and David Woods makes explicit the role that ambiguity plays in human coordination and communication.

You’ll sometimes hear researchers who study work talk about the process of sensemaking. For example, there’s a paper by Sara Albolino, Richard Cook, and Michael O’Connor called Sensemaking, safety, and cooperative work in the intensive care unit that describes this type of work in an intensive care unit. I think of sensemaking as an activity that professionals perform to try to resolve ambiguity and uncertainty.

(Ambiguity isn’t always bad. In the book On Line and On Paper, the sociologist Kathryn Henderson describes how engineers use engineering drawings as boundary objects. These are artifacts that are understood differently by the different stakeholders: two engineers looking at the same drawing will have different mental models of the artifact based on their own domain expertise(!). However, there is also overlap in their mental models, and it is this combination of overlap and the fact that individuals can use the same artifact for different purposes that makes it useful. Here the ambiguity has actual value! In fact, her research shows that computer models, which eliminate the ambiguity, were less useful for this sort of work.)

As practitioners, we have no choice: we always have to deal with ambiguity. As noted by Richard Cook in the quote that opens this blog post, we are the ones, at the sharp end, that are forced to resolve it.

December 8, 2021

The Howie Guide: How to get started with incident investigations

Until now, if you wanted to improve your organization’s ability to learn from incidents, there wasn’t a lot of how-to style material you could draw from. Sure, there were research papers you could read (oh, so many research papers!). But academic papers aren’t a great source of advice for someone who is starting on an effort to improve how they do incident analysis.

There simply weren’t any publications about how to get started with doing incident investigations which were targeted at the infotech industry. Your best bet was the Etsy Debrief Facilitation Guide. It was practical, but it focused on only a single aspect of the incident investigation process: the group incident retrospective meeting. And there’s so much more to incident investigation than that meeting.

The folks at Jeli have stepped up to the challenge. They just released Howie: The Post-Incident Guide.

We made a new thing! Jeli's all about evolving the industry, but building a new post-incident process is…super hard. So we did it for you. Introducing Howie: The Post-Incident Guide. Download it, print it, share it, frame it. Use it to evolve. https://t.co/O4TF68oiAh

— Jeli.io (@jeli_io) December 8, 2021

Readers of this blog will know that this is a topic near and dear to my heart. The name “Howie” is short for “How we got here”, which is what we call our incident writeups at Netflix. (This isn’t a coincidence: we came up with this name at Netflix when Nora Jones of Jeli and I were on the CORE team.)

Writing a guide like this is challenging, because so much of incident investigation is contextual: what you look at and what questions you ask will depend on what you’ve learned so far. But there are also commonalities across all investigations; the central activities (constructing timelines, doing one-on-one interviews, building narratives) happen each time. The Howie guide gently walks the newcomer through these. It’s accessible.

When somebody says, “OK, I believe there’s value in learning more from incidents, and we want to go beyond doing a traditional root-cause-analysis. But what should I actually do?”, we now have a canonical answer: go read Howie.

November 28, 2021

I have no idea what I’m doing

A few days ago, David Heinemeier Hansson (who generally goes by DHH) wrote a blog post titled Programmers should stop celebrating incompetence:

"You can't become the I HAVE NO IDEA WHAT I'M DOING dog as a professional identity. Don't embrace being a copy-pasta programmer whose chief skill is looking up shit on the internet." https://t.co/vVBYSsE9lh

— DHH (@dhh) November 25, 2021

I disagreed with the post, but for different reasons than most of the other responses I saw on Twitter.

Here are a couple of lines from the post:

You can’t become the I HAVE NO IDEA WHAT I’M DOING dog as a professional identity. Don’t embrace being a copy-pasta programmer whose chief skill is looking up shit on the internet.

From the Twitter reactions, it seems like people thought DHH was saying, “you shouldn’t be looking things up on the internet and copy-pasting code”. But I think that gets the thrust of his argument wrong. This wasn’t a diatribe against Stack Overflow; it was about how programmers see themselves and their work.

DHH was criticizing an anti-intellectual mode of expression. The attitude he was criticizing reminds me of an essay I once read (I can’t remember the source or author; it might have been Paul Lockhart) in which a mathematics(?) professor was talking to some colleagues from the humanities department. When the math professor mentioned their field, one of the humanities professors said, “Oh, I was never any good at math”, and it came off almost as a point of pride.

Where I disagree with DHH is that I don’t see this type of anti-intellectualism in our field at all. I don’t see “LOL, I don’t know what I’m doing” on people’s LinkedIn profiles or in their resumes, I don’t hear it in interviews, I don’t see it on pull request comments, I don’t hear it in technical meetings. I don’t think it exists in our field.

You can see our field’s professionalism in criticisms of technical interviews that involve live coding. You don’t hear programmers criticizing it by saying, “LOL, actually, nobody knows how to do this.” What you hear instead is, “these interviews don’t effectively evaluate my actual skills as a software developer”.

So, what’s going on here? What led DHH astray? Where does the dog meme come from?

To explain my theory, I’m going to use this recent blog post by Diomidis Spinellis, called Rather than alchemy, methodical troubleshooting:

"Rather than alchemy, methodical troubleshooting", or "Finding a needle in a haystack by running git bisect on synthetic commits" https://t.co/ClRP9Tf6g3

— Diomidis Spinellis (@CoolSWEng) November 27, 2021

Spinellis is a software engineering professor who has written numerous books for practitioners and has contributed to numerous open source projects (including the FreeBSD kernel). He is as professional as they come.

His blog post is about his struggles getting a React Native project to build in Xcode, including trying (in vain) various bits of advice he found through Googling. Spinellis actually feels bad about his initial approach:

Although advice from the web can often help us solve tough problems in seconds, as the author of the book Effective Debugging, I felt ashamed of wasting time by following increasingly nonsensical advice.

I bring this up not to pile onto Spinellis, but to point out that the surface area of the software world is vast, so vast that even the most professional software engineer will encounter struggles, will hit issues outside of their expertise.

(As an aside: note that Spinellis does not solve the problem by developing a deep understanding of the failure mode, but instead by systematically eliminating the differences between a succeeding build and a failed one.)
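The general idea of systematically eliminating differences can be sketched as a bisection over an ordered list of changes, which is roughly what `git bisect` automates over commits. This is a generic illustration, not Spinellis’s actual procedure: the function name `first_breaking_change` and the `builds_ok` callback (a stand-in for actually running the build) are hypothetical.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def first_breaking_change(changes: Sequence[T],
                          builds_ok: Callable[[Sequence[T]], bool]) -> T:
    """Binary-search for the first change whose inclusion breaks the build.

    Assumes the build succeeds with no changes applied, fails with all of
    them applied, and stays broken once the culprit is included.
    """
    if not changes:
        raise ValueError("need at least one change to bisect")
    lo, hi = 0, len(changes)  # invariant: changes[:lo] builds, changes[:hi] fails
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if builds_ok(changes[:mid]):
            lo = mid
        else:
            hi = mid
    return changes[hi - 1]


# Hypothetical differences between the working and the failing project.
differences = ["bump SDK", "rename target", "new build phase",
               "update lockfile", "change signing config"]


def fake_build(applied: Sequence[str]) -> bool:
    """Stand-in for a real build: pretend 'new build phase' is the culprit."""
    return "new build phase" not in applied


culprit = first_breaking_change(differences, fake_build)
```

The search needs only about log2(n) builds for n differences, and at no point does it require understanding why the culprit breaks anything.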

In the book Designing Engineers, Louis Bucciarelli notes that Murphy’s Law and horror stories told by engineers are symptoms of the dissonance between the certainty of engineering models and the uncertainty of reality. I think the dog meme is another such symptom. It uses humor to help us deal with the fact that, no matter how skilled we become in our profession as software engineers, we will always encounter problems that lie beyond our area of expertise.

To put it another way: the dog meme is a coping mechanism for professionals in dealing with a domain that will always throw problems at them that push them beyond their local knowledge. It doesn’t indicate a lack of professionalism. Instead, it calls attention to the ironies of professionalism in software engineering. Even the best software engineers still get relegated to Googling incomprehensible error messages.

November 24, 2021

How much did that outage cost?

People like to put dollar values on outages. There’s no true answer to the question of how much an outage costs an organization. If your company is transaction-based, you can estimate how many transactions were missed, but there are all sorts of other factors that you could decide to model. (Are those transactions really lost, or will people come back later? Does it impact the public’s perception of the organization? What if your business isn’t transaction-based?). If you ask John Allspaw, he’ll tell you that incidents can provide benefits to an organization in addition to costs.

Putting all of that aside for now, one question around incident cost that I think is interesting is the perceived cost within the organization. How costly does leadership feel that this incident was?

Here’s a proposed approach to try and capture this. After an incident, go to different people in leadership, and ask them the following question:

Imagine I could wave a magic wand, and it would alter history to undo the incident: it will be as if the incident never happened. However, I’ll only do this if you pay me money out of your org’s budget. How much are you willing to pay me to wave the wand?

I think this would be an interesting way to convey to the organization how leadership perceived the incident.

November 22, 2021

“What could we have done differently?”

During incident retrospective meetings, I’ve often heard someone ask: “What could we have done differently?” I don’t like this question, and so I never ask it.

A world that never was

I am a firm believer in the idea that the best way to get better at dealing with incidents is to understand how incidents actually happen. After an incident happens, I focus all of my energies on the understanding aspect, because the window of opportunity for studying the incident closes quickly.

Asking “what could we have done differently?” can’t teach us anything about how the incident happened, because it’s asking us to imagine an alternate reality where events unfolded differently. You can’t get a better understanding of why an incident responder took action X by imagining a world where the responder took action Y.

Instead of asking how it could have unfolded differently, you’ll learn a lot more about the incident if you try to understand the frame of mind of the incident responders. What did they see? What did they know at the time? What was confusing to them?

The future, not the past

I believe the question is well-intended, to help us prevent the incident from recurring. In that case, I think a better question would be something along the lines of: “If we encounter similar symptoms in a future incident, what actions should we take?” This sounds like the same question, but it’s not:

“If we encounter similar symptoms” introduces uncertainty into the exercise – the future incident may look like the last one, but it might be different with the same symptoms! When we ask about doing things differently in the past, it’s all too easy to forget about this uncertainty.

Uncertainty is one of the defining characteristics of an incident. The system is behaving in an unexpected way, and we don’t understand why! When we look back on an incident, we should focus on this uncertainty rather than elide it.

Another reason that imagining future scenarios is better than posing counterfactuals about past scenarios is that our system in the future is different from the one in the past. For example:

- You may have made changes to the system in the wake of the last incident that prevent the incident from recurring in exactly the same way as before, so the question turns out to be moot.
- You may have improved the operability of your system in some way (e.g., added an admin interface so you can make an API call instead of poking at the database), so that you have new actions you can take in the future that you couldn’t take in the past.

While I still probably wouldn’t ask this question (I want to spend all of my energy understanding the incident), I think it’s a much better question, because it gives us practice at anticipating future incidents.

November 21, 2021

OOPS writeups

A couple of people have asked me to share how I structure my OOPS write-ups. Here’s what they look like when I write them. The structure in this post is based on the OOPS template that has evolved over time inside of Netflix, with contributions from current and former members of the CORE team.

My personal outline looks like this (the bold sections are the ones that I include in every writeup)

- Title
- Executive summary
- Background
- Narrative description
  - Prologue
  - The trigger
  - Impact
  - Epilogue
- Contributors/enablers
- Mitigators
- Risks
- Challenges in handling

Title: OOPS-NNN: How we got here

Every OOPS I write up has the same title, “how we got here”. However, the name of the Google doc itself (different from the title) is a one-line summary, for example: “Server groups stuck in ‘deploying’ state”.

Executive summary

I start each write-up with a summary section that’s around three paragraphs. I usually try to capture:

- When it happened
- The impact
- Explanation of the failure mode
- Aspects about this incident that were particularly difficult

On , from to , users were unable to

The failure mode was triggered by an unintended change in that led to .

The issue was made more difficult to diagnose/remediate due to a number of factors:

I’ll sometimes put the trigger in the summary, as in the example above. It’s important not to think of the trigger as the “root cause”. For example, if an incident involves TLS certificates expiring, then the trigger is the passage of time. I talk more about the trigger in the “narrative description” section below.

Background

It’s almost always the case that the reader will need to have some technical knowledge about the system in order to make sense of the incident. I often put in a background section where I provide just enough technical details to help the reader understand the rest of the writeup. Here’s an example background section:

Managed Delivery (MD) supports a GitOps-style workflow. For apps that are on Managed Delivery, engineers can make delivery-related changes to the app by editing a file in their app’s Stash repository called the delivery config.

To support this workflow, Managed Delivery must be able to identify when a new commit has happened to the default branch of a managed app, and read the delivery config associated with that commit.

The initial implementation of this functionality used a custom Spinnaker pipeline for doing these imports. When an application was onboarded to Managed Delivery, newt would create a special pipeline named import-delivery-config. This pipeline was triggered by commits to the default branch, and would execute a custom pipeline stage that would retrieve the delivery config from Stash and push it to keel, the service that powers Managed Delivery.

This solution, while functional, was inelegant: it exposed an implementation detail of Managed Delivery to end-users, and made it more difficult for users to identify import errors. A better solution would be to have keel identify when commits happen to the repositories of managed apps and import the delivery config directly. This solution was implemented recently, and all apps previously using pipelines were automatically migrated to the native git integration. As will be revealed in the narrative, an unexpected interaction involving the native git integration functionality contributed to this OOPS.

Narrative description

The narrative is the heart of the writeup. If I don’t have enough time to do a complete writeup, then I will just do an executive summary and a narrative description, and skip all of the other sections.

Since the narrative description is often quite long (over ten pages, sometimes many more), I break it up into sections and sub-sections. I typically use the following top-level sections.

- Prologue
- The trigger
- Impact
- Epilogue

Prologue

In every OOPS I’ve ever written up, implementation decisions and changes that happen well before the incident play a key role in understanding how the system got into a dangerous state. I use the Prologue section to document these, as well as describing how those decisions were reasonable when they happened.

I break the prologue up into subsections, and I include timeline information in the subsection headers. Here are some examples of prologue subsection headers I’ve used (note: these are from different OOPS writeups).

- New apps with delivery configs, but aren’t on MD (5 months before impact)
- Implementing the git integration (4 months before impact)
- Always using the latest version of a platform library (4 months before impact)
- A successful plugin deployment test (8 days before impact)
- A weekend fix is deployed to staging (4 days before impact)
- Migrating existing apps (3-4 days before impact)
- A dependency update eludes dependency locking (1 day before impact)

I often use foreshadowing in my prologue section writeups. Here are some examples:

It will be several months before keel launches its first Titus Run Job orca task. Until one of those new tasks fails, nobody will know that a query against orca for task status can return a payload that keel is incapable of deserializing.

The scope of the query in step 2 above will eventually interact with another part of the system, which will broaden the blast radius of the operational surprise. But that won’t happen for another five months.

Unknown at the time, this PR introduced two bugs:

1.

2.

Note that the first bug masks the second. The first bug will become apparent as soon as the code is deployed to production, which will happen in three days. The second bug will lie unnoticed for eleven days.

The trigger

The “trigger” section is the shortest one, but I like to have it as a separate section because it acts as what my colleague J. Paul Reed calls a “pivot point”, a crucial moment in the story of the incident. This section should describe how the system transitions into a state where there is actual customer impact. I usually end the trigger section with some text in red that describes the hazardous state that the system is now in.

Here’s an example of a trigger section:

Trigger: a submitted delivery config

On , at , commits a change to their delivery config that populates the artifacts section. With the delivery config now complete, they submit it to Spinnaker, then point their browser at the environments view of the app, where they can observe Spinnaker manage the app’s deployment.

When submits their delivery config, keel performs the following events:

1. receives the delivery config via REST API.
2. deserializes the delivery config from YAML into POJOs.
3. serializes the config into JSON objects.
4. writes the JSON objects to the database.

At this point, keel has entered a bad state: it has written JSON objects into the resource table that it will not be able to deserialize.

Impact

The impact section is the longest part of the narrative: it covers everything from the trigger until the system has returned to a stable state. Like the prologue section, I chunk it into subsections. These act as little episodes to make it easier for the reader to follow what’s happening.

Here are examples of some titles for impact subsections I’ve used:

- User reports access denied on unpin
- Pinning the library back
- Maybe it’s gate?
- Deploying the version with the library pinned back
- Let’s try rolling back staging
- Staging is good, let’s do prod
- Where did the headers go?
- Rollback to main is complete
- We’re stable, but why did it break?

For some incidents, I’ll annotate these headers with the timing, like I did in the prologue (e.g., “45 minutes after impact”).

Because so much of our incident coordination is over Slack these days, my impact section will typically have pasted screenshots of Slack conversation snippets, interspersed with text. I’ll typically write some text that summarizes the interaction, and then paste a screenshot, e.g.:

notes something strange in keel’s gradle.properties: it has multiple version parameters where it should only have one:

[Slack screenshot here]

The impact section is mostly written chronologically. However, because it is chunked into episodic subsections, sometimes it’s not strictly in chronological order. I try to emphasize the flow of the narrative over being completely faithful to the ordering of the events. The subsections often describe activities that are going on in parallel, and so describing the incident in the strict ordering of the events would be too difficult to follow.

Epilogue

I’ll usually have an epilogue section that documents work done in the wake of the incident. I split this into subsections as well. An example of a subsection: Fixing the dependency locking issue

Contributors/enablers

Here’s the guidance in the template for the contributors and enablers section:

Various contributors and enablers create vulnerabilities that remain latent in the system (sometimes for long periods of time). Think of these as things that had to be true in order for the incident to take place, or somehow made it worse.

This section is broken up into subsections, one subsection for each contributor. I typically write these at a very low level of abstraction, whereas my colleague J. Paul Reed writes these at a higher level.

I think it’s useful to call the various contributors out explicitly because it brings home how complex the incident really was.

Here are some example subsection titles:

- Violated assumptions about version strings
- Scope of SQL query
- Beans not scanned at startup after Titus refactor
- Incomplete TitusClusterSpecDeserializer
- Metadata field not populated for PublishedArtifact objects
- Resilience4J annotations and Kotlin suspend functions
- Transient errors immediately before deploying to staging
- Artifact versioning complexity
- Production pinned for several days
- No attempts to deploy to production for several days
- Three large-ish changes landed at about the same time
- Holidays and travel
- Alerts focus on keel errors and resource checks

Mitigators

The guidance we give looks like this:

Which factors helped reduce the impact of this operational surprise?

Like the contributors/enablers section, this is broken up into subsections. Here are some examples of subsection titles:

- RADAR alerts caught several issues in staging
- recognized Titus API refactor as a trigger for an issue in production
- quickly diagnoses artifact metadata issue
- ’s hypothesis about transactions rolling back due to error
- recognized query too broad
- notices spike in actuations

Risks

Here’s the guidance for this section from the template:

Risks are items (technical architecture or coordination/team related) that created danger in the system. Did the incident reveal any new risks or reinforce the danger of any known risks? (Avoid hindsight bias when describing risks.)

The risks section is where I abstract up some of the contributors to identify higher-level patterns. Here are some example risk subsection titles:

- Undesired mass actuation
- Maintaining two similar things in the codebase
- Problems with dynamic configuration that are only detectable at runtime
- Plugins that violate assumptions in the main codebase
- Not deploying to prod for a while

Challenges in handling

Here’s the guidance for this section from the template:

Highlight the obstacles we had to overcome during handling. Was there anything particularly novel, confusing, or otherwise difficult to deal with? How did we figure out what to do? What decisions were made? (Capturing this can be helpful for teaching others how we troubleshoot and improvise).

In particular, were there unproductive threads of action? Capture avenues that people explored and mitigations that were attempted that did not end up being fruitful.

Sometimes it’s not clear what goes into a contributor and what goes into a challenge. You could put all of these into “contributors” and not write this section at all. However, I think it’s useful to call out what explicitly made the incident difficult to handle. Here are some example subsection headers:

- Long time to diagnose and remediate
- Limited signals for making sense of underlying problem
- Error checking task status as red herring

Other sections

The template has some other sections (incident artifacts, follow-up items, timeline and links), but I often don’t include those in my own writeups. I’ll always do a timeline document as input for writing up the OOPS, and I will typically link it for reference, but I don’t expect anybody to read it. I don’t see the OOPS writeup as the right vehicle for tracking follow-up work, so I don’t put a section in it.

October 22, 2021

The danger of hidden functional roles

There’s a collection of friends that I have a standing videochat with every couple of weeks. We had been meeting at 8am, but several people developed conflicts at that time, including me. I have a teenager that starts school at 8am, and I’m responsible for getting them to school in the morning (I like to leave the house around 7:40am), which prevented me from participating.

As a group, we decided to reschedule the chat to 7am. This works well for me, because I get up at 6. Today was the first day meeting at the new scheduled time. I got up as I normally do, and was sure to be quiet so as not to wake my wife, Stacy; she sleeps later than I do, but she gets up early enough to rouse our kids for school. I even closed the bedroom door so that any noise I made from the videochat wouldn’t disturb her.

I was on the videochat, taking part in the conversation in hushed tones, when I looked over at the time. I saw it was 7:25am, which is about fifteen minutes before I start getting ready to leave the house. Usually, the rest of the household is up, showering, eating breakfast. But I hadn’t heard a peep from anyone. I went upstairs to discover that nobody else had gotten up yet.

It turns out that my typical morning routine was acting as a natural alarm clock for Stacy. My alarm goes off at 6am every weekday, and I get up, but Stacy stays in bed. However, the noises from my normal morning routine are what rouse her, typically around 7am. Today, I was careful to be very quiet, and so she didn’t wake up. I didn’t know that I was functioning as an alarm clock for her! That’s why I was careful to be quiet, and why I didn’t even think to mention the new videochat time to her.

I suspect this failure mode is more common than we realize: there is a process inside a system, and over time the process comes to fulfill some unintended, ancillary functional role, and there are people who participate in this process who aren’t even aware of this function.

As an example, consider Chaos Monkey. Chaos Monkey’s intended function is to ensure that engineers design their services to withstand a virtual machine instance failing unexpectedly, by increasing their exposure to this failure mode. But Chaos Monkey also has the unintended effect of recycling instances. For teams that deploy very infrequently, their service might exhibit problems with long-lived instances that they never notice because Chaos Monkey tends to terminate instances before they hit those problems. Now imagine declaring an extended period of time where, in the interest of reducing risk(!), no new code is deployed, and Chaos Monkey is disabled.
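To make the recycling effect concrete, here is a toy simulation in Python. It is a sketch under stated assumptions, not a description of how Chaos Monkey actually schedules terminations: the function names, the per-day termination probability, and the 30-day age at which a hypothetical latent bug appears are all invented for illustration.

```python
import random

# Hypothetical: a latent bug (say, a slow leak) only bites once an
# instance has been up for this many days.
LATENT_BUG_AGE_DAYS = 30


def oldest_instance_age(days, kill_probability_per_day, seed=0):
    """Return the greatest age, in days, that one instance slot reaches.

    Each day the running instance ages by one day, then is terminated
    (and replaced by a fresh one) with the given probability.
    """
    rng = random.Random(seed)
    age = 0
    oldest = 0
    for _ in range(days):
        age += 1
        oldest = max(oldest, age)
        if rng.random() < kill_probability_per_day:
            age = 0  # terminated; the replacement starts from zero
    return oldest


def hits_latent_bug(days, kill_probability_per_day, seed=0):
    """True if any instance lives long enough to trigger the latent bug."""
    oldest = oldest_instance_age(days, kill_probability_per_day, seed)
    return oldest >= LATENT_BUG_AGE_DAYS


# A 90-day deploy freeze with terminations disabled: the single instance
# simply keeps aging, so it is guaranteed to cross the threshold.
print(hits_latent_bug(days=90, kill_probability_per_day=0.0))  # True
```

With a nonzero termination probability, instances in this model are usually replaced well before the threshold, so the bug stays hidden. Setting the probability to zero is what exposes it.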

When you turn something off, you never know what might break. In some cases, nobody in the system knows.

October 20, 2021

Plus c’est la même chose, plus ça change

I’m re-reading David Woods’s paper titled The theory of graceful extensibility: basic rules that govern adaptive systems. The paper proposes a theory to explain how certain types of systems are able to adapt over and over again to changes in their environment. He calls this phenomenon sustained adaptability, which he contrasts with systems that can initially adapt to an environment but later collapse when some feature of the environment changes and they fail to adapt to the new change.

Woods outlines six requirements that any explanatory theory of sustained adaptability must have. Here’s the fourth one (emphasis in the original):

Fourth, a candidate theory needs to provide a positive means for a unit at any scale to adjust how it adapts in the pursuit of improved fitness (how it is well matched to its environment), as changes and challenges continue apace. And this capability must be centered on the limits and perspective of that unit at that scale.

The phrase “adjust how it adapts” really struck me. Since adaptation is a type of change, this is referring to a second-order change process: these adaptive units have the ability to change the mechanism by which they change themselves! This notion reminded me of Chris Argyris’s idea of double-loop learning.

Woods’s goal is to determine what properties a system must have, what type of architecture it needs, in order to achieve this second-order change process. He outlines in the paper that any such system must be a layered architecture of units that can adapt themselves and coordinate with each other, which he calls a tangled, layered network.

Woods believes there are properties that are fundamental to systems that exhibit sustained adaptability, which implies that these fundamental properties don’t change! A tangled, layered network may reconfigure itself in all sorts of different ways over time, but it must still be tangled and layered (and maintain other properties as well).

The more such systems stay the same, the more they change.

October 3, 2021

Adapting to a crunch: the Mask Match story

I just got back from Strange Loop, and my favorite talk was Tech When the Sky is Falling: Tools for Crisis Response by Emma Ferguson and Colin Schimmelfing. I’m going to use this talk to illustrate one of the ideas in David Woods’s theory of graceful extensibility. The idea is that a system needs to deploy, mobilize, or generate capacity when it is at risk of saturation.

My silly doodle of the speakers

Back in March 2020, frontline hospital workers dealing with COVID-19 patients were running short on N95 face masks. Hospitals simply didn’t have enough masks to supply their workers. This shortage of masks is a great example of what Woods calls a crunch, where a system runs short on some resource that it needs. When a system is crunched like this, it needs to adapt. It has to make some sort of change in order to get more of that resource so that it can function properly.

Woods lists three methods for getting more of a resource. If you’ve prepared in advance by stockpiling resources, you can deploy those stockpiles. If you don’t have those extra resources on hand, but your larger network has resources to spare, you can mobilize your network to access those resources. Finally, if you can’t tap into your network to get those resources, your only option is to generate the resources you need. In order to generate resources, you need access to raw materials, and then you need to do work to transform those raw materials into the right resources.
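The order in which these three options are tried can be sketched as a small decision function. This is only my illustration of the paragraph above, not anything from Woods’s paper: the names `Response` and `choose_response`, the `network_reachable` flag, and the idea of counting resources as plain integers are all assumptions made for the sketch.

```python
from enum import Enum


class Response(Enum):
    DEPLOY = "deploy"      # use what you stockpiled in advance
    MOBILIZE = "mobilize"  # pull spare resources from your network
    GENERATE = "generate"  # build the resource from raw materials


def choose_response(needed, stockpile, network_spare, network_reachable=True):
    """Pick a response to a crunch, trying the three options in order."""
    if stockpile >= needed:
        return Response.DEPLOY
    # Assumption: the network only has to cover what the stockpile cannot.
    shortfall = needed - stockpile
    if network_reachable and network_spare >= shortfall:
        return Response.MOBILIZE
    return Response.GENERATE


# Hypothetical numbers: the stockpile is too small, but the network has spare.
print(choose_response(needed=100, stockpile=20, network_spare=500).name)
# MOBILIZE

# Same numbers, but the network cannot be tapped.
print(choose_response(needed=100, stockpile=20, network_spare=500,
                      network_reachable=False).name)
# GENERATE
```

The `network_reachable` flag matters because spare resources in the network only help if there is some way to tap into them.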

In the case of the mask shortages, the hospitals did not have sufficient stockpiles of N95 masks on hand, so deploying wasn’t an option. It turns out that there were many American households that happened to have N95 masks sitting in storage, and many of those households were willing to donate these unused masks to healthcare workers. In theory, hospitals could mobilize this network of volunteers in order to get these masks to the frontline workers.

There was a problem, though: hospital administrators refused to accept donated N95 masks because of liability concerns. So, this wasn’t something the hospitals were going to do.

Workers wanted masks, and people wanted to donate, but hospital admins wouldn’t let them

Fortunately, there was a loophole: frontline workers could bring in their own masks. Now, the problem to be solved was: how do you get masks from donors who had masks to the workers who wanted them?

Emma and Colin needed to generate a new capability: a mechanism for matching up the donors with the healthcare workers. The raw materials that they initially used to generate this capability were Google Sheets and Gmail for coordinating among the volunteers.

And it worked! However, they quickly ran into a new risk of saturation. Google Sheets has a limit of 50 concurrent editors, and Gmail limits an email account to a maximum of 500 emails per day. And so, once again, the team had to generate a new capability that would scale beyond what Google Sheets and Gmail were capable of. They ended up building a system called Mask Match, by writing a Flask app that they deployed on Heroku, and using Mailgun for sending the emails.

My favorite part of this talk was when Emma Ferguson mentioned that they originally just wanted to pay Google in order to get the Google Sheets and Gmail limits increased (their GoFundMe campaign was quite successful, so getting access to money wasn’t a problem for them). However, they couldn’t figure out how to actually pay Google for a limit increase! This is a wonderful example of what Woods calls brittleness, where a system is unable to extend itself when it reaches its limits. Google is great at building robust systems, but their ethos of removing humans from the loop means that it’s more difficult for consumers of Google services to adapt them to unexpected, emergency scenarios.