Oxford University Press's Blog

November 28, 2017

Place of the Year nominee spotlight: Catalonia

The Catalan independence movement was frequently in the news in 2017, earning Catalonia its place among the nominees for Oxford University Press’s Place of the Year. While tensions seemed to come to a head this year, the independence movement has a long history of clashes with the Spanish government, beginning with the separatist movements of the mid-19th century.

In this excerpt from Geopolitics: A Very Short Introduction, Klaus Dodds discusses the regional sense of identity among communities including the Catalans and Basques in Spain and explains how this sense of identity interacts with Spanish attempts at nation-building.

If regional expressions of identity and purpose complicate the relationship between political entities and expressions of national identity, subnational groupings seeking independence or greater autonomy from a central authority also question any simple assumptions that identities are territorially bounded. Countries such as Japan and Iceland, which are virtually ethnically homogeneous, have had less experience of subnational groupings challenging territorial legitimacy and associated claims to national identity. Within Europe, communities such as the Catalan community in Spain and the Walloons in Belgium continue to provide reminders that expressions of national unity and purpose are circumscribed and sometimes violently contested by other groupings that resent claims to a national identity or vision. Nation building is a dynamic process, and states such as Spain have alternated between trying to repress and trying to accommodate competing demands for particular territorial units and the representations of identity within them. Over the last 40 years, Spanish governments based in Madrid have granted further autonomy to the Catalan and Basque communities, at the same time as military officials have been quoted as noting that the country would never allow those regions to break away from Spain.

Statue of Felipe III and Casa de la Panadería, Plaza Mayor, Madrid, Spain by Carlos Delgado. CC BY-SA 3.0 via Wikimedia Commons.

This apparent determination to hold on to those territories has in part provoked groups such as ETA (Euskadi Ta Askatasuna, or ‘Basque Homeland and Freedom’ in English) to pursue terror campaigns that have in the past included bombings and attacks on people and property in the Basque region and in major cities such as Madrid. Created in July 1959, ETA sought to promote Basque nationalism alongside an anti-colonial message that called for an end to Spain’s occupation. The Spanish leader General Franco was a fierce opponent and used paramilitary groups to try to crush ETA. This proved unsuccessful, and ETA continued to operate after his death in 1975, notwithstanding various attempts to secure a ceasefire in the 1990s. Most significantly, the group was initially blamed for the Madrid bombings of 11 March 2004, which killed nearly 200 people, although Islamic militant groups rather than ETA were the perpetrators of the attacks (called ‘11-M’ in Spain). The then People’s Party government led by Prime Minister Jose Aznar, who had approved the deployment of Spanish troops to Iraq, was heavily defeated at the national election three days later. Interestingly, a national government haunted by low popularity had attempted to blame an organization operating within Spain for a bombing that many believed to be a direct consequence of Spain’s willingness to support the War on Terror.

While the challenge to the Spanish state posed by subregional nationalisms remains, the use of terror probably receded as a consequence of the March 2004 attacks on Madrid. As with other regional movements in Catalonia and Galicia, groups like ETA play a part in mobilizing narratives of identity that run counter to national stories about Spain and Spanish identities. Unsurprisingly, the separatists either target property and symbols emblematic of the Spanish state and its ‘colonial occupation’ or vigorously promote practices and expressions of difference such as languages, regional flags, and maps.

Featured image credit: “independence-of-catalonia-flag-spain” by lexreusois. CC0 via Pixabay.

The post Place of the Year nominee spotlight: Catalonia appeared first on OUPblog.

Modernizing classical physics: an oxymoron?

Open most textbooks titled “Modern Physics” and you will see chapters on all the usual suspects: special relativity, quantum mechanics, atomic physics, nuclear physics, solid state physics, particle physics and astrophysics. This is the established canon of modern physics. Notably missing from this list are modern topics in dynamics that most physics majors use in their careers: nonlinearity, chaos, network theory, econophysics, game theory, neural nets and curved geometry, among many others. These topics are at the forefront of the physics that drives high-tech businesses and start-ups, which is where almost half of all physicists work. However, this modern reality of the profession has not (yet) filtered down to the undergraduate curriculum, although the community appears to be taking steps in that direction.

The choice of topics we teach our physics students has very high inertia, and physics as a field has greater inertia than most. Most high school students today routinely do genetic engineering in their biology labs and make bacteria glow green, while the students in the physics labs down the hall are dropping lead weights and finding differences of squares in almost the same way Galileo did in 1610. It can be argued that undergraduate physics majors earn their bachelor’s degrees having learned topics that were last researched seriously about a hundred years ago. It is long past time for the physics curriculum to catch up.

There are two general reasons why the physics curriculum lags behind most other disciplines. The first has to do with the (false) expectation that physics majors will become academics. If we are going to teach students advanced physics in graduate school, then there is no better time to build a foundation for it than when they are undergraduates. We teach slowly and methodically, brick by brick, so the students have all the tools they need for graduate school in physics. The problem is that many undergraduate physics majors do not go on to physics graduate school, with 40% entering the workforce after their undergraduate degree. For those who do go to graduate school, a sizable fraction enter graduate programs other than in physics. For all of these students, the undergraduate curriculum has stranded them with hundred-year-old knowledge.


The second reason for the lag in upgrading the physics curriculum relates to the (false) expectation that advanced topics are too conceptually challenging for undergraduates and require mathematical methods that undergraduates have not yet mastered. Yet students are hungry to learn the latest physics and willing to grapple with the concepts, going to Google or to Wikipedia in an instant to find out more. Fortunately, many of the advanced topics that spark their interest, like economic dynamics or game theory or network dynamics, have strong phenomenological aspects that can be understood intuitively through phase space portraits without the need for deep math. The students learn the concepts quickly, and can explore the systems with simple computer codes and interactive websites, like Wolfram Alpha, that physics undergraduates are handling with ever increasing facility.

One example of a central concept that needs updating is the simple harmonic oscillator. It has been argued that most dynamics can be reduced to a simple harmonic oscillator (SHO) because any smooth potential can be approximated by a quadratic function near its energy minimum. But the SHO is actually the most pathological of all oscillators: its frequency does not depend on its amplitude. While this is a great asset for clocks, it is such a special case that it skews a student’s intuition about real-world oscillators, for which anharmonic effects are the rule rather than the exception. Anharmonic oscillators break frequency degeneracy, opening the door to important topics like Hamiltonian chaos. Going further, autonomous oscillators, like the van der Pol oscillator, provide the paradigmatic foundation for a broad range of modern topics like synchronization, business cycles, neural pulses and social network dynamics.
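Both points can be seen with a few lines of code. The sketch below assumes the plane pendulum as the example of an anharmonic oscillator (the post does not name one): its exact period, computed here with the arithmetic-geometric mean (AGM), grows with amplitude, whereas an SHO’s period would stay fixed. The second function integrates the van der Pol equation x'' - mu(1 - x^2)x' + x = 0 with a hand-written fourth-order Runge-Kutta step; mu = 1, the step size and the run length are illustrative choices, not values from the post.

```python
import math

def pendulum_period(theta0, omega0=1.0):
    """Exact period of a plane pendulum released from rest at amplitude theta0
    (radians), with small-amplitude angular frequency omega0.
    Uses T = 2*pi / (omega0 * AGM(1, cos(theta0 / 2)))."""
    a, b = 1.0, math.cos(theta0 / 2)
    for _ in range(40):  # the AGM converges quadratically; 40 rounds is ample
        a, b = (a + b) / 2, math.sqrt(a * b)
    return 2 * math.pi / (omega0 * a)

def van_der_pol_amplitude(x0, mu=1.0, dt=0.01, t_end=100.0):
    """Integrate x'' - mu*(1 - x^2)*x' + x = 0 from rest at x0 with RK4 and
    return the peak |x| seen in the second half of the run (after transients)."""
    def deriv(x, v):
        return v, mu * (1 - x * x) * v - x

    x, v, peak = x0, 0.0, 0.0
    steps = int(t_end / dt)
    for i in range(steps):
        k1x, k1v = deriv(x, v)
        k2x, k2v = deriv(x + 0.5 * dt * k1x, v + 0.5 * dt * k1v)
        k3x, k3v = deriv(x + 0.5 * dt * k2x, v + 0.5 * dt * k2v)
        k4x, k4v = deriv(x + dt * k3x, v + dt * k3v)
        x += dt * (k1x + 2 * k2x + 2 * k3x + k4x) / 6
        v += dt * (k1v + 2 * k2v + 2 * k3v + k4v) / 6
        if i >= steps // 2:  # ignore the initial transient
            peak = max(peak, abs(x))
    return peak

for deg in (1, 45, 90, 150):
    ratio = pendulum_period(math.radians(deg)) / (2 * math.pi)
    print(f"amplitude {deg:3d} deg: period / SHO period = {ratio:.4f}")

for x0 in (0.1, 4.0):
    print(f"van der Pol from x0 = {x0}: settles to amplitude {van_der_pol_amplitude(x0):.3f}")
```

The pendulum’s period ratio climbs from essentially 1 at small amplitude to about 1.18 at 90 degrees, while the van der Pol runs started far inside and far outside the cycle settle to the same amplitude of roughly 2: a limit cycle set by the system itself rather than by its initial conditions.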

Therefore, the time has come to bring the undergraduate physics curriculum into the 21st century. By relaxing our insistence that every student know how to calculate difficult Lagrangians or Poisson brackets (Lagrange and Poisson were at their peak about 200 years ago), there is plenty of room and time in the undergraduate curriculum to introduce them to truly modern dynamics. This task will be helped in part by burgeoning web resources and the increasing breadth of knowledge that students bring with them. It also will be helped by a new crop of textbooks that adopt a modern view of the purpose of physics in the modern world.

The post Modernizing classical physics: an oxymoron? appeared first on OUPblog.

November 27, 2017

What’s wrong with electric cars?

Recently, we’ve heard that Volvo are abandoning the internal combustion engine, and that both the United Kingdom and France will ban sales of new petrol and diesel cars from 2040. Other countries, such as China, are said to be considering similar mandates.

All cars use stored energy to overcome air resistance at higher speeds and rolling resistance at lower speeds. Energy is also needed for acceleration. The advantage of the electric car is that it uses the energy stored in its battery efficiently, with minimal losses. By contrast, a petrol- or diesel-powered car uses only about 20% of the chemical energy stored in its fuel. The disadvantage is that the energy density (the energy that can be stored for a given weight) of even the best batteries is 50 times lower than that of gasoline. Gasoline makes up less than 5% of the total weight of a car, while the battery makes up about 25% of the weight of a Tesla electric car. However, the extra weight of the battery is more than offset by the much higher efficiency of the electric motor compared with a petrol or diesel engine. The problem is filling them up.

Nobody likes spending time in petrol stations, but thanks to the high energy density of petrol and diesel fuel, it doesn’t take very long. I filled up my car with 12 gallons of petrol in 75 seconds. In power terms, that’s 20 MW, equivalent to the electrical consumption of 10,000 average homes each consuming 2 kW. Taking account of the greater efficiency of the electric car would lower the equivalent electrical power to about 4.5 MW, or 2,250 homes.
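The fill-up arithmetic can be checked with a short sketch. It assumes US gallons (3.785 litres) and a petrol energy density of about 34.2 MJ per litre, neither of which the post states; the 20% engine efficiency and 2 kW per home come from the post, while the roughly 90% electric drivetrain efficiency is an assumption, chosen because 20 MW × 0.20/0.90 lands close to the post’s 4.5 MW.

```python
US_GALLON_L = 3.785       # litres per US gallon (assumed unit; the post doesn't say)
PETROL_MJ_PER_L = 34.2    # assumed typical energy density of petrol, MJ per litre
HOME_KW = 2.0             # average household draw, from the post

def refuel_power_w(gallons, seconds):
    """Average chemical power flowing through the pump nozzle, in watts."""
    joules = gallons * US_GALLON_L * PETROL_MJ_PER_L * 1e6
    return joules / seconds

def electric_equivalent_w(fuel_power_w, ice_eff=0.20, ev_eff=0.90):
    """Electrical power that delivers the same useful energy.
    ice_eff (~20%) is from the post; ev_eff (~90%) is an assumption."""
    return fuel_power_w * ice_eff / ev_eff

p_fuel = refuel_power_w(12, 75)
p_elec = electric_equivalent_w(p_fuel)
print(f"petrol: {p_fuel / 1e6:.1f} MW, about {p_fuel / (HOME_KW * 1e3):,.0f} homes")
print(f"electric equivalent: {p_elec / 1e6:.1f} MW, about {p_elec / (HOME_KW * 1e3):,.0f} homes")
```

With these assumptions the pump delivers roughly 21 MW and the electric equivalent comes out near 4.6 MW, in line with the post’s rounded figures.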

Image credit: Tile by MabelAmber. Public domain via Pixabay.

Or we could look at it in terms of battery sizes. The new Tesla Model S has a 100 kWh battery, the much more modest Chevy Bolt a 60 kWh one. Filling them up from a home charging point limited to a few kW will take all night. Charging from a fast charging point will be limited by the battery’s ability to accept charge: it takes an hour for a lithium-ion battery to accept a full charge if it is to last a few years and be recharged many times. Do you really want to hang around a filling station that long?
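These charging times follow from dividing battery capacity by charging power, with the battery’s own acceptance limit as a floor. A minimal sketch: the 7 kW home charger stands in for the post’s “a few kW” and the 350 kW fast charger is a hypothetical rating; the one-hour floor is the post’s figure for lithium-ion battery life.

```python
def charge_hours(capacity_kwh, charger_kw, min_hours=0.0):
    """Hours to fill an empty battery at constant charger power.
    min_hours models a battery-imposed limit on how fast it may be charged."""
    if charger_kw <= 0:
        raise ValueError("charger power must be positive")
    return max(capacity_kwh / charger_kw, min_hours)

HOME_KW = 7.0           # assumed home charging point ("a few kW")
FAST_KW = 350.0         # hypothetical fast charger, faster than the battery can accept
BATTERY_LIMIT_H = 1.0   # about an hour for a full charge, per the post

for name, kwh in [("Tesla Model S", 100), ("Chevy Bolt", 60)]:
    home = charge_hours(kwh, HOME_KW)
    fast = charge_hours(kwh, FAST_KW, BATTERY_LIMIT_H)
    print(f"{name}: {home:.1f} h at home, {fast:.1f} h at a fast charger")
```

However powerful the charger, the battery limit rather than the charger sets the one-hour floor.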

Charging at home will mean that cars have to be parked in garages. That’s fine for the wealthy with space to spare in their three-car garage. I don’t see this happening in most of England. My brother is the only person in his neighborhood of a few hundred houses who parks his car in the garage. It’s a small car by today’s standards and he has only centimeters to spare on either side!

The extra electrical energy needed if all vehicles were converted from gasoline or diesel can be estimated from the energy content of the oil consumed, divided by about four to account for the greater efficiency of the electric car compared with the petrol or diesel car. In most developed countries this would imply a need to generate 25% more electrical energy. However, the electricity companies will also have to deliver substantially more power to homes. Fully charging a car overnight requires up to 10 kW, a major increase on average household consumption. Fast charging stations also present a challenge for the electricity companies: the equivalent of the local petrol filling station will require 1-2 MW of electrical power, about the same as a small shopping mall.
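The scaling in this paragraph can be written down directly. With the post’s factor of four, a 25% increase corresponds to road-fuel energy roughly equal to today’s electricity generation, so the sketch uses that as a hypothetical input (in arbitrary but consistent units). The ten-hour overnight window, the 10 to 20 charging bays and the 100 kW per bay (what filling a 100 kWh battery in the post’s one hour implies) are likewise assumptions for illustration.

```python
def extra_generation_fraction(road_fuel_energy, current_generation, efficiency_ratio=4.0):
    """Fractional increase in electricity generation if all road fuel were
    replaced by electricity; efficiency_ratio (~4) is the post's figure."""
    return (road_fuel_energy / efficiency_ratio) / current_generation

def overnight_charger_kw(battery_kwh, window_hours):
    """Average power needed to refill a battery within an overnight window."""
    return battery_kwh / window_hours

def station_load_mw(bays, kw_per_bay):
    """Peak draw of a fast-charging station with every bay in use."""
    return bays * kw_per_bay / 1000.0

# Hypothetical inputs: road-fuel energy equal to today's generation (same units).
print(f"extra generation: {extra_generation_fraction(1.0, 1.0):.0%}")
print(f"overnight charger: {overnight_charger_kw(100, 10):.0f} kW")
print(f"station with 10-20 bays at 100 kW: "
      f"{station_load_mw(10, 100):.0f}-{station_load_mw(20, 100):.0f} MW")
```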

If the cars are charged at home, don’t expect to use solar power to charge them, unless you only drive at night and leave the car in the garage during the day! Given that most people will want to charge their cars at night (like their cell phones!), this will smooth out electrical demand over the course of the day. Constant electrical power demand is best met by nuclear, coal, hydroelectricity, or combined cycle natural gas. In fact, if coal were used to generate the extra electricity required by electric cars, there would be no reduction in CO2 emissions compared with petrol or diesel cars.

So even if the cost and the range problem are solved, the time taken to fill up an electric car still makes it unattractive compared to a hybrid or a conventional vehicle.

Featured image: Electric car by geralt. Public domain via Pixabay.

The post What’s wrong with electric cars? appeared first on OUPblog.

Where is scientific publishing heading?

As researchers, we are unlikely to spend much time reflecting on one of the often-forgotten pillars of science: scientific publishing. Naturally, our focus leans more towards traditional academic activities including teaching, mentoring graduate students and post docs, and the next exciting experiment that will allow us to advance our understanding. Despite our daily dependence on the research produced by our colleagues and contemporaries in scientific papers, and an equal dependence on journals to present the results of our own research, it is uncomfortable to think that we as scientists have lost control of the majority of this infrastructure.

Traditionally, scientific publishing was controlled by learned societies such as the Royal Society and the National Academy of Sciences (in the USA), alongside publishers associated with key universities, Oxford University Press being one. However, much as large multinational companies such as Roche, Sigma-Aldrich, and Agilent have evolved to dominate the markets for chemicals, research equipment, and various researcher services, the publication of scientific results by commercial publishers has become a highly profitable endeavour. The three largest publishers (Elsevier, Springer Nature, and Wiley-Blackwell) now account for around half of the ten-billion-pound scientific publishing industry, their dominance following years of consolidation. With profit margins outdoing even those of tech giants Apple and Google, it seems incredible that we as scientists contribute significantly to the success of these journals, largely for free!

However, the scientific publication industry is undergoing dramatic changes. The number of journals competing for the best papers continues to increase, as evidenced by the large number of invitations we receive. While many journals retain the traditional format, relying on library subscriptions despite ever tighter library budgets, a number of new journals are opting for the open access route. In this model, the authors pay the fees. Following acceptance (or a pre-determined embargo period), their paper is then made freely available to all.

The rapid development of open access journals, including PLOS ONE, Nature’s Scientific Reports, and BioMed Central’s Genome Biology, to name just a few, is supported by many funders who now require research papers to be open access. Furthermore, the European University Association recently published a document recommending that all member institutions adopt policies ensuring a reduction in publication costs, that authors retain all publication rights, and that all research papers are open access.

With many publishers offering ‘hybrid’ journals, a combination of open access papers and traditional library subscriptions, it could soon become difficult for these journals to maintain income from library subscriptions as more and more papers are published open access. Although fully open access journals can operate at lower costs, article processing fees are unlikely to be able to fund journals run by editorial teams, who not only handle papers but also produce much of the front matter, including perspectives, book reviews, and research highlights. If the industry does eventually become entirely open access, we are likely to lose the news coverage and perspectives provided by many of the high-end journals.

Another development to consider is the emergence of so-called predatory journals. Several different scenarios can result: some fake journals solicit submissions, take the article processing fee, and never publish the paper; others fake the peer review process, publishing without any kind of quality control. The severity of this problem was well illustrated by a study in Science earlier this year, in which the authors created a fictitious scientist, complete with a falsified CV, and applied in that name to join several editorial boards as an editor, with success.

This example shows what a financial opportunity scientific publishing has become, so we as scientists need to be careful where we submit our papers. There are some key questions we need to ask:

Are the members of the Editorial Board well-respected scientists?

Does the journal have a clear editorial policy?

Are publication fees clearly stated?

Is the journal indexed, in PubMed for example?

Does the journal publish papers on similar subjects to your own?

One last, vital question to ask: who is publishing the journal? It is now more important than ever that we support publications driven by not-for-profit organisations, such as learned societies, academies, and others, that have clear objectives for supporting the scientific community. We as scientists benefit from these society-run journals. Why publish in a journal whose profits go to a board of investors, when they could instead be put towards a scholarship for your next post doc, or a grant for a PhD student to attend an international conference? FEMS Yeast Research belongs to this last category, supporting various conferences and research fellowships through the work of the Federation of European Microbiological Societies (FEMS).

Finally, I’ll return to my original question: where is scientific publishing heading? Niels Bohr said “prediction is very difficult, especially about the future”, and of course it is impossible for me to know with any certainty. However, I do think that the traditional library subscription model will eventually disappear, and perhaps this will be good for science and society as a whole. Either way, I encourage all editors, reviewers, authors, and readers to share their thoughts on journal policies and to engage with these kinds of discussions in the wider community.

Featured image credit: Office by Free-Photos. CC0 public domain via Pixabay.

The post Where is scientific publishing heading? appeared first on OUPblog.

Many rivers to cross – can the Ganges be saved?

The Ganges is known as a wondrous river of legend and history whose epithets in Sanskrit texts include “eternally pure”, “a light amid the darkness of ignorance”, and “daughter of the Lord of Himalaya.” One hymn calls it the “sublime wine of immortality.” Yet the river whose waters and fertile silt have supported the densest populations of humans on earth for millennia is now under threat. Where its waters are not diverted for irrigation and hydro-electricity, they are befouled by sewage and poisoned by pesticides, industrial waste, carcinogenic heavy metals and bacterial genes that make lethal infections resistant to modern antibiotics. When I first wrote this sentence in the middle of the dry season, I was looking at a front-page story in The Hindu newspaper headlined “Is the mighty Ganga drying up?”. The conclusions of official measurements, academic papers, and the evidence of one’s own eyes are alarming for anyone who cares about human health or the environment.

After decades of false starts, scandals, and wasted money under previous governments, Indian Prime Minister Narendra Modi launched a campaign in 2014 to clean the Ganges and save it for future generations. More than two years on, many of those who thought he would achieve something are bitterly disappointed by the lack of progress. It will in any case be a huge and costly task. But it is not impossible.

Find out more about the Ganges, its problems, and what can be done to save it with our interactive map.

Background image credit: Physical Features of India by A happy ThingLink User.

Featured image credit: India Varanasi Ganges Boats by TonW. Public domain via Pixabay.

The post Many rivers to cross – can the Ganges be saved? appeared first on OUPblog.

November 26, 2017

The building blocks of ornithology

Museum collections are dominated by vast collections of natural history specimens: pinned insects in glass-topped drawers, shells, plants pressed on herbarium sheets, and so on. Most of these collections were never intended for display, but they did important work in documenting the variety and distribution of nature. They were also the product of the personal passions and obsessions of enormous numbers of people. Who these people were and how they built their collections are often lost in the mists of time, leaving the specimens rather adrift.

Natural history blossomed as a pastime in the nineteenth century, notably in Britain, Europe and North America, and as people travelled farther afield as part of the machinery of empire and the colonialist impulse. It was a respectable pastime enjoyed by many classes; this was especially important for the middle and upper classes as they had large amounts of time to occupy, but Victorian principles of self-help meant that enjoyment for enjoyment’s sake was not enough: even leisure activities should be morally uplifting.

Social aspects of natural history meant that some pursuits were suitable for some social groups but not others: shell-collecting and flower-collecting/botany were popular with both men and women. Bird collecting (ornithology) was popular with young men, and was encouraged, as it got them outdoors into the fresh air.

Pied Kingfisher from A History of the Birds of Europe. Used with permission.

Bird collecting held some particular challenges beyond the difficulty of shooting specimens, in that birds were relatively difficult to preserve. A reliable preservative, consisting of arsenic mixed with camphor bound in a bar of soap, was discovered in the late 18th century and popularised in the 1820s, after which bird collecting (in the sense of collecting preserved specimens) increased greatly in popularity, although the detailed instructions contained in collecting manuals may have been just as important as the discovery of a preservative.

Mounted specimens with glass eyes could be attractive (although they often weren’t), but they took up a lot of space and were difficult to transport. Most collectors collected ‘study skins’—simply prepared specimens that resembled a bird lying on its back, filled with cotton wool or other similar soft material. Study skins represented a standardised format that could be purchased or exchanged and amassed into collections of similar specimens. Labels containing information on where, when and by whom specimens had been collected were attached to the legs of specimens.

Ornithology was a specimen-based discipline, and the subject was dominated by the quest for reliable empirical information that sought to address two particular questions: what species are there, and where are they found? In the days before the camera and indeed binoculars, specimens were the most reliable evidence of the occurrence of particular birds at particular places, and the maxim ‘what’s hit is history and what is missed is mystery’ was popular among naturalists. The search for globalised, encyclopaedic information involved great numbers of devotees, who were instructed in what to observe and where through a variety of collecting manuals. The standardised methods of natural history meant that anyone could potentially contribute to the great collective endeavour of outlining the distribution of the birds of the world.

Bird skins and eggs were in great demand among collectors, so new markets were created. This resulted in a great machine-like undertaking, involving scientists and naturalists, but also explorers, private travellers, geographers, businessmen, employees, speculators, trappers, missionaries, and untold thousands of farmers, lighthouse keepers, sailors, poorer people and servants who did the bulk of the dirty work of specimen collecting and preparation.

Operating at a time before organised and institutionalised museums, professions and conservation movements, self-motivated individuals came together to participate, debate, and carve out territory for themselves within ‘ornithology’. Seemingly mutually exclusive activities—collecting, conserving, protecting, hunting—were entangled in ways not found today. The rather anonymous specimens in museums are the products of a tremendous variety of relationships, but their fuller story can be pieced together through archives, letters and collections catalogues.

Spoonbill from A History of the Birds of Europe. Used with permission.

Henry Dresser (1838–1915) was one of the leading ornithologists of the mid-to-late 19th and early 20th centuries, yet his name is hardly known today. He produced A History of the Birds of Europe, begun with Richard Sharpe and issued between 1871 and 1882. Dresser had an exciting life: his father, working in London, was engaged in the Baltic and New Brunswick timber trade. Henry was sent to school in Germany and Sweden, and he worked in the timber business in Finland and New Brunswick. Following this, he spent 14 months in Mexico and Texas at the height of the American Civil War. He settled in the timber and iron trades in London, yet in his ‘spare’ time he was one of the leading ornithologists in the world.

Throughout his life, Dresser was a most ambitious natural history collector and ornithologist. He played a leading role in scientific societies at a time when these were being established, as the world’s birds were being discovered and described, and as the bird conservation movement developed. Dresser made extensive collections that formed the basis of over 100 publications, including great illustrated books that combined masterpieces of bird illustration with cutting-edge scientific information. An exploration of his life reveals much about the transformations in 19th and early 20th century ornithology, and the role that private gentlemen naturalists played in a time without institutions or professionals.

Featured image credit: Black Woodpecker from A History of the Birds of Europe. Used with permission.

The post The building blocks of ornithology appeared first on OUPblog.

The problem with a knowledge-based society

In the last few decades, few concepts in Western policy circles have captured the imagination of economists like the ‘knowledge-based economy’ or ‘knowledge economy’. There has been a consensus that Western economies have entered a phase in economic history called the ‘knowledge’ or ‘knowledge-based’ era. The brains of the workforce are thought to be the most important contributor to today’s wealth creation, and economic prosperity is achieved through technological progress, innovation, and knowledge creation. A knowledge-based economy relies primarily on the use of ideas rather than physical abilities, and on the application of technology rather than the transformation of raw materials or the exploitation of cheap labour. Knowledge is used, created, acquired, and transmitted throughout the whole economy, where it becomes a crucial element for the production of goods and services and for economic development in general.

The concept of a knowledge society has a long history. From the 1960s onward, theorists have argued that the future of advanced capitalist countries is defined by the exploitation of knowledge and information. Scholars such as Peter Drucker, Daniel Bell, and Alvin Toffler argued that the knowledge-based revolution was to industrialisation what industrialisation was to the agricultural society. A large body of literature developed, emphasising the importance of knowledge and advanced formal education to fuel a knowledge-based economy.

Rather than employing manual workers, knowledge-based industries employ predominantly what have been called ‘knowledge workers.’ Within the academic literature this refers to workers who can successfully transform and work with knowledge. The so-called knowledge elites are those professionals who can capture, exploit, and control knowledge and who will therefore become increasingly powerful and influential. There is a strong assumption within the knowledge economy discourse that existing work is also becoming more complex and thus more skilled. Higher Education is seen as the crucial developer of the skills and knowledge needed to perform knowledge work.

There have been numerous critiques of the idea of the knowledge-based economy and its assumptions, in particular by sociologists. Some have argued that the knowledge economy concept does not grasp the actual extent to which workers use knowledge in the workplace. Others have stressed that knowledge has not been the exclusive realm of a special professional group. All jobs require knowledge, and there is no clear threshold of knowledge intensity at which an occupation counts as knowledge work. Knowledge has penetrated throughout the economy.


In a three-year study, I investigated the work of lab-based scientists in biotechnology and pharmaceutical companies, software engineers, financial analysts, and press officers. All four occupations are seen as examples of knowledge work, or ‘knowledge occupations’. The findings of the study support scepticism about the knowledge economy discourse. The workers in the four occupations use a wide variety of knowledge, not just the formal and abstract knowledge associated with formal learning. Most use ‘embodied knowledge’, which is practical and context-specific, or ‘encoded knowledge’, such as rules and standards, codified language, and information distributed among members of an organisation. Even scientists do not spend the majority of their day using ‘thinking skills’ that draw on abstract knowledge and deductive reasoning.

The study also found that the skills of graduate workers used at work are not dominated by, and can certainly not be equated with, ‘advanced’ thinking skills. Instead, the workers in the four graduate occupations use a wide range of skills, combining both hard and soft skills (working in tandem). Soft skills are often embedded in hard skills and knowledge. Hard skills and knowledge do not have a privileged status within the four occupations.

One of the perceived characteristics of knowledge workers is that they work with relatively high autonomy and, at a minimum, have the ability and opportunity to innovate products and processes. Although not in itself a skill, job control (the ability to decide how to do one’s work and at what pace) influences how skills are exercised and how much scope exists for skill development. The case studies, which examined only workers’ subjective job control, indicate that most graduate workers perceive their own control to be high. Yet nothing specific about their work seems to require, or show evidence of, high job control.

It is important to realise that the knowledge-based economy is not a neutral descriptive concept. It supports an ideological project that regards the development and application of so-called ‘human’ capital (the productive skills individuals possess) as the answer to persistent productivity and prosperity problems, as well as to issues of distributive justice. It assumes that ongoing technological change is directly linked to a growing importance of technical skills and knowledge (developed through Higher Education). Yet in reality, the skills, knowledge, and abilities required to perform graduate roles are shaped by many other factors, such as organisational and sector-specific practices, job design, and role interpretation.

Featured image credit: People men women graduation by StockSnap. Public domain via Pixabay.

The post The problem with a knowledge-based society appeared first on OUPblog.

The final piece of the puzzle

When a group of people collectively solve a jigsaw puzzle, who gets the credit? The person who puts the final piece in the puzzle? The person who sorted out the edge pieces at the beginning? The person who realised what the picture was of? The person who found the puzzle pieces and suggested trying to put them together? The person who managed the project and kept everyone on track? The whole group?

The Nobel Prize in Physics 2017 was recently awarded to Rainer Weiss, Barry C. Barish, and Kip S. Thorne, “for decisive contributions to the LIGO detector and the observation of gravitational waves”. This has provoked discussion about how Nobel Prizes are awarded. Weiss himself noted that the work that led to the prize involved around a thousand scientists. Martin Rees, the Astronomer Royal, said:

“Of course, LIGO’s success was owed to literally hundreds of dedicated scientists and engineers. The fact that the Nobel committee refuses to make group awards is causing them increasingly frequent problems – and giving a misleading and unfair impression of how a lot of science is actually done.”

There are perhaps two difficult questions here. If a group of people publish a research paper, how do they divide up the credit amongst themselves? And if a breakthrough is a result of a number of papers, by a whole group of people, who gets the acclaim?

The convention in my own subject of pure mathematics is usually that on a paper with multiple authors, the names appear in alphabetical order by last name, regardless of who did the most work, who made the largest contribution, or who is most senior. That, at least, is relatively straightforward – although it does mean for example that when reviewing candidates for a job, it can be hard to identify exactly what someone contributed to their joint publications.

More recently, some mathematicians have started to work in new large-scale online collaborations, and this raises all sorts of questions about how credit is assigned. When the mathematician Tim Gowers first proposed experimenting with such a collaboration, which he called a ‘Polymath’ project, he specified in advance how any resulting research papers would be published. The last of his twelve Polymath rules says:

“Suppose the experiment actually results in something publishable. Even if only a very small number of people contribute the lion’s share of the ideas, the paper will still be submitted under a collective pseudonym with a link to the entire online discussion.”

Since, to everyone’s surprise, the first Polymath project did indeed lead to a research paper, this rule was immediately implemented, and it has continued in this way for subsequent Polymath projects. The discussions that led to the paper are all still available online, on various blogs and wikis, so if someone wants to check an individual’s specific contribution, they can do exactly that – which is not the case for more traditional collaborations.

Within pure mathematics, breakthroughs have mostly been attributed to the individual or small group of people who put the final piece in the jigsaw puzzle. That is perhaps unsurprising. If a person gives a solution to a problem or proves a conjecture, then they should get the credit, shouldn’t they? But mathematical arguments don’t usually exist in isolation: most often one piece of work builds on many previous ideas. Isaac Newton famously said “If I have seen further it is by standing on the shoulders of Giants”. If other mathematicians contributed ideas that were crucial ingredients but that don’t have the glamour of a complete solution to a famous problem, how can they receive appropriate credit for their work?

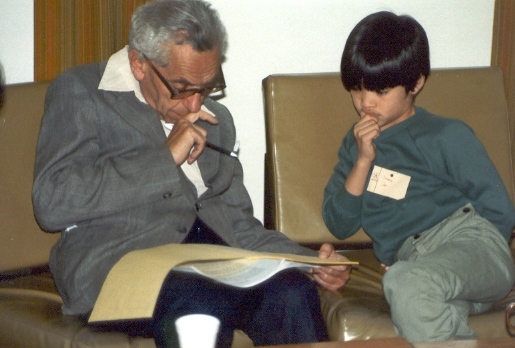

Paul Erdős teaching Terence Tao in 1985, at the University of Adelaide. At the time, Terence was 10 years old. He went on to become one of the greatest mathematicians alive: Terence Tao received the Fields Medal in 2006 and was elected a Fellow of the Royal Society in 2007. Photo by either Billy or Grace Tao. CC BY-SA 2.0 via Wikimedia Commons.

The public nature of the Polymath projects makes it possible to track progress on some problems in a way that has not previously been possible. In 2010, there was a Polymath collaborative project on the ‘Erdős discrepancy problem’, but the project did not reach a solution. The problem was subsequently solved by Terry Tao in 2015. Tao had been one of the participants in the Polymath5 project on the problem, and in his paper he acknowledged the role that Polymath5 had played in his work. He also built on work by Kaisa Matomäki and Maksym Radziwiłł, and a suggestion by Uwe Stroinski that a recent paper of Matomäki, Radziwiłł, and Tao might be linked to the Erdős discrepancy problem. I am not commenting on this example because I think that anyone has behaved badly. On the contrary, Tao was scrupulous about acknowledging all of this in his paper. Rather, the public collaborative aspect of the story has made it easier than usual to trace the journey that eventually led to a solution. Without a doubt it was Tao who put it all together, added his own crucial ideas and insights, and came up with a solution, but it does seem that others’ contributions were key to the breakthrough coming at that particular moment. I suspect that history will record that “the Erdős discrepancy problem was solved by Tao”, without the nuances.

Two of the mathematicians I have mentioned, Tim Gowers and Terry Tao, are winners of the Fields Medal, one of the highest honours to be awarded in mathematics. The Fields Medal is awarded “to recognize outstanding mathematical achievement for existing work and for the promise of future achievement”. I am curious about whether in the future the Fields Medal, the Abel Prize, or any of the other accolades in mathematics might be awarded to a Polymath collaboration that has achieved something extraordinary.

Featured image credit: Pay by geralt. Public domain via Pixabay.

The post The final piece of the puzzle appeared first on OUPblog.

November 25, 2017

Place of the Year nominee spotlight: Puerto Rico [quiz]

In September 2017, two powerful hurricanes devastated Puerto Rico. Two months later, 50% of the island is still without power, and residents report feeling forgotten by recovery efforts. From the controversy over hiring Whitefish, a small Montana-based electrical company, to restore power to the island, to the light shone on the outdated Jones Act, the humanitarian crisis following the hurricanes catapulted Puerto Rico onto the world stage.

How much do you know about this commonwealth? Referencing Puerto Rico: What Everyone Needs to Know®, we’ve put together a quiz to test your knowledge. Take the quiz below, and then vote to let us know which place should be the Place of the Year for 2017.

Featured image credit: “puerto-rico-mountain-rock-wall-flag” by Lenaeriksson. CC0 via Pixabay.

The post Place of the Year nominee spotlight: Puerto Rico [quiz] appeared first on OUPblog.

An overview of common vaccines [slideshow]

Vaccines represent one of the greatest public health advances of the past 100 years. A vaccine is a substance that is given to a person or animal to protect it from a particular pathogen: a bacterium, virus, or other microorganism that can cause disease. The slideshow below, drawn from Kristen A. Feemster’s Vaccines: What Everyone Needs to Know, outlines common child and adolescent vaccines.

Hepatitis B (HepB) recombinant vaccine

DISEASE: Hepatitis B is caused by a virus that is transmitted through blood and other body fluids and can also be passed from mother to infant. The virus causes acute and chronic liver disease. Chronic infection can lead to liver failure, cirrhosis, or cancer (hepatitis B causes 50% of hepatocellular cancers).

BRIEF HISTORY: Before the vaccine was introduced, about 18,000 children under 10 years old were infected each year. After vaccine introduction, only 2,895 cases were reported in 2012. Of infants who become infected, 90% develop chronic infection.

CURRENT ROUTINE SCHEDULE IN THE US: 1st dose: birth, 2nd dose: 1–2 months, 3rd dose: 6–18 months. After birth, the vaccine may be given as part of a combination vaccine (DTaP-HepB-IPV) at 2, 4, and 6 months.

REPORTED ADVERSE EVENTS: Pain or soreness at the injection site, mild fever, headache, fatigue.

VACCINE EFFECTIVENESS: Over 90% protection for infants, children, and adults immunized with the 3-dose series before exposure to the virus. Some people may not respond to the first series and will require a second series.

Image credit: “Hepatitis B virus” by jrvalverde. CC0 Public Domain via Pixabay.

Rotavirus (RV1 and RV5) – Oral live attenuated virus vaccine

DISEASE: Rotavirus is a virus that infects the lining of the intestines and causes diarrhea and sometimes vomiting, which can lead to severe dehydration. It is spread through the fecal-oral route.

BRIEF HISTORY: Before vaccine introduction, 2.7 million children were affected every year, and almost every child had a rotavirus infection by age 5 years. Since 2008, following vaccine introduction, there have been 40,000–50,000 fewer rotavirus hospitalizations per year.

CURRENT ROUTINE SCHEDULE IN THE US: 1st dose: 2 months, 2nd dose: 4 months, 3rd dose: 6 months. RV1 is a 2-dose series; RV5 is a 3-dose series.

REPORTED ADVERSE EVENTS: Vomiting, diarrhea, irritability, fever.

VACCINE EFFECTIVENESS: Approximately nine out of ten vaccinated children are protected from severe rotavirus illness, whereas seven out of ten children will be protected from rotavirus infection of any severity.

Image credit: “Transmission electron micrograph of multiple rotavirus particles” by Dr Graham Beards. CC BY 3.0 via Wikimedia Commons.

Haemophilus influenzae type b conjugate (Hib)

DISEASE: Haemophilus influenzae type b is a bacterium that causes pneumonia, bloodstream infections, meningitis, and infections of the bones and joints.

BRIEF HISTORY: Before vaccine introduction, there were about 20,000 serious (or fatal) infections per year among children under 5 years old. Since vaccine introduction, 25 cases per year were reported in 2003–2010, most of them among unvaccinated or under-vaccinated children.

CURRENT ROUTINE SCHEDULE IN THE US: 1st dose: 2 months, 2nd dose: 4 months, 3rd dose: 6 months, 4th dose: 12–15 months. With some vaccine products the series has only three doses, and the 3rd dose is given at 12–15 months.

REPORTED ADVERSE EVENTS: Pain and soreness at injection site.

VACCINE EFFECTIVENESS: 95–100% effective at preventing serious infections after the primary series.

Image credit: “Haemophilus influenzae bacteria” by Centers for Disease Control and Prevention/Dr. W.A. Clark. Public Domain via Wikimedia Commons.

Inactivated polio vaccine (IPV)

DISEASE: Poliovirus is an enterovirus that first infects the throat and lining of the intestines and then can invade an infected person’s brain and spinal cord, causing paralysis (paralytic poliomyelitis).

BRIEF HISTORY: Before the polio vaccine, there were 13,000–20,000 cases of paralytic polio per year. The United States has been polio-free since 1979; the last imported case was in 1993. Polio has been nearly eradicated worldwide.

CURRENT ROUTINE SCHEDULE IN THE US: 1st dose: 2 months, 2nd dose: 4 months, 3rd dose: 6–18 months, 4th dose: 4–6 years. May be given as part of a combination vaccine (DTaP-HepB-IPV) at 2, 4, and 6 months.

REPORTED ADVERSE EVENTS: Redness and pain at injection site

VACCINE EFFECTIVENESS: More than 90% immune after 2 doses; 99% immune after 3 doses

Image credit: “Transmission electron micrograph of Polioviruses” by Dr Graham Beards. CC BY-SA 4.0 via Wikimedia Commons.

Pneumococcal Conjugate (PCV13)–Conjugate protein vaccine

DISEASE: Streptococcus pneumoniae is a bacterium that causes ear and sinus infections, pneumonia, bloodstream infections, and meningitis, especially in young children and older adults. The current vaccine targets 13 serotypes (of about 90 total serotypes).

BRIEF HISTORY: Before vaccine introduction, there were 17,000 cases of invasive disease (bloodstream infections, meningitis) and 5 million cases of middle ear infection per year among children under 5 years old. Since the introduction of the vaccine, there has been a 99% reduction in invasive disease caused by vaccine serotypes in children under 5 years old and a 76% reduction in disease caused by all types. There have also been significant decreases among older adults (65+ years old) due to herd immunity.

CURRENT ROUTINE SCHEDULE IN THE US: 1st dose: 2 months, 2nd dose: 4 months, 3rd dose: 6 months, 4th dose: 12– 15 months

REPORTED ADVERSE EVENTS: Pain and swelling at the injection site, fever, high fever (>102°F), decreased appetite, irritability

VACCINE EFFECTIVENESS: 97% reduction in invasive disease caused by vaccine types among children. 75 out of 100 adults aged 65 years or older are protected against invasive pneumococcal disease.

Image credit: “Streptococcus pneumoniae” by Janice Haney Carr. Public domain via Wikimedia Commons.

Influenza—IIV and LAIV (IIV—inactivated influenza virus vaccine, LAIV— live attenuated influenza virus vaccine)

DISEASE: Influenza virus causes respiratory infections and pneumonia. Complications include secondary bacterial pneumonia, respiratory failure, and death. Circulating strains of influenza may change each year, which leads to almost yearly changes to the influenza vaccine.

BRIEF HISTORY: Influenza causes yearly epidemics, with activity usually peaking between December and March, and 200,000 hospitalizations per year. Hospitalization rates are highest among young children, infants, and older adults.

CURRENT ROUTINE SCHEDULE IN THE US: 6–23 months: annual vaccination, 1 or 2 doses (IIV only); 2–8 years: annual vaccination, 1 or 2 doses (IIV or LAIV); 9 years and older: annual vaccination, 1 dose only (IIV or LAIV).

REPORTED ADVERSE EVENTS: Redness, soreness, or swelling at the injection site; muscle aches; headache; low-grade fever

VACCINE EFFECTIVENESS: Vaccine effectiveness varies by year. The vaccine generally reduces the risk of influenza by 50–60% when most circulating flu viruses are similar to the vaccine viruses.

Image credit: “influenza virus” by CDC Influenza Laboratory. Public Domain via Wikimedia Commons.

Hepatitis A—Inactivated virus vaccine

DISEASE: Hepatitis A virus causes mild to severe liver disease. Symptoms include fever, stomach pain, jaundice (yellowing of the skin), and nausea. 15% of cases require hospitalization.

BRIEF HISTORY: Before vaccine introduction, there were 30,000–60,000 reported cases per year. Since vaccine introduction, there have been about 1,600–2,500 reported cases per year.

CURRENT ROUTINE SCHEDULE IN THE US: 2-dose series between 12 and 23 months; separate the 2 doses by 6–18 months.

REPORTED ADVERSE EVENTS: Pain, redness, and tenderness at the injection site, headache, mild fever, feeling “out of sorts,” fatigue.

VACCINE EFFECTIVENESS: 68% reduction in hepatitis A hospitalizations since vaccine introduction.

Image credit: “Hepatitis A virus” by CDC/Betty Partin. Public Domain via Wikimedia Commons.

Human papillomavirus (HPV9)—Recombinant vaccine

DISEASE: Human papillomavirus (HPV) is the most common sexually transmitted infection, affecting 80% of men and women at some point in their lives. The majority of new infections occur in 15- to 24-year-olds. Some types of the virus can cause cancers of the anus, cervix, oropharynx, penis, rectum, vagina, and vulva, as well as genital warts. The vaccine protects against 9 HPV types associated with about 85% of cervical cancers.

BRIEF HISTORY: Each year in the United States, HPV causes 30,700 cancers in men and women and over 300,000 new cases of genital warts

CURRENT ROUTINE SCHEDULE IN THE US: 2-dose schedule for adolescents aged 11 or 12 years: 0 and 6–12 months. 3-dose schedule for adolescents who receive their first HPV dose at age 15 years or older: 0, 1–2, and 6 months.

REPORTED ADVERSE EVENTS: Pain, redness, or swelling at the injection site; slight fever. Rare: fainting

VACCINE EFFECTIVENESS: Approximately 70% reduction in prevalence of cancer-causing serotypes observed since vaccine introduction. HPV9 has an estimated potential to prevent 90% of anogenital cancers caused by HPV

Image credit: “Papilloma virus” by National Cancer Institute. Public Domain via Wikimedia Commons.

Featured image credit: “Syringe” by qimono. CC0 Public Domain via Pixabay.

The post An overview of common vaccines [slideshow] appeared first on OUPblog.
