Oxford University Press's Blog

March 1, 2020

Grammar in Wonderland

Lewis Carroll was a mathematically inclined poet who published Alice’s Adventures in Wonderland in 1865 and Through the Looking-Glass in 1872, as well as a number of poems and texts on mathematics and logic. Last summer I saw an outdoor production of Alice in Wonderland, and it reminded me of all the linguistics in the two books. Carroll touches on questions of homophony, meaning, reference, syntax, pragmatics, and ambiguity in Alice’s various interactions and imaginings in her Wonderland.

Puns abound, as when the Mouse tells his story to Alice:

“Mine is a long and a sad tale!” said the Mouse, turning to Alice, and sighing.

“It is a long tail, certainly,” said Alice, looking down with wonder at the Mouse’s tail; “but why do you call it sad?”

This is followed by the tail-shaped poem, reinforcing the pun visually. There is much, much more, some of which is discussed in books like Marina Yaguello’s Language Through the Looking Glass and Martin Gardner’s The Annotated Alice (among many others).

When Alice meets Humpty Dumpty, he explains the apparent nonsense words in the poem Jabberwocky—giving meanings for slithy and mimsy and gimble and gyre.

But before that, the girl and the egg explore how things are named. In Plato’s Cratylus, Socrates was asked whether names were conventional or natural. Here, Humpty Dumpty plays the naturalist and Alice plays the conventionalist. Humpty Dumpty asks Alice her name. When she tells him, he remarks that Alice is “a stupid name” and asks what it means.

“Must a name mean something?” Alice asked doubtfully.

“Of course it must,” Humpty Dumpty said with a short laugh: “my name means the shape I am — and a good handsome shape it is, too. With a name like yours, you might be any shape, almost.”

We see a bit of pragmatics soon after. In the twentieth century, the philosopher H. P. Grice set out general maxims that conversationalists follow, one of which is to be relevant. Carroll gets at that too, when Alice tries to change the subject from names:

“Why do you sit out here all alone?” said Alice, not wishing to begin an argument.

“Why, because there’s nobody with me!” cried Humpty Dumpty. “Did you think I didn’t know the answer to that? Ask another.”

What Alice really wants to know is the reason he is sitting alone on the wall, but he answers irrelevantly. The egg ignores the conventional purpose of the inquiry and treats her question as a metalinguistic one—a riddle about the meaning of “all alone.” He does it again a bit later when he asks about Alice’s age:

“So here’s a question for you. How old did you say you were?”

Alice made a short calculation, and said “Seven years and six months.”

“Wrong!” Humpty Dumpty exclaimed triumphantly. “You never said a word like it!”

“I thought you meant ‘How old are you?’” Alice explained.

“If I’d meant that, I’d have said it,” said Humpty Dumpty.

Alice is following conversational conventions and Humpty Dumpty is flouting them. Later still Humpty Dumpty plays with morphology and with vagueness. When Alice notices his cravat, he explains that it was a gift from the White King and Queen.

“They gave it me,” Humpty Dumpty continued thoughtfully as he crossed one knee over the other and clasped his hands round it, “they gave it me — for an un-birthday present.”

“I beg your pardon?” Alice said with a puzzled air.

“I’m not offended,” said Humpty Dumpty.

“I mean, what is an un-birthday present?”

“A present given when it isn’t your birthday, of course.”

Humpty Dumpty plays with the literal meaning of “I beg your pardon,” but he also creates a neologism: the un-birthday, which is any day that is not your birthday. Combining un-birthday with present, the wily egg proposes that there are 364 possible opportunities for un-birthday presents as opposed to one opportunity for birthday presents. But un-birthdays might bring no presents at all, so Humpty is engaging in some sleight of hand here. He claims victory anyway, saying:

“There’s glory for you!”

“I don’t know what you mean by ‘glory,’” Alice said.

Humpty Dumpty smiled contemptuously. “Of course you don’t — till I tell you. I meant ‘there’s a nice knock-down argument for you!’”

“But ‘glory’ doesn’t mean ‘a nice knock-down argument,’” Alice objected.

“When I use a word,” Humpty Dumpty said in rather a scornful tone, “it means just what I choose it to mean — neither more nor less.”

Humpty is suggesting that meanings are infinitely subjective and determined by the intentions of the speaker; Carroll mocks the absurdity.

Early in Alice’s Adventures in Wonderland, when Alice cries “Curiouser and curiouser!” Carroll tells the reader, parenthetically, that “(she was so much surprised, that for the moment she quite forgot how to speak good English).”

All through Wonderland and the Looking-Glass world, Carroll seems intent on making language curiouser and curiouser, and on making his readers more curious about it.

Featured image: Wikimedia Commons

The post Grammar in Wonderland appeared first on OUPblog.

February 28, 2020

The remarkable life of philosopher Frank Ramsey

Frank Ramsey, the great Cambridge philosopher, economist, and mathematician, was a superstar in all three disciplines, despite dying at the age of 26 in 1930. One way to glimpse the sheer genius of this extraordinary young man is by looking at some of the things that bear his name. My favourite was coined by Donald Davidson: the Ramsey Effect is the phenomenon of discovering that your exciting and apparently original philosophical discovery has been already presented, and presented more elegantly, by Frank Ramsey.

Ramsey published a grand total of eight pages in pure mathematics. He had been working on the Entscheidungsproblem in the foundations of mathematics, which asked whether there is a way of deciding whether or not any particular sentence in a formal system is valid or true. He solved a special case of the problem, pushed its general expression to the limit, and saw that limit clearly. A theorem he proved along the way showed that in apparently disordered systems, there must be some order. The branch of mathematics that studies the conditions under which order must occur is now named Ramsey Theory, with more discrete parts of it called Ramsey’s Theorem and Ramsey Numbers.

In 1927–28, Ramsey published two papers in economics, with the encouragement of John Maynard Keynes. When the Economic Journal celebrated its 125th anniversary with a special edition in 2015, both papers were included. That is, looking back over a century and a quarter, one of the world’s best journals of economics decided that two of its 13 most important papers were written by Frank Ramsey when he was 25 years old. The editors explained themselves by saying that the papers initiated “entirely new fields”: optimal savings and optimal taxation theory. In addition, they gave rise to the Ramsey-Cass-Koopmans model, Ramsey Pricing, Ramsey’s Problem, the Keynes-Ramsey Rule, and more.

Ramsey was also the first to set out the subjective conception of probability and expected utility theory that underpins much of contemporary economics. That is, he figured out how to measure degrees of belief and preferences and then showed how we might determine what a rational decision would be, given what someone believes and desires. He was a socialist and wouldn’t have been happy with what became of his idea—he didn’t think that all human action and decision should be crammed into the strictures of rational choice theory, as many economists and social scientists today seem to assume. Nonetheless, in this domain we find the Ramsey/de Finetti Theorem, the Ramsey-Good Result, Ramsey’s Procedure for measuring the intensity of preferences, and more.

In philosophy, he was just as impressive. As an undergraduate, he translated Ludwig Wittgenstein’s Tractatus and wrote a critical notice of it that still stands as one of its most important commentaries. He went on to have a profound influence on Wittgenstein, persuading him to drop the quest for certainty and purity, and turn to ordinary language and human practices. Ramsey was in search of a realistic philosophy and was leaning in the direction of American pragmatism when he died.

He also made major contributions to analytic philosophy. For instance, in a footnote in a draft paper published after his death, he suggested that when someone evaluates a conditional “if p, then q” they are hypothetically adding the antecedent p to their stock of knowledge and then seeing if q would also be there. Robert Stalnaker in 1968 proposed a theory of truth conditions for counterfactuals on the basis of that footnote. What is now known as the Ramsey Test for Conditionals is a method for determining whether we should believe a conditional.

In another draft paper, Ramsey devised a novel and important account of scientific theories and their unobservable entities. A theory is a long and complex sentence, starting with: “There are electrons, which . . .” and going on to tell a story about those electrons. We assume there are electrons for the sake of the theory, just as we assume there is a girl when we listen to a story that starts “Once upon a time there was a girl, who . . . .” Unlike the story, we commit ourselves to the existence of the entities in our theory, knowing that if it gets overthrown, so will our commitment to its entities. In the meantime, we use and believe the theory. Any additions to it are made within the scope of the quantifier that says that there exists at least one electron—the theory can evolve while still being about the original entities. Such a sentence is now called a Ramsey Sentence and is employed by both philosophers of science and philosophers of mind.

But Ramsey was the antithesis of the kind of figure usually associated with great genius. He was not an enigmatic, cult-encouraging eccentric, like his friend Wittgenstein. He was genial, open, and modest. “Never a showman,” said I.A. Richards, one of the founders of the new Cambridge school of literary criticism. Frances Marshall, at the centre of the Bloomsbury circle, never heard anyone say a word against him—she didn’t think it would be possible. But the best one-liner is from Michael Ramsey (Archbishop of Canterbury), who said that his brother had “a total lack of uppishness.”

Featured Image Credit: Rights owned by Oxford University Press

The post The remarkable life of philosopher Frank Ramsey appeared first on OUPblog.

February 27, 2020

The scientists who transformed modern medicine

Structural biology, a seemingly arcane topic, is currently at the heart of biomedical research. It holds the key to the creation of healthier, cleaner and safer lives, since it guides researchers in understanding both the causes of diseases and the creation of medicines required to conquer them. Structural biology describes the molecules of life. It seeks to know how many atoms are present, and in what ways they are connected, in substances like amino acids, enzymes and vitamins, for it is these properties that govern how such molecules function in living bodies.

Molecular biology emerged from the work of physicists and chemists. Some of them, and most of their predecessors, worked in the Royal Institution’s Davy Faraday Research Laboratory in London. It was in this laboratory that some of the giants of British science conducted their pioneering work. It was where Humphry Davy discovered the elements sodium, potassium, calcium, and chlorine in the early 1800s. It was also where he invented the miners’ safety lamp, which saved coalminers’ lives. Davy’s young assistant, Michael Faraday, was described by Ernest Rutherford as the greatest scientific discoverer ever. Faraday invented the dynamo, the electric motor, and the means of generating continuous electricity. In the 1930s, the laboratory’s then director, Sir William Bragg, gathered a group of brilliant young physicists to investigate, using X-rays, the detailed atomic structures of many of the molecules of life: proteins, amino acids, enzymes, viruses, steroids, and more. One colourful individual who worked at the laboratory was J. D. Bernal.

The three Nobel prizewinners, Max Perutz (extreme left), Aaron Klug, and John Kendrew (front row), accompanied by three other members of Peterhouse who were pursuing research at the Laboratory of Molecular Biology: (back row) Drs R. A. Crowther, J. T. Finch, and P. J. G. Butler. (Credit JET Photographic.)

Through the brilliance of their structural work, these scientists transformed all branches of biology, and through their personalities, ambitions, abilities, idiosyncrasies, and rivalries, in collaboration and competition with one another, they provided us with a beautiful example of how science advances. Dorothy Hodgkin, both when she worked with Bernal and later in Oxford, was less competitive than most of the other people described here. She was the first woman, after the Curies (mother and daughter), to win the Nobel Prize in Chemistry, in 1964. Her successes were stellar throughout her life. As a graduate student she elucidated the structure of steroids, notably cholesterol. Later she solved the structures of penicillin, vitamin B12, and insulin, thereby transforming much of modern medicine.

Peterhouse, Cambridge, was also home to a galaxy of outstanding molecular biologists. The accompanying photograph shows six members of Peterhouse who were also key scientists at the Laboratory of Molecular Biology, operated by the Medical Research Council. Three of them were Nobel Prize-winners and five were fellows of the Royal Society.

In the 1950s, Rosalind Franklin (working at Birkbeck College, London, in Bernal’s group) and her two research colleagues, Aaron Klug and John Finch, were given permission by Lawrence Bragg to use the unique high-intensity X-ray sources of the Davy Faraday Research Laboratory to investigate the structure of the polio virus. (Finch had to be injected with the Salk vaccine before starting his experiments there.)

Perutz and Kendrew also led the world in deriving the structures of haemoglobin and myoglobin, the proteins that transport and store oxygen in animals. The Laboratory of Molecular Biology that they founded in Cambridge is often described as one of the most successful ever. They shared the Nobel Prize in Chemistry in 1962, and shortly thereafter they founded the European Molecular Biology Organisation. It was one of their collaborators, Michael Rossmann, who in 2016 solved the structure of the Zika virus.

The structure of the first enzyme to be determined by the techniques of molecular biology, lysozyme, was also solved at the Davy Faraday Research Laboratory, by a group of physicists led by David Phillips and Louise Johnson, before each of them was appointed, at different times, to a professorship of molecular biophysics in the University of Oxford.

Featured image ‘Michael Faraday in his laboratory. c. 1850s’ by Harriet Jane Moore. Used with permission from Wikimedia Commons.

The post The scientists who transformed modern medicine appeared first on OUPblog.

February 26, 2020

Etymology gleanings for January and February 2020

Three comments on the most recent posts

Hunt: etymology

The Greek verb meaning “chase, hunt” has the root kīn (with long i), and that is why some speakers of British English pronounce the first syllable of kinetics as in kine. Long i in the Greek verb goes back to a diphthong. Kīn is the full grade of the root. Its zero grade has the vowel i (short). There is no way from ī to short u, as in hunt. Also, the most ancient meaning of the Indo-European root kei– must have been “to set in motion,” not “chase.” Last time, I said that the Greek verb had not attracted the attention of the oldest English etymologists. I should have avoided the plural, because only John Minsheu (1617) cited the Greek word at hunt, but in Minsheu’s dictionary the line between cognates and synonyms in various languages is sometimes hard to draw. The great Early Modern philologist Francis Junius was an especially strong proponent of the Greek origin of English words; he did not even include hunt in his dictionary (he may have had nothing to say about it). George William Lemon, another old etymologist, traced hunt to the root of Latin canis “dog,” because, I assume, hunting was inseparable in his mind from hounds. Noah Webster was prone to deriving English words from languages strewn all over the world, yet here he offered no conjectures. Finally, Hensleigh Wedgwood, another great master of stringing together remote words, had no suggestions either. The reticence of even the oldest philologists when it came to deriving hunt from Greek should warn modern amateurs against such wild guesses. The origin of hunt remains unknown.

Engl. breath, German Atem, and Greek átmus

Are Atem and átmus “vapor, steam,” the latter known to us from atmosphere, related? Most probably, they are not, even though t in German Atem goes back to th (þ) and though the original vowel in Atem was also long. The vowel á in átmus is the product of a contracted diphthong (ae), with the digamma (F) in the middle. Indeed, some dictionaries hedge and say that perhaps the words are related after all or add that they have the same type of word formation. Those polite evasions are hardly needed. It will be remembered that the problem of Atem arose because Murray isolated br- in breath (see the post on January 22, 2020). As a curiosity, I may mention Henry Cecil Wyld’s puzzled question about where Murray found this element. Yet a few pages later, while discussing the etymology of bring, he pleaded for the protoform br-ing. No one in the world is perfect.

The root mo

In a comment on the most recent post (“Muddy waters”), in which I discussed Germanic words for “earth,” almost identical Uralic forms were cited. At the same time, my Rumanian colleague Ion Carstoiu wrote me a letter with similar examples. We may be dealing with either a Nostratic or a sound-symbolic word. However, even if we call it Nostratic, we will still be left wondering why, over such a large territory, such a syllable was chosen as the name of the earth. Is it because “earth” and “mother” are coupled in the consciousness of most or even all people, and the word for “mother” usually begins with m? Just guessing.

Answering letters

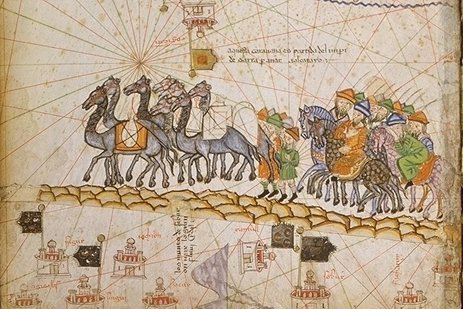

The Great Silk Road, one of the most famous roads in history. Caravan on the Silk Road, Abraham Cresques, 1375. Public domain via Wikimedia Commons.

The word silk

This is the letter I received from our French correspondent: “When using the picture of an electric cable, a professor of Arabic used the phonetic translation of silk. Since a natural thread is known to be very resistant… and given the Silk Road traveled by merchants importing the cloth ran through various Arabic-speaking lands, it would make sense for the etymology of silk to be ‘strong string’ and not the ser of serigraphy as told by many.” I have consulted numerous sources and believe that the traditional etymology can stand. The reason for my conclusion is that the Old English words for “silk” were sīde (compare German Seide) and sioloc. The first word was an obvious borrowing of Medieval Latin seta, and the second goes back to Sērica (from which we have serge; Sērica is the name of some people in the East, probably the Chinese). The source of both forms must have been Latin saeta Sērica “Serian hair.” The l ~ r variation is due to the fact that in several regions of the East these sounds are not distinguished as they are in the European languages. I believe that only an etymology that can account for both silk and sīde will carry conviction.

Horned squirrels

The German for “squirrel” is Eichhörnchen. In this word, Eich means “oak,” and –hörnchen means “little horn.” A correspondent from Germany suggests that the form of the squirrel’s ears could be the reason the animal received its name. He also appended two pictures: of a squirrel and of an owl, both with horn-like ears. However ingenious, this hypothesis has no historical foundation. The word’s protoform must have sounded approximately like aikurna. It is anybody’s guess whether aik– has anything to do with oak (German Eiche; probably not: squirrels have no special liking for oaks), but urna could not mean “horn.” Folk etymology produces all kinds of funny associations. The anthologized example is Engl. asparagus, sometimes pronounced as sparrowgrass, though sparrows are not known for their attraction to that vegetable.

Beware of wearing horns. Red squirrel by Peter Trimming. CC by 2.0 via Geograph.

Can bad and better ~ best be related?

Cases of enantiosemy (this term refers to words combining two opposite meanings) are not too rare, so that, in principle, bad ~ better might go back to the same root, but I doubt that we have such a case here. To begin with, both words are of unknown or disputable origin, and conclusions from such data are usually wrong. Second, better is a Common Germanic word, while bad has a narrow and rather insignificant Germanic base outside English, even though, contrary to what dictionaries say, it is not isolated. In my opinion, bad is a baby word (see the posts for June 24, July 8, and July 15, 2015 and don’t miss the comments: they contain some arguments against my conjecture). No evidence suggests that the root bat– has similar origins or that it is related to any other word supposedly akin to bad. Reconstructing ancient roots on such a flimsy foundation cannot be recommended.

Davy Jones: not a self-portrait. Illustration by George Cruikshank, 1832. Public domain via Wikimedia Commons.

Davy Jones

Tracing that name to some Low German phrase should be avoided. There was a noted botanist, John Bellenden Ker, who decided that he had discovered the Low German origin of numerous English idioms and words. His two-volume book An Essay on the Archaeology of Our Popular Phrases and Nursery Rhymes is a tribute to the theme “Etymology and Delusion,” even though Ker was perfectly normal. He derived hundreds of idioms and rhymes from an impossible dialect of his own concoction. Reading that book will prevent anyone from following his example. Legends about the famous pirate are many. Davy Jones’s locker means “the bottom of the sea.” May the secret of his origin reside there.

Jig

The origin of this word, though “unknown,” is rather obvious. There is a similar French dance, so that perhaps the English word is a borrowing, rather than native. Several factors should be considered. Both the French and the English words were probably “low,” avoided by serious writers, so that their occurrence in books says little about the time of their coining. Also, numerous words beginning with j and ending in g are sound symbolic, expressive, and onomatopoeic; their etymology is bound to remain doubtful. Old French giguer meant “gambol, sport.” But jek-, je-g, ji-g, and their likes seem to have been the bases on which various slang words designating movement back and forth and sudden movement (jerks) were formed in Germanic and Romance; hence jig-jig, jig-a-jig, etc. for copulation. Fligmejig and frigmjig referred to women of loose character. It follows that, though we will never know whether the name of the dance is English or French, the idea underlying the coinage is transparent.

This is what the jig is all about. La Trénis, 1805. Public domain via Wikimedia Commons.

Belong

Belong is a truly puzzling word. Many years ago, I wrote a post about it (September 13, 2006: “The long arm of etymology…”). Disregard some strange typos in that text; usually the posts are free from them.

A few look-alikes: How are whence/hence, thence/then, where/there, and what/that related?

In hence and thence, the historically correct spelling would be hens and thens, because s in them is the ancient ending of the genitive case, which in those words acquired an adverbial function. A relic of that case is our s-possessive (John’s, Mary’s, etc.). We can detect the same historical s in once, twice, thrice, since, else, and towards. Words like when and where began with hw-. The modern spelling with wh– is misleading. No doubt, people noticed the pattern in the rhyming words where/there, when/then, and their likes, but that similarity does not mean affinity. From the historical point of view, final t in what and that is the ending of the nominative. The roots are not related but they rhymed, and their closeness could not escape the speakers. The ancient root of where was the same as in who and what; there shared the root with that and the.

Finally, I want to thank Mark Jacobs for calling my attention to Laura Riding’s musings on words. Hers was a typical poet’s attitude toward language. She was not interested in the etymology of words the way Skeat or Murray were: she listened to words and discovered “phonetic sympathies.” Among others, Roman Jakobson often wrote about the relations between poetry and etymology and about such “sympathies.”

Featured image credit: The Silk Road via lwtt93. CC by 2.0 via Flickr. Image has been cropped to fit.

The post Etymology gleanings for January and February 2020 appeared first on OUPblog.

February 25, 2020

The architectural tragedy of the 2019 Notre-Dame fire

Sometimes it takes a catastrophic loss for us to realize how important historic architecture and cityscapes are to our lives. For instance, repairs are still ongoing following a magnitude 5.8 earthquake in 2011 that caused more than $30 million worth of damage to the National Cathedral in Washington, DC. And on 15 April 2019 a cataclysmic fire brought massive destruction to the Gothic cathedral of Notre-Dame in Paris, including the loss of its soaring spire and its centuries-old wood roof structure. There followed an international outpouring of grief for the loss of one of the icons of Western architecture. People from innumerable walks of life and countries around the world mourned the loss of a building that represented the aspirations, creativity, and sheer labor of mostly nameless artists, architects, and workers. The restoration campaign will likely take years, perhaps even a decade or more.

Around the globe, wars and natural disasters, not to mention modernization and development, threaten the built environment. More than half of the world’s population now lives in cities, according to the United Nations Population Division, and by 2050 about 70% of the earth’s population is projected to be urban. We can only imagine what the implications of this growth will be for historic cities and buildings and hope that heritage preservation and conservation will keep pace.

It’s not just iconic monuments and buildings by famous architects that we have to be concerned about: historic architecture and urban environments are mostly the work of unnamed (vernacular) builders and craftspeople—not professional designers. All sorts of builders and buildings will be affected in this period of urbanization and development. To become effective preservation advocates, we will all have to become knowledgeable about a wide range of buildings and environments.

Eugène-Emmanuel Viollet-le-Duc (1814-1879), the nineteenth-century restorer of the Cathedral of Notre-Dame in Paris, made the connection between modernization and preservation in his Dictionnaire raisonné de l’architecture française du XIe au XVIe siècle (Dictionary of French Architecture from the Eleventh through the Sixteenth Centuries) published beginning in 1854. The author began his entry on “Restoration” by saying “The word and the thing are modern.” This seemingly paradoxical statement contains a kernel of truth that is as apt now as it was in the mid-nineteenth century: Although preservation (or restoration, in his terms) is concerned with retaining buildings that represent the past, the very need to do so is a function of modernization.

Just like twenty-first-century urban dwellers, Viollet-le-Duc lived through a period of enormous change in his own city, Paris. Starting in the 1850s, a massive campaign of rebuilding, called “Haussmannization” after its principal architect, Baron Georges-Eugène Haussmann (1809-1891), entailed the demolition of great swaths of the city’s ancient fabric and the construction of new broad avenues, grand apartment blocks, and splendid monuments like the Paris Opéra. Today’s urbanites would find some of the regrettable consequences of Haussmannization familiar, such as a sort of gentrification in which working-class residents of the city center were displaced to its periphery. Viollet-le-Duc wasn’t explicitly criticizing urban modernization in his writing, but he did effectively connect two apparently distinct phenomena: the expansion and reconfiguration of built landscapes (which took place throughout Europe and North America in the nineteenth century) and increasing public and private support for preserving or even reconstructing historic buildings.

Viollet-le-Duc’s concept of restoration, as expressed in his dictionary and elsewhere, had an enormous impact on the restoration of Notre-Dame, which he undertook in collaboration with architect Jean-Baptiste Lassus (1807-1857) in the 1840s. Unlike today’s restorers, who try to return a building as closely as possible to its original appearance, or to its appearance as documented at some later date, Viollet-le-Duc believed that restoring a work of architecture meant giving it an imagined completeness that may never have existed.

Thus Viollet-le-Duc at one point imagined the Cathedral of Notre-Dame, which had never had spires atop the two towers of its façade, with those spires added, and with the demolished tower that had once risen above the main altar rebuilt. He never carried out that ambitious project, but he did rebuild the one destroyed spire, which was completed just before 1860. In the absence of much documentation of the original spire, Viollet-le-Duc’s version was a creative design. It paid homage to the great importance attached to the spire, which soared above the cityscape to mark the location of the cathedral of Paris.

When the burning spire crashed onto the roof below in April 2019, it ignited a debate about whether it should be replaced and, if so, with what. Many observers believe that Viollet-le-Duc’s spire, the product of the cathedral’s nineteenth-century restoration, should be reconstructed. The fervency of the calls for Notre-Dame’s restoration underscores how important older buildings and environments still seem, even as cities grow and transform.

Featured Image Credit: Rohan Reddy via Unsplash.

The post The architectural tragedy of the 2019 Notre-Dame fire appeared first on OUPblog.

February 24, 2020

How youth could save the Earth

Major global environmental problems threaten us. Recent scientific reports show that we are falling short in tackling climate change and stopping biodiversity loss, meaning that the Earth’s climate is under threat and natural species are undergoing a wave of mass extinction. While these global environmental issues persist and grow more urgent, policymakers have trouble devising and implementing solutions that would help society adapt. Given the paralysis of the international system, a new actor has begun to engage in environmental protection worldwide: youth. Giving speeches at United Nations summits and organising strikes and marches across the globe, the young generation is vigorously demanding change. But could youth actors actually bring about the transformations needed to catalyse more aggressive government action on climate change?

The iconic Greta Thunberg, a 16-year-old Swedish activist who has drawn much attention to climate issues, first by addressing delegates at the 24th UN climate change conference in December 2018, symbolizes a complete reversal of the roles that adults and children have traditionally played in policy debates. While Thunberg’s speeches to government delegates are critically important, perhaps more impressive is the power of her message to inspire youth action all over the world. Thunberg inspired the current worldwide School Strike for Climate, in which, on Fridays (hence the name Fridays For Future), millions of students (and therefore future voters) walk out of schools all over the world to show the public how seriously they take the issue of climate change.

Greta Thunberg’s actions are only the tip of the youth movement iceberg. The movement also relies on a large number of organisations that represent youth in global environmental governance, a sign of how important young voices have become. These organisations vary in format and scope. They include traditional environmental non-governmental organisations, like Friends of the Earth, that have opened their own youth branches, such as Young Friends of the Earth Europe, set up in 2007; organisations dedicated to representing youth interests across several environmental issues, like Youth For The Environment, set up in 2010; organisations dedicated to representing youth interests on a single, specific environmental issue, such as Youth for Oceans!, active since 2016; classic youth organisations that have embraced part of the global environmental agenda, like the World Organization of the Scout Movement; and youth-led networks, such as 350.org, created in 2008, which do not directly represent youth interests but are still managed by young people.

Youth actors are also organising themselves through umbrella networks and organisations. Since 2008, the Global Youth Biodiversity Network has presented the views of youth actors on biodiversity protection at the Conferences of the Parties to the Convention on Biological Diversity. Since 2011, the Children and Youth Constituency to the United Nations Framework Convention on Climate Change (YOUNGO) has been the official youth constituency (gathering all youth organisations) at the UN Framework Convention on Climate Change. All these initiatives promise to bring new and forward-looking ideas to global environmental politics.

In addition to their diversity, youth actors might catalyse change in global environmental politics because their demographic characteristics differ from those of traditional civil society actors.

One distinctive feature is that young women dominate the youth movement. More than 66% of participants in the student-led Fridays For Future protests have been female, and young women beyond Thunberg are leading the movement: Belgians Anuna De Wever (who is 17) and Kyra Gantois (who is 20) organised the Climate March movement in Europe, and 22-year-old Nakabuye Hilda Flavia has become the central youth leader for environmental protection in Uganda. This is a critically important indicator of the youth movement’s ability to catalyse change, because the inclusion of gender concerns and a better gender balance in decision-making improve our ability to address environmental problems.

Change could also come from the fact that youth actors can serve as bridges between generations. Overcoming differences in generational interests is a major challenge for environmental policy, because most environmental problems have a long-term dimension. Youth actors are particularly interesting because they also find themselves at the centre of intra-generational challenges, with some young people suffering from difficulties such as poverty and unemployment. They are therefore well positioned to link different interests within and across generations and to catalyse effective environmental policies that reconcile the competing interests of different groups.

Finally, youth actors are making claims that go beyond current environmental problems to raise broader issues of justice, human rights, and power inequality. They also criticise the current business-as-usual logic that, according to some of them, benefits the already powerful. Their claims include calls for greater participation, transparency, and responsibility. They ask for a redefinition of democratic rules to obtain a greater share of decision-making. Because youth actors are future voters and policymakers, the dominant policy approach so far, which consists mostly of education programs, might be far less effective than simply working together with young people to tackle global problems.

Featured image credits: John Cameron, Assorted garbage bottles on sandy surface via Unsplash

The post How youth could save the Earth appeared first on OUPblog.

February 23, 2020

Seven psychology books that explore why we are who we are [reading list]

Social psychology looks at the nature and causes of individual behavior in social situations. It asks how others’ actions and behaviors shape our own, and how our identities are shaped by the beliefs and assumptions of our communities. Fundamentally, it seeks scientific answers to the most philosophical questions about the self. These seven books, covering a range of issues within social psychology (identity, gender and sexuality, radicalism, social assumptions and biases), address just a few of the questions about who we are.

Strange Case of Donald J. Trump: A Psychological Reckoning, by Dan P. McAdams

In the summer of 2016, Dan McAdams wrote one of The Atlantic’s most widely read pieces on the soon-to-be-elected 45th president, “The Mind of Donald Trump.” In his new book, a collection of stand-alone essays that each explore a single psychological facet of the president, McAdams makes the startling assertion that Trump may be the rare person who lacks any inner life story. More than a political and psychological biography, this book is a vivid illustration of the intricate relationship between narrative and identity.

Finding Truth in Fiction: What Fan Culture Gets Right—and Why it’s Good to Get Lost in a Story, by Karen E. Dill-Shackelford and Cynthia Vinney

In almost everyone’s life there is a book, a movie, or a television show whose personal impact seems out of proportion for a work of fiction. Karen E. Dill-Shackelford and Cynthia Vinney show how engagement with fictional worlds provides the very mental models that inform how we exist in this world. Being a fan (of Game of Thrones, Harry Potter, Star Trek, or Pride and Prejudice) is being human.

ISIS Propaganda: A Full-Spectrum Extremist Message, edited by Stephane J. Baele, Katharine A. Boyd, and Travis G. Coan

Conflict, aggression, radicalism, and terrorism are at their core matters of psychology and motivation. Taking a multidisciplinary approach, this book shows how a group like the Islamic State could spread its message so successfully across the region, and how people can counter such narratives in the future.

The Radical’s Journey: How German Neo-Nazis Voyaged to the Edge and Back, by Arie W. Kruglanski, David Webber, and Daniel Koehler

In a turbulent global environment where extremist ideology has gained greater mainstream popularity, The Radical’s Journey, written by leading experts in the field, is a critical addition to scholarship on radicalization. Drawing on rare interviews with former members of German far-right groups, the book delves into the motivations of those who join, and ultimately leave, neo-Nazi groups.

Old Man Country: My Search for Meaning Among the Elders, by Thomas R. Cole

Today, a 65-year-old man will more likely than not live to see his 85th birthday, yet what life looks like in a man’s ninth decade can be a daunting unknown. Thomas R. Cole spoke with a dozen prominent American men, including former Chairman of the Federal Reserve Paul Volcker and spiritual leader Ram Dass, to answer four questions about old age: Am I still a man? Do I still matter? What is the meaning of my life? Am I loved?

How to Be Childless: A History and Philosophy of Life Without Children, by Rachel Chrastil

There is a common perception that someone’s status as a parent is unequivocally the most important role they play in society, but this assumption erases, in profoundly troubling ways, the experiences of those who do not have children. This book explores childlessness over the past 500 years. It is a narrative that could empower readers, parents and the childless alike, to navigate their lives with purpose.

Out in Time: The Public Lives of Gay Men from Stonewall to the Queer Generation, by Perry N. Halkitis

Library Journal called this book “the rare nonfiction book that can also be a work of art.” Halkitis looks at the experience of coming out across three generations in America (the Stonewall generation, the AIDS generation, and the Queer generation) and explores how, while the sociology of life for LGBTQ people has changed, the underlying psychology has not.

Neither science nor philosophy will ever answer all of the questions of why we behave the way we do. But these seven books on social psychology answer a few of them and, more importantly, raise hundreds more to keep the pursuit of understanding forever moving forward.

Featured image by Frida Aguilar Estrada via Unsplash

The post Seven psychology books that explore why we are who we are [reading list] appeared first on OUPblog.

How Roman skeptics shaped debates about God

More than a third of all millennials now consider themselves atheist, agnostic or “religiously unaffiliated.” But this doesn’t mean that they have all given up on spiritual life. Some 28% of the unaffiliated attend religious services at least once or twice a month or a few times a year (and 4% go weekly). Twenty percent of them pray at least daily, and 38% pray monthly or weekly. We may be entering a new age in which skepticism toward religious institutions is curiously accompanied by more trust in one’s own experience of God.

If you are troubled, perplexed or alarmed by religion but still have stirrings of spiritual life, you are in good company with skeptics of the ancient Roman world. They were vigorous contributors to the debates about God that roiled the first century and helped shape Judaism and early Christianity.

Lucretius (c. 99–55 BCE) was the most influential Roman Epicurean. On the Nature of the Universe, a long poem written with missionary zeal, is dedicated to the overthrow of popular religion. In Lucretius’ view, an intelligent society should have no place for providence, divine intervention, fate, immortality of the soul, or religious rituals. Religion was an invention that kept human beings enslaved to superstition by playing on their anxieties, desires, fears, and ignorance. Everything we need to know is found in nature and comes through the physical senses alone. We should thus abandon religion, develop our sense of wonder and dedicate ourselves to simple virtue, for as Lucretius writes in the same poem, “…True piety lies rather in the power to contemplate the universe with a quiet mind.”

Cicero (106–43 BCE) was equally troubled by popular piety and wrote three books against deformed religious beliefs: On the Nature of the Gods, On Fate, and On Divination. But unlike Lucretius, he made a clear distinction between practice and belief. Active involvement in religious life was fine, but only out of respect for cultural tradition, state order and “the opinion of the masses.” You don’t have to actually believe in it. Even so, Cicero was unwilling to jettison God. He writes in On Divination, “…The celestial order and the beauty of the universe compel me to confess that there is some excellent and eternal Being who deserves the respect and homage of men.”

Seneca (c. 4 BCE–65 CE) goes further. He thinks it is dishonest to cherish the god of the philosophers while outwardly maintaining false religion, even for reasons of state or to pander to the masses. He argues instead for a philosophical commitment to the divine, but without the trappings of religion. He believes in a kind fate whose ways are, for now, unknown and simply require peaceful acceptance of whatever happens. If you accept the good, the bad, or the indifferent with equanimity, you will be on the way to that harmony of inward and outward being that is the goal of human life.

Epictetus (c. 55–135 CE) taught that reason, self-reliance, self-restraint and recognizing what we can and can’t control are the keys to happiness and freedom. He doesn’t deny divine guidance, but says that God rejects the prayers of those who won’t take action to help themselves or others.

For Plutarch (c. 46–120 CE), what is most disturbing about popular religion is its exploitation of fear. In On Superstition, he says: “The atheist thinks there are no gods; the superstitious man wishes there were none, but believes in them against his will, for he is afraid not to believe…The superstitious man by preference would be an atheist, but is too weak to hold the opinion about the gods that he wishes to hold.” Plutarch has no trouble understanding why thoughtful people would rather be atheists. The gods themselves might prefer to have their existence denied rather than their character maligned by superstitious fear. For him, this kind of religion is worse than atheism. He spent the last thirty years of his life serving as a priest at Delphi and was well aware of the abuses of religion. Yet Plutarch refuses to throw away faith, and is saddened that so many have no awareness of God’s presence. Also in On Superstition, he says: “It is as if the soul had suffered the extinction of the brightest and most dominant of its many eyes, the conception of God.” For Plutarch, true piety lies in rejecting both extremes of credulity and atheism, and pursuing the middle way that brings the rational and the mystical together.

Roman thinkers, Jewish tradition, and early Christian experience converged in spirited debate about divine guidance in the first century. Whatever the deformations of religion, evidence of love, beauty, and truth compelled these people, and many today as well, to reckon with their source.

Featured image: Wikimedia Commons

The post How Roman skeptics shaped debates about God appeared first on OUPblog.

February 22, 2020

How sabre-toothed cats got their bites

Big cats are the most specialized killers of large prey among carnivorans. Dogs, bears, and hyenas have teeth suited to dealing with non-meat food items like bone, invertebrates, or plants. Not cats: in the course of evolution they lost almost all teeth not essential for killing prey or cutting meat. But in the distant past there was a group of cats that went even farther down the path of specialization, making their living relatives look like amateur hunters. They were the sabre-toothed felids, members of the extinct subfamily Machairodontinae within the cat family.

A lot of ink has been spilled about the enigmatic extinction of the sabre-toothed felids at the end of the Pleistocene, some 10,000 years ago, especially because they had been so diverse and widespread until then. But if we consider that many of their prey animals disappeared at about the same time, their demise seems less mysterious, or at least can be seen as part of a bigger mystery: the catastrophe that razed the Ice Age megafauna. Just as intriguing, and more interesting from an evolutionary perspective, is the question of how and why the sabretooths developed their spectacular adaptations in the first place.

Like modern cats, the sabretooths reduced their non-cutting dentition, but they developed huge, blade-like upper canines, often with serrated margins that cut easily through flesh. The last sabretooth species of the Pleistocene, like the famous Smilodon fatalis from the Rancho La Brea tar pits, had a set of anatomical adaptations that allowed them to use those “knives” with great efficiency, biting in a way quite different from modern cats. Logically, many of these adaptations were located in the skull and mandible, but a long and powerful neck was also essential. Why? Because with such long fangs, the jaws needed to open very widely to create enough clearance between the canine tips, and at such gapes the muscles that close the mandible couldn’t contract powerfully enough. To compensate, the attachments of their neck muscles were modified so that they could pull the whole head down more efficiently and help drive the canine tips into the prey’s body. Once the gap was reduced, the jaw-closing muscles could contract more efficiently, powering the rest of the bite. The shape of the first neck vertebra, the atlas, was different from that of living cats, and it gave the sabretooths the power to sink their teeth into flesh.

Ten million years before Smilodon, there lived Machairodus aphanistus, an early, lion-sized sabretooth. It became widespread across four continents during the Miocene, but despite its presence in many fossil sites, it was traditionally known only from fragmentary remains. Things changed with the discovery of the Spanish fossil sites of Cerro Batallones, Madrid’s answer to California’s Rancho La Brea. Batallones is a carnivore trap, where predators were lured by the carcasses of earlier victims only to meet their own deaths. As a result, scientists have recovered hundreds of complete sabretooth fossils.

This fossil bonanza allowed us to describe the skull of Machairodus in detail some years ago, revealing that many of the anatomical refinements seen in advanced sabretooths like Smilodon were absent in the earlier cat, whose skull resembled those of the living big felids, even though its upper canines were already elongated, flattened and serrated. But what about its neck? Was it long and powerful with a modified atlas, as in Smilodon, or shorter like those of extant felines? The answer is: neither of the above!

It appears that the neck of Machairodus was long, flexible and strong like that of Smilodon, but its atlas was not specialized and resembled that of living cats. What is the meaning of this odd combination? It is an example of mosaic evolution, meaning that some of the adaptations typical of a group of animals appear early in the history of the lineage, while others take much longer to evolve. This contradicts our intuitive perception of organisms as perfectly adapted to their ecological niches, and instead shows evolution as a process in which nature uses the available materials to come up with the best possible solution. And the best possible solution is not perfect, just good enough for organisms to remain competitive.

The early history of sabretooths is thus revealed as a complex process. The key change that triggered their evolution was the development of their namesake upper canines, which allowed them to kill large prey rapidly by cutting their throats, causing massive blood loss and a quick death. Even in a cat like Machairodus, which lacked many of the refined adaptations of its later relatives, simply deploying its deadly canines put it ahead of its competitors. Its primitive atlas may not have been ideal for contributing to the downward pull of the head needed to sink the sabres, but the long and powerful neck provided precise aim and kept the head firmly in place during the violent bite. Evolution is unforgiving with maladaptive mutations, but it allows imperfection all the time, and the slightest advantage is enough to keep participants in the competition. In the Miocene of Spain, knives were out for the prey, and the predator race went on.

Featured image credit: A life reconstruction of the sabre-toothed felid Machairodus aphanistus hunting a horse of the genus Hipparion. The predator uses its long neck to achieve a very precise bite at the throat of its prey. Artwork by Mauricio Anton. Used with permission of the artist.

The post How sabre-toothed cats got their bites appeared first on OUPblog.

February 20, 2020

How academics can leave the university but stay in academia

When “quit lit,” the trend of disillusioned PhDs writing personal essays about their decision to leave academia, hit its peak around 2013, I was just finishing my own PhD coursework. It seemed that every day, as I revised my dissertation proposal and worked on recruiting potential field sites, there was another column about the scarcity of tenure-track jobs, the plight of adjunct faculty, the ostracism faced by people who went alt-ac—the chance that the light at the end of the tunnel just might be an oncoming train.

When I read these pieces, I felt validated.

I had entered a PhD program in linguistics as a career changer, hoping to explore questions raised during my years as a language teacher and assessment developer. I never aspired to be a professor; I wanted to conduct research that would feel relevant and useful to teachers in the classroom. I used to say, “I have questions that I want to work on, and I don’t care who signs my paycheck.” At a certain point, I realized that if the tenure track wasn’t my Plan A, I certainly wasn’t going to end up there as Plan B, and I focused my job search on nonprofit and government jobs. I also became a vocal advocate for career diversity within my department and social networks, reminding anyone who would listen that they shouldn’t assume every PhD candidate was an aspiring professor. I became the smug cousin to the doomed mouse in the Kafka fable that Rebecca Schuman retold for PhDs, asking my colleagues, why not just turn and walk the other way?

By engaging with these debates, though, I became more and more concerned about issues specific to the university. I became more involved in my professional association, working to create better programming for grad students. I was even writing a dissertation in which one of my field sites was a university classroom! I may have decided not to be a professor, but I still cared about higher education.

As it turned out, I found a job that allowed me to stay engaged with these topics: I’m director of education and professional practice at the American Anthropological Association, where, among other things, I manage the association’s academic relations function. I present at scholarly meetings, write for academic publications, and have even taught at a local university. The stark choice implicit in the quit-lit narrative (stay in academia for adjunct pay and the slim hope of a tenure line, or leave the profession altogether) turns out to have been a false dilemma. At the same time, I now have a better sense of the systemic pressures that keep so many PhDs in contingent academic positions, and, having once considered that route myself, I can use my platform to contribute to their advocacy efforts.

Not only have I found a place for myself in this gray area between academia and professional practice, but in the course of my work with anthropologists, I have come to know a number of university-based colleagues who work in the same liminal space. Some work on environmental or urban youth programming, and bring their students along. Others write reports on Ebola or climate change, not just for publication in journals, but to support evidence-based policymaking. Still others apply their ethnographic insight to improve the functioning of their business—which happens to be a university. Sometimes these people are librarians or instructional designers or program directors, but more often, they’re professors.

I’ve also learned about academic departments that have found ways to value their faculty’s work outside the university, either as engaged scholars or from previous careers in practice settings. Some tenure and promotion guidelines allow a broad selection of work products such as technical reports, consulting work, service on community- or government-based committees, appointment to government agencies, public lectures, and testimony before federal or state legislatures. Traditional academic work still maintains its prestige; public engagement is typically considered service rather than research, and technical reports may need to be accompanied by peer-reviewed publications. Nevertheless, there seems to be a growing acceptance within academia of the diverse work products beyond scholarly teaching and publishing that may be produced in the course of a productive career.

There is a lot of talk about how to raise awareness among PhD students of the diverse career opportunities they have, and this is an important conversation, but the fact is that there’s a lot to like about research and teaching, and once they’ve gone so far as to write a dissertation, many people are understandably reluctant to give that up. My point is that you don’t have to. For all that we talk about a split between theorist academicians and professional practitioners, that view is badly oversimplified. Academics solve problems, practitioners build theory, and there’s a whole range of professional life histories that aren’t easily categorized as one or the other.

Featured image credit: Stefano Ricci Cortili via Wikimedia Commons, CC BY 4.0.

The post How academics can leave the university but stay in academia appeared first on OUPblog.
