Daniel Miessler's Blog, page 81

July 10, 2019

The Dangers of Abruptly Destroying Meaning Structures

I recently watched an older debate between Sam Harris and Jordan Peterson in which I saw Sam admonishing Jordan for creating an opening for foolish beliefs. He took a poll of who was religious in the audience, and then all but blamed Jordan for their credulity.

That’s when it hit me that while Jordan might have enabled the religious, Sam actually enabled Jordan.

How? The same way George Bush enabled Iran to take over Iraq by removing Saddam.

Sam’s ideology is brilliant at stripping someone to the bone, but it unfortunately leaves people wishing they had a jacket.

The New Atheists created a vacuum of belief and meaning among their followers, and their sadness can be felt in questions posed to Sam in AMAs and QAs. I’ve seen multiple questions like the following from his fans, delivered in almost shameful and apologetic tones:

Sam actually does answer this, but he does it in book form (Waking Up) as opposed to offering guides and methodologies like Jordan.

Thank you for freeing me, but…um…what do I believe now?

It’s a question Sam’s never given a good answer to, and one that Jordan Peterson has become a religious figure by answering well.

Peterson is like Iran taking advantage of the vacuum left in Iraq. People need structure, and he’s providing it.

I’m not implying here that Peterson is malicious or cynical in what he’s offering.

Peterson is Iran sweeping into a post-Saddam Iraq. He’s offering structure to those who desperately need it to explain their struggles, just as Iraqis have been craving a strong hand to govern them.

The lesson here is not that Sam should not have dispelled the bad magic of supernatural belief. Neither is it that Jordan is being opportunistic. I think he’s as surprised as anyone that people are so eager to hear him speak.

The lesson is that most humans need structure, and Sam made a mistake in assuming that more people were like him (or me) in being able to erect an existential scaffolding of our own making. Most people can’t do that. And even for those who might be able to, it’s likely to require a process.

Iraqis are just as capable of self-government as anyone else on Earth. Just as former Christians are plenty capable of secular and existential meaning creation. But it takes time.

He also thought that well-constructed conversations could solve all disagreements.

Summary

Harris was too optimistic about the ability for people to construct their own meaning once false structures had been removed.

That miscalculation left people 1) feeling empty, and 2) feeling weak and ashamed that they did need something.

Peterson’s message suddenly started resonating because it was precisely the structure that could fill the void left by the New Atheists.

Sam (and others) are now frustrated that Peterson acolytes might have just swapped one religion for another, and/or left the door open to other fallacious beliefs.

I think Sam is one of the top living intellectuals in the world, by the way. I have massive respect for him. This is not an attack on what he did, but rather an explanation of what happened afterwards.

I disagree significantly with Jordan Peterson on major topics, and particularly his flirtation with what I consider to be numerology, but I do think he is fundamentally a good person who is trying to help people. And I think he’s tapped into something powerful in the lack of meaning in the lives of young men.

—

Become a direct supporter of my content for less than a latte a month ($50/year) and get the Unsupervised Learning podcast and newsletter every week instead of just twice a month, plus access to the member portal that includes all member content.

July 7, 2019

Unsupervised Learning: No. 185

—

Become a direct supporter of my content for less than a latte a month ($50/year) and get the Unsupervised Learning podcast and newsletter every week instead of just twice a month, plus access to the member portal that includes all member content.

July 6, 2019

Why I Don’t Think Craig Wright is Satoshi

I many years ago I wrote a piece about why I didn’t think Craig was Satoshi, and a bunch of reporters hit me up immediately for interviews. I didn’t give any, and I deleted the post.

I think they were so interested because they had tracked down my interactions with him (not about bitcoin) from back in the day, which validated that I at least knew him to some degree.

Anyway, the gist of the piece that I removed was that—in my mind—the Craig I knew could not have been Satoshi for a very simple reason: Craig seeks attention, while Satoshi shunned it.

I’d guess that tens of thousands of people are smart enough to have invented bitcoin.

To be clear, in my few interactions with Craig he’s always been polite and decent. And I think he’s definitely brilliant enough to do the work. I could absolutely be wrong about this. He could actually be him.

Craig seems more like the brilliant quarterback, while Satoshi would be the loner in the corner.

I just don’t think so. Everything I understand about humans tells me it’s a hard no. Here are some inputs that brought me to that analysis.

Craig talked about himself constantly

Craig constantly sought attention

Craig loved to publicly debate with people

Craig loved to boast about his accomplishments

It looks like he talked about degrees he didn’t yet have

He’d do things like post photos of him working out on a rower

We was good looking and liked to show it off

I’m not a Satoshi expert, so I could be wrong about some of these.

In short, he was more like a brilliant cult leader. Where I see Satoshi as more like the following:

Brilliant

Introverted

Wants to do good in the world

Does not want a single bit of the credit

Would rather remain anonymous if possible

Would happily die with nobody knowing it was him

Those don’t seem like the same two people.

So, no—I don’t have cryptographic evidence that Satoshi is someone else, or that Craig isn’t him. But everything I know about humans—and particularly male egos—tells me that Craig is almost the exact opposite of the real Satoshi.

If Craig really is perpetrating a con here, I wish he’d stop. And if he’s really Satoshi and I’m wrong, well, I hope he forgives me this analysis.

But I’d give that a 3% chance, if that.

TL;DR: Satoshi wouldn’t upload pictures of himself wearing spandex on a rowing machine.

Notes

There’s also the small point that if he were Satoshi he could just sign something using his private key.

—

Become a direct supporter of my content for less than a latte a month ($50/year) and get the Unsupervised Learning podcast and newsletter every week instead of just twice a month, plus access to the member portal that includes all member content.

July 4, 2019

YouTube’s Ban of Hacking Videos Moves Us Closer to an Entertainment-only Public Sphere

Marcus Hutchins wrote a great essay recently about YouTube’s new ban on “hacking” videos.

He writes:

One major problem here is that hacking tutorials are not inherently bad. There exist a vast YouTube community aimed at teaching the next generation of cyber security experts.

YouTube’s New Policy on Hacking Tutorials is Problematic, Marcus Hutchins

I think he’s absolutely correct, but it’s actually worse than that. I think it reveals a precarious future where dangerous is redefined as, “anything that can be used to do harm”.

Almost any information can be used to do—or contribute to—harm, so the question is where to draw the line.

I am using a pure hacking definition here.

The heart of the hacking community is not so different from that of education, since one of the central goals is describing how things work.

But if you’re a cynic, or you have a desire to control people, you’re prone to notice that knowing how something works also helps you destroy it. To be fair, this is in fact a major component in the security industry, i.e., learning how things work so you can break them before the bad guys do.

In a healthy world environment, this isn’t a problem. When you trust other people you freely distribute information about how things work. We call it an education. And we do this because we assume that others will be responsible with that knowledge.

Videos by STÖK are a great example of positive hacking culture.

That’s the part that’s going away.

YouTube is now in the position of building a global platform based on edge cases. If someone describes how an alarm system works, so people can understand that and maybe make a different choice, or add some additional defenses, it only takes a few people to complain—or maybe an incident—to claim that the knowledge of how that alarm works makes the public less safe.

I’m forced to make the analogy to hardware stores, where you can freely walk into thousands of locations across the US and purchase nail guns, ice picks, and saw blades. How is that possible? Don’t people realize how much harm someone could do with such tools?

Knowing how a bridge is built isn’t that far away from knowing how to find vulnerabilities in your own web applications.

Knowing how a bridge is built is very similar. Or how a security alarm works. Or how to find vulnerabilities in your own web application. They’re all examples of education being simultaneously useful and dangerous.

As Marcus points out, much of the Hacking community on YouTube is about explaining how things work. Tutorials. Tooling. Explanations. They show us how to find flaws in the things we have, so that we can fix them before someone else takes advantage.

Yes, there are some people who use those videos to do harm, but there are also people who commit murder with kitchen knives. Let us not ban home cooking over it.

That’s the future I’m worried about with moves like this from YouTube. I’m worried that when people don’t trust each other, the game becomes removing weapons from the enemy because there’s no trust they won’t use them in the wrong way. And in such an environment, tools quickly conflate with weapons.

In that world, where there’s no trust and where education becomes something that we withhold from our enemies, the only approved content will be entertainment.

Entertainment is safe; Information is dangerous.

This will do two things. First, it’ll drive real information exchange underground, where—due to the pressures applied—it will often take on darker forms. Second, it will leave the public platforms as a sterile environment devoid of the most important content and conversation.

Ultimately, true accounts of the world will be labeled dangerous or offensive, the public platforms will become Nerf Zones devoid of real content and conversation, and quality education will only be found in private forums and private schools.

The only people who will know how things work will be people trying to break rather than build. And the only people having real conversations will be those who are angry with those who tried to silence them.

Such policies are well-meaning, but they result in a net-loss for everyone.

—

Become a direct supporter of my content for less than a latte a month ($50/year) and get the Unsupervised Learning podcast and newsletter every week instead of just twice a month, plus access to the member portal that includes all member content.

July 2, 2019

Unsupervised Learning: No. 184 (Member Edition)

This is a Member-only episode. Members get the newsletter every week, and have access to the Member Portal with all existing Member content.

Non-members get every other episode.

or…

—

Become a direct supporter of my content for less than a latte a month ($50/year) and get the Unsupervised Learning podcast and newsletter every week instead of just twice a month, plus access to the member portal that includes all member content.

June 30, 2019

Regulated Corporate Data Champions

We’re about to collide into the brick wall that is personal data privacy.

There are several things to work out, but what I find most interesting to think about is the potential solutions and how they form based on incentive structures.

I think it’s obvious that there’s going to be both a government and a corporate component, and here’s a potential way that could play out.

The government says that anyone taking in data will have to secure their data using an approved Data Champion.

A Data Champion is also a consumer product, and they market to consumers as being the best protectors of peoples’ privacy.

Data Champions have to secure data at a given level, as determined by government regulation, but they can of course go beyond that.

The key function that the Data Champion plays is that of ACTIVE ADVOCATE for every customer’s data. So they don’t just protect the data that they have, but they go around the entire internet cleaning, masking, removing, and otherwise improving the safety of that customer’s data everywhere.

The key component here is that they get paid to do this, by the customer, and by the government. So it aligns the business incentives towards privacy, rather than away from it.

And it’s not that it stops data exchange—which won’t work because the internet of things is powered by personal data—but rather that the data exchange will be highly cared for because there will be advocates involved on all sides.

So there will be corporate interests in ensuring that the exact right amount of data is sent, to the correct entity, with the correct protections.

And every consumer has the option of picking a Data Champion from an approved list provided by the government. Like eating at a restaurant that’s allowed to be open by the health department.

Government can’t do privacy by itself. It has to be a corporate solution.

And most corporations won’t protect data as part of their culture because 1), it’s hard, and 2) they worry they’ll make less money.

So this combined solution unifies those weaknesses into a strength, whereby all data is protected by a Corporate Data Champion, which is in turn regulated by the government.

Anyway, not fully fleshed out or anything. Just tossing around ideas for how to deal with this thing that’s coming.

Ideas welcome.

—

Become a direct supporter of my content for less than a latte a month ($50/year) and get the Unsupervised Learning podcast and newsletter every week instead of just twice a month, plus access to the member portal that includes all member content.

June 29, 2019

amass — Automated Attack Surface Mapping

Whether you’re attacking or defending, you have the highest chance of success when you fully understand the target.

Why amass?

The Modules

Intelligence

Enumeration

Visualization

Real-world Examples

Company Properties

New Domains via CIDR

New Domains via ASN

Finding Subdomains

Summary

The pronunciation stress is on the second syllable.

amass (/əˈmas/) is a versatile cybersecurity tool for gathering information on the attack surface of targets in multiple dimensions, and this amass tutorial will take you through its most important and powerful features, including many examples.

Why amass?

For example, there are many port scanners, but nmap and masscan provide 99% of the value.

You might be asking, “Why amass and not one of the 113 other tools out there?” It’s a good question, and part of the answer is because yes—there really is a legion of tools out there that all do one or two things decently—and it’s refreshing to have this level of quality across so many features all in one place.

I’ve just become a contributor to the project as well (June 2019).

amass also prioritizes the use of many different sources of input, whereas many tools only have a few. So when a new technique comes out—such as certificate transparency—the developers are quick to include it. Here’s a short list of all the different things it looks at:

DNS: Basic enumeration, Brute forcing (upon request), Reverse DNS sweeping, Subdomain name alterations/permutations, Zone transfers (upon request)

Scraping: Ask, Baidu, Bing, CommonCrawl, DNSDumpster, DNSTable, Dogpile, Exalead, FindSubdomains, Google, HackerOne, IPv4Info, Netcraft, PTRArchive, Riddler, SiteDossier, ViewDNS, Yahoo

Certificates: Active pulls (upon request), Censys, CertDB, CertSpotter, Crtsh, Entrust

APIs: AlienVault, BinaryEdge, BufferOver, CIRCL, DNSDB, HackerTarget, Mnemonic, NetworksDB, PassiveTotal, RADb, Robtex, SecurityTrails, ShadowServer, Shodan, Sublist3rAPI, TeamCymru, ThreatCrowd, Twitter, Umbrella, URLScan, VirusTotal

Web Archives: ArchiveIt, ArchiveToday, Arquivo, LoCArchive, OpenUKArchive, UKGovArchive, Wayback

@caffix, @fork_while_fork, and the rest of the team are phenomenal.

Finally, tools develop their own gravity once they get big enough, popular enough, and good enough. In the OSINT/Recon tools game, there exists a depressing graveyard of one-off and abandoned utilities, and it’s nice to see a project with some consistent developer attention.

Installation

Here are the best ways to install amass.

You’ll need to make sure your Go pathing is set up correctly so you can run it. You might need a chicken to kill.

Go

go get -u github.com/caffix/amass

amass enum –list

Docker

docker build -t amass https://github.com/OWASP/Amass.git

docker run -v ~/amass:/amass/

amass enum –list

Homebrew/macOS

brew tap caffix/amass

brew install amass

amass enum –list

The Modules

amass is somewhat unique in that all its functionality is broken into modules that it calls subcommands, which are intel, enum, viz, track, and db.

The primary amass research modules

There’s a full user guide that functions much like a man page, and you can use that as a full reference. But here we’ll cover the basic themes and show a few of my favorite options.

In short, intel is for finding information on the target, enum is for mapping the attack surface, viz is for showing results, and track is for showing results over time. db is for manipulating the database of results in various ways.

Intelligence

Consult the full user guide for more detail on each.

If you’re not doing adequate recon, you’re setting yourself up to be unpleasantly surprised in the future.

If you have a new target and are only using amass, the Intelligence subcommand is where you’ll start. It takes what you have and helps you expand your scope to additional root domains. Here are some of my favorite options under the intel subcommand.

intel: -addr (by IP range), -asn (by ASN), -cidr (show you domains on that range), -org (to find organizations with that text in them), and -whois (for reverse whois).

I’m using Uber because they are known to have an open bounty program that encourages this sort of public scrutiny.

Let’s look at organizations with “uber” in their name.

amass intel -org uber

A few of those should stand out (and not just because I highlighted them).

Results abridged for brevity.

18692, NEUBERGER - Neuberger Berman

19796, SHUBERT - Shubert Organization

42836, SCHUBERGPHILIS

45230, UBERGROUP-AS-NZ UberGroup Limited

52336, Autoridad Nacional para la Innovaci�n Gubernamental

54320, FLYP - Uberflip

56036, UBERGROUP-NIX-NZ UberGroup Limited

57098, IMEDIA-AS Pierre de Coubertin 3-5 office building

63086, UBER-PROD - Uber Technologies

63943, UBER-AS-AP UBER SINGAPORE TECHNOLOGY PTE. LTD

63948, UBER-AS-AP UBER SINGAPORE TECHNOLOGY PTE. LTD

132313, UB3RHOST-AS-AP Uber Technologies Limited

134135, UBER-AS-AP Uber Technologies

134981, UBERINC-AS-CN Uber Inc

135072, SUITCL-AS-AP Shanghai Uber Information Technology Co.

135190, UBERCORE-AS Ubercore Data Labs Private Limited

136114, IDNIC-UBER-AS-ID PT. Uber Indonesia Technology

267015, ESADINET - EMPRESA DE SERVICOS ADM. DE ITUBERA LTD

And here’s a lookup based on a CIDR range, where you can find all the domains hosted on that range.

amass intel -ip -cidr 104.154.0.0/15

Finding domains hosted on a CIDR range

Enumeration

The most basic example is just finding subdomains for a given domain. Here we use the -ip option to show the IPs for them as well.

amass enum -ip -d danielmiessler.com

I also love that amass output almost looks like a GUI, but can still be parsed via CLI.

With the IP option showing IPS for discovered domains

And here’s a run using the very cool -demo option, which does some quasi-masking of the output.

You might think I shouldn’t show my DNS like this, but I run WordPress so you can hack me with a wet piece of string anyway.

The enum module used with the demo option

Some of my favorite options in enum are:

enum: -d for basic subdomains, -brute brute-forcing additional subdomains, and -src because it lets you see what techniques were used to get the results.

Visualization

Visualization—as you might have guessed—allows you to see your results in interesting ways. And a big part of that is the use of D3, which is a JavaScript visualization framework.

amass viz -d3 domains.txt -o 443 /your/dir/

d3 output from amass against danielmiessler.com

My favorite options in viz are: -d3 for the D3 output, -maltego for creating Maltego compatible output, and -visjs for an alternative JS visualization that’s kind of nice.

amass in action

Real-world Examples

Ok, so that was a brief intro into the tool, and again—the user guide has tons more options for things you might expect, like reading from files, output configuration, doing exclusions, etc.

But now it’s time for what you probably came here for—which is a list of tactical examples based on common use cases.

Finding Company Properties

The substring bit is important. Too much text and you miss it, not enough and you get tons of false positives.

A common way to start is by searching for substrings of the company, to see what all subdivisions they might have around the world. And don’t forget to search for companies they’ve acquired or merged with as well.

amass intel -org uber

New Domains via CIDR

Another way to find new domains is to look by CIDR range.

amass intel -ip -cidr 104.154.0.0/15

Finding domains hosted on a CIDR range

New Domains via ASN

Another way to find new domains is to look by ASN.

amass intel -asn 63086

Finding Subdomains

Once you have a good list of domains, you can start looking for subdomains using the enum subcommand.

amass enum -d -ip -src danielmiessler.com

With the IP option showing IPS for discovered domains

Summary

amass is a powerful tool that helps both attackers and defenders improve their games. It’s possible to find one-off tools that might do some of these functions better, but it’s unlikely to stay that way for long. It’s nice to have a solid, unified tool that can do a lot of the functionality from a single place.

Watch out for more in this series on recon-related tooling, and in the meantime you can check out my other technical tutorials.

Stay curious!

Notes

If you have any favorite functionality you’d like to include, reach out to me here.

—

Become a direct supporter of my content for less than a latte a month ($50/year) and get the Unsupervised Learning podcast and newsletter every week instead of just twice a month, plus access to the member portal that includes all member content.

June 23, 2019

Unsupervised Learning No. 183

—

Become a direct supporter of my content for less than a latte a month ($50/year) and get the Unsupervised Learning podcast and newsletter every week instead of just twice a month, plus access to the member portal that includes all member content.

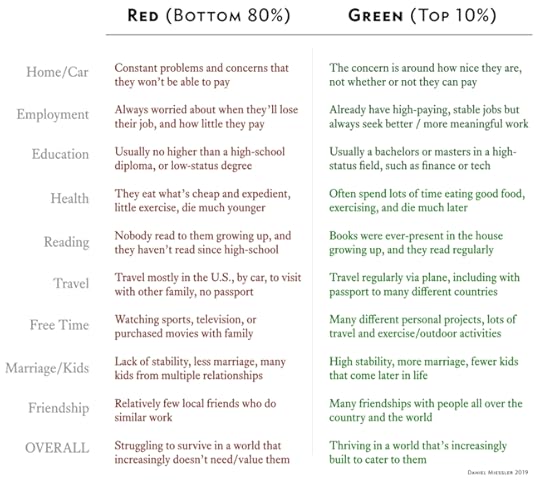

The World is Collapsing Into Two Countries—Green and Red

I hope you get angry while you read this.

There are many ways to describe what’s causing the tension and turmoil in the United States and elsewhere. We can say the middle class is going away, or that automation is taking over, or that white people are upset about changing demographics.

All those are probably true, but I think the clearest way to describe things is to imagine the world being sorted into two different countries—a Green country, and a Red country.

I’ve written about this repeatedly over the past several years, and Peter Temin has a phenomenal book on the topic called The Vanishing Middle Class, but the idea is the same. Basically, physical countries will become unimportant, what will matter is not where you live, but what class you’re a part of.

Soon the Red and Green will refer to the zones of safety in physical space as well, with Green zones requiring filtering to enter.

People in the top 10% of income/wealth live in Green Country. They are educated, healthy, likely working in finance or technology, and live interesting lives of travel and leisure. Their primary concern is finding ways to be more fulfilled in their work and their projects, and to spend more of their time meeting their other Green Country friends all over the world.

People in Red Country work to ensure the happiness of those in Green Country.

Those in the bottom 80% of income/wealth live in Red Country. They probably didn’t go to college, they likely work in the gig economy or in other highly-precarious job situations where they have unpredictable hours and few benefits. Most importantly, their primary job purpose is maintaining the infrastructure and services that allow those in Green Country to enjoy their lives.

This is perhaps the clearest and most cynical way to look at the gig economy. It’s marketed as a way to provide the freedom of extra income to anyone, but I think it’s going to quietly become the default for all but the elite.

There are many companies trying to bring gig mechanics to every industry.

Companies love gig mechanics because they let you keep only the best workers, pay for only what you use, and flex your workforce on demand when things expand or contract. Even companies that have not yet moved to gig options (because they don’t yet exist for that industry) are already moving heavily to contract work.

The Uber of X already exists, it’s called servants.

Indi Samarajiva

There’s a fascinating irony in only the west finding servants problematic.

Indi Samarajiva makes a great point here. The rest of the world already has servants, but the west considers it (for good reason) to be offensive in some way. I’m not an expert, but I’m sure it involves some composite of our focus on equality and our colonialist guilt.

Well, that’s about to end. We’re about to smuggle servants into our workforce through the cover of “work when you want to”, which—as it turns out—if you have few options a hungry family, is all the time.

Think about what the gig economy does, and how that compares to what servants do. Drive me here. Wash my clothes. Bring me food. Make me food. Take care of the kids. Clean the house. These are all tasks that people in Green Country complain about having to do, and needing to “get help with”, or “outsource”.

Indeed. It sounds damn convenient. But we have to be aware that we’re “outsourcing” to someone who can literally only do that thing. They’re not doing it because that’s what they enjoy. That’s a rich liberal fantasy. No, they’re doing it because that’s their role in this society, based on the options available to them. Just like servants.

So what do we do about it?

Well if you’re conservative—or a liberal who thinks like one without telling anybody—there’s nothing wrong with this at all. Sounds amazing! When I can I get this? I want to start a non-profit startup to help people escape poverty, so I can talk about it on Twitter, and I just need someone to take care of Kyler.

The cognition is dissonant, and smells of hypocrisy.

But if you’re someone who does actually care, there are four things you should be doing.

Look this beast right in the face, and be aware of it all around you.

Do your best to make sure everyone you care about ends up in the Green.

Do your best to affect societal change that eliminates the Green/Red divide.

When you interact with people in the Red, have sympathy and empathy, because you didn’t pick your fucking parents. You got lucky, and they didn’t.

In short, this is happening. This is the two-sided reality we’re entering into. Tell everyone you care about that they need to go to school and do what it takes to make it to the right side of the fence.

But never forget how lucky you got. And when you spend your Friday nights, in the big city surrounded by your Green Country friends, talking about your trip to Iceland and submitting a manuscript, spare a CPU cycle for the human person serving the food.

They might have two other jobs where they’re also ignored by people like you, which they drive 90-minutes to get to. And all they did was roll different dice in this life.

Never forget that you didn’t pick your parents, and that even work ethic is a privilege of a good upbringing.

And most importantly, vote and/or get involved in changing how society works. Support policies that will address this situation, such as universal education and healthcare.

Nobody can realistically ask you not to partake of your gifted position, or to advocate that others do the same. But we can ask that you remain aware. That we recognize strange and random it is that you’re on this side and they’re on the other.

And we can ask that you try to make it better.

Notes

Before anyone asks, I am not implying that people who made it into the Top 10% didn’t work hard. I’m aware of the arguments. But everything you used to work hard were also gifts. Your intelligence and your work ethic were given to you by your parents, your genetics, and your environment—none of which you made for yourself. So yes, well done, you built something nice. But the only reason you were able to do any of it is because of the tools you were given. Work ethic is also a privilege that comes from a good upbringing.

—

Become a direct supporter of my content for less than a latte a month ($50/year) and get the Unsupervised Learning podcast and newsletter every week instead of just twice a month, plus access to the member portal that includes all member content.

June 19, 2019

Machine Learning Doesn’t Introduce Unfairness—It Reveals It

Many people have a concern about the use of machine learning in the credit rating and overall FinTech space. The concern is that any service that provides key human services—such as being able to own a home—should be free of bias in its filtering process. And of course I agree.

I define bias here as ‘unreasoned judgements based on personal preferences or experiences’.

The problem is that machine learning improves by having more data, so companies will inevitably search for and incorporate more signals to improve their ability to predict who will pay and who will default. So the question is not whether FinTech will use ML (it will) but rather, how to improve that signal without introducing bias.

It’s quite possible to do ML improperly, but one shouldn’t assume that’s happening intentionally or often because bad doing so is counter-productive.

My view on this is quite clear: Machine Learning—when done properly—isn’t creating or introducing unfairness against people—it’s uncovering existing unfairness. And the unfairness it’s uncovering is that which is built into nature and society itself.

The more signals AI receives about something, the closer it comes to understanding the Je Ne Sais Quoi of that thing. And in the case of credit, those judgements will bring them closer to the truth about one’s credit-worthiness.

If it says someone has a higher chance of defaulting on loans, that’s not because they chose to be a bad person—it’s because their personal configuration, consisting of body, mind, and circumstance, indicates they’re less likely to pay back loans.

As someone who doesn’t believe in free will, this is completely logical to me. People don’t pick their parents. They don’t pick who becomes their friends as children. They don’t pick what elementary schools they go to. They don’t pick their peer group. And these variables are what largely determine whether you’ll go to college or not, whether you’ll have other friends who went to college or not, and ultimately how vibrant and stable your financial situation will be. This is what these algorithms attempt to peer into.

Ironically, the loan business is where the blindness of ML could help people who are likely to be good customers but who were excluded before due to human bias.

Let’s look at an example, but in the auto insurance space instead of FinTech. Let’s say we’re trying to assign premium to a young person who just purchased a motorcycle, which means we need to rate their chances of behaving in a safe vs. a reckless manner. And let’s say their public social media presence is full of images of them doing Free Climbing (where you climb mountains without ropes), and saying things like, “I’d rather die young doing something crazy than old being boring!”

The difference between unfairness and bias is that bias is disconnected from reality, and is usually based in personal prejudice.

Those are clear signals about risk as it relates to motorcycles, and it raises the question of the definition of bias. One could say that insurance people are “biased” against thrill-seekers. And there are surely similar correlations in the financial industry for who pays bills and who doesn’t. If someone posts something like, “I hope all my bill collectors see this message. I’m not paying, stop calling!”, or “I can’t believe someone was stupid enough to give me a credit card again. Bankruptcy number 4 in 6 months!”.

Those are extreme examples, but it shows that it’s absolutely possible for a signal to correlate to a behavior, and that behavior can correlate either positively or negatively to the thing you care about—in this case the chances that someone will pay off their loans.

This is a definition of unfairness specific to this discussion, not an overarching one.

And that’s the difference between unfairness and bias: unfairness is where someone is negatively judged due to someone’s characteristics or behavior, when it’s either difficult or impossible for them to change what they were judged on. Examples might include being short, or obese, or introverted, or bad with money, or undependable. Those might be perfectly valid reasons for someone to choose not to interact with you, but it’s also not completely fair that they do so.

And bias is where a judgement is not valid, where it’s based in someone’s personal experience, is not supported by data, and is quite often powered by prejudice or bigotry. Examples would be denying someone’s loan for a house—even though the algorithm told you they’re extremely likely to pay it off—because you don’t want any more of “those people” in your neighborhood. And biased data or algorithms would have those sorts of unfounded connections built into them.

And remember, if companies just wanted to deny people from getting loans they could do that pretty easily. But they aren’t in the business of denying loans; they’re in the business of granting loans. Any time a company denies someone who could have paid, they give money to their competitors or leave it on the table. So they are incentivized not to give money to people they like, but to people who will pay that money back—regardless of background, appearance, etc.

So, in order to refine that signal, and find the people who are most likely (or not) to pay back a loan, the algorithms need more data. The signal from social media can absolutely help lock onto that truth about a person, as it tells you how someone speaks, how they interact with others, and how they spend their time.

The accuracy of the data is what matters.

This doesn’t mean AI is fair, or that it’s nice. It isn’t. But life isn’t nice, either. Machine Learning, like the Amazon Forest or an Excel Spreadsheet, is neither good nor evil. It’s just telling you what is.

Machine Learning locks onto truth by looking at signal. And in this case, as with many other deeply human issues like employment and crime, we might not like the truth that it uncovers.

But when that happens, and we’re shown some people are more likely to get in motorcycle accidents, or pay their bills on time, or default on a loan, or be violent—it’s really telling us about the society we have built for ourselves. It tells us that there are some who have had more advantages and opportunities than others, which lead to different outcomes—and that should not surprise us.

Machine Learning is simply a tool that allows us to look inside this puzzle of chaotic human behavior, and to find patterns that enable predictions.

When that tool shows us something uncomfortable—something we wish we didn’t see—we must resist the temptation to blame what we used to see it. It’s what you’re looking at that’s disturbing and needs to be fixed.

It’s the unfairness built into society itself.

—

Become a direct supporter of my content for less than a latte a month ($50/year) and get the Unsupervised Learning podcast and newsletter every week instead of just twice a month, plus access to the member portal that includes all member content.

Daniel Miessler's Blog

- Daniel Miessler's profile

- 18 followers