Gennaro Cuofano's Blog, page 5

September 28, 2025

The Archetype-Driven Organization: How AI Startups Build Enduring Advantage

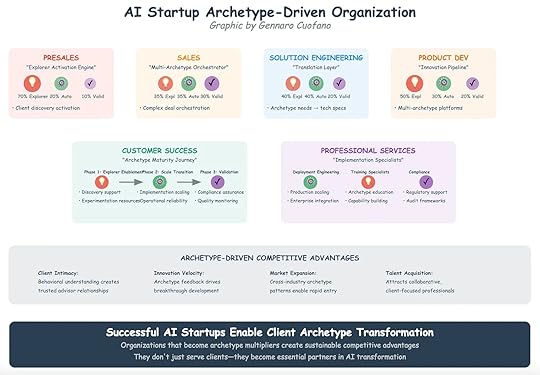

AI startups don’t win by selling generic software. They win by embedding themselves into client archetypes—understanding not just what a client does but how they operate across maturity phases. Startups that organize around these archetypes build trust faster, accelerate innovation velocity, and capture market expansion opportunities that competitors miss.

This is the logic of the Archetype-Driven Organization.

From Generic Functions to Archetype Multipliers

Traditional B2B organizations divide roles (presales, sales, engineering, product, services) into functional silos. Each function optimizes for efficiency, but often loses touch with how clients actually change over time.

An archetype-driven startup does the opposite. Every function aligns with client archetypes—Explorer, Automator, Validator—and acts as a multiplier. This creates resonance across the buyer journey, from initial curiosity to scaled adoption.

The result is an organization that doesn’t just sell tools—it enables client transformation.

Core Organizational Functions

Presales: Explorer Activation Engine

- Archetype mix: 70% Explorer, 20% Automator, 10% Validator.
- Mission: Activate client discovery.
- How it works: Early-stage clients don’t know what’s possible. Presales teams guide them through exploration, providing experimentation resources and framing use cases. This creates momentum before procurement begins.

Sales: Multi-Archetype Orchestrator

- Archetype mix: Balanced across Explorers, Automators, and Validators.
- Mission: Orchestrate complex deals across multiple archetypes.
- How it works: Modern AI deals span multiple stakeholders. Sales must translate across different archetypal logics: exploration (curiosity), automation (efficiency), and validation (compliance). The best sellers operate less like hunters and more like conductors.

Solution Engineering: Translation Layer

- Archetype mix: 40% Explorer, 40% Automator, 20% Validator.
- Mission: Convert archetype needs into technical specifications.
- How it works: Clients often can’t articulate what they need in technical terms. Solution engineers act as translators, mapping domain-specific challenges into machine-readable architectures.

Product Development: Innovation Pipeline

- Archetype mix: 50% Explorer, 30% Automator, 20% Validator.
- Mission: Build platforms that serve multiple archetypes simultaneously.
- How it works: Product teams don’t just solve current needs. They monitor archetype feedback loops, using Explorer signals to push innovation, Automator feedback to drive usability, and Validator demands to ensure compliance. This makes product roadmaps more resilient.

Customer Success: Archetype Maturity Journey

- Phase 1: Explorer Enablement → Discovery support and experimentation.
- Phase 2: Scale Transition → Operational reliability and automation.
- Phase 3: Validation → Compliance assurance and quality monitoring.
- Mission: Guide customers through their archetype evolution.
- How it works: Success isn’t just renewals. It’s moving clients up the archetype ladder, embedding the startup deeper into their workflows as they scale.

Professional Services: Implementation Specialists

- Deployment Engineering → Production scaling, enterprise integration.
- Training Specialists → Archetype education, capability building.
- Compliance → Regulatory support, audit frameworks.
- Mission: Close the gap between experimentation and enterprise readiness.
- How it works: Professional services ensure that clients don’t stall after pilots. By handling scaling, training, and compliance, they reduce friction and accelerate adoption.

Archetype-Driven Competitive Advantages

Client Intimacy

- Archetype awareness creates behavioral empathy.
- Teams become trusted advisors, not vendors.

Innovation Velocity

- Feedback from archetypes drives continuous breakthrough development.
- Explorers signal emerging use cases before the market sees them.

Market Expansion

- Archetype patterns repeat across industries.
- Learnings from one vertical accelerate entry into another.

Talent Acquisition

- Archetype-driven organizations attract collaborative, client-focused professionals.
- Employees see themselves as partners in transformation, not just quota carriers.

Why This Matters

Most AI startups fail not because their technology is weak, but because they fail to integrate into client transformation journeys. They get stuck in pilot purgatory, unable to cross from exploration to enterprise-wide adoption.

The archetype-driven organization avoids this trap. It aligns every function around the client’s archetype journey. Instead of selling a point solution, it becomes the infrastructure for client maturity.

This alignment produces compounding advantages: higher trust, faster innovation, broader markets, and stickier adoption.

Conclusion: From Startup to Archetype Multiplier

The most successful AI startups don’t just close deals. They enable clients to evolve. They build archetype multipliers: presales teams that activate explorers, sales that orchestrate across archetypes, product teams that design multi-archetype platforms, and services that push clients toward maturity.

These startups don’t just serve clients. They become essential partners in transformation. In a world where AI is reshaping every industry, that’s the difference between a tool vendor and a category-defining company.

The post The Archetype-Driven Organization: How AI Startups Build Enduring Advantage appeared first on FourWeekMBA.

September 27, 2025

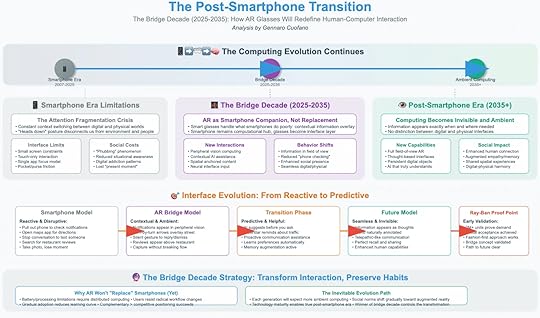

The Post-Smartphone Transition: The Bridge Decade of Computing (2025–2035)

Every major wave of computing has been defined by the interface that connected humans to machines. The PC era was defined by the keyboard and mouse. The smartphone era was defined by multi-touch screens. Now, as smartphones approach their maturity curve, the industry faces the question: what comes next?

The answer isn’t sudden replacement, but a decade-long bridge. Between 2025 and 2035, augmented reality glasses and neural interfaces will begin to complement, not replace, the smartphone. This bridge decade will redefine interaction, shifting from reactive, screen-driven behavior to predictive, ambient computing.

The Limits of the Smartphone Era

Since 2007, the smartphone has been the dominant interface for digital life. Yet its limitations have become increasingly clear.

- Attention Fragmentation Crisis: Smartphones force constant context switching between physical and digital worlds. Every notification pulls users away from the present moment, creating cognitive overload and reducing situational awareness.
- Interface Limits: Small screens restrict context, and touch-only input remains limited. Users must open apps, search manually, and click through menus, processes that feel increasingly outdated.
- Social Costs: The “heads down” posture has become emblematic of smartphone culture. Phubbing (phone-snubbing) erodes social presence, while digital addiction patterns are now recognized as a public health issue.

The smartphone succeeded because it condensed the internet into the palm of our hand. Its limitation is that it locks our attention inside the screen.

The Bridge Decade (2025–2035): AR as Companion, Not Replacement

The next decade will not see smartphones disappear overnight. Instead, AR glasses and neural interfaces will emerge as companions. Smartphones will remain the computational hub, but glasses will become the interface layer.

- New Interactions: Peripheral vision displays, contextual AI assistance, spatially anchored content, and neural inputs will extend capabilities.
- Behavior Shifts: Information will appear in the field of view, reducing the need to “check phones.” Users will experience enhanced social presence and seamless blending of digital and physical environments.

The result is not disruption through replacement, but transformation through complementarity. Smartphones will handle processing and legacy apps. Glasses will handle contextual overlay, predictive cues, and ambient computing tasks.

Interface Evolution: From Reactive to Predictive

The bridge decade is best understood as a transition in interface design.

Smartphone Model (Reactive & Disruptive)

- Users react to notifications.
- Must manually open apps, search, and scroll.
- Interaction interrupts the flow of life: taking a photo means missing the moment.

AR Bridge Model (Contextual & Ambient)

- Notifications appear in peripheral vision.
- Directions overlay directly onto streets.
- Reviews pop up above restaurants without searching.
- Users can silently dismiss or reply with subtle gestures.

Transition Phase (Predictive & Helpful)

- AI suggests before you ask.
- Memory augmentation integrates with daily life.
- Interfaces become proactive, contextual, and learning-driven.

Future Model (Seamless & Invisible)

- Information appears as thoughts.
- Telepathic-like communication becomes possible.
- Digital and physical layers merge into one shared experience.

Proof Points: Why the Bridge Is Credible

The Ray-Ban Meta smart glasses provide the first proof that the bridge model works. With over 2 million units sold and social acceptance growing, they validate consumer appetite for “heads-up” computing. Unlike failed experiments such as Google Glass, the Ray-Bans succeed because they are socially acceptable, fashion-first devices with utility layered in.

This demonstrates the bridge concept: AR glasses don’t need to replace smartphones immediately. They can succeed as accessories, handling new tasks without forcing users to abandon existing habits.

Why Smartphones Won’t Disappear (Yet)

There are strong reasons smartphones will remain indispensable through 2035:

- Battery and Processing Limits: Glasses require distributed computing power, often offloaded to smartphones.
- Habit Inertia: Users have entrenched workflows (banking, messaging, entertainment) that won’t move overnight.
- Complementary Value: The best adoption strategy is not confrontation, but coexistence. Glasses will handle what phones cannot: contextual, hands-free, predictive interaction.

The analogy is clear: just as the PC didn’t disappear when the smartphone arrived, the smartphone won’t vanish when AR emerges. But its role will shift from primary interface to background hub.

The Post-Smartphone Era (2035+): Ambient Computing

Beyond 2035, we enter the true post-smartphone world: ambient computing. At this stage, the distinction between physical and digital dissolves.

- New Capabilities: Full field-of-view AR, thought-based neural interfaces, and persistent digital objects.
- Social Impact: Enhanced memory, shared social experiences, and seamless blending of real and digital presence.
- Invisible Computing: Interfaces fade into the background. Information appears exactly when needed, without active searching or device management.

The smartphone’s limitation was forcing humans to adapt to the device. Ambient computing flips this: devices adapt seamlessly to humans.

Strategic Implications: How to Build for the Bridge Decade

The bridge decade is not just a technological shift; it’s a strategic one. Companies that succeed will recognize three realities:

- Preserve Habits While Transforming Interaction: Consumers resist radical breaks. Products that succeed (Ray-Ban Meta, Apple Watch) work with existing behavior patterns while subtly shifting them.
- Companionship, Not Replacement: AR glasses positioned as companions, not substitutes, will accelerate adoption. Over time, dependency shifts naturally.
- Positioning for Predictive Interfaces: The real value lies not in hardware but in predictive AI that transforms interactions from reactive to proactive. Companies that master contextual intelligence will own the post-smartphone era.

The Bridge Decade Strategy

Meta, Apple, Google, and Snap are all pursuing different strategies, but the same law applies: each generation will expect more ambient computing. Social norms will gradually shift toward augmented presence.

- Meta is betting on emotional adoption through fashion-first devices.
- Apple is playing premium-to-mass market integration.
- Google seeks platform ubiquity via Android XR.
- Snap leans on AR-native ecosystems and developer-first tools.

Whichever strategy wins, the outcome is the same: the smartphone becomes the hub, but no longer the interface. The bridge decade transforms interaction while preserving continuity.

Conclusion: The Inevitability of the Post-Smartphone World

From 2025 to 2035, we enter the bridge decade of computing. AR glasses won’t replace smartphones, but they will erode their centrality by solving what phones do poorly: contextual information, peripheral interaction, and predictive assistance.

By 2035, computing will move beyond screens entirely. Information will appear ambiently, interaction will be thought-driven, and digital and physical layers will harmonize.

The transition will not be abrupt, but evolutionary. Just as the PC quietly faded into the background as the smartphone rose, the smartphone will gradually cede its role to ambient computing.

The companies that understand this shift—that build for transition, not disruption—will control the next era of computing.


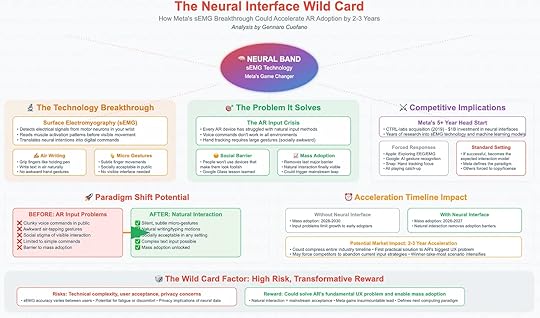

The Neural Interface Wild Card: Meta’s sEMG Bet and the Acceleration of AR

Every major computing platform has been defined by its input method. The mouse enabled graphical user interfaces. Multi-touch made the smartphone usable at scale. Voice assistants hinted at hands-free interaction, but never truly broke through. For augmented reality, the greatest unsolved problem has always been input.

How do you control a device that overlays digital information on the real world without making the user look awkward? How do you make AR interaction as seamless as typing on a keyboard or tapping on a screen? Until now, the answers have been unsatisfactory—voice commands fail in noisy or private settings, hand gestures are too large and socially awkward, and clunky air-tapping makes users feel ridiculous.

This is the AR input crisis. And Meta believes it has the solution.

The Technology Breakthrough

Meta’s neural band, powered by surface electromyography (sEMG), detects electrical signals from motor neurons in the wrist. By reading muscle activation patterns before visible movement, it translates neural intentions into digital commands.

This breakthrough unlocks two powerful interaction models:

- Air Writing: Grip fingers as if holding a pen and write text in the air naturally, with no awkward mid-air tapping and no keyboard required.
- Micro Gestures: Subtle finger movements, nearly invisible to outsiders, trigger commands. These gestures are socially acceptable, unobtrusive, and work anywhere.

The genius lies in pre-motion detection. The band captures the signal before muscles visibly move, allowing for fluid, low-latency input that feels natural. The result is a silent, discreet, and universal input method that finally makes AR practical for everyday use.

The Problem It Solves

The input crisis has always been AR’s bottleneck. Every AR headset or glasses project has hit the same wall: how do you tell the device what you want without friction?

- Voice commands don’t work in crowded, public, or quiet environments.
- Hand tracking requires large, visible gestures that are socially awkward.
- Touch controls (on glasses frames) are limited and unintuitive.

This isn’t just a UX annoyance; it’s a barrier to adoption. If people feel foolish using AR in public, they won’t wear the devices.

The neural interface removes this barrier. Subtle micro-gestures solve the social stigma problem. Complex text input becomes possible. Interaction looks natural, not experimental. This unlocks the mass adoption leap AR has been waiting for.

Paradigm Shift Potential

The difference before and after neural interaction is profound.

Before (Current AR Input):

- Clunky air-taps
- Limited commands
- Social stigma of visible interaction
- Awkward and error-prone

After (sEMG Neural Interface):

- Silent, subtle micro-gestures
- Natural writing and typing motions
- Socially invisible input
- Complex, nuanced interaction possible
- Mass adoption unlocked

In other words: the neural interface has the same potential impact on AR that multi-touch had on smartphones. It turns a futuristic novelty into a usable platform.

Competitive Implications

Meta’s advantage here isn’t incremental; it’s structural. The company has a five-year head start thanks to its 2019 acquisition of CTRL-labs for roughly $1 billion. Since then, Meta has invested heavily in building sEMG machine learning models and hardware prototypes.

Competitors are behind:

- Apple is exploring EEG/EMG pathways, but still relies on cautious iteration.
- Google is experimenting with gesture recognition, but lacks a breakthrough input model.
- Snap has focused on hand tracking, which remains limited.

If Meta’s neural band works at scale, it doesn’t just give them an edge; it sets the standard. The risk for rivals is that neural input becomes the expected model for AR, forcing everyone else into copycat mode. In platform transitions, whoever defines the interaction paradigm tends to define the platform itself.

Acceleration Timeline Impact

Without a neural interface, mass adoption of AR glasses would likely arrive between 2028 and 2030. Input problems would limit adoption to enthusiasts and early tech adopters.

With a neural interface, that timeline compresses dramatically. Mainstream adoption could begin as early as 2026–2027, shaving two to three years off the curve.

The potential market impact is enormous:

- Industry-wide timelines compress.
- Current input strategies (voice, hand tracking) may become obsolete overnight.
- Competitive pressure intensifies as Meta gains first-mover advantage.
- Winner-take-most dynamics accelerate.

This is why the neural interface isn’t just a feature; it’s a catalyst.

The Wild Card Factor

Of course, every breakthrough carries risks. Neural interfaces face several:

- Technical complexity: sEMG accuracy varies across users. Training the system to understand subtle signals consistently is a massive challenge.
- User acceptance: Will consumers wear a neural band daily? Will it feel comfortable?
- Privacy concerns: Neural data carries sensitive implications. If Meta collects signals from your motor neurons, how will that data be stored, used, or monetized?

These risks mean the neural band isn’t guaranteed. It could stumble in execution, face regulatory hurdles, or simply fail to win consumer trust.

But the reward is transformative. If it works, it solves AR’s single biggest UX bottleneck and triggers mainstream adoption years ahead of schedule.

High Risk, High Reward

The neural interface is Meta’s wild card. It’s risky, technically demanding, and socially untested. But if it succeeds, it gives Meta insurmountable leverage:

- Defines the paradigm: Meta sets the standard for AR input.
- Unlocks adoption: Natural interaction removes the final barrier to mass adoption.
- Secures ecosystem advantage: Developers and consumers gravitate toward the platform with the best input model.

In the computing stack, input has always been destiny. The neural interface could be the moment AR shifts from experimental to inevitable.

Conclusion: Betting the Future on the Wrist

Meta is making a bold wager. While Apple bets on premium integration and Google on platform ubiquity, Meta is betting on solving the hardest problem of all: input. If it wins, it doesn’t just compete in AR; it defines it.

The stakes are enormous. If neural input succeeds, AR adoption could accelerate by two to three years, reshaping industry timelines and forcing competitors into a defensive race. If it fails, Meta risks sinking billions into a high-risk technology that consumers reject.

This is why it’s a wild card. But history suggests that the companies that redefine input methods often redefine computing itself. The mouse gave us the PC. Multi-touch gave us the iPhone. If sEMG neural bands succeed, they could give us the first truly mainstream AR platform.

Meta is betting the future of AR on the wrist. And if they’re right, the next computing revolution won’t be in your hand or on your face—it will start in your neurons.


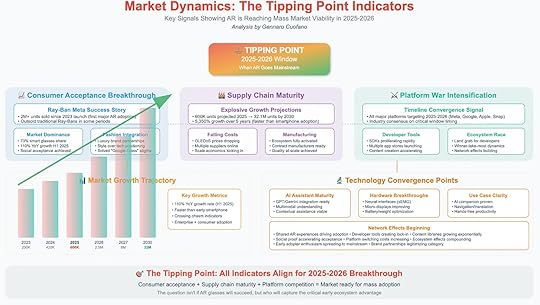

Market Dynamics: The Tipping Point Indicators

Every transformative technology crosses a moment where isolated advances and early adoption converge into inevitability. For augmented reality, that moment is arriving in 2025–2026. After years of cautious progress, false starts, and niche adoption, AR is now hitting the conditions that signal mass market viability. The tipping point isn’t speculative—it is visible in consumer acceptance, supply chain maturity, platform intensification, and technology convergence.

Meta’s Ray-Ban story has been the proof-of-demand case study, but this is no longer about one company. Instead, all indicators point to the same critical window: AR will go mainstream not through a single product launch, but through the alignment of consumer readiness, industrial capability, and competitive acceleration.

Consumer Acceptance Breakthrough

The biggest question for AR has never been technical feasibility; it has been social acceptability. Google Glass in 2013 failed not because it lacked vision, but because it misread culture. Clunky design, privacy concerns, and social stigma doomed it.

Meta’s Ray-Ban partnership flipped the script. By embedding AR into a fashion brand with cultural cachet, Meta solved the stigma problem. Two million units sold since 2023 created the first real mass adoption event in AR’s history. The glasses outsold traditional Ray-Bans in some periods, showing that consumers weren’t just tolerating the devices—they were choosing them.

Market dominance followed: Meta captured 73% of the smart glasses market, posting 110% year-over-year growth in H1 2025. These numbers mirror the adoption curve of early smartphones and signal the critical psychological shift: AR has crossed into cultural normalcy. Consumers no longer see AR glasses as futuristic experiments; they see them as lifestyle accessories.

Fashion integration is the silent driver here. Partnerships with luxury brands turned AR into a style decision before it became a tech decision. That inversion—style first, tech second—was the key to breaking through.

Supply Chain Maturity

Hardware adoption doesn’t scale without supply chain readiness. For AR, the economics of displays and optics have always been the constraint. In 2025, that constraint is breaking.

- Explosive Growth Projections: 600,000 units projected for 2025, scaling to 32.1 million by 2030. This represents over 5,000% growth in five years, outpacing the early trajectory of smartphones.
- Falling Costs: OLED and microdisplay prices are dropping, multiple suppliers are online, and scale economics are kicking in. Hardware components once exotic are becoming commoditized.
- Manufacturing Ecosystem: Contract manufacturers are fully activated. Quality and scale have been achieved, meaning AR glasses can now be produced with the same industrial rhythm as smartphones.

This is the inflection point supply chains always bring. What was once scarce becomes abundant, what was expensive becomes cheap, and what was niche becomes mainstream. Supply chain maturity means AR hardware can now ride the same wave of cost curves that fueled the explosion of mobile.

Platform War Intensification

Consumer acceptance and supply chain maturity are necessary, but they are not sufficient. The final spark comes from competitive intensity, and in AR the platform war is now fully ignited.

- Timeline Convergence: All major platforms (Meta, Google, Apple, Snap) are now targeting 2025–2026. Industry consensus has formed around this adoption window.
- Developer Tools: SDKs are proliferating. Multiple app stores are launching. Content creation is accelerating, ensuring that consumers will find utility when they unbox devices.
- Ecosystem Race: Platforms are fighting for developer loyalty, and network effects are beginning to build. Just as the iOS App Store locked in the iPhone’s dominance, the AR platform that secures developer gravity in 2025–2026 will dictate the decade ahead.

Winner-take-most dynamics dominate platform wars. Developers don’t want to build for five platforms; they want to build for the one where users are. This convergence creates urgency and forces acceleration. Meta’s early push triggered the race, but now all players are locked in.

Technology Convergence Points

While acceptance, supply, and competition set the stage, technology convergence provides the final confirmation that AR is ready for mass adoption.

- AI Assistant Maturity: GPT and Gemini-class models have proven multimodal understanding. Contextual assistance (navigation, translation, task automation) is now viable. AR glasses are no longer dumb displays; they are intelligent companions.
- Hardware Breakthroughs: Neural interfaces (sEMG), micro-display improvements, and battery-weight optimization have solved critical friction points. Devices are lighter, more usable, and socially acceptable.
- Use Case Clarity: The killer apps are becoming obvious: AI companions, navigation, translation, and hands-free productivity. Consumers no longer ask, “Why do I need AR glasses?” The value proposition is self-explanatory.
- Network Effects: Shared AR experiences are driving adoption. Social applications, multiplayer AR, and developer-created lock-in mechanisms are creating compounding momentum.

The convergence of AI and AR is particularly transformative. AR without intelligence was always limited to overlaying information. AR with agentic AI becomes a different category altogether: proactive, adaptive, and personalized.

The Market Growth Trajectory

The indicators translate into numbers that tell their own story:

- 2023: 200,000 units
- 2024: 420,000 units
- 2025: 600,000 units (tipping point year begins)
- 2026: 2.5 million units (crossing the chasm)
- 2027: 8 million units
- 2030: 32 million units projected

This trajectory represents not just growth, but acceleration. AR adoption is now expanding faster than the early smartphone market did, a sign of compressed timelines and stronger network effects.

The Tipping Point: 2025–2026

All these factors align in the same critical window.

- Consumer acceptance has been achieved.
- Supply chains are mature and ready to scale.
- Platform wars are intensifying, forcing accelerated launches.
- Technology convergence points are removing friction and clarifying use cases.

The conclusion is unavoidable: 2025–2026 is the tipping point when AR crosses into mainstream adoption.

The question is no longer whether AR glasses will succeed—it is which ecosystem will capture the critical early developer and consumer loyalty.

Conclusion: The Ecosystem Advantage

When all indicators align, adoption becomes inevitable. What remains uncertain is leadership.

Meta has the advantage of consumer validation and developer momentum. Apple has the brand power to dictate demand once its products land. Google can leverage Android ubiquity, while Snap bets on cultural edge and developer intimacy.

But regardless of who wins, the tipping point is here. AR is no longer a promise—it is a market. The 2025–2026 window will decide not just which devices succeed, but which ecosystems define the next decade of computing.

The future of AR isn’t in doubt. Only its winners are.


The Acceleration Timeline: Meta’s Strategic Catalyst Effect

In technology markets, timing matters as much as product. Some companies wait for “perfect” technology before launching, prioritizing polish over speed. Others move early, betting that capturing ecosystem momentum outweighs initial imperfections. Meta has chosen the latter path, and in doing so, has reshaped the entire AR industry’s timeline.

The launch of the Ray-Ban AR glasses at $799, combined with the reveal of the Orion prototype and a breakthrough in neural band control, has done more than expand Meta’s own roadmap. It has compressed the timelines of Apple, Google, Snap, and others—forcing the industry forward by at least two years. Meta’s aggressive posture has created a catalytic effect, igniting a domino chain across the AR ecosystem.

Before vs. After Meta Connect 2025

Prior to September 2025, the industry timelines looked predictable.

- Apple was targeting 2027 for smart glasses. Its focus was on premium polish, waiting until hardware could meet its famously high standards. Tim Cook himself signaled caution: AR would arrive, but not until the “perfect” device was ready.
- Google was non-committal, talking vaguely about a “future” Android XR platform but offering no launch dates. The focus remained on laying down ecosystem pipes rather than consumer launches.
- Snap was slowly iterating, pointing to a 2026 consumer launch but still seen as niche compared to Apple’s looming scale or Meta’s capital muscle.

Meta’s Connect 2025 event detonated that equilibrium.

- Apple immediately accelerated its timeline, with Cook described as “hell bent” on a 2026 launch.
- Google confirmed a Fall 2025 Android XR launch, marking its first concrete commitment.
- Snap doubled down on its 2026 target, locking in its consumer push.

The industry timeline had collapsed from cautious, multi-year windows to a compressed 18–24 month sprint.

Meta’s Strategic Catalyst Moves

Three strategic decisions turned Meta from a participant into the catalyst.

- Ray-Ban Display Launch: By launching the first mainstream AR glasses under $1,000, Meta signaled that AR was no longer experimental. At $799, the glasses hit a consumer-accessible price point, immediately creating urgency for rivals.
- Neural Band Innovation: The introduction of a surface electromyography (sEMG) gesture band solved a fundamental interaction bottleneck: how to control AR without awkward or socially intrusive gestures. By moving this breakthrough from lab to product, Meta forced competitors to confront the risk of being locked out of a new input paradigm.
- Developer SDK Release: Meta opened the gates for developers early, seeding its ecosystem with tools, APIs, and app store access. This ensured that while competitors debated timelines, Meta was already capturing developer mindshare, the scarce resource that decides platform wars.

Together, these moves compressed the adoption window and reframed AR as a here-and-now technology rather than a distant promise.

Forced Competitive Acceleration

Meta’s aggression left competitors with no choice but to accelerate.

- Apple: Accelerated to 2026 with seven projects in its pipeline, now positioned as a Cook-level personal priority. Apple’s brand can still dictate demand, but it lost the luxury of waiting for perfection.
- Google: Moved from vague promises to a concrete Fall 2025 Android XR launch. Its strength lies in ecosystem ubiquity, but the sudden shift reflects the pressure of Meta setting the pace.
- Snap: Committed firmly to a 2026 consumer launch with Snap OS 2.0. Its bet remains ecosystem-first, but now in a tighter race for developer mindshare.
- Amazon: Previously targeting late 2026 or beyond, Amazon now faces the risk of irrelevance. With its AR strategy still tied to Alexa integration, it risks being late to the developer land grab.
- Others (Samsung, Microsoft, etc.): Accelerated across the board, fearful of missing the critical 2025–2026 adoption window.

Meta turned what looked like a slow, staggered rollout into a high-stakes race.

Why This Acceleration Matters

The industry’s sudden compression is not just a matter of product launches; it reshapes the entire market dynamic.

- Market Window Compression: The critical 2025–2026 adoption window has been identified as decisive. Whoever secures developer loyalty during this period will likely define the ecosystem for the next decade.
- Supply Chain Activation: Component suppliers have accelerated production, driving OLED and optics costs down faster than expected. The ripple effect lowers barriers for all players.
- Ecosystem Race: With multiple app stores and developer SDKs launching simultaneously, the battle for developer mindshare has intensified. Content creation is accelerating, ensuring consumers won’t face empty hardware at launch.

By moving early, Meta has forced a collective sprint, erasing years of cautious waiting and pulling the industry into the present.

The Domino Effect

Meta’s approach reveals a clear strategic logic: force the market before the technology is perfect. This strategy creates both risk and opportunity.

Risk: Consumers could be disappointed by imperfect hardware, creating skepticism. Developers could be spread too thin across platforms. The industry could overshoot adoption readiness.

Opportunity: By defining the timeline, Meta shapes the competitive landscape. It seizes the narrative, captures developers, and dictates consumer expectations.

The result is a winner-take-most scenario, where a two-to-three-year acceleration could lock in ecosystem leaders before laggards even launch.

The New Competitive Reality

Meta’s catalyst effect changes the balance of power:

Apple no longer dictates the industry pace. For the first time in decades, it is reacting rather than leading.

Google must prove it can execute hardware launches, not just platforms.

Snap benefits from validation but faces increased pressure to scale fast.

Amazon risks sliding toward irrelevance unless it pivots aggressively.

The real beneficiaries may be consumers and developers. Consumers will see AR products years earlier than expected, while developers gain multiple platforms competing for their loyalty.

Conclusion: Meta’s Clock Is Now the Industry’s Clock

Meta has rewritten the AR industry’s timeline. By combining a bold hardware launch, an input breakthrough, and early developer engagement, it has pulled the future forward. Apple, Google, Snap, and others no longer control their own pacing; they must play by Meta’s clock.

This is the essence of the catalyst effect: a single player’s aggression triggers a domino chain, forcing an entire industry to accelerate. Whether Meta ultimately wins the AR wars remains uncertain. But one fact is undeniable: without Meta’s push, the industry would still be waiting for “perfect” technology.

Instead, the race is on now—and the next 18 months will decide the shape of the AR economy for the next decade.

The post The Acceleration Timeline: Meta’s Strategic Catalyst Effect appeared first on FourWeekMBA.

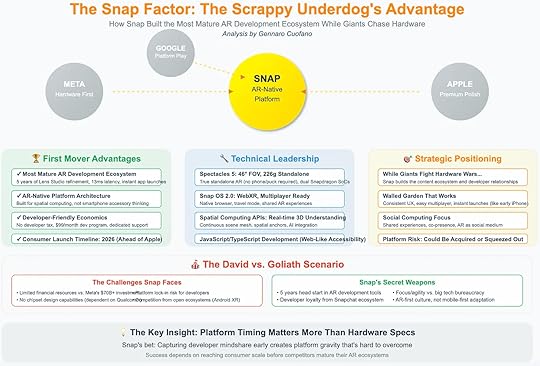

The Snap Factor: The Scrappy Underdog’s Advantage

When the AR wars are written into history, the spotlight will naturally fall on the giants—Meta, Apple, and Google. Billions in investment, proprietary silicon, and integrated ecosystems make them appear unstoppable. Yet hidden in plain sight is a scrappy underdog that has been quietly building the most mature AR development ecosystem in the industry: Snap.

While the tech titans fight expensive hardware wars, Snap has focused on cultivating a developer-first AR platform. This strategic bet—prioritizing ecosystem gravity over hardware supremacy—may prove decisive in the years ahead.

First Mover Advantages

Snap’s greatest strength lies in its five-year head start. Lens Studio, refined since 2018, has evolved into the most mature AR development environment on the market. Developers have spent years experimenting, iterating, and launching AR applications on Snapchat’s ecosystem. By 2025, Snap had achieved instant app launches with latency as low as 13 milliseconds, a technical feat that ensures AR feels native, not experimental.

This maturity translates into developer trust. While Meta and Apple are still convincing developers to build for their platforms, Snap already commands loyalty from thousands of AR creators worldwide.

Snap also avoided the strategic trap of treating AR as a smartphone accessory. From the beginning, it built an AR-native platform architecture, with tools designed for spatial computing rather than mobile add-ons. That decision positions Snap to move seamlessly into a future where AR isn’t an app feature—it is the operating environment itself.

Economically, Snap keeps developers happy by eliminating punitive costs. Instead of charging developer taxes like Apple’s App Store or platform fees like Meta, Snap offers a $99 monthly developer program with dedicated support. For early-stage developers, this predictability makes Snap the most accessible on-ramp into AR.

Finally, Snap’s consumer launch timeline—2026—puts it ahead of Apple and competitive with Meta. Unlike Apple’s premium-first approach, Snap’s goal is to democratize AR, appealing to younger audiences and grassroots adoption.

Technical Leadership

Despite being smaller than its rivals, Snap has made surprising technical strides.

Spectacles 5: Featuring a 46° field of view and weighing just 226 grams, Snap’s standalone glasses run on dual Snapdragon SoCs. Unlike previous versions, no phone or puck is required. This creates a self-contained AR experience, a critical leap toward mainstream usability.

Snap OS 2.0: With WebXR support and multiplayer readiness, the operating system supports native browsers, travel modes, and shared AR environments. The addition of a web-like API model ensures developer accessibility.

Spatial Computing APIs: Snap has built real-time 3D understanding capabilities, enabling scene mapping, anchors, and AI integration. This creates the technical scaffolding for AR-native applications beyond filters and lenses.

JavaScript/TypeScript Development: By using familiar languages, Snap reduces friction for millions of developers. The choice mirrors the strategy of the early web: make development accessible, not elitist.

Together, these innovations mean Snap’s platform isn’t an experiment; it’s already a functional ecosystem for AR-native experiences.

Strategic Positioning

Where the giants chase hardware polish and ecosystem lock-in, Snap is playing a different game: build the ecosystem before the hardware matures.

While giants fight hardware wars… Snap builds relationships with developers and creators. By the time Apple and Meta finalize their hardware, Snap will already have an entrenched ecosystem.

Walled garden that works: Snap’s approach mirrors Apple’s early iPhone era: a tightly controlled environment delivering consistent UX, fast multiplayer, and instant launches. For AR, where latency kills immersion, this trade-off makes sense.

Social computing focus: Snap views AR not as productivity software or luxury devices but as a social medium. Shared experiences, co-presence, and community engagement make AR playful, viral, and sticky.

Platform risk: The obvious downside is Snap’s vulnerability. With limited financial resources compared to Meta’s $70B+ investment in Reality Labs, Snap could be acquired, squeezed out by Android XR, or marginalized by hardware-dominant players.

Still, Snap’s agility is its secret weapon. Free from bureaucratic weight, Snap can move faster, adapt quicker, and take risks the giants would never consider.

The David vs. Goliath Scenario

Snap’s position is precarious yet promising. On one side, it faces existential risks:

Financial limits: Competing with trillion-dollar companies is inherently unequal.

Chipset dependency: Without proprietary silicon, Snap relies on Qualcomm and others, reducing control.

Platform lock-in: Developers tempted by Meta’s subsidies or Apple’s lucrative App Store could defect.

Yet Snap has two secret weapons:

A five-year lead in AR development tools. No competitor can erase the accumulated knowledge and loyalty built within Lens Studio.

A cultural alignment with its audience. Snap doesn’t need to “teach” users how to use AR. Its Gen Z user base is already fluent in lenses, filters, and spatial content.

This combination gives Snap a chance to punch above its weight, even in a market dominated by Goliaths.

Platform Timing Matters More Than Specs

The key insight driving Snap’s strategy is simple: in AR, platform timing trumps hardware specs.

Hardware will inevitably improve across the board. Field of view, weight, battery life: all players will converge on acceptable baselines by 2027.

What will not converge is developer gravity. Ecosystems that capture developers first create self-reinforcing loops: more apps attract more users, which attract more developers.

This is the exact dynamic that made Apple’s App Store dominant in mobile, even though Android outsold the iPhone in hardware volume. Snap is betting that the same principle applies in AR: capture developer mindshare early, and platform gravity will do the rest.

Conclusion: Snap’s Bet

Snap is not trying to outspend Meta or out-polish Apple. Instead, it is betting on ecosystem gravity. By building the most mature AR development environment, prioritizing accessibility, and focusing on AR as a social medium, Snap positions itself as the underdog with asymmetric potential.

The risk is existential. Without deep financial backing, Snap could be acquired or squeezed out. But if it holds its ground through 2026, it could emerge as the AR-native platform of choice, not because of superior hardware, but because it captured developer mindshare before the giants fully arrived.

In the end, AR history may remember Snap not as the company that built the flashiest glasses, but as the one that built the ecosystem foundation that made AR a cultural reality.

The post The Snap Factor: The Scrappy Underdog’s Advantage appeared first on FourWeekMBA.

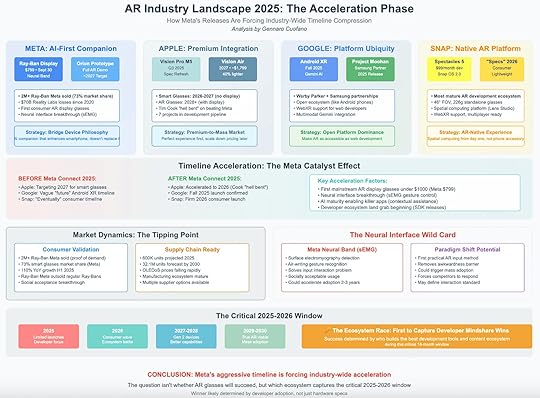

AR Industry 2025: The Meta Acceleration Effect

The augmented reality industry has lived in the shadow of “next year” promises for more than a decade. From Google Glass to Magic Leap to Snap Spectacles, the technology has cycled through hype and retreat, always postponed by missing hardware maturity, weak developer tools, or consumer indifference. That inertia broke in late 2025, when Meta’s aggressive moves compressed the entire industry timeline. What had been a slow burn toward 2027–2030 suddenly shifted into a high-stakes race defined by the 18-month window of 2025–2026.

The story is no longer whether AR glasses will succeed. The question is which ecosystem captures developer mindshare first—because in AR, as in mobile, platforms that win the developer race dominate the hardware cycle.

Meta: AI-First Companion

Meta has become the unexpected catalyst. Its Ray-Ban Meta glasses, priced at $799, proved that there is real consumer demand at scale. More than 2 million units have sold, giving Meta an estimated 73% share of the smart-glasses market. For the first time, AR glasses outsold the company’s own non-smart Ray-Bans, signaling a cultural tipping point.

The strategy is not to replace the smartphone but to bridge it—positioning glasses as an AI companion. That framing reduces the adoption barrier: these are not fully immersive AR headsets, but familiar form factors enhanced with real-time AI assistance. Meta’s Orion prototype, scheduled for a full AR demo by 2027, represents the longer-term push, but its current success has already forced competitors to accelerate.

Apple: Premium Integration

Apple’s approach reflects its classic strategy: perfection before mass adoption. The Vision Pro remains the flagship, while the Vision Air ($1,799, ~40% lighter) pushes the product closer to mainstream usability. Smart glasses without a display are expected by 2026–2027, with full AR displays targeted for 2028 or later.

Apple’s integration advantage lies in its ecosystem. iOS, Apple Silicon, and a tightly controlled developer environment create the conditions for premium-to-mass scaling. The company’s delay is not technical incapacity but brand protection: Apple will not risk releasing an unfinished product. However, Meta’s acceleration pressures Apple to move earlier than Tim Cook may prefer.

Google: Platform Ubiquity

Google, burned by its premature Glass launch a decade ago, is pursuing a platform-dominance strategy. With Android XR launching in Fall 2025 and Project Moohan in 2026, Google is treating AR like the web: open, API-driven, and hardware-agnostic. Partnerships with Samsung and Warby Parker point to a mass-market ubiquity play: AR as a default extension of Android.

By positioning itself as the Windows of AR, Google doesn’t need to win hardware. It needs to make AR development as accessible as web development, ensuring that most apps run on Android XR first. If this succeeds, Google controls the middle layer of the stack, regardless of which hardware dominates.

Snap: Native AR Platform

While Meta, Apple, and Google balance hardware strategies, Snap plays to its strengths: software-native AR. Spectacles 5 and the forthcoming “Specs” 2026 are less about raw specs than about Snap’s developer ecosystem. With Lens Studio and WebXR support, Snap has cultivated one of the most mature AR creation communities.

Snap’s strategy is to define AR as a content platform from day one, not as a smartphone accessory. If it can capture creator mindshare and consumer culture, Snap could carve out the TikTok-of-AR position, even without dominating hardware sales.

Timeline Compression: The Meta Catalyst

Before Meta Connect 2025, industry timelines looked conservative. Apple aimed at 2027. Google and Snap were vague. After Meta’s announcements:

Apple accelerated to 2026.

Google confirmed a 2025 launch.

Snap committed to a 2026 consumer wave.

Three factors explain this sudden compression:

Affordable hardware breakthrough: Glasses under $1,000 with credible functionality.

Neural interface leap: Surface electromyography (sEMG) enables wrist-based gesture control, solving the input problem that doomed earlier attempts.

AI maturity: Contextual assistance apps make glasses useful from day one, creating a reason to wear them.

The Neural Interface Wild Card

The biggest unknown is the neural interface. Meta’s sEMG band, capable of detecting micro-muscle signals for precise gesture recognition, could be the equivalent of the iPhone’s multitouch moment.

If socially acceptable and reliable, this interface removes the biggest friction in AR: input awkwardness. No waving hands in public, no clumsy voice commands. A subtle wristband becomes the gateway.

If successful, neural input could accelerate mass adoption by 2–3 years, forcing every competitor to follow Meta’s standard. If it fails, AR risks another cycle of half-solutions.

Market Dynamics: Tipping Points

Two tipping points are converging:

Consumer Validation: Meta’s Ray-Ban sales prove mainstream demand. For the first time, AR glasses outsold comparable non-AR products.

Supply Chain Readiness: With OLED microdisplays scaling, 600k units projected for 2025, and costs falling, the ecosystem can support volume.

This combination of cultural acceptance and supply maturity echoes the smartphone inflection point of 2007–2009.

The Critical 2025–2026 Window

The next 18 months will determine the industry’s trajectory. By 2027, the market will either have:

Ecosystem leaders with developer lock-in, driving Gen 2 devices with better capabilities.

Or a fragmented market where no player dominates, delaying true AR adoption until 2029–2030.

The outcome hinges not on specs but on developer tools and ecosystems. The company that wins developer mindshare by 2026 will define the AR economy for the next decade.

Conclusion: The Acceleration Economy

Meta’s aggressive push has forced the industry out of delay mode. The timelines are no longer 2030-plus; they are 2025–2026. The real competition is not hardware specs but ecosystem capture.

The decisive factor is simple: who builds the best development tools and captures developer loyalty first?

AR’s success or failure is no longer about whether consumers want glasses. That question has been answered. The real battle is whether developers build for Meta, Apple, Google, or Snap—and whether neural interfaces unlock the interaction model that makes AR inevitable.

The clock is ticking. The window is narrow. The acceleration has begun.

The post AR Industry 2025: The Meta Acceleration Effect appeared first on FourWeekMBA.

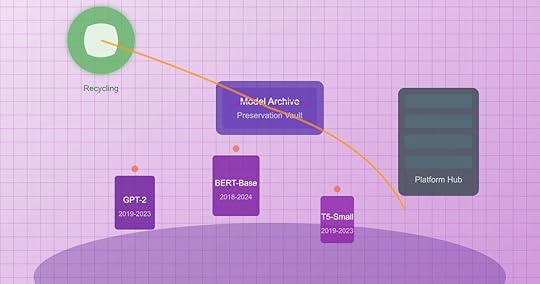

AI Model Graveyards: Digital Platforms for Deprecating, Archiving, and Recycling Obsolete Models

In the rapidly evolving landscape of artificial intelligence, the lifecycle of machine learning models has become increasingly complex and accelerated. As newer, more sophisticated models emerge with unprecedented frequency, the challenge of managing obsolete and deprecated AI systems has given rise to an entirely new category of platforms: AI Model Graveyards. These specialized digital ecosystems serve as comprehensive repositories for the systematic deprecation, archival, and recycling of AI models that have reached the end of their productive lifecycle.

The Growing Need for Model Lifecycle Management

The exponential growth in AI model development has created an unprecedented challenge in the technology landscape. Organizations worldwide deploy thousands of machine learning models across various applications, from simple recommendation engines to complex language models and computer vision systems. Each model represents significant investment in computational resources, training data, and human expertise, yet the relentless pace of innovation means that many models become obsolete within months or even weeks of deployment.

Traditional approaches to model management have proven inadequate for handling this volume and velocity of change. The absence of systematic processes for model retirement has led to numerous problems: resource waste through continued hosting of unused models, security vulnerabilities from unmaintained systems, compliance issues related to data retention, and lost institutional knowledge when models are simply deleted without proper documentation.

AI Model Graveyards emerge as a solution to these challenges, providing structured platforms that treat model deprecation as a thoughtful process rather than a simple deletion. These platforms recognize that even obsolete models contain valuable artifacts, insights, and components that can inform future development or serve specialized use cases.

Architecture and Core Components

The architecture of AI Model Graveyards reflects the complex nature of modern machine learning systems. At their foundation lies a comprehensive metadata management system that captures not just the model artifacts themselves, but the entire context surrounding their development, deployment, and performance.

The archival layer serves as the primary storage mechanism, designed to accommodate the diverse formats and structures of different model types. Unlike simple file storage systems, these platforms understand the intricate relationships between model weights, configuration files, training scripts, evaluation datasets, and deployment specifications. They maintain the integrity of these relationships while optimizing storage efficiency through intelligent compression and deduplication techniques.
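
One way to picture how an archival layer might keep those relationships intact is a manifest that lists each artifact alongside its role and a content hash. The sketch below is only an illustration: the `Artifact` and `ArchiveManifest` classes, the role labels, and the `churn-v3` model are hypothetical names, not part of any real platform.

```python
import hashlib
import json
from dataclasses import dataclass, field, asdict


@dataclass
class Artifact:
    """One archived file; `role` is a hypothetical label for its place in the model."""
    role: str      # e.g. "weights", "config", "training_script"
    path: str
    sha256: str


@dataclass
class ArchiveManifest:
    """Hypothetical manifest tying one model's artifacts together."""
    model_id: str
    framework: str
    artifacts: list[Artifact] = field(default_factory=list)

    def add(self, role: str, path: str, content: bytes) -> Artifact:
        # Record a digest so later integrity checks can detect corruption.
        artifact = Artifact(role, path, hashlib.sha256(content).hexdigest())
        self.artifacts.append(artifact)
        return artifact

    def verify(self, role: str, content: bytes) -> bool:
        # True only if some artifact with this role has a matching digest.
        digest = hashlib.sha256(content).hexdigest()
        return any(a.role == role and a.sha256 == digest for a in self.artifacts)

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Illustrative data only: the bytes stand in for real files.
manifest = ArchiveManifest(model_id="churn-v3", framework="pytorch")
manifest.add("weights", "churn-v3/model.pt", b"\x00\x01fake-weights")
manifest.add("config", "churn-v3/config.json", b'{"layers": 4}')
print(manifest.to_json())
```

Keeping the digest next to the role means a later restore can check that the configuration it loads is the one the weights were archived with.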

Version control integration forms another critical component, extending beyond traditional code versioning to encompass the full lineage of model evolution. This includes tracking experimental branches, parameter variations, and the complex genealogy of model families that spawn from common ancestors.
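
The genealogy idea can be sketched as a small graph walk. The example assumes lineage is recorded as a mapping from each model to its direct parents; the `PARENTS` table and the model names are invented for illustration.

```python
from collections import deque

# Hypothetical lineage records: model -> list of direct parent models.
PARENTS = {
    "base-v1": [],
    "base-v2": ["base-v1"],
    "sentiment-a": ["base-v2"],
    "sentiment-b": ["base-v2"],
    "sentiment-merged": ["sentiment-a", "sentiment-b"],
}


def ancestors(model: str, parents: dict[str, list[str]]) -> list[str]:
    """Return every ancestor of `model`, nearest first (breadth-first walk)."""
    seen: list[str] = []
    queue = deque(parents.get(model, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # a shared ancestor is reported only once
        seen.append(current)
        queue.extend(parents.get(current, []))
    return seen


def descendants(model: str, parents: dict[str, list[str]]) -> list[str]:
    """Return every model that has `model` somewhere in its ancestry."""
    return sorted(m for m in parents if model in ancestors(m, parents))


print(ancestors("sentiment-merged", PARENTS))
print(descendants("base-v2", PARENTS))
```

A query like `descendants` is what would let a team see which model families are affected when a common ancestor is retired.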

The documentation and knowledge preservation system captures the human insights that traditional backups cannot store. This includes design decisions, performance observations, failure analyses, and the contextual knowledge that developers accumulate through working with specific models. This information proves invaluable for future teams who might encounter similar challenges or seek to understand why certain approaches were abandoned.

Deprecation Workflow and Processes

The deprecation process within AI Model Graveyards follows a structured workflow designed to ensure nothing valuable is lost while maintaining operational efficiency. The process begins with deprecation triggers, which can be automatic based on performance metrics, manual based on strategic decisions, or time-based following predetermined lifecycles.
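
The three trigger types can be sketched as a single check. This is a minimal illustration under stated assumptions: the `ModelStatus` fields, the 0.80 accuracy floor, and the 365-day lifecycle are placeholders, and a real platform would draw them from its own monitoring and policy settings.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ModelStatus:
    """Hypothetical snapshot of a deployed model."""
    name: str
    deployed_on: date
    accuracy: float                 # latest monitored metric
    flagged_by_owner: bool = False  # set when a team decides to retire it


def deprecation_trigger(
    status: ModelStatus,
    today: date,
    min_accuracy: float = 0.80,     # assumed performance floor
    max_age_days: int = 365,        # assumed lifecycle length
) -> Optional[str]:
    """Return the first trigger that fires, or None if the model stays active."""
    if status.flagged_by_owner:
        return "manual"
    if status.accuracy < min_accuracy:
        return "automatic"
    if (today - status.deployed_on).days > max_age_days:
        return "time-based"
    return None


today = date(2025, 9, 28)
old_model = ModelStatus("ranker-v1", date(2024, 1, 1), accuracy=0.90)
print(deprecation_trigger(old_model, today))
```

Checking the manual flag first reflects one possible priority order; a team could just as reasonably report every trigger that fires.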

During the initial assessment phase, automated analysis tools evaluate the model’s current usage patterns, dependencies, and potential value for future reference. This assessment helps determine the appropriate level of preservation and whether any components merit immediate recycling into active projects.

The documentation capture phase involves both automated extraction of technical specifications and human input regarding lessons learned, use cases, and performance characteristics. Advanced platforms employ natural language processing to extract insights from deployment logs, user feedback, and developer communications, creating comprehensive knowledge profiles that extend far beyond technical documentation.

The migration process carefully handles the transition of any ongoing dependencies, ensuring that deprecated models can be safely removed from production environments without disrupting active systems. This includes graceful degradation strategies for models that serve as fallbacks or components in larger systems.

Intelligent Archival and Compression

The storage requirements for comprehensive model archival present significant technical challenges. Modern AI models, particularly large language models and complex neural networks, can consume terabytes of storage space. AI Model Graveyards employ sophisticated compression and optimization techniques specifically designed for machine learning artifacts.

Semantic compression analyzes model structures to identify redundancies and patterns that allow for more efficient storage without losing essential characteristics. This approach goes beyond traditional file compression to understand the mathematical relationships within model parameters, enabling dramatic size reductions while preserving the ability to extract meaningful insights.

Layered archival strategies recognize that different components of a model have varying long-term value. Critical elements like final weights and core architecture receive full preservation, while intermediate training checkpoints might be stored with lossy compression or representative sampling.
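
As a rough sketch of the representative-sampling idea, the function below keeps the first and last training checkpoints plus every n-th one in between. The tier labels in `TIER_POLICY` and the sampling interval are assumptions made for illustration only.

```python
# Hypothetical policy: which preservation tier each artifact kind receives.
TIER_POLICY = {
    "final_weights": "full",
    "architecture": "full",
    "training_checkpoint": "sampled",
}


def sample_checkpoints(steps: list[int], keep_every: int) -> list[int]:
    """Keep the first checkpoint, every `keep_every`-th after it, and the last."""
    if keep_every < 1:
        raise ValueError("keep_every must be at least 1")
    ordered = sorted(steps)
    kept = ordered[::keep_every]
    if ordered and kept[-1] != ordered[-1]:
        kept.append(ordered[-1])  # always retain the final checkpoint
    return kept


checkpoint_steps = list(range(1000, 11000, 1000))  # steps 1000 through 10000
print(sample_checkpoints(checkpoint_steps, keep_every=4))
```

With ten checkpoints and `keep_every=4`, four are retained, so the training trajectory stays visible at a fraction of the storage.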

The systems also implement intelligent deduplication across the entire archive, recognizing when multiple models share common components, training data, or architectural elements. This cross-model optimization can significantly reduce overall storage requirements while maintaining the integrity of individual model archives.
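
Cross-model deduplication is commonly built on content addressing: identical bytes hash to the same key and are stored once. The toy in-memory `DedupStore` below is a sketch of that general technique, not a description of any particular platform's storage engine, and the model and file names are made up.

```python
import hashlib


class DedupStore:
    """Toy in-memory content-addressed store (illustrative only)."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}            # digest -> content, stored once
        self.index: dict[tuple[str, str], str] = {}  # (model, name) -> digest

    def put(self, model: str, name: str, content: bytes) -> str:
        digest = hashlib.sha256(content).hexdigest()
        self.blobs.setdefault(digest, content)       # no-op if already stored
        self.index[(model, name)] = digest
        return digest

    def get(self, model: str, name: str) -> bytes:
        return self.blobs[self.index[(model, name)]]

    def stored_bytes(self) -> int:
        return sum(len(blob) for blob in self.blobs.values())


store = DedupStore()
tokenizer = b"shared-tokenizer-vocab" * 100
store.put("model-a", "tokenizer.json", tokenizer)
store.put("model-b", "tokenizer.json", tokenizer)   # same bytes, stored once
store.put("model-a", "weights.bin", b"weights-a")
store.put("model-b", "weights.bin", b"weights-b")
print(store.stored_bytes(), "bytes stored for", len(store.index), "archived files")
```

Each model's archive still resolves to its own complete set of files through the index, which is how integrity per model is kept while the shared tokenizer occupies space only once.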

Model Component Recycling and Reuse

One of the most innovative aspects of AI Model Graveyards involves the systematic recycling of model components for future use. Rather than treating deprecated models as static archives, these platforms actively mine them for reusable assets that can accelerate new development projects.

Transfer learning optimization identifies model layers, feature extractors, and embeddings that demonstrate strong performance characteristics across different domains. These components are catalogued with performance metadata, making them easily discoverable for researchers working on related problems.

The platforms maintain extensive databases of training strategies, hyperparameter configurations, and architectural patterns that proved effective in specific contexts. This knowledge recycling prevents teams from repeatedly solving similar optimization challenges and helps establish best practices across organizations.

Data artifact recovery represents another valuable recycling stream. Deprecated models often contain carefully curated datasets, preprocessing pipelines, and evaluation benchmarks that required significant effort to develop. These assets are extracted, documented, and made available for future projects, preventing the loss of valuable data work.

Knowledge Preservation and Institutional Memory

Beyond the technical artifacts, AI Model Graveyards serve as repositories for institutional knowledge that would otherwise be lost when teams change, projects end, or organizations restructure. This knowledge preservation function proves critical for maintaining continuity in AI development efforts.

The platforms capture design rationales, documenting why specific architectural choices were made, what alternatives were considered, and how decisions related to broader project goals. This information helps future teams understand the context behind existing models and avoid repeating failed experiments.

Performance insights and failure analyses receive special attention, as these represent some of the most valuable learning opportunities in AI development. The systems capture not just what worked, but detailed analysis of what didn’t work and why, creating a comprehensive knowledge base of approaches to avoid and problems to anticipate.

The human factor documentation includes team dynamics, collaboration patterns, and communication workflows that contributed to successful model development. This organizational knowledge helps teams structure future projects more effectively and understand the human processes behind technical achievements.

Compliance and Governance Integration

As AI systems become subject to increasing regulatory scrutiny, AI Model Graveyards play a crucial role in maintaining compliance with data protection, algorithmic accountability, and industry-specific regulations. The platforms provide comprehensive audit trails that demonstrate proper handling of sensitive data and responsible AI practices.

Data lineage tracking ensures complete visibility into how training data was sourced, processed, and used throughout the model lifecycle. This capability proves essential for compliance with privacy regulations that grant individuals rights over their data, including the right to deletion and the right to understand how their information was used.

The systems maintain detailed records of model performance across different demographic groups, enabling ongoing monitoring for bias and fairness issues even after models are deprecated. This historical perspective helps organizations understand how their AI systems have evolved in terms of ethical considerations and social impact.

Retention policies within AI Model Graveyards balance the value of long-term preservation with legal requirements for data deletion. The platforms implement sophisticated policies that can selectively remove sensitive elements while preserving the broader technical and educational value of deprecated models.
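
Selective removal can be sketched as a redaction pass over an archive record. The example assumes an archive is represented as a dictionary and that the policy supplies a set of sensitive field names; both the `SENSITIVE_KEYS` set and the record layout are hypothetical.

```python
import copy

# Assumed policy input: fields that must not outlive the retention period.
SENSITIVE_KEYS = {"training_samples", "user_ids"}


def apply_retention(archive: dict, sensitive_keys: set[str]) -> dict:
    """Return a copy of `archive` with sensitive fields replaced by a tombstone."""
    redacted = copy.deepcopy(archive)  # leave the caller's record untouched
    for key in sensitive_keys.intersection(redacted):
        redacted[key] = {"redacted": True}
    return redacted


archive = {
    "model_id": "support-bot-v2",
    "architecture": {"layers": 12, "hidden_size": 768},
    "training_samples": ["ticket text 1", "ticket text 2"],
    "user_ids": [101, 102],
}
cleaned = apply_retention(archive, SENSITIVE_KEYS)
print(sorted(cleaned))
```

Leaving a tombstone rather than deleting the key keeps a visible record that something was removed, which may matter for an audit trail.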

Integration with Development Ecosystems

Modern AI Model Graveyards function as integral components of broader machine learning development ecosystems rather than isolated archive systems. They integrate seamlessly with popular development tools, deployment platforms, and organizational workflows to minimize friction in the deprecation process.

Version control integration extends the familiar Git-based workflows that developers already use, making model deprecation feel like a natural extension of existing practices. Developers can deprecate models using familiar commands and workflows, reducing the learning curve and increasing adoption.

The platforms integrate with continuous integration and deployment pipelines to automate deprecation triggers based on performance metrics, deployment patterns, or strategic decisions. This automation ensures that deprecation happens consistently and promptly, preventing the accumulation of obsolete models in production environments.

API integration enables other development tools to query the graveyard for insights, retrieve archived components, or understand the history of specific model families. This connectivity makes the archived knowledge immediately accessible within active development workflows.

Collaborative Research and Open Science

AI Model Graveyards facilitate new forms of collaborative research by making deprecated models and their associated knowledge available to the broader research community. This openness accelerates scientific progress by allowing researchers to build upon previous work, understand failure modes, and explore alternative approaches.

Academic partnerships enable research institutions to access comprehensive model archives for educational purposes, providing students with realistic examples of model development cycles and the opportunity to learn from both successes and failures. This educational access helps prepare the next generation of AI practitioners with more complete understanding of the field.

The platforms support reproducibility research by maintaining complete environments and dependencies needed to recreate historical model behavior. This capability proves invaluable for researchers studying the evolution of AI capabilities or investigating specific phenomena across different model generations.

Cross-organizational collaboration becomes possible when multiple institutions contribute to shared AI Model Graveyards, creating collective knowledge bases that benefit entire research communities while respecting appropriate confidentiality boundaries.

Economic Models and Sustainability

The economics of AI Model Graveyards present interesting challenges and opportunities. While the platforms require significant infrastructure investment, they generate value through resource optimization, knowledge reuse, and reduced redundant development efforts.

Cost recovery models vary depending on the organizational context. Enterprise implementations typically justify costs through reduced storage waste, accelerated development cycles, and improved compliance capabilities. Academic and open-source implementations often rely on grant funding, institutional support, or collaborative cost-sharing arrangements.

The platforms create new economic opportunities through component marketplaces where valuable model artifacts can be licensed or shared. These marketplaces enable organizations to monetize their deprecated models while providing other teams with access to proven components and approaches.

Sustainability considerations extend beyond immediate costs to include the environmental impact of model storage and the broader efficiency gains from avoiding redundant development work. AI Model Graveyards contribute to more sustainable AI development by maximizing the value extracted from computational resources already invested in model training.

Technical Challenges and Innovation

The development of effective AI Model Graveyards requires solving numerous technical challenges that push the boundaries of existing systems and methodologies. Storage optimization for machine learning artifacts demands new approaches that understand the unique characteristics of model data.

Metadata standardization across different model types, frameworks, and organizations requires developing flexible schemas that can accommodate the diversity of AI systems while maintaining searchability and interoperability. This standardization effort involves collaboration across the entire AI community.

Search and discovery capabilities must handle the high-dimensional, complex relationships between models, data, and performance characteristics. Traditional database approaches prove inadequate for the nuanced queries that researchers and developers need to perform when searching for relevant archived models.

The platforms must also address version compatibility challenges as AI frameworks evolve rapidly, potentially making archived models inaccessible to future tools. This requires developing translation and adaptation capabilities that can bridge compatibility gaps across different framework versions.

Future Evolution and Emerging Patterns

The field of AI Model Graveyards continues to evolve rapidly as organizations gain experience with model lifecycle management and new technical capabilities emerge. Several trends are shaping the future development of these platforms.

Artificial intelligence is being applied to the graveyard systems themselves, creating intelligent archival assistants that can automatically identify valuable components, suggest recycling opportunities, and optimize storage strategies. These meta-AI systems learn from patterns across archived models to improve the efficiency and value of the graveyard operations.

Integration with emerging AI development paradigms, such as federated learning and edge computing, requires new approaches to distributed archival and knowledge sharing. These paradigms create additional complexity in model lifecycle management while also offering new opportunities for collaborative knowledge preservation.

The platforms are beginning to support more sophisticated analytical capabilities that can identify trends and patterns across large collections of archived models. This meta-analysis capability provides insights into the evolution of AI techniques and helps guide future research directions.

Conclusion: Preserving AI Heritage for Future Innovation

AI Model Graveyards represent a fundamental shift in how we think about the lifecycle of artificial intelligence systems. Rather than treating model deprecation as an end point, these platforms recognize it as a transformation that preserves valuable knowledge and assets for future innovation.

The comprehensive approach to model archival, documentation, and recycling ensures that the significant investments in AI development continue to generate value long after individual models become obsolete. This perspective transforms deprecation from a cost center into a value-creation opportunity that benefits entire organizations and research communities.

As the AI field continues to mature, the systematic management of model lifecycles will become increasingly important for maintaining competitive advantage, ensuring compliance, and fostering continued innovation. AI Model Graveyards provide the foundation for this systematic approach, creating digital ecosystems that honor the past while enabling the future of artificial intelligence development.

The success of these platforms ultimately depends on changing cultural attitudes toward failure and obsolescence in AI development. By celebrating and preserving the full journey of model development, including the failures and dead ends, AI Model Graveyards help create a more mature, sustainable approach to artificial intelligence innovation that builds systematically on collective knowledge and experience.

The post AI Model Graveyards: Digital Platforms for Deprecating, Archiving, and Recycling Obsolete Models appeared first on FourWeekMBA.

Federated AI Training Networks: Decentralized Model Development Without Data Sharing

The emergence of federated AI training networks represents a paradigm shift in how machine learning models are developed, particularly in environments where data privacy, security, and sovereignty are paramount. These decentralized systems enable multiple organizations to collaboratively train AI models without ever sharing their raw data, fundamentally changing the economics and politics of artificial intelligence development.

The Architecture of Decentralized Learning

Federated learning networks operate on a fundamentally different principle than traditional centralized AI training. Instead of aggregating data in a central location, these networks bring the computation to the data. Each participating node trains a local model on its own data, then shares only the model updates (mathematical representations of what it learned) rather than the data itself.
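The train-locally, share-only-updates loop described above can be sketched with a tiny linear model. This is a minimal illustration of federated averaging under simplifying assumptions (synchronous rounds, every node participates, a two-parameter model `y = w0 + w1 * x`); the function names are hypothetical and not taken from any particular framework.

```python
from typing import List, Sequence, Tuple

Weights = List[float]
Sample = Tuple[float, float]  # (x, y)


def local_update(weights: Weights, data: Sequence[Sample],
                 lr: float = 0.05, epochs: int = 5) -> Weights:
    """Run a few gradient-descent epochs on one node's private data."""
    w0, w1 = weights
    n = len(data)
    for _ in range(epochs):
        g0 = g1 = 0.0
        for x, y in data:
            err = (w0 + w1 * x) - y   # prediction error for this sample
            g0 += err
            g1 += err * x
        w0 -= lr * g0 / n
        w1 -= lr * g1 / n
    return [w0, w1]


def federated_average(local_weights: Sequence[Weights],
                      sizes: Sequence[int]) -> Weights:
    """Average node weights, weighting each node by its number of samples."""
    total = sum(sizes)
    dim = len(local_weights[0])
    return [sum(w[i] * n for w, n in zip(local_weights, sizes)) / total
            for i in range(dim)]


def run_round(global_weights: Weights,
              node_datasets: Sequence[Sequence[Sample]]) -> Weights:
    """One round: each node trains locally, only the weights travel back."""
    local = [local_update(list(global_weights), d) for d in node_datasets]
    sizes = [len(d) for d in node_datasets]
    return federated_average(local, sizes)
```

Note that `run_round` never reads one node's samples on behalf of another; the coordinator only sees the returned weight vectors and the dataset sizes.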

This architectural innovation addresses one of the most significant barriers to AI collaboration: data privacy. Organizations that previously couldn’t participate in collaborative AI development due to regulatory constraints, competitive concerns, or privacy obligations can now contribute to and benefit from collective intelligence without compromising their data assets.

The technical infrastructure supporting federated networks involves sophisticated orchestration systems that coordinate training across potentially thousands of nodes. These systems must handle asynchronous updates, varying computational capabilities, unreliable network connections, and heterogeneous data distributions while maintaining model convergence and performance.

Privacy-Preserving Technologies

At the heart of federated AI training networks lies a suite of privacy-preserving technologies designed to keep raw data at its source. Differential privacy adds carefully calibrated noise to model updates, bounding how much any shared update can reveal about an individual data point. Secure multi-party computation allows nodes to perform joint computations without revealing their inputs to each other.
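As a rough illustration of the differential privacy step, the sketch below clips an update to a maximum L2 norm and then adds Gaussian noise scaled to that norm. The function names and the `noise_multiplier` parameter are assumptions made for this example; the actual privacy guarantee depends on a separate accounting step that is not shown here.

```python
import math
import random
from typing import List


def clip_update(update: List[float], max_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]


def privatize_update(update: List[float], max_norm: float,
                     noise_multiplier: float,
                     rng: random.Random) -> List[float]:
    """Clip the update, then add Gaussian noise proportional to the clip norm."""
    clipped = clip_update(update, max_norm)
    sigma = noise_multiplier * max_norm   # noise scale tied to the sensitivity bound
    return [v + rng.gauss(0.0, sigma) for v in clipped]
```

Clipping matters as much as the noise: it caps the influence any single node's update can have, which is what lets the noise scale be chosen in advance.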

Homomorphic encryption takes this further, enabling computations on encrypted data without decryption. This means that even the model updates shared between nodes can remain encrypted, providing an additional layer of security. These cryptographic techniques, once considered too computationally expensive for practical use, are becoming increasingly viable as specialized hardware and optimized algorithms emerge.
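The idea of computing on encrypted values can be illustrated with a toy version of the Paillier cryptosystem, which is additively homomorphic: multiplying two ciphertexts yields an encryption of the sum of the plaintexts. This is a teaching sketch only. The tiny hard-coded primes are an assumption chosen for readability and offer no security, and real model updates would first need to be encoded as integers (for example, fixed-point), which is not shown.

```python
import math
import random
from typing import Tuple


def generate_keypair(p: int = 1009, q: int = 1013) -> Tuple[int, Tuple[int, int]]:
    """Return (public n, private (lam, mu)). Toy primes: NOT secure."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)   # lcm(p-1, q-1)
    mu = pow(lam, -1, n)   # valid shortcut because the generator is g = n + 1
    return n, (lam, mu)


def encrypt(n: int, m: int, rng: random.Random) -> int:
    """Encrypt integer m (0 <= m < n) under public key n."""
    n_sq = n * n
    while True:
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:   # r must be invertible modulo n
            break
    return (pow(n + 1, m, n_sq) * pow(r, n, n_sq)) % n_sq


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Combine two ciphertexts so the result decrypts to m1 + m2 (mod n)."""
    return (c1 * c2) % (n * n)


def decrypt(n: int, private_key: Tuple[int, int], c: int) -> int:
    """Recover the plaintext using the private key (lam, mu)."""
    lam, mu = private_key
    x = pow(c, lam, n * n)
    return ((x - 1) // n * mu) % n
```

In a federated setting, an aggregator could call `add_encrypted` on updates from many nodes without being able to read any single one, leaving decryption of the sum to whoever holds the private key.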

The privacy guarantees of federated networks extend beyond technical measures. The architecture itself provides privacy by design, as raw data never travels across networks or enters potentially vulnerable centralized storage systems. This distributed approach significantly reduces the attack surface for data breaches and unauthorized access.

Collaborative Intelligence Without Trust

Federated networks enable a new form of collaboration where trust in other participants isn’t required. Organizations can contribute to collective model improvement while maintaining complete control over their data. This trustless collaboration opens possibilities for competitors to work together on shared challenges without revealing competitive advantages.

The pharmaceutical industry exemplifies this potential. Drug discovery requires vast amounts of patient data, but privacy regulations and competitive dynamics prevent data sharing. Federated networks allow pharmaceutical companies to train models on collective datasets without exposing proprietary information or violating patient privacy. The result is better drug discovery models that benefit from diverse data while respecting all constraints.

Similarly, financial institutions can collaborate on fraud detection models without sharing customer transaction data. Each bank contributes learnings from its fraud patterns while keeping customer information secure. The collective model becomes more robust than any individual institution could develop alone.

Economic Models and Incentive Structures

The economics of federated AI training networks differ fundamentally from centralized approaches. Without a central entity controlling the data and model, new economic models emerge to incentivize participation and ensure fair value distribution.

Contribution-based rewards systems track the value each node adds to the collective model. Nodes that provide high-quality data or significant computational resources receive proportional benefits. This might take the form of improved model performance, monetary compensation, or governance rights in the network.
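One simple way to picture contribution tracking is a leave-one-out estimate: a node's contribution is how much the model's score drops when that node is excluded, and the reward pool is split in proportion. This is only one of several possible schemes. The `evaluate` callback (which scores a model trained on a given set of nodes) and both function names are hypothetical.

```python
from typing import Callable, Dict, FrozenSet, Iterable


def leave_one_out_contributions(
        nodes: Iterable[str],
        evaluate: Callable[[FrozenSet[str]], float]) -> Dict[str, float]:
    """Contribution of a node = score with everyone minus score without it."""
    everyone = frozenset(nodes)
    full_score = evaluate(everyone)
    # Negative contributions are floored at zero so they earn no reward.
    return {n: max(0.0, full_score - evaluate(everyone - {n}))
            for n in everyone}


def allocate_rewards(contributions: Dict[str, float],
                     pool: float) -> Dict[str, float]:
    """Split a reward pool in proportion to each node's contribution."""
    total = sum(contributions.values())
    if total == 0:
        return {n: 0.0 for n in contributions}
    return {n: pool * c / total for n, c in contributions.items()}
```

Leave-one-out needs one extra evaluation per node, which is cheap next to methods that score many subsets, though it can undervalue nodes whose data overlaps heavily with that of others.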

Some networks implement token economies where participants earn cryptographic tokens for their contributions. These tokens can represent access rights to the trained model, voting power in network governance, or tradeable assets with monetary value. The tokenization of AI training creates new markets and investment opportunities.

The cost structure of federated networks also differs from centralized training. While coordination overhead exists, the distribution of computational costs across participants can make large-scale model training more economically viable. Organizations contribute their existing computational resources during off-peak hours, creating a more efficient use of global computing capacity.

Technical Challenges and Solutions

Federated AI training networks face unique technical challenges that don’t exist in centralized systems. Communication efficiency becomes critical when thousands of nodes must coordinate. Traditional gradient descent algorithms, designed for single-machine training, must be adapted for distributed, asynchronous environments.

Model convergence in federated settings requires sophisticated algorithms that can handle data that is not independent and identically distributed (non-IID). Each node’s local data may follow a different distribution, so local updates can pull the shared model in conflicting directions and hurt generalization. Advanced techniques like federated averaging with momentum, personalized federated learning, and multi-task learning help address these challenges.
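Federated averaging with momentum can be sketched as a small change on the server side: instead of jumping straight to the averaged weights, the server accumulates the per-round change in a velocity term. The sketch below follows one common formulation; the function name and the `beta` and `server_lr` parameters are assumptions for illustration.

```python
from typing import List, Tuple


def server_momentum_step(global_weights: List[float],
                         averaged_weights: List[float],
                         velocity: List[float],
                         beta: float = 0.9,
                         server_lr: float = 1.0) -> Tuple[List[float], List[float]]:
    """Apply one server-side momentum update after a round of averaging."""
    # Direction the averaged node weights suggest moving in this round.
    delta = [a - g for a, g in zip(averaged_weights, global_weights)]
    # Blend this round's direction with the running velocity.
    new_velocity = [beta * v + d for v, d in zip(velocity, delta)]
    new_weights = [g + server_lr * v
                   for g, v in zip(global_weights, new_velocity)]
    return new_weights, new_velocity
```

With `beta=0` and `server_lr=1` this reduces to plain federated averaging; a larger `beta` smooths out round-to-round swings caused by nodes whose data distributions disagree.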

System heterogeneity presents another challenge. Nodes may have vastly different computational capabilities, from powerful data center servers to edge devices with limited resources. Adaptive algorithms that can accommodate this heterogeneity while maintaining training efficiency are essential for practical federated networks.

Network reliability and latency issues must also be addressed. Unlike data center environments with high-bandwidth, low-latency connections, federated networks often operate over public internet infrastructure. Robust protocols that can handle node failures, network partitions, and variable latency are crucial for system reliability.

Governance and Coordination Mechanisms

Effective governance becomes crucial in decentralized networks where no single entity has complete control. Federated AI training networks must establish clear rules for participation, quality standards, and dispute resolution. These governance mechanisms must balance efficiency with decentralization, ensuring the network can adapt and improve while preventing any single participant from gaining disproportionate control.

Consensus mechanisms borrowed from blockchain technology often play a role in federated network governance. Participants might vote on network upgrades, model architecture changes, or admission of new members. Smart contracts can automate many governance functions, ensuring transparent and consistent rule enforcement.

Quality control in federated networks requires novel approaches. Without direct access to training data, the network must verify that participants are contributing valuable updates rather than noise or malicious inputs. Techniques like gradient verification, Byzantine fault tolerance, and reputation systems help maintain model quality in adversarial environments.

Industry-Specific Applications

Healthcare represents perhaps the most compelling use case for federated AI training networks. Medical data is highly sensitive, heavily regulated, and typically siloed within individual institutions. Federated networks allow hospitals to collaborate on diagnostic models without sharing patient data. A cancer detection model can learn from diverse patient populations across multiple hospitals while respecting privacy regulations like HIPAA and GDPR.

The automotive industry uses federated learning for autonomous vehicle development. Each vehicle manufacturer can contribute driving data from their fleets without revealing proprietary information about their systems. The collective model improves safety for all participants while maintaining competitive differentiation.

Smart city applications benefit from federated approaches where different municipal departments and infrastructure providers collaborate without centralizing sensitive data. Traffic optimization models can learn from multiple cities’ patterns while respecting local data sovereignty requirements.

Cross-Border Data Collaboration

Federated networks provide a technical solution to the increasing balkanization of global data governance. As countries implement data localization requirements and restrict cross-border data flows, federated learning offers a way to maintain global AI collaboration while respecting sovereignty.

International research collaborations particularly benefit from this approach. Climate models can incorporate data from weather stations worldwide without requiring centralized data storage. Pandemic response systems can learn from health data across countries while respecting each nation’s data protection laws.

The technology enables new forms of soft power and diplomatic collaboration. Countries can contribute to global AI capabilities without compromising national security or citizen privacy. This creates opportunities for AI development that transcends traditional geopolitical boundaries.

Edge Computing Integration

Federated AI training networks naturally complement edge computing architectures. As computational power moves closer to data sources, federated learning provides the coordination mechanism for these distributed resources. Edge devices can participate in model training during idle periods, contributing to collective intelligence while maintaining data locality.

This integration enables new applications in Internet of Things (IoT) environments. Smart home devices can collaboratively learn user preferences without sending personal data to cloud servers. Industrial IoT sensors can develop predictive maintenance models specific to local conditions while benefiting from global patterns.

The combination of edge computing and federated learning also improves system resilience. Models can continue to improve even when cloud connectivity is limited. Local adaptation happens naturally as edge devices train on local data while periodically synchronizing with the broader network.

Security and Adversarial Considerations

While federated networks provide privacy benefits, they also introduce new security challenges. Adversarial participants might attempt to poison the collective model by submitting malicious updates. Inference attacks could attempt to extract information about other participants’ data from model updates.

Robust aggregation techniques help defend against poisoning attacks by identifying and filtering outlier updates. Secure enclaves and trusted execution environments provide hardware-based security for sensitive computations. Regular security audits and penetration testing become essential for maintaining network integrity.
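Two widely used robust aggregation rules are the coordinate-wise median and the coordinate-wise trimmed mean, both of which limit how far a few extreme updates can drag the result. The sketch below is a minimal illustration using only the standard library; the function names are chosen for this example.

```python
import statistics
from typing import List, Sequence


def coordinate_median(updates: Sequence[Sequence[float]]) -> List[float]:
    """Take the median of each coordinate across all node updates."""
    return [statistics.median(column) for column in zip(*updates)]


def trimmed_mean(updates: Sequence[Sequence[float]], trim: int) -> List[float]:
    """Drop the `trim` smallest and largest values per coordinate, then average."""
    if 2 * trim >= len(updates):
        raise ValueError("trim removes every update; use a smaller value")
    result = []
    for column in zip(*updates):
        ordered = sorted(column)
        kept = ordered[trim:len(ordered) - trim]   # discard both tails
        result.append(sum(kept) / len(kept))
    return result
```

Both rules assume that honest nodes are in the majority and that their updates are reasonably similar; under strongly non-IID data, an honest but unusual node can look like an outlier and be filtered out too.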

The distributed nature of federated networks can actually enhance security in some ways. There’s no central honeypot of raw data to attract attackers. Exfiltrating data at scale requires compromising many nodes rather than a single repository, which significantly increases the difficulty and cost of a successful attack.