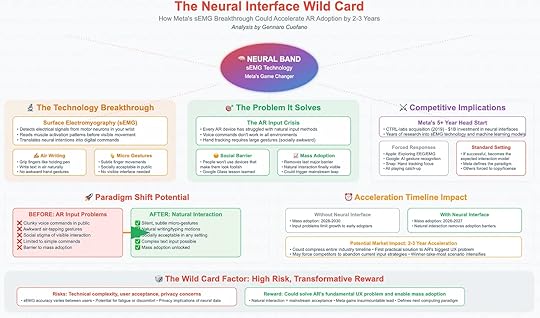

The Neural Interface Wild Card: Meta’s sEMG Bet and the Acceleration of AR

Every major computing platform has been defined by its input method. The mouse enabled graphical user interfaces. Multi-touch made the smartphone usable at scale. Voice assistants hinted at hands-free interaction, but never truly broke through. For augmented reality, the greatest unsolved problem has always been input.

How do you control a device that overlays digital information on the real world without making the user look awkward? How do you make AR interaction as seamless as typing on a keyboard or tapping on a screen? Until now, the answers have been unsatisfactory—voice commands fail in noisy or private settings, hand gestures are too large and socially awkward, and clunky air-tapping makes users feel ridiculous.

This is the AR input crisis. And Meta believes it has the solution.

The Technology Breakthrough

Meta’s neural band is built on surface electromyography (sEMG). Sensors on the wrist pick up the electrical activity that motor neurons trigger in the muscles controlling the hand. Because that activity appears before any visible movement, the band can read muscle activation patterns early and translate the intended motion into digital commands.

This breakthrough unlocks two powerful interaction models:

- Air Writing: Hold the fingers as if gripping a pen and write text in the air. No awkward mid-air tapping and no keyboard required.
- Micro Gestures: Subtle finger movements, nearly invisible to outsiders, trigger commands. These gestures are socially acceptable, unobtrusive, and work anywhere.

The genius lies in pre-motion detection. The band captures the signal before muscles visibly move, allowing for fluid, low-latency input that feels natural. The result is a silent, discreet, and universal input method that could finally make AR practical for everyday use.
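To make the idea of turning muscle activity into a discrete command more concrete, here is a minimal sketch in Python. It is not Meta's pipeline, which relies on trained machine learning models. It is a generic illustration of one classic approach: rectify the signal, smooth it into an envelope, and fire an event when the envelope crosses a threshold. The function names, the synthetic signal, and every threshold value are assumptions chosen for the example.

```python
import math

def envelope(samples, window):
    """Rectify the signal and smooth it with a trailing moving average."""
    rectified = [abs(s) for s in samples]
    smoothed = []
    running = 0.0
    for i, value in enumerate(rectified):
        running += value
        if i >= window:
            running -= rectified[i - window]  # drop the sample leaving the window
        smoothed.append(running / min(i + 1, window))
    return smoothed

def detect_onsets(samples, window=20, on_level=0.3, off_level=0.15):
    """Return indices where the envelope rises above on_level.

    A lower off_level (hysteresis) keeps one burst from firing repeatedly.
    All three parameters are illustrative, not tuned for real sEMG data.
    """
    onsets = []
    active = False
    for i, level in enumerate(envelope(samples, window)):
        if not active and level >= on_level:
            onsets.append(i)
            active = True
        elif active and level < off_level:
            active = False
    return onsets

def synthetic_signal(n=600, burst=(200, 300)):
    """Hypothetical stand-in for a wrist signal: weak background activity
    with one stronger burst representing a single micro-gesture."""
    signal = []
    for i in range(n):
        amplitude = 1.0 if burst[0] <= i < burst[1] else 0.05
        signal.append(amplitude * math.sin(i * 1.7))
    return signal

onsets = detect_onsets(synthetic_signal())
print("gesture onsets at sample indices:", onsets)
```

A real system would replace the fixed threshold with a classifier trained across many users, since, as discussed later in this article, signal quality varies from person to person.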

The Problem It Solves

The input crisis has always been AR’s bottleneck. Every AR headset or glasses project has hit the same wall: how do you tell the device what you want without friction?

- Voice commands don’t work in crowded, public, or quiet environments.
- Hand tracking requires large, visible gestures that are socially awkward.
- Touch controls (on glasses frames) are limited and unintuitive.

This isn’t just a UX annoyance; it’s a barrier to adoption. If people feel foolish using AR in public, they won’t wear the devices.

The neural interface removes this barrier. Subtle micro-gestures solve the social stigma problem. Complex text input becomes possible. Interaction looks natural, not experimental. This unlocks the mass adoption leap AR has been waiting for.

Paradigm Shift Potential

The difference before and after neural interaction is profound.

Before (Current AR Input):

- Clunky air-taps
- Limited commands
- Social stigma of visible interaction
- Awkward and error-prone

After (sEMG Neural Interface):

- Silent, subtle micro-gestures
- Natural writing and typing motions
- Socially invisible input
- Complex, nuanced interaction possible
- Mass adoption unlocked

In other words: the neural interface has the same potential impact on AR that multi-touch had on smartphones. It turns a futuristic novelty into a usable platform.

Competitive Implications

Meta’s advantage here isn’t incremental; it’s structural. The company has a five-year head start thanks to its 2019 acquisition of CTRL-labs, a deal reported at between $500 million and $1 billion. Since then, Meta has invested heavily in building sEMG machine learning models and hardware prototypes.

Competitors are behind:

- Apple is exploring EEG/EMG pathways, but still relies on cautious iteration.
- Google is experimenting with gesture recognition, but lacks a breakthrough input model.
- Snap has focused on hand tracking, which remains limited.

If Meta’s neural band works at scale, it doesn’t just give the company an edge; it sets the standard. The risk for rivals is that neural input becomes the expected model for AR, forcing everyone else into copycat mode. In platform transitions, whoever defines the interaction paradigm tends to define the platform itself.

Acceleration Timeline Impact

Without a neural interface, mass adoption of AR glasses would likely arrive between 2028 and 2030. Input problems would limit adoption to enthusiasts and early tech adopters.

With a neural interface, that timeline compresses dramatically. Mainstream adoption could begin as early as 2026–2027, shaving two to three years off the curve.

The potential market impact is enormous:

- Industry-wide timelines compress.
- Current input strategies (voice, hand tracking) may become obsolete overnight.
- Competitive pressure intensifies as Meta gains first-mover advantage.
- Winner-take-most dynamics accelerate.

This is why the neural interface isn’t just a feature; it’s a catalyst.

The Wild Card Factor

Of course, every breakthrough carries risks. Neural interfaces face several:

- Technical complexity: sEMG accuracy varies across users. Training the system to understand subtle signals consistently is a massive challenge.
- User acceptance: Will consumers wear a neural band daily? Will it feel comfortable?
- Privacy concerns: Neural data carries sensitive implications. If Meta collects signals from your motor neurons, how will that data be stored, used, or monetized?

These risks mean the neural band’s success isn’t guaranteed. It could stumble in execution, face regulatory hurdles, or simply fail to win consumer trust.

But the reward is transformative. If it works, it solves AR’s single biggest UX bottleneck and triggers mainstream adoption years ahead of schedule.

High Risk, High Reward

The neural interface is Meta’s wild card. It’s risky, technically demanding, and socially untested. But if it succeeds, it gives Meta insurmountable leverage:

- Defines the paradigm: Meta sets the standard for AR input.
- Unlocks adoption: Natural interaction removes the final barrier to mass adoption.
- Secures ecosystem advantage: Developers and consumers gravitate toward the platform with the best input model.

In the computing stack, input has always been destiny. The neural interface could be the moment AR shifts from experimental to inevitable.

Conclusion: Betting the Future on the Wrist

Meta is making a bold wager. While Apple bets on premium integration and Google on platform ubiquity, Meta is betting on solving the hardest problem of all: input. If it wins, it doesn’t just compete in AR; it defines it.

The stakes are enormous. If neural input succeeds, AR adoption could accelerate by two to three years, reshaping industry timelines and forcing competitors into a defensive race. If it fails, Meta risks sinking billions into a high-risk technology that consumers reject.

This is why it’s a wild card. But history suggests that the companies that redefine input methods often redefine computing itself. The mouse gave us the PC. Multi-touch gave us the iPhone. If sEMG neural bands succeed, they could give us the first truly mainstream AR platform.

Meta is betting the future of AR on the wrist. And if they’re right, the next computing revolution won’t be in your hand or on your face—it will start in your neurons.

The post The Neural Interface Wild Card: Meta’s sEMG Bet and the Acceleration of AR appeared first on FourWeekMBA.