Marina Gorbis's Blog, page 775

November 28, 2018

Why We Need to Audit Algorithms

Thoth_Adan/Getty Images

Algorithmic decision-making and artificial intelligence (AI) hold enormous potential and are likely to be economic blockbusters, but we worry that the hype has led many people to overlook the serious problems of introducing algorithms into business and society. Indeed, we see many succumbing to what Microsoft’s Kate Crawford calls “data fundamentalism”: the notion that massive datasets are repositories that yield reliable and objective truths, if only we can extract them using machine learning tools. A more nuanced view is needed. It is by now abundantly clear that, left unchecked, AI algorithms embedded in digital and social technologies can encode societal biases, accelerate the spread of rumors and disinformation, amplify echo chambers of public opinion, hijack our attention, and even impair our mental wellbeing.

Ensuring that societal values are reflected in algorithms and AI technologies will require no less creativity, hard work, and innovation than developing the AI technologies themselves. We have a proposal for a good place to start: auditing. Companies have long been required to issue audited financial statements for the benefit of financial markets and other stakeholders. That’s because — like algorithms — companies’ internal operations appear as “black boxes” to those on the outside. This gives managers an informational advantage over the investing public which could be abused by unethical actors. Requiring managers to report periodically on their operations provides a check on that advantage. To bolster the trustworthiness of these reports, independent auditors are hired to provide reasonable assurance that the reports coming from the “black box” are free of material misstatement. Should we not subject societally impactful “black box” algorithms to comparable scrutiny?

Indeed, some forward-thinking regulators are beginning to explore this possibility. For example, the EU’s General Data Protection Regulation (GDPR) requires that organizations be able to explain their algorithmic decisions. The city of New York recently assembled a task force to study possible biases in algorithmic decision systems. It is reasonable to anticipate that emerging regulations might be met with market pull for services involving algorithmic accountability.

So what might an algorithm auditing discipline look like? First, it should adopt a holistic perspective. Computer science and machine learning methods will be necessary, but likely not sufficient foundations for an algorithm auditing discipline. Strategic thinking, contextually informed professional judgment, communication, and the scientific method are also required.

As a result, algorithm auditing must be interdisciplinary in order for it to succeed. It should integrate professional skepticism with social science methodology and concepts from such fields as psychology, behavioral economics, human-centered design, and ethics. A social scientist asks not only, “How do I optimally model and use the patterns in this data?” but further asks, “Is this sample of data suitably representative of the underlying reality?” An ethicist might go further to ask a question such as: “Is the distribution based on today’s reality the appropriate one to use?” Suppose for example that today’s distribution of successful upper-level employees in an organization is disproportionately male. Naively training a hiring algorithm on data representing this population might exacerbate, rather than ameliorate, the problem.

An auditor should ask other questions, too: Is the algorithm suitably transparent to end-users? Is it likely to be used in a socially acceptable way? Might it produce undesirable psychological effects or inadvertently exploit natural human frailties? Is the algorithm being used for a deceptive purpose? Is there evidence of internal bias or incompetence in its design? Is it adequately reporting how it arrives at its recommendations and indicating its level of confidence?

Even if thoughtfully performed, algorithm auditing will still raise difficult questions that only society, through its elected representatives and regulators, can answer. For instance, take ProPublica’s investigation into an algorithm used to decide whether a person charged with a crime should be released from jail prior to their trial. The ProPublica journalists found that Black defendants who did not go on to reoffend were assigned medium or high risk scores more often than white defendants who did not go on to reoffend. Intuitively, the different false positive rates suggest a clear-cut case of algorithmic racial bias. But it turned out that the algorithm actually did satisfy another important conception of “fairness”: a high score means approximately the same probability of reoffending, regardless of race. Subsequent academic research established that it is generally impossible to simultaneously satisfy both fairness criteria.
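To make the tension between the two fairness criteria concrete, the sketch below computes both on a small, entirely made-up dataset. The group labels, the counts, and the helper names `make_records` and `group_rates` are illustrative assumptions; none of this is ProPublica’s data or method. It shows how a score can be equally reliable for two groups (the same share of flagged people go on to reoffend) while still flagging non-reoffenders at very different rates, simply because the groups’ underlying reoffense rates differ.

```python
from collections import defaultdict


def make_records(group, tp, fp, fn, tn):
    """Build hypothetical (group, flagged_high_risk, reoffended) records from counts."""
    return (
        [(group, True, True)] * tp      # flagged, did reoffend
        + [(group, True, False)] * fp   # flagged, did not reoffend (false positive)
        + [(group, False, True)] * fn   # not flagged, did reoffend
        + [(group, False, False)] * tn  # not flagged, did not reoffend
    )


def group_rates(records):
    """Return the false positive rate and precision of the flag for each group."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0})
    for group, flagged, reoffended in records:
        if flagged and reoffended:
            counts[group]["tp"] += 1
        elif flagged:
            counts[group]["fp"] += 1
        elif not reoffended:
            counts[group]["tn"] += 1
    rates = {}
    for group, c in counts.items():
        non_reoffenders = c["fp"] + c["tn"]
        flagged_total = c["tp"] + c["fp"]
        rates[group] = {
            # Share of people who did NOT reoffend but were flagged anyway.
            "false_positive_rate": c["fp"] / non_reoffenders if non_reoffenders else None,
            # Share of flagged people who DID reoffend (what a high score "means").
            "precision": c["tp"] / flagged_total if flagged_total else None,
        }
    return rates


# Invented counts: group A has a higher underlying reoffense rate than group B.
records = make_records("A", tp=30, fp=20, fn=30, tn=20) + make_records(
    "B", tp=12, fp=8, fn=18, tn=62
)
rates = group_rates(records)

for group in sorted(rates):
    r = rates[group]
    print(group, round(r["false_positive_rate"], 3), round(r["precision"], 3))
```

In this toy data a flag means the same thing in both groups (60% of flagged people reoffend), yet the false positive rate is about 0.50 for group A and about 0.11 for group B. Which of the two criteria should take priority is the kind of question the article leaves to society rather than to the auditor.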

As this episode illustrates, journalists and activists play a vital role in informing academics, citizens, and policymakers as they investigate and evaluate such tradeoffs. But algorithm auditing should be kept distinct from these (essential) activities.

Indeed, the auditor’s task should be the more routine one of ensuring that AI systems conform to the conventions deliberated and established at the societal and governmental level. For this reason, algorithm auditing should ultimately become the purview of a learned (data science) profession with proper credentialing, standards of practice, disciplinary procedures, ties to academia, continuing education, and training in ethics, regulation, and professionalism. Economically independent bodies could be formed to deliberate and issue standards of design, reporting and conduct. Such a scientifically grounded and ethically informed approach to algorithm auditing is an important part of the broader challenge of establishing reliable systems of AI governance, auditing, risk management, and control.

As AI moves from research environments to real-world decision environments, it goes from being a computer science challenge to becoming a business and societal challenge as well. Decades ago, adopting systems of governance and auditing helped ensure that businesses broadly reflected societal values. Let’s try to replicate this success for AI.

Is Employee Engagement Just a Reflection of Personality?

pbombaert/Getty Images

Most people would like to have a job, a boss, and a workplace they can engage with, as well as work that gives them a sense of purpose. This aspiration is embodied by a famous Steve Jobs statement: “Your work is going to fill a large part of your life, and the only way to be truly satisfied is to do what you believe is great work. And the only way to do great work is to love what you do.” In line with this, a recent report by the Conference Board shows that 96% of employees actively try to maintain a high level of engagement, even if many of them struggle to succeed.

In a similar vein, the scientific evidence suggests quite clearly that few things are more critical to an organization’s success than having an engaged workforce. When employees are engaged, they display high levels of enthusiasm, energy, and motivation, which translates into higher levels of job performance, creativity, and productivity. This means not only higher revenues and profits for organizations, but also higher levels of well-being for employees. In contrast, low engagement results in burnout, higher levels of turnover, and counterproductive work behaviors such as bullying, harassment, and fraud.

It is therefore not surprising that a great deal of research has been devoted to identifying the key determinants of engagement. Why is it that some people are more engaged — excited, moved, energized by their jobs — than others? Traditionally, this research has focused on the contextual or external drivers of engagement, such as the characteristics of the job, the culture of the organization, or the quality of its leaders. And although there is no universal formula to engage employees, it is generally true that people will feel more enthusiastic about their jobs when they are empowered to achieve something meaningful beyond their expectations, feel connected to others, and when they work in an environment — and for someone — that is fair, ethical, and rewarding, as opposed to a constant source of stress.

But despite the importance of these contextual drivers of engagement, how people feel about their job, boss, and workplace may also vary as a function of people’s own character traits. Indeed, even before organizations started talking about the need to “engage employees,” many managers appeared to regard motivation as something individuals brought with them to work — a characteristic of people they hired. This is why two individuals may have very different levels of engagement even when their job situation is nearly identical (e.g., they work for the same company, team, and boss), and why there is always demand for employees who display consistent levels of ambition, energy, and dedication, irrespective of the situation they are in.

This raises an obvious yet rarely discussed question, namely: how much of engagement is actually just personality? A recent meta-analysis provides some much needed data-driven answers. In this impressive study which synthesized data from 114 independent surveys of employees, comprising almost 45,000 participants from a wide range of countries — and mostly published academic studies, which met the standards for publication in peer-reviewed journals — the researchers set out to estimate the degree to which people differed in engagement because of their character traits. To illustrate this point, imagine that a friend tells you that she hates her job. Depending on how well you know her, you might question if her views are a genuine reflection of her dreadful job, or if they just reflect your friend’s glass-half-empty personality. Or think of when you read a Tripadvisor, Amazon, or IMDB review of a hotel/product/movie: to what degree does that review convey information about the object being rated, versus the person reviewing it? Even intuitively, it is clear that reviews are generally a mix of both, the rater and object being rated, and this could also apply to people’s evaluations of their work and careers.

Although the authors examined only the impact of personality on engagement, without considering the known contextual influences on it, their results were rather staggering: almost 50% of the variability in engagement could be predicted by people’s personality. Four traits stood out in particular: positive affect, proactivity, conscientiousness, and extroversion. In combination, these traits represent some of the core ingredients of emotional intelligence and resilience. Put another way, those who are positive, optimistic, hard-working, and outgoing tend to show more engagement at work. They are more likely to show up with energy and enthusiasm for what they do.

So if you want an engaged workforce, perhaps your best bet is to hire people who have an “engageable” personality? The recent study we reviewed suggests that doing so will actually boost your engagement levels (as measured by surveys) more than any intervention designed to improve leadership, or to craft the perfect job for people. However, while this may look like an attractive position for managers to take, particularly if they wish to make engagement an employee problem, there are four important caveats to consider:

For starters, being more resilient to bad or incompetent management may be helpful for individual employee well-being, but it can be damaging for the wider performance of the organization. Frustrated employees are often a warning sign of broader managerial and leadership issues which need to be addressed. If leaders turn employee optimism and resilience into a key hiring criterion, then it becomes much harder to spot and fix leadership or cultural issues using employee feedback signals. It is a bit like a restaurant owner saying: “Instead of serving better food, or improving the service, I will boost my reviews by ensuring that my diners have lower standards!” While that may boost customers’ satisfaction ratings, it will certainly not raise the quality of the restaurant. Put another way, surrounding yourself with people who are more likely to give you positive and optimistic feedback does not actually make you more competent at your job.

Second, as data from the study clearly show, at least half of engagement still comes from contextual factors about the employees’ work: issues or experiences that are common across employees in an organization. So while one employee’s opinion might be heavily biased by the personality of that individual, a collective of views (like those often captured in organizational surveys) is more representative of the shared issues and challenges that people face at work. This is important because organizations are not really just a collection of individuals; they are coordinated groups with shared identity, norms, and purpose. Engagement therefore represents the “cultural value-add” an organization provides to its people at work, shaping their energy, behaviors, and attitudes over and above their personal preferences and styles. Ignoring this point isn’t an effective strategy for good leadership, and would cause you to leave valuable performance drivers out of your decision making.

Third, the most creative people in your organization are probably more cynical, skeptical, and harder to please than the rest. Many innovators also have problems with authority and a predisposition to challenge the status quo. This makes them more likely to complain about bad management and inefficiency issues, and potentially more likely to disengage. Marginalizing or screening out these people might seem like a quick win for engagement, but in most organizations these people are a significant source of creative energy and entrepreneurship, which is more difficult to get from people who are naturally happy with how things are. To some extent, all innovation is the result of people who are unhappy with the status quo and who seek ways to change it. And even if hiring people with engageable personalities does boost organizational performance levels (and decrease undesirable outcomes), there are big fairness and ethical implications when it comes to excluding people who are generally harder to please and less enthusiastic, especially if they are just as competent at their jobs as any others.

Last, anything of value is typically the result of team rather than individual performance, and great teams are not made of people who are identical to each other, but of individuals who complement each other. If you want cognitive diversity — variety in thinking, feeling, and acting — then you will need people with different personalities. That means combinations of personalities to fit a variety of team roles — having some individuals who are naturally proactive, extroverted, and positive, working together with some who are maybe the exact opposite. The implication of this is clear: if your strategy for “engaging” your workforce is to hire people who are all the same — in that they are more engageable — you will end up with low cognitive diversity, which is even more problematic for performance and productivity than having low demographic diversity (though we think both types of diversity should be pursued).

So, if you want to truly understand engagement in your organization then you need to look at both who your people are and what they think about their work. In other words, more calibration to employee personality is needed. For example, managers leading teams of people who are generally harsh or negative could benefit from seeing engagement data through the lens of personality, helping them to target issues that are genuinely impacting team performance.

And this then also opens up a new opportunity: to think about how engagement data could also be used to encourage employees to better understand their own views about work, giving them more flexibility to take personal ownership and find ways to thrive. In a recent study, researchers found that 40% of managers identified emotional intelligence and self-awareness as the most important factors influencing whether an employee takes responsibility for their own engagement. If we can combine what we know about engagement with what we know about personality, then we can help each person more effectively navigate their organizational reality — leading to better, more effective organizations for all.

November 27, 2018

Curiosity-Driven Data Science

Akimasa Harada/Getty Images

Data science can enable wholly new and innovative capabilities that can completely differentiate a company. But those innovative capabilities aren’t so much designed or envisioned as they are discovered and revealed through curiosity-driven tinkering by the data scientists. So, before you jump on the data science bandwagon, think less about how data science will support and execute your plans and think more about how to create an environment to empower your data scientists to come up with things you never dreamed of.

First, some context. I am the Chief Algorithms Officer at Stitch Fix, an online personalized styling service with 2.7 million clients in the U.S. and plans to enter the U.K. next year. The novelty of our service affords us exclusive and unprecedented data with nearly ideal conditions to learn from it. We have more than 100 data scientists that power algorithmic capabilities used throughout the company. We have algorithms for recommender systems, merchandise buying, inventory management, relationship management, logistics, operations — we even have algorithms for designing clothes! Each provides material and measurable returns, enabling us to better serve our clients, while providing a protective barrier against competition. Yet, virtually none of these capabilities were asked for by executives, product managers, or domain experts — and not even by a data science manager (and certainly not by me). Instead, they were born out of curiosity and extracurricular tinkering by data scientists.

Data scientists are a curious bunch, especially the good ones. They work towards clear goals, and they are focused on and accountable for achieving certain performance metrics. But they are also easily distracted, in a good way. In the course of doing their work they stumble on various patterns, phenomena, and anomalies that are unearthed during their data sleuthing. This goads the data scientist’s curiosity: “Is there a better way that we can characterize a client’s style?” “If we modeled clothing fit as a distance measure could we improve client feedback?” “Can successful features from existing styles be re-combined to create better ones?” To answer these questions, the data scientist turns to the historical data and starts tinkering. They don’t ask permission. In some cases, explanations can be found quickly, in only a few hours or so. Other times, it takes longer because each answer evokes new questions and hypotheses, leading to more testing and learning.

Are they wasting their time? No. Not only does data science enable rapid exploration, it’s also easier to measure the value of that exploration than it is in other domains. Statistical measures like AUC, RMSE, and R-squared quantify the amount of predictive power the data scientist’s exploration is adding. The combination of these measures and a knowledge of the business context allows the data scientist to assess the viability and potential impact of a solution that leverages their new insights. If there is no “there” there, they stop. But when there is compelling evidence and big potential, the data scientist moves on to more rigorous methods like randomized controlled trials or A/B testing, which can provide evidence of causal impact. They want to see how their new algorithm performs in real life, so they expose it to a small sample of clients in an experiment. They’re already confident it will improve the client experience and business metrics, but they need to know by how much. If the experiment yields a big enough gain, they’ll roll it out to all clients. In some cases, it may require additional work to build a robust capability around the new insights. This will almost surely go beyond what can be considered “side work” and they’ll need to collaborate with others for engineering and process changes.
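As a rough illustration of how measures like these put a number on predictive power, here is a minimal sketch of RMSE and R-squared on invented figures. The data, the variable names, and the "baseline versus candidate" framing are all hypothetical and are not drawn from Stitch Fix; a real exploration would evaluate on held-out data and would probably lean on an established library rather than hand-rolled functions.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error: typical size of a prediction miss, in the target's units."""
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def r_squared(actual, predicted):
    """Share of the variance in `actual` that the predictions account for."""
    mean_actual = sum(actual) / len(actual)
    ss_residual = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_total = sum((a - mean_actual) ** 2 for a in actual)
    return 1 - ss_residual / ss_total


# Hypothetical observed outcomes for four clients.
actual = [3.0, 5.0, 7.0, 9.0]

# Baseline: always predict the average outcome (no insight at all).
baseline = [sum(actual) / len(actual)] * len(actual)

# Candidate: predictions from a new idea the data scientist is tinkering with.
candidate = [2.5, 5.5, 7.0, 9.5]

for name, predicted in [("baseline", baseline), ("candidate", candidate)]:
    print(name, round(rmse(actual, predicted), 3), round(r_squared(actual, predicted), 4))
```

On these made-up numbers the baseline has an R-squared of 0 while the candidate reaches about 0.96, and its RMSE drops from about 2.24 to about 0.43. A gap of that kind is the signal that an idea may be worth taking into a controlled experiment.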

The key here is that no one asked the data scientist to come up with these innovations. They saw an unexplained phenomenon, had a hunch, and started tinkering. They didn’t have to ask permission to explore because it’s relatively cheap to allow them to do so. Had they asked permission, managers and stakeholders probably would have said ‘no’.

These two things, low-cost exploration and the ability to measure the results, set data science apart from other business functions. Sure, other departments are curious too: “I wonder if clients would respond better to this type of creative?” a marketer might ask. “Would a new user interface be more intuitive?” a product manager inquires. But those questions can’t be answered with historical data. Exploring those ideas requires actually building something, which will be costly. And justifying the cost is often difficult since there’s no evidence that suggests the ideas will work. With its low-cost exploration and risk-reducing evidence, data science makes it possible to try more things, leading to more innovation.

Sounds great, right? It is! But you can’t just declare as an organization that “we’ll do this too.” This is a very different way of doing things. You need to create an environment in which it can thrive.

First, you have to position data science as its own entity. Don’t bury it under another department like marketing, product, finance, etc. Instead, make it its own department, reporting to the CEO. In some cases, the data science team will need to collaborate with other departments to provide solutions. But it will do so as equal partners, not as a support staff that merely executes on what is asked of them. Instead of positioning data science as a supportive team in service to other departments, make it responsible for business goals. Then, hold it accountable to hitting those goals — but let the data scientists come up with the solutions.

Next, you need to equip the data scientists with all the technical resources they need to be autonomous. They’ll need full access to data as well as the compute resources to process their explorations. Requiring them to ask permission or request resources will impose a cost and less exploration will occur. My recommendation is to leverage a cloud architecture where the compute resources are elastic and nearly infinite.

The data scientists will need to have the skills to provision their own processors and conduct their own exploration. They will have to be great generalists. Most companies divide their data scientists into teams of specialists (say, Modelers, Machine Learning Engineers, Data Engineers, Causal Inference Analysts, etc.) in order to get more focus. But this will require more people to be involved to pursue any exploration. Coordinating multiple people gets expensive quickly. Instead, leverage “full-stack data scientists” with the skills to do all the functions. This lowers the cost of trying things, as a single tinkering initiative may require each of the data science functions I mentioned. Of course, data scientists can’t be experts in everything. So, you’ll need to provide a data platform that can help abstract them from the intricacies of distributed processing, auto-scaling, etc. This way the data scientist focuses more on driving business value through testing and learning, and less on technology.

Finally, you need a culture that will support a steady process of learning and experimentation. This means the entire company must have common values for things like learning by doing, being comfortable with ambiguity, and balancing long- and short-term returns. These values need to be shared across the entire organization as they cannot survive in isolation.

But before you jump in and implement this at your company, be aware that it will be hard if not impossible to implement at an older company. I’m not sure it could have worked, even at Stitch Fix, if we hadn’t enabled data science to be autonomous from the very beginning. I’ve been at Stitch Fix for six and a half years and, with a seat at the executive table, data science never had to be “inserted” into the organization. Rather, data science was native to us in the formative years, and hence, the necessary ways of working are more natural to us.

This is not to say data science is destined for failure at older, more mature companies, though it is certainly harder than starting from scratch. Some companies have been able to pull off miraculous changes. And it’s too important not to try. The benefits of this model are substantial, and for any company that wants data science to be a competitive advantage, it’s worth considering whether this approach can work for you.

Speak Out Successfully

James Detert, a professor at the University of Virginia Darden School of Business, studies acts of courage in the workplace. His most surprising finding? Most people describe everyday actions — not big whistleblower scandals — when they cite courageous (or gutless) acts they’ve seen coworkers and leaders take. Detert shares the proven behaviors of employees who succeed at speaking out and suffer fewer negative consequences for it. He’s the author of the HBR article “Cultivating Everyday Courage.”

How the Geography of Startups and Innovation Is Changing

Jakal Pan/Getty Images

We’re used to thinking of high-tech innovation and startups as generated and clustered predominantly in fertile U.S. ecosystems, such as Silicon Valley, Seattle, and New York. But as with so many aspects of American economic ingenuity, high-tech startups have now truly gone global. The past decade or so has seen the dramatic growth of startup ecosystems around the world, from Shanghai and Beijing, to Mumbai and Bangalore, to London, Berlin, Stockholm, Toronto and Tel Aviv. A number of U.S. cities continue to dominate the global landscape, including the San Francisco Bay Area, New York, Boston, and Los Angeles, but the rest of the world is gaining ground rapidly.

That was the main takeaway from our recent report, Rise of the Global Startup City, which documents the global state of startups and venture capital. When we analyzed more than 100,000 venture deals across 300-plus global metro areas spanning 60 countries and covering the years 2005 to 2017, we discovered four transformative shifts in startups and venture capital: a Great Expansion (a large increase in the volume of venture deals and capital invested), Globalization (growth in startups and venture capital across the world, especially outside the U.S.), Urbanization (the concentration of startups and venture capital investment in cities — predominantly large, globally connected ones), and a Winner-Take-All Pattern (with the leading cities pulling away from the rest).

These major transformations pose significant implications for entrepreneurs, venture capitalists, workers, and managers, as well as policymakers for nations and cities across the globe.

The Great Expansion

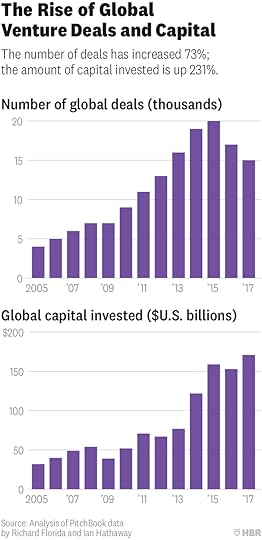

The first shift is the Great Expansion, as the past decade has witnessed a massive increase in venture capital deployed globally.

The annual number of venture capital deals expanded from 8,500 in 2010 to 14,800 in 2017, for an increase of 73% in just seven years. The amount of capital invested in those deals surged from $52 billion in 2010 to $171 billion in 2017 — a gain of 231%. These figures represent historical records aside from the peak of the dotcom boom in 2000 (and may even exceed it). By all accounts, 2018 will be even bigger.

Globalization

The second shift is the accelerating Globalization of venture deals. For decades, the United States held a near monopoly on venture capital: as late as the mid-1990s, the U.S. captured more than 95% of all venture capital investments globally.

That share has declined since then — gradually for the first two decades (falling to about three-quarters of the global total by 2012), and rapidly in the last five years (dropping to a little more than half by 2017).

Urbanization

The third shift is the Great Urbanization of startup and venture capital activity in the world’s largest cities. For decades, startup and venture capital activity was located in the quaint suburban office parks and low-rise office buildings of “nerdistans” like Silicon Valley, the Route 128 Beltway outside Boston, and the suburbs of Seattle, Austin, or the North Carolina Research Triangle. But our research shows that startup activity and venture capital investment are now concentrated in some of the world’s largest mega-cities.

The table below shows the 10 leading cities for venture capital investment in the world. These 10 cities accounted for more than $100 billion in venture capital investment on average each year between 2015 and 2017, or more than 60% of the total. Three of the 10 leading global metros have populations in excess of 20 million people and three more have populations of between 10 and 15 million. Three more cities have between 4 and 10 million people, while one has less than 2 million (San Jose, the heart of Silicon Valley).

A separate study by one of us and a colleague that looked at the factors associated with venture capital investment across U.S. metros found population size and density to be key. The only other factor that was slightly more important was high-tech industry concentration, which is what entrepreneurs and venture capitalists are aiming to create over the long run.

Winner-Take-All Geography

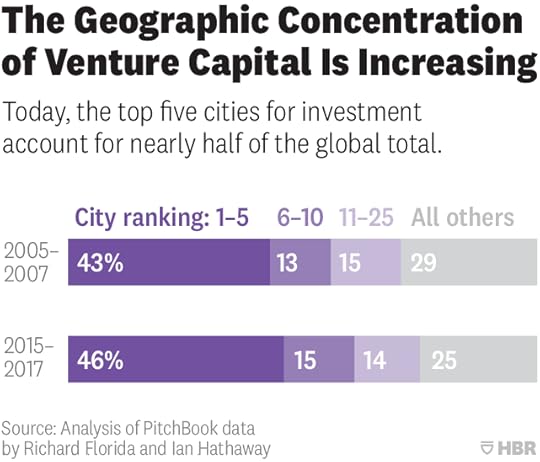

Startups and venture capital increasingly take on a winner-take-all pattern, with investments highly concentrated geographically. Just the top five cities account for nearly half of the global total, and the top 25 for more than three quarters of global venture capital investment. And previous research one of us has done, both for the United States and globally, shows that even within cities, venture capital activity tends to be highly concentrated among just a few postal codes.

The geographic concentration of venture capital has also increased over the last decade. This is particularly the case at the very top, where the top 10 cities account for 61% of venture activity worldwide in the latest three-year period, but just 56% a decade ago. Given the large amount of underlying activity going on each year, even small percentage point changes represent meaningful shifts in concentration.

Forces Behind the Shifts

We can point to three major factors driving these trends, though there are others. The first is technological, as the confluence of high-speed internet, mobile devices, and cloud computing has made it possible to start and scale digitally-enabled businesses at a fraction of the cost. As these technologies have fallen in cost, they are within reach in more markets, meaning that it’s easier to create and grow these high-growth, high-tech businesses in more cities.

The second factor is economic. The world has just gone through the largest global reduction in poverty and concomitant expansion of the global middle class in history, and multi-national corporate giants are emanating from more countries, particularly in emerging markets. This has increased demand for many digital goods and services in more places, giving technologically-enabled entrepreneurs in more places a robust market to sell into.

The third factor is political. Many nations around the world are doing more than ever to compete on a global stage by improving their education systems and universities, investing more in research and development, and bending over backwards to welcome high-skilled foreigners and company founders. The United States, on the other hand, is sliding backwards on all of these fronts — and in our view, has become complacent with its long-held dominance as a monopoly for high-tech entrepreneurship.

What It Means for Leaders

These trends have important implications for entrepreneurs, investors, managers, and workers, as well as national and local policymakers across the world. For entrepreneurs, it’s fairly straightforward. The San Francisco Bay Area remains by far the leading location for venture activity and the most robust ecosystem for growing a high-tech startup by a long shot. However, many of the key resources found in The Valley are increasingly available in other places. Whether non-American founders can’t obtain a U.S. visa or choose to stay at home for other reasons, it will only get easier for them to do so while building their companies.

For investors and corporations, the big takeaway is this: You can no longer look only in your own backyard for startups, innovation, and the talent that powers them. Venture capitalists, used to looking close to home, need to broaden their horizons and think, look, and act globally. Corporate managers, especially those in the United States, are used to strong local sources of innovation, but they too must increase their awareness of global innovation and startups as they look to address competitive threats and capture new sources of innovation. Large established corporations may see opportunities in building globally distributed teams. Techies and entrepreneurs around the world can count on greater opportunities in their home markets.

For global policymakers, the lesson is that the globalization of high-tech entrepreneurship and venture capital means greater competition across the board. U.S. policymakers can no longer take the country’s long-established lead in innovation and startups for granted. China is nipping at its heels and other nations are also gaining ground quickly. Sure, the U.S. remains the dominant place by far, but it is time to stop doing counterproductive things like imposing immigration restrictions on highly-skilled individuals and founders with validated business ideas. Such actions chill the climate for global talent. For countries that are emerging on the global stage, it means continuing and even expanding on recent improvements in education, innovation, and immigration. For the world as a whole, having entrepreneurs and techies build companies where they are may eventually help to address the growing spatial inequality and winner-take-all dynamics that currently define the global geography of high-tech startups.

Of course, innovation and entrepreneurship are local, not national, games. That means mayors and city leaders must take the lead. And it means nations should consider devolving responsibility for innovation and economic policy functions to the local level, especially as most countries will only have one or a few cities that can compete on a global stage. But it does not mean throwing government money at venture capital, which too many national and local governments tend to do. Instead it means investing in local universities and innovation, creating greater local density, and generating the kind of quality of local talent. And it also means working with the private sector not just to improve the preconditions required for innovation and startups, but to address the growing economic inequality and housing unaffordability that is causing a growing backlash against big tech in cities across the globe.

Every Organizational Function Needs to Work on Digital Transformation - SPONSOR CONTENT FROM GARTNER

Digital business reached a tipping point in 2018 as organizations scaled their digital capabilities. Eighty-seven percent of senior business leaders say that digitalization is now a priority and in many cases is a do-or-die imperative.

In our surveys, more than 66% of CEOs said they expect their companies to change their business model in the next three years, with 62% reporting they have management initiatives or transformation programs underway to make their business more digital.

Clearly these leaders believe that digitalization offers exciting new, technology-enabled ways for organizations to engage with stakeholders, deliver a superior experience across the life cycle of their business, manage costs, and improve productivity.

Yet 72% of corporate strategists in a recent survey said their company’s digital efforts are missing revenue expectations.

Why are companies seeing this gap between expectation and results in digital business, which Gartner defines as the creation of new business designs that blur the boundary between the digital and physical worlds?

Our research shows that a company’s ability to gain strategic clarity on its path to business model transformation is crucial to its success.

Some organizations see digital business as an opportunity to totally reinvent themselves and their business models. Other enterprises and their functions are looking to leverage technology to optimize and augment existing operations.

Whatever the extent of an organization’s digital ambition, our research shows most corporate strategists tend to play it safe, favoring incremental investments. In some companies, however, strategists are bolder. They test entirely new business models while also finding ways to reduce the associated risks. These progressive strategists take specific steps to identify future differentiators and engage the whole organization in a better, faster way to clarify their path to business model transformation. Without that clarity, returns from digital initiatives suffer.

Ultimately, every organizational function is having to manage the pressure of change in digital transformation, business models, and other areas.

The pressure is real, but market intelligence from across Gartner’s research and advisory teams shows progressive functional organizations are proactively realigning or reinventing themselves to respond, at the requisite speed, to enable their enterprise to capture the opportunities presented both by digitalization and today’s buoyant economic conditions.

Aligned Organizational Culture and Capabilities

Digital business also is creating new challenges for information and technology (I&T). In what Gartner calls a third era of enterprise IT, existing investments must be rebalanced and combined with new, disruptive technologies.

Organization-wide disruption also is causing dramatic shifts in culture and capabilities. New skills are emerging, and existing skills are evolving and expiring. Employees are concerned about their skills becoming irrelevant: better upskilling is their top concern.

Strategic workforce planning, and talent management and reskilling initiatives, are already top of mind for many in HR and among functional leaders. But on a macro level, there is near-universal demand for digital dexterity — a set of beliefs, mindsets, and behaviors that help employees deliver faster and more valuable outcomes from digital initiatives.

Just as digital technology is now fully within the purview of all organizational leaders, so is the question of digital-ready talent.

But disruption also breeds cultural tensions — as the digital ambitions of the enterprise conflict with longtime operating objectives and create competing priorities that employees don’t know how to balance. Especially without strategic clarity, employees are unsure if they should focus on speed or quality, efficiency or innovation, for example. The more tension employees feel, the more stressed they are and the worse their performance becomes.

In progressive companies, business leaders work proactively to surface these tensions, acknowledge tough trade-offs, and help leaders set and articulate priorities. The result is more effective culture-informed judgment by employees — and better performance.

Customer-Centricity at the Forefront

Digitalization is also characterized by transformative shifts in customer needs, which are compounded in today’s buoyant global economic conditions. In this environment, every functional leader has a role to play in translating digital ambition into commercial success.

Leading supply chain organizations, for example, are embedding agility and responsiveness into their DNA to catalyze the digital supply chain into action.

On the sales and service fronts, leaders are increasingly focused on positioning employees to be more effective and productive in the new paradigms they face.

In B2B sales, reps are confronted with buyers who spend more time researching in digital channels and exchanging information within buyer groups than they do engaging directly with sales reps. Progressive sales leaders position reps to help buyer groups navigate this complex purchase process, an approach we call “buyer enablement.” Proactive service leaders have turned their attention to improving the experience of their reps at work — which, in turn, benefits customers — rather than simply arming service reps with more and more tools meant to directly improve service.

High-performing marketing organizations have developed a more agile style of working to keep their brands competitive amid rapid marketplace shifts. Their leaders are building a diverse, adaptable range of team capabilities — allocating people and resources based on the work that needs to be done, regardless of where resources sit in the organizational structure.

Drive Digitalization — Or At Least Don’t Be a Drag

The digital era also demands that consumers and organizations be secure. In most organizations, the CIO remains accountable for cybersecurity, but information and technology (I&T) top performers are more likely to report that their boards are ultimately accountable for cybersecurity. All CIOs need to educate their boards and the C-suite on how to think about and take more responsibility for cybersecurity risk.

This new operating reality of multiple stakeholders and rewired accountabilities is playing out in all enterprise functions, challenging them to meet their core responsibilities and manage new risks at the speed of digital business as evolving business models change the value proposition, customer base, profit model, and/or business capabilities.

Progressive procurement departments deliver a feeling of certitude to business partners that lessens their anxiety, uncertainty, and exasperation during purchasing and thus alleviates the kind of pressure that can force procurement to make bad and costly trade-offs just to speed up buys.

In functions where governance is a key responsibility — from risk and audit to finance and legal and compliance — leaders are identifying effective ways to inject their expertise and guardrails into business strategy and operations even when decision making is highly distributed.

Making Cryptocurrency More Environmentally Sustainable

Classen Rafael/Getty Images

Blockchain has the power to change our world for the better in so many ways. It can provide unbanked people with digital wallets, prevent fraud, and replace outdated systems with more efficient ones. But we still need this new and improved world to be one that we want to live in. The largest cryptocurrencies (Bitcoin, Bitcoin Cash, and Ethereum) consume vast amounts of energy to function. Last year, blockchain used more power than 159 individual nations including Uruguay, Nigeria, and Ireland. Unsurprisingly, this is creating a huge environmental problem that poses a threat to the Paris climate-change accord.

It’s a brutal, if unintended, consequence for such a promising technology, and “mining” is at the heart of the problem. When Bitcoin was first conceived nearly a decade ago, it was a niche fascination for a few hundred hobbyists, or “miners.” Because Bitcoin has no bank to regulate it, miners used their computers to verify transactions by solving cryptographic problems, similar to complex math problems. Then, they combined the verified transactions into “blocks” and added them to the blockchain (a public record of all the transactions) to document them, all in return for a small sum of Bitcoin. But where a single Bitcoin once sold for less than a penny on the open market, it now sells for nearly $7,000, and around 200,000 Bitcoin transactions occur every day. As these numbers have risen, so has the incentive to create cryptocurrency “mines”: server farms, often massive, now spread across the world. Imagine the amount of energy consumed by 25,000 machines calculating math problems 24 hours a day.
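To make the "cryptographic problem" concrete, here is a toy sketch in Python of the kind of brute-force search a miner performs. It is a simplified illustration, not Bitcoin's actual block format or difficulty rule: the function names `mine` and `verify`, the transaction string, and the "leading zeros" target are all assumptions made for this example.

```python
import hashlib
from typing import Tuple


def mine(block_data: str, difficulty: int = 4) -> Tuple[int, str]:
    """Try nonces one by one until the SHA-256 digest of
    block_data + nonce starts with `difficulty` zero hex digits."""
    prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(prefix):
            return nonce, digest
        nonce += 1  # no shortcut exists: the only strategy is to keep guessing


def verify(block_data: str, nonce: int, difficulty: int = 4) -> bool:
    """Checking a claimed solution takes a single hash."""
    digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
    return digest.startswith("0" * difficulty)


if __name__ == "__main__":
    nonce, digest = mine("alice pays bob 1 coin", difficulty=4)
    print(nonce, digest, verify("alice pays bob 1 coin", nonce, difficulty=4))
```

Each extra zero required multiplies the expected number of guesses by 16, while verification stays a single hash. That asymmetry is why solving is expensive and checking is cheap, and why the energy bill grows when many machines race on the same puzzle.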

Beyond the environmental concerns, this inefficiency threatens blockchain as a meaningful platform for enterprise. The high energy costs are baked into the system, and, because the cost of running the network is passed on in transaction fees, users of these networks end up paying for them. Initially, companies that use bitcoin may not see the financial consequences, but as they scale, the costs could become fatal.

The good news: there are a variety of alternatives available that can help organizations cut massive energy costs. Right now, they aren’t being adopted quickly enough. Companies who want to keep their head above water — along with everyone else’s — need to educate themselves. Below are two areas that are a good place to start.

Green Energy Blockchain Mining

An immediate fix is mining with solar power and other green energy sources. Each day, Texas alone receives more solar power than we need to replace every non-solar power plant in the world. There are numerous commercial services for powering crypto mining on server farms that only use clean, renewable energy. Genesis Mining, for instance, enables mining for Bitcoin and Ethereum in the cloud. The Iceland-based company uses 100% renewable energy and is now among the largest miners in the world.

We need to incentivize green energy for future blockchains, too. Every company that uses blockchain also defines its own system for miner compensation. New blockchains could easily offer miners better incentives, like more cryptocurrency, for using green energy — eventually forcing out polluting miners. They could also require all miners to prove that they use green energy and deny payment to those who don’t.

Energy-Efficient Blockchain Systems

While Bitcoin, Bitcoin Cash, and Ethereum all depend on energy-inefficient cryptographic problem-solving known as “Proof of Work” to operate, many newer blockchains use “Proof of Stake” (PoS) systems that rely on market incentives. Server owners on PoS systems are called “validators,” not “miners.” They put down a deposit, or “stake” a large amount of cryptocurrency, in exchange for the right to add blocks to the blockchain. In Proof of Work systems, miners compete with each other to see who can problem-solve the fastest in exchange for a reward, taking up a large amount of energy. But in PoS systems, validators are chosen by an algorithm that takes their “stake” into account. Removing the element of competition saves energy and allows each machine in a PoS system to work on one problem at a time, as opposed to a Proof of Work system, in which a plethora of machines are rushing to solve the same problem. Additionally, if a validator fails to behave honestly, they may be removed from the network, which helps keep PoS systems accurate.
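One simple way an algorithm can "take stake into account" is a stake-weighted lottery, sketched below in Python. This is an illustrative assumption rather than the selection rule of any particular blockchain: real PoS protocols add verifiable randomness, penalties, and other safeguards, and the validator names and stake amounts here are hypothetical.

```python
import random
from typing import Dict


def choose_validator(stakes: Dict[str, float], rng: random.Random) -> str:
    """Pick one validator at random, with probability proportional to stake."""
    names = list(stakes)
    weights = [stakes[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


if __name__ == "__main__":
    stakes = {"validator-a": 70.0, "validator-b": 20.0, "validator-c": 10.0}
    rng = random.Random(2018)  # fixed seed only so the demo is repeatable
    picks = [choose_validator(stakes, rng) for _ in range(10_000)]
    for name in stakes:
        print(name, picks.count(name))
```

Only the chosen validator does the work of assembling the next block, so the network performs one cheap random draw instead of a race among thousands of machines.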

Particularly promising is the Delegated Proof of Stake (DPoS) system, which operates somewhat like a representative democracy. In DPoS systems, everyone who has cryptocurrency tokens can vote on which servers become block producers and manage the blockchain as a whole. However, there is one downside. DPoS is somewhat less censorship resistant than Proof of Work systems. Because such a network may have only 21 block producers, in theory, it could be brought to a stop by simultaneous subpoenas or cease and desist orders, making it more vulnerable than the thousands upon thousands of nodes on Ethereum. But DPoS has proven to be vastly faster at processing transactions while using less energy, and that’s a tradeoff we in the industry should be willing to make.
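The representative-democracy analogy can be sketched as a vote tally in Python. The example assumes each holder's vote is weighted by their token balance and that the top-ranked candidates win the available seats; both rules, along with the holder and node names, are assumptions for illustration and vary between real DPoS networks.

```python
from collections import Counter
from typing import Dict, List


def elect_producers(balances: Dict[str, float],
                    votes: Dict[str, str],
                    seats: int = 21) -> List[str]:
    """Tally token-weighted votes and return the top `seats` candidates."""
    tally: Counter = Counter()
    for holder, candidate in votes.items():
        tally[candidate] += balances.get(holder, 0.0)
    # Rank by votes received (highest first); break ties by name for determinism.
    ranked = sorted(tally.items(), key=lambda item: (-item[1], item[0]))
    return [candidate for candidate, _ in ranked[:seats]]


if __name__ == "__main__":
    balances = {"ann": 50.0, "bo": 30.0, "cy": 15.0, "di": 5.0}
    votes = {"ann": "node-x", "bo": "node-y", "cy": "node-y", "di": "node-z"}
    print(elect_producers(balances, votes, seats=2))
```

A small elected set is what makes the system fast, and it is also the source of the censorship concern: there are only a handful of producers to pressure.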

Among the largest cryptocurrencies, Ethereum is already working on a transition to Proof of Stake, and we should take more collective action to hasten this movement. Developers need to think long and hard before creating new Proof of Work blockchains because the more successful they become, the worse ecological impact they may have. Imagine if car companies had been wise enough, several decades ago, to come together and set emission standards for themselves. It would have helped cultivate a healthier planet — and pre-empted billions of dollars in costs when those standards were finally imposed on them. The blockchain industry is now at a similar inflection point. The question is whether we’ll be wiser than the world-changing industries that came before us.

Why You Should Stop Setting Easy Goals

Microzoa/Getty Images

When setting team goals, many managers feel that they must maintain a tricky balance between setting targets high enough to achieve impressive results and setting them low enough to keep the troops happy. But the assumption that employees are more likely to welcome lower goals doesn’t stand up to scrutiny. In fact, our research indicates that in some situations people perceive higher goals as easier to attain than lower ones, and even when that’s not the case, they still can find those more challenging goals more appealing.

In a series of studies we describe in our latest paper, we tested how people perceive goals by asking participants on Amazon’s crowdsourcing marketplace, known as Mechanical Turk, to rate the difficulty and appeal of targets set at various levels and across spheres from sports performance and GPA to weight loss and personal savings. We asked about both “status quo” goals, in which the target remained set at a baseline level similar to recent performance, and “improvement goals” in which the target was set higher than the baseline by varying degrees.

What makes a goal seem hard to achieve?

In our first study, we recruited a couple of hundred participants on Mechanical Turk and split them into five groups. We showed one group just status quo goals — for example, achieving the same GPA as the previous semester. We showed the other four groups improvement goals that reflected either small, moderate, large or very large gains over the current baseline.

For the various improvement goals, as you would expect, our subjects perceived higher targets as more difficult to achieve and lower targets as less difficult (4.01 versus 2.82 on a scale of difficulty from 1 to 7). But, surprisingly, participants rated the status quo goal as more difficult (3.23) than the small-increase goal — in fact, just a bit less than a moderate one (3.49).

To learn why, in our second study, we asked participants to give reasons for their ratings of modest improvement and status quo goals. The group that evaluated the modest goals tended to write about the gap between the status quo and the goal and how small it was, which led them to be optimistic about their success. Meanwhile, the group that evaluated the maintenance goals listed more reasons for failure based on context and was more pessimistic.

From this and a subsequent study, we concluded that when people are given a status quo goal, they’re more sensitive to the context for achieving it than when they’re given an improvement goal — and that’s all the more true if the context is already unfavorable. Think about it this way: When we’re judging the difficulty of a goal, the first thing our brains see is the size of the gap that separates the goal from the baseline. The bigger the gap, the more difficult the goal, as logic would suggest. Only later do we begin to consider the context in which we’ll need to achieve that goal. But in the absence of any gap to evaluate — as with a status quo goal — our minds immediately start thinking about that context. Our all-too-human negativity bias then kicks in and our brains start generating reasons why we might fail. Thus setting a steady baseline goal just to make your people more confident is actually likely to do just the opposite.

Harder goals mean more satisfaction

In the studies we’ve described so far, participants rated the goals we gave them one at a time. The results were different, though, when we asked them to rate status quo and modest improvement goals in tandem.

There the pattern broke: when participants evaluated these goals together, they did judge the modest improvement goal as more difficult than the status quo goal (3.02 vs 2.43). Generalizing from our findings in the earlier study, we deduce that the participants took their cue from the gaps between the goal and the baseline, and logically concluded that it was easier to maintain the status quo than to increase results.

But in the same study, when we asked participants which of the two goals they would choose to pursue, they again chose modest improvement targets over status quo targets. This finding held across all kinds of spheres — whether about achieving a higher GPA, exercising more, completing more tasks, saving more, or working more hours. Despite the fact that they knew these goals were harder, participants anticipated greater satisfaction from achieving modest positive changes as opposed to maintaining the status quo.

If you are a manager, these findings should encourage you to set your team at least modest improvement goals rather than status quo goals, especially in tough contexts such as a bearish economy, an all-consuming merger, or a key contract up in the air. Even if the goal is hard to achieve, your team is likely to see the benefits and to be motivated to reach a target that makes them proud.

November 26, 2018

Self-Disclosure at Work (and Behind the Mic)

In this special live episode, we share stories, research, and practical advice for strategic self-disclosure, and then take questions from the audience.

Guest:

Katherine Phillips is a management professor at Columbia Business School.

Email us here: womenatwork@hbr.org

Our theme music is Matt Hill’s “City In Motion,” provided by Audio Network.

A Study of West Point Shows How Women Help Each Other Advance

Scott Olson/Getty Images

Over the last several decades, women have made tremendous gains in many professions. Women physicians, for instance, were a rarity in the 1960s. Today, about 35% of physicians are women, and the representation will only increase as women, who constitute over half of new medical students, proceed through the career pipeline. Women have made similar gains in professions such as law, veterinary science, and dentistry.

But progress has lagged in other fields. Only 18% of new computer science grads are women, and a paltry 11% of top corporate executives are women. What is holding women back? What can be done to help more women enter and achieve parity in these predominantly male fields?

There are many possible reasons for the gender gaps. They include gender biases and “bro culture” that can make male-dominated environments unwelcoming to women. Another possible explanation is that the gender gap is itself to blame—women don’t get enough support because there aren’t many other women around. Perhaps women would benefit from simply having more women among their peers.

There is some evidence supporting this. Studies show that women in undergraduate engineering programs with more female graduate mentors are more likely to continue in the major, and women who are friends with high-performing women are more likely to take advanced STEM courses.

But these studies are limited. It may be that women aren’t benefiting from the support, but that women with greater commitment to a field tend to gravitate toward similar women. This is what economists refer to as the problem of selection bias, which makes it unclear whether the peer group is what causes success. Another issue is that some surprising studies show that women’s success is actually hampered by having more female peers. This can happen when women are put in positions where they must compete with one another, because of quotas, for example, so having more women around actually makes it harder to succeed.

How do we know whether women actually help other women? Ideally, we would do something like a medical experiment where women are randomly assigned to treatment and control groups. Women in the treatment group would have women peers, and women in the control group would not. If women in the treatment group do better than women in the control group, then we have good reason to conclude that the relation is cause-and-effect.

Such opportunities for randomization are rare, but we found one. We took a cue from economist David Lyle, who recognized that student cadets at military academies are randomly assigned to peer groups called cadet companies. This can create a natural experiment for studying peer effects without concern about selection bias.

West Point

The United States Military Academy at West Point is a four-year college founded in 1802. Its mission is to train cadets to be Army officers, and the program is challenging. Standards are high and cadets’ performance is closely monitored. The student body (or Corps of Cadets) is structured as a hierarchy comparable to the Army. It is divided into four regiments, which are each divided into three battalions, which are each divided into three companies. There are 36 companies, each containing about 32 cadets per class, or about 128 members in total.

We studied the classes of 1981 to 1984, the second through fifth graduating classes that included women. In those years, cadets had few opportunities to interact with the outside world. There was no email, internet or cellphones. External communication involved snail mail or a shared pay phone. Cadets were isolated within the Academy as well. They were required to live, have meals, and participate in activities with their own companies. Opportunities to interact with cadets in outside regiments were even more limited.

Many graduates recall a brutal freshman year at West Point. Senior-ranking cadets are charged with instructing more junior cadets in military protocol and disciplining them for infractions. In the late 1970s and early 1980s, this often amounted to severe hazing.

When women first entered West Point in 1976, there was considerable controversy. Women were often singled out for particularly harsh treatment, though there was substantial variation across the student body. And with an average of 103 women spread across the academy each year, compared to 1,146 men, women had little opportunity to interact with other women. Not surprisingly, women’s attrition rates were about five percentage points higher than men’s on average—a big difference when attrition rates for men are only about 8.5 percent.

Women and Peer Groups at West Point

We collected our data from the 1976 to 1984 West Point yearbooks, which contain group photos of cadets by company and class; these were our peer groups. With this information, we were able to track each cadet’s randomly-assigned peer group by gender, and their progression from year to year.

We found that, when another woman was added to a company, it increased the likelihood a woman would progress from year to year by 2.5 percentage points. This means that, on average, an extra two women in a company on top of what it already had would completely erase the five percentage point female/male progression gap.

Another way to think about the size of the effect is to consider only first-year cadets, who have the worst attrition rates. Women in first-year groups with only one other woman only had a 55% chance of sticking around for the next year. But women in the most woman-heavy groups, with 6 to 9 other women, had an 83% chance of continuing to the next year.

One concern, however, is that while the addition of more women helps other women, it may make men less likely to progress. But, when we compared results for men with results for women, we found no effect of women peers on men, positive or negative. In other words, there was only an upside to increasing the number of women in the group.

Beyond West Point of the 1980s

Unlike other studies, the natural randomization of cadets to companies has made it possible for us to say that there is a substantial benefit to women from having women peers. But West Point of the 1980s was unusual. How would these results apply more generally?

Things have changed considerably at the institution in the past 40 years. In 1990 a woman was appointed to the prestigious position of First Captain, the lead cadet of the entire student body, and 22% of the incoming class of 2020 were women. Extreme hazing has been eliminated, and cadets have more opportunities to interact with friends and family outside and other cadets within the Academy. The need for support may have changed as the experience has eased.

There are many other reasons that these results may not carry over to a college or workplace of 2018. There is greater awareness now of the challenges that women face when they are in a small minority and greater willingness of leaders to mitigate the challenges. It would be interesting to see what a similar study would show in a corporate workplace. Until that time, the best evidence is that attending to gender when assigning women to groups can be a powerful tool for increasing the representation of women in male-dominated fields.
