Matthew S. Williams's Blog, page 48

June 28, 2014

News from Space: “Earth-Sized Diamond” In Space

As our knowledge of the universe beyond our Solar System expands, the true wonder and complexity of it is slowly revealed. At one time, scientists believed that other systems would be very much like our own, with planets taking on either a rocky or gaseous form, and stars conforming to basic classifications that determined their size, mass, and radiation output. However, several discoveries of late have confounded these assumptions, and led us to believe that just about anything could exist out there.

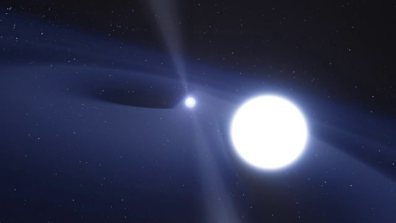

For example, a team of astronomers at the University of Wisconsin-Milwaukee recently identified the coldest, faintest white dwarf star ever detected, some 900 light years from Earth. Hovering near a much larger pulsar, this ancient stellar remnant has a temperature of less than 3,000 K, or about 2,700 degrees Celsius, which made it extremely difficult to detect. But what is especially impressive about this ancient stellar remnant is the fact that it is so cool that its carbon has crystallized.
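
As a quick check on the temperature figure above, here is a minimal sketch of the Kelvin-to-Celsius conversion. The function name is purely illustrative, and 3,000 K is treated as the upper bound quoted in the text rather than a measured value.

```python
# Offset between the Kelvin and Celsius scales.
KELVIN_OFFSET = 273.15


def kelvin_to_celsius(kelvin: float) -> float:
    """Convert a temperature in kelvin to degrees Celsius."""
    return kelvin - KELVIN_OFFSET


# The article quotes "less than 3,000 K", so this is an upper bound.
upper_bound_celsius = kelvin_to_celsius(3000.0)
print(f"Upper bound: {upper_bound_celsius:.2f} degrees Celsius")
```

That works out to roughly 2,727 degrees Celsius, in line with the "about 2,700" quoted above.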

This means, in effect, that this star has formed itself into an Earth-size diamond in space. The discovery was made by Prof. David Kaplan and his team from the UofW-M using the National Radio Astronomy Observatory’s (NRAO) Green Bank Telescope (GBT) and Very Long Baseline Array (VLBA), as well as other observatories. All of these instruments were needed to spot this star because its low energy output means that it is essentially “a diamond in the rough”, the rough being the endless vacuum of space, that is.

White dwarfs like this one are what remains after a star about the size of our Sun spends all of its nuclear fuel and throws off its outer layers, leaving behind a tiny, super-dense core of elements (like carbon and oxygen). They cool at an excruciatingly slow pace, taking billions and billions of years to finally go dark. Even newly transformed white dwarfs are incredibly hard to spot compared to active stars, and this one was only discovered because it happens to be nestled right up next to a pulsar.

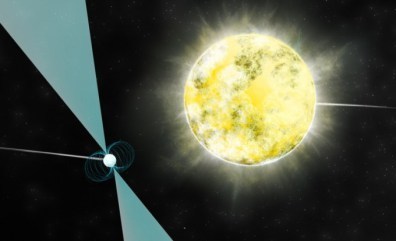

By definition, a neutron star is what is left over when a star larger than our Sun also runs its course. Those that spin are given the name of “pulsar” because their magnetic fields force radio waves out in tight beams that give the illusion of pulsations as they whir around, effectively strobing the universe like a lighthouse. The pulsar that sits next to the diamond-encrusted white dwarf is known as PSR J2222-0137, and is 1.2 times the mass of our Sun, but even smaller than the white dwarf.

Astronomers were tipped off to the presence of something near the pulsar by distortions in its radio waves, and an old-fashioned space hunt was then mounted for the culprit. The low mass made a white dwarf the most likely cause, but astronomers couldn’t see it because of its incredibly low luminosity. Because of this, the UofW-M team estimated the age of this object had to be upward of 11 billion years, the same age as the Milky Way Galaxy.

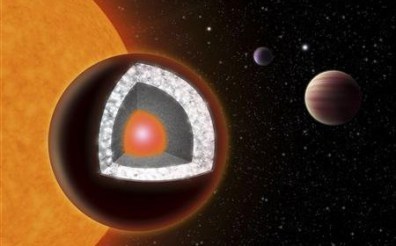

This meant that the object was already old when our galaxy was just beginning to coalesce. After all those eons to cool off, the star has likely collapsed into a crystallized chunk of carbon mixed with oxygen and some other elements. It could actually be possible, though extremely difficult, to land a spacecraft on an object like this. There may be many more stars in the sky with diamonds, perhaps some even older than this one.

Spotting this white dwarf was a bit of a fluke, though. Until more powerful instruments are devised that can see an incredibly dim, burnt out star, they’ll remain shrouded in the vast darkness of space. However, this is not the first time that an object composed of diamond was found in space by sheer stroke of luck. Remember the diamond planet, a body located some 40 light years from Earth that orbits the binary star 55 Cancri?

Yep, that one! Like I said, such discoveries are demonstrating that the universe is a much more interesting, awesome, and complex place than previously thought. Between diamond stars, diamond planets, lakes of methane and atmospheres of plastic, it seems that just about anything is possible. Good to know, seeing as how so many of our plans for the future depend upon getting out there!

Sources: cnet.com, extremetech.com

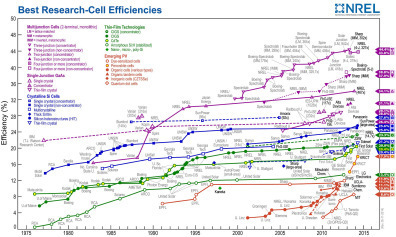

Powered by the Sun: Boosting Solar Efficiency

Improving the efficiency of solar power – which is currently the most promising alternative energy source – is central to ensuring that it becomes an economically viable replacement for fossil fuels, coal, and other “dirty” sources. And while many solutions have emerged in recent years that have led to improvements in solar panel efficiency, many developments are also aimed at the other end of things – i.e. improving the storage capacity of solar batteries.

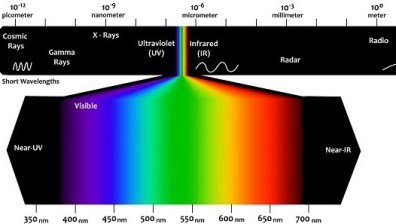

In the former case, a group of scientists working with the University of Utah believe they’ve discovered a method of substantially boosting solar cell efficiencies. By adding a polychromat layer that separates and sorts incoming light, redirecting it to strike particular layers in a multijunction cell, they hope to create a commercial cell that can absorb more wavelengths of light, and therefore generate more energy by volume than conventional cells.

Traditionally, solar cell technology has struggled to overcome a significant efficiency problem. The type of substrate used dictates how much energy can be absorbed from sunlight — but each type of substrate (silicon, gallium arsenide, indium gallium arsenide, and many others) corresponds to capturing a particular wavelength of energy. Cheap solar cells built on inexpensive silicon have a maximum theoretical efficiency of 34% and a practical (real-world) efficiency of around 22%.

At the other end of things, there are multijunction cells. These use multiple layers of substrates to capture a larger section of the sun’s spectrum and can reach up to 87% efficiency in theory – but are currently limited to 43% in practice. What’s more, these types of multijunction cells are extremely expensive and have intricate wiring and precise structures, all of which leads to increased production and installation costs.

In contrast, the cell created by the University of Utah used two layers: indium gallium phosphide (for visible light) and gallium arsenide (for infrared light). According to the research team, when their polychromat was added, the power efficiency increased by 16 percent. The team also ran simulations of a polychromat layer with up to eight different absorption layers and claims that it could potentially yield an efficiency increase of up to 50%.

However, there were some footnotes to their report which temper the good news. For one, the potential gain has not been tested yet, so any major increases in solar efficiency remain theoretical at this time. For another, the report states that the reported gain was a percentage of a percentage, meaning that if the original cell efficiency was 30%, then a gain of 16 percent means that the new efficiency is 34.8%. That’s still a huge gain for a polychromat layer that is easily produced, but not as impressive as it originally sounded.
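
To make the "percentage of a percentage" point concrete, here is a minimal sketch of the arithmetic. The 30% baseline is the hypothetical figure used above, not a measured cell efficiency, and the function name is just for illustration.

```python
def apply_relative_gain(base_efficiency: float, relative_gain: float) -> float:
    """Scale a baseline efficiency by a relative (not absolute) gain.

    Both arguments are fractions: 0.30 means 30%, 0.16 means a 16% gain.
    """
    return base_efficiency * (1.0 + relative_gain)


base = 0.30           # hypothetical starting efficiency from the text
reported_gain = 0.16  # the reported relative improvement

new_efficiency = apply_relative_gain(base, reported_gain)
gain_in_points = (new_efficiency - base) * 100

print(f"New efficiency: {new_efficiency:.1%}")              # 34.8%
print(f"Gain in percentage points: {gain_in_points:.1f}")   # 4.8
```

In other words, a 16 percent relative gain on a 30% cell adds 4.8 percentage points, not 16.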

However, given that the biggest barrier to multi-junction solar cell technology is manufacturing complexity and associated cost, anything that boosts cell efficiency on the front end without requiring any major changes to the manufacturing process is going to help with the long-term commercialization of the technology. Advances like this could help make technologies cost effective for personal deployment and allow them to scale in a similar fashion to cheaper devices.

In the latter case, where energy storage is concerned, a California-based startup called Enervault recently unveiled battery technology that could increase the amount of renewable energy utilities can use. The technology is based on inexpensive materials that researchers had largely given up on because batteries made from them didn’t last long enough to be practical. But the company says it has figured out how to make the batteries last for decades.

The technology is being demonstrated in a large battery at a facility in the California desert near Modesto, one that stores one megawatt-hour of electricity, enough to run 10,000 100-watt light bulbs for an hour. The company has been testing a similar, though much smaller, version of the technology for about two years with good results. It has also raised $30 million in funding, including a $5 million grant from the U.S. Department of Energy.
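
The light bulb comparison is easy to verify. The sketch below simply divides the stored energy by the power draw of one bulb; it ignores conversion losses, and the function name is illustrative.

```python
WATT_HOURS_PER_MEGAWATT_HOUR = 1_000_000


def bulb_hours(stored_mwh: float, bulb_watts: float) -> float:
    """Return how many bulb-hours a given amount of stored energy provides."""
    stored_wh = stored_mwh * WATT_HOURS_PER_MEGAWATT_HOUR
    return stored_wh / bulb_watts


# One megawatt-hour feeding 100-watt bulbs.
hours = bulb_hours(stored_mwh=1.0, bulb_watts=100.0)
print(f"{hours:,.0f} bulb-hours")  # 10,000 bulbs for one hour
```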

The technology is a type of flow battery, so called because the energy storage materials are in liquid form. They are stored in big tanks until they’re needed and then pumped through a relatively small device (called a stack) where they interact to generate electricity. Building bigger tanks is relatively cheap, so the more energy storage is needed, the better the economics become. That means the batteries are best suited for storing hours’ or days’ worth of electricity, and not delivering quick bursts.

This is especially good news for solar and wind companies, which have remained plagued by problems of energy storage despite improvements in both yield and efficiency. Enervault says that when the batteries are produced commercially at even larger sizes, they will cost just a fifth as much as vanadium redox flow batteries, which have been demonstrated at large scales and are probably the type of flow battery closest to market right now.

And the idea is not reserved to just startups. Researchers at Harvard recently made a flow battery that could prove cheaper than Enervault’s, but the prototype is small and could take many years to turn into a marketable version. An MIT spinoff, Sun Catalytix, is also developing an advanced flow battery, but its prototype is also small. And other types of inexpensive, long-duration batteries are being developed, using materials such as molten metals.

One significant drawback to the technology is that it’s less than 70 percent efficient, which falls short of the 90 percent efficiency of many batteries. The company says the economics still work out, but such a wasteful battery might not be ideal for large-scale renewable energy. More solar panels would have to be installed to make up for the waste. What’s more, the market for batteries designed to store hours of electricity is still uncertain.
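
To get a rough sense of what that waste means for the number of panels, here is a small sketch. It assumes the quoted figures are round-trip efficiencies (energy out divided by energy in), which the article does not state explicitly, and it treats 70% as the best case for the flow battery.

```python
def energy_in_required(energy_out_mwh: float, round_trip_efficiency: float) -> float:
    """Energy that must be fed into a battery to get `energy_out_mwh` back out."""
    if not 0.0 < round_trip_efficiency <= 1.0:
        raise ValueError("efficiency must be a fraction in (0, 1]")
    return energy_out_mwh / round_trip_efficiency


# Assumed round-trip efficiencies taken from the figures in the text.
flow_battery_in = energy_in_required(1.0, 0.70)
conventional_in = energy_in_required(1.0, 0.90)

# Extra generation needed by the flow battery to deliver the same output.
extra_generation = flow_battery_in / conventional_in - 1.0

print(f"Flow battery input:  {flow_battery_in:.2f} MWh")
print(f"Conventional input:  {conventional_in:.2f} MWh")
print(f"Extra generation:    {extra_generation:.0%}")
```

Under those assumptions, delivering the same megawatt-hour takes a bit under 30 percent more generation with the less efficient battery.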

A combination of advanced weather forecasts, responsive fossil-fuel power plants, better transmission networks, and smart controls for wind and solar power could delay the need for them. California is requiring its utilities to invest in energy storage but hasn’t specified what kind, and it’s not clear what types of batteries will prove most valuable in the near term, slow-charging ones like Enervault’s or those that deliver quicker bursts of power to make up for short-term variations in energy supply.

Tesla Motors, one company developing the latter type, hopes to make them affordable by producing them at a huge factory. And developments and new materials are being considered all the time (e.g. graphene) that are improving both the efficiency and storage capacity of batteries. And with solar panels and wind becoming increasingly cost-effective, it is all but inevitable that storage methods will catch up.

Sources: extremetech.com, technologyreview.com

June 27, 2014

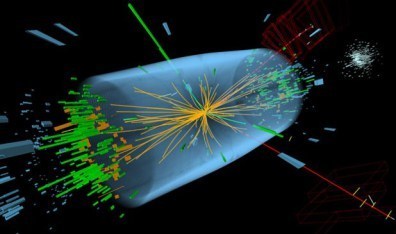

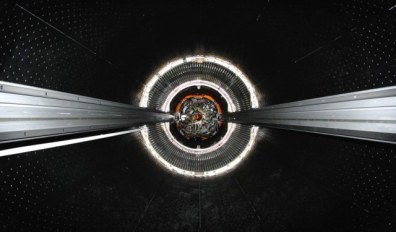

The Large Hadron Collider: We’ve Definitely Found the Higgs Boson

In July 2012, the CERN laboratory in Geneva, Switzerland made history when it discovered an elementary particle that behaved in a way that was consistent with the proposed Higgs boson – otherwise known as the “God Particle”. Now, some two years later, the people working the Large Hadron Collider have confirmed that what they observed was definitely the Higgs boson, the one predicted by the Standard Model of particle physics.

In the new study, published in Nature Physics, the CERN researchers indicated that the particle observed in 2012 indeed decays into fermions – as predicted by the Standard Model of particle physics. It sits in the mass-energy region of 125 GeV, has no spin, and it can decay into a variety of lighter particles. This means that we can say with some certainty that the Higgs boson is the particle that gives other particles their mass – which is also predicted by the Standard Model.

This model, which is explained through quantum field theory – itself an amalgam of quantum mechanics and Einstein’s special theory of relativity – claims that deep mathematical symmetries rule the interactions among all elementary particles. Until now, the decay modes discovered at CERN have been of a Higgs particle giving rise to two high-energy photons, or a Higgs going into two Z bosons or two W bosons.

But with the discovery of the decay into fermions, the researchers are now sure they have found the last holdout to the full and complete confirmation that the Standard Model is the correct one. As Markus Klute of the CMS Collaboration said in a statement:

Our findings confirm the presence of the Standard Model Boson. Establishing a property of the Standard Model is big news itself.

It certainly is big news for scientists, who can say with absolute certainty that our current conception of how particles interact and behave is not merely theoretical. But on the flip side, it also means we’re no closer to pushing beyond the Standard Model and into the realm of the unknown. One of the big shortfalls of the Standard Model is that it doesn’t account for gravity, dark energy and dark matter, and some other quirks that are essential to our understanding of the universe.

At present, one of the most popular theories for how these forces interact with the known aspects of our universe – i.e. electromagnetism and the strong and weak nuclear forces – is supersymmetry. This theory postulates that every Standard Model particle also has a superpartner that is incredibly heavy – thus accounting for the 23% of the universe that is apparently made up of dark matter. It is hoped that when the LHC turns back on in 2015 (pending upgrades) it will be able to discover these partners.

If that doesn’t work, supersymmetry will probably have to wait for the LHC’s planned successor. Known as the “Very Large Hadron Collider” (VLHC), this particle accelerator will measure some 96 km (60 miles) in length – nearly four times as long as its predecessor. And with its proposed ability to smash protons together with a collision energy of 100 teraelectronvolts – roughly seven times the LHC’s design energy of 14 teraelectronvolts – it will hopefully have the power needed to answer the questions the discovery of the Higgs Boson has raised.

These will hopefully include whether or not supersymmetry holds up and how gravity interacts with the three other fundamental forces of the universe – a discovery which will finally resolve the seemingly irreconcilable theories of general relativity and quantum mechanics. At which point (and speaking entirely in metaphors) we will have gone from discovering the “God Particle” to potentially understanding the mind of God Himself.

I don’t think I’m being melodramatic!

Sources: extremetech.com, blogs.discovermagazine.com

June 26, 2014

Down with Big Brother: Supreme Court Rules Against Cell Phone Taps

In another landmark decision, the Supreme Court issued a far-reaching defense of digital privacy by declaring that law enforcement officials are henceforth forbidden from searching cell phones without a warrant at the scene of an arrest or after. The decision was based on two cases in which police searches of mobile devices led to long prison sentences. This decision is just the latest nail in the coffin of warrantless surveillance, a battle that began over a decade ago and has persisted despite promises for change.

The ruling opinion notes that cell phones have in fact become tiny computers in Americans’ pockets teeming with highly private data, and that gaining access to them is now fundamentally different from rifling through someone’s pockets or purse. This contradicts the argument from U.S. prosecutors that a search of a cell phone should instead be treated “as materially indistinguishable” from a search of any other box or bag found on an arrestee’s body.

The Court’s ruling takes this into consideration, and asserts the counter-argument in the ruling opinion:

That is like saying a ride on horseback is materially indistinguishable from a flight to the moon. Modern cell phones, as a category, implicate privacy concerns far beyond those implicated by a cigarette pack, a wallet or a purse… A decade ago officers might have occasionally stumbled across a highly personal item such as a diary, but today many of the more than 90% of American adults who own cell phones keep on their person a digital record of nearly every aspect of their lives.

In one of the two cases at issue, a California man was charged with assault and attempted murder in relation to a street gang of which he was allegedly a member. The connection between his actions and gang activity was made when police searched his smartphone without a warrant and found videos and photos that prosecutors claimed demonstrated that he was associated with the “Bloods” gang. In short, the contents of his phone were used to put his crime into a context that carried with it a stiffer penalty.

In the second case, a Boston man had his cell phone searched when he was arrested after an apparent drug sale. By finding his home address on his flip phone, police were able to search his home and find a larger stash of drugs. Both defendants argued that the warrantless searches violated the Fourth Amendment. The Supreme Court’s Wednesday ruling sided with both defendants and declared that the searches were unconstitutional.

Privacy groups celebrated the ruling, with Hanni Fakhoury of the Electronic Frontier Foundation (EFF) calling it a “bright line, uniform, pro-privacy standard.” The American Civil Liberties Union, a long-time champion of privacy rights and an opponent of all forms of warrantless surveillance, declared the decision “revolutionary.” As Steven R. Shapiro, the ACLU’s national legal director, said in a statement:

By recognizing that the digital revolution has transformed our expectations of privacy, today’s decision…will help to protect the privacy rights of all Americans. We have entered a new world but, as the court today recognized, our old values still apply and limit the government’s ability to rummage through the intimate details of our private lives.

In its ruling, the court also rejected prosecutors’ notion that the ability to remotely wipe or lock a phone required police to search the devices immediately upon arrest before evidence could be destroyed. The court responded that police can easily prevent evidence from being remotely destroyed by turning the phone off or removing its battery, or putting it into a Faraday cage that blocks radio waves until a search warrant can be obtained.

But perhaps the most remarkable portion of the ruling was its recognition of the unique nature of modern smartphones as personal objects. Even calling them mere “cell phones” is a misnomer. As the ruling reads:

The term ‘cell phone’ is itself misleading shorthand. Many of these devices are in fact minicomputers that also have the capacity to be used as a telephone. They could just as easily be called cameras, video players, rolodexes, calendars, tape recorders, libraries, diaries, albums, televisions, maps or newspapers.

The EFF’s Fakhoury pointed to language in the ruling about how the sheer volume of data stored on cell phones makes them fundamentally different from other personal objects that might contain private information. This statement that the quantity of information searched matters – rather than merely the kind of information – might influence other privacy cases. As Fakhoury stated, this could have implications for other NSA data-collection programs:

The court recognizes that two pictures reveal something limited but a thousand reveals something very different. Does it mean something different when you’re collecting one person’s phone calls versus collecting everyone’s phone calls over five years? Technology allows the government to see things in quantities they couldn’t see otherwise.

Granted, the court did provide for some exceptions, but only in cases of extraordinary and specific danger – like child abduction or the threat of a terrorist attack. But such specifications require that police establish that an emergency exists before taking drastic action. Occurring on the heels of the Supreme Court decision that outlawed the use of cell phones to track people’s movements, this latest decision is another victory in the battle of privacy versus state security.

And given the precedent it has set, perhaps we can look forward to some meaningful ruling against everything the NSA has been doing in Fort Meade for the past few years. One can always hope, can’t one?

Sources: cnet.com, wired.com, time.com

News from Space: InSight Lander and the LDSD

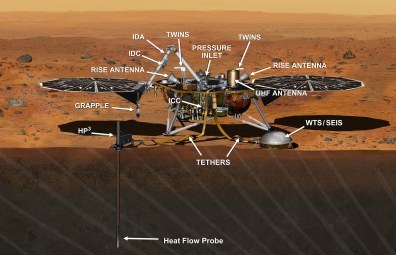

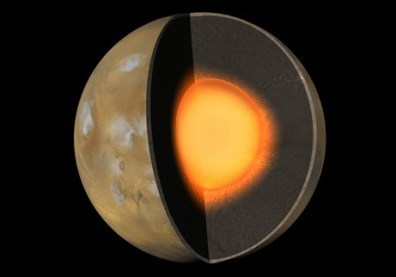

Scientists have been staring at the surface of Mars for decades through high-powered telescopes. Only recently, and with the help of robotic missions, has anyone been able to look deeper. And with the success of the Spirit, Opportunity and Curiosity rovers, NASA is preparing to go deeper still. The space agency just got official approval to begin construction of the InSight lander, which will be launched in spring 2016. Once there, it’s going to explore the subsurface of Mars to see what’s down there.

Officially, the lander is known as the Interior Exploration Using Seismic Investigations, Geodesy and Heat Transport, and back in May, NASA passed the crucial mission final design review. The next step is to line up manufacturers and equipment partners to build the probe and get it to Mars on time. As with many deep space launches, the timing is incredibly important – if not launched at the right point in Earth’s orbit, the trip to Mars would be far too long.

Unlike the Curiosity rover, which landed on the Red Planet by way of a fascinating rocket-powered sky crane, the InSight will be a stationary probe more akin to the Phoenix lander. That probe was deployed to search the surface for signs of microbial life on Mars by collecting and analyzing soil samples. InSight, however, will not rely on a tiny shovel like Phoenix (pictured above) – it will have a fully articulating robotic arm equipped with burrowing instruments.

Also unlike its rover predecessors, once InSight sets down near the Martian equator, it will stay there for its entire two-year mission – and possibly longer if it can hack it. That’s a much longer official mission duration than the Phoenix lander was designed for, meaning it’s going to need to endure some harsh conditions. This, in conjunction with InSight’s solar power system, made the equatorial region a preferable landing zone.

For the sake of its mission, the InSight lander will use a sensitive subsurface instrument called the Seismic Experiment for Interior Structure (SEIS). This device will track ground motion transmitted through the interior of the planet caused by so-called “marsquakes” and distant meteor impacts. A separate heat flow analysis package will measure the heat radiating from the planet’s interior. From all of this, scientists hope to be able to shed some light on Mars’ early history and formation.

For instance, Earth’s larger size has kept its core hot and spinning for billions of years, which provides us with a protective magnetic field. By contrast, Mars cooled very quickly, so NASA scientists believe more data on the formation and early life of rocky planets will be preserved there. The lander will also connect to NASA’s Deep Space Network antennas on Earth to precisely track the position of Mars over time. A slight wobbling could indicate the Red Planet still has a small molten core.

If all goes to plan, InSight should arrive on Mars just six months after its launch in spring 2016. Hopefully it will not only teach us about Mars’ past, but our own as well.

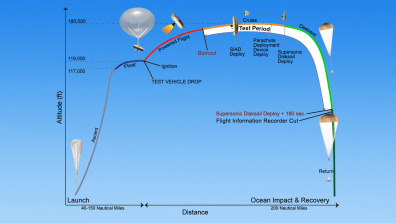

After the daring new type of landing that was performed with the Curiosity rover, NASA went back to the drawing board to come up with something even better. Their solution: the “Low-Density Supersonic Decelerator”, a saucer-shaped vehicle consisting of an inflating buffer that goes around the ship’s heat shield. It is hoped that this will help future spacecraft to put on the brakes as they enter Mars’ atmosphere so they can make a soft, controlled landing.

Back in January and again in April, NASA’s Jet Propulsion Laboratory tested the LDSD using a rocket sled. Earlier this month, the next phase was to take place, in the form of a high-altitude balloon that would take it to an altitude of over 36,600 meters (120,000 feet). Once there, the device was to be dropped from the balloon sideways until it reached a velocity of four times the speed of sound. Then the LDSD would inflate, and the teams on the ground would assess how it behaved.

Unfortunately, the test did not take place, as NASA lost its reserved time at the range in Hawaii where it was slated to go down. As Mark Adler, the Low Density Supersonic Decelerator (LDSD) project manager, explained:

There were six total opportunities to test the vehicle, and the delay of all six opportunities was caused by weather. We needed the mid-level winds between 15,000 and 60,000 feet [4,500 meters to 18,230 meters] to take the balloon away from the island. While there were a few days that were very close, none of the days had the proper wind conditions.

In short, bad weather foiled any potential opportunity to conduct the test before their time ran out. And while officials don’t know when they will get another chance to book time at the U.S. Navy’s Pacific Missile Range in Kauai, Hawaii, they’re hoping to start the testing near the end of June. NASA emphasized that the bad weather was quite unexpected, as the team had spent two years looking at wind conditions worldwide and determined Kauai was the best spot for testing their concept over the ocean.

If the technology works, NASA says it will be useful for landing heavier spacecraft on the Red Planet. This is one of the challenges the agency must surmount if it launches human missions to the planet, which would require more equipment and living supplies than any of the rover or lander missions mounted so far. And if everything checks out, the testing goes as scheduled and the funding is available, NASA plans to use an LDSD on a spacecraft as early as 2018.

And in the meantime, check out this concept video of the LDSD, courtesy of NASA’s Jet Propulsion Laboratory:

Sources: universetoday.com, (2), extremetech.com

June 25, 2014

NASA’s Proposed Warp-Drive Visualized

It’s no secret that NASA has been taking a serious look at Faster-Than-Light (FTL) technology in recent years. It began back in 2012 when Dr Harold White, a team leader from NASA’s Engineering Directorate, announced that he and his team had begun work on the development of a warp drive. His proposed design, an ingenious re-imagining of an Alcubierre Drive, may eventually result in an engine that can transport a spacecraft to the nearest star in a matter of weeks – and all without violating Einstein’s theory of relativity.

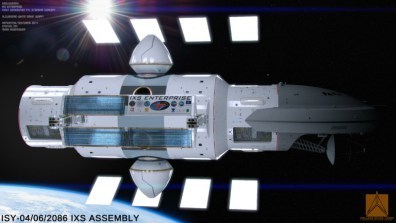

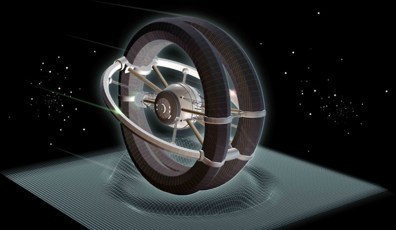

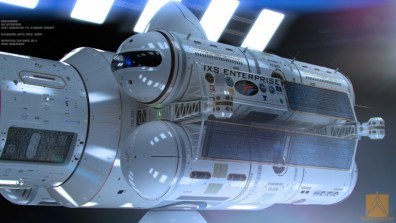

In the spirit of this proposed endeavor, White chose to collaborate with an artist to visualize what such a ship might look like. Said artist, Mark Rademaker, recently unveiled the fruit of this collaboration in the form of a series of concept images. At the heart of them is a sleek ship nestled at the center of two enormous rings that create the warp bubble. Known as the IXS Enterprise, the ship has one foot in the world of science fiction, but the other in the realm of hard science.

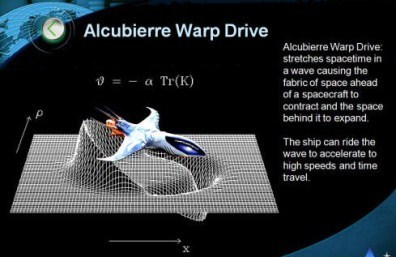

The idea for the warp-drive comes from the work published by Miguel Alcubierre in 1994. His version of a warp drive is based on the observation that, though light can only travel at a maximum speed of 300,000 km/sec (186,000 miles per second, a.k.a. c), spacetime itself has a theoretically unlimited speed. Indeed, many physicists believe that during the first seconds of the Big Bang, the universe expanded at some 30 billion times the speed of light.

The Alcubierre warp drive works by recreating this ancient expansion in the form of a localized bubble around a spaceship. Alcubierre reasoned that if he could form a torus of negative energy density around a spacecraft and push it in the right direction, this would compress space in front of it and expand space behind it. As a result, the ship could travel at many times the speed of light while the ship itself sits in zero gravity – hence sparing the crew from the effects of acceleration.

Unfortunately, the original maths indicated that a torus the size of Jupiter would be needed, and you’d have to turn Jupiter itself into pure energy to power it. Worse, negative energy density violates a lot of physical limits itself, and to create it requires forms of matter so exotic that their existence is largely hypothetical. In short, what was an idea proposed to circumvent the laws of physics itself fell prey to their limitations.

However, Dr Harold “Sonny” White of NASA’s Johnson Space Center reevaluated Alcubierre’s equations and made adjustments that corrected for the required size of the torus and the amount of energy required. In the case of the former, White discovered that making the torus thicker, while reducing the space available for the ship, allowed the size of it to be greatly decreased – from the size of Jupiter down to a width of 10 m (30 ft), roughly the size of the Voyager 1 probe.

In the case of the latter, oscillating the bubble around the craft would reduce the stiffness of spacetime, making it easier to distort. This would reduce the amount of energy required by several orders of magnitude, for a ship traveling ten times the speed of light. According to White, with such a setup, a ship could reach Alpha Centauri in a little over five months. A crew traveling on a ship that could accelerate to just shy of the speed of light would be able to make the same trip in about four and a half years.
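As a quick sanity check on those travel times, here is a minimal sketch that simply divides distance by speed. The distance to Alpha Centauri (about 4.37 light years) and the 0.99c figure for "just shy of the speed of light" are assumptions not stated in the post, and the function name is purely illustrative.

```python
# Assumed distance to Alpha Centauri in light years (not given in the post).
ALPHA_CENTAURI_LY = 4.37
MONTHS_PER_YEAR = 12


def travel_time_years(distance_ly: float, speed_in_c: float) -> float:
    """Return travel time in years for a distance in light years
    at a constant effective speed expressed as a multiple of c."""
    if speed_in_c <= 0:
        raise ValueError("speed must be positive")
    return distance_ly / speed_in_c


# Warp ship at an effective ten times the speed of light.
warp_years = travel_time_years(ALPHA_CENTAURI_LY, 10.0)
warp_months = warp_years * MONTHS_PER_YEAR

# Conventional ship just shy of light speed (assumed 0.99c), ignoring
# acceleration time and relativistic time dilation for the crew.
sublight_years = travel_time_years(ALPHA_CENTAURI_LY, 0.99)

print(f"At 10c: {warp_months:.1f} months")
print(f"At 0.99c: {sublight_years:.2f} years")
```

Under these assumptions the numbers land close to the figures quoted above: a bit more than five months at ten times light speed, and roughly four and a half years at just under it.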

Rademaker’s renderings reflect White’s new calculations. The toruses are thicker and, unlike the famous warp nacelles on Star Trek’s Enterprise, they are designed around the actual function of hurling the craft between the stars. Also, the craft, which is divided into command and service modules, fits properly inside the warp bubble. There are some artistic additions, such as some streamlining, but no one said an interstellar spaceship couldn’t be functional and pretty, right?

For the time being, White’s ideas can only be tested on special interferometers of the most exacting precision. Worse, the dependence of the warp on negative energy density is a major barrier to realization. While it can, under special circumstances, exist at a quantum level, in the classical physical world that this ship must travel through, it cannot exist except as a property of some form of matter so exotic that it can barely be said to be capable of existing in our universe.

Though no one can say with any certainty when such a system might be technically feasible, it doesn’t hurt to look ahead and dream of what may one day be possible. And in the meantime, you can check out Rademaker’s entire gallery by going to his Flickr account here. And be sure to check out the video of Dr. White explaining his warp-drive concept at SpaceVision 2013:

Sources: gizmag.com, IO9.com, cnet.com, flickr.com

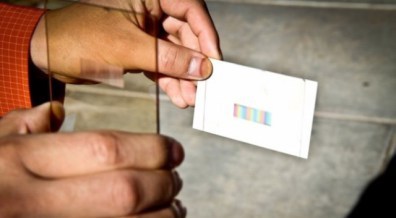

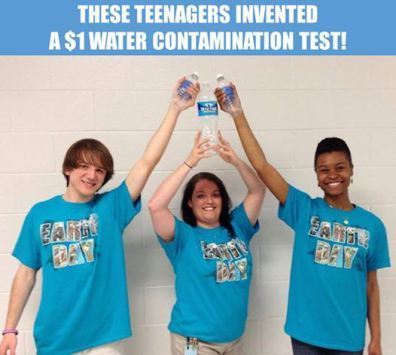

A Cleaner Future: Contaminant-Detecting Water Sensor

Jack Andraka is at it again! For those who follow this blog (or subscribe to Forbes or watch TED Talks), this young man probably needs no introduction. But if not, then you might not know that Andraka is the young man who – at 15 years of age – invented an inexpensive litmus test for detecting pancreatic cancer. This invention won him first prize at the 2012 Intel International Science and Engineering Fair (ISEF), and was followed up less than a year later with a handheld device that could detect cancer and even explosives.

And now, Andraka is back with yet another invention: a biosensor that can quickly and cheaply detect water contaminants. His microfluidic biosensor, developed with fellow student Chloe Diggs, recently took the $50,000 first prize among high school entrants in the Siemens We Can Change the World Challenge. The pair developed their credit card-sized biosensor after learning about water pollution in a high school environmental science class.

We had to figure out how to produce microfluidic [structures] in a classroom setting. We had to come up with new procedures, and we custom-made our own equipment.

According to Andraka, the device can detect six environmental contaminants: mercury, lead, cadmium, copper, glyphosate, and atrazine. It costs a dollar to make and takes 20 minutes to run, making it 200,000 times cheaper and 25 times more efficient than comparable sensors. At this point, making scaled-down versions of expensive sensors that can save lives has become second nature to Andraka. And in each case, he is able to do it in a way that is extremely cost-effective.

For example, Andraka’s litmus test cancer-detector was proven to be 168 times faster than current tests, 90% accurate, and 400 times more sensitive. In addition, his paper test costs 26,000 times less than conventional methods – which include CT scans, MRIs, ultrasounds, or cholangiopancreatography. These tests not only involve highly expensive equipment, they are usually administered only after serious symptoms have manifested themselves.

In much the same vein, Andraka’s handheld cancer/explosive detector was manufactured using simple, off-the-shelf consumer products. Using a simple cell phone case, a laser pointer and an iPhone camera, he was able to craft a device that does the same job as a Raman spectrometer, but at a fraction of the size and cost. Whereas a conventional spectrometer is the size of a room and costs around $100,000, his handheld device is the size of a cell phone and uses about $15 worth of components.
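To put that price gap in perspective, the ratio can be worked out directly from the two figures quoted above. This is only a back-of-the-envelope sketch: the function name is illustrative, and the dollar amounts are the approximate ones given in the text.

```python
def cost_ratio(conventional_cost: float, alternative_cost: float) -> float:
    """How many times cheaper the alternative is than the conventional device."""
    if alternative_cost <= 0:
        raise ValueError("alternative cost must be positive")
    return conventional_cost / alternative_cost


# Figures quoted in the text: ~$100,000 spectrometer vs. ~$15 in components.
ratio = cost_ratio(100_000, 15)
print(f"Roughly {ratio:,.0f} times cheaper")
```

On those rough numbers, the handheld device comes out several thousand times cheaper than a conventional spectrometer.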

As part of the project, Diggs and Andraka also developed an inexpensive water filter made out of plastic bottles. Next, they hope to do large-scale testing for their sensor in Maryland, where they live. They also want to develop a cell-phone-based sensor reader that lets users quickly evaluate water quality and post the test results online. Basically, it’s all part of what is fast becoming the digitization of health and medicine, where the sensors are portable and the information can be uploaded and shared.

This isn’t the only project that Andraka has been working on of late. Along with two other Intel Science Fair finalists – who came together with him to form Team Gen Z – he’s working on a handheld medical scanner that will be entered in the Tricorder XPrize. This challenge offers $10 million to any laboratory or private inventors that can develop a device capable of diagnosing 15 diseases in 30 patients over a three-day period, while still being small enough to carry.

For more information on this project and Team Gen Z, check out their website here. And be sure to watch their promotional video for the XPrize competition:

Source: fastcoexist.com

The Future is Here: Google’s New Self-Driving Car

Google has just unveiled its very first, built-from-scratch-in-Detroit, self-driving electric robot car. The culmination of years’ worth of research and development, the Google vehicle is undoubtedly cuter in appearance than other electric and hybrid cars – like the Tesla Model S or Toyota Prius. In fact, it looks more like a Little Tikes plastic car, right down to the smiley face on the front end. This is no doubt the result of clever marketing and an attempt to reduce apprehension towards the safety or long-term effects of autonomous vehicles.

The battery-powered electric vehicle has a stop-go button, but no steering wheel or pedals. It also comes with some seriously expensive hardware – radar, lidar, and 360-degree cameras – that is mounted on a tripod on the roof. This is to ensure good sightlines around the vehicle, and at the moment, Google hasn’t found a way to integrate the sensors seamlessly into the car’s chassis. That is the long-term plan, but for now, the robotic tripod remains.

As the concept art above shows, the eventual goal appears to be to build the computer vision and ranging hardware into a slightly less obtrusive rooftop beacon. In terms of production, Google’s short-term plan is to build around 200 of these cars over the next year, with road testing probably restricted to California for the next year or two. These first prototypes are mostly made of plastic with battery/electric propulsion limited to a max speed of 25 mph (40 km/h).

Instead of an engine or “frunk,” there’s a foam bulkhead at the front of the car to protect the passengers. There are just a couple of seats in the interior, and some great big windows so passengers can enjoy the view while they ride in automated comfort. In a blog post on their website, Google said that their goal lies in “improving road safety and transforming mobility for millions of people.” Driverless cars could definitely revolutionize travel for people who can’t currently drive.

Improving road safety is a little more ambiguous, though. It’s generally agreed that if all cars on the road were autonomous, there could be some massive gains in safety and efficiency, both in terms of fuel usage and being able to squeeze more cars onto the roads. In the lead-up to that scenario, though, there are all sorts of questions about how to effectively integrate a range of manual, semi- and fully self-driving vehicles on the same roadways.

Plus, there are the inevitable questions of practicality and exigent circumstances. For starters, having no other controls in the car but a stop-go button may sound simplified and creative, but it creates problems. What’s a driver to do when they need to move the car just a few feet? What happens when a tight parking situation is taking place and the car has to be slowly moved to negotiate it? Will Google’s software allow for temporary double parking, or off-road driving for a concert or party?

Can you choose which parking spot the car will use, to leave the better/closer parking spots for someone with special needs (e.g. the elderly or physically disabled)? How will these cars handle the issue of “right of way” when it comes to pedestrians and other drivers? Plus, is it even sensible to promote a system that will eventually make it easier to put more cars onto the road? Mass transit is considered the best option for a cleaner, less cluttered future. Could this be a reason not to develop such ideas as the Hyperloop and other high-speed maglev trains?

All good questions, and ones which will no doubt have to be addressed as time goes on and production becomes more meaningful. In the meantime, there is no shortage of people who are interested in the concept and hoping to see where it will go. Also, there are plenty of people willing to take a test drive in the new robotic car. You can check out the results of these in the video below. And until then, try not to be too creeped out if you see a car with a robotic tripod on top and a very disengaged passenger in the front seat!

Sources: extremetech.com, scientificamerican.com

June 24, 2014

Reinstating Net Neutrality: New Bill Before US Congress

A new “net neutrality” bill is on its way towards Congress, one which seeks to reinstate the free and open nature of the net – something that has been under fire in recent years. And one week ago, Senator Patrick Leahy of Vermont and Representative Doris Matsui of California took another decisive step when they announced that they will propose a bill to stop the Federal Communications Commission from allowing paid “fast lanes” on the internet.

In short, the proposed bill demands that the FCC use whatever authority it sees fit to make sure that Internet providers don’t speed up certain types of content (like Netflix videos) at the expense of others (like e-mail). It wouldn’t give the commission new powers, but the bill – known as the Online Competition and Consumer Choice Act – would give the FCC crucial political cover to prohibit what consumer advocates say would harm startup companies and Internet services by requiring them to pay extra fees to ISPs.

And this past spring, after a federal court struck down the FCC’s existing net neutrality rules – which sought to ensure that ISPs didn’t discriminate against certain internet traffic – the commission proposed a new set of rules that has left many worried that ISPs could start charging web companies like Google and Netflix to deliver their content at faster speeds. Such an arrangement, these sources say, would squeeze out newer and smaller operations that can’t pay the fees.

Leahy and Matsui, both Democrats, are part of a widespread effort to ensure that all web companies, from Google to Netflix to Snapchat, are treated equally on the internet. On the other side, big-name internet service providers such as Comcast and Verizon are fighting to maintain control over how their networks operate. Caught in the middle are internet users who stand to lose if the ISPs create a new internet where it’s harder for certain services to reach them.

After holding a hearing on net neutrality in Vermont this past summer, Leahy came to an unequivocal conclusion:

Americans are speaking loud and clear. They want an Internet that is a platform for free expression and innovation, where the best ideas and services can reach consumers based on merit rather than based on a financial relationship with a broadband provider.

Though FCC chairman Tom Wheeler has claimed that internet fast lanes would be “commercially unreasonable” and therefore forbidden under the commission’s own proposed new rules, critics worry that the rules are too broad and would allow for loopholes as to what counts as commercially reasonable activity. Since the new rules were proposed, protests have taken place in front of the FCC’s offices, massive internet petitions have been mounted, and an epic rant was made by Last Week Tonight host John Oliver.

The new bill would provide a mandate regarding how the FCC deals with any sort of paid prioritization, but it wouldn’t reclassify providers. Also, the new bill would only apply to connections from internet service providers to customers’ homes, commonly referred to as last mile connections. It wouldn’t pertain to “peering”, the deals governing the ways that internet service providers connect with each other or with content providers like Netflix and Google.

Despite these limitations, Public Knowledge supports the proposed legislation. As vice president of government affairs Chris Lewis said in a statement:

This bill sends a clear signal to the FCC that fast lanes and paid prioritization could endanger the internet ecosystem as we know it. The reason we have seen so much financial investment and innovation online is because the playing field for new entrepreneurs is level. As the FCC continues to evaluate new net neutrality rules, it’s important they understand that Americans want an internet that everyone can succeed in, not just the companies with enough money to pay a toll to ISPs.

The bill may face serious challenges, however. Republicans control the House and have proposed their own bill to block the FCC from reclassifying internet service providers. In this respect, net neutrality is dividing lawmakers along partisan lines, and Republicans are not expected to support the proposed Leahy-Matsui bill. But in theory, a bipartisan agreement could be reached, especially since the Leahy-Matsui bill leaves reclassification off the table.

And given the level of public pressure on lawmakers and regulators to protect the function of the internet, it’s too early to count out this or any other legislation that addresses the issue of neutrality. Network neutrality has become a hot button issue, much like domestic surveillance and data collection. And the people are sending a clear message: they want the internet to be a level playing field and won’t rest until the rules clearly reflect that.

Sources: wired.com, washingtonpost.com

Accelerando: A Review

It’s been a long while since I did a book review, mainly because I’ve been immersed in my writing. But sooner or later, you have to return to the source, right? As usual, I’ve been reading books that I hope will help me expand my horizons and become a better writer. And with that in mind, I thought I’d finally review a book I finished reading some months ago, one which I read in the hopes of learning my craft.

It’s called Accelerando, one of Charles Stross’ better-known works, which won the Locus Award and was nominated for the Hugo, Campbell, Clarke, and British Science Fiction Association Awards. The book contains nine short stories, all of which were originally published as novellas and novelettes in Asimov’s Science Fiction. Each one revolves around the Macx family, looking at three generations that live before, during, and after the technological singularity.

This is the central focus of the story – and Stross’ particular obsession – which he explores in serious depth. The title, which in Italian means “speeding up” and is used as a tempo marking in musical notation, refers to the accelerating rate of technological progress and its impact on humanity. The story begins in the early 21st century with Manfred Macx, a “venture altruist”, moves to his daughter Amber in the mid 21st century, and culminates with Sirhan al-Khurasani, Amber’s son, in the late 21st century and distant future.

In the course of all that, the story looks at such high-minded concepts as nanotechnology, utility fogs, clinical immortality, Matrioshka Brains, extra-terrestrials, FTL, Dyson Spheres and Dyson Swarms, and the Fermi Paradox. It also takes a long view of emerging technologies and predicts where they will take us down the road.

And to quote Cory Doctorow’s own review of the book, it essentially “Makes hallucinogens obsolete.”

Plot Synopsis:

Part I, Slow Takeoff, begins with the short story “Lobsters”, which opens in early-21st century Amsterdam. Here, we see Manfred Macx, a “venture altruist”, going about his business, making business ideas happen for others and promoting development. In the course of things, Manfred receives a call on a courier-delivered phone from entities claiming to be a net-based AI working through a KGB website, seeking his help on how to defect.

Eventually, he discovers the callers are actually uploaded brain-scans of the California spiny lobster looking to escape from humanity’s interference. This leads Macx to team up with his friend, entrepreneur Bob Franklin, who is looking for an AI to crew his nascent spacefaring project—the building of a self-replicating factory complex from cometary material.

In the course of securing them passage aboard Franklin’s ship, a new legal precedent is established that will help define the rights of future AIs and uploaded minds. Meanwhile, Macx’s ex-fiancee Pamela pursues him, seeking to get him to declare his assets, driven both by her job with the IRS and by her disdain for his post-scarcity economic outlook. Eventually, she catches up to him and forces him to impregnate and marry her in an attempt to control him.

The second story, “Troubadour”, takes place three years later, when Manfred is in the middle of an acrimonious divorce with Pamela, who is once again seeking to force him to declare his assets. Their daughter, Amber, is frozen as a newly fertilized embryo, and Pamela wants to raise her in a way that would be consistent with her religious beliefs and not Manfred’s extropian views. Meanwhile, he is working on three new schemes and looking for help to make them a reality.

These include a workable state-centralized planning apparatus that can interface with external market systems, and a way to upload the entirety of the 20th century’s out-of-copyright film and music to the net. He meets up with Annette again – a woman working for Arianespace, a French commercial aerospace company – and the two begin a relationship. With her help, his schemes come together perfectly and he is able to thwart his wife and her lawyers. However, their daughter Amber is then defrosted and born, and is thereafter raised by Pamela.

The third and final story in Part I is “Tourist”, which takes place five years later in Edinburgh. During this story, Manfred is mugged and his memories (stored in Turing-compatible cyberware) are stolen. The criminal tries to use Manfred’s memories and glasses to make some money, but is horrified when he learns all of Manfred’s plans are being made available free of charge. This forces Annette to go out and find the man who did it and cut a deal to get Manfred’s memories back.

Meanwhile, the Lobsters are thriving in colonies situated at the L5 point, and on a comet in the asteroid belt. Along with the Jet Propulsion Laboratory and the ESA, they have picked up encrypted signals from outside the solar system. Bob Franklin, now dead, is personality-reconstructed in the Franklin Collective. Manfred, his memories recovered, moves to further expand the rights of non-human intelligences while his robotic cat, Aineko, begins to study and decode the alien signals.

Part II, Point of Inflection, opens a decade later in the early/mid-21st century and centers on Amber Macx, now a teenager, in the outer Solar System. The first story, entitled “Halo”, centers around Amber’s plot (with Annette and Manfred’s help) to break free from her domineering mother by enslaving herself via a Yemeni shell corporation and enlisting aboard a Franklin Collective-owned spacecraft that is mining materials from Amalthea, Jupiter’s fourth moon.

To retain control of her daughter, Pamela petitions an imam named Sadeq to travel to Amalthea to issue an Islamic legal judgment against Amber. Amber manages to thwart this by setting up her own empire on a small, privately owned asteroid, thus making herself sovereign over an actual state. In the meantime, the alien signals have been decoded, and a physical journey to an alien “router” beyond the Solar System is planned.

In the second story, “Router”, the uploaded personalities of Amber and 62 of her peers travel to a brown dwarf star named Hyundai +4904/-56 to find the alien router. Traveling aboard the Field Circus, a tiny spacecraft made of computronium and propelled by a Jupiter-based laser and a lightsail, the virtualized crew are contacted by aliens.

Known as “The Wunch”, these sentients occupy virtual bodies based on Lobster patterns that were “borrowed” from Manfred’s original transmissions. After opening up negotiations for technology, Amber and her friends realize the Wunch are just a group of thieving, third-rate “barbarians” who have taken over in the wake of another species transcending thanks to a technological singularity. After thwarting The Wunch, Amber and a few others make the decision to travel deep into the router’s wormhole network.

In the third story, “Nightfall”, the router explorers find themselves trapped by yet more malign aliens in a variety of virtual spaces. In time, they realize the virtual realities are being hosted by a Matrioshka brain – a megastructure built around a star (similar to a Dyson Sphere) composed of computronium. The builders of this brain seem to have disappeared (or been destroyed by their own creations), leaving an anarchy ruled by sentient, viral corporations and scavengers who attempt to use newcomers as currency.

With Aineko’s help, the crew finally escapes by offering passage to a “rogue alien corporation” (a “pyramid scheme crossed with a 419 scam”), represented by a giant virtual slug. This alien personality opens a powered route out, and the crew begins the journey back home after many decades of being away.

In Part III, Singularity, events unfold back in the Solar System from the point of view of Sirhan – the son of the physical Amber and Sadeq, who stayed behind. In “Curator”, the crew of the Field Circus comes home to find that the inner planets of the Solar System have been disassembled to build a Matrioshka brain similar to the one they encountered through the router. They arrive at Saturn, which is where normal humans now reside, and come to a floating habitat in Saturn’s upper atmosphere being run by Sirhan.

The crew upload their virtual states into new bodies, and find that they are all now bankrupt and unable to compete with the new Economics 2.0 model practised by the posthuman intelligences of the inner system. Manfred, Pamela, and Annette are present in various forms and realize Sirhan has summoned them all to this place. Meanwhile, Bailiffs—sentient enforcement constructs—arrive to “repossess” Amber and Aineko, but a scheme is hatched whereby the Slug is introduced to Economics 2.0, which keeps both constructs very busy.

In “Elector”, we see Amber, Annette, Manfred and Gianna (Manfred’s old political colleague) in the increasingly populated Saturnian floating cities, working on a political campaign to finance a scheme to escape the predations of the “Vile Offspring” – the sentient minds that inhabit the inner Solar System’s Matrioshka brain. With Amber in charge of this “Accelerationista” party, they plan to journey once more to the router network. She loses the election to the stay-at-home “conservationista” faction, but once more the Lobsters step in to help by offering passage to uploads on their large ships if the humans agree to act as explorers and mappers.

In the third and final chapter, “Survivor”, things fast-forward to a few centuries after the singularity. The router has once again been reached by the human ship and humanity now lives in space habitats throughout the Galaxy. While some continue in the ongoing exploration of space, others (copies of various people) live in habitats around Hyundai and other stars, raising children and keeping all past versions of themselves and others archived.

Meanwhile, Manfred and Annette reconcile their differences and realize they were being manipulated all along. Aineko, who was becoming increasingly intelligent throughout the decades, was apparently pushing Manfred to fulfill his schemes in order to bring humanity to the alien node and help it escape the fate of other civilizations that were consumed by their own technological progress.

Summary:

Needless to say, this book was one big tome of big ideas, and could be mind-bendingly weird and inaccessible at times! I’m thankful I came to it when I did, because no one should attempt to read this until they’ve had sufficient priming by studying all the key concepts involved. For instance, don’t even think about touching this book unless you’re familiar with the notion of the Technological Singularity. Beyond that, be sure to familiarize yourself with things like utility fogs, Dyson Spheres, computronium, nanotechnology, and the basics of space travel.

You know what, let’s just say you shouldn’t be allowed to read this book until you’ve first tackled writers like Ray Kurzweil, William Gibson, Arthur C. Clarke, Alastair Reynolds and Neal Stephenson. Maybe Vernor Vinge too, whom I’m currently working on. But assuming you can wrap your mind around the things presented therein, you will feel like you’ve digested something pretty elephantine that is still pretty cutting edge a decade or more after it was first published!

But to break it all down, the story is essentially a cautionary tale about the dangers of the ever-increasing pace of change and advancement. At several points in the story, the drive toward extropianism and post-humanity is held up as both an inevitability and a fearful prospect. It’s also presented as a possible explanation for the Fermi Paradox, which asks why, if sentient life is statistically likely and plentiful in our universe, humanity has not observed or encountered it.

According to Stross, it is because sentient species – which would all presumably have the capacity for technological advancement – will eventually be consumed by the explosion caused by ever-accelerating progress. This will inevitably lead to a situation where all matter can be converted into computing space, all thought and existence can be uploaded, and species will not want to venture away from their solar system because the bandwidth will be too weak. In a society built on computronium and endless time, instant communication and access will be tantamount to life itself.

All that being said, the inaccessibility can be tricky sometimes and can make the read feel like it’s a bit of a labor. And the twist at the ending did seem a little contrived and out of left field. It certainly made sense in the context of the story, but to think that a robotic cat that was progressively getting smarter was the reason behind so much of the story’s dynamic – both in terms of the characters and the larger plot – seemed sudden and farfetched.

And in reality, the story was more about the technical aspects and deeper philosophical questions than anything about the characters themselves. As such, anyone who enjoys character-driven stories should probably stay away from it. But for people who enjoy plot-driven tales that are very dense and loaded with cool technical stuff (which describes me pretty well!), this is definitely a must-read.