Steven Lyle Jordan's Blog, page 25

September 24, 2015

We’ll get to Mars—just maybe not soon.

Next week’s premiere of The Martian is going to show audiences, better than almost any other medium can, how incredibly hard it’s going to be to get to and live on our sister planet. And as many problems as it shows us, it doesn’t cover every danger Mars poses to human visitors. Mars is just plain hazardous to human health. But the public is already seeing this as a love letter to the space program, an acceptance of the challenges involved, and the human desire to stand on another planet.

There are detractors and nay-sayers, it’s true, and some say we should never go to Mars. But there always are opponents to any new idea, and for good reason: New ideas are often hard and dangerous things when first conceived of or attempted.

Yes, going to Mars now would be almost impossibly hard to pull off. But that’s how a lot of scientific progress goes: Conceived of in one era; pulled off in the next.

If you think about most major advances in technology and how they were used, you begin to realize that science has conceived of many types of technologies long before anyone was able to actually create them; and even then, it might take a good deal longer before they were used effectively.

Take human flight, for instance: The first principles of flight were found by Greek scientists. Leonardo da Vinci later built on those principles to create his human-powered flyer designs, but da Vinci’s designs still weren’t practical to construct for another four centuries. So, from conception to the accomplishment of flight, about two millennia had to pass.

The Wright Flyer: 2000 years in the making.

A lot of that was due to the needed technologies and engineering knowledge taking time to develop. The Greeks didn’t have the engineering knowledge or material resources to build a workable human flyer. Da Vinci had much of the needed engineering knowledge, but the resources needed to accomplish his designs still weren’t available. Not until the early twentieth century did the combination of theories, available resources and engineering finally come together in the Wright Flyer.

Today, we’re developing the technologies for extra-terrestrial flight and life support first conceived about a century ago. We didn’t fly to the Moon when we first started to work out the possibility… it took us decades to finally do it. We are only now working out what we need to survive a long space voyage and a short stay on a planet too far away to expect outside help or escape if something goes wrong. We shouldn’t expect to be able to accomplish that in a few short years; it could take decades to reach the point where such a trip is no more dangerous than a cross-country jet flight today.

(In fact, this reminded me a lot of a short story I wrote for The Onuissance Cells—The First Expedition (read it, it’s free)—which describes a dedicated but doomed first Mars expedition; followed by another expedition, two centuries later, that had learned enough to be successful. A sad story, but it reflects the reality of technological progress.)

That humans will go to Mars is almost inevitable, assuming we don’t blow ourselves up first. We just have to accept that some of the things we need to actually accomplish a Mars visit aren’t ready yet, so it won’t happen right away. In fact, we may briefly visit soon; but it may take centuries before we’re truly ready to safely travel to and live on Mars long-term.

September 22, 2015

New (sort of): Unwelcome Guest

Now available from Amazon and Barnes & Noble, Unwelcome Guest is the latest revision of my sexy detective noir novel, originally titled Despite Our Shadows:

In 2007, heiress Ellen Levinson vanishes from a downtown Washington hotel under mysterious circumstances. Four years later, a series of blackmail letters leads investigator Alain Guest to Nashville, digging into the local goth and bondage scene in search of the missing heiress. But things go wrong quickly, leaving Alain wondering who’s in more danger: The heiress; or himself…

This repackaging includes a fresh proofreading pass, a spanking (heh) new cover for your titillation and enjoyment, and the scandalously low price of $0.99 ($1.00 even on B&N… don’t ask).

September 15, 2015

Runaway: Decades ahead of its time

It’s really a shame that, when Michael Crichton brought Runaway to the big screen, he wasn’t graced with a big budget or serious Hollywood support. The lack of both shows in his film about an America filled with automation, including self-driving cars, robot maids, flying drones… and a terrorist dedicated to hacking those things in order to kill people.

Michael Crichton’s only mistake in creating this movie was giving it to us so early. If he had brought this to Hollywood in the 2000s, it could have been his greatest hit.

Runaway was released in 1984, a decade after Crichton had given us Westworld and three years after another low-budget SF thriller, Looker. In Runaway, Tom Selleck plays police officer Jack Ramsay, who specializes in catching and shutting down malfunctioning, “runaway” robots that become a threat to the public. Just as he’s breaking in a new partner, a terrorist named Luther (played by—gulp—Gene Simmons!) has bribed some electronics programmers to obtain robot-controlling chips that can be programmed to kill a target.

When the terrorist kills one of the programmers for going back on the deal, and tries to kill the other using a domestic assistant bot that gets ahold of the family gun, Ramsay gets involved and discovers Luther’s operation. Then it’s a race to find Luther and shut him down before he gets away with the chips and kills anyone else.

Kirstie Alley poses with Lois, a household maid robot (which, between the two of them, did the better acting job).

This film looks good in a lot of ways; most notably, the practical effects used for many of the robots in the film, like Lois, Ramsay’s maid-bot. Also, most of the actors are fairly easy on the eyes. Other robots didn’t look so hot, due to much-less-polished mechanicals… the robotic assassin “spiders,” supposed to be menacing and creepy, are particularly hilarious. (Oh, the rickety wire-work when they would jump at the camera…) Attempts at visual effects were simple and cheap-looking as well. As these were supposed to be the central threats of the movie, they brought the entire experience down. Other production values suffered… even the great Jerry Goldsmith was wasted here, tasked with creating a fully electronic score (not a format that played to his orchestral strengths).

And it didn’t seem like the actors were particularly into their roles, either: Only half of the cast, pretty as they were, were ready for prime-time; and Gene Simmons’ (bum-bum-bummmm!) evil terrorist was not much better than a cardboard KISS cutout.

A (chuckle) lethal robot spider creeps up on an unsuspecting victim. Oh, the (hyuk) horror!

But consider how prescient this flick was! A future of robots assisting us on a daily basis! Robots in the home… robots driving our cars. Terrorist-assassins! Hacking electronics to tap private networks, control drones and design lethal chipsets. Runaway was at least thirty years ahead of its time.

I would love to see someone remake this movie today. Crichton’s story itself needs little improvement, interestingly… just a tweak here and there to really punch up the depiction and use of modern robotics in the story. The potential of domestic and industrial robots, miniature programmed assassins, rolling and flying bombs, network hacking and other computer-based shenanigans would seem straight out of our modern headlines, and much more of a serious threat than 1984’s critters were at the time.

And with the full capability of modern practical and visual effects at their disposal, a modern production crew could make Runaway seriously creepy, borderline-horror… the kind of thing that would leave you sneaking second glances at every electronic device in your house.

Runaway should have been saved for a post-9/11 America; but it’s not too late. Thirty years have passed since it was originally made. That’s plenty of time between a lackluster original and a blockbuster remake.

September 12, 2015

Under the skin

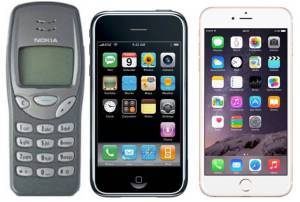

Facebooker Roy Kristovski submitted this photo comparison of three cellphones to illustrate a point he was making:

Each of these are/were arguably the most popular phones of their time, each released around 8 years apart.

How come the advance between the first 2 are so much more than the second two. I’m only 22 so I may be bias but I feel like during the period say 2000-2007 the everyday advances we saw in technology were massive think CD walkman to iPod, VHS to DVD the difference ever year in cell phones and computers, Dial Up to broadband. In the last 8 years we have been given Wifi and Digital storage of movies/music, but I feel as tho these are just upgrades of preexisting items. So far wearable tech has failed to make an impact see google glass or apple watch…. Idk correct me if i’m wrong but compared to the first 7 or so years of the 21st century this second 7 has been pretty lacklustre in the terms of life changing tech…

I see Roy’s dilemma, but in this case, I think he’s allowed the mere appearance of the phones to cloud his impressions of advancing technology. Here’s how I responded:

First, advancement doesn’t happen on a smooth timeline. Innovation happens in spurts, and that’s exactly what we’ve seen.

Secondly, I’d say wifi and digital music/video storage are MONSTROUS advancements over the tech of the past, and not to be sneezed at. When I can store in a millimeter of space what once took an entire library to hold on paper, vinyl or tape… that freaks the crap out of me.

Thirdly, these photos don’t do the issue justice. While the iPhones may look pretty similar, their quality and capabilities have increased significantly in that time… from significantly more memory than the older versions to many more available apps and versions of apps, meaning the things you can store and choose to do with that phone have increased a thousandfold. Whether you choose to do them is up to you… but the potential is there.

So don’t be fooled by similar packaging: There have been crazy innovations in the last 8 years; you just won’t see them by looking at the case, they’ve all happened under the skin.

Technology can’t be judged by its external appearance. Technology always makes its most visually distinctive changes—working out the form-factors, ergonomics, etc—first; and in the case of cellphones, it’s already done that. But once that’s done, the real work happens… under the hood.

In fact, a car analogy fits this perfectly, as the basic configuration of the modern automobile hasn’t changed in over 50 years; but under the skin, incredible innovation has been going on, improving engine efficiency, fuel efficiency (and, in some cases, fuel alternatives), component quality, automation assistance, safety, entertainment and performance features. Today’s cars can play movies for the kids in the back seat, while the dashboard gives you turn-by-turn directions, plays music from your collection of a few hundred songs (or beamed from a satellite), automatically cleans your windshield when the rain starts, adjusts engine operation to maximize fuel efficiency, detects obstacles in your path and stops the car, using advanced computers to keep your brakes from locking up. Soon, the car that hasn’t much changed its outside appearance in over 50 years will be doing the driving for you.

Consumer electronic devices have gone through the same kind of evolution in the past decade, making significant changes in the software that utterly transform the capabilities of the hardware. The big advance over the original cellphones, for example, came when the phones were combined with handheld computers, which greatly expanded their capabilities. Once that happened, the apps available for cellphones exploded, not only in number but in features and capabilities. And the cellphones themselves have not only increased memory storage by an order of magnitude, but have improved audio and video capabilities, to the extent that you can download and play an entire motion picture on a high-quality screen. Then connect wirelessly to the internet to get information on that movie via IMDB, google its stars, find them on Twitter and add them to your list of followed accounts, query industry insiders to find out about the expected date of the movie sequel, and add it to the calendar that you share with your friends in realtime alongside video clips of how awesome a time you’re gonna have.

You couldn’t do most of that 8 years ago… in fact, you wouldn’t have even seen the movie to kick off the process. And if that’s been accomplished in the last 8 years… think about what the next 8 will bring.

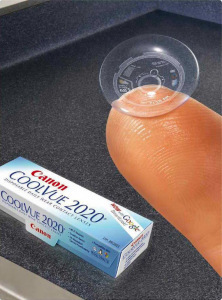

Just don’t expect to be able to see all that cool stuff by staring at your phone. In 8 years, you might not even be taking it out of your pocket…

September 9, 2015

“Why I love wearing hijab” (no, not me)

Ruqaiya Haris

I love this post from Ruqaiya Haris on Dazed. She’s found a way to establish her personal comfort zone around the gender gap and the oppressive male gaze, and to feel liberated and in control: She has adopted traditional Muslim hijab for daily wear. From her post:

I grew up in a fairly relaxed Muslim household, in a pretty much all-white suburb of North London. Like a lot of diaspora kids I was somewhat culturally confused, as well as totally disengaged from Islam, which I generally associated with stifling restrictions and rules. And so in my early teenage years I began to take great pleasure in rebelling against my cultural upbringing in any way I could. I partied a lot and fell into long periods of reckless highs and consuming, depressive lows. By the age of 16 I began to mature into somebody considered attractive by mainstream beauty standards, and I noticed that I was getting a lot more male attention.

But Ruqaiya came to realize that the attention she was getting was not what she really wanted: Men were treating her as a sex object—and while that was flattering on one level, it also made her feel judged by her beauty alone and not treated by men as an equal, but solely as an object of desire.

By practicing hijab and covering some of the most traditionally attractive parts of a woman, the hair and body, I feel so much more in control. I no longer feel like an object ready for public consumption.

I’ve been known to say from time to time that it amazes me how many women in the United States, demanding to be seen as equals in the workplace, will at the same time come to work in clothing often better suited for a club than an office; highlighting their attributes in tight clothing, wearing heels, using heavy makeup and “showing skin,” in a work environment where (in theory) no one is supposed to notice gender or treat a woman differently than a man wearing long slacks and a button-down shirt. In a work area, where that behavior is supposed to be frowned upon, women almost seem to be deliberately tempting men… and with their favorite bait. And I think that this dichotomy is a main reason for workplace inequality and unneeded social stress.

It’s been my opinion that if women want to be treated as equals in the workplace, as opposed to looking fashionable (which, for American women, equals male-gaze attraction), either they should dress for business—cutting back on or eliminating makeup, adopting business suits including tailored long slacks, no low-neckline/cleavage-baring tops, avoiding form-fitting clothing, heels, etc—or men should start wearing more physically revealing and tighter clothing in the office, to even the scales. (We’d probably be better off if women started covering up… but if it goes the other way, I am fully prepared to rock a Bermuda suit and crew-neck top next summer.)

A new television series, Mr. Robot, helps drive this point home. In it, Trenton, a Muslim girl and one of the hackers that works with the main character, wears hijab… as opposed to Darlene, another hacker who wears more fashionable American women’s clothing. Trenton is seen (and treated by the writers) as a hacker first, a girl second; Darlene is seen (and treated by the writers) as a girl first, a hacker second. It’s a subtle but relevant example of the difference in how women are seen and treated, depending on how sexually overt their clothing is.

(Most American television is a lot more blatant than this: A businesswoman’s implied sexual prowess is always defined by the very skin-baring workplace fashions she wears, which always include low-cut tops, tight knee-length or shorter skirts and stiletto heels; women being portrayed as not sexually active, introverted, etc wear mid- or high-neck shirts, leg-covering skirts or slacks and flat-heeled shoes. Hijab has not yet become standard wear for American TV women, but “schoolmarm” fashions are accomplishing the same thing.)

Ruqaiya’s solution for women would make great sense in the US, as well as any number of regions in the world, to better even the playing field for women in business and other social situations… and to take some of the sexual pressure off men, who are uncomfortable in a work environment where a woman can dress prettily and even alluringly, but even an innocent compliment to that co-worker can quite literally cost him his job.

Here’s hoping Ruqaiya’s style choices catch on in more (intended-to-be) gender-neutral workplaces.

September 8, 2015

Are humans “hive-minds”?

An interesting IO9 article by George Dvorsky started an equally interesting discussion on Facebook about the subject:

How Much Longer Until Humanity Becomes A Hive Mind?

Much of the IO9 discussion centered around the idea that, for humans to become a true hive-mind, physical technology would have to be added to the brain, allowing people to communicate with each other and control each others’ actions. A recent experiment where two lab rats were wired together to “share each other’s thoughts” was cited as a logical next step in creating the hive-mind. Much of the Facebook chatter agreed with this, and mostly debated when it was likely that we’d be wiring our brains to each other.

I say: Humans are already hive-minds. We have been, in fact, since we invented society.

Man has developed a collective consciousness, created through language and shared culture, and directed through communal institutions and modern telecommunications—television, radio, social media and the web. We are taught the same lessons, given the same guidance and expected to accomplish the same goals, ultimately designed to integrate our needs into those of society. We are told what threatens us and who the enemy is, and act as a unit to repel invaders to our shores and preserve our way of life.

If that’s not a collective consciousness, I don’t know what is.

Author John T. Steiner’s comment on that was:

Telecommunications isn’t the same as a collective consciousness. For that to be true our most basic and unconscious impulses must affect others.

And, in fact, they do. We respond in kind to love, hate, distrust, disgust, curiosity, suffering… our basic impulses affecting others. But more, our higher impulses also affect others, again, through telecommunication (and, to a great extent, good old-fashioned talking and listening).

Think about this: Ants, bees, etc—the original templates of what we refer to as “hive-minds”—aren’t literally conscious of each other’s minds; they respond to basic communications (mostly visual, aural and olfactory) and act according to prearranged patterns, some instinctual, some learned. And in truth, it’s not as organized as the name suggests… a great deal of it is instinctual, and just happens to work out. (The ones that didn’t work out suffered the expected Darwinian fate.)

And hive creatures don’t have some kind of telepathic warning systems: If an ant reacts negatively to its surroundings, it communicates to other ants nearby with visual, aural or olfactory signals that something is wrong, so they can avoid it. That is no different than the actions and reactions you’d see in humans.

More of humans’ actions are learned than instinctual, and we often formalize rules (as laws) to pass on to others… but we still respond to communications and act according to instinctive and/or learned patterns. And despite our individual independence, a great deal of our actions can still be boiled down to instinctive or social-based responses to communications stimuli. A check-out line, a highway or a riot are all great examples of hive minds at work. So are elections, factories, farm collectives and movie productions.

Yes, we have more autonomy than most instinct-based critters; but we are still a lot more rigidly controlled by society’s rules than even we’d like to admit. We allow society to guide our actions through risk-reward methods. When a commercial shows you a green shirt, then shows you people who look good in it, or are actually complimented, that is society telling you: “Buy that shirt, and other people will approve.” Sure, you could buy a red shirt… but in most cases, you will buy the green shirt, and look forward to the accompanying approval. You are acting in accordance with the wishes of the hive-mind.

Okay, so we don’t look like Star Trek’s Borg… but if you look again at the people who pay more attention to their cellphones than where they’re walking, listening to voices in their ears, letting social media instruct them on what TV shows to watch and what bars to drink at and which politicians to vote for and whose news is more accurate… you might start to see the real picture.

No, we don’t have wires running into our heads… but we still know what others are thinking and saying and doing, and what they want us to think and say and do. And because we (mostly) want to be a part of the greater society, we very often do them, sometimes without even a further thought about whether we should. So don’t let the missing wires fool you: Humans are a hive-mind, in full operational mode.

Taking it to the next step (and what some people are obviously thinking): Would we want to literally hand over control of others’ actions to a controller through a neural interface?

To answer this, consider: Would such a system share everyone’s input with everyone else? A million voices all talking at once in my head? No one could sort through the cacophony of minds all trying to communicate at once; that’s not going to work. The only way for that to work would be for one POV to be broadcast to everyone, with the result that people whose individual POV may have some value to a situation will simply be subsumed by the one controller’s POV, and subsequently lost. All other possible opinions… gone. Only one person’s opinion matters. And there’s absolutely no value in that. You are wasting a valuable resource… a human mind. Might as well have a robot do your work.

And it’s inefficient. Your one-POV mindcast may be actionable by the one human lemming who happens to be at the right place at the right time… but all your other lemmings, wherever they are and whatever they are doing, will be taking the same action. So, while one person is screwing in a light bulb, a hundred others are standing in the middle of the office, the McDonald’s line, the bathroom, rotating their wrists in the air like they just don’t care. Individual control is needed so individuals can realize they are not the ones standing in front of a light fixture, and they can stand down. And no one person is going to be able to send individual commands to more than a few people at a time… no future Patton is going to control his entire army from the hilltop through his mind.

So, do we want to go further? Absolutely not, there’s not a single good reason for it. We’re already the hive-minds we want to be. Want to hard-wire it in? Fine. But it won’t make your hive-mindedness any more efficient than it already is.

September 3, 2015

And the winner of the 2015 Alien August competition is…

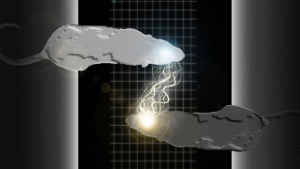

The SciFi Ideas website has announced the winner of the 2015 Alien August competition. The alien that was given the nod this year is this little bugger to the left, the Mindswarm: A species of insect-like organisms that communicate with vibrations and can join into groups to manipulate their environment and maybe even share higher concepts. The winner, as well as descriptions of the best of the entries this year, were discussed by Mark and David Ball on their podcast (listen to all of it—you should—but FYI, the part where they describe the Mindswarm starts at 25:00).

Now: Guess who’s got two thumbs and is the guy who submitted this one to the competition?

That’s right—this guy’s the winner of Alien August for 2015! There were lots of great entries, and I really am surprised and pleased to have won this year. But besides the judges, I received a lot of accolades from the members of the SciFi Ideas website for this one… people really enjoyed it! In fact, Mark and David indicated in the podcast that this was one of the few times when the judges were all in agreement as to the best submittal as well! (Gad, but it’s nice to be appreciated.)

I submitted the above graphic as well, basically a beetle with a little photoshopping done to fit the profile. It may have helped to sell the concept (Can I haz Photoshop skillz?)…

They also enjoyed another “pseudo-entry” I’d submitted by invitation from Mark and David: The Monoliths, another popular entry with members, reconceptualized what most SF fans know as the Monoliths in Arthur C. Clarke’s 2001 series, and described them as an incredibly-advanced life form that may have created itself from whatever there was before the Big Bang, and has a mysterious directive to manipulate life wherever it is found, possibly in order to guarantee its own future.

Considering that I was borrowing from a fairly popular item that’s probably copyrighted to Arthur C. Clarke and trademarked by MGM, this was not really eligible to win the contest. But the Monoliths was supposed to be an example for writers to think a bit outside the box; and it seemed to accomplish that. And as with the Mindswarm, it was just plain fun to write!

So, I’d like to thank the SciFi Ideas judges, its members, and everyone else who regularly (or even occasionally) encourages me to keep writing… because you never know what may come of it.

Looking forward to 2016!

September 2, 2015

More cover changes a’coming

To coincide with the new cover for Chasing the Light created by Farah Evers Design—and, frankly, because it’s not like I have anything better to do—I’ve committed to updating my other novel covers. Some of them are going to have the “futurist and artisan author” tag removed from above my byline… since, apparently, it isn’t impressing anyone, and may actually be confusing some.

Other covers will get a full makeover.

I haven’t fully decided which covers will get makeovers, though obviously the top contenders are those for which I never created a cover under the new design template: Evoguía, Despite Our Shadows and The Onuissance Cells are all overdue. And besides, I’ve never been happy with those covers—just looking at The Onuissance Cells makes me ridiculously unwell—and I desperately need to address that.

The first two I’ve treated were Verdant Skies and Verdant Pioneers: They got the tag removal, and a bit of adjustment in their elements. In a day or so, the books will have their new covers online and in Amazon and Barnes & Noble. The rest will come as time and inspiration allow.

August 30, 2015

Celebrity sells

I was just introduced to an independent writer, Chris Stevenson, whose blog is dedicated to improving indie writers’ odds of getting ahead. The first post I read, , describes the trend he’s noticed in publishing: That authors and celebrities with significant name recognition are landing the big publishing contracts and getting the big bucks… often before they’ve produced actual books. He singled out some examples in multiple genres, as well, and the kinds of deals they’re getting off name recognition alone. And there are plenty more examples like them: TV and movie stars, politicians, musicians, etc, who use their fame to promote books that, in many cases, they had ghost-written for them. (Yes, look up.)

Hard to argue the fact, either (as my constant holding up of “Selfish” would suggest). Names sell: the more famous, the better, and content or quality be damned. The book business has gone crazy, going for the biggest common denominator above all else in a desperate quest for all the monies it can get from a celebrity-crazed public. And, amazingly, the public seems to be completely okay with it. Makes you wonder why we want to break into this biz so bad, doesn’t it?

I spend far more time on promotion of my books than I ever spent writing them. And despite excellent reviews and a 4.4-star average in quality, I get no sales. Yet people with no demonstrated ability to write (Chris mentions Chelsea Clinton, who’s landed a book deal for a not-yet-written book solely on her relationship to Bill and Hillary Clinton) get contracts and big money. Could there be any other reason than raw celebrity?

It might be worth noting that many of these literary celebrities were made celebrities, quite literally, by their publishers. Major publishers use the media to sell their books, and part of that process is teaching their authors to embody a specific persona (best described as “rilly good author”) who can stand in front of the crowds (that the publisher arranged for through media promotion), be photogenic (for the photographers the publisher duly alerted to their presence) and sell their wares. And if you’re already a celebrity, that’s half the work done; publishers, at that point, only need to stand you in front of the right promotional venues to sell books along with your other products.

I honestly think promotion (and connections to the most popular literary outlets and reporters) is the most important service major publishers offer. They aren’t just playing the system; they are the system. They invented the system. And it’s the main reason independent authors find it so hard to penetrate the business: They are directly opposed by the people who created the literary hierarchy, built the castle and hold the keys to the gate.

And is there anything that an independent author can do to break through that impenetrable gate? Is the only answer to become a celebrity? I wish I could say different… but I can’t. A few people have learned how to get noticed on the outside of the publishing castle; ironically enough, the usual response for a successful independent is for the castle to open up and offer the indie a ridiculous pile of money to come inside and join them. Presto: No more successful independent—they’ve gone pro, no longer associated with the dung-coated peasants outside.

A few authors have achieved celebrity and success, have been offered a place inside the castle, and have declined. Can you name them? You may know one or two, but possibly none… because the promotional machine inside the castle is actively steering you away from them and into the arms of James Patterson and John Scalzi.

And other authors, like me, wonder if the only thing that’s going to work for us is donning a ridiculous costume and running around in public like an idiot in order to win notoriety, generate media buzz, and create an “in” to get our books noticed. (Go ahead, laugh. It worked for Matt Lesko, didn’t it?)

August 28, 2015

Chasing the Light: New cover

Introducing: The 2015 cover for my novel Chasing the Light, by Farah Evers Design:

Chasing the Light is a romantic adventure set against the very serious backdrop of a future in which corporate and political corruption over energy has turned America into a ticking time bomb.

As with my other novels, Chasing the Light takes pains to be as realistic and believable as possible—this could very well be America’s energy situation in the near future. The technology that may save us, as depicted in the novel, is also very real and not too far away. The other technologies, such as the self-driving vehicles, ID systems and personal electronic devices, are also right around the corner (or, in some cases since this book was originally written, pretty much here).

The American energy situation may be the backdrop to the action, but it is the main character’s quest to find the girl he loves, start a business and carve out a life for themselves that is its focus. Chasing the Light shows the reader how far people will go for love, and why it’s worth every bit of the effort.

Chasing the Light, newly edited and proofed to go with the new cover, is available at Amazon and Barnes & Noble.