Jeremy Keith's Blog, page 93

December 17, 2015

Pseudo and pseudon’t

I like CSS pseudo-classes. They come in handy for adding little enhancements to interfaces based on interaction.

Take the form-related pseudo-classes, for example: :valid, :invalid, :required, :in-range, and many more.

Let’s say I want to adjust the appearance of an element based on whether it has been filled in correctly. I might have an input element like this:

Then I can write some CSS to put a green border on it once it meets the minimum requirements for validity:

input:valid {
    border: 1px solid green;
}

That works, but somewhat annoyingly, the appearance will change while the user is still typing in the field (as soon as the user types an @ symbol, the border goes green). That can be distracting, or downright annoying.

I only want to display the green border when the input is valid and the field is not focused. Luckily for me, those last two words (“not focused”) map nicely to some more pseudo-classes, :not and :focus:

input:not(:focus):valid {
    border: 1px solid green;
}

If I want to get really fancy, I could display an icon next to form fields that have been filled in. But to do that, I’d need more than a pseudo-class; I’d need a pseudo-element, like ::after:

input:not(:focus):valid::after {
    content: '✓';
}

…except that won’t work. It turns out that you can’t add generated content to replaced elements like form fields. I’d have to add a regular element into my markup, like this:

So I could style it with:

input:not(:focus):valid + span::after {
    content: '✓';
}

But that feels icky.

December 15, 2015

Shadows and smoke

When I wrote about a year of learning with Charlotte, I made an off-hand remark in parentheses:

Hiring Charlotte was an experiment for Clearleft: could we hire someone in a “junior” position, and then devote enough time and resources to bring them up to a “senior” level? (those quotes are air quotes: I find the practice of labelling people or positions “junior” or “senior” to be laughably reductionist; you might as well try to divide the entire web into “apps” and “sites”).

It breaks my heart to see so many of my colleagues prefix their job titles with “senior” (not least because it becomes completely meaningless when every single Visual Designer is also a “Senior Visual Designer”).

I remember being at a conference after-party a few years ago chatting to a very talented front-end developer. She wasn’t happy with where she was working. I advised her to get a job somewhere else. After all, she lived and worked in San Francisco, where her talents are in high demand. But she was hesitant.

“They’ve promised me that in a few more months, my job title would become ‘Senior Developer’”, she said. “Ah, right,” I said, “and what happens then?” “Well”, she said, “I get to have the word ‘senior’ on my résumé.” That was it. No pay rise. No change in responsibilities. Just a word on a piece of paper.

I had always been suspicious of job titles, but that exchange put me over the edge. Job titles can be downright harmful.

Dan recently wrote about the importance of job titles. I love Dan, but I couldn’t disagree with him more in this instance.

He cites two situations where he believes job titles have value:

Your title tells your colleagues how to interact with you.

No. Talking to your colleagues tells your colleagues how to interact with you. Job titles attempt to short-cut that. They do a terrible job of it.

What you need to know are the verbs that your colleagues are adept in: designing, developing, thinking, communicating, facilitating …all of that gets squashed down into one reductionist noun like “Copywriter” or “Designer”.

At Clearleft, we’ve recently started kicking off projects with an exercise called “Fuzzy Edges” that Boxman has been refining. In it, we look ahead to all the upcoming project roles (e.g. “Who will lead playbacks and demos?”, “Who will run stakeholder interviews?”, “Who will lead design direction?”). Together, everyone on the project comes to a consensus on who has which roles.

It’s really, really important to clarify these roles at the start of each project, and it’s exactly the kind of thing that can’t be summed up in a job title. In fact, the existence of job titles can lead to harmful assumptions like “Oh, I figured you were leading playbacks and demos!” or “Oh, I assumed they were running stakeholder interviews!”, or worse: “Hey, you can’t lead design direction because that’s not in your job title!”

The role assignments can vary hugely from project to project, which is great. People are varied and multi-faceted. Trying to force the same people into the same roles over and over again would be demoralising and counter-productive. I fear that’s exactly what job titles do: they reinforce barriers.

Here’s the second reason Dan gives for the value of job titles:

Your title tells your clients how to interact with you.

Again, no. Talking to your clients tells your clients how to interact with you.

Dan illustrates his point by recounting a tale of deception, demonstrating that a well-placed lie about someone’s job title can mollify the kind of people who place great stock in job titles. That’s not solving the real problem. Again, while job titles might appear to be shortcuts to a shared understanding, they’re actually more like façades covering up trapdoors.

In recounting the perceived value of job titles, there’s an assumption that the titles were arrived at fairly. If someone’s job title is “Senior Designer” and someone’s job title is “Junior Designer”, then the senior person must be the better, more experienced designer, right?

But that isn’t always the case. And that’s when job titles go from being silly pointless phrases to being downright damaging, causing real harm.

Over on Rands in Repose, there’s a great post called Titles are Toxic. His experience mirrors mine:

Never in my life have I ever stared at a fancy title and immediately understood the person’s value. It took time. I spent time with those people – we debated, we discussed, we disagreed – and only then did I decide: “This guy… he really knows his stuff. I have much to learn.” In Toxic Title Douchebag World, titles are designed to document the value of an individual sans proof. They are designed to create an unnecessary social hierarchy based on ego.

See? There’s no shortcut for talking to people. Job titles are an attempt to cut out one of the most important aspects of humans working together.

The unspoken agreement was that these titles were necessary to map to a dimwitted external reality where someone would look at a business card and apply an immediate judgement on ability based on title. It’s absurd when you think about it – the fact that I’d hand you a business card that read “VP” and you’d leap to the immediate assumption: “Since his title is VP, he must be important. I should be talking to him”. I understand this is how a lot of the world works, but it’s precisely this type of reasoning that makes titles toxic.

So it’s not even that I think that job titles are bad at what they’re trying to do …I think that what they’re trying to do is bad.

December 11, 2015

Where to start?

A lot of the talks at this year’s Chrome Dev Summit were about progressive web apps. This makes me happy. But I think the focus is perhaps a bit too much on the “app” part and not enough on “progressive”.

What I mean is that there’s an inevitable tendency to focus on technologies (Service Workers, HTTPS, manifest files) and not so much on the approach. That’s understandable. The technologies are concrete, demonstrable things, whereas approaches, mindsets, and processes are far more nebulous in comparison.

Still, I think that the most important facet of building a robust, resilient website is how you approach building it rather than what you build it with.

Many of the progressive app demos use server-side and client-side rendering, which is great …but that aspect tends to get glossed over:

Browsers without service worker support should always be served a fall-back experience. In our demo, we fall back to basic static server-side rendering, but this is only one of many options.

I think it’s vital to not think in terms of older browsers “falling back” but to think in terms of newer browsers getting a turbo-boost. That may sound like a nit-picky semantic subtlety, but it’s actually a radical difference in mindset.

Many of the arguments I’ve heard against progressive enhancement (like Tom’s presentation at Responsive Field Day) talk about the burdensome overhead of having to bolt on functionality for older or less-capable browsers (even Jake has done this). But the whole point of progressive enhancement is that you start with the simplest possible functionality for the greatest number of users. If anything gets bolted on, it’s the more advanced functionality for the newer or more capable browsers.

So if your conception of progressive enhancement is that it’s an added extra, I think you really need to turn that thinking around. And that’s hard. It’s hard because you need to rewire some well-engrained pathways.

There is some precedent for this though. It was really, really hard to convince people to stop using tables for layout and start using CSS instead. That was a tall order: completely change the way you approach building on the web. But eventually we got there.

When Ethan came out with Responsive Web Design, it was an equally difficult pill to swallow, not because of the technologies involved (media queries, percentages, etc.) but because of the change in thinking that was required. But eventually we got there.

These kinds of fundamental changes are inevitably painful …at first. After years of building websites using tables for layout, creating your first CSS-based layout was demoralisingly difficult. But the second time was a bit easier. And the third time, easier still. Until eventually it just became normal.

Likewise with responsive design. After years of building fixed-width websites, trying to build in a fluid, flexible way was frustratingly hard. But the second time wasn’t quite as hard. And the third time …well, eventually it just became normal.

So if you’re used to thinking of the all-singing, all-dancing version of your site as the starting point, it’s going to be really, really hard to instead start by building the most basic, accessible version first and then work up to the all-singing, all-dancing version …at first. But eventually it will just become normal.

For now, though, it’s going to take work.

The recent redesign of Google+ is a true case study in building a performant, responsive, progressive site:

With server-side rendering we make sure that the user can begin reading as soon as the HTML is loaded, and no JavaScript needs to run in order to update the contents of the page. Once the page is loaded and the user clicks on a link, we do not want to perform a full round-trip to render everything again. This is where client-side rendering becomes important – we just need to fetch the data and the templates, and render the new page on the client. This involves lots of tradeoffs; so we used a framework that makes server-side and client-side rendering easy without the downside of having to implement everything twice – on the server and on the client.

This took work. Had they chosen to rely on client-side rendering alone, they could have built something quicker. But I think it was worth laying that solid foundation. And the next time they need to build something this way, it’s going to be less work. Eventually it just becomes normal.

But it all starts with thinking of the server-side rendering as the default. Server-side rendering is not a fallback; client-side rendering is an enhancement.

That’s exactly the kind of mindset that enables Jack Franklin to build robust, resilient websites:

Now we’ll build the React application entirely on the server, before adding the client-side JavaScript right at the end.

I had a chance to chat briefly with Jack at the Edge conference in London and I congratulated him on the launch of a Go Cardless site that used exactly this technique. He told me that the decision to flip the switch and make it act as a single page app came right at the end of the project. Server-side rendering was the default; client-side rendering was added later.

The key to building modern, resilient, progressive sites doesn’t lie in browser technologies or frameworks; it lies in how we think about the task at hand; how we approach building from the ground up rather than the top down. Changing the way we fundamentally think about building for the web is inevitably going to be challenging …at first. But it will also be immensely rewarding.

December 2, 2015

A year of learning

An anniversary occurred last week that I don’t want to let pass by unremarked. On November 24th of last year, I made this note:

Welcoming @LotteJackson on her first day at @Clearleft.

Charlotte’s start at Clearleft didn’t just mark a new chapter for her; it also marked a big change for me. I’ve spent the last year being Charlotte’s mentor. I had no idea what I was doing.

Lyza wrote a post about mentorship a while back that really resonated with me:

I had no idea what I was doing. But I was going to do it anyway.

Hiring Charlotte coincided with me going through one of those periods when I ask myself, “Just what is it that I do anyway?” (actually, that’s pretty much a permanent state of being but sometimes it weighs heavier than others).

Let me back up a bit and explain how Charlotte ended up at Clearleft in the first place.

Clearleft has always been a small agency, deliberately so. Over the course of ten years, we might hire one, maybe two people a year. Because of that small size, anyone joining the company had to be able to hit the ground running. To put it into jobspeak, we could only hire “senior” level people; we just didn’t have the resources to devote to training up anyone less experienced.

That worked pretty well for a while but as the numbers at Clearleft began to creep into the upper teens, it became clear that it wasn’t a sustainable hiring policy: most of the “senior” people are already quite happily employed. So we began to consider the possibility of taking on somebody in a “junior” role. But we knew we could only do that if it were somebody else’s role to train them. Like I said, this was ’round about the time I was questioning exactly what my role was anyway, so I felt ready to give it a shot.

Hiring Charlotte was an experiment for Clearleft: could we hire someone in a “junior” position, and then devote enough time and resources to bring them up to a “senior” level? (those quotes are air quotes: I find the practice of labelling people or positions “junior” or “senior” to be laughably reductionist; you might as well try to divide the entire web into “apps” and “sites”).

Well, it might only be one data point, but this experiment was a resounding success. Charlotte is a fantastic front-end developer.

Now I wish I could take credit for that, but I can’t. I’ve done my best to support, encourage, and teach Charlotte but none of that would matter if it weren’t for Charlotte’s spirit: she’s eager to learn, eager to improve, and crucially, eager to understand.

Christian wrote something a while back that stuck in my mind. He talked about the Full Stack Overflow Developer:

Full Stack Overflow developers work almost entirely by copying and pasting code from Stack Overflow instead of understanding what they are doing. Instead of researching a topic, they go there first to ask a question hoping people will just give them the result.

When we were hiring for the junior developer role that Charlotte ended up filling, I knew exactly what I didn’t want and Christian described it perfectly.

Conversely, I wasn’t looking for someone with plenty of knowledge; after all, knowledge was one of the things that I could perhaps pass on (stop sniggering). As Philip Walton puts it:

The longer I work on the web, the more I realize that what separates the good people from the really good people isn’t what they know; it’s how they think. Obviously knowledge is important (critical in some cases) but in a field that changes so quickly, how you go about acquiring that knowledge is always going to be more important (at least in the long term) than what you know at any given time. And perhaps most important of all: how you use that knowledge to solve everyday problems.

What I was looking for was a willingness (nay, an eagerness) to learn. That’s what I got with Charlotte. She isn’t content to copy and paste a solution; she wants to know why something works.

So a lot of my work for the past year has been providing a framework for Charlotte to learn within. It’s been less of me teaching her, and more of me pointing her in the right direction to teach herself.

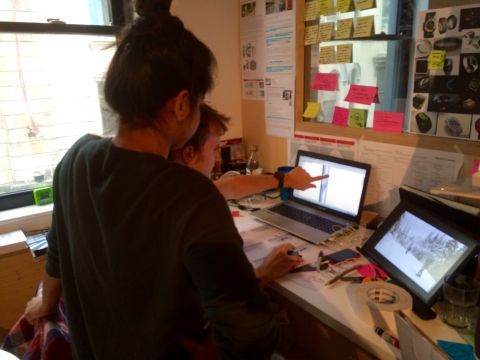

There has been some traditional instruction along the way: code reviews, pair programming, and all of that stuff, but often the best way for Charlotte to learn is for me to get out of the way. Still, I’m always on hand to try to answer any questions or point her in the direction of a solution. I think sometimes Charlotte might regret asking me things, like a simple question about the box model.

I’ve really enjoyed those moments of teaching. I haven’t always been good at it. Sometimes, especially at the beginning, I’d lose patience. When that happened, I’d basically be an asshole. Then I’d realise I was being an asshole, apologise, and try not to do it again. Over time, I think I got better. I hope that those bursts of assholery are gone for good.

Now that Charlotte has graduated into a fully-fledged front-end developer, it’s time for me to ask myself once again, “Just what is it that I do anyway?”

But at least now I have some more understanding about what I like to do. I like to share. I like to teach.

I can very much relate to Chen Hui Jing’s feelings:

I suppose for some developers, the job is just a means to earn a paycheck. But I truly hope that most of us are in it because this is what we love to do. And that we can raise awareness amongst developers who are earlier in their journey than ourselves on the importance of best practices. Together, we can all contribute to building a better web.

I’m writing this to mark a rewarding year of teaching and learning. Now I need to figure out how to take the best parts of that journey and apply it to the ongoing front-end development work at Clearleft with Mark, Graham, and now, Charlotte.

I have no idea what I’m doing. But I’m going to do it anyway.

November 29, 2015

Cache-limiting in Service Workers …again

Okay, so remember when I was talking about cache-limiting in Service Workers?

It wasn’t quite working:

The cache-limiting seems to be working for pages. But for some reason the images cache has blown past its allotted maximum of 20 (you can see the items in the caches under the “Resources” tab in Chrome under “Cache Storage”).

This is almost certainly because I’m doing something wrong or have completely misunderstood how the caching works.

Sure enough, I was doing something wrong. Thanks to Brandon Rozek and Jonathon Lopes for talking me through the problem.

In a nutshell, I’m mixing up synchronous instructions (like “delete the first item from a cache”) with asynchronous events (pretty much anything to do with fetching and caching with Service Workers).

Instead of trying to clean up a cache at the same time as I’m adding a new item to it, it’s better for me to have a clean-up function to run at a different time. So I’ve written that function:

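var trimCache = function (cacheName, maxItems) {
    caches.open(cacheName)
        .then(function (cache) {
            cache.keys()
                .then(function (keys) {
                    if (keys.length > maxItems) {
                        // Pass a function to then(); calling trimCache() inline
                        // would run it before the delete has finished.
                        cache.delete(keys[0])
                            .then(function () {
                                trimCache(cacheName, maxItems);
                            });
                    }
                });
        });
};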
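As a way of checking that recursion outside a browser, here is a minimal sketch that runs the same trimming logic against an in-memory stand-in for a cache. The makeFakeCache helper and the promise-returning trimCacheSketch are hypothetical names made up for illustration, not part of the real Service Worker. The detail that matters is that then() is handed a function to call later, rather than the result of calling the trimming function straight away.

```javascript
// Hypothetical in-memory stand-in for a Cache object.
// It only mimics keys() and delete(), each returning a promise.
function makeFakeCache(urls) {
  var items = urls.slice();
  return {
    keys: function () {
      return Promise.resolve(items.slice());
    },
    delete: function (key) {
      var index = items.indexOf(key);
      if (index > -1) {
        items.splice(index, 1);
      }
      return Promise.resolve(index > -1);
    }
  };
}

// Same idea as trimCache, but it takes the cache object directly and
// returns a promise that resolves with the keys that are left.
function trimCacheSketch(cache, maxItems) {
  return cache.keys().then(function (keys) {
    if (keys.length > maxItems) {
      // Delete the oldest entry first, and only then check again.
      return cache.delete(keys[0]).then(function () {
        return trimCacheSketch(cache, maxItems);
      });
    }
    return keys;
  });
}
```

Calling trimCacheSketch(makeFakeCache(['/a', '/b', '/c']), 2) resolves with the two most recently added keys, '/b' and '/c'.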

But now the question is …when should I run this function? What’s a good event to trigger a clean-up? I don’t think the activate event is going to work. I probably want something like background sync but I don’t think that’s quite ready for primetime yet.

In the meantime, if you can think of a good way of doing a periodic clean-up like this, please let me know.

Anyone? Anyone? Bueller?

In other Service Worker news, I’ve added a basic Service Worker to The Session. It caches static assets (CSS and JavaScript) and keeps another cache of site section index pages topped up. If the network connection drops (or the server goes down), there’s an offline page that gives a few basic options. Nothing too advanced, but better than nothing.

November 28, 2015

Metadata markup

When something on your website is shared on Twitter or Facebook, you probably want a nice preview to appear with it, right?

For Twitter, you can use Twitter cards: a collection of meta elements you place in the head of your document.

For Facebook, you can use the grandiosely-titled Open Graph protocol: a collection of meta elements you place in the head of your document.

What’s that you say? They sound awfully similar? Why, no! I mean, just look at the difference. Here’s how you’d mark up a blog post for Twitter:

Whereas here’s how you’d mark up the same blog post for Facebook:

See? Completely different.

Okay, I’ll attempt to dial down my sarcasm, but I find this wastage annoying. It adds unnecessary complexity, which in turn, I suspect, puts a lot of people off even trying to implement this stuff. In short: 927.

We’ve seen this kind of waste before. I remember when Netscape and Microsoft were battling it out in the browser wars: Internet Explorer added a proprietary acronym element, while Netscape added the abbr element. They both basically did the same thing. For years, Internet Explorer refused to implement the abbr element out of sheer spite.

A more recent example of the negative effects of competing standards was on display at this year’s Edge conference in London. In a session on front-end data, Nolan Lawson decried the fact that developers weren’t making more use of the client-side storage options available in browsers today. After all, there are so many to choose from: LocalStorage, WebSQL, IndexedDB…

(Hint: if developers aren’t showing much enthusiasm for the latest and greatest API which is sooooo much better than the previous APIs they were also encouraged to use at the time, perhaps their reticence is understandable.)

Anyway, back to metacrap.

Matt has written a guide to what you need to do in order to get a preview of your posts to appear in Slack. Fortunately the answer is not yet another collection of meta elements to place in the head of your document. Instead, Slack piggybacks on the existing combatants: oEmbed, Twitter Cards, and Open Graph.

So to placate both Twitter and Facebook (with Slack thrown in for good measure), your metadata markup is supposed to look something like this:

There are two things on display here: redundancy, and also, redundancy.

Now the eagle-eyed amongst you will have spotted a crucial difference between the Twitter metacrap and the Facebook metacrap. The Twitter metacrap uses the name attribute on the meta element, whereas the Facebook metacrap uses the property attribute. Technically, there is no property attribute in HTML; it’s an RDFa thing. But the fact that they’re using two different attributes means that we can squish the meta elements together like this:

There. I saved you at least a little bit of typing.
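To make the squishing concrete, here is a rough sketch of a helper that writes out one meta element per field, carrying both the name attribute (for Twitter) and the property attribute (for Open Graph). The function names sharedMetaTags and escapeAttribute are invented for this illustration, and the sketch assumes it is only given fields that exist under the same suffix in both vocabularies, such as title, description, image, and url.

```javascript
// Escape characters that would break out of a double-quoted attribute value.
function escapeAttribute(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/"/g, '&quot;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;');
}

// Build one meta element per field: name="twitter:…" for Twitter,
// property="og:…" for Open Graph, and a single shared content value.
function sharedMetaTags(fields) {
  return Object.keys(fields).map(function (key) {
    return '<meta name="twitter:' + key + '" property="og:' + key +
      '" content="' + escapeAttribute(fields[key]) + '">';
  }).join('\n');
}
```

Passing in an object like { title: 'Metadata markup' } produces a single meta element with name="twitter:title", property="og:title", and the title as its content. Anything that only one of the two services understands (twitter:card, say) would still need its own element.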

The metacrap situation is even more ridiculous for “add to homescreen”/“pin to start”/whatever else browser makers can’t agree on…

Microsoft:

Apple:

(Repeat four or five times with different variations of icon sizes, and be sure to create icons with new sizes after every. single. Apple. keynote.)

Fortunately Google, Opera, and Mozilla appear to be converging on using an external manifest file:

Perhaps our long national nightmare of balkanised metacrap is finally coming to an end, and clearer heads will prevail.

November 21, 2015

Notice

We’ve been doing a lot of soul-searching at Clearleft recently; examining our values; trying to make implicit unspoken assumptions explicit and spoken. That process has unearthed some activities that have been at the heart of our company from the very start: sharing, teaching, and nurturing. After all, Clearleft would never have been formed if it weren’t for the generosity of people out there on the web sharing with myself, Andy, and Richard.

One of the values/mottos/watchwords that’s emerging is “Share what you learn.” I like that a lot. It echoes the original slogan of the World Wide Web project, “Share what you know.” It’s been a driving force behind our writing, speaking, and events.

In the same spirit, we’ve been running internship programmes for many years now. John is the latest of a long line of alumni that includes Anna, Emil, and James.

By the way (and this should go without saying, but apparently it still needs to be said), the internships are always, always paid. I know that there are other industries where unpaid internships are the norm. I’ve even heard otherwise-intelligent people defend those unpaid internships for the experience they offer. But what kind of message does it send to someone about the worth of their work when you withhold payment for it? Our industry is young. Let’s not fall foul of the pernicious traps set by older industries that have habitualised exploitation.

In the past couple of years, Andy concocted a new internship scheme:

So this year we decided to try a different approach by scouring the end of year degree shows for hot new talent. We found them not in the interaction courses as we’d expected, but from the worlds of Product Design, Digital Design and Robotics. We assembled a team of three interns, with a range of complementary skills, gave them a space on the mezzanine floor of our new building, and set them a high level brief.

The first such programme resulted in Chüne. The latest Clearleft internship project has just come to an end. The result is Notice.

This time ‘round, the three young graduates were Chloe, Chris and Monika. They each have differing but complementary skill sets: Chloe is a user interface designer; Chris is a product designer; Monika is an artist who knows her way around hardware hacking and coding.

Once again, they were set a fairly loose brief. They should come up with something “to enrich the lives of local residents” and it should have a physical and digital component to it.

They got stuck in to researching and brainstorming ideas. At the end of each week, we’d all gather together to get a playback of what they were coming up with. It was at these playbacks that the interns were introduced to a concept that they will no doubt encounter again in their professional lives: seagulling AKA the swoop and poop. For once, it was the Clearlefties who were in the position of being swoop-and-poopers, rather than swoop-and-poopies.

As the midway point of the internship approached, there were some interesting ideas, but no clear “winner” to pursue. Something else was happening around this time too: dConstruct 2015.

The interns pitched in with helping out at the event, and in return, we kidnapped some of the speakers (namely John Willshire and Chris Noessel) to offer them some guidance.

There was also plenty of inspiration to be had from the dConstruct talks themselves. One talk in particular struck a chord: Dan Hill’s The City Of Things …especially the bit where he railed against the terrible state of planning application notices:

Most of the time, it ends up down the bottom of the lamppost, soiled and soggy and forgotten. This should be an amazing thing!

Hmm… sounds like something that could enrich the lives of local residents.

Not long after that, Matt Webb came to visit. He encouraged the interns to focus in on just the two ideas that really excited them rather than the 5 or 6 that they were considering. So at the next playback, they presented two potential projects, one about biking and the other about city planning. They put it to a vote and the second project won by a landslide.

That was the genesis of Notice. After that, they pulled out all the stops.

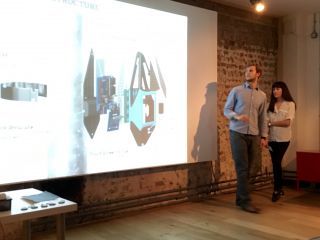

Not content with designing one device, they came up with a range of three devices to match the differing scope of planning applications. They set about making a working prototype of the device intended for the most common applications.

Last week marked the end of the project and the grand unveiling.

They’ve done a great job. All the details are on the website, including this little note I wrote about the project:

This internship programme was an experiment for Clearleft. We wanted to see what would happen if you put three talented young people in a room together for three months to work on a fairly loose brief. Crucially, we wanted to see work that wasn’t directly related to our day-to-day dealings with web design.

We offered feedback and advice, but we received so much more in return. Monika, Chloe, and Chris brought an energy and enthusiasm to the Clearleft office that was invigorating. And the quality of the work they produced together exceeded our wildest expectations.

We hereby declare this experiment a success!

Personally, I think the work they’ve produced is very strong indeed. It would be a shame for it to end now. Perhaps there’s a way that it could be funded for further development. Here’s hoping.

As impressed as I am with the work, I’m even more impressed with the people. They’re not just talented and hard-working; they’re a jolly nice bunch to have around.

November 19, 2015

Cache-limiting in Service Workers

When I was documenting my first Service Worker I mentioned that every time a user requests a page, I store that page in a cache for later (offline) use:

Right now I’m stashing any HTML pages the user visits into the cache. I don’t think that will get out of control; I imagine most people only ever visit just a handful of pages on my site. But there’s the chance that the cache could get quite bloated. Ideally I’d have some way of keeping the cache nice and lean.

I was thinking: maybe I should have a separate cache for HTML pages, and limit the number in that cache to, say, 20 or 30 items. Every time I push something new into that cache, I could pop the oldest item out.

I could imagine doing something similar for images: keeping a cache of just the most recent 10 or 20.

Well I’ve done that now. Here’s the updated Service Worker code.

I’ve got a function in there called stashInCache that takes a few arguments: which cache to use, the maximum number of items that should be in there, the request (URL), and the response:

var stashInCache = function(cacheName, maxItems, request, response) {
    caches.open(cacheName)
        .then(function (cache) {
            cache.keys()
                .then(function (keys) {
                    if (keys.length < maxItems) {
                        cache.put(request, response);
                    } else {
                        cache.delete(keys[0])
                            .then(function() {
                                cache.put(request, response);
                            });
                    }
                });
        });
};

It looks to see if the current number of items in the cache is less than the specified maximum:

if (keys.length < maxItems)

If so, go ahead and cache the item:

cache.put(request, response);

Otherwise, delete the first item from the cache and then put the item in the cache:

cache.delete(keys[0])
    .then(function() {
        cache.put(request, response);
    });

For HTML requests, I limit the cache to 35 items:

var copy = response.clone();
var cacheName = version + pagesCacheName;
var maxItems = 35;
stashInCache(cacheName, maxItems, request, copy);
return response;

For images, I’m limiting the cache to 20 items:

var copy = response.clone();
var cacheName = version + imagesCacheName;
var maxItems = 20;
stashInCache(cacheName, maxItems, request, copy);
return response;

Here’s my updated Service Worker.

The cache-limiting seems to be working for pages. But for some reason the images cache has blown past its allotted maximum of 20 (you can see the items in the caches under the “Resources” tab in Chrome under “Cache Storage”).

This is almost certainly because I’m doing something wrong or have completely misunderstood how the caching works. If you can spot what I’m doing wrong, please let me know.
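One rough way to poke at this outside the browser: the sketch below replays the stashInCache logic against an in-memory stand-in for a cache. Everything in it (makeFakeCache, stash, simulateBurst, simulateOneAtATime) is a hypothetical name invented for the experiment, and the fake cache is only an assumption about how the real one behaves. The hunch it tests is that when a burst of requests all read cache.keys() before any of them has written anything, every one of them sees a cache with room to spare.

```javascript
// Hypothetical in-memory stand-in for a Cache object (keys, put, delete only).
function makeFakeCache() {
  const store = new Map();
  return {
    keys: function () {
      // Snapshot of the keys at the moment keys() is called.
      return Promise.resolve(Array.from(store.keys()));
    },
    put: function (request, response) {
      store.set(request, response);
      return Promise.resolve();
    },
    delete: function (request) {
      return Promise.resolve(store.delete(request));
    },
    size: function () {
      return store.size;
    }
  };
}

// The same check-then-put logic as stashInCache, returning its promise.
function stash(cache, maxItems, request, response) {
  return cache.keys().then(function (keys) {
    if (keys.length < maxItems) {
      return cache.put(request, response);
    }
    return cache.delete(keys[0]).then(function () {
      return cache.put(request, response);
    });
  });
}

// Fire every stash at once, the way a page full of images might.
function simulateBurst(count, maxItems) {
  const cache = makeFakeCache();
  const pending = [];
  for (let i = 0; i < count; i++) {
    pending.push(stash(cache, maxItems, '/image-' + i + '.png', 'response ' + i));
  }
  return Promise.all(pending).then(function () {
    return cache.size();
  });
}

// Wait for each stash to finish before starting the next one.
function simulateOneAtATime(count, maxItems) {
  const cache = makeFakeCache();
  let chain = Promise.resolve();
  for (let i = 0; i < count; i++) {
    chain = chain.then(function () {
      return stash(cache, maxItems, '/image-' + i + '.png', 'response ' + i);
    });
  }
  return chain.then(function () {
    return cache.size();
  });
}
```

With these stand-ins, simulateBurst(30, 20) resolves with 30, while simulateOneAtATime(30, 20) resolves with 20. That is only a simulation, but it would fit with images (which tend to arrive in bunches) overshooting while pages (requested one at a time) stay within bounds.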

November 17, 2015

Brighton device lab

People of Brighton (and environs), I have a reminder for you. Did you know that there is an open device lab in the Clearleft office?

That’s right! You can simply pop in at any time and test your websites on Android, iOS, Windows Phone, Blackberry, Kindles, and more.

The address is 68 Middle Street. Ring the “Clearleft” buzzer and say you’re there to use the device lab. There’ll always be somebody in the office. They’ll buzz you in and you can take the lift to the first floor. No need to make a prior appointment; feel free to swing by whenever you like.

There is no catch. You show up, test your sites on whatever devices you want, and maybe even stick around for a cup of tea.

Tell your friends.

I was doing a little testing this morning, helping Charlotte with a pesky bug that was cropping up on an iPad running iOS 8. To get to the bottom of the issue, I needed to be able to inspect the DOM on the iPad. That turns out to be fairly straightforward (as of iOS 6):

Plug the device into a USB port on your laptop using a lightning cable.

Open Safari on the device and navigate to the page you want to test.

Open Safari on your laptop.

From the “Develop” menu in your laptop’s Safari, select the device.

Use the web inspector on your laptop’s Safari to inspect elements to your heart’s content.

It’s a similar flow for Android devices:

Plug the device into a USB port on your laptop.

Open Chrome on the device and navigate to the page you want to test.

Open Chrome on your laptop.

Type chrome://inspect into the URL bar of Chrome on your laptop.

Select the device.

On the device, grant permission (a dialogue will have appeared by now).

Use developer tools on your laptop’s Chrome to inspect elements to your heart’s content.

November 16, 2015

Full Meaning Ampersand

In the space of one week, Brighton played host to three excellent conferences:

FF Conf on Friday, November 6th,

Meaning on Thursday, November 12th, and

Ampersand on Friday, November 13th.

I made it to two of the three; alas, I couldn’t make it to Meaning this year because it clashed with Richard’s superb workshop on Responsive Web Typography.

FF Conf and Ampersand were both superb. Despite having very different subject matter, the two events have a lot in common. They’re both affordable, one-day, single-track, focused gatherings.

Both events really benefit from having a mastermind overseeing the line-up: Remy in the case of FF Conf, and Richard in the case of Ampersand. That really paid off. Both events were superbly curated, with a diverse mix of speakers and topics.

It was really interesting to see both conferences break out of the boundary of what happens inside web browsers. At FF Conf, we were treated to talks on linguistics and inclusivity. At Ampersand, we enjoyed talks on physiology and culture. But of course we also had the really deep dives into the minutest details of JavaScript, SVG, typography, and layout.

Videos will be available from FF Conf, and audio will be available from Ampersand. Be sure to check them out once they’re released.
