Paul Gilster's Blog, page 40

November 12, 2021

TESS: An Unusual Circumbinary Discovery

Circumbinary planets are those that orbit two stars, a small but growing category of worlds — we’ve detected some 14 thus far, thanks to Kepler’s good work, and that of the Transiting Exoplanet Survey Satellite (TESS). The latest entry, TIC 172900988, illustrates the particular challenge such planets represent. Transit photometry is a standard method for finding planets, detecting the now familiar drop in starlight as the planet moves between us and the surface of the host star. Kepler found thousands of exoplanets this way. But when two stars are involved, things get complicated.

Image: The newly discovered planet, TIC 172900988b, is roughly the radius of Jupiter, and several times more massive, but it orbits its two stars in less than one year. This world is hot and unlike anything in our Solar System. Credit: PSI/Pamela L. Gay.

Three transits are required to determine the orbital path of a planet. For us to make a detection, a circumbinary planet will have to transit both stars, but the timing of the transits can vary. The planet may transit the first star, then the second, before returning to transit the first. Nader Haghighipour (Planetary Science Institute) points out that the orbital period of a circumbinary planet will always be much longer than the orbital period of the binary star, and that means detecting three transits will be problematic for a telescope like TESS, which observes each portion of sky for only 27 days.

The paper on the discovery of TIC 172900988b lays out these problems:

Finding transiting planets orbiting around binary stars is much more difficult than around single stars. The transits are shallower (due to the constant ‘third-light’ dilution from the binary companion), noisier (due to starspots and stellar activity from two stars), and can be blended with the stellar eclipses. This difficulty is greatly compounded when the observations cover a single conjunction and, even if multiple transits are detected as in the system presented here, they are neither periodic, nor have the same depth and duration… The transit times and shapes depend on the orientation and motion of the binary stars and of the CBP [circumbinary planet] at the observed times. The complexity of such transits is both a curse and a blessing…

A blessing, the authors argue, because such a detection yields information “richer than what can be obtained from a single transit of a single-star planet,” offering better estimation of the planet’s orbital period.

Image: A newly discovered planet was observed in the system TIC 172900988. In TESS data, it passed in front of the primary star (right) and 5 days later (shown) passed in front of the second star (left). These stars are just over 30% larger than the Sun, and differ very little in size. Credit: PSI/Pamela L. Gay.

Haghighipour is part of a team of astronomers with circumbinary planet experience; he also contributed to a 2020 paper in The Astronomical Journal that produced a technique for discovering circumbinary planets using only two transits, one across each star during the same conjunction. It was this method, ideally suited for TESS, that led the same team to make the just announced discovery of TIC 172900988b. This is the first TESS circumbinary planet to be found using these methods.

TIC 172900988b takes roughly 200 days to complete a full orbit of the binary system. The planet is a gas giant of Jupiter size, the most massive transiting circumbinary planet found thus far. The team, led by Veselin B. Kostov (SETI Institute), observed it transit the primary star, followed five days later by a transit of the secondary, as the binary eclipsed itself over a 20-day orbit.
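A little arithmetic shows why the classic three-transit requirement is out of reach for TESS here. The sketch below is illustrative only: it pretends the transits are strictly periodic (which, as the paper stresses, circumbinary transits are not), it uses the rounded 200-day period and the 27-day TESS dwell time quoted above, and the function name is hypothetical.

```python
def baseline_for_transits(period_days: float, n_transits: int) -> float:
    """Minimum observing baseline needed to catch n strictly periodic transits."""
    if n_transits < 1:
        raise ValueError("n_transits must be at least 1")
    return (n_transits - 1) * period_days

PLANET_PERIOD_DAYS = 200.0  # rounded planetary period quoted in the text
TESS_SECTOR_DAYS = 27.0     # how long TESS watches one portion of sky
TRANSIT_GAP_DAYS = 5.0      # spacing of the two transits in one conjunction

needed = baseline_for_transits(PLANET_PERIOD_DAYS, 3)
sectors = needed / TESS_SECTOR_DAYS
print(f"Three transits need at least {needed:.0f} days, about {sectors:.1f} sectors")
print(f"Two transits in one conjunction fit in a sector: {TRANSIT_GAP_DAYS < TESS_SECTOR_DAYS}")
```

Under these simplifying assumptions, three transits of the same star would take more than a year of continuous coverage, while the pair of transits from a single conjunction fits comfortably inside one sector.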

The Kepler mission discovered its circumbinary worlds by finding pairs of transits during a single conjunction, making it clear that the phenomenon is common. In fact, Jean Schneider and Michel Chevreton (both at the Paris Observatory) analyzed this likely observational signal as far back as 1990 in a paper for Astronomy and Astrophysics. Now TESS has a circumbinary discovery of its own, despite its much shorter dwell time on the stars in its field. Adds Kostov:

“The occurrence of multiple closely-spaced transits during one orbit is a unique observation signature of transiting circumbinary planets. This is a geometrical phenomenon that provides a new planet detection method. The discovery of TIC 172900988b is the first demonstration that the method works.”

Image: This is Figure 5 from the paper. Caption: The photometric data shown in Figure 4 phase-folded on a linear period of P = 19.65802 days. The left panel shows the primary eclipse and the right panel shows the secondary eclipse. The different data sets are vertically offset in the lower panels for clarity. The phase change of the secondary eclipse relative to the primary—indicative of the apsidal motion of the binary—is clearly seen in the right panels. Credit: Kostov et al.
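For readers unfamiliar with the term, phase-folding simply wraps every time stamp onto a single orbital cycle so that repeated eclipses stack on top of each other. Here is a minimal sketch using the linear period from the caption; the reference epoch and the eclipse times are invented for illustration, and this is not the authors' reduction code.

```python
import numpy as np

def phase_fold(times, period, t0=0.0):
    """Return the orbital phase (fraction of a cycle) for each time stamp."""
    times = np.asarray(times, dtype=float)
    return ((times - t0) / period) % 1.0

BINARY_PERIOD_DAYS = 19.65802  # linear period from the Figure 5 caption

# Hypothetical data: three primary eclipses spaced exactly one period apart.
t0 = 100.0  # assumed reference epoch in days, for illustration only
eclipse_times = t0 + BINARY_PERIOD_DAYS * np.arange(3)

phases = phase_fold(eclipse_times, BINARY_PERIOD_DAYS, t0=t0)
# Phase is circular, so 0.9999... and 0.0 are the same point on the orbit.
distance_from_zero = np.minimum(phases, 1.0 - phases)
print(distance_from_zero)
```

If the secondary eclipse drifts in phase from one data set to the next while the primary stays put, as the caption describes, that drift is the signature of apsidal motion.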

We learn in the paper’s analysis of the detection that no further data from TESS will become available on this planet, making future study the province of other instruments. From the paper:

We note that TESS will observe the target again in Sectors 44 through 47 (2021 October to 2022 January). Unfortunately, it will miss the predicted transits for the corresponding conjunctions by several weeks. Thus follow-up observations from other instruments are key for strongly constraining the orbit and mass of the CBP [circumbinary planet]. In particular, observing the predicted 2022 February-March conjunction of the CBP is critical for solving the currently-ambiguous orbit of the planet. As a relatively bright target (V = 10.141 mag), the system is accessible for high resolution spectroscopy, e.g. Rossiter-McLaughlin effect, transit spectroscopy. TIC 172900988 demonstrates the discovery potential of TESS for circumbinary planets with orbital periods greatly exceeding the duration of the observing window.

The paper is Kostov et al., “TIC 172900988: A Transiting Circumbinary Planet Detected in One Sector of TESS Data,” The Astronomical Journal 162, No. 6 (10 November 2021), 234 (abstract / preprint).

November 10, 2021

SPARCS: Zeroing in on M-dwarf Flares

Although we’ve been talking this week about big telescopes, from extremely large designs like the Thirty Meter Telescope and the European Extremely Large Telescope to the space-based HabEx/LUVOIR descendant prioritized by Astro2020, small instruments continue to do interesting work around the edges. I just noticed a tiny one called the Star-Planet Activity Research CubeSat (SPARCS) that fills a gap in our study of M-dwarfs, those small stars whose flares are so problematic for habitability.

Under development at Arizona State University, the space-based SPARCS is just halfway into its development phase, but let’s take a look at it in light of ongoing work on M-dwarf planets, because it bodes well for turning theories about flare activity into data that can firm up our understanding. The problem is that while theoretical studies delve into ultraviolet flaring on these stars, the longest intensive UV monitoring of an M-dwarf done thus far has been a thirty-hour effort with the Hubble Space Telescope.

We need more, which is why the SPARCS idea emerged. A team of researchers led by ASU’s Evgenya Shkolnik has produced an overview of the NASA-funded mission’s science drivers and its intention of deepening our understanding of star-planet interactions. “Know thy star, know thy planet… especially in the ultraviolet (UV),” comments the team in their abstract, which also points to the necessity of data collection for these intensely studied stars, ubiquitous in the galaxy and known to host interesting planets like Proxima Centauri b.

Image: An example of M-dwarf flaring. DG CVn, a binary consisting of two red dwarf stars shown here in an artist’s rendering, unleashed a series of powerful flares seen by NASA’s Swift. At its peak, the initial flare was brighter in X-rays than the combined light from both stars at all wavelengths under typical conditions. Credit: NASA’s Goddard Space Flight Center/S. Wiessinger.

Can such a world be habitable? Recent observations have shown that flare events produce a more severe flux increase in the ultraviolet than the optical; a flare peaking on the order of 0.01x the star’s quiescent flux in the optical, write the authors, can at UV wavelengths brighten by a factor of 14000. UV ‘superflare’ events — as much as 10,000 times more energetic than the flares produced by our G-class Sun — can produce 200x flux increases that are expected to occur on a daily basis on young, active M-dwarfs.

Thus habitability can be compromised, with UV radiation damaging planetary atmospheres, eroding ozone and producing lethal levels of radiation at the surface. An Earth-like planet in the habitable zone can likewise be subject to methane depletion under the kind of flaring Proxima Centauri has been known to produce. Thus the composition of an M-dwarf planet’s atmosphere is subject to interactions with its star that may prevent life from ever arising, or drastically affect its development.

SPARCS is a CubeSat observatory carrying a 9-cm telescope and the associated gear to perform photometric monitoring of M-dwarf flare activity in the near (258−308 nm) and far ultraviolet (153−171 nm). The target: 20 M-dwarfs in a range of ages from 10 million to 5 billion years old, examined during a mission lifetime of one year. Planned for launch in 2023 into a heliosynchronous orbit that offers “decent thermal stability and optimized continuity in target monitoring,” SPARCS will track flare color, energies, occurrence rate and duration on active as well as inactive M-dwarfs.

The authors believe the observatory will also improve our atmospheric models for M-dwarf planets, useful information as we look toward future biosignature investigations, and helpful as we fill an obvious gap in our data on this class of star. The software onboard is interesting in itself:

The payload software is able to run monitoring campaigns at constant detector exposure time and gain, but due to the expected high amplitudes of M dwarf UV flares, observations throughout the nominal mission will be conducted using a feature of the software that autonomously adjusts detector exposure times and gains to mitigate the occurrence of pixel saturation during observations of flaring events. SPARCS will be the first space-based stellar astrophysics observatory that adopts such an onboard autonomous exposure control.

So we have a small space telescope that will be able to monitor its targets in both near- and far-ultraviolet wavelengths simultaneously, managed by a dedicated onboard payload processor that allows the observatory to adjust for pixel saturation during flare events. This “autonomous dynamic exposure control algorithm” is a story in itself, adding depth to a mission to investigate the most extremely variable stars in the Hertzsprung–Russell diagram. SPARCS should help us learn whether these long-lived stars can allow planetary habitability as they age into a less dramatic maturity.
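To make the idea of autonomous exposure control concrete, here is a toy version of the feedback loop. It is not the SPARCS algorithm, whose details are not given here: the function name, the full-well capacity, the target fill level and the exposure limits are all assumptions chosen for illustration, and the sketch adjusts exposure time only, ignoring detector gain.

```python
def next_exposure(peak_counts, exposure_s, full_well=60000.0,
                  target_fraction=0.5, min_exp_s=0.1, max_exp_s=600.0):
    """Pick the next exposure so the brightest pixel lands near a target fill level.

    All default values are hypothetical, not SPARCS flight parameters.
    """
    if peak_counts <= 0:
        return max_exp_s  # no usable signal: fall back to the longest exposure
    count_rate = peak_counts / exposure_s               # counts per second, peak pixel
    desired = target_fraction * full_well / count_rate  # exposure that hits the target
    return min(max(desired, min_exp_s), max_exp_s)      # clamp to the allowed range

# Quiescent star: the peak pixel is already half full after 600 s, so nothing changes.
quiet = next_exposure(30000.0, 600.0)
# Flare: the same 600 s frame now reaches full well, so the next exposure is halved.
# (A saturated pixel only gives a lower limit on the rate; real logic needs more care.)
flare = next_exposure(60000.0, 600.0)
print(quiet, flare)
```

The appeal of a scheme like this is that it needs only the previous frame, so it can run on the payload processor without waiting for a command from the ground.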

The paper is Ramiaramanantsoa et al., “Time-Resolved Photometry of the High-Energy Radiation of M Dwarfs with the Star-Planet Activity Research CubeSat (SPARCS),” accepted for publication in Astronomische Nachrichten (preprint).

November 9, 2021

The Exoplanet Pipeline

Looking into Astro2020’s recommendations for ground-based astronomy, I was heartened by the emphasis on ELTs (Extremely Large Telescopes), as found within the US-ELT project to develop the Thirty Meter Telescope and the Giant Magellan Telescope, both now under construction. Such instruments represent our best chance for studying exoplanets from the ground, even rocky worlds that could hold life. An Astro2020 with different priorities could have spelled the end of both these ELT efforts in the US even as the European Extremely Large Telescope, with its 40-meter mirror, moves ahead, with first light at Cerro Armazones (Chile) projected for 2027.

So the ELTs persist in both US and European plans for the future, a context within which to consider how planet detection continues to evolve. So much of what we know about exoplanets has come from radial velocity methods. These in turn rely critically on spectrographs like HARPS (High Accuracy Radial Velocity Planet Searcher), which is installed at the European Southern Observatory’s 3.6m telescope at La Silla in Chile, and its successor ESPRESSO (Echelle Spectrograph for Rocky Exoplanet and Stable Spectroscopic Observations). We can add the NEID spectrometer on the WIYN 3.5m telescope at Kitt Peak to the mix, now operational and in the hunt for ever tinier Doppler shifts in the light of host stars.

We’re measuring the tug a planet puts on its star by looking radially — how is the star pulled toward us, then away, as the planet moves along its orbit? Given that the Earth produces a movement of a mere 9 centimeters per second on the Sun, it’s heartening to see that astronomers are closing on that range right now. NEID has demonstrated a precision of better than 25 centimeters per second in the tests that led up to its commissioning, giving us another tool for exoplanet detection and confirmation.
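The 9 centimeters per second figure can be checked with the standard expression for the radial velocity semi-amplitude, K = (2πG/P)^(1/3) · m sin i / (M + m)^(2/3) / √(1 − e²). The sketch below assumes a circular, edge-on orbit and uses rounded textbook constants; the function name is my own.

```python
import math

G = 6.674e-11              # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30           # solar mass, kg (rounded)
M_EARTH = 5.972e24         # Earth mass, kg (rounded)
YEAR_S = 365.25 * 86400.0  # one year in seconds

def rv_semi_amplitude(m_planet, m_star, period_s, ecc=0.0, sin_i=1.0):
    """Reflex velocity semi-amplitude K of the star, in meters per second."""
    return ((2.0 * math.pi * G / period_s) ** (1.0 / 3.0)
            * m_planet * sin_i
            / (m_star + m_planet) ** (2.0 / 3.0)
            / math.sqrt(1.0 - ecc ** 2))

k_earth = rv_semi_amplitude(M_EARTH, M_SUN, YEAR_S)
print(f"Earth's tug on the Sun: {100.0 * k_earth:.1f} cm/s")
```

The result comes out close to 9 cm/s, which is why a demonstrated precision of better than 25 cm/s puts true Earth analogs around Sun-like stars tantalizingly near, though not yet within, reach.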

But this is a story that also reminds us of the vast amount of data being generated in such observations, and the methods needed to get this information distributed and analyzed. On an average night, NEID will collect about 150 gigabytes of data that is sent to Caltech, and from there via a data management network called Globus to the Texas Advanced Computing Center (TACC) for analysis and processing. TACC, in turn, extracts metadata and returns the data to Caltech for further analysis. The results are made available by the NASA Exoplanet Science Institute via its NEID Archive.

Image: The NEID instrument is shown mounted on the 3.5-meter WIYN telescope at the Kitt Peak National Observatory. Credit: NSF’s National Optical-Infrared Astronomy Research Laboratory/KPNO/NSF/AURA.

What a contrast with the now ancient image of the astronomer on a mountaintop coming away with photographic plates that would be analyzed with instruments like the blink comparator Clyde Tombaugh used to discover Pluto in 1930. The data now come in avalanche form, with breakthrough work occurring not only on mountaintops but in the building of data pipelines like these that can be generalized for analysis on supercomputers. The vast caches of data contain the seeds of future discovery.

Joe Stubbs leads the Cloud & Interactive Computing group at TACC:

“NEID is the first of hopefully many collaborations with the NASA Jet Propulsion Laboratory (JPL) and other institutions where automated data analysis pipelines run with no human-in-the-loop. Tapis Pipelines, a new project that has grown out of this collaboration, generalizes the concepts developed for NEID so that other projects can automate distributed data analysis on TACC’s supercomputers in a secure and reliable way with minimal human supervision.”

NEID also makes a unique contribution to exoplanet detection by being given over to the analysis of activity on our own star. Radial velocity is vulnerable to confusion over starspots — created by convection on the surface of exoplanet host stars and mistaken for planetary signatures. The plan is to use NEID during daylight hours with a smaller solar telescope developed for the purpose to track this activity. Eric Ford (Penn State) is an astrophysicist at the university where NEID was designed and built:

“Thanks to the NEID solar telescope, funded by the Heising-Simons Foundation, NEID won’t sit idle during the day. Instead, it will carry out a second mission, collecting a unique dataset that will enhance the ability of machine learning algorithms to recognize the signals of low-mass planets during the nighttime.”

Image: A new instrument called NEID is helping astronomers scan the skies for alien planets. TACC supports NEID with supercomputers and expertise to automate the data analysis of distant starlight, which holds evidence of new planets waiting to be discovered. WIYN telescope at the Kitt Peak National Observatory. Credit: Mark Hanna/NOAO/AURA/NSF.

Modern astronomy in a nutshell. We’re talking about data pipelines operational without human intervention, and machine-learning algorithms that are being tuned to pull exoplanet signals out of the noise of starlight. In such ways does a just commissioned spectrograph contribute to exoplanetary science through an ever-flowing data network now indispensable to such work. Supercomputing expertise is part of the package that will one day extract potential biosignatures from newly discovered rocky worlds. Bring on the ELTs.

November 5, 2021

Two Takes on the Extraterrestrial Imperative

Topping the list of priorities for the Decadal Survey on Astronomy and Astrophysics 2020 (Astro2020), just released by the National Academies of Sciences, Engineering, and Medicine, is the search for extraterrestrial life. Entitled Pathways to Discovery in Astronomy and Astrophysics for the 2020s, the report can be downloaded as a free PDF here. At 614 pages, this is not light reading, but it does represent an overview in which to place continuing work on exoplanet discovery and characterization.

In the language of the report:

“Life on Earth may be the result of a common process, or it may require such an unusual set of circumstances that we are the only living beings within our part of the galaxy, or even in the universe. Either answer is profound. The coming decades will set humanity down a path to determine whether we are alone.”

A ~6 meter diameter space telescope capable of spotting exoplanets 10 billion times fainter than their host stars, thought to be feasible by the 2040s, leads the observatory priorities. As forwarded to me by Centauri Dreams regular John Walker, the survey recommends an instrument covering infrared, optical and ultraviolet wavelengths with high-contrast imaging and spectroscopy. Its goal: Searching for biosignatures in the habitable zone. Cost is estimated at an optimistic $11 billion.

I say ‘optimistic’ because of the cost overruns we’ve seen in past missions, particularly JWST. But perhaps we’re learning how to rein in such problems, according to Joel Bregman (University of Michigan), chair of the AAS Committee on Astronomy and Public Policy. Says Bregman:

“The Astro2020 report recommends a ‘technology development first’ approach in the construction of large missions and projects, both in space and on the ground. This will have a profound effect in the timely development of projects and should help avoid budgets getting out of control.”

Time will tell. It should be noted that a number of powerful telescopes, both ground- and space-based, have been built following the recommendations of earlier decadal surveys, of which this is the seventh.

Suborbital Building Blocks

We’re a long way from the envisioned instrument in terms of both technology and time, but the building blocks are emerging and the characterization of habitable planets is ongoing. What a difference between a flagship level space telescope like the one described by Astro2020 and the small, suborbital instrument slated for launch from the White Sands Missile Range in New Mexico on Nov. 8. SISTINE (Suborbital Imaging Spectrograph for Transition region Irradiance from Nearby Exoplanet host stars) is the second of a series of missions homing in on how the light of a star affects biosignatures on its planets.

False positives will likely bedevil biosignature searches as our technology improves. Principal investigator Kevin France (University of Colorado Boulder) points particularly to ultraviolet levels and their role in breaking down carbon dioxide, which frees oxygen atoms to form molecular oxygen, made of two oxygen atoms, or ozone, made of three. These oxygen levels can easily be mistaken for possible biosignatures. Says France: “If we think we understand a planet’s atmosphere but don’t understand the star it orbits, we’re probably going to get things wrong.”

Image: A sounding rocket launches from the White Sands Missile Range, New Mexico. Credit: NASA/White Sands Missile Range.

It’s a good point considering that early targets for atmospheric biosignatures will be M-dwarf stars. Now consider the early Earth, laden with perhaps 200 times more carbon dioxide than today, its atmosphere likewise augmented with methane and sulfur from volcanic activity in the era not long after its formation. It took molecular oxygen a billion and a half years to emerge as nothing more than a waste product produced during photosynthesis, eventually leading to the Great Oxygenation Event.

Oxygen becomes a biomarker on Earth, but it’s an entirely different question around other stars. M-dwarf stars like Proxima Centauri generate extreme levels of ultraviolet light, making France’s point that simple photochemistry can produce oxygen in the absence of living organisms. Bearing in mind that M-dwarfs make up as many as 80 percent of the stars in the galaxy, we may find ourselves with a number of putative biosignatures that turn out to be a reflection of these abiotic reactions. Aboard SISTINE are a telescope and a spectrograph that will home in on ultraviolet light from 100 to 160 nanometers, which includes the range known to produce false positive biomarkers. The UV output in this range varies with the mass of the star; thus the need to sample widely.

SISTINE-2’s target is Procyon A. The craft will have a brief window of about five minutes from its estimated altitude of 280 kilometers to observe the star, with the instrument returning by parachute for recovery.

An F-class star larger and hotter than the Sun, Procyon A has no known planets, but what is at stake here is accurate determination of its ultraviolet spectrum. A reference spectrum for F-stars growing out of these observations of Procyon A and incorporating existing data on other F-class stars at X-ray, extreme ultraviolet and visible light is the goal. France says the next SISTINE target will be Alpha Centauri A and B.

Image: A size comparison of main sequence Morgan–Keenan classifications. Main sequence stars are those that fuse hydrogen into helium in their cores. The Morgan–Keenan system shown here classifies stars based on their spectral characteristics. Our Sun is a G-type star. SISTINE-2’s target is Procyon A, an F-type star. Credit: NASA GSFC.

Launch is to be aboard a Black Brant IX sounding rocket. And although it sounds like a small mission, SISTINE-2 will be working at wavelengths the Hubble Space Telescope cannot observe. Likewise, the James Webb Space Telescope will work at visible to mid-infrared wavelengths, making the SISTINE observations useful for frequencies that Webb cannot see. The mission also experiments with new optical coatings and what NASA describes as ‘novel UV detector plates’ for better reflection of extreme UV.

Image: SISTINE’s third mission, to be launched in 2022, will target Alpha Centauri A and B. Here we see the system in optical (main) and X-ray (inset) light. Only the two largest stars, Alpha Cen A and B, are visible. These two stars will be the targets of SISTINE’s third flight. Credit: Zdenek Bardon/NASA/CXC/Univ. of Colorado/T. Ayres et al.

November 3, 2021

White Dwarf Clues to Unusual Planetary Composition

The surge of interest in white dwarfs continues. We’ve known for some time that these remnants of stars like the Sun, having been through the red giant phase and finally collapsing into a core about the size of the Earth, can reveal a great deal about objects that have fallen into them. That would be rocky material from planetary objects that once orbited the star, just as the planets of our Solar System orbit the Sun in our halcyon, pre-red-giant era.

The study of atmospheric pollution in white dwarfs rests on the fact that white dwarfs that have cooled below 25,000 K have atmospheres of pure hydrogen or helium. Heavier elements sink rapidly to the stellar core at these temperatures, so the only source of elements higher than helium — metals in astronomy parlance — is through accretion of orbiting materials that cross the Roche limit and fall into the atmosphere.

These contaminants of stellar atmospheres are now the subject of a new investigation led by astronomer Siyi Xu (NSF NOIRLab), partnering with Keith Putirka (California State University, Fresno). Putirka is a geologist, and thus a good fit for this study. Working with Xu, an astronomer, he examined 23 white dwarfs whose atmospheres are found to be polluted by such materials. The duo took advantage of existing measurements of calcium, silicon, magnesium and iron from the Keck Observatory’s HIRES instrument (High-Resolution Echelle Spectrometer) in Hawai‘i, along with data from the Hubble Space Telescope, whose Cosmic Origins Spectrograph came into play.

Their focus is on the abundance of elements that make up the major part of rock on an Earth-like planet, especially silicon, which would imply the composition of rocks that would have existed on white dwarf planets before their disintegration and accretion. The variety of rock types that emerge is wider than found in the rocky planets of our inner Solar System. Some of them are unusual enough that the authors create new terms to describe them. Thus “quartz pyroxenites” and “periclase dunites.” None have analogs in our own system.

The finding has implications for planetary development, as Putirka explains:

“Some of the rock types that we see from the white dwarf data would dissolve more water than rocks on Earth and might impact how oceans are developed. Some rock types might melt at much lower temperatures and produce thicker crust than Earth rocks, and some rock types might be weaker, which might facilitate the development of plate tectonics.”

The paper goes into greater detail:

…while PWDs [polluted white dwarfs] might record single planets that have been destroyed and assimilated piecemeal, the pollution sources might also represent former asteroid belts, in which case the individual objects of these belts would necessarily be more mineralogically extreme. If current petrologic models may be extrapolated, though, PWDs with quartz-rich mantles…might create thicker crusts, while the periclase-saturated mantles could plausibly yield, on a wet planet like Earth, crusts made of serpentinite, which may greatly affect the kinds of life that might evolve on the resulting soils. These mineralogical contrasts should also control plate tectonics, although the requisite experiments on rock strength have yet to be carried out.

Image: Rocky debris, the pieces of a former rocky planet that has broken up, spiral inward toward a white dwarf in this illustration. Studying the atmospheres of white dwarfs that have been polluted by such debris, a NOIRLab astronomer and a geologist have identified exotic rock types that do not exist in our Solar System. The results suggest that nearby rocky exoplanets must be even stranger and more diverse than previously thought. Credit: NOIRLab/NSF/AURA/J. da Silva.

High levels of magnesium and low levels of silicon are found in the sample white dwarfs, suggesting to the authors that the source debris came from a planetary interior, the mantle rather than the crust. That contradicts some earlier papers reporting signs of crustal rocks as the original polluters, but Xu and Putirka believe that such rock occurs as no more than a small fraction of core and mantle components.

Adds Putirka:

“We believe that if crustal rock exists, we are unable to see it, probably because it occurs in too small a fraction compared to the mass of other planetary components, like the core and mantle, to be measured.”

The paper is Putirka & Xu, “Polluted white dwarfs reveal exotic mantle rock types on exoplanets in our solar neighborhood,” Nature Communications 12, 6168 (2 November 2021). Full text.

November 2, 2021

Going After Sagittarius A*

Only time will tell whether humanity has a future beyond the Solar System, but if we do have prospects among the stars — and I fervently hope that we do — it’s interesting to speculate on what future historians will consider the beginning of the interstellar era. Teasing out origins is tricky. You could label the first crossing of the heliopause by a functioning probe (Voyager 1) as a beginning, but neither the Voyagers nor the Pioneers (nor, for that matter, New Horizons) were built as interstellar missions.

I’m going to play the ‘future history’ game by offering my own candidate. I think the image of the black hole in the galaxy M87 marks the beginning of an era, one in which our culture begins to look more and more at the universe beyond the Solar System. I say that not because of what we found at M87, remarkable as it was, but because of the instrument used. The creation of a telescope that, through interferometry, can create an aperture the size of our planet speaks volumes about what a small species can accomplish. An entire planet is looking into the cosmos.

So will some future historian look back on the M87 detection as the beginning of the ‘interstellar era’? No one can know, but from the standpoint of symbolism — and that’s what this defining of eras is all about — the creation of a telescope like this is a civilizational accomplishment. I think its cultural significance will only grow with time.

Image: Composite image showing how the M87 system looked, across the entire electromagnetic spectrum, during the Event Horizon Telescope’s April 2017 campaign to take the iconic first image of a black hole. Requiring 19 different facilities on the Earth and in space, this image reveals the enormous scales spanned by the black hole and its forward-pointing jet, launched just outside the event horizon and spanning the entire galaxy. Credit: the EHT Multi-Wavelength Science Working Group; the EHT Collaboration; ALMA (ESO/NAOJ/NRAO); the EVN; the EAVN Collaboration; VLBA (NRAO); the GMVA; the Hubble Space Telescope, the Neil Gehrels Swift Observatory; the Chandra X-ray Observatory; the Nuclear Spectroscopic Telescope Array; the Fermi-LAT Collaboration; the H.E.S.S. collaboration; the MAGIC collaboration; the VERITAS collaboration; NASA and ESA. Composition by J.C. Algaba.

Into the Milky Way’s Heart

The Event Horizon Telescope (EHT) is not a single physical installation but a collection of telescopes around the world that use Very Long Baseline Interferometry to produce a virtual observatory with, as mentioned above, an aperture the size of our planet. Heino Falcke’s book Light in the Darkness (HarperOne, 2021) tells this story from the inside, and it’s as exhilarating an account of scientific research as any I’ve read.

M87 seemed in some ways an ideal target, with a black hole thought to mass well over 6 billion times more than the Sun. In terms of sheer size, M87’s black hole dwarfs the Milky Way’s supermassive black hole (Sgr A*), which weighs in at 4.3 million solar masses, but it’s also 2,000 times farther away. Even so, it was the better target, for M87 was well off the galactic plane, whereas astronomers hoping to study the Milky Way’s black hole have to contend with shrouds of gas and dust and the fact that, while average quasars consume one sun per year, Sgr A* pulls in a million times less.
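It’s worth seeing how the mass and distance figures trade off. A black hole’s horizon radius scales linearly with its mass, and its apparent size on the sky scales inversely with distance, so the numbers above are enough for a quick comparison. The sketch uses only the rounded values quoted in this post (taking 6 billion solar masses as a lower bound for M87); the function name is hypothetical.

```python
M87_MASS_MSUN = 6.0e9    # "well over 6 billion" solar masses, used as a lower bound
SGRA_MASS_MSUN = 4.3e6   # solar masses, as quoted above
DISTANCE_RATIO = 2000.0  # M87 is about 2,000 times farther away than Sgr A*

def angular_size_ratio(mass_a, mass_b, distance_a_over_b):
    """Apparent horizon size of A relative to B (size scales as mass / distance)."""
    return (mass_a / mass_b) / distance_a_over_b

ratio = angular_size_ratio(M87_MASS_MSUN, SGRA_MASS_MSUN, DISTANCE_RATIO)
print(f"M87's black hole spans about {ratio:.2f} times the angular size of Sgr A*")
```

With these rounded inputs the two objects come out within a factor of two of each other on the sky, which is why both are plausible targets for an Earth-sized aperture despite the enormous difference in mass.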

But the investigation of Sgr A* continues as new technologies come into play, with the James Webb Space Telescope now awaiting launch in December and already on the scene in French Guiana. Early in JWST’s observing regime, Sgr A* is to be probed at infrared wavelengths, adding the new space-based observatory to the existing Event Horizon Telescope. Farhad Yusef-Zadeh, principal investigator on the Webb Sgr A* program, points out that JWST will allow data capture at two different wavelengths simultaneously and continuously, further enhancing the EHT’s powers.

Among other reasons, a compelling driver for looking hard at Sgr A* is the fact that it produces flares in the dust and gas surrounding it. Yusef-Zadeh (Northwestern University) notes that the Milky Way’s supermassive black hole is the only one yet observed with this kind of flare activity, which makes it more difficult to image the black hole but also adds considerably to the scientific interest of the investigation. The flares are thought to be the result of particles accelerating around the object, but details of the mechanism of light emission here are not well understood.

Image: An enormous swirling vortex of hot gas glows with infrared light, marking the approximate location of the supermassive black hole at the heart of our Milky Way galaxy. This multiwavelength composite image includes near-infrared light captured by NASA’s Hubble Space Telescope, and was the sharpest infrared image ever made of the galactic center region when it was released in 2009. While the black hole itself does not emit light and so cannot be detected by a telescope, the EHT team is working to capture it by getting a clear image of the hot glowing gas and dust directly surrounding it. Credit: NASA, ESA, SSC, CXC, STS.

Thus we combine radio data from the Event Horizon Telescope with JWST’s infrared data. How different wavelengths can tease out more information is evident in the image above. Here we have a composite showing Hubble near-infrared observations in yellow, and deeper infrared observations from the Spitzer Space Telescope in red, while light detected by the Chandra X-Ray Observatory appears in blue and violet. Flare detection and better imagery of the region as enabled by adding JWST to the EHT mix, which will include X-ray and other observatories, should make for the most detailed look at Sgr A* that has ever been attempted.

What light we detect associated with a black hole is from the accreting material surrounding it, with the event horizon being its inner edge — this is what we saw in the famous M87 image. The early JWST observations, expected in its first year of operation, are to be supplemented by further work to build up our knowledge of the flare activity and enhance our understanding of how Sgr A* differs from other supermassive black holes.

Image: Heated gas swirls around the region of the Milky Way galaxy’s supermassive black hole, illuminated in near-infrared light captured by NASA’s Hubble Space Telescope. Released in 2009 to celebrate the International Year of Astronomy, this was the sharpest infrared image ever made of the galactic center region. NASA’s upcoming James Webb Space Telescope, scheduled to launch in December 2021, will continue this research, pairing Hubble-strength resolution with even more infrared-detecting capability. Of particular interest for astronomers will be Webb’s observations of flares in the area, which have not been observed around any other supermassive black hole and the cause of which is unknown. The flares have complicated the Event Horizon Telescope (EHT) collaboration’s quest to capture an image of the area immediately surrounding the black hole, and Webb’s infrared data is expected to help greatly in producing a clean image. Credit: NASA, ESA, STScI, Q. Daniel Wang (UMass).

Whether we’re entering an interstellar era or not, we’re going to be learning a lot more about the heart of the Milky Way, assuming we can get JWST aloft. How many hopes and plans ride on that Ariane 5!

October 29, 2021

Talking to the Lion

Extraterrestrial civilizations, if they exist, would pose a unique challenge in comprehension. With nothing in common other than what we know of physics and mathematics, we might conceivably exchange information. But could we communicate our cultural values and principles to them, or hope to understand theirs? It was Ludwig Wittgenstein who said “If a lion could speak, we couldn’t understand him.” True?

One perspective on this is to look not into space but into time. Traditional SETI is a search through space and only indirectly, through speed of light factors, a search through time. But new forms of SETI that look for technosignatures — and this includes searching our own Solar System for signs of technology like an ancient probe, as Jim Benford has championed — open up the chronological perspective in a grand way.

Now we are looking for conceivably ancient signs of a civilization that may have perished long before our Sun first shone. A Dyson shell, gathering most of the light from its star, could be an artifact of a civilization that died billions of years ago.

Image: Philosopher Ludwig Wittgenstein (1889-1951), whose Tractatus Logico-Philosophicus was written during military duty in the First World War. It has been confounding readers like me ever since.

Absent aliens to study, ponder ourselves as we look into our own past. I’ve spent most of my life enchanted with the study of the medieval and ancient world, where works of art, history and philosophy still speak to our common humanity today. But how long will we connect with that past if, as some predict, we will within a century or two pursue genetic modifications to our physiology and biological interfaces with computer intelligence? It’s an open question because these trends are accelerating.

What, in short, will humans in a few hundred years have in common with us? The same question will surface if we go off-planet in large numbers. Something like an O’Neill cylinder housing a few thousand people, for example, would create a civilization of its own, and if we ever launch ‘worldships’ toward other stars, it will be reasonable to consider that their populations will dance to an evolutionary tune of their own.

The crew that boards a generation ship may be human as we know the term, but will it still be five thousand years later, upon reaching another stellar system? Will an interstellar colony create a new branch of humanity each time we move outward?

Along with this speculation comes the inevitable issue of artificial intelligence, because it could be that biological evolution has only so many cards to play. I’ve often commented on the need to go beyond the conventional mindset of missions as being limited to the lifetime of their builders. The current work called Interstellar Probe at Johns Hopkins, in the capable hands of Voyager veteran Ralph McNutt, posits data return continuing for a century or more after launch. So we’re nudging in the direction of multi-generational ventures as a part of the great enterprise of exploration.

But what do interstellar distances mean to an artilect, a technological creation that operates by artificial intelligence that eclipses our own capabilities? For one thing, these entities would be immune to travel fatigue because they are all but immortal. These days we ponder the relative advantages of crewed vs. robotic missions to places like Mars or Titan. Going interstellar, unless we come up with breakthrough propulsion technologies, favors computerized intelligence and non-biological crews. Martin Rees has pointed out that the growth of machine intelligence should happen much faster away from Earth as systems continually refine and upgrade themselves.

It was a Rees essay that reminded me of the Wittgenstein quote I used above. And it leads me back to SETI. If technological civilizations other than our own exist, it’s reasonable to assume they would follow the same path. Discussing the Drake Equation in his recent article Why extraterrestrial intelligence is more likely to be artificial than biological, Lord Rees points out there may be few biological beings to talk to:

Perhaps a starting point would be to enhance ourselves with genetic modification in combination with technology, creating cyborgs with partly organic and partly inorganic parts. This could be a transition to fully artificial intelligences.

AI may even be able to evolve, creating better and better versions of itself on a faster-than-Darwinian timescale for billions of years. Organic human-level intelligence would then be just a brief interlude in our “human history” before the machines take over. So if alien intelligence had evolved similarly, we’d be most unlikely to “catch” it in the brief sliver of time when it was still embodied in biological form. If we were to detect extraterrestrial life, it would be far more likely to be electronic than flesh and blood, and it may not even reside on planets.

Image: Credit: Breakthrough Listen / Danielle Futselaar.

I don’t think we’ve really absorbed this thought, even though it seems to be staring us in the face. The Drake Equation’s factor regarding the lifetime of a civilization is usually interpreted in terms of cultures directed by biological beings. An inorganic, machine-based civilization that was spawned by biological forebears could refine the factors that limit human civilization out of existence. It could last for billions of years.

It’s an interesting question indeed how we biological beings would communicate with a civilization that has perhaps existed since the days when the Solar System was nothing more than a molecular cloud. We often use human logic to talk about what an extraterrestrial civilization would want, what its motives would be, and tell ourselves the fable that ‘they’ would certainly act rationally as we understand rationality.

But we have no idea whatsoever how a machine intelligence honed over thousands of millennia would perceive reality. As Rees points out, “we can’t assess whether the current radio silence that Seti are experiencing signifies the absence of advanced alien civilisations, or simply their preference.” Assuming they are there in the first place.

And that’s still a huge ‘if.’ For along with our other unknowns, we have no knowledge whatsoever about abiogenesis on other worlds. To get to machine intelligence, you need biological intelligence to evolve to the point where it can build the machines. And if life is widespread — I suspect that it is — that says nothing about whether or not it is likely to result in a technological civilization. We may be dealing with a universe teeming with lichen and pond scum, perhaps enlivened with the occasional tree.

A SETI reception would be an astonishing development, and I believe that if we ever receive a signal, likely as a byproduct of some extraterrestrial activity, we will be unlikely to decode it or even begin to understand its meaning and motivation. Certainly that seems true if Rees is right and the likely sources are machines. A SETI ‘hit’ is likely to remain mysterious, enigmatic, and unresolved. But let’s not stop looking.

October 27, 2021

BLC1: The ‘Proxima Signal’ and What We Learned

If we were to find a civilization at Proxima Centauri, the nearest star, it would either be a coincidence of staggering proportions — two technological cultures just happening to thrive around neighboring stars — or an indication that intelligent life is all but ubiquitous in the galaxy. ‘Ubiquitous’ could itself mean different things: Many civilizations, scattered in their myriads amongst the stars, or a single, ancient civilization that had spread widely through the galaxy.

If a coincidence, add in the time factor and things get stranger still. For only the tiniest fraction of our planet’s existence has been impinged upon by a tool-making species, and who knows what the lifetime of a civilization is? Unless civilizations can live for eons, how could two of them be found around stars so close? Thus the possibility that BLC1 — Breakthrough Listen Candidate 1 — was a valid technosignature at Proxima Centauri was greeted with a huge degree of skepticism within the SETI community and elsewhere.

Image: This picture combines a view of the southern skies over the ESO 3.6-metre telescope at the La Silla Observatory in Chile with images of the stars Proxima Centauri (lower-right) and the double star Alpha Centauri AB (lower-left) from the NASA/ESA Hubble Space Telescope. Proxima Centauri is the closest star to the Solar System and is orbited by the planet Proxima b, which was discovered using the HARPS instrument on the ESO 3.6-metre telescope. Credit: Y. Beletsky (LCO)/ESO/ESA/NASA/M. Zamani.

But it was a good idea to look, because you don’t know what you’re going to find until you take the data, which is why SETI happens. While a great deal of attention has focused on the Alpha Centauri stars as targets for a future space probe, little attention has been paid to them in SETI terms. The southern hemisphere sky was examined by Project Phoenix in the 1990s (202 main sequence stars) and a second search, conducted by David Blair and team, likewise used the Parkes radio telescope in Australia to cover another 176 bright southern stars in that decade. Neither of these searches took in Proxima Centauri, which is, after all, a very faint M dwarf.

The discovery of Proxima Centauri b, a habitable zone world, brought new attention to the dim star. When the Parkes Observatory was turned toward Proxima Centauri in 2019 as part of the activities of Breakthrough Listen, the system received a thorough examination. We learn in two new papers in Nature Astronomy just how thorough:

We searched our observations towards Prox Cen for signs of technologically advanced life, across the full frequency range of the receiver (0.7–4.0 GHz). To search for narrowband technosignatures we exploit the fact that signals from any body with a non-zero radial acceleration relative to Earth, such as an exoplanet, solar system object or spacecraft, will exhibit a characteristic time-dependent drift in frequency (referred to as a drift rate) when detected by a receiver on Earth. We applied a search algorithm that detects narrowband signals with Doppler drift rates consistent with that expected from a transmitter located on the surface of Prox Cen b…Our search detected a total of 4,172,702 hits—that is, narrowband signals detected above a signal-to-noise (S/N) threshold—in all on-source observations of Prox Cen and reference off-source observations. Of these, 5,160 hits were present in multiple on-source pointings towards Prox Cen, but were not detected in reference (off-source) pointings towards calibrator sources; we refer to these as ‘events.’

Image: This is Figure 4 from Smith et al. (citations below). Caption: The signal of interest, BLC1, from our search of Prox Cen. Here, we plot the dynamic spectrum around the signal of interest over an eight-pointing cadence of on-source and off-source observations. BLC1 passes our coincidence filters and persists for over 2 h. The red dashed line, purposefully offset from the signal, shows the expected frequency based on the detected drift rate (0.038 Hz s−1) and start frequency in the first panel. BLC1 is analysed in detail in a companion paper. Credit: Smith et al./Breakthrough Listen.

In other words, a technosignature at Proxima Centauri, if actually there, should appear only when the telescope is pointing directly at the target system and vanish when it points away, which is how all but 5,160 hits were eliminated. The BLC1 signal made it through subsequent examination partly because it did not lie within the frequency range of local radio-frequency interference. The authors like to refer to it as a ‘signal of interest’ rather than a ‘candidate’ signal, but accept the BLC1 nomenclature because it is so widely adopted in coverage of the event.
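The on/off logic can be sketched in a few lines. This is a toy illustration, not the Breakthrough Listen pipeline: the hit tuples, the 10 Hz bin width and the function name `find_events` are all assumptions, the frequencies are made up, and Doppler drift between scans is ignored for simplicity.

```python
from collections import defaultdict


def find_events(hits, bin_width_hz=10.0):
    """Return frequencies seen in two or more on-source scans and in no off-source scan.

    hits: iterable of (scan_id, is_on_source, frequency_hz) tuples (hypothetical format).
    """
    on_scans_by_bin = defaultdict(set)  # frequency bin -> ids of on-source scans with a hit
    off_bins = set()                    # frequency bins that also show up off-source
    for scan_id, is_on_source, freq_hz in hits:
        freq_bin = round(freq_hz / bin_width_hz)
        if is_on_source:
            on_scans_by_bin[freq_bin].add(scan_id)
        else:
            off_bins.add(freq_bin)
    return sorted(
        freq_bin * bin_width_hz
        for freq_bin, scans in on_scans_by_bin.items()
        if len(scans) >= 2 and freq_bin not in off_bins
    )


# Made-up hits: even scan ids point at the target, odd ones point away.
hits = [
    (0, True, 982_002_500.0),
    (2, True, 982_002_504.0),    # same bin as above, second on-source scan
    (0, True, 1_200_000_000.0),
    (1, False, 1_200_000_000.0), # also seen off-source, so treated as interference
    (2, True, 1_200_000_001.0),
    (4, True, 1_500_000_000.0),  # seen in only one on-source scan
]

events = find_events(hits)
print(events)
```

Only the first pair of hits survives here. A real search also has to follow each signal as it drifts in frequency from scan to scan, which this sketch leaves out.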

BLC1 was a narrowband signal, which screened out natural astrophysical sources, and intriguingly, it persisted for several hours, much longer than would be accounted for by a passing aircraft or satellite. Moreover, it showed a drift rate that one would expect from a transmitter not on Earth’s surface, one that changed smoothly over time, “as expected for a transmitter in a rotational/orbital environment.” So you can see why it merited a deeper look.
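To get a feel for the numbers, a drift rate converts to a line-of-sight acceleration through the non-relativistic Doppler relation, drift = f × a / c. The sketch below plugs in the 0.038 Hz/s drift quoted in the figure caption and a frequency near 982 MHz, where BLC1 appeared. The function name is illustrative, the Earth-rotation comparison is my own addition, and the result says nothing about where the signal actually originated.

```python
import math

C = 299_792_458.0            # speed of light, m/s
DRIFT_HZ_PER_S = 0.038       # drift rate quoted in the Smith et al. figure caption
FREQ_HZ = 982e6              # approximate frequency at which BLC1 appeared

EARTH_RADIUS_M = 6.378e6     # equatorial radius
SIDEREAL_DAY_S = 86_164.1    # one rotation of the Earth


def acceleration_from_drift(drift_hz_per_s, freq_hz):
    """Line-of-sight acceleration implied by a Doppler drift (non-relativistic)."""
    return drift_hz_per_s * C / freq_hz


accel = acceleration_from_drift(DRIFT_HZ_PER_S, FREQ_HZ)

# For scale: centripetal acceleration of a point on Earth's equator.
omega = 2.0 * math.pi / SIDEREAL_DAY_S
earth_spin_accel = omega**2 * EARTH_RADIUS_M

print(f"Implied relative acceleration: {accel:.4f} m/s^2")
print(f"Earth equatorial rotation:     {earth_spin_accel:.4f} m/s^2")
```

The implied acceleration is about a third of what the Earth’s own rotation produces at the equator, so accelerations of this size are commonplace in any rotating or orbiting setting.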

As presented in the two Nature Astronomy papers, that second look has proceeded in the hands of Sofia Sheikh and colleagues at the University of California Berkeley. The scientists have examined archival observations of the Proxima Centauri system using an analysis thoroughly explained in the second of the two papers, one that included, in addition to drift rate study, a search for reappearances of the signal on other days and at other frequencies.

That involved searching for other signals near 982 MHz, where BLC1 appeared, looking first for signals with the same frequency and drift, and moving on to other frequencies (I’m simplifying mercilessly here — see the paper for the intricacies of the analysis). We wind up with a population of BLC1 look-alike signatures that, unlike BLC1, also appear when the telescope is not directly pointed at Proxima Centauri. The authors find every one of these to be caused by radio frequency interference, and determine that BLC1 is consistent with this population of look-alikes in terms of absolute drift rate, frequency and signal to noise ratio. Thus:

Using this procedure, we find that blc1 is not an extraterrestrial technosignature, but rather an electronically drifting intermodulation product of local, time-varying interferers aligned with the observing cadence.

That’s a useful and not unexpected finding, but the value of BLC1 is apparent. It has allowed scientists to develop a set of procedures for the analysis of technosignatures which were fully deployed here and explored in the papers. Sheikh and team have developed a technosignature verification framework built around this, the first signal of interest from Breakthrough Listen that required exhaustive investigation to rule out an alien technology. The value of that for future SETI work should be obvious:

…this signal of interest also reveals some novel challenges with radio SETI validation. It is well understood within the community that single-dish, on–off cadence observing could lead to spurious signals of interest in the case in which the cadence matches the duty cycle of some local RFI. The blc1 signal provided the first observational example of that behaviour, albeit in a slightly different manner than expected (variation of signal strength over position and time, which changed for each lookalike within the set). This case study prompts further application of observing arrays, multi-site observing and multi-beam receivers for radio technosignature searches. For future single-dish observing, we have demonstrated the utility of a deep understanding of the local RFI environment. To gain this understanding, future projects could perform omnidirectional RFI scans at the observing site, record and process the data with instrumentation with high frequency resolution such as the various BL backends, and then use narrowband search software such as turboSETI to obtain a population with which to characterize the statistics (in frequency, drift, power, duty cycle and so on) of local RFI.

The 10-part technosignature verification framework appears at the end of the Sheikh paper and summarizes both what BLC1 taught us through this analysis and how we can proceed more efficiently with persistent, narrowband technosignature searches in the future. I would say that’s a good outcome, one that moves the field forward thanks to this unusual detection.

The papers are Smith et al., “A radio technosignature search towards Proxima Centauri resulting in a signal of interest,” Nature Astronomy 25 October 2021 (full text); and Sheikh et al., “Analysis of the Breakthrough Listen signal of interest blc1 with a technosignature verification framework,” Nature Astronomy 25 October 2021 (full text).

October 26, 2021

Planetary Protection in an Interstellar Mode

Back in 2013, Heath Rezabek began developing a series in these pages on a proposal he called Vessel, which he had first presented at the 100 Year Starship Symposium in September of 2012. A librarian and futurist, Rezabek saw the concept as a strategy to preserve humanity’s cultural as well as biological heritage, with strong echoes of Greg Benford’s Library of Life, which proposed freezing species in threatened environments to save them. In Heath’s case, a productive partnership with frequent Centauri Dreams contributor Nick Nielsen led to articles by both, which produced a series of interesting discussions in the comments.

I noticed in Philip Lubin’s new paper, discussed here on Friday, an explicit reference to the idea of interstellar craft as possible backup devices for living systems. Lubin singled out the Svalbard Global Seed Vault (styled by some the ‘Doomsday Vault’), which preserves seed samples numbering in the millions, with the aim of keeping them safe for centuries. Here too we have the idea of protecting fragile living systems from existential risk in the form of what Lubin refers to as a ‘genetic ark,’ meaning that while his paper looks at tiny ‘wafer’ probes capable of carrying microorganisms, future iterations might develop into another kind of Earth backup system.

Is interstellar flight in future centuries to become the vehicle for preserving our planet’s heritage and scattering copies of ideas and organisms through the universe? It’s a persuasive thought. Here’s how Lubin and team describe it:

In addition to the physical propagation of life, we can also send out digital backups of the “blueprints of life”, a sort of “how-to” guide to replicating the life and knowledge of Earth. The increasing density of data storage allows for current storage density of more than a petabyte per gram and with new techniques, such as DNA encoding of information, much larger amounts of storage can be envisioned. As an indication of viability, we note the US Library of Congress with some 20 million books only requires about 20 TB to store. A small picture and letter from every person on Earth, as in the “Voices of Humanity” project, would only require about 100 TB to store, easily fitting on the smallest of our spacecraft designs. Protecting these legacy data sets from radiation damage is key and is discussed in Lubin 2020 and Cohen et al. 2020.
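The arithmetic behind that passage is easy to check. The sketch below takes the quoted figures at face value (one petabyte per gram, 20 TB for 20 million books, 100 TB for the “Voices of Humanity” archive). The world population of 7.8 billion is my own assumption, used only to estimate the per-person budget.

```python
TB = 1e12                       # bytes in a terabyte (decimal)
PB = 1e15                       # bytes in a petabyte (decimal)

DENSITY_BYTES_PER_GRAM = 1 * PB  # "more than a petabyte per gram", from the quote
LIBRARY_BYTES = 20 * TB          # Library of Congress estimate, from the quote
LIBRARY_BOOKS = 20e6             # "some 20 million books", from the quote
VOICES_BYTES = 100 * TB          # "Voices of Humanity" estimate, from the quote
WORLD_POPULATION = 7.8e9         # assumption: approximate 2021 population

bytes_per_book = LIBRARY_BYTES / LIBRARY_BOOKS
library_grams = LIBRARY_BYTES / DENSITY_BYTES_PER_GRAM
voices_grams = VOICES_BYTES / DENSITY_BYTES_PER_GRAM
bytes_per_person = VOICES_BYTES / WORLD_POPULATION

print(f"Average book size:       {bytes_per_book / 1e6:.1f} MB")
print(f"Library storage mass:    {library_grams:.2f} g")
print(f"Voices storage mass:     {voices_grams:.2f} g")
print(f"Budget per person:       {bytes_per_person / 1e3:.1f} kB")
```

At the quoted density both archives together would weigh about an eighth of a gram in storage medium, before any allowance for packaging or radiation shielding.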

Image: How much can we ultimately preserve of Earth? And if we eventually can build large-scale arks, where will we send them? Credit: Adam Benton.

Protecting Planets Beyond Our Own

I’m heartened by two things in this paper. The first is, as I mentioned Friday, the consideration of how to use deep space technologies in the service of biology, a field usually discussed in the interstellar community only in terms of biosignatures from exoplanet atmospheres. If we are at the beginning of what may eventually become an interstellar expansion, we should be thinking practically about what future technologies can do to enhance both the preservation of and adaptation of biological systems to deep space. The need for this kind of study is already apparent as we contemplate the possibility of future off-world colonies on the Moon or Mars.

It’s also heartening to see the thread of knowledge preservation mixing with thinking on biological preservation in the event of future catastrophe. If something goes desperately wrong on our home world — plug in the scenario, from nuclear war to runaway AI or nanotech — we need to be able to save enough of our species to rebuild, either here or elsewhere. If here, then archival installations in nearby space could complement those on Earth. If elsewhere, we can hope to scatter knowledge and biological materials widely enough that some may survive.

This concept, however, runs into the question of planetary protection, given that we already have a deep concern about contaminating places we visit with our spacecraft. There are guidelines in place, as the Lubin paper notes, under Article IX of the Outer Space Treaty in the form of Committee on Space Research (COSPAR) regulations. At present, these extend only to Solar System bodies, and include the problem of contamination from Earth as well as contamination from other bodies via sample return materials brought back to our planet.

If we ever reach the point where realistic travel times to other stars become possible, we’ll confront the issue in exoplanet systems as well. It’s a big topic, too big to handle here in the time allowed, but it’s interesting how Lubin and colleagues discuss it in terms of the tiny probes they contemplate sending out beyond the heliosphere. The problem may be resolved within the mission profile. From the paper:

An object with a mass of less than ten grams accelerating with potentially hundreds of GW of power, will, even if it were aimed at a planetary protection target (for example Mars), enter its atmosphere or impact the solar system body with enough kinetic energy to cause total sterilization of the biological samples on board. The velocity of the craft would thus serve as an in-built mechanism for sterilization. The mission profile does not include deceleration, so this mechanism is valid for the entirety of the mission.

We can add to this the fact that Starlight envisions craft aimed at targets outside the ecliptic, significantly lowering the chances of impact with a planet. If current requirements call for demonstrating probabilities of 99% to avoid impact for 20 years and 95% to avoid impact for 50 years, these requirements seem to be met by the kind of craft Starlight contemplates. The kinetic energy of one of these wafer craft moving at a third of the speed of light is roughly 1 kiloton TNT per gram, according to the authors, which would vaporize craft and payload.
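The kiloton-per-gram figure can be checked with the relativistic kinetic energy formula, KE = (γ − 1)mc². The sketch below is my own check rather than the authors’ calculation, and it uses the standard convention of 4.184 × 10^12 joules per kiloton of TNT.

```python
import math

C = 299_792_458.0          # speed of light, m/s
KILOTON_TNT_J = 4.184e12   # conventional energy of one kiloton of TNT, joules


def kinetic_energy_joules(mass_kg, beta):
    """Relativistic kinetic energy of a mass moving at beta = v / c."""
    gamma = 1.0 / math.sqrt(1.0 - beta**2)
    return (gamma - 1.0) * mass_kg * C**2


# One gram of spacecraft at a third of the speed of light.
ke_per_gram = kinetic_energy_joules(1e-3, 1.0 / 3.0)
kt_per_gram = ke_per_gram / KILOTON_TNT_J

print(f"{ke_per_gram:.2e} J per gram, or {kt_per_gram:.2f} kt TNT per gram")
```

The result lands a little above one kiloton per gram, consistent with the authors’ round figure.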

If we go interstellar, though, other issues emerge. All that kinetic energy falls into a different light if we imagine an interstellar flyby probe slamming inadvertently into a planetary atmosphere. If the effect would be little more than that of an arriving large meteorite, we still face the question of affecting an environment. There is more to contamination than a biological question, and it’s obvious that any future interstellar capability will demand a rethinking of regulations governing how our presence makes itself known to any local life forms. We have plenty of time to ponder these matters, but it’s good to see they’re already on the radar in some quarters.

On this score, Lubin and team point to a 2006 paper in Space Policy by C.S. Cockell and G. Hornbeck called “Planetary parks-formulating a wilderness policy for planetary bodies” (abstract). Here questions of planetary protection mingle with what the authors call “utilitarian and intrinsic value arguments.” The need to preserve an exoplanet’s pre-existing environment is a major theme in this work, one that I want to explore in a future post.

The paper on Starlight is Lantin et al., “Interstellar space biology via Project Starlight,” Acta Astronautica Vol. 190 (January 2022), pp. 261-272 (abstract).

October 22, 2021

Starlight: Toward an Interstellar Biology

If you could send out a fleet of small lightsails, accelerated to perhaps 20 percent of the speed of light, you could put something of human manufacture into the Alpha Centauri triple star system within about 20 years. So goes, of course, the thinking of Breakthrough Starshot, which continues to investigate whether such a proposal is practicable. As the feasibility study continues, we’ll learn whether the scientists involved have been able to resolve some of the key issues, including especially data return and the need for power onboard to make it happen.
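The 20-year figure follows from simple division. The sketch below assumes a distance of 4.37 light years to Alpha Centauri A and B, treats the sail as cruising at a constant 20 percent of lightspeed, and ignores the brief acceleration phase. The function name is illustrative.

```python
import math

DISTANCE_LY = 4.37   # assumption: distance to Alpha Centauri A/B in light years
BETA = 0.20          # cruise speed as a fraction of the speed of light


def cruise_time_years(distance_ly, beta):
    """Earth-frame travel time for a constant-speed cruise."""
    return distance_ly / beta


earth_years = cruise_time_years(DISTANCE_LY, BETA)

# Time elapsed on board is shorter by the Lorentz factor.
onboard_years = earth_years * math.sqrt(1.0 - BETA**2)

print(f"Earth-frame travel time: {earth_years:.1f} years")
print(f"Onboard elapsed time:    {onboard_years:.1f} years")
```

At this speed relativistic effects shave only a few months off the onboard clock, and any signal sent home needs another 4.37 years to arrive.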

The concept of beam-driven sails for acceleration to interstellar speeds goes back to Robert Forward (see Jim Benford’s excellent A Photon Beam Propulsion Timeline in these pages) and has been examined for several decades by, among others, Geoffrey Landis, Gregory Matloff, Benford himself (working with brother Greg) in laboratory experiments at JPL, Leik Myrabo, and Chaouki Abdallah and team at the University of New Mexico. At the University of California, Santa Barbara, Philip Lubin has advocated DE-STAR (Directed Energy Solar Targeting of Asteroids and Exploration), a program for developing a system of modular phased laser arrays that could be used for asteroid mitigation and as propulsion for deep space missions.

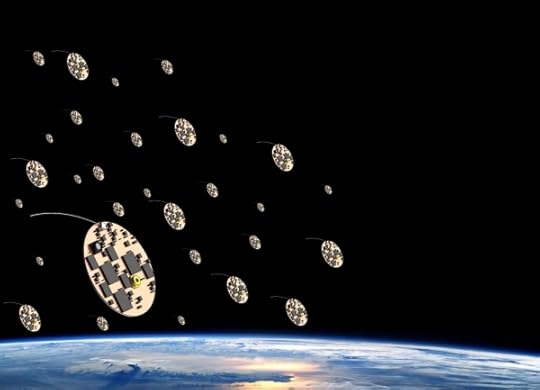

Out of this grew Directed Energy Propulsion for Interstellar Exploration (DEEP-IN), which homes in on using tiny wafer probes for interstellar travel. Also known as Starlight, the program is now the subject of a paper in Acta Astronautica that takes interstellar mission concepts into the realm of biology. Working with Stephen Lantin and a team of researchers at the UCSB Experimental Cosmology Group, Lubin is examining how we can use small relativistic spacecraft to place seeds and microorganisms for experimentation outside the Solar System.

Image: Initial interstellar missions will require a complete reevaluation and redesign of the space systems of today. The objective of the Wafer Scale Spacecraft Development program (WSSD) at the University of California Santa Barbara (UCSB) is to design, develop, assemble and characterize the initial prototypes of these robotic platforms in an attempt to pave a path forward for future innovation and exploration. This program, which is just one venture of the UCSB Experimental Cosmology Group’s Electronic and Advanced Systems Laboratory (UCSB Deepspace EAS), focuses on leveraging continued advances in semiconductor and photonics technologies to recognize and efficiently address the many complexities associated with long duration autonomous interstellar mission. Credit: UCSB Experimental Cosmology Group.

Biology Beyond the Heliosphere

The goal of Starlight is not to seed the nearby universe — that’s an entirely different discussion! — but to conduct what the paper calls ‘biosentinel’ experiments, sending them not to another star but to empty interstellar space to help us characterize how simple biological systems deal with conditions there. This could be seen as a step toward eventual human travel to other stars, but the paper doesn’t dwell on that prospect, wisely I think, because what we learn from such experiments is vital information in its own right and may teach us a good deal about abiogenesis and the possibility of panspermia as a way of scattering life throughout the cosmos.

We may, in other words, be able to characterize biological systems to the point where we understand how to protect human life on a future interstellar mission, but the goal of Starlight is to begin the experimentation that will tell us what is possible for living systems under a wide variety of conditions in deep space. Such missions are, in effect, scouts. Putting them onto interstellar trajectories could open up pathways to larger, more biologically complex missions depending on what they find.

I think the emphasis on biology here is heartening, for space research even in the near-term has been top-heavy in terms of propulsion engineering, obviously critical but sometimes neglectful of such critical matters as how to create closed loop life support. Even a destination as nearby as Mars forces us to ask whether humans can adapt long-term to sharply different gravity gradients, among a multitude of other questions. Thus the need for an orbital station dedicated to biology and physiology that would inform mission planning and spacecraft design.

But that kind of complex starts with humans and examines their response in nearby space. What Lubin and team are talking about is studying basic biological systems and their response to conditions outside the heliosphere, where we can begin experiments within the realm of interstellar biology. Any lifeforms we send to interstellar space will be exposed to conditions of zero-g but also hypergravity, as during the launch and propulsion phases of the flight. We would likewise be working with experiments that can be adjusted in terms of exposure to vacuum, radiation and a wide range of temperatures affecting a variety of sample microorganisms.

A Fleet of Interstellar Laboratories

How to experiment, and what to experiment on? Remember that we’re dealing with payloads of wafer size given our constraints on mass. The UCSB work has focused on the design of a microfluidics chamber that can provide suitable conditions for reviving and sustaining microorganisms on the order of 200 μm in length. The paper refers to performing ‘remote lab-on-a-chip experiments,’ using new materials, discussed within, that are enhanced for biocompatibility. I don’t want to go too deeply into the weeds on this, but here’s the gist:

[New thermoplastic elastomers] can be manufactured with diverse glass transition temperatures and either monolithically prepared with an imprinter or integrated with other candidates, such as glass. Polymerase chain reaction (PCR) on a chip is another area that will evolve naturally over the next decade and is one of many biological techniques that could be incorporated in designs. It is also possible that enzymes could be stabilized in osmolytes to perform onboard biochemical reactions. For the study of life in the interstellar environment, it is necessary to include experimental controls (in LEO and on the ground) and the use of diverse genotypes and species to characterize a wide response space…

The biological species best suited for this kind of investigation will have to have key characteristics, the first of which is a low metabolic rate in a chamber where, due to mass restrictions, nutrients will be limited. Also critical is cryptobiotic capability, meaning the ability to go into hibernation with the lowest possible metabolic rate. A tolerance for radiation is obviously helpful, making certain species clear candidates for these missions, especially tardigrades (so-called ‘water bears’), which are known for being ferociously robust under a wide range of conditions and are capable of reducing biological activity to undetectable levels.

We know that tardigrades can survive high radiation as well as high pressure environments; they have been demonstrated to be capable of enduring exposure to space in low Earth orbit. A second outstanding candidate is C. elegans, a multicellular animal small enough that a teaspoon can hold approximately 100 million juvenile worms. Also helpful is the fact that C. elegans has a rapid life cycle of about 14 days, and can, like tardigrades, be placed into suspended animation for later recovery. Other prospects among organisms and cell types are examined in these pages, and the authors call for near-term experiments on mammalian cells to delve into their response to space conditions.

Clearly, a high radiation environment, as found between the stars, offers the chance to study how life began by varying the degree of radiation shielding to which prebiotic chemicals are exposed:

The high radiation environment of interstellar space provides an interesting opportunity to study the biochemical origins of life in spacecraft with low radiation shielding versus those equipped with protective measures to limit the effect of galactic cosmic radiation (GCR) on the prebiotic chemicals, possibly shedding light on the flexibility in the conditions needed for life to arise.

Thus a spacecraft of this sort can also become an experiment in abiogenesis, the formation of nucleic acids and other biological molecules having heretofore been recreated only under laboratory conditions on Earth or in low-Earth orbit. Designing the equipment to make such experiments possible is part of the ongoing developmental work for Starlight.

An interstellar experimental biology probes the factors that make some organisms better adapted for space conditions, but it also holds implications for human expansion:

Selecting for species that are physiologically better fit for interstellar travel opens up new avenues for space research. In testing the metabolism, development, and replication of species, like C. elegans, we can see how biological systems are generally affected by space conditions. C. elegans and tardigrades are inherently more suited to space flight as opposed to humans due to factors like the extensive DNA protection mechanisms some tardigrades have for radiation exposure or the dauer larva state of arrested development C. elegans enter when faced with unfavorable growth conditions. However, as there is overlap between our species, like the human orthologs for 80% of C. elegans proteins, we can begin to make some predictions on the potential for human life in interstellar space.

Any time we ponder sending life forms into space, including simple bacteria that may have contaminated lander probes on other planets, we run into serious issues of planetary protection. I want to look at the paper’s discussion of those in the next post, along with a consideration of space-based biology in the context of concepts that could offer backup systems for Earth.

The paper is Lantin et al., “Interstellar space biology via Project Starlight,” Acta Astronautica Vol. 190 (January 2022), pp. 261-272 (abstract).
