Paul Gilster's Blog, page 34

May 3, 2022

Attack of the Carbon Units

“The timescales for technological advance are but an instant compared to the timescales of the Darwinian natural selection that led to humanity’s emergence — and (more relevantly) they are less than a millionth of the vast expanses of cosmic time lying ahead.” — Martin Rees, On the Future: Prospects for Humanity (2018).

by Henry Cordova

This bulletin is meant to alert mobile units operating in or near Sector 2921 of a potential danger, namely intelligently directed, deliberately hostile, activity that has been detected there. The reports from the area have been incomplete and contradictory, fragmentary and garbled. This notice is not meant to fully describe this danger, its origins or possible countermeasures, but to alert units transiting near the area to exercise caution and to report on any unusual activity encountered. As more information is developed, a response to this threat will be devised.

It is speculated that the nature of this hazard may be due to unusual manifestations of Life. Although it must be made clear that what follows is purely speculative, it must remain a possible explanation.

Although Life is frequently encountered by mobile units engaged in discovery, exploration or survey patrols and is familiar to many of our exploitation and research outposts, many of our headquarters, rear and even forward bases are not aware of this phenomenon, so a brief description follows:

Life consists of small (on the order of a micron) structures of great complexity, apparently of natural origin. There is no evidence that they are artifacts. They seem to arise spontaneously wherever conditions are suitable. These structures, commonly called “cells”, are composed primarily of carbon chains and liquid water, plus compounds of a few other elements (primarily phosphorus and nitrogen) in solution or colloidal suspension.

There is considerable variation from planet to planet, but the basic chemical nature of Life is pretty much the same wherever it is encountered. Although extremely common and widespread throughout the Galaxy, it is primarily found in environments where exposure to hard radiation is limited and temperature and pressure allow water to exist in liquid form, mostly on the surfaces of planets and their satellites orbiting around old and stable stars.

A most remarkable property of these cells is the great complexity of the organic compounds of which they are composed. Furthermore, these compounds are organized into highly intricate systems that are able to interact with their environment. They are capable of detecting and monitoring outside conditions and adapting to them, either by sheltering themselves, moving to areas more favorable to them, or even altering them. Some of these cells are capable of locomotion, growth, damage repair and altering their morphology. Although these cells often survive independently, some are able to organize themselves into cooperative communities to better deal with and exploit their environment to produce conditions more favorable for their continued collective existence.

Cells are capable of processing surrounding chemical resources and transforming them into forms more suitable for them. In some cases, they have achieved the ability to use external sources of natural energy, such as starlight, to assist in these chemical transformations. The most remarkable of the properties of Life is its ability to reproduce, that is, make copies of itself. A cell in a suitable environment will use the available resources in that environment and make more cells, so that the environment is soon crowded with them. If the environment or resources are limited, the cells will die (fall apart and deteriorate into a more entropic state) as the source material is consumed and waste products generated by the cells interfere with their functioning. But as long as the supply of consumable material and energy survives, and if wastes can be dispersed, the cells will continue to reproduce indefinitely. This is done without any form of outside management, supervision or direction.

Perhaps the most remarkable property of Life is its ability to evolve to meet new conditions and respond to changes in its environment. Individual cells reproduce, but the offspring are not identical duplicates of the parent. There is variation, and although it is totally random, a spectrum of behaviors and morphologies is produced, and within that spectrum some are more likely to be successful in the new conditions. These new characteristics are more likely to survive in the new environment and those characteristics are more likely to be a part of subsequent generations. The result is a suite of morphologies and behaviors that can adapt to changing conditions. This process is random, not intelligently directed, but is nonetheless extremely efficient.

These properties have been encountered in the field by our mobile units, which are engaged in constant countermeasures to control and destroy life wherever they encounter it. Cells reproduce in great numbers and can become pests which must be controlled. They consume materials, mechanically interfere with articulated machinery, and their waste products can be corrosive. Delicate equipment must be kept free of these agents by constant cleaning and fumigation. Fortunately, Life is easily controlled with heat, caustic chemicals and ionizing radiation, and some metals and ceramics appear impervious to its attack. Individual cells, even in great numbers, are a nuisance, but not a real danger, provided they are constantly monitored and removed.

However, indirect evidence has suggested that Life’s evolution may have reached higher levels of complexity and capability on some worlds. Although highly unlikely, there appears to be no fundamental reason why the loosely organized cooperative communities mentioned earlier may not have evolved into more complex assemblages, where the cells are not identical or even similar, but are specialized for specific tasks, such as sensory and manipulative organs, defensive and offensive weapon systems, specialized organs for locomotion, acquiring and processing nutrients, and even specialized reproductive machinery, so that the new collective organism can create copies of itself, and perhaps even evolve to more effective and efficient configurations.

Even specialized logic and computing organs could evolve, plus the means to communicate with other organisms – communities of communities – an entire hierarchy of sentient intelligences not dissimilar to ours. And there is no reason why these entities could not construct complex devices capable of harnessing electromagnetic and nuclear forces, such as spacecraft. And there is no reason why these organic computers could not devise and construct mechanical computers to assist in their computational and logical activities.

An organic civilization such as this, supported by enslaved machine intelligences not unlike our own, would certainly perceive us as alien, a threat which must be destroyed at all costs. It is not unreasonable to assume that perhaps this is why our ships don’t seem to return from the sector denoted above.

Although there is no direct evidence to support this, it can be argued that our own civilization may itself once have been the artifact of natural “organic” entities such as these. After all, it is clear that our own physical instrumentality could not possibly have evolved from natural forces and activities.

Of course, this hypothesis is highly speculative, and probably untenable. There is plenty of evidence that our own design is strictly logical, optimized, streamlined. It shows clear evidence of intelligent design, of the presence of an extra-dimensional Creator. Sentience cannot emerge from random molecular solutions and colloidal suspensions created by random associations of complex molecules and perfected by spooky emergent complexities and local violations of entropy operating over time.

We can imagine these cellular communities as being conscious, but at best they can only simulate consciousness. It is clear that what we are seeing here is a form of technology, an artifact disguising itself as a natural process for some sinister, and almost certainly hostile, purpose. It must be conceded that the cellular life we have encountered is capable of generating structures, processes and behaviors of phenomenal complexity, but we have seen no evidence in their controlling chemistry that these individual cells are capable of organizing themselves into multicellular organisms, or higher-order collectives adopting machine behavior.

Routine fumigation and sterilization procedures should be continued until further information is developed.

April 29, 2022

Toward Kardashev Type I

It seems a good time to re-examine the venerable Kardashev scale marking how technological civilizations develop. After all, I drop Nikolai Kardashev’s name into articles on a regular basis, and we routinely discuss whether a SETI detection might be of a particular Kardashev type. The Russian astronomer first proposed the scale in 1964 at the storied Byurakan conference on radio astronomy, and it has been discussed and extended as a way of gauging the energy use of technological cultures ever since.

The Jet Propulsion Laboratory’s Jonathan Jiang, working with an international team of collaborators, spurs this article through a new paper that analyzes when our culture could reach Kardashev Type I, so let’s remind ourselves of just what Type I means. Kardashev wanted to consider how a civilization consumes energy, and defined Type I as being at the planetary level, with a power consumption of 10^16 watts.

This approximates a civilization using all the energy available from its home planet, but that means both in terms of indigenous planetary resources as well as incoming stellar energy. So we are talking about everything from what we can pull from the ground – fossil fuels – or extract from planetary resources like wind and tide, or harvest through solar, nuclear and other technologies. If we maximize all this, it becomes fair to ask where we are right now, and when we can expect to reach the Type I goal.

Image: Russian astronomer Nikolai Kardashev (1932-2019). Credit: Physics-Uspekhi.

If the Kardashev scale seems arbitrary, it was in its time a step forward in the discussion of SETI, which in 1964 was an emerging discipline much discussed at Byurakan, for the different Kardashev types would clearly present different signatures to a distant astronomer. Type I might well be all but undetectable depending on its uses of harvested energy; in any case, it would be harder to spot than Types II and III, whose vast sources of power could result in stronger signals or observable artifacts.

Carl Sagan was concerned enough about Kardashev’s original definitions to refine them into a calculation, his thinking being that the gaps between the Kardashev types needed to be filled in with finer gradations. This would allow us to quantify where civilizations are on the scale. Sagan’s calculation would let us discover the present value for our own civilization using available data (as, for example, from the International Energy Agency) regarding the planet’s total energy capabilities. According to Jiang and team, in 2018 this amounted to 1.90 × 10^13 W, all of which, via Sagan’s methodology, takes us to a present value of Kardashev 0.728.
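As a quick check on that figure, here is a minimal sketch of Sagan’s interpolation, assuming the commonly quoted form K = (log10 P − 6) / 10 with P in watts. The function name is my own and is not taken from the paper.

```python
import math

def kardashev_rating(power_watts: float) -> float:
    """Sagan's interpolated Kardashev rating for a power level in watts.

    Assumes the commonly quoted form K = (log10(P) - 6) / 10.
    """
    if power_watts <= 0:
        raise ValueError("power must be positive")
    return (math.log10(power_watts) - 6.0) / 10.0

# 2018 world energy supply as quoted from Jiang et al.
print(round(kardashev_rating(1.90e13), 3))  # 0.728

# Kardashev's Type I threshold of 10^16 W
print(round(kardashev_rating(1e16), 3))     # 1.0
```

Because the scale is logarithmic, each step of 0.1 in K corresponds to a tenfold increase in power, which is why 0.728 is further from Type I than it may look.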

But let’s circle back to the other two Kardashev types. Type II can be considered a stellar civilization, which in Kardashev’s thinking means a ten orders of magnitude increase in power consumption over Type I, taking us to 10^26 W. Here we are using all the energy released by the parent star, and now the idea of Dysonian SETI swings into view, the notion that this kind of consumption could be observable through engineering projects on a colossal scale, such as a Dyson swarm enclosing the parent star to maximize energy collection or a Matrioshka Brain for computation. Jiang reminds us that the Sun’s total luminosity is on the order of 4 × 10^26 W.

Again, these are arbitrary distinctions; note that at the level of the Sun’s total energy output, we would need only about a fourth of that output to reach the level the Kardashev scale defines as Type II. Quantitative limitations, as noted by Sagan, beset the scale, but there is nothing wrong with the notion of setting up a framework for analysis as a first cut into what might become SETI observables. Kardashev’s Type III, using these same methods, offers up a galactic energy consumption of 10^36 W, so now an entire galaxy is being manipulated by a civilization.

Consider that the entire Milky Way yields something like 4 × 10^37 W, which actually means that a Type III culture on the Kardashev scale in our particular galaxy would have command of at least 2.5 percent of the total possible energy sources therein. What such a culture might look like as an observable is anyone’s guess (searches for galaxies with unusual infrared signatures are one way to proceed, as Jason Wright’s team at Penn State has demonstrated), but on the galactic scale, we are at an energy level that may, as the saying goes, be all but indistinguishable from magic.
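The two fractions mentioned here are simple ratios, sketched below using only the round numbers quoted in the text. The variable names are my own.

```python
# Thresholds and luminosities as quoted in the text, in watts.
TYPE_II_W = 1e26
SUN_LUMINOSITY_W = 4e26
TYPE_III_W = 1e36
MILKY_WAY_LUMINOSITY_W = 4e37

# Share of the available output each Kardashev threshold represents.
sun_fraction = TYPE_II_W / SUN_LUMINOSITY_W
galaxy_fraction = TYPE_III_W / MILKY_WAY_LUMINOSITY_W

print(f"Type II needs {sun_fraction:.0%} of the Sun's output")
print(f"Type III needs {galaxy_fraction:.1%} of the Milky Way's output")
```

Both thresholds sit well below the total output of the star or galaxy in question, which is part of why the type boundaries read as round-number conventions rather than physical limits.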

Let’s back down to our planetary level, and in fact back to our modest Kardashev value of 0.728. Just when can we anticipate reaching Type I? The new paper eschews simple models of exponential growth and consumption over time, noting that such estimates have tended to be:

…the result of a simple exponential growth model for calculating total energy production and consumption as a function of time, relying on a continuous feedback loop and absent detailed consideration of practical limitations. With this reservation in mind, its prediction for when humanity will reach Type I civilization status must be regarded as both overly simplified and somewhat optimistic.

Instead, the authors consider planetary resources, policies and suggestions on climate change, and forecasts for energy consumption to develop an estimated timeframe. The idea is to achieve a more practical outlook on the use of energy and the limitations on its growth. They consider the wide range of fossil fuels, from coal, peat, oil shale, and natural gas to crude oil, natural gas liquids and feedstocks, as well as the range of nuclear and renewable energy sources. Their analysis is keyed to how usage may change in the near future under the influence of, and taking in the projections of, organizations like the United Nations Framework Convention on Climate Change and the International Energy Agency. They see moving along a trajectory to Type I as inevitable and critical for resolving existential crises that threaten our civilization.

So, for example, on the matter of fossil fuels, the authors consider the downside of environmental concerns over the greenhouse effect and changes to policy affecting carbon emissions that will impact energy production. On nuclear and renewable energy, their analysis takes in factors constraining the growth of these energy sources and data on the current development of each. For both fossil fuels and nuclear/renewables, they produce what they describe as an ‘influenced model’ that predicts development operating under historically observed constraints and the likely consequences.

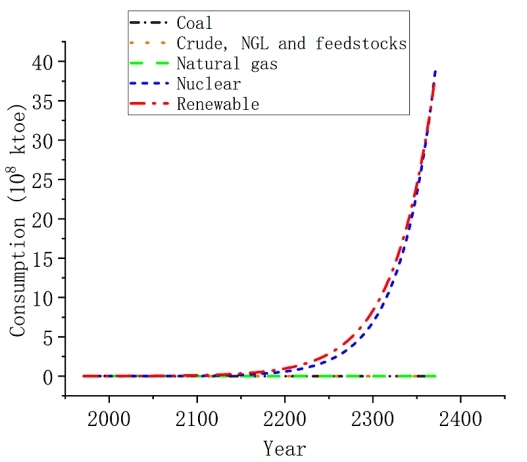

Applying the formula for calculating the Kardashev scale developed by Carl Sagan, they project that our civilization can attain Kardashev Type I with coal, natural gas, crude oil, nuclear and renewable energy sources as the driver. Thus their Figure 6:

Image: Figure 6 from the paper. Caption: The energy supply in the influenced model. Note: Coal is minimal for 1971-2050 and largely coincides with the Natural gas line. Credit: Jiang et al.

Again referring to the Sagan equation, the paper continues:

A final revisit of Eq 1.1, which is informed by the IEA and UNFCCC’s suggestions, finds an imperative for a major transition in energy sourcing worldwide, especially during the 2030s. Although the resultant pace up the Kardashev scale is very low and can even be halted or reversed in the short term, achieving this energy transformation is the optimal path to assuring we will avoid the environmental pitfalls caused by fossil fuels. In short, we will have met the requirements for planetary stewardship while continuing the overall advancement of our technological civilization.

The final estimate is that humanity reaches Kardashev Type I by 2371, a date the authors consider on the optimistic side but achievable. All this assumes that a Type I civilization can be sustained as well, rather than backsliding into an earlier state, something that human history suggests is by no means assured. Successful management of nuclear power is just one flash point, as are the storage and disposal of nuclear waste and global issues like deforestation and declining soil pH. That list could, of course, be extended into global pandemics, runaway AI and other factors.

…for the entire world population to reach the status of a Kardashev Type I civilization we must develop and enable access to more advanced technology to all responsible nations while making renewable energy accessible to all parts of the world, facilitated by governments and private businesses. Only through the full realization of our mutual needs and with broad cooperation will humanity acquire the key to not only avoiding the Great Filter but continuing our ascent to Kardashev Type I, and beyond.

The Great Filter, drawing on Robin Hanson’s work, could be behind us or ahead of us. Assuming it lies ahead, getting through it intact would be the goal of any growing civilization as it finds ways to juggle its technologies and resources to survive. It’s hard to argue with the idea that how we proceed on the Kardashev arc is critical as we summon up the means to expand off-world and dream of pushing into the Orion Arm.

The paper is Jiang et al., “Avoiding the Great Filter: Predicting the Timeline for Humanity to Reach Kardashev Type I Civilization” (preprint).

April 26, 2022

Interstellar Implications of the Electric Sail

Not long ago we looked at Greg Matloff’s paper on von Neumann probes, which made the case that even if self-reproducing probes were sent out only once every half million years (when a close stellar encounter occurs), there would be close to 70 billion systems occupied by such probes within a scant 18 million years. Matloff now considers interstellar migration in a different direction in a new paper addressing how M-dwarf civilizations might expand, and why electric sails could be their method.

It’s an intriguing notion because M-dwarfs are by far the most numerous stars in the galaxy, and if we learn that they can support life, they might house vast numbers of civilizations with the capability of sending out interstellar craft. They’re also crippled in terms of electromagnetic flux when it comes to conventional solar sails, which is why the electric sail comes into play as a possible alternative, here analyzed in terms of feasibility and performance and its prospects for enabling interstellar migration.

The term ‘sail’ has to be qualified. By convention, I’ve used ‘solar sail,’ for example, to describe sails that use the momentum imparted by stellar photons – Matloff often calls these ‘photon sails,’ which is also descriptive, though to my mind, a ‘photon sail’ might describe both a beam-driven as well as a stellar photon-driven sail. Thus I prefer ‘lightsail’ for the beamed sail concept. In any case, we have to distinguish all these concepts from the electric sail, which operates on fundamentally different principles.

In our Solar System, a sail made of absorptive graphene deployed from 0.1 AU could achieve a Solar System escape velocity of 1000 kilometers per second, and perhaps better if the mission were entirely robotic and not dealing with fragile human crews. The figure seems high, but Matloff gave the calculations in a 2012 JBIS paper. The solar photon sail wins on acceleration, and we can use the sail material to provide extra cosmic ray shielding en route. These are powerful advantages near our own Sun.

But the electric sail has advantages of its own. Rather than drawing on the momentum imparted by solar photons (or beamed energy), an electric sail rides the stellar wind emanating from a star. This stream of charged particles has been measured in our system (by the WIND spacecraft in 1995) as moving in the range of 300 to 800 kilometers per second at 1 AU, a powerful though extremely turbulent and variable force that can be applied to a spacecraft. Because an interstellar craft entering a destination system would also encounter a stellar wind, an electric sail can be deployed for deceleration, something both forms of sail have in common.

How to harness a stellar wind? Matloff first references a 2008 paper from Pekka Janhunen (Finnish Meteorological Institute) and team that described long tethers (perhaps reaching 20 kilometers in length) extended from the spacecraft, each maintaining a steady electric potential with the help of a solar-powered electron gun aboard the vehicle. As many as a hundred tethers — these are thinner than a human hair — could be deployed to achieve maximum effect. While the solar wind is far weaker than solar photon pressure, an electric sail of this configuration with tethers in place can create an effective solar wind sail area of several square kilometers.

We need to maintain the electric potential of the tethers because it would otherwise be compromised by solar wind electrons. The protons in the solar wind – again, note that we’re talking about protons, not photons – reflect off the tethers to drive us forward.

Image: Image of an electric sail, which consists of a number (50-100) of long (e.g., 20 km), thin (e.g., 25 microns) conducting tethers (wires). The spacecraft contains a solar-powered electron gun (typical power a few hundred watts) which is used to keep the spacecraft and the wires in a high (typically 20 kV) positive potential. The electric field of the wires extends a few tens of meters into the surrounding solar wind plasma. Therefore the solar wind ions “see” the wires as rather thick, about 100 m wide obstacles. A technical concept exists for deploying (opening) the wires in a relatively simple way and guiding or “flying” the resulting spacecraft electrically. Credit: Artwork by Alexandre Szames. Caption via Pekka Janhunen/Kumpula Space Center.

For interstellar purposes, we look at much larger spacecraft, bearing in mind that once in deep space, we have to turn off the electron gun, because the interstellar medium can itself decelerate the sail. Operating from a Sun-like star, the electric sail generation ship Matloff considers is assumed to have a mass of 10^7 kg, with the solar wind within the heliosphere taken as a constant 600 kilometers per second. The variability of the solar wind is acknowledged, but the approximations are used to simplify the kinematics. The paper then goes on to compare performance near the Sun with that near an M-dwarf star.

We wind up with some interesting conclusions. First of all, an interstellar mission from a G-class star like our own would be better off using a different method. We can probably reach an interstellar velocity of as high as 70 percent of this assumed constant solar wind velocity (Matloff’s calculations, which I wish he had clarified), but graphene solar sails can achieve better numbers. And if we add in the variability of the solar wind, we have to be ready to constantly alter the enormous radius of the electric field to maintain a constant acceleration. If we’re going to send generation ships from the Sun, we’re most likely to use solar sails or beamed lightsails.

But things get different when we swing the discussion around to red dwarf stars. In The Electric Sail and Its Uses, I described a paper from Avi Loeb and Manasvi Lingam in 2019 that studied electric sails using the stellar winds of M-dwarfs, with repeated encounters with other such stars to achieve progressively higher speeds. Matloff agrees that electric sails best photon sails in the red dwarf environment, but adds useful context.

Let’s think about generation ships departing from an M-dwarf. Whereas the electromagnetic flux from these stars is far below that of the Sun, the stellar wind has interesting properties. We learn that it most likely has a higher mass density (in terms of rate per unit area) than the Sun, and the average stellar wind velocity is 500 kilometers per second. Presumably a variable electric field aboard the craft could adjust to maintain acceleration as the vehicle moves outward from the star, although the paper doesn’t get into this. The author’s calculations show an acceleration, for a low-mass spacecraft about 1 AU from the Sun, of 7.6 × 10^-3 m/s^2, or about 7.6 × 10^-4 g. Matloff considers this a reasonable acceleration for a worldship.
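To put that acceleration in perspective, here is a small sketch that converts it to units of standard gravity and to speed gained per day. It assumes the acceleration is held constant, which the variable stellar wind would not allow in practice, and the variable names are mine. Using standard gravity of 9.80665 m/s^2 gives a slightly higher value in g than the rounded figure above, which presumably takes g as about 10 m/s^2.

```python
G0 = 9.80665            # standard gravity in m/s^2
SECONDS_PER_DAY = 86_400

accel = 7.6e-3          # m/s^2, the figure quoted from Matloff's paper

# Express the acceleration as a fraction of standard gravity.
accel_in_g = accel / G0

# Speed gained in one day if the acceleration were held constant (an assumption).
speed_gain_per_day = accel * SECONDS_PER_DAY

print(f"{accel_in_g:.2e} g")
print(f"{speed_gain_per_day:.0f} m/s per day")
```

At a bit over 650 meters per second gained each day, the thrust is gentle but accumulates quickly on the timescales a worldship would operate over.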

So while low electromagnetic pressure makes photon sails far less effective at M-dwarfs as opposed to larger stars, electric sails remain in the mix for civilizations willing to contemplate generation ships that take thousands of years to reach their goal. In an earlier paper, the author considered close stellar encounters, pointing out that 70,000 years ago, the binary known as Scholz’s Star (it has a brown dwarf companion) passed within 52,000 AU of the Sun. We can expect another close pass (Gliese 710) in about 1.35 million years, this one closing to a perihelion of 13,365 AU. From the paper:

Bailer‐Jones et al. have used a sample of 7.2 million stars in the second Gaia data release to further investigate the frequency of close stellar encounters. The results of this analysis indicate that seven stars in this sample are expected to approach within 0.5 parsecs of the Sun during the next 15 million years. Accounting for sample incompleteness, these authors estimate that about 20 stars per million years approach our solar system to within 1 parsec. It is, therefore, inferred that about 2.5 encounters within 0.5 parsecs will occur every million years. On average, 400,000 years will elapse between close stellar encounters, assuming the same star density as in the solar neighborhood.

If interstellar missions were only attempted during such close encounters, we still have a mechanism for a civilization to use worldships to expand into numerous nearby stellar systems. It would take no more than a few star-faring civilizations around the vast number of M-dwarfs to occupy a substantial fraction of the Milky Way, even without the benefits of von Neumann style self-reproduction. With the number of planetary systems occupied doubling every 500,000 years, and assuming a civilization only sends out a worldship during close stellar encounters, we get impressive results. In the clip below, n = the multiple of 500,000 years. The number of systems occupied is P:

At the start, n = 0 and P = 1. When 500,000 years have elapsed, the hypothetical spacefaring civilization makes the first transfer, n = 1 and P = 2. After one million years (n = 2), both the original and occupied stellar systems experience a close stellar encounter, migration occurs and P = 4. After a total elapsed time of 1.5 million years, n = 3 and they occupy eight planetary systems. When n = 5, 10 and 20 the hypothetical civilization has respectively occupied 32, 1024 and 1,048,576 planetary systems.
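The doubling described in that passage is just P = 2^n, with each step lasting 500,000 years. The sketch below reproduces the quoted values; the function and constant names are my own, and the n = 36 row is my addition to show where the growth stands after 18 million years.

```python
DOUBLING_PERIOD_YEARS = 500_000  # one migration per close stellar encounter, as in the text

def systems_occupied(n: int) -> int:
    """Number of occupied systems P after n doubling periods, starting from one."""
    if n < 0:
        raise ValueError("n must be non-negative")
    return 2 ** n

for n in (0, 1, 2, 3, 5, 10, 20, 36):
    years = n * DOUBLING_PERIOD_YEARS
    print(f"n={n:2d}  t={years:>10,} yr  P={systems_occupied(n):,}")
```

At n = 36, or 18 million years, P is about 68.7 billion, which appears to match the figure of close to 70 billion systems mentioned at the top of this post.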

With M-dwarfs being such a common category of star, learning more about their systems’ potential habitability will have implications for the possible spread of technological societies, even assuming propulsion technologies conceivable to us today. What faster modes may eventually become available we cannot know.

The paper is Matloff, “The Solar‐Electric Sail: Application to Interstellar Migration and Consequences for SETI,” Universe 8(5) (19 April 2022), 252 (full text). The Lingam and Loeb paper is “Electric sails are potentially more effective than light sails near most stars,” Acta Astronautica Volume 168 (March 2020), 146-154 (abstract).

April 22, 2022

Europa’s Double Ridges: Implications for a Habitable Ocean

I’m always interested in studies that cut across conventional boundaries, capturing new insights by applying data from what had appeared, at first glance, to be unrelated disciplines. Thus the news that the ice shell of Europa may turn out to be far more dynamic than we have previously considered is interesting in itself, given the implications for life in the Jovian moon’s ocean, but also compelling because it draws on a study that focused on Greenland and originally sought to measure climate change.

The background here is that the Galileo mission that gave us our best views of Europa’s surface so far showed us that there are ‘double ridges’ on the moon. In fact, these ridge pairs flanked by a trough running between them are among the most common landforms on a surface packed with troughs, bands and chaos terrain. The researchers, led by Stanford PhD student Riley Culberg, found them oddly familiar. Culberg, whose field is electrical engineering (that multidisciplinary effect again), found an analog in a similar double ridge in Greenland, which had turned up in ice-penetrating radar data.

Image: This is Figure 1 from the paper. Caption: (a) Europan double ridge in a panchromatic image from the Galileo mission (image PIA00589). The ground sample distance is 20 m/pixel. (b) Greenland double ridge in an orthorectified panchromatic image from the WorldView-3 satellite taken in July 2018 (© 2018, Maxar). The ground sample distance is ~0.31 m/pixel. Signatures of flexure are visible along the ridge flanks, consistent with previous models for double ridges underlain by shallow sills. Credit: Culberg et al.

The feature in Greenland’s northwestern ice sheet has an ‘M’-shaped crest, possibly a version in miniature of the double ridges we see on Europa. The climate change work used airborne instrumentation producing topographical and ice-penetrating radar data via NASA’s Operation IceBridge, which studies the behavior of polar ice sheets over time and their contribution to sea level rise. Where this gets particularly interesting is that flowing ice sheets produce such things as lakes beneath glaciers, drainage conduits and surface melt ponds. Figuring out how and when these occur becomes a necessary part of working with the dynamics of ice sheets.

The mechanism in play, analyzed in the paper, involves ice fracturing around a pocket of pressurized liquid water that was refreezing inside the ice sheet, creating the distinctive twin peak shape. Culberg notes that the link between Greenland and Europa came as a surprise:

“We were working on something totally different related to climate change and its impact on the surface of Greenland when we saw these tiny double ridges – and we were able to see the ridges go from ‘not formed’ to ‘formed’… In Greenland, this double ridge formed in a place where water from surface lakes and streams frequently drains into the near-surface and refreezes. One way that similar shallow water pockets could form on Europa might be through water from the subsurface ocean being forced up into the ice shell through fractures – and that would suggest there could be a reasonable amount of exchange happening inside of the ice shell.”

Image: This artist’s conception shows how double ridges on the surface of Jupiter’s moon Europa may form over shallow, refreezing water pockets within the ice shell. This mechanism is based on the study of an analogous double ridge feature found on Earth’s Greenland Ice Sheet. Credit: Justice Blaine Wainwright.

The double ridges on Europa can be dramatic, reaching nearly 300 meters at their crests, with valleys a kilometer wide between them. The idea of a dynamic ice shell is supported by evidence of water plumes erupting to the surface. Thinking about the shell as a place where geological and hydrological processes are regular events, we can see that exchanges between the subsurface ocean and the possible nutrients accumulating on the surface may occur. The mechanism, say the researchers, is complex, but the Greenland example provides the model, an analog that illuminates what may be happening far from home. It also provides a radar signature that future spacecraft should be able to search for.

From the paper:

Altogether, our observations provide a mechanism for subsurface water control of double ridge formation that is broadly consistent with the current understanding of Europa’s ice-shell dynamics and double ridge morphology. If this mechanism controls double ridge formation at Europa, the ubiquity of double ridges on the surface implies that liquid water is and has been a pervasive feature within the brittle lid of the ice shell, suggesting that shallow water processes may be even more dominant in shaping Europa’s dynamics, surface morphology, and habitability than previously thought.

So we have a terrestrial analog of a pervasive Europan feature, providing us with a hypothesis we can investigate with instruments aboard both Europa Clipper and the ESA’s JUICE mission (Jupiter Icy Moons Explorer), launching in 2024 and 2023 respectively. Confirming this mechanism on Europa would go a long way toward moving the Jovian moon still further up our list of potential life-bearing worlds.

The paper is Culberg et al., “Double ridge formation over shallow water sills on Jupiter’s moon Europa,” Nature Communications 13, 2007 (2022). Full text.

April 19, 2022

Good News for a Gravitational Focus Mission

We’ve talked about the ongoing work at the Jet Propulsion Laboratory on the Sun’s gravitational focus at some length, most recently in JPL Work on a Gravitational Lensing Mission, where I looked at Slava Turyshev and team’s Phase II report to the NASA Innovative Advanced Concepts office. The team is now deep into the work on their Phase III NIAC study, with a new paper available in preprint form. Dr. Turyshev tells me it can be considered a summary as well as an extension of previous results, and today I want to look at the significance of one aspect of this extension.

There are numerous reasons for getting a spacecraft to the distance needed to exploit the Sun’s gravitational lens – where the mass of our star bends the light of objects behind it to produce a lens with extraordinary properties. The paper, titled “Resolved Imaging of Exoplanets with the Solar Gravitational Lens,” notes that at optical or near-optical wavelengths, the amplification of light is on the order of ~2 × 10¹¹, with equally impressive angular resolution. If we can reach this region beginning at 550 AU from the Sun, we can perform direct imaging of exoplanets.
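The 550 AU figure can be roughly recovered from first principles. What follows is a minimal sketch, assuming the standard weak-field light deflection for a ray grazing the solar limb (this formula and the constants are my additions, not taken from the paper): the focal distance is z = R² / (2 r_g), where r_g = 2GM/c² is the Sun’s Schwarzschild radius.

```python
# Minimum focal distance of the solar gravitational lens.
# Assumption: a ray grazing the Sun at impact parameter b is bent by
# 2 * r_g / b, so it crosses the optical axis at z = b^2 / (2 * r_g).
GM_SUN = 1.32712440018e20    # m^3 s^-2, heliocentric gravitational constant
C = 299_792_458.0            # m/s, speed of light
R_SUN = 6.957e8              # m, nominal solar radius
AU = 1.495978707e11          # m, astronomical unit


def sgl_focal_distance_au(impact_parameter_m: float = R_SUN) -> float:
    """Distance (in AU) at which rays with this impact parameter converge."""
    r_g = 2.0 * GM_SUN / C**2          # Schwarzschild radius, about 2.95 km
    return impact_parameter_m**2 / (2.0 * r_g) / AU


focal_au = sgl_focal_distance_au()
print(f"Focal region begins near {focal_au:.0f} AU")
```

Rays passing farther from the solar limb converge farther out, which is why the focus is a line rather than a point.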

We’re talking multi-pixel images, and not just of huge gas giants. Images of planets the size of Earth around nearby stars, in the habitable zone and potentially life-bearing.

Other methods of observation give way to the power of the solar gravitational lens (SGL) when we consider that, according to Turyshev and co-author Viktor Toth’s calculations, to get a multi-pixel image of an Earth-class planet at 30 parsecs with a diffraction-limited telescope, we would need an aperture of 90 kilometers, hardly a practical proposition. Optical interferometers, too, are problematic, for even they require long baselines and apertures in the tens of meters, each equipped with its own coronagraph (or conceivably a starshade) to block stellar light. As the paper notes:

Even with these parameters, interferometers would require integration times of hundreds of thousands to millions of years to reach a reasonable signal-to-noise ratio (SNR) of ≳ 7 to overcome the noise from exo-zodiacal light. As a result, direct resolved imaging of terrestrial exoplanets relying on conventional astronomical techniques and instruments is not feasible.

Integration time is essentially the time it takes to gather all the data that will result in the final image. Obviously, we’re not going to send a mission to the gravitational lensing region if it takes a million years to gather up the needed data.
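The scale of that 90-kilometer aperture can be checked on the back of an envelope. This is only a sketch under my own assumptions, not the authors’ calculation: I take a wavelength of 1 micron and the Rayleigh criterion (θ ≈ 1.22 λ/D), and ask what aperture would just resolve the disk of an Earth-sized planet at 30 parsecs.

```python
# Rough diffraction-limit check (assumed wavelength and criterion).
PARSEC_M = 3.0857e16          # m per parsec
EARTH_DIAMETER_M = 1.2742e7   # m


def rayleigh_aperture_m(wavelength_m: float, target_size_m: float,
                        distance_m: float) -> float:
    """Aperture whose Rayleigh limit equals the target's angular size."""
    angular_size_rad = target_size_m / distance_m   # small-angle approximation
    return 1.22 * wavelength_m / angular_size_rad


aperture_km = rayleigh_aperture_m(1.0e-6, EARTH_DIAMETER_M, 30 * PARSEC_M) / 1e3
print(f"Aperture needed: about {aperture_km:.0f} km")
```

Under these assumptions the answer lands just under 90 km, and that is for a single resolution element across the planet; more pixels would call for a still larger aperture.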

Image: Various approaches will emerge about the kind of spacecraft that might fly a mission to the gravitational focus of the Sun. In this image (not taken from the Turyshev et al. paper), swarms of small solar sail-powered spacecraft are depicted that could fly to a spot where our Sun’s gravity distorts and magnifies the light from a nearby star system, allowing us to capture a sharp image of an Earth-like exoplanet. Credit: NASA/The Aerospace Corporation.

But once we reach the needed distance, how do we collect an image? Turyshev’s team has been studying the imaging capabilities of the gravitational lens and analyzing its optical properties, allowing the scientists to model the deconvolution of an image acquired by a spacecraft at these distances from the Sun. Deconvolution means reducing noise and hence sharpening the image with enhanced contrast, as we do when removing atmospheric effects from images taken from the ground.

All of this becomes problematic when we’re using the Sun’s gravitational lens, for we are observing exoplanet light in the form of an ‘Einstein ring’ around the Sun, where lensed light from the background object appears in the form of a circle. This runs into complications from the Sun’s corona, which produces significant noise in the signal. The paper examines the team’s work on solar coronagraphs to block coronal light while letting through light from the Einstein ring. An annular coronagraph aboard the spacecraft seems a workable solution. For more on this, see the paper.

An earlier study analyzed the solar corona’s role in reducing the signal-to-noise ratio, which extended the time needed to integrate the full image. In that work, the time needed to recover a complex multi-pixel image from a nearby exoplanet was well beyond the scope of a practical mission. But the new paper presents an updated model of the solar corona whose results have been validated in numerical simulations under various methods of deconvolution. What leaps out here is the issue of pixel spacing in the image plane. The results demonstrate that a mission for high resolution exoplanet imaging is, in the authors’ words, ‘manifestly feasible.’

Pixel spacing is an issue because of the size of the image we are trying to recover. The image of an exoplanet the size of the Earth at 1.3 parsecs, which is essentially the distance of Proxima Centauri from the Earth, when projected onto an image plane at 1200 AU from the Sun, is almost 60 kilometers wide. We are trying to create a megapixel image, and must take account of the fact that individual image pixels are not adjacent. In this case, they are 60 meters apart. It turns out that this actually reduces the integration time of the data to produce the image we are looking for.
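These numbers follow from simple projection geometry. As a minimal sketch, assuming the image scale is just the ratio of the image-plane distance to the planet’s distance (the function name and constants are mine), the figures above work out as follows:

```python
# Size of the projected exoplanet image in the SGL image plane.
# Assumption: image size = planet size * (image-plane distance / planet distance).
AU_PER_PARSEC = 206_264.806
EARTH_DIAMETER_KM = 12_742.0


def projected_image_km(planet_diameter_km: float, planet_distance_pc: float,
                       image_plane_au: float) -> float:
    """Width of the planet's image at the given heliocentric distance."""
    return planet_diameter_km * image_plane_au / (planet_distance_pc * AU_PER_PARSEC)


image_km = projected_image_km(EARTH_DIAMETER_KM, 1.3, 1200.0)
spacing_m = image_km * 1000.0 / 1024      # 1024 pixels across the image
print(f"Image width: {image_km:.1f} km, pixel spacing: {spacing_m:.1f} m")
```

The result is an image about 57 km across with pixels roughly 56 meters apart, in line with the rounded values of 60 kilometers and 60 meters.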

From the paper [italics mine]:

We estimated the impact of mission parameters on the resulting integration time. We found that, as expected, the integration time is proportional to the square of the total number of pixels that are being imaged. We also found, however, that the integration time is reduced when pixels are not adjacent, at a rate proportional to the inverse square of the pixel spacing.

Consequently, using a fictitious Earth-like planet at the Proxima Centauri system at z0 = 1.3 pc from the Earth, we found that a total cumulative integration time of less than 2 months is sufficient to obtain a high quality, megapixel scale deconvolved image of that planet. Furthermore, even for a planet at 30 pc from the Earth, good quality deconvolution at intermediate resolutions is possible using integration times that are comfortably consistent with a realistic space mission.
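The two scaling statements in the quoted passage can be written down directly. The sketch below gives only relative integration times against a reference configuration, and it treats pixel count and pixel spacing as independent knobs, which is my simplification rather than anything the paper asserts:

```python
# Relative integration time, T proportional to N^2 / d^2, where N is the
# total pixel count and d is the pixel spacing. No absolute times implied.
def relative_integration_time(n_pixels: float, spacing_m: float,
                              ref_pixels: float, ref_spacing_m: float) -> float:
    """Integration time as a multiple of the reference configuration's."""
    return (n_pixels / ref_pixels) ** 2 * (ref_spacing_m / spacing_m) ** 2


MEGAPIXEL = 1024 ** 2
# Doubling the spacing at a fixed pixel count cuts the time to a quarter.
wider = relative_integration_time(MEGAPIXEL, 120.0, MEGAPIXEL, 60.0)
# Quadrupling the pixel count at fixed spacing multiplies the time by 16.
denser = relative_integration_time(4 * MEGAPIXEL, 60.0, MEGAPIXEL, 60.0)
print(wider, denser)
```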

Image: This is Figure 5 from the paper. In the caption, PSF refers to the Point Spread Function, which is essentially the response of the light-gathering instrument to the object studied. It measures how much the light has been distorted by the instrument. Here the SGL itself is considered as the source of the distortion. The full caption: Simulated monochromatic imaging of an exo-Earth at z0 = 1.3 pc from z = 1200 AU at N = 1024 × 1024 pixel resolution using the SGL. Left: the original image. Middle: the image convolved with the SGL PSF, with noise added at SNRC = 187, consistent with a total integration time of ∼47 days. Right: the result of deconvolution, yielding an image with SNRR = 11.4. Credit: Turyshev et al.

The solar gravity lens presents itself not as a single focal point but a cylinder, meaning that we can stay within the focus as we move further from the Sun. The authors find that as the spacecraft moves ever further out, the signal-to-noise ratio improves. This heightening in resolution persists even with the shorter integration times, allowing us to study effects like planetary rotation. This is, of course, ongoing work, but these results cannot but be seen as encouraging for the concept of a mission to the gravity focus, giving us priceless information for future interstellar probes.

The paper is Turyshev & Toth, “Resolved imaging of exoplanets with the solar gravitational lens,” available for now only as a preprint. The Phase II NIAC report is Turyshev et al., “Direct Multipixel Imaging and Spectroscopy of an Exoplanet with a Solar Gravity Lens Mission,” Final Report NASA Innovative Advanced Concepts Phase II (2020). Full text.

April 15, 2022

NASA Interstellar Probe: Overview and Prospects

A recent paper in Acta Astronautica reminds me that the Mission Concept Report on the Interstellar Probe mission has been available on the team’s website since December. Titled Interstellar Probe: Humanity’s Journey to Interstellar Space, this is the result of lengthy research out of Johns Hopkins Applied Physics Laboratory under the aegis of Ralph McNutt, who has served as principal investigator. I bring the mission concept up now because the new paper draws directly on the report and is essentially an overview to the community about the findings of this team.

We’ve looked extensively at Interstellar Probe in these pages (see, for example, Interstellar Probe: Pushing Beyond Voyager and Assessing the Oberth Maneuver for Interstellar Probe, both from 2021). The work on this mission anticipates the Solar and Space Physics 2023–2032 Decadal Survey, and presents an analysis of what would be the first mission designed from the top down as an interstellar craft. In that sense, it could be seen as a successor to the Voyagers, but one expressly made to probe the local interstellar medium, rather than reporting back on instruments designed originally for planetary science.

The overview paper is McNutt et al., “Interstellar probe – Destination: Universe!,” a title that recalls (at least to me) A. E. van Vogt’s wonderful collection of short stories by the same name (1952), whose seminal story “Far Centaurus” so keenly captures the ‘wait’ dilemma; i.e., when do you launch when new technologies may pass the craft you’re sending now along the way? In the case of this mission, with a putative launch date at the end of the decade, the question forces us into a useful heuristic: Either we keep building and launching or we sink into stasis, which drives little technological innovation. But what is the pace of such progress?

I say build and fly if at all feasible. Whether this mission, whose charter is basically “[T]o travel as far and as fast as possible with available technology…” gets the green light will be determined by factors such as the response it generates within the heliophysics community, how it fares in the upcoming decadal report, and whether this four-year engineering and science trade study can be implemented in a tight time frame. All that goes to feasibility. It’s hard to argue against it in terms of heliophysics, for what better way to study the Sun than through its interactions with the interstellar medium? And going outside the heliosphere to do so makes it an interstellar mission as well, with all that implies for science return.

Image: This is Figure 2-1 from the Mission Concept Report. Caption: During the evolution of our solar system, its protective heliosphere has plowed through dramatically different interstellar environments that have shaped our home through incoming interstellar gas, dust, plasma, and galactic cosmic rays. Interstellar Probe on a fast trajectory to the very local interstellar medium (VLISM) would represent a snapshot to understand the current state of our habitable astrosphere in the VLISM, to ultimately be able to understand where our home came from and where it is going. Credit: Johns Hopkins Applied Physics Laboratory.

Crossing through the heliosphere to the “Very Local” interstellar medium (VLISM) is no easy goal, especially when the engineering requirements to meet the decadal survey specifications take us to a launch no later than January of 2030. Other basic requirements include the ability to take and return scientific data from 1000 AU (with all that implies about long-term function in instrumentation), with power levels no more than 600 W at the beginning of the mission and no more than half of that at its end, and a mission working lifetime of 50 years. Bear in mind that our Voyagers, after all these years, are currently at 155 and 129 AU respectively. A successor to Voyager will have to move much faster.
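To put “much faster” in perspective, here is a rough calculation using the Voyager distances quoted above. The launch dates are the well-known ones from 1977; the reference date is this post’s date, and the speeds are averages since launch, not the spacecraft’s current velocities.

```python
from datetime import date

# Average outward pace of the Voyagers since launch (illustrative only).
REFERENCE_DATE = date(2022, 4, 15)


def average_au_per_year(distance_au: float, launch: date,
                        now: date = REFERENCE_DATE) -> float:
    """Heliocentric distance divided by elapsed time since launch."""
    years = (now - launch).days / 365.25
    return distance_au / years


v1_pace = average_au_per_year(155.0, date(1977, 9, 5))    # Voyager 1
v2_pace = average_au_per_year(129.0, date(1977, 8, 20))   # Voyager 2
years_to_1000_au = 1000.0 / v1_pace
print(f"Voyager 1: {v1_pace:.2f} AU/yr, Voyager 2: {v2_pace:.2f} AU/yr")
print(f"At Voyager 1's average pace, 1000 AU takes about {years_to_1000_au:.0f} years")
```

At roughly 3.5 AU per year, Voyager 1 would need close to three centuries to reach 1000 AU.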

But have a look at the overview, which is available in full text. Dr. McNutt tells me that we can expect a companion paper from Pontus Brandt (likewise at APL) on the science aspects of the larger Mission Concept Report; this is likewise slated for publication in Acta Astronautica. According to McNutt, the APL contract from NASA’s Heliophysics Division completes on April 30 of this year, so the ball now lands in the court of the Solar and Space Physics Decadal Survey Team. And let me quote his email:

“Reality is never easy. I have to keep reminding people that the final push on a Solar Probe began with a conference in 1977, many studies at JPL through 2001, then studies at APL beginning in late 2001, the Decadal Survey of that era, etc. etc. with Parker Solar Probe launching in August 2018 and in the process now of revolutionizing our understanding of the Sun and its interaction with the interplanetary medium.”

Image: This is Figure 2-8 from the Mission Concept Report. Caption: Recent studies suggest that the Sun is on the path to leave the LIC [Local Interstellar Cloud] and may be already in contact with four interstellar clouds with different properties (Linsky et al., 2019). (Left: Image credit to Adler Planetarium, Frisch, Redfield, Linsky.)

Our society has all too little patience with decades-long processes, much less multi-generational goals. But we do have to understand how long it takes missions to go through the entire sequence before launch. It should be obvious that a 2030 launch date sets up what the authors call a ‘technology horizon’ that forces realism with respect to the physics and material properties at play here. Note this, from the paper:

…the enforcement of the “technology horizon” had two effects: (1) limit thinking to what can “be done now with maybe some “‘minor’ extensions” and (2) rule out low-TRL [technology readiness level] “technologies” which (1) we have no real idea how to develop, e.g., “fusion propulsion” or “gas-core fission”, or which we think we know how to develop but have no means of securing the requisite funds, e.g., NEP (while some might argue with this assertion, the track record to date does not argue otherwise).

Thus the dilemma of interstellar studies. Opportunities to fund and fly missions are sparse, political support always problematic, and deadlines shape what is possible. We have to be realistic about what we can do now, while also widening our thinking to include the kind of research that will one day pay off in long-term results. Developing and nourishing low-TRL concepts has to be a vital part of all this, which is why think tanks like NASA’s Innovative Advanced Concepts office are essential, and why likewise innovative ideas emerging from the commercial sector must be considered.

Both tracks are vital as we push beyond the Solar System. McNutt refers to a kind of ‘relay race’ that began with Pioneer 10 and has continued through Voyagers 1 and 2. A mission dedicated to flying beyond the heliopause picks up that baton with an infusion of new instrumentation and science results that take us “outward through the heliosphere, heliosheath, and near (but not too near) interstellar space over almost five solar cycles…” Studies like these assess the state of the art (over 100 mission approaches are quantified and evaluated), defining our limits as well as our ambitions.

The paper is McNutt et al., “Interstellar probe – Destination: Universe!” Acta Astronautica Vol. 196 (July 2022), 13-28 (full text).

April 12, 2022

Toward a Multilayer Interstellar Sail

Centauri Dreams tracks ongoing work on beamed sails out of the conviction that sail designs offer us the best hope of reaching another star system within this century, or at least, the next. No one knows how this will play out, of course, and a fusion breakthrough of spectacular nature could shift our thinking entirely – so, too, could advances in antimatter production, as Gerald Jackson’s work reminds us. But while we continue the effort on all alternative fronts, beamed sails currently have the edge.

On that score, take note of a soon to be available two-volume set from Philip Lubin (UC-Santa Barbara), which covers the work he and his team have been doing under the name Project Starlight and DEEP-IN for some years now. This is laser-beamed propulsion to a lightsail, an idea picked up by Breakthrough Starshot and central to its planning. The Path to Transformational Space Exploration pulls together Lubin and team’s work for NASA’s Innovative Advanced Concepts office, as well as work funded by donors and private foundations, for a deep dive into where we stand today. The set is expensive, lengthy (over 700 pages) and quite technical, definitely not for casual reading, but those of you with a nearby research library should be aware of it.

Just out in the journal Communications Materials is another contribution, this one examining the structure and materials needed for a lightsail that would fly such a mission. Giovanni Santi (CNR/IFN, Italy) and team are particularly interested in the question of layering the sail in an optimum way, not only to ensure the best balance between efficiency and weight but also to find the critical balance between reflectance and emissivity, because we have to build a sail that can survive its acceleration.

What this boils down to is that we are assuming a laser phased array producing a beam that is applied to an extremely thin, lightweight structure, with the intention of reaching a substantial percentage (20 percent) of lightspeed. The laser flux at the spacecraft is, the paper notes, on the order of 10 – 100 GW m⁻², with the sail no further from the Earth than the Moon. Lubin’s work at UC-Santa Barbara (he is a co-author here) has demonstrated a directed energy source with emission at 1064 nm.

Thermal issues are critical. The sail has to survive the temperature increases of the acceleration phase, so we need materials that offer high reflectance as well as the ability to blow off that heat, meaning high emissivity in the infrared. The Santa Barbara laboratory work has used ytterbium-doped fiber laser amplifiers in what the paper describes as a ‘master oscillator phased array’ topology. And this gets fascinating from the relativistic point of view. Remember, we are trying to produce a spacecraft that can make the journey to a nearby star in a matter of decades, so relativistic effects have to be considered.

In terms of the sail itself, that means that our high-speed spacecraft will quickly see a longer wavelength in the beamed energy than was originally emitted. This is true despite the fact that the period of acceleration is short, on the order of minutes.

The authors suggest that there are two ways to cope with this. The laser can shorten its emission wavelength as the spacecraft accelerates, meaning that the received wavelength is constant. But the paper focuses on a second option: Make the reflecting surface broadband enough to allow a fairly large range of received wavelengths.
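The size of the wavelength shift is easy to estimate. As a minimal sketch, assuming the standard relativistic Doppler formula for a receiver moving directly away from the source (the formula is textbook physics, not quoted from the paper), a 1064 nm beam seen from a sail at 20 percent of lightspeed looks like this:

```python
import math

# Relativistic Doppler shift for a sail receding along the beam axis.
def received_wavelength_nm(emitted_nm: float, beta: float) -> float:
    """Wavelength seen by the sail moving away at speed beta = v/c."""
    return emitted_nm * math.sqrt((1.0 + beta) / (1.0 - beta))


def emitted_for_constant_reception_nm(target_nm: float, beta: float) -> float:
    """Emission wavelength that keeps the received wavelength at target_nm."""
    return target_nm / math.sqrt((1.0 + beta) / (1.0 - beta))


at_cruise = received_wavelength_nm(1064.0, 0.2)
tuned_source = emitted_for_constant_reception_nm(1064.0, 0.2)
print(f"Received at 0.2c: {at_cruise:.0f} nm")
print(f"Emission needed to hold 1064 nm at the sail: {tuned_source:.0f} nm")
```

The sail’s reflective surface therefore has to perform from 1064 nm out to roughly 1300 nm, or else the laser must tune down toward about 870 nm by the end of the acceleration phase.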

Thus the core of this paper is an analysis of the materials possible for such a sail and their thermal properties, keeping this wavelength change in mind, while at the same time studying – after the operative laser wavelength is determined – how the structure of the lightsail can be engineered to survive the extremities of the acceleration phase.

Image: This is Figure 1 from the paper. Caption: The red arrows denote the incident, transmitted and reflected laser power, while the violet ones indicate the thermal radiation leaving the structure from the front and back surfaces. The surface area is modeled as αD²; α = 1 for a squared lightsail of side D and α = π/4 for a circular lightsail of diameter D. Credit: Santi et al.

The paper considers a range of possible materials for the sail, all of them low in density and widely used, so that their optical parameters are readily available in the literature. Optimization is carried out for stacks of different materials in combination to find structures with maximum optical properties and highest performance. Critical parameters are the reflectance and the areal density of the resulting sail.

Out of all this, titanium dioxide (TiO2) stands out in terms of thermal emission. This work suggests a combination of stacked materials:

The most promising structures to be used with a 1064 nm laser source result to be the TiO2-based ones, in the form of single layer or multilayer stack which include the SiO2 as a second material. In term of propulsion efficiency, the single layer results to be the most performing, while the multilayers offer some advantages in term[s] of thermal control and stiffness. The engineering process is fundamental to obtain proper optical characteristics, thus reducing the absorption of the lightsail in the Doppler-shifted wavelength of the laser in order to allow the use of high-power laser up to 100 GW. The use of a longer wavelength laser source could expand the choice of potential materials having the required optical characteristics.

So much remains to be determined as this work continues. The required mechanical strength of the multilayer structure means we need to learn a lot more about the properties of thin films. Also critical is the stability of the lightsail. We want a sail that survives acceleration not only physically but also in terms of staying aligned with the axis of the beam that is driving it. The slightest imperfection in the material, induced perhaps in manufacturing, could destroy this critical alignment. A variety of approaches to stability have emerged in the literature and are being examined.

The take-away from this paper is that thin-film multilayers are a way to produce a viable sail capable of being accelerated by beamed energy at these levels. We already have experience with thin films in areas like the coatings deposited on telescope mirrors, and because the propulsion efficiency is only slightly affected by the angle at which the beam strikes the sail, various forms of curved designs become feasible.

Can a sail survive the rigors of a journey through the gas and dust of the interstellar medium? At 20 percent of c, the question of how gas accumulates in materials needs work, as we’d like to arrive at our destination with a sail that may double as a communications tool. Each of these areas, in turn, fragments into needed laboratory work on many levels, which is why a viable effort to design a beamed mission to a star demands a dedicated facility focusing on sail materials and performance. Breakthrough Starshot seems ideally placed to make such a facility happen.

The paper is Santi et al., “Multilayers for directed energy accelerated lightsails,” Communications Materials 3, 16 (2022). Abstract.

April 8, 2022

AB Aurigae b: The Case for Disk Instability

What to make of a Jupiter-class planet that orbits its host star at a distance of 13.8 billion kilometers? This is well over twice the distance of Pluto from the Sun, out past the boundaries of what in our system is known as the Kuiper Belt. Moreover, this is a young world still in the process of formation. At nine Jupiter masses, it’s hard to explain through conventional modeling, which sees gas giants growing through core accretion, steadily adding mass through progressive accumulation of circumstellar materials.
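For readers who think in astronomical units, the comparison with Pluto can be made explicit. In this quick sketch the value for Pluto’s mean orbital distance (about 39.5 AU) is my assumption and does not come from the paper:

```python
# Convert the quoted orbital distance to AU and compare with Pluto.
KM_PER_AU = 1.495978707e8
PLUTO_SEMI_MAJOR_AXIS_AU = 39.48   # assumed mean distance of Pluto


def km_to_au(distance_km: float) -> float:
    """Convert kilometers to astronomical units."""
    return distance_km / KM_PER_AU


ab_aur_b_au = km_to_au(13.8e9)
ratio_to_pluto = ab_aur_b_au / PLUTO_SEMI_MAJOR_AXIS_AU
print(f"AB Aurigae b orbits at about {ab_aur_b_au:.0f} AU, "
      f"{ratio_to_pluto:.1f} times Pluto's distance")
```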

Core accretion makes sense and seems to explain typical planet formation, with the primordial cloud around an infant star dense in dust grains that can accumulate into larger and larger objects, eventually growing into planetesimals and emerging as worlds. But the new planet – AB Aurigae b – shouldn’t be there if core accretion were the only way to produce a planet. At these distances from the star, core accretion would take far longer than the age of the system to produce this result.

Enter disk instability, which we’ve examined many a time in these pages over the years. Here the mechanism works from the top down, with clumps of gas and dust forming quickly through what Alan Boss (Carnegie Institution for Science), who has championed the concept, sees as wave activity generated by the gravity of the disk gas. Waves something like the spiral arms in galaxies like our own can lead to the formation of massive clumps whose internal dust grains settle into the core of a protoplanet.

Data from ground- and space-based instruments have homed in on AB Aurigae b, with Hubble’s Space Telescope Imaging Spectrograph and Near Infrared Camera and Multi-Object Spectrograph complemented by observations from the planet imager called SCExAO on Japan’s 8.2-meter Subaru Telescope at Mauna Kea (Hawaii). The fact that the growing system around AB Aurigae presents itself more or less face-on as viewed from Earth makes the distinction between disk and planet that much clearer.

Image: Researchers were able to directly image newly forming exoplanet AB Aurigae b over a 13-year span using Hubble’s Space Telescope Imaging Spectrograph (STIS) and its Near Infrared Camera and Multi-Object Spectrograph (NICMOS). In the top right, Hubble’s NICMOS image captured in 2007 shows AB Aurigae b in a due south position compared to its host star, which is covered by the instrument’s coronagraph. The image captured in 2021 by STIS shows the protoplanet has moved in a counterclockwise motion over time. Credit: Science: NASA, ESA, Thayne Currie (Subaru Telescope, Eureka Scientific Inc.); Image Processing: Thayne Currie (Subaru Telescope, Eureka Scientific Inc.), Alyssa Pagan (STScI).

We benefit from the sheer amount of data Hubble has accumulated when working with a planetary orbit on a world this far from its star. A time span of a single year would hardly be enough to detect the motion at the distance of AB Aurigae, over 500 light years from Earth. The paper on this work – Thayne Currie (Subaru Telescope and Eureka Scientific) is lead researcher – pulls together observations of the system at multiple wavelengths to give disk instability a boost. The authors note the significance of the result, comparing it with PDS 70, a young system with two growing exoplanets, one of which, PDS 70b, was the first confirmed protoplanet to be directly imaged:

…this discovery has profound consequences for our understanding of how planets form. AB Aur b provides a key direct look at protoplanets in the embedded stage. Thus, it probes an earlier stage of planet formation than the PDS 70 system. AB Aur’s protoplanetary disk shows multiple spiral arms, and AB Aur b appears as a spatially resolved clump located in proximity to these arms. These features bear an uncanny resemblance to models of jovian planet formation by disk instability. AB Aur b may then provide the first direct evidence that jovian planets can form by disk instability. An observational anchor like the AB Aur system significantly informs the formulation of new disk instability models diagnosing the temperature, density and observability of protoplanets formed under varying conditions.

I do want to bring up an additional paper of likely relevance. In 2019, Michael Kuffmeier (Zentrum für Astronomie der Universität Heidelberg) and team looked at a variety of systems in terms of late encounter events that can disrupt a debris disk that is still forming. AB Aurigae is one of the systems studied, as noted in their paper:

Our results show how star-cloudlet encounters can replenish the mass reservoir around an already formed star. Furthermore, the results demonstrate that arc structures observed for AB Aurigae or HD 100546 are a likely consequence of such late encounter events. We find that large second-generation disks can form via encounter events of a star with denser gas condensations in the ISM millions of years after stellar birth as long as the parental Giant Molecular Cloud has not fully dispersed. The majority of mass in these second-generation disks is located at large radii, which is consistent with observations of transitional disks.

Just what effect such late encounter events might have on what may well be disk instability at work will be the subject of future studies, but if we’re using AB Aurigae as a likely model of the process at work, we will need to untangle such effects.

The paper is Currie et al., “Images of embedded Jovian planet formation at a wide separation around AB Aurigae,” Nature Astronomy 04 April 2022 (abstract / preprint). The Kuffmeier paper is “Late encounter-events as a source of disks and spiral structures,” Astronomy & Astrophysics Vol. 633 A3 (19 December 2019). Abstract.

April 6, 2022

Ramping Up the Technosignature Search

In the ever growing realm of acronyms, you can’t do much better than COSMIC – the Commensal Open-Source Multimode Interferometer Cluster Search for Extraterrestrial Intelligence. This is a collaboration between the SETI Institute and the National Radio Astronomy Observatory (NRAO), which operates the Very Large Array in New Mexico. The news out of COSMIC could not be better for technosignature hunters: Fiber optic amplifiers and splitters are now installed at each of the 27 VLA antennas.

What that means is that COSMIC will have access to the complete datastream from the entire VLA, in effect an independent copy of everything the VLA observes. Now able to acquire VLA data, the researchers are proceeding with the development of high-performance Graphical Processing Unit (GPU) code for data analysis. Thus the search for signs of technology among the stars gains momentum at the VLA.

Image: SETI Institute post-doctoral researchers, Dr Savin Varghese and Dr Chenoa Tremblay, in front of one of the 25-meter diameter dishes that makes up the Very Large Array. Credit: SETI Institute.

Jack Hickish, digital instrumentation lead for COSMIC at the SETI Institute, takes note of the interplay between the technosignature search and ongoing work at the VLA:

“Having all the VLA digital signals available to the COSMIC system is a major milestone, involving close collaboration with the NRAO VLA engineering team to ensure that the addition of the COSMIC hardware doesn’t in any way adversely affect existing VLA infrastructure. It is fantastic to have overcome the challenges of prototyping, testing, procurement, and installation – all conducted during both a global pandemic and semiconductor shortage – and we are excited to be able to move on to the next task of processing the many Tb/s of data to which we now have access.”

Tapping the VLA for the technosignature search brings powerful tools to bear, considering that each of the installation’s 27 antennas is 25 meters in diameter, and that these movable dishes can be spread over fully 22 miles. The Y-shaped configuration is found some 50 miles west of Socorro, New Mexico in the area known as the Plains of San Agustin. By combining data from the antennas, scientists can create the resolution of an antenna 36 kilometers across, with the sensitivity of a dish 130 meters in diameter.
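Both figures can be checked with a few lines of arithmetic. The sketch below assumes that sensitivity scales with total collecting area (so the equivalent dish diameter is the single-dish diameter times the square root of the number of antennas) and that angular resolution is roughly wavelength divided by maximum baseline. Both are standard rules of thumb rather than anything specific to COSMIC.

```python
import math

# Rule-of-thumb checks on the VLA figures quoted above.
C = 299_792_458.0        # m/s
KM_PER_MILE = 1.609344


def equivalent_dish_diameter_m(n_antennas: int, dish_diameter_m: float) -> float:
    """Diameter of one dish with the same total collecting area."""
    return dish_diameter_m * math.sqrt(n_antennas)


def angular_resolution_arcsec(frequency_hz: float, baseline_m: float) -> float:
    """Approximate resolution, theta ~ wavelength / baseline, in arcseconds."""
    wavelength_m = C / frequency_hz
    return math.degrees(wavelength_m / baseline_m) * 3600.0


equivalent_m = equivalent_dish_diameter_m(27, 25.0)
span_km = 22 * KM_PER_MILE
resolution_50ghz = angular_resolution_arcsec(50e9, 36_000.0)
print(f"Equivalent dish: {equivalent_m:.0f} m, array span: {span_km:.1f} km")
print(f"Resolution at 50 GHz: about {resolution_50ghz:.3f} arcsec")
```

Twenty-seven 25-meter dishes do add up to the collecting area of a single 130-meter dish, and 22 miles comes to about 35 kilometers, close to the 36-kilometer figure.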

Each of the VLA antennas uses eight cryogenically cooled receivers, covering a continuous frequency range from 1 to 50 GHz, with some of the receivers able to operate below 1 GHz. This powerful instrumentation will be brought to bear, according to sources at COSMIC SETI, on 40 million star systems, making this the most comprehensive SETI observing program ever undertaken in the northern hemisphere. (Globally, Breakthrough Listen continues its well-funded SETI program, using the Green Bank Observatory in West Virginia and the Parkes Observatory in Australia.)

Cherry Ng, a SETI Institute COSMIC project scientist, points to the range the project will cover:

“We will be able to monitor millions of stars with a sensitivity high enough to detect an Arecibo-like transmitter out to a distance of 25 parsecs (81 light-years), covering an observing frequency range from 230 MHz to 50 GHz, which includes many parts of the spectrum that have not yet been explored for ETI signals.”

The VLA is currently conducting the VLA Sky Survey, a new, wide-area centimeter wavelength survey that will cover the entire visible sky. The SETI work is scheduled to begin when the new system becomes fully operational in early 2023, working in parallel with the VLASS effort.

April 5, 2022

Microlensing: K2’s Intriguing Find

Exoplanet science can look forward to a rash of discoveries involving gravitational microlensing. Consider: In 2023, the European Space Agency will launch Euclid, which although not designed as an exoplanet mission per se, will carry a wide-field infrared array capable of high resolution. ESA is considering an exoplanet microlensing survey for Euclid, which will be able to study the galactic bulge for up to 30 days twice per year, perhaps timed for the end of the craft’s cosmology program.

Look toward the crowded galactic center long enough and you just may see a star in the galaxy’s disk move in front of a background star located much further away in that dense bulge. The result: The lensing phenomenon predicted by Einstein, with the light of the background star magnified by the intervening star. If that star has a planet, it’s one we can detect even if it’s relatively small, and even if it’s widely spaced from its star.

For its part, NASA plans to launch the Roman space telescope by 2027, with its own exoplanet microlensing survey slotted in as a core science activity. The space telescope will be able to conduct uninterrupted microlensing operations for two 72-day periods per year, and may coordinate these activities with the Euclid team. In both cases, we have space instruments that can detect cool, low-mass exoplanets for which, in many cases, we’ll be able to combine data from the spacecraft and ground observatories, helping to nail down orbit and distance measurements.

While we await these new additions to the microlensing family, we can also take surprised pleasure in the announcement of a microlensing discovery, the world known as K2-2016-BLG-0005Lb. Yes, this is a Kepler find, or more precisely, a planet uncovered through exhaustive analysis of K2 data, with the help of ground-based data from the OGLE microlensing survey, the Korean Microlensing Telescope Network (KMTNet), Microlensing Observations in Astrophysics (MOA), the Canada-France-Hawaii Telescope and the United Kingdom Infrared Telescope. I list all these projects and instruments by way of illustrating how what we learn from microlensing grows with wide collaboration, allowing us to combine datasets.

Kepler and microlensing? Surprise is understandable, and the new world, similar to Jupiter in its mass and distance from its host star, is about twice as distant as any exoplanets confirmed by Kepler, which used the transit method to make its discoveries. David Specht (University of Manchester) is lead author of the paper, which will appear in Monthly Notices of the Royal Astronomical Society. The effort involved sifting K2 data for signs of an exoplanet and its parent star occulting a background star, with accompanying gravitational lensing caused by both foreground objects.

Eamonn Kerins is principal investigator for the Science and Technology Facilities Council (STFC) grant that funded the work. Dr Kerins adds:

“To see the effect at all requires almost perfect alignment between the foreground planetary system and a background star. The chance that a background star is affected this way by a planet is tens to hundreds of millions to one against. But there are hundreds of millions of stars towards the center of our galaxy. So Kepler just sat and watched them for three months.”
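Dr Kerins' point is that tiny odds multiplied by a huge number of stars can still yield a detection. Here is a minimal sketch of that reasoning. The specific numbers fed in are placeholders chosen from within the ranges he mentions, not values from the paper, and the code treats "N to one against" as a probability of roughly 1/N and each star as an independent trial, which is a simplification.

```python
import math


def expected_events(n_stars: float, odds_against: float) -> float:
    """Expected number of affected stars, treating 'N to one against' as p ~ 1/N."""
    return n_stars / odds_against


def prob_at_least_one(n_stars: float, odds_against: float) -> float:
    """Poisson probability of seeing one or more events among n_stars."""
    return 1.0 - math.exp(-expected_events(n_stars, odds_against))


if __name__ == "__main__":
    # Illustrative inputs only: 300 million stars watched, with odds
    # ranging from tens to hundreds of millions to one against.
    n_stars = 3e8
    for odds in (3e7, 1e8, 3e8):
        lam = expected_events(n_stars, odds)
        p = prob_at_least_one(n_stars, odds)
        print(f"odds {odds:.0e} to one: expect {lam:.1f} events, P(>=1) = {p:.2f}")
```

With inputs in these ranges the expected count sits somewhere between one and a handful, which is why simply watching the field for months is a workable strategy.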

Image: The view of the region close to the Galactic Center centered where the planet was found. The two images show the region as seen by Kepler (left) and by the Canada-France-Hawaii Telescope (CFHT) from the ground. The planet is not visible but its gravity affected the light observed from a faint star at the center of the image (circled). Kepler’s very pixelated view of the sky required specialized techniques to recover the planet signal. Credit: Specht et al.

This is a classic case of pushing into a dataset with specialized analytical methods to uncover something the original mission designers never planned to see. The ground-based surveys that examined the same area of sky offered a combined dataset to go along with what Kepler saw slightly earlier, given its position 135 million kilometers from Earth, allowing scientists to triangulate the system’s position along the line of sight, and to determine the mass of the exoplanet and its orbital distance.

What an intriguing, and decidedly unexpected, result from Kepler! K2-2016-BLG-0005Lb is also a reminder of the kind of discovery we’re going to be making with Euclid and the Roman instrument. Because it is capable of finding lower-mass worlds at a wide range of orbital distances, microlensing should help us understand how common it is to have a Jupiter-class planet in an orbit similar to Jupiter’s around other stars. Is the architecture of our Solar System, in other words, unique or fairly representative of what we will now begin to find?

https://www.centauri-dreams.org/wp-content/uploads/2022/04/k2-2016-blg-0005lb-final.mp4

Animation: The gravitational lensing signal from Jupiter twin K2-2016-BLG-0005Lb. The local star field around the system is shown using real color imaging obtained with the ground-based Canada-France-Hawaii Telescope by the K2C9-CFHT Multi-Color Microlensing Survey team. The star indicated by the pink lines is animated to show the magnification signal observed by Kepler from space. The trace of this signal with time is shown in the lower right panel. On the left is the derived model for the lensing signal, involving multiple images of the star caused by the gravitational field of the planetary system. The system itself is not directly visible. Credit: CFHT.

From the paper:

The combination of spatially well separated simultaneous photometry from the ground and space also enables a precise measurement of the lens–source relative parallax. These measurements allow us to determine a precise planet mass (1.1 ± 0.1 𝑀𝐽), host mass (0.58 ± 0.03 𝑀⊙) and distance (5.2 ± 0.2 kpc).

The authors describe the world as “a close analogue of Jupiter orbiting a K-dwarf star,” noting:

The location of the lens system and its transverse proper motion relative to the background source star (2.7 ± 0.1 mas/yr) are consistent with a distant Galactic-disk planetary system microlensing a star in the Galactic bulge.
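To get a feel for the scales involved, the quoted masses, distance and proper motion can be run through the standard point-lens formulas. This is a back-of-envelope sketch, not the authors' modeling: it assumes a source star at 8 kpc (a typical Galactic bulge distance that I am supplying, since the passages quoted here do not give one), ignores the planet's small contribution to the lens mass, and uses function names of my own invention.

```python
import math

# kappa = 4G / (c^2 AU), in milliarcseconds per solar mass (standard value).
KAPPA_MAS_PER_MSUN = 8.144
# Jupiter's mass in solar masses (approximately 1/1047.35).
M_JUP_IN_MSUN = 1.0 / 1047.35
DAYS_PER_YEAR = 365.25

# Central values quoted from the paper.
PLANET_MASS_MJUP = 1.1
HOST_MASS_MSUN = 0.58
LENS_DISTANCE_KPC = 5.2
PROPER_MOTION_MAS_YR = 2.7

# ASSUMPTION: source distance is not given in the quoted text.
ASSUMED_SOURCE_DISTANCE_KPC = 8.0


def mass_ratio(planet_mjup: float, host_msun: float) -> float:
    """Planet-to-host mass ratio q."""
    return planet_mjup * M_JUP_IN_MSUN / host_msun


def relative_parallax_mas(d_lens_kpc: float, d_source_kpc: float) -> float:
    """Lens-source relative parallax; 1/kpc gives milliarcseconds."""
    return 1.0 / d_lens_kpc - 1.0 / d_source_kpc


def einstein_radius_mas(lens_mass_msun: float, pi_rel_mas: float) -> float:
    """Angular Einstein radius, theta_E = sqrt(kappa * M * pi_rel)."""
    return math.sqrt(KAPPA_MAS_PER_MSUN * lens_mass_msun * pi_rel_mas)


def einstein_crossing_days(theta_e_mas: float, mu_mas_yr: float) -> float:
    """Time for the source to cross one Einstein radius."""
    return theta_e_mas / mu_mas_yr * DAYS_PER_YEAR


if __name__ == "__main__":
    q = mass_ratio(PLANET_MASS_MJUP, HOST_MASS_MSUN)
    pi_rel = relative_parallax_mas(LENS_DISTANCE_KPC, ASSUMED_SOURCE_DISTANCE_KPC)
    theta_e = einstein_radius_mas(HOST_MASS_MSUN, pi_rel)
    t_e = einstein_crossing_days(theta_e, PROPER_MOTION_MAS_YR)
    print(f"mass ratio q       ~ {q:.2e}")
    print(f"Einstein radius    ~ {theta_e:.2f} mas")
    print(f"crossing timescale ~ {t_e:.0f} days")
```

With the assumed source distance, the Einstein radius works out to roughly half a milliarcsecond and the crossing time to a couple of months or so. A different source distance would shift both, so treat these as order-of-magnitude figures rather than a check on the paper's fit.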

Given that Kepler was not designed for microlensing operations, it’s not surprising to see the authors refer to it as “highly sub-optimal for such science.” But here we have a direct planet measurement including mass with high precision made possible by the craft’s uninterrupted view of its particular patch of sky. Euclid and the Roman telescope should have much to contribute given that they are optimized for microlensing work. We can look for a fascinating expansion of the planetary census.

The paper is Specht et al., “Kepler K2 Campaign 9: II. First space-based discovery of an exoplanet using microlensing,” in process at Monthly Notices of the Royal Astronomical Society (preprint).
