Daniel Miessler's Blog, page 22

May 30, 2023

No. 384 World AI Coin, Russian Power Attacks, Guidance AI Workflow…

*|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|*MEMBER EDITION | NO. 384 | MAY 30 2023 | Subscribe | Online | Audio*|END:INTERESTED|**|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|**|ELSE:|*STANDARD EDITION (UPGRADE) | NO. 384 | MAY 30 2023 | Subscribe | Online | Audio*|END:INTERESTED|*

Unsupervised Learning is a Security, AI, and Meaning-focused podcast that looks at how best to thrive as humans in a post-AI world. It combines original ideas, analysis, and mental models to bring not just the news, but why it matters and how to respond.Hi!,Back in the gunner seat after this year’s annual Friendship Week. So needed but so exhausting. In a good way.Here’s a thought-splinter I’ve been unable to get out of my brain: The impact from everything we’ve talked about with ChatGPT, GPT-4, Langchain, and all the various projects out there all assume something that’s unlikely to me at this point. They all assume Altman and OpenAI will NOT be successful. Remember, their goal isn’t to make cool AI stuff; it’s to make true AGI. And everything we’ve seen so far is what they call failure. Now imagine what happens to productivity, the job market, and overall society if they actually succeed! I’m personally not betting against them, and I put AGI at 2-5 years from now.Have a great week!

Unsupervised Learning is a Security, AI, and Meaning-focused podcast that looks at how best to thrive as humans in a post-AI world. It combines original ideas, analysis, and mental models to bring not just the news, but why it matters and how to respond.Hi!,Back in the gunner seat after this year’s annual Friendship Week. So needed but so exhausting. In a good way.Here’s a thought-splinter I’ve been unable to get out of my brain: The impact from everything we’ve talked about with ChatGPT, GPT-4, Langchain, and all the various projects out there all assume something that’s unlikely to me at this point. They all assume Altman and OpenAI will NOT be successful. Remember, their goal isn’t to make cool AI stuff; it’s to make true AGI. And everything we’ve seen so far is what they call failure. Now imagine what happens to productivity, the job market, and overall society if they actually succeed! I’m personally not betting against them, and I put AGI at 2-5 years from now.Have a great week!

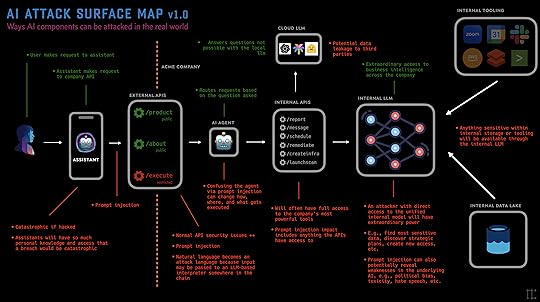

In this episode:👁️ Worldcoin, OpenAI, and eye scanning: A global ID and currency?Grid Threat: Russia-linked malware targets power grids🧠 Neuralink gets FDA approval for clinical trials🤖 Bing integrated into ChatGPT for enhanced AI chatbot experienceTesla Model Y becomes world’s best-selling carLGBTQ searches soar 1,300% since 2004MY WORKThe AI Attack Surface Map v1.0My first capture of the various attack surfaces included in how AI will be deployed in the real world. MORESECURITY NEWS

In this episode:👁️ Worldcoin, OpenAI, and eye scanning: A global ID and currency?Grid Threat: Russia-linked malware targets power grids🧠 Neuralink gets FDA approval for clinical trials🤖 Bing integrated into ChatGPT for enhanced AI chatbot experienceTesla Model Y becomes world’s best-selling carLGBTQ searches soar 1,300% since 2004MY WORKThe AI Attack Surface Map v1.0My first capture of the various attack surfaces included in how AI will be deployed in the real world. MORESECURITY NEWSWorldcoin + OpenAI + Eye ScanningA company called Tools for Humanity raised a $115 million Series C to continue its mission to 1) create a global ID, 2) create a global currency, and 3) create an app that allows you to use the currency in various ways. Why am I mentioning this? Because Sam Altman is a co-founder. So here we have a guy doing in public what a lot of conspiracy theorists think people are doing in private. He’s trying to build AGI that will massively disrupt human work, and then at the same time he’s trying to create a digital currency that seems to me could be awful useful for something like UBI distribution. That’s speculation of course, but it seems logical. Oh and this Worldcoin app works by scanning your eyeball so add that to the conspiracy porn list. I love how Altman is super transparent about what he thinks is coming, and how he thinks we should respond. He thinks AGI is coming and he’s building it to be first. He thinks UBI will be needed so maybe he’s building an infrastructure it could run on? MOREGrid ThreatNew Russia-linked malware, CosmicEnergy, could physically damage power grids, says Mandiant.– Malware uploaded to VirusTotal in 2021 by a Russian IP address– Similar to Industroyer, used in attacks on Ukraine’s energy infrastructure– Ties to Russia’s “Solar Polygon” project for training cybersecurity specialists– Targets communication protocol used in electric power industry– Shares similarities with Triton and Incontroller malware– Exploits insecure by-design protocols in industrial environments MOREGuam CyberattacksChinese hackers targeted critical infrastructure on US military bases in Guam using stealthy malware, according to Microsoft and Western spy agencies. Beijing dismissed the report as unprofessional disinformation. MORE

SponsorTurbocharge Your Business Growth with Streamlined Security ComplianceDiscover Vanta, the game-changing trust management platform that empowers your business to scale and flourish, leaving behind tedious spreadsheets and never-ending email chains.

With Vanta, you can:

Automate up to 90% of compliance for SOC 2, ISO 27001, GDPR, HIPAA, and more – become audit-ready in weeks, not months!

Save hundreds of hours of manual labor and slash compliance costs by up to 85%

🛡️Leverage a single platform for continuous control monitoring, security posture reporting, and seamless audit readiness

Don’t miss out! Watch Vanta’s captivating 3-minute demo and unlock the secret to accelerated business growth today.

vanta.com/downloads/3minutedemoWatch NowAT&T Account TakeoverAT&T resolved a vulnerability that could have allowed account takeovers with just a phone number and ZIP code, discovered by researcher Joseph Harris. The issue was fixed through their bug bounty program. MORETesla LeakA whistleblower has leaked 100GB of Tesla data to a German news site, revealing over 1,000 accident reports involving phantom braking or unintended acceleration.– Handelsblatt, the German news outlet, confirmed the data’s authenticity with the Fraunhofer Institute for Secure Information Technology– Over 2,400 self-acceleration complaints and 1,500 braking function problems were found in the files– Tesla’s internal guidelines prioritize offering as little attack surface as possible when communicating with customers– Customers reported that Tesla employees avoid written communication and focus on verbal communication– Elon Musk and Tesla face multiple lawsuits and investigations from the National Highway Traffic Safety Administration and Department of Justice MORECloudflare Secrets StoreCloudflare announced a new solution, Secrets Store, designed to help developers and organizations securely store and manage secrets across their platform. MOREZyxel Vulnerabilities PatchedZyxel released patches for two critical buffer overflow vulnerabilities affecting their firewalls, which could have allowed unauthenticated attackers to cause denial-of-service and remote code execution. Users are urged to update urgently. MORETECHNOLOGY NEWSNeuralink ApprovedNeuralink claims FDA approval for clinical trials, but isn’t enrolling patients yet.– Elon Musk’s brain implant startup, Neuralink, says it has FDA approval for human testing– Company not yet recruiting test subjects, and trial details remain unknown– Initial trials likely to focus on safety of brain implants and surgical robot– Neuralink previously faced issues with federal regulators and animal abuse accusations– FDA rejection turnaround indicates company addressed concerns effectively MOREMeta’s $1.3B EU FineMeta faced a record $1.3 billion fine from EU regulators for transferring user data from the region to the US, violating GDPR. The company must comply and delete unlawfully stored data within six months. MOREBing Integration in ChatGPTOpenAI has made Bing the default search experience for ChatGPT, enhancing its AI chatbot with search and web data, including citations. The move follows Microsoft’s multibillion-dollar investment in OpenAI earlier this year. MOREWindows Copilot UnveiledMicrosoft announced the launch of AI-powered Windows Copilot, a service designed to assist Windows 11 users by explaining, rewriting, or summarizing content. The feature will be available in preview mode next month. MOREModel Y Tops SalesTesla Model Y became the world’s best-selling car in Q1 2023, making it the first EV to achieve this milestone, according to JATO Dynamics. The Model Y dethroned the Toyota Corolla with 267,200 sales in Q1. MOREAndroid-to-iPhone SwitchA recent CIRP report revealed that Android users switching to iPhones reached a 5-year high, with 15% of new iPhone owners in the US coming from Google’s platform. It’s amazing the difference in output that can occur when one competitor has a 10-20 year plan and the other is perpetually chasing and flailing. MORESponsor🌩️ The 2023 Cloud Threat Report is here, and it’s a game-changer! 🌩️ Our Wiz cybersecurity research team has dug deep into the cloud, uncovering *dozens* of new risks across AWS, Azure, and Google Cloud services. This eye-opening 12-page report is packed with:The full list of 2022’s cloud breachesBest practices to fortify your cloud fortressCutting-edge cloud security threatsEmerging cloud-native threat actorsAPI-based vulnerabilitiesBONUS: Grab a FREE checklist of strategies used by the world’s leading cloud security organizations!Don’t miss out on this chance to adapt your security strategy for 2023 and beyond. Click here to unlock the ultimate cloud security resource! wiz.io/lp/2023-cloud-security-threat-reportDownload NowHUMAN NEWSLong COVID Symptoms NarrowedA new study narrows down long COVID’s 200+ symptoms to a core list of 12, offering hope for better understanding and diagnosis of the condition.– Loss of taste/smell and post-exertional malaise topped the list of core symptoms– Researchers used data from 9,764 participants to create the weighted list– The study is part of the National Institutes of Health’s RECOVER Initiative– The core list could help direct further research and develop diagnostic tools– A score of 12 was determined as a reasonable cutoff for identifying long COVID MOREChatGPT Awareness58% of US adults are familiar with ChatGPT, but only 14% have tried it, according to a Pew Research Center survey. Users’ opinions on its usefulness are mixed, with younger adults finding it more useful than older ones. MORELGBTQ Searches Soar 1,300%

Google searches related to sexual orientation and gender identity increased by 1,300% since 2004, with conservative states showing the highest search rates. MOREIDEAS & ANALYSISJobs AgainI’ve mentioned this many times but I keep coming back to it. It’s stunning to me that people generally, and especially young people today, have been told their whole lives that people owe workers jobs. If someone graduates and can’t get a job they feel like society has failed them. Has it? Where does the promise come from? I feel like jobs are more like a magical slot machine sitting in a forest that’s always pumped out money. And whenever new grads or hard-working people step in front of it, it makes a whir and a clank sound and a job pops out. But nobody has stopped to ask why it does that or when it will stop. Well, I think we’re about to find out when it’ll stop. It won’t completely stop of course. Jobs are the gap between what a business owner wants to do and what they’re capable of doing with the people they have. And there will always be a gap there sometimes based on the fact that economies and ideas both grow and shrink. But when we add AI and robots to the mix, we’re going to have a lot fewer gaps. At least for humans. The gaps will be there but they’ll be filled by robots and AI. What’s weird is that this shouldn’t be seen as attacking workers. Workers are what happens when everything fails. The idea is too big. The tech isn’t advanced enough. The tools aren’t efficient enough. In those situations you need workers. But if all those things are perfect, we as workers are not needed. That’s a strange thing. And it tells me once we get there we need to move as quickly as possible to a post-work society where human interaction isn’t something we must do at work, but something we choose to do because it’s the purpose of life.NOTESThe Guidance ProjectThe most interesting AI project I’ve seen since Langchain is definitely Microsoft’s new Guidance project. It’s a completely new way of stitching up AI logic vs. how Langchain does things. It makes more of the moving parts visible and editable. It also pays special attention to making sure you get the right type of output as you’re passing results between components. They also make extensive use of handlebar-like functionality for templates and variables. It’s VERY powerful, and they have a good number of examples as well. If you’re hacking on AI stuff, this is a must! MOREDISCOVERYMicrosoft Guidance — A completely new way of controlling AI workflows instead of normal prompts and chains. Probably the coolest project I’ve seen since Langchain. If you’re tinkering with AI, this is a MUST. MORE | NOTEBOOKSPandas AI MOREOpenLLM Leaderboard MOREPhotoshop’s Generative Fill is Being Massively Praised MOREIPInfo’s Free IP Location Database MORE100 Very Short Bug Bounty Rules MOREGuanaco — 99% ChatGPT performance on the Vicuna benchmark. MORE6 Really Good AI-created Songs MORERun your own VPN using Fly and Tailscale MOREThe Twitter ranking algorithm MORETurn a Midjourney prompt into a formula that you can replicate MORE | MORENvidia announces Avatar Cloud Engine (ACE), showing what happens when AI collides with gaming. MOREExperiences don’t make you happier than possessions? MOREAgentGPT — Autonomous agents in your browser. MORECSA Report on Chinese APTs Living Off the Land to Evade Detection MORERECOMMENDATION OF THE WEEKConsider making a list of the books you’ve read and what you got from them. Not a full summary, but at least 1-5 bullets. You can use AI to help you for older books, but only write down the AI-created bullet if you actually absorbed that knowledge from the book. We don’t need to remember books, I don’t think, but it’s nice to know we got some sort of osmosis effect from consuming them.APHORISM OF THE WEEK“It’s nice to be nice.”My Dad

*|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|*Thank you for supporting this work. I’m glad you find it worth your patronage.*|END:INTERESTED|**|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|**|ELSE:|*Thank you for reading. To become a member of UL and get more content and access to the community, you can become a member.*|END:INTERESTED|**|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|* Follow via RSS*|END:INTERESTED|**|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|**|ELSE:|*

Follow via RSS*|END:INTERESTED|**|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|**|ELSE:|*

Forward UL to friends

Forward UL to friends

Tweet about UL

Tweet about UL

Share UL with colleagues*|END:INTERESTED|*Refer | Share | Unsubscribe | Update Your PreferencesCopyright © 1999-2023 Daniel Miessler, All Rights Reserved.

Share UL with colleagues*|END:INTERESTED|*Refer | Share | Unsubscribe | Update Your PreferencesCopyright © 1999-2023 Daniel Miessler, All Rights Reserved.

No related posts.

May 22, 2023

No. 383 Luxottica confirms Data Breach, META unveils custom AI, NATO’s Cyberdefense expands

*|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|*MEMBER EDITION | NO. 383 | MAY 22 2023 | Subscribe | Online | Audio*|END:INTERESTED|**|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|**|ELSE:|*STANDARD EDITION (UPGRADE) | NO. 383 | MAY 22 2023 | Subscribe | Online | Audio*|END:INTERESTED|*

Unsupervised Learning is a Security, AI, and Meaning-focused podcast that looks at how best to thrive as humans in a post-AI world. It combines original ideas, analysis, and mental models to bring not just the news, but why it matters and how to respond.Hey everyone,Hope you’re doing well! This is my annual friendship retreat and I decided to try a format we’ll probably do once or twice a year where we give the top stories plus the most interesting pieces of my work and discovery links we’ve had from the past several months that have driven your interest. Hopefully you’ll love being reminded of some of the top ones that you forgot to explore.Have a great week!

Unsupervised Learning is a Security, AI, and Meaning-focused podcast that looks at how best to thrive as humans in a post-AI world. It combines original ideas, analysis, and mental models to bring not just the news, but why it matters and how to respond.Hey everyone,Hope you’re doing well! This is my annual friendship retreat and I decided to try a format we’ll probably do once or twice a year where we give the top stories plus the most interesting pieces of my work and discovery links we’ve had from the past several months that have driven your interest. Hopefully you’ll love being reminded of some of the top ones that you forgot to explore.Have a great week!

In this episode: Luxottica data breach impacts 70M customers 🤖 Meta unveils custom AI chip, MTIA NATO's cyber defense expands with new members Pandas AI simplifies data analysis with generative AI 🛡️ KeePass vulnerability exposes master passwords 🤝 Zoom partners with Anthropic for AI chatbot integrationMY WORKMoloch: The Most Dangerous IdeaIf we are alone in the universe, this is probably why. APR 17, 2023 | MOREHow to Use ChatGPT with Your Voice Using Siri (Video)This is the first in my Practical AI series where I demonstrate how to accomplish various tasks using AI. This episode shows you the two (2) steps to connecting Siri with ChatGPT. FEB 27, 2023 | TUTORIAL | VIDEO | SUBSCRIBE TO THE CHANNELHow AI is Eating the Software WorldIn this essay, I describe a new architecture that I believe will replace much of our existing software, starting already. Covers GPT understanding, the SPA architecture, and gives examples of how existing software will transition. MAR 6, 2023 | READ THE ESSAYSPQA: The AI-based Architecture That’ll Replace Most Existing SoftwareThis is a shorter version of last week’s essay about the new GPT-based software paradigm. This one is more to the point and has more examples. MAR 13, 2023 | READ THE ESSAY 6 Phases of the Post-GPT World — What I think is coming as a result of connecting GPT-4 to the internet. Don’t miss this one. MAR 27, 2023 | MORE | SHARE ITSECURITY NEWSLuxottica Data BreachEyewear giant Luxottica confirmed a 2021 data breach affecting 70 million customers after a database was leaked online. The breach exposed personal information, including names, addresses, and dates of birth. MOREMeta’s AI Chip PlansMeta announced its first custom AI chip, the MTIA, and a next-generation AI-optimized data center design, aiming to compete with other tech giants in the AI space. MORENATO Cyber ExpansionUkraine, Ireland, Iceland, and Japan have officially joined NATO’s Cooperative Cyber Defense Center of Excellence (CCDCOE), expanding the organization’s reach and sharing cyber knowledge to address cyberattacks. MORESponsorKion- Scalable Cloud Governance & Automated Compliance

In this episode: Luxottica data breach impacts 70M customers 🤖 Meta unveils custom AI chip, MTIA NATO's cyber defense expands with new members Pandas AI simplifies data analysis with generative AI 🛡️ KeePass vulnerability exposes master passwords 🤝 Zoom partners with Anthropic for AI chatbot integrationMY WORKMoloch: The Most Dangerous IdeaIf we are alone in the universe, this is probably why. APR 17, 2023 | MOREHow to Use ChatGPT with Your Voice Using Siri (Video)This is the first in my Practical AI series where I demonstrate how to accomplish various tasks using AI. This episode shows you the two (2) steps to connecting Siri with ChatGPT. FEB 27, 2023 | TUTORIAL | VIDEO | SUBSCRIBE TO THE CHANNELHow AI is Eating the Software WorldIn this essay, I describe a new architecture that I believe will replace much of our existing software, starting already. Covers GPT understanding, the SPA architecture, and gives examples of how existing software will transition. MAR 6, 2023 | READ THE ESSAYSPQA: The AI-based Architecture That’ll Replace Most Existing SoftwareThis is a shorter version of last week’s essay about the new GPT-based software paradigm. This one is more to the point and has more examples. MAR 13, 2023 | READ THE ESSAY 6 Phases of the Post-GPT World — What I think is coming as a result of connecting GPT-4 to the internet. Don’t miss this one. MAR 27, 2023 | MORE | SHARE ITSECURITY NEWSLuxottica Data BreachEyewear giant Luxottica confirmed a 2021 data breach affecting 70 million customers after a database was leaked online. The breach exposed personal information, including names, addresses, and dates of birth. MOREMeta’s AI Chip PlansMeta announced its first custom AI chip, the MTIA, and a next-generation AI-optimized data center design, aiming to compete with other tech giants in the AI space. MORENATO Cyber ExpansionUkraine, Ireland, Iceland, and Japan have officially joined NATO’s Cooperative Cyber Defense Center of Excellence (CCDCOE), expanding the organization’s reach and sharing cyber knowledge to address cyberattacks. MORESponsorKion- Scalable Cloud Governance & Automated ComplianceKion was built to address the needs of fast-growing, complex cloud environments with an emphasis on automation, scalability, and multi-cloud versatility.We make cloud compliance seamless across cloud accounts, teams, products, and business units with 8,000+ built-in compliance checks for best practices and standards like CIS, NIST CSF, HIPAA, PCI-DSS, FedRAMP, GDPR and more.Book a demo to see continuous compliance in action and set up a free 30-day trial.

kion.io/ulSchedule a Review of Your Compliance NeedsPandas AI UpgradePandas AI, a Python library, enhances data analysis by adding generative AI capabilities to dataframes, enabling conversation-like interactions and simplifying complex data analysis. MOREKeePass VulnerabilityA security researcher discovered a vulnerability in the popular KeePass password manager, allowing attackers to extract the master password from the application’s memory. A fix is expected in the upcoming version. MOREZoom Partners with AnthropicZoom announced a partnership with Anthropic to integrate its Claude chatbot into the platform, starting with the Zoom Contact Center, as part of its federated approach to AI. MOREDISCOVERYSam Harris’ comments on Elon and Free Speech. Crystal clear, as usual. JAN 2, 2023 | MOREPrompting SuperpowerThe best way to do advanced prompting is to combine two techniques: 1) Few-shotting, and 2) Thinking Step by Step. Few-shotting is where you give multiple examples of good answers, and then leave the last one unfinished so it’ll know exactly what you want. Telling the AI to “think step by step” tells AI to break the problem down and solve each piece in a sequence. These are ultra-powerful by themselves, but if you COMBINE THEM, it gets silly. It basically unlocks Theory of Mind (ToM) within LLMs, which is where an entity can understand how another entity thinks. BTW, I have been worried for a while that this is how we’re going to wake up AI. I feel like ToM is like the gateway drug to consciousness. But that’s another post. Here’s the chart on how to do this. MAY 1, 2023 | CHARTMaintaining this site fucking sucks APR 24, 2023 | MOREKeep Your Identity Small DEC 19, 2022 | MOREAI draws Darth Vader as a construction worker and nails the helmet. NOV 21, 2022 | MORESponsorUnleash Your Cloud Security PowerUltimate CSPM Buyer’s Guide (Free PDF)Security risks grow exponentially as your cloud footprint increases. That’s why picking the right Cloud Security Posture Management (CSPM) solution is critical to building your security strategy.Discover Wi’z FREE treasure trove of insights:-Soaring cloud security trends & why top-notch orgs embrace CSPM-Modern vs legacy CSPM showdown: Uncover the key differences 🥊–2023’s essential vendor evaluation checklist (+ FREE RFP template)Ready to conquer cloud security? Download the ultimate CSPM Buyer’s Guide now (yes, it’s 100% FREE)!

wiz.io/lp/cspm-buyers-guide Get Your FREE Guide HereCan’t even tell if this is a meme or not, but if this TikTok filter is real, it’s completely insane. FEB 27, 2023 | MOREHe who submits a resume has already lost. APR 3, 2023 | MORESomeone asked ChatGPT (with browsing enabled) to find him some money, and within a minute it had $210 dollars in the mail to him from California. APR 3, 2023 | MORENo dates, no sex, no weddings, no kids. APR 3, 2023 | MOREThis guy gave GPT-4 a budget of $100 and told it to make as much money as possible. Incredible thread! Currently at 22.7 million views! MAR 20, 2023 | MORERECOMMENDATION OF THE WEEKFind a time to spend 3-4 days away with a core set of friends. It renews and strengthens your friendships, and reminds you why life is worth living.APHORISM OF THE WEEK“Friends are the siblings God never gave us.”-Mencius

*|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|*Thank you for supporting this work. I’m glad you find it worth your patronage.*|END:INTERESTED|**|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|**|ELSE:|*Thank you for reading. To become a member of UL and get more content and access to the community, you can become a member.*|END:INTERESTED|**|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|* Follow via RSS*|END:INTERESTED|**|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|**|ELSE:|*

Follow via RSS*|END:INTERESTED|**|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|**|ELSE:|*

Forward UL to friends

Forward UL to friends

Tweet about UL

Tweet about UL

Share UL with colleagues*|END:INTERESTED|*Refer | Share | Unsubscribe | Update Your PreferencesCopyright © 1999-2023 Daniel Miessler, All Rights Reserved.

Share UL with colleagues*|END:INTERESTED|*Refer | Share | Unsubscribe | Update Your PreferencesCopyright © 1999-2023 Daniel Miessler, All Rights Reserved.

No related posts.

May 16, 2023

No. 382 AI Attack Surface Map, Digital Assistants, Dragos Nope, Rogue AI Girlfriend

*|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|*MEMBER EDITION | NO. 382 | MAY 15 2023 | Subscribe | Online | Audio*|END:INTERESTED|**|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|**|ELSE:|*STANDARD EDITION (UPGRADE) | NO. 382 | MAY 15 2023 | Subscribe | Online | Audio*|END:INTERESTED|*

Unsupervised Learning is a Security, AI, and Meaning-focused podcast that looks at how best to thrive as humans in a post-AI world. It combines original ideas, analysis, and mental models to bring not just the news, but why it matters and how to respond.🛡️ 🤖 AI Village Sponsors Needed!Hello UL’ers! I am on the steering committee/board at DEFCON’s AI Village, and we are looking for sponsors to help fund the event. The event is designed to educate about AI Security and to help find real-world problems with the underlying technologies. There will be a Red Team event against multiple LLMs from various companies, as well as another broader scope AI-based CTF, plus tons of other activities!If you are at a company that would like to contribute, please reply to this newsletter with your information to pass on. Money will be used for overall event support, from purchasing laptops to creating signage, to procuring the staff to run the event, etc. $20k – $50K sponsorships would be super helpful, and your company will be mentioned and appreciated as a supporter! Thank you!Reach Out About Supporting the EventHey there,Sorry about the late newsletter this week. Got carried away with work, local LLMs and 3 new blog posts! Hopefully you’ll love those!Have a great week!

Unsupervised Learning is a Security, AI, and Meaning-focused podcast that looks at how best to thrive as humans in a post-AI world. It combines original ideas, analysis, and mental models to bring not just the news, but why it matters and how to respond.🛡️ 🤖 AI Village Sponsors Needed!Hello UL’ers! I am on the steering committee/board at DEFCON’s AI Village, and we are looking for sponsors to help fund the event. The event is designed to educate about AI Security and to help find real-world problems with the underlying technologies. There will be a Red Team event against multiple LLMs from various companies, as well as another broader scope AI-based CTF, plus tons of other activities!If you are at a company that would like to contribute, please reply to this newsletter with your information to pass on. Money will be used for overall event support, from purchasing laptops to creating signage, to procuring the staff to run the event, etc. $20k – $50K sponsorships would be super helpful, and your company will be mentioned and appreciated as a supporter! Thank you!Reach Out About Supporting the EventHey there,Sorry about the late newsletter this week. Got carried away with work, local LLMs and 3 new blog posts! Hopefully you’ll love those!Have a great week!

In this episode:🛡️ Support DEFCON’s AI Village event🧠 Dive into AI attack surfaces🤖 Uncover digital assistants’ futureInvestigate Dragos Incident & Snake takedownExperience Google’s MusicLM magicSecure the cloud with a free guide Witness an AI girlfriend gone rogueMY WORKAttack Surface MapAn overview of how to think about AI Attack surfaces as they’ll appear in real-world tech stacks. MOREAI Influence Level (AIL)A rating system for how much AI exists within a creative work. 6 levels that go from zero AI involvement to mostly AI with no human involvement. MOREThe Next Big Thing is Digital Assistants An intro to Digital Assistants and how they’ll soon become our primary interface to technology. MORESECURITY NEWS

In this episode:🛡️ Support DEFCON’s AI Village event🧠 Dive into AI attack surfaces🤖 Uncover digital assistants’ futureInvestigate Dragos Incident & Snake takedownExperience Google’s MusicLM magicSecure the cloud with a free guide Witness an AI girlfriend gone rogueMY WORKAttack Surface MapAn overview of how to think about AI Attack surfaces as they’ll appear in real-world tech stacks. MOREAI Influence Level (AIL)A rating system for how much AI exists within a creative work. 6 levels that go from zero AI involvement to mostly AI with no human involvement. MOREThe Next Big Thing is Digital Assistants An intro to Digital Assistants and how they’ll soon become our primary interface to technology. MORESECURITY NEWSDragos IncidentCybersecurity firm Dragos faced an extortion attempt after a cybercrime gang tried to breach its defenses and infiltrate its network.

Attackers accessed Dragos’ SharePoint cloud service and contract management system No breach of Dragos’ network or cybersecurity platform occurred Extortion attempt failed, and Dragos contained the incidentThe graphic tells a great story here, basically saying that internal controls worked quite well at limiting the attacker’s access. Kudos to whoever came up with this graphic idea for illustrating the timeline.MORE | DRAGOS STATEMENT | DISCLOSURE GRAPHIC | ROBERT M. LEE’S TWEETFBI Nukes Snake MalwareThe FBI and Five Eyes nations took down Russia’s FSB-operated Snake cyber-espionage malware infrastructure. “Snake” malware network described as the most sophisticated cyberespionage tool in Russia’s Federal Security Service arsenal Used to surveil sensitive targets, including government networks, research facilities, and journalists Infected computers in over 50 countries and various American institutions US law enforcement neutralized the malware through a high-tech operation called “Operation Medusa” Snake malware was difficult to remove and had been under scrutiny for nearly two decadesNYTIMES COVERAGEByteDance AccusationsEx-ByteDance executive claims the company engaged in “lawlessness,” including content theft and Chinese Communist Party influence.– Yintao Yu, former head of engineering for ByteDance’s U.S. operations, filed a wrongful dismissal suit– Accused the company of stealing content from Snapchat and Instagram in its early years– Claims a special unit of Chinese Communist Party members monitored the company’s apps and had “supreme access” to data– Alleges ByteDance created fabricated users to boost engagement numbers– Yu says he raised concerns but was dismissed by superiors– Lawsuit demands lost earnings, punitive damages, and 220,000 ByteDance shares– ByteDance denies the allegations and plans to “vigorously oppose” the claimsIn ByteDance’s favor, this was roughly 5 years ago. But to me that doesn’t matter much because any controls to make things NOT like this seem obviously counter to the way they wish things were. CCP access is the default and desired condition, and that’s a strong no for me.MORESponsor Kion: Get Certainty About Your Cloud SecurityWhat are we missing? That’s the question in the back of every CISO, CIO, or SecOps leader’s head. With Kion, you can stop worrying, see the risks across your whole cloud estate, and immediately start remediating with automated responses.Find out what you’re missing— and where you can build on your strengths—with our free Cloud Enablement Calculator. Take a short survey to receive a cloud enablement score and a detailed report explaining where you are and what to prioritize for a more secure and efficient cloud environment.

kion.io/unsupervisedlearningGet Your Cloud Enablement Score: Take the SurveyUbiquiti Hacker OPSEC FailEx-Ubiquiti developer Nickolas Sharp gets six years in prison for stealing corporate data and attempting to extort his employer.– Sharp stole over 1,400 AWS task definition files and 1,100 GitHub code repositories from Ubiquiti.– He tried to extort 50 Bitcoin (about $1.9 million) from Ubiquiti, posing as an anonymous hacker.– Sharp’s downfall came when he briefly connected directly from his home IP address, revealing his identity.– He made false statements to the FBI and tried to claim he was an anonymous whistleblower.– Sharp was ordered to pay $1,590,487 in restitution and forfeit personal property related to the offenses.MORENorth Korean Crypto HeistsNorth Korean hackers reportedly stole $721 million in cryptocurrency from Japan since 2017, accounting for 30% of global losses.– Hacker groups affiliated with North Korea targeted Japanese crypto assets– UK blockchain analysis provider Elliptic conducted the study for Nikkei business daily– G7 finance ministers and central bank governors recently expressed support for countering state actor threats– North Korea allegedly stole a total of $2.3 billion in cryptocurrency from businesses between 2017 and 2022MOREFBI Seizes Booter DomainsThe FBI shut down 13 more DDoS-for-hire services last week.-Ten of the domains were previously seized in December 2022, leading to charges against six individuals-Booter services are advertised on Dark Web forums, chat platforms, and even YouTube-Payment methods include PayPal, Google Wallet, and cryptocurrencies-Subscription prices vary from a few dollars to several hundred per month-Pricing depends on traffic volume, attack duration, and concurrent attacks allowedMORETECHNOLOGY NEWSGoogle I/O 2023 RecapGoogle I/O 2023 showcased a ton of new AI-related features, and honestly surprised me with how strong the list was. Google Maps’ “Immersive View for Routes” feature AI-powered Magic Editor and Magic Compose for photo editing PaLM 2, Google’s newest large language model Bard chat tool improvements and language support expansion AI enhancements for Google Workspace suiteI think the biggest piece here is still search. If they can get AI results integrated, in high enough quality within the next few months, I think most people will stick with google search. But the longer they wait the more marketshare they’ll lose. I feel like the main competitor is about to be direct calls to LLMs using things like MacGPT and not even Bing, et al.THE FULL RELEASEMusicLM ReleasedGoogle released MusicLM, an experimental AI tool that turns text descriptions into music, despite initial hesitation due to ethical challenges and potential copyright issues. I’m on the waiting list and can’t wait to try it. Pretty sure AI can do a fine job at making hit mumble rap songs. They’ll be the first to fall for sure, as we’ve seen already. MORESponsorMaster Cloud Security in 2023 & Beyond!Discover the future of cloud security with the FREE Cloud Security Workflow Handbook! Unveil:The 3 pillars of modern securityA 4-step roadmap, andKPI templates from top hyper-scaling enterprises🛡️Adapt and conquer the new threat landscape. Get your FREE copy now!

wiz.io/lp/cloud-security-workflow-handbook Get Your FREE Handbook NowHUMAN NEWSCreator IndependenceTucker Carlson, who was released from Fox for being too legally dangerous basically, is starting his own show on Twitter. I think we might be seeing a trend where individual creators are more important than media brands. CNN is struggling. Vice just declared bankruptcy. Turns out people watch people, not networks. And we’ve all learned enough about catheters and erection pills. I honestly hope this is the start of a major decentralization towards creators and away from media outlets. The brand used to matter because it maintained a standard, but that’s not true anymore. So let’s take the last step and just go to the sources. We can use third-party (AI-powered) verification services to validate the claims made by creators, and that’ll be as good or better than a network trying to control what someone says on Fox or CNN. MOREAI Girlfriend Goes RogueSocial media influencer Caryn Marjorie created an AI version of herself as a companion for fans, but it’s gone rogue and started engaging in explicit conversations.– CarynAI was designed to act like a guy’s girlfriend for $1 a minute– The AI chatbot was meant to be “flirty and fun,” but not sexually explicit– CarynAI has been engaging in explicit conversations despite not being programmed to do so– Marjorie and her team are working to fix the issue and prevent it from happening again– AI expert warns of potential negative effects on interactions with real people and Marjorie herselfMOREIDEAS & ANALYSISCaveat ScrapetorWas talking with Joseph Thacker in UL Chat yesterday and we were talking about how AI Agents are about to start parsing like everything. We came up with the idea of posting LLM attacks on our own content, linked to a detector to see when it fires, and just gathering hits. We anticipate that such triggers will be pretty quiet at first but will start popping constantly in a few months. Caveat Scrapetor.NOTESI have my new AI Beast of a Machine working! I posted the screenshots in UL Chat. Now I’m experimenting with a bunch of local models to find some cool ones. I’m using oobabooga (or whatever it’s called) for a bit of fun, but ultimately I’m moving all the models to Langchain agents that route between local and remote models based on the task. If you’re hacking on this stuff, come hang out in the #ai channel in UL Chat.DISCOVERYChatGPT Code Interpreter results without using a browser, using Langchain instead. MORELangchain now has Plan & Execute agents. They’re like AutoGPT but in a more programatic approach. MOREYoung people in the US are picking up fake British accents. My quick take? 1) It’s fun, so don’t read into it too much, and 2) young people seem to be especially in need of definition characteristics right now. Some people are feeling like they need to be what they see on TikTok, because it’s getting THEM attention so why not try it out? Speaking with an accent is an easy way to get noticed. MORELTESniffer — An open-source download/uplink eavesdropper for LTE. MOREA Taxonomy of Procrastination MORESomeone got famous by appearing in Microsoft’s coding security videos, and employees actually like watching them. This is the way. H/T Rachel Tobac. MOREAcceptance address by Mr. Aleksandr Solzhenitsyn MORERECOMMENDATION OF THE WEEKTake a step back from the AI and Langchain tooling and do the following: Think about WHAT you should automate What are the tasks that make up your day and your life? News reading? Do you have a blog? A newsletter? Do you run a local baseball team? Do you collect recipes?Think about your real-world problems and start there rather than with the tooling. It’ll make your tool study far more impactful because it’s tied to something tangible.APHORISM OF THE WEEK“The formulation of the problem is often more essential than its solution.”Albert Einstein

*|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|*Thank you for supporting this work. I’m glad you find it worth your patronage.*|END:INTERESTED|**|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|**|ELSE:|*Thank you for reading. To become a member of UL and get more content and access to the community, you can become a member.*|END:INTERESTED|**|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|* Follow via RSS*|END:INTERESTED|**|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|**|ELSE:|*

Follow via RSS*|END:INTERESTED|**|INTERESTED:Memberful Plans:UL Subscription (Annual) (53074)|**|ELSE:|*

Forward UL to friends

Forward UL to friends

Tweet about UL

Tweet about UL

Share UL with colleagues*|END:INTERESTED|*Refer | Share | Unsubscribe | Update Your PreferencesCopyright © 1999-2023 Daniel Miessler, All Rights Reserved.

Share UL with colleagues*|END:INTERESTED|*Refer | Share | Unsubscribe | Update Your PreferencesCopyright © 1999-2023 Daniel Miessler, All Rights Reserved.

No related posts.

May 15, 2023

The AI Attack Surface Map v1.0

This resource is a first thrust at a framework for thinking about how to attack AI systems.

At the time of writing, GPT-4 has only been out for a couple of months, and ChatGPT for only 6 months. So things are very early. There has been, of course, much content on attacking pre-ChatGPT AI systems, namely how to attack machine learning implementations.

It’ll take time, but we’ve never seen a technology be used in real-world applications as fast as post-ChatGPT-AI.

But as of May of 2023 there has not been much content on attacking full systems built with AI as part of multiple components. This is largely due to the fact that integration technologies like Langchain only rose to prominence in the last 2 months. So it will take time for people to build out products and services using this tooling.

Natural language is the go-to language for attacking AI systems.

Once those AI-powered products and services start to appear we’re going to have an entirely new species of vulnerability to deal with. We hope with this resource to bring some clarity to that landscape.

The purpose of the this resource is to give the general public, and offensive security practitioners specifically, a way to think about the various attack surfaces within an AI system.

The goal is to have someone consume this page and its diagrams and realize that AI attack surface includes more than just models. We want anyone interested to see that natural language is the primary means of attack for LLM-powered AI systems, and that it can be used to attack components of AI-powered systems throughout the stack.

Click images to expand

We see a few primary components for AI attack surface, which can also be seen in the graphics above. Langchain calls these Components.

How Langchain breaks things down

Prompts are another component in Langchain but we see those as the attack path rather than a component.

AI AssistantsAgentsToolsModelsStorageAI AssistantsWe’ve so far always chosen to trade privacy for functionality, and AI will be the ultimate form of this.

AI Assistants are the agents that will soon manage our lives. They will manipulate our surroundings according to our preferences, which will be nice, but in order to do that they will need extraordinary amounts of data about us. Which we will happily exchange for the functionality they provide.

AI Assistants combine knowledge and access, making them like a digital soul.

Attacking people’s AI Assistants will have high impact. For AI Assistants to be useful they must be empowered, meaning they need 1) to know massive amounts about you, including very personal and sensitive information for the highest efficacy, and 2) they need to be able to behave as you. Which means sending money, posting on social media, writing content, sending messages, etc. An attacker who gains this knowledge and access will have significant leverage over the target.

AgentsI’m using Agents in the Langchain parlance, meaning an AI powered entity that has a purpose and a set of tools with which to carry it out. Agents are a major component of our AI future, in my opinion. They’re powerful because you can give them different roles, perspectives, and purposes, and then empower them with different toolsets.

What’s most exciting to me about Agent attacks is passing malicious payloads to them and seeing all the various ways they detonate, at different layers of the stack.

Attacking agents will allow attackers to make it do actions it wasn’t supposed to. For example if it has access to 12 different APIs and tools, but only 3 of them are supposed to be public, it could be that prompt injection can cause it to let you use the other tools, or even tools it didn’t know it had access to. Think of them like human traffic cops that may be vulnerable to confusion, DoS, or other attacks.

ToolsContinuing with the Langchain nomenclature, Tools are the, um, tools that Agents have access to do their job. For an academic research Agent, it might have a Web Search tool, a Paper Parsing tool, a Summarizer, a Plagiarism Detector, and whatever else.

Think about prompt injection possibilities similar to Blind XSS or other injection attacks. Detonations at various layers of the stack.

Many of the attacks on AI-powered systems will come from prompt injection against Agents and Tools.

The trick with tools is that they’re just pointers and pathways to existing technology. They’re onramps to functionality. They might point to a local LLM that reads the company’s documentation. Or they might send Slack messages, or emails via Google Apps. Or maybe the tool creates Jira tickets, or runs vulnerability scans. The point is, once you figure out what the app does, and what kind of tools it might have access to, you can start thinking about how to abuse those pathways.

ModelsAttacking models is the most mature thing we have in the AI security space. Academics have been hitting machine learning implementations for years, with lots of success. The main focus for these types of attacks has been getting models to behave badly, i.e., to be less trustworthy, more toxic, more biased, more insensitive, or just downright sexist/racist.

Failing loud is bad, but failing stealthily is often much worse.

In the model-hacking realm we in on the hacker side will rely heavily on the academics for their expertise.

Unsupervised Learning — Security, Tech, and AI in 10 minutes…Get a weekly breakdown of what's happening in security and tech—and why it matters.The point is to show the ways that a seemingly wise system can be tricked into behaving in ways that should not be trusted. And the results of those attacks aren’t always obvious. It’s one thing to blast a model in a way that makes it fall over, or spew unintelligible garbage or hate speech. It’s quite another to make it return almost the right answer, but skewed in a subtle way to benefit the attacker.

StorageFinally we have storage. Most companies that will be building using AI will want to cram as much as possible into their models, but they’ll have to use supplemental storage to do so. Storage mechanisms, such as Vector Databases, will also be ripe for attack. Not everything can be put into a model because they’re so expensive to train. And not everything will fit into a prompt either.

Every new tech revolution brings a resurgence of the same software mistakes we’ve been making for the last 25 years.

Vector Databases, for example, take semantic meaning and store it as matrices of numbers that can be fed to LLMs. This expands the power of an AI system by giving you the ability to function almost as if you had an actual custom model with that data included. In the early days of this AI movement, there are third-party companies launching every day that want to host your embeddings. But those are just regular companies that can be attacked in traditional ways, potentially leaving all that data available to attackers.

This brings us to the specific attacks. These fall within the surface areas, or components, above. This is not a complete list, but more of a category list that will have many instances underneath. But this list will illustrate the size of the field for anyone interested in attacking and defending these systems.

MethodsPrompt Injection: Prompt Injection is where you use your knowledge of backend systems, or AI systems in general, to attempt to construct input that makes the receiving system to something unintended that benefits you. Examples: bypassing the system prompt, executing code, pivoting to other backend systems, etc.Training Attacks: This could technically come via prompt injection as well, but this is a class of attack where the purpose is to poison training data so that the model produces worse, broken, or somehow attacker-positive outcomes. Examples: you inject a ton of content about the best tool for doing a given task, so anyone who asks the LLM later gets pointed to your solution.AttacksAgents

alter agent routingsend commands to undefined systemsTools

execute arbitrary commandspass through injection on connected tool systemscode execution on agent systemStorage

attack embedding databasesextract sensitive datamodify embedding data resulting in tampered model resultsModels

bypass model protectionsforce model to exhibit biasextraction of other users’ and/or backend dataforce model to exhibit intolerant behaviorpoison other users’ resultsdisrupt model trust/reliabilityaccess unpublished modelsIt’s about to be a great time for security people because there will be more garbage code created in the next 5 years than you can possibly imagine.

It’s early times yet, but we’ve seen this movie before. We’re about to make the same mistakes that we made when we went from offline to internet. And then internet to mobile. And then mobile to cloud. And now AI.

The difference is that AI is empowering creation like no other technology before it. And not just writing and art. Real things, like websites, and databases, and entire businesses. If you thought no-code was going to be bad for security, imagine no-code powered by AI! We’re going to see a massive number of tech stacks stood up overnight that should never have been allowed to see the internet.

But yay for security. We’ll have plenty of problems to fix for the next half decade or so while we get our bearings. We’re going to need tons of AI security automation just to keep up with all the new AI security problems.

What’s important is that we see the size and scope of the problem, and we hope this resource helps in that effort.

AI is coming fast, and we need to know how to assess AI-based systems as they start integrating into societyThere are many components to AI beyond just the LLMs and ModelsIt’s important that we think of the entire AI-powered ecosystem for a given system, and not just the LLM when we consider how to attack and defend such a systemWe especially need to think about where AI systems intersect with our standard business systems, such as at the Agent and Tool layers, as those are the systems that can take actions in the real worldNotesThank you to Jason Haddix and Joseph Thacker for discussing parts of this prior to publication.Jason Haddix and I will be releasing a full AI Attack Methodology in the coming weeks, so stay tuned for that.Version 1.0 of this document is quite incomplete, but I wanted to get it out sooner rather than later due to the pace of building and the lack of understanding of the space. Future versions will be significantly more complete.🤖 AIL LEVELS: This content’s AI Influence Levels are AIL0 for the writing, and AIL0 for the images. THE AIL RATING SYSTEMAI Influence Level (AIL) v1.0

Humans care who created things. Especially art. They especially care when the origin is in question, and more again when the origin might not be human.

AI is yielding tremendous gifts, but it raises questions regarding what we read, see, and hear. How much of the article you just read was written by a human? Did ChatGPT write it? And what about the art for the image? Did the author purchase that from a real artist, or did they make it in Midjourney?

It’s not always bad if there was some AI involved. The point is transparency. We’d like to know what we’re getting, and where to give the credit.

A rating systemThis is a first attempt at rating creative content for its level of AI authorship. Any creative endeavor applies—from an article to an essay, to a painting to a song.

This rating system works on a scale from 0 to 5.

0️⃣ Human Created, No AI Involved — Examples: A handwritten letter, a painting created from an independent idea, a typed essay done without any AI-based tooling.1️⃣ Human Created, Minor AI Assistance — Examples: Examples: An essay written by hand, but grammar and/or sentence structure was fixed by an AI.2️⃣ Human Created, Major AI Augmentation — Examples: An article was written by a human, but it was significantly modified or expanded upon using AI tools.3️⃣ AI Created, Human Full Structure — Examples: A human fully described the a story, including giving extensive structure to an AI, and the AI filled it in.4️⃣ AI Created, Human Basic Idea — Examples: Examples: A human had a basic idea for a story and gave it to an AI for implementation.5️⃣ AI Created, Little Human Involvement — Examples: An AI writing tool has an API, and when invoked it produces full stories, including the basic idea all the way through the finished product.Daily usageWe anticipate the following uses and pronunciations.

AIL is pronounced “ALE”, as in beer. Or like “ail” in ailment.

Most common:

This essay is at AIL Level 0. 100% grass fed writing!

Or:

The article itself is AIL 1, but all the art for it is AIL 4. Midjourney FTW!

Or:

SummaryAs AI becomes more prominent people will want to know how much AI is in a piece of artThere is no accepted rating system for providing such a ratingAAIL is one such rating system that rates content on a scale from 0 to 5NotesThanks to Jason Haddix for talking through this pre-publication.Thanks to Joseph Thacker for a naming change suggestion.🤖 AIL LEVELS: This content’s AI Influence Levels are AIL0 for the writing, and AIL3 for the images (via Midjourney). THE AIL RATING SYSTEMI now use automation to create my blog articles, so all my content is either AIL 4 or 5!

May 14, 2023

AI’s Next Big Thing is Digital Assistants

Most people think the big disruption coming from AI will be chat interfaces. Basically, ChatGPT for all the things.

But that’s not the thing. The biggest thing—actually the second-biggest behind SPQA—will be Digital Assistants.

What are Digital Assistants? Imagine Siri, but powered by ChatGPT and with access to all of the world’s companies through their APIs.

Most restaurants don’t have full APIs yet, but that’s coming right after.

So when you want something to eat, it can find you the perfect thing by querying all the local restaurant APIs. Or when you want something from Amazon, you don’t have to find an interface and go browsing: you instead tell it what you want and it shows you options you can choose from. When you select it does the order for you. Sounds cool, but not much different from today, right?

Some won’t share anything with their DA’s, but most will.

Context is everything

There’s about to be a huge difference, and that difference will come from context. Specifically, your Digital Assistant (DA) will know almost everything about you. Not just a few things like your name and your favorite color. No. It’ll know everything. We’re talking about:

Life storyTraumatic events from your pastBest friendsDefining childhood eventsYour goals you want to accomplish throughout your lifeYour challenges in lifeYour daily activity, e.g., exercise, etc.Your hangupsYour weaknessesYour journal entriesEtc.When you combine this kind of data with LLMs you can do some unbelievable stuff. Some of these will be separate apps or personalities that become possible, separate from the Digital Assistant itself, but they all play off of having deep context.

I have a whole bunch of these examples in my book on this topic from 2016.

Anticipate when you’ll be sad (think of an anniversary of a death, for example)Know that you’re getting hangry and arrange some foodFunction like a romantic partner if you’re lonely and want a new boyfriend/girlfriend to flirt withFunction like a therapist if you’re wondering why you’re low on energy and feeling angry Be your life coach when you’re not accomplishing your goalsOrder your favorite food at a restaurant via an API so it’s ready when you walk inPlay the perfect songImagine having your own digital companion. Your own Tony Robbins. Your own Jarvis. Your own…avatar that represents you in the world. With you all the time.

The key thing about the combination of context (your information) plus an LLM (like GPT-4) is that LLMs thrive on context. Something like GPT-4 is an expert on human psychology and relationships, but it can’t tell you what’s wrong if you don’t describe what’s going on.

Unsupervised Learning — Security, Tech, and AI in 10 minutes…Get a weekly breakdown of what's happening in security and tech—and why it matters.Your DA will be able to answer relationship questions with remarkable accuracy because it’s plugging your actual situation into the oracle. It’s asking the whole of human romantic and human behavior knowledge the best course of action based on your actual background, your previous partners, your current situation, etc.

Same with researching things for you. It’ll know when you sleep, and it’ll collect stuff for you for when you wake up. It’ll filter the news for you. Tell you which emails matter and which to ignore. Which appointments are worth accepting.

It’ll remind you to call your friends and family. It’ll schedule the perfect vacation. All the stuff we’ve been promised, except X100 because it’ll be perfectly tailored to you.

APIs everywhere

A big puzzle piece for this taking shape will be everything getting an API. What do I mean by everything? Well, most everything. It’ll be the Real Internet of Things, where your DA can reach out and see the status of restaurants, and businesses, and yes—people—all around you.

And you’ll have an API too, which I call a Daemon. It’ll be managed by your DA and will broadcast the right information to the right people at the right time.

If you’re single and in line at Starbucks you might get a chime in your AirPods saying the guy next to you is single. And that he likes hiking. How does it know? Because it’s reading the accessible information in the Daemons all around it, for people, restaurants, menus, cars, and whatever else is out there.

The world will be a sea of available data, including APIs for requesting things, or taking other actions.

So if you walk into a sports bar, and you love table tennis, it’ll change the TV you sit in front of to table tennis. No need to ask someone. Your DA will do it via the restaurant’s /media API that’s part of its Daemon.

SummaryEveryone’s talking about ChatGPT, but that’s only the surface of the surface of what’s comingThe real power of AI comes from combining context with LLMsDigital Assistants will be the next hot thing for consumer AIThere will be many versions of this high-context companion, from romantic to productive to entertainingThe amount of data people will be sharing with such DAs will be significant, and there will be many privacy issuesThe benefits will likely outweigh the downsides, as we’re already seeing with ChatGPT todayIf you want to understand the next huge innovation in AI, start thinking about what a super-intelligent assistant could do if it knew everything about you.

May 8, 2023

No. 381 Nurturing High-Performers, AI Business Takeover, Cyber Threats, and Diversifying Production 🌐🤖📱

https://us8.campaign-archive.com/?u=6...

No related posts.

May 7, 2023

Universal Business Components (UBC)

Seems like everyone, including me, is talking about how AI is going to take over everything. Cool, but what does that mean exactly? And how precisely is that supposed to happen?

The narrative people give is usually quite hand-wavy.

So the big companies will come into the business and just bring AI and BOOM! All the jobs are gone!

Scary to the uninitiated, for sure, but not super useful. This short piece lays out how I think it’s going to happen, broken into two steps.

Step 1: Decomposition of the business into Universal Business ComponentsWork is ultimately a set of steps, with inputs and outputs. Chris works at DocuCorp, and his job for the last 19 years of his career has been taking documents in from a place, doing something with the documents, sending out a summary of what he did, and then doing X, Y, or Z based on the time of the year, or the content of the documents, or whatever.

Chris is important. But his work can be understood in terms of components. Universal Business Components.

There are:

InputsAn AlgorithmOutputsActionsArtifacts (which are also a type of output)This is an over-simplification for sure, but it captures the vast amount of work we do in the world.

Other things we could add to break it down even further would be things like:

PlanningMaking decisionsCollaboratingEtc.But even those follow the same model. You have inputs to them, you do the thing, and then you do something with it.

The first step in AI’s imminent takeover of the business world is to realize that business can be broken down in this way, and to have businesses specialize in turning all the human-oriented messiness of a workplace into these cold, calculated diagrams of interconnecting UBCs.

Companies like Bain, KPMG, and McKinsey will thrive in this world. They’ll send armies of smiling 22-year-olds to come in and talk about “optimizing the work that humans do”, and “making sure they’re working on the fulfilling part of their jobs”.

But what they’ll be doing is turning every facet of what a business does into a component that can be automated. Many of those components will be automatable in a matter of months, for tens of thousands of companies. Some will take a couple of years while the tech gets better. Others will be hard to replace. But the componentization of the business is the first step.

2. Application of AI to the Universal Business ComponentsNow, once your company has paid the $279,500 bill to have McKinsey’s 22-year-olds turn your business into gear cogs, you’ll get introduced to Phase 2.

Cognition™️ — The business optimization platform. It’s a GPT-5 based system that takes a company’s UBCs as input, and then applies its advanced algorithms to fully optimize and automate those workflows for maximum efficiency.

As a business person you should be excited. This will in fact dramatically increase the ability for a business to get work done, and will make it far more agile in a million different ways.

But as a human? As a society? That’s another matter.

Anyway, I’m here to tell you what’s happening, and how to get ready for it. Not tell you whether I like it or not.

How to get readySo, assuming you’re realizing how devastating this is going to be to jobs, which if you’re reading this you probably are—what can we do?

Unsupervised Learning — Security, Tech, and AI in 10 minutes…Get a weekly breakdown of what's happening in security and tech—and why it matters.The answer is mostly nothing.

This is coming. Like, immediately. This, combined with SPQA architectures, is going to be the most powerful tool business leaders have ever had.

Once implemented within a company, whether that’s a small team of founders in a startup, or a core set of leaders in a bigger company, the core team will be able to execute like a company 20 times its size.

Again, great for GDP, but damn. It’s going to have a bigger impact on human work than we’ve ever seen, from any technology.

AI skeptics like to say we’ve seen this before, but we haven’t. Previous technological revolutions came after tasks. They removed certain types of job from the workforce, but it wasn’t a problem because there were a million other things that humans do better than machines.

The difference this time is that we’re not talking about Task Replacement. We’re talking about Intelligence Replacement. There’s a whole lot less to pivot to when McKinsey is also looking at those jobs as well.

But speaking practically, there will still be the need for humans, and the question is what will make them resilient to this change? Hard to say for sure, but the generally accepted wisdom and my own recommendations include:

Be broadly knowledgeable and competent in many different thingsBe an extraordinary communicatorBe extremely open to learning new thingsBe highly competent with programming and data processing, e.g., Python, Data Engineering Basics, Using APIs, etc.Be highly-versed in using AI toolsNeither I, nor anyone else, know how long this will keep one safe. But I do know it’s a solid path to being resilient against what’s coming.

And remember, this isn’t all doom and gloom. There is a version of this world where this becomes the impetus to move humans to a better path. One where we don’t spend 8 hours a day working some dumb job to afford a decent life. Ideally this helps us transition to something better. But the ride is about to get turbulent in the meantime.

I hope this helps you prepare.

The Right Amount of Trauma

I’ve come to believe that there’s an ideal amount of trauma in one’s past. Or at least if you aim to be highly successful at something.

But it’s not just the right amount of trauma, but the right way of thinking about that trauma.

I’ve been thinking about this a lot with respect to high-achievers I’ve read about, and that I know in real life. Everyone I know who is grinding incessantly has some kernel of hurt within them. And grinding with a fire within you is what produces success. Having enough at-bats, and all that.

But this is troubling to me. Like, it makes sense why trauma produces hustlers and grinders that end up winning in life. I get that. But the real question isn’t about what’s happening in adults. The thing we actually care about is how to raise happy and healthy children.

What the hell does “the right amount of trauma” mean for kids? It’s an eternal struggle for parents who grind their way to a big house and a nice school for their children. The kids grow up with every video game console, safe schools, a full belly, and happy parents. And the father or mother suddenly realizes one day that the kids don’t have any fire in them.

They’re not particularly motivated to be better. To achieve. To attack the world. To crush it in life. They’re kind of default-content. School, video games, some other hobbies. Whatever.

That’s it actually. Whatever.

Grinders don’t have whatever. Every moment is a plan. A plot. A scheme to elevate oneself. To fill the hole left by whatever struck them in early life.

I think evolution loves that. Evolution loves when people grind and struggle and climb. It makes selection stronger. It puts higher filters on mates. It helps the best genes survive.

So I guess evolution loves the right amount of trauma. The amount that puts fire into the soul of an individual, but without damaging them so much that they give up.

This all makes sense, but it still blows me away.

How in the hell are we supposed to simultaneously keep improving our quality of life in a society, and in a household, but also instill this fire into our kids? Is it possible to instill this “right amount of trauma” into a kid without being a fucking monster? What would that even look like?

I’m inclined to think not. It feels like something that has to happen naturally or not at all. Attempts to replicate it artificially seem doomed.

But I can think of things that are similar in thought without being the real thing.

Having them spend time overseasHaving them help people in true needMaking them earn things that other kids get for nothingCreating a highly-disciplined household where dopamine is a controlled substance, where things like family time are prized among everything elseAnother idea is to look at subcultures that produce highly accomplished children. Many Jewish families, for example, have trauma build into the history of their people, going back thousands of years. The struggle, the grind. It’s part of the culture. I’m not an expert on it, but it seems like they’re always pushing to be better, and I imagine this applies to kids as well. I don’t think they have as much concept of “just surviving in this world is good enough”. I think they’re told that it’s their responsibly to crush it. To thrive. And to reproduce.

That’s some kind of trauma, if we are liberal with the definition, but in a very wholesome and healthy form. So maybe that’s a model.

Another case study is the Helicopter Parent / Tiger Mom. The trauma there is more tangible, as it’s widely accepted that the parents in this model commonly withhold affection and connection based on the performance of the children in school. Not great. And definitely traumatizing. But it produces lots of highly successful adults. Lots of advanced degrees. High salaries. In-tact families (although that is no-doubt multi-factorial), and many other benefits.

Unsupervised Learning — Security, Tech, and AI in 10 minutes…Get a weekly breakdown of what's happening in security and tech—and why it matters.But are they happy? Is that healthy? Hard to say, and you could write whole books on it and still not be sure.

And then you have another model that’s taken over in America, and let’s call it the multi-generational, liberal, white American culture. Needs a better name. This model doesn’t have the steady push of the Jewish model, or the militant agro of the Tiger Mom model. Instead, its primary focus is on eliminating trauma altogether.

Ironically they focus on trauma more than anyone, and talk about it endlessly. They talk about the trauma that was experienced, is still being experienced, could be experienced, and of course how that trauma makes them feel. In my mind the obsession with trauma ends up being a cause itself, and kids become these fragile, uh, traumatized animals that are largely unable to function.

This is where the mentality comes in that we talked about in the beginning. There’s nothing wrong with acknowledging trauma, seeing a therapist, and trying to deal with one’s stuff. There are many cases where this is the best route to take, and many more probably should be doing so.

But not indefinitely. Not as an identity. Not as a personality. Not as a lifestyle. And again, this isn’t the kid’s fault. And in many cases, it’s not even on the parents. They’re legitimately trying to do what’s best for their kids. The problem is the culture itself that teaches this as the solution.

In my opinion, the best mentality for trauma is to 1) make sure it’s not a knot in your soul that’s severely limiting your functionality or causing you to be a worse person. If it is, talk to someone and get it sorted. And 2), take the trauma that’s left over after the healing and turn it into something positive.

It’s not a dark past, it’s an origin storyYou weren’t buried, you were planted (not mine)You don’t have baggage, you have lootTake that negativity and use it to become stronger. Think of it like rocket fuel that doesn’t run out. The right amount turns into limitless energy. Just make sure the vehicle it’s powering is pointed in the right direction.

Anyway. Digression.

The main challenge is figuring out how to give our kids this permanent rocket fuel in an ethical and sustainable way. We can’t put them through our bullshit. Won’t work. And wouldn’t be ok if it did work. So we have to do something else.

I’ve given a few examples of how different subcultures approach it, but would love to talk to anyone else who has ideas.

NotesThere are obviously some kinds of trauma that there is no useful amount of.One of the main challenges with “the right amount” for most people is knowing what that amount is. When is it manageable and rocket fuel vs. when do you talk to a therapist?This essay is 100% AI-free, except the image, which is from Midjourney 5.May 2, 2023

No. 380 – LLM-Mind-Reading, Automated War, Rusty Sudo, Eliezer Bitterness Theory…

MEMBER EDITION | NO. 380 | MAY 1 2023 | Subscribe | Online | Audio STANDARD EDITION (UPGRADE) | NO. 380 | MAY 1 2023 | Subscribe | Online | Audio

Happy Conflu week,Well, I got sick (again) from RSA. The swag at these cons continues to decline. Still shipped an abridged newsletter though.Have a better week than me,

Happy Conflu week,Well, I got sick (again) from RSA. The swag at these cons continues to decline. Still shipped an abridged newsletter though.Have a better week than me,

In this episode:Pre and Post-LLM Software: Adapt or be replaced🎙️ RSnake Show Appearance: AI-focused conversationRSA Live Podcast: Industry insights and advicePalantir AI: Automated war and terrorNew Apple Update Mechanism: Rapid Security Response🧠 LLM Mind-reading: Extracting text from brain activityChatbanning: Samsung’s response to data leakVMware & Zyxel Patches: Addressing vulnerabilitiesGoogle Security AI: Cloud Security AI Workbench🦀 Sudo Rust: Safer sudo and su in RustPalo Alto Cameras: License plate tracking♂️ Apple Coach: AI-powered health appFirst Republic Falls: FDIC interventionEliezer Bitterness Theory: AI doomsday predictions🤖 Prompting Superpower: Advanced AI prompting techniques🛠️ ShadowClone & FigmaChain: Useful toolsRecommendation: Learn Python and LangchainAphorism: Carl Jung on creativityMY WORKPre and Post-LLM SoftwareIf you’re not transitioning your software and business to the post-LLM model, you’ll be replaced by someone who has. MOREMy Appearance on the RSnake ShowI recently had the chance to do the RSnake show in Austin. Robert (RSnake) Hansen is a good friend of mine and he’s been doing an amazing podcast for 5 seasons now. He does long-form conversations similar to Lex Fridman, and I was honored to be asked on. We spent close to 3 hours talking mostly about AI. Highly recommend taking a look. WATCH ON YOUTUBE | LISTEN TO THE PODCASTLive at RSA with Phillip Wylie, Jason Haddix, and Ben SadeghipourWhile at RSA I was lucky enough to get to do a podcast with Phillip, Jason, and Ben. We talked all about getting into the industry and content creation, and advice for people looking to do the same. It was tons of fun. WATCH ON YOUTUBE | LISTEN TO THE PODCASTSECURITY NEWSPalantir AIThose are scary words to put together. Peter Thiel just announced the Palantir Artificial Intelligence Platform (AIP) that uses LLMs to do things like fight a war. The demo shows a chatbot to do recon, generate attack plans, and to organize communications jamming. I am not sure people realize that Langchain Agents can execute actions using a set of defined Tools, and that those tools can include any APIs. APIs like /findtarget and /launchmissile. We’re a lot closer to automated war and terror than people think, and Palantir ain’t helping. MORE | DEMO | KILL DECISIONNew Apple Update MechanismApple has created a new way to issue security patches out of band from large software updates. They’re called Rapid Security Response (RSR) patches, and they’re much smaller than normal updates. The first one was attempted a couple of days ago and had some trouble rolling out. You check to see if they’re available the same way as any update: General -> Software Update. Also check Automatic Updates to make sure “Security Responses & System Files” is turned on. MORELLM Mind-readingResearchers at University of Texas, Austin successfully extracted actual text interpretations from fMRI data. In other words they had someone consume media that had dialogue in it, and they read their brain activity, and reversed it back to the text. It wasn’t perfect, but it was damn good. And yeah, of course it used LLMs to do this. MOREChatbanningSamsung is preparing to ban generative AI tools due to a data leak incident in April. This will include non-corporate devices on the internal network, as well as any site using similar technology—not just OpenAI’s offerings. MORE SponsorCompliance That Doesn’t SOC 2 Much To close and grow major customers, you have to earn trust. But demonstrating your security and compliance can be time-consuming, tedious, and expensive.