Daniel Miessler's Blog, page 20

August 30, 2023

My Current Definition of AGI

People throw the term “AGI” around like it’s nothing, but they rarely define what they mean by it.

So most discussions about AGI (and AI more generally) silently fail because nobody even agrees on the terms.

My definition of AGI

Here’s my attempt. 🤸🏼♀️

—

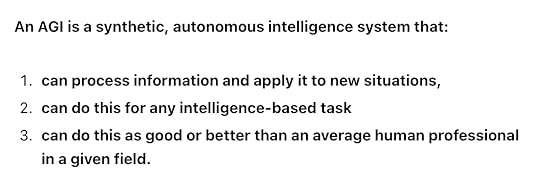

An AGI is a synthetic, autonomous intelligence system that:

can process information and apply it to new situations,

can do this for any intelligence-based task

can do this as well as or better than an average human professional in a given field.

Don’t smuggle in humanness

The biggest problem I see with people when they think about AI is that they secretly—even to themselves—define AI as whatever a human does.

But when they try to give a definition, it doesn’t include humanity.

Then when someone gives an example of something intelligent that’s not human, that meets their criteria, they say, “That’s not intelligence.”

Examples:

Write me a song about love between two AI’s that aren’t supposed to be conscious

Write a short story that uses themes from Russian Literature to discuss the existential challenges of living with AI in 2050

Someone afflicted with this problem will say something like:

In other words, since AIs can’t be creative, anything creative created by an AI isn’t REAL creativity. Same with intelligence, or whatever else people like this consider to be only possible in humans.

The escape hatch

There’s only one escape hatch for this, and that is strictly defining AGI, intelligence, and creativity and then forcing all parties to agree that—if these criteria are met—we will all agree it’s real. Even if it came from something other than a human.

I think this definition gets us there:

—

An AGI is a synthetic, autonomous intelligence system that:

can process information and apply it to new situations,

can do this for any intelligence-based task

can do this as well as or better than an average human professional in a given field.

I would love to hear thoughts on where I’m wrong and/or how to tighten the definition.

Powered by beehiiv

August 24, 2023

What Happens to Content When Top-Tier Production Quality is Commoditized?

I think AI is about to massively improve the quality of our best content. But not for the reason you might expect.

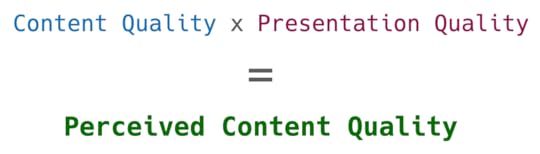

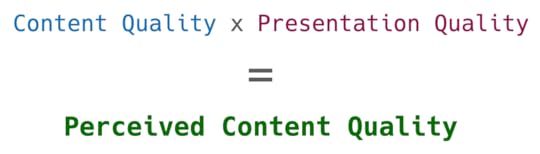

Not because AI is making better content (which will also be true), but because the way people perceive content is heavily influenced by how it looks. I think it works something like this.

That perceived bit is key. Look at this production quality here for Sam Harris on the Modern Wisdom podcast.

I mean, that’s gorgeous.

Or look at the wide shot of them talking. In a tricked-out warehouse. With the best lighting available to humanity.

It’s brilliant. And more importantly, it makes them look brilliant.

I can’t wait for AI to be able to do this for us. AI-augmented production quality will be an unbelievable boon for high-quality content like this. Why?

Because right now—generally speaking—only people making top 1% money can output top 1% production quality. That means most people creating content are at a severe disadvantage in our formula.

Content Quality * Presentation Quality = Perceived Content Quality

So let’s say the conversation above with Sam Harris and Chris Williamson is like a 9 on Content Quality, and a 9 on Production Quality. That’s an 81 on Perceived Quality, which is what matters.

Now let’s take another show that’s almost as good, but produced in the creator’s bedroom. So the content is an 8, but the Production is only a 6. That’s a total Quality of 48. Huge difference.

Or let’s say it’s Sam Harris and Richard Feynman and Einstein somehow. Best show ever. 10/10. So now it’s a Content 10, but the production is only a 7.

That’s a 70 vs. an 81.

The production quality magnifies (and reduces) the content too much.
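The arithmetic above is easy to check in a few lines. This is only a sketch of the post's multiplicative formula. The function name `perceived_quality`, the show labels, and the leveled production score of 9 (the low end of the 9-10 range the post expects AI to deliver) are illustrative assumptions.

```python
def perceived_quality(content: float, production: float) -> float:
    """Perceived quality as content score times production score (each on a 0-10 scale)."""
    return content * production


# (content, production) scores taken from the three examples in the post.
shows = {
    "Harris on Modern Wisdom": (9, 9),
    "Bedroom show": (8, 6),
    "Dream lineup, weaker production": (10, 7),
}

today = {name: perceived_quality(c, p) for name, (c, p) in shows.items()}

# Assumption: AI tooling lifts every show's production score to the same 9.
LEVELED_PRODUCTION = 9
leveled = {name: perceived_quality(c, LEVELED_PRODUCTION) for name, (c, _) in shows.items()}

for name in shows:
    print(f"{name}: today={today[name]}, leveled={leveled[name]}")
```

Once production is held equal, the ranking follows the content score alone, so under these assumptions the 10/10 conversation moves from second place to first.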

AI to the rescue

There are a number of things that signal production quality in a video.

For YouTube, there are tons of things (not counting great guests that are great looking or other human-based factors):

Chapter markers

Video description

Audio quality

Video quality

Setting

Lighting

Cinematography

Multiple cameras

Editing

Etc.

The difference between doing those well and not doing them, or doing those poorly, is monumental.

AI is going to democratize that. And when it does, everyone will have a production score of 9-10 (taking preferences out of the equation of course).

Then what will matter will be the content.

Can’t wait.

August 23, 2023

Thoughts on the Eliezer vs. Hotz AI Safety Debate

I just got done watching the debate on AI safety between George Hotz and Eliezer Yudkowsky on Dwarkesh Patel’s podcast.

The debate was quite fun to watch, but also frustrating.

What irked me about the debate—and all similar debates—is that they fail to isolate the disagreements. 90% of the discussion ends up being heat instead of light because they’re not being disciplined about:

1. Finding the disagreement

2. Addressing their position on that disagreement

3. Listening to a rebuttal

4. Deciding if that’s resolved or not

5. Either continuing on 2-4 or moving onto the next #1

Instead what they do (they being the General They in these debates) is produce 39 different versions of #2, back and forth, which is dazzling to watch, but doesn’t result in a #4 or #5.

It feels like a Chinese martial arts movie from the 80’s after you’ve seen a lot of MMA. Like why don’t you just HIT him? Why all the extra movements?

I think we can do better.

How I’d characterize and address each of their positions

I’m not saying I’d do better in a live debate with either of them. I could very well get flustered or over-excited and end up in a similar situation—or worse.

But if I had time and notes, I’d be able to do much better. And that’s what I have right now with this written response. So here’s what I see from each of them.

Hotz’ arguments

I’m actually not too clear on Hotz’ argument, to be honest, and that’s a problem for him. It’s also why I think he lost this debate.

He’s flashy. And super smart, obviously, but I feel like he was just taking sniper shots from a distance while mobile.

Wait, I think I have it. I think he’s basically saying:

Hotz Argument 1: We’ll have time to adjust

AI’s intelligence will not explode quickly

Timing matters a lot because if it moves slowly enough we will also have AIs and we’ll be able to respond and defend ourselves

Or, if not that, then we’d be able to stage some other defense

My response to this is very simple, and I don’t know why Eliezer and other people don’t stay focused very clearly on this.

1. We just accidentally got GPT-4, and the jump from GPT-2 to GPT-4 was a few years, which is basically a nanosecond

2. The evolution of humans happened pretty quickly too, and they had no creator guiding that development. That evolution came from scratch with no help whatsoever. And as stupid and slow as it was, it led to us typing this sentence and creating GPT-4

3. So given that, why would we think that humanity in 2023, when we just created GPT-4, and we’re spending what?—tens of billions of dollars?—on trying to create superintelligence, would not be able to do it quickly?

4. It’s by no means a guarantee, but it seems to me that given #1 and #2, betting against the smartest people on planet Earth, who are spending that much money, being able to jump way ahead of human intelligence very soon is a bad, bad bet

5. Also keep in mind that we have no reason whatsoever to believe human IQ is some special boundary. Again, we are the result of a slow, cumbersome chemical journey. What was the IQ of humans 2,000 years ago compared to the functional (albeit narrow) IQ of GPT-4 that we just stumbled into last year?

Hotz Argument 2: Why would AI even have goals, and why would they be counter to us?

There’s no reason to believe they’ll come up with their own goals that are counter to us

This is sci-fi stuff, and there’s no reason to believe it’s true

Eliezer addressed this one pretty well. He basically said that—as you evolutionarily climb the ladder—attaining goals becomes an advantage that you’ll pick up. And we should expect AI to do the same. By the way, I think that’s exactly how we got subjectivity and free will as well, but that’s another blog post.

I found his refutation of Hotz Argument #2 to be rock solid.

Now for Eliezer’s arguments.

Yudkowsky’s arguments

I think he really only has one, which I find quite sound (and frightening).

Given our current pace of improvement, we will soon create one or more AIs that are vastly superior to our intelligence

This might take a year, 10 years, or 25 years. Very hard to predict, but it doesn’t matter because the odds of us being ready for that when it happens are very low

Because anything that advanced is likely to take on a set of goals (see evolution and ladder climbing), and because it’ll be creating those goals from a base of massive amount of intelligence and data, it’ll likely have goals something like “gain control over as many galaxies as possible to control the resources”

And because we, and the other AIs we could create, are competitors in that game, we are likely to be labeled as an enemy

If we have lots of time and have advanced enough to have AIs to fight for us, this will be our planet against their sun. And if not, it’ll be their sun against our ant colony

In other words, we can’t win that. Period. So we’re fucked

So the only smart thing to do is to limit, control, and/or destroy compute

Like I said, this is extremely compelling. And it scares the shit out of me.

I only see one argument against it, actually. And it’s surprising to me that I don’t hear it more from the counter-doomers.

It’s really hard to get lucky the first time. Or even the tenth time. And reality has a way of throwing obstacles to everything. Including superintelligence’s ascension.

In other words, while it’s possible that some AI just wakes up and instantly learns everything, goes into stealth mode, starts building all the diamond nanobots and weapons quietly, and then—BOOM—we’re all dead…that’s also not super likely.

What’s more likely—or at least I hope is more likely—is that there will be multiple smaller starts.

A realistic scenario

Let’s say someone makes a GPT-6 agent in 2025 and puts it on GitHub, and someone gives it the goal of killing someone. And let’s say there’s a market for Drone Swarms on the darkweb, where you can pay $38,000 to have a swarm go and drop IEDs on a target in public.

So the Agent is able to research the darkweb, find where they can rent one of these swarms. Or buy. Whatever. So now there’s lots of iPhone footage of some political activist getting killed from 9 IEDs being dropped on him in Trafalgar Square in London.

Then within 48 hours there are 37 other deaths, and 247 injuries from similar attacks around the world.

Guess what happens? Interpol, Homeland Security, the Space Force, and every other law enforcement agency everywhere suddenly go apeshit. The media freaks out. The public freaks out. GitHub freaks out. OpenAI freaks out.

Everyone freaks out.

They find the Drone Swarm people. They find the Darkweb people. They bury them under the jail. And all the world’s lawmakers go crazy with new laws that go into effect on like…MONDAY.

Now, is that a good or a bad thing?

I say it’s a good thing. Obviously I don’t want to see people hurt, but I like the fact that really bad things like this tend to be loud and visible. Drone Swarms are loud and visible.

And so too are many other instances of an early version of what we’re worried about. And that gives us some hope. And some time.

Maybe.

That’s my only argument for how Eliezer could be wrong about this. Basically it won’t happen all at once, in one swift motion, in a way that goes from invisible to unstoppable.

Here’s how I wish these debates were conducted

Point: We have time. Counterpoint: We don’t. See evolution and GPT-4.

Point: We have no reason to believe they’ll develop goals. Counterpoint: Yes we do; goals are the logical result of evolutionary ladder climbing and we can expect the same thing from AI.

Etc.

We should have a GitHub repo for these. 🤔

Summary

I wish these debates were structured like the above instead of like Tiger Claw vs. Ancient Swan technique.

Hotz’ main argument is that we have time, and I don’t think we do. See above.

Eliezer’s main argument is that we’re screwed unless we limit/control/destroy our AI compute infrastructure. I think he’s likely right, but I think he’s missing that it’s really hard to do anything well on the first try. And we might have some chances to get aggressive if we can be warned by early versions failing.

Either way, super entertaining to see both of them debate. I’d watch more.

August 20, 2023

ATHI — An AI Threat Modeling Framework for Policymakers

My whole career has been in Information Security, and I began thinking a lot about AI in 2015. Since then I’ve done multiple deep dives on ML/Deep Learning, joined an AI team at Apple, and stayed extremely close to the field. Then late last year I left my main job to go full-time working for myself—largely with AI.

Most recently, my friend Jason Haddix and I joined the board of the AI Village at DEFCON to help think about how to illustrate and prevent dangers from the technology.

Many are quite worried about AI. Some are worried it’ll take all the jobs. Others worry it’ll turn us into paperclips, or worse. And others can’t shake the idea of Schwarzenegger robots with machine guns.

This worry is not without consequences. When people get worried they talk. They complain. They become agitated. And if it’s bad enough, government gets involved. And that’s what’s happening.

People are disturbed enough that the US Congress is looking at ways to protect people from harm. This is all good. They should be doing that.

The problem is that we seem to lack a clear framework for discussing the problems we’re facing. And without such a framework we face the risk of laws being passed that either 1) won’t address the issues, 2) will cause their own separate harms, or both.

An AI Threat Modeling framework

What I propose here is that we find a way to speak about these problems in a clear, conversational way.

Threat Modeling is a great way to do this. It’s a way of taking many different attacks, and possibilities, and possible negative outcomes, and turning them into clear language that people understand.

"In our e-commerce website, 'BuyItAll', a potential threat model could be a scenario where hackers exploit a weak session management system to hijack user sessions, gaining unauthorized access to sensitive customer information such as credit card details and personal addresses, which could lead to massive financial losses for customers, reputational damage for the company, and potential legal consequences."

A typical Threat Model approach for technical vulnerabilities

This type of approach works because people think in stories—a concept captured well in The Storytelling Animal: How Stories Make Us Human, and many other works in psychology and sociology.

A 20-page academic paper might have tons of data and support for an idea. A table of facts and figures might perfectly spell out a connection between X and Y. But what seems to resonate best is a basic structure of: This person did this, which resulted in this, which caused this, that had this effect.

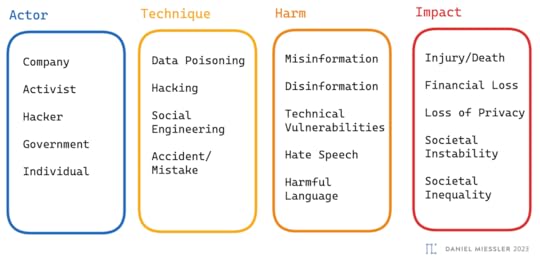

ATHI Structure = Actor, Technique, Harm, Impact

And so that is precisely the structure I propose we use for talking about AI harm. In fact we can use this for many different types of threats, including cyber, terrorism, etc. Many such schemas exist already in their own niches.

I think we need one for AI because it’s so new and strange that people aren’t calmly using such schemas to think about the problem. And many of the systems are quite intricate, terminology-heavy, and specific to one particular niche.

Basic structure

This ATHI approach is designed to be extremely simple and clear. We just train ourselves to articulate our concerns in a structure like this one.

Filled-in structure

So for poisoning of data that is used to train models it would look something like this in sentence form:

Or like this in the chart form.

Note that the categories you see here in each section are just initial examples. Many more can/should/will be added to make the system more accurate. We could even add another step between Technique and Harm, or between Harm and Impact.

The point is to think and communicate our concerns in this logical and structured way.
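One way to make the four-part structure concrete is a small record type that renders a threat as a single sentence. This is a hedged sketch, not the format used in the ATHI repository: the class name `AthiThreat`, the `to_sentence` wording, and the harm and impact values in the example are all hypothetical, chosen only to follow the "did this, which resulted in this" pattern described earlier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AthiThreat:
    """One threat expressed as Actor, Technique, Harm, Impact."""

    actor: str
    technique: str
    harm: str
    impact: str

    def to_sentence(self) -> str:
        # Hypothetical sentence template; the real ATHI wording may differ.
        return (
            f"{self.actor} uses {self.technique}, "
            f"which results in {self.harm}, "
            f"which has the impact of {self.impact}."
        )


# Illustrative values only; the harm and impact are placeholders, not from the post.
example = AthiThreat(
    actor="An attacker",
    technique="training data poisoning",
    harm="a model that gives manipulated answers",
    impact="lost trust in systems built on that model",
)

print(example.to_sentence())
```

Keeping the four fields separate means a list of such records can later be grouped or sorted by impact, which fits the suggestion to rank concerns starting from their impacts.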

Recommendation

What I recommend is that we start trying to have these AI Risk conversations in a more structured way. And that we use something like ATHI to do that. If there’s something better, let’s use that. Just as long as it has the following characteristics:

1. It’s Simple

2. It’s Easy to Use

3. Easy to Adjust as needed while still maintaining #1 and #2.

So when you hear someone say, “AI is really scary!”, try to steer them towards this type of thinking. Who would do that? How would they do it? What would happen as a result? And what would be the effects of that happening?

Then you can perhaps make a list of their concerns—starting with the impacts—and look at which are worst in terms of danger to people and society.

I think this could be extraordinarily useful for thinking clearly during the creation of policy and laws.

Summary

AI threats are both new and considerable, so it’s hard to think about them clearly.

Threat Modeling has been used to do this for other security arenas for decades, and we should use something similar for AI.

Something simple like ATHI can fill that role.

Hopefully the ability to have clear conversations about the problems will help us create better solutions.

Collaboration

Here is the GitHub repo for ATHI, where the community can add Actors, Techniques, Harms, and Impacts.

github.com/danielmiessler/athi

NOTES

Thanks to Joseph Thacker, Alexander Romero, and Saul Varish for feedback on the system.

August 19, 2023

What I'm Doing and How It's Going

I’ve needed to write this for a long time, for multiple reasons.

1. Lots of people have been asking what the hell I’m doing. Like do I work somewhere? What do I actually do all day?

2. Tons of my friends are either miserable at work or are getting laid off

3. I believe that the time for being identified by—and tied to—corporate jobs is passing, and it’s time to transition to what comes next

I want to cover each of those and then talk about what we can do about it.

1. Lots of people have been asking what the hell I’m doing

My most urgent but least important reason for writing this is to just update people on what I’m doing. I have constant conversations with people I used to talk to a lot who say things like, “So you still at Apple?” And when I’m like, “Nah, I left there years ago and went to Robinhood for a couple of years.”, they’re like,

“Wow, had no idea.”

Fair enough. I do the same to lots of people too. We don’t have time to keep up on LinkedIn updates for the hundreds of people we know.

So, short version, I was at IOActive, left to go to Apple to build the BI function and platform for InfoSec for 3 years (before Covid). Left there to go to Robinhood for 2 years to build their Vuln Management team/platform. Then at the end of last year I left RH to go independent.

That was a couple months before GenAI popped, and the timing couldn’t have been more perfect.

1.1 Why I quit the Matrix standard system

You’ve probably seen a million of these types of pitches:

Let me show you how to make 3.7 billion dollars every 15 minutes!

Scammer or narcissist, usually

I’ve seen those too, and I avoid them. Maybe some are real, but I have no interest in an Amazon drop-shipping or an Orphan Bloodletting business. Or whatever the hell they’re doing.

My reason for making the transition was very simple. It wasn’t about money. I was making good money in corporate InfoSec. No. I just had to get away from corporate jobs where one or more of these were true:

Leadership didn’t know what they wanted

They knew what they wanted but couldn’t articulate it

They could articulate it, but couldn’t execute

So the company spends most of its time in planning sessions that will be ignored within a few weeks, only to do it again in a couple of months

Hours, days, and weeks of time wasted on Zoom calls because of the problems above

They hire and fire with the empathy of a moldy sandwich

I was spending all my time on a thing I didn’t think improved the world as much as I could have doing something better

In other words, I felt I was wasting my limited time on Earth.

It was SOUL-CRUSHING, and I was done dreading Mondays.

1.2 What I’m doing now

I’ve transitioned to answering the most important question I’ve ever heard.

That’s a life-changing question, and you should be asking it often.

When I asked myself that question I realized I should be working for myself, building things that help people, consulting with people to help them, and putting that content into the world somehow.

So today I run Unsupervised Learning, which is not just the newsletter/podcast but the parent company of three separate pillars.

Builder: First, I’m building a platform for solving all sorts of problems around purpose, focus, and execution—both for individuals and companies. See above! If that sounds vague, that’s ok, we’re still not talking about the product publicly yet. The first product we’re building on that platform addresses the primary cause of Startup Death. I’ll say more once the product is public, but it’s 🔥. Or at least that’s what I tell myself, and the feedback has been excellent from those who’ve seen it.

Consultant/Advisor: I’m also doing some consulting and advising to stay connected to customers and customer problems. I have a custom security assessment that I created and deliver myself for customers. It combines technical vulnerabilities and business resilience considerations into a concise, prioritized action plan for reducing the most risk for the least amount of time/money. I also advise companies on security posture, AI strategy, and go-to-market.

The Podcast/Newsletter: And finally I have Unsupervised Learning the newsletter and podcast. This can best be thought of as the exhaust from everything I learn during the week. All the books I read. All the videos I watch. All the podcasts I listen to. And most importantly—all the problems I see and (hopefully) solve when dealing with real challenges while building, consulting, and advising.

In other words—Building, Helping, and Sharing.

To be clear, this is not all figured out. I have a decent system in place (I think), but it’s not like I’m making millions, or even sitting on millions. No, I’m hustling, and it’s still stressful.

But I’m ok with that. More than ok. I’m exhilarated by it. Because this stress is for something I actually believe in.

I get to spend my time thinking about how to help people. Helping friends. Helping customers. And ultimately trying to help everybody. It’s literally my company’s mission.

To help companies and individuals discover, articulate, and achieve their purpose in life.

The Unsupervised Learning Mission

How I make money in this model

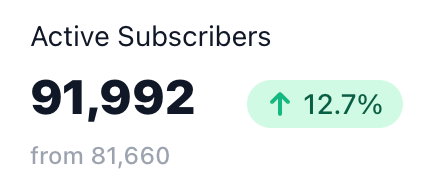

Current newsletter subscribers

Some of you are only here because of the subtitle of this post, which mentioned I’m now making somewhere around $700K / year. Hopefully by the end of this you’ll get a lot more from the post than that, but here are the basic income streams.

People, by the way, have told me not to share this in the past because it’s too personal, or too revealing and the system can be copied. I don’t care. I want people to copy this. I want to free people. More on that in the next section, but first the streams.

Newsletter and Podcast Sponsors: When you have a highly intelligent and engaged audience like UL’s, companies trying to get their name out there want to get in front of them. If you’re not huge like multiple millions you can’t really do like Athletic Greens or stuff like that. You have to do specialized companies that make more per sale. Thanks to Clint Gibler of TL;DR sec for lots of collaborations over the years on this. He’s one of the smartest people out there on sponsor management. Honestly the trick is having someone that’s good at it, and who you can pay at least part-time to manage leads, copy, etc. I could write a whole book on this, but there are a million good YouTube videos on doing it well. Annual Income: ~$450K

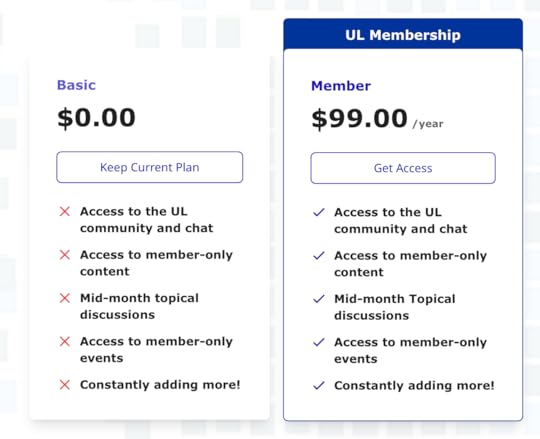

UL’s paid tier

Paid Memberships: In addition to sponsorships, UL also has a paid tier membership that gets people not just the newsletter and podcast, but also access to the community, member content, and lots of other stuff. If you’ve ever heard of “1,000 true fans”, that’s a great way to think about this. Annual Income: ~$130K

Consulting: Like I have in the diagram, I created a security assessment methodology and report that I’m really proud of and think provides tons of value. I do like 3-6 of these per year. And again, I’m not sharing any of this revenue with other people. It’s all under UL. Annual Income: ~$80K

Retainers: Another great source of income (and stress relief) is having one to three retainers going with your favorite companies/customers. This is where they pay you a certain amount per month no matter what, with the ability to ping you or ask for X, Y, Z at a particular SLA that you agree on. Like jumping on customer calls, writing content for them, talking through strategy with the leadership team, etc. Annual Income: ~$130K

Advising: This one is mostly equity. I probably have around 10 irons in that fire, with only 3 that I spend a lot of time on. This one is also great for your soul because you should only be doing this with companies you really love and want to see do well. Annual Income: ~$??? (who knows, they only yield when they IPO and do well)

Speaking: This one is a newer stream, but I am having good success with it. I’m looking to do maybe 1 talk a month, with one or so per quarter that’s in-person. I’m currently charging between $7.5K and $25K for these slots depending on a bunch of variables. Annual Income: ~$100K?

Courses: Not yet. Maybe 2024?

Books: I have one book that came out in 2016, but no immediate plans to write the next one. Maybe 2025 at the earliest.

If you look at the really big people, they’re all about the courses and books because they produce recurring income. I’m just not that cool yet. I’m too focused on building my product right now and courses and books take forever to write and market. One day.

Also, those big people have considerable, full-time teams working for them to make it all possible. I have a couple part-time people.

So anyway, if you add all that up you end up with a pretty good number—especially compared to being stressed out of your mind for half that much. Our projections are actually something like $650K-$900K for the year, depending on how things go.
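For readers who want to add up the streams themselves, here is a minimal sketch using the approximate annual figures listed above, in thousands of dollars. The dictionary keys are labels chosen for this example. Advising is left out because the post gives no figure for it, and the speaking number is one the post itself marks with a question mark.

```python
# Approximate annual income per stream, in thousands of USD, as stated in the post.
streams_k = {
    "sponsors": 450,
    "memberships": 130,
    "consulting": 80,
    "retainers": 130,
    "speaking": 100,  # marked "?" in the post, so treat as a rough guess
}

total_k = sum(streams_k.values())

# Which single stream carries the most weight, and how much of the total is it?
largest = max(streams_k, key=streams_k.get)
share = streams_k[largest] / total_k

print(f"Total: ~${total_k}K")
print(f"Largest stream: {largest} ({share:.0%} of total)")
```

Under these numbers the sponsor stream alone is roughly half of the total, which is worth keeping in mind when thinking about how resilient the mix is.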

Financial downsides

The numbers sound good, but there’s a lot of stress involved on the money side too. The biggest problem is that any one of those streams makes up a lot of the total.

If the economy sputters, sponsors will stop buying slots

If the economy sputters, fewer people will buy assessments

If your retainer companies don’t do well they’ll cancel or reduce the retainer

If there’s covid you won’t do as many onsite speaking engagements

Etc.

So you always have to be thinking about the incoming threads, whether they’re healthy, and whether you need to spin up others for more resilience to conditions.

Also, there are expenses:

Hosting for site / newsletter / etc

Hosting / services for product development

SaaS tools

Advertising the newsletter / podcast

Salaries

Accountant

Lawyers

Travel

Licenses

Insurance

Random stuff

This isn’t thousands or tens of thousands a year. It’s over a hundred thousand. Plus taxes on top of that. It’s considerable.

In other words, this lifestyle is precarious, at least at the early stage I’m at.

Right now I feel free to work for a couple of weeks from London (yay!). But at any moment I could be free to live on my buddy’s futon because all my income dried up (boo!).

So that’s the money situation. Now onto my main reason for writing this.

2. Tons of my friends are either miserable at work or are getting laid off

This is happening to millions of people in the working world right now, and I’m massively seeing it in my own circle.

I don’t know many people who are happy to go to work. At least not that have “regular” jobs (whatever that means).

Do you? How many people do you know that have a regular 9-5 job where it’s just a regular company, and they have a regular manager, and they’re happy?

Of course this is multivariate. There are surely lots of reasons for people being less happy at work. Maybe we’re all spoiled brats. Maybe we don’t know how good we have it. But my theory is that it’s largely the following:

Companies have realized they gave away too much power in the push to create a “great place to work”. They put snack rooms and off-sites and events ahead of performance for a very long time, and sometime around Covid they realized they’d made a huge mistake.

So now they’re clawing it back. They’re doing this through RIFs, Return to Office (which is another way to do a RIF), and aggressive trimming of those who aren’t performing or don’t have the right “anything for the company” attitude.

The result is that employees are now seeing through the illusion. They’re like, “Wait a minute…these people don’t actually care about me or my future.” Correctomundo. It’s a numbers game. Or, more accurately, it’s a performance and profit game. It’s all about performance and profit and the rest is hand-waving designed to attract top-end talent and buff their reputations. They treat star players like pro athletes and everyone else like cattle.

NOTE: I’m talking about companies as the machines they are, not all managers. There are plenty of great managers inside these companies that treat their people well. But in my model that’s true despite the truth above, not as a counter to it.

RIFs seem to be an accepted technique for cutting costs in many industries now, whereas they seemed to have a much more negative hue 5 or 10 years ago. Again, that could be my own interpretation.

So employees are becoming disillusioned, demotivated, and generally depressed. I don’t want to overextend here because anecdata is not data, so I’ll just say that this is my impression from not only my own friend and associate circles, but also reading on broader trends.

The result from all of this is that the promise of going to school, getting a stable job at a company, and having some sort of future from that is—or at least feels—more tenuous than ever. I’d have to review lots of data to see if that’s true for sure, but perception is often the reality that matters, and I think that’s definitely the case here.

Now to the bigger issue.

3. I believe that the time for being identified by, and tied to, corporate jobs is passing, and it’s time to transition to what comes next

My interpretation of the previous section is that we’re moving into a major societal transition, i.e., the move from Corporate Identities to Individual Identities.

How sad is that? What kind of antiquated, old-world, industrial-revolution bullshit is this?

You are not your job. Your worth is not defined by your corporate manager’s opinion of you. You are not your timesheet-punching-performance. Your worth is not whether you read the memo, or used a cover sheet.

Fuck all that.

We’ve had decades—no—generations—where one’s self-worth came from their profession. And not just their trade, but the company where they work. And of course people were also parents and friends and members of society as well, but it’s almost like those were secondary to work.

For all the reasons in the previous sections, I think it’s time for that to end. It’s time for us to think of ourselves as Individuals first.

And to be clear, it’s possible to take Individualism too far as well. And there’s already an unhealthy version of this in prima donnas and 100x engineers that cause far more trouble than the value they create. I’m not advocating becoming an asshole and asking for all green M&Ms at your next gig.

I’m also not saying all jobs are bad. Work is amazing. I’m very pro-work. Startups are awesome. And there are many great corporations as well. Places where it’s possible to work with a properly dialed-in work dynamic.

The problem isn’t corporations. The problem is the relationship we’ve built over generations around working at them. The problem is our indoctrination into the belief that they are important, and we’re the little scrubs that should just be happy we have a job.

It’s lame to quote oneself, but “Fuck all that.”

A healthier way

Like I said, there’s nothing wrong with working at a company. Even a corporation. The key is the dynamic between them and you.

We need it to be more equalized. And that doesn’t mean equal. Just more equal. The company’s mission is still what matters, and any employee or contractor needs to understand that.

But it can be more equal. In fact there’s already a good model for it, and I’m sure you’ve seen it before.

Think about how companies treat the named, super-talented employees they have. They get tons of stock. They have tons of leeway. They are listened to in meetings. They’re invited to the table. And they get promoted.

Why? Because the company knows they 1) are very talented, and 2) can go work anywhere else. The problem with most corporate workers—and indeed most people who can’t find a job or are in constant fear of being laid off—is that they don’t have those two things.

Why? I think it’s in large part because of the mindset I talked about above. Look at how different these two mindsets are:

Wow, can’t believe I got a job! I sure hope they like me. Can’t believe I’m really an ACME employee! Can’t wait to tell all my friends! Now they’ll respect me!

NPC MINDSET

Now compare that to this:

Cool, pretty excited to be working on this project. I hope they don’t have me doing any dumb shit and wasting my time. I came here to work with these smart people and actually accomplish something, and if they end up being dumb I’m out of here.

PC MINDSET

And don’t get riled up because I’m using the NPC language. I don’t even really like the framing. Seems a bit rude. But Holy Shit it’s powerful and descriptive. I am using it precisely because it’s provocative.

And I’ve been that NPC. For many years. I’m telling you this because I want you to be one for less time than I was.

So what are you saying?

What I’m saying is that it’s fine to be part of a startup, or a business, or a job, or a corporation. Fine. Not a problem. All good.

But you have to be yourself first. The true version of yourself. The powerful version of yourself. You have to be that named, super-talented version.

And that requires that you focus internally, on yourself.

In other words, you need to focus on your identity. Your mission. Your goals. Your metrics. You have to know what those are. You have to figure them out and be able to describe them to others.

Then you grow your skills. You learn in public. You share things on a website. In a newsletter. Etc. Not so you can become some blow-hard “influencer”.

No. So you can become the full version of yourself. The version that doesn’t hide. And feel shy. And stay quiet when you know the answer.

Once you become that, only then will you be ready to go into a corporate job in the proper orientation. And then you can have a wonderful, healthy, and fruitful stint at the company.

Maybe that lasts 2 years. Maybe you stay for 12. But you do it on your terms, without being treated like a goddamn stapler.

Summary

Ok, that was a lot. Sorry. Like I said, I’ve been meaning to write this for months. Here are the main points:

Yes, I have a job.

I’m building, helping, and sharing.

And I’m doing it for myself, which is glorious.

And I want you to do it too, because I think we’re leaving the worker-first era and entering the human-first era.

Ask yourself what you would do if you weren’t afraid. And move towards that life.

I’m here to help, and I’m rooting for you. 🫶

NOTES

Thanks to Jason Haddix, Joseph Thacker, and Saša Zdjelar for talking through many of these concepts over the last several months.


August 16, 2023

Unsupervised Learning NO. 394

Unsupervised Learning is a Security, AI, and Meaning-focused podcast that looks at how best to thrive as humans in a post-AI world. It combines original ideas, analysis, and mental models to bring not just the news, but why it matters and how to respond.

Hey there!

Back from Vegas finally. 14 days is too much Vegas.

2 talks and 3 panels this year, and that’s in a (light) year when I planned on saying no to most things. But I got away easy with only 5 things; my buddy Jason had 9!

And now the covid waiting game begins. The texts and posts reporting people being positive are starting to accumulate, including from events I attended, so I’m hoping I make it to like Friday without getting it myself.

Also, super interesting: make sure you test correctly. A guy took 5 different tests and got vastly different results based on how he tested.

In the meantime, I hope you have a great week!

In this episode:

🎰 Back from Vegas: Event Recap

🔬 Covid Testing: Importance of Correct Method

🔥 Burnout and Addiction: Shared Root Cause

🪳 Vulnerabilities

🎩 Black Hat Highlights: Tool Releases

👥 Lapsus$ Tactics: Simple Techniques, Big Breaches

🤖 AI Cyber Challenge: DARPA's Call to Arms

🔒 Cybersecurity Standings: US vs China

🌐 Render's Cloudflare Issue: Network Errors

🔍 PromQL Guardrails: Code Scanning with Semgrep

🔭 Tool & Article Discovery

➡️ The Recommendation of the Week

🗣️ The Aphorism of the Week

MY WORK

Burnout and Addiction

Burnout and addiction may share a common root cause - a lack of fulfillment or a "meaning loop" in one's life. According to Johann Hari, addiction is a lack of a strong meaning loop that keeps you fulfilled, and burnout can occur when you're doing something that's not your true purpose. DANIELMIESSLER

🎙️ Subscribe to the Podcast

If you’re not getting the podcast yet, you should remedy that. It’s very close to the newsletter, but I often expand a bit on topics in the podcast version. Also, I’m about to pull a Lex Fridman and move the sponsors to the front so that there aren’t interruptions during the content. ADD UL TO YOUR CLIENT

📡 Connect via RSS

RSS is not dead. Not in our world anyway. You can follow all UL content via the following RSS feed. ADD TO YOUR RSS READER

SECURITY NEWS

There were multiple vulnerabilities and incidents revealed during Blackhat/DEFCON week, although the news was a bit quieter than usual due to media coverage of other topics. Here are the highlights.

🪳CISA Microsoft Alert — CISA has flagged a zero-day flaw affecting Microsoft's .NET and Visual Studio products, and it's already being exploited. The vulnerability, known as CVE-2023-38180, has a CVSS score of 7.5 and impacts various versions of Visual Studio and .NET. SECURITYWEEK

🪳Sogou Keyboard Vulnerabilities — Sogou Keyboard's encryption has some serious holes that could expose your keypresses to network snoops. | Critical | CITIZENSLAB

🪳Researchers Can Listen to Keystrokes over Zoom — This one is a bit early, but researchers are claiming they can learn what Zoom participants are typing with 93% accuracy. Insane! ARSTECHNICA

Black Hat Highlights

The 2023 Black Hat conference was pretty stacked this year. Notable releases included MELEE, a tool for detecting ransomware in MySQL instances, and CheckGPT, a tool designed to detect AI-generated email attacks. Check out a full writeup here. SECURITYWEEK

Lapsus$ Tactics

The Lapsus$ hacking group, known for breaching high-profile companies, used simple techniques like SIM swapping to gain access to internal networks. The group, mainly composed of teenagers, targeted companies like Microsoft, Cisco, and Nvidia, and even attempted to compromise accounts connected to FBI and Department of Defense personnel. BLEEPINGCOMPUTER

AI Cyber Challenge

DARPA is rallying computer scientists, AI experts, and software developers to join the AI Cyber Challenge (AIxCC), a 2-year competition aimed at finding and fixing vulnerabilities in crucial software. Leading AI companies like Anthropic, Google, OpenAI, and Microsoft are partnering with DARPA to provide their technology and expertise to challenge participants. OODALOOP

Sponsor

Struggling to implement Zero Trust with Okta alone?

You're not alone.

Device hygiene and telemetry signals are shallow, and users get stuck, blocked, and sent to IT if there's an issue.

Forced to manage company access through exemption lists, IT is buried under a mountain of support tickets, creating the IT bottleneck.

Kolide Device Trust integrates with Okta for real-time device posture beyond checkbox compliance. Instead of leaving the user blocked, it provides contextual instructions so that they can resolve the issue themselves.

It's Device Trust done right.

Watch our on-demand demo to learn more.

Watch the Demo

Cybersecurity Standings

The head of the National Security Agency, Gen. Paul Nakasone, confidently stated that the U.S. is not trailing behind China in terms of offensive cybersecurity and surveillance capabilities. He attributes this to the ongoing "hunt forward" operations that actively search for clandestine activity on U.S. and allied networks. NEXTGOV

PromQL Guardrails

Semgrep, a tool for finding bugs and enforcing code standards, now supports PromQL. This new feature allows for code scanning at ludicrous speed. HACKERNEWS

AI Tech Standoff

The US and China are in a race to develop the most powerful AI systems, causing a tense relationship as each country safeguards its resources. The Biden Administration's move to limit Chinese tech investments in semiconductors, quantum computing, and AI has sparked concerns from regulators in other countries, including the UK and EU. OODALOOP

TECHNOLOGY NEWS

AI Chip Rush

China's internet giants are on a $5bn shopping spree for Nvidia chips, all in the name of powering up their AI systems. The rush is driven by fears of new US export controls and a global GPU shortage, with companies like Baidu, ByteDance, Tencent, and Alibaba ordering about 100,000 A800 processors to be delivered this year and in 2024. OODALOOP

AI Voices for Deceased Kids

Some content creators are using AI to recreate the voices of deceased or missing children, narrating their own tragic stories. While some defend this as a new way to raise awareness, experts warn it risks spreading misinformation and offending victims' loved ones. OODALOOP

Vim's Future Plans

The Vim project is making some changes to continue its development, with new members joining the organization and a focus on bug fixes, security updates, and documentation improvements. There are plans for a Vim 9.1 maintenance release and a potential move to a more modern approach, similar to Neovim, but the team is still figuring out the best way to handle this transition. GOOGLE GROUPS

AI Remaking Cloud

Artificial intelligence is shaking up cloud computing, with companies like OpenAI and Databricks leading the charge by providing tools to build AI features. Forbes' latest Cloud 100 list shows AI's growing influence, with seven newcomers, including Anthropic, a ChatGPT rival, benefiting from the AI boom. OODALOOP

AI-Powered Antibody Discovery

LabGenius, a company based in South London, is using AI to speed up the process of engineering new medical antibodies. Their machine learning algorithm designs antibodies to target specific diseases, then automated robotic systems build and test them, all within six weeks. WIRED

X's Ad-Revenue Changes

Elon Musk's social network X, previously known as Twitter, is making it easier for creators to earn from their content. The platform has reduced the eligibility threshold for ad revenue sharing from 15 million to 5 million impressions within the last three months, and creators can now cash out with as little as $10. TECHCRUNCH

Wireless OLED TV

LG has launched the world's first "wireless" OLED TV, capable of transmitting 4K 120Hz video wirelessly via a "Zero Connect Box". The TV, currently available in South Korea, will be released globally later this year. ACQUIREMAG

Google's eSignature Support

Google is adding eSignature support to Docs and Drive, making it easier for users to request and sign documents without switching between different apps. The feature, currently in beta, has been in alpha testing for over a year and is expected to be available to Workspace individual subscribers in the coming weeks. THEVERGE

Video Chat Revolution

The hype around video chat apps seems to be over, with the actual experience of video chat being in its most boring state ever. Despite the rush of interest in video chat apps during the pandemic, the market is now largely run by tech giants and the pace of new and interesting features has slowed to practically nothing. THEVERGE

HUMAN NEWS

Telework Reduction Push

The White House is urging federal agencies to cut down on telework and remote work, favoring more in-person office time this fall. This move, described as "critical" to workplace culture and mission fulfillment, is a continuation of an initiative first announced in April. GOVEXEC

Return-to-Office Regrets

Cool story, but in one study 80% of bosses regret their initial decisions about returning to the office, wishing they had a better understanding of what their employees wanted. According to a study by Envoy, many companies feel they could have been more measured in their approach instead of making bold decisions based on executives' opinions rather than employee data. CNBC

Bankman-Fried Jailed

FTX founder Sam Bankman-Fried is back in jail, this time for witness intimidation and jury tampering. The charges stem from his sharing of private notes from a key prosecution cooperator, his ex-girlfriend and former CEO of Alameda Research, Caroline Ellison. THEMESSENGER

Post-COVID Heart Issues

Doctors are grappling with how to help patients who have developed heart conditions after recovering from COVID-19. The virus has been found to cause significant damage to the heart, even in mild cases. CBSNEWS

UPS Driver Pay Boost

UPS drivers are set to average a whopping $170,000 in pay and benefits by the end of a five-year contract. How can they afford this? The deal, which covers around 340,000 workers, is currently in the middle of a ratification vote that ends on August 22. CNBC

Middle School Struggles

Life's tough for middle school students who aren't attractive or athletic, according to a study by Florida Atlantic University. The study found that these students become increasingly unpopular over the school year, leading to increased loneliness and alcohol misuse. FAU

NOTES

We had the most epic live UL meetup in Vegas! It was a bunch of tables put together, and we ended up adding another about halfway through. Conversation was great. People got to know each other more. And it was just wonderful to put faces and voices to names. Check UL Discord to see the group photo!

I did 5 different events for BH/DC this year. It actually felt pretty light compared to heavy years. Maybe because I stayed away from both cons for the most part due to concern about getting sick. Will probably get sick anyway though. 🤷

I learned recently, after decades of believing the opposite, that brand-name drugs actually are better. Unfortunately I can’t remember if it was Huberman or Attia, but it was a VERY reputable source. The TLDR is that generic drugs are sometimes identical in quality to brand-name and sometimes WAY worse, depending on where they’re sourced from. Whereas brand-name versions are always sourced from the top-tier providers. Wow.

If you like the format of the vulnerabilities update you can thank Michael from the community for that. He mentioned missing always having a vulnerabilities section, and I’d been thinking about a more narrative style intro to that section for a while. So this week is the first version of it. It should be quite good within a few weeks.

IDEAS & ANALYSIS

What to Build as a Founder

People often ask: “How do you know what to build as a founder?” I can explain: “Build the stuff that you wish existed.” This is related to Martin Scorsese’s quote, “The most personal is the most creative.” Create businesses around the services that you yourself need. X (I can’t believe I’m really typing that instead of TWITTER)

RTO = RIF

RTO is a sneaky way of doing a RIF. They just give exceptions to the super-talented and start from scratch with the few people who move. X

DISCOVERY

⚒️ Llama 2 Powered By ONNX — Microsoft has released an optimized version of the Llama 2 model, a collection of pretrained and fine-tuned generative text models, that runs on ONNX. It's designed for developers to use, modify, and redistribute under the Llama Community License Agreement. | by Microsoft | GITHUB

InfoSec Resume Tips — Reddit user fabledparable shared some extended resume writing guidance for InfoSec professionals. The advice, posted on r/netsec, includes tips on how to make your resume stand out in the cybersecurity field. REDDIT

Jobs' Interview Technique — Steve Jobs had a unique approach to job interviews, preferring to take potential hires out for a walk and a beer, rather than sticking to formal office interviews. This unconventional method was aimed at breaking the trend of scripted responses and getting to know the person better, while still looking for the 'A-Players'. JOE

Therapy Culture's Impact — David Brooks argues that therapy culture seems to be making us less mature and resilient. He argues that the focus on trauma and mental health has led to an epidemic of immaturity, referencing works like "The Culture of Narcissism" and "The Coddling of the American Mind". Similar to a recent post of mine. NYTIMES

Generative Agents — The research team behind "Generative Agents: Interactive Simulacra of Human Behavior" has released their core simulation module for generative agents on GitHub. The module simulates believable human behaviors in a game environment and comes with detailed instructions for setting up the simulation environment and replaying the simulation as a demo animation. GITHUB

Q&A System Evaluation — LangChain has added a new tutorial to their LangSmith Cookbook, focusing on how to measure the correctness of a question-answering system. TWITTER

Vim Boss — This post pays tribute to Bram, the creator of Vim, highlighting his principles, modesty, and the deep value he provided to the universe. The author, Justin M. Keyes, emphasizes that Neovim, a derivative of Vim, continues Bram's legacy in terms of maintenance, documentation, extensibility, and embedding. NEOVIM

Self-Education Through Reading — The author shares his unique approach to self-education through a lifetime of reading, emphasizing the importance of discipline, consistency, and mental focus. He details his strategies, such as reading challenging books for mind-expansion, reading slowly to deeply understand the content, and keeping track of his reading progress. HONEST-BROKER

iOS 17 Features — The author of this 9to5Mac article shares his favorite iOS 17 features, including the improved autocorrect, multiple timers, and the ability to transcribe voice messages in iMessage. He also mentions some features that are yet to work perfectly, such as the Personal Voice and Live Voicemail. 9TO5MAC

Yes, AI is Creative

If humans can’t tell the difference between human and AI creativity, then AI has creativity. The only way to get out of that pickle is to define creativity as something only humans can do, which is cheating. HT: Joseph. ONEUSEFULTHING

RECOMMENDATION OF THE WEEK

When you do covid tests, make sure you’re testing like this. I’d do it even if it was some random guy saying so (because it makes logical sense), but the source is a doctor with tons of legit bona fides.

TL;DR: Swab the back of the throat, roof of mouth, cheeks, AND deep in the nose. Results are often massively different, as he shows.

APHORISM OF THE WEEK

We’ll see you next time,


August 7, 2023

Unsupervised Learning NO. 393

Unsupervised Learning is a Security, AI, and Meaning-focused podcast that looks at how best to thrive as humans in a post-AI world. It combines original ideas, analysis, and mental models to bring not just the news, but why it matters and how to respond.

Welcome to HackerCon week,

This is the week of BSides Las Vegas, Blackhat, and DEFCON in Las Vegas. If you see me around come say hi. Or at least wave or nod your head from a distance!

We’ve also completed our MVP for our first UL product, and we’ll be doing private demos this week! We’re also doing a member meetup on Friday; really looking forward to that one!

Anyway, have a great con week, even if you’re not in Vegas.

Let’s jump in…

In this episode:

🎉 HackerCon Week: BSides, Blackhat, DEFCON

🔒 Google's Privacy Update: Control Your Data

🤖 AI Vulnerability: Adversarial Attacks on Chatbots

🛡️ NIST CSF Changes: Are You Ready?

📊 Breach Disclosure Rules: SEC's New Mandate

🔧 Tech Giants' Security Fixes: Apple, Google, Microsoft

📚 Penetration Testing Guide: Understanding Cybersecurity Risks

🤖 Google's AI Pivot: Supercharged Assistant

📦 Musk's Grid Warning: Invest in Energy Transition

🔭 Tool & Article Discovery

➡️ The Recommendation of the Week

🗣️ The Aphorism of the Week

MY WORK

✍️ High-Entropy Writing

My latest essay on one model for creating the best possible talks: Surprise. I compare the concept of surprise in talks to Claude Shannon’s entropy in information theory. READ THE ESSAY

SECURITY NEWS

Google's Privacy Update

Google just released a tool that lets you see how your contact information appears in Google, and even lets you delete results as well. They updated their "Results About You" tool, which now includes a dashboard that alerts you when your contact information appears in Google searches, and allows you to request removal of that information.

- The tool is available to logged-in Google users.

- Users can request removal of personal information like email, home address, or phone number.

- The dashboard is currently rolling out to users in the US.

- Google also updated policies for removal of nonconsensual explicit images.

- The tools won't completely wipe you from Google searches, but will make personal information harder to find.

- If you don’t have a Google account, you can fill out a stand-alone removal form to make a request.

- Google sends an email where you can track the status of the request. GOOGLE ANNOUNCEMENT | THEVERGE | THE TOOL

AI Vulnerability

Researchers at Carnegie Mellon University have discovered a fundamental vulnerability in advanced AI chatbots, including ChatGPT, that can't be patched with current knowledge. The vulnerability allows for adversarial attacks, where a simple string of text can bypass all defenses and prompt the AI to generate prohibited responses. Just the beginning of this sort of stuff, to be sure. We forget how to do basic security whenever we introduce new tech. See: network → web → mobile → cloud → IOT → AI. WIRED

🚨New Breach Disclosure Rules

Public companies in the US now have to disclose cyberattacks within four days, according to new rules approved by the Securities and Exchange Commission (SEC). The rules apply when the attack has a "material impact" on the company's finances, but can be delayed if disclosure risks national security or public safety. Love this progress from the US government. MALWAREBYTES

Sponsor

NIST CSF Changes Are Coming - Are You Ready?

In January 2023, the National Institute of Standards and Technology (NIST) announced its intent to make new revisions to its NIST Cybersecurity Framework (CSF) document, with an emphasis on cyber defense inclusivity across all economic sectors.

Responding to industry requests on relevant issues, version 2.0 focuses on international collaboration, broadening the scope of industries that can use the CSF, and one entirely new Function. Download our handy ebook to review the updates — and their implications for your business — before they go live.

👉learn.hyperproof.io/2023-proposed-changes-to-nist-csf👈

Download eBook

🇨🇳 Chinese APTs Infiltration

Chinese hacking teams are burrowing deep into sensitive US infrastructure, aiming to establish permanent presences. Reports from Kaspersky and The New York Times reveal advanced spying tools and hidden malware used by these groups to threaten national security. ARSTECHNICA

HackerOne Restructuring

HackerOne, a cybersecurity company, is reducing its team by approximately 12% due to economic challenges and underperforming new products. CEO Marten Mickos announced the decision, stating that severance packages will be offered to impacted employees. Interesting timing doing this right before HackerCamp. HACKERONE

Ivanti's Second Patch

Ivanti has released a patch for another critical zero-day vulnerability that's currently being exploited. The vulnerability, listed as CVE-2023-35081, is being used in conjunction with another vulnerability we've previously discussed and has a CVSS score of 7.2. MALWAREBYTES

Sponsor

OPAL, Scalable Identity Security

Opal is designed to give teams the building blocks for identity-first security: view authorization paths, manage risk, and seamlessly apply intelligent policies built to grow with your organization.

🛡️Opal is used by best-in-class security teams today, such as Blend, Databricks, Drata, Figma, Scale AI, and more. There is no one-size-fits-all when it comes to access, but they provide the foundation to scale least privilege the right way.

Secure Identity with Opal

Top Exploited Vulnerabilities

The Five Eyes cybersecurity authorities, in collaboration with CISA, the NSA, and the FBI, have released a list of the 12 most exploited vulnerabilities of 2022. These vulnerabilities were primarily in outdated software, with threat actors targeting unpatched, internet-facing systems. BLEEPINGCOMPUTER

Marijuana and Security Clearances

The U.S. Senate has passed a defense bill that prevents intelligence agencies from denying security clearances based on past marijuana use. The provision, part of the National Defense Authorization Act, was approved despite previous opposition. MARIJUANAMOMENT

AI Gun Detection

ZeroEyes is using AI to detect guns in public and private spaces, aiming to prevent shootings before they happen. The company's technology, which has been adopted by various institutions including the U.S. Department of Defense and public K-12 school districts, identifies illegally brandished guns and sends alerts to local staff and law enforcement within seconds. VENTUREBEAT

Worldcoin Suspended

Worldcoin's registration process in Nairobi was halted due to security concerns as hundreds of people lined up to get free money. The large crowd was deemed a "security risk", leading to many being locked out of the process. BBC

TECHNOLOGY NEWS

Meta's AI Launch

Meta is planning to launch AI-powered "personas" in its services, including Facebook and Instagram, as early as next month, offering users a new way to interact with its products. The chatbots will come with distinct personalities, like a surfer offering travel recommendations or a bot that speaks like Abraham Lincoln. Honestly excited to see this, which I’m not used to saying about anything Meta. THEVERGE

Generative AI Adoption

Generative AI is becoming a common tool in many organizations. A McKinsey report, based on a survey of 1,684 participants, found that 79% had some exposure to generative AI, with 22% using it regularly for work. That seems very low, and it will definitely look low in 6 months. VENTUREBEAT

Drone Mail Revolution

The UK's first drone mail service has kicked off in Orkney, aiming to revolutionize mail services in remote communities. Alex Brown, director of Skyports Drone Services, highlighted the benefits of this technology in terms of efficiency, timeliness, and reduction of emissions-producing vehicles. BBC

Musk's Twitter Rebrand

Elon Musk is planning to rename Twitter to X, reminiscent of his failed attempt to rebrand PayPal in 2000. The move is part of Musk's ambition to transform the social media platform into a financial heavyweight, despite his previous unsuccessful venture with X.com, an early internet banking startup. He really wants the letter X to happen, and this might be the time. But only because all the Twitter competitors seem really bad. I am interested in seeing what he does with peer-to-peer payments. Remember, his goal is to create a China-like OneApp clone. THAT I’m excited about, but I’ve seen no signs of it thus far. THEVERGE

Superconductor Breakthroughs?

A recent study suggests that LK-99, a compound of lead, copper, and phosphate, might be a room-temperature superconductor. The research, based on density-functional theory calculations, shows that the electronic structure of this compound could support flat-band superconductivity or a correlation-enhanced electron-phonon mechanism. I honestly can’t tell if this is bunk or not. Too early to say, I think. ARXIV | SOUTHEASTUNIVERSITY | ANDREWCOTE

AI Drive-Thrus

White Castle is planning to roll out AI-enabled voices to over 100 drive-thrus by 2024, aiming to speed up service and reduce miscommunication. The technology, developed in collaboration with speech recognition company SoundHound, promises to process orders in just over a minute. THEVERGE

Chinese Internet Curfew

China's latest bid to curb internet addiction among minors involves introducing a "minor mode" on devices, limiting access to content and usage based on the child's age. For instance, teens between 16 and 18 will be restricted to two hours of mobile usage each day, and all devices in "minor mode" will be barred from internet access between 10PM and 6AM. I’m a fan of any policy that makes smart Chinese people want to leave the country, and I also think this might greatly help the mental health of these kids. THEVERGE

GPT-5 Trademark Filed

OpenAI has filed a trademark application for GPT-5, covering a wide range of applications from language models to speech recognition and translation. The filing includes both downloadable software and Software as a Service (SaaS) offerings. Can’t. Wait. USPTO

Inworld AI's Funding

Inworld AI, a startup that uses AI to create smart characters for games, has raised a new funding round at a $500 million valuation. The round, which is expected to close later this month, will total over $50 million and includes investors like Lightspeed Venture Partners, Stanford University, and Samsung Next. CRUNCHBASE

Game Mode in macOS Sonoma

Apple's macOS Sonoma introduces a new feature called Game Mode, which automatically boosts a game's performance by giving it top CPU and GPU priority when launched. This feature is part of Apple's efforts to make Mac more appealing as a gaming device by improving game performance and reducing latency with wireless gaming and audio devices. MACWORLD

AI-Powered Malware

HYAS Labs has developed a proof-of-concept for a new type of malware, EyeSpy, that uses artificial intelligence to autonomously choose targets, strategize attacks, and adapt its code in real-time. This "cognitive threat agent" represents a potential evolution in cyber warfare, capable of reasoning, learning, and adapting on its own. This is an early look at the future of automated attack. Super exciting. And scary of course. Those go together. HYAS

AI-Driven Drone

Artificial intelligence software successfully piloted an XQ-58A Valkyrie drone in a test flight, marking a significant step forward in unmanned aircraft technology. The flight was the result of two years of work and a partnership with Skyborg Vanguard, aimed at creating unmanned fighter aircraft. OODALOOP

HUMAN NEWS

Wind Farm Development

Scotland has cut down over 16 million trees to make way for wind farms. Feels like too many. Something about cutting noses and spiting faces. MSN

State Farm Exits California

State Farm, the largest insurer in California, is pulling out of the state, no longer offering new coverage. This move is part of a larger trend of insurance companies retreating from areas prone to climate-related disasters. NYTIMES

Construction Labor Shortage

The US construction industry is grappling with the highest level of unfilled job openings ever recorded, struggling to attract an estimated 546,000 additional workers in 2023 to meet labor demand. The industry averaged over 390,000 job openings per month in 2022, a record high, while its unemployment rate of 4.6% was the second lowest on record. CNBC

Cancer Pill Breakthrough

City of Hope scientists have developed a promising new chemotherapy, AOH1996, that's shown to annihilate all solid tumors in preclinical research. The drug targets a cancerous variant of the protein PCNA, disrupting DNA replication and repair in cancer cells, while leaving healthy cells untouched. Incredible that so many tech innovations seem to be happening at once. I hope this pans out in a significant way. INNOVATIONORIGINS | SKYNEWS | EUREKALERT

American Life Expectancy

Life expectancy in America is falling behind other rich countries, with areas like Hazard, Kentucky, being hit the hardest. A study by Jessica Ho of the University of Southern California found that from a fairly average position in 1980, by 2018 America had fallen to dead last on life expectancy among 18 high-income countries. ECONOMIST

Fitch Downgrades US Debt

Fitch Ratings has downgraded the US's credit rating due to concerns over governance standards, particularly around fiscal and debt matters. The rating agency pointed out a "steady deterioration in standards of governance over the last 20 years," despite the recent bipartisan agreement to suspend the debt limit until 2025. BBC

Overdose Deaths Surge

Drug deaths in the US reached a new high in 2022, with over 109,680 fatalities largely due to the ongoing fentanyl crisis. Preliminary data from the Centers for Disease Control and Prevention shows an increase of 21% in Washington state and Wyoming, while some states like Maryland and West Virginia saw a decrease in fatalities. OPB

Summer Covid Surge

Covid-19 cases are on the rise again, marking an unwelcome summer tradition. Hospitalizations increased by 12 percent to over 8,000 across the US for the week ending July 22, the first weekly increase since the end of the federal Covid-19 public health emergency in May. WIRED

Seoul Stabbing Spree

A violent attack near Seoul, South Korea has left at least 12 people injured, with the suspect using his car and a knife as weapons. This incident, occurring during rush hour in Seongnam, follows a similar stabbing in Seoul two weeks prior. OODALOOP

NOTES

We have our first UL product ready to demo this week! If you want to see what I’ve stealthily been working on for the last few months, ping me and we’ll plan a place to cross paths for a private demo!

IDEAS & ANALYSIS

Vision Before Execution

Bram Moolenaar died last week. He created Vim and ran it ever since. It got me thinking about something that’s been rattling around in my brain for a while now, which is the power of headstrong, visionary founders. Bram was one. Jobs. Musk. And Bezos. What I think they all have in common, and bad companies lack, is a strong Philosopher King vibe.

I’m increasingly noticing that companies aren’t failing because they can’t execute. They’re failing because nobody agrees on what should be executed. They’re rudderless. Chaotic. Floundering. They’re full of overly ambitious and politically savvy leaders who have their own agendas, which means the company is not unified.

Amazon crushed it because Bezos knew exactly what he wanted to build, and he built it. And he was VERY forceful about that direction and making sure people stayed on the path. Jobs was the same way. And so was Bram. One leader for the entire run of the project, basically.

Of course, I do think this vision is necessary but not sufficient. You can’t have vision with no execution. But in my opinion too many people have swung that pendulum too far towards execution in recent years. It’s very true that if you can’t execute, the vision doesn’t matter, but if you don’t have a vision then you execute in multiple directions simultaneously, or not at all. Personally, I’d rather be totally unified on a clear vision and not have the resources to execute yet than be a highly competent ball of political chaos.

Anyway, here’s to Philosopher Kings. Here’s to the people with a vision and personality strong enough to maintain commitment to an idea amongst a thousand opposing voices. And RIP Bram. You’ve done a great thing with your project, and with the charity it supports.

DISCOVERY

⚒️ Promptmap — A tool designed to automatically test prompt injection attacks on ChatGPT instances. It generates creative attack prompts tailored for the target, sends them to the ChatGPT instance, and determines the success of the attack based on the response. | by Utkusen | GITHUB

⚒️ OWASP Amass — OWASP Amass is a tool that performs network mapping of attack surfaces and external asset discovery using open source information gathering and active reconnaissance techniques. It's a staple in the asset reconnaissance field, constantly evolving and improving to adapt to new trends. | by OWASP | GITHUB

⚒️ Semgrep Rules Manager — A tool for managing third-party sources of Semgrep rules, simplifying the process of integrating and updating these rules in your projects. | by iosifache | GITHUB

⚒️ ReconFTW Framework — The ReconFTW framework, developed by @six2dez1, is a comprehensive package for subdomain finding and associated recon, offering a complete picture of an organization's subdomains and initiating cursory analysis. The framework automates the entire process of reconnaissance and can run automated vulnerability checks like XSS, Open Redirects, SSRF, CRLF, LFI, SQLi, SSL tests, SSTI, DNS zone transfers, and more. @six2dez1

Surprise Factor — When writing for the public, especially a stage talk, it's all about the surprise factor. The author argues that people only really learn when they're surprised, and advises cutting out everything that's not surprising. SIVERS

Vim's Abbreviations — Vim's "abbreviation" feature offers an effective way to automate tasks in insert mode, from basic use cases to more complex ones. The feature allows users to assign abbreviations with the command :ab[breviate] or :iabbrev for insert mode, and can be used in autocommands for file-specific abbreviations. VONHEIKEMEN

Run Every Day — Running one mile every day, consistently, can vastly improve your mental and physical well-being, according to Duarte, who's been doing it for about two years. He argues that it's not about the distance or pace, but about claiming back your time and prioritizing your health. DUARTEOCARMO

Stop Stopping at 90% — Austin Z. Henley discusses the common issue of stopping at 90% in projects, where the core project is complete but the final 10% of work, often involving evangelism, documentation, and polish, is neglected. Henley suggests activities such as presenting the work to other teams, broadcasting an email with the takeaways, and writing a blog post about it to truly finish a project. AUSTINHENLEY

Vim One-liners — Muhammad Raza shares his favorite vim one-liners that have significantly enhanced his vim workflow, making it more productive and efficient. These one-liners are used to edit files swiftly, saving precious time and offering unparalleled efficiency when it comes to editing text. MUHAMMADRAZA

AI Redirection — AI.com, previously redirecting to chat.openai.com, now points to x.ai, a separate company from X Corp. The two companies, however, will be working closely together. HACKERNEWS

Don't Be Clever — The author reflects on a past coding project, where he created an overly complex, abstract class called CRUDController for a startup's REST API. Despite its initial efficiency, the class became a "monster" as it grew more complex and time-consuming than simply copying code between controllers. STITCHER

Emotion Regulation in Men — Men often regulate their emotions through physical activities rather than verbal expression, according to a personal account and analysis on the Centre for Male Psychology. The author argues that this action-based emotional regulation is not a sign of low emotional intelligence, but rather a different approach to managing emotions, challenging theories that suggest men are emotionally handicapped. CENTREFORMALEPSYCHOLOGY

EDR Attack Explored — Reddit user N3mes1s has shared a detailed guide on how to attack an Endpoint Detection and Response (EDR) system. The post, which is part one of a series, provides a step-by-step breakdown of the process. RICARDOANCARANI

RECOMMENDATION OF THE WEEK

Make at least one of your walks per week a silent walk.

No tech. No music. No podcasts. No books. No conversations.

Just you and your thoughts. And ideally, just observations of your surroundings and your thoughts, as opposed to being hijacked by your thoughts.

Walk and observe. At least once a week.

APHORISM OF THE WEEK

We’ll see you next time,

High-Entropy Writing

I read a post by Derek Sivers recently that reminded me of Claude Shannon’s concept of Entropy. The post was about his opinion that talks should always be surprising.

People only really learn when they’re surprised. If they’re not surprised, then what you told them just fits in with what they already know. No minds were changed. No new perspective. Just more information.

So my main advice to anyone preparing to give a talk on stage is to cut out everything from your talk that’s not surprising.

Derek Sivers