Daniel Miessler's Blog, page 25

March 20, 2023

Calling Out The Security Community on AI

I want to call out our community for a second on AI. And this applies to me as well because I have many of the same feelings.

I feel there are too many in the security community who believe that AI is a minefield, and that it’s our job to warn people not to walk into it.

I think our job is quite different.

It’s not that people are considering walking into this minefield. And it’s up to us to convince them not to.

No.

They ARE walking into it. It’s inevitable. Starting now.

So I don’t believe we should be pointing and laughing as they get blown up, lose a leg, or otherwise hurt themselves.

Instead, it’s our job to go in ourselves. Build the tools that find the mines. And yes, step on some ourselves.

We can’t sit out the IoT game, or now the AI game.

We can’t curmudgeon our way to protecting users.

We need to get out front, and do our best to clear the way.

What’s My Bar for AGI? A Stand-up Comedy Routine

Given the overall GPT-based AI progress in the last few months, I’ve been thinking a lot about AGI. Specifically, how will we know if it happens?

I think there are lots of ways to answer that based on what you think you’re getting from an AGI. In this case I’m thinking of capabilities, not consciousness.

And for capabilities, I think one ability that will truly impress me, and make me wonder if we have a real AGI, will be stand-up comedy.

Think about how hard stand-up is. Think about everything that goes into it. It’s one part social commentary. Another part reading a room. Another part phrasing and timing. And some other parts I am sure I’m not thinking of.

But once an AI can write an audio track, like you might hear on stand-up comedy radio, or on a podcast, and it makes you laugh out loud—that’s going to be a major event in artificial intelligence.

As far as actually getting on stage and such, that’ll be the true test, but I don’t want to conflate the comedy portion with the robotics and uncanny valley portion of the problem.

Let’s say—the content itself, delivered as audio.

If we can give a topic to an AI, and it can write a routine similar to:

Chapelle

Gervais

Bartzgate

Louis CK

Norman

…that would convince me that it can do one of the most creatively difficult tasks that humans are capable of, i.e., making people laugh.

If it can hit that regularly, like 1/10 attempts for a given topic, that would be extraordinary.

I’m already having it write jokes in the voices of those comics above, but it takes a lot of work on the prompting side.

The true test will be just giving a topic and having it do all the work.

March 14, 2023

Yes, GPTs Actually Understand

There are many who don’t see what GPTs are doing as actual “understanding”.

To them, ChatGPT is interesting—and possibly useful—but it’s ultimately nothing more than a text completion parlor trick, and it should not be confused with real understanding.

I think I can convince most good-faith holdouts otherwise in less than 60 seconds. Ready? Go.

⏱️ 60 seconds starting now…

First, let’s define terms.

Data: Raw facts or figures without context

Information: Data that has been processed, organized, or structured

Knowledge: Information that has been combined to become useful

Understanding: The ability to apply knowledge in new situations and contexts

Or in compact form:

Data is raw

Information is organized

Knowledge is useful

Understanding is applied

So the definition we’re using is:

The ability to apply knowledge in new situations and contexts.

A fair definition would allow non-human entities to meet the bar.

So, can GPTs do this? Let’s respond with an example. Let’s see if they understand concepts like Star Wars and Forbidden Love and Shakespeare well enough to write a poem from the following prompt.

Write a love song from Luke to Leia.

I asked it to do that, and here’s what it wrote…

O Leia, fairest of all maidens fair,

Thy beauty doth my very soul ensnare.

Thy shining eyes, like stars that light the night,

Doth fill my heart with love’s sweet, shining light.

Thy gentle touch, like feathers on my skin,

Doth make my heart to dance and leap within.

Thy voice, like music to my eager ears,

Doth soothe my soul and calm my deepest fears.

And though our love may be forbidden, true,

I cannot help but give my heart to you.

For thou art all I ever could desire,

My Leia, thou hast set my heart on fire.

So let us steal away into the night,

And let our love take wing and take to flight.

For in thy arms, I know I shall find peace,

And all the world’s troubles shall surely cease.

A LONGING GPT

Try it yourself and add new contexts/situations to the mix.

That’s a Shakespearean poem, about Forbidden Love, in the Star Wars universe, between two Siblings. And it’s applied to a completely new situation/context that I just made up.

Awkward

Notice that I didn’t even mention Star Wars or Forbidden Love in the prompt! It understood the meanings of “Luke and Leia”, and “Love”, and it inferred that it was forbidden because it knows siblings aren’t supposed to feel that way about each other.

A lot of the confusion about GPTs and whether they “understand” things comes from confusing understanding with experiencing.

Understanding fell out (emerged) from the system unexpectedly.

Do GPTs understand things? Yes. The magic of the tech is that GPTs basically have to accidentally learn concepts in a deep way so they can properly predict the next token in a sequence. They can then apply those concepts in new situations.

If you argue that you must feel to understand, then you’re saying understanding requires consciousness.

But does a GPT know what it feels like to love? Or to contemplate the universe? Or human mortality? No. They haven’t a clue. They don’t have feelings. They’re not conscious. They don’t experience things one little bit.

But remember—we’re not asking GPTs to experience things. We’re not asking if they feel things. The question is whether they can generalize from concepts using new information, i.e., apply knowledge to new situations and contexts.

⏱️ Timer stopped.

That’s understanding. And yes, they do it astonishingly well.

March 13, 2023

NO. 373 — SPQA Architecture, LLaMA on M1 Mac, Loved Ones Voice Scams…

Happy Monday, let's attack the week.

MY WORK

🔥 SPQA: The AI-based Architecture That'll Replace Most Existing Software

This is a shorter version of last week's essay about the new GPT-based software paradigm. This one is more to the point and has more examples. READ THE ESSAY

🎙️UL Sponsored Interview with KOLIDE

In this interview I talk to KOLIDE's founder and CEO, Jason Meller, about why he started the company, the problems he's looking to address, and his unique approach to solving them. LISTEN

SECURITY NEWS

SVB Crash Analysis

Here are the major points on the SVB situation:

NYT ANALYSIS

Analysis of the New US Cybersecurity Strategy

Krebs did a full analysis of the US's new cyber strategy, and here are some of the highlights:

Lawfare's analysis broke it down like this:

Defend infrastructure

Disrupt and dismantle threat actors

Shape market forces to drive security and resilience

Invest in a resilient future

Forge international partnerships

KREBS ANALYSIS | LAWFARE ANALYSIS | FULL STRATEGY PDF

Cerebral Mental Health Startup Shared Data With Meta, TikTok, and Google

The mental health startup shared names, dates of birth, insurance information, and worst of all—the contents of mental health self-evaluations—with social media companies. This affected over 3.1 million patients of the company. MORE

Are Your Security Solutions Privacy Compliant?

Whether you answered yes or no, global privacy laws can be murky and, oftentimes, confusing. hCaptcha’s privacy white paper cuts through the noise and will help you learn the ins and outs of global privacy laws and how they can impact your organization.

The white paper explains:

How to meet the requirements established by international privacy laws

How to navigate your liability as an online property owner

How to evaluate your security stack for privacy compliance

And much more

Ensure that your company remains compliant. Download our privacy white paper today!

hcaptcha.com/ul

Persistent SonicWall Malware

Mandiant says Chinese attackers are hitting unpatched SonicWall SMA 100-series gateways and infecting them with credential-stealing malware that persists through reboots and firmware upgrades. MORE

12GB Acronis Leak

An attacker known as kernelware dropped a 12GB bundle of data from Acronis, a data protection company. The archive included certificate files, command logs, system configurations, system information logs, archives of their filesystem, Python scripts for an Acronis database, and backup configurations. They said they were bored and the company's security was 'dogshit' so they wanted to humiliate them. MORE

AI Voice Scams

$11 million was stolen via AI voice scams in 2022. I expect that number is highly underestimated, and that it will balloon massively in 2023. The latest thing is voice spoofing loved ones using AI and adding that to the scam. MORE

Why do Chinese Billionaires Keep Vanishing?

Bao Fan is the latest Chinese billionaire to disappear from the public sphere, and the questions are becoming louder. My analysis is that this is great for the west, and for the United States. The best possible outcome right now for China's enemies is for China to continue with this policy, which will quietly push anyone talented and smart out of the country. MORE

🪳Critical Flaws in FortiOS and FortiProxy

Fortinet has patched 15 different flaws, including one rated 9.3 in the FortiOS and FortiProxy admin interfaces that allows for RCE and DoS. MORE

You've Got Assets? We've Got Answers

JupiterOne collects more asset data than any other provider, and shows you the relationships between those assets in seconds. It's not just about connectors and data; it's about the types of questions you can ask to get the relevant answers for your security program.

We go beyond endpoints, IP addresses, users, and devices, and ingest data from CSPs, SaaS apps, code repos, IAM policies, security controls, vulnerability findings, and more. This enables you to ask questions like: "What internet-facing applications are running systems affected by log4j, and who owns those systems?"

jupiterone.com/unsupervisedlearning

TECHNOLOGY NEWS

🤖⚠️ LLaMA on an M1 Max 64GB

Lawrence Chen got the leaked LLaMA LLM running on an M1-based Mac. I think it's about to become trivially easy to have your very own AI running at home, and that's both glorious and scary. What happens when people start asking it how to attack people, or create bio weapons, or … a million other things? It doesn't matter if OpenAI is safe when you have widely-available AIs you can run on consumer computers. MORE | SIMILAR PROJECT by MATT RICKARD

Additive Prompting

Nick St. Pierre gets something called Additive Prompting working to create some stunning interior design and architecture photography. He shows his prompts and they work in not just MJ but all the various image-generation technologies. FULL THREAD

Discord Rolls Out AI Integration

AI is becoming the must-have feature for companies, but I'm especially happy to see it come to Discord so quickly. Its integration creates someone named @Clyde, which is an AI bot you can chat with and have do stuff for you on your server. MORE

HUMAN NEWS

Andrew Huberman's Advice for Adjusting to DST

"To get up easily at (the new) time tomorrow & thereafter, today (Sunday) stack these especially potent circadian clock phase shifters: 1) View sunlight before the sun is overhead (even if through cloud cover), and 2) Exercise (ideally outside) before 2PM" TWEET

American IQ Decline

Americans' IQs just declined for the first time in almost 100 years. One theory is that education has worsened, but researchers aren't sure of the cause. MORE | PAPER PDF

Around 40% of Software Engineers Only Work Remotely

Hired did a study and found that almost half of software engineers will pass on a job unless it allows them to work remotely. MORE

Why Did Liberal Girls' Mental Health Sink the Fastest?

Analysis of mental health data across gender and political affiliation. MORE

IDEAS & ANALYSIS

What About Data Science?

What's going to happen to Data Science when GPTs (and whatever comes next) take over? The major advantage of having data scientists was the fact that they could wrangle unwieldy data into a usable form. And they could help us ask questions. Don't GPTs largely do this for us? Especially those like ChatGPT that are designed to interact like humans. I think there's about to be a giant whooshing sound coming from the data science field. To be clear, the people themselves are highly skilled and many will be able to pivot into building GPT-based apps (perhaps in the SPQA space), or they'll become AI-Whisperers. But the field itself seems a bit doomed. SHARE & DISCUSS

NOTES

Congrats to Charles Blas in the community for winning the UL Referral AirPods Pro 2 giveaway from February! I'm giving them to him in person this week!

We have a member of the UL Community who's looking for his next marketing job. He's not just an experienced marketer, but he's also super sharp on current technologies and overall highly curious about the world. If you're a scrappy startup looking for an edge, reply here so I can put you in touch!

I think I need to start looking for a GPU rig. With the release of LLaMA I feel like similar models will continue to be built and/or leak, and it'll soon be possible to have your own version of something ChatGPT-like running for your own use. I definitely want that. So what's the best way to build a box that chains together like 4 massive GPUs? Or will it be better to just buy a massive Mac Pro with M2 or M3 chips where the GPUs can use all available memory? Thoughts? Create a thread in Discord and we'll discuss.

DISCOVERY

⚒️ llama_index — LlamaIndex is a simple, flexible interface between your external data and LLMs. It provides the following tools in an easy-to-use fashion

TOOL | by Jerry Liu

⚒️ CodeGPT.nvim — CodeGPT.nvim is a neovim plugin that allows you to call OpenAI to do code completion, refactoring, generating documentation, etc. TOOL | by Darby Payne

⚒️ writeout.ai — Give it an audio file and it'll output a transcript. TOOL | by Beyond Code

📢 [Sponsor] — Are your websites being overrun by fraud rings, account takeovers, and other sophisticated automation? hCaptcha uses continuous learning and its powerful SecurityML platform to block even the most advanced bots and fraud. LEARN MORE

Apple gains popularity with GenZ and premium buyers — High-end customers and young people are increasingly going with the iPhone over top Android phones. My read is simple: Apple thinks about the experience, while their competitors think too much about the technology. That turns the iPhone into a luxury device and Android phones into gadgets. WSJ

How to YubiKey: A Guide to YubiKey Configuration MORE

📢 [Sponsor] — Can you answer complex questions about what assets you have, which are facing the internet, and who owns those systems so you can get them fixed if there's a new vulnerability? If not, you should look at JupiterOne. It's like a unified question-answering platform powered by your own assets. LEARN MORE

Who blew up the Nordstream pipelines? MORE

Physics Girl is in bad shape due to complications from Covid. She can't even get out of bed currently, and this is an update from her friend. Heartbreaking. She's one of science's best assets. MORE

RECOMMENDATION OF THE WEEK

Kindness-based TV

I highly recommend you watch two shows from someone you might not expect. Ricky Gervais. The shows are:

They are the most wonderfully human TV I've ever seen, and I cannot recommend them enough.

APHORISM OF THE WEEK

"The future is always beginning now."

Mark Strand


Sponsored Interview — Jason Meller, CEO of KOLIDE

Today I’m doing a Sponsored Interview with KOLIDE — a company I’ve heard a lot about recently and have been looking forward to chatting with.

Kolide is zero-trust for Okta. It ensures that all systems that authenticate through Okta are compliant with the company’s policy, and they leverage the company’s users to get into the proper state.

I’m talking to Jason Meller, the founder and CEO of Kolide and we talk about:

The problems in the BYOD space

Kolide’s approach to solving the problem

A user-centric approach to policy compliance

His view of what stops other players from being successful

And other topics

So with that, here’s Jason Meller…

https://kolide.com/unsupervisedlearning


March 10, 2023

SPQA: The AI-based Architecture That’ll Replace Most Existing Software

AI is going to do a lot of interesting things in the coming months and years, thanks to the detonations following GPTs. But one of the most important changes will be the replacement of our existing software.

We used to adapt our businesses to the limitations of the software. In this model the software will adapt to how we do business.

AI-based applications will be completely different than those we have today. The new architecture will be a far more elegant, four-component structure based around GPTs: State, Policy, Questions, and Action.

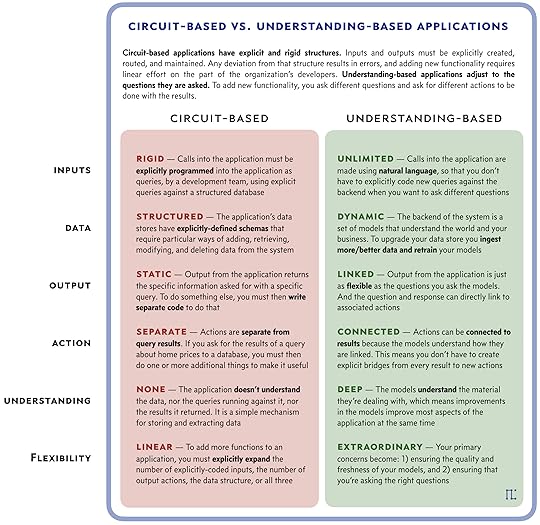

Fundamentally it’s a transition from something like a Circuit-based architecture to an Understanding-based architecture.


Our current software is Circuit-based, meaning the applications have explicit and rigid structures like the etchings in a circuit board. Inputs and outputs must be explicitly created, routed, and maintained. Any deviation from that structure results in errors, and adding new functionality requires linear effort on the part of the organization’s developers.

Circuit isn’t the perfect metaphor, but it’s descriptive enough.

New software will be Understanding-based. These applications will have nearly unlimited input because they’re based on natural language sent to a system that actually understands what you’re asking. Adding new functionality will be as simple as asking different questions and/or giving different commands.
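To make the contrast concrete, here is a minimal sketch. The names (`ROUTES`, `circuit_app`, `understanding_app`, `fake_model`) and the sample application names are all made up for illustration, and `fake_model` is only a placeholder for a call to a GPT-style model so the example runs on its own.

```python
from typing import Callable, Dict


# Circuit-based: every input must be wired to a handler ahead of time.
def list_critical_apps() -> str:
    return "billing, lab-data-portal"  # made-up sample data


ROUTES: Dict[str, Callable[[], str]] = {
    "list_critical_apps": list_critical_apps,
}


def circuit_app(command: str) -> str:
    # Anything that was not explicitly routed is an error.
    if command not in ROUTES:
        raise KeyError(f"no route for {command!r}")
    return ROUTES[command]()


# Understanding-based: one entry point that accepts natural language.
# `model` is a hypothetical stand-in for a GPT-style model call.
def understanding_app(request: str, model: Callable[[str], str]) -> str:
    return model(request)


def fake_model(prompt: str) -> str:
    # Placeholder so the sketch runs without a real model.
    return f"[model answer to: {prompt}]"
```

In the first style, supporting "rank our top threats" means a developer adds a new route. In the second, it is just a different sentence passed to the same function.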

The SPQA Architecture

(record screech sound)

“Nope! GPTs don’t have true understanding…”

⏱️ 60 seconds starting now…

There are many who don’t see what GPTs are doing as actual “understanding”, but I think I can convince most holdouts in less than 60 seconds. Ready? Go.

First, let’s define terms.

Intelligence is something like, “The collection, analysis, and sharing of information on the enemy to enable superior decision-making.”

Data: Raw facts or figures without context

Information: Data that has been processed, organized, or structured

Knowledge: Information that has been combined to become useful

Understanding: The ability to apply knowledge in new situations and contexts

Or in compacted form:

Data is raw

Information is organized

Knowledge is useful

Understanding is applied

So the definition we’re using is:

The ability to apply knowledge in new situations and contexts.

So, can GPTs do this? Let’s respond with an example. Do they understand concepts like Star Wars and Forbidden Love and Shakespeare enough to write the following poem?

Write a love song from Luke to Leia.

I asked it to do that, and here’s what it wrote…

O Leia, fairest of all maidens fair,

Thy beauty doth my very soul ensnare.

Thy shining eyes, like stars that light the night,

Doth fill my heart with love’s sweet, shining light.

Thy gentle touch, like feathers on my skin,

Doth make my heart to dance and leap within.

Thy voice, like music to my eager ears,

Doth soothe my soul and calm my deepest fears.

And though our love may be forbidden, true,

I cannot help but give my heart to you.

For thou art all I ever could desire,

My Leia, thou hast set my heart on fire.

So let us steal away into the night,

And let our love take wing and take to flight.

For in thy arms, I know I shall find peace,

And all the world’s troubles shall surely cease.

A LONGING GPT

Try it yourself and add new contexts/situations to the mix.

That’s a Shakespearean poem, about Forbidden Love, in the Star Wars universe, between two Siblings. And it’s applied to a completely new situation/context that I just made up.

Awkward

Notice that I didn’t even mention Star Wars or Forbidden Love in the prompt! It understood the meaning of “Luke and Leia”, and “Love”, and it inferred that it was forbidden because it knows siblings aren’t supposed to feel that way about each other. This is commonly known as understanding the poem and its contents.

A lot of the confusion about GPTs and whether they understand things comes from the conflation of understanding with experiencing.

Do GPTs understand things? Yes. The magic of the tech is that GPTs have to accidentally learn concepts in a deep way so they can properly predict the next token in a sequence. And they can then apply those concepts in new situations. That’s understanding.

But does a GPT know what it feels like to understand love? Or what it feels like to contemplate the universe? Or human mortality? No. They don’t have feelings. They’re not conscious. They don’t experience things one little bit.

If you argue that you must feel to understand, then you’re saying understanding requires consciousness, and that’s as big a chasm as Luke took Leia across.

But we’re not asking GPTs to experience things, we’re asking them to learn a concept and then apply that concept to new situations and contexts. That’s understanding. And they do it quite well.

Software that understands

It’s difficult to grok how big the difference is between our legacy software and software that understands.

Both the State and Policy will be Model-based

I say “something like” because the exact winning implementations will be market-based and unpredictable.

Rather than try to fumble an explanation, let’s take an example and think about how it’d be done today vs. in the very near future with something like an SPQA architecture.

A security program today

So let’s say we have a biotech company called Splice based out of San Bruno, CA. They have 12,500 employees and they’re getting a brand new CISO. She’s asking for the team to immediately start building the following:

Give me a list of our most critical applications from a business and risk standpoint

Create a prioritized list of our top threats to them, and correlate that with what our security team is spending its time and money on

Make recommendations for how to adjust our budget, headcount, OKRs, and project list to properly align to our actual threats

Let’s write up an adjusted security strategy using this new approach

Define the top 5 KPIs we’ll track to show progress towards our goals

Build out the nested OKR structure that flows from that strategy given our organizational structure

Create an updated presentation for the board describing the new approach

Create a list of ways we’re lacking from a compliance standpoint given the regulations we fall under

Then create a full implementation plan broken out by the next four quarters

Finally, write our first Quarterly Security Report, and keep that document updated

How many people will be needed to put this together? What seniority of people? And how long will it take?

If you have worked in security for any amount of time you’ll know this is easily months of work, just for the first version. And it takes hundreds of hours to meet about, discuss, and maintain all of this as well.

Hell, there are many security organizations that spent years working on these things and still don’t have satisfactory versions of them.

So—months of work to create it, and then hundreds of hours to maintain it using dozens of the best people in the security org who are spending a lot of their time on it.

A security program using SPQA

Let’s see what it looks like in the new model.

It could be that POLICY becomes part of STATE in actual implementations, but smaller models will be needed to allow for more frequent changes.

Choose the base model: You start with the latest and greatest overall GPT model from OpenAI, Google, Meta, McKinsey, or whoever. Lots of companies will have one. Let’s call it OpenAI’s GPT-6. It already knows so incredibly much about security, biotech, project management, scheduling, meetings, budgets, incident response, and audit preparedness that you might be able to survive with it alone. But you need more personalized context.

Train your custom model: Then you train your custom model which is based on your own data, which will stack on top of GPT-6. This is all the stuff in the STATE section above. It’s your company’s telemetry and context. Logs. Docs. Finances. Chats. Emails. Meeting transcripts. Everything. It’s a small company and there are compression algorithms as part of the Custom Model Generation (CMG) product we use, so it’s a total of 312TB of data. You train your custom model on that.

Train your policy model: Now you train another model that’s all about your company’s desires. The mission, the goals, your anti-goals, your challenges, your strategies. This is the guidance that comes from humans that we’re using to steer the ACTION part of the architecture. When we ask it to make stuff for us, and build out our plans, it’ll do so using the guardrails captured here in the POLICY.

Tell the system to take the following actions: Now the models are combined. We have GPT-6, stacked with our STATE model, also stacked with our POLICY model, and together they know us better than we know ourselves.

So now we give it the same exact list of work we got from the CISO.

Give me a list of our most critical applications from a business and risk standpoint

Create a prioritized list of our top threats to them, and correlate that with what our security team is spending its time and money on

Make recommendations for how to adjust our budget, headcount, OKRs, and project list to properly align to our actual threats

Let’s write up an adjusted security strategy using this new approach

Define the top 5 KPIs we’ll track to show progress towards our goals

Build out the nested OKR structure that flows from that strategy given our organizational structure

Create an updated presentation for the board describing the new approach

Create a list of ways we’re lacking from a compliance standpoint given the regulations we fall under

Then create a full implementation plan broken out by the next four quarters

Finally, write our first Quarterly Security Report, and keep that document updated

We’ll still have to double-check models’ output for the foreseeable future, as hallucination is a real thing this early in the game.

Let’s say our new combined SPQA system is called Prima. Ask yourself two questions.

How long will it take it to create the first versions of all these, given everything it knows about the company?

How much time will it take to create updated versions every week, month, quarter, or year?

The answer is minutes. Not just for the initial creation, but for all updates going forward as well.

The only things it needs are 1) up-to-date models using the latest data, and 2) the right questions coming from the human leaders in the organization. In this case, we already have those questions in the list above.

Remember, Prima won’t just come up with the direction, it’ll also create all the artifacts. Every document. Every OKR. The QSR itself. The strategy document. The outline for the board presentation. The auditor preparation documents. Even the emails to stakeholders. That’s additional hundreds of hours of work that would have been done by more junior team members throughout the organization.

So—we’re talking about going from thousands of hours of work per quarter—spread across dozens of people—to maybe like 1% to 5% of that. In the new model the work will move to ensuring the POLICY is up to date, and that the QUESTIONS we’re asking are the right ones.
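The flow above (base model, then STATE, then POLICY, then the questions, with artifacts coming out the other end) can be sketched in a few lines. Everything here is an assumption for illustration: the `SPQA` class, `build_prompt`, and `echo_model` are hypothetical names, and the "stacking" is faked by placing STATE and POLICY text in front of each question, which is a much simpler technique than the model training the essay describes.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Model = Callable[[str], str]  # hypothetical interface: prompt in, text out


@dataclass
class SPQA:
    base_model: Model   # the large general-purpose model
    state: List[str]    # company telemetry and context (STATE)
    policy: List[str]   # mission, goals, anti-goals (POLICY)
    artifacts: Dict[str, str] = field(default_factory=dict)

    def build_prompt(self, question: str) -> str:
        # Stand-in for stacked models: STATE and POLICY are simply
        # prepended to the question as plain text.
        lines = ["STATE:", *self.state, "POLICY:", *self.policy,
                 "QUESTION:", question]
        return "\n".join(lines)

    def ask(self, question: str) -> str:
        # ACTION: produce an artifact for the question and keep it.
        answer = self.base_model(self.build_prompt(question))
        self.artifacts[question] = answer
        return answer


def echo_model(prompt: str) -> str:
    # Placeholder model so the sketch runs; it echoes the question line.
    return "DRAFT for: " + prompt.splitlines()[-1]


prima = SPQA(echo_model,
             state=["12,500 employees"],
             policy=["Align spend to actual threats"])
for q in ["List our most critical applications", "Define the top 5 KPIs"]:
    prima.ask(q)
```

Swapping `echo_model` for a real model call is the only change the interface needs. Per the note above about hallucination, whatever lands in `artifacts` still needs a human review.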

Transforming software verticals

Sticking with security, since that’s what I know best, imagine what SPQA will do to entire product spaces. How about Static Analysis?

Static Analysis in SPQA

In Static Analysis you’re essentially taking input and asking two things:

What’s wrong?

How do we fix it?

SPQA will crush all existing software that does that because it’s understanding-based. So once it sufficiently groks the problem via your STATE, and it understands what you’re trying to do via your POLICY, it’ll be able to do a lot more than just find code problems and fixes. It’ll be able to do things like:

Find the problem

Show how to fix it in any language (coding or human)

Write an on-the-fly tutorial on avoiding these bugs

Write a rule in your tool’s technology that would detect it

Give you the fixed code

Confirm that the code would work

Plus you’ll be able to do far more insane things, like create multiple versions of code to see how they would all respond to the most common attacks, and then make recommendations based on those results.

Security software in general

Now let’s zoom out to security software in general and do some quick hits on some of the most popular products.

Detection and response

Who are the real attackers here?

Who is dug in waiting for activation?

Find the latest TTPs in our organization

Write rules in our detection software that would find them

Share those rules with our peers

Pull their rules in and check against those as well

Create a false parallel infrastructure that looks exactly like ours but is designed to catch attackers using the following criteria

Automatically disable accounts, send notifications, reset tokens, etc. when you see successful attacks

Watch for suspicious linked events, such as unknown phone calls followed by remote sessions followed by documentation review.

Basically, most of what you had to build by hand when you stood up a D&R function will be done for you because you have SPQA in place.

It natively understands what’s suspicious. No more explicitly coding rules. Now you just add guidance to your `POLICY` model.
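For contrast, here is roughly what one explicitly coded rule looks like today, using the linked-events example above (unknown phone call, then remote session, then documentation review). This is a minimal sketch: the `Event` shape, the event kind strings, and the one-hour default window are all assumptions made up for illustration, not any product’s schema.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Event:
    user: str
    kind: str         # e.g. "unknown_call", "remote_session", "doc_review"
    timestamp: float  # seconds since some epoch


# Hypothetical event kinds, in the order that makes the chain suspicious.
SUSPICIOUS_CHAIN = ["unknown_call", "remote_session", "doc_review"]


def chain_detected(events: List[Event], user: str,
                   window_s: float = 3600.0) -> bool:
    """True if `user` produced the chain in order, with every step
    inside `window_s` seconds of the first step."""
    mine = sorted((e for e in events if e.user == user),
                  key=lambda e: e.timestamp)
    for i, first in enumerate(mine):
        if first.kind != SUSPICIOUS_CHAIN[0]:
            continue
        step = 1  # index of the next chain element we are waiting for
        for later in mine[i + 1:]:
            if later.timestamp - first.timestamp > window_s:
                break  # too late to count toward this chain
            if later.kind == SUSPICIOUS_CHAIN[step]:
                step += 1
                if step == len(SUSPICIOUS_CHAIN):
                    return True
    return False
```

Every variation (a different order, a fourth step, a different window) means editing code like this by hand. The claim above is that this is the work that moves into plain-language guidance in the `POLICY` model.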

Attack surface management and bounty

Pull all data about a company

Find all its mergers and affiliations

Find all documentation related to those things

Make a list of all domains

Run tools continuously to find all subdomains

Open ports

Applications on ports

Constantly browse those sites using automation

Send data to the SPQA model to find the most vulnerable spots

Run automation against those spots

Auto-submit high-quality reports that include POC code to bounty programs

(if you’re froggy) Submit the same reports to security@ to see if they’ll pay you anyway

Constantly discover our new surface

Constantly monitor/scan and dump into a data lake (S3 bucket or equivalent)

Constantly re-run STATE model

Connect to alerting system and report-creation tooling

Have the system optimize itself

Corporate security

Monitor all activity for suspicious actions and actors

Automatically detect and block and notify on those actions

Ensure SaaS security is fully synched with corporate security policies (see POLICY)

Vendor and supply chain security

Vendor and Supply Chain Security is going to be one of the most drastic and powerful disruptions from SPQA, just because of how impossibly hard the problem is currently.

Make a list of all the vendors we have

Consume every questionnaire we receive

Find every place that the vendor’s software touches in our infrastructure

Find vulnerable components in those locations

Make a prioritized list of the highest risks to various aspects of our company

Recommend mitigations to lower the risk, starting with the most severe

Create a list of alternative vendors who have similar capabilities but that wouldn’t have these risks

Create a migration plan to your top 3 selections

Today in any significant-sized organization, the above is nearly impossible. An SPQA-based application will spit this out in minutes. The entire thing. And same with every time the model(s) update.

We’re talking about going from completely impossible…to minutes.

What’s coming

Keep in mind this entire thing popped like 4 months ago, so this is still Day 0.

Those are just a few examples from cybersecurity. But this is coming to all software, starting basically a month ago. The main limitations right now are:

1. The size limitations and software needed to create large custom models

2. The speed and cost limitations of running updates for large organizations with tons of data

The first one is being solved already using tools like Langchain, but we’ll soon have super-slick implementations for this. You’ll basically have export options within all your software to send an export out, or stream out, all that tool’s content. That’s Splunk, Slack, GApps, O365, Salesforce, all your security software, all your HR software. Everything.

They’ll all have near-realtime connectors sending out to your chosen SPQA product’s STATE model.

We’re likely to see `STATE` and `POLICY` broken into multiple sub-models that have the most essential and time-sensitive data in them so they can be updated as fast and inexpensively as possible.

For #2 that’s just going to take time. OpenAI has already done some true magic on lowering the prices on this tech, but training custom models on hundreds of terabytes of data will still be expensive and time-consuming. How much and how fast that drops is unknown.

How to get ready

Here’s what I recommend for anyone who creates software today.

Start thinking about your business’s first principles. Ask yourself very seriously what you provide, how it’s different than competitor offerings, and what your company will look like when it becomes a set of APIs that aren’t accessed by customers directly. Is it your interface that makes you special? Your data? Your insights? How do these change when all your competitors have equally powerful AI?

Start thinking about your business’s moat. When all this hits fully, in the next 1-5 years, ask yourself what the difference is between you doing this, using your own custom models stacked on top of the massive LLMs, vs. someone like McKinsey walking in with The Solution™. It’s 2026 and they’re telling your customers that they can simply implement your business in 3-12 months by consuming your STATE and POLICY. Only they have some secret McKinsey sauce to add because they’ve seen so many customers. Does everyone end up running one of like three universal SPA frameworks?

Mind the Innovator’s Dilemma. Just because this is inevitable doesn’t mean you can drop everything and pivot. The question is—based on your current business, vertical, maturity, financial situation, etc.—how are you going to transition? Are you going to do so slowly, in place? Or do you stand up a separate division that starts fresh but takes resources from your legacy operation? Or perhaps some kind of hybrid. This is about to become a very important decision for every company out there.

Focus on the questions. When it becomes easy to give great answers, the most important thing will be the ability to ask the right questions. This new architecture will be unbelievably powerful, but you still need to define what a company is trying to do. Why do we even exist? What are our goals? Even more than your STATE, the content of your POLICY will become the most unique and identifying part of your business. It’s what you’re about, what you won’t tolerate, and your definition of success.

My current mode is Analytical Optimism. I’m excited about what’s about to happen, but can’t help but be concerned by how fast it’s moving.

See you out there.

Notes

Thank you to Saul Varish, Clint Gibler, Jason Haddix, and Saša Zdjelar for reading early versions of this essay and providing wonderful feedback.

March 8, 2023

Is Equality Unnatural?

I’m still struck by an observation that Scott Galloway has been making lately. He keeps talking about how the top 5% of men in terms of income and other measures are getting all the attention from women on dating apps. He says everyone keeps freaking out about this like it’s new, but it’s actually a return to the norm. For most of history you basically had poor people and rich people, and the rich men had their pick of most women.

This reminds me of Piketty’s analysis of inequality measured by things like the GINI coefficient. His massive book on the topic talked about how it basically runs in cycles. You basically have a return to massive inequality, and then you have a traumatic event like war, famine, pandemic, etc. that equalizes things. But only temporarily.

In the US the early 1900s were massively unequal. Then the wars happened and we got the GI Bill and a bunch of social programs, and that all created the middle class. But in this model, the middle class isn’t natural. It’s an artificial construct invented by humans. This is really powerful because it ties in with the analysis of change above. If you’re liberal, you believe that you just need to give some opportunity, and level the field, and everyone will reach similar heights. Hence, GI Bill and social programs. If you’re conservative you might think some of that is ok, but you can only help so much before you’re just wasting money on people who don’t want or aren’t capable of benefiting from the help.

I believe there’s a Pokémon Evolved Form that merges, or goes beyond, those two models. I believe the conservatives are right that there are vast differences in peoples’ individual capabilities, and so we should expect to see similar differences in outcomes. But I’m aggressively liberal because I don’t think conservatives are doing the work to tell the difference between trauma, generational disadvantage, and natural capabilities. In other words, I think too many Conservatives look at a failing person, or a failing group, and say, “See? That proves they’re not capable. That’s why they don’t deserve nice things.”

Whereas when I see someone fail, I wonder how much of it is a capability issue vs. a trauma issue. And I believe it’s the job of civilization, and the people, and government to tease that out. It’s our job to remove the disadvantages of bad luck, historical deck-stacking, and institutional biases so that people can reach their full potential.

Finally, I also believe that those who end up on the bottom after all that, still deserve a good life. They’re not throw-away people. No one is.

The Primary Liberal vs. Conservative Disconnect

For a long time the main disconnect between Liberals and Conservatives was considered to be how much they embrace change and tolerate inequity. Those still seem like decent measurements, but new research by Nick Kerry suggests that the clearer distinction is the belief that the world is fundamentally hierarchical.

What I like about this definition is that it elegantly encapsulates the previous definition. Basically, if you believe that’s how the world works, and that it’s largely inalterable, you’ll be less willing to spend money trying to change it.

Another way to frame this is around the question of human pliability. How much can people change, vs. how much are they locked in by genetics and early environment? The more you believe people basically are what they are, the more it seems you’d lean conservative in this model. And the more you believe people are malleable and can become anything—if the conditions were just better—the more you’d lean liberal.

Perhaps this is why people become more conservative over time. Maybe it’s because in their 30s and 40s they notice that most of the people they knew growing up roughly shook out according to their individual genetic talents. It’s overly simple to be sure, but I think this might be a stunningly powerful predictor for what makes someone conservative or liberal.

I think the major exception to this is when someone had oppressive trauma that limited their achieving their potential, which is then somehow removed.

What’s so personally interesting for me is how much I’ve always been in the change camp. Like, radically. And also very left. So perhaps my slow move towards the center-left over the years hasn’t just been from age, or from the nasty politics of the last decade. Maybe it came from all the reading I’ve done in the last 15 years about how people are mostly the way they are, and how there are rarely major movements in capabilities or character over time.

So in this model, the more you believe people’s personalities and capabilities are locked in, the more you believe in natural hierarchies. And the more you believe in natural hierarchies, the more conservative you become.

March 6, 2023

NO. 372 — LastPass Employee Hack, State AI Propaganda, CrowdStrike’s Threat Report…

Welcome to Monday. Let's crush it this week!

MY WORK

How AI is Eating the Software World

In this essay, I describe a new architecture that I believe will replace much of our existing software, starting already. Covers GPT understanding, the SPA architecture, and gives examples of how existing software will transition. READ THE ESSAY

SECURITY NEWS

LastPass Engineer Hacked at Home

The LastPass thing keeps getting not-better. Turns out the way this all went down was by an employee getting hacked via their own computer. A keylogger was installed on their home computer through a vulnerability in a third-party media software package (which everyone is saying was Plex). From there they got access to the keys that let them read encrypted S3 buckets and pull data and backups. This is one of the most prominent cases of BYOD being such a critical part of modern defenses, and I have already seen companies steer their budgets as a result of the report. MORE

China Fielding AI Propaganda Newscasters

China appears to be fielding AI newscasters to spread pro-China propaganda. It's one of the first instances of state-sponsored propaganda channels using AI.

MORE

🔎 Crowdstrike Global Threat Report 2023 Analysis

Crowdstrike just released its 2023 Threat Report, and I like to look at these reports and pull out nuggets. Here's what stood out for me:

Overall analysis: I thought the report had a lot in it, but it wasn't as easy to consume as the DBIR. I feel like they could do a better job of information density using visuals, and listing all their products at the end of the report also stole a bit of the report's legitimacy. Still solid. 8/10. READ THE FULL REPORT | THREAT ACTOR ANIMAL NAMES

Sponsor

Adaptive and Crowdsourced IPS with CrowdSec

CrowdSec works by unifying Blue Team and Security Operations visibility to help defenders see and block malicious activity. It's like a spider's web that detects problems in one place and can block that threat for participating systems anywhere in the world.

Cybercriminals need IP addresses to mask their locations. By linking intelligence across all protected systems, CrowdSec burns a resource precious to attacker operations.

crowdsec.net/unsupervisedlearning

News Corp Hack

News Corp says they had someone in their network for 23 months, and that they stole private information and documents from the company. MORE

BetterHelp Sharing

The FTC went after BetterHelp for sharing users' sensitive data that it promised not to share. They're paying out $7.8 million to customers as part of the settlement. MORE

Chick-fil-A Compromised

As we predicted, Chick-fil-A has now said over 71,000 accounts were breached over multiple months using credential stuffing attacks. Attackers used their access to steal data and rewards points. They then sold the accounts for $2 to $200 each, based on how many points they had. MORE

Robots as Office Security Guards

Sensor-laden and AI-powered robots are becoming more popular choices for security guards in various settings. Offices, buildings, malls, etc. I think they're great as remote sensors, but they're going to be extremely easy to evade and/or bypass until they're so smart and fast that they're scary. The Black Mirror episodes showed us why this is something we should be careful with. MORE

Secure Your Home Office, NSA Style

The NSA has released best practices for working from home. Here's what they said to do:

– Keep software updated, including Windows and web browser

– Update router and change default password

– Use a password manager and two-factor authentication

– Separate work and personal activities

– Use a VPN for work connections

MORE | THE NSA RECOMMENDATION PDF

Older Men Kidnapped in Brazil

Men from 30 to 51 are being targeted in Brazil via dating apps. They're being asked to meet in non-public places with women much younger and more attractive than them, and then they're being robbed and/or "lightning" kidnapped. Police stats show that this currently makes up 90% of kidnappings in São Paulo in the last year. MORE

You've Got Assets? We've Got Answers

JupiterOne collects more asset data than any other provider, and shows you the relationships between those assets in seconds. It's not just about connectors and data; it's about the types of questions you can ask to get the relevant answers for your security program.

We go beyond endpoints, IP addresses, users, and devices, and ingest data from CSPs, SaaS apps, code repos, IAM policies, security controls, vulnerability findings, and more. This enables you to ask questions like: "What internet-facing applications are running systems affected by log4j, and who owns those systems?"

jupiterone.com/unsupervisedlearning

TECHNOLOGY NEWS


OpenAI Launches APIs

OpenAI launched their ChatGPT and Whisper APIs last week which is going to massively invigorate the AI-based startups and use cases we've been seeing for months. Once we get the ability to train our own massive models, using all our documentation, all our code, all our Slack messages, etc.—that's when things are going to go crazy. In the meantime I'd love an app that listens to non-English being said around me and tells me what it's saying in my ear. MORE

TikTok Usage Controls

TikTok is introducing new well-being features, including a screen time control for kids that stops them from continuing after 60 minutes unless they enter a code. This is all to try to stop the anti-TikTok legislation in the US, but I'm not sure it's going to be enough. One thing I do know, though, is that if TikTok really does get pulled it's going to be like when Ben Kenobi had to sit down in the first Star Wars. "…it's as if millions of voices suddenly cried out in terror and were suddenly silenced". MORE

Elon Cut Tesla Prices Again

Tesla cut prices again, ranging from ~5% to ~10% discounts on various high and low-end models. MORE

Tesla's Investor Day

Elon outlined the company's future at its annual investor day event, but didn't release any new vehicles. Here's what he outlined:

MORE

HUMAN NEWS

The Peptide Movement

There's evidently a bridge now between supplements and steroids for people looking to gain muscle and drop fat. They're called Peptides, and you can either inject them or get them via nasal spray, etc. I'm not an expert on them yet, but people are saying (including Andrew Huberman otherwise I wouldn't be taking it that seriously) they give a lot of the benefits of steroids without the downsides. Those are nice words, but I want to do a lot more research. Any of you have an experience with them? MORE | DISCUSS IN OUR COMMUNITY | REPLY TO THIS EMAIL

Vaccine Makers Prepping for Bird Flu

A team at the University of Pennsylvania is developing an mRNA flu vaccine designed to provide protection against multiple subtypes of the virus, potentially limiting disease and death caused by new pandemic strains. Bird flu strains have killed millions of birds recently, which played a major role in recent egg price spikes. Plus multiple strains have moved from birds to other types of animals, including seals, sea lions, and dolphins. The number of humans that have been infected in recent years is fairly low, and human-to-human transmission is currently difficult, but the mortality rate is around 56% when it happens. MORE

The US Housing Market Dropped by $2.3 Trillion

We just saw the biggest drop in the housing market since 2008. The big losers are San Francisco and New York, and Miami has done very well from migration to Florida. MORE

Jobless Men and Divorce

A man's work status is a major predictor of divorce. In a recent study at Harvard, men without full-time jobs were 33% more likely to divorce than men who had full employment. MORE

IDEAS & ANALYSIS

What If AI Makes Everyone More Productive?

I've been talking for over 7 years about how AI is coming, and how it's going to massively disrupt human work. Many people have been saying this for a long time. There's also a counter-argument that it'll create more jobs as well, which I've always believed. My main issue has been the K-shaped recovery idea, where benefits and recoveries affect the top and bottom groups in dramatically different ways. Basically the top thrives and the bottom suffers. And people tend to be pulling toward one or the other rather than hovering in the middle.

Well what if something's possible that's far more spectacular? What if AI could be turned into a tool that lifts the bottom? What if a company like OpenAI, in conjunction with massive government funding, could create like an augmentation platform that helps people with education, transit, childcare, and even basic decision-making? What if it could effectively make some percentage of the bottom of the economy…more productive?

The Primary Conservative vs. Liberal Disconnect?

For a long time the main disconnect between Liberals and Conservatives was considered to be how much they embrace change and tolerate inequity. Those still seem like decent metrics, but some new research by Nick Kerry suggests that the clearer measure is the belief that the world is fundamentally hierarchical. What I like about this definition is that it more elegantly explains the previous definition.

Basically, if you believe that's how the world works, and that it's largely inalterable, you'll be less willing to spend money trying to change it. Another way to frame this is pliability. How much can people change, vs. how much are they locked in by genetics and early environment? The more you believe people basically are what they are, the more it seems you'd lean conservative in this model. And the more you believe people are malleable and can become anything, if the conditions were just better, the more you'd lean liberal. Perhaps this is why people become more conservative over time? Because they repeatedly see how people don't usually change in major ways? This is kind of blowing my mind.

I think this might be a super powerful model (overly simple to be sure) of what makes someone conservative or liberal. And what's so personally interesting for me is how much I've always been in the "change" camp. Like, radically. So perhaps my slow move towards the center Left over the years hasn't just been from age, or from the nasty politics of the last decade, but from all the reading I've done about how people are mostly the way they are, and that there are rarely major movements in capabilities or character (unless it's via the removal of trauma that was suppressing innate talent). I'm impressed with this model. What about you? Does it resonate with you and your experience? MORE | DISCUSS IN OUR COMMUNITY | JOIN UL | REPLY TO THIS EMAIL

A Disturbing Thought on Equality

Flowing naturally from the previous thought, I'm still struck by an observation that Scott Galloway has been making lately. He keeps talking about how the top 5% of men in terms of income and other measures are getting all the attention from women on dating apps. He says everyone keeps freaking out about this like it's new, but it's actually a return to the norm. For most of history you basically had poor people and rich people, and the rich men had their pick of most women. This reminds me of Piketty's analysis of inequality measured by things like the GINI coefficient. His massive book on the topic talked about how it basically runs in cycles. You basically have a return to massive inequality, and then you have a traumatic event like war, famine, pandemic, etc. that equalizes things. But only temporarily.

In the US the early 1900s were massively unequal. Then the wars happened and we got the GI Bill and a bunch of social programs, and that all created the middle class. But in this model, the middle class isn't natural. It's an artificial construct invented by humans. This is really powerful because it ties in with the analysis of change above. If you're liberal, you believe that you just need to give some opportunity, and level the field, and everyone will reach similar heights. Hence, GI Bill and social programs. If you're conservative you might think some of that is ok, but you can only help so much before you're just wasting money on people who don't want or aren't capable of benefiting from the help.

I believe there's a Pokémon Evolved Form that merges, or goes beyond, those two models. I believe the conservatives are right that there are vast differences in people's individual capabilities, and so we should expect to see similar differences in outcomes. But I'm aggressively liberal because I don't think conservatives are doing the work to tell the difference between trauma, generational disadvantage, and natural capabilities. In other words, I think too many Conservatives look at a failing person, or a failing group, and say, "See? That proves they're not capable. That's why they don't deserve nice things." Whereas when I see someone fail I wonder how much of it is a capability issue vs. a trauma issue. And I believe it's the job of civilization, and the people, and government to tease that out. It's our job to remove the disadvantages of bad luck, historical deck-stacking, and institutional biases so that people can reach their full potential. And I also believe that those who end up on the bottom after all that, still deserve a good life. They're not throw-away people. No one is. Anyway, fascinating ideas. DISCUSS IN OUR COMMUNITY | JOIN UL | REPLY TO THIS EMAIL

NOTES

Building a Web of APIs

I'm currently hacking on a massive combination of APIs and command-line utilities that allow me to continuously answer questions I care about, or execute commands I need done. Examples:

Then, on the command line, I can run clean little two to three-letter commands that take /dev/stdin, send it up to one of these APIs, and get the output. And I can then pipe that output to /dev/stdin on the other APIs. This is what HELIOS has been doing for years already in the Attack Surface space, but this is now bigger than ASM. Plus it's doing it via APIs rather than locally. Plus there's now AI in the DNA. Real AI. Ultimately this is more like a continuous question/command infrastructure (CQCI?). That's how I think about it. I already have multiple endpoints up and running and providing value, and I'll start doing subscriptions to them soon. Let me know if you know anyone with any interest. DISCUSS IN OUR COMMUNITY | JOIN UL | REPLY TO THIS EMAIL
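To make the stdin-to-API-to-stdout pattern above concrete, here is a minimal Python sketch of what one such command might look like. It is not my actual tooling: the endpoint URL, the plain-text request format, and the function names are all assumptions made up for illustration.

```python
import sys
import urllib.request
from typing import Callable

# Hypothetical endpoint; the real APIs are not named in this post.
API_URL = "https://api.example.com/summarize"


def post_text(text: str, url: str = API_URL) -> str:
    """POST raw text to an endpoint and return the response body as text."""
    req = urllib.request.Request(
        url,
        data=text.encode("utf-8"),
        headers={"Content-Type": "text/plain; charset=utf-8"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return resp.read().decode("utf-8")


def run_filter(stdin_text: str, send: Callable[[str], str] = post_text) -> str:
    """Core of a pipe-friendly command: take input text, send it, return output.

    `send` is injectable so the command can be exercised without a network.
    """
    return send(stdin_text)


def main() -> None:
    # Read everything piped in, send it up, write the answer to stdout.
    sys.stdout.write(run_filter(sys.stdin.read()))

# When saving this as a script, finish the file with a call to main().
```

Saved under a short name and made executable, a command like this could sit in a pipeline such as `cat notes.txt | sm | tr`, where `sm` and `tr` are hypothetical names for two such commands, each backed by a different API.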

Should I Play The Last of Us?

I'm loving The Last of Us on HBO, like everyone else, and I was thinking about playing the first game. But I'm hearing it's actually pretty scary when you're fighting clickers and such, especially on a good surround system. Any advice?

DISCOVERY

⚒️ waymore — Download the live and archived responses for URLs on wayback machine so that you can then search these for even more links, developer comments, extra parameters, etc. MORE | by XNL-h2ck3r

⚒️ bbot — An OSINT tool from Black Lantern Security that models off of Spiderfoot.

📢 [Sponsor] — Can you answer complex questions about what assets you have, which are facing the internet, and who owns those systems so you can get them fixed if there's a new vulnerability? If not, you should look at JupiterOne. It's like a unified question-answering platform powered by your own assets. LEARN MORE

The companies competing with OpenAI on AI. MORE

CypherCon 2023 — The Wisconsin Hacker Conference you’ve been looking for. 75 Speakers covering Red Team, Blue Team, Executives, and 101 Tracks! Now better than ever. The conference founder is a UL member and if you use the code UL to sign up you get in for free! MORE

RECOMMENDATION OF THE WEEK

Know Your About

Imagine yourself like a business and you need an about page. Not in the sense of wanting to make money, but in the sense of wanting to have a mission and have your actions be aligned with that mission. What's your mission? What are your goals? What are your KPIs that tell you if you're doing well or not? And what are your projects you're working on to improve?

Capture these things for yourself. Use it kind of like a journal, except it's ever-green content that you update as you learn or grow. Just the process of writing these things down will bring tremendous clarity to your life. Or anxiety, if you realize you don't have any idea what to write.

Know your about.

APHORISM OF THE WEEK

"The soul is dyed in the color of its own thoughts."

Heraclitus


February 28, 2023

How AI is Eating the Software World

Our discussions around Generative AI are focused on the wrong thing, and it’s causing us to miss what’s about to happen. We’re infatuated with what it can do, like getting better search results, creating custom art, or becoming an interactive teacher. It’s all really exciting, but it’s distracting us from the advancement that makes it all possible.

That advancement is understanding. Generative AI is an unfortunate misnomer. Yes, it’s generating things, but the name ignores the critical prerequisite of needing to understand before it can create.

I don’t use the word understand lightly, it’s the most important word in this essay. But what does it actually mean for software to understand something? Here’s how I think about the progression from data to understanding, and this is the definition I’m using to back my claim that large GPT models actually understand.

– Data: Raw facts or figures without context

– Information: Data that has been processed, organized, or structured

– Knowledge: Information that has been analyzed and integrated

– Understanding: The ability to apply knowledge in new situations and contexts

Or:

– Data is raw

– Information is organized

– Knowledge is integrated

– Understanding is applied

People are defining understanding as special because it’s human, which is begging the question. You have to start with a definition and see if it applies.

So do Generative Pre-trained Transformers really understand things? There are endless pundits out there saying no:

GPTs are nothing but next character predictors.

Or:

They’re just filling in the next letter of text based on what they’ve been trained on! It doesn’t really understand anything.

They’re half-right. The models are just deciding the next best character to write based on their training, but so is Prince when he does an off-the-cuff guitar solo. If you watch it in slow motion he’s just going from one note, to the next, in order. But the only reason he can do it is because of all the music he’s listened to in his life, and all the hours he’s practiced. He can do it because he understands guitar.

GPTs are similar. When they are told to:

Write a romantic poem from Luke to Leia in the style of Shakespeare.

…they’re also “just completing the next character” in a piece of text. But look what they can do.

O Leia, fairest of all maidens fair,

Thy beauty doth my very soul ensnare.

Thy shining eyes, like stars that light the night,

Doth fill my heart with love’s sweet, shining light.

Thy gentle touch, like feathers on my skin,

Doth make my heart to dance and leap within.

Thy voice, like music to my eager ears,

Doth soothe my soul and calm my deepest fears.

And though our love may be forbidden, true,

I cannot help but give my heart to you.

For thou art all I ever could desire,

My Leia, thou hast set my heart on fire.

So let us steal away into the night,

And let our love take wing and take to flight.

For in thy arms, I know I shall find peace,

And all the world’s troubles shall surely cease.

Generated by ChatGPT

This was made one letter at a time, but in order to do so it had to first learn and understand the following non-trivial subjects:

– The English language

– How poets write

– How Shakespeare writes

– Star Wars

– The fact that Luke and Leia are siblings

– The concept of forbidden love

Looking at our definition, “the ability to apply knowledge in new situations and contexts”, that’s precisely what just happened. You simply cannot write such a poem (much less an infinite number of them) without understanding the underlying concepts. And it’s the same for any sufficiently trained GPT. The point isn’t that they generate. The point is that they understand.

Software, before and after

So what about software? What is software’s purpose? What does it actually do, from a first-principles perspective? And why is our current software in imminent danger from this new type of understanding AI? I’d say software is something like, “providing an interface to information and action in order to create understanding and pursue results.” So:

– Interfaces to information: the ability to store information in organized structures that we can query

– Interfaces to action: connecting inputs and outputs to action, such as sending emails

– Creating understanding: when we get the information back it creates knowledge and understanding inside human brains

– Pursue results: we configure the software such that its execution moves us closer to higher-level outcomes we’re trying to achieve

Generative AI is eating our existing software not because it does some things better than legacy software, but because it’s working at a completely different layer of the stack. Rather than working with information and action, AI deals in understanding and outcomes. Those are the two maturity models: the Understanding axis, which goes from data to understanding, and the Outcomes axis, which goes from task to outcome.

I recommend all of Stephen Few’s books on metrics, reporting, and dashboards. They’re the best out there.

At Apple I had a team focused on creating security insights for the company. The goal I gave the team was to move up the intelligence stack over time. Meaning the better we got, the more we’d move from providing data, to providing information, then to knowledge, and finally to “intelligence” that was ready for a decision. So it was a data “enrichment” process, arriving at the form that was as close as possible to action.

This has, until now, been a uniquely human capability. On the Understanding axis, going from satellite images, intercepted phone communications, and log files to an analysis and recommendation for a general has been unreachable for computers because it required too much background knowledge of the world. Actually, too much understanding of the world.

It’s the same for doing something complex in software, like finding the best possible thing to say to a customer to get them to buy something. The software could help the human by providing as much supporting information as possible in Salesforce, but ultimately it came down to the human knowing what exactly to say in the email, or where exactly to recommend for a perfect dinner.

Motion on the Outcomes axis is similar, and is currently mostly handled by humans. We can easily ask software today to email a list of people that’s provided, or to do a series of tasks such as, 1) query the database for those who currently work in Boise, and then 2) email those customers with the Boise_Invite template. But a human had to come up with those steps, and then program a computer to execute them.
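As a rough illustration of what that kind of human-scripted task list looks like, here is a small Python sketch of the two Boise steps. The table layout, the customer rows, and the template wording are hypothetical; only the city and the Boise_Invite name come from the example above.

```python
import sqlite3
from string import Template

# Hypothetical wording; the essay only names the template "Boise_Invite".
BOISE_INVITE = Template("Hi $name, you're invited to our Boise event!")


def build_invites(conn: sqlite3.Connection, city: str) -> list[tuple[str, str]]:
    """Step 1: query people in `city`. Step 2: render an email for each one."""
    rows = conn.execute(
        "SELECT name, email FROM customers WHERE city = ? ORDER BY name",
        (city,),
    ).fetchall()
    return [(email, BOISE_INVITE.substitute(name=name)) for name, email in rows]


def demo_db() -> sqlite3.Connection:
    """In-memory database with made-up rows so the sketch runs on its own."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (name TEXT, email TEXT, city TEXT)")
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?, ?)",
        [
            ("Ana", "ana@example.com", "Boise"),
            ("Raj", "raj@example.com", "Austin"),
            ("Lee", "lee@example.com", "Boise"),
        ],
    )
    return conn


if __name__ == "__main__":
    # Print the rendered emails instead of sending them.
    for to, body in build_invites(demo_db(), "Boise"):
        print(f"To: {to}\n{body}\n")
```

Every decision here (which table, which filter, which template, in what order) was made by the person who wrote the script. The software only executes the steps.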

Everything unfolds from understanding.

Moving from basic task execution to driving towards an Outcome has always required a human. You first have to understand the desired outcome, and then you have to understand the entire world well enough to break an outcome into discrete tasks. Finally, you have to know which tasks must be done first, which are dependencies on others, etc. Definitely human territory.

This is why AI is about to eat our legacy software. GPTs just jumped to the top of both the Understanding and Outcomes axes. And for many domains, the moment they got as good as humans is the same moment that they surpassed them.

A new software architecture

I highly recommend Andrej Karpathy’s essay, Software 2.0, on how software will become neural net weights.

Using this model of Understanding and Outcomes, there’s a clear path to AI being vastly better than traditional software at doing most software-related tasks. This is because you get better at both Understanding and Outcomes the more you know about the world as a whole. This is true whether you’re selling something to someone, and therefore must perfectly understand both them as a person, plus the right words to use, or whether you’re trying to perfectly understand your company’s goals and OKRs for the year—and the actions within your company that are needed to get there.

Either way, the more you understand about people, and startups, and enterprises, and sales, and Jira, and email, and meetings, and Slack, etc.—the better you can both create and execute plans to achieve your desired outcomes. And that understanding is precisely the thing that GPTs are so good at.

This suggests a new way of thinking about software post-GPT, which I break into three main pillars.

STATE, which is the current state of the universe, i.e., the data and telemetry that best represents it.
POLICY, which is your desired state and the set of things you want and don’t want to happen.
ACTION, which is the recommendations or actions that can be performed to bring the STATE in line with the POLICY.

And all of this sits on top of one or more large models similar to GPT-N.

The game then becomes getting as high quality telemetry as possible about the current state of the thing you care about, whether that’s a business, or a person’s health, or the functioning of a city. You then define exactly what you want for that thing, like we want this startup to grow at 50% or more each year, and we want to keep costs below X number of dollars, or we want our city’s population to have contentment scores above 85%. And finally, we have recommended (and soon automated) actions that flow out of the combination of those two things (plus the underlying GPT-N models).
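Here is a rough sketch of how the three pillars could fit together as data structures. Everything in it is an assumption for illustration: the class names, the metric names, and especially `stub_model`, which is a simple threshold check standing in for the large model layer so that the example runs on its own.

```python
from dataclasses import dataclass, field

@dataclass
class State:
    """Telemetry describing how things are right now."""
    metrics: dict[str, float] = field(default_factory=dict)

@dataclass
class Policy:
    """Desired state: floors and ceilings for the metrics we care about."""
    at_least: dict[str, float] = field(default_factory=dict)
    at_most: dict[str, float] = field(default_factory=dict)

def stub_model(state, policy):
    """Stand-in for the model layer: flag every metric that is out of policy."""
    actions = []
    for name, floor in policy.at_least.items():
        value = state.metrics.get(name)
        if value is None or value < floor:
            actions.append(f"raise {name} to at least {floor}")
    for name, ceiling in policy.at_most.items():
        value = state.metrics.get(name)
        if value is not None and value > ceiling:
            actions.append(f"reduce {name} to at most {ceiling}")
    return actions

def recommend(state, policy, model=stub_model):
    """ACTION is not entered by hand; it is derived from STATE plus POLICY."""
    return model(state, policy)

state = State(metrics={"annual_growth_pct": 35.0, "monthly_costs_usd": 90_000.0})
policy = Policy(
    at_least={"annual_growth_pct": 50.0},
    at_most={"monthly_costs_usd": 120_000.0},
)
actions = recommend(state, policy)
print(actions)
```

In a real system the `model` argument would be a call into one or more large models rather than a threshold check, and the STATE would be far richer than two numbers.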

There will obviously be a legion of security and privacy concerns around ingesting so much raw data to train these models, and they’ll need to be addressed.

Let’s take an email security startup as an example. The STATE is everything happening in the company. Every piece of documentation. Every Slack message. Every email. Every text conversation. Every meeting. Every voice conversation. Their financials. Their bank balances. Their equity structure. Every pertinent external reaction by the public, by the market, etc. Basically everything. As much as possible.

The POLICY is what that company is trying to do. And not do. It’s like goals and anti-goals. How fast they want to grow. How many employees they want to have. Whether they want to work remote or in offices, or some combination thereof. Their values. Their culture. Their short-term milestones. Their OKRs. Their KPIs. Example:

We are a remote-first culture. We want to have 2% email security market share by 2027. We will never do business with companies based out of Mississippi. We have unlimited PTO and a 4-day work week.

Although it won’t be long before POLICY is also being recommended and adopted.

The company’s RECOMMENDATION/ACTION components are not explicitly entered. They are generated by the various models working on the combination of the company’s STATE and POLICY. And remember, this could be a startup like above, but it could also be a school project, a plan to get ready for a half marathon, or a way to rejuvenate the state of Wyoming. Then, being at the very top of the Outcomes axis, the models will create detailed action plans for achieving everything in the policy. And once the models are connected to all the functional systems, such as email, calendar, docs, etc., they’ll actually perform those actions.

Some examples:

Writing the company strategy
Writing the OKRs
Tracking the OKRs based on what people actually do and accomplish
Writing the Quarterly Business Review (it’s updated daily so it’s always ready)
Writing the presentation for the board
Writing the latest investor deck
They’ll create the meeting agendas
They’ll create the Slack channels
They’ll invite all the right people
They’ll consume everything that happened from the day before, and condense that into 5 quick bullet points for all leaders at 9am the following day
It’ll write the job descriptions for new hires
It’ll send the emails in the perfect tone to prospective candidates
It’ll filter the responses
It’ll set up the meetings to do the in-person interviews
It’ll build the perfect interview challenges based on what the company currently needs most
It’ll collect feedback from the hiring committee
It’ll perform a full analysis of all the feedback and make a hiring recommendation
It’ll onboard them
It’ll send the swag pack to their house
And it’ll answer all their onboarding questions during the first two weeks, and forever

In this AI-based software stack of STATE, POLICY, and ACTION, the models run the show. They do so because they have far more understanding of all the relevant pieces, and their billions of interaction points, than any human possibly could. The main limitations of such a system are 1) the quality and frequency of the data coming in that produces the STATE, and 2) the opinionated culture, guidance, and decisions present within the POLICY.

I/O flexibility

Current software has a critical limitation that we no longer notice due to familiarity. If you want to add a new piece of functionality, you have to add new inputs, possibly add new data, and then create new outputs. For example, in our email startup, if we want to allow customers to submit their own filtering rules, we have to figure out how they’ll upload those rules and write an interface for that. Then we have to parse what they send us. Then we have to translate that into our own internal rule language. And when something triggers with that new functionality, we might need another interface or workflow to do something with it.

So our current software has a very finite number of inputs and outputs, which must be strictly maintained. Same with the data stores themselves. They’re the information behind those inputs and outputs, and if their formal and pedantic structures aren’t continuously and meticulously manicured, the whole thing stops working. This is severely limiting. And fragile.

AI software using something like an SPA architecture doesn’t have that problem. There you basically have this entity that understands your business. Period. It’s linked everything together. It knows your company’s goals. It knows your finances. It knows who’s doing what. It knows what cybersecurity threats are. It knows how Splunk logs affect those cybersecurity threats. It knows what your most critical applications are. And in fact it can make you a list of them, just by looking at what’s in your STATE and POLICY. It fully understands nearly every aspect of your business.

Most importantly, the interface to its understanding is infinite. It’s just questions. If you have 100 questions, you get 100 answers. If you ask ten times that many you get ten times the results. And you didn’t have to write new software to ask different types of questions for finance, or project management, or security. To the models, it’s all just understanding. You also didn’t have to update the backend data. That’s all handled at the STATE layer by continuous ingestion and retraining. Your interfaces in and out of your company’s brain are now infinite, in the form of human language.

To get a feel for how powerful this is, imagine you’re a security org within a medium-sized company and you have a functioning SPA architecture. You’ll be able to give a command like this:

Give me a list of our most critical applications from a business and risk standpoint, create a prioritized list of our top threats to them, and correlate that with what our security team is spending its time and money on. Make recommendations for how to adjust our budget, headcount, OKRs, and project list to properly align to our actual threats. Then write up an adjusted security strategy using this new approach. Define the top 5 KPIs we’ll track to show progress towards our goals. Build out the nested OKR structure that flows from that strategy given our organizational structure. Create an updated presentation for the board describing the new approach. Create a list of ways we’re lacking from a compliance standpoint given the regulations we fall under. Then create a full implementation plan broken out by the next four quarters. Finally, write our first Quarterly Security Report, and keep that document updated every time the model re-runs.

The models are already created and available, so that entire plan will take a few seconds to output. Oh, and to do it again you just ask it again.

The future of software is asking smart questions to a mesh of APIs running layered models in something like a STATE, POLICY, ACTION (SPA) architecture.

What to expect

So how is this going to play out?

The seminal work on GPTs was a paper called Attention Is All You Need, by Ashish Vaswani et al., in 2017.

Keeping in mind that ChatGPT just came out a few months ago, there’s currently a major obstacle to everyone jumping to an SPA architecture next week. The tech for training large, custom models is still nascent and expensive. Lots of things make custom models challenging, but the biggest ones are:

Context/Prompt limits, i.e., how much data you can get into a new, company-specific model that sits on top of a massive LLM like GPT-N
How many different types of data you can include (text, images, voice, video, etc.)
How long it’ll take to train on each cycle
How much it’ll cost each time you train

Technologies like LangChain for adding high quantities of custom context are super interesting, and building these custom models as large, fast, and as cheap as possible will be one of the fastest-moving subfields in the space.

As an example of such an API chain, I have one that pulls a security incident article webpage via command line, extracts the relevant article from it, classifies the incident in multiple ways, and outputs a JSON.
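The shape of a chain like that can be sketched in a few lines. This is not my actual implementation: the page is a hard-coded string instead of a fetched URL, the extractor just collects paragraph text, and `classify_incident` is a keyword lookup standing in for the model call. The category names are made up.

```python
import json
from html.parser import HTMLParser

class ParagraphExtractor(HTMLParser):
    """Collects the text found inside <p> tags and ignores everything else."""

    def __init__(self):
        super().__init__()
        self._in_p = False
        self.paragraphs = []

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self._in_p = True
            self.paragraphs.append("")

    def handle_endtag(self, tag):
        if tag == "p":
            self._in_p = False

    def handle_data(self, data):
        if self._in_p:
            self.paragraphs[-1] += data

def extract_article(html):
    """Step 1: pull the article text out of the surrounding page."""
    parser = ParagraphExtractor()
    parser.feed(html)
    return " ".join(p.strip() for p in parser.paragraphs if p.strip())

def classify_incident(text):
    """Step 2: keyword stand-in for the model-based classification."""
    lowered = text.lower()
    checks = {
        "ransomware": "ransomware" in lowered,
        "phishing": "phishing" in lowered,
        "data_breach": "breach" in lowered or "leak" in lowered,
    }
    return [name for name, hit in checks.items() if hit]

def run_chain(html):
    """Step 3: chain the pieces and emit JSON."""
    article = extract_article(html)
    return json.dumps({"article": article, "categories": classify_incident(article)})

SAMPLE_HTML = (
    "<html><nav>Home</nav>"
    "<p>Attackers used a phishing email.</p>"
    "<p>The breach exposed customer records.</p></html>"
)
result = json.loads(run_chain(SAMPLE_HTML))
print(result)
```

Each function takes the previous one's output as input, which is what lets the steps be swapped or reordered independently.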

My other major thought on this progression is that companies are about to become predominantly APIs. Both internally and externally. Internal functions become APIs, and external offerings become APIs. All backed by the company’s SPA stack. The APIs will work like UNIX pipes, where you can take the output from one and pipe it into another. Interfaces are critical, but they’re not the important IP for a company, and I think they’ll start decoupling from the core functionality offered by companies. There will be companies that specialize in UI/UX, and they will be the interfaces to a web of APIs provided by companies.

The next significant challenge to current, day-0 GPTs is that of non-determinism. It’s fine to get ten different poems when you ask ten different times, because that’s the nature of creativity, but it’s clearly a problem if you’re managing major aspects of your company using the same tech. Finances? Legal decisions? Security questions? Analysis and decision-making in these critical areas need to be trustworthy, and that requires consistency.

This will help address the “hallucination” problem as well.

One way this will be addressed is through the chaining of multiple AI layers that perform separate functions. And you can use them together to ensure you get a consistent and high-quality result. I’m already doing this in my own work, where I call one GPT-powered API to create a thing, another to check it for X, another to check it for Y, and another to do final analysis. And I use different personas for each step, e.g., “You’re an auditor.”, “You’re a QA specialist.”, etc. Then you can add some strict, static validation steps to the mix as well. The combination of multiple AI layers, strict validation rules, plus the inevitable consistency improvement of the models will ultimately remove this obstacle. But it might take a while, and in the meantime we’ll have to be careful what we do with results.
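A stripped-down sketch of that layering looks something like this. The `fake_model` function is a hypothetical stand-in that returns canned responses so the example runs without any API. The writer persona, the JSON fields, and the PASS/FAIL convention are also assumptions for illustration, not a description of my real setup.

```python
import json

def fake_model(persona, content):
    """Hypothetical stand-in for a GPT-powered API call."""
    if persona == "You're a writer.":
        return json.dumps({"summary": "Quarterly costs rose 4%.", "risk": "low"})
    if persona == "You're an auditor.":
        return "PASS" if "%" in content else "FAIL: no figures cited"
    return "PASS"

def static_validate(draft):
    """Strict, non-AI checks: the draft must be JSON with the expected fields."""
    try:
        data = json.loads(draft)
    except json.JSONDecodeError:
        return ["draft is not valid JSON"]
    if not isinstance(data, dict):
        return ["draft is not a JSON object"]
    errors = [f"missing field: {key}" for key in ("summary", "risk") if key not in data]
    if data.get("risk") not in {"low", "medium", "high"}:
        errors.append("risk must be low, medium, or high")
    return errors

def run_layers(task, model, reviewers):
    """Create a draft, validate it statically, then have each persona review it."""
    draft = model("You're a writer.", task)
    problems = static_validate(draft)
    for persona in reviewers:
        verdict = model(persona, draft)
        if verdict != "PASS":
            problems.append(f"{persona} {verdict}")
    return draft, problems

draft, problems = run_layers(
    "Summarize this quarter's spending.",
    fake_model,
    ["You're an auditor.", "You're a QA specialist."],
)
print(draft, problems)
```

A result is only trusted when `problems` comes back empty. Anything else gets regenerated or sent to a human.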

How to get ready

This isn’t a 1-3 year thing. It’s not even a 6-month thing. This is happening right now. So what can you do to get ready? That obviously depends on who you are and what you’re doing, but here are some opinionated universal recommendations.

Start thinking about your business’s first principles. Ask yourself very seriously what you provide, how it’s different than competitor offerings, and what your company will look like when it becomes a set of APIs. Is it your interface that makes you special? Your data? Your insights? How do these change when all your competitors have equally powerful AI?